the Creative Commons Attribution 4.0 License.

Towards automatic finite-element methods for geodynamics via Firedrake

D. Rhodri Davies

Stephan C. Kramer

Sia Ghelichkhan

Angus Gibson

Firedrake is an automated system for solving partial differential equations using the finite-element method. By applying sophisticated performance optimisations through automatic code-generation techniques, it provides a means of creating accurate, efficient, flexible, easily extensible, scalable, transparent and reproducible research software that is ideally suited to simulating a wide range of problems in geophysical fluid dynamics. Here, we demonstrate the applicability of Firedrake for geodynamical simulation, with a focus on mantle dynamics. The accuracy and efficiency of the approach are confirmed via comparisons against a suite of analytical and benchmark cases of systematically increasing complexity, whilst parallel scalability is demonstrated up to 12 288 compute cores, where the problem size and the number of processing cores are simultaneously increased. In addition, Firedrake's flexibility is highlighted via straightforward application to different physical (e.g. complex non-linear rheologies, compressibility) and geometrical (2-D and 3-D Cartesian and spherical domains) scenarios. Finally, a representative simulation of global mantle convection is examined, which incorporates 230 Myr of plate motion history as a kinematic surface boundary condition, confirming Firedrake's suitability for addressing research problems at the frontiers of global mantle dynamics research.

Since the advent of plate tectonic theory, there has been a long and successful history of research software development within the geodynamics community. The earliest modelling tools provided fundamental new insight into the process of mantle convection, its sensitivity to variations in viscosity, and its role in controlling Earth's surface plate motions and heat transport (e.g. McKenzie, 1969; Minear and Toksoz, 1970; Torrance and Turcotte, 1971; McKenzie et al., 1973). Although transformative at the time, computational and algorithmic limitations dictated that these tools were restricted to a simplified approximation of the underlying physics and, excluding some notable exceptions (e.g. Baumgardner, 1985; Glatzmaier, 1988), to 2-D Cartesian geometries. They were specifically designed to address targeted scientific questions. As such, they offered limited flexibility, were not easily extensible, and were not portable across different platforms. Furthermore, since they were often developed for use by one or two expert practitioners, they were poorly documented: details of the implementation could only be determined by analysing the underlying code, which was often a non-trivial and specialised task.

Growing computational resources and significant theoretical and algorithmic advances have since underpinned the development of more advanced research software, which incorporates, for example, better approximations to the fundamental physical principles, including compressibility (e.g. Jarvis and McKenzie, 1980; Bercovici et al., 1992; Tackley, 1996; Bunge et al., 1997; Gassmoller et al., 2020), mineralogical-phase transformations (e.g. Tackley et al., 1993; Nakagawa et al., 2009; Hunt et al., 2012), multi-phase flow (e.g. Katz and Weatherley, 2012; Wilson et al., 2014), variable and non-linear rheologies (e.g. Moresi and Solomatov, 1995; Bunge et al., 1996; Trompert and Hansen, 1998; Tackley, 2000; Moresi et al., 2002; Jadamec and Billen, 2010; Stadler et al., 2010; Alisic et al., 2010; Le Voci et al., 2014; Garel et al., 2014; Jadamec, 2016), and feedbacks between chemical heterogeneity and buoyancy (e.g. van Keken, 1997; Tackley and Xie, 2002; Davies et al., 2012). In addition, these more recent tools can often be applied in more representative 2-D cylindrical and/or 3-D spherical shell geometries (e.g. Baumgardner, 1985; Bercovici et al., 1989; Jarvis, 1993; Bunge et al., 1997; van Keken and Ballentine, 1998; Zhong et al., 2000, 2008; Tackley, 2008; Wolstencroft et al., 2009; Stadler et al., 2010; Davies et al., 2013). The user base of these tools has rapidly increased, with software development teams emerging to enhance their applicability and ensure their ongoing functionality. These teams have done so by adopting best practices in modern software development, including version control, unit and regression testing across a range of platforms and validation of model predictions against a suite of analytical and benchmark solutions (e.g. Blankenbach et al., 1989; Busse et al., 1994; King et al., 2010; Tosi et al., 2015; Kramer et al., 2021a).

Nonetheless, given rapid and ongoing improvements in algorithmic design and software engineering alongside the development of robust and flexible scientific computing libraries that provide access to much of the low-level numerical functionality required by geodynamical models, a next generation of open-source and community-driven geodynamical research software has emerged, exploiting developments from the forefront of computational engineering. This includes ASPECT (e.g. Kronbichler et al., 2012; Heister et al., 2017; Bangerth et al., 2020), built on the deal.II (Bangerth et al., 2007), p4est (Burstedde et al., 2011) and Trilinos (Heroux et al., 2005) libraries, Fluidity (e.g. Davies et al., 2011; Kramer et al., 2012, 2021a, b), which is underpinned by the PETSc (Balay et al., 1997, 2021a, b) and Spud (Ham et al., 2009) libraries, Underworld2 (e.g. Moresi et al., 2007; Beucher et al., 2019), core aspects of which are built on the St Germain (Quenette et al., 2007) and PETSc libraries, and TerraFERMA (Wilson et al., 2017), which has foundations in the FEniCS (Logg et al., 2012; Alnes et al., 2014), PETSc and Spud libraries. By building on existing computational libraries that are highly efficient, extensively tested and validated, modern geodynamical research software is becoming increasingly reliable and reproducible. Its modular design also facilitates the addition of new features and provides a degree of confidence about the validity of previous developments, as evidenced by growth in the use and applicability of ASPECT over recent years.

However, even with these modern research software frameworks, some fundamental development decisions, such as the core physical equations, numerical approximations and general solution strategy, have been integrated into the basic building blocks of the code. Whilst there remains some flexibility within the context of a single problem, modifications to include different physical approximations or components, which can affect non-linear coupling and associated solution strategies, often require extensive and time-consuming development and testing, using either separate code forks or increasingly complex option systems. This makes reproducibility of a given simulation difficult, resulting in a lack of transparency – even with detailed documentation, specific details of the implementation are sometimes only available by reading the code itself, which, as noted previously, is non-trivial, particularly across different forks or with increasing code complexity (Wilson et al., 2017). This makes scientific studies into the influence of different physical or geometrical scenarios, using a consistent code base, extremely challenging. Those software frameworks that try to maintain some degree of flexibility often do so at the expense of performance: the flexibility to configure different equations, numerical discretisations and solver strategies, in different dimensions and geometries, requires implementation compromises in the choice of optimal algorithms and specific low-level optimisations for all possible configurations.

A challenge that remains central to research software development in geodynamics, therefore, is the need to provide accurate, efficient, flexible, easily extensible, scalable, transparent and reproducible research software that can be applied to simulating a wide range of scenarios, including problems in different geometries and those incorporating different approximations of the underlying physics (e.g. Wilson et al., 2017). However, this requires a large time commitment and knowledge that spans several academic disciplines. Arriving at a physical description of a complex system, such as global mantle convection, demands expertise in geology, geophysics, geochemistry, fluid mechanics and rheology. Discretising the governing partial differential equations (PDEs) to produce a suitable numerical scheme requires proficiency in mathematical analysis, whilst its translation into efficient code for massively parallel systems demands advanced knowledge in low-level code optimisation and computer architectures (e.g. Rathgeber et al., 2016). The consequence of this is that the development of research software for geodynamics has now become a multi-disciplinary effort, and its design must enable scientists across several disciplines to collaborate effectively, without requiring each of them to comprehend all aspects of the system.

Key to achieving this is to abstract, automate and compose the various processes involved in numerically solving the PDEs governing a specific problem (e.g. Logg et al., 2012; Alnes et al., 2014; Rathgeber et al., 2016; Wilson et al., 2017) to enable a separation of concerns between developing a technique and using it. As such, software projects involving automatic code generation have become increasingly popular, as these help to separate different aspects of development. Such an approach facilitates collaboration between computational engineers with expertise in hardware and software, computer scientists and applied mathematicians with expertise in numerical algorithms, and domain-specific scientists, such as geodynamicists.

In this study, we introduce Firedrake to the geodynamical modelling community: a next-generation automated system for solving PDEs using the finite-element method (e.g. Rathgeber et al., 2016; Gibson et al., 2019). As we will show, the finite-element method is well-suited to automatic code-generation techniques: a weak formulation of the governing PDEs, together with a mesh, initial and boundary conditions, and appropriate discrete function spaces, is sufficient to fully represent the problem. The purpose of this paper is to demonstrate the applicability of Firedrake for geodynamical simulation whilst also highlighting its advantages over existing geodynamical research software. We do so via comparisons against a suite of analytical and benchmark cases of systematically increasing complexity.

The remainder of the paper is structured as follows. In Sect. 2, we provide a background to the Firedrake project and the various dependencies of its software stack. In Sect. 3, we introduce the equations governing mantle convection which will be central to the examples developed herein, followed, in Sect. 4, by a description of their discretisation via the finite-element method and the associated solution strategies. In Sect. 5, we introduce a series of benchmark cases in Cartesian and spherical shell geometries. These are commonly examined within the geodynamical modelling community, and we describe the steps involved with setting up these cases in Firedrake, allowing us to highlight its ease of use. Parallel performance is analysed in Sect. 6, with a representative example of global mantle convection described and analysed in Sect. 7. The latter case confirms Firedrake's suitability for addressing research problems at the frontiers of global mantle dynamics research. Other components of Firedrake, which have not been showcased in this paper but which may be beneficial to various future research endeavours, are discussed in Sect. 8.

The Firedrake project is an automated system for solving partial differential equations using the finite-element method (e.g. Rathgeber et al., 2016). Using a high-level language that reflects the mathematical description of the governing equations (e.g. Alnes et al., 2014), the user specifies the finite-element problem symbolically. The high-performance implementation of assembly operations for the discrete operators is then generated “automatically” by a sequence of specialised compiler passes that apply symbolic mathematical transformations to the input equations to ultimately produce C (and C++) code (Rathgeber et al., 2016; Homolya et al., 2018). Firedrake compiles and executes this code to create linear or non-linear systems, which are solved by PETSc (Balay et al., 1997, 2021a, b). As stated by Rathgeber et al. (2016), in comparison to conventional finite-element libraries, and even more so to handwritten code, Firedrake provides a higher-productivity mechanism for solving finite-element problems whilst simultaneously applying sophisticated performance optimisations that few users would have the resources to code by hand.

Firedrake builds on the concepts and some of the code of the FEniCS project (e.g. Logg et al., 2012), particularly its representation of variational problems via the Unified Form Language (UFL) (Alnes et al., 2014). We note that the applicability of FEniCS for geodynamical problems has already been demonstrated (e.g. Vynnytska et al., 2013; Wilson et al., 2017). Both frameworks have the goal of saving users from manually writing low-level code for assembling the systems of equations that discretise their model physics. An important architectural difference is that, while FEniCS has components written in C++ and Python, Firedrake is completely written in Python, including its run-time environment (it is only the automatically generated assembly code that is in C/C++, although it does leverage the PETSc library, written in C, to solve the assembled systems, albeit through its Python interface – petsc4py). This provides a highly flexible user interface with ease of introspection of data structures. We note that the Python environment also allows deployment of handwritten C kernels should the need arise to perform discrete mesh-based operations that cannot be expressed in the finite-element framework, such as sophisticated slope limiters or bespoke sub-grid physics.

Firedrake offers several highly desirable features, rendering it well-suited to problems in geophysical fluid dynamics. As will be illustrated through a series of examples below, of particular importance in the context of this paper is Firedrake's support for a range of different finite-element discretisations, including a highly efficient implementation of those based on extruded meshes, programmable non-linear solvers and composable operator-aware solver preconditioners. As the importance of reproducibility in the computational geosciences is increasingly recognised, we note that Firedrake integrates with Zenodo and GitHub to provide users with the ability to generate a set of DOIs corresponding to the exact set of Firedrake components used to conduct a particular simulation, in full compliance with FAIR (findable, accessible, interoperable, reusable) principles.

2.1 Dependencies

Firedrake treats finite-element problems as a composition of several abstract processes, using separate packages for each. The framework imposes a clear separation of concerns between the definition of the problem (UFL, Firedrake language), the generation of computational kernels used to assemble the coefficients of the discrete equations (Two-Stage Form Compiler – TSFC – and FInAT), the parallel execution of this kernel (PyOP2) over a given mesh topology (DMPlex) and the solution of the resulting linear or non-linear systems (PETSc). These layers allow various types of optimisation to be applied at different stages of the solution process. The key components of this software stack are described next.

- UFL – as we will see in the examples below, a core part of finite-element problems is the specification of the weak form of the governing PDEs. UFL, a domain-specific symbolic language with well-defined and mathematically consistent semantics that is embedded in Python, provides an elegant solution to this problem. It was pioneered by the FEniCS project (Logg et al., 2012), although Firedrake has added several extensions.

- Firedrake language – in addition to the weak form of the PDEs, finite-element problems require the user to select appropriate finite elements, specify the mesh to be employed, set field values for initial and boundary conditions and specify the sequence in which solves occur. Firedrake implements its own language for these tasks, which was designed to be largely compatible with DOLFIN (Logg et al., 2012), the runtime application programming interface (API) of the FEniCS project. We note that Firedrake implements various extensions to DOLFIN, whilst some features of DOLFIN are not supported by Firedrake.

- FInAT (Kirby and Mitchell, 2019) incorporates all information required to evaluate the basis functions of the different finite-element families supported by Firedrake. In earlier versions of Firedrake this was done through tabulation of the basis functions evaluated at Gauss points (FIAT: Kirby, 2004). FInAT, however, provides this information to the form compiler as a combination of symbolic expressions and numerical values, allowing for further optimisations. FInAT allows Firedrake to support a wide range of finite elements, including continuous, discontinuous, H(div) and H(curl) discretisations and elements with continuous derivatives such as the Argyris and Bell elements.

- TSFC – a form compiler takes a high-level description of the weak form of PDEs (here in the UFL) and produces low-level code that carries out the finite-element assembly. Firedrake uses the TSFC, which was developed specifically for the Firedrake project (Homolya et al., 2018), to generate its local assembly kernels. TSFC works in two stages: the first translates UFL into an intermediate symbolic tensor algebra language, and the second translates this intermediate representation into assembly kernels written in C. In comparison to the form compilers of FEniCS (FFC and UFLACS), TSFC aims to maintain the algebraic structure of the input expression for longer, which opens up additional opportunities for optimisation.

- PyOP2 – a key component of Firedrake's software stack is PyOP2, a high-level framework that optimises the parallel execution of computational kernels on unstructured meshes (Rathgeber et al., 2012; Markall et al., 2013). Where the local assembly kernels generated by TSFC calculate the values of a local tensor from local input tensors, all associated with the degrees of freedom (DOFs) of a single element, PyOP2 wraps this code in an additional layer responsible for the extraction and addition of these local tensors out of/into global structures such as vectors and sparse matrices. It is also responsible for the maintenance of halo layers, the overlapping regions in a parallel decomposed problem. PyOP2 allows for a clean separation of concerns between the specification of the local kernel functions, in which the numerics of the method are encoded, and their efficient parallel execution. More generally, this separation of concerns is the key novel abstraction that underlies the design of the Firedrake system.

- DMPlex – PyOP2 has no concept of the topological construction of a mesh. Firedrake derives the required maps through DMPlex, a data management abstraction that represents unstructured mesh data, which is part of the PETSc project (Knepley and Karpeev, 2009). This allows Firedrake to leverage the DMPlex partitioning and data migration interfaces to perform domain decomposition at run time whilst supporting multiple mesh file formats. Moreover, Firedrake reorders mesh entities to ensure computational efficiency (Lange et al., 2016).

- Linear and non-linear solvers – Firedrake passes solver problems on to PETSc (Balay et al., 1997, 2021a, b), a well-established, high-performance solver library that provides access to several of its own and third-party implementations of solver algorithms. The Python interface to PETSc (Dalcin et al., 2011) makes integration with Firedrake straightforward. We note that employing PETSc for both its solver library and for DMPlex has the additional advantage that the set of library dependencies required by Firedrake is kept small (Rathgeber et al., 2016).

Our focus here is on mantle convection, the slow creeping motion of Earth's mantle over geological timescales. The equations governing mantle convection are derived from the conservation laws of mass, momentum and energy. The simplest mathematical formulation assumes a single incompressible material and the Boussinesq approximation (McKenzie et al., 1973), under which the non-dimensional momentum and continuity equations are given by

$$\nabla \cdot \boldsymbol{\sigma} + Ra_0\, T\, \hat{\mathbf{k}} = 0, \tag{1}$$

$$\nabla \cdot \mathbf{u} = 0, \tag{2}$$

where $\boldsymbol{\sigma}$ is the stress tensor, $\mathbf{u}$ is the velocity and $T$ is the temperature. $\hat{\mathbf{k}}$ is the unit vector in the direction opposite to gravity and $Ra_0$ denotes the Rayleigh number, a dimensionless number that quantifies the vigour of convection:

$$Ra_0 = \frac{\rho_0\, \alpha\, \Delta T\, g\, d^3}{\mu_0\, \kappa}. \tag{3}$$

Here, $\rho_0$ denotes the reference density, $\alpha$ the thermal expansion coefficient, $\Delta T$ the characteristic temperature change across the domain, $g$ the gravitational acceleration, $d$ the characteristic length, $\mu_0$ the reference dynamic viscosity and $\kappa$ the thermal diffusivity. Note that the above non-dimensional equations are obtained through the following characteristic scales: length $d$, time $d^2/\kappa$ and temperature $\Delta T$.
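As a minimal sketch of how the Rayleigh number and the diffusive time scale follow from the dimensional parameters listed above, the definitions can be evaluated directly. The function names and the parameter values below are illustrative assumptions and are not taken from this study.

```python
def rayleigh_number(rho0, alpha, delta_T, g, d, mu0, kappa):
    """Ra0 = rho0 * alpha * delta_T * g * d**3 / (mu0 * kappa)."""
    return rho0 * alpha * delta_T * g * d**3 / (mu0 * kappa)


def diffusion_time(d, kappa):
    """Characteristic time scale d**2 / kappa used to non-dimensionalise time."""
    return d**2 / kappa


# Illustrative (assumed) whole-mantle values in SI units, not from the paper.
params = dict(rho0=4000.0, alpha=2.0e-5, delta_T=3000.0, g=10.0,
              d=2.9e6, mu0=1.0e22, kappa=1.0e-6)
Ra0 = rayleigh_number(**params)

# Convert the diffusive time scale from seconds to years.
seconds_per_year = 365.25 * 24 * 3600
t_scale_years = diffusion_time(params["d"], params["kappa"]) / seconds_per_year

print(f"Ra0 = {Ra0:.3e}, diffusive time scale = {t_scale_years:.3e} yr")
```

Because $Ra_0$ scales with $d^3$ and inversely with $\mu_0$, modest changes in the assumed layer thickness or reference viscosity shift it by orders of magnitude.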

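When simulating incompressible flow, the full stress tensor, $\boldsymbol{\sigma}$, is decomposed into deviatoric and volumetric components:

$$\boldsymbol{\sigma} = \boldsymbol{\tau} - p\,\mathbf{I}, \tag{4}$$

where $\boldsymbol{\tau}$ is the deviatoric stress tensor, $p$ is dynamic pressure and $\mathbf{I}$ is the identity matrix. Substituting Eq. (4) into Eq. (1) and utilising the constitutive relation

$$\boldsymbol{\tau} = 2\mu\,\dot{\boldsymbol{\epsilon}} = \mu\left[\nabla\mathbf{u} + (\nabla\mathbf{u})^{T}\right], \tag{5}$$

which relates the deviatoric stress tensor, $\boldsymbol{\tau}$, to the strain-rate tensor, $\dot{\boldsymbol{\epsilon}}$, yields

$$\nabla\cdot\left(\mu\left[\nabla\mathbf{u} + (\nabla\mathbf{u})^{T}\right]\right) - \nabla p + Ra_0\, T\, \hat{\mathbf{k}} = 0. \tag{6}$$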

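The viscous flow problem can thus be posed in terms of pressure, $p$, velocity, $\mathbf{u}$, and temperature, $T$. The evolution of the thermal field is controlled by an advection–diffusion equation:

$$\frac{\partial T}{\partial t} + \mathbf{u}\cdot\nabla T - \nabla\cdot\left(\kappa\nabla T\right) = 0. \tag{7}$$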

These governing equations are sufficient to solve for the three unknowns together with adequate boundary and initial conditions.
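The stress decomposition and constitutive relation used above can be checked numerically at a single point. The following is a minimal sketch, assuming a hypothetical 2-D velocity gradient with zero trace (i.e. divergence-free flow) and arbitrary values for viscosity and pressure; the helper names are ours.

```python
def strain_rate(grad_u):
    """Symmetric part of the velocity gradient: 0.5 * (grad_u + grad_u^T)."""
    n = len(grad_u)
    return [[0.5 * (grad_u[i][j] + grad_u[j][i]) for j in range(n)]
            for i in range(n)]


def full_stress(grad_u, p, mu):
    """Return (tau, sigma) with tau = 2 * mu * strain_rate and sigma = tau - p * I."""
    n = len(grad_u)
    eps = strain_rate(grad_u)
    tau = [[2.0 * mu * eps[i][j] for j in range(n)] for i in range(n)]
    sigma = [[tau[i][j] - (p if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    return tau, sigma


# Hypothetical velocity gradient, grad_u[i][j] = d(u_i)/d(x_j), with zero trace.
grad_u = [[0.3, 1.0], [-0.2, -0.3]]
tau, sigma = full_stress(grad_u, p=2.0, mu=1.5)

trace_tau = tau[0][0] + tau[1][1]
mean_normal_stress = -(sigma[0][0] + sigma[1][1]) / 2.0
```

For divergence-free flow the deviatoric stress is trace-free, so the pressure equals minus the mean normal stress, which is what `mean_normal_stress` recovers here.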

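For the derivation of the finite-element discretisation of Eqs. (6), (2) and (7), we start by writing these in their weak form. We select appropriate function spaces $V$, $W$ and $Q$ that contain respectively the solution fields for velocity $\mathbf{u}$, pressure $p$ and temperature $T$ and also contain the test functions $\mathbf{v}$, $w$ and $q$. The weak form is then obtained by multiplying these equations by the test functions and integrating over the domain $\Omega$:

$$\int_\Omega (\nabla\mathbf{v}) : \mu\left[\nabla\mathbf{u} + (\nabla\mathbf{u})^{T}\right]\mathrm{d}x - \int_\Omega (\nabla\cdot\mathbf{v})\,p\,\mathrm{d}x - \int_\Omega Ra_0\, T\, \mathbf{v}\cdot\hat{\mathbf{k}}\,\mathrm{d}x = 0 \quad \text{for all } \mathbf{v}\in V, \tag{8}$$

$$\int_\Omega w\,\nabla\cdot\mathbf{u}\,\mathrm{d}x = 0 \quad \text{for all } w\in W, \tag{9}$$

$$\int_\Omega q\,\frac{\partial T}{\partial t}\,\mathrm{d}x + \int_\Omega q\,\mathbf{u}\cdot\nabla T\,\mathrm{d}x + \int_\Omega (\nabla q)\cdot\left(\kappa\nabla T\right)\mathrm{d}x = 0 \quad \text{for all } q\in Q. \tag{10}$$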

Note that we have integrated by parts the viscosity and pressure gradient terms in Eq. (6) and the diffusion term in Eq. (7) but have omitted the corresponding boundary terms, which will be considered in the following section.
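For reference, the integration-by-parts identities behind these weak forms can be written out explicitly. This is a sketch under the assumption of sufficiently smooth fields, with $\mathbf{n}$ denoting the outward unit normal on $\partial\Omega$ and $\boldsymbol{\tau} = \mu[\nabla\mathbf{u} + (\nabla\mathbf{u})^{T}]$; the surface integrals on the right-hand sides are the boundary terms that are omitted above.

```latex
\begin{align}
\int_\Omega \mathbf{v}\cdot\left(\nabla\cdot\boldsymbol{\tau}\right)\mathrm{d}x
  &= -\int_\Omega \nabla\mathbf{v} : \boldsymbol{\tau}\,\mathrm{d}x
     + \int_{\partial\Omega} \mathbf{v}\cdot\left(\boldsymbol{\tau}\cdot\mathbf{n}\right)\mathrm{d}s, \\
\int_\Omega \mathbf{v}\cdot\nabla p\,\mathrm{d}x
  &= -\int_\Omega \left(\nabla\cdot\mathbf{v}\right)p\,\mathrm{d}x
     + \int_{\partial\Omega} \left(\mathbf{v}\cdot\mathbf{n}\right)p\,\mathrm{d}s, \\
\int_\Omega q\,\nabla\cdot\left(\kappa\nabla T\right)\mathrm{d}x
  &= -\int_\Omega \nabla q\cdot\left(\kappa\nabla T\right)\mathrm{d}x
     + \int_{\partial\Omega} q\,\mathbf{n}\cdot\left(\kappa\nabla T\right)\mathrm{d}s.
\end{align}
```

Each identity moves one derivative from the solution onto the test function, which lowers the regularity required of the discrete function spaces.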

Equations (8)–(10) are a more general representation of the continuous PDEs in strong form (Eqs. 6, 2 and 7), provided suitable function spaces with sufficient regularity are chosen (see for example Zienkiewicz et al., 2005; Elman et al., 2005). Finite-element discretisation proceeds by restricting these function spaces to finite-dimensional subspaces. These are typically constructed by dividing the domain into cells or elements and restricting it to piecewise polynomial subspaces with various continuity requirements between cells. Firedrake offers a very wide range of such finite-element function spaces (see Kirby and Mitchell, 2019, for an overview). It should be noted however that, in practice, this choice is guided by numerical stability considerations in relation to the specific equations that are being solved. In particular, the choice of velocity and pressure function spaces used in the Stokes system is restricted by the Ladyzhenskaya–Babuška–Brezzi (LBB) condition (see Thieulot and Bangerth, 2022, for an overview of common choices for geodynamical flow). In this paper, we focus on the use of the familiar Q2Q1 element pair for velocity and pressure, which employs piecewise continuous bi-quadratic and bilinear polynomials on quadrilaterals or hexahedra for velocity and pressure respectively. In addition, to showcase Firedrake's flexibility, we use the less familiar Q2P1DG pair in a number of cases, in which pressure is discontinuous and piecewise linear (but not bilinear). For temperature, we primarily use a Q2 discretisation but also show some results using a Q1 discretisation.

All that is required for the implementation of these choices is that a basis can be found for the function space such that each solution can be written as a linear combination of basis functions. For example, if we have a basis $\phi_i$ of the finite-dimensional function space $Q_h$ of temperature solutions, then we can write each temperature solution as

$$T(\mathbf{x}) = \sum_i T_i\,\phi_i(\mathbf{x}), \tag{11}$$

where $T_i$ represents the coefficients that we can collect into a discrete solution vector $\underline{T}$. Using a Lagrangian polynomial basis, the coefficients $T_i$ correspond to values at the nodes, where each node $i$ is associated with one basis function $\phi_i$, but this is not generally true for other choices of finite-element bases.
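The nodal property of a Lagrangian basis can be illustrated with piecewise-linear "hat" functions in one dimension. The following is a minimal sketch on a hypothetical non-uniform mesh with made-up coefficients; it is not how Firedrake evaluates functions internally.

```python
def hat(i, nodes, x):
    """Piecewise-linear Lagrange basis function phi_i on a 1-D mesh."""
    xi = nodes[i]
    # Rising branch on the cell to the left of node i.
    if i > 0 and nodes[i - 1] <= x <= xi:
        return (x - nodes[i - 1]) / (xi - nodes[i - 1])
    # Falling branch on the cell to the right of node i.
    if i < len(nodes) - 1 and xi <= x <= nodes[i + 1]:
        return (nodes[i + 1] - x) / (nodes[i + 1] - xi)
    return 0.0


def evaluate(coeffs, nodes, x):
    """T(x) = sum_i T_i * phi_i(x)."""
    return sum(T_i * hat(i, nodes, x) for i, T_i in enumerate(coeffs))


nodes = [0.0, 0.25, 0.5, 1.0]    # hypothetical non-uniform mesh
coeffs = [1.0, 0.7, 0.4, 0.0]    # hypothetical coefficients T_i

# Evaluating at the nodes returns the coefficients themselves.
nodal_values = [evaluate(coeffs, nodes, x) for x in nodes]
# Between nodes the function is a linear blend of neighbouring coefficients.
midpoint_value = evaluate(coeffs, nodes, 0.75)
```

Since each hat function equals one at its own node and zero at all others, the coefficient vector can be read directly as a list of nodal temperatures.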

In curved domains, boundaries can be approximated with a finite number of triangles, tetrahedra, quadrilaterals or hexahedra. This can be seen as a piecewise linear (or bilinear/trilinear) approximation where the domain is approximated by straight lines (edges) between vertices. A more accurate representation of the domain is obtained by allowing higher-order polynomials that describe the physical embedding of the element within the domain. A typical choice is to use a so-called iso-parametric representation in which the polynomial order of the embedding is the same as that of the discretised functions that are solved for.

Finally, we note that it is common to use a subscript h for the discrete, finite-dimensional function subspaces and Ωh for the discretised approximation by the mesh of the domain Ω, but since the remainder of this paper focusses on the details and implementation of this discretisation, we simply drop the h subscripts from here on.

4.1 Boundary conditions

In the Cartesian examples considered below, zero-slip and free-slip boundary conditions for Eqs. (8) and (9) are imposed through strong Dirichlet boundary conditions for velocity $\mathbf{u}$. This is achieved by restricting the velocity function space $V$ to a subspace $V_0$ of vector functions for which all components (zero-slip) or only the normal component (free-slip) are zero at the boundary. Since this restriction also applies to the test functions $\mathbf{v}$, the weak form only needs to be satisfied for all test functions $\mathbf{v}\in V_0$ that satisfy the homogeneous boundary conditions. Therefore, the omitted boundary integral

$$-\int_{\partial\Omega} \mathbf{v}\cdot\left(\mu\left[\nabla\mathbf{u} + (\nabla\mathbf{u})^{T}\right]\cdot\mathbf{n}\right)\mathrm{d}s, \tag{12}$$

with $\mathbf{n}$ the outward unit normal to the boundary, that was required to obtain the integrated-by-parts viscosity term in Eq. (8) automatically vanishes for zero-slip boundary conditions as $\mathbf{v}=\mathbf{0}$ at the domain boundary, $\partial\Omega$. In the case of a free-slip boundary condition for which the tangential components of $\mathbf{v}$ are non-zero, the boundary term does not vanish, but by omitting that term in Eq. (8), we weakly impose a zero shear stress condition. The boundary term obtained by integrating the pressure gradient term in Eq. (6) by parts,

$$\int_{\partial\Omega} \left(\mathbf{v}\cdot\mathbf{n}\right)p\,\mathrm{d}s, \tag{13}$$

also vanishes as $\mathbf{v}\cdot\mathbf{n}=0$ for $\mathbf{v}\in V_0$ in both the zero-slip and free-slip cases.

Similarly, in the examples presented below, we impose strong Dirichlet boundary conditions for temperature at the top and bottom boundaries of our domain. The test functions are restricted to $Q_0$, which consists of temperature functions that satisfy homogeneous boundary conditions at these boundaries, and thus

$$-\int_{\partial\Omega} q\,\mathbf{n}\cdot\left(\kappa\nabla T\right)\mathrm{d}s, \tag{14}$$

the boundary term associated with integrating the diffusion term by parts, vanishes there. In Cartesian domains the boundary term does not vanish for the lateral boundaries, but by omitting this term from Eq. (10) we weakly impose a homogeneous Neumann (zero-flux) boundary condition at these boundaries. The temperature solution itself is found in $Q_0 + \{T_{\text{inhom}}\}$, where $T_{\text{inhom}}$ is any representative temperature function that satisfies the required inhomogeneous boundary conditions.
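The splitting of the solution into a homogeneous part and a lifting function that carries the boundary values can be sketched for a 1-D steady diffusion problem, $-T'' = H$, with piecewise-linear elements on a uniform mesh. The function names, the constant source $H$ and the choice of lifting (boundary values at the end nodes, zero elsewhere) are illustrative assumptions.

```python
def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm; lower[0] and upper[-1] are unused."""
    n = len(diag)
    c, d = [0.0] * n, [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def steady_diffusion(n_cells, T_bottom, T_top, source=0.0):
    """P1 elements for -T'' = source on (0, 1) with Dirichlet end values."""
    h = 1.0 / n_cells
    n_int = n_cells - 1                      # interior (unconstrained) nodes
    # Lifting T_inhom: carries the boundary values, zero at interior nodes.
    T_inhom = [T_bottom] + [0.0] * n_int + [T_top]
    # Stiffness rows for interior nodes: (-1, 2, -1) / h.
    lower = [-1.0 / h] * n_int
    diag = [2.0 / h] * n_int
    upper = [-1.0 / h] * n_int
    # Right-hand side: load vector minus the stiffness acting on T_inhom.
    rhs = [source * h] * n_int
    rhs[0] += T_inhom[0] / h
    rhs[-1] += T_inhom[-1] / h
    T0_interior = solve_tridiagonal(lower, diag, upper, rhs)
    T0 = [0.0] + T0_interior + [0.0]         # homogeneous part, a member of Q0
    return [a + b for a, b in zip(T0, T_inhom)]


T = steady_diffusion(8, T_bottom=1.0, T_top=0.0, source=2.0)
```

Only the interior coefficients are unknowns; the boundary values enter through the right-hand side, mirroring the restriction of the test space to functions that vanish on the Dirichlet boundaries.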

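In curved domains, such as the 2-D cylindrical shell and 3-D spherical shell cases examined below, imposing free-slip boundary conditions is complicated by the fact that it is not straightforward to decompose the degrees of freedom of the velocity space $V$ into tangential and normal components for many finite-element discretisations. For Lagrangian-based discretisations we could define normal vectors at the Lagrangian nodes on the surface and decompose accordingly, but these normal vectors would have to be averaged due to the piecewise approximation of the curved surface. To avoid such complications for our examples in cylindrical and spherical geometries, we employ a symmetric Nitsche penalty method (Nitsche, 1971) where the velocity space is not restricted and, thus, retains all discrete solutions with a non-zero normal component. This entails adding the following three surface integrals to Eq. (8):

$$-\int_{\partial\Omega} \left(\mathbf{v}\cdot\mathbf{n}\right)\,\mathbf{n}\cdot\left(\mu\left[\nabla\mathbf{u} + (\nabla\mathbf{u})^{T}\right]\right)\cdot\mathbf{n}\,\mathrm{d}s - \int_{\partial\Omega} \mathbf{n}\cdot\left(\mu\left[\nabla\mathbf{v} + (\nabla\mathbf{v})^{T}\right]\right)\cdot\mathbf{n}\,\left(\mathbf{u}\cdot\mathbf{n}\right)\mathrm{d}s + \int_{\partial\Omega} C_{\text{Nitsche}}\,\mu\,\left(\mathbf{v}\cdot\mathbf{n}\right)\left(\mathbf{u}\cdot\mathbf{n}\right)\mathrm{d}s.$$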

The first of these corresponds to the normal component of Eq. (12) associated with integration by parts of the viscosity term. The tangential component, as before, is omitted and weakly imposes a zero shear stress condition. The second term ensures symmetry of Eq. (8) with respect to u and v. The third term penalises the normal component of u and involves a penalty parameter CNitsche>0 that should be sufficiently large to ensure coercivity of the bilinear form FStokes introduced in Sect. 4.3. Lower bounds for CNitsche,f on each face f can be derived for simplicial (Shahbazi, 2005) and quadrilateral/hexahedral (Hillewaert, 2013) meshes respectively:

$A_f$ is the facet area of face $f$, $V_{c_f}$ the cell volume of the adjacent cell $c_f$ and $p$ the polynomial degree of the velocity discretisation. Here, we introduce an additional factor, $C_{ip}$, to account for spatial variance of the viscosity $\mu$ in the adjacent cell and domain curvature, which are not taken into account in the standard lower bounds (using $C_{ip}=1$). In all free-slip cylindrical and spherical shell examples presented below, we use $C_{ip}=100$. Finally, because the normal component of velocity is not restricted in the velocity function space, the boundary term (13) no longer vanishes, and we also need to weakly impose the no-normal-flow condition on the continuity equation by adding the following integral to Eq. (9):

$$-\int_{\partial\Omega} w\,\mathbf{n}\cdot\mathbf{u}\,\mathrm{d}s.$$
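The structure of the symmetric Nitsche method (a consistency term, a symmetry term and a penalty term) can be illustrated on a scalar 1-D analogue: $-u'' = f$ on $(0,1)$ with $u=0$ imposed weakly at both ends, using piecewise-linear elements. This is a sketch under our own assumptions, not the Stokes implementation used here: in 1-D the surface integrals reduce to point evaluations, and the penalty is taken as `gamma / h`, with `gamma` a hypothetical dimensionless constant playing the role of the penalty parameter.

```python
def gauss_solve(A, b):
    """Dense Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for k in range(n):
        piv = max(range(k, n), key=lambda r: abs(M[r][k]))
        M[k], M[piv] = M[piv], M[k]
        for r in range(k + 1, n):
            factor = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= factor * M[k][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def nitsche_poisson(n_cells, gamma, f=1.0):
    """P1 elements for -u'' = f on (0, 1); u = 0 imposed weakly at both ends."""
    h = 1.0 / n_cells
    n = n_cells + 1
    A = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    for e in range(n_cells):                 # standard stiffness and load
        for i, j, k in ((e, e, 1.0), (e, e + 1, -1.0),
                        (e + 1, e, -1.0), (e + 1, e + 1, 1.0)):
            A[i][j] += k / h
        b[e] += 0.5 * f * h
        b[e + 1] += 0.5 * f * h
    # (boundary node, interior neighbour) pairs for x = 0 and x = 1.
    for nb, ni in ((0, 1), (n - 1, n - 2)):
        # Outward normal derivative of a P1 function: (u_nb - u_ni) / h.
        dn = {nb: 1.0 / h, ni: -1.0 / h}
        for j, coeff in dn.items():
            A[nb][j] -= coeff                # consistency: -(d_n u) v
            A[j][nb] -= coeff                # symmetry:    -(d_n v) u
        A[nb][nb] += gamma / h               # penalty:     (gamma / h) u v
    return gauss_solve(A, b)


u = nitsche_poisson(16, gamma=10.0)
```

The boundary values are not forced to zero exactly; in this particular 1-D setting they equal $f h^2 / (2(\gamma - 1))$, so they shrink under mesh refinement and with a larger penalty, while the system matrix remains symmetric.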

4.2 Temporal discretisation and solution process for temperature

For temporal integration, we apply a simple $\theta$ scheme to the energy Eq. (10):

$$F_{\text{energy}}(q; T^{n+1}) := \int_\Omega q\,\frac{T^{n+1} - T^{n}}{\Delta t}\,\mathrm{d}x + \int_\Omega q\,\mathbf{u}\cdot\nabla T^{n+\theta}\,\mathrm{d}x + \int_\Omega (\nabla q)\cdot\left(\kappa\nabla T^{n+\theta}\right)\mathrm{d}x = 0 \quad \text{for all } q\in Q, \tag{19}$$

where

$$T^{n+\theta} = \theta\,T^{n+1} + (1-\theta)\,T^{n}$$

is interpolated between the temperature solutions $T^{n}$ and $T^{n+1}$ at the beginning and end of the $(n+1)$th time step using a parameter $0 \le \theta \le 1$. In all examples that follow, we use a Crank–Nicolson scheme, where $\theta=0.5$. It should be noted that the time-dependent energy equation is coupled with the Stokes system through the buoyancy term and, in some cases, the temperature dependence of viscosity. At the same time, the Stokes equation couples to the energy equation through the advective velocity. These combined equations can therefore be considered a coupled system that should be iterated over. The solution algorithm used here follows a standard time-splitting approach. We solve the Stokes system for velocity and pressure with buoyancy and viscosity terms, based on a given prescribed initial temperature field. In a separate step, we solve for the new temperature $T^{n+1}$ using the new velocity, advance in time and repeat. The same time loop is used to converge the coupling in steady-state cases.
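The effect of the parameter $\theta$ on temporal accuracy can be sketched on a scalar model problem, $\mathrm{d}T/\mathrm{d}t = -\lambda T$, which stands in for the spatially discretised energy equation. The model problem, function names and step counts below are illustrative assumptions.

```python
import math


def theta_step(T_n, dt, lam, theta):
    """One theta-scheme step for dT/dt = -lam * T.

    Solves (T_new - T_n) / dt = -lam * (theta * T_new + (1 - theta) * T_n).
    """
    return T_n * (1.0 - (1.0 - theta) * lam * dt) / (1.0 + theta * lam * dt)


def integrate(T0, t_end, n_steps, lam, theta):
    """Advance from t = 0 to t_end in n_steps equal time steps."""
    T, dt = T0, t_end / n_steps
    for _ in range(n_steps):
        T = theta_step(T, dt, lam, theta)
    return T


def error(n_steps, theta, lam=1.0, t_end=1.0):
    """Absolute error against the exact solution exp(-lam * t_end)."""
    return abs(integrate(1.0, t_end, n_steps, lam, theta)
               - math.exp(-lam * t_end))


# Halving the time step: the error ratio approaches 4 for Crank-Nicolson
# (theta = 0.5, second order) and 2 for backward Euler (theta = 1, first order).
ratio_cn = error(20, 0.5) / error(40, 0.5)
ratio_be = error(20, 1.0) / error(40, 1.0)
```

The same update with $\theta=0$ gives forward Euler, which is explicit but only conditionally stable; $\theta=0.5$ is the only choice in this family that is second-order accurate.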

Because Fenergy is linear in q, if we expand the test function q as a linear combination of basis functions ϕi of Q,

where is the vector with coefficients (i.e. the energy equation tested with the basis functions ϕi). Thus, to satisfy Eq. (19), we need to solve for a temperature T for which the entire vector is zero.

In the general non-linear case (for example, if the thermal diffusivity is temperature-dependent), this can be solved using a Newton solver, but here the system of equations is also linear in Tn+1 and, accordingly, if we also expand the temperature with respect to the same basis, , where we store the coefficients in a vector , we can write it in the usual form as a linear system of equations

with A the matrix that represents the Jacobian with respect to the basis ϕi and the right-hand-side vector containing all terms in Eq. (19) that do not depend on Tn+1, specifically

In the non-linear case, every Newton iteration requires the solution of such a linear system, with a Jacobian matrix and a right-hand-side vector based on the residual, both of which must be reassembled at every iteration as Tn+1 is iteratively improved. For the 2-D cases presented in this paper, this asymmetric linear system is solved with a direct solver; in 3-D, we use the generalised minimal residual (GMRES) Krylov subspace method with a symmetric successive over-relaxation (SSOR) preconditioner.
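The relationship between the residual form and the linear system can be sketched with a toy problem. The example below assumes a hypothetical two-unknown residual that is linear in the unknowns; the finite-difference Jacobian is only a stand-in for the symbolic differentiation performed by Firedrake, and none of the names correspond to Firedrake functions. It shows that, for a linear residual, the matrix is the Jacobian, the right-hand side is minus the residual evaluated at zero, and a single solve suffices.

```python
def residual(T):
    """Toy residual vector F(T) for two unknowns; linear in T by construction."""
    return [4.0 * T[0] - 1.0 * T[1] - 2.0,
            -1.0 * T[0] + 3.0 * T[1] - 5.0]


def jacobian(F, T, eps=1e-6):
    """Finite-difference Jacobian A[i][j] = dF_i / dT_j evaluated at T."""
    n = len(T)
    F0 = F(T)
    A = [[0.0] * n for _ in range(n)]
    for j in range(n):
        T_pert = list(T)
        T_pert[j] += eps
        F_pert = F(T_pert)
        for i in range(n):
            A[i][j] = (F_pert[i] - F0[i]) / eps
    return A


def solve_2x2(A, b):
    """Solve a 2x2 linear system with Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return [(b[0] * A[1][1] - A[0][1] * b[1]) / det,
            (A[0][0] * b[1] - A[1][0] * b[0]) / det]


T_zero = [0.0, 0.0]
A = jacobian(residual, T_zero)          # Jacobian of the residual
b = [-r for r in residual(T_zero)]      # terms that do not depend on T
T = solve_2x2(A, b)                     # one linear solve, no iteration needed
print("T =", T, " residual =", residual(T))
```

In the non-linear case, the same two steps (assemble Jacobian and residual, then solve) would simply be repeated from the latest iterate until the residual is sufficiently small.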

4.3 Solving for velocity and pressure

In a separate step, we solve Eqs. (8) and (9) for velocity and pressure. Since these weak equations need to hold for all test functions v∈V and w∈W, we can equivalently write, using a single residual functional FStokes,

where we have multiplied the continuity equation by −1 to ensure symmetry between the ∇p and ∇⋅u terms. This combined weak form that we simultaneously solve for a velocity u∈V and pressure p∈W is referred to as a mixed problem, and the combined solution (u,p) is said to be found in the mixed function space V⊕W.

As before, we expand the discrete solutions u and p and test functions v and w in terms of basis functions for V and W:

For isoviscous cases, where FStokes is linear in u and p, we then derive a linear system of the following form:

where

For cases with more general rheologies, in particular those with a strain-rate-dependent viscosity, the system is non-linear and can be solved using Newton's method. This requires the solution, in every Newton iteration, of a linear system of the same form as in Eq. (28) but with an additional term in K associated with the derivative of the viscosity with respect to velocity, ∂μ/∂u. For the strain-rate-dependent cases presented in this paper, this takes the following form:

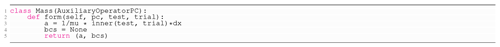

Note that the additional term makes the matrix explicitly dependent on the solution u itself and renders it asymmetric. Here, for brevity, we have not expanded the derivative of μ with respect to the strain-rate tensor. In traditional codes, such additional terms require significant effort to implement and must be adapted to the specific rheological approximation that is used; here, they are handled automatically through the combination of symbolic differentiation and code generation in Firedrake.
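The role of the additional Jacobian term can be illustrated with a scalar analogue. The sketch below assumes a hypothetical model equation μ(u)u = f with a made-up shear-thinning law for μ; it is not the Stokes system and the functions are not Firedrake calls. Including the term (dμ/du)u gives a full Newton iteration, whereas omitting it freezes the viscosity at the previous iterate (a Picard iteration).

```python
import math


def mu(u):
    """Hypothetical shear-thinning viscosity law (illustrative only)."""
    return 0.1 + 1.0 / math.sqrt(1.0 + u * u)


def dmu_du(u):
    """Derivative of the viscosity law with respect to u."""
    return -u / (1.0 + u * u) ** 1.5


def residual(u, f):
    """Scalar analogue of the momentum residual: mu(u) * u - f."""
    return mu(u) * u - f


def solve(f, use_full_jacobian, tol=1e-10, max_it=200):
    """Iterate u <- u - F(u) / J(u); return the solution and iteration count."""
    u = f
    for it in range(1, max_it + 1):
        jac = mu(u)                      # term present in the linear problem
        if use_full_jacobian:
            jac += dmu_du(u) * u         # additional term from d(mu)/du
        u -= residual(u, f) / jac
        if abs(residual(u, f)) < tol:
            return u, it
    raise RuntimeError("iteration did not converge")


u_newton, it_newton = solve(1.0, use_full_jacobian=True)
u_picard, it_picard = solve(1.0, use_full_jacobian=False)
print(f"Newton: u = {u_newton:.8f} in {it_newton} iterations")
print(f"Picard: u = {u_picard:.8f} in {it_picard} iterations")
```

Both variants reach the same solution, but the full Jacobian needs far fewer iterations for this model problem, which is the practical motivation for assembling the extra term.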

There is a wide-ranging literature on iterative methods for solving saddle point systems of the form in Eq. (28). For an overview of the methods commonly used in geodynamics, see May and Moresi (2008). Here we employ the Schur complement approach, where pressure is determined by solving

It should be noted that K−1 is not assembled explicitly. Rather, in a first step, we solve a linear system with matrix K to construct the right-hand side of the equation. We subsequently apply the flexible GMRES (Saad, 1993) iterative method to the linear system as a whole, in which each iteration requires a matrix–vector multiplication by the matrix GTK−1G, which again involves the solution of a linear system with matrix K. We also need a suitable preconditioner. Here we follow the inverse scaled-mass matrix approach, which uses the following approximation:

Finally, after solving Eq. (33) for the pressure, we obtain the velocity in a final solve with matrix K.
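The sequence of solves in the Schur complement approach can be sketched on a very small system. The example below assumes a block system of the form K u + G p = f, Gᵀ u = 0, with a hypothetical 2×2 matrix K and a single pressure unknown; the helper `solve_K` stands in for the iterative solve with K, and the scalar division stands in for the Krylov iteration on the Schur complement. None of the values are taken from the paper.

```python
def solve_K(K, b):
    """Solve K x = b for a 2x2 matrix K (stand-in for an iterative K solve)."""
    det = K[0][0] * K[1][1] - K[0][1] * K[1][0]
    return [(b[0] * K[1][1] - K[0][1] * b[1]) / det,
            (K[0][0] * b[1] - K[1][0] * b[0]) / det]


def dot(a, b):
    """Euclidean inner product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


K = [[4.0, 1.0], [1.0, 3.0]]   # hypothetical velocity block
G = [1.0, -1.0]                # hypothetical gradient block (one pressure DOF)
f = [1.0, 2.0]                 # hypothetical momentum right-hand side


def schur_action(p):
    """Apply G^T K^{-1} G to p without ever forming K^{-1}."""
    return dot(G, solve_K(K, [g * p for g in G]))


# Step 1: a solve with K to build the right-hand side of the pressure equation.
y = solve_K(K, f)
rhs = dot(G, y)

# Step 2: solve the Schur complement system for pressure (scalar in this toy).
p = rhs / schur_action(1.0)

# Step 3: a final solve with K to recover the velocity.
u = solve_K(K, [fi - gi * p for fi, gi in zip(f, G)])

print("u =", u, " p =", p, " G^T u =", dot(G, u))
```

The recovered velocity satisfies the constraint Gᵀu = 0 to rounding error, and each application of the Schur complement costs one solve with K, which is why an efficient K solver matters.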

Since this solution process involves multiple solves with the matrix K, we also need an efficient algorithm to solve that system. For this, we combine the conjugate gradient method with an algebraic multigrid approach, specifically the geometric algebraic multigrid (GAMG) method implemented in PETSc (Balay et al., 1997, 2021a, b).

Depending on boundary conditions, the linearised Stokes system admits a number of null modes. In the absence of open boundaries, as in all cases examined here, the pressure admits a constant null mode: any arbitrary constant can be added to the pressure solution and the result remains a valid solution to the equations. In addition, cylindrical and spherical shell cases with free-slip boundary conditions at both boundaries admit one and three independent rotational null modes in velocity respectively. As these null modes result in singular matrices, preconditioned iterative methods should typically be provided with the null vectors.
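What it means to provide a solver with a null vector can be sketched as an orthogonal projection. The example below is a minimal illustration, assuming a short list of hypothetical pressure coefficients and a Euclidean inner product on the coefficient vector; the function `project_out` is not a PETSc or Firedrake call.

```python
import math


def project_out(vec, null_vec):
    """Remove the component of vec that lies along null_vec."""
    norm = math.sqrt(sum(n * n for n in null_vec))
    unit = [n / norm for n in null_vec]
    coeff = sum(v * n for v, n in zip(vec, unit))
    return [v - coeff * n for v, n in zip(vec, unit)]


pressure = [3.0, 5.0, 4.0, 8.0]          # hypothetical pressure coefficients
constant_mode = [1.0] * len(pressure)    # the constant pressure null mode

p_fixed = project_out(pressure, constant_mode)

# Adding an arbitrary constant to the pressure gives the same projected result,
# so the projection selects one representative from the family of solutions.
p_shifted = project_out([p + 10.0 for p in pressure], constant_mode)

print("projected pressure:", p_fixed)
print("projected shifted pressure:", p_shifted)
```

After projection, the two pressure fields, which differ only by a constant, become identical, which removes the ambiguity that would otherwise allow the null mode to grow during the iteration.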

In the absence of any Dirichlet conditions on velocity, the null space of the velocity block K also consists of a further two independent translational modes in 2-D and three in 3-D. Even in simulations where boundary conditions do not admit any rotational and translational modes, these solutions remain associated with low-energy modes of the matrix. Some multigrid methods use this information to improve their performance by ensuring that these so-called near-null-space modes are accurately represented at the coarser levels (Vanek et al., 1996). We make use of this in several of the examples considered below.
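The near-null-space modes referred to above are the rigid-body motions. A minimal sketch of how such vectors can be built in 2-D is given below, assuming nodal coordinates are available as (x, y) pairs and that velocity degrees of freedom are interleaved as [ux0, uy0, ux1, uy1, ...]; the function name and data layout are hypothetical and do not reflect Firedrake's internal storage.

```python
def rigid_body_modes_2d(coords):
    """Return the two translational modes and one rotational mode in 2-D.

    Each mode is a flat list of nodal velocity values
    [ux0, uy0, ux1, uy1, ...] evaluated at the given coordinates.
    """
    trans_x, trans_y, rotation = [], [], []
    for x, y in coords:
        trans_x += [1.0, 0.0]   # uniform translation in x
        trans_y += [0.0, 1.0]   # uniform translation in y
        rotation += [-y, x]     # rigid rotation about the origin
    return trans_x, trans_y, rotation


# Corner nodes of a unit square, used purely for illustration.
coords = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
trans_x, trans_y, rotation = rigid_body_modes_2d(coords)
print("rotation mode:", rotation)
```

In 3-D the same construction yields three translations and three rotations; a multigrid preconditioner supplied with these vectors can represent them accurately on its coarse levels.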

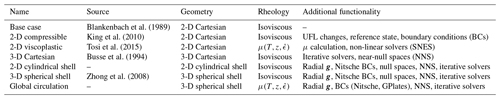

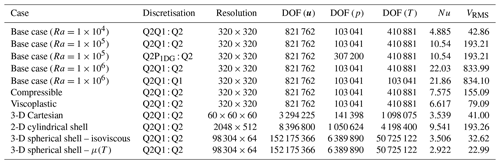

Firedrake provides a complete framework for solving finite-element problems, highlighted in this section through a series of examples. We start in Sect. 5.1 with the most basic problem – isoviscous, incompressible convection, in an enclosed 2-D Cartesian box – and systematically build complexity, initially moving into more realistic physical approximations (Sect. 5.2) and, subsequently, geometries that are more representative of Earth's mantle (Sect. 5.3). The cases examined and the challenges associated with each are summarised in Table 1.

Table 1. Summary of cases examined here, which systematically increase in complexity. The key differences and challenges differentiating each case from the base case are highlighted in the final column. Benchmark studies referenced in the table: Blankenbach et al. (1989), King et al. (2010), Tosi et al. (2015), Busse et al. (1994) and Zhong et al. (2008).

5.1 Basic example: 2-D convection in a square box

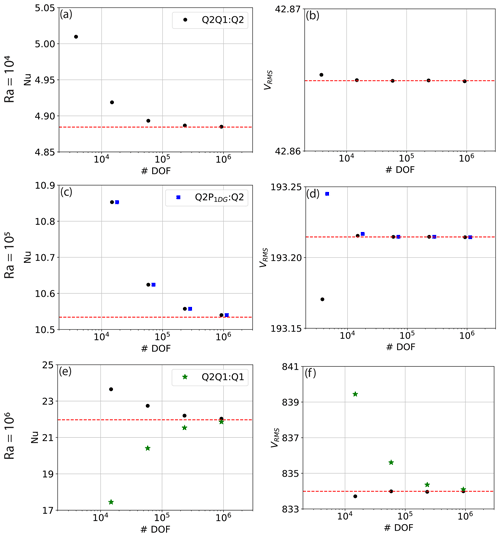

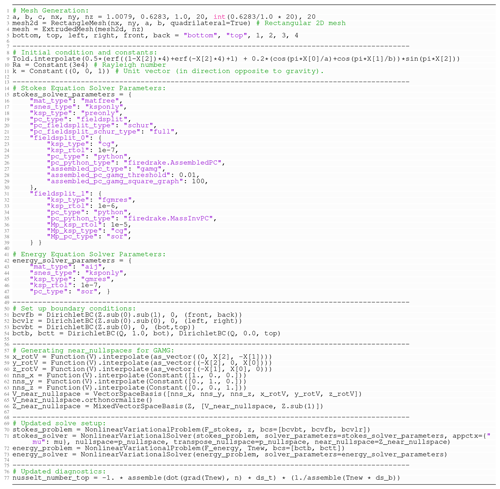

Listing 1. Firedrake code required to reproduce 2-D Cartesian incompressible isoviscous benchmark cases from Blankenbach et al. (1989).

A simple 2-D square convection problem, from Blankenbach et al. (1989), for execution in Firedrake, is displayed in Listing 1. The problem is incompressible, isoviscous, heated from below and cooled from above, with closed, free-slip boundaries, on a unit square mesh. Solutions are obtained by solving the Stokes equations for velocity and pressure alongside the energy equation for temperature. The initial temperature distribution is prescribed as follows:

$$T(x, y) = (1 - y) + A \cos(\pi x) \sin(\pi y), \qquad (35)$$

where A=0.05 is the amplitude of the initial perturbation.

We have set up the problem using a quadrilateral element pair (Q2Q1; biquadratic velocity and bilinear pressure) for the Stokes system, with Q2 elements for temperature. Firedrake user code is written in Python, so the first step, illustrated in line 1 of Listing 1, is to import the Firedrake module. We next need a mesh: for simple domains such as the unit square, Firedrake provides built-in meshing functions. As such, line 5 defines the mesh, with 40 quadrilateral elements in the x and y directions. We also need function spaces, which is achieved by associating the mesh with the relevant finite element in lines 11–13: V, W and Q are symbolic variables representing function spaces. They also contain the function space's computational implementation, recording the association of degrees of freedom with the mesh and pointing to the finite-element basis. The user does not usually need to pay any attention to this: the function space just behaves as a mathematical object (Rathgeber et al., 2016). Function spaces can be combined in the natural way to create mixed function spaces, as we do in line 14, combining the velocity and pressure function spaces to form a function space for the mixed Stokes problem, Z. Here we specify continuous Lagrange elements (CG) of polynomial degree 2 and 1 for velocity and pressure respectively, on a quadrilateral mesh, which gives us the Q2Q1 element pair. Test functions v, w and q are subsequently defined (lines 17–18), and we also specify functions to hold our solutions (lines 19–22): z in the mixed function space, noting that a symbolic representation of the two parts (velocity and pressure) is obtained with split in line 20, and Told and Tnew (line 21), required for the Crank–Nicolson scheme used for temporal discretisation in our energy equation (see Eqs. 19 and 20 in Sect. 4.2), where Tθ is defined in line 22.

We obtain symbolic expressions for coordinates in the physical mesh (line 25) and subsequently use these to initialise the old temperature field, via Eq. (35), in line 26. This is where Firedrake transforms a symbolic operation into a numerical computation for the first time: the interpolate method generates C code that evaluates this expression in the function space associated with Told and immediately executes it to populate the coefficient values of Told. We initialise Tnew with the values of Told, in line 27, via the assign function. Important constants in this problem (Rayleigh number, Ra; viscosity, μ; thermal diffusivity, κ) and the unit vector are defined in lines 30–31. In addition, we define a constant for the time step (Δt) with an initial value of 10⁻⁶. Constant objects define spatially constant values that can be overwritten in later time steps, as we do in this example using an adaptive time step. We note that viscosity could also be a Function if we wanted spatial variation.

We are now in a position to define the variational problems expressed in Eqs. (25) and (19). Although in this test case the problems are linear, we maintain the more general non-linear residual forms, FStokes=0 and Fenergy=0, to allow for straightforward extension to non-linear problems below. The symbolic expressions for FStokes and Fenergy in the UFL are given in lines 34–38: the resemblance to the mathematical formulation is immediately apparent. Integration over the domain is indicated by multiplication by dx.

Strong Dirichlet boundary conditions for velocity (bcvx, bcvy) and temperature (bctb, bctt) are specified in lines 41–42. A Dirichlet boundary condition is created by constructing a DirichletBC object, where the user must provide the function space, the boundary condition value and the part of the mesh at which it applies. The latter is identified by integer mesh markers, which are commonly used by mesh generation software to tag entities of meshes. Boundaries are automatically tagged by the built-in meshes supported by Firedrake. For the UnitSquareMesh used here, tag 1 corresponds to the plane x=0, 2 to x=1, 3 to y=0 and 4 to y=1 (these integer values are assigned to left, right, bottom and top in line 6). Note how boundary conditions are applied to the velocity part of the mixed finite-element space Z, indicated by Z.sub(0). Within Z.sub(0) we can further subdivide into Z.sub(0).sub(0) and Z.sub(0).sub(1) to apply boundary conditions to the x and y components of the velocity field only. To apply conditions to the pressure space, we would use Z.sub(1). This problem has a constant pressure null space, and we must ensure that our solver removes this space. To do so, we build a null-space object in line 43, which will subsequently be passed to the solver, and PETSc will seek a solution in the space orthogonal to the provided null space.

We finally come to solving the variational problem, with problem and solver objects created in lines 59–62. We pass in the residual functions FStokes and Fenergy, solution fields (z, Tnew), boundary conditions and, for the Stokes system, the null-space object. Solution of the two variational problems is undertaken by the PETSc library (Balay et al., 1997), guided by the solver parameters specified in lines 51–56 (see Balay et al., 2021a, b, for comprehensive documentation of all the PETSc options). The first option in line 52 instructs the Jacobian to be assembled in PETSc's default aij sparse matrix type. Although the Stokes and energy problems in this example are linear, for consistency with the later cases, we use Firedrake's NonlinearVariationalSolver, which makes use of PETSc's Scalable Nonlinear Equations Solvers (SNES) interface. However, since we do not actually need a non-linear solver for this case, we choose the ksponly method in line 53, indicating that only a single linear solve needs to be performed. The linear solvers are configured through PETSc's Krylov subspace (KSP) interface, where we can request a direct solver by choosing the preonly KSP method, in combination with lu as the “preconditioner” (PC) type (lines 54–55). The specific implementation of the LU-decomposition-based direct solver is selected in line 56 as the MUMPS library (Amestoy et al., 2001, 2019). As we shall see through subsequent examples, the solution process is fully programmable, enabling the creation of sophisticated solvers by combining multiple layers of Krylov methods and preconditioners (Kirby and Mitchell, 2018).
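The options described in this paragraph can be collected in a plain Python dictionary. The following is a sketch consistent with the description above rather than a verbatim copy of lines 51–56 of Listing 1, which is not reproduced here; the keys are standard PETSc option names, while the helper `as_petsc_flags` is hypothetical and is included only to show the equivalent command-line form.

```python
# Direct-solver configuration as described in the text: assemble an aij
# matrix, skip the non-linear iteration, and apply a single LU factorisation.
solver_parameters = {
    "mat_type": "aij",                     # PETSc's default sparse matrix type
    "snes_type": "ksponly",                # one linear solve, no Newton loop
    "ksp_type": "preonly",                 # apply the preconditioner only
    "pc_type": "lu",                       # LU factorisation as "preconditioner"
    "pc_factor_mat_solver_type": "mumps",  # use MUMPS for the factorisation
}


def as_petsc_flags(params, prefix=""):
    """Format an options dictionary as PETSc-style command-line flags."""
    return " ".join(f"-{prefix}{key} {value}" for key, value in params.items())


print(as_petsc_flags(solver_parameters))
```

Keeping the configuration in a dictionary means that a switch from a direct to an iterative solver changes only these entries and not the problem definition.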

The time loop is defined in lines 75–84, with the Stokes system solved in line 80 and the energy equation in line 81. These solve calls once again convert symbolic mathematics into computation. The linear systems for both problems are based on the Jacobian matrix and a right-hand-side vector based on the residual, as indicated in Eqs. (22), (23) and (24) for the energy equation and Eqs. (28), (29), (30) and (31) for the Stokes equation. Note, however, that the symbolic expression for the Jacobian is derived automatically in the UFL. Firedrake's TSFC (Homolya et al., 2018) subsequently converts the UFL into highly optimised assembly code, which is then executed to create the matrix and vectors, with the resulting system passed to PETSc for solution. Output is written in lines 78–79 to a .pvd file, initialised in line 46, for visualisation in software such as ParaView (e.g. Ahrens et al., 2005).

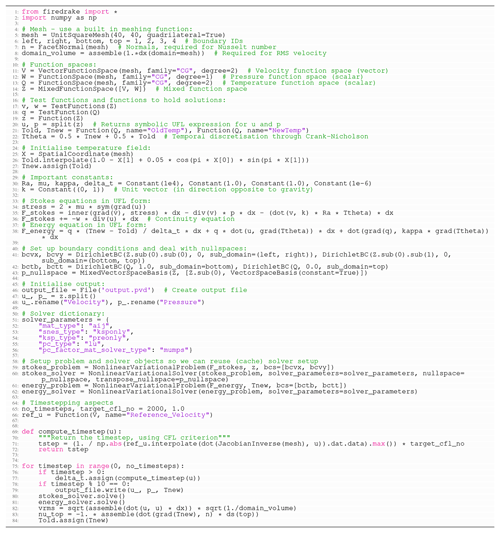

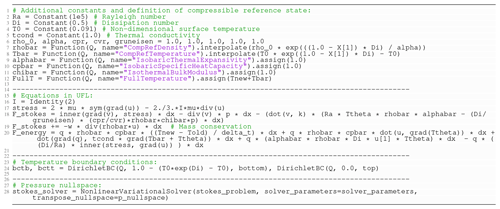

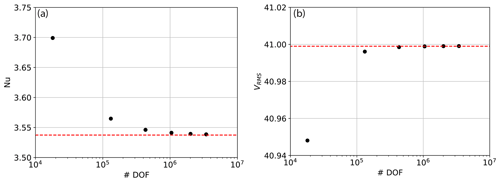

Figure 1. Results from 2-D incompressible isoviscous square convection benchmark cases: (a) Nusselt number vs. number of pressure and velocity degrees of freedom (DOFs) at Ra=10⁴ (Case 1a – Blankenbach et al., 1989) for a series of uniform, structured meshes; (b) rms velocity vs. number of pressure and velocity DOFs at Ra=10⁴; (c, d) as in panels (a) and (b) but at Ra=10⁵ (Case 1b – Blankenbach et al., 1989); (e, f) as in panels (a) and (b) but at Ra=10⁶ (Case 1c – Blankenbach et al., 1989). Benchmark values are denoted by dashed red lines. In panels (c) and (d), we also display results from simulations where the Stokes system uses the Q2P1DG finite-element pair (Q2P1DG : Q2) and in panels (e) and (f), where temperature is represented using a Q1 discretisation (Q2Q1 : Q1), for comparison to our standard Q2Q1 : Q2 discretisations.

After the first time step, the time-step size Δt is adapted (lines 76–77) to a value computed in the compute_timestep function (lines 69–72). This function computes a Courant–Friedrichs–Lewy (CFL)-bound time step by first computing the velocity transformed from physical coordinates into the local coordinates of the reference element. This transformation is performed by multiplying velocity by the inverse of the Jacobian of the physical coordinate transformation and interpolating this into a predefined vector function u_ref (line 71). Since all quadrilaterals/hexahedra have unit length in each direction in local coordinates, the CFL condition now simplifies to urefΔt≤1, which needs to be satisfied for all components of uref. The maximum allowable time step can thus be computed from the reference velocity at all nodal locations, by taking the maximum absolute value of the .dat.data property of the interpolated function. The advantage of this method of computing the time step over one based on the traditional CFL condition, in the form uΔt/Δx≤1, is that it generalises to non-uniform and curved (iso-parametric) meshes.
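The reference-element form of the CFL condition can be sketched without any finite-element machinery. The example below assumes that a velocity vector and the 2×2 Jacobian of the reference-to-physical coordinate map are available at each node; the function names and sample values are hypothetical, and the code is not the compute_timestep function of Listing 1.

```python
def reference_velocity(u, J):
    """Map a physical velocity u into reference coordinates: u_ref = J^{-1} u."""
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    inv = [[J[1][1] / det, -J[0][1] / det],
           [-J[1][0] / det, J[0][0] / det]]
    return [inv[0][0] * u[0] + inv[0][1] * u[1],
            inv[1][0] * u[0] + inv[1][1] * u[1]]


def cfl_timestep(velocities, jacobians, target_cfl=1.0):
    """Largest time step such that |u_ref| * dt <= target_cfl for all components.

    Assumes at least one non-zero velocity component.
    """
    max_u_ref = max(abs(component)
                    for u, J in zip(velocities, jacobians)
                    for component in reference_velocity(u, J))
    return target_cfl / max_u_ref


# Two sample nodes: one in a square cell of side 0.025 (a 40 x 40 unit-square
# mesh), one in a cell that is half as tall, to mimic a non-uniform mesh.
velocities = [(2.0, -1.0), (0.0, 1.5)]
jacobians = [[[0.025, 0.0], [0.0, 0.025]],
             [[0.025, 0.0], [0.0, 0.0125]]]

dt = cfl_timestep(velocities, jacobians)
print(f"maximum time step: {dt:.6f}")
```

Here the thinner cell controls the time step even though the velocity magnitude there is smaller, which is the behaviour that a single global Δx would miss on a non-uniform mesh.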

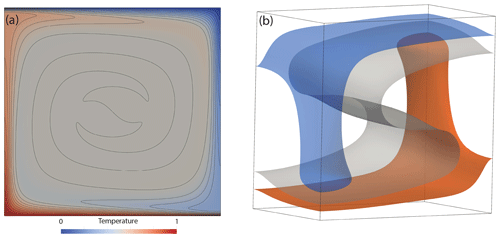

In 84 lines of Python (57 excluding comments and blank lines), we are able to produce a model that can be executed and quantitatively compared to benchmark results from Blankenbach et al. (1989). To do so, we have computed the root mean square (rms) velocity (line 82, using the domain volume specified in line 8) and surface Nusselt number (line 83, using a unit normal vector defined in line 7) at a range of different mesh resolutions and Rayleigh numbers, with results presented in Fig. 1. Results converge towards the benchmark solutions with increasing resolution. The final steady-state temperature field, at Ra=10⁴, is illustrated in Fig. 2a.

Figure 2. Final steady-state temperature field, in 2-D and 3-D, from Firedrake simulations, designed to match (a) Case 1a from Blankenbach et al. (1989), with contours spanning temperatures of 0 to 1 at 0.05 intervals, and (b) Case 1a from Busse et al. (1994), with transparent isosurfaces plotted at T=0.3, 0.5 and 0.7.

To further highlight the flexibility of Firedrake, we have also simulated some of these cases using a Q2P1DG discretisation for the Stokes system and a Q1 discretisation for the temperature field. The modifications necessary are minimal: for the former, in line 12, the finite-element family is specified as “DPC”, which instructs Firedrake to use a discontinuous, piecewise linear discretisation for pressure. Note that this choice is distinct from a discontinuous, piecewise bilinear pressure space, which, in combination with Q2 velocities, is not LBB-stable, whereas the Q2P1DG pair is (Thieulot and Bangerth, 2022). For temperature, the degree specified in line 13 is changed from 2 to 1. Results using a discontinuous linear pressure, at Ra=10⁵, are presented in Fig. 1c, d, showing a similar trend to those of the Q2Q1 element pair, albeit with rms velocities converging towards benchmark values from above rather than below. Results using a Q1 discretisation for temperature, at Ra=10⁶, are presented in Fig. 1e, f, converging towards benchmark values with increasing resolution. We find that, as expected, a Q2 temperature discretisation leads to more accurate results, although results converge towards the benchmark solutions from different directions. For the remainder of the examples considered herein, we use a Q2Q1 discretisation for the Stokes system and a Q2 discretisation for temperature.

5.2 Extension: more realistic physics

We next highlight the ease with which simulations can be updated to incorporate more realistic physical approximations. We first account for compressibility under the anelastic liquid approximation (ALA) (e.g. Schubert et al., 2001), simulating a well-established benchmark case from King et al. (2010) (Sect. 5.2.1). We subsequently focus on a case with a more Earth-like approximation of the rheology (Sect. 5.2.2), simulating another well-established benchmark case from Tosi et al. (2015). All cases are set up in an enclosed 2-D Cartesian box with free-slip boundary conditions, with the required changes discussed relative to the base case presented in Sect. 5.1.

5.2.1 Compressibility

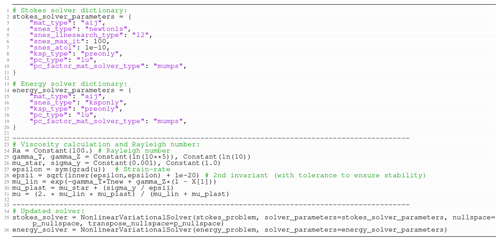

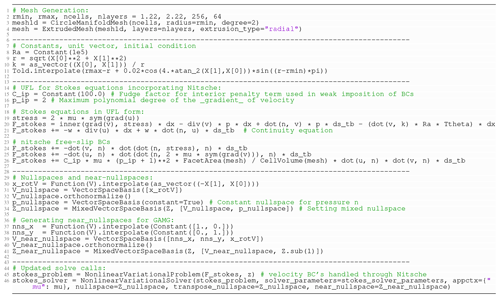

Listing 2. Difference in Firedrake code required to reproduce compressible ALA cases from King et al. (2010) relative to our base case.

The governing equations applicable for compressible mantle convection, under the ALA, are presented in Appendix A (based on, for example, Schubert et al., 2001). Their weak forms are derived by multiplying these equations by appropriate test functions and integrating over the domain, as we did with their incompressible counterparts in Sect. 4. They differ appreciably from the incompressible approximations that have been utilised thus far, with important updates to all three governing equations. Despite this, the changes required to incorporate these equations, within the UFL and Firedrake, are minimal.

Although King et al. (2010) examined a number of cases, we focus on one illustrative example here, at Ra=10⁵ and a dissipation number Di=0.5. This allows us to demonstrate the ease with which these cases can be configured within Firedrake. The required changes, relative to the base case, are displayed in Listing 2. They can be summarised as follows.

- Definition and initialisation of additional constants and the 1-D reference state, derived here via an Adams–Williamson equation of state (lines 1–12). In this benchmark example, several of the key constants and parameters required for compressible convection are assigned values of 1 and could be removed. However, to ensure consistency between the governing equations presented in Appendix A and the UFL, we chose not to omit these constants in Listing 2.

- The UFL for the momentum, mass conservation and energy equations is updated, emphasising once again the resemblance to the mathematical formulation (lines 16–20). The key changes are as follows: (i) the stress tensor is updated to account for a non-zero velocity divergence (line 17), where Identity represents a unit matrix of a given size (2 in this case) and div represents the symbolic divergence of a field; (ii) the Stokes equations are further modified to account for dynamic pressure's influence on buoyancy (final term in line 18); (iii) the mass conservation equation includes the depth-dependent reference density (line 19); and (iv) the energy equation is updated to incorporate adiabatic heating and viscous dissipation terms (final two terms in line 20).

- Temperature boundary conditions are updated, noting that we are solving for deviatoric temperature rather than the full temperature, which also includes the reference state.

- In our Stokes solver, we only specify the transpose_nullspace option (as opposed to both the nullspace and transpose_nullspace options for our base case): the incorporation of dynamic pressure's impact on buoyancy implies that the (right-hand-side) pressure null space is no longer the same as the (left-hand-side) transpose null space. The transpose null space remains the same space of constant pressure solutions and is used to project out these modes from the initial residual vector to ensure that the linear system is well-posed. The right-hand-side null space now consists of different modes, which can be found through integration. However, this null space is only required for iterative linear solvers in which the modes are projected out from the solution vector at each iteration to prevent their unbounded growth.

Figure 3. Results from Firedrake simulations configured to reproduce the 2-D compressible benchmark case from King et al. (2010) at Ra=10⁵ and Di=0.5: (a) final steady-state (full) temperature field, with contours spanning temperatures of 0 to 1 at 0.05 intervals; (b) Nusselt number vs. number of pressure and velocity DOFs for a series of uniform, structured meshes; (c) rms velocity vs. number of pressure and velocity DOFs. The range of solutions provided by different codes in the King et al. (2010) benchmark study is bounded by dashed red lines.

We note that, in setting up the Stokes solver as we have, we incorporate the pressure effect on buoyancy implicitly, as advocated by Leng and Zhong (2008). As this term depends on the pressure that we are solving for, an extra term is required in addition to the pressure gradient matrix G in the Jacobian matrix in Eq. (28). The inclusion of the reference density in the continuity constraint also means that this term is no longer simply represented by the transpose of G. Such changes are automatically incorporated by Firedrake, highlighting a major benefit of the automatic assembly approach that is utilised. To ensure the validity of our approach, we have computed the rms velocity and Nusselt number at a range of different mesh resolutions, for direct comparison to King et al. (2010), with results presented in Fig. 3, alongside the final steady-state (full) temperature field. As expected, results converge towards the benchmark solutions with increasing resolution, demonstrating the applicability and accuracy of Firedrake for compressible simulations of this nature.

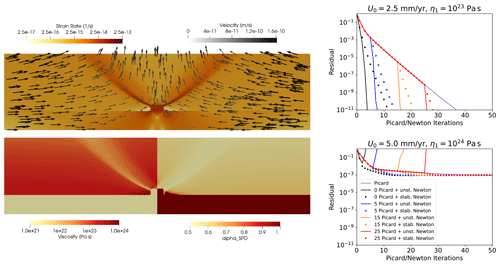

5.2.2 Viscoplastic rheology

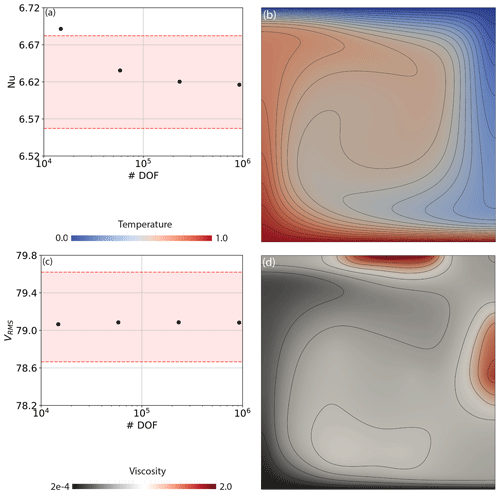

Listing 3. Difference in Firedrake code required to reproduce viscoplastic rheology cases from Tosi et al. (2015) relative to our base case.

To illustrate the changes necessary to incorporate a viscoplastic rheology, which is more representative of deformation within Earth's mantle and lithosphere, we examine a case from Tosi et al. (2015), a benchmark study intended to form a straightforward extension to Blankenbach et al. (1989). Indeed, aside from the viscosity and reference Rayleigh number (Ra0=10²), all other aspects of this case are identical to the case presented in Sect. 5.1. The viscosity field, μ, is calculated as the harmonic mean between a linear component, μlin, and a non-linear plastic component, μplast, which is dependent on the strain rate, as follows:

The linear part is given by an Arrhenius law (the so-called Frank–Kamenetskii approximation):

where γT=ln (ΔμT) and γz=ln (Δμz) are parameters controlling the total viscosity contrast due to temperature and depth respectively. The non-linear component is given by

where μ⋆ is a constant representing the effective viscosity at high stresses and σy is the yield stress. The denominator of the second term in Eq. (38) represents the second invariant of the strain-rate tensor. The viscoplastic flow law (Eq. 36) leads to linear viscous deformation at low stresses and plastic deformation at stresses that exceed σy, with the decrease in viscosity limited by the choice of μ⋆.
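The viscosity law described above can be sketched as a plain function. The example below is a minimal illustration under stated assumptions: the linear component is taken as exp(−γT T + γz z) with z the depth, which is an assumed functional form consistent with the definitions of γT and γz; the plastic component is μ⋆ + σy divided by the second invariant of the strain rate; and the two are combined with the standard two-term harmonic mean. The values chosen for μ⋆ and σy are illustrative and are not taken from the text.

```python
import math

# Viscosity contrasts quoted for the case examined in the text.
GAMMA_T = math.log(1e5)   # ln of total viscosity contrast due to temperature
GAMMA_Z = math.log(10.0)  # ln of total viscosity contrast due to depth

# Illustrative values only (assumptions, not taken from the text).
MU_STAR = 1e-3            # effective viscosity at high stresses
SIGMA_Y = 1.0             # yield stress


def mu_lin(T, depth):
    """Linear component (assumed form): exp(-gamma_T * T + gamma_z * depth)."""
    return math.exp(-GAMMA_T * T + GAMMA_Z * depth)


def mu_plast(strain_rate_invariant):
    """Plastic component: mu_star + sigma_y / second invariant of strain rate."""
    return MU_STAR + SIGMA_Y / strain_rate_invariant


def viscosity(T, depth, strain_rate_invariant):
    """Harmonic mean of the linear and plastic components."""
    return 2.0 / (1.0 / mu_lin(T, depth) + 1.0 / mu_plast(strain_rate_invariant))


# Cold surface material: slow deformation is controlled by the linear part,
# fast deformation yields and the viscosity drops towards the plastic limit.
mu_slow = viscosity(T=0.0, depth=0.0, strain_rate_invariant=1e-8)
mu_fast = viscosity(T=0.0, depth=0.0, strain_rate_invariant=1e8)
print(f"slow deformation: {mu_slow:.6f}, fast deformation: {mu_fast:.6f}")
```

Because the harmonic mean is dominated by the smaller of its two arguments, the effective viscosity follows the linear law at low strain rates and is limited from below by μ⋆ once the material yields.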

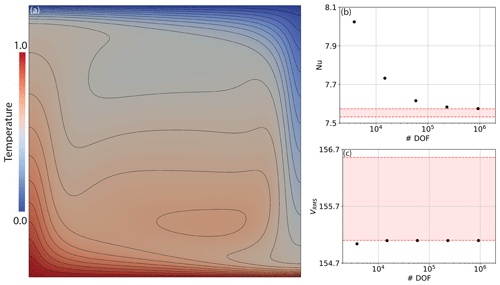

Figure 4. Results from the 2-D benchmark case from Tosi et al. (2015), with a viscoplastic rheology at Ra0=10²: (a) Nusselt number vs. number of pressure and velocity DOFs for a series of uniform, structured meshes; (b) final steady-state temperature field, with contours spanning temperatures of 0 to 1, at 0.05 intervals; (c) rms velocity vs. number of pressure and velocity DOFs; (d) final steady-state viscosity field (note logarithmic scale). In panels (a) and (c), the range of solutions provided by different codes in the Tosi et al. (2015) benchmark study is bounded by dashed red lines.

Although Tosi et al. (2015) examined a number of cases, we focus on one here (Case 4: Ra0=10², ΔμT=10⁵, Δμz=10, μ⋆=10⁻³ and σy=1), which allows us to demonstrate how a temperature-, depth- and strain-rate-dependent viscosity is incorporated within Firedrake. The changes required to simulate this case, relative to our base case, are displayed in Listing 3. These are the following.

- Linear solver options are no longer applicable, given the dependence of viscosity on the flow field, through the strain rate. Accordingly, the solver dictionary is updated to account for the non-linear nature of our Stokes system (lines 2–11). For the first time, we fully exploit the SNES using a set-up based on Newton's method ("snes_type": "newtonls") with a secant line search over the L2 norm of the function ("snes_linesearch_type": "l2"). As we target a steady-state solution, an absolute tolerance is specified for our non-linear solver ("snes_atol": 1e-10).

- Solver options differ between the (non-linear) Stokes and (linear) energy systems. As such, a separate solver dictionary is specified for solution of the energy equation (lines 13–20). Consistent with our base case, we use a direct solver for solution of the energy equation based on the MUMPS library.

- Viscosity is calculated as a function of temperature, depth (μlin – line 29) and strain rate (μplast – line 30), using constants specified in lines 25–26. Linear and non-linear components are subsequently combined via a harmonic mean (line 31).

- Updated solver dictionaries are incorporated into their respective solvers in lines 35 and 36, noting that for this case both the nullspace and transpose_nullspace options are provided for the Stokes system, consistent with the base case.
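The two solver dictionaries described in this list might look as follows. This is a sketch assembled from the options quoted in the text, not a copy of Listing 3: the three SNES entries for the Stokes system are those quoted above, whereas reusing the direct (LU with MUMPS) linear solve inside each Newton iteration is an assumption made here for illustration.

```python
# Linear-solve settings shared by both systems (assumed for the Stokes system,
# stated in the text for the energy system).
direct_linear_solve = {
    "mat_type": "aij",
    "ksp_type": "preonly",
    "pc_type": "lu",
    "pc_factor_mat_solver_type": "mumps",
}

# Non-linear Stokes system: Newton's method with an L2 secant line search
# and an absolute tolerance suited to a steady-state target.
stokes_solver_parameters = {
    "snes_type": "newtonls",
    "snes_linesearch_type": "l2",
    "snes_atol": 1e-10,
    **direct_linear_solve,
}

# Linear energy system: a single direct solve, with no Newton iteration.
energy_solver_parameters = {
    "snes_type": "ksponly",
    **direct_linear_solve,
}

for name, params in [("Stokes", stokes_solver_parameters),
                     ("energy", energy_solver_parameters)]:
    print(name, "->", params["snes_type"], "+", params["pc_type"])
```

In Firedrake, dictionaries of this kind are passed to NonlinearVariationalSolver through its solver_parameters argument, so the two systems can be configured independently without changing the variational forms.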

We note that even though the UFL for the Stokes and energy systems remains identical to our base case, assembly of additional terms in the Jacobian, associated with the non-linearity in this system, is once again handled automatically by Firedrake. To compare our results to those of Tosi et al. (2015), we have computed the rms velocity and Nusselt number at a range of different mesh resolutions. These are presented in Fig. 4 and, once again, results converge towards the benchmark solutions, with increasing resolution. Final steady-state temperature and viscosity fields are also illustrated to allow for straightforward comparison to those presented by Tosi et al. (2015), illustrating that viscosity varies by roughly 4 orders of magnitude across the computational domain.

Taken together, our compressible and viscoplastic rheology results demonstrate the accuracy and applicability of Firedrake for problems incorporating a range of different approximations to the underlying physics. They have allowed us to illustrate Firedrake's flexibility: by leveraging the UFL and PETSc, the framework is easily extensible, allowing for straightforward application to scenarios involving different physical approximations, even if they require distinct solution strategies.

5.3 Extension: dimensions and geometry

In this section we highlight the ease with which simulations can be examined in different dimensions and geometries by modifying our basic 2-D case. We primarily simulate benchmark cases that are well-known within the geodynamical community, initially matching the steady-state, isoviscous simulation of Busse et al. (1994) in a 3-D Cartesian domain. There is currently no published community benchmark for simulations in the 2-D cylindrical shell domain. As such, we next compare results for an isoviscous, steady-state case in a 2-D cylindrical shell domain to those of the Fluidity and ASPECT computational modelling frameworks, noting that Fluidity has been carefully validated against the extensive set of analytical solutions introduced by Kramer et al. (2021a) in both cylindrical and spherical shell geometries. Finally, we analyse an isoviscous 3-D spherical shell benchmark case from Zhong et al. (2008). Once again, the changes required to run these cases are discussed relative to our base case (Sect. 5.1) unless noted otherwise.

5.3.1 3-D Cartesian domain

We first examine and validate our set-up in a 3-D Cartesian domain for a steady-state, isoviscous case – specifically Case 1a from Busse et al. (1994). The domain is a box of dimensions . The initial temperature distribution, chosen to produce a single ascending and descending flow, at and respectively is prescribed as

where A=0.2 is the amplitude of the initial perturbation. We note that this initial condition differs from that specified in Busse et al. (1994), through the addition of boundary layers at the bottom and top of the domain (through the erf terms), although it more consistently drives solutions towards the final published steady-state results. Boundary conditions for temperature are T=0 at the surface (z=1) and T=1 at the base (z=0), with insulating (homogeneous Neumann) sidewalls. No‐slip velocity boundary conditions are specified at the top surface and base of the domain, with free‐slip boundary conditions on all sidewalls. The Rayleigh number is .

Listing 4. Changes required to reproduce a 3-D Cartesian case from Busse et al. (1994) relative to Listing 1.

In comparison to Listing 1, the changes required to simulate this case, using Q2Q1 elements for velocity and pressure, are minimal. The key differences, summarised in Listing 4, are the following.

- The creation of the underlying mesh (lines 1–5), which we generate by extruding a 2-D quadrilateral mesh in the z direction to a layered 3-D hexahedral mesh. Our final mesh has elements in the x, y and z directions respectively (noting that the default value for layer height is ). For extruded meshes, top and bottom boundaries are tagged by `top` and `bottom` respectively, whilst boundary markers from the base mesh can be used to set boundary conditions on the relevant side of the extruded mesh. We note that Firedrake exploits the regularity of extruded meshes to enhance performance.

- Specification of the initial condition for temperature, following Eq. (39), updated values for Ra and definition of the 3-D unit vector (lines 9–11).

- The inclusion of Python dictionaries that define iterative solver parameters for the Stokes and energy systems (lines 15–47). Although direct solves provide robust performance in the 2-D cases examined above, in 3-D the computational (CPU and memory) requirements quickly become intractable. PETSc's `fieldsplit` `pc_type` provides a class of preconditioners for mixed problems that allows one to apply different preconditioners to different blocks of the system. This opens up a large array of potential solver strategies for the Stokes saddle point system (e.g. many of the methods described in May and Moresi, 2008). Here we configure the Schur complement approach as described in Sect. 4.3. We note that this `fieldsplit` functionality can also be used to provide a stronger coupling between the Stokes system and energy equation in strongly non-linear problems, where the Stokes and energy systems are solved together in a single Newton solve that is decomposed through a series of preconditioner stages.

  The `fieldsplit_0` entries configure solver options for the first of these blocks, the K matrix. The linear systems associated with this matrix are solved using a combination of the conjugate gradient method (`cg`, line 23) and an algebraic multigrid preconditioner (`gamg`, line 27). We also specify two options (`gamg_threshold` and `gamg_square_graph`) that control the aggregation method (coarsening strategy) in the GAMG preconditioner, which balance the multigrid effectiveness (convergence rate) with coarse grid complexity (cost per iteration) (Balay et al., 2021a).

  The `fieldsplit_1` entries contain solver options for the Schur complement solve itself. As explained in Sect. 4.3, we do not have explicit access to the Schur complement matrix, $G^{T}K^{-1}G$, but can compute its action on any vector, at the cost of a `fieldsplit_0` solve with the K matrix, which is sufficient to solve the system using a Krylov method. However, for preconditioning, we do need access to the values of the matrix or its approximation. For this purpose we approximate the Schur complement matrix with a mass matrix scaled by viscosity, which is implemented in `MassInvPC` (line 35) with the viscosity provided through the optional `appctx` argument in line 71. This is a simple example of Firedrake's powerful programmable preconditioner interface, which, in turn, connects with the Python preconditioner interface of PETSc (line 34). In more complex cases the user can specify their own linear operator in the UFL that approximates the true linear operator but is easier to invert. The `MassInvPC` preconditioner step itself is performed through a linear solve with the approximate matrix with options prefixed with `Mp_` to specify a conjugate gradient solver with symmetric SOR (SSOR) preconditioning (lines 36–38). Note that PETSc's `sor` preconditioner type, specified in line 38, defaults to the symmetric SOR variant. Since this preconditioner step now involves an iterative solve, the Krylov method used for the Schur complement needs to be of a flexible type, and we specify `fgmres` in line 32.

  Specification of the matrix type `matfree` (line 16) for the combined system ensures that we do not explicitly assemble its associated sparse matrix, instead computing the matrix–vector multiplications required by the Krylov iterations as they arise. For example, the action of the sub-matrix G on a sub-vector can be evaluated as (cf. Eqs. 28, 30) which is assembled by Firedrake directly from the symbolic expression into a discrete vector. Again, for preconditioning in the K-matrix solve, we need access to matrix values, which is achieved using `AssembledPC`. This explicitly assembles the K matrix by extracting relevant terms from the `F_Stokes` form.

  Finally, the energy solve is performed through a combination of the GMRES (`gmres`) Krylov method and SSOR preconditioning (lines 42–47). For all iterative solves we specify a convergence criterion based on the relative reduction of the preconditioned residual (`ksp_rtol`: lines 24, 33, 36 and 46).

- Velocity boundary conditions, which must be specified along all six faces, are modified in lines 51–53, with temperature boundary conditions specified in line 54.

- Generating near-null-space information for the GAMG preconditioner (lines 58–66), consisting of three rotational (`x_rotV`, `y_rotV`, `z_rotV`) and three translational (`nns_x`, `nns_y`, `nns_z`) modes, as outlined in Sect. 4.3. These are combined in the mixed function space in line 66.

- Updating of the Stokes problem (line 70) to account for additional boundary conditions and the Stokes solver (line 71) to include the near-null-space options defined above, in addition to the optional `appctx` keyword argument that passes the viscosity through to our `MassInvPC` Schur complement preconditioner. Energy solver options are also updated relative to our base case (lines 72–73), using the dictionary created in lines 42–47.
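The solver configuration described in the list above can be sketched as two nested Python dictionaries of PETSc options. This is an illustrative reconstruction rather than a copy of Listing 4: all tolerances and the two GAMG tuning values are placeholders, the outer `ksp_type` and `pc_fieldsplit_schur_fact_type` entries are assumptions, the `assembled_` prefix assumes the GAMG options are forwarded through `AssembledPC`, and option names may differ between PETSc versions. The `flatten` helper is hypothetical and only shows how nested keys correspond to prefixed PETSc option names.

```python
# Illustrative PETSc option dictionaries; numerical values are placeholders.
stokes_solver_parameters = {
    "mat_type": "matfree",                    # do not assemble the mixed matrix
    "ksp_type": "preonly",                    # assumption: apply factorisation once
    "pc_type": "fieldsplit",
    "pc_fieldsplit_type": "schur",
    "pc_fieldsplit_schur_fact_type": "full",  # assumption
    "fieldsplit_0": {                         # velocity block (K matrix)
        "ksp_type": "cg",
        "ksp_rtol": 1e-5,                     # placeholder tolerance
        "pc_type": "python",
        "pc_python_type": "firedrake.AssembledPC",
        "assembled_pc_type": "gamg",
        "assembled_pc_gamg_threshold": 0.01,      # placeholder
        "assembled_pc_gamg_square_graph": 100,    # placeholder
    },
    "fieldsplit_1": {                         # Schur complement solve
        "ksp_type": "fgmres",                 # flexible: inner solve is iterative
        "ksp_rtol": 1e-4,                     # placeholder tolerance
        "pc_type": "python",
        "pc_python_type": "firedrake.MassInvPC",
        "Mp_ksp_type": "cg",
        "Mp_ksp_rtol": 1e-5,                  # placeholder tolerance
        "Mp_pc_type": "sor",                  # PETSc's sor defaults to symmetric SOR
    },
}

energy_solver_parameters = {
    "ksp_type": "gmres",
    "ksp_rtol": 1e-5,                         # placeholder tolerance
    "pc_type": "sor",
}


def flatten(options, prefix=""):
    """Hypothetical helper: map nested keys to prefixed PETSc option names."""
    flat = {}
    for key, value in options.items():
        name = prefix + key
        if isinstance(value, dict):
            flat.update(flatten(value, name + "_"))
        else:
            flat[name] = value
    return flat


flat_stokes = flatten(stokes_solver_parameters)
```

Dictionaries of this shape would be handed to Firedrake's variational solvers through the `solver_parameters` keyword argument, alongside the near-null-space information and `appctx` described above.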

Figure 5. Results from 3‐D isoviscous simulations in Firedrake, configured to reproduce benchmark results from Case 1a of Busse et al. (1994): (a) Nusselt number vs. number of pressure and velocity DOFs at for a series of uniform, structured meshes; (b) rms velocity vs. number of pressure and velocity DOFs. Benchmark values are denoted by dashed red lines.

Listing 5. Difference in Firedrake code required to reproduce the isoviscous case in a 2-D cylindrical shell domain.

Our model results can be validated against those of Busse et al. (1994). As with our previous examples, we compute the Nusselt number and rms velocity at a range of different mesh resolutions, with results presented in Fig. 5. We find that results converge towards the benchmark solutions with increasing resolution, as expected. The final steady-state temperature field is illustrated in Fig. 2b.

5.3.2 2-D cylindrical shell domain

We next examine simulations in a 2-D cylindrical shell domain, defined by the radii of the inner (rmin) and outer (rmax) boundaries. These are chosen such that the non-dimensional depth of the mantle is rmax − rmin = 1 and the ratio of the inner and outer radii is rmin/rmax ≈ 0.55, thus approximating the ratio between the radii of the core–mantle boundary (CMB) and Earth's surface. Specifically, we set rmin=1.22 and rmax=2.22. The initial temperature distribution, chosen to produce four equidistant plumes, is prescribed as

where A=0.02 is the amplitude of the initial perturbation. Boundary conditions for temperature are T=0 at the surface (rmax) and T=1 at the base (rmin). Free‐slip velocity boundary conditions are specified on both boundaries, which we incorporate weakly through the Nitsche approximation (see Sect. 4.1). The Rayleigh number is .

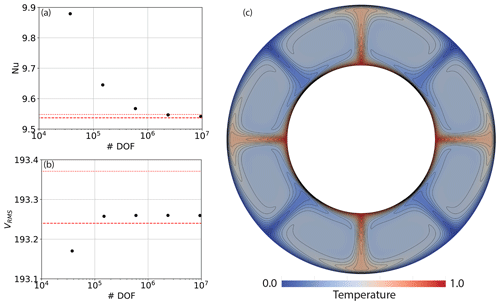

Figure 6. (a, b) Nusselt number/rms velocity vs. number of pressure and velocity DOFs at for a series of uniform, structured meshes in a 2-D cylindrical shell domain. High-resolution, adaptive mesh results from the Fluidity computational modelling framework (Davies et al., 2011) are delineated by dashed red lines, with results from ASPECT delineated by dotted red lines (Bangerth et al., 2020); (c) final steady-state temperature field, with contours spanning temperatures of 0 to 1, at intervals of 0.05.

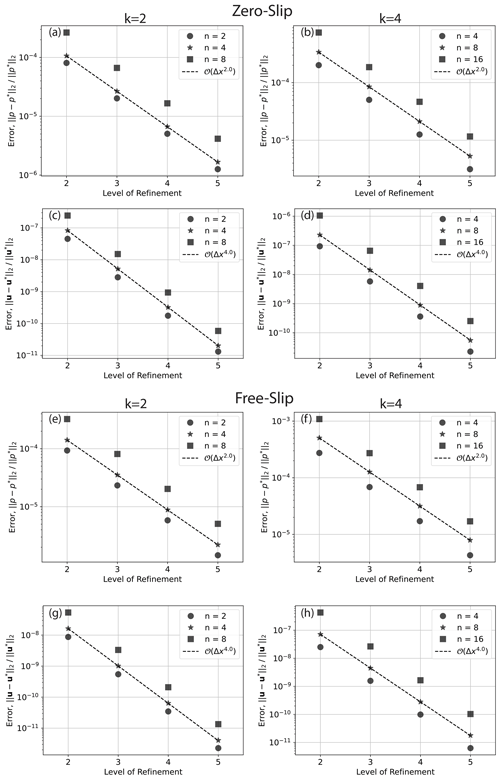

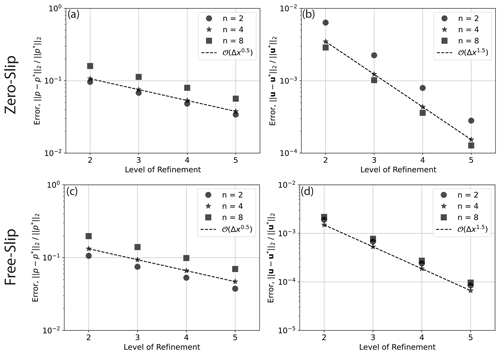

Figure 7. Convergence for 2-D cylindrical shell cases with zero-slip (a–d) and free-slip (e–h) boundary conditions, driven by smooth forcing at a series of different wave numbers, n, and different polynomial orders of the radial dependence, k, as indicated in the legend (see Kramer et al., 2021a, for further details). Convergence rate is indicated by dashed lines, with the order of convergence provided in the legend. For the cases plotted, the series of meshes start at refinement level 1, where the mesh consists of 1024 divisions in the tangential direction and 64 radial layers. At each subsequent level the mesh is refined by doubling resolution in both directions.

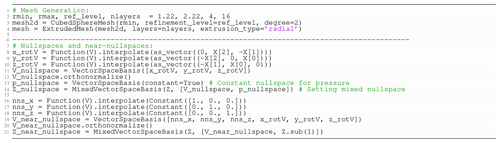

With a free-slip boundary condition on both boundaries, one can add an arbitrary rotation of the form to the velocity solution (i.e. this case incorporates a velocity null space as well as a pressure null space). As noted in Sect. 4, these lead to null modes (eigenvectors) for the linear system, rendering the resulting matrix singular. In preconditioned Krylov methods, these null modes must be subtracted from the approximate solution at every iteration (e.g. Kramer et al., 2021a), which we illustrate through this example. The key changes required to simulate this case, displayed in Listing 5, are the following.

- Mesh generation: we generate a circular manifold mesh (with 256 elements in this example) and extrude in the radial direction, using the optional keyword argument `extrusion_type`, forming 64 layers (lines 2–4). To better represent the curvature of the domain and ensure accuracy of our quadratic representation of velocity, we approximate the curved cylindrical shell domain quadratically, using the optional keyword argument `degree=2` (see Sect. 4 for further details).

- The unit vector, , points radially in the direction opposite to gravity, as defined in line 10. The temperature field is initialised using Eq. (41) in line 11.

- Boundary conditions are no longer aligned with Cartesian directions. We use the Nitsche method (see Sect. 4.1) to impose our free-slip boundary conditions weakly (lines 15–27). The fudge factor in the interior penalty term is set to 100 in line 16, with Nitsche-related contributions to the UFL added in lines 24–27. Note that, for extruded meshes in Firedrake, `ds_tb` denotes an integral over both the top and bottom surfaces of the mesh (`ds_t` and `ds_b` denote integrals over the top or bottom surface of the mesh respectively). `FacetArea` and `CellVolume` return respectively $A_f$ and required by Eq. (17). Given that velocity boundary conditions are handled weakly through the UFL, they are no longer passed to the Stokes problem as a separate option (line 46). Note that, in addition to the Nitsche terms, the UFL for the Stokes equations now also includes boundary terms associated with the pressure gradient and velocity divergence terms, which were omitted in Cartesian cases (for details, see Sect. 4.1).

- We define the rotational null space for velocity and combine this with the pressure null space in the mixed finite-element space Z (lines 30–34). Constant and rotational near-null spaces, utilised by our GAMG preconditioner, are also defined in lines 37–41, with this information passed to the solver in line 46. Note that iterative solver parameters, identical to those presented in the previous example, are used (see Sect. 5.3.1).
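The removal of a rotational null mode from an approximate solution, as described above for the free-slip cylindrical shell, can be illustrated with a small stand-alone sketch. It is not the Firedrake or PETSc implementation: it assumes the rigid-body rotation about the origin takes the form (−y, x), samples it at a handful of hypothetical points on the two boundaries, and uses the plain Euclidean inner product of the sampled values. All function and variable names are illustrative.

```python
import math


def rotation_mode(points):
    """Rigid-body rotation (-y, x) sampled at each point, flattened to one vector."""
    mode = []
    for x, y in points:
        mode.extend((-y, x))
    return mode


def dot(a, b):
    """Euclidean inner product of two flat vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))


def remove_null_mode(vec, mode):
    """Subtract the component of vec along mode (orthogonal projection)."""
    coeff = dot(vec, mode) / dot(mode, mode)
    return [v - coeff * m for v, m in zip(vec, mode)]


# Hypothetical sample points on the inner and outer boundaries of the shell.
n_theta = 16
points = [
    (r * math.cos(2 * math.pi * k / n_theta), r * math.sin(2 * math.pi * k / n_theta))
    for r in (1.22, 2.22)
    for k in range(n_theta)
]
mode = rotation_mode(points)

# A purely radial field, which contains no rigid rotation.
radial = []
for x, y in points:
    r = math.hypot(x, y)
    radial.extend((x / r, y / r))

# Add an arbitrary multiple of the null mode, then project it out again.
polluted = [u + 3.7 * m for u, m in zip(radial, mode)]
cleaned = remove_null_mode(polluted, mode)
max_diff = max(abs(c - u) for c, u in zip(cleaned, radial))
```

Because the radial field is orthogonal to the rotation at every sample point, the projection recovers it regardless of how much rotation was added, which is the property a Krylov solver relies on when the operator is singular.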

Listing 6. Difference in Firedrake code required to reproduce 3-D spherical shell benchmark cases from Zhong et al. (2008).

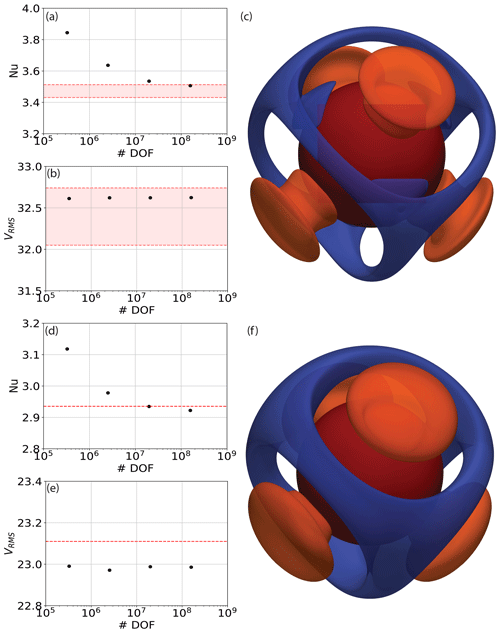

Figure 8. (a, b) Nusselt number/rms velocity vs. number of pressure and velocity DOFs, designed to match an isoviscous 3-D spherical shell benchmark case at for a series of uniform, structured meshes. The range of solutions predicted in previous studies is bounded by dashed red lines (Bercovici et al., 1989; Ratcliff et al., 1996; Yoshida and Kageyama, 2004; Stemmer et al., 2006; Choblet et al., 2007; Tackley, 2008; Zhong et al., 2008; Davies et al., 2013; Liu and King, 2019). (c) Final steady-state temperature field highlighted through isosurfaces at temperature anomalies (i.e. away from the radial average) of (blue) and T=0.15 (orange), with the core–mantle boundary at the base of the spherical shell marked by a red surface; (d–f) as in (a)–(c) but for a temperature-dependent viscosity case, with thermally induced viscosity contrasts of $10^{2}$. Fewer codes have published predictions for this case, but results of Zhong et al. (2008) are marked by dashed red lines for comparison.

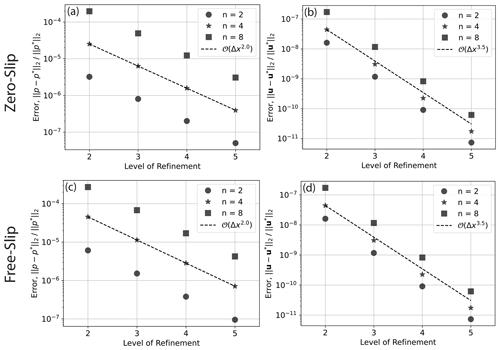

Our predicted Nusselt numbers and rms velocities converge towards those of existing codes with increasing resolution (Fig. 6), demonstrating the accuracy of our approach. To further assess the validity of our set-up, we have confirmed the accuracy of our solutions to the Stokes system in this 2-D cylindrical shell geometry, through comparisons to analytical solutions from Kramer et al. (2021a) for both zero-slip and free-slip boundary conditions. These provide a suite of solutions based upon a smooth forcing term at a range of wave numbers n, with radial dependence formed by a polynomial of arbitrary order k. We study the convergence of our Q2Q1 discretisation with respect to these solutions. Convergence plots are illustrated in Fig. 7. We observe convergence for the Q2Q1 element pair at fourth and second order, for velocity and pressure respectively, with both zero-slip and free-slip boundary conditions. For velocity this is super-convergent, exceeding the theoretical (minimum) expected order of 3, whilst pressure converges at its expected order of 2 (we note that super-convergence was also observed in Zhong et al., 2008, and Kramer et al., 2021a). Cases with lower wave number, n, show smaller relative error than those at higher n, as expected. The same observation holds for lower and higher polynomial orders, k=2 and k=4, for the radial density profile. To demonstrate the flexibility of Firedrake, we have also run comparisons against analytical solutions using a (discontinuous) delta-function forcing. In this case, convergence for the Q2Q1 discretisation (Fig. A1) drops to 1.5 and 0.5 for velocity and pressure respectively. However, by employing the Q2P1DG finite-element pair, we observe convergence at 3.5 and 2.0 (Fig. A2). Consistent with Kramer et al. (2021a), this demonstrates that the continuous approximation of pressure can lead to a reduced order of convergence in the presence of discontinuities, which can be overcome using a discontinuous pressure discretisation.
Python scripts for these analytical comparisons can be found in the repository accompanying this paper.
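As a brief illustration of how an order of convergence is read off from such comparisons, the sketch below estimates the observed order between successive refinement levels, assuming the mesh spacing halves at each level (as in the refinement sequence of Fig. 7). The error values are manufactured for the example so that they scale exactly as the fourth and second power of the mesh spacing; they are not results from this study, and the function name is hypothetical.

```python
import math


def observed_orders(errors):
    """Convergence order between successive levels when h halves each time."""
    return [math.log2(coarse / fine) for coarse, fine in zip(errors, errors[1:])]


# Hypothetical mesh spacings for four refinement levels, halving each time.
h = [2.0 ** -level for level in range(1, 5)]

# Manufactured errors (not measured data), scaling as h**4 and h**2.
velocity_errors = [0.3 * hi ** 4 for hi in h]
pressure_errors = [1.5 * hi ** 2 for hi in h]

velocity_orders = observed_orders(velocity_errors)
pressure_orders = observed_orders(pressure_errors)
```

With measured errors the estimated orders would only approach their asymptotic values at sufficiently fine resolution, so it is usual to inspect the trend across levels rather than a single pair.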

5.3.3 3-D spherical shell domain

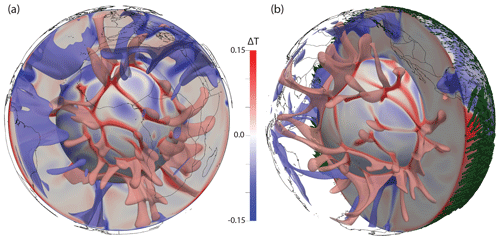

We next move into a 3-D spherical shell geometry, which is required to simulate global mantle convection. We examine a well-known isoviscous community benchmark case (e.g. Bercovici et al., 1989; Ratcliff et al., 1996; Zhong et al., 2008; Davies et al., 2013), at a Rayleigh number of , with free-slip velocity boundary conditions. Temperature boundary conditions are set to 1 at the base of the domain (rmin=1.22) and 0 at the surface (rmax=2.22), with the initial temperature distribution approximating a conductive profile with superimposed perturbations triggering tetrahedral symmetry at spherical harmonic degree l=3 and order m=2 (see Zhong et al., 2008, for further details).
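For orientation, the purely conductive temperature profile in a spherical shell with the boundary values and radii given above can be written in closed form, since steady radial conduction requires T = a/r + b. The sketch below evaluates that profile only. It is not the benchmark's initial condition, which the text describes as approximating a conductive profile and which adds spherical harmonic perturbations specified in Zhong et al. (2008); the function name and sample radii are illustrative.

```python
def conductive_profile(r, rmin=1.22, rmax=2.22):
    """Steady radial conduction in a spherical shell: T(rmin)=1, T(rmax)=0."""
    return rmin * (rmax - r) / (r * (rmax - rmin))


# Evaluate at a few illustrative radii between the two boundaries.
radii = [1.22 + 0.25 * i for i in range(5)]
profile = [conductive_profile(r) for r in radii]
```

Unlike the linear profile of a Cartesian box, this profile falls off most steeply near the inner boundary, reflecting the smaller surface area through which the same heat flow must pass.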