the Creative Commons Attribution 4.0 License.

pyESDv1.0.1: an open-source Python framework for empirical-statistical downscaling of climate information

Daniel Boateng

Sebastian G. Mutz

The nature and severity of climate change impacts vary significantly from region to region. Consequently, high-resolution climate information is needed for meaningful impact assessments and the design of mitigation strategies. This demand has led to an increase in the application of empirical-statistical downscaling (ESD) models to general circulation model (GCM) simulations of future climate. In contrast to dynamical downscaling, the perfect prognosis ESD (PP-ESD) approach has several benefits, including low computation costs, the prevention of the propagation of GCM-specific errors, and high compatibility with different GCMs. Despite their advantages, the use of ESD models and the resulting data products is hampered by (1) the lack of accessible and user-friendly downscaling software packages that implement the entire downscaling cycle, (2) difficulties reproducing existing data products and assessing their credibility, and (3) difficulties reconciling different ESD-based predictions for the same region. We address these issues with a new open-source Python PP-ESD modeling framework called pyESD. pyESD implements the entire downscaling cycle, i.e., routines for data preparation, predictor selection and construction, model selection and training, evaluation, utility tools for relevant statistical tests, visualization, and more. The package includes a collection of well-established machine learning algorithms and allows the user to choose a variety of estimators, cross-validation schemes, objective function measures, and hyperparameter optimization in relatively few lines of code. The package is well-documented, highly modular, and flexible. It allows quick and reproducible downscaling of any climate information, such as precipitation, temperature, wind speed, or even short-term glacier length and mass changes. 
We demonstrate the use and effectiveness of the new PP-ESD framework by generating weather-station-based downscaling products for precipitation and temperature in complex mountainous terrain in southwestern Germany. The application example covers all important steps of the downscaling cycle and different levels of experimental complexity. All scripts and datasets used in the case study are publicly available to (1) ensure the reproducibility and replicability of the modeled results and (2) simplify learning to use the software package.

The impacts of anthropogenic climate change are far-reaching and spatially heterogeneous. Consequently, regional- and local-scale predictions of 21st century climate evolution are needed to help guide the design of adaptation measures, vulnerability assessments, and resilience strategies (Field and Barros, 2014; Weaver et al., 2013). General circulation models (GCMs) are well-established tools for simulating climate trends in response to different anthropogenic and natural forcings, such as atmospheric CO2 concentrations, land cover, and orbital changes. They are process-driven models based on our understanding of atmospheric physics. They are commonly used to predict future trends of climate change by prescribing predicted future forcings described by the Representative Concentration Pathways (RCPs). RCPs are greenhouse gas concentration scenarios that quantify the radiative forcing of plausible demographic and technological developments, as well as anthropogenic activities (Meinshausen et al., 2011; Pachauri et al., 2014). While GCMs can produce useful estimates of many climate system elements on the global and synoptic scale (such as circulation patterns), mesoscale atmospheric processes, clouds, and specific climate variables like precipitation are still relatively poorly represented (e.g., Steppeler et al., 2003). Moreover, GCM simulations are affected by systematic biases on the local and regional scale due to their coarse resolutions and model parameterization (e.g., Errico et al., 2001). These can lead to inaccurate predictions on the spatial scales that are relevant for regional climate change impact assessments, such as studies investigating the impacts on the hydrological cycle (Boé et al., 2009), mountain glaciers (Mutz et al., 2016; Mutz and Aschauer, 2022), air quality (e.g., Colette et al., 2012), and agriculture (e.g., Shahhosseini et al., 2020). 
Therefore, GCM-based predictions are downscaled by performing dynamical downscaling or statistical downscaling, with empirical-statistical downscaling (ESD) being one type of statistical downscaling (Murphy, 2000; Schmidli et al., 2007; Wilby and Dawson, 2013).

Dynamical downscaling involves the nesting of regional climate models (RCMs) into coarse-resolution GCM simulations to produce higher-resolution regional estimates. While RCMs allow an easy exploration of physical processes leading to the predicted climate, they are computationally costly. Furthermore, slight changes in the model domain and boundary conditions require the repetition of the whole process, thereby limiting their application in many climate impact studies (e.g., Giorgi and Mearns, 1991; Xu et al., 2019). ESD is computationally less costly and implicitly considers local conditions, such as topography and vegetation, without the need to parameterize them explicitly. It is widely used for climate change impact studies and relies on establishing empirical transfer functions to relate large-scale atmospheric variables (predictors) to a local-scale observation (predictand). ESD models can be directly coupled to GCMs (e.g., Mutz et al., 2021) or RCMs (e.g., Sunyer et al., 2015; Laflamme et al., 2016; Jakob Themeßl et al., 2011) in a one-way coupling or pipeline with no feedback into the climate models. ESD can be broadly categorized into perfect prognosis (PP) and model output statistics (MOS) approaches (Maraun and Widmann, 2018; Marzban et al., 2006). MOS uses simulated predictors from the GCM or RCM to find the transfer function and generate a predictand time series with bias corrections (e.g., Sachindra et al., 2014; Wilby et al., 1998). Therefore, the MOS-ESD transfer functions are specific to a particular GCM or RCM and not easily transferable to other models. In contrast, the PP-ESD approach is GCM- and RCM-agnostic: ESD models are obtained from observational data for both the predictand and predictors and can therefore be coupled to any GCM or RCM (e.g., Hertig et al., 2019; Mutz et al., 2021; Ramon et al., 2021; Tatli et al., 2004). Therefore, this paper, and the software package presented in it, focuses primarily on the PP-ESD approach.

The PP-ESD modeling framework consists of four critical steps to establish and evaluate the empirical transfer functions that constitute an ESD model (e.g., Maraun et al., 2010; Maraun and Widmann, 2018): (1) the first step involves the selection and construction of predictors. The selection of the most informative and relevant predictors generally increases the performance and robustness of PP-ESD models. Preliminary predictor selection should be guided by knowledge of the atmospheric dynamics that govern a specific regional climate. This selection may be refined using statistical dependency measures such as correlation analysis (e.g., Wilby et al., 2002; Wilby and Wigley, 2002), regularization regression (e.g., Hammami et al., 2012), stepwise multi-linear regression (e.g., Mutz et al., 2021), and decision tree selection (e.g., Nourani et al., 2019). The selected predictors should be able to explain most of the predictand's variability and must be represented well by the GCMs (Maraun and Widmann, 2018; Wilby et al., 2004). (2) The second step involves the selection of the learning algorithms (i.e., the learning model used for training the ESD model). These range from classical regressions and analog models, including parametric and nonparametric models (Gutiérrez et al., 2013; Zorita and Storch, 1999; Lorenz, 1969), to advanced machine learning (ML) algorithms (e.g., Sachindra et al., 2018; Xu et al., 2020). The various techniques vary in complexity, scalability, interpretability, and underlying assumptions. For example, classical regressions and analog models allow better interpretations of the simulated results and are usually simpler to implement. On the other hand, several ML algorithms have the ability to capture more complex links between predictors and predictands and do not require an explicit assumption of the distribution of observational data during the optimization process (Jordan and Mitchell, 2015; Raissi and Karniadakis, 2018). 
The choice of the optimal PP-ESD training technique depends on the predictand variable (e.g., precipitation and temperature), length of the observational records, spatiotemporal variability, spatial coherence, regional setting, and temporal stationarity of the transfer functions. (3) The third step involves the actual training and validation of the PP-ESD models, and (4) the final step is the PP-ESD model evaluation.

The high demand for climate change information on the regional and local scale has led to the widespread use of ESD methods and an overwhelming body of research to sort through in order to select the most suitable technique for a specific problem. In the past, generalized linear models (GLMs) (e.g., Fealy and Sweeney, 2007), regularization models (e.g., Li et al., 2020), Bayesian regression models (e.g., Das et al., 2014; Zhang and Yan, 2015), support vector machines (SVMs) (e.g., Chen et al., 2010; Ghosh and Mujumdar, 2008), artificial neural networks (ANNs) (e.g., Sachindra et al., 2018; Vu et al., 2016; Xu et al., 2020), homogeneous (e.g., random forest) and heterogeneous (e.g., stacking) ensemble learning models (e.g., Massaoudi et al., 2021; Pang et al., 2017; Zhang et al., 2021), and others have been used to construct PP-ESD models and downscale climate information. However, there is no universal protocol to help choose a robust model for a specific region and climate variable (Gutiérrez et al., 2019), thus making the selection of the most suitable learning algorithm challenging. Moreover, the recent increase in ML algorithms and platforms (e.g., programming languages and software) exacerbates the problem by creating an even wider range of PP-ESD techniques without well-defined protocols. These developments have shifted the focus toward the establishment of standardized user-friendly tools that would resolve most of the issues related to the development of PP-ESD models. Such tools exist in various forms, and each tackles certain aspects of the inherent ESD modeling complexities to ensure fast and efficient climate-impact-related studies. For example, the R-package esd, developed and maintained by the Norwegian Meteorological Institute (MET Norway), comprises many utility functions for data retrieval, manipulation and visualization, commonly used statistical tools, and implementations of GLM and regression techniques for generating ESD models (Benestad et al., 2015b). 
Moreover, an interactive web-based downscaling tool developed as part of the EU-funded ENSEMBLES project (van der Linden and Mitchell, 2009) provides an end-to-end framework through data access, computing resources, and ESD model alternatives (Gutiérrez et al., 2012). The decision support tool sdsm (Wilby et al., 2002) provides auxiliary downscaling routines like predictor screening, regression, model evaluation, and visualization for near-surface weather variables on a daily scale. Most recently, the climate analysis tool Climate4R has been extended with statistical downscaling functionalities (downscaleR) that provide a wide range of MOS and PP techniques (Bedia et al., 2020). While these tools provide specialist solutions, there is no single tool or modeling framework that provides a wide range of contemporary (and commonly used) algorithms and implements all downscaling steps (i.e., predictor selection and construction, learning algorithm selection, training and validation of ESD models, GCM–ESD model coupling, model evaluation, visualization, and relevant statistical tools). Moreover, there is no user-friendly ESD tool written in a widely used programming language like Python, which would remove barriers for the use of ESD techniques in research and teaching. Many of the Python-based tools currently available (e.g., xclim, Bourgault et al., 2023; ibicus, Spuler et al., 2023; CCdownscaling, Polasky et al., 2023) are primarily designed for bias correction in MOS downscaling, and extending these tools to the PP-ESD framework would diversify the publicly available downscaling tools. 
A complete, user-friendly, robust, and efficient open-source downscaling framework would contribute significantly to climate change impact assessment studies by (a) empowering researchers through accessible software and easy switches between alternative methods, (b) allowing for efficient updating of predictions in a consistent modeling framework, (c) increasing the transparency and reproducibility of results, and (d) removing barriers in teaching in order to familiarize future generations of researchers with the ESD approach.

Here, we introduce a new PP-ESD framework that addresses the gaps highlighted above. It is the thoroughly tested, heavily documented, efficient, and user-friendly open-source Python Empirical-Statistical Downscaling (pyESD) package. pyESD adopts an object-oriented programming (OOP) style and treats the predictand data archives (e.g., the weather station) as objects with many functionalities and attributes relevant to ESD modeling. It is flexible with regards to the training dataset and predictand variable. For example, pyESD's predecessors were successfully applied for the prediction of local temperatures (Mutz et al., 2021) and glacier mass balance (Mutz and Aschauer, 2022) in South America. Here, we additionally demonstrate its capabilities in downscaling precipitation in complex terrain in southwestern Germany. pyESD comprises a collection of utilities and methods for data preparation, predictor selection, data transformation, predictor construction, model selection and training, evaluation, statistical testing, and visualization. Unlike existing packages, pyESD also includes common machine learning algorithms (i.e., different estimators, cross-validation schemes, objective function measures, hyperparameter optimizers, etc.) that can be experimented with in a few lines of code.

In the first part of this paper (Sect. 2), we provide detailed descriptions of the model structure and the theoretical background for the implemented methods. In the second part (Sect. 3), we demonstrate the package's functionalities with an illustrative case study for a hydrological subcatchment in mountainous terrain in southwestern Germany. Here, we walk the reader through a typical downscaling process with pyESD. More specifically, we generate station-based downscaling products for precipitation and temperature changes in response to different RCPs. When discussing downscaling-related tasks, we list the corresponding pyESD routines as italicized function names. We only use publicly available data for a set of weather stations to ensure the reproducibility and replicability of the results (see Sect. 3). Moreover, all the scripts used for the case study are provided and can be easily adapted to suit the researcher's focus. We discuss the application example in Sect. 4 and conclude with a summary and important remarks in Sect. 5.

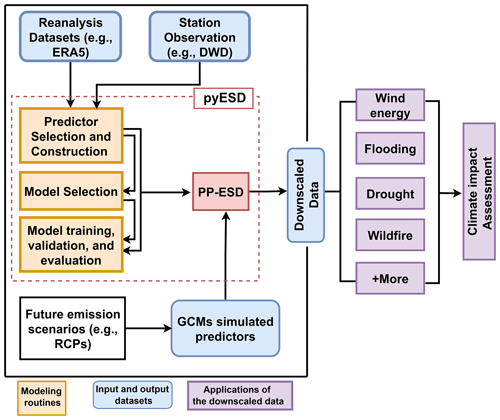

The PP-ESD downscaling cycle involves technical and laborious steps that must be carefully addressed to ensure the robustness and accuracy of local-scale climate predictions. The pyESD package implements all these steps in an efficient modeling pipeline for an easier workflow. In this section, we describe this workflow (Fig. 1) along with the main features of the package.

Figure 1. The main features and workflow of PP-ESD implemented in the pyESD package (highlighted by the dashed red box). The weather station and reanalysis datasets are used to select the robust predictors for model training and validation. The trained PP-ESD model is then coupled to GCM simulations forced with different scenarios to predict the local-scale future estimates that can be used for climate change impact assessment (not included in the pyESD package).

2.1 Data structure and preprocessing

PP-ESD modeling requires (1) predictand data from weather stations or other observational systems, (2) reanalysis datasets for the construction of predictors, and (3) GCM or RCM output for the construction of simulated predictors if the PP-ESD models are used for downscaling simulated climates. To understand the workflow demonstrated in later sections, the reader needs to be aware of a few important package design choices related to data structure and preprocessing.

- The package adopts the OOP paradigm and treats every predictand data archive (e.g., weather station or glacier) as an object. Since the current version of the package focuses only on station-based downscaling, we will henceforth describe it only as the weather station object. The package accepts the comma-separated value (CSV) file format, which is typical for weather station data. These files contain the predictand time series, such as a temperature record, as well as weather station attributes like the weather station's name, ID, and location. The read_station_csv function from the pyESD.weatherstation module initializes each weather station as a separate object using the StationOperator, which provides all the other functionalities. The weather station object is associated with at least one predictand dataset (i.e., the values of at least one climate variable recorded at that particular station). Furthermore, the initialized object includes all attributes and methods required for the complete downscaling cycle. For instance, the package adopts the fit and predict framework of the scikit-learn Python package (Pedregosa et al., 2011), which can be directly applied to the weather station object.

- The data needed for predictor construction are read from files in the network Common Data Form (netCDF) format with the Xarray toolkit (Hoyer and Hamman, 2017). Due to the size of these datasets and the computations required to construct the predictors, the memory demand can be very high, and repeating this step every time a new model is trained or applied becomes computationally very costly. This problem is circumvented by storing the constructed predictors for each weather station in pickle files. At the next runtime, these can quickly be read (or unpacked) to reduce the computational costs and facilitate faster experimentation with the package.

- Since reanalysis datasets, climate model output, and weather station data are provided by different data centers and have varied structures and attributes, it is well outside the scope of our project to write and include a unified data processing function for all. Instead, the preprocessing functions of the current version of pyESD are written for state-of-the-art, representative, and publicly available datasets. More specifically, they work with weather station data from the German Weather Service (Deutscher Wetterdienst, DWD) and the ERA5 reanalysis product (Hersbach et al., 2020). These preprocessing functions are provided as part of the package utilities (pyESD.data_preprocess_utils) and can easily be adapted to work for researchers' preferred datasets. The functions will be expanded in the future to allow experimentation with other popular datasets and assess the sensitivity of ESD model performance to the choice of reanalysis datasets (e.g., Brands et al., 2012).
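The pickle-based caching of constructed predictors described in the list above can be sketched as follows. This is only a minimal illustration of the idea with the Python standard library: the function name `load_or_build_predictors`, the `build_fn` callback, and the file layout are hypothetical and are not taken from the pyESD API.

```python
import pickle
from pathlib import Path


def load_or_build_predictors(cache_file, build_fn):
    """Return cached predictors if the pickle file exists, else build and store them.

    `build_fn` is a hypothetical zero-argument callable that performs the
    expensive predictor construction (e.g., reading netCDF files and
    computing regional means) and returns a picklable object.
    """
    cache_file = Path(cache_file)
    if cache_file.exists():
        # Fast path: unpack the predictors stored during an earlier run.
        with cache_file.open("rb") as fh:
            return pickle.load(fh)
    # Slow path: construct the predictors once and store them for later runs.
    predictors = build_fn()
    cache_file.parent.mkdir(parents=True, exist_ok=True)
    with cache_file.open("wb") as fh:
        pickle.dump(predictors, fh)
    return predictors
```

With one cache file per weather station, the expensive construction step would run only the first time a station is processed; later experiments with different learning models reuse the stored predictors.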

2.2 Predictor selection and construction

The PP-ESD approach is highly sensitive to the choice of predictors and learning models (Maraun et al., 2019a; Gutiérrez et al., 2019). Moreover, since PP-ESD models are empirical in nature, the predictors serve as proxies for all the relevant physical processes and must be informative enough to account for the local predictand variability (Huth, 1999, 2004; Maraun and Widmann, 2018). Therefore, the selection of potential predictors should be informed by our knowledge of the atmospheric dynamics that control the climate variability of the study area. For example, synoptic-scale climate features, such as atmospheric teleconnection patterns, control much of the regional-scale climate variability. It is therefore recommended to consider these as potential predictors. Statistical techniques, such as methods for feature selection or dimension reduction, may then be applied to reduce the list of physically relevant potential predictors to a smaller selection of predictors that have a robust statistical relationship with the predictand. These steps contribute to the performance of the models and also resolve some of the issues related to multicollinearity and overfitting (e.g., Mutz et al., 2016). The pyESD package adopts three different wrapper feature selection techniques that can be explored for different models: (1) recursive feature elimination (Chen and Jeong, 2007), (2) tree-based feature selection (Zhou et al., 2021), and (3) sequential feature selection (Ferri et al., 1994). The methods are included in pyESD.feature_selection as RecursiveFeatureElimination, TreeBasedSelection, and SequentialFeatureSelection, respectively. Furthermore, classical filter feature selection techniques, such as correlation analyses, are also included as a method of the weather station object.
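To make the three wrapper techniques more concrete, the sketch below applies their scikit-learn counterparts to synthetic data. It does not use the pyESD wrappers themselves; the estimators (ridge regression and extra trees), the synthetic predictors, and all parameter values are assumptions chosen purely for illustration.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesRegressor
from sklearn.feature_selection import RFECV, SelectFromModel, SequentialFeatureSelector
from sklearn.linear_model import Ridge

# Synthetic example: six candidate predictors, only the first two are informative.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 6))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 0.1 * rng.normal(size=200)

# (1) Recursive feature elimination: repeatedly drop the weakest predictor and
#     keep the subset size with the best cross-validated score.
rfe = RFECV(Ridge(alpha=1.0), cv=5).fit(X, y)

# (2) Tree-based selection: keep predictors whose impurity-based importance
#     exceeds the mean importance.
tree = SelectFromModel(ExtraTreesRegressor(n_estimators=50, random_state=0)).fit(X, y)

# (3) Sequential (forward) selection: greedily add the predictor that most
#     improves the cross-validated score until two predictors are selected.
sfs = SequentialFeatureSelector(
    Ridge(alpha=1.0), n_features_to_select=2, direction="forward", cv=5
).fit(X, y)

selected = {
    "rfe": np.flatnonzero(rfe.support_),
    "tree": np.flatnonzero(tree.get_support()),
    "sfs": np.flatnonzero(sfs.get_support()),
}
```

Comparing the index sets in `selected` is a quick way to see whether the methods agree on the informative predictors before committing to one of them.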

Predictors are typically constructed by (1) computing the regional means of a physically relevant climate variable or (2) constructing index time series for relevant synoptic-scale climate phenomena. The package allows the user to consider a few important aspects for each type of predictor.

- The area over which the climate variable is averaged can significantly affect model performance. In complex terrain with high-frequency topography, for example, choosing a smaller spatial extent may result in the predictor having a higher explanatory power. Therefore, a radius (with a default value of 200 km) around the weather station may be defined by the user to determine the size of the area used for the computation of the regional means.

- Empirical orthogonal function (EOF) analysis is a well-established tool for capturing atmospheric teleconnection patterns and reducing high-dimensional climate datasets to index time series that represent the variability of prominent modes of synoptic-scale climate phenomena (von Storch and Zwiers, 2002). The current version of pyESD includes functions for the extraction of EOF-based index time series for dominant extratropical teleconnection patterns in the Northern Hemisphere (pyESD.teleconnections). More specifically, it allows the computation of index values for the North Atlantic Oscillation (NAO) as well as the East Atlantic (EA), Scandinavian (SCAN), and East Atlantic–Western Russian (EAWR) oscillation patterns (e.g., Boateng et al., 2022). It will be expanded to consider Southern Hemisphere patterns in future versions.
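Both predictor types can be illustrated with a short NumPy sketch. It is not the pyESD implementation: the function names, the great-circle distance mask, and the cosine-latitude weighting are assumptions made here to show one plausible way of computing a radius-based regional mean and an EOF-based index time series from a gridded field of shape (time, lat, lon).

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat0, lon0, lat, lon):
    """Great-circle distance (km) between one point and arrays of points."""
    lat0, lon0, lat, lon = map(np.radians, (lat0, lon0, lat, lon))
    a = (np.sin((lat - lat0) / 2) ** 2
         + np.cos(lat0) * np.cos(lat) * np.sin((lon - lon0) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(a))


def regional_mean(field, lats, lons, st_lat, st_lon, radius_km=200.0):
    """Weighted mean of field[time, lat, lon] within radius_km of a station."""
    lat2d, lon2d = np.meshgrid(lats, lons, indexing="ij")
    mask = haversine_km(st_lat, st_lon, lat2d, lon2d) <= radius_km
    # cos(latitude) approximates the relative area of each grid cell.
    weights = np.cos(np.radians(lat2d)) * mask
    return (field * weights).sum(axis=(1, 2)) / weights.sum()


def eof_index(field, lats, mode=0):
    """Standardized principal-component time series of one EOF mode."""
    anomalies = field - field.mean(axis=0)
    # sqrt(cos(latitude)) weighting so that variance is weighted by area.
    w = np.sqrt(np.cos(np.radians(lats)))[None, :, None]
    flat = (anomalies * w).reshape(field.shape[0], -1)
    u, s, _ = np.linalg.svd(flat, full_matrices=False)
    pc = u[:, mode] * s[mode]
    return (pc - pc.mean()) / pc.std()
```

In a real application, the field passed to `eof_index` would first be restricted to the relevant domain and season (e.g., winter sea-level pressure over the North Atlantic for an NAO-like index), and the sign of the resulting index would be fixed by convention.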

After the selection and construction of predictors, their raw values can be transformed before model training. For instance, MonthlyStandardizer implemented in pyESD.standardizer can be used to remove the seasonal trends in each predictor by centering and scaling the data. Such transformation can reduce biases toward high-variance predictors, ensure generalization, and improve the representation of predictors constructed from GCM output (e.g., Bedia et al., 2020; Benestad et al., 2015a). Principal component analysis (PCA) is another transformation tool included in the package (pyESD.standardizer.PCAScaling). It can be applied to (a) reduce the raw predictor values to information that is relevant to the predictand and (b) prevent multicollinearity-related problems during model training (e.g., Mutz et al., 2016).
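The idea behind a monthly standardization can be sketched in a few lines of NumPy. The function below is a hypothetical stand-in, not the MonthlyStandardizer from pyESD.standardizer: it centers and scales each calendar month separately, which removes the mean seasonal cycle from a monthly predictor series.

```python
import numpy as np


def monthly_standardize(values, months):
    """Center and scale each calendar month separately.

    values: 1-D array of predictor values.
    months: array of the same length with the calendar month (1..12) of each value.
    """
    values = np.asarray(values, dtype=float)
    months = np.asarray(months)
    out = np.empty_like(values)
    for m in np.unique(months):
        sel = months == m
        mean = values[sel].mean()
        std = values[sel].std()
        # Guard against constant series to avoid division by zero.
        out[sel] = (values[sel] - mean) / (std if std > 0 else 1.0)
    return out
```

For downscaling, the monthly means and standard deviations would normally be estimated on the training period only and then reused to transform validation data and GCM-based predictors; the sketch omits this fit and transform separation for brevity.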

2.3 Learning models

The empirical relationship between local predictand and large-scale predictors is often complicated due to the complex dynamics in the climate system. However, ML algorithms have been demonstrated to perform well in extracting hidden patterns in climate data that are relevant for building more complex transfer functions (e.g., Raissi and Karniadakis, 2018). Specifically, neural networks have been explored for downscaling climate information due to their ability to establish a complex and nonlinear relationship between predictands and predictors (e.g., Nourani et al., 2019; Gardner and Dorling, 1998; Vu et al., 2016). Moreover, support vector machine (SVM) models have been used to capture the links between predictors and predictands by mapping the low-dimensional data into a high-dimensional feature space with the use of kernel functions (e.g., Anandhi et al., 2008; Tripathi et al., 2006). Previous studies have also applied multi-model ensembles due to their ability to reduce model variance and capture the distribution of the training data (e.g., Xu et al., 2020; Massaoudi et al., 2021; Gu et al., 2022).

Selecting the most appropriate model or algorithm for a specific location or predictand can be challenging because one needs to consider many case-specific factors like data dimensionality, distribution, temporal resolution, and explainability. This problem is exacerbated by the lack of well-established frameworks for climate information downscaling (Gutiérrez et al., 2019). The pyESD package addresses this challenge with the implementation of many ML models that differ with regard to their theoretical paradigms, assumptions, and model structure. The implementation of commonly used models in the same package allows researchers to experiment with different learning models and to replicate and update their research based on emerging recommendations for specific predictands and geographical locations. The implementation of statistical and ML models in pyESD mainly relies on the open-source scientific framework scikit-learn (Pedregosa et al., 2011). In the following subsections, we briefly explain the principles behind the ML methods that are included in the pyESD package.

2.3.1 Regularization regressors

Regularization models are penalized regression techniques that shrink the coefficients of uninformative predictors to improve model accuracy and prediction interpretability (Hastie et al., 2001; Tibshirani, 1996; Gareth et al., 2013). The coefficients of non-robust predictors are set to zero by minimizing the absolute values of regression coefficients or minimizing the sum of squares of the coefficients. The former is referred to as L1 regularization and adopted by the least absolute shrinkage and selection operator (LASSO) method. The latter is referred to as L2 regularization and adopted by the ridge regression method. The regularization term (R) and the updated cost function for a linear equation of p independent variables or predictors, Xi, are defined as

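$$R_{L1} = \lambda \sum_{i=1}^{p} \lvert \beta_i \rvert \quad (1)$$

for L1 regularization and

$$R_{L2} = \lambda \sum_{i=1}^{p} \beta_i^{2} \quad (2)$$

for L2 regularization. Therefore, the updated cost function for n observations yj is defined as

$$\mathrm{Cost} = \sum_{j=1}^{n} \Big( y_j - \sum_{i=1}^{p} X_{ji}\,\beta_i \Big)^{2} + R \quad (3)$$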

where λ is the tuning parameter that controls the severity of the penalty defined in Eqs. (1) and (2), and βi represents the coefficients. The package features implementations of the LASSO and ridge regression using a cross-validation (CV) scheme with random bootstrapping to iteratively optimize λ. These are included as LassoCV and RidgeCV, respectively. The optimization of the cost function in Eq. (3) is usually based on the coordinate descent algorithm to fit the coefficients (Wu and Lange, 2008). The pyESD package also includes an implementation of LassoCV that uses a less greedy version of the optimizer (LassoLarsCV). It is computationally more efficient by using the least angle regression (Efron et al., 2004) for fitting the coefficients.
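The effect of the two penalties can be illustrated with a small NumPy sketch. It is not the package's implementation (which relies on LassoCV, RidgeCV, and LassoLarsCV): here λ is fixed rather than optimized by cross-validation, the data are assumed to be centered so that no intercept is needed, and the function names are hypothetical. Ridge regression has a closed-form solution, while the LASSO is fitted with a plain coordinate descent that applies soft thresholding to one coefficient at a time.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Closed-form minimizer of sum((y - X b)^2) + lam * sum(b^2)."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)


def lasso_fit(X, y, lam, n_iter=200):
    """Coordinate descent for sum((y - X b)^2) + lam * sum(|b|)."""
    n, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0)
    for _ in range(n_iter):
        for j in range(p):
            # Residual with the contribution of predictor j removed.
            residual = y - X @ beta + X[:, j] * beta[j]
            rho = X[:, j] @ residual
            # Soft thresholding: coefficients with |rho| <= lam / 2 become zero.
            beta[j] = np.sign(rho) * max(abs(rho) - lam / 2.0, 0.0) / col_sq[j]
    return beta
```

The soft-thresholding step is what sets the coefficients of uninformative predictors exactly to zero under the L1 penalty, whereas the L2 penalty only shrinks them toward zero.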

2.3.2 Bayesian regression

Bayesian regression employs a type of conditional modeling to obtain the posterior probability (p) of the target variable (y), given a combination of predictor variables (X), regression coefficients (w), and random variables (α) estimated from the data (Bishop and Nasrabadi, 2006; Neal, 2012). In its simplest form, the normal linear model, the predictand yi (given the predictors Xj) follows a Gaussian distribution N(μ, σ). Therefore, to estimate the full probabilistic model, yi is assumed to be normally distributed around Xij w:

$$p(y \mid X, w, \alpha) = \mathcal{N}(y \mid Xw, \alpha) \quad (4)$$

This approach also permits the use of regularizers in the optimization process. The Bayesian ridge regression procedure (BayesianRidge) estimates the regression coefficients from a spherical Gaussian and L2 regularization (Eq. 2). The regularizer parameters (α, λ) are estimated by maximizing the log marginal likelihood under a Gaussian prior over w with a precision of λ⁻¹ (Tipping, 2001; MacKay, 1992):

$$p(w \mid \lambda) = \mathcal{N}(w \mid 0, \lambda^{-1} I_p) \quad (5)$$

This means that the parameters (α, λ, and w in Eqs. 4 and 5) are estimated jointly in the calibration process. Automatic relevance determination regression (ARD) is an alternative model included in the package. It differs from BayesianRidge in estimating sparse regression coefficients and using centered elliptic Gaussian priors over the coefficients w (Wipf and Nagarajan, 2007; Tipping, 2001). Previous studies have used sparse Bayesian learning (relevance vector machine – RVM) for downscaling climate information (e.g., Das et al., 2014; Ghosh and Mujumdar, 2008).
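The following sketch contrasts the two Bayesian regressors using the scikit-learn estimators directly, outside the pyESD workflow. The synthetic data and the chosen coefficients are assumptions for illustration only.

```python
import numpy as np
from sklearn.linear_model import ARDRegression, BayesianRidge

# Synthetic example: eight predictors, only two of them informative.
rng = np.random.default_rng(1)
X = rng.normal(size=(150, 8))
y = 1.5 * X[:, 0] - 1.0 * X[:, 3] + 0.2 * rng.normal(size=150)

bayes = BayesianRidge().fit(X, y)
ard = ARDRegression().fit(X, y)

# Posterior predictive mean and standard deviation for a few samples.
mean, std = bayes.predict(X[:5], return_std=True)

# alpha_ is the estimated noise precision, so its inverse square root
# approximates the noise standard deviation of the synthetic data.
noise_std = 1.0 / np.sqrt(bayes.alpha_)

# BayesianRidge estimates one shared weight precision (lambda_), whereas
# ARD estimates one precision per coefficient, which yields sparse weights.
per_coefficient_precision = ard.lambda_
```

A practical benefit of both estimators is the predictive standard deviation returned by `predict(..., return_std=True)`, which provides an uncertainty estimate alongside each downscaled value.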

2.3.3 Artificial neural network

The multilayer perceptron (MLP) is a classical example of a feed-forward ANN, meaning that the flow of data through the neural network is unidirectional without recurrent connections between the layers (Gardner and Dorling, 1998; Pal and Mitra, 1992). MLP is a supervised learning algorithm that consists of three layers (i.e., an input, hidden, and output layer) connected by transformation coefficients (weights W1 and W2) using a nonlinear activation such as the hyperbolic tangent function. More specifically, the learning algorithm with one hidden layer for the training sets (X1, y1), (X2, y2), …, (Xn, yn), where Xi ∈ ℝⁿ and yi ∈ {0,1}, can be defined as

$$f(X) = W_2\,\theta\big(W_1^{T} X + b_1\big) + b_2 \quad (6)$$

where θ is the activation function, and b1 and b2 are the model biases added to the hidden and output layer. The weights connecting the layers are optimized with the backpropagation algorithm (Hecht-Nielsen, 1992; Rumelhart et al., 1986) with a mean squared error loss function. Moreover, the L2 regularization (Eq. 2) method is applied to avoid overfitting by shrinking the weights with higher magnitudes. Therefore, the optimized squared error loss function is defined as

$$\mathrm{Loss}(\hat{y}, y, W) = \frac{1}{2}\lVert \hat{y} - y \rVert_2^{2} + \frac{\alpha}{2}\lVert W \rVert_2^{2} \quad (7)$$

where (α/2)‖W‖² is the L2 penalty that shrinks the model complexity. Often, the derivative of the loss function with respect to the weights is determined until the residual error of the model is satisfactory. The stochastic gradient descent algorithm (Bottou, 1991; Kingma and Ba, 2014) is used as a solver for updating the weights (defined in Eq. 6) in a maximum number of iterations until a satisfactory loss (Eq. 7) is achieved. Moreover, the choice of the parameters, such as the size of hidden layers, activation function, and learning algorithm, is relevant to the performance of the model (Diaz et al., 2017). The exhaustive search algorithm with CV bootstrapping is a simple and efficient method for parameter optimization (Pontes et al., 2016) and is therefore included in the pyESD package (GridSearchCV).
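A minimal sketch of an MLP regressor tuned with an exhaustive grid search is given below. It uses scikit-learn directly rather than the pyESD interface, and the synthetic data, the parameter grid, the number of CV folds, and the iteration limit are assumptions kept deliberately small so that the example runs quickly.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, KFold
from sklearn.neural_network import MLPRegressor

# Synthetic nonlinear relationship between two predictors and the predictand.
rng = np.random.default_rng(2)
X = rng.uniform(-2, 2, size=(300, 2))
y = np.tanh(X[:, 0]) + 0.5 * X[:, 1] ** 2 + 0.05 * rng.normal(size=300)

param_grid = {
    "hidden_layer_sizes": [(10,), (50,)],  # size of the single hidden layer
    "alpha": [1e-4, 1e-2],                 # strength of the L2 penalty
}
search = GridSearchCV(
    MLPRegressor(activation="tanh", solver="adam", max_iter=1000, random_state=0),
    param_grid,
    cv=KFold(n_splits=3, shuffle=True, random_state=0),
    scoring="neg_mean_squared_error",
)
search.fit(X, y)          # fits every grid combination on every CV fold
best_mlp = search.best_estimator_
```

Because the grid search fits every parameter combination on every fold, its cost grows multiplicatively with each added hyperparameter, which is why the grid is kept coarse here.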

2.3.4 Support vector machine

Support vector regression (SVR) uses the principles of SVM as a regression technique. The learning algorithms are based on Vapnik–Chervonenkis (VC) theory and empirical risk minimization that is designed to solve linear and nonlinear problems. This is achieved by applying kernel functions to map low-dimensional data to higher- or even infinite-dimensional feature space (Vapnik, 2000; Cristianini and Shawe-Taylor, 2000). In principle, the model creates a hyperplane in a vector space containing groups of data points. This hyperplane is a linear classifier that maximizes the group margins. Given finite predictor and predictand data points (X1, y1), (X2, y2), …, (Xn, yn), where Xi ∈ ℝⁿ and yi ∈ ℝ, the regressor can be defined as

$$f(X) = w^{T} X + b \quad (8)$$

where the support vectors w and model bias b are the optimal parameters that minimize the cost function in Eqs. (9):

subject to

where ξi, , and i=1…n are the slack variables (the upper and lower training errors) subject to the error tolerance of ε that prevents overfitting. C represents a regularization term that determines the balance between minimal loss and maximal margins. The cost function in Eq. (9) is solved using Lagrange's formula (Balasundaram and Tanveer, 2013) to obtain the optimized function:

where αi and are Lagrange multipliers, and ϕ(Xi,Xj) is the kernel function which implicitly maps the training vectors in Eq. (8) into a higher-dimensional space. The SVR method of the pyESD package includes linear, polynomial, sigmoid, and Gaussian radial basis function (RBF) kernels (Hofmann et al., 2008). Moreover, the degree of regularization (C) and the coefficient of the kernels (γ) is given a range of values so that the hyperparameter optimization algorithm can determine the best model. Due to the expensive nature of SVR, the package uses a randomized search algorithm in a CV setting for the hyperparameter optimization (Bergstra and Bengio, 2012). However, hyperparameters optimization algorithms, such as Bayesian and grid search (Snoek et al., 2012; Pontes et al., 2016; Bergstra et al., 2011) methods, are also provided as alternatives. Previous downscaling projects have taken advantage of the SVR method due to its ability to map data into higher-dimensional space and exclude outliers from the training process (Ghosh and Mujumdar, 2008; Chen et al., 2010; Sachindra et al., 2018; Anandhi et al., 2008; Tripathi et al., 2006).
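
The building blocks of SVR described above can be written down in a few lines. The sketch below is illustrative only: it shows a Gaussian RBF kernel, the ε-insensitive error that underlies the slack variables, and the kernel expansion used for prediction. It does not solve the optimization problem, and the function names are assumptions made for this example.

```python
import math

def rbf_kernel(x, z, gamma):
    """Gaussian RBF kernel exp(-gamma * ||x - z||^2) for two equal-length vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Zero inside the epsilon tube, growing linearly outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)

def svr_predict(x, support_vectors, dual_coefs, bias, gamma):
    """Kernel expansion: sum_i coef_i * K(X_i, x) + b, with coef_i = alpha_i - alpha_i*."""
    return bias + sum(
        coef * rbf_kernel(sv, x, gamma)
        for sv, coef in zip(support_vectors, dual_coefs)
    )
```

Errors smaller than ε contribute nothing to the cost, so only training points on or outside the tube end up with nonzero coefficients and act as support vectors.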

2.3.5 Ensemble machine learning

Each ML technique is associated with challenges that arise from the method's limitations and underlying assumptions. These have to be considered carefully in the evaluation of the resulting downscaling product. Some of these challenges can be overcome by an integration of different ML models for a specific task (Dietterich, 2000; Zhang and Ma, 2012). Integrated ML models have been suggested to outperform single ML models in downscaling climate information (e.g., Liu et al., 2015). Ensemble models typically use different ML algorithms (base learners) to extract information from the training data, then use a second set of ML algorithms (meta-learners) that learn from the first and combine the individual predictions into an ensemble. Ensemble models can be categorized by (a) the selection of base learners and (b) the method of combining the individual predictions from the base learners. Here, we summarize the more prominent ensemble models that are included in the pyESD package.

Bagging

Bagging ensemble models consist of ML algorithms that generate several instances of base learners using random subsets of the training data and then aggregate the information for the final estimates (Breiman, 1996a; Quinlan, 1996). Such algorithms integrate randomization into the learning process and thereby often ensure the reduction of the variance of the individual base learners (e.g., decision trees). Moreover, bagging techniques constitute a simple way to improve model performance without the need to adapt the underlying base algorithm. Since bagging works well with complex algorithms like decision trees, we also consider tree-based ensembles for the pyESD package. More specifically, we include implementations of the random forest (RandomForest) and extremely randomized tree (ExtraTree) methods in addition to classical bagging.

The RandomForest algorithm builds multiple independent tree-based learners. The trees can be constructed with the full set of predictors or a random subset. Each tree is constructed from a random sample of the training data in a bootstrapping process (Breiman, 2001). The algorithm uses the remaining training data (i.e., out-of-bag data) to estimate the error rate and evaluate the model's robustness. In contrast, the ExtraTree algorithm draws the split thresholds for each candidate predictor at random instead of searching for the most discriminative thresholds (Geurts et al., 2006). This usually reduces the model variance further, at the expense of a slight increase in bias. Tree-based ensembles are particularly suitable for establishing a nonlinear relationship between predictors and predictands (e.g., Pang et al., 2017; He et al., 2016).
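
The bootstrap-and-aggregate idea, including the out-of-bag error estimate, can be sketched as follows. This is a simplified illustration rather than the pyESD or RandomForest implementation: a one-predictor least-squares line replaces the decision tree as the base learner, and the data and function names are assumptions.

```python
import random

def fit_line(x, y):
    """Ordinary least-squares slope and intercept for one predictor."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return slope, my - slope * mx

def bagging_fit(x, y, n_estimators=25, seed=0):
    """Fit one base learner per bootstrap sample and remember which samples it saw."""
    rng = random.Random(seed)
    n = len(x)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # sampling with replacement
        params = fit_line([x[i] for i in idx], [y[i] for i in idx])
        models.append((params, set(idx)))
    return models

def bagging_predict(models, x_new):
    """Aggregate the base learners by averaging their predictions."""
    preds = [slope * x_new + intercept for (slope, intercept), _ in models]
    return sum(preds) / len(preds)

def oob_mse(models, x, y):
    """Score each sample only with learners whose bootstrap sample excluded it."""
    errors = []
    for i, (xi, yi) in enumerate(zip(x, y)):
        preds = [s * xi + b for (s, b), in_bag in models if i not in in_bag]
        if preds:
            errors.append((yi - sum(preds) / len(preds)) ** 2)
    return sum(errors) / len(errors)

# Synthetic data (hypothetical): y = 3x + 1 plus noise
rng = random.Random(1)
x = [i / 10 for i in range(50)]
y = [3.0 * a + 1.0 + rng.gauss(0, 0.2) for a in x]
models = bagging_fit(x, y)
```

Because each bootstrap sample leaves out roughly a third of the records, the out-of-bag error provides a validation estimate without setting aside additional data.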

Boosting

In recent years, boosting models have also been applied for the downscaling of climate information (e.g., Fan et al., 2021; Zhang et al., 2021). Boosting models are meta-estimators that are built sequentially from multiple base learners with the primary objective of reducing the model bias and variance. In principle, the method “boosts” weaker base learners (i.e., estimators that perform only slightly better than random guessing) by converting them into strong ones in an iterative process. The technique assumes that the base learning model is distribution-free (Schapire, 1999) and iteratively improves the weaker base learners by applying weights to the training data through the adjustment of the input points with prediction errors from the previous prediction (Schapire, 2003; Schapire and Freund, 2013). There are many boosting algorithms due to the many possible methods of weighting the training data and tuning the weaker base learners. In the pyESD package, we include (1) adaptive boosting (Adaboost), (2) gradient tree boosting (GradientBoost) with a gradient boosting algorithm by Friedman (2001), and (3) extreme gradient boosting (XGBoost). A brief summary of each is provided below.

- The Adaboost algorithm is a well-established model for improving the accuracy of weak base learners (Freund and Schapire, 1997). The model is adaptive in the sense that the training data are sequentially adjusted based on the previous performance of the weaker model. The model uses a weighted majority vote (or sum) to combine the individual predictions from the weaker learners and produce a robust final prediction. The implemented version uses a decision tree algorithm as the base estimator to develop the boosted ensemble predictions.

- The GradientBoost algorithm considers the boosting process to be a numerical optimization problem that minimizes a loss function in a stage-wise additive model by adding weaker learners using a gradient descent procedure. This generalization allows the tuning of an arbitrary differentiable loss function, which can be selected based on a specific problem. Specifically, in pyESD, squared errors are used in the minimization of the loss function.

- XGBoost, a recent extension of the GradientBoost algorithm, is designed to reduce computational time and improve model performance (Chen and Guestrin, 2016). The model uses regularization terms to penalize the final weights and prevent overfitting. The algorithm also uses shrinkage and column subsampling techniques to avoid overfitting. Moreover, the model can handle sparse data by using a sparsity-aware split function.
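
The stage-wise additive procedure behind gradient boosting with a squared error loss can be sketched in plain Python. The example below is a simplified illustration under stated assumptions, not the GradientBoost or XGBoost implementation: the weak learner is a single-split regression stump on one predictor, and the data, learning rate, and function names are chosen for this example.

```python
import math

def fit_stump(x, residuals):
    """Best single-split stump (threshold, left mean, right mean) by squared error."""
    best = None
    for t in sorted(set(x))[:-1]:  # skip the largest value so the right side is never empty
        left = [r for a, r in zip(x, residuals) if a <= t]
        right = [r for a, r in zip(x, residuals) if a > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    return best[1:]

def gradient_boost(x, y, n_stages=50, learning_rate=0.1):
    """Add one shrunken stump per stage, each fitted to the current residuals."""
    base = sum(y) / len(y)
    pred = [base] * len(y)
    stumps, history = [], []
    for _ in range(n_stages):
        # For the loss 0.5 * (y - f)^2, the negative gradient equals the residual
        residuals = [b - p for b, p in zip(y, pred)]
        t, lm, rm = fit_stump(x, residuals)
        pred = [p + learning_rate * (lm if a <= t else rm) for a, p in zip(x, pred)]
        stumps.append((t, lm, rm))
        history.append(sum((b - p) ** 2 for b, p in zip(y, pred)) / len(y))
    return base, stumps, history

def boosted_predict(base, stumps, x_new, learning_rate=0.1):
    """Sum the initial estimate and all shrunken stump contributions."""
    return base + sum(learning_rate * (lm if x_new <= t else rm) for t, lm, rm in stumps)

# Synthetic nonlinear target (hypothetical)
x = [i / 10 for i in range(40)]
y = [math.sin(a) for a in x]
base, stumps, history = gradient_boost(x, y)
```

The `history` list records the training error after each stage. With a squared error loss and a learning rate between 0 and 1, it cannot increase from one stage to the next, which is the sense in which weak learners are "boosted".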

Stacked generalization

The stacked generalization method (or “stacking”) has previously been used for downscaling climate information and has shown improved prediction robustness over singular models (e.g., Massaoudi et al., 2021; Gu et al., 2022). It is designed to enhance prediction accuracy and generality by taking advantage of the mutual complementarity of the base-model predictions. The approach was introduced by Wolpert (1992) and demonstrated for regression tasks and unsupervised learning by Breiman (1996b) and Leblanc and Tibshirani (1996), respectively. In principle, the following process is implemented: in the first step, the training data and base models, referred to as level-0 data and level-0 models by Wolpert (1992), are used to generate the first set of predictions. Then a meta-learning model (level-1 generalizer) is used to optimally combine the previous predictions (level-1 data) into final estimates. Lastly, the method applies a cross-validation technique and generates new “stacked” datasets for a final learning step. Generally, the performance of stacked generalization is constrained by the attributes used to generate the level-1 data and the type of algorithm used for higher-level learning (Ting and Witten, 1999). We consider these limitations by providing a wide range of models that can be used as the level-0 models and the level-1 generalizer. The base learners can be selected from the different ML models presented in the previous sections. The reader is advised that previous studies (e.g., Reid and Grudic, 2009) suggest the use of a more restrictive model, such as LassoCV or ExtraTree, as the meta-learner to prevent overfitting.
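
The two-level structure of stacking can be illustrated with a small, self-contained sketch. It rests on several assumptions that differ from the package: the level-0 models are a least-squares line and a 3-nearest-neighbour average, the level-1 generalizer is an unregularized least-squares combination (not LassoCV or ExtraTree), and all names and data are hypothetical.

```python
import math

def fit_linear(x, y):
    """Level-0 model 1: least-squares line, returned as a prediction function."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return lambda q: my + slope * (q - mx)

def fit_knn(x, y, k=3):
    """Level-0 model 2: mean of the k nearest training targets."""
    pairs = list(zip(x, y))
    def predict(q):
        nearest = sorted(pairs, key=lambda p: abs(p[0] - q))[:k]
        return sum(p[1] for p in nearest) / k
    return predict

def out_of_fold_predictions(fitters, x, y, n_folds=5):
    """Level-1 data: every sample is predicted by base models that never saw it."""
    n = len(x)
    level1 = [[0.0] * len(fitters) for _ in range(n)]
    for f in range(n_folds):
        held_out = set(range(f, n, n_folds))
        x_tr = [x[i] for i in range(n) if i not in held_out]
        y_tr = [y[i] for i in range(n) if i not in held_out]
        for j, fitter in enumerate(fitters):
            model = fitter(x_tr, y_tr)
            for i in held_out:
                level1[i][j] = model(x[i])
    return level1

def fit_meta_weights(level1, y):
    """Least-squares weights for two base models via the 2x2 normal equations."""
    a11 = sum(r[0] * r[0] for r in level1)
    a12 = sum(r[0] * r[1] for r in level1)
    a22 = sum(r[1] * r[1] for r in level1)
    b1 = sum(r[0] * t for r, t in zip(level1, y))
    b2 = sum(r[1] * t for r, t in zip(level1, y))
    det = a11 * a22 - a12 * a12
    return (b1 * a22 - b2 * a12) / det, (a11 * b2 - a12 * b1) / det

def stack_sse(level1, y, weights):
    """Sum of squared errors of a weighted combination of the level-1 columns."""
    return sum((t - weights[0] * r[0] - weights[1] * r[1]) ** 2 for r, t in zip(level1, y))

# Synthetic data (hypothetical): linear trend plus a nonlinear component
x = [i / 5 for i in range(50)]
y = [0.5 * a + math.sin(a) for a in x]
fitters = [fit_linear, fit_knn]
level1 = out_of_fold_predictions(fitters, x, y)
w_lin, w_knn = fit_meta_weights(level1, y)
base_models = [fit(x, y) for fit in fitters]  # refit the level-0 models on all data

def stacked_predict(q):
    """Final estimate: meta-learner weights applied to the refitted base models."""
    return w_lin * base_models[0](q) + w_knn * base_models[1](q)
```

Generating the level-1 data from held-out folds matters here: if the meta-learner were trained on in-sample base predictions, it would favour whichever base model overfits most.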

2.4 Model training

The process of training and testing the PP-ESD models is the most critical stage in the downscaling procedure, since it determines much of the robustness of the final models, as well as the accuracy of the predictions they generate. The process typically involves the following steps: (1) the observational records are separated into training and testing datasets; (2) the training datasets are used to establish the transfer functions that make up the PP-ESD models; and (3) the trained models are evaluated on the independent testing datasets (Sect. 2.5). In the model training process, hyperparameter optimization techniques (e.g., GridSearchCV) are used to fine-tune the transfer function parameters, such as regression coefficients, to optimize model performance. Cross-validation (CV) techniques are applied to split the whole training dataset into smaller training and validation data sections and allow the assessment and iterative improvement of the model parameters during training while also preventing overfitting (Moore, 2001; Santos et al., 2018). In this category of techniques, the k-fold framework is the most widely used for climate information downscaling models. It partitions the training data into k equally sized and mutually exclusive subsamples, which are also referred to as folds (Stone, 1976; Markatou et al., 2005). More specifically, for each iteration step, one fold is used for model validation, and the remaining k−1 folds are used for model training. The leave-one-out CV technique (Lachenbruch and Mickey, 1968) is an alternative and has been used for the development of ESD models (e.g., Gutiérrez et al., 2013). Cross-validation techniques rely on the fundamental assumption of independent and identically distributed (i.i.d.) data. They therefore treat the data as the result of a generative process that has no memory of previously generated samples (Arlot and Celisse, 2010).

The i.i.d. assumption might not be valid for time series data (e.g., Bergmeir and Benítez, 2012) due to seasonal effects, for example. To circumvent this problem, monthly bootstrapped resampling and time series splitters are included in the pyESD package. The pyESD.splitter module contains all CV frameworks available for model training, including the k-fold, leave-one-out, and other CV schemes. The validation metrics used for optimizing the model parameters include the coefficient of determination (R2) (Eq. 11), root mean squared error (RMSE) (Eq. 13), mean absolute error (MAE) (Eq. 14), and others that are summarized in Sect. 2.5. The final values for the validation metrics, which reflect the model performance during training, are arithmetic means of the individual values for each iteration. In this paper, we refer to them as CV performance metrics (i.e., CV R2, CV RMSE, and CV MAE).
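
The difference between k-fold and time-series splitting is easiest to see in the index sets they produce. The following sketch is an illustration and not the pyESD.splitter code. The function names are assumptions, and the expanding-window layout is one common choice for time series splitters.

```python
def k_fold_indices(n_samples, k):
    """Yield (train, validation) index lists for k contiguous, mutually exclusive folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, val
        start += size

def time_series_split_indices(n_samples, n_splits):
    """Yield expanding-window splits in which training always precedes validation."""
    fold = n_samples // (n_splits + 1)
    for s in range(1, n_splits + 1):
        train_end = n_samples - (n_splits - s + 1) * fold
        yield list(range(train_end)), list(range(train_end, train_end + fold))

def cv_mean(scores):
    """CV performance metric: arithmetic mean of the per-fold validation scores."""
    return sum(scores) / len(scores)
```

In the k-fold scheme, validation folds can lie in the middle of the record, so the model may be trained on data from both before and after the validation period. The time-series variant avoids this by only validating on samples that follow the training window.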

2.5 Model evaluation

In the process of downscaling climate information, best practice involves the use of stringent model evaluation schemes with independent data outside the training data range (Wilby et al., 2004). Retaining a section of the data as a testing dataset (Sect. 2.4) is recommended if longer records (e.g., ≥30 years) are available. It allows (a) a completely independent evaluation of the trained model's performance and (b) an assessment of the sensitivity of the model to the chosen training dataset. In the case of time series, the latter can provide insights into the model's sensitivity to the calibration period and the temporal stationarity of the model's transfer functions. If the records are short (e.g., <30 years), the CV metrics (Sect. 2.4) can be used, albeit with caveats, as nonideal estimates for the model's performance (e.g., Mutz et al., 2021). For the remainder of this section, however, we will assume that longer records and completely independent testing datasets are available.

The PP-ESD model is evaluated on the basis of the model's predictions $\hat{y}$ and the observed values $y$. In pyESD, the following performance metrics are implemented.

- The coefficient of determination (R2) represents the fraction of the predictand's observed variance that can be explained by the predictors. It can be seen as a measure of how well the model predicts the unseen data (Wilks, 2011). The R2 for the predicted values $\hat{y}_i$ in relation to the observed data $y_i$ for $i = 1, \dots, n$ samples is defined as

  $$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}, \tag{11}$$

  where $\bar{y}$ is the mean of the observed data, $\sum_{i=1}^{n}(y_i - \hat{y}_i)^2$ is the sum of squared residuals (SSR), and $\sum_{i=1}^{n}(y_i - \bar{y})^2$ is the total sum of squares (SST). R2 can range from −∞ to 1, where 1 is the best possible score and negative values are indicative of an arbitrarily worse model. An R2 value of 0 is indicative of a model that would always predict $\bar{y}$. In this case, the model represents no improvement over simply using the mean as a model.

- Pearson's correlation coefficient (PCC) evaluates the linear correlation between the model predictions $\hat{y}_i$ and observed data $y_i$. A PCC of 1 indicates a perfect positive correlation, −1 indicates a perfect anticorrelation, and 0 indicates no correlation between the predicted and observed values. The PCC for n samples is defined as

  $$\mathrm{PCC} = \frac{\sum_{i=1}^{n}\left(\hat{y}_i - \bar{\hat{y}}\right)\left(y_i - \bar{y}\right)}{\sqrt{\sum_{i=1}^{n}\left(\hat{y}_i - \bar{\hat{y}}\right)^2}\,\sqrt{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}}, \tag{12}$$

  where $\bar{\hat{y}}$ and $\bar{y}$ are the means of the $\hat{y}_i$ and $y_i$ values, respectively.

- The root mean squared error (RMSE) estimates the mean magnitude of error between the predictions and observations. The RMSE is given in the physical units of the observed data and is not standardized. Smaller values indicate better model performance. The RMSE for predictions $\hat{y}_i$ and observations $y_i$ of n samples is calculated as

  $$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}. \tag{13}$$

- The mean absolute error (MAE) is a scale-dependent accuracy measure that also provides information on the errors between the predictions and observations. The MAE is estimated as the sum of absolute errors normalized by the sample size (n). The MAE is calculated as

  $$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|. \tag{14}$$
Additional metrics such as the mean squared error (MSE), mean absolute percentage error (MAPE), maximum error, adjusted R2 (Miles, 2014), and Nash–Sutcliffe efficiency (NSE) (Nash and Sutcliffe, 1970) are included in pyESD. However, the predicted values from the trained model and their corresponding observed values can be evaluated using other metrics not included in pyESD. For example, additional metrics like the model skill score E and the revised R2 (RRS), which combines correlation, bias measure, and the capacity to capture variability, can be used (Onyutha, 2021). We highlight that the limitations and assumptions underpinning these metrics should be considered when interpreting performance metrics. For example, the RMSE is sensitive to outliers because the squaring of errors assigns more weight to large errors. This implies that a single outlier can bias its estimate and lead to a misinterpretation of extreme data points in the predictand. Although MAE is less sensitive to outliers compared to RMSE, its treatment of all errors with equal weight may not adequately account for the impact of extreme errors on model performance. Consequently, both metrics should be interpreted with respect to the mean of the observed values. On the other hand, the Pearson correlation coefficient (PCC) assumes a linear relationship between the predicted and observed values and a bivariate normal distribution. However, distance correlation (Székely et al., 2007), which is more computationally demanding and makes no assumptions about the relationship or distribution, can be considered. Chaudhuri and Hu (2019) demonstrated a fast algorithm that can be used to compute the distance correlation.
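
The four main metrics and the differing outlier sensitivity of RMSE and MAE can be checked with a short, self-contained sketch. The function names and the toy numbers are assumptions made for this illustration and are not the pyESD metric routines.

```python
import math

def r2_score(y_obs, y_pred):
    """1 - SSR/SST, relative to the mean of the observations."""
    mean_obs = sum(y_obs) / len(y_obs)
    ssr = sum((o - p) ** 2 for o, p in zip(y_obs, y_pred))
    sst = sum((o - mean_obs) ** 2 for o in y_obs)
    return 1.0 - ssr / sst

def pearson_cc(y_obs, y_pred):
    """Linear correlation between predictions and observations."""
    mo, mp = sum(y_obs) / len(y_obs), sum(y_pred) / len(y_pred)
    cov = sum((o - mo) * (p - mp) for o, p in zip(y_obs, y_pred))
    var_o = sum((o - mo) ** 2 for o in y_obs)
    var_p = sum((p - mp) ** 2 for p in y_pred)
    return cov / math.sqrt(var_o * var_p)

def rmse(y_obs, y_pred):
    """Root mean squared error in the units of the observations."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(y_obs, y_pred)) / len(y_obs))

def mae(y_obs, y_pred):
    """Mean absolute error in the units of the observations."""
    return sum(abs(o - p) for o, p in zip(y_obs, y_pred)) / len(y_obs)

obs = [1.0, 2.0, 3.0, 4.0]
pred = [1.0, 2.0, 3.0, 8.0]  # three perfect predictions and one large error
```

For this toy case, the single large error gives an RMSE of 2.0 but an MAE of only 1.0, and it pushes R2 to −2.2, i.e., below the value of 0 obtained by always predicting the observed mean.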

2.6 GCM–ESD coupling and local-scale predictions

The developed and tested PP-ESD model can finally be coupled to coarse-scale climate information. If the PP-ESD model was developed with the intention to downscale predictions of future climate change, the next logical step is to couple it to GCM simulations forced with different greenhouse gas concentration scenarios. Since PP-ESD is the bias-free downscaling alternative to MOS-ESD, PP-ESD models may be coupled to all GCMs, provided that the predictors are adequately represented by the GCMs. This condition may be alleviated to an extent by standardizing the simulated predictor (Bedia et al., 2020). An analysis of the distribution similarity between the observed and simulated predictors can be conducted to test the assumption of representation. For example, the Kolmogorov–Smirnov (KS) test, which is implemented as part of the pyESD package utilities, is a nonparametric statistical hypothesis test that can be used to evaluate the null hypothesis (H0) that the observation-based predictors and simulated predictors are of the same theoretical distribution.

The first step in ESD–GCM coupling is to utilize the GCM output to recreate the predictors used in the training of the ESD model. This may involve anything from constructing simple temperature regional means to reconstructing multivariate indices for more complex climate phenomena. In the case of index-based predictors such as NAO, EA, SCAN, and others, the simulated indices are reconstructed by projecting the pressure anomalies of the GCM onto the EOF loading patterns of the predictors (e.g., Mutz et al., 2016). This ensures that the physical meaning of the index values is maintained. The ESD model then takes these simulated predictors as input and generates local-scale predictions according to the model's transfer functions. The added value of the resulting downscaling product can be evaluated by comparing the downscaled values to the raw outputs of different GCMs and RCMs. Finally, the high-resolution local-scale predictions can be used to drive climate change impact assessment models to predict flood frequency (e.g., Padulano et al., 2021; Hodgkins et al., 2017), agricultural changes (e.g., Mearns et al., 1996), changes in water resources (e.g., Dau et al., 2021), and more.
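
Reconstructing an index by projection amounts to a dot product between each simulated anomaly field and a fixed loading pattern. The sketch below is a strongly simplified illustration: the fields are flattened to lists of grid-point values, the optional weights stand in for whatever area weighting is used in practice, and the function names and numbers are assumptions rather than the pyESD.teleconnections implementation.

```python
def project_onto_pattern(anomaly_field, loading_pattern, weights=None):
    """Index value for one time step: weighted dot product of anomalies and EOF loadings."""
    if weights is None:
        weights = [1.0] * len(loading_pattern)
    return sum(w * a * e for w, a, e in zip(weights, anomaly_field, loading_pattern))

def reconstruct_index(anomaly_fields, loading_pattern, weights=None):
    """Project every time step onto the same, fixed loading pattern."""
    return [project_onto_pattern(f, loading_pattern, weights) for f in anomaly_fields]

# Hypothetical dipole pattern over four grid points and three simulated time steps
pattern = [0.5, 0.5, -0.5, -0.5]
gcm_anomalies = [
    [2.0, 2.0, -2.0, -2.0],   # resembles the positive phase of the pattern
    [-1.0, -1.0, 1.0, 1.0],   # resembles the negative phase
    [1.0, 1.0, 1.0, 1.0],     # uniform anomaly, orthogonal to the dipole
]
index = reconstruct_index(gcm_anomalies, pattern)
```

Because the pattern is derived once from the observation-based data and then held fixed, positive and negative index values keep the same physical interpretation in the simulated data.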

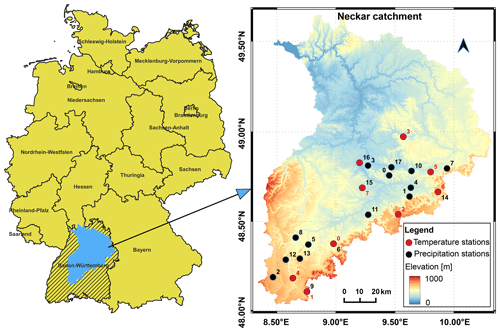

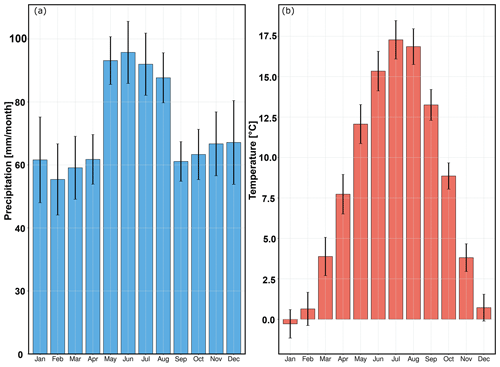

We demonstrate the complete downscaling workflow and highlight most of the functionalities of the pyESD package in an illustrative case study. The study uses the PP-ESD approach and is set in the Neckar catchment, a hydrological catchment in southwestern Germany that consists of complex mountainous terrain with topographic elevations between 200 and 1000 m above sea level (Fig. 2). The region is climatically complex, since local climates are influenced by atmospheric teleconnection patterns (e.g., NAO, EA, and SCAN), orographic effects (e.g., Kunstmann et al., 2004), and the Mediterranean climate (Bárdossy, 2010; Ludwig et al., 2003). The catchment experiences maximum precipitation (80–120 mm per month) and temperature (15–18 °C) in the summer months (Fig. 3). The catchment serves as a water supply for drinking water and agricultural activities (Selle et al., 2013). We use this catchment for our case study because (a) it is a suitable region to test the strengths and limitations of the pyESD downscaling package, and (b) generating 21st century climate change estimates can contribute to regional climate impact assessments and adaptation.

Figure 2. Weather station locations and elevations in the Neckar catchment. The red circles represent temperature stations (ID corresponds to Table 1b), and the black circles represent precipitation stations (ID corresponds to Table 1a). The color map shows the elevation and delineates the extent of the catchment.

Figure 3. Long-term (1958–2020) monthly means of (a) precipitation and (b) temperature, averaged over all stations in the catchment. The error bars are the standard deviations that represent inter-station variability. The maximum precipitation and temperature in the catchment are recorded in the summer season (JJA).

In this case study, we apply pyESD to predict local temperature and precipitation changes for 22 weather stations located in the catchment (Table 1) and demonstrate the package's flexibility by performing experiments with the different modeling alternatives. We show most of the PP-ESD steps required for generating robust downscaling products. These steps include (1) predictor selection and construction; (2) model selection, training, and cross-validation; (3) model evaluation; and (4) generating future predictions through ESD–GCM coupling (see Sect. 3.2 for details). We note that the focus of the case study is more on demonstrating the pyESD workflow and functionality and less on detailed discussions of the downscaled results and their implications. In order to allow readers to reproduce and learn from this application example, we only use public and freely available datasets (see Sect. 3.1 for more details about the data). Moreover, all scripts used in this study (i.e., data preprocessing, modeling, and visualization scripts) are provided in the code and data availability section.

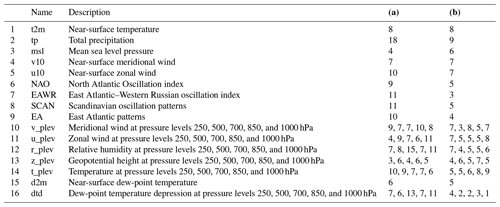

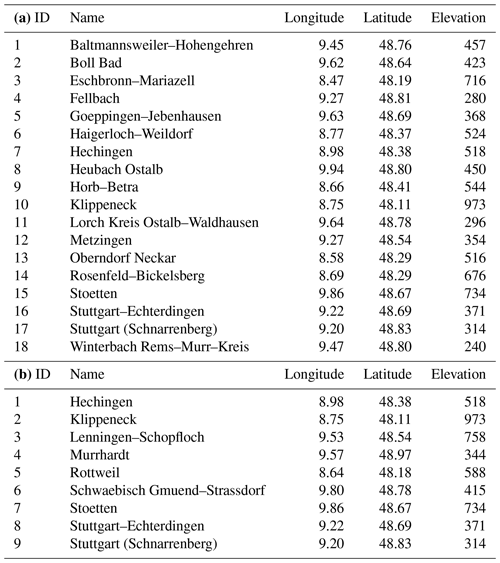

Table 1. IDs (specific to this study), names, coordinates, and elevation (m) for weather stations recording (a) precipitation and (b) temperature.

3.1 Datasets

3.1.1 Weather station data

Monthly precipitation and temperature station data from the German Weather Service (Deutscher Wetterdienst, DWD; accessible from https://cdc.dwd.de/portal/, last access: 30 October 2023) served as the predictand time series in this study. We considered all weather station records that (a) originated from measurements in the Quelle–Enz subcatchment, (b) covered the time period of 1958 to 2020, and (c) were at least 30 years in length. Even though there is no well-established and universally valid recommendation for the minimum record length in a PP-ESD approach (e.g., Hewitson et al., 2014), we chose a conservative 30-year threshold to ensure that the models can be evaluated with truly independent, retained data (see Sect. 2.5). The remaining weather stations are summarized in Table 1. These were loaded into predictand station objects (SOs) as follows.

    from pyESD.Weatherstation import read_station_csv

    variable = "Temperature"  # or "Precipitation"
    # filename is the path to the station record (CSV file)
    SO = read_station_csv(filename, variable)

3.1.2 Reanalysis datasets

The ERA5 reanalysis products, produced and managed by the European Centre for Medium-Range Weather Forecasts (ECMWF), were used to construct the predictors in this study. ERA5 is based on historical records from various observational systems (e.g., ocean buoys, aircraft, weather stations) that are dynamically interpolated with numerical forecasting models in a four-dimensional variational (4D-Var) data assimilation scheme to generate global, homogeneous, spatially gridded datasets (Bell et al., 2021). It has a spatial resolution of approximately 31 km (or TL639) and is available as hourly data, covering 1950 to the present day with a 5 d lag in data availability (Hersbach et al., 2020). For this study, however, mean monthly values were used in the construction of potential predictors (Table 2). These are publicly available from the Copernicus Climate Data Store (CDS) (accessible at https://cds.climate.copernicus.eu, last access: 30 October 2023).

3.1.3 GCM simulation datasets

For the ESD–GCM coupling, the predictors were reconstructed from an MPI-ESM (Max Planck Institute Earth System Model) GCM simulation that follows the protocols of the World Climate Research Programme's (WCRP) Coupled Model Intercomparison Project phase 5 (CMIP5) (Taylor et al., 2012). We highlight that CMIP5 model output was chosen in this illustrative study to enable a consistent comparison with previous regional climate model simulations over the region and that any GCM output (e.g., CMIP6) can be combined with pyESD. For the case study, we consider several simulations (accessible at https://cds.climate.copernicus.eu, last access: 30 October 2023) forced with different RCP scenarios (Moss et al., 2010) to predict the local-scale response to the plausible range of forcings. In order to highlight the added value of the downscaled product, the local-scale future estimates are compared to the coarser predictions of several GCMs (i.e., MPI-ESM; CESM1-CAM5 of the National Center for Atmospheric Research, NCAR, Kay et al., 2015; and HadGEM2-ES of the Hadley Centre of the UK Met Office, Collins et al., 2008) and RCMs (CORDEX-Europe simulation with MPI-CSC-REMO2009 driven with boundary conditions from MPI-ESM).

3.2 Methods

3.2.1 Predictor selection and construction

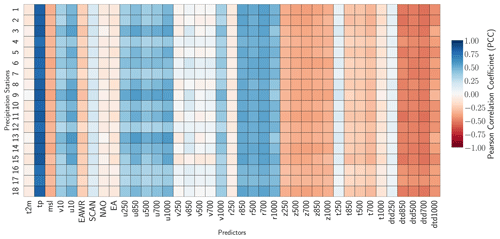

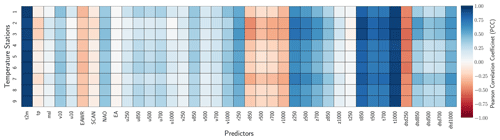

The considered predictors must be large-scale climate elements that are both physically and empirically relevant to predicting the local-scale climate variability in the vicinity of the weather station. The physical relevance of the considered predictors (Table 2) is established through previous studies and general climatological merit. We then apply a monthly standardizer to remove seasonality and long-term trends and to scale the individual predictors. The empirical relationship with the predictand is then evaluated with the PCCs defined in Eq. (12). Finally, first estimates of their predictive skills are obtained through the application of the package's recursive, sequential, and tree-based predictor selection algorithms in a CV setting. These preliminary experiments are conducted to refine the selection of predictors further. After the predictor selection process, each weather station and predictand is associated with a particular subset of predictors (Table 2) that are later used to train the final ESD model for the station (Sect. 3.2.2).

The steps above are implemented with pyESD as follows.

- We create a list (predictors) of all considered predictors with physical relevance to the predictand. We then use the set_predictors method of the station object (SO) to read the data in the local directory (predictordir) and construct regional means with a defined radius of 200 km around the station location. These are regional means of relevant climate variables and serve as the simplest type of predictor. For the construction of indices for atmospheric teleconnection patterns (i.e., NAO, EA, SCAN, and EAWR), which serve as further predictors, the package automatically calls the pyESD.teleconnections module if the pattern's acronym is included in the list of predictors.

      predictors = ["t2m", "tp", "NAO", ..., "nth predictor"]
      # read the predictor data and construct regional means around the station
      SO.set_predictors(variable, predictors, predictordir, radius=200)  # radius in km

- We apply the monthly standardizer and then use the predictor_correlation method to estimate the PCC between the predictand and predictors.

      from pyESD.standardizer import MonthlyStandardizer

      SO.set_standardizer(variable,
                          standardizer=MonthlyStandardizer(detrending=True, scaling=True))
      df_corr = SO.predictor_correlation(variable, predictor_range, ERA5Data,
                                         fit_predictors=True, fit_predictand=True,
                                         method="pearson")

- The final refinement of the predictor list is implemented as part of the fit method. We use the set_model method to define the ARD regressor and the TimeSeriesSplit CV setting and then call the fit method in a loop through the three types of selector methods.

      from pyESD.splitter import TimeSeriesSplit

      SO.set_model(variable, method="ARD", cv=TimeSeriesSplit(n_splits=10))
      selector_methods = ["Recursive", "TreeBased", "Sequential"]
      for selector_method in selector_methods:
          # selector_regressor names the regressor used inside the selector
          SO.fit(variable, predictor_range, ERA5Data, fit_predictors=True,
                 predictor_selector=True, selector_method=selector_method,
                 selector_regressor="ARD")
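
The construction of a regional-mean predictor within a fixed radius of a station can be sketched independently of the package. The example below is a minimal illustration under stated assumptions: grid points are given as (latitude, longitude, value) tuples, distances are great-circle distances on a spherical Earth, the mean is unweighted, and the function names and coordinates are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def regional_mean(grid_points, station_lat, station_lon, radius_km=200.0):
    """Average the values of all grid points within radius_km of the station."""
    inside = [
        value
        for lat, lon, value in grid_points
        if haversine_km(station_lat, station_lon, lat, lon) <= radius_km
    ]
    return sum(inside) / len(inside)

# Hypothetical grid values around a station at 48.5° N, 9.0° E
grid = [(48.5, 9.0, 10.0), (49.0, 9.5, 12.0), (55.0, 9.0, 100.0)]
t2m_regional = regional_mean(grid, 48.5, 9.0, radius_km=200.0)
```

Here, the third grid point lies more than 700 km north of the station and is excluded, so the regional mean is the average of the two nearby values.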

3.2.2 Model training and validation

Model training and validation are performed separately for each predictand and weather station. The models are trained in a CV setting for the period 1958–2010 and then assessed on independent retained data for the period 2011–2020. In the training process, we use seven different methods before deciding on an estimator for the final model. These methods include at least one representative for each of the families of ML algorithms (see Sect. 2.3) except SVR. We exclude SVR due to its high computational demands for optimization and to ensure the easy reproducibility of the illustrative example on less powerful computers. We perform the initial model training and validation with the LassoLarsCV, ARD, MLP, RandomForest, XGBoost, bagging, and stacking regressors using a KFold(n_splits=10) validation scheme for hyperparameter optimization. For the stacking regressor, we use all the other regressors as base estimators (i.e., level-0 learners) and ExtraTree as the meta-learner. The final ESD model is then selected based on the CV metrics (i.e., CV R2 and CV RMSE) of the individual models.

The steps above are implemented with pyESD as follows: the models are trained with the fit method as described in Sect. 3.2.1. The cross_validate_and_predict method is applied to calculate the CV metrics and generate the predictions for the training period 1958–2010. The predict method is then used to generate predictions for the 2011–2020 period from the models trained in the 1958–2010 period. Finally, the evaluate method is used to obtain the model performance metrics based on the 2011–2020 predictions and retained data. The R2, RMSE, and MAE (see Sect. 2.5) are used as both CV and evaluation metrics in this study. The ERA5 reanalysis product is specified as the predictor dataset for all these methods.

    # CV metrics and predictions for the training period
    cv_score_1958to2010, predict_1958to2010 = SO.cross_validate_and_predict(
        variable, from1958to2010, ERA5Data)
    # predictions for the independent testing period
    predict_2011to2020 = SO.predict(variable, from2011to2020, ERA5Data)
    # performance metrics on the retained data
    scores_2011to2020 = SO.evaluate(variable, from2011to2020, ERA5Data)

3.2.3 Future prediction

Future predictions are generated by coupling the final ESD models to GCM simulations for the 21st century. In the illustrative example, we use MPI-ESM simulations that were forced with greenhouse gas concentration scenarios RCP2.6, RCP4.5, and RCP8.5. This coupling is achieved as follows: the predictors selected during model training are reconstructed from the GCM output. These simulated predictors are standardized with the MonthlyStandardizer parameters obtained from the reanalysis predictors to ensure data homogenization. Prediction anomalies are calculated using the training period 1958–2010 as a reference. The resulting RCP-specific 21st century prediction anomaly time series are then used to calculate the annual means (2020–2100), as well as the seasonal (DJF, MAM, JJA, SON) and annual 30-year climatologies for the mid-century (2040–2070) and the end of the century (2070–2100). The predicted anomalies are then back-transformed to their respective absolute values for all stations and compared to the raw outputs of GCMs and RCMs (i.e., CESM1-CAM5, HadGEM2-ES, EURO-CORDEX, and MPI-ESM; see Sect. 3.1.3) using the nearest grid point. In pyESD, a future prediction can be generated by using the predict method (Sect. 3.2.2) and specifying the GCM output as the predictor data source.
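
Standardizing simulated predictors with parameters obtained from the reanalysis means that the monthly means and standard deviations are fitted once on the reference data and then reused unchanged. The sketch below illustrates this with plain Python. It omits detrending, and the function names and synthetic series are assumptions made for this example rather than the MonthlyStandardizer code.

```python
import math

def fit_monthly_params(values, months):
    """Mean and standard deviation per calendar month (1-12) from the reference series."""
    params = {}
    for m in set(months):
        sample = [v for v, mm in zip(values, months) if mm == m]
        mean = sum(sample) / len(sample)
        std = (sum((v - mean) ** 2 for v in sample) / len(sample)) ** 0.5
        params[m] = (mean, std)
    return params

def apply_monthly_params(values, months, params):
    """Standardize a series with previously fitted monthly parameters."""
    return [(v - params[m][0]) / params[m][1] for v, m in zip(values, months)]

# Three hypothetical years of monthly data: seasonal cycle plus a year-to-year offset
months = [m for _ in range(3) for m in range(1, 13)]
reanalysis = [10 * math.sin(2 * math.pi * m / 12) + year
              for year in (-1, 0, 1) for m in range(1, 13)]
simulated = [v + 2.0 for v in reanalysis]  # hypothetical GCM series with a shift of +2

params = fit_monthly_params(reanalysis, months)            # fitted on the reanalysis only
reanalysis_std = apply_monthly_params(reanalysis, months, params)
simulated_std = apply_monthly_params(simulated, months, params)
```

The standardized reanalysis series has zero mean by construction, whereas the simulated series keeps its offset in units of the reanalysis standard deviation. The shift relative to the reference climate is therefore preserved rather than removed.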

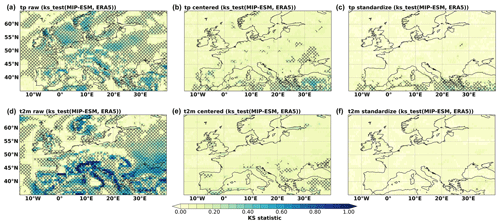

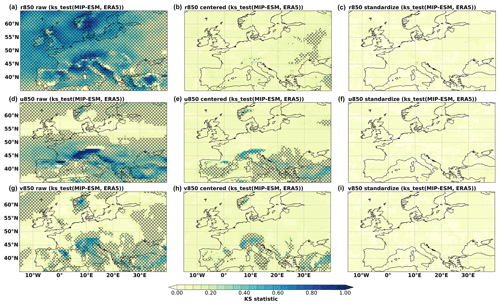

The PP-ESD approach relies on the assumption that the predictors are well-represented by the GCM. We therefore perform KS tests to evaluate the distribution similarity between GCM and ERA5 predictors for the datasets' temporal overlap. The KS statistic lies within the 0–1 range, with lower values indicating greater distribution similarity. For our two-sided tests, we reject the null hypothesis (H0: the datasets have identical underlying distributions) if the p value is smaller than 0.05. We perform the test on the raw monthly time series, monthly anomalies, and standardized anomalies in order to separate the distributional differences caused by errors in the first moment (mean) from those caused by errors in the second moment (variance) (Bedia et al., 2020). The KS_stat function implemented in the pyESD.utils module is used to test several of the informative predictors (such as tp, t2m, r850, u850, and v850).
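
The logic of testing raw, centered, and standardized series can be illustrated with a hand-written two-sample KS statistic. The sketch is not the KS_stat function of pyESD and computes no p value, for which an established routine such as scipy.stats.ks_2samp can be used. The function names and the synthetic samples are assumptions made for this example.

```python
import bisect

def ks_statistic(sample_a, sample_b):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    for v in a + b:
        cdf_a = bisect.bisect_right(a, v) / len(a)
        cdf_b = bisect.bisect_right(b, v) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap

def remove_mean(sample):
    """Anomalies: removes differences in the first moment."""
    mean = sum(sample) / len(sample)
    return [v - mean for v in sample]

def standardize(sample):
    """Standardized anomalies: removes differences in the first and second moments."""
    anomalies = remove_mean(sample)
    std = (sum(v * v for v in anomalies) / len(anomalies)) ** 0.5
    return [v / std for v in anomalies]

reanalysis = [float(v) for v in range(20)]
simulated = [2.0 * v + 5.0 for v in reanalysis]  # hypothetical errors in mean and variance

ks_raw = ks_statistic(reanalysis, simulated)
ks_anomalies = ks_statistic(remove_mean(reanalysis), remove_mean(simulated))
ks_standardized = ks_statistic(standardize(reanalysis), standardize(simulated))
```

In this constructed case, the two samples differ only in mean and variance, so the statistic drops from 0.6 for the raw series to near zero once both moments are removed. A large statistic that persists after standardization would instead point to differences in the shape of the distributions.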

In this section, we present and discuss the results of the illustrative case study. The discussion places more emphasis on the functionalities of the package than the climatological implications. Specifically, we discuss the results of the predictor selection step (Sect. 4.1), the training and validation of the model (Sect. 4.2), the final model performance (Sect. 4.3), and the future predictions generated through the ESD–GCM coupling (Sect. 4.4).

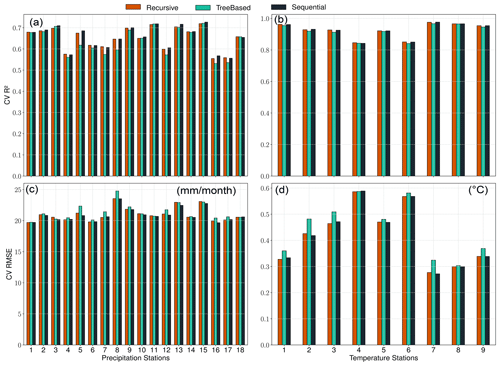

Figure 4. Cross-validation R² and RMSE for the predictor selection methods (recursive in red, tree-based in green, and sequential in black) for precipitation (a, c) and temperature (b, d) station records. The individual methods performed similarly well, suggesting that each of the implemented methods may be used to refine the list of potential predictors.

4.1 Predictor selection

All implemented predictor selection methods demonstrated merit, and the correlation analyses revealed strong linear dependencies between the predictand variables and potential predictors (Figs. A1 and A2). For example, precipitation records are highly correlated (PCC ≥0.5) with large-scale total precipitation (tp), atmospheric relative humidity (r), and zonal wind velocity (u) up to the mid-tropospheric level (i.e., 500–1000 hPa) (Fig. A1). The temperature records are highly correlated (PCC ≥0.7) with near-surface temperature (t2m), atmospheric temperature (t on all levels), and dew-point temperature depression (dtd) up to the mid-troposphere (Fig. A2). Both predictands also show a good correlation (PCC ≥0.25) with the indices of the atmospheric teleconnection patterns (i.e., NAO, EA, EAWR, and SCAN). The predictor selection methods (i.e., recursive, tree-based, and sequential) perform similarly for all the precipitation and temperature stations (Fig. 4). More specifically, the three methods yield CV R² values of 0.5 to 0.75 (Fig. 4a) and CV RMSE values of ≤25 mm per month (Fig. 4c) for precipitation, as well as CV R² values of ≥0.95 (Fig. 4b) and CV RMSE values of 0.3 to 0.6 °C (Fig. 4d) for temperature stations. Because the methods did not differ significantly in performance, the recursive method was applied to refine the set of predictors, as it allows more flexibility and a stepwise iteration of several combinations of potential predictors (e.g., Mutz et al., 2021; Hammami et al., 2012; Li et al., 2020). The frequencies with which specific predictors were selected using the recursive method are listed in Table 2.
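The comparison of the three selection strategies can be sketched with scikit-learn selectors. This is an illustration under stated assumptions, not the pyESD implementation: the data are synthetic, the predictor names are placeholders, ARDRegression is an arbitrary choice of scoring estimator, and `RFECV`, `SelectFromModel`, and `SequentialFeatureSelector` stand in for the recursive, tree-based, and sequential methods, respectively.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesRegressor
from sklearn.feature_selection import RFECV, SelectFromModel, SequentialFeatureSelector
from sklearn.linear_model import ARDRegression
from sklearn.model_selection import KFold, cross_val_score

rng = np.random.default_rng(1)
n_samples = 240
names = np.array(["tp", "r850", "u850", "noise1", "noise2", "noise3"])  # placeholders

# Synthetic predictors: only the first three carry signal.
X = rng.normal(size=(n_samples, len(names)))
y = 3.0 * X[:, 0] + 2.0 * X[:, 1] + 1.0 * X[:, 2] + rng.normal(scale=0.5, size=n_samples)

cv = KFold(n_splits=5)
selectors = {
    "recursive": RFECV(ARDRegression(), cv=cv, scoring="r2"),
    "tree-based": SelectFromModel(ExtraTreesRegressor(n_estimators=100, random_state=0)),
    "sequential": SequentialFeatureSelector(
        ARDRegression(), n_features_to_select=3, cv=cv, scoring="r2"
    ),
}

selected, cv_r2 = {}, {}
for label, selector in selectors.items():
    selector.fit(X, y)
    mask = selector.get_support()  # boolean mask of retained predictors
    selected[label] = list(names[mask])
    # Score the refined predictor set with the same estimator and CV scheme.
    cv_r2[label] = cross_val_score(ARDRegression(), X[:, mask], y, cv=cv, scoring="r2").mean()
    print(f"{label}: predictors={selected[label]}, CV R2={cv_r2[label]:.2f}")
```

Scoring every refined predictor set with the same estimator and cross-validation scheme keeps the comparison between selection methods fair, which mirrors the logic behind Fig. 4.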

The predictors tp and t2m were included for most of the precipitation and temperature station records, respectively. This indicates that variations in the larger-scale precipitation and temperature fields already explain much of the local-scale predictand variability in the vicinity of the weather stations. Many of the refined predictor sets also included indices of the NAO (9 of 18 precipitation stations, 5 of 9 temperature stations), SCAN (11 of 18 precipitation stations, 5 of 9 temperature stations), EA (10 of 18 precipitation stations, 4 of 9 temperature stations), and EAWR (11 of 18 precipitation stations, 3 of 9 temperature stations). This confirms the strong manifestation of Northern Hemisphere atmospheric teleconnection patterns in the local-scale precipitation and temperature in the catchment (e.g., Bárdossy, 2010; Ludwig et al., 2003). Their exclusion at the other stations is likely because their variability is already captured by zonal and meridional wind speeds and synoptic pressure variables like geopotential height (z) and mean sea level pressure (slp) (Hurrell and Van Loon, 1997; Hurrell, 1995; Barnston and Livezey, 1987; Maraun and Widmann, 2018). Relative humidity was selected as a predictor for most of the precipitation stations. This is consistent with the results of many other studies (e.g., Gutiérrez et al., 2019; Hammami et al., 2012) and our physical understanding of it as a measure of humidity that takes saturation vapor pressure into consideration.

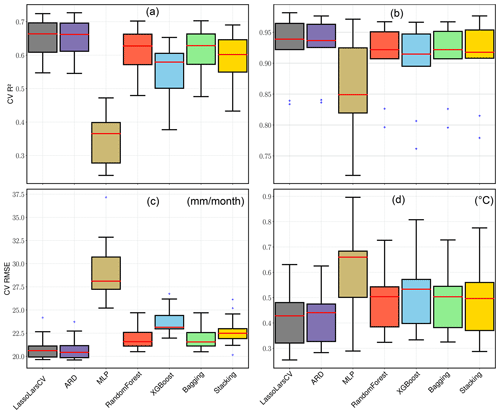

Figure 5. Cross-validation R² and RMSE box plots comparing the experimental regressors' performance for all the precipitation (a, c) and temperature (b, d) stations. The red lines inside the box represent the median, the lower and upper box boundaries indicate the 25th and 75th percentiles, and the lower and upper error lines show the 10th and 90th percentiles, respectively. The black plus marks show the outliers outside the range of the 10th and 90th percentiles.

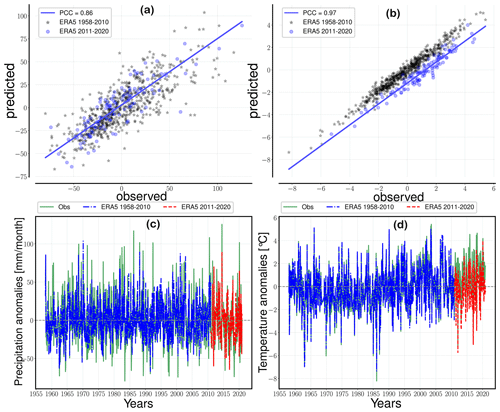

Figure 6. Prediction example for the Hechingen station using the final regressor for precipitation (a, c) and temperature (b, d). The top panels (a, b) show the linear relationship between the predictions and observed values, as well as the PCC (R value) for the testing data (blue-colored circles). The bottom panels (c, d) show the 1-year moving average of the observed (green, solid) and ERA5-driven predictions for the training period (blue, dash-dotted) and the testing period (red, dashed).

4.2 Performance of individual estimators

We experimented with seven different regressors before deciding on the regressor that would be used to establish the final ESD models (see Sect. 3.2.2). A total of 126 precipitation and 63 temperature experimental models were generated with the seven regressors. Overall, most of the experimental models performed reasonably well with a mean CV R² of ≥0.5 for precipitation and ≥0.9 for temperature stations (Fig. 5). The MLP models, on the other hand, performed relatively poorly with CV R² values of ≤0.4 for precipitation and ≤0.9 for temperature. This is because MLP model calibration requires longer records and a more complex architecture to capture most of the informative patterns in the training data. This study, however, uses a simplified architecture to make the results reproducible without higher computational requirements. The result can likely be improved with more data (e.g., by using daily values) and an increase in hidden layers (Sect. 2.2.3). The overall performance of the experimental models underlines the methods' suitability for downscaling.

Among the better-performing precipitation models, the LassoLarsCV and ARD methods yielded the best results (CV R²=0.55–0.75, CV RMSE = 20–23 mm per month), followed by the RandomForest and bagging ensembles (CV R²=0.48–0.70, CV RMSE = 21–25 mm per month), as well as the XGBoost ensemble regressor (CV R²=0.39–0.65, CV RMSE = 22–27 mm per month). Stacking all experimental models into a meta-regressor also yields good results (CV R²=0.45–0.7, CV RMSE = 20–26 mm per month) despite the poor performance of the MLP regressors. Based on these results, the LassoLarsCV, ARD, RandomForest, and bagging regressors were selected as the final base learners for the stacking model. ExtraTree was chosen as the final meta-learner to prevent overfitting issues by placing an additional discriminative threshold on all the base regressors' predictions (Geurts et al., 2006).

The experimental temperature models showed similar patterns in performance but performed better overall. LassoLarsCV and ARD emerge as the best-performing models (CV R²=0.85–0.98, CV RMSE = 0.2–0.6 °C), followed by the RandomForest and bagging regressors (CV R²=0.8–0.96, CV RMSE = 0.3–0.7 °C), as well as the XGBoost and stacking ensemble regressors (CV R²=0.75–0.96, CV RMSE = 0.3–0.8 °C). Therefore, we also selected stacking (with LassoLarsCV, ARD, RandomForest, and bagging) for the final temperature models.
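The stacking configuration described above can be sketched with scikit-learn. This is a minimal illustration rather than the pyESD code path: it assumes scikit-learn's `StackingRegressor` with default hyperparameters for most estimators, `ExtraTreesRegressor` as a stand-in for the ExtraTree meta-learner, and synthetic data in place of the station records and predictors.

```python
import numpy as np
from sklearn.ensemble import (
    BaggingRegressor,
    ExtraTreesRegressor,
    RandomForestRegressor,
    StackingRegressor,
)
from sklearn.linear_model import ARDRegression, LassoLarsCV
from sklearn.model_selection import KFold, cross_validate

rng = np.random.default_rng(2)
X = rng.normal(size=(240, 5))  # synthetic standardized predictors
y = 3.0 * X[:, 0] + 2.0 * X[:, 1] + X[:, 2] + rng.normal(scale=0.5, size=240)

# Base learners named in the text; hyperparameters are illustrative assumptions.
base_learners = [
    ("lassolarscv", LassoLarsCV(cv=5)),
    ("ard", ARDRegression()),
    ("randomforest", RandomForestRegressor(n_estimators=50, random_state=0)),
    ("bagging", BaggingRegressor(n_estimators=50, random_state=0)),
]

# The meta-learner is trained on out-of-fold predictions of the base learners.
stack = StackingRegressor(
    estimators=base_learners,
    final_estimator=ExtraTreesRegressor(n_estimators=50, random_state=0),
    cv=5,
)

cv_results = cross_validate(
    stack, X, y, cv=KFold(n_splits=5), scoring=("r2", "neg_root_mean_squared_error")
)
mean_r2 = cv_results["test_r2"].mean()
mean_rmse = -cv_results["test_neg_root_mean_squared_error"].mean()
print(f"CV R2={mean_r2:.2f}, CV RMSE={mean_rmse:.2f}")
```

Training the meta-learner on out-of-fold predictions, rather than on in-sample fits of the base learners, is what limits the information leakage that would otherwise favor the most overfitted base model.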

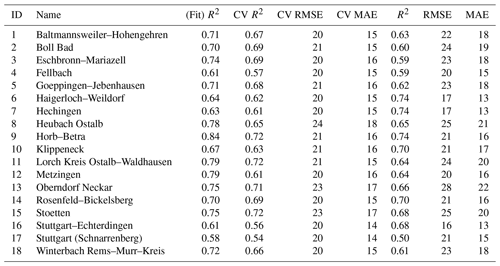

4.3 Performance of the final estimator

Following the analysis of the seven experimental models (Sect. 4.2), the recursive predictor selection method and stacking learning model (with LassoLarsCV, ARD, RandomForest, and bagging) were selected for the generation of the final ESD models. The models were trained on the 1958–2010 data in a CV setting and evaluated on the retained data in the 2011–2020 period. R², RMSE, and MAE were used as performance metrics for the CV setting and the final evaluation (Tables 3 and 4). The models' performance was good overall but varied notably between different stations. The prediction skill estimates were higher for temperature than for precipitation. For temperature (Table 4), the explained variance estimates (“Fit R²”) are in the range of 0.81–0.98 (μ=0.94), and CV R² values are in the range of 0.84–0.98 (μ=0.93), whereas for precipitation (Table 3), the explained variance estimates are in the range of 0.58–0.84 (μ=0.71), and CV R² values are in the range of 0.54–0.72 (μ=0.65). The accuracy measures display a similar discrepancy with CV RMSE of 0.3–0.6 °C (μ=0.42 °C) and CV MAE of 0.2–0.5 °C (μ=0.34 °C) for temperature, as well as CV RMSE of 20–24 mm per month (μ=21 mm per month) and CV MAE of 14–18 mm per month (μ=16 mm per month) for precipitation.
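The evaluation protocol (cross-validation on 1958–2010, then a final test on the retained 2011–2020 data) can be sketched as follows. The example assumes synthetic monthly data, placeholder predictor names, and a single ARDRegression model instead of the final stacking model; only the split and the three metrics follow the text.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import ARDRegression
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score
from sklearn.model_selection import KFold, cross_validate

rng = np.random.default_rng(3)
index = pd.date_range("1958-01-01", "2020-12-01", freq="MS")
X = pd.DataFrame(
    rng.normal(size=(len(index), 3)), index=index, columns=["t2m", "t850", "nao"]
)
y = 2.0 * X["t2m"] + 1.0 * X["t850"] + 0.3 * X["nao"] + rng.normal(0.0, 0.5, len(index))

# Temporal split: training/CV period versus independent, retained test period.
X_train, y_train = X.loc["1958":"2010"], y.loc["1958":"2010"]
X_test, y_test = X.loc["2011":"2020"], y.loc["2011":"2020"]

model = ARDRegression()
scoring = {
    "r2": "r2",
    "rmse": "neg_root_mean_squared_error",
    "mae": "neg_mean_absolute_error",
}
cv_scores = cross_validate(model, X_train, y_train, cv=KFold(n_splits=10), scoring=scoring)
cv_metrics = {
    "r2": cv_scores["test_r2"].mean(),
    "rmse": -cv_scores["test_rmse"].mean(),  # scorers return negated errors
    "mae": -cv_scores["test_mae"].mean(),
}

# Final evaluation on data the model never saw during training or CV.
prediction = model.fit(X_train, y_train).predict(X_test)
test_metrics = {
    "r2": r2_score(y_test, prediction),
    "rmse": float(np.sqrt(mean_squared_error(y_test, prediction))),
    "mae": mean_absolute_error(y_test, prediction),
}
print(cv_metrics)
print(test_metrics)
```

Comparable values in `cv_metrics` and `test_metrics` correspond to the situation described in the text, where similar performance during CV and the final evaluation is read as an indication that the models were not overfitted.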

Table 3. Model performance metrics (i.e., R², RMSE, and MAE) for all the precipitation stations. The final ESD models were trained in a CV setting on datasets from 1958–2010 and evaluated on independent, retained data from 2011–2020.

Table 4. Model performance metrics (i.e., R², RMSE, and MAE) for all the temperature stations. The final ESD models were trained in a CV setting on datasets from 1958–2010 and evaluated on independent, retained data from 2011–2020.

The final model evaluation using independent, retained data from 2011–2020 yielded R² values of up to 0.95 as well as average RMSE and MAE of ∼1.0 °C for temperature and R² values of up to 0.74, average RMSE of 22 mm per month, and MAE of 17 mm per month for precipitation. The discrepancy in temperature and precipitation model performance is unsurprising, since the thermodynamics and atmospheric dynamics controlling precipitation variability are more difficult to represent (e.g., Shepherd, 2014). Regardless, the overall performance speaks in favor of applying the study's approach to downscale midlatitude climate in complex terrain. Moreover, the models' similar performance during CV and the final evaluation indicates that the models were not overfitted and that the predictand–predictor relationships hold outside the observed period. Finally, it is worth noting that the stacking regressor performed better than the individual base models, even when all the potential regressors of the initial experiments (Sect. 4.2) were stacked into a meta-regressor. Such improvements demonstrate the advantage of the ease of experimentation through a package like pyESD.

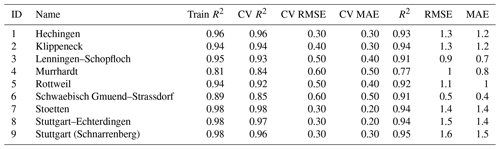

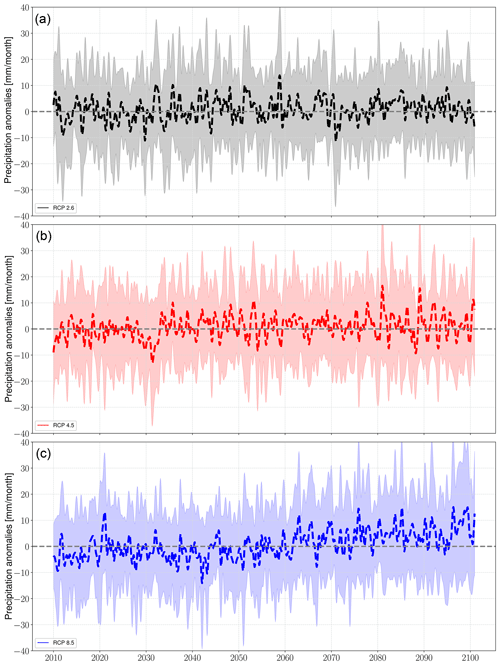

Figure 7. Predicted regional annual means of precipitation in response to (a) RCP2.6 (black), (b) RCP4.5 (red), and (c) RCP8.5 (blue). The solid lines represent the values averaged over all stations, and the shaded boundaries indicate the corresponding variability range (1 standard deviation). The time series are smoothed with a 1-year moving average with a centered mean.

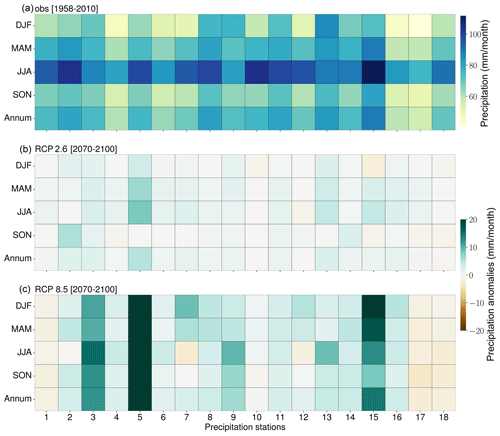

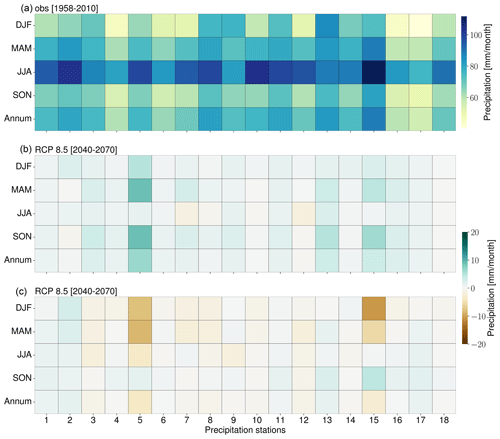

Figure 8. (a) Observed precipitation (1958–2100) as well as seasonal (i.e., spring – MAM, summer – JJA, autumn – SON, and winter – DJF) and annual end-of-century (30-year) precipitation climatologies as a result of RCP2.6 (b) and RCP8.5 (c) forcing. Brown (green) indicates a decrease (increase) in precipitation relative to the observed means (1958–2010).

We visualize a prediction example (Fig. 6) to (a) provide a less abstract presentation of these results and (b) demonstrate the type of figure generated by the plotting utility functions in the pyESD.plot module. The figure depicts the predictions generated by the final ESD model for the Hechingen station, a station that records precipitation and temperature (station IDs 7 and 1, respectively). The observed and predicted values for 2011–2020 are highly correlated, with PCCs of 0.85 (Fig. 6a) for precipitation and 0.97 (Fig. 6b) for temperature. The time series comparisons also demonstrate the models' abilities to predict the variability of the observed values in both the training and testing period (Fig. 6c and d). Prior to this study, PP-ESD models had not been directly applied to the weather stations in the catchment. However, our models are among the best performing for temperature and precipitation when we compare them to models from other studies across Europe (e.g., Gutiérrez et al., 2019; Hertig et al., 2019; Schmidli et al., 2007). For instance, Gutiérrez et al. (2019) performed an intercomparison of statistical downscaling model performance for 86 stations across Europe using the MOS, PP, and WG methods. The Spearman correlation of the downscaled and observed values yielded R values in the range of ∼0.0–0.7 (with many stations ≤0.5) for precipitation and 0.3–0.95 for temperature. These comparisons also underline the suitability of the pyESD methods for downscaling climate information even in complex mountainous regions.

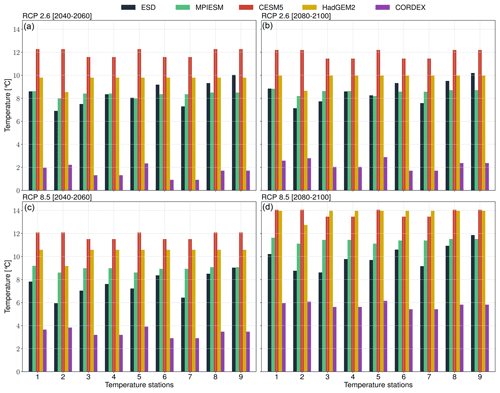

4.4 Prediction of local responses to 21st century climate change

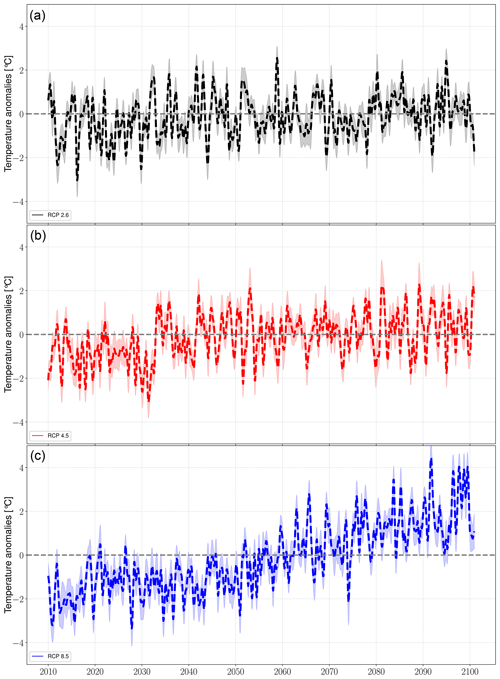

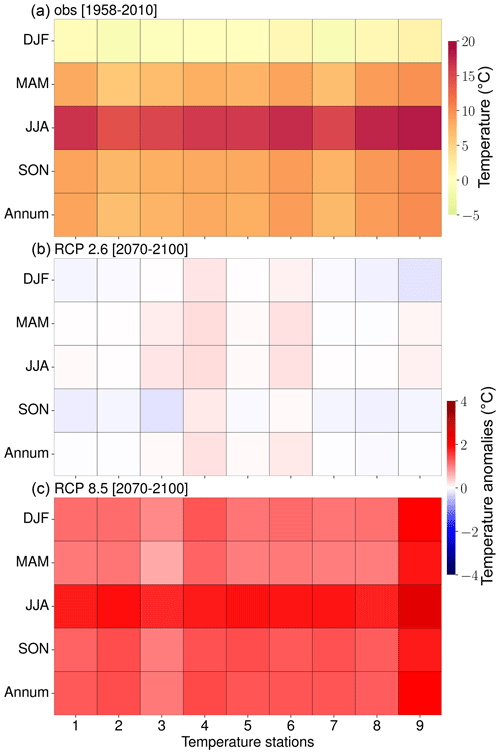

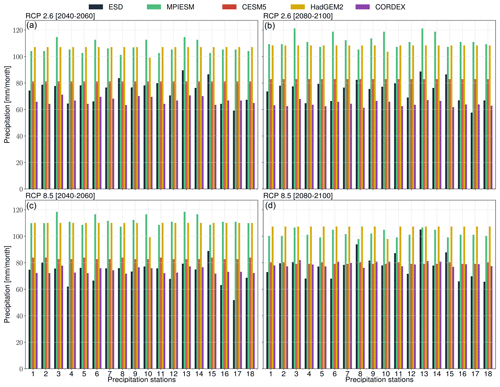

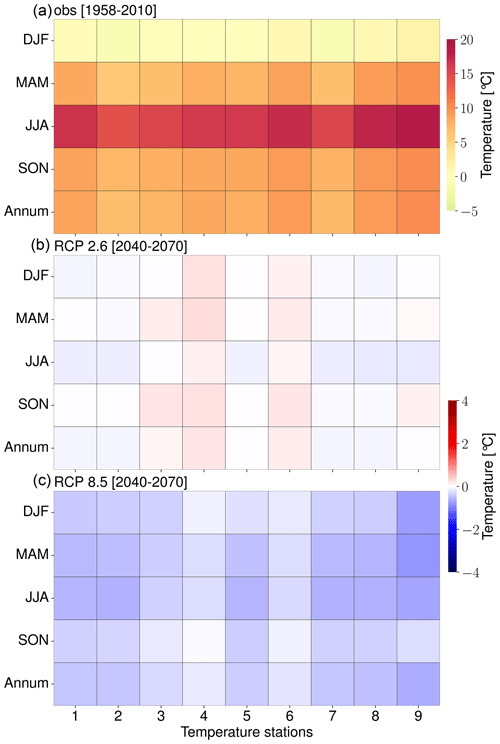

The predictions of local precipitation and temperature responses to 21st century climate change were generated by coupling the final ESD models to MPI-ESM simulations forced with greenhouse gas concentration scenarios RCP2.6, RCP4.5, and RCP8.5 (Sect. 3.2.3). The results are presented as deviations from the monthly long-term means of the training period (1958–2010) and referred to as “anomalies” hereafter. The annual mean anomaly time series were computed with a 1-year moving average with a centered mean (Figs. 7 and 9).
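The post-processing of the predictions can be sketched with pandas. The example assumes a synthetic monthly temperature series with a hypothetical warming trend after 2010 in place of the downscaled output. It computes anomalies relative to the 1958–2010 monthly long-term means, a centered 12-month moving average, annual means for 2020–2100, and seasonal 30-year climatologies for the end of the century.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(7)
index = pd.date_range("1958-01-01", "2100-12-01", freq="MS")
decimal_year = index.year.to_numpy() + (index.month.to_numpy() - 0.5) / 12

# Synthetic stand-in for a downscaled series (hypothetical 0.03 degC/yr trend).
trend = 0.03 * np.clip(decimal_year - 2010, 0, None)
seasonal = 9.0 * np.sin(2 * np.pi * (index.month.to_numpy() - 4) / 12)
temperature = pd.Series(
    8.0 + seasonal + trend + rng.normal(0.0, 0.8, len(index)), index=index
)

# Anomalies: deviations from the monthly long-term means of the training period.
reference = temperature.loc["1958":"2010"]
monthly_means = reference.groupby(reference.index.month).mean()
anomalies = temperature - monthly_means.loc[temperature.index.month].to_numpy()

# 1-year (12-month) moving average with a centered window.
smoothed = anomalies.rolling(window=12, center=True).mean()

# Annual means for 2020-2100.
future = anomalies.loc["2020":"2100"]
annual_means = future.groupby(future.index.year).mean()

# Seasonal climatologies for the end of the century (2070-2100).
season_of_month = {12: "DJF", 1: "DJF", 2: "DJF", 3: "MAM", 4: "MAM", 5: "MAM",
                   6: "JJA", 7: "JJA", 8: "JJA", 9: "SON", 10: "SON", 11: "SON"}
end_century = anomalies.loc["2070":"2100"]
season_labels = np.array([season_of_month[m] for m in end_century.index.month])
seasonal_climatology = end_century.groupby(season_labels).mean()

print(annual_means.tail(3))
print(seasonal_climatology)
```

Removing the monthly means of the reference period, rather than a single long-term mean, takes the seasonal cycle out of the anomalies, so that the smoothed series and the climatologies reflect the change relative to 1958–2010 only.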

Figure 9. Predicted regional annual means of the temperature in response to (a) RCP2.6 (black), (b) RCP4.5 (red), and (c) RCP8.5 (blue). The solid lines represent the values averaged over all stations, and the shaded boundaries indicate the corresponding variability range (1 standard deviation). The time series are smoothed with a 1-year moving average with a centered mean.

Figure 10. (a) Observed temperature (1958–2100) as well as seasonal (i.e., spring – MAM, summer – JJA, autumn – SON, and winter – DJF) and annual end-of-century (30-year) temperature climatologies as a result of RCP2.6 (b) and RCP8.5 (c) forcing. Blue (red) indicates a decrease (increase) in temperature relative to the observed means (1958–2010).