the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Process-oriented models of autumn leaf phenology: ways to sound calibration and implications of uncertain projections

Christof Bigler

Autumn leaf phenology marks the end of the growing season, during which trees assimilate atmospheric CO2. The length of the growing season is affected by climate change because autumn phenology responds to climatic conditions. Thus, the timing of autumn phenology is often modeled to assess possible climate change effects on future CO2-mitigating capacities and species compositions of forests. Projected trends have been mainly discussed with regards to model performance and climate change scenarios. However, there has been no systematic and thorough evaluation of how performance and projections are affected by the calibration approach. Here, we analyzed >2.3 million performances and 39 million projections across 21 process-oriented models of autumn leaf phenology, 5 optimization algorithms, ≥7 sampling procedures, and 26 climate model chains from two representative concentration pathways. Calibration and validation were based on >45 000 observations for beech, oak, and larch from 500 central European sites each. Phenology models had the largest influence on model performance. The best-performing models were (1) driven by daily temperature, day length, and partly by seasonal temperature or spring leaf phenology; (2) calibrated with the generalized simulated annealing algorithm; and (3) based on systematically balanced or stratified samples. Autumn phenology was projected to shift between −13 and +20 d by 2080–2099 compared to 1980–1999. Climate scenarios and sites explained more than 80 % of the variance in these shifts and thus had an influence 8 to 22 times greater than the phenology models. Warmer climate scenarios and better-performing models predominantly projected larger backward shifts than cooler scenarios and poorer models. Our results justify inferences from comparisons of process-oriented phenology models to phenology-driving processes, and we advocate for species-specific models for such analyses and subsequent projections. For sound calibration, we recommend a combination of cross-validations and independent tests, using randomly selected sites from stratified bins based on mean annual temperature and average autumn phenology, respectively. Poor performance and little influence of phenology models on autumn phenology projections suggest that current models are overlooking relevant drivers. While the uncertain projections indicate an extension of the growing season, further studies are needed to develop models that adequately consider the relevant processes for autumn phenology.

- Article

(6957 KB) - Full-text XML

-

Supplement

(18131 KB) - BibTeX

- EndNote

This study analyzed the impact of process-oriented models, optimization algorithms, calibration samples, and climate scenarios on the simulated timing of autumn leaf phenology (Fig. 2). The accuracy of the simulated timing was assessed by the root mean square error (RMSE) between the observed and simulated timing of autumn phenology. The future timing was expressed as a projected shift between 1980–1999 and 2080–2099 (Δ100). While the RMSE was related to the models, optimization algorithms, and calibration samples through linear mixed-effects models (LMMs), Δ100 was related to the climate change scenarios, models, optimization algorithms, and calibration samples. The analyzed >2.3 million RMSEs and 39 million Δ100 were derived from site- and species-specific calibrations (i.e., one set of parameters per site and species vs. one set of parameters per species, respectively). The calibrations were based on 17 211, 16 954, and 11 602 observed site years for common beech (Fagus sylvatica L.), pedunculate oak (Quercus robur L.), and European larch (Larix decidua Mill.), respectively, which were recorded at 500 central European sites per species.

Process-oriented models are a useful tool to study leaf senescence. The assessed phenology models differed in their functions and drivers, which had the largest influence on the accuracy of the simulated autumn phenology (i.e., model performance). In all 21 models, autumn phenology occurs when a threshold related to an accumulated daily senescence rate is reached. While the threshold is either a constant or depends linearly on one or two seasonal drivers, the rate depends on daily temperature and, in all but one model, on day length. Depending on the model, the rate is (1) a monotonically increasing response to cooler days and is (i) amplified or (ii) weakened by shorter days, or it is (2) a sigmoidal response to both cooler and shorter days. In the three most accurate models, the threshold was either a constant or was derived from the timing of spring leaf phenology (site-specific calibration) or the average temperature of the growing season (species-specific calibration). Further, the daily rate of all but one of these models was based on monotonically increasing curves, which were both amplified or weakened by shorter days. Overall, the relatively large influence of the models on the performance justifies inferences from comparisons of process-oriented models to the leaf senescence process.

Chosen optimization algorithms must be carefully tuned. The choice of the optimization algorithm and corresponding control settings had the second largest influence on model performance. The models were calibrated with five algorithms (i.e., efficient global optimization based on kriging with or without trust region formation, generalized simulated annealing, particle swarm optimization, and covariance matrix adaptation with evolutionary strategies), each executed with a few and many iterations. In general, generalized simulated annealing found the parameters that led to the best-performing models. Depending on the algorithm, model performance increased with more iterations for calibration. The positive and negative effects of more iterations on subsequent model performance relativize the comparison of algorithms in this study and exemplify the importance of carefully tuning the chosen algorithm to the studied search space.

Stratified samples result in the most accurate calibrations. Model performance was influenced relatively little by the choice of the calibration sample in both the site- and species-specific calibrations. The models were calibrated and validated with site-specific 5-fold cross-validation, as well as with species-specific calibration samples that contained 75 % randomly assigned observations from between 2 and 500 sites and corresponding validation samples that contained the remaining observations of these sites or of all sites of the population. For the site-specific cross-validation, observations were selected in a random or systematic procedure. The random procedure assigned the observations randomly. For the systematic procedure, observations were first ordered based on year, mean annual temperature (MAT), or autumn phenology date (AP). Thus, every fifth observation (i.e., 1+i, 6+i, … with i∈ (0, 1,…, 4) – systematically balanced) or every fifth of the n observations (i.e.,; 1+i, 2+i, …, with i∈ (0, 1, …, ) – systematically continuous) was assigned to one of the cross-validation samples. For the species-specific calibration, sites were selected in a random, systematic, or stratified procedure. The random procedure randomly assigned 2, 5, 10, 20, 50, 100, or 200 sites from the entire or half of the population according to the average MAT or average AP. For the systematic procedure, sites were first ordered based on average MAT or average AP. Thus, every jth site was assigned to a particular calibration sample with the greatest possible difference in MAT or AP between the 2, 5, 10, 20, 50, 100, or 200 sites. For the stratified procedure, the ordered sites were separated into 12 or 17 equal-sized bins based on MAT or AP, respectively (i.e., the smallest possible size that led to at least one site per bin). Thus, one site per bin was randomly selected and assigned to a particular calibration sample. The effects of these procedures on model performance were analyzed together with the effect of sample size. The results show that at least nine observations per free model parameter (i.e., the parameters that are fitted during calibration) should be used, which advocates for the pooling of sites and thus species-specific models. These models likely perform best when (1) sites are selected in a stratified procedure based on MAT for (2) a cross-validation with systematically balanced observations based on site and year, and their performance (3) should be tested with new sites selected in a stratified procedure based on AP.

Projections of autumn leaf phenology are highly uncertain. Projections of autumn leaf phenology to the years 2080–2099 were mostly influenced by the climate change scenarios, whereas the influence of the phenology models was relatively small. The analyzed projections were based on 16 and 10 climate model chains (CMCs) that assume moderate vs. extreme future warming, following the representative concentration pathways (RCPs) 4.5 and 8.5, respectively. Under more extreme warming, the projected autumn leaf phenology occurred 8–9 d later than under moderate warming, specifically shifting by −4 to +20 d (RCP 8.5) vs. −13 to +12 d (RCP 4.5). While autumn phenology was projected to generally occur later according to the better-performing models, the projections were over 6 times more influenced by the climate scenarios than by the phenology models. This small influence of models that differ in their functions and drivers indicates that the modeled relationship between warmer days and slowed senescence rates suppresses the effects of the other drivers considered by the models. However, because some of these drivers are known to considerably influence autumn phenology, the lack of corresponding differences between the projections of current phenology models underscores their uncertainty rather than the reliability of these models.

Leaf phenology of deciduous trees describes the recurrent annual cycle of leaf development from bud set to leaf fall (Lieth, 1974). In temperate and boreal regions, spring and autumn leaf phenology divide this cycle into photosynthetically active and inactive periods, henceforth referred to as the growing and dormant seasons (Lang et al., 1987; Maurya and Bhalerao, 2017). The response of leaf phenology to climate change affects the length of the growing season and thus the amount of atmospheric CO2 taken up by trees (e.g., Richardson et al., 2013; Keenan et al., 2014; Xie et al., 2021), as well as species distribution and species composition (e.g., Chuine and Beaubien, 2001; Chuine, 2010; Keenan, 2015). While several studies found spring phenology to advance due to climate warming (e.g., Y. H. Fu et al., 2014; Meier et al., 2021), findings regarding autumn phenology are more ambiguous (Piao et al., 2019; Menzel et al., 2020) but tend to indicate a backward shift (e.g., Bigler and Vitasse, 2021; Meier et al., 2021).

Various models have been used to study leaf phenology and provide projections, which may be grouped in correlative and process-oriented models (Chuine et al., 2013). Both types of models have served to explore possible underlying processes (e.g., Xie et al., 2015; Lang et al., 2019). The former models have often been used to analyze the effects of past climate change on leaf phenology (e.g., Asse et al., 2018; Meier et al., 2021), while the latter models have usually been applied to study the effects of projected climate change (e.g., Morin et al., 2009; Zani et al., 2020). Popular representatives of the correlative models applied in studies on leaf phenology are based on linear mixed-effects models and generalized additive models (e.g., Xie et al., 2018; Menzel et al., 2020; Meier et al., 2021; Vitasse et al., 2021), while the many different process-oriented phenology models all go back to the growing-degree-day model (Chuine et al., 2013; Chuine and Régnière, 2017; Fu et al., 2020) of de Réaumur (1735).

Different process-oriented models rely on different assumptions regarding the driving processes of leaf phenology (e.g., Meier et al., 2018; Chuine et al., 1999), but their functionality is identical. Process-oriented leaf phenology models typically consist of one or more phases during which daily rates of relevant driver variables are accumulated until a corresponding threshold is reached (Chuine et al., 2013; Chuine and Régnière, 2017). The rate usually depends on daily meteorological drivers and sometimes on day length (Chuine et al., 2013; Fu et al., 2020), while the threshold either is a constant (Chuine et al., 2013) or depends on latitude (Liang and Wu, 2021) or on seasonal drivers (e.g., the timing of spring phenology with respect to autumn phenology; Keenan and Richardson, 2015).

Models of spring phenology regularly outcompete models of autumn phenology by several days when assessed by the root mean square error between observed and modeled dates (4–9 vs. 6–13 d, respectively; Basler, 2016; Liu et al., 2020). These errors have been interpreted in different ways and have multiple sources. Basler (2016) compared over 20 different models and model combinations for the spring leaf phenology of trees. He concluded that the models underestimated the inter-annual variability of the observed dates of spring leaf phenology and were not transferable between sites. Liu et al. (2020) compared six models of the autumn leaf phenology of trees and concluded that the inter-annual variability was well represented by the models, while their representation of the inter-site variability was relatively poor.

Well-calibrated models of autumn leaf phenology are a prerequisite for sound conclusions about phenology-driving processes and for reducing uncertainties in phenological projections under distant climatic conditions. Studies of leaf phenology models generally show that certain models lead to better results and thus conclude that these models consider the relevant phenology-driving processes more accurately or add an important piece to the puzzle (Delpierre et al., 2009; Keenan and Richardson, 2015; Lang et al., 2019; Liu et al., 2019; Zani et al., 2020). Such conclusions can be assumed to be stronger if they are based on sound calibration and validation. However, so far, different calibration and validation methods have been applied (e.g., species- or site-specific calibration; Liu et al., 2019; Zani et al., 2020), which makes the comparison of study results difficult. Moreover, the uncertainty in leaf phenology projections is related to both climate projections and phenology models. While the uncertainty associated with climate projections has been extensively researched (e.g., Palmer et al., 2005; Foley, 2010; Braconnot et al., 2012), so far, the uncertainty associated with process-oriented phenology models has only been described in a few notable studies: Basler (2016) compared spring phenology models calibrated per species and per site, as well as calibrated per species, with pooled sites; Liu et al. (2020) compared autumn phenology models with a focus on inter-site and inter-annual variability; and Liu et al. (2021) focused on sample size and observer bias in observations of spring and autumn phenology. Therefore, this uncertainty and its drivers are arguably largely unknown and thus poorly understood, which may be part of the reason for debates such as the one surrounding the Zani et al. (2020) study (Norby, 2021; Zani et al., 2021; Lu and Keenan, 2022).

When considering phenology data from different sites, one must, in principle, decide between two calibration modes, namely a calibration per site and species or a calibration over various sites with pooled data per species. While the former calibration leads to a set of parameters per species and site, the latter leads to one set of parameters per species. On the one hand, site-specific models may respond to local adaptation (Chuine et al., 2000) without explicitly considering the underlying processes, as well as to relevant but unconsidered drivers. For example, a model based solely on temperature may provide accurately modeled data due to site-specific thresholds, even if the phenological observations at some sites are driven by additional variables such as soil water balance. On the other hand, species-specific models may consider local adaptation via parameters such as day length (Delpierre et al., 2009) and may be better suited to projections to other sites and changed climatic conditions as they apply to the whole species and follow a space-for-time approach (but see Jochner et al., 2013).

Independently of the calibration mode, various optimization algorithms have been used for the calibration of the model parameters. The resulting parameters are often intercorrelated (e.g., the base temperature for the growing-degree-day function and the corresponding threshold value to reach), and the parameter space may have various local optima (Chuine and Régnière, 2017). To calibrate phenology models, different optimization algorithms have been applied to locate the global optimum, such as simulated annealing, particle swarm optimization, or Bayesian optimization methods (e.g., Chuine et al., 1998; Liu et al., 2020; Zhao et al., 2021). Simulated annealing and its derivatives seem to be used the most in the calibration of process-oriented models of tree leaf phenology (e.g., Chuine et al., 1998; Basler, 2016; Liu et al., 2019; Zani et al., 2020). However, a systematic comparison of these different optimization algorithms regarding their influence on model performance and projections has been missing so far.

Previous studies on process-oriented phenology models have generally provided little information on the sampling procedure used to assign observations to the calibration and validation samples. Observations and sites may be sampled according to different procedures, such as random, stratified, or systematic sampling (Taherdoost, 2016). In contrast to random sampling, systematic and stratified sampling require a basis to which they refer. For example, when assigning observations based on year, observations from every ith year or one randomly selected observation from each of the i bins with equal time spans may be selected in systematic or stratified sampling, respectively. Studies on phenology models have usually considered all sites of the underlying dataset and declared the size of calibration and validation samples or the number of groups (k) in a k-fold cross-validation (e.g., Delpierre et al., 2009; Basler, 2016; Meier et al., 2018). However, the applied sampling procedure has not always been specified, but there are notable exceptions, such as Liu et al. (2019) for random sampling, Chuine et al. (1998) for systematic sampling, and Lang et al. (2019) for leave-one-out cross-validation. Moreover, the effects of the sampling procedure on the performance and projections of phenology models have not been studied yet.

Sample size in terms of the number of observations per site and the number of sites may influence the quality of phenology models as well. Studies on phenology models have usually selected sites with at least 10 or 20 observations per site, independent of the calibration mode (e.g., Delpierre et al., 2009; Keenan and Richardson, 2015; Lang et al., 2019). In studies with species-specific models, a wide range of sites have been considered, namely 8 to >800 sites (e.g., Liu et al., 2019, 2020). In site-specific calibration, the number of sites may be neglected as the site-specific models cannot be applied to other sites. However, the number of observations is crucial, as small samples may lead to overfitted models due to the bias–variance trade-off (James et al., 2017, Sect. 2.2.2), i.e., the trade-off between minimizing the prediction error in the validation sample versus the variance of the estimated parameters in the calibrated models. To our knowledge, no study to date has examined possible overfitting in phenology models. In addition, in species-specific calibration, the number of sites could influence the degree to which the population is represented by the species-specific models. While such reasoning appears intuitively right, we are unaware of any study that has systematically researched the correlation between the number of sites and the degree of representativeness.

Phenology models are typically calibrated, their performance is estimated, and some studies project leaf phenology under distant climatic conditions. The performance of phenology models has often been estimated with the root mean square error that is calculated from modeled and observed data (e.g., Delpierre et al., 2009; Lang et al., 2019) and has been generally used for model comparison (e.g., Basler, 2016; Liu et al., 2020) and model selection (e.g., Liu et al., 2019; Zani et al., 2020). When phenology has been subsequently projected under distant climatic conditions, projections may have been compared between models (Zani et al., 2020), but no correlation with model performance has been established yet.

With this study, we take a first step towards closing the gap of unknown uncertainties associated with process-oriented models of autumn tree leaf phenology, which has been left open by current research so far. We focused on uncertainties related to phenology models, optimization algorithms, sampling procedures, and sample sizes, evaluating their effects on model performance and model projection separately in site- and species-specific calibration mode. To this end, we conducted an extensive computer experiment with 21 autumn phenology models from the literature; 5 optimization algorithms, each run with two different settings; and various samples based on random, structured, and stratified sampling procedures and on different sample sizes. We analyzed the performance of >2.3 million combinations of model, algorithm, sample, and calibration modes based on observations for beech, pedunculate oak, and larch from central Europe for the years 1948–2015 (500 sites per species; PEP725; Templ et al., 2018). Further, we analyzed 39 million projections to the year 2099 according to these combinations under 26 different climate model chains, which were split between two different representative concentration pathways (CORDEX EUR-11; RCP 4.5 and RCP 8.5; Riahi et al., 2011; Thomson et al., 2011; Jacob et al., 2014). We addressed the following research questions:

-

What is the effect of the phenology model and calibration approach (i.e., calibration mode, optimization algorithm, and calibration sample) on model performance and projections?

-

What is the effect of sample size on the degree to which models are overfitted or represent the entire population?

-

Do better-performing models lead to more accurate predictions?

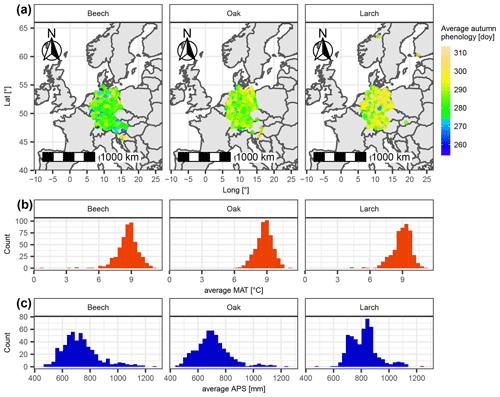

Figure 1Sites of considered leaf phenology data with respective average climatic conditions for beech, oak, and larch. (a) The location of each site is marked with a dot, the color of which indicates the average day of the year of autumn phenology. Panels (b) and (c) show the distribution of average mean annual temperature (MAT [∘C]) and average annual precipitation sum (APS [mm]) per site.

2.1 Data

2.1.1 Phenological observations

We ran our computer experiment with leaf phenology observations from central Europe for common beech (Fagus sylvatica L.), pedunculate oak (Quercus robur L.), and European larch (Larix decidua Mill.). All phenological data were derived from the PEP725 project database (http://www.pep725.eu/; last access: 13 April 2022). The PEP725 dataset mainly comprises data from 1948–2015 that were predominantly collected in Austria, Belgium, Czech Republic, Germany, the Netherlands, Switzerland, and the United Kingdom (Templ et al., 2018). We only considered site years for which the phenological data were in the proper order (i.e., the first leaves have separated before they unfolded (BBCH10 before BBCH11), and 40 % of the leaves have colored or fallen before 50 % of the leaves (BBCH94 before BBCH95); Hack et al., 1992; Meier, 2001) and for which the period between spring and autumn phenology was at least 30 d. Subsequently, we only considered sites with at least 20 years for which both spring and autumn phenology data were available. We randomly selected 500 of these sites per species. Each of these sites comprised 20–65 (beech), 20–64 (oak), or 20–30 (larch) site years, all of which included a datum for spring and autumn phenology. This added up to 17 211 site years for beech, 16 954 site years for oak, and 11 602 site years for larch. Spring phenology corresponded to BBCH11 for beech and oak and BBCH10 for larch, while autumn phenology for all three species was represented by BBCH94, henceforward referred to as leaf coloration (Hack et al., 1992; Meier, 2001).

The 500 selected sites per species differed in location, as well as in leaf phenology and climatic conditions. Most sites were from Germany but also from other countries such as Slovakia or Norway (Fig. 1). Autumn phenology averaged over selected site years per site ranged from day of the year 254 to 308 (beech), 265 to 309 (oak), and 261 to 314 (larch). Corresponding average mean annual temperatures ranged from 0.6 to 11.0 ∘C (beech), 6.3 to 11.0 ∘C (oak), and 4.1 to 11.0 ∘C (larch), and annual precipitation ranged from 470 to 1272 mm (beech), 456 to 1232 mm (oak), and 487 to 1229 mm (larch; Fig. 1).

2.1.2 Model drivers

The daily and seasonal drivers of the phenology models were derived and calculated from interpolated daily weather data, as well as data of different timescales of short- and longwave radiation, atmospheric CO2 concentration, leaf area indices, and soil moisture. Daily drivers are daily minimum air temperature, which is mostly combined with day length (see Sect. 2.2). Some models further consider seasonal drivers, which we derived from daily mean and maximum air temperature, precipitation, soil moisture, net and downwelling shortwave radiation, and net longwave radiation; from monthly leaf area indices; from monthly or yearly atmospheric CO2 concentration data; and from site-specific plant-available water capacity data. We calculated day length according to latitude and the day of the year (Supplement S3, Eq. S1; Brock, 1981). The other daily variables were derived from two NASA global land data assimilation system datasets on a grid (∼25 km; GLDAS-2.0 and GLDAS-2.1 for the years 1948–2000 and 2001–2015, respectively; Rodell et al., 2004; Beaudoing and Rodell, 2019, 2020) for the past and from the CMIP5-based CORDEX-EUR-11 datasets on a rotated grid (∼12.5 km; for the years 2006–2099; Riahi et al., 2011; Thomson et al., 2011; Jacob et al., 2014) for two representative concentration pathways (RCPs). After examining the climate projection data (Supplement. S1, Sect. S2), we were left with 16 and 10 climate model chains (CMCs; i.e., particular combinations of global and regional climate models) for RCPs 4.5 and 8.5, respectively. Atmospheric CO2 concentrations were derived from the historical CMIP6 and observational Mauna Loa datasets for the years 1948–2014 and 2015, respectively (monthly data; Meinshausen et al., 2017; Thoning et al., 2021), for the past and from the CMIP5 datasets for the years 2006–2099 (yearly data; Meinshausen et al., 2011) for the climate projections, matching the RCP 4.5 and RCP 8.5 scenarios (Smith and Wigley, 2006; Clarke et al., 2007; Wise et al., 2009; Riahi et al., 2007). Leaf area indices were derived from the GIMMS LAI3g dataset on a grid, averaged over the years 1981–2015 (Zhu et al., 2013; Mao and Yan, 2019). The plant-available water capacity per site was derived directly or estimated according to soil composition (i.e., volumetric silt, sand, and clay contents) from corresponding ISRIC SoilGrids250m datasets on a 250 m ×250 m grid (versions 2017-03 or 2.0 for water content or soil composition, respectively; Hengl et al., 2017). More detailed information about the applied driver data and driver calculations is given in Supplement S1 and S3, respectively.

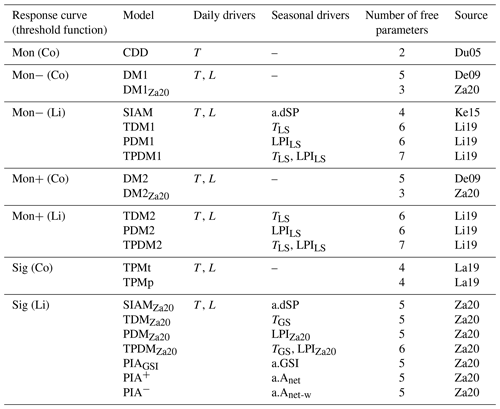

Table 1Compared process-oriented models of autumn phenology grouped according to their response curve for the daily senescence rate and their corresponding threshold function.

Note that daily senescence rate responds to the daily drivers' minimum temperatures (T) and day lengths (L), following either a monotonically increasing curve (Mon) with cooler temperatures, which may be weakened or amplified with shorter days (Mon− or Mon+), or a sigmoidal curve (Sig). The threshold value is either a constant (Co) or a linear function (Li) of one or two of the following seasonal drivers: site-specific anomaly of (1) spring phenology (a.dSP), (2) growing-season index (a.GSI), and/or (3) daytime net photosynthesis accumulated during the growing season ignoring or considering water limitation constraints (a.Anet and a.Anet-w), as well as the actual (4) leafy-season or growing-season mean temperature (TLS and TGS), (5) the low-precipitation index averaged over the leafy season (LPILS), or (6) the adapted low-precipitation index of the growing season (LPIZa20). Further, the number of free parameters fitted during model calibration and the sources for each model are listed (i.e., De09: Delpierre et al. (2009); Du05: Dufrêne et al. (2005); Ke15: Keenan and Richardson (2015); La19: Lang et al. (2019); Li19: Liu et al. (2019); Za20: Zani et al. (2020)). Note that the models CDD, DM1, DM2, SIAM, TDM1, TDM2, PDM1, PDM2, TPDM1, and TPDM2 are originally driven by daily mean rather than daily minimum temperature (see Sect. 4.5.2). All models are explained in detail in Sect S2.

2.2 Phenology models

We based our analysis on 21 process-oriented models of autumn phenology, which differ in their underlying functions and the drivers they consider (Table 1; Meier, 2022). In all models, the projected date for autumn phenology corresponds to the first day of the year, for which an accumulated daily senescence rate (RS) exceeds a corresponding threshold value. RS responds to daily minimum temperature (see Sect. 4.5.2) and, except for the CDD model, to day length (see Table 1 and Supplement S2). While the senescence rate increases with cooler temperatures, it may increase or decrease with shorter days, depending on the response function. Thus, with cooler temperatures, the rate follows either a monotonically increasing response curve (Mon – with RS≥ 0) or a sigmoidal response curve (Sig – with ), with the monotonous increase being weakened or amplified with shorter days (Mon− or Mon+), depending on the model (Dufrêne et al., 2005; Delpierre et al., 2009; Lang et al., 2019). The threshold value for the accumulated rate either is a constant (Co) or depends linearly on one or two seasonal drivers (Li). Accumulation of the daily rate starts on the first day after the 173rd day of the year (summer solstice) or after the 200th day of the year, for which minimum temperature and/or day length fall below corresponding thresholds. The models have between two and seven free parameters, which are jointly fitted during calibration.

While all models differ in their functions and drivers considered, they can be grouped according to the formulation of the response curve of the senescence rate and of the threshold function (Table 1). Models within a particular group differ by the number of free parameters, by the determination of the initial day of the accumulation of the senescence rate, or by the seasonal drivers of the threshold. The difference in the number of free parameters is relevant for the groups Mon− (Co) and Mon+ (Co). These groups contain two models each, which differ by the two exponents for the effects of cooler and shorter days on the senescence rate. Each of these exponents can be calibrated to the values 0, 1, or 2 in the models with more parameters, whereas the exponents are set to 1 in the models with fewer parameters. The initial day of the accumulation of the senescence rate is either defined according to temperature or day length in the two models of the group Sig (Co). The one or two seasonal drivers considered by the models of the groups Mon− (Li), Mon+ (Li), and Sig (Li) are site-specific anomalies of the timing of spring phenology, the growing-season index, and daytime net photosynthesis accumulated during the growing season ignoring or considering water limitation constraints, as well as the actual leafy-season or growing-season mean temperature, the low-precipitation index averaged over the leafy season, or the adapted low-precipitation index of the growing season. All models are explained in detail in Supplement S2.

2.3 Model calibration and validation

2.3.1 Calibration modes

We based our study on both a site- and species-specific calibration mode. In the site-specific mode, we derived for every calibration a species- and site-specific set of parameters (i.e., every combination of optimization algorithm and sample). In the species-specific mode, we derived for every calibration a species-specific set of parameters based on the observations from more than one site, depending on the calibration sample. Model performances were estimated with an external model validation, namely with a 5-fold cross-validation (James et al., 2017, Sect. 5.1.3) and a separate validation sample in the site- and species-specific modes, respectively.

2.3.2 Optimization algorithms

We calibrated the models with five different optimization algorithms, which can be grouped into Bayesian and non-Bayesian algorithms. The two Bayesian algorithms that we evaluated are efficient global optimization algorithms based on kriging (Krige, 1951; Picheny and Ginsbourger, 2014): one is purely Bayesian (EGO), whereas the other combines Bayesian optimization with a deterministic trust region formation (TREGO). The three non-Bayesian algorithms that we evaluated are generalized simulated annealing (GenSA; Xiang et al., 1997; Xiang et al., 2013), particle swarm optimization (PSO; Clerc, 2011, 2012; Marini and Walczak, 2015), and covariance matrix adaptation with evolutionary strategies (CMA-ES; Hansen, 2006, 2016). Every Bayesian and non-Bayesian algorithm was executed in a normal and extended optimization mode, i.e., with few and many iterations or steps (norm. and extd., respectively; Supplement S4, Table S1). In addition, the parameter boundaries within which all the algorithms searched for the global optimum (Supplement S2, Table S1) were scaled to range from 0 to 1. All algorithms optimized the free model parameters to obtain the smallest possible root mean square error (RMSE; Supplement S4, Eq. S1) between the observed and modeled days of the year of autumn phenology. As the Bayesian algorithms cannot handle iterations that produce NA values (i.e., modeled day of year >366), such values were set to day of year =1 for all algorithms and before RMSE calculation.

2.3.3 Calibration and validation samples

Calibration and validation samples can be selected according to different sampling procedures with different bases (e.g., randomly or systematically based on the year of observation) and have different sizes (i.e., number of observations and/or number of sites). Here, we distinguished between the following sampling procedures: random, systematically continuous, systematically balanced, and stratified. Further, our populations consisted of sites that included between 20 and 65 years, which directly affected the sample size in the site-specific calibration mode. In the species-specific mode, we calibrated the models with samples that ranged from 2 to 500 sites.

In the site-specific mode, the observations for the 5-fold cross-validation were selected (1) randomly or (2) systematically (Supplement S4, Fig. S1). For the random sampling procedure, the observations were randomly assigned to one of five validation bins. For the systematic sampling procedure, we ranked the observations based on the year, mean annual temperature (MAT), or autumn phenology date (AP) and created five equally sized samples containing continuous or balanced (i.e., every fifth) observations (see Supplement S4, Sect. 2.1 for further details regarding these procedures). Hence, every model was calibrated seven times for each of the 500 sites per species, namely with a randomized or a time-, phenology-, or temperature-based systematically continuous or systematically balanced cross-validation. This amounted to 2 205 000 calibration runs (i.e., 500 sites ×3 species ×21 models ×5 optimization algorithms ×2 optimization modes ×7 sample selection procedures) that consisted of five cross-validation runs each. Further, for the projections, every model was calibrated with all observations per site and species.

In the species-specific mode, we put aside 25 % randomly selected observations per site and per species (rounded up to the next integer) for external-validation samples and created various calibration samples from the remaining observations, selecting the different sites with different procedures. These calibration samples either contained the remaining observations of all 500 sites (full sample) or of (1) randomly selected, (2) systematically selected, or (3) stratified sites per species (Supplement S4, Fig. S2). The random and systematic samples contained the observations of 2, 5, 10, 20, 50, 100, or 200 sites. Randomly sampled sites were chosen either from the entire or half the population, with the latter being determined according to MAT and AP (i.e., cooler average MAT or earlier or later average AP). The systematically sampled sites were selected according to a balanced procedure in which the greatest possible distance between sites ranked by average MAT or AP was chosen. Note that the distance between the first and last site was 490 and not 500 sites, allowing up to 10 draws with a parallel shift of the first and last site. The stratified samples consisted of one randomly drawn site from each of the 12 MAT- or 17 AP-based bins. The chosen bin widths maximized the number of equal-sized bins so that they still contained at least one site (see Supplement S4, Sect. 2.2 for further details regarding these procedures). We drew five samples per procedure and size, except for the full sample, which we drew only once as it contained fixed sites, namely all sites in the population. Altogether, this amounted to 139 230 calibration runs (i.e., 3 species ×21 models ×5 optimization algorithms ×2 optimization modes × (6 sample selection procedures ×7 sample sizes ×5 draws +2 sample selection procedures ×5 draws +1 sample selection procedure)) that differed in the size and selection procedure of the corresponding sample. Every calibration run was validated with the sample-specific and population-specific external-validation sample. While the former consisted of the same sites as the calibration sample, the latter consisted of all 500 sites and hence was the same for every calibration run per species. Every calibration run was validated with the sample-specific and population-specific external-validation sample, henceforward referred to as “validation within sample” and “validation within population”. While the former consisted of the same sites as the calibration sample, the latter consisted of all 500 sites and hence was the same for every calibration run per species.

2.4 Model projections

We projected autumn phenology to the years 2080–2099 for every combination of phenology model, calibration mode, optimization algorithm, and calibration sample that converged without producing NA values, assuming a linear trend for spring phenology. While non-converging runs did not produce calibrated model parameters, we further excluded the converging runs that resulted in NA values in either the calibration or validation. In addition, we excluded combinations where projected autumn phenology occurred before the 173rd or 200th day of the year (i.e., the earliest possible model-specific day of senescence rate accumulation). Thus, we received 41 901 704 site-specific time series for the years 2080–2099 of autumn phenology projected with site-specific models, hereafter referred to as site-specific projections. These time series differed in terms of climate projection scenario (i.e., per combination of representative concentration pathway and climate model chain), phenology model, optimization algorithm, and calibration sample. Species-specific models led to projections for all 500 sites per species (i.e., the entire population) and thus to 1 574 378 000 time series for the years 2080–2099 that differed in terms of climate projection scenario, model, algorithm, and calibration sample, hereafter referred to as species-specific projections. For site- and species-specific models, we projected the spring phenology relevant for the seasonal drivers assuming a linear trend of −2 d per decade (Piao et al., 2019; Menzel et al., 2020; Meier et al., 2021). This trend was applied from the year after the last observation (ranging from 1969 to 2015, depending on site and species) and was based on the respective site average over the last 10 observations per species.

2.5 Proxies and statistics

2.5.1 Sample size proxies

We approximated the effect of sample size (1) on the bias–variance trade-off and (2) on the degree to which models represent the entire population with the respective size proxies of (1) the number of observations per parameter and (2) the site ratio. The effect of sample size on the bias–variance trade-off may depend on the number of observations in the calibration sample (N) relative to the number of free parameters (q) in the phenology model. In other words, a sample of, say, 50 observations may lead to a better calibration of the CDD model (two free parameters) compared to the TPDM1 model (seven free parameters). In the site-specific calibration, we calculated for each site and model the ratio N:q, with N being 80 % of the total number of observations per site to account for the 5-fold cross-validation. Assuming the 50 observations in the example above are the basis for the 5-fold cross-validation, N becomes 40, resulting in for the CDD model and for the TPDM1 model. With species-specific calibration, we considered the average number of observations per site () and calculated for each calibration sample and model the ratio to separate this ratio from the site ratio explained further below. Assuming the 50 observations in the example above correspond to a calibration sample based on two sites, becomes 25, resulting in for the CDD model and for the TPDM1 model. The effect of sample size on the degree to which models represent the entire population with species-specific calibration may depend on the number of sites in the calibration sample (s) relative to the number of sites in the entire population (S; i.e., site ratio s:S). Thus, we derived the site ratio by dividing s by 500. Note that the combined ratios and s:S account for the effect of the total sample size as .

2.5.2 Model performance

We quantified the performance of each calibrated model according to the root mean square error (RMSE; Supplement S4, Eq. S1). The RMSE was calculated for the calibration and the validation samples (i.e., internal and external RMSE, respectively), as well as at the sample and population level with the species-specific calibration (i.e., validated within the sample or population; external sample RMSE or external population RMSE, respectively). We derived each RMSE per sample at the site level with the site-specific calibration and at the sample level with the species-specific calibration.

To measure the effect (1) on the bias–variance trade-off and (2) on the degree to which models represent the entire population, we derived two respective RMSE ratios. Regarding the bias–variance trade-off and with the site-specific calibration, we divided the external RMSE by the internal RMSE derived from the calibration run with all observations per site and species (Cawley and Talbot, 2010, Sect. 5.2.1; James et al., 2017, Sect. 2.2.2). The numerator was expected to be larger than the denominator, and increasing ratios were associated with an increasing bias, indicating overfitting. Regarding the degree to which models represent the entire population and hence with the species-specific calibration, we divided the external sample RMSE by the external population RMSE. Here, the numerator was expected to be smaller than the denominator, and increasing ratios were associated with increasing representativeness.

We applied two different treatments to calibration runs that led to NA values (i.e., the threshold value was not reached by the accumulated senescence rate until day of year 366) or did not converge at all (Supplement S6, Fig. S1). On the one hand, the exclusion of such runs may bias the results regarding model performance since a non-converging model must certainly be considered to perform worse than a converging one. Therefore, in contrast to the model calibration, we replaced the NA values with the respective observed date +170 d (i.e., a difference that exceeds the largest modeled differences in any calibration or validation sample by 2 d) and assigned an RMSE of 170 to non-converging runs before we analyzed the model performance. On the other hand, replacing NA values with a fixed value leads to an artificial effect and affects the performance analysis as, say, a linear dependence of the RMSE on a predictor is suddenly interrupted. Moreover, projections based on models that converged but produced NA values seem questionable, while projections based on non-converging models are impossible. Therefore, we implemented a second analysis of performance from which we excluded the calibration runs that did not converge or that contained one or more NA values in either the calibration or the validation sample. Our main results regarding model performance were based on the substituted NA values and the RMSE of 170 d for non-converging runs. However, where necessary, we have referred to the results based only on converged runs without NA values (provided in Supplement S6, Sect. S2.1.2 and S2.2.2). Furthermore, our results regarding projections and our comparisons between performance and projections are based solely on converging runs without NA values.

2.5.3 Model projections

We analyzed the site- and species-specific projections of autumn phenology according to a 100-year shift (Δ100) at the site level. Δ100 was defined as the difference between the means of the observations for the years 1980–1999 and of the projections for the years 2080–2099. If observations for the years 1980–1999 were missing, we used the mean of the 20 last observations instead. Thus, the derived shift was linearly adjusted to correspond to 100 years.

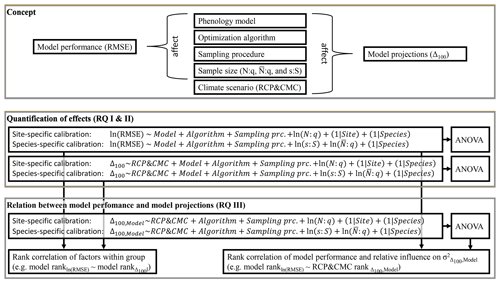

Figure 2Concept and methods applied. This study assumed that, in addition to phenology models and climate scenarios, the choice of optimization algorithm and calibration sample (i.e., sampling procedure and sample size) affect model performance and model projections (i.e., the root mean square error, RMSE, and the shift between autumn leaf phenology in 2080–2099 and 1980–1999, Δ100, respectively). To answer research questions I and II (RQ I and II), the effects of these factors on the RMSE and Δ100 were quantified with linear mixed-effects models. Subsequently, the relative influence of the factors (e.g., all phenology models) on the explained variance (σ2) of RMSE and Δ100 were quantified with type-III ANOVA. To answer RQ III, the effects on the RMSE were related to the effects on Δ100 and the influences on by calculating the Kendall rank correlations (e.g., between the effects of the phenology models on the RMSE and Δ100 or between the effect of the phenology models on the RMSE and the influence of each model on ). The phenology models were calibrated site and species specifically (i.e., one set of parameters per site and species vs. one set of parameters per species, respectively). Sample size was quantified by the number of observations relative to the number of free parameters in the phenology model (N:q), the average number of observations relative to the number of free parameters (), and the number of sites relative to the 500 sites of the entire population (s:S).

2.6 Evaluation of model performance and autumn phenology projections

To answer research question I (RQ I), we calculated the mean, median, standard deviation, and skewness of the RMSE distributed across phenology models, optimization algorithms, sampling procedures, and (binned) sample size proxies. These statistics were derived separately per site- and species-specific calibration validated within the sample or population, giving a first impression of the effects on model performance. Further, the distribution of the RMSE was relevant for subsequent evaluations.

To answer RQ I and RQ II, we estimated the effects of phenology models, optimization algorithms, sampling procedures, and sample size proxies on model performance and, together with climate projection scenarios, on model projections with generalized additive models (GAMs; Wood, 2017) and subsequent analyses of variance (ANOVA; Fig. 2; Chandler and Scott, 2011). We fitted the GAMs separately per calibration and projection mode, i.e., per site- and species-specific calibration validated (projected) within sample or population (Supplement S5, Sect. S1; Supplement S5, Eqs. S1 and S2). The response variables RMSE and Δ100 were assumed to depend linearly on the explanatory factors (1) phenology models, (2) optimization algorithms, (3) sampling procedures, and (4) climate projection scenarios (only regarding Δ100) as well as the explanatory variables of continuous sample size proxies. Sites and species were included as smooth terms, which were set to crossed random effects such that the GAM mimicked linear mixed-effects models with random intercepts (Supplement S5, Sect. S2; Pinheiro and Bates, 2000; see the Discussion section for the reasons and implications of our choice). The RMSE and sample size proxies were log-transformed since they can only take positive values and followed right-skewed distributions (i.e., skew >0; Supplement S6, Tables S1–S4). Coefficients were estimated with fast restricted maximum likelihood (Wood, 2011). Thereafter, the fitted GAMs served as input for corresponding type-III ANOVA (Supplement S5, Sect. S4; Yates, 1934; Herr, 1986; Chandler and Scott, 2011), with which we estimated the influence on the RMSE and the Δ100. The influence was expressed as the relative variance in RMSE or in Δ100, explained by phenology models, optimization algorithms, sampling procedures, sample size proxies, and climate projection scenarios (only regarding Δ100). Regarding model projections, we drew five random samples of 105 projections per climate projection scenario and per Δ100 projected with site- and species-specific models within the sample or population. Thereafter, we fitted a separate GAM and ANOVA for each of these 15 samples (see the Discussion section for the reasons and implications of this approach).

The coefficient estimates of the GAMs expressed (relative) changes towards the corresponding reference levels of RMSE or Δ100. The reference levels were based on the CDD model calibrated with the GenSA (norm.) algorithm on a randomly selected sample and, only regarding Δ100, projected with the CMC 1 of the RCP 4.5. Hence, the estimates of the intercept refer to the estimated log-transformed RMSE or Δ100 according to these reference levels with sample size proxies of 1 (i.e., log-transformed proxies of 0). Regarding the interpretation of model performance, negative coefficient estimates indicated better performance, which was reflected in smaller RMSE values. The effects of the explanatory variables were expressed as relative changes in the reference RMSE due to the log-transformation of the latter (Supplement S5, Sect. S3). Regarding model projections, while negative coefficient estimates in combination with negative reference Δ100 resulted in an accelerated projected advancement of autumn phenology, they weakened a projected delay of autumn phenology or changed it to a projected advancement in combination with positive reference Δ100 and vice versa. In other words, negative coefficient estimates only translated into earlier projected autumn phenology when the corresponding reference Δ100 was negative or when their absolute values were larger than the (positive) reference Δ100 and vice versa.

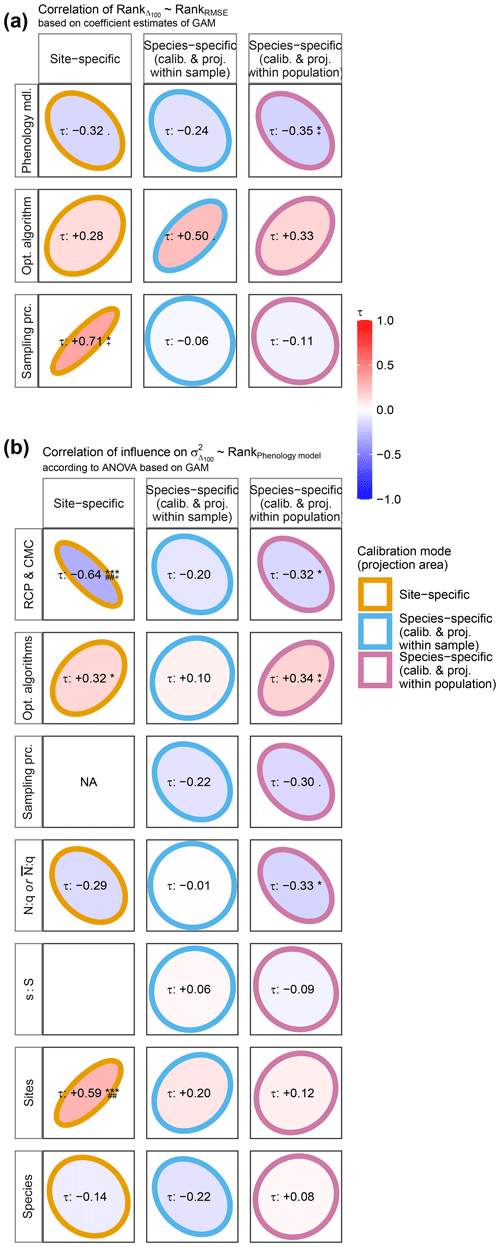

To answer RQ III, we related both (1) the ranked effects on model performance to the ranked effects on model projections and (2) the performance ranks of phenology models to the ranked influence of explanatory variables on Δ100 per model (Fig. 2). First, we ranked phenology models, optimization algorithms, and sampling procedures according to their estimated effects on log-transformed RMSE or Δ100 and calculated the Kendall rank correlation (Kendall, 1938) within each group of factors (e.g., within phenology models). Negative correlations indicated, for example, that models with better performance projected later autumn phenology than models with poorer performance and vice versa. Second, we fitted GAMs per site- and per species-specific phenology model to the response variable Δ100 as described above (Supplement S5, Eq. S1) but excluded phenology models from the explanatory variables. We derived type-III ANOVA per GAM (Supplement S5, Eq. S14) and ranked the influence of optimization algorithms, sampling procedures, sample size proxies, and climate projection scenarios across phenology models. Thus, we calculated the Kendall rank correlation (Kendall, 1938) between these newly derived ranks and the ranks of the phenology models based on their effect on performance. In other words, we analyzed if the ranked influence of, for example, aggregated climate projection scenarios on Δ100 correlated with the ranked performance of phenology models. In this example, negative correlations indicated that climate projection scenarios had a larger relative influence on projected autumn phenology when combined with phenology models that performed better than with models that performed worse and vice versa. As before, each GAM and ANOVA was fitted and derived five times per phenology model and climate projection scenario based on five random samples of 105 corresponding projections per climate projection scenario. Therefore, the ranks of optimization algorithms, sampling procedures, sample size proxies, and climate projection scenarios were based on the mean coefficient estimates or mean relative explained variance.

We chose a low significance level and specified Bayes factors to account for the large GAMs with many explanatory variables and the frequent misinterpretation or over-interpretation of p values (Benjamin and Berger, 2019; Goodman, 2008; Ioannidis, 2005; Wasserstein et al., 2019; Nuzzo, 2015). We applied a lower-than-usual significance level, namely α=0.01 (i.e., p<0.005 for two-sided distributions; Benjamin and Berger, 2019), and included the 99 % confidence intervals in our results. In addition, we complemented the p values with the Bayes factors (BF) to express the degree to which our data changed the odds between the respective null hypothesis H0 and the alternative hypothesis H1 (BF01; Johnson, 2005; Held and Ott, 2018). For example, if we assume a prior probability of 20 % for the alternative hypothesis (i.e., a prior odds ratio H0 : H1 of ), then a BF01 of means that the new data suggest a posterior odds ratio of (i.e., ) and thus a posterior probability of 83.3 % for the alternative hypothesis. Our study was exploratory in nature (Held and Ott, 2018, Sect. 1.3.2); hence, our null hypothesis was that there is no effect as opposed to the alternative hypothesis that there is one, for which a local distribution around zero is assumed (Held and Ott, 2018, Sect. 2.2). We derived the corresponding sample size-adjusted minimum BF01 (denoted BF01) from the p values of the GAM coefficients, ANOVA, and Kendall rank correlations (Johnson, 2005, Eq. 8; Held and Ott, 2016, Sect. 3; 2018, Sect. 3). While BF01 never exceeds the value of 1, BF01 below 1/100 may be considered to be very strong, and BF01 above may be considered to be (very) weak (Held and Ott, 2018, Table 2). Henceforward, we refer to results with p<0.005 as significant and with BF as decisive. Note that the BF01 expresses the most optimistic shift towards the alternative hypothesis.

All computations for data preparations, calculations, and visualizations were conducted in R (versions 4.0.2 and 4.1.3 for scientific computing and data visualizations, respectively; R Core Team, 2022) with different packages. Data were prepared with data.table (Dowle and Srinivasan, 2021). Phenology models were coded based on phenor (Hufkens et al., 2018) and calibrated with DiceDesign and DiceOptim (for the optimization algorithms EGO and TREGO; Dupuy et al., 2015; Picheny et al., 2021), with GenSA via phenor (for GenSA; Xiang et al., 2013; Hufkens et al., 2018), with pso (for PSO; Bendtsen, 2012), and with cmaes (for CMA-ES; Trautmann et al., 2011), while the RMSE was calculated with hydroGOF (Zambrano-Bigiarini, 2020). The formulas for the GAMs were translated from linear mixed-effects models with buildmer (Voeten, 2022), GAMs were fitted with mgcv::bam (Wood, 2011, 2017), the corresponding coefficients and p values were extracted with mixedup (Clark, 2022), and sample size-adjusted BF01 were derived from p values with pCalibrate::tCalibrate (Held and Ott, 2018). Summary statistics, ANOVA, and correlations with respective p values were calculated with stats (R Core Team, 2022). Figures and tables were produced with ggplot2 (Wickham, 2016), ggpubr (Kassambara, 2020), and gtable (Wickham and Pedersen, 2019).

3.1 Model performance

We evaluated 2 205 000 and 139 230 externally validated site- and species-specific calibration runs. Each of these runs represented a unique combination of 21 models, 10 optimization algorithms, 7 calibration samples ×500 sites with the site-specific calibration or 9 calibration samples with the species-specific calibration, and 3 species. All samples for the species-specific calibration were drawn five times, except for the full sample. From the initial site- and species-specific calibration runs, 136 500 and 7048 runs, respectively, did not converge, while another 373 120 and 23 312 runs led to NA values in either the internal or external validation (Supplement S6, Fig. S1).

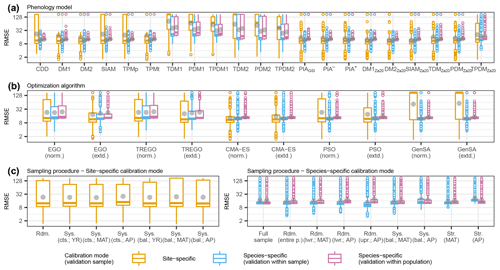

Figure 3Observed distributions of the external root mean square error (RMSE) of the pooled species according to (a) phenology models, (b) optimization algorithms, and (c) sampling procedures. The thick horizontal lines and gray dots indicate the respective median and mean. Boxes cover the inner quartile range, whiskers extend to the most extreme observations or to 1.5 times the inner quartile range, and outliers are indicated as colored circles. Note that the y axes were log-transformed. In all figures, the colors represent the calibration and validation modes. The abbreviations for the models, algorithms, and sampling procedures are explained in following the tables: Supplement S2, Table S1; Supplement S4, Table S1; and Supplement S4, Tables S2 and S3.

3.1.1 Observed effects

Across the phenology models, optimization algorithms, and sampling procedures, the observed distribution of the external root mean square error (RMSE) of the pooled species differed considerably between the calibration and validation modes. Overall, the smallest median RMSEs were similar between the site- and species-specific calibration modes, ranging from 10.1 to 12.3 and from 11.7 to 12.6 or from 12.4 to 12.9 d in the site- and species-specific calibration validated within the sample or within the population (Fig. 3; Supplement S6, Table S1). The smallest mean RMSEs were considerably larger with the site- than with the species-specific calibration (19.0–51.3 vs. 11.7–23.9 or 12.9–24.4 d; gray dots in Fig. 3). Accordingly, standard deviations were larger with the site- than with the species-specific calibration (28.4–66.0 vs. 3.7–36.6 or 1.2–36.0 d; Fig. 3; Supplement S6, Table S1).

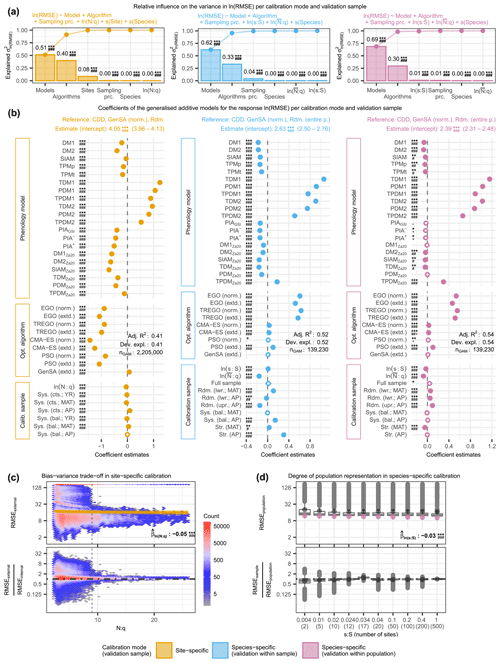

Figure 4The relative variance in the log-transformed external root mean square error (RMSE) explained by phenology models, optimization algorithms, and calibration samples (i.e., sampling procedures and sample size proxies) together with the effects of the individual factors and the observed distribution of the RMSE according to sample size proxies. The relative variance was estimated from analyses of variance (a) based on generalized additive models (GAMs; b) for site-specific calibration (left), as well as for within-sample-validated (middle) and within-population-validated (right) species-specific calibration. The observed distribution is plotted against (c) the number of observations per free model parameter (N:q) and (d) the number of sites relative to the 500 sites of the entire population (s:S), illustrating the bias–variance trade-off with site-specific calibration and the degree to which a model represents the population with species-specific calibration, respectively. In (a), the bars indicate the estimated influence of phenology models, optimization algorithms, sampling procedures, and sample size proxies (N:q, , and s:S), as well as of the random effects of sites and species on the variance in RMSE. The connected dots show the cumulated influence. Panel (b) shows the coefficient estimates (dots or circles) of the GAMs together with their 0.5 %–99.5 % confidence limits (whiskers). Dots represent significant (p<0.005) coefficients. The significance levels of each coefficient are indicated with ., *, **, or ***, corresponding to p<0.1, 0.05, 0.01, or 0.001, respectively. Further, the minimum Bayes factor is indicated with +, #, #+, ##, ##+, or ###, corresponding to BF, 1/10, 1/30, 1/100, 1/300, or 1/1000, respectively. Adjusted R2 and deviance explained are printed (Adj. R2 and Dev. expl., respectively). Note that negative coefficients (i.e., to the right of the dashed black line) indicate lower RMSE and thus better model performance. In (c), the observed distributions of the actual (top) and relative (bottom) external RMSE are plotted against N:q. The color of the hexagons represents the respective number of observations within the area they cover, and the dashed black line indicates . For the actual RMSE, the estimated effect size according to the GAM is plotted with a solid golden line (median) and a golden-shaded area (0.5 %–99.5 % confidence limits). For the relative RMSE, the external RMSE is larger than the internal RMSE in observations above the dot-dashed black line and vice versa. In (d), the observed distributions of the actual (top) and relative (bottom) external population RMSEs are plotted for each s:S. For the actual RMSE, the estimated effect size according to the GAM is plotted with purple dots (median) and purple whiskers (0.5 %–99.5 % confidence limits). For the relative RMSE, the external population RMSE is larger than the external sample RMSE in observations above the dot-dashed black line and vice versa. In (c) and (d), the printed values of the corresponding coefficient estimates () refer to the log-transformed RMSE and respective N:q or s:S. The abbreviations for the models, algorithms, and sampling procedures in all figures are explained in the following tables: Supplement S2, Table S1; Supplement S4, Table S1; and Supplement S4, Tables S2 and S3.

In the site-specific calibration, increasing sample size relative to the number of free model parameters (N:q) first lowered and then increased the RMSE and generally decreased the bias–variance trade-off. Binned mean RMSEs and corresponding standard deviations ranged from 35.8 to 61.1 and from 58.5 to 71.9 d, respectively (Fig. 4c; Supplement S6, Table S2). The binned mean RMSE ratio regarding the bias–variance trade-off (i.e., external RMSE : internal mean RMSE) decreased steadily from 1.5 to 1.0 and at decreasing step sizes with bins of increased N:q. Step sizes between binned N:q were considerably larger for than for (Supplement S6, Table S2), and we observed an abrupt increase in the scatter of the RMSEs and RMSE ratios below an N:q of ∼9 (Fig. 4c).

In species-specific calibration, larger sample sizes generally co-occurred with smaller population RMSEs and higher degrees to which a model represented the population, except for the stratified samples, which led to the best modeled phenology at the population level and the highest degree of population representation. The mean population RMSE and corresponding standard deviation ranged from 24.4 to 30.2 and 36.0 to 40.4 d (Fig. 4d; Supplement S6, Table S2). We observed the smallest mean population RMSE in the stratified sample based on average mean annual temperature (MAT; 12 sites; RMSE = 24.4 d), followed by the stratified sample based on average autumn phenology (AP; 17 sites; RMSE =25.9 d) and then the full sample (500 sites; RMSE =25.9 d). Except for the stratified samples, increasing sample size resulted in a steady increase in the RMSE ratio regarding the degree to which a model represents the population, which indicated a generally better representation of the population with larger samples. The ratio for stratified MAT samples followed just behind that of the full sample and was 0.95, followed by the samples with 200 and 100 sites that led to a ratio of 0.94. The ratio for the stratified AP samples even exceeded that of the full sample and was 1.21 (i.e., the validation within the samples led to larger RMSEs than the validation within the population). Thus, the most accurate modeling of autumn phenology at the population level was achieved with stratified MAT samples rather than with the full sample. Furthermore, models calibrated with AP samples performed better when applied to the whole population, suggesting that the population is better represented with AP samples than with the full sample.

3.1.2 Estimated effects

The evidence in the analyzed data against H0 was significant and decisive for the estimated effects (p<0.005 and BF) and influences (p<0.01 and BF) of most factors, while the deviances explained ranged from 0.41 to 0.54 (Fig. 4; Supplement S6, Fig. S4; Supplement S6, Tables S8–S11 and S15–S18).

Phenology models generally had the largest influence on model performance among optimization algorithms, sampling procedures, sample size, sites, and species, but the degree of influence, as well as the best-performing models depended on the calibration mode (Fig. 4; Supplement S6, Tables S5–S8). Phenology models explained 51 % and 62 % or 69 % of the variance in RMSEs in the site- and species-specific calibration when validated with the sites of the sample or the entire population, respectively (Fig. 4; Supplement S6, Table S8). We estimated the effect on the RMSE of each model relative to the reference CDD model and per calibration mode. In the site-specific calibration, the effects were generally larger than in the species-specific calibration (Fig. 4; Supplement S6, Tables S9–S11). Further, the ranks of the models according to their effects differed between calibration modes (Fig. 4; Supplement S6, Tables S5–S7).

In the site-specific calibration, all 20 phenology models had decisive and significant effects compared to the RMSE of the reference CDD model, which ranged from halving to tripling, and their ranking depended strongly on the treatment of NA values and non-converging runs (Fig. 4; Supplement S6, Fig. S4; Supplement S6, Tables S5 and S12). The reference model CDD led to an RMSE of 52.6–62.1 d in the site-specific calibration (99 % confidence interval, CI99; see Supplement S5, Sect. S3 for the back-transformation of the coefficient estimates; Fig. 4; Supplement S6, Tables S5 and S9). The largest reduction from this RMSE was achieved with the models SIAMZa20, DM2Za20, DM1Za20, and PIA+, ranging from −51 % to −40 % (CI99). Model ranks and effect sizes changed considerably if NA-producing and non-converging calibration runs were excluded. The RMSE of the reference model CDD dropped to 6.0–7.6 d (CI99) and was not reduced by any of the other models (Supplement S6, Fig. S4; Supplement S12, Table S16). In other words, if only calibration runs without NAs were considered, the CDD model performed best, followed by SIAM, DM2Za20, and TPMp.

In the species-specific calibration, all 20 or 9 models had decisive and significant effects compared to the reference model CDD if validated within sample or population, respectively, with effects ranging from a reduction by one-fifth to a tripling and resulting in fairly consistent model ranks between the two NA treatments (Fig. 4; Supplement S6, Fig. S4; Supplement S6, Tables S6–S7 and S13–S14). The RMSE according to the reference model CDD was 12.1–15.7 or 10.1–11.9 d (CI99) if validated within the sample or population, respectively (Fig. 4; Supplement S6, Tables S6–S7 and S10–S11). According to the within-sample validation, the RMSE was reduced the most with the DM1, DM2, TDMZa20, and SIAMZa20, with reductions between −19 % and −11 % (CI99). According to the within-population validation, the models DM2, DM1, TPMp, and TDMZa20 reduced the RMSE the most, and reductions ranged from −10 % to −1 % (CI99; Fig. 4; Supplement S6, Tables S6–S7 and S10–S11). If NA-producing and non-converging runs were excluded, the reference RMSE increased to 16.2–20.2 or 12.9–13.9 d (CI99; validated within the sample or population, respectively), while model ranks were changed in two positions (Supplement S6, Fig. S4; Supplement S6, Tables S13–S14).

Optimization algorithms had the second-largest influence on model performance, explaining about one-third of the variance in RMSEs, with differences between the calibration modes and NA treatments regarding the degree of influence and the ranking of individual algorithms (Fig. 4; Supplement S6, Fig. S4; Supplement S6, Tables S5–S8 and S12–S15). Algorithms explained 40 % and 33 % or 30 % of the variance in RMSEs in site- and species-specific calibrations validated within the sample or population, respectively (Fig. 4; Supplement S6, Table S8). In the site-specific calibration, both CMA-ES algorithms (norm. and extd.) resulted in the smallest RMSEs, which were −76 % to −71 % (CI99) lower than the RMSEs according to the reference GenSA (norm.; CI99 of 52.6–62.1 d – see above; Fig. 4; Supplement S5, Tables S5 and S9). In species-specific calibration, the best results were obtained with both GenSA algorithms (norm. and extd.), whereas the Bayesian algorithms (EGO and TREGO, norm. and extd.) performed the worst and resulted in RMSEs that were +57 % to +91 % (CI99) larger than the reference RMSEs (CI99 of 12.1–15.7 or 10.1–11.9 d if validated within the sample or population – see above; Fig. 4; Supplement S6, Tables S6–S7 and S10–S11). If NA-producing and non-converging calibration runs were excluded, the lowest and largest RMSEs with site-specific calibration were obtained with the GenSA and Bayesian algorithms, respectively. With species-specific calibration, we observed little change when only calibration runs without NAs were analyzed. As before, Bayesian algorithms led to the largest RMSEs, while both GenSA algorithms resulted in the smallest RMSEs (Supplement S6, Fig. S4; Supplement S6, Tables S12–S14).

Sampling procedures had little influence on model performance in general (third or fourth largest) and were more important with species- than with site-specific calibration where stratified sampling procedures led to the best results (Fig. 4; Supplement S6, Tables S5–S8). Sampling procedures explained 0.3 % and 4.0 % or 0.7 % of the variance in RMSEs with site- and species-specific calibrations validated within the sample or population, respectively (Fig. 4; Supplement S6, Table S8). With site-specific calibration, systematically continuous samples based on mean annual temperature (MAT) and year performed best, diverging by −4.4 % to −1.3 % (CI99; Fig. 4; Supplement S6, Tables S5 and S9) from the RMSEs according to the reference random sampling. With species-specific calibration, we received the lowest RMSEs with random samples from half of the population (split according to autumn phenology, AP) when validated within the sample (Fig. 4; Supplement S6, Tables S6 and S10). When validated within the population, stratified samples based on MAT performed best, diverging by −6.9 % to −2.3 % (CI99) from the RMSE according to the reference random sampling from the entire population (Fig. 4,; Supplement S6, Tables S7 and S11). The alternative NA treatment had little effect on these results in general but led to sampling procedures that exhibited an influence of 49 % with species-specific calibration validated within the sample, while systematically balanced samples performed best with site-specific calibration (Supplement S6, Fig. S4; Supplement S6, Tables S12–S15). Note that, for the site-specific calibration, these sampling procedures refer to the allocation of observations for the 5-fold cross-evaluation, whereas for the species-specific calibration, they refer to the selection of sites.

Sample size effects on model performance were very small but showed that more observations per free model parameter led to smaller RMSEs, except with site-specific calibration when NA-producing runs were excluded (Fig. 4; Supplement S6, Fig. S5; Supplement S6, Tables S5–S8 and S12–S15). Among the size proxies of relative (average) number of observations (N:q or ) and site ratio (s:S), only s:S with species-specific calibration validated within the population explained more than 0.15 % of the variance in RMSEs, namely 1.0 % (Fig. 4; Supplement S6, Table S8). An increase of 10 % in N:q reduced the RMSE by approximately −0.7 % to −0.2 % with site-specific calibration, while an increase of 10 % in the led to reductions of approximately −1.8 % to −1.2 % or −1.0 % to −0.4 % (CI99) with species-specific calibration validated within the sample or population (Supplement S6, Tables S5–S7). A 10 % increase in s:S with species-specific calibration increased the RMSE by (CI99) if validated within the sample and decreased it by (CI99) if validated within the population (Supplement S6, Tables S6 and S7). By excluding NA-producing and non-converging runs, a 10 % increase in N:q increased the RMSE in the site-specific calibration by +0.4 % to +0.6 % (CI99; Supplement S6, Fig. S4; Supplement S6, Tables S12–S15).

Sites and species were included as grouping variables for the random effects and had little influence on model performance, except for sites with site-specific calibration. Sites explained 8.5 % of the variance in RMSEs with site-specific calibration and hence had a larger influence than the sampling procedure and sample size (i.e., N:q) combined (Fig. 4; Supplement S6, Table S8). Species only explained more than 0.1 % of the variance in species-specific calibration validated within the sites, namely 0.4 %. Thus, species had a slightly greater influence than and s:S combined (Fig. 4; Supplement S6, Table S8). When only converged calibration runs without NAs were analyzed, sites became the second most important driver of the variance in RMSEs in the site-specific calibration, explaining 27 % (Supplement S6, Fig. S4; Supplement S6, Table S15).

3.2 Model projections

We analyzed the effects on the 100-year shifts at site level (Δ100) in autumn phenology projected with site- and species-specific models within the sample or population and based on five random samples per projection mode. Each sample consisted of 105 projected shifts per climate projection scenario, i.e., 2.6×106 projected shifts per sample, which were drawn from corresponding datasets that consisted of and or projected with site- and species-specific phenology models for the sites within the sample or population, respectively. These datasets were based on 16 climate model chains (CMCs) based on the representative concentration pathway 4.5 (RCP 4.5), and 10 CMCs based on RCP 8.5. The analyzed data (i.e., 3.9×107Δ100) provided significant and decisive evidence against H0 for most estimated effects and most influences (Supplement S6, Tables S22–S25).

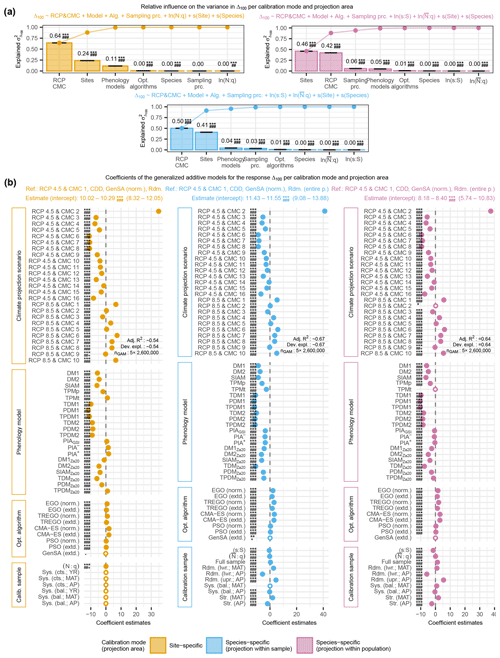

Figure 5The relative variance in the projected 100-year shifts at site level (Δ100) of autumn phenology and corresponding effects explained by climate projection scenarios (i.e., representative concentration pathways, RCPs, and climate model chains, CMCs), phenology models, optimization algorithms, and calibration samples (i.e., sampling procedures and sample sizes). The relative variance was estimated from analyses of variance (a) based on generalized additive models (GAMs; b) for Δ100 according to site-specific models (left), as well as according to species-specific models when projected within the sample (middle) or within the population (right). In (a), the black error bars indicate the range of estimated influence from the five GAMs based on random samples. In (b), the median coefficient estimates from the five GAMs are visualized. If all five estimates were significant (p<0.005), the median is indicated with a dot and with a circle otherwise. None of the 99 % confidence intervals from any of the five GAMs extended beyond either the dot or circle and are thus not shown. Note that coefficients estimate the difference in relation to the reference Δ100. Thus, a negative coefficient estimate may indicate a projected advance or delay in autumn phenology, depending on how it relates to the reference. The abbreviations for the climate projection scenarios, phenology models, optimization algorithms, and sampling procedures in all figures are explained in the following tables: Supplement S1, Table S5; Supplement S2, Table S1; Supplement S4, Table S1; and Supplement S4, Tables S2 and S3. For a further description, see Fig. 4a and b.

3.2.1 Estimated effects