the Creative Commons Attribution 4.0 License.

Technical descriptions of the experimental dynamical downscaling simulations over North America by the CAM–MPAS variable-resolution model

Koichi Sakaguchi

L. Ruby Leung

Colin M. Zarzycki

Jihyeon Jang

Seth McGinnis

Bryce E. Harrop

William C. Skamarock

Andrew Gettelman

Chun Zhao

William J. Gutowski

Stephen Leak

Linda Mearns

Comprehensive assessment of climate datasets is important for communicating model projections and associated uncertainties to stakeholders. Uncertainties can arise not only from assumptions and biases within the model but also from external factors such as computational constraints and data processing. To understand sources of uncertainties in global variable-resolution (VR) dynamical downscaling, we produced a regional climate dataset using the Model for Prediction Across Scales (MPAS; dynamical core version 4.0) coupled to the Community Atmosphere Model (CAM; version 5.4), which we refer to as CAM–MPAS hereafter. This document provides technical details of the model configuration, simulations, computational requirements, post-processing, and data archive of the experimental CAM–MPAS downscaling data.

The CAM–MPAS model is configured with VR meshes featuring higher resolutions over North America as well as quasi-uniform-resolution meshes across the globe. The dataset includes multiple uniform- (240 and 120 km) and variable-resolution (50–200, 25–100, and 12–46 km) simulations for both the present-day (1990–2010) and future (2080–2100) periods, closely following the protocol of the North American Coordinated Regional Climate Downscaling Experiment. A deviation from the protocol is the pseudo-warming experiment for the future period, using the ocean boundary conditions produced by adding the sea surface temperature and sea-ice changes from the low-resolution version of the Max Planck Institute Earth System Model (MPI-ESM-LR) in the Coupled Model Intercomparison Project Phase 5 to the present-day ocean state from a reanalysis product.

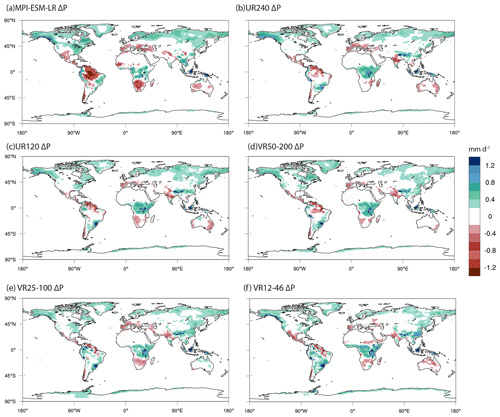

Some unique aspects of global VR models are evaluated to provide background knowledge to data users and to explore good practices for modelers who use VR models for regional downscaling. In the coarse-resolution domain, strong resolution sensitivity of the hydrological cycles exists over the tropics but does not appear to affect the midlatitude circulations in the Northern Hemisphere, including the downscaling target of North America. The pseudo-warming experiment leads to similar responses of large-scale circulations to the imposed radiative and boundary forcings in the CAM–MPAS and MPI-ESM-LR models, but their climatological states in the historical period differ over various regions, including North America. Such differences are carried to the future period, suggesting the importance of the base state climatology. Within the refined domain, precipitation statistics improve with higher resolutions, and such statistical inference is verified to be negligibly influenced by horizontal remapping during post-processing. Limited (≈50 % slower) throughput of the current code is found on a recent many-core/wide-vector high-performance computing system, which limits the lengths of the 12–46 km simulations and indirectly affects sampling uncertainty. Our experience shows that global and technical aspects of the VR downscaling framework require further investigations to reduce uncertainties for regional climate projection.

With the increasing frequencies and intensities of extreme events witnessed in the last decades worldwide, there is an increasing need for high-resolution climate information to support risk assessment and climate adaptation and mitigation planning (Gutowski Jr. et al., 2020). However, limited by computing resources and model structures, climate projections produced by global climate and Earth system models, including those in the most recent Coupled Model Intercomparison Project Phase 6 (CMIP6; Eyring et al., 2016), are mostly available at grid spacing of 100–150 km. These models do not adequately resolve regional climate variability associated with forcing, such as mesoscale surface heterogeneities and orography (Roberts et al., 2018). A subset of global models that participated in the High Resolution Model Intercomparison Project feature grid spacing between 25 and 50 km, but the high computational cost leads to smaller ensemble sizes, fewer types of experiments, and shorter simulation lengths than those for the models with standard grid spacing (Haarsma et al., 2016). To bridge the scale gap, diverse statistical and dynamical approaches have been developed to downscale global climate simulations to higher resolutions (4–50 km grid spacing) for different regions around the world (e.g., Wilby and Dawson, 2013; Giorgi and Mearns, 1991; Giorgi and Gutowski, 2015; Prein et al., 2017). These downscaling approaches have been compared to inform methodological development and to provide uncertainty information for users of the downscaled climate data (e.g., Wood et al., 2004; Fowler et al., 2007; Trzaska and Schnarr, 2014; Smid and Costa, 2018). However, few attempts (e.g., Wilby et al., 2000) have been made to compare different statistical and dynamical downscaling methods under the same experimental protocol to reduce the factors confounding interpretation of the results.

The effort described in this work was initiated in a project supported by the US Department of Energy, “A Hierarchical Evaluation Framework for Assessing Climate Simulations Relevant to the Energy–Water–Land Nexus (FACETS)”, which aims to systematically compare representative dynamical and statistical downscaling methods to evaluate and understand their relative credibility for projecting regional climate change. The project has been expanded to a larger project, “A Framework for Improving Analysis and Modeling of Earth System and Intersectoral Dynamics at Regional Scales (HyperFACETS)”, with a larger multi-institutional team (https://hyperfacets.ucdavis.edu/, last access: 11 May 2023). Through both project stages, we produced a model evaluation framework that features a set of structured, hierarchical experiments performed using different statistical and dynamical downscaling methods and models as well as a cascade of metrics informed by the different uses of regional climate information (e.g., Bukovsky et al., 2017; Rhoades et al., 2018a, b; Pendergrass et al., 2020; Pryor et al., 2020; Pryor and Schoof, 2020; Coburn and Pryor, 2021; Feng et al., 2021).

Dynamical downscaling usually refers to numerical simulations over a limited-area domain to achieve a higher resolution than those of global climate models (e.g., Giorgi and Mearns, 1991; Giorgi, 2019). Output from a global model simulation is used to provide the boundary conditions. This one-way nesting approach does not allow interactions between the target high-resolution domain and the rest of the globe, and it needs to deal with various issues from the prescribed lateral boundary conditions (Wang et al., 2004). Another dynamical downscaling approach is global variable-resolution (VR) models. A class of VR models uses the so-called stretched grid that is transformed continuously and nonlocally to achieve finer grid spacings over a specified region while grid cells are “stretched” (coarsened) in other regions of the global domain, retaining the same number of grid columns (e.g., Fox-Rabinovitz et al., 2000; McGregor, 2013). Several models of this class were compared under the Stretched Grid Model Intercomparison Project (Fox-Rabinovitz et al., 2006). The other class of VR models increases the grid density locally over specified region(s) without a compensating reduction in the grid resolution over other parts of the globe. Such a regional refinement is achieved by unstructured grids whose cell distributions are determined to tile the surface of a sphere nearly uniformly, instead of being tied to geographical structures such as latitude and longitude coordinates (Williamson, 2007; Staniforth and Thuburn, 2011; Ju et al., 2011). The regional downscaling dataset described in this study is produced by the latter VR approach.

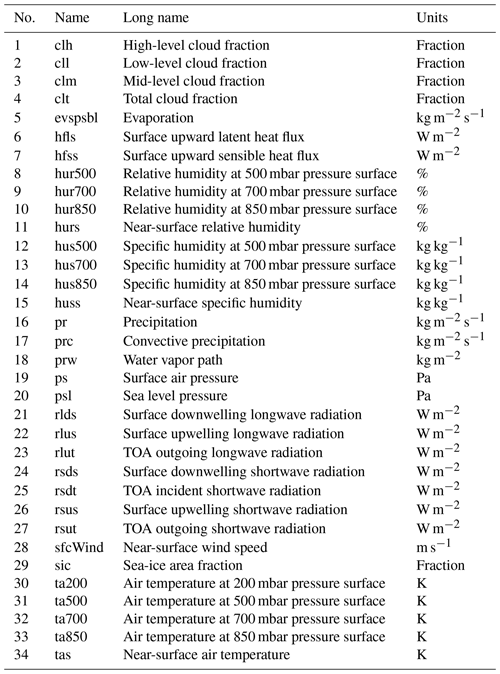

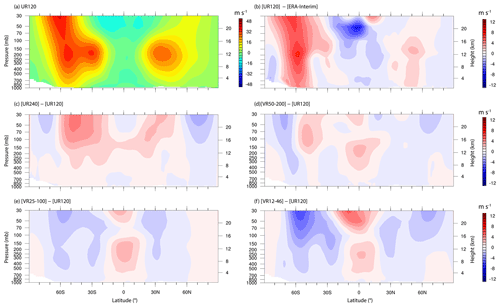

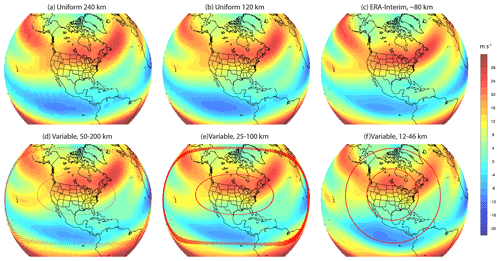

Figure 1. June–July–August mean zonal wind at the 200 hPa level in each of the present-day (eval) CAM–MPAS simulations and ERA-Interim: (a) globally uniform 240 km grid, (b) uniform 120 km grid, (c) ERA-Interim, (d) variable-resolution grid with 50 km grid spacing over North America and 200 km in the coarse-resolution domain, (e) variable-resolution grid from 100 to 25 km, and (f) variable-resolution grid from 46 to 12 km. In panels (d), (e), and (f), grid cells at approximate boundaries between the coarse-resolution, transition, and refined domains are marked by red dots.

As a part of the structured hierarchical experiments, we have produced a regional climate dataset using a global VR dynamical core called Model for Prediction Across Scales (MPAS) coupled with the Community Atmosphere Model (CAM) physics suite. The CAM–MPAS model allows high-resolution regional simulations to be performed using regional refinement facilitated by unstructured grids, along with its non-hydrostatic dynamics, climate-oriented CAM physics parameterizations, and other Earth system component models available in the Community Earth System Model (CESM). For the dataset presented here, the model is configured on VR meshes with regional refinement over North America and quasi-uniform-resolution (UR) meshes across the globe (Figs. 1, 3). The VR configurations allow fine-scale features to be better resolved inside the refinement region; these fine-scale features then interact seamlessly with the large-scale circulations simulated at a coarser resolution outside the refined domain.

The dataset is designed to be compatible with the regional climate simulations produced for the North American Coordinated Regional Climate Downscaling Experiment (NA-CORDEX) program (Mearns et al., 2017) and with additional simulations using the Advanced Research Weather Research and Forecasting (WRF) model and the Regional Climate Model version 4 (RegCM4) conducted under the HyperFACETS project. Few studies have compared limited-area and global VR dynamical downscaling approaches at the climate timescale (Hagos et al., 2013; Huang et al., 2016; Xu et al., 2018, 2021), making such comparisons an important element of the HyperFACETS project. For example, limited-area models are applied to specific regions conditioned on the global model-simulated large-scale circulation prescribed through lateral boundary conditions. Their lateral boundary conditions are identical, regardless of the resolution of the downscaling grid. In contrast, global VR models simulate both the regional and global climate in a single model. Unlike in limited-area models, winds flowing into the regionally refined domain can vary with the resolutions of the coarse-resolution domains and the transition zones and potentially through the upscale effects from the high-resolution domain. As can be seen in Fig. 1, the general pattern of the large-scale winds is similar across simulations at different resolutions and in ERA-Interim (Dee et al., 2011). However, the zonal wind pattern in the eastern Pacific near California shows notable sensitivity to resolution (and bias against ERA-Interim), which could affect downwind regional hydrometeorology.

As a relatively new approach, the VR framework has not been widely used in coordinated downscaling experiments. Therefore, potential users of the CAM–MPAS climate dataset are not expected to be familiar with the characteristics of the model and the specificity regarding the model output. It is also not clear if one can apply an experimental protocol developed for regional models in a straightforward manner to global VR models. Furthermore, the timing of our production simulations coincided with the introduction of new, many-core architectures of the high-performance computing (HPC) system, such as Cori Knights Landing at the National Energy Research Scientific Computing Center (NERSC). Climate simulations of our CAM–MPAS code on such a system revealed challenges that are relevant to the wider global and regional climate simulation community. Hence, the goal of this paper is to provide a reference for not only the users of the experimental CAM–MPAS downscaled climate dataset but also the future users of the CESM2–MPAS and other global VR models for regional downscaling. Specifically, we provide a technical summary of the CAM–MPAS model (Sect. 2), details of the CAM–MPAS downscaling experiments (Sect. 3), a description of the post-processing of model output and archiving (Sect. 4), and general characteristics of model simulations (Sect. 5).

Previous works have already introduced the CAM–MPAS framework (Rauscher et al., 2013; Sakaguchi et al., 2015; Zhao et al., 2016), but, for the convenience of readers and the completeness of this document, we reiterate the descriptions of the MPAS and CAM models and their coupling in this section. More details are available from the cited references.

2.1 The Model for Prediction Across Scales (MPAS)

MPAS is a modeling framework developed to simulate geophysical fluid dynamics over a wide range of scales (Skamarock et al., 2012; Ringler et al., 2013). Currently four models based on the MPAS framework exist: atmosphere, ocean, sea ice, and land ice (The MPAS project, 2013). The atmosphere version (MPAS-Atmosphere) solves the compressible, non-hydrostatic momentum and mass-conservation equations coupled to a thermodynamic energy equation (Skamarock et al., 2012). The novel characteristic of the MPAS framework is a C-grid finite-volume scheme developed for a hexagonal, unstructured grid called Spherical Centroidal Voronoi Tessellations (SCVT; Ringler et al., 2010), accompanied by a new scalar transport scheme by Skamarock and Gassmann (2011). The SCVT mesh can be constructed to have either quasi-uniform grid cell sizes or variable ones with smooth transitions between the coarse- and fine-resolution regions (Ju et al., 2011). The C-grid staggering provides an advantage in resolving divergent flows important to mesoscale features, and the finite-volume formulation guarantees a local conservative property for prognostic variables of the dynamical core (Skamarock et al., 2012). MPAS-Atmosphere is available as a stand-alone global atmosphere model with its own suite of sub-grid parameterizations (Duda et al., 2015), but we use the MPAS-Atmosphere numerical solver as the dynamical core coupled to the CAM physics parameterizations here. Previous studies using VR meshes have demonstrated that the MPAS dynamical core is able to simulate atmospheric flow across coarse- and fine-resolution regions without unphysical signals (Park et al., 2013; Rauscher et al., 2013). This capability of regional mesh refinement is the main feature that we aim to test in the context of dynamical downscaling for regional climate projections.

2.2 The Community Earth System Model version 2 (CESM2) and Community Atmosphere Model version 5.4 (CAM5.4)

The model code base used for our simulations is a beta version of CESM2 (CESM1.5), the same code used by Gettelman et al. (2018), who focused on the regional refinement capability of the spectral element dynamical core. The atmospheric component model CAM has multiple versions of the physics parameterization package. We use CAM version 5.4, which is an interim version toward CAM version 6 (Bogenschutz et al., 2018). The CAM5.4 physics is the default option for CAM in CESM1.5. The parameterization components in CAM5.4 are summarized in Table 1. Their characteristics are documented in detail by Bogenschutz et al. (2018), and a variety of diagnostic plots are publicly available (Atmosphere Model Working Group, 2015). A major difference between CAM5.4 and the previous version CAM5.0 is the prognostic mass and number concentrations of rain and snow in the new cloud microphysics scheme, MG2 (Gettelman and Morrison, 2015; Gettelman et al., 2015). Prognostic concentrations of precipitating particles make the model more appropriate for high-resolution simulations by removing assumptions necessary for a diagnostic approach (e.g., neglecting the advection of precipitating particles; Rhoades et al., 2018b). The prognostic aerosol scheme is also revised to the four-mode version of the Modal Aerosol Module (MAM4; Liu et al., 2016), but we only use the diagnostic aerosol scheme (Bacmeister et al., 2014) for the simulations documented in this paper. Specifically, the monthly mean aerosol mass concentrations for the year 2000 are derived from a previous simulation using CAM version 4 with the prognostic three-mode MAM (Liu et al., 2012) on a 1° grid. Given the prescribed aerosol mass concentrations, aerosol number concentrations are calculated by an empirical relationship between the two concentrations and are then passed to the cloud microphysics.

Table 1. Parameterization components in CAM5.4 (references): Bretherton and Park (2009); Park et al. (2014); Gettelman and Morrison (2015); Gettelman et al. (2015); Zhang and McFarlane (1995); Neale et al. (2008); Park and Bretherton (2009); Kiehl et al. (2000); Bacmeister et al. (2014); Iacono et al. (2008); Richter et al. (2010).

2.3 CAM–MPAS coupling

An early effort to port the MPAS dynamical core to the CESM/CAM model started in 2011 under the “Development of Frameworks for Robust Regional Climate Modeling” project (Leung et al., 2013). The hydrostatic solver of the pre-released version of MPAS (Park et al., 2013) was coupled to CAM4 by the collaborative work among Los Alamos National Laboratory, Lawrence Livermore National Laboratory, and the National Center for Atmospheric Research. This CAM–MPAS model was extensively evaluated through a hierarchy of experiments (Hagos et al., 2013; Rauscher et al., 2013; Rauscher and Ringler, 2014; Sakaguchi et al., 2015, 2016; Zhao et al., 2016). Those studies demonstrated the ability of VR simulations to reproduce the uniform, globally high-resolution simulations inside the refined domain in terms of the characteristics of atmospheric circulations as well as the sensitivity of the physics parameterizations to horizontal resolution. In the idealized aquaplanet configuration with the older CAM4 physics, the resolution sensitivity of moist physics leads to unphysical upscale effects (Hagos et al., 2013; Rauscher et al., 2013), but these artifacts are mostly muted when an interactive land model is coupled, along with the presence of other forcing such as topography and land–ocean contrast (Sakaguchi et al., 2015). The non-hydrostatic version of the MPAS dynamical core (the released version 2) was later coupled to CESM version 1.5 to understand the behavior of the CAM5 physics under a wide range of resolutions over seasonal or longer timescales (Zhao et al., 2016; Hagos et al., 2018). Hagos et al. (2018) used this model with a convection-permitting VR mesh (4–32 km) to study the sensitivity of extreme precipitation to several parameters in the CAM5 physics, demonstrating stable coupling between the non-hydrostatic MPAS dynamical core and the global model physics package CAM5 at kilometer-scale resolution. 
The CAM–MPAS model for the present work is similar to the one used by Hagos et al. (2018), except that MPAS v2 is replaced by a more recent version (version 4). The same CAM–MPAS version employed in this study has demonstrated robust performance in simulating the Asian monsoon system using a 30–120 km VR mesh (Liang et al., 2021).

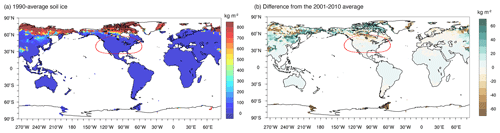

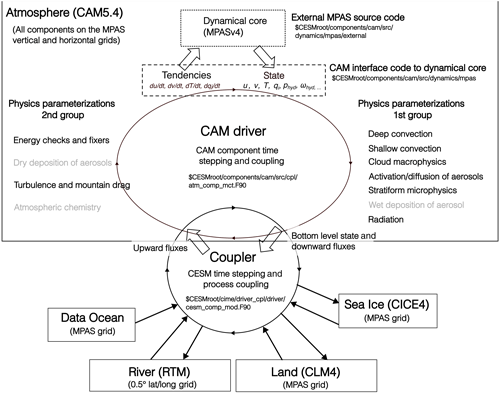

The CAM–MPAS coupling is illustrated in Fig. 2 along with the process-coupling sequence in the host model CESM1.5. The coupling between the non-hydrostatic MPAS and the main driver of CAM uses a Fortran interface and calling sequence similar to the default finite-volume (FV) core and other dynamical cores available in CAM (Neale et al., 2010). With this coupling approach, the dynamical core can be switched from the default FV to MPAS core by simply providing a flag “CAM_DYCORE=mpas” to the CESM build script (env_build.xml), along with an appropriate name of the horizontal grid (e.g., “mp120a” has been defined for the UR120 grid following CESM Software Engineering Group, 2014). The vertical grid in CAM–MPAS follows the height-based coordinate used by MPAS-Atmosphere (Klemp, 2011), but the number of layers (32) and the height of the interface levels are configured to closely match those of the hybrid σ-p coordinate used by other CAM dynamical cores.

The CESM coupler is responsible for time step management and sequential coupling of component models (Fig. 2). When CAM is called by the coupler, the CAM driver cycles the dynamics, physics parameterizations, and communication with the coupler. When the dynamics is called by the CAM driver, the MPAS dynamical core receives tendencies of horizontal momentum, temperature, and mixing ratios that are predicted by physics parameterizations and the other CESM component models and that are summed by the CAM driver prior to the communication with MPAS. MPAS cycles its time steps from the previous atmospheric state with the physics tendencies used as forcing terms. After MPAS completes its (sub) time steps, the updated atmospheric and tracer states are passed to CAM through the interface, including hydrostatic pressure, pressure thickness of each grid box, and geopotential height. The last three variables are required by the CAM physics that operates on a vertical column under hydrostatic balance, without the need to know that the vertical column is discretized in a height-based or hybrid pressure-based coordinate. No vertical interpolation nor extrapolation is performed in coupling CAM and MPAS. The CAM–MPAS interface layer also calculates hydrostatic pressure velocity and performs other required conversions (e.g., converts the prognostic winds normal to cell edges to conventional u and v winds at cell centers and converts mixing ratios defined with dry air in MPAS to those with moist air in CAM). Note that the pressure vertical velocity ω passed from MPAS to the CAM driver is diagnosed under the hydrostatic balance and is different from the non-hydrostatic vertical velocity prognostically simulated in the MPAS dynamical core.
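One of the conversions mentioned above, from mixing ratios defined per unit mass of dry air to those defined per unit mass of moist air, can be illustrated with a minimal sketch. This is not the interface code itself. It assumes that "moist air" means dry air plus water vapor only, and the function names `dry_to_moist` and `moist_to_dry` are hypothetical.

```python
def dry_to_moist(q_dry, qv_dry):
    """Convert a mixing ratio per kg of dry air to one per kg of moist air.

    Assumption (not stated in the text): moist air = dry air + water vapor,
    so the mass of moist air per kg of dry air is (1 + qv_dry).
    """
    if qv_dry < 0.0:
        raise ValueError("water vapor mixing ratio must be non-negative")
    return q_dry / (1.0 + qv_dry)


def moist_to_dry(q_moist, qv_moist):
    """Inverse conversion, given the moist-basis water vapor mixing ratio."""
    if not 0.0 <= qv_moist < 1.0:
        raise ValueError("moist-basis water vapor must lie in [0, 1)")
    return q_moist / (1.0 - qv_moist)


if __name__ == "__main__":
    qv_dry = 0.020   # kg of vapor per kg of dry air (illustrative value)
    qc_dry = 0.001   # kg of cloud liquid per kg of dry air (illustrative value)
    qv_moist = dry_to_moist(qv_dry, qv_dry)
    qc_moist = dry_to_moist(qc_dry, qv_dry)
    print(qv_moist, qc_moist)
    # Round trip back to the dry basis
    print(moist_to_dry(qc_moist, qv_moist))
```

The difference between the two definitions is small (about 2 % for a vapor mixing ratio of 0.02 kg kg−1) but systematic, which is presumably why the conversion is handled explicitly at the interface.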

A second-order diffusion is added to the top three model layers to produce the so-called “sponge layers” following other CAM dynamical cores (Jablonowski and Williamson, 2011; Lauritzen et al., 2012, 2018). The top model level is located at about 45 km above sea level. This model top is higher than those typically used in MPAS-Atmosphere (≈30 km). On the other hand, the number of vertical levels in CAM5.4 is smaller than the default number of vertical levels in the MPAS-Atmosphere (41 in version 4), resulting in a relatively coarse vertical resolution for a mesoscale model. However, its vertical resolution is within the range used by regional models participating in NA-CORDEX (18–58 levels across models).

Figure 2. Process coupling sequence in the CAM–MPAS model in the AMIP configuration. The MPAS dynamical core receives the time rate of change in zonal and meridional winds (u, v), atmospheric temperature (T), and water in vapor and condensed phases (qi, where the index i distinguishes water vapor, cloud liquid, cloud ice, etc.) and returns an updated atmospheric state, in terms of u, v, T, qi, hydrostatic pressure (Phyd), pressure velocity (ωhyd), etc., after integrating adiabatic dynamics. Also shown are the names of the source code files and directories where the coupling operations are carried out. The shell variable “$CESMroot” refers to the top-level directory of the CESM code. The parameterizations shown in gray were not active in our CAM–MPAS simulations.

This experimental version of CAM–MPAS is available from our private repository on GitHub (see the “Code and data availability” section), but it is not an official release and does not offer the same technical support as other CAM versions. Some model structural differences between CAM and MPAS, such as the vertical coordinate, require further work to improve physical consistency throughout the coupling processes. An ongoing effort to port MPAS to CAM/CESM addresses those remaining technical issues as part of the System for Integrated Modeling of the Atmosphere (SIMA) project (Gettelman et al., 2021; Huang et al., 2022).

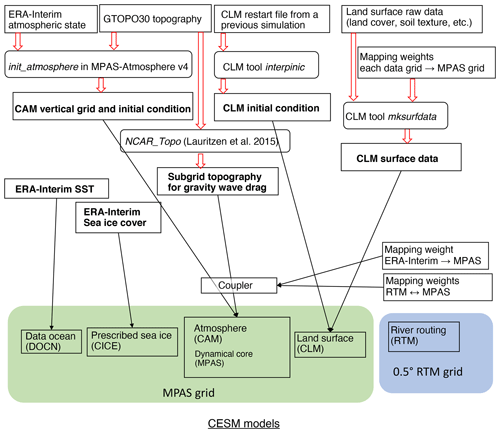

3.1 Model grid and parameters

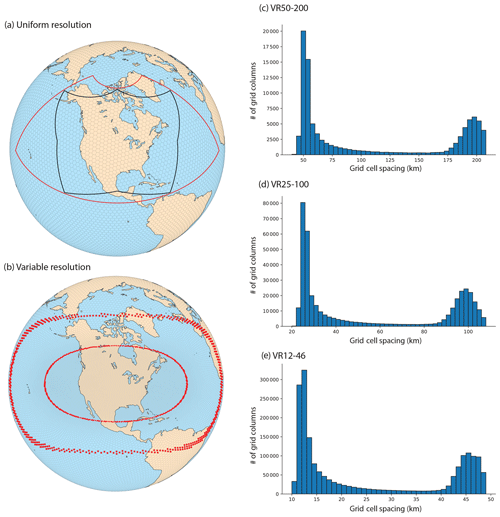

Three VR grids (50–200, 25–100, and 12–46 km) and two UR grids (240 and 120 km) are used for the CAM–MPAS downscaling experiment (Table 2). Figure 3 illustrates the UR and VR grids and the distributions of grid cell spacing in the three VR grids. The five CAM–MPAS model resolutions are named UR240, UR120, VR50-200, VR25-100, and VR12-46. UR240 has a similar grid spacing to the Max Planck Institute Earth System Model low-resolution version (MPI-ESM-LR; Giorgetta et al., 2013), whose ocean and sea-ice output is used as boundary forcing for the future experiment (see below). The UR120 grid has a comparable resolution to those of the majority of CMIP5 and CMIP6 models. Although their grid spacing does not exactly match that of the coarse-resolution domains on the VR grids or that of the MPI-ESM-LR model, these two UR meshes are readily available from the MPAS website and serve as a reference for the VR simulations. The two VR grids, VR50-200 and VR25-100, are created for this project because similar VR grids were not available from the MPAS mesh archive when the project started. The two meshes are designed to have a rectangular-shaped high-resolution domain over CONUS (Fig. 3), resembling the regional model domain for NA-CORDEX (CORDEX, 2015). The 12–46 km VR mesh is obtained from the MPAS mesh archive and has a circular and slightly smaller (by ≈30 %) high-resolution domain than the other two VR grids but still covers most of North America (Fig. 1f).

Figure 3. Illustration of the MPAS meshes used for this study: (a) uniform resolution (240 km) and (b) variable resolution (50–200 km). In panel (a), the black line represents the approximate domain for the NA-CORDEX experiment and the red line represents the area covered by the NAM grids for post-processed CAM–MPAS data. In panel (b), approximate boundaries between the 50 km domain and transition zone and between the transition zone and the 200 km domain are marked by red markers. The three histograms show the numbers of grid columns binned by grid cell spacing (km) for (c) VR50-200, (d) VR25-100, and (e) VR12-46.
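Histograms like those in panels (c) to (e) can be approximated from cell areas alone. The following is a minimal sketch, not the procedure used to produce the figure. It assumes each Voronoi cell is close to a regular hexagon, so that the center-to-center spacing d follows from the cell area A via A = (√3/2) d². The function names and the 10 km bin width are hypothetical.

```python
import math
from collections import Counter


def hexagon_area_km2(spacing_km):
    """Area of a regular hexagon whose center-to-center spacing is spacing_km."""
    return math.sqrt(3.0) / 2.0 * spacing_km ** 2


def spacing_from_area_km(area_km2):
    """Invert A = (sqrt(3)/2) d^2 to estimate the nominal grid spacing d."""
    return math.sqrt(2.0 * area_km2 / math.sqrt(3.0))


def spacing_histogram(areas_km2, bin_width_km=10.0):
    """Count grid columns per spacing bin; keys are lower bin edges in km."""
    counts = Counter()
    for area in areas_km2:
        d = spacing_from_area_km(area)
        lower_edge = math.floor(d / bin_width_km) * bin_width_km
        counts[lower_edge] += 1
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    # Synthetic cell areas standing in for a VR mesh (illustrative only)
    areas = [hexagon_area_km2(55.0)] * 3 + [hexagon_area_km2(195.0)] * 2
    print(spacing_histogram(areas))
```

On a real mesh the cell areas would come from the mesh file, and cells in the transition zone deviate from regular hexagons, so the estimated spacing is only nominal there.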

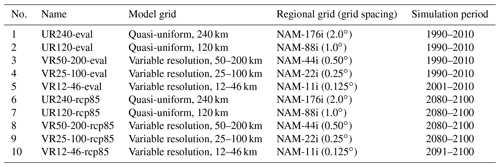

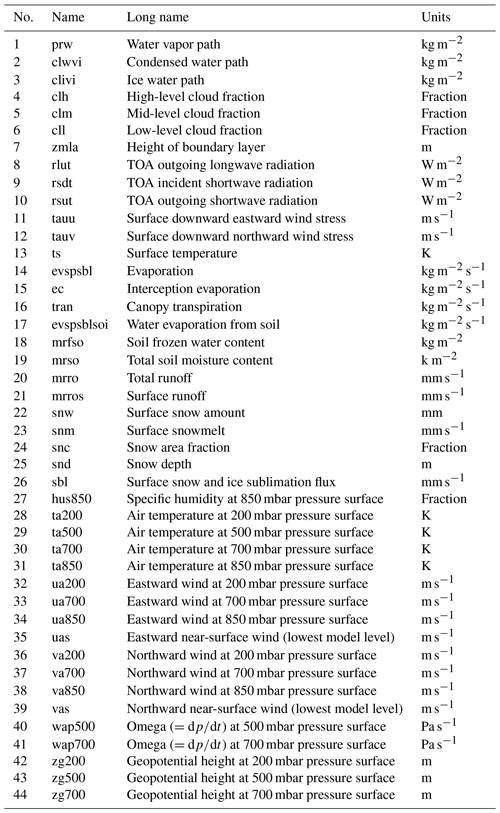

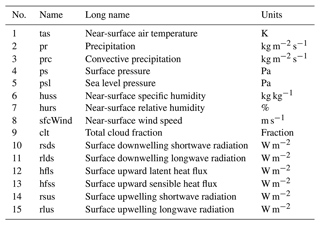

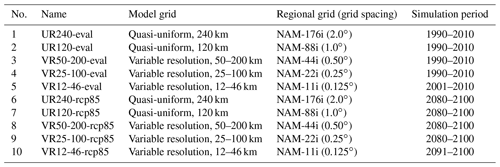

Table 2. List of simulations. The simulation period does not include 1–2 spin-up years. Regional grids are used for post-processed data and defined in NA-CORDEX (except for NAM-88i and NAM-176i, which are defined in a similar manner to the other NA-CORDEX grids).

As the default parameters in the CAM5.4 physics are tuned for the prognostic MAM4 model, we retuned CAM5.4 with the prescribed aerosol and the CAM default FV dynamical core on its nominal 1° global grid. No attempt has been made to tune model parameters differently for the MPAS dynamical core or at each resolution. While we are aware that resolution-dependent tuning and/or scale-aware physics schemes are necessary to fully take advantage of increased resolution (Bacmeister et al., 2014; Xie et al., 2018), tuning each resolution for both global and regional climate requires extensive effort (e.g., Hourdin et al., 2017) and is left for future work. We also note that resolution-dependent tuning is not usually done for the limited-area models that participated in NA-CORDEX and HyperFACETS nor in other coordinated projects that cover multiple model resolutions (e.g., Haarsma et al., 2016). The following parameters, however, are changed for each resolution: time step lengths, numerical diffusion coefficients, and the convective timescale used in the Zhang–McFarlane (ZM) deep-convection scheme (Table 3). In VR simulations, the dynamics time step is constrained by the smallest grid spacing in the refined region (Δx). The dynamics time steps are initially set based on Δx and further adjusted to avoid numerical instabilities that tend to occur within the stratospheric jet over the Andes. The physics time step is scaled from the default 1800 s for grid spacing using the same ratio as grid spacing changes. The convection timescale is then adjusted to scale with the physics time step in order to reduce sensitivities to horizontal resolution and time step (Mishra and Srinivasan, 2010; Williamson, 2013; Gross et al., 2018).
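The scaling rules for the physics time step and the convective timescale can be sketched as follows. This is only an illustration of the proportional scaling described in the text and does not reproduce the values in Table 3. The reference grid spacing `REF_DX_KM` and the reference convective timescale `REF_TAU_S` are placeholder assumptions. Only the 1800 s default physics time step comes from the text.

```python
REF_PHYS_DT_S = 1800.0  # default physics time step (from the text)
REF_DX_KM = 120.0       # hypothetical grid spacing paired with the default step
REF_TAU_S = 3600.0      # hypothetical default ZM convective timescale


def physics_time_step(dx_km, ref_dx_km=REF_DX_KM, ref_dt_s=REF_PHYS_DT_S):
    """Scale the physics time step by the same ratio as the grid spacing."""
    if dx_km <= 0.0:
        raise ValueError("grid spacing must be positive")
    return ref_dt_s * dx_km / ref_dx_km


def convective_timescale(phys_dt_s, ref_tau_s=REF_TAU_S, ref_dt_s=REF_PHYS_DT_S):
    """Scale the ZM convective timescale in proportion to the physics time step."""
    return ref_tau_s * phys_dt_s / ref_dt_s


if __name__ == "__main__":
    # For a VR mesh, the finest spacing is used here (an assumption)
    for name, dx in [("UR120", 120.0), ("VR25-100", 25.0), ("VR12-46", 12.0)]:
        dt = physics_time_step(dx)
        print(name, dt, convective_timescale(dt))
```

Keeping the ratio of convective timescale to physics time step fixed is the design choice that the cited studies motivate; the actual values used in the simulations were further adjusted and are listed in Table 3.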

3.2 Model configurations

For all of our simulations, we use a predefined CESM component set “FAMIPC5” that automatically configures CESM and its input data (e.g., trace gas concentrations) following the protocol of the Atmosphere Model Intercomparison Project (AMIP; Gates, 1992). In this configuration, the atmosphere and land models are active, whereas the sea surface temperature (SST) and sea-ice cover fraction (SIC) are prescribed. The River Transfer Model (RTM) is also active to collect terrestrial runoff into streamflow (Oleson et al., 2010), but it serves only a diagnostic purpose because the ocean model is not active. The so-called “data ocean” model reads, interpolates in time and space, and passes the input SST to the CESM coupler, which calculates fluxes between the atmosphere and ocean (CESM Software Engineering Group, 2014). The Community Ice Code version 4 (CICE4) is run as a partially prognostic model by reading prescribed sea-ice coverage and atmospheric forcing from the coupler to calculate ice–ocean and ice–atmosphere fluxes (Hunke and Lipscomb, 2010).

The land component is the Community Land Model version 4 (CLM4; Lawrence et al., 2011), which simulates vertical exchanges of energy, water, and tracers from the subsurface soil to the atmospheric surface layer. CLM4 takes a hierarchy-tiling approach to represent unresolved surface heterogeneities, distinguishing physical characteristics among different surface land covers (e.g., vegetated, wetland, lake, and urban), soil texture, and vegetation types (Oleson et al., 2010). While CLM4 is able to simulate the carbon and nitrogen cycles and transient land cover types, these biogeochemical functionalities are turned off. Instead, our simulations use a prescribed vegetation state (leaf area index, stem area index, fractional cover, and vegetation height) that roughly represents the conditions around the year 2000 based on remotely sensed products (Lawrence et al., 2011). The land cover types are also prescribed as the conditions around the year 2000 and are fixed throughout the simulations in both the eval and rcp85 experiments. These land surface settings are again consistent with the models that participated in NA-CORDEX. Note that the spatial resolution of the original data to derive CLM's land surface characteristics varies from 1 km to 1.0°, with 0.5° being considered as the base resolution (Oleson et al., 2010). These input data are available from the CESM data repository (CESM Software Engineering Group, 2014).

The process coupling in CESM has already been illustrated in the previous section (Sect. 2.3, Fig. 2). In our experiment, the CLM4 land model, data ocean, and CICE4 sea-ice model are configured to run on the MPAS horizontal grid. This way, the state and flux data between different model components do not need to be horizontally interpolated during the model integration. The RTM model in a diagnostic mode runs on its own 0.5° grid. The data ocean and RTM communicate with the coupler once and eight times per day, respectively, while CAM, CLM4, and CICE4 run and communicate through the coupler at the same time step.

3.3 Model experiments and input data

The experiment is composed of decadal simulations for the present day and the end of the 21st century under the Representative Concentration Pathway (RCP) 8.5, featuring a business-as-usual scenario leading to a radiative forcing of 8.5 W m−2 by the end of this century. The two simulations are named following the CORDEX project protocol: “eval” denotes the historical simulations using reanalysis data for boundary conditions for its principal role of model evaluation against observations; “rcp85” denotes the future simulations in which the external forcings follow the RCP8.5 scenario and the ocean and sea-ice boundary conditions are prescribed by adding the global climate model (GCM)-simulated climate change signals to the historical observations, the so-called pseudo-global-warming experiment. We selected the MPI-ESM-LR model from the eight GCMs considered in NA-CORDEX (McGinnis and Mearns, 2021) based on its good performance with respect to the warm-season precipitation over the western and central US (Chang et al., 2015; Sakaguchi et al., 2021).

All of the input data required to reproduce our simulations are publicly available (see the “Code and data availability” section). The SST and SIC for the eval run are taken from the ERA-Interim reanalysis (Dee et al., 2011). The 6 h ERA-Interim SST and SIC data are averaged to daily values and provided to the model as input; they are then bilinearly interpolated to the MPAS grids by the CESM coupler during model integration. Other model input data include surface topography, initial conditions, and remapping weights between different input data and model grids (Appendix A). All of the surface-related input data are remapped to each MPAS grid prior to the simulations following the CESM1.2 and CLM4 user guide (CESM Software Engineering Group, 2014; Kluzek, 2010). A set of high-level scripts is now available to help prepare input data for the FAMIPC5 and other similar CESM experiments (Zarzycki, 2018). Topography input is generated by the stand-alone MPAS-Atmosphere code (init_atmosphere; Duda et al., 2015), which uses the GTOPO global 30s topography data (Gesch and Larson, 1996) as the input. The sub-grid topography information required by the gravity wave drag and turbulent mountain stress parameterizations in CAM5.4 is produced using the NCAR_Topo tool by Lauritzen et al. (2015).

As stated above, the future simulation is conducted using the pseudo-global-warming approach (e.g., Haarsma et al., 2016) based on the climate change signal simulated by the MPI-ESM-LR model from the CMIP5 archive. Specifically, annual cycles of the daily climatological SST and SIC are obtained from the historical and RCP8.5 simulations of the MPI-ESM-LR model (ensemble member id r1i1p1), and differences between the two periods are calculated for each day of the year and each grid point. This daily climatological difference (ΔSST and ΔSIC) is then added to the SST and SIC from the ERA-Interim data and is prescribed to the model. Other external forcings of solar irradiance, greenhouse gas, ozone, and other tracer gas concentrations are the same as the CESM1.2 RCP8.5 simulation conducted for CMIP5, except for the prescribed aerosol concentrations and land cover characteristics being kept the same as the eval simulation.
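The construction of the future ocean forcing described above can be sketched as follows. This is a minimal illustration, not the processing code used for the dataset: the function name, the array layout, and the optional clipping step (e.g., keeping SIC within physical bounds) are assumptions, and the GCM climatologies are assumed to have already been interpolated to the same points as the reanalysis data.

```python
import numpy as np

def pseudo_warming_forcing(obs, day_of_year, hist_clim, fut_clim,
                           lower=None, upper=None):
    """Add a GCM day-of-year climatological change to an observed daily field.

    obs:         (ntime, npoints) observed daily field (e.g., reanalysis SST)
    day_of_year: (ntime,) integer index (0-based) into the climatologies
    hist_clim, fut_clim: (ndays, npoints) GCM daily climatologies for the
                 historical and future periods, on the same points as obs
    lower, upper: optional physical bounds (assumption, not from the paper)
    """
    delta = fut_clim - hist_clim        # change for each day of year and point
    forced = obs + delta[day_of_year]   # add the matching day's change
    if lower is not None or upper is not None:
        forced = np.clip(forced, lower, upper)
    return forced
```

For a fractional field such as SIC, one might call the function with `lower=0.0, upper=1.0`; whether and how such bounds were applied in the actual simulations is not stated here.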

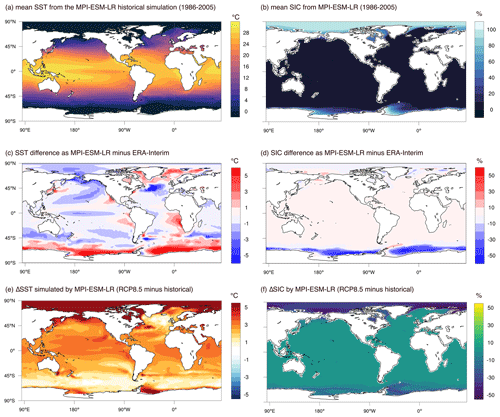

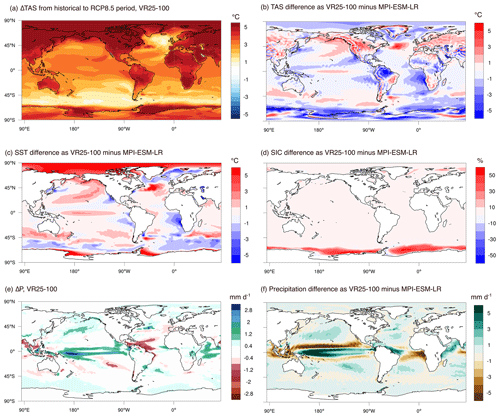

The annual average ΔSST and ΔSIC are shown in Fig. 4e and f, respectively. While the SST and SIC distributions in the present-day period are reasonably simulated by MPI-ESM-LR, regional biases exist over the Southern Ocean, North Atlantic, and off the west coasts of North and South America and South Africa (Fig. 4a–d). Because ΔSST and ΔSIC are added onto the climatology from ERA-Interim, the future SST and SIC forcings given to CAM–MPAS are different from those in the MPI-ESM-LR model over the biased regions. In Sect. 5.2.2 and Appendix E, we briefly compare the CAM–MPAS historical climate and its response to the external forcings with those of the MPI-ESM-LR model. It is shown that, while the base state climate differs between the two models, their changes into the future are rather similar under the same ΔSST and ΔSIC. Also of note is that the SST or near-surface air temperature (TAS) biases and their changes in the MPI-ESM-LR simulations differ from those of fully coupled CESM simulations with CAM5 or CAM6 (Meehl et al., 2012, 2013; Danabasoglu et al., 2020). Specifically, our CAM–MPAS downscaling data describe the response of the atmosphere to the ocean conditions derived from the external data (as is the case for regional model simulations in NA-CORDEX), which may be very different from the climate evolution simulated by CAM–MPAS when coupled to an active ocean model. Because CAM–MPAS and other VR atmosphere models are typically a part of global coupled climate models, it is possible to run a fully coupled VR simulation, which would provide climate change signals that have co-evolved with the same atmosphere model.

Figure 4. Climatological mean sea surface temperature (SST) and the sea-ice cover fraction (SIC) from the MPI-ESM-LR model: (a) annual mean SST, (b) annual mean SIC, (c) SST bias against ERA-Interim, (d) SIC bias, (e) SST change from the historical to RCP8.5 period, and (f) SIC change over the same time periods. The historical and RCP8.5 averages are calculated over the 1986–2005 and 2080–2099 periods, respectively.

Limited-area models participating in NA-CORDEX include another historical simulation called “hist”, in which the lateral and bottom boundary conditions are provided by the driving global models. Also, the rcp85 simulations in NA-CORDEX use GCM output directly for boundary conditions (“direct downscaling”), in contrast to adding the climate change signals to the observed present-day boundary conditions. We do not conduct the hist experiment with CAM–MPAS, as our principal goal is to assess the credibility of dynamically downscaled climate by the CAM–MPAS atmosphere model in comparison to observational and other downscaled data, which, at a minimum, requires (1) the eval run with the prescribed ocean boundary conditions from observations, isolating the CAM–MPAS model's bias without the influence of the GCM's SST and sea-ice biases, and (2) the model response to external forcings associated with global warming, which can be reasonably assessed by the pseudo-global-warming experiment (see the general agreement in the large-scale climate response between the CAM–MPAS and MPI-ESM-LR models in Sect. 5.2.2). An advantage of the global VR simulation in the pseudo-global-warming approach is that, unlike adding the mean atmospheric climate change signals to the lateral boundary conditions for regional models, a global VR simulation does include variability and high-order atmospheric responses to the warming. Because our dataset does not have the hist experiment, we will use the terms “eval”, “historical”, and “present-day” interchangeably to refer to the eval simulations.

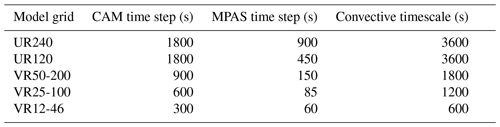

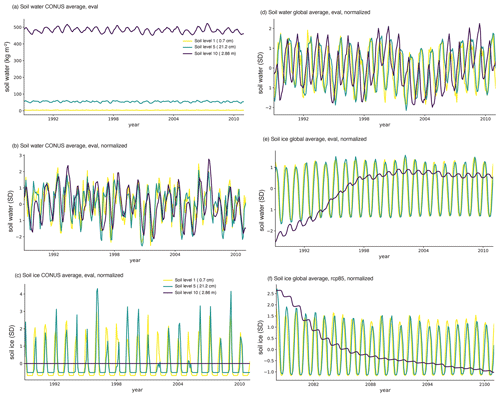

The atmospheric initial condition for the eval experiment is taken from the ERA-Interim data on 1 January 1989 at 00:00 UTC for all simulations except for the VR12-46 simulation, which used data from 1 January 2000 at 00:00 UTC. The land initial condition is taken from the output valid for 1 January 2000 at 00:00 UTC from a 0.5∘ fully coupled CCSM4 simulation for the historical period (CESM, 2016). The CLM4 land state on the 0.5∘ grid is remapped to the MPAS grids following Kluzek (2010). Starting from these initial conditions, the model is run for 1 year to spin up the eval simulations. For the future rcp85 experiments, the initial condition for each resolution is taken from the 1 January 2011 state of the corresponding eval simulation, followed by 2 years of spin-up simulations. We found that these spin-up lengths are sufficient for the CONUS domain, but they are not necessarily adequate in the deep soil layer for the global domain, particularly at high latitudes (as will be discussed in Sect. 5.2).

4.1 Post-processing

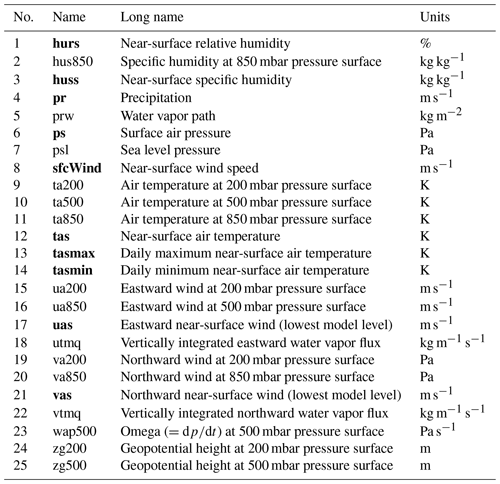

To facilitate comparison with other regional models in the NA-CORDEX model archive, the model output on MPAS's unstructured mesh is remapped to a standard latitude–longitude regional grid defined by the NA-CORDEX project (the so-called NAM grid; Fig. 3 and Table 2). Variable names and units used in CAM/CESM are converted to those of NetCDF Climate and Forecast (CF) Metadata Conventions (version 1.6) that are used by NA-CORDEX. Three-dimensional atmospheric variables defined on the terrain-following model coordinate are vertically interpolated to the NA-CORDEX-requested pressure levels (200, 500, and 850 hPa). The following describes how such post-processing was performed.

We mainly used the Earth System Modeling Framework (ESMF) library (Balaji et al., 2018) through the NCAR Command Language (NCL) (UCAR/NCAR/CISL/TDD, 2017a) for regridding MPAS output. The ESMF library provides several remapping methods, among which the first-order conserve method is used for extensive variables and fluxes, and the patch recovery method is used for all other variables. For variables required at a specified pressure level, we first linearly interpolate from the model height level to the pressure level, followed by horizontal remapping. The order of the vertical vs. horizontal interpolation is not expected to be important for the accuracy of subsequent analyses (Trenberth, 1995). Note that the three pressure levels available in the post-processed archive are not sufficient to close budget equations of vertically integrated quantities such as moisture and energy (Bryce Harrop, unpublished result). For moisture budget analyses, data users are encouraged to use the vertically integrated moisture fluxes and water vapor path available in the daily variables (Appendix C2). For other variables, it is possible to retrieve them at more pressure levels from the monthly or 6-hourly raw model output (Appendix B).
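The vertical step of this procedure can be illustrated with a short sketch. It is not the NCL code used for the archive: the function name and array layout are hypothetical, and interpolating linearly in pressure (rather than, for example, in log-pressure) is an assumption made here for simplicity. Target levels outside a column's pressure range, including those below the surface, are returned as missing values.

```python
import numpy as np

def to_pressure_levels(var, p_model, p_targets):
    """Linearly interpolate model-level data to fixed pressure levels.

    var, p_model: (nlev, ncol) variable and pressure on model levels
    p_targets:    1D sequence of target pressures (same units as p_model)
    Returns an (ntarget, ncol) array with NaN where a target level lies
    outside the column's pressure range (e.g., below the surface).
    """
    p_targets = np.asarray(p_targets, dtype=float)
    out = np.full((p_targets.size, var.shape[1]), np.nan)
    for c in range(var.shape[1]):
        order = np.argsort(p_model[:, c])      # np.interp needs increasing x
        p, v = p_model[order, c], var[order, c]
        out[:, c] = np.interp(p_targets, p, v, left=np.nan, right=np.nan)
    return out
```

The NaN values produced where topography crosses a target level are the time-varying missing values that require special handling during the subsequent horizontal remapping.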

Missing values exist in some variables in the raw model output on the MPAS grids, e.g., soil moisture in the grid points where 100 % of the grid point area is covered by ocean, lake, or glacier. The locations of such missing values do not change with time, and the corresponding grid points are masked when generating regridding weights. Time-varying missing values arise during vertical interpolation to a pressure level over the areas where surface topography crosses the target pressure level. We followed the guidance provided by the NCL website to regrid such time-varying missing values (UCAR/NCAR/CISL/TDD, 2017b). Specifically, we first remap a binary field defined on the source MPAS grid: all values are one where the vertically interpolated pressure-level variable is missing, and they are zero everywhere else. By remapping such a field from the original MPAS grid to the destination grid, we can identify, using nonzero values, which destination grid points are affected by the missing values on the original grid, and the remapped pressure-level variables in these destination grid boxes are set missing. This rather cumbersome procedure can be replaced by a remapping utility recently enhanced in the NetCDF Operator (NCO; Zender, 2017).
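The masking procedure for time-varying missing values can be sketched as below. This is an illustrative reimplementation under stated assumptions, not the NCL workflow itself: the remapping is represented by a dense weight matrix (real ESMF weights are sparse), missing values are encoded as NaN, and the function name is hypothetical.

```python
import numpy as np

def remap_with_missing(weights, field):
    """Remap a field and flag destination points touched by missing data.

    weights: (ndst, nsrc) remapping weights (dense here for simplicity)
    field:   (nsrc,) source values, with NaN marking missing points
    """
    missing = np.isnan(field)
    # Remap a binary field: one where the source is missing, zero elsewhere.
    affected = (weights @ missing.astype(float)) > 0.0
    # Remap the data with missing values temporarily filled with zero.
    remapped = weights @ np.where(missing, 0.0, field)
    # Any destination point that received a missing source value is set missing.
    remapped[affected] = np.nan
    return remapped
```

Setting a destination point to missing whenever any contributing source point is missing is the conservative choice described in the text; a remapping tool that renormalizes the weights over valid source points would instead retain partially covered destination cells.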

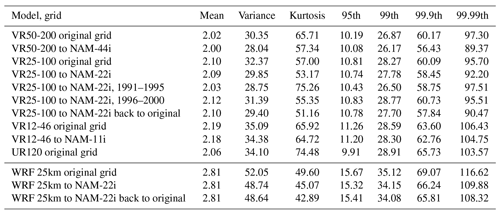

Table 4. Mean (mm d−1), variance (mm2 d−2), kurtosis, and selected percentiles (mm d−1) of daily precipitation sampled from the central-eastern United States (30–47∘ N, 85–105∘ W) on the original and remapped grids with grid spacings similar to the original grid. “XXX to YYY” in the row header refers to results on the remapped grid, e.g., “VR25-100 to NAM-22i” means that the statistics are calculated on the NAM-22i grid to which precipitation fields are remapped from the original MPAS grid. The seventh and last rows show the results after remapping twice, whereby precipitation fields are remapped from the VR25-100 (or WRF 25 km) grid to the NAM-22i grid and then remapped back to the original VR25-100 (or WRF 25 km) grid. The first-order conserve remapping method is used for all of the results. The analysis domain covered in the WRF output on the curvilinear grid is slightly smaller than the domain used for MPAS, hence the disagreement in statistics between these two model groups. The statistics are based on the years 2001–2005, except for the fifth and sixth rows where data from the 1991–1995 and 1996–2000 periods are used, respectively.

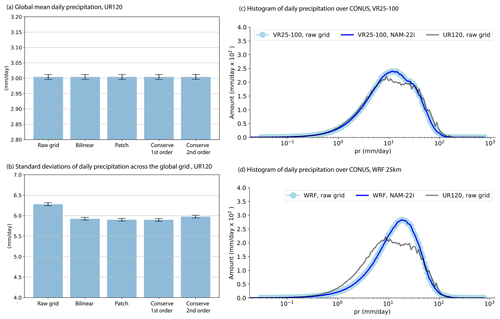

Figure 5. Comparison of regridded daily precipitation using four different methods: (a) global annual average of precipitation in UR120 and (b) standard deviations of precipitation across the global grid in UR120; (c) daily rain rate amount distributions over CONUS calculated on the original MPAS grid in VR25-100 (dark blue line), on the remapped (first-order conserve) latitude–longitude NAM22 grid in VR25-100 (light blue circles), and on the original MPAS grid in UR120 (gray line); and (d) daily rain rate amount distributions over CONUS calculated on the original WRF grid in the NA-CORDEX WRF 25 km simulation (dark blue), on the remapped NAM22 grid in the WRF 25 km simulation, and the UR120 histogram, as in panel (c), for comparison. The statistics are based on a 10-year period from 1990 to 1999, and the error bars in panels (a) and (b) show the 95 % confidence interval based on the year-to-year variance. The distributions of rain rate amount are calculated following Pendergrass and Hartmann (2014), using the minimum rain rate of 0.029 mm d−1 and a 7 % spacing.

NA-CORDEX documents minor artifacts due to interpolation by the patch recovery method (e.g., small negative values for nonnegative variables such as relative humidity) (Mearns et al., 2017). The influence of horizontal regridding, or interpolation, on the statistics has also been noted by previous studies (Chen and Knutson, 2008; Diaconescu et al., 2015). To understand the effect of regridding in our post-processing, Fig. 5 compares selected statistics calculated on the original and remapped daily precipitation using different remapping methods, bilinear, patch recovery, first-order conserve, and second-order conserve, available from the ESMF library (Balaji et al., 2018). The regridding effect on the (spatial) mean is negligibly small using any of the regridding methods. As shown in Fig. 5a, the global annual mean precipitation (3.004 mm d−1) is nearly identical (to the accuracy of 10−3 mm d−1) for the original and the regular 1∘ latitude–longitude grid after remapping.

The variance loss due to remapping is typically ≈6 %–8 % for daily precipitation. The magnitude of variance loss depends on which variable is remapped – a variable with a smoother spatial structure than precipitation (e.g., atmospheric temperature) is less affected by regridding. At the global scale, ≈6 %–8 % loss of variance can be larger than year-to-year sampling variability, as illustrated in Fig. 5b. The second-order conservation method retains the spatial variance slightly better than the other methods. At regional scales, sampling uncertainty from different time periods (each sample is 5 years long here) can be as large as the smoothing effect. This is illustrated in Table 4 (from the third to sixth rows) based on the statistics of daily precipitation in the CONUS sub-domain east of the Rockies, calculated on the original VR25-100 grid and conservatively remapped to the NAM-22i grid. We avoid the Rockies and other mountainous regions where year-to-year variability is so large that our sample is not long enough to reliably estimate spatial variances. The third and fourth rows present the statistics on these two grids from the years 2001 to 2005, while the fifth and sixth rows are from the years 1991 to 1995 and 1996 to 2000, respectively, on the NAM-22i grid. The 5-year average of the spatial variance is 32.37 mm2 d−2 on the original grid for 2001–2005, which is reduced to 29.85 mm2 d−2 after regridding. The spatial variance from the other 5-year periods can differ from the variance from 2001 to 2005 by as much as the regridding loss. Similar magnitudes of smoothing effect and sampling uncertainty are also found in kurtosis and extreme values represented by the 95th to 99.99th percentiles. These differences are not visible on the daily precipitation histograms calculated on the original VR25-100 grid, the remapped NAM-22i grid, and the UR120 output on its raw MPAS grid for the same CONUS sub-domain (Fig. 5c). The two histograms of VR25-100 are visually identical, and the difference from the UR120 precipitation is clearly distinguishable.
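As a quick check of the numbers quoted above, the fractional variance loss can be computed directly from the two Table 4 values. The sketch below also shows one plausible way to obtain a time-averaged spatial variance from a daily field; the helper names are hypothetical, and the unweighted variance (no area weighting) is a simplifying assumption rather than the method used for Table 4.

```python
import numpy as np

def mean_spatial_variance(pr):
    """Time average of the spatial variance of a (ntime, npoints) daily field.

    Assumption: all points are weighted equally (no area weighting).
    """
    return np.var(pr, axis=1).mean()

def fractional_loss(original, remapped):
    """Fraction of a statistic lost after remapping."""
    return (original - remapped) / original

# Values quoted in the text for VR25-100, 2001-2005.
loss = fractional_loss(32.37, 29.85)
print(f"variance loss: {100 * loss:.1f} %")
```

With these two values the loss is just under 8 %, at the upper end of the ≈6 %–8 % range stated for daily precipitation.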

Two other points notable in Table 4 are as follows: (1) the smoothing effect becomes weaker with finer grid resolutions based on the three VR resolutions and (2) successive remapping back from the regional NAM-22i to the original grid (the seventh row) leads to a further loss of the variance and other moments but to a lesser degree compared with the first remapping. Similar smoothing effects from the first and second remapping are observed in the output from the WRF model on a 25 km grid from the NA-CORDEX archive. Despite the fact that WRF uses a regular latitude–longitude grid that is similar to the NAM-22i grid, regridding effects on the selected statistics resemble those on the CAM–MPAS output. For example, regridding VR25-100 output loses ≈8 % of the daily precipitation variance by the first remapping, while the 25 km WRF simulation loses 6 %. The histograms of daily precipitation in the WRF 25 km simulation are shown in Fig. 5d, again confirming that the histograms are not visually affected by regridding. Given such a priori knowledge of the regridding effect and sampling uncertainty at regional scales, we do not expect that the remapping effect would seriously affect the statistical inference of regional climate metrics.

4.2 Data repositories

Post-processed monthly and daily variables in the “essential” and “high priority” list of the NA-CORDEX archive (Mearns et al., 2017) are accessible from the Pacific Northwest National Laboratory DataHub. All of the variables and temporal frequencies are available from the NERSC High Performance Storage System (HPSS), made accessible through web browsers by the NERSC Science Gateway Service (see the “Code and data availability” section). All variables requested from the experiment protocol are two-dimensional at a single level. Appendix C lists the post-processed variables.

File names, attributes, and coordinates of the reported variables and their file specification follow the CORDEX archive design (Christensen et al., 2014) and NA-CORDEX data description (Mearns et al., 2017). The file name is composed of the following elements: [variable name].[scenario].[driver].[model name].[frequency].[grid].[bias correction].[start month]-[end month].[version].nc. In the CAM–MPAS dataset, the scenario is either eval for the historical period or rcp85 for the pseudo-warming future simulation. The driver is “ERA-Int” for the historical period and “ERA-Int-MPI-ESM-LR” for the rcp85 case. Post-processing of the current CAM–MPAS simulations does not involve any bias corrections; hence, it is labeled as “raw”. The major version refers to different production simulations, and the minor version refers to changes/corrections in the post-processing stage. The publicly available CAM–MPAS output is either “v3” or “v3.1”; the major version is 3 because it was necessary to rerun simulations twice due to major changes in model configurations, and the minor revision involves a different treatment of missing values arising from vertical interpolation to a pressure level (see Sect. 4.1). With the other straightforward file name elements, an example file name for daily precipitation data in the historical run of CAM–MPAS VR50-200 reads as follows: pr.eval.ERA-Int.cam54-mpas4.day.NAM-44i.raw.198901-201012.v3.nc. In contrast, an example file name for daily precipitation data in the future pseudo-warming run reads as follows: pr.rcp85.ERA-Int-MPI-ESM-LR.cam54-mpas4.day.NAM-44i.raw.207901-210012.v3.nc.
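For users who script access to the archive, the naming convention above can be expressed as a pair of small helpers. The function and key names are hypothetical and are not part of any NA-CORDEX tool; the parser assumes that only the version element may contain a dot (as in “v3.1”).

```python
FIELDS = ["variable", "scenario", "driver", "model", "frequency",
          "grid", "bias_correction", "period", "version"]

def build_file_name(variable, scenario, driver, model, frequency,
                    grid, bias_correction, start_month, end_month, version):
    """Join the file name elements in the order given in the text."""
    period = f"{start_month}-{end_month}"
    parts = [variable, scenario, driver, model, frequency,
             grid, bias_correction, period, version]
    return ".".join(parts) + ".nc"

def parse_file_name(name):
    """Split a file name into its elements (version may contain a dot)."""
    if not name.endswith(".nc"):
        raise ValueError("expected a .nc file name")
    # Limit the split so that a version such as "v3.1" stays in one piece.
    parts = name[:-3].split(".", len(FIELDS) - 1)
    if len(parts) != len(FIELDS):
        raise ValueError("unexpected number of file name elements")
    return dict(zip(FIELDS, parts))

name = build_file_name("pr", "eval", "ERA-Int", "cam54-mpas4", "day",
                       "NAM-44i", "raw", "198901", "201012", "v3")
print(name)
```

The call above reproduces the historical example file name given in the text.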

Raw CAM–MPAS output on the global MPAS grid (i.e., not remapped to a regional latitude–longitude grid) is also available from the NERSC HPSS space. Appendix B provides more information about the MPAS unstructured mesh, links to the archive directory, and other resources to help analyze the raw MPAS data. The NERSC data archive also contains example scripts and variables necessary to process model variables on the MPAS grid (e.g., latitude and longitude arrays).

5.1 Computational aspects

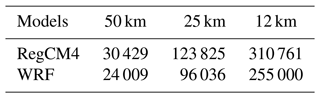

In this section, we discuss some computational aspects of our simulations, as one of the motivations to use a global VR framework is its computational advantage compared with a global high-resolution simulation. On the other hand, global VR simulations are expected to be more expensive than limited-area model simulations if the cost for the host GCM simulations that provide boundary conditions is not considered. For example, the VR grids used in this study have 1.1–2.6 times more grid columns than the limited-area grids used by the RegCM4 and WRF models in the NA-CORDEX and HyperFACETS archives (Tables 5, F2). Here, we do not compare the simulation cost of the CAM–MPAS VR configurations against regional models but instead focus on how the cost of CAM–MPAS simulations differs between the UR and VR grids and between the lower and higher resolutions.

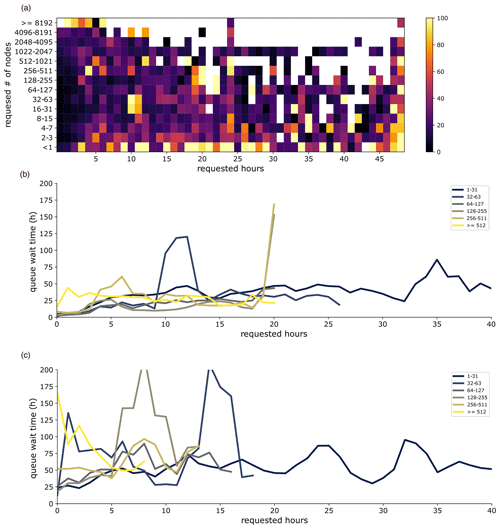

All of our simulations were run at NERSC. The following result is obtained from the production simulations and not from a systematic scaling analysis of the CAM–MPAS code or NERSC systems. The system configurations (e.g., number of nodes) of our production simulations are based not only on good throughput but also on simulation cost and expected queue wait time (Fig. D1), which often accounts for the majority of the total production time (e.g., the average queue wait time for VR25-100 is approximately 3 times the actual computing time). All simulations used only the distributed-memory Message Passing Interface (MPI) parallelism, i.e., shared-memory parallelism (OpenMP) is not used. The main computing system at NERSC switched from Edison to Cori when the production simulations of the CAM–MPAS model were starting (NERSC, 2021). The newer system Cori is partitioned into two subsystems, Cori Haswell (HW) and Cori Knights Landing (KNL). As discussed below, the CESM–CAM–MPAS code showed large differences in performance on KNL and other systems, with a significant impact on our production cost. Interested readers are referred to Appendix D for further details of our runtime configurations and the characteristics of the NERSC systems.

Three simulations that are not part of the CAM–MPAS downscaling dataset are also included in the following as references: (1) the default FV dynamical core on the nominal 1∘ grid (FV 1∘), (2) the same model configuration as UR120 but using the newer version of the CAM–MPAS model that will be released as an official option of CESM2 (UR120-new), and (3) CAM–MPAS on a quasi-uniform 30 km grid (UR30). These three simulations were run for other projects but with a similar set of file output (monthly, daily, 6 h, 3 h, and hourly output) for more than 5 years. All simulations use the same CAM5.4 physics with prescribed aerosol.

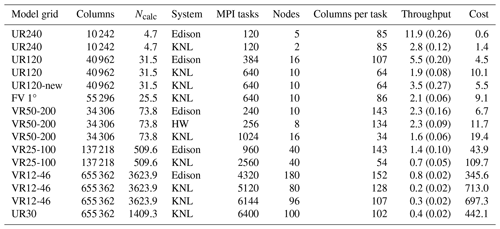

Table 5. Simulation throughput and cost. The simulation cost is based on the so-called “NERSC hour” (which is calculated as the number of nodes × number of hours × machine-dependent charge factor × queue priority factor), assuming the “regular” queue, and is shown in units of 10³ NERSC hours per simulated year (NERSC h sim. yr−1). Throughput (sim. yr d−1) is an average of at least 60 jobs, with the standard deviation shown in parentheses. Ncalc is the number of time steps per day over all grid boxes. Most of the samples are production runs, except for UR120-new, FV 1∘, and UR30, which are not part of the dataset described in this paper but are shown as references.

Figure 6a visualizes the simulation cost vs. total MPI tasks used, as often used in cost-scaling studies. Table 5 lists the numerical values used in the figure. Although scatter in the data from different computing systems is notable, there is a clear trend to which we can fit a curve. The blue line represents a power function (y = ax^b) fitted to the simulation cost in the log–log space. The exponent b (the slope of a straight line on the log–log plot) is 1.54 with a 95 % confidence interval of 0.50, exhibiting a weak but nonlinear increase. The nonlinear increase is expected because linearly increasing cost is only possible for an idealized case, as also shown in the figure. The green line represents an ideal situation in which the parallel part of the code speeds up linearly with additional resources (an ideal weak scaling; Eq. 5.14 in Hager and Wellein, 2011), whose cost thus increases linearly with the number of MPI ranks (slope of 1). The orange line of a constant cost applies only to the case where the size of the problem (e.g., number of grid columns) stays the same so that using more resources shortens the simulation time. This is an ideal “strong scaling” and is not applicable to the cost scaling for different resolutions over a fixed global domain. It is obvious from this comparison that larger resource use for higher resolutions on a fixed domain size, such as the global domain, always increases the computing cost nonlinearly.
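A power function of this form can be fitted as a straight line in log–log space. The sketch below shows one common way to do so with an ordinary least-squares fit; the function name is hypothetical, the input values are made up for illustration and are not the Table 5 data, and the confidence interval reported in the text is not reproduced here.

```python
import numpy as np

def fit_power_law(x, y):
    """Least-squares fit of y = a * x**b as a line in log-log space.

    Returns (a, b), where b is the slope of log10(y) against log10(x).
    """
    slope, intercept = np.polyfit(np.log10(x), np.log10(y), 1)
    return 10.0 ** intercept, slope

# Hypothetical MPI task counts and costs, for illustration only.
tasks = np.array([128.0, 512.0, 2048.0, 8192.0])
cost = 0.05 * tasks ** 1.5
a, b = fit_power_law(tasks, cost)
```

A slope b of 1 corresponds to the ideal weak-scaling line, and a slope of 0 to the constant-cost, ideal strong-scaling line.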

Figure 6. Graphs showing the relationship between (a) the simulation cost in terms of NERSC hours per simulated year (NERSC h sim. yr−1) and number of MPI tasks, (b) simulation cost and Ncalc (number of calculations equals the physics and dynamics time steps per simulated day across the global domain), and (c) Ncalc and the number of grid columns. The parameters of the fitted lines (blue curves, linear in the log–log space) are shown in the legend. UR120-new refers to the UR120 simulation using the new CAM–MPAS code under development. In panel (c), we added data points for a variable-resolution 6–24 km mesh (VR6-24) as well as uniform resolution with 15 and 7.5 km grid cells (UR15 and UR7.5) by using their numbers of grid columns and scaling the model time step as described in Sect. 3.

There are several reasons for the nonlinear increase in the simulation cost against resources used, such as communication and load imbalance (Hager and Wellein, 2011; Heinzeller et al., 2016). For estimating the simulation cost of a given MPAS grid, we found that it is simpler to use the number of calculations (physics and dynamics time steps) per simulated day across all of the grid boxes in the global domain: Ncalc= (number of grid columns) × (number of vertical levels) × (number of time steps per day). Plotting simulation cost as a function of Ncalc (Fig. 6b), the fitted curve exhibits a slope of approximately 1. Looking at Ncalc as a function of the number of grid columns, it appears to be separated into two groups of VRs and URs, indicating the time step constraint from the high-resolution domains in VRs (Fig. 6c). The least-squares-fitted power functions have exponents of 1.45 for both VR meshes and UR meshes. This weak nonlinearity presumably comes from the dependence of time step length on grid spacing, which then becomes an additional implicit dependence on the numbers of grid columns.
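The definition of Ncalc translates directly into a few lines of code. In the sketch below, the function name and the single time step argument are simplifications (the text counts physics and dynamics time steps, which may differ), and the mesh sizes and time steps are hypothetical values chosen only to show the scaling.

```python
SECONDS_PER_DAY = 86400

def n_calc(n_columns, n_levels, dt_seconds):
    """Ncalc = grid columns x vertical levels x time steps per day."""
    steps_per_day = SECONDS_PER_DAY / dt_seconds
    return n_columns * n_levels * steps_per_day

# Hypothetical meshes: halving the grid spacing gives about 4 times more
# columns and, if the time step scales with grid spacing, half the time step.
coarse = n_calc(n_columns=1000, n_levels=30, dt_seconds=1800)
fine = n_calc(n_columns=4000, n_levels=30, dt_seconds=900)
ratio = fine / coarse
```

In this idealized case a fourfold increase in columns yields an eightfold increase in Ncalc, i.e., an exponent of 1.5, which is close to the fitted value of 1.45 and is consistent with the suggested role of the time step dependence on grid spacing.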

As a specific example of VR vs. UR comparison, we take VR25-100, UR30, and UR120, as the latter two URs have comparable grid spacings in the high- and low-resolution regions of the VR25-100 grid. We use Ncalc of the simulations conducted on KNL to gauge the computational advantage of the VR25-100 against UR30, a uniform high-resolution simulation, as well as the extra cost added by the regional refinement to a uniform low-resolution simulation, UR120. The actual values of Ncalc for these three resolutions are shown in the third column of Table 5 and suggest UR30 to be 48 times more expensive than UR120 and VR25-100 to be 16 times more costly than UR120. The actual simulation cost closely follows the Ncalc scaling; 1 simulation year of UR30 (480.0×10³ NERSC h sim. yr−1) is 48 times more expensive than that of UR120 (10.1×10³ NERSC h sim. yr−1). The actual cost of VR25-100 is just 11 times that of UR120, which is lower than that expected from Ncalc, possibly reflecting the error from using an empirical curve fitted to three different systems in the single KNL system. In this case, VR25-100 achieves a factor of 4 computational advantage compared with UR30 for obtaining a similarly high-resolution grid over CONUS.
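The ratios quoted in this comparison follow from simple arithmetic on the values given in the text, as the short sketch below shows. The variable names are hypothetical, and the VR25-100 cost is represented only through its stated ratio to UR120 because its absolute value is not quoted in this paragraph.

```python
# Costs on KNL quoted in the text, in NERSC hours per simulated year.
cost_ur30 = 480.0e3
cost_ur120 = 10.1e3

# Stated ratio of the VR25-100 cost to the UR120 cost.
vr25_100_over_ur120 = 11.0

ur30_over_ur120 = cost_ur30 / cost_ur120               # uniform high vs. low resolution
vr_advantage = ur30_over_ur120 / vr25_100_over_ur120   # UR30 cost relative to VR25-100
```

Rounded to the nearest integer, these give the factors of 48 and 4 stated above.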

A couple of other points are noted in Table 5 and Fig. 6. First, the computational cost of CAM–MPAS UR120 and the default dynamical core FV 1∘ is comparable (1.9 vs. 2.1 sim. yr d−1 for CAM–MPAS UR120 and CAM–FV 1∘, respectively). Second, the model throughput (cost) of VR12-46 is 0.2 sim. yr d−1, half (double) that of UR30, despite the fact that these two grids have the same number of columns and that the simulations are run with similar numbers of columns per MPI task. The main reason for the difference is likely the shorter time steps (about one-third) in VR12-46 than in UR30 due to the numerical constraint imposed by the smallest grid spacing in the high-resolution domain. Lastly, we get consistently lower throughput and higher cost on Cori KNL than on the other two systems. Our experiment and previous studies (Barnes et al., 2017; Dennis et al., 2019) suggest a few compounding reasons (Appendix D), such as inefficient memory management for some global arrays, poor vectorization, and less focus on shared-memory parallelism of the CAM5/MPASv4 source code, which are not aligned well with the wider-vector and many-core architecture of KNL. However, the shorter expected queue time on KNL than HW (Fig. D1) makes KNL our main system for production. The weaker performance of the experimental CAM–MPAS code on KNL leads to a higher computational cost than our initial estimate for VR12-46, limiting the length of the VR12-46 simulations to half that of the other simulations. More importantly, the code characteristics described above are not necessarily unique to the CAM5/MPASv4 codes but may be common in other global or regional climate models in which many lines of code are written by domain scientists with little attention to code optimization. Such climate models are not likely to be efficient on emerging, more energy-efficient HPC architectures that, like KNL, have wider vector units and more cores per node (and less memory per core) than previous systems. For example, two new systems being deployed to HPC centers in the United States – Perlmutter to NERSC (NERSC, 2022) and Derecho to the NCAR-Wyoming Supercomputing Center (NCAR Research Computing, 2022) – share such characteristics in their CPU nodes.

Fortunately, some of the computational problems with the CAM–MPAS model have been resolved through the MPAS-Atmosphere optimization, the ongoing effort to port the later version 6 of MPAS-Atmosphere to CESM2 (the SIMA project), and numerous other changes across the CESM source code from CESM1.5 to CESM2. Those updates lead to an almost 80 % speedup of the UR120 throughput, as can be seen from the UR120 and UR120-new simulations in Table 5. Some of the speedup comes from different compiler optimizations used for the two simulations, but the code development plays a major role in this performance improvement. The Cori system is retiring, but the computational advantage of the new code is expected to be applicable to other systems, including the new NERSC system Perlmutter. We expect that decadal simulations on the VR12-46 grid or even convection-permitting VR meshes will be feasible using the newer CAM–MPAS code or the SIMA atmospheric general circulation model with MPAS as its dynamical core option. Multi-season convection-permitting simulations have already been carried out with the new SIMA-MPAS model (Huang et al., 2022).

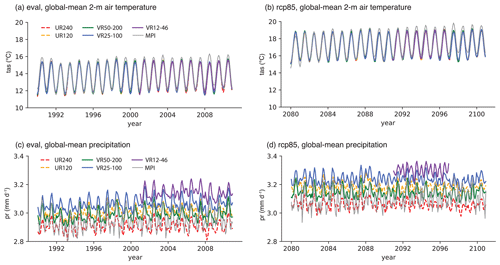

5.2 General characteristics of simulated climate

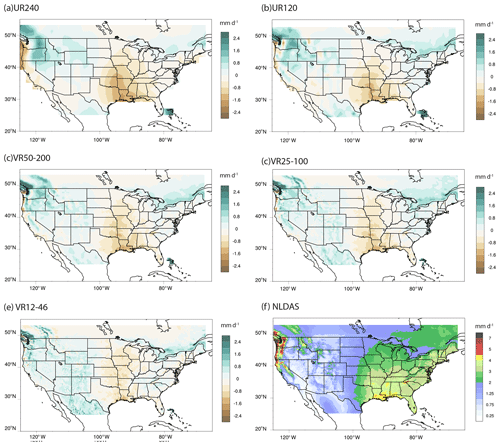

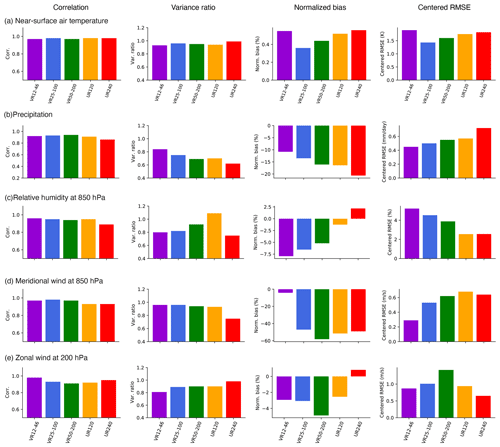

We briefly review selected aspects of the simulated climate. The focus here is on the climate statistics at the global scale and over the regions outside the VR high-resolution domain of North America. This is because, although the post-processed datasets cover a broad area encompassing the NA-CORDEX domain (Fig. 3a), the limited-area grid does not allow one to infer remote sources of large-scale forcings and their dependency on model resolution, which may be important for understanding the processes responsible for projected changes within the high-resolution domain. Appendix E presents additional figures and a table. For the downscaled regional climate, Appendix F provides a general overview of the model performance focusing on the CONUS region. The main finding of the regional assessment is that the performance metrics of precipitation improve with higher resolution, but the results are more mixed for other variables. Moreover, the resolution sensitivity of precipitation is weaker within the North American domain than in the global statistics shown below. A separate, systematic investigation of the regional climate in comparison with other limited-area models is being conducted (Sakaguchi et al., 2021) and will be reported elsewhere.

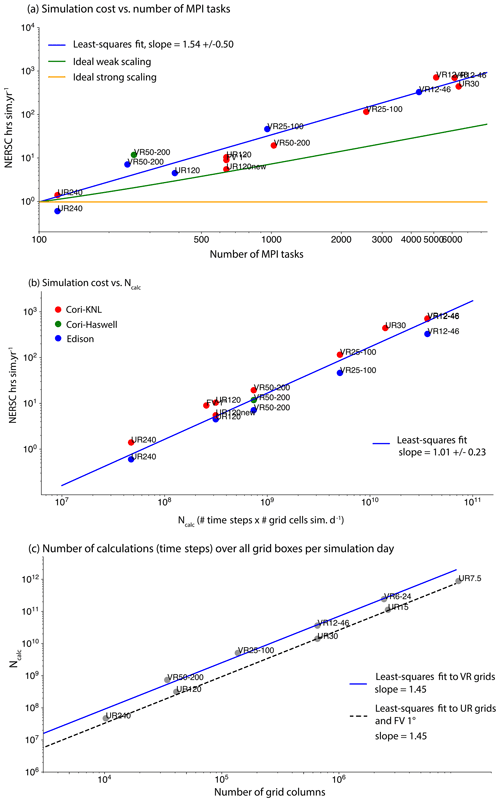

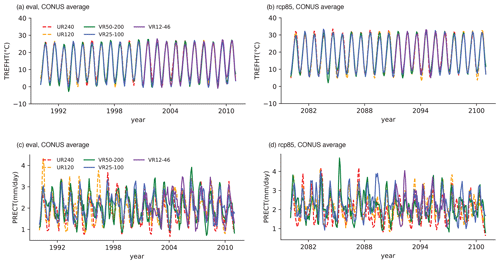

Figure 7. Time series of the monthly global mean (a) near-surface air temperature (TAS) in the present-day (eval) simulations, (b) TAS in the future (rcp85) simulations, (c) precipitation (PR) in the present-day (eval) simulations, and (d) PR in the future (rcp85) simulations. “MPI” in the legend refers to the MPI-ESM-LR model simulation. The shorter VR12-46 simulation appears only in the last 11 years.

5.2.1 Present-day climate

The time evolution of global mean TAS is nearly identical across the resolutions (Fig. 7a), indicating a strong constraint by the prescribed SST. In contrast, global mean precipitation exhibits systematic differences among the resolutions such that it monotonically increases with finer resolution; UR240 simulates the lowest global mean precipitation, followed by VR50-200, UR120, VR25-100, and VR12-46 (Table 6, Fig. 7c), indicating that the coarse-resolution domain dictates the resolution sensitivity at the global scale in the VR simulations. In Fig. 7c, we see that the global precipitation of MPI-ESM-LR is similar to those of UR240 and VR50-200, which are the two resolutions closest to the MPI-ESM-LR model resolution.
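Global means such as those compared here are area-weighted averages. The following is a minimal sketch of such an average on a regular latitude–longitude grid, using cosine-of-latitude weights. It is not the AMWG diagnostic package code; the function name and the sample values are hypothetical.

```python
import math


def global_mean(field, lats_deg):
    """Area-weighted mean of field[j][i] on a regular latitude-longitude grid.

    field: list of rows, one row per latitude, each row holding the values
           at equally spaced longitudes
    lats_deg: cell-center latitude of each row in degrees
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for row, lat in zip(field, lats_deg):
        w = math.cos(math.radians(lat))     # cell area shrinks toward the poles
        weighted_sum += w * sum(row) / len(row)
        weight_total += w
    return weighted_sum / weight_total


# Hypothetical precipitation values (mm d-1) on a tiny 3 x 2 grid.
lats = [-60.0, 0.0, 60.0]
pr = [[1.0, 1.0],
      [2.0, 2.0],
      [3.0, 3.0]]
print(global_mean(pr, lats))  # weights are approximately 0.5, 1.0, 0.5
```

Because the equatorial row carries the largest weight, changes in tropical precipitation have a strong influence on the global mean.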

Table 6 indicates that this monotonic increase comes mainly from convective precipitation rather than large-scale precipitation. The trend of increasing convective precipitation with higher resolution is the opposite of what previous studies have found for the lineage of CAM physics (Williamson, 2008; Rauscher et al., 2013; Wehner et al., 2014; Herrington and Reed, 2020). This unexpected resolution sensitivity is not necessarily an improvement for the model hydrological cycle, and it is attributed to the changes that we made in the convective timescale of the ZM convection scheme (Sect. 2.2) based on our previous study (Gross et al., 2018). It would be preferable for the total precipitation and the fractions of its convective (associated with unresolved updraft) and large-scale (associated with resolved upward motion) components to remain unchanged for grid resolutions coarser than the so-called “gray zone” (e.g., Fowler et al., 2016). However, our result does illustrate a potential (and cursory) use of the convective timescale for tuning CAM–MPAS VR simulations. For example, smaller changes in the timescale than those we made (Table 3) may result in a more desirable partitioning of precipitation components. Readers are referred to Sect. 8b of Gross et al. (2018) for an in-depth discussion of tuning mass-flux-based convection parameterizations for VR models. Other notable resolution sensitivities are reductions in the cloud fraction and vertically integrated cloud liquid and ice mass concentrations, which in turn make cloud radiative forcing and radiative fluxes sensitive to resolution. Reduction in the cloud amount with higher resolutions has been noted by previous studies (Pope and Stratton, 2002; Williamson, 2008; Rauscher et al., 2013; Herrington and Reed, 2020). For example, Pope and Stratton (2002) found a 12 g m−2 reduction in the global mean cloud liquid-water path when refining the grid spacing from ≈280 to 90 km in the HadAM3 model. 
Herrington and Reed (2020) attributed the reduced cloud amount to stronger subsidence outside convective regions, which is linked to more intense resolved upward motion within the convective regions at higher resolution. We speculate that the same processes operate in our simulations with additional complexities due to our tuning of the ZM convection scheme.

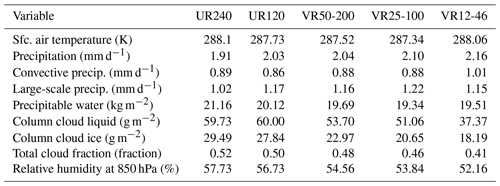

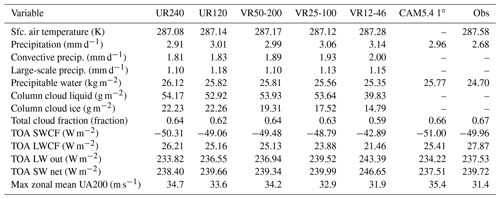

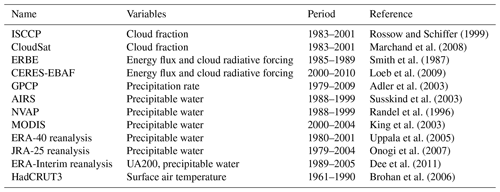

Table 6. Global and annual means of selected variables from present-day (eval) simulations, taken from the Atmospheric Model Working Group (AMWG) diagnostic package (Atmospheric Model Working Group, 2014). Abbreviations in variable names are as follows: top-of-atmosphere (TOA), shortwave radiative flux (SW), longwave radiative flux (LW), shortwave cloud radiative forcing (SWCF), and longwave cloud radiative forcing (LWCF). Observational and reanalysis data (Obs) are provided through the AMWG diagnostic package and listed in Table E1. Averages are shown for variables for which multiple observational data are available.

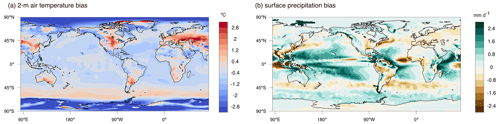

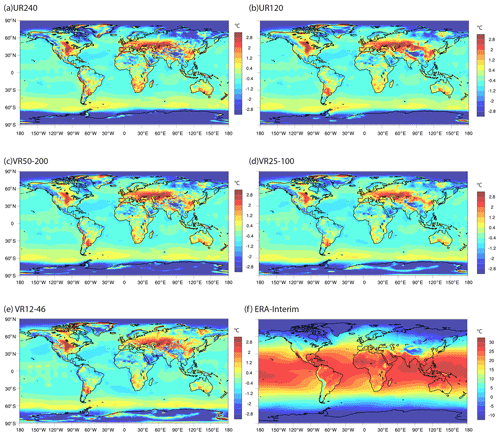

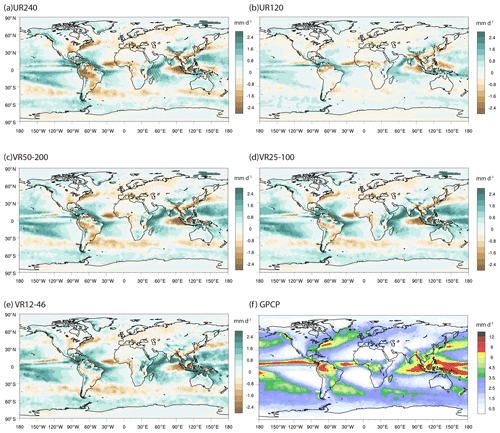

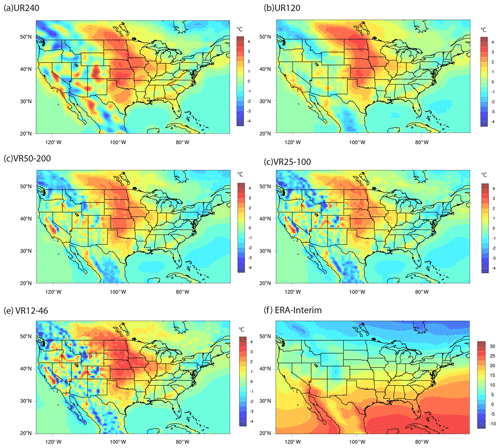

Figure 8 examines the spatial patterns of TAS and precipitation biases of VR25-100. We show VR25-100 as an example because the bias patterns are generally similar at the other resolutions (Figs. E1, E2). As with CAM5.4 and other climate models (Morcrette et al., 2018), the simulated TAS is too warm over the midlatitude continents, including the central United States (Fig. 8a). Little difference from ERA-Interim is seen over the ocean, but notable exceptions exist over the Southern Hemisphere storm track (≈0.5 ∘C) and the Arctic ( ∘C). The TAS bias appears similar to that of CAM5.4 with the default 1∘ FV dynamical core (Atmosphere Model Working Group, 2015), indicating a more important role of physics parameterizations than resolution or dynamical core for the bias (Appendix E).

Figure 8. Difference in the climatological mean (a) 2 m air temperature over the 1990–2010 period between CAM–MPAS VR25-100 and ERA-Interim as well as (b) surface precipitation over the 1997–2010 period between VR25-100 and the Global Precipitation Climatology Project (GPCP). The ERA-Interim sea surface temperature and sea-ice cover are used as input for the CAM–MPAS AMIP simulations. The CAM–MPAS output and the reference data (ERA-Interim and GPCP) are remapped from their original grids to a global latitude–longitude grid with a ≈0.7∘ grid spacing, which is a similar resolution to the ERA-Interim grid.

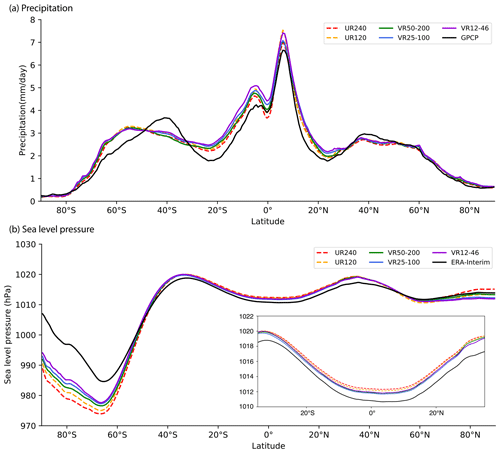

The resolution sensitivity of the global mean precipitation (Table 6c) originates mostly from the tropics between 20∘ S and 20∘ N (Figs. 8b, 9a), where the model overestimates precipitation compared with the Global Precipitation Climatology Project (GPCP). This regional bias generally becomes worse with higher resolution. Although the tropics are far from the downscaling target of North America, tropical precipitation bias may have remote effects on large-scale circulations over the midlatitudes through Rossby waves and subtropical jets (Lee and Kim, 2003; Christenson et al., 2017; Dong et al., 2018; Wang et al., 2021). Such remote effects seem small over North America but are much more prominent in the Southern Hemisphere, consistent with the previous VR CAM–MPAS study (Sakaguchi et al., 2015). For example, steady changes across resolution appear in the zonal mean sea level pressure in the tropics and in the high latitudes, with clearly greater magnitude in the Southern Hemisphere than in the Northern Hemisphere (Fig. 9b). Consistently, zonal mean zonal wind also shows stronger resolution sensitivities over the tropics and Southern Hemisphere than in the Northern Hemisphere (Fig. E3). The Atmosphere Model Working Group (2015) shows a similar sea level pressure bias in the default CAM5.4, and the apparently large magnitude of the bias depends on which reanalysis dataset is used as a reference. Notably, higher resolution reduces the biases of sea level pressure and zonal mean zonal wind over the Southern Hemisphere.

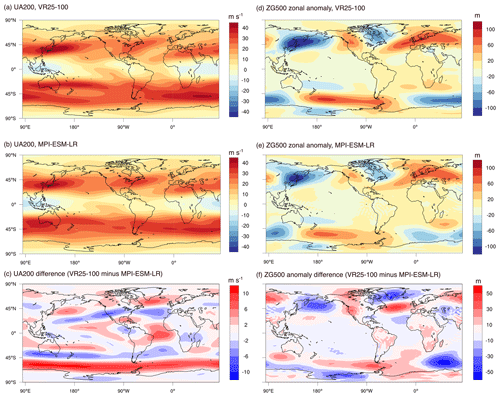

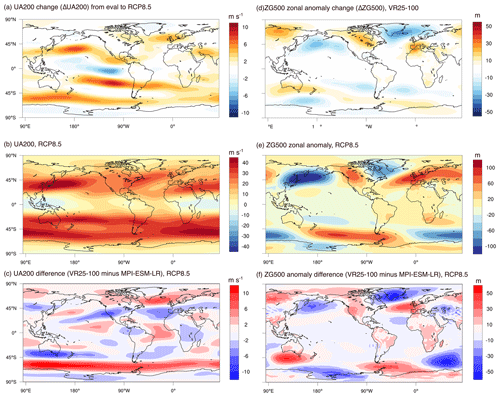

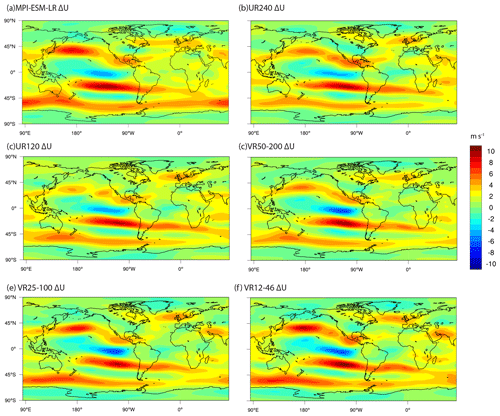

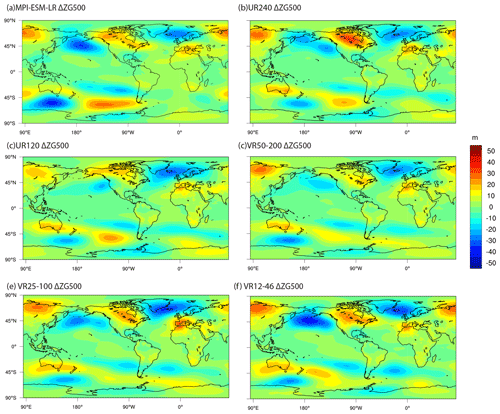

In our global pseudo-warming experiment, differences between the CAM–MPAS and MPI-ESM-LR simulations in large-scale circulations are also important for understanding the processes underlying regional climate change over North America. Figure 10 compares the climatological mean zonal wind at the 200 hPa level (UA200) and zonal anomalies of 500 hPa geopotential height (ZG500) from the VR25-100 and MPI-ESM-LR simulations of the historical period. We continue to use VR25-100 as an example because differences between the two models (MPI-ESM-LR and CAM–MPAS) are substantially larger than the resolution sensitivities of the CAM–MPAS model (not shown). With respect to VR25-100, Fig. 10a, b, and c indicate that (1) the midlatitude (eddy-driven) jet is located at higher latitudes, (2) the subtropical jet over North America is stronger, and (3) the Walker circulations over the Pacific and Atlantic oceans are also stronger than those in the MPI-ESM-LR model. Notable differences in ZG500 include a stronger ridge over western North America in VR25-100 than in the MPI-ESM-LR model (Fig. 10d, e, f). The stronger ridge and associated static stability, along with different jet locations and strengths, indicate that the two models differ in how they simulate the generation and propagation of atmospheric disturbances as well as the local response to them, all of which have been suggested to be important for the hydroclimate of the western and central US (e.g., Leung and Qian, 2009; Song et al., 2021).
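The zonal mean and zonal anomaly used in this comparison can be sketched as follows, assuming the data have already been remapped to a regular latitude–longitude grid. The function names and the geopotential height values are hypothetical and are not taken from the simulations.

```python
def zonal_mean(field):
    """Average over longitude for each latitude row of field[j][i]."""
    return [sum(row) / len(row) for row in field]


def zonal_anomaly(field):
    """Deviation of each grid point from the zonal mean at its latitude."""
    means = zonal_mean(field)
    return [[value - mean for value in row] for row, mean in zip(field, means)]


# Hypothetical 500 hPa geopotential heights (m): two latitudes, four longitudes.
zg500 = [[5500.0, 5600.0, 5700.0, 5600.0],
         [5800.0, 5800.0, 5800.0, 5800.0]]

print(zonal_mean(zg500))     # [5600.0, 5800.0]
print(zonal_anomaly(zg500))  # [[-100.0, 0.0, 100.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
```

Positive anomalies mark ridges and negative anomalies mark troughs relative to the mean height at that latitude, which is why the zonal anomaly is convenient for comparing stationary wave patterns between models.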

Figure 9. Zonal and annual mean (a) precipitation and (b) sea level pressure from the CAM–MPAS simulations and reference data of (a) GPCP and (b) ERA-Interim. All data are first remapped to a ≈0.7∘ latitude–longitude grid before taking the zonal average. The inset in panel (b) shows the same mean sea level pressure but only in the region between 35∘ S and 35∘ N.

Figure 10. Annual mean zonal wind at the 200 hPa level in (a) VR25-100 and (b) MPI-ESM-LR as well as (c) the difference between the two simulations. Geopotential height at the 500 hPa level in (d) VR25-100 and (e) MPI-ESM-LR as well as (f) the difference between the two simulations. All data are remapped to a latitude–longitude grid by the patch method (Balaji et al., 2018). The wavy patterns in panels (e) and (f) near the Andes are likely numerical oscillations in the MPI-ESM-LR model (Geil and Zeng, 2015).

5.2.2 Future climate

The global mean TAS remains insensitive to resolution in the future rcp85 case (Fig. 7b). As in the historical period, we also see a steady increase in global mean precipitation with finer resolution (Fig. 7d). As a result, all of the resolutions project similar changes in the global mean precipitation (ΔP) from the historical to the rcp85 case, within the range of 0.15–0.18 mm d−1.

Figure 11. Spatial patterns of the near-surface climate change from the historical (1990–2010) to the future RCP8.5 case (2080–2100) and the difference in the mean future climate between the VR25-100 and MPI-ESM-LR simulations: (a) simulated change in the near-surface air temperature (ΔTAS) in VR25-100, (b) difference in the mean TAS between VR25-100 and MPI-ESM-LR in the RCP8.5 simulations, (e) precipitation change (ΔP) in VR25-100, and (f) precipitation difference between the two RCP8.5 simulations. Panel (c) is the same as panel (b) but for SST difference, and panel (d) is the same as panel (b) but for SIC difference.

Looking at the spatial patterns, the TAS change (ΔTAS) from the historical to the RCP8.5 period in VR25-100 closely follows the ΔSST patterns derived from the MPI-ESM-LR model (by comparing Figs. 4e and 11a). The almost identical ΔSST leads to a different climatological SST (and TAS) in the two future simulations (Fig. 11b) because ΔSST and ΔSIC from MPI-ESM-LR are added to the base state from ERA-Interim instead of the MPI-ESM-LR model itself (Fig. 11c, d). It is notable that the SST over the Arctic region is substantially warmer in VR25-100 than in MPI-ESM-LR, while such a difference is lacking in TAS (Fig. 11b, c). The discrepancy is the result of an assumption in the CESM data ocean model such that SST below −1.8 ∘C (a typical freezing temperature of sea ice) is reset to this assumed freezing temperature, and the SST shown in the figure is not the input to the model but output from the simulation. In the MPI-ESM-LR simulation without such an assumption, the climatological SST can be as low as −5 ∘C over the Arctic region. We presume that this SST difference does not directly affect TAS because of the Arctic sea-ice cover.
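The two steps described above, adding ΔSST to a present-day base state and resetting values below the assumed freezing temperature, can be sketched as follows. This is an illustration only and is not the CESM data ocean model code; the function names and sample values are hypothetical, and only the −1.8 ∘C threshold comes from the text.

```python
FREEZING_SST_C = -1.8  # assumed freezing temperature quoted in the text (deg C)


def add_delta(base_sst_c, delta_sst_c):
    """Pseudo-warming input: present-day base state plus the projected change."""
    return [base + delta for base, delta in zip(base_sst_c, delta_sst_c)]


def apply_freezing_floor(sst_c, floor_c=FREEZING_SST_C):
    """Reset any SST below the assumed freezing temperature to that value."""
    return [max(value, floor_c) for value in sst_c]


# Hypothetical values (deg C) for a tropical, a midlatitude, and an Arctic point.
base = [28.0, 10.0, -1.8]    # stands in for the reanalysis base state
delta = [2.5, 3.0, 0.5]      # stands in for the change from the coupled model
future_input = add_delta(base, delta)
print(apply_freezing_floor(future_input))

# A climatology without the floor can be much colder over ice-covered ocean;
# the floor removes that part of the distribution.
print(apply_freezing_floor([-5.0, -1.0, 3.0]))  # [-1.8, -1.0, 3.0]
```

The second call shows why an SST field that has passed through such a floor appears warmer over the Arctic than a coupled-model climatology that is allowed to fall well below −1.8 ∘C.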

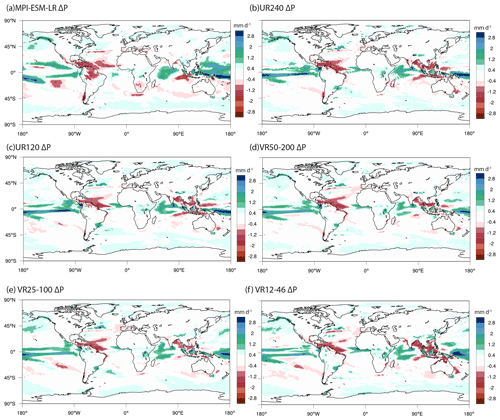

The spatial pattern of ΔP in VR25-100 is characterized by a marked increase in the tropical Pacific, Arabian Sea, and Northern Hemisphere storm tracks and by a reduction over the tropical Atlantic Ocean (Fig. 11e). These ΔP responses over the ocean generally agree with the MPI-ESM-LR projection (Fig. E4), although the extent of regional features differs, especially in the equatorial region, such that precipitation from the Intertropical Convergence Zone (ITCZ) is projected to be more intense in a narrower band in VR25-100 than in MPI-ESM-LR. Over land, ΔP in the two simulations diverges most notably in the Amazon Basin as well as in Australia, southern Africa, and, importantly, North America. These changes over land become more visible in the ocean-masked contour plots in Fig. E5e and f. Those regions are also where we see the resolution sensitivity of ΔP among the CAM–MPAS simulations (Fig. E5b, c, d, e, f), indicating a large uncertainty in the projection of regional hydrological cycles.

Figure 12. Simulated changes in the annual mean upper-level circulations from the historical (1990–2010) to the future RCP8.5 case (2080–2100) and the difference in the future climate between the VR25-100 and MPI-ESM-LR simulations: (a) the 200 hPa zonal wind change (ΔUA200) in VR25-100, (b) the future UA200 climatology in VR25-100, (c) the UA200 climatology difference between VR25-100 and MPI-ESM-LR in the RCP8.5 period, (d) the simulated change in zonal anomaly 500 hPa geopotential height (ΔZG500) in VR25-100, (e) the future climatology of ZG500 zonal anomaly in VR25-100, and (f) the ZG500 difference between VR25-100 and MPI-ESM-LR.

Turning to the large-scale circulations, the projected change in the 200 hPa zonal wind (ΔUA200) in VR25-100 indicates broader and more intense subtropical jets, midlatitude storm tracks, and Southern Hemisphere polar jet at the end of the 21st century (Fig. 12a, b). MPI-ESM-LR also projects such changes in terms of the zonal mean circulation (Shaw, 2019), and the spatial patterns of ΔUA200 are generally consistent between the two models, with a pattern correlation of 0.87 (Fig. E6). The projected changes in the zonal anomaly of the 500 hPa geopotential height (ΔZG500) in VR25-100 are characterized by an eastward shift of the pattern over the middle to high latitudes of the Northern Hemisphere (Fig. 12d, e). The shift is also simulated by MPI-ESM-LR and found in the CMIP5 multi-model mean response (Wills et al., 2019). Because the responses of these large-scale circulations to the imposed radiative forcings and (identical) ocean warming are similar in the two models, the base state differences, as seen in Fig. 10c and f, remain nearly unchanged in the future period (Fig. 12c, f). Therefore, distinct aspects of the large-scale forcings on the North American climate, as discussed in the previous section, will continue to be seen in the RCP8.5 case.
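A pattern correlation such as the one quoted for ΔUA200 is commonly computed as an area-weighted Pearson correlation between two maps on the same grid. The sketch below shows one such definition (centered, with cosine-of-latitude weights). Whether the value of 0.87 was obtained with exactly this definition is an assumption, and the function name and sample fields are hypothetical.

```python
import math


def pattern_correlation(map_a, map_b, lats_deg):
    """Centered, area-weighted correlation of two maps on the same lat-lon grid.

    map_a, map_b: lists of rows (one row per latitude, same shape)
    lats_deg: cell-center latitude of each row in degrees
    """
    weights, a_vals, b_vals = [], [], []
    for row_a, row_b, lat in zip(map_a, map_b, lats_deg):
        w = math.cos(math.radians(lat))  # area weight for this latitude row
        for a, b in zip(row_a, row_b):
            weights.append(w)
            a_vals.append(a)
            b_vals.append(b)
    w_total = sum(weights)
    mean_a = sum(w * a for w, a in zip(weights, a_vals)) / w_total
    mean_b = sum(w * b for w, b in zip(weights, b_vals)) / w_total
    cov = sum(w * (a - mean_a) * (b - mean_b)
              for w, a, b in zip(weights, a_vals, b_vals))
    var_a = sum(w * (a - mean_a) ** 2 for w, a in zip(weights, a_vals))
    var_b = sum(w * (b - mean_b) ** 2 for w, b in zip(weights, b_vals))
    if var_a == 0.0 or var_b == 0.0:
        raise ValueError("correlation is undefined for a spatially uniform map")
    return cov / math.sqrt(var_a * var_b)


# Hypothetical change patterns on a tiny 2 x 3 grid.
lats = [0.0, 60.0]
change_a = [[1.0, 2.0, 3.0], [2.0, 4.0, 1.0]]
change_b = [[2.0 * v + 1.0 for v in row] for row in change_a]  # same pattern, rescaled
print(pattern_correlation(change_a, change_b, lats))  # close to 1
```

Because the correlation is centered and normalized, it measures agreement in spatial structure only. Two models can share a high pattern correlation and still differ in the magnitude of the projected change.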

5.2.3 Soil spin-up