the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

REHEATFUNQ (REgional HEAT-Flow Uncertainty and aNomaly Quantification) 2.0.1: a model for regional aggregate heat flow distributions and anomaly quantification

Malte Jörn Ziebarth

Sebastian von Specht

Surface heat flow is a geophysical variable that is affected by a complex combination of various heat generation and transport processes. The processes act on different lengths scales, from tens of meters to hundreds of kilometers. In general, it is not possible to resolve all processes due to a lack of data or modeling resources, and hence the heat flow data within a region is subject to residual fluctuations.

We introduce the REgional HEAT-Flow Uncertainty and aNomaly Quantification (REHEATFUNQ) model, version 2.0.1. At its core, REHEATFUNQ uses a stochastic model for heat flow within a region, considering the aggregate heat flow to be generated by a gamma-distributed random variable. Based on this assumption, REHEATFUNQ uses Bayesian inference to (i) quantify the regional aggregate heat flow distribution (RAHFD) and (ii) estimate the strength of a given heat flow anomaly, for instance as generated by a tectonically active fault. The inference uses a prior distribution conjugate to the gamma distribution for the RAHFDs, and we compute parameters for a uninformed prior distribution from the global heat flow database by Lucazeau (2019). Through the Bayesian inference, our model is the first of its kind to consistently account for the variability in regional heat flow in the inference of spatial signals in heat flow data. Interpretation of these spatial signals and in particular their interpretation in terms of fault characteristics (particularly fault strength) form a long-standing debate within the geophysical community.

We describe the components of REHEATFUNQ and perform a series of goodness-of-fit tests and synthetic resilience analyses of the model. While our analysis reveals to some degree a misfit of our idealized empirical model with real-world heat flow, it simultaneously confirms the robustness of REHEATFUNQ to these model simplifications.

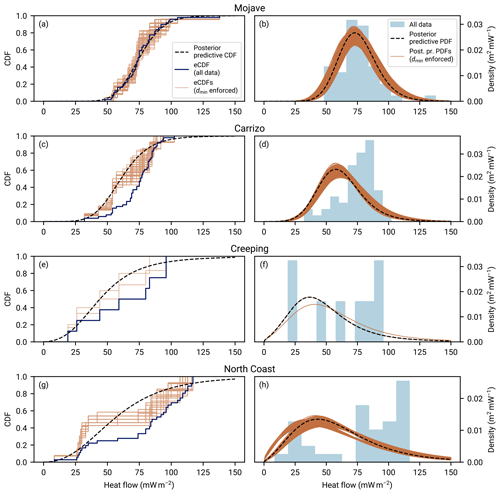

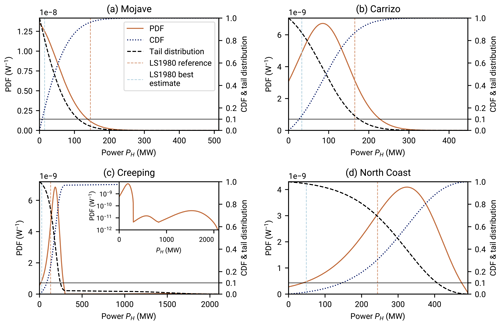

We conclude with an application of REHEATFUNQ to the San Andreas fault in California. Our analysis finds heat flow data in the Mojave section to be sufficient for an analysis and concludes that stochastic variability can allow for a surprisingly large fault-generated heat flow anomaly to be compatible with the data. This indicates that heat flow alone may not be a suitable quantity to address fault strength of the San Andreas fault.

- Article

(8050 KB) - Full-text XML

-

Supplement

(1049 KB) - BibTeX

- EndNote

Surface heat flow is an important geophysical parameter. It plays an important role in the global energy budget of the solid Earth (Davies and Davies, 2010) and has implications for the exploitability of geothermal energy as a renewable energy source (e.g., Moya et al., 2018). It is also intimately connected to the crustal temperature field which has the potential to control the crustal elastic properties (Peña et al., 2020) and is hence vital for the understanding of seismic and aseismic crustal deformation. Furthermore, measurements of the surface heat flow have been indicative of the frictional strength of the San Andreas fault (SAF) by constraining the heat production rate on the fault surface (Brune et al., 1969; Lachenbruch and Sass, 1980).

Global patterns of surface heat flow have been investigated in multiple works (e.g., Pollack et al., 1993; Goutorbe et al., 2011; Lucazeau, 2019). The models therein usually assign an average heat flow to each point of Earth's surface, for instance by dividing the surface into a grid. We denote this as the “background heat flow” qb, which might follow from the two main sources of crustal heat flow, mantle heat transmission and radiogenic heat generation (Gupta, 2011). As data accumulated, the additional information was used in later works to improve the spatial resolution of qb models.

Alas, even at the finer resolution of newer works, the models of global heat flow do not perfectly describe the heat flow measurements due to fluctuations. Goutorbe et al. (2011) observe that a residual variation of 10 to 20 mW m−2 remains between heat flow measurements not further than 50 km apart. One potential cause of this variation is the varying concentration of radiogenic elements within the upper crust, which has been observed to change by a factor of 5 within a couple of tens of meters (Landström et al., 1980; Jaupart and Mareschal, 2005).

Whatever the cause, the magnitude of the variation observed by Goutorbe et al. (2011) and its spatial extent are similar to some anomalous signals generated by processes that one might wish to investigate and distinguish from the background qb. The fault-generated heat flow anomaly discussed by Lachenbruch and Sass (1980) regarding the SAF, with a peak heat flow less than about 27 mW m−2, is an important example. The magnitude similarity between the residual variation and the queried signature implies that it is difficult to establish bounds on the latter.

In this article, we introduce the REHEATFUNQ model (REgional HEAT Flow Uncertainty and aNomaly Quantification), which aims to

-

quantify the variability within regional heat flow measurements and

-

identify how strong the surface heat flow signature of a deterministic process, e.g., fault-generated heat flow, can be given a set of heat flow measurements in the study area.

REHEATFUNQ approaches these goals by aggregating heat flow measurements in a region of interest (ROI) into a location-agnostic distribution of heat flow. It considers the heat flow within the region as the result of a stochastic process and hence the aggregate distribution as the probability distribution of a random variable. In a Bayesian workflow, this distribution is inferred from the regional heat flow data and from prior information. Processes such as the fault-generated surface heat flow can be quantified by supplying the impact of the process onto each data point and inferring the posterior distribution of a process strength parameter.

The REHEATFUNQ model is an empirical model. In this study, we have performed a number of analyses of the New Global Heat Flow (NGHF) database by Lucazeau (2019) to inform the model. Synthetic computer simulations based on the REHEATFUNQ model assumptions have been performed to test the model performance on ideal data. We also perform a resilience analysis based on a number of alternatives to the model assumptions which are also compatible with the NGHF database.

The paper starts with a description of the (heat flow) basis data of the REHEATFUNQ model in Sect. 2. The methodology section (Sect. 3) continues with a physical motivation for the REHEATFUNQ model before it transitions to a technical description of the model's capabilities. Section 4 bundles statistical analyses of the performance of the REHEATFUNQ model and is rather technical. It starts out by assessing how well the model assumptions are reflected in real-world data, uses stochastic computer simulations to investigate whether known imperfections inhibit REHEATFUNQ's usefulness, and discusses physical limitations of the model. Section 5 then illustrates how to apply the REHEATFUNQ model by means of the San Andreas fault in Southern California before Sect. 6 concludes this work. As a reference for the application of the REHEATFUNQ model, Appendix A summarizes all analysis steps mentioned throughout the paper in a workflow cheat sheet.

This work is fundamentally built on the analysis of surface heat flow measurements, that is, point measurements of the flow of thermal energy from Earth's interior through the outermost layer of the crust into the atmosphere. Heat flow has units of energy divided by time and area; integrated over an area of Earth's surface, it gives the power at which thermal energy transfers from the inside to the atmosphere.

Heat flow is typically estimated from temperature measurements at varying depths within a borehole. From these measurements, the temperature gradient is estimated which, multiplied with the heat conductivity of the surrounding rock, leads to the heat flow estimate (e.g., Henyey and Wasserburg, 1971). For more details, we refer the reader, for instance, to Henyey and Wasserburg (1971), Fulton et al. (2004), and Sass and Beardsmore (2011).

Measuring heat flow is a difficult task. Each measurement requires a borehole and sufficient time to establish temperature equilibrium at the sensors (Henyey and Wasserburg, 1971). Furthermore, the temperature profile close to Earth's surface might not be linear with depth, as would be imposed by a constant heat flow. The causes for these perturbations can include topography, erosion, climate, and water circulation (Lucazeau, 2019), the latter as advection or convection. These perturbations have to be corrected for to estimate the crustal heat flow component of the measured temperature profile. Otherwise crustal heat flow estimates will be biased or uncertain.

Heat flow data are multidimensional, spanning most prominently the heat flow as well as the spatial dimensions. Within the REHEATFUNQ model, the heat flow data are aggregated within the region of interest (ROI) and investigated without regard for the further spatial distribution. We call this data set, reduced to the heat flow dimension only, the regional aggregate heat flow distribution. Formally, given a set of heat flow measurements within a ROI, one may write the regional aggregate heat flow distribution as result of the mapping:

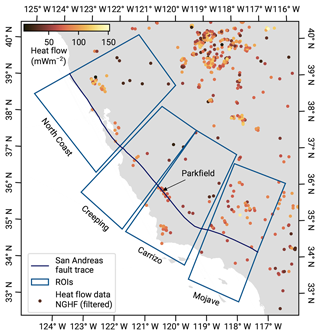

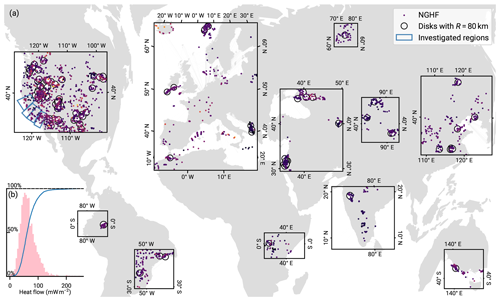

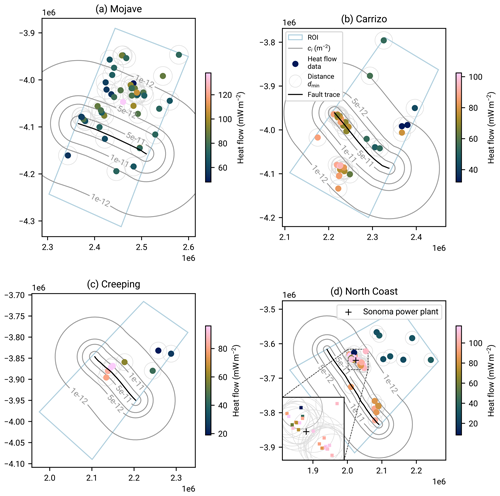

Figure 1New Global Heat Flow (NGHF) database (Lucazeau, 2019) and random regional heat flow samples. The map shows data points from the NGHF used in this study, corresponding to positive continental heat flow values with a quality ranking of “A” to “B” and not exceeding 250 mW m−2. The set of random global R-disk coverings (RGRDCs) used in Sect. 3.3.2 to determine the estimates of the prior distribution parameters is illustrated by thinly outlined disks. The algorithm to distribute the disks is described in Appendix B. The analysis regions used in Sect. 5 for the SAF are shown in a thicker blue outline. Inset (b): empirical distribution function and histogram of the same global heat flow data.

2.1 Data used and general filtering

In this work, we build upon a global database of heat flow measurements compiled by Lucazeau (2019), the NGHF. This data set, a continuation of the effort of Pollack et al. (1993), is a heterogeneous collection of 69 730 heat flow measurements from a variety of studies. It covers the time period from 1939 to 2019 and covers the globe on multiple spatial scales from repeat measurements at the same location up to the largest nearest-neighbor distances of ∼1200 km. We will use both the global coverage as well as the specific region of Southern California in this work. Due to the heterogeneous spatial coverage and quality of the data, we apply a number of data filters beforehand.

We use only a quality-filtered subset of the NGHF in all our following analyses. Lucazeau (2019) compiled heat flow data from a wide range of sources, spanning decades of technological improvements in instrumentation and combining various work on perturbation correction and uncertainty estimation. To obtain more homogeneous data quality, we follow the quality assessment described by Lucazeau (2019) and discard data points of the lowest quality rankings of C to F and those from earlier than 1990 (marking an increase in data quality). We furthermore remove negative heat flow values.

Since we will consider a continental scenario, we furthermore remove data points not marked as continental crust (i.e. not keys A to H in field “code1”). Finally, we discard data points categorized as possibly geothermal following Lucazeau (2019), that is, those exceeding 250 mW m−2. The remaining data set has 5974 entries. The global aggregate heat flow distribution of this remaining data set is shown in Fig. 1.

The filtering steps described in this section are an example of what is subsumed as step 1 of the workflow listed in Appendix A.

3.1 Physical basis

Surface heat flow is the result of heat, generated in Earth's interior, being transported to the surface by diffusive, advective, and convective processes. The main sources of heat within Earth are the thermal energy from its planetary genesis and the decay of radioactive elements (Christensen, 2011; Mareschal and Jaupart, 2021). Within the crust, heat production due to the friction on faults can be large enough to cause measurable local disturbances of the temperature field (e.g., Kano et al., 2006) and could potentially also lead to significant disturbances in the surface heat flow field if the frictional strength of the fault were large (Brune et al., 1969; Lachenbruch and Sass, 1980).

After generation, three modes of steady transport can be available to bring the heat to the surface. Heat diffusion occurs throughout Earth's interior. Advection can occur with the tectonic movement of rock or by means of gravitationally driven pore water movement (Molnar and England, 1990; Fulton et al., 2004). Convective processes range from magma convection in the mantle through crustal pore water convection (Bercovici and Mulyukova, 2021; Hewitt, 2020).

Both generation and transport of heat within Earth are subject to a number of unknowns such as material composition in terms of heat generation and conduction, the geometry of convection cells, and the existence of groundwater flow (e.g., Morgan, 2011). Some of these parameters are difficult to determine, and typically residual fluctuations remain in thermal models even if those models take into account a multitude of available information (e.g., Cacace et al., 2013; Fulton et al., 2004). That is, even though the principles underlying the full surface heat flow field are known, the incomplete knowledge of the specific crustal processes and material properties defining a specific region's surface heat flow, in combination with measurement uncertainties, makes it generally impossible to model the exact surface heat flow that is measured.

Our approach is to acknowledge that a model is unlikely to capture the full surface heat flow signal simply because the input data do not capture all relevant features of the subsurface or because the measurement is uncertain. The concept of REHEATFUNQ is then to abstract these unknowns into a black-box stochastic model of surface heat flow within a region. The stochastic model condenses the spatial distribution of heat flow into a single probability distribution of heat flow q, γ(q), for the whole region, agnostic to where heat flow is queried within. This way, unknowns about the parameters that control surface heat flow are captured by the amount and variance of the measurement data. If a region is characterized by uniform heat flow – that is, it is independent and identically distributed following a single distribution γ(q) – and sufficient data have been collected, a statistical analysis will yield a precise result. Variability in the heat flow controlling parameters reflects in a wider spread of the inferred distribution instead.

The arguments that motivate the REHEATFUNQ approach are related to the spatial variability in heat flow. Surface heat flow exhibits variability on a large range of scales. Long-wavelength contributions follow from the diffusion from deep heat sources. The diffusion up to the crustal surface smoothes the lateral pattern of heat flow from these sources with a characteristic length of 100 km (Jaupart and Mareschal, 2005, 2007). If the resulting signal does not vary significantly within the extent of the ROI, we label it the locally uniform background qb. In our later analysis, the extent will be ∼160 km, but this is not a hard constraint on the region size.

The surface heat flow also contains signals of a smaller spatial scale, say 50–100 km and below. We label the surface heat flow that varies spatially within the ROI with qs(x). Examples of these short-wavelength effects include radiogenic heat production from the tens-of-meters to kilometer scale (Jaupart and Mareschal, 2005) or recent tectonic history through movement of heated mass or friction on faults (Morgan, 2011).

One type of short-wavelength signal is topographic effects. Since they are more readily corrected for Blackwell et al. (e.g., 1980) and Fulton et al. (2004), we list them separately as qt(x). Topographic effects act on the scale of hundreds of meters to multiple kilometers (see, e.g., the extent of the mountains listed by Blackwell et al., 1980) if the boreholes are not sufficiently deep (that is, shallower than 75–300 m depending on temperature gradient and topographic variability; Blackwell et al., 1980).

Finally, the heat flow might also be influenced by random measurement error qf. This includes all kinds of difficulties inherent to the process of drilling, measuring temperature, and evaluating heat flow gradients. These effects are independent of location.

All these unknown contributions to the surface heat flow complicate the inference of a known constituent of the heat flow signal from the data. For instance, one might have good knowledge about the location of an underground heat source and its heat transport to the surface and hence be able to accurately model the spatial surface heat flow signature qa(x) that the heat source generates but might not know about the heat source's strength and hence the magnitude of the signature. The quantification of fault-generated heat flow anomalies on the San Andreas fault is a paragon of this problem (Brune et al., 1969; Lachenbruch and Sass, 1980; Fulton et al., 2004) and is the inspiration behind the name qa (“anomaly”). Because the surface heat flow is influenced by many unknown effects – unknown but evident due to the variability that is not perfectly fit by the model's signature – it is not trivial to infer the magnitude of the model's heat source. This applies particularly if the magnitude of the signature generated by the actual heat source is on the order of or less than the spread due to the unknown constituents.

REHEATFUNQ aims to solve this issue through the stochastic model of the unknown constituents of surface heat flow and consequently to help researchers calibrate models of specific surface heat flow constituents. The surface heat flow field q(x) is separated into the modeled heat flow qa(x) and the unknown contributions qb, qs(x), qt(x), and qf. The magnitude of the modeled heat flow qa(x) is expressed in terms of the power PH of the heat source (an example is given later in Sect. 3.4.1), and a Bayesian inference of this parameter is performed using heat flow measurements qi, that is, samples of the unknown stochastic constituents transformed to q(x) when combined with qa(x). Before the following sections discuss the stochastic model and the inference of the magnitude of qa, we discuss the separation of qa from the unknown constituents and, equivalently, how the stochastic model and the modeled heat flow relate to the heat flow measurements.

If heat transport in the crust is linear, which is the case for conduction and advection, the heat flow q(x) is a superposition of the five constituents:

Here, we have collected all these unknowns into qu(x). If heat transport is nonlinear, for instance in the case of nonlinear convection, a superposition like this would not be possible. Instead, q(x) would be a nonlinear function of the sources of qb, qs, qt, qf, and qa. If the heat source that causes the anomaly qa is itself a driver of the convection, REHEATFUNQ as developed in this paper cannot be applied (with one technical exception mentioned further down in Appendix G, whose applicability is unclear). This might not be a significant restriction, however: if the heat source that generates the anomaly qa is strong enough to drive convection on significant length scales (that is, the 1–10 km scale), the resulting surface heat flow signature is probably large enough (that is, more than 50–100 mW m−2) for the separation from the “background noise” (the undisturbed heat flow) to be less challenging.

However, if the magnitude of qa is small (that is, less than about 50–100 mW m−2), the need for a statistical method, such as REHEATFUNQ, is essential. In the case of a small qa with a crustal heat source, the source will be similarly small and likely not be a driver of convection. Then, if some of the other heat sources, underlying qb, qs, qt, and qf, drive nonlinear convective transport, a linearization of the heat transport equation similar to the one performed by Bringedal et al. (2011) can be performed, which would again separate qa as a linear constituent of q(x) from the unknown:

Illustratively, the nonlinear convection due to other sources would act as an advective term for the diffusion advection of the anomalous heat source.

Equation (3) shows the extent of separation that is required for REHEATFUNQ to be applied. It enables the linear separation of the model output from the unknown heat flow which is treated by the stochastic approach. But what motivates the stochastic approach, describing qu as a probability distribution γ(qu)?

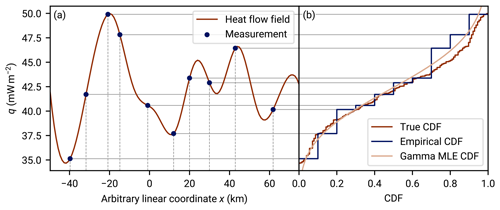

For the error term qf, the treatment as a random variable is straightforward. To treat the other terms stochastically is less evident since the surface heat flow field should in principle be deterministic and accessible through a precise measurement given enough effort. Here we can consider the random location sampling of a deterministic qu landscape as a stochastic source of the qu random variable. Figure 2 illustrates this approach. The surface heat flow field acts like a random variable transform of the spatial random variable to the random variable q. The probability density of q derives from the level set of the heat flow field.

Figure 2The stochastic model of regional aggregate heat flow from a deterministic surface heat flow field: an artificial illustration. (a) An artificial one-dimensional surface heat flow field generated from artificial underground heat sources. The underground heat sources (200 km wide, 80 km deep grid with 201×151 cells, not shown) have been optimized from random initial values such that the surface heat flow they generate fits a target heat flow distribution whose aggregate distribution is close to a gamma distribution (details in Appendix F). The blue dots illustrate heat flow measurement {qi} at random locations xi (dashed gray lines). The set {qi} is a sample of the regional aggregate heat flow distribution (RAHFD), the projection of the measurements to the heat flow dimension q (solid gray lines). Panel (b) shows, in a sideways view, the empirical cumulative distribution function (CDF) of the RAHFD. The aggregation process is illustrated by the horizontal gray lines connecting this panel to panel (a). Furthermore, the target RAHFD (derived from the continuous target heat flow distribution of panel a) is shown as well as a maximum likelihood estimate from the sample data. Combined, the two panels show how random spatial sampling of a deterministic heat flow field yields a stochastic RAHFD.

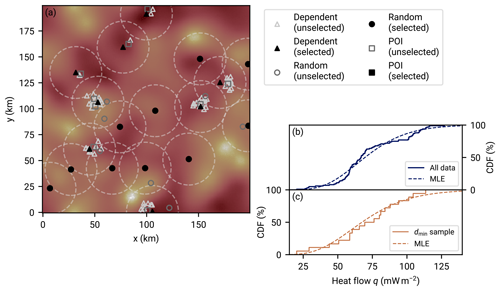

The approach illustrated in Fig. 2 highlights why it can be important to prevent spatial clustering within the data. If qs(x) is indeed a significant source of randomness within q(x), data independence depends significantly on the independence of sample locations, which is highly questionable if heat flow measurements cluster, e.g., around a geothermal field. What is more, clustered sampling point sets have a high level of discrepancy, so they could additionally lead to less accurate deterministic integration properties of the underlying heat flow distribution (Proinov, 1988). The minimum-distance criterion, effectively creating a Poisson disk sampling, can potentially trade discrepancy (Torres et al., 2021) and bias for data set size.

Nevertheless, clusters may also contain variability due to the measurement error term qf. This information would be lost if clusters would be reduced to single points through the minimum-distance criterion. REHEATFUNQ mitigates clusters while preventing this data loss by considering data points which exclude each other due to the dmin criterion as alternative representations of the cluster. Each alternative is then considered in the likelihood. Section 3.2 and 3.3.1 detail this process.

3.2 Mitigating spatial clustering of heat flow data

To date, the spatial distribution of heat flow data is inhomogeneous. In particular, spatial clusters exist around the points of interest of past or contemporary explorations in which the heat flow data were measured (e.g., The Geysers geothermal field in Sect. 5, Fig. 18). This property can be problematic for our stochastic model of regional aggregate heat flow (Sect. 3.1). If a significant part of the stochastic nature of regional aggregate heat flow is due to the random sampling of an unknown but smooth spatial heat flow field as described in the previous section, sampling in clusters that are too narrow might lead to correlated data. The statistical methods we develop in the following section, however, assume independence of the data.

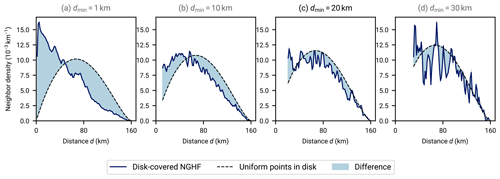

Figure 3Spatial uniformity of heat flow measurements from the NGHF within disks with a radius of 80 km when varying the minimum inter-point distance dmin. Each graph shows neighbor distributions within disks with a radius of 80 km as a function of inter-neighbor distance d, that is, the number of neighboring data points at distance d from a data point within the disk, averaged over all points within the disks. The dashed lines show the expected distribution for a uniform distribution of points within the disks (derived in Appendix E). Deviations from the dashed lines indicate a non-uniform distribution of points within the disk. The solid blue lines show the empirical neighbor density obtained from disks with a radius of 80 km randomly distributed over Earth and selecting the NGHF data points within. The difference between the three panels lies in enforcing different minimum distances between NGHF data points. If two data points within a disk are closer together than the indicated dmin, a random one of them is removed. As dmin is increased, the neighbor distributions approach uniformity but fluctuations due to small number of remaining points within the disks increase. In this work, we choose dmin=20 km as a compromise between the two effects.

To mitigate the potential bias of spatial clustering, we enforce a minimum distance dmin between data points, using only one data point of pairs that violate this distance criterion. This realizes a more uniform spatial data distribution. In Fig. 3 we compare analytical expressions for the neighbor density under a uniform distribution (Appendix E) with the distance distribution between points of the filtered NGHF. The comparison leads us to choose a minimum distance of 20 km,

between selected data points as a trade-off between uniform distribution and sufficient sampling.

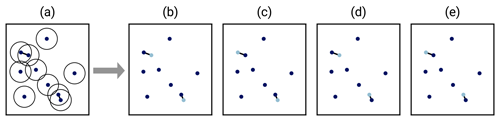

Using only one data point of close pairs raises the question of which data point to choose. Ignoring the other data point ensures that the dependency between the data points is avoided, but it also results in loss of information about any spatially independent noise component. To retain the best of both worlds, we introduce a latent parameter that iterates all possible ways to select dmin-conforming subsets from the set of heat flow measurements in a region. Each value of the latent parameter therefore corresponds to a data set that we consider independent data within our model assumption and we can evaluate posterior distributions as described in Sect. 3.3.1 and 3.4. Figure 4 illustrates the generated subsets for a simple example.

Figure 4Selecting subsets of heat flow data when data point pairs violate the minimum-distance criterion. The circles in panel (a) indicate the radius dmin which is violated by the two marked point pairs. Panels (b) to (e) show the data point subsets that would be used in the handling of spatial data clusters: of each conflicting pair, a maximum of one data point is retained (fewer if the violations occur in clusters). In this simple scenario, panels (b) to (e) list all possible permutations. The REHEATFUNQ code approximates this permutation procedure stochastically for large sample sizes.

Choosing the parameter dmin, whether according to our value of 20 km or based on the data density within the ROI, is step 2 of the workflow listed in Appendix A.

3.3 Model description

3.3.1 A combined gamma model

The disaggregation of the heat flow measurements, Eq. (2), into different components is the basis for our model of regional aggregate heat flow. In particular, we consider the unknown heat flow qu as a random variable. To yield useful results, this requires a model, that is, a probability distribution for qu. In deriving a model for qu, we make the following assumptions.

- I.

The sum qb+qf is an independent and identically distributed (i.i.d.) gamma random variable.

- II.

The sum qs(x)+qt(x) is an i.i.d. gamma-distributed random variable if x is the random variable that is derived from the spatial distribution of the heat flow data after applying the minimum-distance criterion (that is, successive point removal) in the right order.

- III.

The right order follows the uniform distribution of permutations of the ordering of the heat flow data.

When both qb+qf and qs(x)+qt(x) are gamma distributed, the resulting sum qu can be fairly well described by a gamma distribution (Covo and Elalouf, 2014). The sum is exactly gamma distributed when both qb+qf and qs(x)+qt(x) have the same scale parameter (Pitman, 1993). Hence, conditional on the right order, qu is assumed to be gamma distributed and the likelihood of the remaining data points is the gamma likelihood. We can iterate the permutations of the ordering using a latent parameter, the permutation index . The probability of j is . The full likelihood is then

where ϕ(α,β) is the prior distribution of the gamma distribution; ℐ(j)={i}j is the set of indices of data points in permutation j that are retained by the minimum-distance selection algorithm (see Sect. 3.2); and

with the gamma function Γ(α), is the gamma distribution for heat flow values qi>0. Here we have used the parameterization of the gamma distribution with shape parameter α and rate parameter β. An alternative parameterization uses the scale parameter instead.

The likelihood in Eq. (5) warrants two comments on its structure: first note that ℐ(j) always contains at least one index, the start of the permutation, due to the iterative resolution of the minimum-distance criterion. Secondly, if there is not a conflicting pair, ℐ(j) always contains all data indices and the j dimension trivially collapses to a uniform distribution. Otherwise, ℐ(j) can be a fairly complicated set.

Assumptions II and III are chosen to be peculiarly specific so as to yield a simple expression for the likelihood of the model. However, we can imagine a simple model of human data acquisition that is closely approximated by this likelihood. Imagine that a set of heat flow measurements is generated by the following process: initial drilling operations are distributed uniformly randomly over an area. Given that the level set of the underlying heat flow field is gamma distributed (or can be closely approximated by a gamma distribution), these initial drillings are gamma distributed as laid out in Sect. 3.1. Some of the initial wells turn out to be points of interest, for instance by identifying an oil or a geothermal field. Many of the following boreholes that lead to heat flow measurements would then cluster around these points of interest. This clustering, in turn, can lead to bias in the regional aggregate heat flow distributions due to the spatial correlation of qs(x). If we were to know the spatial extent of the clusters (say, disks with a radius of dmin) and we assume that a priori each point within a cluster is equally likely to be the initial drilling, we could obtain the likelihood given in Eq. (5). In Appendix C we confirm that this simple physically inspired sampling mechanism leads to estimation biases, and we find that the minimum-distance sampling used in REHEATFUNQ is an effective counter measure.

Assumption I, the use of the gamma distribution, is motivated by the general right-skewed shape of global heat flow (see Fig. 1b), positivity of surface heat flow, and existence of a conjugate prior distribution (which greatly reduces the computational cost). Besides the aforementioned and rather subjective criteria and to have an objective evaluation, we performed goodness-of-fit tests (Sect. 4.1.3) that show that the gamma distribution is at least as competitive as other simple probability distributions on the positive real line in terms of describing the regional aggregate heat flow distributions.

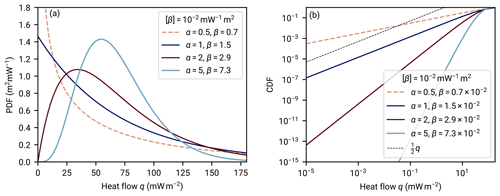

Figure 5Gamma distribution as a model for regional aggregate heat flow. Panel (a) shows the probability density functions (PDFs) of four gamma distributions with a varying shape parameter α that all have the same mean 〈q〉=68.3 mW m−2 (the mean of A quality data as estimated by Lucazeau, 2019). For α=1, the PDF is finite for q→0. For α>1, this limit is 0, and for α<1, the PDF has a singularity for q→0. Panel (b) shows the corresponding cumulative distribution functions (CDFs) with an emphasis on the asymptotics for small q values. A linear slope is plotted for comparison, corresponding to the growth of a uniform density with an increasing integration interval. The increased mass located in small q values in the case of α<1 becomes evident.

We restrict the parameter α to a minimum value of αmin=1 to prevent parameterizations with diverging densities at q→0 (see Fig. 5a). At α=1 the gamma distribution is an exponential distribution. For a smaller α, the density has a singularity at q=0. Illustratively, this causes the PDF to counter the effect of decreasing scale, and the mass decays only slowly on log scales in q.

Over the course of this paper, we will use the mean and standard deviation σq of the gamma distribution. Parameterized by α and β, they are given as (Thomopoulos, 2018)

For frequentist inference of α and β, the maximum likelihood estimator will be used a couple of times in this work. A Newton–Raphson iteration with starting values given by Minka (2002) is used.

For a Bayesian analysis of both the regional aggregate heat flow distributions and the fault-generated heat flow anomaly, a prior distribution of the parameters α and β of the gamma distribution model is required – even if it is just the implicit improper uniform prior distribution. Using an informative prior distribution instead (see, e.g., Zondervan-Zwijnenburg et al., 2017) opens up the potential to include information from outside sources in a regional analysis. Because the number of measurements in regional heat flow analysis is generally small – the R=80 km RGRDCs created from NGHF A quality data typically contain 31 disks with an average of 11 points per disk – additional information can be valuable.

Ideally, we would like the prior distribution we use to be derived on physical grounds. We do not have any independent physical criteria for constructing the prior distribution, but our empirical gamma distribution model aims to capture the predominant physics underlying regional aggregate heat flow (we will later investigate how much so). Hence, a physical basis that can guide our prior distribution choice is the implied physics captured by our gamma distribution model. A prior distribution that is constructed from the gamma distribution is the empirically best choice to reflect these underlying physics. This role is generally fulfilled by conjugate prior distributions which arise from the associated probability density functions and whose hyperparameters represent aspects of the data that can become evident in the Bayesian updating.

For the gamma distribution, a conjugate prior distribution is given by Miller (1980), parameterized by the hyperparameters p, s, n, and ν. Its probability density in gamma distribution parameters (α,β) is

with gamma function Γ(α) and

As in the previous section, we restrict the range of α from amin=1 to infinity to exclude probability densities that diverge at q→0 and place considerable weight on negligible heat flow (say mW m−2).

The conjugate prior distribution facilitates the computation of posterior distributions by means of Bayesian updating. The numerically expensive integrations over the parameter space of α and β that are involved in computing the posterior distribution are reduced to simple algebraic update rules of the conjugate prior distribution's parameters. Since numerical quadrature has the most computational cost in REHEATFUNQ and grows exponentially with the number of quadrature dimensions, reducing the set of quadratures to the computation of the normalization constant Φ of Eq. (9) significantly benefits the performance.

The Bayesian updating of the prior distribution of Eq. (8) given a sample of k heat flow values is (Miller, 1980)

The posterior distribution of α and β is hence Φ of Eq. (8) with the starred parameters given above.

Given a prior distribution parameterization (p, s, n, ν), the probability density of heat flow within the region is the predictive distribution

Here, the final step utilizes the conjugate structure of the prior distribution.

This expression can be translated to the likelihood of Eq. (5) of the REHEATFUNQ model. The Bayesian update leads to the following proportionality:

After some algebra used in Eq. (11), this resolves to

where , , , and are the parameters updated according to Eq. (10) with heat flow data set ℐ(j).

3.3.2 Minimum-surprise estimate

From a non-technical point of view, the purpose of the gamma conjugate prior distribution in the heat flow analysis is to transport universal information about surface heat flow on Earth while at the same time not significantly favoring any particular heat flow regime above other existing regimes. In other words, the prior distribution should penalize regions of the (α,β) parameter space that do not exist on Earth but should be rather uniform throughout the parts of (α,β) space that occur on Earth. The uniformity ensures that all regions on Earth are treated equally a priori in terms of heat flow, while the penalty adds universal information that can augment the aggregate heat flow data of each region.

In practice, a compromise between the uniform weighting of existing aggregate heat flow distributions and the penalizing of non-existent parameterizations needs to be found. Our choice in REHEATFUNQ is to put more weight onto the a priori uniformity of regional characteristics, that is, less bias. In parlance, we want to be minimally surprised by any of the distributions of the RGRDCs if we start from the prior distribution. One notion of the surprise contained in observed data when starting from a prior model is the Kullback–Leibler divergence (KLD) K from the prior distribution to the posterior distribution (Baldi, 2002):

where 𝒳 is the support of the parameters x. Baldi (2002) defines p(x) to be the prior distribution and ϕ(x) the posterior distribution given a set of observations.

The KLD is asymmetric, and Baldi and Itti (2010) note that the alternate order of probability distributions “may even be slightly preferable in settings where the `true' or `best' distribution is used as the first argument”. Here, we follow the alternate order and assign the gamma conjugate prior distribution to the role of ϕ(α,β). Furthermore, for the purpose of estimating the gamma conjugate prior distribution's parameters we consider the “uninformed” prior distribution (p=1, ; Miller, 1980) updated to a regional heat flow data set using the update rules of Eq. (10) to be the “true” distribution p(α,β) within that region. In this order, minimizing the KLD of Eq. (14) is also known as the “principle of minimum discrimination information” (MDI hereafter; Kullback, 1959; Shore and Johnson, 1978), closely related to the “principle of maximum entropy” (Shore and Johnson, 1978).

Applying this estimator to a set of regional aggregate heat flow distributions leads us to the following cost function of the minimum surprise which we aim to minimize. We enumerate the regional aggregate heat flow distributions of the RGRDC by index i and the heat flow values within by index j with i-dependent range (Qi={qj}i). We compute the updated parameters , , , and starting from p=1, for each Qi. Then, the cost function ![]() reads

reads

On an algebraic level, the ith KLD term emphasizes scale differences between the prior distribution and the ith regional data-driven distribution in parts of the (α,β) space which the regional data favor, while other parts of the parameter space are less important. Taking the maximum over the distributions {i} ensures that across distributions, the regions in which probability mass is concentrated are equally accurately represented. Another advantageous property of the MDI estimator is that by taking into consideration the full probability mass, it can be better suited for small sample sizes than point estimators (e.g., Ekström, 2008).

An explicit expression for the numerical quadrature of Eq. (15) is given in Appendix D1.1. For the purpose of optimization, we substituted parameters

with boundaries

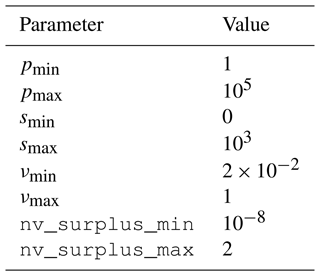

With adjustable parameters

this substitution ensures that the parameter bounds in p, s, n, and ν are adhered to. Choosing to optimize the logarithm of has shown itself to lead to a gracious convergence to the n=ν limiting case, and ln p is the standard expression of the p parameter in the numerical back end (see Appendix D).

Before the global optimization of p, s, n, and ν, it is helpful to determine some a priori bounds on the parameters. One observation is that the minimum-surprise estimate (MSE) should not introduce a strong bias to the regional results. Miller (1980) noted that the parameterization in which the updated posterior distribution parameters are dependent on the data only is the “uninformed” prior distribution p=1, . This line of thought leads to heuristic bounds on the parameters for the MSE. The posterior distribution update rule for n and ν is an increment by the data count. Hence, the prior distribution and should be smaller than or close to 1 for our desired MSE if we expect less than one data point of “information”. For p the update rule is a product with each heat flow value qi, and for s it is the sum with qi. Hence, is expected not to be larger than 250k, with, say, k∼1 and not larger than 250k. We have chosen conservative bounds based on these estimates and use the parameter bounds shown in Table 1.

To perform a global optimization of Eq. (15), we employ the simplicial homology global optimization (SHGO) algorithm implemented in SciPy (Endres et al., 2018; Virtanen et al., 2020). This algorithm starts with a uniform sampling of a compact multidimensional parameter space (we use the simplicial sampling strategy). The cost function is evaluated at the sample points, and a directed graph, approximating the cost function, is created by joining a Delaunay triangulation of the sample points with directions of cost increase. The key step of SHGO is then to determine local minimizers of this graph as starting points for further local optimization. The power of the algorithm is that under the condition that the cost function is Lipschitz continuous and the parameter space has been sampled sufficiently (whereby the directed graph is a sufficient representation of the cost function), the SHGO algorithm generates exactly one such starting point per local minimum. For the final iterative optimization of SHGO, we use the Nelder–Mead simplex algorithm (Nelder and Mead, 1965; Virtanen et al., 2020).

By manual investigation, we have found that setting the iteration parameter of SHGO to three, and using the boundaries previously defined, we obtain the optimum

with a final cost of ![]() = 4.496. Two-dimensional slices of the local neighborhood of this optimum are displayed in Fig. S1 of the Supplement.

= 4.496. Two-dimensional slices of the local neighborhood of this optimum are displayed in Fig. S1 of the Supplement.

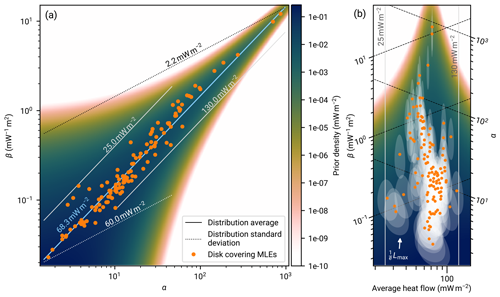

Figure 6Analysis of the global heat flow database in mW m−2 and parameter estimate of the gamma conjugate prior distribution of Eq. (8). Each dot marks the maximum likelihood estimate (MLE) of the gamma distribution parameters (αi,βi) for one of the randomly selected disk regions shown in Fig. 1 with the selection criterion of Sect. 2 applied. The solid lines mark parameter combinations of equal mean heat flow, and the dashed lines mark those with an equal standard deviation (after Thomopoulos, 2018). If the gamma distribution is assumed, global heat flow – split into disks with a radius of 80 km – can typically be described within a band of the parameter space given by a distribution average between 25 and 120 mW m−2 and standard deviation of 3 to 60 mW m−2. We capture this using the gamma conjugate prior distribution of Eq. (8) as the background color. Its parameters of , , , and stem from the minimum-surprise estimate described in Sect. 3.3.2. (a) The global mean continental heat flow of 68.3 mW m−2 is the estimate of Lucazeau (2019) from A quality data. Panel (b) shows a rotated and stretched section of the (α,β) parameter space such that the ordinate axis coincides with the average heat flow levels. The data are the same as in panel (a). Additionally we show, for each MLE, the region of the parameter space in which the corresponding likelihood is larger than times its maximum. This illustrates the uncertainty in the parameter estimates.

The prior distribution ϕ(α,β) with our MSE parameters is shown in Fig. 6. There, we also show the maximum likelihood point estimates for each of the regional aggregate heat flow distributions {Qi} from the RGRDC used in the prior distribution parameter MSE. The shape of the prior distribution in Fig. 6a does not follow the scatter of the estimates: while the are, on logarithmic scales, within a constant range of a linear slope across scales, the prior distribution widens on log scales with a decreasing α and β. The picture changes when considering the estimate uncertainties which also increase with respect to the scatter of estimates for decreasing α and β (Fig. 6b). The prior distribution thus captures the effects of the gamma distribution parameters and the parameters' sensitivities for different α and β values.

With respect to heat flow, this implies that the average heat flow, as in Eq. (7), is fairly constant for any heat flow distribution. However, the sensitivity of the overall distribution relative to the distribution parameters – and consequently the uncertainty in the distribution estimates – changes with the distribution parameters. This sensitivity is relatively lower at smaller parameter values and vice versa. If a resulting distribution is less sensitive on the parameters, then in turn the uncertainties in estimating the parameters of such a distribution will increase, as even a large change in parameters will result only in a minor change of the resulting distribution. The prior distribution reflects this behavior.

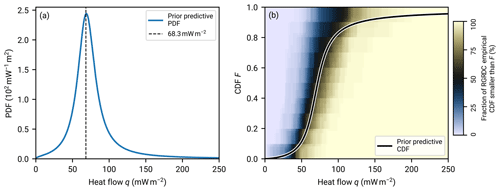

Equation (11) for the posterior predictive distribution of regional heat flow can also be evaluated for the non-updated prior distribution. Figure 7 shows the PDF and the CDF for the prior distribution parameters of Eq. (17). The mode of the PDF is close to the average heat flow of A quality data within the NGHF, 68.3 mW m−2 (Lucazeau, 2019). The prior predictive CDF follows the median CDF of the RGRDC samples fairly closely, with the exception of heat flow exceeding about 100 mW m−2. The latter is linked to the heavy tail of the PDF, which aggregates about 4.3 % probability, while the data are cut at 250 mW m−2.

Figure 7Prior predictive distribution for regional aggregate heat flow. The gamma conjugate prior distribution is parameterized as described in Eq. (17). Panel (a) shows the prior predictive PDF. The average value of A quality data from the NGHF (Lucazeau, 2019) is indicated. Panel (b) shows the prior predictive CDF. The background color shows, for each pixel in the (q,F) coordinates, the fraction of empirical cumulative distribution functions computed from the RGRDC heat flow samples at heat flow q which exceed F.

3.4 Bayesian inference of heat flow anomaly strength

We now turn to the quantification of the heat flow anomaly qa(x). This signal qa(x) is the surface heat flow signal due to a specific heat source that a researcher would like to investigate. It is implied that the surface heat flow field due to the heat source can be computed. In this article, we will use the heat flow signature of a vertical strike-slip fault with linearly increasing heat production with depth (Lachenbruch and Sass, 1980), but in principle REHEATFUNQ is agnostic to the type of surface heat flow to separate from the regional scatter. As noted in Sect. 3.1, the signal qa can be separated from the regional undisturbed heat flow by means of Eq. (3) if the heat source is weak enough not to incite nonlinear convection.

In REHEATFUNQ, the heat flow anomaly signal qa(x) is expressed by the total heat power PH that characterizes the heat source and a location-dependent heat transfer function c(x) that models the surface heat flow per unit power that is caused by the heat source. This transfer function follows by solving the relevant heat transport equation. Given a power PH and a function c(x), the heat flow anomaly contribution to the heat flow at measurement location xi is thereby

Providing the coefficients ci for each data point, by means of whichever solution technique to the heat transport equation available, is thereby the “application interface” of the REHEATFUNQ model for heat flow anomaly quantification. This is step 4 of the workflow listed in Appendix A. Note that while Eq. (18) requires the heat transport to be linear in PH, in Appendix G we note a particular case of nonlinearity in the heat transport with respect to PH that can still be addressed by REHEATFUNQ.

We can now combine the stochastic model for qu and the deterministic model for qa(PH). Treating PH as a model parameter, we perform Bayesian inference using the gamma distribution model for qu. First, we transform the heat flow measurements by removing the influence of the heat flow signature:

The data are now data of the “unknown” or “undisturbed” heat flow, for which we use the gamma model γ(qu) and its conjugate prior distribution.

Assuming the heat flow anomaly to be generated by a heat source implies the lower bound PH≥0 (0 if the heat flow data are not at all compatible with the anomaly). From Eq. (19) an upper bound on PH follows. Since we consider only positive heat flow,

for any heat flow sample iterated by j. Outside of these bounds, we assume zero probability for this value of j. The global maximum PH that can be reached across all j is

Assuming a uniform prior distribution in PH within these bounds, the full posterior distribution of the REHEATFUNQ anomaly quantification reads

To quantify the heat power PH, REHEATFUNQ uses the marginal posterior distribution in PH:

In Appendix D2, we discuss how to compute the normalization constant ℱ.

If an upper bound on the heat power PH is the aim of the investigation, the tail distribution (or complementary cumulative distribution function)

can be used. It quantifies the probability with which the heat-generating power is PH or larger.

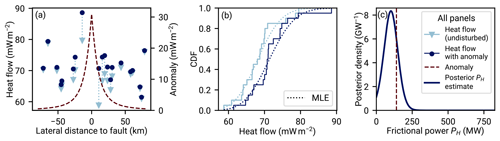

Figure 8Sketch of the Bayesian analysis of the fault-generated heat flow anomaly strength. The analysis starts out in panel (a) with heat flow measurements (dots) in spatial relation to a known strike-slip fault. The heat flow measurements within the investigated region fluctuate, and they are distributed according to a probability distribution p(q). Here we use a gamma distribution with α=180 and β=2.6354319 mW−1 m2. These undisturbed fluctuations (triangles) are superposed by the fault-generated conductive heat flow anomaly (dashed line) to yield the measurements. Both the undisturbed data and the anomaly's strength are unknown to the researcher, but the anomaly can be modeled as a function of average frictional power PH. Panel (b) shows the difference in aggregate cumulative distribution of the undisturbed heat flow and the data superposed by the anomaly. This is how REHEATFUNQ “sees” the data. Panel (c) shows the result of the REHEATFUNQ analysis. Our approach investigates the continuum of PH. Each PH corresponds to a heat flow anomaly of a different amplitude, which leads to different corrected data (from circles to triangles in panel a). The likelihood of the corrected data is evaluated against our proposed model of p(q), a gamma distribution, which leads to the posterior distribution of frictional power. In the case of this synthetic gamma-distributed data, the actual anomaly strength (vertical dashed line) is well assessed.

An illustration of the idea behind the approach in Eq. (23) is shown in Fig. 8. Panel a shows a sample of undisturbed heat flow qu drawn from a gamma distribution. This heat flow is superposed with the conductive heat flow anomaly from a vertical strike-slip fault (Lachenbruch and Sass, 1980). The result is the sample of “measured” heat flow q. Undisturbed data at the center of the heat flow anomaly are collectively shifted to higher heat flow values, while those further away from the fault are barely influenced. Within the regional aggregate heat flow distribution, the most affected data will be shifted towards the tail. This distortion of the aggregate heat flow distribution is picked up by the likelihood with the result that correcting for the heat flow anomaly of the right power of PH=140 MW (transforming the dots back to triangles in panel a) is more likely than no anomaly (PH=0 W) in the right panel.

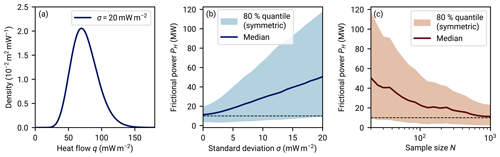

Figure 8 illustrates a core difficulty when identifying heat flow anomalies within noisy data of small sample sizes. The strength of the heat flow anomaly in this case is comparable to the intrinsic scatter of the regional aggregate heat flow distribution. This makes it difficult to identify the anomaly shape within the data. If the variance of the undisturbed heat flow is small compared to the actual magnitude of the anomaly, it becomes more and more feasible to visually identify the correct anomaly strength. Especially if the sample size is small, however, allowing for the occurrence of random fluctuations can significantly alter the interpretation of the data. The Bayesian analysis can capture all of this uncertainty in the posterior distribution of PH, yielding a powerful analysis method.

3.4.1 Providing heat transport solutions

As outlined in Sect. 3.1, steady crustal heat transport can be conductive, advective, or convective. The REHEATFUNQ model can be applied as long as the surface heat flow at the data locations is linear in the frictional power PH on the fault. The whole potentially complicated model of heat conduction from the fault to the data points can then be abstracted to the coefficients {ci}. At present, the task of computing these coefficients for use in REHEATFUNQ lies generally with the user (step 4 of the workflow listed in Appendix A). Numerical methods such as the finite element, finite difference, or finite volume method as well as analytical solutions to simplified problem geometries can be used to determine {ci} for a given problem by solving the heat transport equation of heat generated on the fault plane and dividing the surface heat flow at the data locations by the total frictional power PH on the fault.

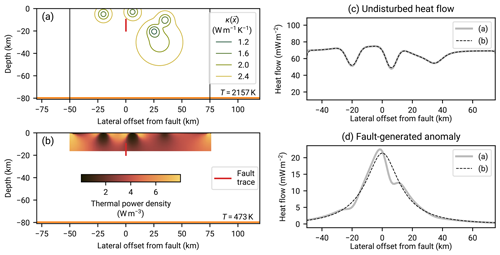

We illustrate this process using the single solution to the heat conduction equation that REHEATFUNQ presently implements: the surface heat flow anomaly generated by a vertical strike-slip fault. The solution stems from Lachenbruch and Sass (1980) and assumes a vertical fault in a homogeneous half-space medium. Furthermore, the fault is assumed to reach from depth d to the surface, and heat generation is assumed to increase linearly with depth up to a maximum Q* at depth d. In the limit of infinite time, the stationary limit, the anomaly then reads

This shape of surface heat flow is shown in the sketch of Fig. 8. In REHEATFUNQ, the anomaly is implemented based on the surface fault trace. For each data point, the distance to the closest point on this fault segment string is computed and inserted as x into Eq. (25). For an infinite straight fault line in a homogeneous half space, this coincides exactly with the analytic solution. In real-world applications, the quality of this approximation depends on the straightness of the fault and its length compared to depth and data distance from the fault, as well as the dip of the fault – shallow-dipping faults lead to asymmetric heat flow instead.

The model of Eq. (25) leads to a heat production of per unit length of the fault. We can balance this with the total heat dissipation power PH on a fault segment of length L within a region:

This finally leads to the following expression of the coefficients ci as a function of distance to the surface fault trace:

To use the surface heat flow signature of other heat sources or to include advection, one would perform similar steps. First, the heat transport equation needs to be solved. An analytical solution like Eq. (25) will not often be available, so numerical techniques can be used to directly compute qa(xi) at the data locations for a given PH. Then ci, the input values to the posterior distribution of Eq. (23), can be computed by dividing the qa(xi) by PH. The Python class AnomalyNearestNeighbor can then be used to specify the ci for use in the REHEATFUNQ Python module.

3.4.2 Heat transport uncertainty

The model for heat transport will in general be uncertain. For instance, in Eq. (26) one might be able to narrow down the depth d only to within a certain range. Or one might have an alternative model based on a different geometry and perhaps another one that includes a small amount of groundwater advection. Such uncertainties in parameter values and model selection can be accounted for in REHEATFUNQ.

The interface to do so is via the coefficients ci. The user can provide a set

of K solutions to the heat transport from source to heat flow data points. Each set {ci}k should contain a number N of coefficients ci equal to the total number of heat flow data points before applying the dmin sampling (effectively this is a K×N matrix (cki)). The weights wk quantify the probability that the user assigns to the heat transport solution k. In this way, k iterates a discretization of the N-dimensional probability distribution of the coefficients ci.

Internally, REHEATFUNQ then uses a latent parameter to iterate the combinations of the latent parameter j with the index k (another latent parameter). Then, in all previous equations, the index j is replaced with k, the sets ℐj are replaced with the set ℐj(k) belonging to the index j that k iterates, and the coefficients ci are replaced with cki. This effectively adds the k dimension to the REHEATFUNQ posterior distribution.

Since m×K is a possibly very large number – even j itself may be too large to iterate exhaustively and would hence be Monte Carlo sampled – only a user-provided maximum number of random indices l will be used in the sums.

The previous methodology section described the idea behind considering regional aggregate heat flow as a random variable and set out straightforwardly to describe the REHEATFUNQ gamma model and its prior distribution parameter estimation. Yet, no physical basis has been provided for the choice of a gamma distribution besides a number of general properties that the gamma distribution, among others, fulfills. In this section, we provide a posteriori support for this choice.

In Sect. 4.1.1–4.1.3, the NGHF (Lucazeau, 2019) will be used to investigate whether the REHEATFUNQ gamma model is suitable for the description of real-world heat flow data. The analysis reveals a degree of misfit for which we investigate possible causes. Finally, we compare the gamma model to other two-parameter univariate probability distributions.

In Sect. 4.2 and its subsections, we analyze synthetic data, allowing us to leverage large sample sizes. We investigate how well REHEATFUNQ can quantify heat flow anomalies both if the regional aggregate heat flow were gamma distributed, that is, according to the model assumptions, and if the regional heat flow were to follow some strongly gamma-deviating mixture distributions found in the NGHF in Sect. 4.1.1. Furthermore, we investigate the impact of the prior distribution parameters on the anomaly quantification.

In Sect. 4.3 and its subsections, we discuss some physical limitations of the REHEATFUNQ model.

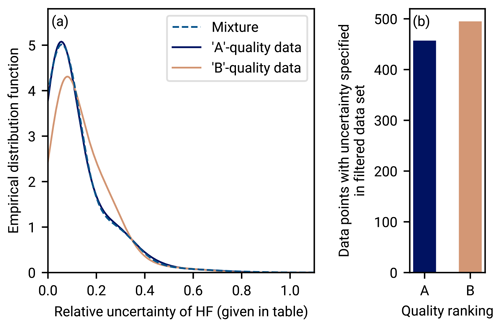

Figure 9Distribution of relative error in the NGHF database for A and B quality data. We show only A and B quality data according to our data filtering described in Sect. 2.1. Panel (a) shows the distribution of relative uncertainty for data records of A and B quality, from the filtered NGHF database, for which an uncertainty is specified. The dashed line shows a mixture distribution of three normal distributions that approximates the relative error distribution of the A quality data. The parameters of the mixture are means , standard deviations , and weights . Panel (b) shows a histogram of the number of data records of A and B for which uncertainty is specified and which pass our data selection criteria.

4.1 Validation using real-world data

4.1.1 Goodness of fit: region size

Interpreting the regional heat flow as a stochastic, fluctuating background heat flow introduces a potential trade-off in the region size. On the one hand, considering heat flow data points across a larger area increases the number of heat flow measurements, which can increase the statistical significance of the analysis. In particular when investigating fault-generated heat flow anomalies, data points further away from the fault, say >20 km (see Fig. 8), are less influenced by the fault heat flow and can hence better quantify the background heat flow that is not disturbed by the heat flow anomaly. On the other hand, increasing the region size makes the analysis more susceptible to capturing large-scale spatial trends. These trends may introduce correlations or clustering between the data points which are not captured by the stochastic model. Conversely, using smaller region extents will improve the quality of approximating large-scale spatial trends as uniform. We will now set out to find a compromise between these effects by finding a region size in which we can hope to apply the gamma model for regional aggregate heat flow distributions.

Our goodness-of-fit analysis by region size region is based on RGRDCs (see Appendix B). For each regional aggregate heat flow distribution, we investigate how well the sample can be described by the gamma distribution. Control over the radius R allows us to investigate the fit over various spatial scales.

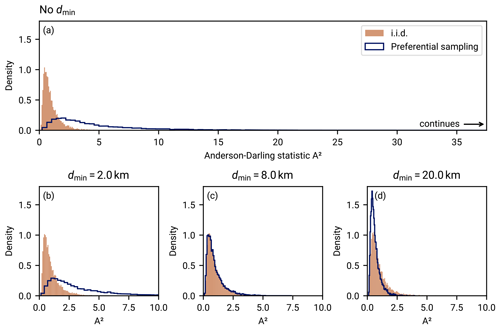

We performed tests based on the empirical distribution function (EDF tests; Stephens, 1986) to investigate the goodness of fit. We have used the Kolmogorov–Smirnov (KS) and Anderson–Darling (AD) test statistics, and we have applied them for the case that both parameters α and β are unknown (“case 3” of Stephens, 1986). We calculated critical tables for the test statistics covering the sample sizes and maximum likelihood estimate shape parameters that we encountered in the RGRDCs since the tables are independent of β as a scale parameter (David and Johnson, 1948). The critical tables yield the values for both test statistics that are exceeded at a certain rate if the data stem from a gamma distribution (we chose a 5 % rejection rate). This rejection rate means that if N samples of size M are drawn from a gamma distribution with shape α, the KS test statistic exceeds the value read from the KS critical table for that M and α in 5 % of the samples. The same holds for the AD statistic and the AD critical table. Hence if regional aggregate heat flow distributions were gamma distributed, we would expect 5 % of disks to be rejected by the tests. Higher rejection rates (number of rejected samples number of samples) indicate that they are not gamma distributed.

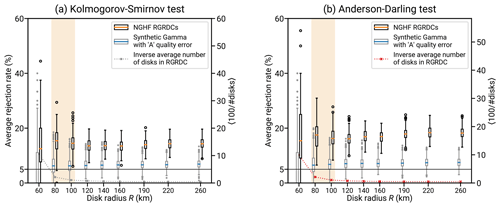

Figure 10Investigating the fit of various probability distributions to the NGHF (Lucazeau, 2019) at different spatial scales. Both sides analyze the same data sets using goodness-of-fit (GOF) tests for the gamma distribution (e.g., Stephens, 1986). First, we analyze RGRDCs of the NGHF data set (defined in Appendix B). For each disk of a global covering, a GOF test is performed for the distribution of heat flow within. The average rejection rate is the fraction of disks within a covering for which the gamma hypothesis is rejected at the α = 5 % level. For sufficiently large samples from a gamma distribution, this rate would converge to 5 %. The black box plots show, for each indicated R, the distribution of these rejection rates over 200 generated coverings. The gray box plots show the same distribution for synthetic gamma-distributed global coverings (details in Appendix B1). Known processes affecting the used part of the NGHF data set (250 mW m−2 threshold, discretization, and typical uncertainty) have been simulated. The box plots show the median (colored bar), quartiles (extent of the box), up to 1.5 times the interquartile range (whiskers), and outliers thereof. The box plot shows a separation of the two rejection rate distributions, indicating that there are patterns in the real heat flow data that cannot be explained by a gamma distribution and uncertainty. As R decreases, the discrepancy decreases as well until at R≲80 km, the R disks contain too few data points to resolve the average rejection rate properly (illustrated by the dashed line showing the average of 100 divided by the number of disks in a covering).

Figure 10 shows the results of the goodness-of-fit analysis. Two R-dependent effects can be observed in Fig. 10: at a small R of <80 km, the scatter of small sample sizes becomes dominant. There are just too few regions remaining. For an increasing R, the rate of rejecting the gamma distribution hypothesis increases slightly.

A striking observation is that for all of the region sizes, the rejection rate is larger than 5 % (centered at about 15 %) and the fluctuations in rejection rates across different RGRDCs do not alter that conclusion. Regional aggregate heat flow is not generally gamma distributed.

This deviation is not due to known heat flow data uncertainty. To test whether the heat flow data uncertainty might be the cause of the elevated rate of rejections, we have performed a synthetic analysis using synthetic RGRDCs generated by the algorithm in Appendix B1. After generating gamma-distributed random values similar to RGRDC data, relative error following the uncertainty distribution of A and B data (shown in Fig. 9) is added to the data. The resulting rejection rates show a spread similar to that of the NGHF data, but the bias is small (the median of the rejection rates across synthetic RGRDCs never exceeds ∼8 %). Consequently, unbiased random error as specified for the heat flow data within the NGHF is not sufficient to describe the ∼15 % rejection rate of the gamma model.

The impact that this imperfect model of regional aggregate heat flow has on the accuracy of the results is not immediately clear. On the one hand, using a wrong model to analyze the data suggests a detrimental impact on the accuracy. On the other hand, if the model is close enough, the method might be accurate up to a desirable precision. Later in Sect. 4.2.2 we investigate, using synthetic data, how well REHEATFUNQ can quantify heat flow anomalies when regional aggregate heat flow data is decidedly non-gamma distributed.

Before these synthetic investigations, the following sections investigate potential causes for the deviation from a gamma distribution in Sect. 4.1.2 and test whether other parsimonious models for the regional aggregate heat flow distribution perform better than the gamma distribution on RGRDCs of the NGHF data set in Sect. 4.1.3.

4.1.2 Goodness of fit: the level of misfit from mixture models

Following the observation that the gamma distribution is not a general description of regional aggregate heat flow distributions, we investigate potential causes for this misfit and how large the deviation from a gamma distribution has to be to produce the ∼15 % rejection rates of the previous section.

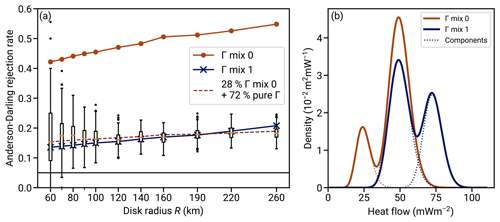

We find that the mismatch could be explained by mixture distributions. Figure 11 shows the same RGRDCs Anderson–Darling rejection rate as Fig. 10 and additionally the rejection rate computed for two mixtures of two gamma distributions each. The two mixture distributions are synthetic but cover the range of typical heat flow values, and the samples drawn from them have the sample size distribution as the RGRDCs. One distribution (“mix 0”) has less overlap between the two peaks than the other and leads to large rejection rates of ∼80 %. The other, “mix 1”, has more overlap between the peaks, and they are more equally weighted. This mixture model matches the observed rejection rates across the NGHF data RGRDCs very closely. Similar mixture models could hence be a possible cause for the observed rejection rates across the NGHF if the heat flow were indeed gamma distributed.

The mixture distribution can arise in the real heat flow data if the disk intersects a boundary between two regions of different heat flow characteristics. Since radiogenic heat production in the relevant upper crust can vary on the kilometer scale (Jaupart and Mareschal, 2005), such an occurrence seems plausible. The occurrence of a boundary intersection mixture might be frequent and with a smaller difference between the modes (corresponding to mix 1), or it might be infrequent but with a larger inter-mode distance (dashed line in Fig. 11). Both cases are compatible with the statistics observed in the NGHF data RGRDCs.

Figure 11Exploring the misfit between the gamma distribution model of regional heat flow and the NGHF data. (a) Box plots show the fraction of heat flow distributions from RGRDCs of the NGHF database for which the gamma distribution hypothesis is rejected at the 5 % level (same data as Fig. 10b). The solid lines with dots show the same fraction of rejections computed for two sets of 10 000 samples each, drawn from the two gamma mixture models shown in panel (b) (colors corresponding). Each sample from the mixture distributions has its size drawn from an NGHF RGRDC of the corresponding R, replicating the sample size structure derived from the NGHF. The dashed line in the left plot shows the case if 72 % of the samples were gamma distributed (5 % rejection rate, horizontal black line), while 28 % of the samples were draft from mixture model 0. (b) The dotted lines indicate the two gamma distributions comprising each mixture. The parameters are w0=0.2, k0=25, θ0=1 mW m−2, k1=50, and θ1=1 mW m−2 for Γ0 (where w0 is the weight of the zero-index component) and w0=0.4, k0=128, θ0=0.57 mW m−2, k1=50, and θ1 = 1 mW m−2 for Γ1.

The match with the observed rejection rates is not conclusive evidence that the heat flow data within the regions follow gamma mixture distributions. It is likely that many different distributions could be constructed that lead to similar rejection rates. However the match is a good indication of how large the deviation between the underlying distribution and the simple gamma model is. Somewhere between “gamma mix 0” and “gamma mix 1” lies a critical point in terms of mode separation beyond which the distribution would depart further from a gamma distribution than what is observed in the NGHF data RGRDCs.

At this point, we can summarize that heat flow in disks with a radius of 60 to 260 km is not generally gamma distributed and this is not an artifact of data processing or uncertainty. Mixtures of gamma distributions within the disks, for instance representing variation on smaller scales below the smallest radius we can investigate, could explain the mismatch. Moreover, the mixtures indicate the level of mismatch that would lead to the statistics observed in the real heat flow data.

We proceed in Sect. 4.1.3 by investigating whether other two-parameter univariate probability distributions perform better in describing the real-world regional aggregate heat flow distributions. Later in Sect. 4.2.2, we will investigate the impact that the misfit of the gamma model has on the quantification of heat flow anomalies. This will drive the model despite the mismatch to observed data until a physics-derived alternative becomes available.

4.1.3 Comparison with other distributions

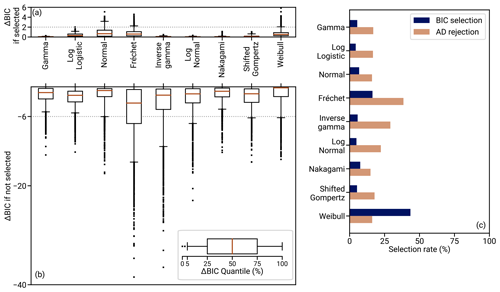

We compare the performance of the gamma distribution with a number of common probability distributions. This aims to investigate whether the mismatch can be resolved by choosing another simple probability distribution or whether the gamma distribution performs well in terms of a simple model.

The comparison is performed at the transition point between an insufficient sample size, as the radius decreases, and increasing misfit, as the radius increases. The radius is 80 km. Even though the global heat flow database of Lucazeau (2019) is large, the number of samples within a disk of R=80 km is rather small at a 20 km minimum distance between the data points (typically 11). Therefore, we focus our analysis on two-parameter models.

We generate samples from the NGHF using the RGRDCs described in Appendix B. To each disk's sample, we fit probability distribution candidates using maximum likelihood estimators and compute the Bayesian information criterion (BIC; Kass and Raftery, 1995). The probability distribution with the smallest BIC is the most favorable for describing the subset, and the absolute difference ΔBIC to the BIC of the other distributions indicates how significant the improvement is. In our particular case, ΔBIC depends only on differences in the likelihood since all investigated distributions are two-parametric. We repeat the process 1000 times to prevent a specific random regional heat flow sample selection skewing the results (see Figs. S9–S11 in the Supplement for a convergence analysis).

Due to the right-skewed shape of the global distribution (see Fig. 1b), we test a range of right-skewed distributions on the positive real numbers: the Fréchet, gamma, inverse gamma, log-logistic, Nakagami's m, shifted Gompertz, log-normal, and two-parameter Weibull distribution (Bemmaor, 1994; Leemis and McQueston, 2008; Kroese et al., 2011; Nakagami, 1960). The global distribution does not have to be representative of the regional distributions, however. Since the global distribution is a mixture of the regional distributions, only the weighted sum of the regional distributions needs to have the right-skewed shape. Therefore, we additionally test the normal distribution (e.g., used by Lucazeau, 2019).

In Fig. 12c, the results of the analysis are visualized using the rate of BIC selection, that is, the fraction of regional heat flow samples for which the hypothesized distribution has the lowest BIC. Furthermore in panel a, the distribution of ΔBIC to the second-lowest scoring distribution is shown for the samples in which each distribution is selected, and in panel b the distribution of (negative) ΔBIC to the selected distribution is shown for the samples in which each distribution is not selected. The Weibull distribution has the highest selection rate, followed by the Fréchet distribution. Combined, they account for roughly 60 % of all selections. Together with the normal distribution and one outlier of the log-logistic distribution, they are the only distributions with occurrences of ΔBIC > 2, which might be considered “positive evidence” (Kass and Raftery, 1995, p. 777). However, these ΔBIC > 2 instances occur only in less than 4.4 % of the total subsets for each of the three distributions. Therefore, no distribution is unanimously best at describing the regional heat flow.

The ΔBIC for regions in which a distribution is not selected leads to a different selection criterion: if a distribution is not the best-scoring distribution, how much worse than the best is it? These differences are generally more pronounced than the differences in the best to the second-best fitting model. Especially the Fréchet and inverse gamma distribution perform much (strong evidence at ΔBIC > 6, Kass and Raftery, 1995, p. 777) worse than the better-fitting distributions in more than 50 % of the cases in which they are not selected. The generally least worst performing models are the gamma, log-logistic, normal, Nakagami, and Weibull distribution. Their negative ΔBIC distributions have only minor differences, and different ones perform better depending on the quantile of the negative ΔBIC investigated.

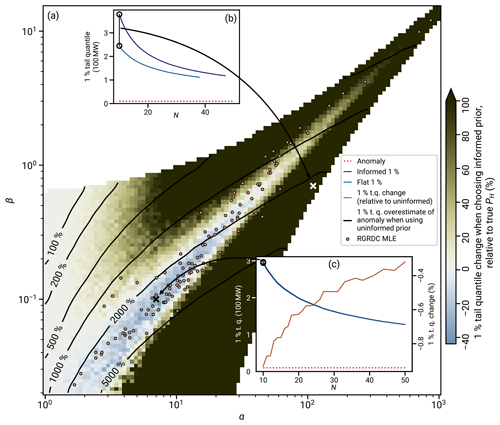

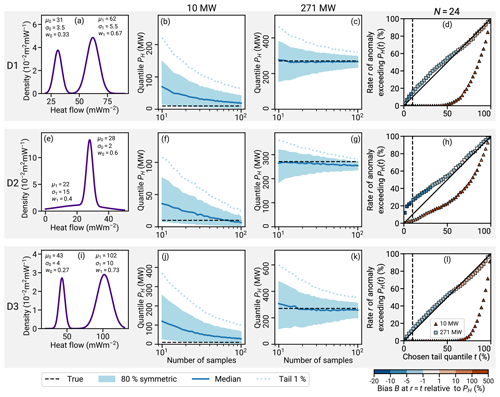

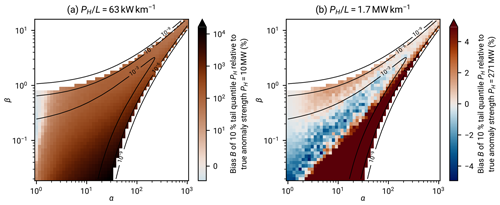

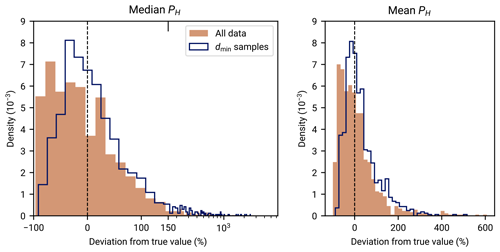

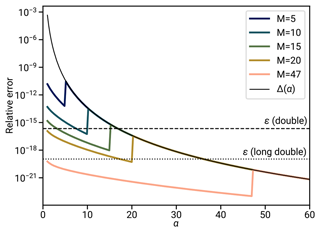

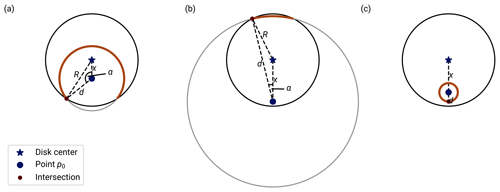

To conclude, the gamma distribution is among the best-performing distributions in terms of a consistently good description of the data. There are no significant differences between the distributions in terms of fitting the data that would favor any of the other distributions over the gamma distribution. Up until the typical shape of the regional aggregate heat flow distribution is derived from physical principles, the choice among the set of the best-performing distributions remains a modeling decision. Here, the gamma distribution is the only distribution of the best-performing set that fulfills all three of the following criteria: (1) it is defined by positive support; (2) it has a conjugate prior distribution for enabling costly computations; and (3) it is right-skewed, like the global heat flow distribution, for all parameter combinations. We hence choose the gamma distribution within REHEATFUNQ.