the Creative Commons Attribution 4.0 License.

Overcoming computational challenges to realize meter- to submeter-scale resolution in cloud simulations using the super-droplet method

Toshiki Matsushima

Seiya Nishizawa

Shin-ichiro Shima

A particle-based cloud model was developed for meter- to submeter-scale-resolution simulations of warm clouds. Simplified cloud microphysics schemes have already made meter-scale-resolution simulations feasible; however, such schemes are based on empirical assumptions, and hence they contain huge uncertainties. The super-droplet method (SDM) is a promising candidate for cloud microphysical process modeling; it is a particle-based approach that makes fewer assumptions about the droplet size distributions. However, meter-scale-resolution simulations using the SDM are not feasible even on existing high-end supercomputers because of high computational cost. In the present study, we overcame challenges to realize such simulations. The contributions of our work are as follows: (1) The uniform sampling method is not suitable when dealing with a large number of super-droplets (SDs). Hence, we developed a new initialization method for sampling SDs from a real droplet population. These SDs can be used for simulating spatial resolutions between meter and submeter scales. (2) We optimized the SDM algorithm to achieve high performance by reducing data movement and simplifying loop bodies using the concept of effective resolution. The optimized algorithms can be applied to a Fujitsu A64FX processor, and most of them are also effective on other many-core CPUs and possibly graphics processing units (GPUs). Warm-bubble experiments revealed that the throughput of particle calculations with the improved algorithms is 61.3 times higher than that of the original SDM. In the case of shallow cumulus, the simulation time when using the new SDM with 32–64 SDs per cell is shorter than that of a bin method with 32 bins and comparable to that of a two-moment bulk method. (3) Using the supercomputer Fugaku, we demonstrated that a numerical experiment with 2 m resolution and 128 SDs per cell covering a $13\,824^2 \times 3072$ m$^3$ domain is possible. 
The numbers of grid points and SDs are 104 and 442 times, respectively, those of the highest-resolution simulation performed so far. Our numerical model exhibited 98 % weak scaling on 36 864 nodes, accounting for 23 % of the total system. The simulation achieved 7.97 PFLOPS overall (7.04 % of the peak performance) and an SDM throughput of $2.86 \times 10^{13}$ particle ⋅ steps per second. Several challenges, such as incorporating mixed-phase processes, inclusion of terrain, and long-time integrations, remain, and our study will also contribute to solving them. The developed model enables us to study turbulence and microphysics processes over a wide range of scales using combinations of direct numerical simulation (DNS), laboratory experiments, and field studies. We believe that our approach advances the scientific understanding of clouds and contributes to reducing the uncertainties of weather simulation and climate projection.


Shallow clouds greatly affect the Earth's energy budget, and they are one of the essential sources of uncertainty in weather prediction and climate projection (Stevens et al., 2005). Since various processes affect the behavior of clouds, understanding the individual processes and their interactions is critical. In particular, cloud droplets interact with turbulence over a wide range of scales (Bodenschatz et al., 2010) in phenomena such as entrainment and mixing as well as enhancement of the collisional growth of droplets. Hence, numerical modeling of these processes and model evaluation toward the quantification and reduction of uncertainty are challenges in the fields of weather and climate science.

Meanwhile, accurate numerical simulations of stratocumulus clouds are difficult because of the presence of a sharp inversion layer on the scale of several meters. Mellado et al. (2018) suggest that combining the direct numerical simulation (DNS) approach, which solves the original Navier–Stokes equations while changing only the kinematic viscosity (or Reynolds number) among the atmospheric parameters, and the large-eddy simulation (LES) approach, which solves low-pass-filtered Navier–Stokes equations for unresolved flow below filter length, can accelerate research on related processes. Following Mellado et al. (2018), Schulz and Mellado (2019) investigated the joint effect of droplet sedimentation and wind shear on cloud-top entrainment and found that their effects can be equally important for cloud-top entrainment, while Akinlabi et al. (2019) estimated turbulent kinetic energy. However, since they used saturation adjustment for calculating clouds, their results do not include the influence of detailed microphysics processes and their interactions with entrainment and mixing as well as supersaturation fluctuations (Cooper, 1989), which in turn affect the radiation properties.

To incorporate the details of cloud processes into such simulations, it is essential to remove the empirical assumptions on the droplet size distributions (DSDs) rather than using a bulk cloud microphysics scheme. We should use a sophisticated microphysical scheme such as a bin method and a particle-based Lagrangian cloud microphysical scheme. In particular, herein, we focus on the particle-based super-droplet method (SDM) developed by Shima et al. (2009). If meter- to submeter-scale-resolution simulations could be performed using sophisticated microphysical schemes in large domains, we could use a DNS-based approach (Mellado et al., 2018) to simulate clouds and compare these simulations with small-scale numerical studies (Grabowski and Wang, 2013) and observational studies on a laboratory scale (Chang et al., 2016; Shaw et al., 2020) to field measurement (Brenguier et al., 2011) scales. Such simulations may help understand the origins of the uncertainty in the clouds and their interactions with related processes. However, in reality, only relatively low-resolution simulations are possible using sophisticated microphysical schemes owing to their high computational cost. For example, Shima et al. (2020) recently extended the SDM to predict the morphology of ice particles and reported that the computational resources of the mixed-phase SDM are 30 times that of the two-moment bulk method of Seiki and Nakajima (2014). To the best of the authors' knowledge, the previous studies by Sato et al. (2017, 2018) are possibly the state-of-the-art numerical experiments on the largest computational scale yet. To investigate the sensitivity of nonprecipitating shallow cumulus to spatial resolution, they performed numerical experiments with spatial resolutions up to 6.25 m and 5 m (horizontal and vertical, respectively) with 30 super-droplets (SDs) per cell using the supercomputer K. 
They found that the highest spatial resolution used in their study is sufficient for achieving grid convergence of the cloud cover but not for the convergence of cloud microphysical properties. For solutions of the microphysical properties to converge with increasing spatial resolution, it is necessary to reduce the vertical grid length (Grabowski and Jarecka, 2015) for simulating the number of activated droplets accurately and to maintain the aspect ratio of the grid length close to 1 for turbulence statistics (Nishizawa et al., 2015).

Nevertheless, using a sophisticated microphysical scheme for meter-scale-resolution simulations remains a challenge. One approach to cope with this difficulty is to await the development of faster computers. However, single-core CPU performance is no longer increasing according to Moore's law. Therefore, to take advantage of state-of-the-art supercomputers, we must adapt our numerical models to their hardware design. Another solution to overcome the challenge is to use the rapidly advancing data scientific approaches. Seifert and Rasp (2020) developed a surrogate model of cloud microphysics from training data using machine learning. Tong and Xue (2008) estimated the parameters of conventional cloud microphysics models through data assimilation to quantify and reduce parameter uncertainty. However, these methods cannot make predictions beyond the training data or enhance the representation power of the bulk cloud microphysics schemes. The Twomey SDM proposed by Grabowski et al. (2018) could be used to reduce the computational cost of a sophisticated model; in this SDM, only cloud and rain droplet data are stored as SDs. However, the Twomey SDM cannot incorporate the hysteresis effect of haze droplets (Abade et al., 2018). Incorporating this effect is necessary for reproducing entrainment and detrainment when eddies cause the same droplets to activate or deactivate in a short time at the cloud interface. In addition, since clouds localize at multiple levels of hierarchy – from a single cloud to cloud clusters – appropriate load balancing is necessary for large-scale problems using domain decomposition parallelization if the computational cost for cloud and rain droplets is high. To the best of our knowledge, load balancing has not been applied to the SDM, even though some studies have applied it to other simulations, such as plasma simulations (Nakashima et al., 2009). 
Like some plasma simulations, the SDM solves partial differential equations that describe a coupled system of particles and cell-averaged variables, an approach known as the particle-in-cell (PIC) method. However, applying dynamic load balancing to weather and climate models is not a good option because such codes are complicated and the changes it requires can affect the computational performance of other components.

In this study, we attempted meter-scale-resolution cloud simulations with a sophisticated microphysical scheme by optimizing and improving the SDM. Although crucial, this approach is often regarded as merely technical and has not been explored much. Our approach is based on the SDM, which is robust to the difficulties caused by dimensionality for more complex problems and is free from the numerical broadening of the DSD; furthermore, it can be used even when the Smoluchowski equation for collisional growth of droplets is invalid for small coalescence volumes (see Grabowski et al., 2019; Morrison et al., 2020 and the references therein). We focus on optimization on the Fujitsu A64FX processor, which is used in the supercomputer Fugaku. We designed cache-efficient codes and overcame the difficulties in achieving high performance for the PIC method based on the domain knowledge. To achieve this goal, we reduced data movement and applied single-instruction multiple-data (SIMD) parallelization to most calculations.

In addition, there are two potentially important aspects of model improvement for meter- to submeter-scale-resolution experiments with the SDM. One aspect is the initialization for the SDM. In the SDM, we need to sample representative droplets from many real droplets to calculate the microphysical processes. Shima et al. (2020) used an importance-sampling method to sample rare-state SDs more frequently to improve the convergence of calculations of collision–coalescence. However, when we sample many SDs for meter-scale-resolution simulations, their number may exceed the number of real droplets for rare-state SDs. The second aspect is SD movement. In the SDM, the divergence at the position of SDs calculated from interpolated velocity should be identical to that at the cell to ensure consistency in changes in SD number density and air density (Grabowski et al., 2018). However, the interpolation used by Grabowski et al. (2018) only achieves first-order spatial accuracy, and the effects of vortical and shear flows within a cell are not incorporated in SD movement. Therefore, their scheme can introduce large errors in the particle mixing calculations and deteriorate the grid convergence of the mixing calculations, which may affect the larger-scale phenomena due to the interactions between eddies and microphysics.

The remainder of this paper is organized as follows. In Sect. 2, we describe and review the basic equations used in our numerical model called the SCALE-SDM, target problem, and computers to be used. Section 3 describes the main contributions in this study for optimizing and improving the SDM. For this purpose, we first describe the domain decomposition. Subsequently, we describe the development of a new initialization method for the SDM and describe optimizations of each process in the SDM (SD movement, activation–deactivation, condensation–evaporation, collision and coalescence, and sorting for SDs). In Sect. 4, we evaluate the computational and physical performances of the new SCALE-SDM in two test cases. We also compare our results with those obtained with the same numerical model using a two-moment bulk and bin methods as well as with those obtained using the original SCALE-SDM. In Sect. 5, we evaluate the applicability of our model to large-scale problems through weak scaling and discuss the detailed computational performance. Section 6 discusses the challenges for incorporating mixed-phase processes, the inclusion of the terrain, and long-time integration. We also discuss the possibilities of achieving further high performance in current and future computers. We summarize our main contributions in Sect. 7.

2.1 Governing equations

We use the fully compressible nonhydrostatic equations as the governing equations for atmospheric flow. To simplify the treatment of water in the SDM, only moist air (i.e., dry air and vapor; aerosol particles or cloud and/or rain droplets are excluded) is considered in the basic equations for atmospheric flow. The fully compressible equations require a time step that is $20^{-1}$–$10^{-1}$ times the advection time step needed to solve anelastic equations. However, they are advantageous when numerous message passing interface (MPI) processes are used because they do not require collective communications.

The basic equations are discretized using a finite-volume method on the Arakawa C-grid. For solving the time evolution of dynamical variables and the water vapor mass mixing ratio, the fifth-order upwind difference scheme (UD5) and the second-order central difference scheme discretize the advection terms and pressure gradient terms in the momentum equations, respectively. We use the flux-corrected transport (FCT) scheme (Zalesak, 1979) to ensure only positive definiteness for the water vapor mass mixing ratio.

The time evolutions of dynamical variables during the Δt interval are split into short time steps Δtdyn associated with acoustic waves and longer time steps for tracer advection Δtadv and physical processes Δtphy. The classical four-stage fourth-order Runge–Kutta method is used for short time steps, and the three-stage Runge–Kutta method (Wicker and Skamarock, 2002) is used for tracer advection. Unless otherwise noted, . Changes in the dynamic variables caused by physical processes are calculated using tendencies, which are assumed to be constant during Δtadv.

The SDM is used as a cloud microphysics scheme. In this study, only warm cloud processes are considered: movement, activation–deactivation, condensation–evaporation, and collision–coalescence. Spontaneous and collisional breakup processes were not considered here. In the SDM, each SD has a set of attributes that represent droplet characteristics. In this case, the data on SDs necessary to describe time evolution are the position in 3D space x, droplet radius R, number of real droplets (which we refer to as multiplicity ξ), and the aerosol mass dissolved in a droplet M. The ith SD moves according to the wind and falls with terminal velocity, assuming that the velocity of each SD reaches the terminal velocity instantaneously:

$$\frac{d\boldsymbol{x}_i}{dt} = \boldsymbol{v}_i = \boldsymbol{U}(\boldsymbol{x}_i) - v^{\infty}(\rho, P, T, R_i)\,\boldsymbol{e}_z, \tag{1}$$

where U is the air velocity at the position x, ρ is the air density, P is the atmospheric pressure, T is the temperature, ez is the unit vector in the vertical positive direction, v∞ is the terminal velocity, v is the velocity of the SD, and t is the time. The midpoint method is used for time integration to solve Eq. (1). We also need to specify a method for determining the velocity U at the position of the SDs, which will be described in Sect. 3.3.2.
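As an illustration of the midpoint time integration mentioned above, the following minimal sketch advances one SD whose velocity is the air velocity minus the terminal velocity along the vertical. The velocity field `air_velocity` (a solid-body rotation) is hypothetical, and the terminal velocity is held constant during the step, which is an assumption made only to keep the example short; it is not the SCALE-SDM implementation.

```python
import numpy as np

def air_velocity(x):
    """Hypothetical air velocity U(x): solid-body rotation in the x-z plane."""
    return np.array([-x[2], 0.0, x[0]])

def sd_velocity(x, v_term):
    """SD velocity: air velocity minus the terminal velocity along e_z."""
    e_z = np.array([0.0, 0.0, 1.0])
    return air_velocity(x) - v_term * e_z

def midpoint_step(x, v_term, dt):
    """Advance one SD position by dt with the explicit midpoint method."""
    x_half = x + 0.5 * dt * sd_velocity(x, v_term)  # half step with the start velocity
    return x + dt * sd_velocity(x_half, v_term)     # full step with the midpoint velocity

x0 = np.array([1.0, 0.0, 0.0])
x1 = midpoint_step(x0, v_term=0.0, dt=0.1)
```

The midpoint evaluation is what gives second-order accuracy in time; a forward Euler step would use `sd_velocity(x, v_term)` directly.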

Activation–deactivation and condensation–evaporation are represented by assuming that the SD radius R evolves according to the Köhler theory:

$$R_i \frac{dR_i}{dt} = A \left[ (S - 1) - \frac{a}{R_i} + \frac{b}{R_i^{3}} \right], \tag{2}$$

where S is the saturation ratio; A is a function of the temperature at the position, and it depends on the heat conductivity and vapor diffusivity. The terms $a/R_i$ and $b/R_i^{3}$ represent the curvature effect and the solute effect, respectively. The ventilation effect is ignored in Eq. (2). See Shima et al. (2020) for the specific forms of A, a, and b since they are not important here. A method to solve Eq. (2) is described in Sect. 3.3.3.

The collision–coalescence process is calculated using the algorithm proposed by Shima et al. (2009). The volume in which SDs are well mixed and capable of colliding is set to have the same size as the control volume of the model grid. If we consider all possible pairs of SDs ($N(N-1)/2$, where N is the number of candidate SDs) to calculate collision–coalescence, the computational complexity is of order $O(N^2)$. However, their method considers only nonoverlapping pairs of SDs to reduce the computational complexity to the order of $O(N)$. Because fewer pairs are examined, the total coalescence probability would be underestimated; the probability of each examined pair is therefore scaled up to make it consistent with the actual probability. Indeed, Unterstrasser et al. (2020) showed that the method proposed by Shima et al. (2009), which they referred to as the all-or-nothing algorithm with linear sampling, is suitable for problems when computational time is critical.
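The pair selection described above can be sketched as follows. This is a minimal illustration, not the SCALE-SDM code: the function names are hypothetical, and the scaling factor, the ratio of all $N(N-1)/2$ pairs to the $\lfloor N/2 \rfloor$ examined pairs, is our reading of the correction in Shima et al. (2009).

```python
import random

def candidate_pairs(n_sd, rng):
    """Return floor(n_sd / 2) nonoverlapping index pairs from a random permutation."""
    order = list(range(n_sd))
    rng.shuffle(order)
    return [(order[2 * k], order[2 * k + 1]) for k in range(n_sd // 2)]

def probability_scale(n_sd):
    """Ratio of all possible pairs, N(N-1)/2, to the examined pairs, floor(N/2)."""
    return (n_sd * (n_sd - 1) / 2) / (n_sd // 2)

rng = random.Random(0)
pairs = candidate_pairs(7, rng)   # 3 pairs; one SD is left unpaired
scale = probability_scale(7)      # 21 possible pairs / 3 examined pairs
```

Each SD appears in at most one pair, so the pairs can be processed independently, and the work per cell grows linearly with the number of SDs.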

The Smagorinsky–Lilly scheme with the stratification effect (Brown et al., 1994) is used as a turbulent scheme for LES. In the SDM, we do not consider the effect of turbulent fluctuations on movement, activation–deactivation, condensation–evaporation, and collision–coalescence due to the high additional computational cost and memory space required to consider these effects. However, the effect of subgrid motion (or Brownian motion modeled for kinematic viscous diffusion) should be included to ensure the convergence to DNS with ξ→1 while fixing the spatial grid length (Mellado et al., 2018); this will be addressed in future work.

2.2 Target problem

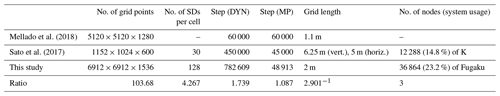

We describe the final target problem in this study and compare the problem size with that considered in Mellado et al. (2018) and Sato et al. (2017); high-resolution numerical experiments on shallow clouds were performed in these studies. Mellado et al. (2018) used the numerical settings of the first research flight of the second Dynamics and Chemistry of Marine Stratocumulus field campaign (DYCOMS-II RF01) (Stevens et al., 2005) to simulate nocturnal stratocumulus. Sato et al. (2017) used the numerical settings of the Barbados Oceanographic and Meteorological Experiment (BOMEX) (Siebesma et al., 2003) to simulate shallow trade-wind cumulus. In this study, we simulated the BOMEX case but with much higher resolutions. The main computational parameters of the two previous studies and our study are listed in Table 1. Here, the numbers of the time steps for 1 h time integration are shown in the third and fourth columns of the table.

Table 1. Comparison of model computational configurations among previous studies (Mellado et al., 2018; Sato et al., 2017) and this study. The last row shows the ratios of the parameters used in Sato et al. (2017) to those used in this study.

Mellado et al. (2018) used anelastic equations with saturation adjustment for calculating clouds. They performed large-scale numerical experiments using a petascale supercomputer (Blue Gene/Q system supercomputer JUQUEEN at Jülich Supercomputing Centre). We also note that similar numerical experiments with a large number of grid points () were performed by Schulz and Mellado (2019) using the same supercomputer. Meanwhile, Sato et al. (2017) used fully compressible equations with the SDM and performed 6.25 and 5 m resolution simulations of BOMEX using the petascale supercomputer K. The number of time steps for the dynamical process used in Sato et al. (2017) is 1 order of magnitude larger than that used in Mellado et al. (2018). In addition, because of the high computational cost of the SDM, Sato et al. (2017) used fewer grid points though they used 14.8 % of the total system of supercomputer K. We performed meter-scale-resolution numerical simulations of BOMEX with times higher resolution, 104 times more grid points, and 442 times more SDs than Sato et al. (2017), thereby using 23.8 % of the total system of the supercomputer Fugaku. The computational performance of this simulation will be described in detail in Sect. 5.2.

2.3 Target architecture

In this study, we mainly used computers equipped with Fujitsu A64FX processors to evaluate the computational and physical performance of the new model, SCALE-SDM. In this section, we summarize the essential features and functions of the computers.

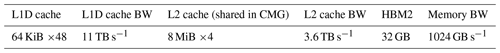

A64FX is a CPU that adopts the scalable vector extension (SVE), an extension of the Armv8.2-A instruction set architecture. A64FX has 48 computing cores. Each CPU has four nonuniform memory access nodes called the core memory groups (CMGs). One core has an L1 cache of 64 KiB and can execute SVE-based 512-bit vector operations at 2.0 GHz with two fused multiply–add units. Each CMG shares an L2 cache of 8 MiB and has high bandwidth memory (HBM2) of 32 GB (bandwidth of 256 GB s−1). The theoretical peak performance per node is 3.072 tera floating-point operations per second (TFLOPS) for double precision (FP64). Supercomputer Fugaku has 158 976 nodes connected in a 6D torus network. The cache and memory performances, which are particularly important for this study, are summarized in Table 2. A64FX has high memory bandwidth comparable to a GPU. In addition, SVE can execute FP64, single-precision (FP32), 32-bit integer (INT32), 16-bit floating-point number (FP16), and 16-bit integer (INT16) calculations.

Fugaku and FX1000 have a power management function to improve the computational power performance (Grant et al., 2016). Users can control the clock frequency (2.0 GHz or 2.2 GHz) and use one or two of the floating-point pipelines.

Fugaku has 46 times the peak performance and 30.7 times the memory bandwidth of K. In addition, when FP32 or FP16 is used, the number of values processed by a single instruction and transferred from memory doubles or quadruples, respectively; by optimizing a code according to its characteristics, users can potentially achieve a further 2 or 4 times higher effective peak performance, respectively. Due to the high memory bandwidth of Fugaku, its bytes per flops ratio (B/F) is 0.33, which is not too small compared to that of K (about 0.5, as implied by the two ratios above).
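The per-node peak performance and the bytes-per-flop ratio quoted in this section follow from the hardware figures given above. The short calculation below reproduces them; the variable names are ours, and the only inputs are the core count, clock frequency, vector width, number of fused multiply–add units, and per-CMG bandwidth stated in the text.

```python
cores = 48                  # computing cores per A64FX
clock_hz = 2.0e9            # 2.0 GHz
fma_units = 2               # fused multiply-add units per core
fp64_lanes = 512 // 64      # FP64 values per 512-bit SVE vector
flops_per_fma = 2           # one multiply and one add

peak_flops = cores * clock_hz * fma_units * fp64_lanes * flops_per_fma

cmgs = 4                    # core memory groups per node
bandwidth_per_cmg = 256e9   # bytes per second

node_bandwidth = cmgs * bandwidth_per_cmg
bytes_per_flop = node_bandwidth / peak_flops

print(f"peak = {peak_flops / 1e12:.3f} TFLOPS, B/F = {bytes_per_flop:.2f}")
```

The same arithmetic with 32-bit or 16-bit lanes doubles or quadruples `fp64_lanes`, which is the source of the 2 or 4 times higher effective peak mentioned for FP32 and FP16.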

Although this study describes optimizations for A64FX, most of them can be applied to many-core general-purpose CPUs such as Intel Xeon equipped with x86-64 instruction set architecture. For such generalization, please see Sect. 3.3.1 with the parameters in Table 2 replaced with those for the x86-64 architecture. Optimization using accelerators such as GPUs is beyond the scope of this study. However, since the applicability of this study to accelerators is necessary for future high-performance computing, we discuss some differences between CPU-based and GPU-based approaches.

To map CPU-based optimization to GPU-based optimization, the L1 cache of the CPU can be read as the register file (for storing the most frequently accessed data), L1 cache, and shared memory of the GPU; OpenMP parallelization can be read as streaming multiprocessor parallelization for NVIDIA GPUs (or compute unit parallelization for AMD GPUs); and the number of MPI processes can be read as the number of GPUs. In addition, since the memory bandwidth of one A64FX node is comparable to that of a single GPU (e.g., NVIDIA Tesla V100: 900 GB s−1, A100: 1555 GB s−1), a comparison in terms of memory throughput is reasonable if we assume that all the SD information resides in GPU memory. Although the approaches for cache and memory optimization of the CPU and GPU are similar, those for calculation optimization may differ. For example, GPUs are not well suited to reduction calculations, such as calculating the liquid water content in a cell from the SDs in the cell. The current trend for supercomputers is to use heterogeneous systems comprising both CPUs and GPUs as they provide excellent price performance. Nevertheless, memory bandwidth is essential for weather and climate models, including the SDM. Thus, it is not easy to achieve high performance unless the entire simulation can be handled on GPUs alone.

The numerical model UWLCM (Arabas et al., 2015; Dziekan et al., 2019; Dziekan and Zmijewski, 2022) utilized GPUs for the SDM and CPUs for other processes, and Dziekan and Zmijewski (2022) achieved 10–120 times faster computations compared with CPU-only computations. Still, the time to solution using the SDM is 8 times longer than that using the bulk method. In their setup, the CPU used for the dynamical core and the bulk method had a lower memory bandwidth than the GPU, whereas we used a CPU with high-bandwidth memory for all processes. This is an advantage when the entire simulation must be accelerated to reduce the time to solution.

3.1 Domain decomposition

We used SCALE-RM (Scalable Computing for Advanced Library and Environment-Regional Model; Nishizawa et al., 2015; Sato et al., 2015) as the development platform. We adopted the hybrid type of three- and two-dimensional (3D and 2D) domain decompositions using MPI. For 3D decomposition, we denoted the numbers of MPI processes for the x,y, and z axes as Nx,Ny, and Nz, respectively. For 2D decomposition, we decomposed the x and y axes into and domains, respectively. Here, we set and such that . Then, the total number of MPI processes N is common, i.e., . These two types of domain decompositions were utilized depending on the type of computations. The hybrid type of domain decomposition requires the conversion of grid systems containing every Nz of MPI processes. Note that the cost should not be a significant issue compared to collective communication across the entire MPI processes when Nz is relatively small (Nz<O(100)). The 3D domain decomposition is suitable for dynamical processes because frequent neighborhood communications are required to integrate short time steps for acoustic waves; further, the amount of communication is less because of the small ratio of halos to the inner grids. On the other hand, 2D domain decomposition is suitable for the SDM. As described later, since the number density of SDs is initialized proportional to the air density, the number of computations varies vertically in a stratified atmosphere. In addition, variations in the computation amount and data movement depend on whether clouds and precipitation shafts are within the domain. If 3D decomposition is used, domains without any clouds are likely, e.g., near the top and bottom boundaries; such domains may lead to a drastic load imbalance.

A drawback of the 3D domain decomposition is that it is more likely to suffer from network congestion; further, hardware limits the number of simultaneous communications, which becomes an issue as the number of neighboring processes increases. The number of neighboring processes is 26 for 3D domain decomposition, while it is 8 for 2D domain decomposition. In addition, the throughput of communication decreases for smaller message sizes. In this study, we eliminated all unnecessary communications in the 20 diagonal directions and packed communications for each neighborhood direction to the maximum extent possible to gain high communication throughput. Communication time was overlapped with computation time during the dynamics process to reduce the time to solution.
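The neighbor counts quoted above can be checked by enumerating the offsets of adjacent subdomains. The sketch below is only a counting exercise with hypothetical helper names; it does not show how the diagonal communications were eliminated in the model.

```python
from itertools import product

def neighbor_offsets(ndim):
    """All nonzero offsets in {-1, 0, 1}^ndim, one per neighboring subdomain."""
    return [o for o in product((-1, 0, 1), repeat=ndim) if any(o)]

def face_offsets(ndim):
    """Offsets that differ from the origin along exactly one axis."""
    return [o for o in neighbor_offsets(ndim) if sum(abs(c) for c in o) == 1]

n_neighbors_3d = len(neighbor_offsets(3))
n_neighbors_2d = len(neighbor_offsets(2))
n_diagonal_3d = n_neighbors_3d - len(face_offsets(3))

print(n_neighbors_3d, n_neighbors_2d, n_diagonal_3d)
```

In 3D, only 6 of the 26 neighbors share a face with the subdomain; the remaining 20 are edge and corner neighbors, which matches the number of diagonal directions mentioned in the text.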

3.2 Initialization of super-droplets

Although the SDM makes fewer assumptions about the DSD, the accuracy of the prediction depends on how SDs are initially sampled from a vast number of real droplets. Shima et al. (2009) first used the constant multiplicity method, which samples SDs from the normalized aerosol distribution. Further, Arabas and Shima (2013), Sato et al. (2017), and Shima et al. (2020) used the uniform sampling method, in which SDs were sampled from a uniform distribution of the log of the aerosol dry radius to sample droplets that are rare but important: for example, large droplets that may trigger rain. Indeed, Unterstrasser et al. (2017) showed that collision–coalescence calculations converge faster for a given number of SDs if the dynamic range of multiplicity is broader (i.e., the uniform sampling method), and they converge slower if the constant multiplicity method is used. However, owing to the broad dynamic range of the uniform sampling method, some multiplicities obtained using this method may fall below 1 if too many SDs are used to increase the spatial resolution. In this case, since multiplicity is stored as an integer type, the multiplicities of some SDs will be cast to 0, and the number of SDs and real droplets will decrease.

One approach to solve this problem is to allow multiplicity to be a real number (floating-point number) (Unterstrasser et al., 2017). The SDM can handle discrete and continuous systems because its formulation is based on the stochastic and discrete nature of clouds. Nevertheless, simulations using this method may not behave as discrete systems in a small coalescence volume where the Smoluchowski equations do not hold (Dziekan and Pawlowska, 2017).

Another approach to solve the deterioration of multiplicity is to cast multiplicity from a floating-point number to an integer by stochastic rounding (Connolly et al., 2021). For example, let l be a real number in the interval $[k, k+1)$, where k is an integer; then, l rounds to k with probability $k+1-l$ and to k+1 with probability $l-k$. Hence, the expected value obtained by the stochastic rounding process is consistent with the original real number l. Thus, the sampling accuracy does not decrease. Although this approach cannot prevent a decrease in the number of SDs, it can prevent the decrease in the number of real droplets statistically.
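Stochastic rounding as described above can be sketched in a few lines. The function name and the sample multiplicity of 0.3 are illustrative choices, not values from the study.

```python
import math
import random

def stochastic_round(l, rng):
    """Round l to floor(l) + 1 with probability l - floor(l), else to floor(l)."""
    k = math.floor(l)
    return k + 1 if rng.random() < (l - k) else k

rng = random.Random(42)
samples = [stochastic_round(0.3, rng) for _ in range(100_000)]
mean = sum(samples) / len(samples)   # close to 0.3 in expectation
```

With a multiplicity of 0.3, about 70 % of such SDs are rounded to 0 and removed, yet the sample mean stays close to 0.3; this illustrates why the number of SDs decreases while the number of real droplets is preserved statistically.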

However, we consider that these approaches are not optimal for meter- to submeter-scale-resolution simulations. The discussion of Unterstrasser et al. (2017) was based on the result of a box model, which is a closed system and requires a large ensemble of simulations to obtain robust statistics. In practical 3D simulations, the cloud microphysics field fluctuates spatiotemporally because of cloud dynamics and statistics in finite samples. If we sample a vast number of SDs and if the number of samples becomes close to the actual number of droplets, imposing a constraint on the number is reasonable so that the dynamic range of multiplicity will be small (i.e., more similar to constant multiplicity) and the multiplicity for all SDs will be larger than 1. If such a method is used, we expect rare droplets to exist only in some cells rather than in every cell – this is a more natural continuation toward discrete systems. How can we develop such an initialization method? In addition, previous studies focused on collision–coalescence, but the sensitivity of cloud microphysical variability related to condensation–evaporation to SD initialization must also be considered. Against this background, which type of initialization is better overall?

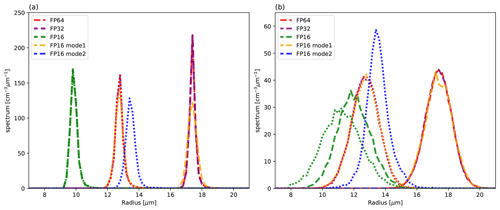

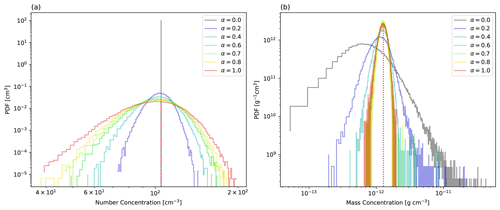

Figure 1. (a) Normalized aerosol distribution given by vanZanten et al. (2011) (bold black line) and proposal distributions used for sampling (α=0–1). The bold red line shows the proposal distribution used for the uniform sampling method. (b) Relationship between dry aerosol radius and multiplicity when $\Delta V = 2^3$ m$^3$ and 128 SDs per cell are sampled. (c) Distribution obtained by sampling $2^{16}$ SDs using the same setup as (b) ($\Delta V = 2^3$ m$^3$ and 128 SDs per cell) and sorted by multiplicity in ascending order. (d) The distribution corresponding to (c) when the L2 norm is used as a metric. The dotted lines in (b), (c), and (d) indicate .

To develop a new initialization method, we considered a simple way of generating a proposal distribution that connects the uniform sampling method to the constant multiplicity method. We chose the log of the aerosol dry radius, log r, in the interval between r_min and r_max as the random variable. We denote the initial aerosol distribution as n(log r) and consider its normalization. The relation between the multiplicity ξ, n, and the proposal distribution p was given by Shima et al. (2020) as

$$
\xi(\log r) = \frac{n(\log r)}{N_{\mathrm{SD}}\, p(\log r)},
$$

where N_SD is the SD number concentration. In the following explanation, we discretize the random variable into k bins and nondimensionalize the bin width to 1 for simplicity.
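The role of the proposal distribution can be sketched numerically, assuming the importance-sampling relation ξ = n/(N_SD p) per bin. The single-mode lognormal aerosol below (median radius 50 nm, geometric standard deviation 1.6), the 50 bins, and all variable names are hypothetical toy choices; only the interval of the dry radius, the number density, and the 2 m cell with 128 SDs follow the text.

```python
import math


def multiplicities(n_real, proposal, n_sd):
    """xi_i = n_i / (N_SD * p_i) for each bin i (bin width nondimensionalized to 1)."""
    return [n / (n_sd * p) for n, p in zip(n_real, proposal)]


k = 50                                  # number of bins (toy value)
r_min, r_max = 10e-9, 5e-6              # interval of the dry radius [m]
n_total = 105e6 * 8.0                   # real particles in a 2 m cube at 105 cm^-3
d_log = math.log(r_max / r_min) / k
log_r = [math.log(r_min) + (i + 0.5) * d_log for i in range(k)]
# Hypothetical single-mode lognormal shape, used only for illustration.
w = [math.exp(-0.5 * ((x - math.log(50e-9)) / math.log(1.6)) ** 2) for x in log_r]
w_sum = sum(w)
n_real = [n_total * wi / w_sum for wi in w]

n_sd = 128                                   # SDs per cell
uniform = [1.0 / k] * k                      # uniform sampling method
constant = [n / n_total for n in n_real]     # constant multiplicity method

xi_uniform = multiplicities(n_real, uniform, n_sd)
xi_constant = multiplicities(n_real, constant, n_sd)
```

With the uniform proposal, the multiplicity in the tails of the distribution falls far below 1, whereas sampling from the normalized aerosol distribution gives the same multiplicity for every SD.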

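We define a probability simplex, which is a set of discretized probability distributions, as follows:

$$
C_k = \left\{ x \in \mathbb{R}^k_{\ge 0} \;\middle|\; \sum_{i=1}^{k} x_i = 1 \right\}.
$$

Let us denote the discretized probability distribution of the normalized aerosol distribution as b_1∈C_k and the uniform distribution as b_2∈C_k. Then, we define an α-weighted mean distribution a as the Fréchet mean of b_1 and b_2:

$$
a = \operatorname*{arg\,min}_{a' \in C_k} \left[ (1-\alpha)\, \mathcal{L}(b_1, a')^2 + \alpha\, \mathcal{L}(b_2, a')^2 \right], \tag{5}
$$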

where ℒ is a metric to measure the distance between two distributions. A distribution a corresponds to a discretized and nondimensionalized version of the proposal distribution p. When the argument of the optimization is a function, the L2 norm is often used as the metric ℒ. In our case, since the argument is a probability distribution, the Wasserstein distance W2 (Santambrogio, 2015; Peyré and Cuturi, 2019), which is a metric that measures the distance between two probability distributions, is a more natural choice. Several methods have been proposed to solve Eq. (5) numerically. One method is to regularize the optimization problem of Eq. (5) by using the entropic regularized Sinkhorn distance Sγ (Cuturi, 2013; Schmitz et al., 2018), where γ is the regularization parameter, instead of the Wasserstein distance W2. Another method is to use displacement interpolation (McCann, 1997), which is an equivalent formulation of Eq. (5). We used the method based on the Sinkhorn distance in Sect. 5. In this section and Sect. 4, we used the displacement interpolation specialized for the case in which the random variable is one-dimensional to solve Eq. (5) more accurately. The specific forms of the Wasserstein distance W2, Sinkhorn distance Sγ, and displacement interpolations are described in Appendix A.
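For a one-dimensional random variable, the W2 barycenter of two distributions has a quantile function equal to the weighted average of their quantile functions. The following is a minimal sketch of displacement interpolation built on this property; it is not the specific form given in Appendix A. The function name, the number of quantile levels `m`, and the re-binning by counting are assumptions made for illustration, and both inputs are assumed to be normalized histograms on unit-width bins.

```python
import bisect
import itertools


def displacement_interpolation(b1, b2, alpha, m=10_000):
    """Approximate the alpha-weighted W2 mean of histograms b1 and b2 (1D only).

    alpha = 0 returns (approximately) b1 and alpha = 1 returns b2.
    """
    k = len(b1)
    cum1 = list(itertools.accumulate(b1))
    cum2 = list(itertools.accumulate(b2))

    def quantile(b, cum, u):
        i = bisect.bisect_right(cum, u)        # first bin whose CDF exceeds u
        lower = cum[i - 1] if i > 0 else 0.0
        return i + (u - lower) / b[i]          # linear interpolation inside the bin

    counts = [0] * k
    for j in range(m):
        u = (j + 0.5) / m                      # evenly spaced probability levels
        x = (1.0 - alpha) * quantile(b1, cum1, u) + alpha * quantile(b2, cum2, u)
        counts[min(int(x), k - 1)] += 1        # re-bin the interpolated quantile
    return [c / m for c in counts]
```

In contrast to a linear mixture of the two histograms, this interpolation moves probability mass along the axis of the random variable, so the tails of the result thin out gradually as alpha decreases.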

We verified this method of generating proposal distributions by adopting a specific aerosol distribution n(log r). We used the bimodal lognormal distribution of vanZanten et al. (2011). This distribution is composed of ammonium bisulfate with a number density of 105 cm⁻³. We chose the interval for the random variable as r_min=10 nm and r_max=5 µm, and we adopted k=1000 bins to calculate the proposal distributions.

The proposal probability distributions obtained using various α are shown in Fig. 1a. As α decreases, the uniform distribution gradually changes to the normalized aerosol distribution, and probabilities (frequency for sampling) near both ends of the random variable decrease.

The relationships between the aerosol dry radius and multiplicity for a cell volume of ΔV=2³ m³ and 128 ΔV⁻¹ SDs (128 SDs per cell) are shown in Fig. 1b. The multiplicity at large aerosol dry radii falls below 1 for α=1.0 but exceeds 1 for all samples when α=0.8.

Figure 1c shows the multiplicities of samples that are obtained by sorting 2¹⁶ SDs by their multiplicity. The influence of α on the dynamic range of multiplicity and on the number of samples with ξ<1 can be clearly observed in Fig. 1c. Since the multiplicity scales with the cell volume for a fixed number of SDs per cell, the relationship between the aerosol dry radius and multiplicity keeps its shape as the spatial resolution increases; we therefore indicate ξ=8⁰–8³ by dotted lines in Fig. 1c, where each factor of 8 corresponds to halving the grid length. As α decreases, the dynamic range of multiplicity decreases, and the minimum log multiplicity increases by an almost constant ratio when α≥0.2. When ΔV=1 m³, the multiplicity of all samples exceeds 1 if α≤0.7. Similarly, the multiplicity exceeds 1 when ΔV=50³ cm³ if α≤0.6 and when ΔV=25³ cm³ if α≤0.5. Since the samples with ξ<1 and ξ<0.5 account for 7.82 % and 6.70 % of the total samples, respectively, many invalid SDs are sampled if the uniform sampling method is used for 2 m resolution.

Figure 1d shows the results corresponding to Fig. 1c obtained by using the L2 norm instead of W2 to generate the proposal distributions with Eq. (5). In this case, as α decreases, the number of samples with ξ<1 decreases (0.413 % of the total samples when α=0.1), but the dynamic range of multiplicity does not change unless α=0.0. Thus, these results suggest that the manner of connecting the two distributions is critical.

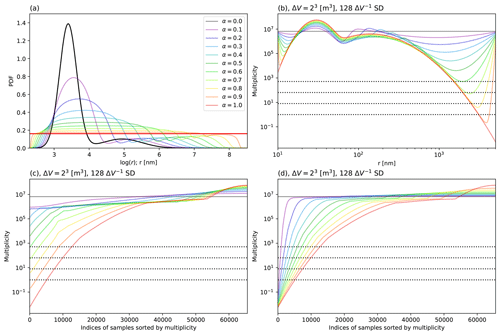

Figure 2. Probability distributions of (a) aerosol number concentration and (b) aerosol mass concentration, obtained by sampling from various proposal distributions. The red dotted lines show the exact expected values.

How do aerosol statistics behave if we change α using the above method? The probability distributions of the number and mass concentration of dry aerosol for various α are shown in Fig. 2. We calculated the number and mass concentrations from 128 SDs. The multiplicity was cast to an integer using stochastic rounding for ΔV=2³ m³. We performed 10⁵ trials to obtain the probability distributions. The statistics of real droplets, corresponding to the limit when α=0 and the exact expected value, are also shown by a dotted red line in each panel of Fig. 2.

The expected values obtained by applying the importance-sampling method do not depend on the proposal distribution used. However, the variances of the expected values depend on the ratio of the original distribution to the proposal distribution, and they become small when the original and proposal distributions are similar. In fact, the aerosol number concentration distribution is narrow when the proposal distribution used is the same as the original distribution (α=0) (Fig. 2a), and it becomes broader as α increases. Thus, the uniform sampling method introduces significant statistical fluctuations (or confidence interval) of the aerosol number concentration. In contrast, the aerosol mass concentration distribution is narrow when α=1.0, and it broadens as α decreases (Fig. 2b). Thus, the uniform sampling method results in smaller statistical fluctuations of the aerosol mass concentration. That is, as α decreases, the importance sampling for the aerosol size distribution gradually changes its effect from the reduction of the variance of mass concentration to the reduction of the variance of number concentration. We note that the results are almost identical when we store multiplicity as a real-type floating-point number (not shown in the figures).
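The dependence of the estimator variance on the proposal distribution can be reproduced with a toy Monte Carlo experiment. The sketch below covers only the number concentration, omits stochastic rounding, and uses a hypothetical 20-bin aerosol distribution; `estimate_number` and all parameter values are illustrative assumptions.

```python
import math
import random
import statistics


def estimate_number(n_real, proposal, n_sd, rng):
    """Sample n_sd bins from the proposal and sum the multiplicities of the SDs."""
    bins = rng.choices(range(len(n_real)), weights=proposal, k=n_sd)
    return sum(n_real[i] / (n_sd * proposal[i]) for i in bins)


k = 20
w = [math.exp(-0.5 * ((i - 6) / 2.0) ** 2) for i in range(k)]  # toy distribution
w_sum = sum(w)
n_total = 1.0e9
n_real = [n_total * wi / w_sum for wi in w]

rng = random.Random(0)  # fixed seed for reproducibility of the sketch
same_as_original = [n / n_total for n in n_real]   # proposal equal to the original
uniform = [1.0 / k] * k                            # uniform sampling method

est_exact = [estimate_number(n_real, same_as_original, 128, rng) for _ in range(200)]
est_uniform = [estimate_number(n_real, uniform, 128, rng) for _ in range(200)]
var_exact = statistics.pvariance(est_exact)
var_uniform = statistics.pvariance(est_uniform)
```

When the proposal equals the original distribution, every SD carries the same multiplicity and the estimated number is the same in every trial up to rounding error, whereas the uniform proposal yields a visibly broader distribution of estimates.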

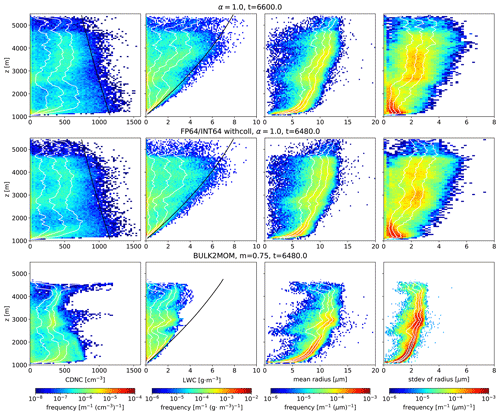

Based on the above considerations, the proposal distributions for α=0.7 were used for the numerical experiments described in Sect. 5. Although we focused on the statistical fluctuations of the aerosol, α may also be a sensitive parameter influencing the cloud dynamical and statistical fluctuations. Since this aspect is nontrivial because of the effect of cloud dynamics, we will describe the results of the sensitivity experiments for α in Sect. 4.3.

3.3 Model optimization

3.3.1 Strategy for acceleration

Based on the computers described in Sect. 2.3, we devised a strategy for optimizing the SDM. All algorithms used in the SDM have a computational complexity that is linear in the number of SDs. In general, PIC applications tend to have a small B/F (bytes-per-flop) ratio because of the large amount of computation involved. This also holds for the SDM (except for the collision–coalescence process) because the velocity interpolation to the SD positions in the movement process and the Newton iterations in activation–deactivation involve many calculations. One may then expect that high computational efficiency can be achieved if the information for both the grids and the SDs is in the cache, as this prevents memory throughput from becoming a bottleneck for the time to solution. However, since the calculation pattern in the cloud microphysics scheme changes depending on the presence of clouds and particle types, the codes in a loop body are complicated and often include conditional branches. Hence, high efficiency is difficult to achieve because of the difficulty of using SIMD vectorization and software pipelining. In the following paragraphs, we describe optimization based on two strategies: first, we developed cache-efficient codes by cache blocking (e.g., Lam et al., 1991) and by reducing the information stored for the SDs. Second, we simplified the on-cache loop bodies to the maximum extent possible by excluding conditional branches.

We first considered applying cache-blocking techniques to the SDM. Since the L1 cache on A64FX is 64 KiB per core, 32 data arrays, each of which consists of 8³ grids of 4-byte elements (each array consumes 2 KiB), can be stored in the L1 cache simultaneously. Similarly, since the user-available L2 cache is 7 MiB (of 8 MiB)/12=597 KiB per core, two data arrays, each of which consists of 128 SDs per cell × 8³ cells, can be stored in the cache if an attribute of SDs consumes 4 bytes. Therefore, we divide the grids into groups of less than 8³ (hereafter called “blocks”) for cache blocking. For each cloud microphysics process, we integrated all SDs by one time step forward and then moved on to the next process. In the original SDM, a single loop is used for all SDs in the MPI domain. In this study, we decomposed this single loop for all SDs into loops for all blocks and all SDs in each block; subsequently, we parallelized the loop for all blocks using OpenMP through static scheduling with a chunk size of 1. Although applying dynamic scheduling to the loop for all blocks may improve load balancing among blocks, it is difficult to validate the reproducibility of the stochastic processes, such as collision–coalescence, because random seeds may change with every execution.
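The cache budget quoted above follows from simple arithmetic, which can be checked with the short sketch below. The variable names are illustrative; the cache sizes, block size, and element size are the values stated in the text.

```python
KIB = 1024
MIB = 1024 * KIB

cells_per_block = 8 ** 3                       # 8^3 grid cells per block
grid_array_bytes = cells_per_block * 4         # one 4-byte element per cell
l1_bytes = 64 * KIB                            # L1 cache per core
grid_arrays_in_l1 = l1_bytes // grid_array_bytes

l2_per_core_bytes = 7 * MIB / 12               # user-available L2 shared by 12 cores
l2_per_core_kib = l2_per_core_bytes / KIB
sd_array_bytes = 128 * cells_per_block * 4     # one 4-byte attribute, 128 SDs per cell
sd_arrays_in_l2 = int(l2_per_core_bytes // sd_array_bytes)
```

One SD attribute array of a block occupies 256 KiB, so two such arrays fit into the per-core share of the L2 cache, while a third would not.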

To simplify the loop body for the SDs in a block, it is essential that the gridded values in a block are a collection of similar values because similar operations or calculations may be applied to these values in such cases. The effective resolution of atmospheric simulations (Skamarock, 2004) imparts such numerical effects on the grid fields. The volume, which consists of 8³ grids, is comparable with the volume of effective resolution, which is the smallest spatial scale at which the energy spectrum is not distorted numerically by the spatial discretization. For example, since the energy spectrum roughly obeys the −5/3 power law in the inertial range for LES, we regard the effective resolution as the smallest spatial scale at which the energy spectrum follows the −5/3 law. The typical effective resolution is 6Δ–10Δ for planetary boundary layer turbulence, which may depend on the numerical accuracy of the spatial discretization of basic equations as well as the filtering length and shape of LES. The physical interpretation of effective resolution is that the flow is well resolved if the spatial scale is larger than 6Δ–10Δ, and the variability decreases exponentially for scales smaller than this range. We used this prior knowledge to simplify the loop body, as described later.

3.3.2 Super-droplet movement

To save computational cost of the SDM, it would be advantageous to maintain the SD number density in areas where clouds are more likely to occur. This can be achieved by resampling the SDs in clouds using methods such as SD splitting and merging proposed by Unterstrasser and Sölch (2014), thereby optimizing the collision–coalescence calculations. Alternatively, one can automatically adjust the SD number density following the SD movement. We describe the second approach in detail.

To maintain the SD number density in clouds globally, we can initially place an increased number of SDs in the computational domain so that the SD number density is proportional to air density. For example, when the parcel is lifted, the SD number density decreases but may be maintained at the same level as the surrounding SD number density. However, this requires designing the SD movement scheme so that the time evolution of the SD number density follows the changes in air density. We focus on such schemes for grid-scale motion since the effect of subgrid motion should be relatively small. Because the air density decreases through the divergence of the velocity field, the velocity interpolation should be designed so that the divergence at the SD position, calculated from the interpolated velocity, equals the divergence of the cell. For such a scheme, a reduction in the variability of the SD number density is also expected since the divergence at the SDs does not differ within a cell.
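The requirement that the divergence at an SD position equals the divergence of the cell can be illustrated with a first-order stand-in: each velocity component is interpolated linearly between the two cell faces on which it is defined on a C-grid. This is only an assumed illustrative scheme for a unit cell, not the second-order CVI of Appendix B, and the function names are hypothetical.

```python
def interpolate_velocity(faces, x, y, z):
    """Linear interpolation of face-normal velocities inside a unit cell.

    faces = (u_west, u_east, v_south, v_north, w_bottom, w_top);
    x, y, z are relative positions in [0, 1].
    """
    u_w, u_e, v_s, v_n, w_b, w_t = faces
    return (u_w + (u_e - u_w) * x,
            v_s + (v_n - v_s) * y,
            w_b + (w_t - w_b) * z)


def cell_divergence(faces):
    """Discrete divergence of the cell computed from its six face velocities."""
    u_w, u_e, v_s, v_n, w_b, w_t = faces
    return (u_e - u_w) + (v_n - v_s) + (w_t - w_b)


def divergence_at(faces, x, y, z, h=1e-3):
    """Central-difference divergence of the interpolated velocity at (x, y, z)."""
    du = interpolate_velocity(faces, x + h, y, z)[0] - interpolate_velocity(faces, x - h, y, z)[0]
    dv = interpolate_velocity(faces, x, y + h, z)[1] - interpolate_velocity(faces, x, y - h, z)[1]
    dw = interpolate_velocity(faces, x, y, z + h)[2] - interpolate_velocity(faces, x, y, z - h)[2]
    return (du + dv + dw) / (2.0 * h)
```

In this stand-in, each component depends only on its own coordinate, so the divergence is uniform within the cell and the interpolated flow has no rotation inside the cell.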

In addition to ensuring consistency between changes in SD number density and air density in velocity interpolation, numerical accuracy of the interpolation may also be necessary if small eddies and mixing are resolved in LES. Because the SDM is free from numerical diffusion by solving the SD movement in a Lagrangian manner, an interpolation scheme of higher order than the first-order scheme (Grabowski et al., 2018) may not directly and significantly affect phenomena whose spatial scale is sufficiently larger than the grid scale. However, because the flow interpolated by the first-order scheme is always irrotational within a cell and is only first-order convergent with respect to the grid length, the first-order scheme can deteriorate the numerical accuracy of meter- to submeter-scale eddies. Furthermore, it can affect large-scale phenomena through interactions between eddies and microphysics.

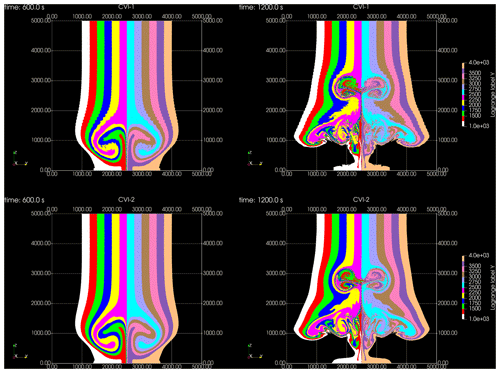

In this study, a conservative velocity interpolation (CVI) with second-order spatial accuracy and with these properties is developed on a 3D Arakawa C-grid. While CVIs of second-order spatial accuracy on 2D grids have been used in various studies such as Jenny et al. (2001), few studies have explored such CVIs on 3D grids. Recently, a CVI for a divergence-free velocity field on a 3D A-grid was developed by Wang et al. (2015). We extend the method used in their study to a non-divergence-free velocity field on the C-grid. The accuracy of the interpolation is of second order only within the cell, and we allowed the velocity to be discontinuous across cells. The derivation of our CVI using symbolic manipulation (Python SymPy) is available in Matsushima et al. (2023b). We only provide the specific form of the CVI in Appendix B.

The number of grid fields necessary to compute Eqs. (B7)–(B12) is important for computational optimization. While 24 elements (3 components × 8 vertices in a cell) are necessary to calculate the velocity at an SD position for trilinear interpolation (and the same applies for second-order CVI on the A-grid), only 18 elements are necessary for the second-order CVI on the C-grid (Eqs. B7–B12). That is, we can reduce the velocity field data that occupy the L1 cache by 25 % and use the remaining cache for SDs. The change in the spatial distribution of SDs through SD movement considering the spatial accuracy of CVI will be discussed in Sect. 4.2.

For warm clouds, since the information for the SD position accounts for half of all attributes, reduction of these data without loss of representation and prediction accuracy contributes greatly to saving the overall memory capacity in the SDM. However, using FP32 instead of FP64 may cause critical problems due to the relative inaccuracy and nonuniform representation in the domain in the former case. In the following paragraphs, we describe these problems and a solution.

In the original SDM, the SD position is represented by its absolute coordinate over the entire domain, but this method requires many bits. However, since we already decomposed the domain into blocks, using the relative position of SDs in a block is numerically more efficient. For this case, we can reduce the information per SD by subtracting the information that arises from the partitioning of the domain by the MPI process and a block from the global position.

If we represent the position of SDs as a relative position in a block, additional calculations are necessary when an SD crosses a block boundary. Such calculations introduce rounding errors in the SD position, and the cell in which the SD resides may not be conserved before and after the calculations. Let us consider an example. Consider a block that consists of a single grid cell, so that the relative position x of the SDs belonging to it lies in [0, 1), and denote the machine epsilon of the floating-point precision as ϵ. If an SD crosses the left boundary and reaches a position x<0 whose magnitude is much smaller than ϵ, its relative position in the new block is calculated by adding the right-boundary value 1 of the new block to the SD position, that is, 1+x. However, rounding to the nearest representable value yields exactly 1, which lies outside the new block. For FP32, since ϵ≈1.2×10⁻⁷, which corresponds to about 0.1 µm if we adopt meters as units, we expect that this does not happen frequently. However, if such a case occurs even for only one SD of the vast number of SDs in the domain, the computations may be terminated by an out-of-bounds array index. Although a simple solution is exception handling using min–max or floor–ceiling, this solution may deteriorate the computational performance by making the loop bodies more complex, and the correction bias introduced by exception handling may be non-negligible when low-precision arithmetic is used. To ensure safe computing, the suitable approach is to calculate the relative position without introducing numerical errors.
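The rounding problem can be reproduced in a few lines, assuming a one-cell block with relative positions in [0, 1) and an SD that ends slightly left of the boundary. The helper `to_f32` emulates FP32 rounding with the standard library, and the offset of one-eighth of the machine epsilon is an illustrative choice rather than a value from the source.

```python
import struct


def to_f32(x: float) -> float:
    """Round a Python float (FP64) to the nearest FP32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


EPS32 = 2.0 ** -23                 # FP32 machine epsilon
x_old = to_f32(-EPS32 / 8)         # SD slightly left of the block boundary at 0
x_new = to_f32(1.0 + x_old)        # relative position in the left neighbor, ideally in [0, 1)
cell_index = int(x_new)            # the only valid cell index of a one-cell block is 0
```

The shifted position rounds to exactly 1.0, so the computed cell index becomes 1 and points outside the block, which is the out-of-bounds situation described above.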

In this study, we represent the relative position using fixed-point numbers. This format allows us to define the representable position of SDs so that they are uniformly distributed in the domain, and integer-arithmetic-only calculations are used. Then, the same problem as in the case of simply using floating-point numbers does not arise in principle. Let us denote the range for which the SD is in cell k as and number of grid points along an axis as b. Then the range of positions in a block is represented as . We define the conversion from z∈Z to its fixed-point number representation q as the following affine mapping:

When b≤8 and s=21, and when FP32 is used instead of INT32, the range of q is accurately represented by the mantissa of the floating-point numbers, and the representation does not exceed the representable range even if the SD is a few grids outside a block. With regard to the velocity, the amount of movement per step is also represented using a fixed-point number. We used FP32 instead of INT32 for the actual representation because the representable range of fixed-point numbers is small and could easily be exceeded by multiplication.

By using relative coordinates for the SD positions within a block, the precision of their locations varies with Δz. This is because, from Eq. (6), a variation of 1 in q corresponds to a change of position in real space of 2⁻ˢΔz when the grid length is Δz; this value decreases for smaller Δz. In addition, the change in the relative position per time step is 2ˢvᵢΔtΔz⁻¹ when the time step is Δt; hence, it increases as Δz decreases, thus providing a better representation of the relative position. Δt is set sufficiently small to ensure there is no large deviation from the time step of tracer advection. Then, the change in the relative position per time step remains the same if the ratio of Δt to Δz is kept constant. In real space, the numerical representation accuracy of position and the arithmetic operation accuracy of the numerical integration vary with the spatial resolution and time step. Therefore, we can maintain numerical precision for meter-scale-resolution simulations.

In terms of I/O, fixed-point numbers facilitate easy compression. For example, the interval of representable positions q in real space with Δz=2 m and a block size of 8 is 0.95 µm; this yields higher accuracy than the Kolmogorov length of 1 mm and is thus always excessive as a representation for DNS and LES. We can discard unnecessary bits when saving data on a disk.
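A fixed-point representation of the relative position can be sketched as follows. The affine map used here, q = 2^s (z − b/2) with z measured in grid units, is an assumption made for this sketch and may differ from Eq. (6); the function names are likewise hypothetical. Only s=21, b=8, and Δz=2 m follow the text.

```python
import struct

S_BITS = 21    # number of fractional bits, s
B_CELLS = 8    # number of cells per block along one axis, b


def to_fixed(z: float) -> int:
    """Assumed affine map from a position z in grid units, [0, b), to fixed point."""
    return round((z - B_CELLS / 2) * 2 ** S_BITS)


def cell_index(q: int) -> int:
    """Cell index within the block, obtained with integer arithmetic only."""
    return (q + (B_CELLS // 2) * 2 ** S_BITS) >> S_BITS


def enter_left_neighbor(q: int) -> int:
    """Re-express a position left of the block relative to the left neighbor."""
    return q + B_CELLS * 2 ** S_BITS


def exact_in_fp32(q: int) -> bool:
    """Check that q survives a round trip through FP32 without change."""
    return struct.unpack("f", struct.pack("f", float(q)))[0] == q


resolution_m = 2.0 * 2.0 ** -S_BITS     # spacing of representable positions for dz = 2 m
q = to_fixed(-(2.0 ** -S_BITS))         # one representable step left of the block
q_new = enter_left_neighbor(q)          # exact integer shift by one block length
```

Because the shift between blocks is an integer addition, the SD lands in the last cell of the neighboring block without any rounding, and both values remain exactly representable in the FP32 mantissa.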

3.3.3 Activation–condensation

The timescale of activation–deactivation of the cloud condensation nuclei (CCN) is short if the aerosol mass dissolved in a droplet is small (Hoffmann, 2016; Arabas and Shima, 2017). Hence, the numerical integration of activation–deactivation is classified as a stiff problem. To solve Eq. (2), Hoffmann (2016) used the fourth-order Rosenbrock method with adaptive time stepping. SCALE-SDM employs the one-step backward differentiation formula (BDF1) with Newton iterations. Although BDF1 has first-order accuracy, it has good stability because it is an L-stable and implicit method, and we can change time intervals easily because it is a single-step method. However, with the implicit method, Newton iterations must be performed per SD, and the number of iterations required for convergence of the solution differs for each SD, thereby making vectorization a complicated task. To overcome this difficulty, the original SDM uses excessive Newton iterations (20–25) that are sufficient for all SDs to converge, assuming that numerical experiments are performed on a vector computer such as the Earth Simulator. However, we cannot tune codes for both vector computers and short-length vector computation by using SIMD instructions in the same way. In the original SDM code, the loop body of time evolution by Eq. (2) is very complex because of the presence of conditional branches, grid fields at the SD position, and iterations; hence, it cannot issue SIMD instructions. Therefore, we devised a method to allow SIMD vectorization based on the previously described strategy.

Equation (2) is discretized by BDF1 as

where p is the current droplet radius, and R is the updated droplet radius. Equation (7) has at most three solutions; in other words, one or two of them may be spurious solutions. However, the uniqueness of the solution is guaranteed analytically in the following two cases (see Appendix C for derivation). Case 1, which depends on Δt, is

and Case 2, which depends on the environment and initial condition, is

Case 1 implies that an activation timescale restricts the stable time step for each SD. Based on the estimation of temperature T=294.5 K at z∼600 m in the BOMEX profile, when α=0.7, 87.7 % of SDs satisfy the condition for Case 1 if Δt=0.0736 s, 91.0 % if , and 100 % if . Similarly, when α=0.0, 91.4 % of SDs satisfy the condition if Δt=0.0736, 97.6 % if , and 100 % if . The smaller the value of α, the smaller the frequency of sampling small droplets and the greater the number of SDs that satisfy the condition.

On the other hand, Case 2 is a condition for the initial size of droplets p in an unsaturated environment. In the BOMEX setup, since cloud fraction converges at a grid length of 12.5 m (Sato et al., 2018), we can estimate the ratio of SDs that satisfy Case 2 for higher resolutions by analyzing the results of similar numerical experiments using new SCALE-SDM. We define droplets of the size as aerosol particles (or haze droplets) and droplets that are larger than and smaller than 40 µm as cloud droplets. We do not provide the detailed results, but the ratio of air density weighted volume (i.e., mass) where cloud water exists in a cell to the total volume in the BOMEX case is approximately 1.5 % in a quasi-steady state based on the numerical experiments with our developed model. Therefore, we estimate that 98.5 % of SDs satisfy the condition of Case 2 in the BOMEX setup.

Hence, if we ensure the uniqueness of the solution by Case 1 for a cloudy cell and Case 2 for a cell with no clouds, the frequency of exception handling during Newton iterations can be largely reduced. We first check whether we need a conditional branch for the unsaturated environment (of Case 2). Since the block has a small volume that is comparable with the effective resolution, as discussed in Sect. 3.3.1, we can convert the conditional branch on the unsaturated condition for each SD to a single branch for all SDs in a block, with little or no decrease in the ratio of SDs that satisfy the condition. This conversion of the conditional branch allows the loop body of time evolution by Eq. (7) to be simple and specific to Case 2. There is an exception: droplets whose initial size is larger are handled individually, and only if such droplets exist in a block. If the environment is saturated, we ensure the uniqueness of the solution with Case 1. In this case, we list the SDs that satisfy Case 1 and perform Newton iterations according to the list. Other SDs are calculated individually, using adaptive time stepping for unstable cases.

By using this method, we find that almost all SDs satisfy the uniqueness condition of the solution, and we should only focus on optimizing these SDs. For tuning, the SDs in a block are divided into groups of 1024 SDs (which fit in the L1 cache), and the activation–condensation process is called for each division. In each call, the time evolution of each SD is calculated. A single loop for updating the droplet radius performs two Newton iterations because this is the maximum number of iterations that allows SIMD vectorization and software pipelining without spilling the 32 registers with the current compiler we used for A64FX. The loop is repeated for all SDs in a division and exits once the relative errors of the squared droplet radii of all SDs fall below the tolerance of 10⁻². Since the loop is vectorized by SIMD instructions and the number of iterations is often limited to two if we use the previous droplet radius as the initial value for the Newton iterations, the computational time for activation and condensation is drastically less than that of the original SDM, as shown later.
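The structure of the batched Newton iteration can be sketched as follows. The growth equation R dR/dt = A (S − 1 − a/R + b/R³) is an assumed Köhler-type form standing in for Eq. (2), and the unknown is taken as y = R²; the function name, argument names, and the use of plain Python lists instead of SIMD vectors are all illustrative assumptions.

```python
import math


def bdf1_step(p, dt, A, S, a, b, tol=1e-2, max_sweeps=10):
    """One BDF1 step for the assumed growth equation, solved for y = R^2.

    p is the list of current droplet radii; the updated radii are returned.
    """
    c = 2.0 * dt * A
    y = [pi * pi for pi in p]                  # initial guess: previous radius
    for _ in range(max_sweeps):
        rel_change = [0.0] * len(p)
        for _ in range(2):                     # two Newton iterations per sweep
            for i, pi in enumerate(p):         # branch-free body, same work for every SD
                r = math.sqrt(y[i])
                f = y[i] - pi * pi - c * (S - 1.0 - a / r + b / (y[i] * r))
                df = 1.0 - c * (0.5 * a / (y[i] * r) - 1.5 * b / (y[i] * y[i] * r))
                dy = f / df
                y[i] -= dy
                rel_change[i] = abs(dy) / y[i]
        if max(rel_change) <= tol:             # one convergence check for the whole batch
            break
    return [math.sqrt(v) for v in y]
```

The convergence test is evaluated once per sweep for the whole batch rather than per SD, which keeps the inner loop body free of conditional branches at the cost of a few redundant iterations for SDs that converge early.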

Note that the most frequently encountered case might depend on the type of simulated clouds. For example, Case 1 is more often selected in an experiment in which clouds occupy a large fraction of the total domain volume. However, the calculations of both cases are almost identical; hence, the computational performance depends more on the number of iterations (i.e., the closeness between the initial guess and solution) than on the differences between the cases.

3.3.4 Collision–coalescence

The computational cost of the collision–coalescence process is already low for the algorithm developed by Shima et al. (2009). We reduced the computational cost and data movement further rather than achieving a higher efficiency against the theoretical peak performance of floating-point number operations. Since we used only the Hall kernel for coalescence, the coalescence probability was small for two droplets of small and similar sizes. Therefore, it is reasonable to ignore the collision–coalescence process in cells with no clouds. Notably, the no-cloud condition can be made to match Case 2 described in Eq. (9) precisely. If even a single cloud droplet exists in a block, it becomes necessary to sort the cell indices of all SDs in the block. However, we can skip the sorting if cloud droplets do not exist in a block. We do not sort the attributes of the SDs with cell indices as a key since they are already sorted with a block as a key, as will be described in Sect. 3.3.5. Further, some attributes are in the L2 cache during the collision–coalescence process due to cache blocking. By not sorting the attributes of the SDs, the write memory access of SDs that do not coalesce is avoided. In the BOMEX setup, 98.5 % of the SDs satisfy Case 2, and we do not calculate the collision–coalescence of these SDs. Therefore, we expect a drastic reduction in the computational cost and data movement in cases in which cloudy cells occupy only a small fraction of the total domain volume. This method of reducing the computational cost potentially leads to a large load imbalance, as in the Twomey SDM by Grabowski et al. (2018). However, we also expect that the imbalance might be mitigated because cache blocking improves the worst-case elapsed time among the MPI processes.

3.3.5 Sorting for super-droplets

To utilize cache blocking effectively during the simulation, the SDs in a block should be contiguous on memory. This is possible if we sort the attributes of the SDs using the block ID as the sorting key when SDs move out of one block to another. This sorting is different from the usual sorting in which each block can send SDs to any other block; in the present sorting, the direction of SD movement is limited to adjacent blocks along x, y, and z axes. Such sorting is commonly used in the field of high-performance computing. Although we did not make any novel improvement, we summarize this process because it is essential to our study, and some readers may not be familiar with on-cache parallel sorting for the PIC method used during computation (Decyk and Singh, 2014).

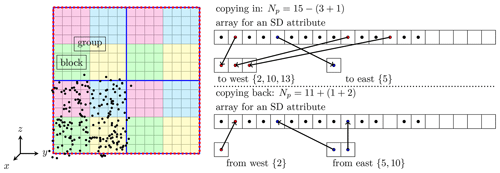

Figure 3. Data hierarchy (particle and cell, block, group) in each MPI process and algorithm for SD sorting toward the x direction in each block. In the example, an MPI process has four groups, a group has four blocks, and a block has 4×4 cells. Using a list ({}) that stores the indices, SD sorting is completed by copying in the SDs moving to adjacent blocks and copying back the SDs moving into the block. The number of total SDs within a block is monitored by counting only the moving SDs.

Since memory bandwidth generally limits sorting performance, it is essential to reduce data movement. In our case, the directions of data movement are limited, and most of the SDs in a block are already sorted. We should adopt a design such that these data are not moved and any unnecessary processes are not performed. We should also reduce the buffer size for sorting because of the low memory capacity of A64FX, perform parallelization, and reduce computational costs. However, ready-made sorting, such as the counting sort, may not meet these requirements. Moreover, in the worst case, such sorting may be slower than the main computation in the SDM because of random access in the memory.

In this study, we sorted the attributes of SDs in three steps along the x, y, and z axes. The data hierarchy within each MPI process and an example of one-dimensional SD sorting are shown in Fig. 3. Each step requires at least two loops: copying in the SDs moving to adjacent blocks and copying back the SDs moving into the block. Since the SDs in a block either stay in the same block or only move one block forward or backward, we did not sort the attributes of SDs with combinations as a key. Instead, we made a list of the SDs to be moved to reduce the computational costs and unnecessary data movement. Copying the SDs in and back to the working array should be divided into small groups so that the size of the working array for SDs is reduced. A loop for a block in each step can be parallelized naturally by using OpenMP. Although a few invalid SDs (buffer) may be included in the arrays, this study does not attempt to defragment them explicitly, expecting that the SD movement and sorting with blocks as a key per microphysical time step may cause defragmenting.
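The two-loop structure of the one-dimensional sorting step can be sketched as follows. The data layout (a list of blocks, each holding SDs as dictionaries with a `shift` of −1, 0, or +1), the periodic neighbors, and the function name are hypothetical simplifications; the actual implementation operates on attribute arrays with OpenMP parallelization over blocks.

```python
def sort_along_axis(blocks):
    """Move SDs to adjacent blocks along one axis; stayers are left (almost) untouched."""
    n = len(blocks)
    # Loop 1: list the movers of each block and copy them to a small working buffer.
    outgoing = []
    for sds in blocks:
        movers = [i for i, sd in enumerate(sds) if sd["shift"] != 0]
        outgoing.append([sds[i] for i in movers])
        # Fill each hole with the SD at the tail so that the array stays contiguous.
        for i in reversed(movers):
            sds[i] = sds[-1]
            sds.pop()
    # Loop 2: copy back the SDs that arrive from the adjacent blocks.
    for b, sds in enumerate(blocks):
        for src in sorted({(b - 1) % n, (b + 1) % n}):
            for sd in outgoing[src]:
                if sd["shift"] != 0 and (src + sd["shift"]) % n == b:
                    sd["shift"] = 0            # the SD is now at home in block b
                    sds.append(sd)
    return blocks
```

Only the listed movers are copied, so the cost of a step scales with the number of SDs that cross a block boundary rather than with the total number of SDs in the block.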

This sorting can avoid the problems of using a ready-made algorithm. The drawback of the current implementation is that a larger buffer space is necessary for SD attribute arrays because a block has few grids and the statistical fluctuation of the number of SDs within a block is large. However, this can be improved if we adaptively adjust the size of SD attribute arrays in a block according to air density and statistical fluctuations of SD numbers.

4.1 Methodology of performance evaluation

We evaluated the computational and physical performances of the numerical models and microphysics schemes by comparing the results of the new SCALE-SDM with those obtained with the same model but using the conventional cloud microphysics schemes as well as with the results obtained with the original SCALE-SDM. When comparing cloud microphysics schemes based on different concepts, we should first consider convergence in spatiotemporal resolutions. The two-moment bulk method imposes empirical assumptions on the DSD, leading to less spatial variability or no dependency on the spatial resolution for quantities such as the spectral width of the DSD. However, this does not mean that the simulated microphysics variables converge quickly as the spatial resolution increases; rather, this indicates that a fair comparison in terms of spatial resolution is difficult in principle. Because the bulk method solves the moments of the DSD, one may assume that the timescale of the moments of the DSD might be larger than that of droplets. However, Santos et al. (2020) performed eigenvalue analysis for a two-moment bulk scheme and found that a fast mode (<1 s) also exists in the bulk scheme, which does not considerably deviate from the timescale of individual droplets. Based on this fact, we use the same spatiotemporal resolutions to compare the two-moment bulk method and sophisticated microphysics schemes.

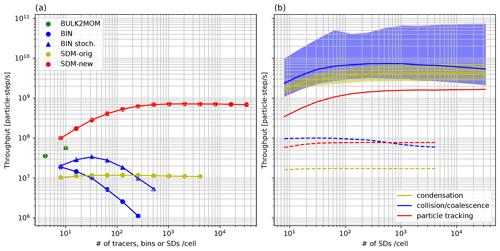

Our optimization goal was to enable meter- to submeter-scale-resolution experiments of shallow clouds to reduce uncertainty and to contribute to solving future societal and scientific problems. Therefore, we adopted a goal-oriented evaluation method instead of estimating the contributions of various innovations for improving the time to solution. Here, we describe the evaluation of the time to solution and data processing speed (throughput) to ensure the usefulness of our work for solving real problems. The throughput for the microphysics scheme, including the tracer advection of the water and ice substances, is defined as follows:

$$
\text{Throughput} = \frac{(\text{total number of tracers or SDs}) \times (\text{number of steps})}{\text{elapsed time}}, \tag{10}
$$

where the number of steps and the elapsed time correspond to the microphysics scheme. To compare cloud microphysics schemes that are based on different concepts, we defined the throughput for the bulk and bin methods by the total number of tracers, including all categories (e.g., water and ice) and statistics (e.g., number and mass). In contrast, we defined the throughput for the SDM by sampling sizes in the data space. This is because we can add any attributes with less computational cost and fewer data movements, and the effective number of attributes may change during time integration; hence, considering many attributes for defining throughput is inappropriate. For example, because we give an initial value of R as a stationary solution of Eq. (2), R, ξ, and M are initially correlated. We note that the number of tracers does not account for the water vapor mass mixing ratio. Increasing the number of tracers or SDs improves the representational power for microphysics. Such an increase in representational power can be easily achieved for a bin method and the SDM but is difficult for a bulk method. We do not incorporate the number of SDs that are actually needed to obtain converged solutions into the metric in Eq. (10) to avoid loss of generality, and we will discuss the SD numbers separately. For example, the convergence properties can depend on the variable to be checked, including liquid water content, cloud droplet number concentration, and precipitation. They can also depend on the setup and results of simulations, such as cloud form, number of CCN, probability distributions used for initialization, and random number properties.
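As an illustration of how such a metric can be evaluated, the following Python sketch assumes that the throughput is the number of processed elements (tracers for the bulk and bin methods, SDs for the SDM) multiplied by the number of microphysics steps and divided by the elapsed time of the microphysics scheme. The function names and the example numbers are hypothetical and are not measurements from this study.

```python
def element_count(n_cells, elements_per_cell):
    """Total tracers (bulk/bin) or SDs (SDM) in the domain.

    For the bulk and bin methods, elements_per_cell is the number of
    tracers excluding water vapor; for the SDM it is the mean number of
    SDs per cell.
    """
    return n_cells * elements_per_cell


def throughput(n_elements, n_steps, elapsed_seconds):
    """Elements processed per second by the microphysics scheme.

    Assumed form: n_elements * n_steps / elapsed_seconds.
    """
    if elapsed_seconds <= 0.0:
        raise ValueError("elapsed time must be positive")
    return n_elements * n_steps / elapsed_seconds


# Hypothetical example: 64^3 cells, 128 SDs per cell, 1800 steps, 600 s.
n_sds = element_count(64**3, 128)
sdm_rate = throughput(n_sds, n_steps=1800, elapsed_seconds=600.0)
```

Under this definition, two schemes with the same elapsed time differ in throughput only through the number of tracers or SDs they carry, which is why the SD numbers needed for convergence are discussed separately.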

To evaluate physical performance, we should confirm that we obtained qualitatively comparable results faster with the new SDM than with the original SDM. In terms of throughput, we should also confirm that we obtained qualitatively improved numerical solutions if the elapsed time was approximately the same.

Next, we briefly describe the original SCALE-SDM and the other cloud microphysics schemes used for performance evaluation. We refer to the SCALE-SDM version 5.2.6 (retrieved 6 June 2022 from Bitbucket, contrib/SDM_develop) as the original SCALE-SDM. Meanwhile, the new SCALE-SDM is based on the develop branch, which branches off from version 5.4.5. The new SCALE-SDM also includes a modification from SCALE version 5.4.5 to generate an initial condition of water vapor mass mixing ratio that is similar to that generated by the original SCALE-SDM. The original SCALE-SDM was used only for numerical experiments with the “original” SDM, as labeled hereinafter. When focusing on differences among cloud microphysics schemes, we will refer to the SDM schemes associated with the new SCALE-SDM and the original SCALE-SDM as SDM-new and SDM-orig, respectively.

For the microphysics scheme, we used the Seiki and Nakajima (2014) scheme as a two-moment bulk method and the Suzuki et al. (2010) scheme as a (one-moment) bin method, both implemented in SCALE. The Seiki and Nakajima (2014) scheme solves the number and mass mixing ratios of two water and three ice substance categories, while the Suzuki et al. (2010) scheme solves the mass mixing ratio of each bin in the discretized DSD for water and ice substances. We considered liquid-phase processes in the bin method and liquid- or mixed-phase processes (only for discussion in Sect. 6.1) in the bulk scheme. For later comparison with the SDM, we also introduced the subgrid-scale evaporation model (Morrison and Grabowski, 2008) in the Seiki and Nakajima (2014) scheme. This model considers a decrease in the cloud droplet number, depending on the entrainment and mixing scenario controlled by a parameter m (ranging from 0, indicating homogeneous mixing, to 1 for inhomogeneous mixing). We did not consider the delayed evaporation by mixing (Jarecka et al., 2013) because of the increased computational cost, which should be addressed in future work.

General optimization has been applied to the Seiki and Nakajima (2014) scheme. In this scheme, SIMD instructions vectorize the innermost loop for the vertical grid index, performing complex calculations on each water substance. The innermost calculations are divided into separate loops to improve computational performance using cache. However, there may still be room to find optimal loop fission and reordering of calculations to reduce the latency of operations. In terms of computational cost, optimization is applied to the Suzuki et al. (2010) scheme. However, the innermost loops for bins are not vectorized for a small number of iterations. The future issues for optimization of the mixed-phase SDM are discussed in Sect. 6.1.

SCALE adopts terrain-following coordinates and contains features of map projection as a regional numerical model. However, since the additional computational cost and data movement for these mappings cannot be ignored for meter-scale-resolution simulations, we excluded these features in the new SCALE-SDM for the dynamical core, turbulence scheme, and microphysics scheme.

4.2 Warm-bubble experiment

We first evaluated the computational and physical performances via simple, idealized warm-bubble experiments. The computational domain was km for x, y, and z directions. For the lateral boundaries, doubly periodic conditions were imposed on the atmospheric variables and positions of the SDs. The grid length was 100 m. The initial potential temperature θ, relative humidity (RH), and surface pressure Psfc were as follows:

The air density was set to be in hydrostatic balance. We added a cosine-bell-type perturbation of the potential temperature θ′ to the initial field to induce thermal convection:

For the SDM, the initial aerosol distribution was the same as that in vanZanten et al. (2011). For the two-moment bulk and bin methods, we used Twomey's activation formula and activated CCN (CCNact) to cloud droplets according to the supersaturation (S) as CCNact = 100 S^0.462 cm⁻³. We used the mixing scenario parameter m=0.5 in the two-moment bulk method as a typical value for the pristine case in Jarecka et al. (2013). The uniform sampling method was used to initialize the aerosol mass dissolved in a droplet and multiplicity. In the SDM-orig, SDs were initialized so that they were randomly distributed in the domain. In contrast, for SDM-new, SDs were initialized such that the SD number density was proportional to air density. In addition, to reduce the statistical fluctuations caused by a varying number of SDs in space, we used the Sobol sequence (a low-discrepancy sequence) instead of pseudorandom numbers in the four-dimensional space of positions and aerosol dry radius in each block.
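The idea of placing SDs with a number density proportional to air density, using a low-discrepancy sequence rather than pseudorandom numbers, can be sketched in one dimension as follows. This is an illustration under several assumptions that are not from the source: a van der Corput sequence (a one-dimensional low-discrepancy sequence) stands in for the four-dimensional Sobol sequence, the air density is taken as a simple exponential profile with a hypothetical scale height, and only the vertical coordinate is sampled by inverting the cumulative density.

```python
import math


def van_der_corput(i, base=2):
    """i-th element (i >= 0) of the van der Corput sequence in [0, 1)."""
    value, denom = 0.0, 1.0
    while i > 0:
        i, digit = divmod(i, base)
        denom *= base
        value += digit / denom
    return value


def sample_heights(n, z_top, scale_height):
    """Heights in [0, z_top) with number density ~ exp(-z / scale_height).

    Uses inverse-transform sampling: a low-discrepancy point u in [0, 1)
    is mapped through the inverse cumulative distribution of the assumed
    (hypothetical) exponential density profile.
    """
    norm = 1.0 - math.exp(-z_top / scale_height)
    heights = []
    for i in range(1, n + 1):
        u = van_der_corput(i)
        heights.append(-scale_height * math.log(1.0 - u * norm))
    return heights


# Hypothetical column: 3000 m deep, 8000 m density scale height.
z = sample_heights(1024, z_top=3000.0, scale_height=8000.0)
n_lower = sum(1 for h in z if h < 1500.0)
n_upper = len(z) - n_lower
```

With a low-discrepancy sequence, the number of samples falling in any height interval stays close to its expected value, which is the property that reduces the cell-to-cell fluctuation of SD numbers compared with pseudorandom placement.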

For the computational setup, the domain was decomposed in the y direction into four MPI processes on one node of FX1000 (A64FX, 2.2 GHz). Local domains in each MPI process were further decomposed into blocks of size for x, y, and z directions to apply cache blocking for SDM-new. For the numerical precision of floating-point numbers, FP64 was used for the dynamics, two-moment bulk method, bin method, and SDM-orig. In contrast, SDM-new used mixed precision, with calculations for SDs primarily performed in FP32. For time measurement, we inserted MPI_Wtime and barrier synchronization at the start and end of each measurement interval. In this experimental setting, there were no background shear flows, and the simulated convective precipitation systems were localized and stationary in some MPI processes, thereby imposing a large load imbalance of computational costs. However, the execution time was almost the same in the presence and absence of barrier synchronization owing to the stationarity of the convective precipitation systems. In addition, if the measurement intervals were nested, the times measured at the lowest nesting level did not include the wait time between MPI processes. We were therefore able to evaluate the performance of each microphysics subprocess without this additional time. Time integrations were performed for 1800 s by using Δtdyn=0.2 s for dynamics and Δt=1.0 s for other physics processes.
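The step counts implied by these time steps can be checked with a short Python sketch. It assumes the common arrangement in which the dynamics is sub-cycled an integer number of times within each physics step; the source states only the two step sizes and the integration length, and the function name `step_counts` is hypothetical.

```python
def step_counts(t_end, dt_dyn, dt_phys):
    """Return (dynamics steps, physics steps, dynamics substeps per physics step).

    Assumes dt_phys is an integer multiple of dt_dyn and t_end is an
    integer multiple of dt_phys (checked up to rounding error).
    """
    n_sub = round(dt_phys / dt_dyn)
    if abs(n_sub * dt_dyn - dt_phys) > 1e-9 * dt_phys:
        raise ValueError("dt_phys must be a multiple of dt_dyn")
    n_phys = round(t_end / dt_phys)
    if abs(n_phys * dt_phys - t_end) > 1e-9 * t_end:
        raise ValueError("t_end must be a multiple of dt_phys")
    return n_phys * n_sub, n_phys, n_sub


# Values from the warm-bubble setup: 1800 s, 0.2 s dynamics, 1.0 s physics.
n_dyn, n_phys, n_sub = step_counts(1800.0, 0.2, 1.0)
```

For the setup above, this gives 9000 dynamics steps and 1800 physics steps, with 5 dynamics substeps per physics step. The physics step count is the "number of steps" that enters a throughput measurement of the microphysics scheme.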

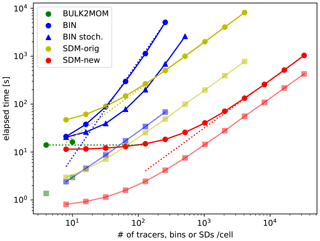

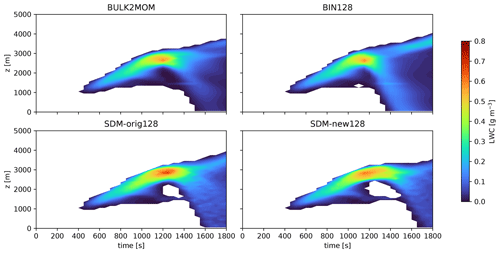

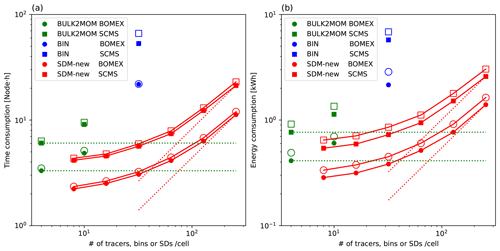

Figure 4. Elapsed times of the total (circles) as well as tracer advection and SD tracking (squares) using the two-moment bulk method (green), bin method (blue), SDM-orig (yellow), and SDM-new (red) with different numbers of tracers or mean SDs per cell. Elapsed times of the total (triangles) using the bin method with stochastic collision–coalescence algorithms are also shown. Here, SD tracking included SD movement and sorting with a block as a key. The dotted blue line is the line proportional to N². The dotted red and yellow lines are lines proportional to N. The dotted green line indicates a constant determined by N. Here, N is the number of tracers, bins, or SDs per cell.

Figure 4 shows the elapsed times of the warm-bubble experiments for various cloud microphysics schemes and different numbers of tracers or SDs per cell. Here, we show only the elapsed times of those numerical simulations that were completed in less than 3 h and that required less than 28 GB of memory. The elapsed time obtained using the bin method (BIN) behaves as O(N²) because all possible pairs of the N droplet size bins are considered for calculating collision–coalescence, while that of the SDM-orig behaves as O(N), indicating that the collision–coalescence calculation developed by Shima et al. (2009) reduces the elapsed time. Sato et al. (2009) proposed that the possible combinations of collision–coalescence of the bin method () could be reduced using Monte Carlo integration, which is similar to the SDM; hence, Fig. 4 also shows the results using this option, in which we use , and 4096 for , and 512, respectively. We should use M∝N for reducing the order of the computational complexity to O(N). However, when we set the number of bins to N≥128, the computations were terminated due to large negative values of liquid water that fixers cannot compensate for. This is consistent with a previous study (Sato et al., 2009), which stated that should be used. If we can use this option stably in the future, the elapsed time of the bin method would become comparable to that of SDM-orig. Even if we consider the above point, the SDM-new drastically reduced the elapsed time compared to the bin method for the same number of bins or SDs. Moreover, the elapsed time obtained using the SDM-new with 128 SDs per cell was about the same as that obtained using the two-moment bulk method (BULK2MOM).
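The difference between the O(N²) and O(N) scalings can be made concrete with a small Python sketch. It follows the pairing idea of Shima et al. (2009) as commonly described: the SDs in a cell are randomly permuted, only ⌊N/2⌋ non-overlapping candidate pairs are examined, and the collision probability of each examined pair is scaled up to compensate for the pairs that were skipped. The function names are hypothetical, and the sketch covers only the pair selection, not the coalescence update itself.

```python
import random


def all_pairs_count(n):
    """Number of distinct pairs among n items, which grows as O(n^2)."""
    return n * (n - 1) // 2


def sdm_candidate_pairs(n, rng):
    """Pick floor(n/2) non-overlapping candidate pairs, an O(n) operation.

    Returns the pairs and the factor by which each pair's collision
    probability is scaled to account for the pairs not examined.
    """
    perm = list(range(n))
    rng.shuffle(perm)
    pairs = [(perm[2 * k], perm[2 * k + 1]) for k in range(n // 2)]
    scale = all_pairs_count(n) / len(pairs) if pairs else 0.0
    return pairs, scale


rng = random.Random(0)  # fixed seed so the example is reproducible
pairs, scale = sdm_candidate_pairs(128, rng)
```

For N = 128, an all-pairs evaluation visits 8128 pairs, whereas the candidate-pair approach visits 64 pairs with a scaling factor of 127. This is the origin of the linear growth of the SDM elapsed time with N in Fig. 4.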

The results seem to contradict the intuition that computations using sophisticated cloud microphysics schemes take more time than simpler schemes because of the high computational costs of the former. The main reason for the present results is related to the tracer advection and SD tracking, which is a bottleneck for the elapsed times, as described below, rather than to other cloud microphysics subprocesses. The elapsed times of tracer advection and SD tracking are shown in Fig. 4. The elapsed times of tracer advection and SD tracking obtained using the bin method and SDM-orig are comparable and increase as O(N). For small N, the elapsed time of tracer advection and SD tracking for the SDM-new up to 32 SDs per cell is shorter than that for the two-moment bulk method, which is advantageous in terms of the elapsed time of simulations.

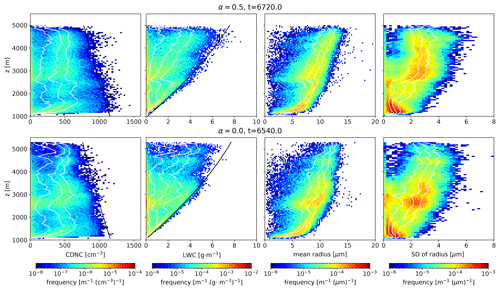

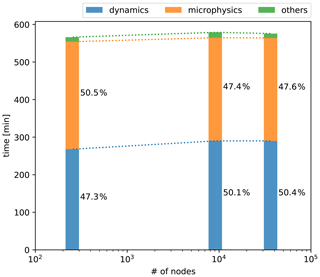

The advantages of SDM-new over the two-moment bulk method for calculating tracer and SD dynamics are fewer calculations, higher compactness, and more reasonable use of low-precision arithmetic for SD tracking than for tracer advection. While tracer advection requires a high-order difference scheme to reduce the effect of numerical viscosity, SD tracking does not require a high-order scheme. We used Fujitsu's performance analysis tool (fapp) to measure the number of floating-point operations (FLOPs). We found 303.915 FLOPs per grid point and tracer for tracer advection (UD5) excluding FCT and 164.3 FLOPs per SD for SD movement using CVI of second-order spatial accuracy. Since the calculation of UD5 requires values at five grid points and halo regions of width 3 in each direction, the calculations are not localized, and a relatively large amount of communication is necessary. For SD tracking, the calculations for a single SD require only the grid cells that contain the SD, and communication is necessary only when the SD moves out of the MPI process. If FP32 is used for tracer advection, one of the advantages of the SDM-new over the two-moment bulk method is lost. However, the calculations of tracer advection require differential operations, which may cause cancellation of significant digits. This likely cannot be ignored for high-resolution simulations where the amplitude of small-scale perturbations from the mean state decreases, especially for variables that have stratified structures (e.g., water vapor mass mixing ratio). On the other hand, for the proposed SD tracking, the numerical representation precision of the SD positions in physical space becomes more accurate as the grid length and time interval decrease simultaneously. Therefore, the use of FP32 for high-resolution simulations is reasonable. Of course, another important factor behind these results is the fact that the calculations of other SDM subprocesses are no longer bottlenecks in SDM-new.
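The two precision arguments can be illustrated with a short Python sketch that emulates FP32 rounding using the standard `struct` module. The numbers are hypothetical: a mean water vapor mixing ratio of 0.015 with a perturbation of 1e-10 between neighboring grid points, and SD coordinates whose magnitude is bounded by the grid length. The latter assumes that positions are stored relative to a local origin such as a cell or block, which is an assumption of this sketch.

```python
import math
import struct


def to_fp32(x):
    """Round a Python float (FP64) to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def fp32_difference(a, b):
    """Difference a - b when both operands are first stored in FP32."""
    return to_fp32(to_fp32(a) - to_fp32(b))


def fp32_spacing(x):
    """Gap between adjacent FP32 values near a normal number x != 0."""
    _, exponent = math.frexp(abs(x))
    return 2.0 ** (exponent - 24)


# Differencing a stratified field: the small perturbation is lost in FP32.
mean_state = 0.015
perturbation = 1e-10
diff_fp64 = (mean_state + perturbation) - mean_state
diff_fp32 = fp32_difference(mean_state + perturbation, mean_state)

# Position precision for coordinates bounded by the grid length.
spacing_100m = fp32_spacing(100.0)  # about 7.6e-6 m
spacing_2m = fp32_spacing(2.0)      # about 2.4e-7 m
```

In this example the FP32 difference is exactly zero, because the perturbation is smaller than the spacing of FP32 values near the mean state, while the FP64 difference retains it. In contrast, the absolute spacing of a grid-relative position shrinks as the grid length decreases, which is consistent with the argument that FP32 becomes more, not less, suitable for SD tracking at high resolution.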