the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Stochastic ensemble climate forecast with an analogue model

Céline Déandréis

This paper presents a system to perform large-ensemble climate stochastic forecasts. The system is based on random analogue sampling of sea-level pressure data from the NCEP reanalysis. It is tested to forecast a North Atlantic Oscillation (NAO) index and the daily average temperature in five European stations. We simulated 100-member ensembles of averages over lead times from 5 days to 80 days in a hindcast mode, i.e., from a meteorological to a seasonal forecast. We tested the hindcast simulations with the usual forecast skill scores (CRPS or correlation) against persistence and climatology. We find significantly positive skill scores for all timescales. Although this model cannot outperform numerical weather prediction, it presents an interesting benchmark that could complement climatology or persistence forecast.

- Article

(4290 KB) - Full-text XML

- BibTeX

- EndNote

Stochastic weather generators (SWGs) have been devised to simulate many and long sequences of climate variables that yield realistic statistical properties (Semenov and Barrow, 1997). Their main practical use has been to investigate the probability distribution of local variables such as precipitation, temperature, or wind speed, and their impacts on agriculture (Carter, 1996), energy (Parey et al., 2014), or ecosystems (Maraun et al., 2010). Such systems can simulate hundreds or thousands of trajectories on desktop computers and propose cheap alternatives to climate model simulations.

There are many categories of SWGs (Ailliot et al., 2015). Some SWGs are explicit random processes, whose parameters are obtained from observations of the variable to be simulated (Parey et al., 2014). Some SWGs are based on a random resampling of the observations (Iizumi et al., 2012). Some SWGs simulate local variables from their dependence on large-scale variables such as the atmospheric circulation (Kreienkamp et al., 2013). This allows us to simulate spatially coherent multivariate fields (Yiou, 2014; Sparks et al., 2018) and can be used for downscaling (Wilks, 1999).

SWGs that use observations as input could in principle be used to forecast variables. This is the case for analogue weather generators (Yiou, 2014). Methods of analogues of atmospheric circulation were first devised for weather forecast (Lorenz, 1969; van den Dool, 1989). They were abandoned when numerical weather prediction was developed and implemented, because their performance was deemed inadequate (van den Dool, 2007). However, recent studies on nowcasting have shown that analogue-based methods could outperform numerical weather prediction for precipitation (Atencia and Zawadzki, 2015). Yiou (2014) showed some skill for temperature simulations in Europe of an analogue SWG.

Due to uncertainties in observations and the high sensitivity to initial conditions (van den Dool, 2007), weather forecasts estimate probability density functions rather than deterministic meteorological values. Therefore, weather forecasts examine the properties of all possible trajectories of an atmospheric system from an ensemble of initial conditions. Such properties include the range and the median, for example. Then one can compare how the ensemble of trajectories compares to observations and other reference forecasts. Numerical weather forecasts rely on large ensembles of model simulations and require a massive use of supercomputers in order to provide estimates of the probability density function (pdf) of variables of interest, for various lead times. Being able to increase the ensemble size of weather forecast systems in order to lower the bias of the forecast skill has been a challenge of major centers of weather prediction (Weisheimer and Palmer, 2014).

The most trivial prediction systems are based on either climatology (i.e., predicting from the seasonal average) or persistence (i.e., predicting from the past observed values) (Wilks, 1995). Probabilistic and statistical models can provide more sophisticated benchmarks for weather forecast systems, still without simulating the underlying primitive hydrodynamic equations and using supercomputers. For example, statistical models of forecast for precipitation based on analogues (of precipitation) were tested for North America (Atencia and Zawadzki, 2015). Such systems tend to outperform numerical weather forecast systems, although their computing cost is steeper than most SWGs. Therefore the potential of analogue-based methods can be useful to assess probability distributions, rather than a purely deterministic forecast.

Machine learning algorithms were recently devised to simulate complex systems (Pathak et al., 2018a) with surprising performances. Such algorithms are sophisticated ways of computing analogues of observed trajectories in a learning step and simulating potentially new trajectories from this learning. The main drawback is that such algorithms generally require a tricky tuning of parameters that might not be based on a physical intuition. From the inspiration of machine learning algorithms, we propose devising a weather forecast system based on a stochastic weather generator that uses analogues of circulation to generate large ensembles of trajectories. The rationale for using analogues, rather than more sophisticated machine learning, is that they correspond to a physical interpretation of relations between large scales and regional scales. Moreover, mathematical results in dynamical system theory (Freitas et al., 2016; Lucarini et al., 2016) suggest that properties of recurring patterns are asymptotically independent of the distance that is used to compute analogues.

This paper presents tests of such a system to forecast temperatures in Europe and an index of the North Atlantic Oscillation (NAO). The NAO controls the strength and direction of westerly winds and location of storm tracks across the North Atlantic in the winter (Hurrell et al., 2003). Positive values of the index indicate a strengthened Azores anticyclone and a weaker Icelandic low. Negative values indicate a weak Azores anticyclone and a strong Icelandic low. The North Atlantic Oscillation is strongly tied to temperature and precipitation variations in Europe (Slonosky and Yiou, 2001).

Since the setup of such a system is fairly light, it is possible to test it for time leads from a meteorological forecast (5 days ahead) to a seasonal forecast (80 days ahead). We test this system in hindcast experiments to forecast climate variables between 1970 and 2010. The tests are performed with the usual skill scores (continuous rank probability score and correlation).

The paper is organized as follows. Section 2 presents the datasets that are used as input of the system. Section 3 presents the forecast system based on analogues, the skill scores, and the experimental protocol. Section 4 presents the results on simulations of the NAO index and European temperatures.

We used data from different sources for sea-level pressure (SLP), NAO index, and temperatures. SLP data are used for analogue computations as a predictor. The NAO index and temperatures are the predictands (i.e., variables to be predicted). It is important that they share a common chronology, in order to allow their simulation because the NAO index and temperatures are simulated from SLP analogues.

2.1 Sea-level pressure

We use the reanalysis data of the National Centers for Environmental Prediction (NCEP) (Kistler et al., 2001). We consider the SLP over the North Atlantic region. We used SLP daily averages between January 1948 and 30 April 2018. The horizontal resolution is 2.5∘ in longitude and latitude. The rationale of using this reanalysis is that it covers more than 60 years and is regularly updated, which makes it a good candidate for a continuous time forecast exercise.

One of the caveats of this reanalysis dataset is the lack of homogeneity of assimilated data, in particular before the satellite era. This can lead to breaks in pressure-related variables, although such breaks are mostly detected in the Southern Hemisphere and the Arctic regions (Sturaro, 2003). We are not interested in the evaluation of SLP trends; therefore, breaks should only marginally impact our results.

2.2 NAO index

The NAO is a major mode of atmospheric variability in the North Atlantic (Hurrell et al., 2003). Its intensity is determined by an index that can be computed as the normalized sea-level pressure difference between the Azores and Iceland (Hurrell, 1995). The NAO index is related to the strength and direction of the westerlies, so that high values correspond to zonal flows across the North Atlantic region, stormy conditions, and rather high temperatures in western Europe (Slonosky and Yiou, 2001; Hurrell et al., 2003).

We retrieved the daily NAO index from the NOAA web site: http://www.cpc.ncep.noaa.gov/products/precip/CWlink/pna/nao.shtml (last access: 12 February 2019).

The procedure to calculate the daily NAO teleconnection indices is detailed on the NOAA web site. In short, a rotated principal component analysis (RPCA) is applied to monthly averages of geopotential height at 500 hPa (Z500) anomalies (Barnston and Livezey, 1987) in the 20–90∘ N region, between January 1950 and December 2000, from the NCEP reanalysis. The empirical orthogonal functions (EOFs) provide climatological monthly teleconnection patterns (Wilks, 1995). Those monthly teleconnection patterns are interpolated for every day in the year. Then daily Z500 anomaly fields are projected onto the interpolated climatological teleconnection patterns in order to obtain a daily NAO index.

The geographical domain on which this NAO index is computed is larger than the one for SLP data. Scaife et al. (2014) used an NAO index to test the UKMO seasonal forecast system. The index they used is based on monthly SLP differences between the Azores and Iceland, and is therefore different from ours.

2.3 European temperatures

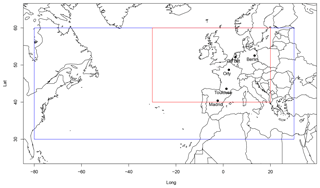

We took daily averages of temperatures from the ECAD project (Klein-Tank et al., 2002). We extracted data from Berlin, De Bilt, Toulouse, Orly, and Madrid (Fig. 1). Those five stations cover a large longitudinal and latitudinal range in western Europe. These datasets were also chosen because

-

they start before 1948 and end after 2010. This allows the computation of analogue temperatures with the SLP from the NCEP reanalysis, which includes that period, and

-

they contain less than 10 % of the missing data.

These two criteria allow 528 out of the 11 422 ECAD stations to be kept that are available in 2018.

3.1 Analogues of circulation

Analogues of circulation are computed on SLP data from NCEP (Sect. 2.1). For each day between 1 January 1948 and 31 December 2017, the 20 best analogues (with respect to a Euclidean distance) in a different year are searched. This follows the procedure of (Yiou et al., 2013). The analogues are computed over two regions (large region: North Atlantic region (80∘ W–30∘ E; 30–70∘ N); small region: western Europe (30∘ W–20∘ E; 40–60∘ N)). The large region is used to simulate/forecast the NAO index. This choice is justified by the fact that the North Atlantic atmospheric circulation patterns are well defined over that region (Michelangeli et al., 1995). The small region is used to simulate/forecast continental temperatures, following the domain recommendations of the analysis of Jézéquel et al. (2018).

3.2 Forecast with an analogue stochastic weather generator

Ensembles of simulations of temperature or the NAO index can be performed with the rules illustrated by Yiou (2014), with an analogue-based stochastic weather generator. This stochastic weather generator can be run in so-called dynamic mode. For each initial day t(1), we have N best SLP analogues. We randomly select one (k) of those N analogues and time , with a probability weight that is

-

inversely proportional to the calendar distance of the analogue dates to t(1). This constrains the time of analogues to move forward;

-

inversely proportional to the correlation of the analogue with the SLP pattern at time t(1). This constraint favors analogues with the best patterns, among those with the closest distance; and

-

a zero weight if is larger than t(1). This ensures that no information coming from times beyond t(1) is used in the simulation process.

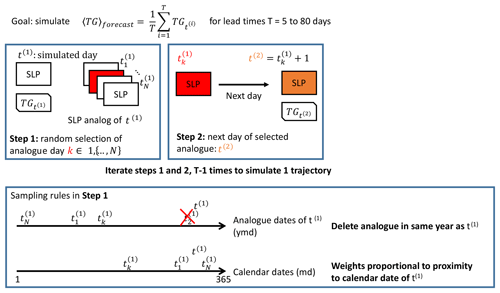

The simulated SLP at the next day t(2) is then the next day of the selected analogue (). We repeat this operation on until a lead time T. This generates one random daily trajectory between t(1) and t(1)+T. The random sampling procedure is repeated S times to generate an ensemble of trajectories. Here, S=100. This procedure is summarized in Fig. 2.

Figure 2Schematic of the iteration procedure to simulate one random trajectory of temperature (TG) from SLP analogues. The values of t(k) are the days to be simulated by the system. The values of are the analogue days for t(k). The red SLP rectangles are the randomly selected analogues according to the rule defined in the lower box. This procedure is repeated S times to generate an ensemble of trajectories.

If we want to simulate a daily sequence starting at time t and until t+T, we have excluded all analogues whose date falls in in the random analogue selection. This provides a simple way of performing hindcast forecast for temperature or NAO index.

In this paper, the lead time T is 5, 10, 20, 40, and 80 days ahead. The latter two values are meant to illustrate the limits of the system. For each daily trajectory starting at t, we compute the temporal average between t and t+T. Therefore, we go from an ensemble meteorological forecast (5 days) to a seasonal forecast (80 days) of averaged trajectories.

The S=100 simulations at each time step allow computation of medians and quantiles of the averaged trajectories.

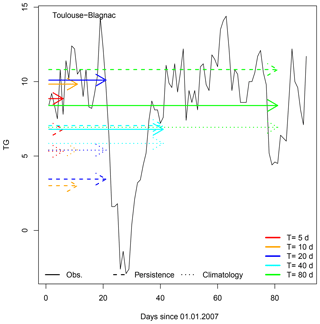

For comparison purposes, climatological and persistence forecasts are also computed. The climatological forecast for a lead time T is determined from the seasonal cycle of T averages of the variable we simulate. For each time t, the climatological forecast for t+T is the mean seasonal cycle of T averages at the calendar day of t. The persistence forecast at time t for a lead time of T is the observed average between t−T and t. Those two types of forecasts are illustrated in Fig. 3. Ensembles of reference forecasts are performed by adding a Gaussian random noise (independent and identically distributed), whose variance is the variance of the observed T averages. These two definitions ensure a coherence between the predictand (averages over T values ahead) and predictors for references (mean of averages over T values for climatology, or average over T preceding values for persistence).

Figure 3Illustration of average forecast for daily mean temperature (TG) in Toulouse, for 1 January 2007. The continuous black line indicates the observations of TG for the first 90 days of 2007. The colors indicate lead times T. The continuous arrows are for averages of observed TG from 1 January 2007 to the lead time T. The dashed lines are for the persistence forecast of TG and the dotted lines are for the climatology forecast of TG on 1 January 2007.

3.3 Alternative autoregressive weather generator

This non-parametric weather generator (based on data resampling) is compared to a parametric autoregressive simple model, based on a similar principle of a relation between SLP and variables like temperature and NAO index. We build a multi-variate autoregressive model of order 1 Rt for SLP by expressing

where A is a “memory” matrix and Bt is multivariate random Gaussian noise with covariance matrix Σ. We assume that the multivariate process Rt yields the same covariance matrix C(0) and same lag-1 covariance matrix C(1) as SLP. The matrices A and Σ can be estimated by

and

The superscript t is matrix transposition. In order to avoid numerical problems in the estimation of C(0)−1, the model in Eq. (1) is formulated on the first 10 principal components (von Storch and Zwiers, 2001) of North Atlantic SLP (80∘ W–30∘ E, 30–70∘ N: blue rectangle in Fig. 1), which account for ≈80 % of the variance. In this way, C(0) is a diagonal matrix whose elements are the variances of the 10 principal components of SLP. Such a parametric model has been used as a null hypothesis for weather regime decomposition by Michelangeli et al. (1995).

We then perform a multilinear linear regression between the five mean daily temperature records (TG at Berlin, Toulouse, Orly, Madrid, and De Bilt) and the NAO index:

where , a is a 6×10 matrix, b is a 10-dimensional vector, and ϵt is a 10-dimensional residual term. We simulate Eq. (1) with the same observed initial conditions as for the analogue forecast. Then Eq. (4) is applied to simulate an ensemble of forecasts of temperatures and NAO index. In this multivariate autoregressive model (mAR1), the temporal atmospheric dynamics is contained in the matrix A. The major caveat of the parametric model in Eqs. (1 and 4) is that it does not contain a seasonal cycle. Introducing a seasonal dependence on the matrices A and a would require many tests that are beyond the scope of this paper.

3.4 Forecast skill

The simplest score we use is the temporal correlation between the median of the ensemble forecast and the observations. Due to the autocorrelation and seasonality of the variables we try to simulate (temperature and NAO index), we consider the correlations for the forecast in the months of January and July.

The continuous rank probability score (CRPS) compares the cumulated density functions of a forecast ensemble and observations yt, for all times t (Ferro, 2014).

Ft is the cumulated density function of the ensemble forecast at time t. It is obtained empirically from the ensemble of simulations of the model. 1(x≥yt) is the empirical cumulated density function of the observation yt.

The score is the average over all times:

The CRPS is a fair score (Ferro, 2014; Zamo and Naveau, 2018) in that it compares the probability distributions of forecasts and observations and it is optimal when they are the same. Discrete estimates of CRPS can yield a bias for small ensemble sizes S. We simulate S=100 trajectories for each forecast. This is more than most ensemble weather forecasts (typically, S=51 for the European Center for Medium Range Forecast (ECMWF) ensemble forecast) and guarantees that the bias due to the number of samples is negligible. A perfect forecast gives a CRPS value of 0.

The CRPS can be decomposed into reliability, resolution, and uncertainty terms (Hersbach, 2000, Eq. 35):

The reliability Reli term measures whether events that are forecast with a certain probability p did occur with the same fraction p from the observations (Hersbach, 2000). The remaining terms of the right-hand side of Eq. (7) are called the potential CRPS; i.e., it is the CRPS value one would obtain if the forecast were perfectly reliable (Reli=0). Hersbach (2000) argues that the potential CRPS is sensitive to the average spread of the ensemble.

The units of CRPS are those of the variable to be forecast; therefore, its interpretation is not universal, and comparing the CRPS values for the NAO index and temperatures is not directly possible. Therefore, it is useful to compare the CRPS of the forecast with the one of a reference forecast. A normalization of CRPS provides a skill score with respect to that reference:

The CRPSS indicates an improvement over the reference forecast. A perfect forecast has a CRPSS of 1. A positive improvement over the reference yields a positive CRPSS value. A value of 0 or less indicates that the forecast is worse than the reference.

We compute CRPSS for the climatological and persistence references. We used packages “SpecsVerification” and “verification” in R to compute CRPS decomposition and CRPSS scores. Hence we compare our stochastic forecasts with forecasts made from climatology and persistence. By construction, the persistence forecast shows an offset with the actual value ahead, because the persistence is the value of the average of observations between t−T and t. The variability of the climatological forecast is low because it is an average of T long sequences.

3.5 Protocol

We tested the ensemble forecast system on the period between 1970 and 2010. We simulate N=100 trajectories of lengths days for a given date t, and average each trajectory over T. The dates t are shifted every days, respectively, for each different value of lead times T.

We recall that the tests we perform are on the average of the forecast between t and t+T, not on the value at time t+T.

The CRPS and CRPSS are computed for each value of lead times T, with references of climatology and persistence. We determine the CRPS reliability and plot quantile–quantile plots for observed and forecast values of the averages. This allows assessment of biases in simulating averages. Variables such as temperature yield a strong seasonality, which is larger than daily variations. It is hence natural to have very high correlations or skill scores if one considers those scores over the whole year. Therefore we compare the skill scores for January and July, in order to avoid obtaining artificially high scores.

We performed our stochastic forecasts on the NAO index and European temperatures with the analogue stochastic weather generator and the mAR1 model. The two datasets (NAO and temperature) are treated separately because the simulations are done with two different analogue computations (Sect. 3.1).

4.1 NAO index

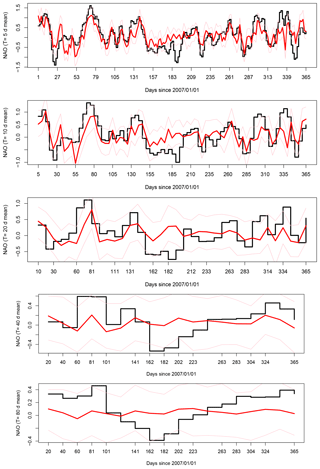

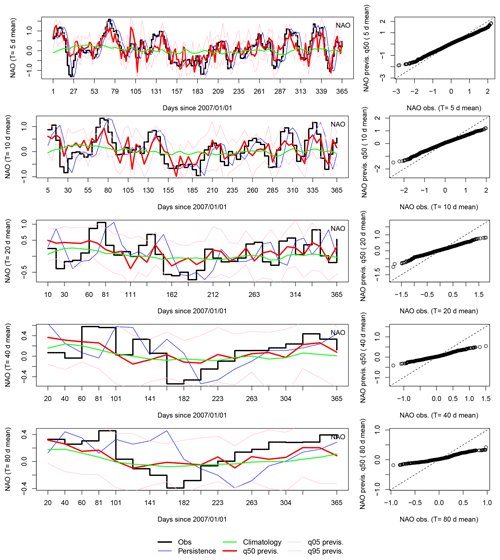

For illustration purposes, we comment on the skill of simulations of 2007. Fig. 4 shows the simulated and observed values of averages of the NAO index, for five values of T (5, 10, 20, 40, and 80 days). This example suggests a good skill to forecast the NAO index from SLP analogues, especially at lead times of T=5 to 10 days.

The q−q plots of the median of simulations versus observations show a bias that reduces the range of variations (Fig. 4, right column). There are two reasons for this reduction of variance, which is proportional to the lead time T:

-

individual simulated trajectories tend to “collapse” toward a climatological value after ≈10 days, and

-

taking the median of all simulations also naturally reduces the variance.

The q−q plots are almost linear. This means that the bias could in principle be corrected by a linear regression. We will not perform such a correction in the sequel.

Figure 4Left column: time series of analogue ensemble forecasts for 2007, for lead times days. Red lines represent the median of 100 simulations; pink lines represent the 5th and 95th quantiles of the 100-member ensemble. Right column: q−q plots of NAO forecasts versus observed values for all years in 1970–2010 for all lead times. The dotted line is the first diagonal.

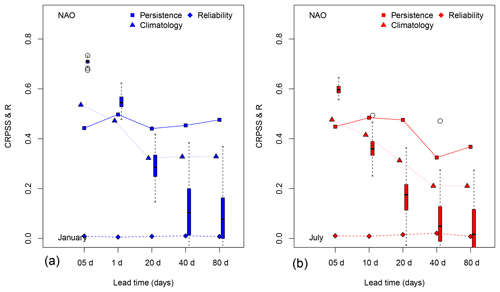

The correlation, CRPS reliability, and CRPSS values for NAO index forecast are shown in Fig. 5. The values of CRPSSpers (for a persistence reference) are rather stable (with a slight increase) near 0.45, and the climatology score slightly decreases with T, although positive.

The CRPS reliability values range from (10 days) to 0.01 (40 days). If they are normalized to the CRPS value (or the variance of the NAO index), this is in the same range as the results of Hersbach (2000) for the ECMWF forecast system up to 10 days.

On the one hand, the CRPSSclim values do not depend on the season (identical triangles in Fig. 5). On the other hand, CRPSSpers values are higher in July than January for lead times T≤10 days, and lower for lead times T≥40 days (squares in Fig. 5). This means that the climatology forecast tends to be better than the persistence forecast for T>5 days (squares higher than triangles in Fig. 5), which can be anticipated because of the inherent lag of the persistence forecast.

The correlation scores decrease with lead time T. The correlation skill is higher in January than in July. It is no longer significantly positive for T larger than 40 days (25th to 75th quantile intervals contain the 0 value). The correlation score values range between 0.65 and 0.82 for T=5-day forecasts, and 0.45 and 0.77 for T=10-day forecasts, depending on the season. This is consistent with the NAO forecast of the Climate Prediction Center (r=0.69 for a 10-day forecast). The correlation score is still significantly positive for T=20 days. The higher correlation scores over the whole year (not shown) reflect a (small) seasonal cycle of the NAO index. This artificially enhances the score for those lead times because SLP analogue predictands tend to reproduce the seasonality of the SLP field (by construction of the simulation procedure), and the NAO index and SLP variations are closely linked on monthly timescales (by construction of the NAO index).

Figure 5Skill scores for the NAO index for lead times T of 5, 10, 20, 40, and 80 days for January (a: blue) and July (b: red). Squares indicate CRPSSpers, triangles CRPSSclim, and boxplots correlation. The diamonds indicate the reliability of CRPS (on the same scale as CRPSS). Triangles are identical for all days, January and July. The boxplots for the correlation indicate the spread across the 100-member ensemble forecasts.

For comparison purposes, the multivariate autoregressive model NAO time series are shown in Fig. 6. The skill scores (correlation and CRPSS) for the NAO index give positive values, but not as high as for the analogue forecast. Since this weather generator is designed to yield stationary statistical properties, the score values do not depend on the season. CRPSS values for climatology range from 0.36 (T=5 days) to 0.23 (T=80 days). Those values are lower than for the analogue system for lead times lower than 20 days (Fig. 5, triangles). The correlation values decrease from 0.58 (T=5 days) to 0.05 (T=80 days), which is lower than for the analogue system (Fig. 5, boxplots).

4.2 European temperatures

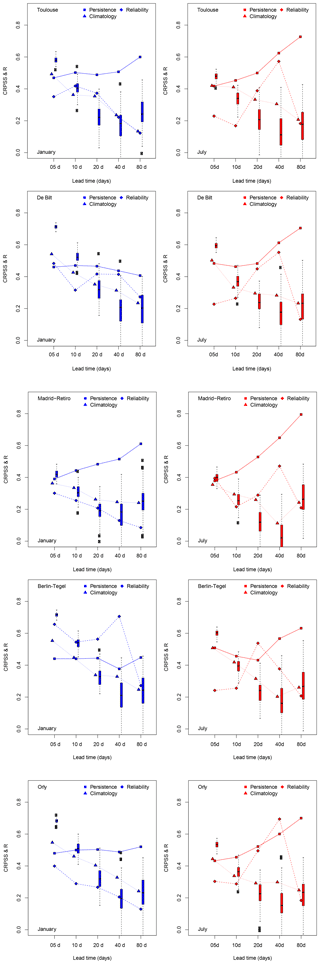

The correlation and CRPSS values for daily mean temperature (TG) forecast are shown in Fig. 7. The values of CRPSSpers (for a persistence reference) increase with lead time T. This is not surprising because the forecast for the next T days is based on the average of the past T days. Therefore, the persistence forecast is always “late” due to the strong seasonality of temperature variations.

The CRPSSclim values decrease with T and plateau near ≈0.2. This skill score is still positive (albeit small) for a seasonal forecast. This positive average skill (CRPSS>0.2) illustrates that the stochastic weather generator follows the seasonality of temperature variations. We note that the CRPSS values for temperature are higher than for the NAO index. This is explained by the seasonality of temperatures, which is more pronounced than in the daily NAO index.

The CRPSS values are rather consistent for four of the stations (Toulouse, De Bilt, Berlin, and Orly). The stochastic model CRPSS fares slightly worse at Madrid station.

The CRPS reliability values are shown in Fig. 7. Their absolute values are larger than for the NAO index, and need to be normalized by the variance of temperature (or the CRPS value itself), as the units of TG are tenths of degrees. The average relative reliability values for lead times lower than 10 days are also similar to what is reported by Hersbach (2000). The reliability values seem to decrease with lead times in winter. They peak at lead times of 40 days in the summer (except for Berlin, where the peak is at 20 days in the summer), and then decrease.

The correlation scores for January and July decrease with lead time T. The correlation score values for all days are above 0.97 due to the seasonality of temperatures and forecasts. Since this is not informative, this is not shown in Fig. 7. The correlations are always significantly positive for Toulouse, De Bilt, Berlin, and Orly. The summer correlation intervals contain the 0 value at Madrid. This is probably due to the fact that temperature is not linked to the atmospheric circulation in the summer, but rather to local processes of evapotranspiration (Schaer et al., 1999; Seneviratne et al., 2006). The distribution of the correlation scores (boxplots in Fig. 7) is significantly positive for lead times up to 20 days. It becomes stable near values of 0.2 (or increases) for lead times larger than 40 days. This indicates that there is certainly an artificial predictability beyond those lead times, which shows an upper limit of forecasts for this system.

Figure 7Skill scores for mean daily temperature in Toulouse and De Bilt, for lead times T of 5, 10, 20, 40, and 80 days. Squares indicate CRPSSpers, triangles CRPSSclim, and circles correlation. The diamonds indicate the reliability of CRPS (on the same scale as CRPSS). Blue symbols (left) are for January and red symbols (right) are for July. Triangles are identical for January and July. The boxplots for the correlation indicate the spread across the 100-member ensemble forecasts. Skill scores for temperature in Madrid and Berlin (continued). Skill scores for temperature in Orly (continued).

The mAR1 system for temperature is not designed to yield a seasonal cycle (contrary to the analogue system). Therefore, the skill scores of this system for temperatures are negative (for CRPSS) or with non-significant correlations.

We have presented a system to generate ensembles of stochastic simulations of the atmospheric circulation, based on pre-computed analogues of circulation. This system is fairly light in terms of computing resources as it can be run on a (reasonably powerful) personal computer. The most fundamental assumption of the system is that the variable to be predicted is linked to the atmospheric circulation. The geographical window for the computation of analogues needs to be adjusted to the variable to be predicted, so that prior expertise is necessary for this analogue forecast system. This implies that this approach would not be adequate for variables that are not connected in any way to the atmospheric circulation (here approximated by SLP). The use of other atmospheric fields (e.g., geopotential heights) might increase the skill of the system. The computation of analogues with other parameters (geographical zone, atmospheric predictor, type of reanalysis, climate model output, etc.) can be easily performed with a web processing service (Hempelmann et al., 2018).

We have tested the performance of the system to simulate an NAO index and temperature variations in five European stations. The performance of such a system cannot beat a meteorological or seasonal forecast with a full-scale atmospheric model (Scaife et al., 2014), but its skill is positive, even at a monthly timescale, with a rather modest computational cost. From the combination of several skill scores (from CRPS and correlation), we obtain a forecast limit of 40 days, beyond which the interpretation of score values is artificial. We emphasize that the forecast is done on averages over lead times, not on the last value of the lead time.

The reason for the positive skill (especially against climatology) remains to be elucidated, especially for lead times longer than 20 days. We conjecture that the information contained in the initial condition (as done with regular weather forecasts) actually controls the mean behavior of the trajectories from that initial condition. But such a skill is actually “concentrated” in the first few days, because the trajectories tend to converge to the climatology after 20 days. The combination of several skill scores shows that such a system is not appropriate for ensemble forecasts beyond lead times of 40 days, which is lower than what is reported by Baker et al. (2018) for a meteorological forecast of the NAO.

Although the forecast system is random, it contains elements of the dynamics of the atmosphere, from the choice of the analogues. This system is consistently better than a simple multivariate autoregressive (mAR1) model for lead times shorter than 20 days. Since the seasonal cycle is naturally embedded in the analogue simulations, there is no need to parameterize it, in contrast to the mAR1 model.

Recent experimental results in chaotic systems have shown that a well-tuned neural network algorithm could simulate efficiently the trajectories of a chaotic dynamical system (Pathak et al., 2018b). Our system is an extreme simplification of an artificial intelligence algorithm, but it does demonstrate the forecast skill of such approaches. The advantage here is the physical constraint between the atmospheric circulation and the variables to be simulated.

This system was tested on temperature for five European datasets. This could be extended to precipitation or wind speed. If a real-time forecast is to be performed, we emphasize that only the predictor (here, SLP) needs to be regularly updated for the computation of analogues.

The goal of such a system is not to replace ensemble numerical weather/seasonal forecast. Rather, it can refine the usual references (climatology and persistence) for the evaluation of skill scores. This would create a third “machine learning” reference for CRPSS that might be harder to beat than the classical references.

The code for the computation of analogues is available at (free CeCILL license) https://a2c2.lsce.ipsl.fr/index.php/deliverables/101-analogue-software (last access: 12 February 2019) and at https://github.com/bird-house/blackswan (last access: 12 February 2019).

The code for simulations is available at https://a2c2.lsce.ipsl.fr/index.php/licences (last access: 8 February 2019) under a free CeCILL licence (http://www.cecill.info/licences.fr.html, last access: 12 February 2019).

The temperature data are available at https://www.ecad.eu (last access: 12 February 2019).

The NAO index data are available at http://www.cpc.ncep.noaa.gov/products/precip/CWlink/pna/nao.shtml (last access: 12 February 2019).

The NCEP reanalysis SLP data are available at https://www.esrl.noaa.gov/psd/data/gridded/data.ncep.reanalysis.html (last access: 12 February 2019).

PY wrote the codes and designed the experiments. CD participated in the writing of the manuscript.

The authors declare that they have no conflict of interest.

This work was supported by a grant from Labex-IPSL and ERC grant no.

338965-A2C2. We thank Mariette Lamige and Zhongya Liu, who performed

preliminary analyses during their training periods. We thank the two

anonymous reviewers for their suggestions to use the CRPS decomposition and a

simple parametric stochastic model.

Edited by:

James Annan

Reviewed by: two anonymous referees

Ailliot, P., Allard, D., Monbet, V., and Naveau, P.: Stochastic weather generators: an overview of weather type models, J. Soc. Franc. Stat., 156, 101–113, 2015. a

Atencia, A. and Zawadzki, I.: A comparison of two techniques for generating nowcasting ensembles. Part II: Analogs selection and comparison of techniques, Mon. Weather Rev., 143, 2890–2908, 2015. a, b

Baker, L. H., Shaffrey, L. C., and Scaife, A. A.: Improved seasonal prediction of UK regional precipitation using atmospheric circulation, Int. J. Climatol., 38, e437–e453, 2018. a

Barnston, A. G. and Livezey, R. E.: Classification, Seasonality and Persistence of Low-Frequency Atmospheric Circulation Patterns, Mon. Weather Rev., 115, 1083–1126, 1987. a

Carter, T. R.: Developing scenarios of atmosphere, weather and climate for northern regions, Agr. Food Sci. Fin., 5, 235–249, 1996. a

Ferro, C. A. T.: Fair scores for ensemble forecasts, Q. J. Roy. Meteorol. Soc., 140, 1917–1923, 2014. a, b

Freitas, A. C. M., Freitas, J. M., and Vaienti, S.: Extreme Value Laws for sequences of intermittent maps, arXiv preprint arXiv:1605.06287, 2016. a

Hempelmann, N., Ehbrecht, C., Alvarez-Castro, C., Brockmann, P., Falk, W., Hoffmann, J., Kindermann, S., Koziol, B., Nangini, C., Radanovics, S., Vautard, R., and Yiou, P.: Web processing service for climate impact and extreme weather event analyses. Flyingpigeon (Version 1.0), Comput. Geosci., 110, 65–72, https://doi.org/10.1016/j.cageo.2017.10.004, 2018. a

Hersbach, H.: Decomposition of the continuous ranked probability score for ensemble prediction systems, Weather Forecast., 15, 559–570, 2000. a, b, c, d, e

Hurrell, J.: Decadal trends in the North-Atlantic Oscillation - regional temperatures and precipitation, Science, 269, 676–679, 1995. a

Hurrell, J., Kushnir, Y., Ottersen, G., and Visbeck, M. (Eds.): The North Atlantic Oscillation : Climatic Significance and Environmental Impact, Vol. 134 of Geophysical monograph, American Geophysical Union, Washington, DC, 2003. a, b, c

Iizumi, T., Takayabu, I., Dairaku, K., Kusaka, H., Nishimori, M., Sakurai, G., Ishizaki, N. N., Adachi, S. A., and Semenov, M. A.: Future change of daily precipitation indices in Japan: A stochastic weather generator-based bootstrap approach to provide probabilistic climate information, J. Geophys. Res.-Atmos., 117, D11114, https://doi.org/10.1029/2011jd017197, 2012. a

Jézéquel, A., Yiou, P., and Radanovics, S.: Role of circulation in European heatwaves using flow analogues, Clim. Dynam., 50, 1145–1159, 2018. a

Kistler, R., Kalnay, E., Collins, W., Saha, S., White, G., Woollen, J., Chelliah, M., Ebisuzaki, W., Kanamitsu, M., Kousky, V., van den Dool, H., Jenne, R., and Fiorino, M.: The NCEP-NCAR 50-year reanalysis: Monthly means CD-ROM and documentation, B. Am. Meteorol. Soc., 82, 247–267, 2001. a

Klein-Tank, A., Wijngaard, J., Konnen, G., Bohm, R., Demaree, G., Gocheva, A., Mileta, M., Pashiardis, S., Hejkrlik, L., Kern-Hansen, C., Heino, R., Bessemoulin, P., Muller-Westermeier, G., Tzanakou, M., Szalai, S., Palsdottir, T., Fitzgerald, D., Rubin, S., Capaldo, M., Maugeri, M., Leitass, A., Bukantis, A., Aberfeld, R., Van Engelen, A., Forland, E., Mietus, M., Coelho, F., Mares, C., Razuvaev, V., Nieplova, E., Cegnar, T., Lopez, J., Dahlstrom, B., Moberg, A., Kirchhofer, W., Ceylan, A., Pachaliuk, O., Alexander, L., and Petrovic, P.: Daily dataset of 20th-century surface air temperature and precipitation series for the European Climate Assessment, Int. J. Climatol., 22, 1441–1453, 2002. a

Kreienkamp, F., Spekat, A., and Enke, W.: The Weather Generator Used in the Empirical Statistical Downscaling Method, WETTREG, Atmosphere, 4, 169–197, https://doi.org/10.3390/atmos4020169, 2013. a

Lorenz, E. N.: Atmospheric Predictability as Revealed by Naturally Occurring Analogues, J. Atmos. Sci., 26, 636–646, 1969. a

Lucarini, V., Faranda, D., Freitas, A. C. M., Freitas, J. M., Holland, M., Kuna, T., Nicol, M., Todd, M., and Vaienti, S.: Extremes and recurrence in dynamical systems, John Wiley & Sons, 2016. a

Maraun, D., Wetterhall, F., Ireson, A. M., Chandler, R. E., Kendon, E. J., Widmann, M., Brienen, S., Rust, H. W., Sauter, T., Themessl, M., Venema, V. K. C., Chun, K. P., Goodess, C. M., Jones, R. G., Onof, C., Vrac, M., and Thiele-Eich, I.: Precipitation Downscaling under Climate Change: Recent Developments to Bridge the Gap between Dynamical Models and the End User, Rev. Geophys., 48, Rg3003, https://doi.org/10.1029/2009rg000314, 2010. a

Michelangeli, P., Vautard, R., and Legras, B.: Weather regimes: Recurrence and quasi-stationarity, J. Atmos. Sci., 52, 1237–1256, 1995. a, b

Parey, S., Hoang, T. T. H., and Dacunha-Castelle, D.: Validation of a stochastic temperature generator focusing on extremes, and an example of use for climate change, Clim. Res., 59, 61–75, 2014. a, b

Pathak, J., Hunt, B., Girvan, M., Lu, Z., and Ott, E.: Model-Free Prediction of Large Spatiotemporally Chaotic Systems from Data: A Reservoir Computing Approach, Phys. Rev. Lett., 120, 024102, https://doi.org/10.1103/PhysRevLett.120.024102, 2018a. a

Pathak, J., Wikner, A., Fussell, R., Chandra, S., Hunt, B. R., Girvan, M., and Ott, E.: Hybrid forecasting of chaotic processes: using machine learning in conjunction with a knowledge-based model, Chaos: An Interdisciplinary Journal of Nonlinear Science, 28, 041101, https://doi.org/10.1063/1.5028373, 2018b. a

Scaife, A. A., Arribas, A., Blockley, E., Brookshaw, A., Clark, R. T., Dunstone, N., Eade, R., Fereday, D., Folland, C. K., and Gordon, M.: Skillful long-range prediction of European and North American winters, Geophys. Res. Lett., 41, 2514–2519, 2014. a, b

Schaer, C., Luthi, D., Beyerle, U., and Heise, E.: The soil-precipitation feedback: A process study with a regional climate model, J. Climate, 12, 722–741, 1999. a

Semenov, M. A. and Barrow, E. M.: Use of a stochastic weather generator in the development of climate change scenarios, Clim. Change, 35, 397–414, 1997. a

Seneviratne, S. I., Luthi, D., Litschi, M., and Schaer, C.: Land-atmosphere coupling and climate change in Europe, Nature, 443, 205–209, 2006. a

Slonosky, V. and Yiou, P.: The North Atlantic Oscillation and its relationship with near surface temperature, Geophys. Res. Lett., 28, 807–810, 2001. a, b

Sparks, N. J., Hardwick, S. R., Schmid, M., and Toumi, R.: IMAGE: a multivariate multi-site stochastic weather generator for European weather and climate, Stoch. Environ. Res. Risk Assess., 32, 771–784, 2018. a

Sturaro, G.: A closer look at the climatological discontinuities present in the NCEP/NCAR reanalysis temperature due to the introduction of satellite data, Clim. Dynam., 21, 309–316, https://doi.org/10.1007/s00382-003-0334-4, 2003. a

van den Dool, H. M.: A new look at weather forecasting through analogs, Mon. Weather Rev., 117, 2230–2247, https://doi.org/10.1175/1520-0493(1989)117<2230:ANLAWF>2.0.CO;2, 1989. a

van den Dool, H. M.: Empirical Methods in Short-Term Climate Prediction, Oxford University Press, Oxford, 2007. a, b

von Storch, H. and Zwiers, F. W.: Statistical Analysis in Climate Research, Cambridge University Press, Cambridge, 2001. a

Weisheimer, A. and Palmer, T. N.: On the reliability of seasonal climate forecasts, J. Roy. Soc. Inter., 11, 20131162, https://doi.org/10.1098/rsif.2013.1162, 2014. a

Wilks, D.: Statistical Methods in the Atmospheric Sciences: An Introduction, Academic Press, San Diego, 1995. a, b

Wilks, D. S.: Multisite downscaling of daily precipitation with a stochastic weather generator, Clim. Res., 11, 125–136, 1999. a

Yiou, P.: AnaWEGE: a weather generator based on analogues of atmospheric circulation, Geosci. Model Dev., 7, 531–543, https://doi.org/10.5194/gmd-7-531-2014, 2014. a, b, c, d

Yiou, P., Salameh, T., Drobinski, P., Menut, L., Vautard, R., and Vrac, M.: Ensemble reconstruction of the atmospheric column from surface pressure using analogues, Clim. Dynam., 41, 1333–1344, https://doi.org/10.1007/s00382-012-1626-3, 2013. a

Zamo, M. and Naveau, P.: Estimation of the Continuous Ranked Probability Score with Limited Information and Applications to Ensemble Weather Forecasts, Math. Geosci., 50, 209–234, 2018. a