the Creative Commons Attribution 4.0 License.

Machine learning for numerical weather and climate modelling: a review

Catherine O. de Burgh-Day

Tennessee Leeuwenburg

Machine learning (ML) is increasing in popularity in the field of weather and climate modelling. Applications range from improved solvers and preconditioners, to parameterization scheme emulation and replacement, and more recently even to full ML-based weather and climate prediction models. While ML has been used in this space for more than 25 years, it is only in the last 10 or so years that progress has accelerated to the point that ML applications are becoming competitive with numerical knowledge-based alternatives. In this review, we provide a roughly chronological summary of the application of ML to aspects of weather and climate modelling from early publications through to the latest progress at the time of writing. We also provide an overview of key ML terms, methodologies, and ethical considerations. Finally, we discuss some potentially beneficial future research directions. Our aim is to provide a primer for researchers and model developers to rapidly familiarize and update themselves with the world of ML in the context of weather and climate models.

Current state-of-the-art weather and climate models use numerical methods to solve equations representing the dynamics of the atmosphere and ocean on meshed grids. The grid-scale effects of processes that are too small to be resolved are either represented by parametrization schemes or prescribed. These numerical weather and climate forecasts are computationally costly and are not easy to implement on specialized compute resources such as graphics processing units†1 (GPUs; although there are efforts underway to do so, for example in LFRic; Adams et al., 2019). One of the main approaches to improving forecast accuracy is to increase model resolution (reduced time step between model increments and/or decreased grid spacing), but due to the high computational cost of this approach, improvements in model skill are hampered by the finite supercomputer capacity available. An additional pathway to improve skill is to improve the understanding and representation of subgrid-scale processes; however this is again a potentially computationally costly exercise.

In the remainder of this introduction, we overview the state of machine learning in weather and climate research without always providing references; we instead provide relevant references in the detailed sections that follow.

Machine learning is an increasingly powerful and popular tool. It has proven to be computationally efficient, as well as being an accurate way to model subgrid-scale processes. The term “machine learning” (ML) was first coined by Arthur Samuel in the 1950s to refer to a “field of study that gives computers the ability to learn without being explicitly programmed” (http://infolab.stanford.edu/pub/voy/museum/samuel.html, last access: 7 February 2023). Learning by example is the defining characteristic of ML.

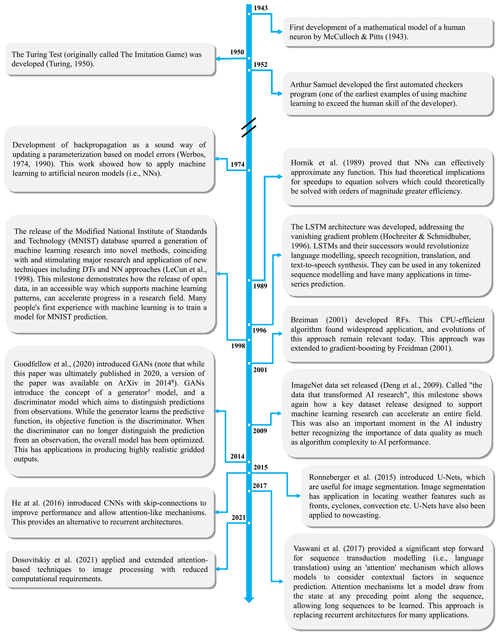

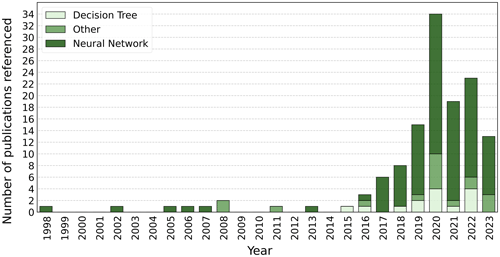

The growing potential for ML in weather and climate modelling is being increasingly recognized by meteorological agencies and researchers around the world. The former is evidenced by the development of strategies and frameworks to better support the development of ML research, such as the Data Science Framework recently published by the Met Office in the UK (https://www.metoffice.gov.uk/research/foundation/informatics-lab/met-office-data-science-framework, last access: 7 February 2023). The latter is made clear by the explosion in publications from academia, government agencies, and private industry in this space, as demonstrated by the rest of this review. Figure 1 shows the number of publications cited in this review using different categories of ML algorithms by year, and it clearly illustrates the increase in the uptake of ML methods by the research community.

Figure 1. A stacked bar graph of the number of publications cited in this review using different categories of ML algorithms per year. For a description of neural networks and decision trees see Sect. 2.1 and 2.2 respectively. The “other” category is a collection of ML model types other than decision trees and neural networks, each of which only had one or two examples of use in this review. This included custom supervised and self-supervised algorithms, support vector machines and relevance vector machine models, regression models, unsupervised learning models, reservoir computing models, and non-NN Gaussian models. This figure includes all references from this review except for seminal ML papers that are on new ML methods (e.g. foundational ML papers from outside the domain of weather and climate modelling), review papers, any paper cited that concerns a topic which is out of scope (e.g. nowcasting), and any other paper which does not present a new method directly applicable to weather and climate modelling. The full table of citations is provided in Appendix A.

This is not necessarily an unbiased sample of the use of different architectures in the literature since the selection of papers cited in this review focuses on telling the story of the growth of the use of ML in weather and climate modelling over time rather than being a comprehensive list of all uses of ML in the literature.

There are established techniques and aspects of the weather and climate modelling lifecycle that would already be considered ML by many. For example, linear regression†, principal component analysis, correlations, and the calculation of teleconnections can all be considered types of ML. Data assimilation techniques could also be considered a form of ML. There are, however, other classes of ML (e.g. neural networks† and decision trees†) which are much less widely used within the weather and climate modelling space but have great potential to be of benefit. There is growing interest in, and increasingly effective application of, these ML techniques to take the place of more traditional approaches to modelling. The potential for ML in weather and climate modelling extends all the way from replacement of individual sub-components of the model (to improve accuracy and reduce computational cost) to full replacement of the entire numerical model.

While ML models are typically computationally costly during training, they can provide very fast predictions at inference† time, especially on GPU hardware. They often also avoid the need to have a full understanding of the processes being represented and can learn and infer complex relationships without any need for them to be explicitly encoded. These properties make ML an attractive alternative to traditional parametrization, numerical solver, and modelling methods.

Neural networks (NNs, explained further in Sect. 2.1) in particular are an increasingly favoured alternative approach for representing subgrid-scale processes or replacing numerical models entirely. They consist of several interconnected layers† of non-linear nodes†, with the number of intermediate layers depending on the complexity of the system being represented. These nodes allow for the encoding of an arbitrary number of interrelationships between arbitrary parameters to represent the system, removing the need to explicitly encode these interrelationships into a parameterization or numerical model.

One challenge that must be overcome before there will be more widespread acceptance of ML as an alternative to traditional modelling methods is that ML is seen as lacking interpretability. Most ML models do not explicitly represent the physical processes they are simulating, although physics-constrained ML is a new and growing field which goes some way to addressing this (see Sect. 6). Furthermore, the techniques available to gain insight into the relative importance and predictive mechanism of each predictor (i.e. the model inputs) are limited. In contrast, traditional models are usually driven by some understanding and/or representation of the physical mechanisms and processes which are occurring. This makes it possible to more easily gain insight into what physical drivers could explain a given output. The “black box” nature of many current ML approaches to parametrization makes them an unpopular choice for many researchers (and can be off-putting for decision makers) since, for example, explaining what went wrong in a model after a bad forecast can be more challenging if there are processes in the model which are not, and cannot, be understood through the lens of physics. However, increasing attention is being paid to the interpretability of ML models (e.g. McGovern et al., 2019; Toms et al., 2020; Samek et al., 2021), and there are existing methods to provide greater insight into the way physical information is propagated through them (e.g. attention maps, which identify the regions in spatial input data that have the greatest impact on the output field, and ablation studies, which involve comparing reduced data sources and/or models to the original models that have full access to available data to gain insight into the models).

As with their traditional counterparts, ML-based parametrizations and emulators are typically initially developed in single-column models, aquaplanet configurations, or otherwise simplified models. There are many examples of ML-based schemes which have been shown to perform well against benchmark alternatives in this setting, only to fail to do so in a realistic model setting. A common theme is that these ML schemes rapidly excite instabilities in the model as errors in the ML parametrization push key parameters outside of the domain of the training data as the overall model is integrated forward in time, leading to rapidly escalating errors and to the model “blowing up”. Similarly, many ML-based full-model replacements perform well for short lead times, only to exhibit model drift and a rapid loss of skill for longer lead times due to rapidly growing errors and the model drifting outside its training envelope.

In recent years, however, progress has been made in developing ML parametrizations which are stable within realistic models (i.e. not toy models, aquaplanets, etc.), as well as ML-based full models which can run stably and skilfully to longer lead times. This is usually achieved through training the model on more comprehensive data, employing ML architectures which keep the model outputs within physically real limits or imposing physical constraints or conservation rules within the ML architecture or training loss functions†.
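The idea of imposing a conservation rule through the training loss can be sketched as a penalty term added to a standard error measure. The following is only a minimal illustration under stated assumptions: the constraint (predicted tendencies in a column summing to zero) is a toy stand-in for a real conservation law, and the function name `constrained_loss` and the weight `lam` are hypothetical.

```python
def constrained_loss(pred, target, lam=1.0):
    """Mean squared error plus a penalty on violating a toy conservation rule.

    pred, target: lists of predicted and reference tendencies for one column.
    Assumed (hypothetical) constraint: the tendencies should sum to zero.
    """
    n = len(pred)
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / n
    residual = sum(pred)              # zero when the toy budget is closed
    return mse + lam * residual ** 2


target = [1.0, -0.5, -0.5]            # reference tendencies, budget closed
conserving = [0.9, -0.4, -0.5]        # small errors, budget still closed
leaky = [1.0, -0.5, -0.2]             # a single error that also breaks the budget

print(constrained_loss(conserving, target))
print(constrained_loss(leaky, target))
```

With `lam=0` the loss reduces to the plain mean squared error; increasing `lam` makes the optimizer trade a little pointwise accuracy for outputs that respect the constraint more closely.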

There are still challenges and possible limitations to an ML approach to weather and climate modelling. In most cases, a robust ML model or parameterization scheme should be able to

- remain stable in a full (i.e. non-idealized) model run
- generalize to cases outside its training envelope
- conserve energy and achieve the required closures.

Additionally, for an ML approach to be worthwhile it must provide one or more of the following benefits:

- for ML parametrization schemes,
  - a speedup of the representation of a subgrid-scale process vs. when run with a traditional parametrization scheme, which can make the difference between the scheme being cost-effective to run or not – when it is not cost-effective the process usually needs to be represented with a static forcing or boundary condition file;
  - a speedup of the model vs. when run with traditional parametrization schemes;
  - improved representation of subgrid process(es) over traditional parameterization schemes, as measured by metrics appropriate to the situation;
  - improved overall accuracy and/or skill of the model vs. when run with traditional parametrization schemes;
  - insight into physical processes not provided by current numerical models or theory;
- for full ML models,
  - a speedup of the model vs. an appropriate numerical model control;
  - improved overall accuracy and/or skill of the model vs. an appropriate numerical model control;
  - skilful prediction to greater lead times than an appropriate numerical model control;
  - insight into physical processes not provided by current numerical models or theory.

Furthermore, in some cases of ML approaches to weather and climate modelling problems (particularly for full-model replacement) the work is led by data scientists and ML researchers with limited expertise in weather and climate model evaluation. This can lead to flawed, misleading, or incomplete evaluations. Hewamalage et al. (2022) have sought to rectify this problem by providing a guide to forecast evaluation for data scientists.

The scope of this review is deliberately limited to the application of ML within numerical weather and climate models or to their replacement. This is done to keep the length of this review manageable. ML has enormous utility for other aspects of the forecast value chain such as observation quality assurance, data assimilation, model output postprocessing, forecast product generation, downscaling, impact prediction, and decision support tools. A review of the application of, and progress in, ML in these areas would be of great value but is outside the scope of this review and is left to other work. Molina et al. (2023) have provided a very useful review of ML for climate variability and extremes which is highly complementary to this review. They draw similar lines of delineation in the earth system modelling (ESM) value chain to those mentioned above, describing them as “initializing the ESM, running the ESM, and postprocessing ESM output”. They examine each of these steps in turn, with a focus on the prediction of climate variability and extremes. Here we take a different approach, focusing on one part of the value chain (running the ESM) but looking in more detail at this one part. Additionally, here we consider climate modelling in the context of multiyear and free-running multidecadal simulations but exclude the topic of ML for climate change projections, climate scenarios, and multi-sector dynamics. This is again in the interests of ensuring the scope of the review is manageable rather than because these topics are not worthy of review. On the contrary, a review dedicated to the utility of machine learning in this area would be of enormous value to the community but cannot be adequately explored here. A brief introduction to key ML architectures and concepts, including suggested foundational reading, is also provided to aid readers who are unfamiliar with the subject.

The remainder of this review is structured as follows: in Sect. 2 an introduction to the two ML architectures most prevalent in the review is provided, followed by a suggested methodological approach to applying ML to a problem and finally a brief overview of some of the major ML architectures and algorithms. With this background in place, the application of ML in weather and climate modelling is explored in the following five sections. In Sect. 3, ML use in subgrid parametrization and emulation, along with tools and challenges specific to this domain, are covered. Zooming out from subgrid-scale to processes resolved on the model grid, in Sect. 4 the application of ML for the partial differential equations governing fluid flow is reviewed. Expanding the scope further again to consider the entire system, the use of ML for full-model replacement or emulation is reviewed in Sect. 5. In Sect. 6 the growing field of physics-constrained ML models is introduced, and in Sect. 7 a number of topics tangential to the main focus of this review are briefly mentioned. Setting the work covered in the previous sections in a broader context, a review of the history of, and progress in, ML outside of the fields of weather and climate science is presented in Sect. 8. In Sect. 9 some practical considerations for the integration of ML innovations into operational and climate models are discussed, followed by a short introduction to some of the ethical considerations associated with the use of ML in weather and climate modelling in Sect. 10. In Sect. 11, some future research directions are speculated on, and some suggestions are made for promising areas for progression. Finally, a summary is presented in Sect. 12, and a glossary of terms is provided after the final section to aid the reader in their understanding of key concepts and words (Appendix B).

While the scope of this paper is a review of ML work directly applicable to weather and climate modelling, an abridged introduction to some key fundamental ML concepts is provided here to aid the reader. Suggested starting points for interested readers, including guidance on the utility of different model architectures and algorithms, as well as the connections between different applications and approaches, are as follows:

- Hsieh (2023) provides a thorough textbook on environmental data science including statistics and machine learning.
- Chase et al. (2022a, b) provide an introduction to various machine learning algorithms with worked examples in a tutorial format and an excellent on-ramp to ML for weather and climate modelling.
- Russell and Norvig (2021) provide a comprehensive book regarding artificial intelligence in general.
- Goodfellow et al. (2016) provide a well-regarded book on deep learning theory and modern practice.
- Hastie et al. (2009) provide a book on statistics and machine learning theory.

This introductory section is a brief exposition of the concepts most central to this review. Definitions for this section can be found in the glossary.

The majority of ML methods which have found traction in weather and climate modelling were first developed in fields such as computer vision, natural language processing, and statistical modelling. Few, if any, of the methods mentioned in this paper could be considered unique to weather and climate modelling; however, they have in many cases been modified to a greater or lesser extent to suit the characteristics of the problem.

In this review, the term algorithm refers to the mathematical underpinnings of a machine learning approach. By this definition, decision trees (DTs), NNs, linear regression, and Fourier transforms are examples of algorithms. The two most relevant algorithms for this review are DTs and NNs. Many ML algorithms can be thought of as optimizing a non-linear regression, with deep learning utilizing an extremely high-dimensional model. There is no consensus on the definition of ML, with the term encompassing relatively large or small topical domains depending on who is asked. A good rule of thumb, however, is that any iterative computational process that seeks to minimize a loss function or optimize an objective function can be considered to be a form of ML. Some of the chief concerns in machine learning are generalizability of the models, how to train (optimize the variables of) the model, and how to ensure robustness. The inputs and outputs of machine learning models are often the same as those of physical models or model components.

The term architecture in machine learning refers to a specific way of utilizing an algorithm to achieve a modelling objective reliably. For example, the U-Net† architecture is a specific way of laying out an NN which has proven effective in many applications. The extreme gradient boosting decision tree† architecture is a specific way of utilizing DTs which has proven reliable and effective for an extraordinary number of problems and situations and is an excellent choice as a first tool to experiment with machine learning.
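The rule of thumb given above, that ML is an iterative process which seeks to minimize a loss function, can be illustrated with the simplest possible case: fitting a straight line by gradient descent on the mean squared error. This is a sketch only; the function name `fit_line`, the learning rate, and the synthetic data are illustrative assumptions.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of L = mean((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]        # synthetic data generated from y = 2x + 1
w, b = fit_line(xs, ys)
print(w, b)
```

Training an NN follows the same pattern, with the two variables `w` and `b` replaced by a very large number of weights and biases.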

A major current focus of ML research in the context of weather and climate modelling is new NN-based architectures and algorithms, as well as improved training regimes. Many other algorithms have been and continue to be employed in machine learning more broadly but are not pertinent to this review.

A key point for ML researchers to be aware of is the critical importance of approaching model training carefully. There are many pitfalls which can result in underperformance, unexpected bias, or misclassification. For instance, adversarial examples† can occur “naturally”, and systems which process data can be subject to adversarial attack† through the intentional supply of data designed to fool a trained network.

2.1 Introduction to neural networks

NNs can be regarded as universal function approximators (Hornik et al., 1989; see also Lu et al., 2019). Further, NN architectures can theoretically be themselves modelled as a very wide feed-forward† NN with a single hidden layer†. A Fourier transform is another example of a function approximator, although it is not universal since not all functions are periodic. NNs can therefore theoretically be candidates for the accurate modelling of physical processes, although in practice they cannot always reliably extrapolate beyond their training envelope and as such may not generalize to new regimes. ML models are typically introduced in the literature as being either classification† or regression† models and either supervised† or unsupervised†.

The mathematical underpinning of an NN can be considered distinctly in terms of its evaluation† (i.e. output, or prediction) step and its training update step. The prediction step can be considered as the evaluation of a many dimensional arbitrarily complex function.

The simplest NN is a single-input, single-node network with a simple activation† function. A commonly used activation function for a single neuron is the sigmoid function, which helpfully compresses the output into the range between 0 and 1 while allowing a non-linear response. A classification model will employ a threshold to map the output into the target categories. A regression model seeks to optimize the output result against some target value for the function. Larger networks make more use of linear activations and may utilize heterogeneous activation function choices at different layers.
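The single-input, single-node network described above can be written in a few lines. This is a minimal sketch; the weight, bias, and threshold values are arbitrary illustrative choices rather than trained values.

```python
import math


def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(x, weight, bias):
    """Single-input, single-node network with a sigmoid activation."""
    return sigmoid(weight * x + bias)


def classify(x, weight, bias, threshold=0.5):
    """Binary classification by thresholding the neuron output."""
    return 1 if neuron(x, weight, bias) >= threshold else 0


print(neuron(0.0, 1.0, 0.0))          # sigmoid(0) is exactly 0.5
print(classify(2.0, 1.5, -1.0), classify(0.0, 1.5, -1.0))
```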

Complex NNs are built up from many individual nodes, which may have heterogeneous activation functions and a complex connectome†. The forward pass†, by which inputs are fed into the network and evaluated against activation functions to produce the final prediction, uses computationally efficient processes to quickly produce the result.

The training step for an NN is far more complex. The earliest NNs were designed by hand rather than through automation. The training step uses a back-propagation† algorithm to adjust the weights† and biases† of each node based on the error of the overall prediction from the network.
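The forward pass and the back-propagation update can be sketched for a very small network: one input, a hidden layer of three tanh nodes, and one linear output node. This is an illustration under stated assumptions, not a recommended implementation; the initial weights, learning rate, and target function (y = x²) are arbitrary choices made so that the example is deterministic.

```python
import math


def forward(x, params):
    """Forward pass: one input, a tanh hidden layer, one linear output node."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(w * x + b) for w, b in zip(w1, b1)]
    output = sum(v * h for v, h in zip(w2, hidden)) + b2
    return hidden, output


def mse(xs, ys, params):
    return sum((forward(x, params)[1] - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train_step(xs, ys, params, lr=0.1):
    """One full-batch gradient-descent update using back-propagation."""
    w1, b1, w2, b2 = params
    n, k = len(xs), len(w1)
    g_w1, g_b1, g_w2, g_b2 = [0.0] * k, [0.0] * k, [0.0] * k, 0.0
    for x, y in zip(xs, ys):
        hidden, out = forward(x, params)
        d_out = 2.0 * (out - y) / n            # dL/d(output) for this sample
        g_b2 += d_out
        for j in range(k):
            g_w2[j] += d_out * hidden[j]
            # Chain rule back through tanh: d tanh(z)/dz = 1 - tanh(z)^2.
            d_z = d_out * w2[j] * (1.0 - hidden[j] ** 2)
            g_w1[j] += d_z * x
            g_b1[j] += d_z
    return (
        [w - lr * g for w, g in zip(w1, g_w1)],
        [b - lr * g for b, g in zip(b1, g_b1)],
        [v - lr * g for v, g in zip(w2, g_w2)],
        b2 - lr * g_b2,
    )


xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [x ** 2 for x in xs]
params = ([0.5, -0.5, 0.3], [0.1, -0.1, 0.0], [0.2, -0.3, 0.1], 0.0)
loss_before = mse(xs, ys, params)
for _ in range(500):
    params = train_step(xs, ys, params)
loss_after = mse(xs, ys, params)
print(loss_before, loss_after)
```

The forward pass is a handful of multiplications and additions, whereas each training step needs a forward pass plus a gradient for every weight and bias, which is why training dominates the computational cost.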

Training very large networks was initially impractical. Both hardware and architecture advances have changed this, resulting in the significant increase in the application of NNs to practical problems. Most NN research explores how to utilize different architectures to train more effective networks. There is little research going into improving the prediction step as the effectiveness of a network is limited by its ability to learn rather than its ability to predict. Some research into computational efficiency is relevant to the predictive step. NNs can still be technically challenging to work with, and a lot of skill and knowledge are needed to approach new applications.

The major classes of NN architectures most likely to be encountered are

- small, fully connected networks, which are less commonly featured in recent publications but are still effective for many tasks and are still being applied and may well be encountered in practice;
- convolutional† architectures, first applied to image content recognition, which match the connectome of the network to the fine structure of images in hierarchical fashion to learn to recognize high-level objects in images;
- recurrent token†-sequence architectures, first applied to natural language processing, generation, and translation – applicable to any time-series problem – but now also applied to image and video applications, as well as mixed-mode applications such as text-to-image or text-to-video;
- transformer architectures†, based on the attention mechanism† to provide a non-recurrent architecture that can be trained using parallelized training strategies, which allows larger models to be trained (originally developed for sequence prediction and extended to images processed through vision transformer architectures).
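To make the attention mechanism underlying transformer architectures more concrete, the following sketch implements scaled dot-product attention, which is assumed here to be the relevant variant since the text above does not specify one. The function names and the three two-dimensional "tokens" are illustrative assumptions.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to one."""
    m = max(scores)                            # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:                          # each query is independent of the others
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[c] for w, v in zip(weights, values))
               for c in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)   # self-attention
print(weights[0])
```

Because the loop body for one query does not depend on the result for any other query, all positions in a sequence can be processed at once, in contrast to a recurrent architecture which must step through the sequence in order.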

2.2 Introduction to decision trees

DTs are a series of decision points, typically represented in binary fashion based on a simple threshold. A particular DT of a particular size maps the input conditions into a final “leaf” node† which represents the outcome of the decisions up to that point.
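A DT of this kind can be represented as nested threshold tests ending in leaf nodes. In the sketch below, the feature names, thresholds, and leaf labels are entirely hypothetical and chosen only for illustration.

```python
def predict_tree(tree, sample):
    """Walk a binary decision tree until a leaf node is reached."""
    node = tree
    while "leaf" not in node:
        go_left = sample[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node["leaf"]


# Hypothetical two-level tree; names and thresholds are illustrative only.
tree = {
    "feature": "relative_humidity", "threshold": 0.8,
    "left": {"leaf": "no_cloud"},
    "right": {
        "feature": "vertical_velocity", "threshold": 0.0,
        "left": {"leaf": "stratiform"},
        "right": {"leaf": "convective"},
    },
}

print(predict_tree(tree, {"relative_humidity": 0.9, "vertical_velocity": 0.5}))
```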

A random forest† (RF) is the composition of a large number of DTs assembled according to a prescribed generation scheme, which are used as an ensemble. A gradient-boosted decision tree (GBDT) is built up sequentially, where each subsequent decision tree attempts to model the errors in the stack of trees built up thus far. This approach outperforms RFs in most cases.

The DT family of ML architectures is very easy to train and is very efficient. These architectures are well documented in the public domain and in published literature. DTs are statistical in nature and are not capable of effectively generalizing to situations which are not similar to those seen during training. This can be an advantage when unbounded outputs would be problematic; however, it can lead to problems where an ability to produce out-of-training solutions is necessary. Additionally, current DT implementations require all nodes (of all trees in the case of RFs and GBDTs) to be held in memory at inference time, making them potentially memory heavy.
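The sequential construction of a GBDT, and the bounded behaviour of DT-based models outside their training range, can both be seen in a small sketch. The version below uses depth-1 trees ("stumps") on a single input and a squared-error loss; it is a simplified illustration rather than the algorithm used by any particular library, and all names and settings are assumptions.

```python
def fit_stump(xs, residuals):
    """Find the single-threshold split (a depth-1 tree) with the lowest squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:             # candidate thresholds; right side never empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    return best[1:]


def boost(xs, ys, n_trees=50, lr=0.5):
    """Build trees sequentially, each fitted to the errors of the stack so far."""
    base = sum(ys) / len(ys)
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]
        t, lm, rm = fit_stump(xs, residuals)
        stumps.append((t, lm, rm))
        preds = [p + lr * (lm if x <= t else rm) for x, p in zip(xs, preds)]
    return base, lr, stumps


def predict_gbdt(model, x):
    base, lr, stumps = model
    return base + sum(lr * (lm if x <= t else rm) for t, lm, rm in stumps)


xs = [float(i) for i in range(8)]
ys = [x ** 2 for x in xs]
model = boost(xs, ys)
print(predict_gbdt(model, 3.0), predict_gbdt(model, 7.0), predict_gbdt(model, 100.0))
```

An input of 100 falls on the same side of every threshold as the largest training input (7), so the two predictions are identical: the model's output is bounded by what it saw in training, which is the behaviour described above.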

2.3 Methodologies for machine learning

It is challenging to provide simplified advice for how to approach problem-solving in ML. There are few strict theoretical reasons to choose any one of the variety of architectures which are available. The authors would also caution against assuming that results in the literature are the product of a detailed comparison of alternative architectures or assuming that a deep learning approach is going to be easy or straightforward. It will often be the case that multiple machine learning architectures may be similarly effective, and determining the optimal architecture is likely to involve extensive iteration. Any specific methodology is also likely to reflect the intuitions (or biases), knowledge, and background of the authors of that methodology.

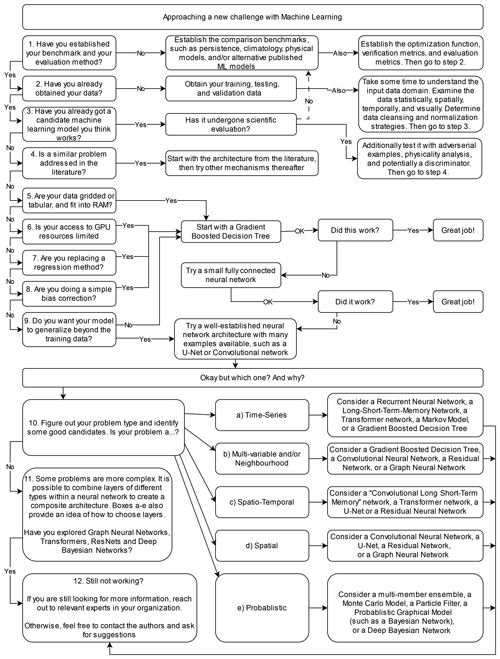

Nonetheless, there is an appetite from many scientists for reasonable ways to “get started” and to provide some assistance for practical decision-making, particularly if approaching the utilization of machine learning for the first time or in a new way. Figure 2 provides a set of suggested steps and decision points to help readers approach a new challenge with ML.

Figure 2. A methodological flowchart illustrating a suggested approach to applying ML to a research problem.

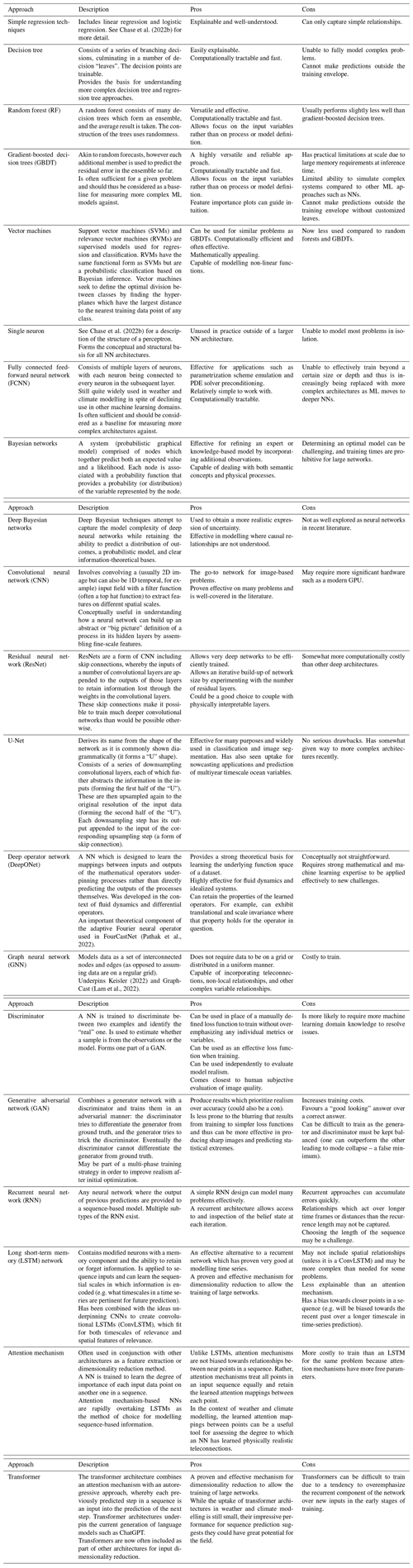

The flowchart presented in Fig. 2 provides an overview of methodological steps that can be taken when using ML to solve a problem; however, it does not give much insight into the pros and cons of the common ML architectures available and used in the literature. Table 1 provides a brief summary of the major ML architectures and algorithms used by the studies cited in this review and gives a short note on some of their pros and cons. This table is not exhaustive, and readers are strongly encouraged to use it as a starting point for further exploration rather than a definitive guide. The relative strengths and weaknesses of each ML architecture can be subtle and highly dependent on the use case, their application, and their tuning. Establishing a good understanding of the ML architecture being used is a critical step for any scientist intending to delve into ML modelling. Interested readers should also refer to Chase et al. (2022b), where a similar table is presented that covers a wider variety of traditional methods but fewer neural network approaches.

An increasingly diverse array of ML architectures is being applied to an ever-growing variety of challenges. These architectures all have sub-variants and ancestor architectures which may not be represented, all of which may be found to be of use for weather and climate modelling applications. Other concerns, such as data normalization†, training strategies, and capturing physicality, become as relevant as the choice of architecture once a certain level of performance is achieved.
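As one example of data normalization, the sketch below applies z-score standardization, which is a common choice but only one of several possible schemes. The key design point is that the mean and standard deviation are computed from the training data alone and then reused unchanged for any new data. The function names and temperature values are illustrative assumptions.

```python
import statistics


def fit_scaler(train):
    """Compute normalization statistics from the training data only."""
    return statistics.fmean(train), statistics.pstdev(train)


def transform(values, scaler):
    """Apply z-score normalization using previously fitted statistics."""
    mean, std = scaler                         # assumes std > 0 (non-constant training data)
    return [(v - mean) / std for v in values]


train_temps = [280.0, 285.0, 290.0, 295.0, 300.0]   # illustrative values in kelvin
scaler = fit_scaler(train_temps)
z_train = transform(train_temps, scaler)
z_new = transform([310.0], scaler)             # new data reuse the training statistics
print(z_train, z_new)
```

A normalized value well outside the range seen in `z_train`, such as the one produced for 310 K here, is also a simple warning sign that an input lies outside the training envelope.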

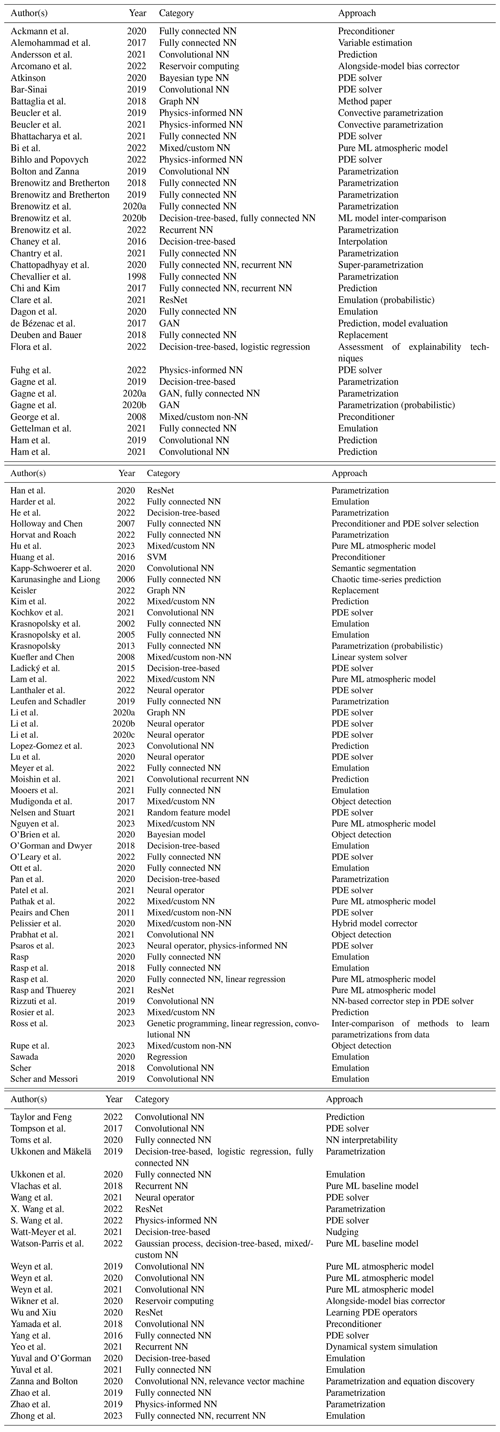

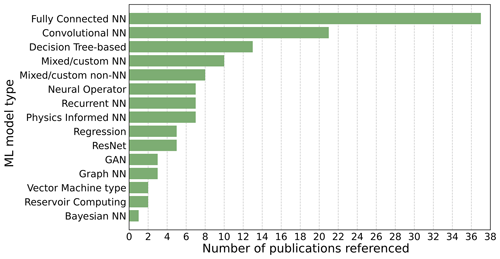

Figure 3 shows a summary of the ML architectures and algorithms used by the studies cited in this review, including the number of times each architecture is used. It can be seen from this that the two most frequently used general categories of architecture are fully connected NNs (FCNNs) and convolutional NNs (CNNs) of various sub-types.

Figure 3. A count of the ML architectures and algorithms used by the studies cited in this review. As with Fig. 1, this figure includes all references from this review except for seminal ML papers that are on new ML methods (e.g. foundational ML papers), review papers, any paper cited that concerns a topic which is out of scope (e.g. nowcasting), and any other paper which does not present a new method directly applicable to weather and climate modelling. The full table of citations is provided in Appendix A.

Table 1. A summary of major ML architectures and algorithms used by the studies cited in this review. Interested readers should also refer to Chase et al. (2022b) where a similar table is presented that covers a wider variety of traditional methods but fewer neural network approaches. See Appendix B for definitions of terms used in this table.

However, some of the most significant recent research findings come from new architectures which by definition cannot have wide adoption yet (these are grouped under the “mixed/custom NN” category in Fig. 3).

In some cases, little justification is given for the ML architecture used in a study, and readers are therefore cautioned against using the relative popularity of a particular ML architecture in the literature as a guide for its suitability for a given task.

Furthermore, ML models increasingly use a mix of different algorithms and architectures. For example, a common combination is fully connected NN layers, convolutional NN layers, and long short-term memory (LSTM†) layers. For the purposes of Fig. 3, the authors have endeavoured to categorize the ML architectures used in the studies in this review as accurately as possible, with complex architectures being placed in the “mixed/custom NN” category; however where an architecture was mostly but not entirely aligned with a single category, it was placed in that category. For example, an LSTM model with a small number of feed-forward layers would be categorized as a recurrent neural network† (RNN). Since many contemporary ML models combine multiple architectural elements and algorithms into the one model, it is somewhat of an oversimplification to consider each of these in isolation, and while starting with a simple model design with a limited selection of layer types is advisable to aid interpretability, there is no reason they cannot be combined or used in conjunction with each other if this improves the performance of the model.

Adapting, optimizing, and debugging issues with machine learning systems can be very complex (especially so for large NNs) and is likely to require both machine learning expertise and domain knowledge (i.e. scientific knowledge). XGBoost† provides the ability to generate a chart showing the importance of the features in the model, which can be very helpful. Shapley additive explanations (Lundberg and Lee, 2017) can provide insights into feature importance for any model including NNs.
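The general idea of feature importance can be illustrated without any specific library. The sketch below uses permutation importance, a simpler model-agnostic technique that is distinct from both the XGBoost importance chart and Shapley additive explanations: each input feature is shuffled in turn, and the resulting increase in error is taken as a measure of how much the model relies on that feature. The stand-in model and data are hypothetical.

```python
import random


def mse(model, rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, seed=0):
    """Increase in error when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = mse(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        column = [r[j] for r in rows]
        rng.shuffle(column)                    # break the link between feature j and the target
        shuffled = [r[:j] + [c] + r[j + 1:] for r, c in zip(rows, column)]
        importances.append(mse(model, shuffled, targets) - baseline)
    return importances


def toy_model(row):
    """Stand-in for a trained model; it depends on feature 0 only."""
    return 3.0 * row[0]


rows = [[float(i), float((7 * i) % 11)] for i in range(30)]
targets = [toy_model(r) for r in rows]
importances = permutation_importance(toy_model, rows, targets)
print(importances)
```

Shuffling the feature that the model ignores leaves the error unchanged, so its importance is zero, while shuffling the feature the model depends on degrades the predictions.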

Subgrid-scale processes in numerical weather and climate models are typically represented via a statistical parameterization of the macroscopic impacts that the process would have on resolved processes and parameters. These are commonly referred to as parameterization schemes and can be very complex and relatively computationally costly. For example, in the European Centre for Medium-Range Weather Forecasts (ECMWF) Integrated Forecasting System (IFS) model, parameterization schemes account for about a third of the total computational cost of running the model (Chantry et al., 2021b). They also require some understanding of the underlying unresolved physical processes. Examples of subgrid-scale processes which are currently typically parameterized in operational systems include gravity wave drag, convection, radiation, subgrid-scale turbulence, and cloud microphysics. As additional complexity (for example representation of aerosols, atmospheric chemistry, and land surface processes) is added to numerical models, the computational cost will only increase.

ML presents an alternative approach to representing subgrid-scale processes, either by emulating the behaviour of an existing parametrization scheme, by emulating the behaviour of sub-components of the scheme, by replacing the current scheme or sub-component entirely with an ML-based scheme, or by replacing the aggregate effects of multiple parametrization schemes with a single ML model. ML emulation of existing schemes or sub-components has the advantage of maintaining the status quo within the model; no or minimal re-tuning of the model should be required since the ML emulation is trained to replicate the results of an already-tuned-for scheme. Because of this, the main benefit of this approach is that it reduces the computational cost of running the parametrization scheme. On the other hand, the full replacement of an existing parameterization scheme or sub-component with an ML alternative has the potential to be both computationally cheaper and also an improvement over the preceding scheme.

In the following subsections, a review of the literature on aspects of ML for the parametrization and emulation of subgrid-scale processes is presented.

3.1 Early work on ML parametrization and ML emulations

A popular target for applying ML in climate models is radiative transfer since it is one of the more computationally costly components of the model. As such, many early examples of the use of ML in subgrid parametrization schemes focus on aspects of this physical process. Chevallier et al. (1998) trained NNs to represent the radiative transfer budget from the top of the atmosphere to the land surface, with a focus on application in climate studies. They incorporated the information from both line-by-line and band models in their training to achieve competitive results against both benchmarks. Their NNs achieved accuracies comparable to or better than benchmark radiative transfer models of the time while also being much faster computationally.

In contrast to the ML-based scheme developed by Chevallier et al. (1998), which could be considered an entirely new parametrization scheme, Krasnopolsky et al. (2005) used NNs to develop an ML-based emulation of the existing atmospheric longwave radiation parametrization scheme in the NCAR Community Atmospheric Model (CAM). The authors demonstrated speedups with the NN emulation of 50–80 times the original parameterization scheme.

The emulation of existing schemes has since then become a popular method for achieving significant model speedups. For example, Gettelman et al. (2021) investigated the differences between a general circulation model (GCM) with the warm rain formation process replaced with a bin microphysical model (resulting in a 400 % slowdown) and one with the standard bulk microphysics parameterization in place. They then replaced the bin microphysical model with a set of NNs designed to emulate the differences observed and showed that this configuration was able to closely reproduce the effects of including the bin microphysical model, without any of the corresponding slowdown in the GCM.

3.2 ML for coarse graining

Coarse graining involves using higher-resolution model or analysis data to map the relationship between smaller-scale processes and a coarser grid resolution. It can be used to develop parameterization schemes without explicitly representing the physics of smaller-scale processes.
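As a minimal sketch of the averaging step that underlies coarse graining, the following block-averages a small high-resolution field onto a coarser grid. The function name `coarse_grain` and the toy 4 × 4 field are illustrative assumptions and are not taken from any of the studies reviewed here; in practice the coarse-grained fields (and the residual tendencies derived from them) would form the training data for an ML scheme.

```python
def coarse_grain(field, factor):
    """Block-average a 2D field (a list of rows) by an integer factor."""
    n_rows, n_cols = len(field), len(field[0])
    if n_rows % factor or n_cols % factor:
        raise ValueError("grid dimensions must be divisible by factor")
    coarse = []
    for i in range(0, n_rows, factor):
        row = []
        for j in range(0, n_cols, factor):
            # Collect every fine-grid value that falls inside this coarse cell.
            block = [
                field[i + di][j + dj]
                for di in range(factor)
                for dj in range(factor)
            ]
            row.append(sum(block) / len(block))
        coarse.append(row)
    return coarse


# Toy high-resolution field (hypothetical values, arbitrary units).
high_res = [
    [1.0, 3.0, 0.0, 0.0],
    [5.0, 7.0, 0.0, 4.0],
    [2.0, 2.0, 1.0, 1.0],
    [2.0, 2.0, 1.0, 1.0],
]

coarse = coarse_grain(high_res, 2)
print(coarse)  # [[4.0, 1.0], [2.0, 1.0]]
```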

This has proven to be a popular method for developing ML-based parametrization schemes. Brenowitz and Bretherton (2018) used a near-global aquaplanet simulation run at 4 km grid length to train an NN to represent the apparent sources of heat and moisture averaged onto 160 km² grid boxes. They then tested this scheme in a prognostic single-column model and showed that it performed better than a traditional model in matching the behaviour of the aquaplanet simulation it was trained on. Brenowitz and Bretherton (2019) built on this work by training their NN on the same global aquaplanet 4 km simulation but then embedded this scheme within a coarser-resolution (160 km²) global aquaplanet GCM. Embedding NNs within GCMs is challenging because feedbacks between NN and GCM components can cause spatially extended simulations to become dynamically unstable within a few model days. This is due to the inherently chaotic nature of the atmosphere in the GCM responding to inputs from the NN which cause rapidly escalating dynamical instabilities and/or violate physical conservation laws. The authors overcame this by identifying and removing inputs into the NN which were contributing to feedbacks between the NN and GCM (Brenowitz et al., 2020a) and by including multiple time steps in the NN training cost function. This resulted in stable simulations which predicted the future state more accurately than the coarse-resolution GCM without any parametrization of subgrid-scale variability; however the authors do observe that the mean state of their NN-coupled GCM would drift, making it unsuitable for prognostic climate simulations.
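The idea of including multiple time steps in the training cost function can be illustrated with a toy example. The sketch below is not the formulation used by Brenowitz and Bretherton (2019); it assumes a hypothetical scalar system and simply accumulates the squared error over several free-running prediction steps, so that errors which grow through feedback are penalized more heavily than in a one-step loss.

```python
def rollout(step_fn, state, n_steps):
    """Apply step_fn repeatedly, feeding each prediction back in as input."""
    states = []
    for _ in range(n_steps):
        state = step_fn(state)
        states.append(state)
    return states


def multi_step_loss(step_fn, trajectory, n_steps):
    """Mean squared error accumulated over n_steps of free-running prediction."""
    total, count = 0.0, 0
    for start in range(len(trajectory) - n_steps):
        predicted = rollout(step_fn, trajectory[start], n_steps)
        for k, value in enumerate(predicted, start=1):
            total += (value - trajectory[start + k]) ** 2
            count += 1
    return total / count


# Hypothetical "truth": a simple decaying scalar, x[t+1] = 0.9 * x[t].
truth = [1.0]
for _ in range(20):
    truth.append(0.9 * truth[-1])


def perfect_model(x):
    return 0.9 * x


def biased_model(x):
    # Slightly wrong decay rate, standing in for an imperfect emulator.
    return 0.95 * x


one_step = multi_step_loss(biased_model, truth, 1)
five_step = multi_step_loss(biased_model, truth, 5)
print(one_step, five_step)
```

In this toy setting the five-step loss of the biased model is larger than its one-step loss, because the error compounds over the rollout; this is the behaviour a multi-step cost function is intended to expose during training.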

Rasp et al. (2018) trained a deep NN† to represent all atmospheric subgrid processes in an aquaplanet climate model by learning from a multiscale model in which convection was treated explicitly. They then replaced all subgrid parameterizations in an aquaplanet GCM with the deep NN and allowed it to freely interact with the resolved dynamics and the surface-flux scheme. They showed that the resulting system was stable and able to closely reproduce not only the mean climate of the cloud-resolving simulation but also key aspects of variability in prognostic multiyear simulations. The authors noted that their decision to use deep NNs was a deliberate one because they proved more stable in their prognostic simulations than shallower NNs, and they also observed that larger networks achieved lower training losses. However, while Rasp et al. (2018) were able to engineer a stable model that produced results close to the reference GCM, small changes in the training dataset or input and output vectors quickly led to the NN producing increasingly unrealistic outputs, causing model blow-ups (Rasp, 2020). Consistent with this, Brenowitz and Bretherton (2019) report that they were unable to achieve the same improvements in stability with increasing network layers found by Rasp et al. (2018).

3.3 Overcoming instability in ML emulations and parametrizations

O'Gorman and Dwyer (2018) tackled the instabilities observed in NN-based approaches to subgrid-scale parameterization by employing an alternative ML method: random forests (RFs; Breiman, 2001; Hastie et al., 2009). The authors trained a RF to emulate the outputs of a conventional moist convection parametrization scheme. They then replaced the conventional parameterization scheme with this emulation within a global climate model and showed that it ran stably and was able to accurately produce climate statistics such as precipitation extremes without needing to be specially trained on extreme scenarios. RFs consist of an ensemble of DTs, with the predictions of the RF being the average of the predictions of the DTs which in turn exist within the domain of the training data. RFs thus have the property that their predictions cannot go outside of the domain for their training data, which in the case of O'Gorman and Dwyer (2018) ensured that conservation of energy and non-negativity of surface precipitation (both critically important features of the moist convection parametrization scheme) were automatically achieved. A disadvantage of this method, however, is that it requires considerable memory when the climate model is being run to store the tree structures and predicted values which make up the RF.
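The bounded-prediction property described above can be demonstrated with a deliberately small example. The following sketch builds a toy forest of bootstrapped depth-1 regression trees in plain Python (it is not the configuration of O'Gorman and Dwyer, 2018, and all function names are illustrative assumptions). Because every leaf value is a mean of training targets and the forest output is a mean of leaf values, predictions for inputs far outside the training range still fall within the range of the training targets.

```python
import random


def fit_stump(xs, ys):
    """Fit a depth-1 regression tree by choosing the split with least squared error."""
    best = None
    order = sorted(set(xs))
    for lo, hi in zip(order, order[1:]):
        threshold = 0.5 * (lo + hi)
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((y - left_mean) ** 2 for y in left)
        sse += sum((y - right_mean) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:
        # All inputs identical: fall back to predicting the mean everywhere.
        mean = sum(ys) / len(ys)
        return (float("inf"), mean, mean)
    return best[1:]


def fit_forest(xs, ys, n_trees=25, seed=0):
    """Train each stump on a bootstrap resample of the training data."""
    rng = random.Random(seed)
    n = len(xs)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return forest


def predict(forest, x):
    """Average the leaf values selected by each tree."""
    votes = [left if x <= t else right for t, left, right in forest]
    return sum(votes) / len(votes)


xs = [i / 10 for i in range(21)]  # training inputs in [0, 2]
ys = [x ** 2 for x in xs]         # non-negative training targets in [0, 4]
forest = fit_forest(xs, ys)

# Inputs far outside the training range still give bounded, non-negative output.
print(predict(forest, 100.0), predict(forest, -100.0))
```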

Yuval and O'Gorman (2020) extended the ideas in O'Gorman and Dwyer (2018), switching from emulation of a single parametrization scheme to emulation of all atmospheric subgrid processes. They trained a RF on a high-resolution 3D model of a quasi-global atmosphere to produce outputs for a coarse-grained version of the model and showed that at coarse resolution the RF can be used to reproduce the climate of the high-resolution simulation, running stably for 1000 d.

There are some drawbacks to a RF approach compared to an NN approach, however, namely that NNs may provide the possibility for greater accuracy than RFs and also require substantially less memory when implemented. Given that GCMs are already memory intensive this can be a limiting factor in the practical application of ML parametrization schemes. Furthermore, there is the potential to implement reduced precision NNs on GPUs and central processing units (CPUs) which still achieve sufficient accuracy, leading to substantial gains in computational efficiency. Motivated by these considerations, Yuval et al. (2021) trained an NN in a similar manner to how the RF in Yuval and O'Gorman (2020) was trained, using a high-resolution aquaplanet model and aiming to coarse grain the model parameters. They overcame the model instabilities observed to occur in previous attempts to use NNs for this process by wherever possible training to predict fluxes and sources and sinks (as opposed to the net tendencies predicted by the RF in Yuval and O'Gorman, 2020), thus incorporating physical constraints into the NN parametrization. The authors also investigated the impact of reduced precision in the NN and found that it had little impact on the simulated climate.

3.4 From aquaplanets to realistic land–ocean simulations

All of the studies discussed in this section so far which were tested in a full GCM have used aquaplanet simulations. Han et al. (2020) broke away from this trend by developing a residual NN† (ResNet)-based parametrization scheme which emulated the moist physics processes in a realistic land–ocean simulation. Their emulation reproduced the characteristics of the land–ocean simulation well and was also stable when embedded in single-column models.

Mooers et al. (2021) represent a subsequent example of an ML emulation of atmospheric fields with realistic geographical boundary conditions, where the authors developed feed-forward NNs to super-parametrize subgrid-scale atmospheric parameters and forced a realistic land surface model with them. Super-parametrization is distinct from traditional parameterization in that it relies on solving (usually simplified) governing equations for subgrid-scale processes rather than heuristic approximations of these processes. They employed automated hyperparameter† optimization† to investigate a range of neural network architectures across ∼ 250 trials and investigated the statistical characteristics of their emulations. While the authors found that their NNs fit the tropical marine boundary layer less well, attributable to the NNs struggling to emulate fast stochastic signals in convection, they also reported good skill for signals on diurnal to synoptic timescales.

Brenowitz et al. (2022) sought to address the challenge of emulating fast processes. They used FV3GFS (Zhou et al., 2019; Harris et al., 2021; a compressible atmospheric model used for operational weather forecasts by the US National Weather Service) with a simple cloud microphysics scheme included to generate training data and used this to train a selection of ML models to emulate cloud microphysics processes, including fast phase changes. They emulated different aspects of the microphysics with separate ML models chosen to be suitable to each task. For example, simple parameters were trained with single-layer NNs, while parameters which are more complex spatially were trained with RNNs (e.g. rain falls downwards and not upwards, so it is sequential in time steps through the atmosphere – a feature which can be represented by an RNN). They then embedded their ML emulation in FV3GFS. They found that their combined ML simulation performed skilfully according to their chosen metrics but had excessive cloud over the Antarctic Plateau.

None of these studies, however, tested their parameterizations in prognostic long-term simulations.

3.5 Testing with prognostic long-term simulations

A barrier to achieving stable runs with minimal model drift with ML components is the fact that generic ML models are not designed to conserve quantities which are required to be conserved by the physics of the atmosphere and ocean. Beucler et al. (2019) proposed and tested two methods for imposing such constraints in an NN model: (1) constraining the loss function or (2) constraining the architecture of the network itself. They found that their control NN with no physical constraints imposed performed well but did so by breaking conservation laws, calling into question the trustworthiness of such a model in a prognostic setting. Their constrained networks did, however, generalize better to unforeseen conditions, implying they might perform better under a changing climate than unconstrained models.
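The two strategies can be contrasted with a short sketch. It assumes a hypothetical linear conservation law in which the outputs of the model must sum to zero; the function names and numbers are illustrative and do not reproduce the networks of Beucler et al. (2019). In the first approach, a penalty on the conservation residual is added to the loss, so violations are discouraged but not excluded. In the second, the final output is computed as a residual from the others, so the constraint holds by construction.

```python
def conservation_residual(outputs):
    """Hypothetical linear conservation law: the outputs must sum to zero."""
    return sum(outputs)


def penalized_loss(outputs, targets, weight=1.0):
    """Approach 1: mean squared error plus a penalty on the conservation residual."""
    mse = sum((o - t) ** 2 for o, t in zip(outputs, targets)) / len(outputs)
    return mse + weight * conservation_residual(outputs) ** 2


def constrained_outputs(free_outputs):
    """Approach 2: derive the last output so that the law holds exactly."""
    return list(free_outputs) + [-sum(free_outputs)]


raw = [0.5, -0.2, -0.1]       # unconstrained outputs; they sum to about 0.2
targets = [0.4, -0.1, -0.3]   # targets that satisfy the law (up to rounding)

soft = penalized_loss(raw, targets, weight=10.0)
unpenalized = penalized_loss(raw, targets, weight=0.0)
hard = constrained_outputs(raw[:2])

print(soft, unpenalized)
print(hard, conservation_residual(hard))
```

The penalized loss is larger than the plain mean squared error whenever the raw outputs violate the law, which steers training towards conserving solutions, whereas the constrained outputs have a zero residual regardless of how well the free outputs were trained.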

Chantry et al. (2021b) trained an NN to emulate the non-orographic gravity wave drag parameterization in the ECMWF IFS model (specifically cycle 45R1; ECMWF, 2018) and were able to run stable, accurate simulations out to 1 year with this emulation coupled to the IFS. While the authors note that RFs have been shown to be more stable (e.g. O'Gorman and Dwyer, 2018, and Yuval and O'Gorman, 2020, as described above, and Brenowitz et al., 2020b), they chose to focus on NNs since they have lower memory requirements and therefore promise better theoretical performance. The authors assessed the performance of their emulation in a realistic GCM by coupling the NN with the IFS, replacing the existing non-orographic gravity wave drag scheme, and performed 120 h, 10 d, and 1-year forecasts at ∼ 25 km resolution in a variety of model configurations. The authors showed that their emulation was able to run stably when coupled to the IFS for seasonal timescales, including being able to reproduce the descent of the quasi-biennial oscillation (QBO). Interestingly, while the authors initially aimed to ensure momentum conservation in a manner similar to Beucler et al. (2021), they found that this constraint led to model instabilities and that a better result was achieved without it. One possible explanation for this is that Beucler et al. (2021) assessed their NNs in an aquaplanet setting. Nonetheless, Chantry et al. (2021b) noted that since their method was not identical to Beucler et al. (2021), improved stability could potentially be achieved by following their method more precisely. The computational cost of the NN emulation developed by Chantry et al. (2021b) was found to be similar to that of the existing parametrization scheme when run on CPUs but was faster by a factor of 10 when run on GPUs due to the reduction in data transmission bottlenecks.

The first study to successfully run stable long-term climate simulations with ML parametrizations was X. Wang et al. (2022), who extended the work of Han et al. (2020) by constructing a ResNet to emulate moist physics processes. They used the residual connections from Han et al. (2020) to construct NNs with good non-linear fitting ability and filtered out unstable NN parametrizations using a trial-and-error analysis, resulting in the best ResNet set in terms of accuracy and long-term stability. They implemented this scheme in a GCM with realistic geographical boundary conditions and were able to maintain stable simulations for over 10 years in an Atmospheric Model Intercomparison Project (AMIP)-style configuration. This was more akin to a hybrid ML–physics-based model than a traditional GCM with ML-based parametrization because rather than embedding the ResNet in the model code, the authors used an NN–GCM coupling platform through which the NNs and GCMs could interact through data transmission. This is in contrast to the approach employed in the Physical-model Integration with Machine Learning (PIML; https://turbo-adventure-f9826cb3.pages.github.io, last access: 7 February 2023) project and Infero (https://infero.readthedocs.io/en/latest/, last access: 7 February 2023), which are both described in Sect. 3.11. One advantage to this approach noted by the authors is that it allows for a high degree of flexibility in the application of the ML component; however, it is likely to be less efficient than a fully embedded ML model due to the potential for data transmission bottlenecks.

3.6 Training with observational data

An alternative to using more complex and/or higher-resolution models for training data is to train using direct observational data. For example, Ukkonen and Mäkelä (2019) used reanalysis data from ERA5 and lightning observation data to train a variety of different types of ML models to predict thunderstorm occurrence; this was then used as a proxy to trigger deep convection. ML models assessed were logistic regression, RFs, GBDTs, and NNs, with the final two showing a significant increase in skill over convective available potential energy (CAPE; a standard measure of potential convective instability). One of the challenges of accurately reproducing the large-scale effects of convection is correctly identifying when deep convection should occur within a grid cell. The authors proposed that an ML model such as those they assessed could be used as the “trigger function” which activates the deep convection scheme within a GCM.
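A trigger function of this kind can be sketched as a probability threshold on a classifier output. The example below uses a logistic regression form with two hypothetical standardized predictors and illustrative, unfitted coefficients; none of the names or values are taken from Ukkonen and Mäkelä (2019).

```python
import math

# Illustrative, unfitted coefficients for two hypothetical standardized
# predictors (for example an instability index and a moisture index).
WEIGHTS = [1.5, 0.8]
BIAS = -1.0


def thunderstorm_probability(predictors, weights=WEIGHTS, bias=BIAS):
    """Logistic regression estimate of thunderstorm occurrence probability."""
    z = bias + sum(w * x for w, x in zip(weights, predictors))
    return 1.0 / (1.0 + math.exp(-z))


def deep_convection_triggered(predictors, threshold=0.5):
    """Activate the deep convection scheme when the probability is high enough."""
    return thunderstorm_probability(predictors) >= threshold


unstable_moist = [2.0, 1.0]   # hypothetical grid cell favourable to convection
stable_dry = [-1.0, -1.0]     # hypothetical grid cell unfavourable to convection

print(deep_convection_triggered(unstable_moist))
print(deep_convection_triggered(stable_dry))
```

The choice of threshold controls the trade-off between missed events and false alarms and would need to be tuned against observations in any real application.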

3.7 ML for super-parameterization

Revisiting the topic of super-parametrized subgrid-scale processes introduced above, the use of ML for this approach was investigated in depth by Chattopadhyay et al. (2020). The authors introduced a framework for an NN-based super-parametrization and compared the performance of this method against an NN-based traditional parametrization (i.e. based on heuristic approximations of subgrid-scale processes) and direct super-parameterization (i.e. explicitly solving for the subgrid-scale processes) in a chaotic Lorenz 96 (Lorenz, 1996) system that had three sets of variables, each of a different scale. They found that their NN-based super-parameterization outperformed the direct super-parameterization in terms of computational cost and was more accurate than the NN-based traditional parametrization. The NN-based super-parameterization showed comparable accuracy to the direct super-parameterization in reproducing long-term climate statistics but was not always comparable for short-term forecasting.
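For readers unfamiliar with the Lorenz 96 test bed, the sketch below implements the standard single-scale form of the system, dX_k/dt = X_{k-1}(X_{k+1} − X_{k-2}) − X_k + F, with an optional `subgrid` hook where a parametrization of the unresolved scales (for example a trained NN) could be supplied. This is a simplification made for illustration: Chattopadhyay et al. (2020) used a version with three sets of variables, and the linear damping term used as a stand-in parametrization here is purely hypothetical.

```python
def l96_tendency(x, forcing=8.0, subgrid=None):
    """Single-scale Lorenz 96 tendency with an optional subgrid forcing term."""
    n = len(x)
    extra = subgrid(x) if subgrid is not None else [0.0] * n
    return [
        x[(k - 1) % n] * (x[(k + 1) % n] - x[(k - 2) % n])
        - x[k] + forcing + extra[k]
        for k in range(n)
    ]


def rk4_step(x, dt, forcing=8.0, subgrid=None):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def f(state):
        return l96_tendency(state, forcing, subgrid)

    k1 = f(x)
    k2 = f([xi + 0.5 * dt * ki for xi, ki in zip(x, k1)])
    k3 = f([xi + 0.5 * dt * ki for xi, ki in zip(x, k2)])
    k4 = f([xi + dt * ki for xi, ki in zip(x, k3)])
    return [
        xi + dt * (a + 2.0 * b + 2.0 * c + d) / 6.0
        for xi, a, b, c, d in zip(x, k1, k2, k3, k4)
    ]


def toy_parametrization(x):
    # Hypothetical stand-in for a learned subgrid term: weak linear damping.
    return [-0.1 * xi for xi in x]


state = [8.0] * 8
state[0] += 0.01  # small perturbation away from the X_k = F fixed point
for _ in range(100):
    state = rk4_step(state, 0.01, subgrid=toy_parametrization)
print(state)
```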

3.8 Stochastic parametrization schemes

A more recent approach to the representation of subgrid-scale processes is via stochastic parameterization schemes, which can represent uncertainty within the scheme. There has been less focus on replacing these schemes with ML alternatives than there has been for non-stochastic schemes; however, some progress has been made. Krasnopolsky et al. (2013) used an ensemble of NNs to learn a stochastic convection parametrization from data from a high-resolution cloud resolving model. In this case, the stochastic nature of the parametrization was captured by the ensemble of NNs. Gagne et al. (2020b) took a different approach, investigating the utility of generative adversarial networks† (GANs) for stochastic parametrization schemes in Lorenz 96 (Lorenz, 1996) models. In this case, the GAN learned to emulate the noise of the scheme directly rather than implicitly representing it with an ensemble. They described the effects of different methods to characterize input noise for the GAN and the performance of the model at both weather and climate timescales. The authors found that the properties of the noise influenced the efficacy of training. Too much noise resulted in impaired model convergence, and too little noise resulted in instabilities within the trained networks.

3.9 ML parametrization and emulation for land, ocean, and sea ice models

Models of the atmosphere make up one component of the Earth system; however for timescales beyond a few days, simulating other components of the Earth system becomes increasingly important to maintain accuracy. The components which are most often included in coupled Earth system models in addition to the atmosphere are the ocean, sea ice, and the land surface. Reflective of this, ML approaches to the parameterization of subgrid-scale processes are not limited to the atmosphere, and progress has been made in the use of ML for land, ocean, and sea ice models as well.

On the ocean modelling front, Krasnopolsky et al. (2002) presented an early application of NNs for the approximation of seawater density, the inversion of the seawater equation of state, and an NN approximation of the non-linear wave–wave interaction. More recently, Bolton and Zanna (2019) investigated the utility of convolutional neural networks (CNNs) for parametrizing unresolved turbulent ocean processes and subsurface flow fields. Zanna and Bolton (2020) then investigated both relevance vector machines† (RVMs) and CNNs for parameterizing mesoscale ocean eddies. They demonstrated that because RVMs are interpretable, they can be used to reveal closed-form equations for eddy parameterizations with embedded conservation laws. The authors tested the RVM and CNN parameterizations in an idealized ocean model and found that both improved the statistics of the coarse-resolution simulation. While the CNN was found to be more stable than the RVM, the advantage of the RVM was the greater interpretability of its outputs. Finally, Ross et al. (2023) developed a framework for benchmarking ML-based parametrization schemes for subgrid-scale ocean processes. They used CNNs, symbolic regression, and genetic programming methods to emulate a variety of subgrid-scale forcings including measures of potential vorticity and velocity, and developed a standard set of metrics to evaluate these emulations. They found that their CNNs were stable and performed well when implemented online but generalized poorly to new regimes.

Focusing instead on sea ice, Chi and Kim (2017) assessed the ability of two NN models, a fully connected NN and an LSTM, to predict Arctic sea ice concentration up to a year in advance. Their ML models outperformed an autoregressive model comparator and were in good agreement with observed sea ice extent. Andersson et al. (2021) improved upon this work with their model IceNet, a U-Net ensemble model which produced probabilistic Arctic sea ice concentration predictions to a 6-month lead time. The authors compared IceNet to the SEAS5 dynamical sea ice model (Johnson et al., 2019) and showed an improvement in the accuracy of a binary classification of ice/no ice for all lead months except the first month. Horvat and Roach (2022) used ML to emulate a parameterization of wave-induced sea ice floe fracture they had developed previously, in order to reduce the computational cost of the scheme. When embedded in a climate simulation, their ML scheme resulted in an overall categorical accuracy (accounting for the fact that it was only called where needed) of 96.5 %. However, the authors did note that since their ML scheme was trained on present-day sea ice conditions, it may have reduced success under different climate scenarios, and they recommend retraining using climate model sea ice conditions to account for this. Rosier et al. (2023) developed MELTNET, an ML emulation of the ocean-induced ice shelf melt rates in the NEMO ocean model (Gurvan et al., 2019). MELTNET consisted of a melt rate segmentation task, followed by a denoising autoencoder† network which converted the discrete labelled melt rates to a continuous melt rate. The authors demonstrated that MELTNET generalized well to ice shelf geometries outside the training set and outperformed two intermediate-complexity melt rate parameterizations, even when parameters in those models were tuned to minimize any misfit for the geometries used. Given that the computational cost of sea ice parametrizations is relatively high for the timescales on which sea ice evolution is important (namely, seasonal to climate timescales) and given the promising results in emulating these parametrizations demonstrated in the literature, ML-based emulation of these schemes is a strong candidate for inclusion into future dynamical coupled modelling systems.

Finally, considering Earth's surface, most of the focus of ML innovations in this context has been on land use classification (e.g. Carranza-García et al., 2019; Digra et al., 2022) and crop modelling (e.g. Virnodkar et al., 2020; Zhang et al., 2023). The rate of publication of ML applications for land surface models has been slower; however there has nonetheless been steady progress in this space in recent years. Pal and Sharma (2021) presented a review of the use of ML in land surface modelling which provides an excellent primer of the state of the field to that point. They include in their review an overview of land surface modelling components and processes before reviewing the literature on the use of ML to represent them. They separate their review into attempts to predict and parametrize different variables or aspects of the model, including evapotranspiration (Alemohammad et al., 2017; Zhao et al., 2019; Pan et al., 2020), soil moisture (Pelissier et al., 2020), momentum and heat fluxes (Leufen and Schädler, 2019), and parameter estimation and uncertainty (Chaney et al., 2016; Sawada, 2020; Dagon et al., 2020). They also provide a useful summary of the ML architectures that have been used in the publications they discuss. More recently, He et al. (2022) developed a hybrid approach to modelling aspects of the land surface, where a traditional land surface model was used to optimize selected vegetation characteristics, while a coupled ML model simulated a corresponding three-layer soil moisture field. The estimated evapotranspiration from this hybrid model was compared to observations, and it was found that it performed well in vegetated areas but underestimated the evapotranspiration in extreme arid deserts. The ready application of ML to aspects of land surface modelling and the relative sparsity of publications in this space suggest that it is a fertile domain for further research and development.

3.10 ML for representing or correcting a sub-component of a parametrization scheme

An alternative method to replacing or emulating an entire parametrization scheme or schemes with ML is to target the most costly or troublesome sub-components of the scheme, and either replace those or make corrections to them.

Ukkonen et al. (2020) trained NNs to replace gas optics computations in the RTE-RRTMGP (Radiative Transfer for Energetics – Rapid Radiative Transfer Model for General Circulation Model Applications – Parallel; Pincus et al., 2019) scheme. The NNs were faster by a factor of 1–6, depending on the software and hardware platforms used. The accuracy of the scheme remained similar to that of the original scheme.

Meyer et al. (2022) trained an NN to account for the differences between 1D cloud effects in the ECMWF 1D radiation scheme ecRad and 3D cloud effects in the ECMWF SPARTACUS (SPeedy Algorithm for Radiative TrAnsfer through CloUd Sides) solver. The 1D cloud effect solver within ecRad, Tripleclouds, is favoured over the 3D SPARTACUS solver because it is 5 times less computationally expensive. The authors show that their NN can account for differences between the two schemes with typical errors between 20 % and 30 % of the 3D signal, resulting in an improvement in Tripleclouds' accuracy with an increase in runtime of approximately 1 %. By accounting for the differences between SPARTACUS and Tripleclouds rather than emulating all of SPARTACUS, the authors were able to keep Tripleclouds unchanged within ecRad for cloud-free areas of the atmosphere and utilize the NN 3D correction elsewhere.
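The correction strategy can be summarized in a few lines. In the sketch below, the training targets are the differences between an expensive and a cheap scheme, and at run time the learned correction is added to the cheap scheme only where cloud is present. All names, the single cloud-fraction feature, and the linear stand-in for a trained NN are hypothetical and are not drawn from Meyer et al. (2022).

```python
def residual_targets(cheap_outputs, expensive_outputs):
    """Training targets for the correction model: expensive minus cheap."""
    return [e - c for c, e in zip(cheap_outputs, expensive_outputs)]


def corrected_output(cheap_value, cloud_fraction, correction_model, features):
    """Apply the learned correction only where cloud is present."""
    if cloud_fraction <= 0.0:
        # Cloud-free column: keep the cheap scheme unchanged.
        return cheap_value
    return cheap_value + correction_model(features)


def toy_correction(features):
    # Hypothetical stand-in for a trained NN: linear in cloud fraction.
    return 2.0 * features[0]


targets = residual_targets([100.0, 90.0], [103.0, 96.0])
clear_sky = corrected_output(100.0, 0.0, toy_correction, [0.0])
cloudy = corrected_output(90.0, 0.5, toy_correction, [0.5])
print(targets, clear_sky, cloudy)  # [3.0, 6.0] 100.0 91.0
```

Learning the residual rather than the full output means the ML component only has to represent the (typically smaller) difference signal, and any error it makes is bounded by the size of that correction in cloud-free conditions, where it is not applied at all.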

3.11 Bridging the gap between popular languages for ML and large numerical models

Researchers commonly develop and experiment with ML approaches using Python libraries such as PyTorch†, scikit-learn†, TensorFlow†, and Keras†, or tools in other dynamically typed, non-precompiled languages. In contrast, numerical weather models are almost universally written in statically typed compiled languages, predominantly Fortran. Making use of ML emulations or parameterizations in these models thus requires

1. that they be treated as a separate model periodically coupled to the main model (as is done between atmosphere and ocean models for example), or

2. that they be manually re-implemented in Fortran, or

3. that the pre-existing libraries used are somehow made accessible within the model code.

X. Wang et al. (2022; mentioned already above) opted for method 1, developing what could be considered a hybrid ML–physics-based model rather than a traditional GCM with ML-based parametrization. In their study, the authors used an NN–GCM coupling platform through which the NNs and GCMs could interact through data transmission. One advantage to this approach noted by the authors is that it allows for a high degree of flexibility in the application of the ML component; however it is likely to be less efficient than a fully embedded ML model due to the potential for data transmission bottlenecks. This framework was then formalized by Zhong et al. (2023).

There are many examples where method 2 was used, such as Rasp et al. (2018), Brenowitz and Bretherton (2018), and Gagne et al. (2019, 2020a). The obvious disadvantage of this approach is that every change to the ML model being used requires re-implementation in Fortran, and if the aim is to test a suite of ML models, this approach becomes untenable. Furthermore, this approach poses greater technical barriers for scientists developing ML-based solutions for numerical model challenges since they must be sufficiently proficient in Fortran to re-implement models in it rather than using existing user-friendly Python toolkits.

A solution lying somewhere between methods 2 and 3 was developed by Ott et al. (2020), who developed a Fortran–Keras bridge (FKB) library that facilitated the implementation of Keras-like NN modules in Fortran, providing a more modular means to build NNs in Fortran code. This, however, did not fully overcome the drawbacks posed by method 2 on its own; implementation of layers in Fortran is still necessary, and any innovations in the Python modules being used would need to be mirrored in the Fortran library.

Finally, method 3 is being tackled by the Met Office in the PIML (https://turbo-adventure-f9826cb3.pages.github.io/, last access: 7 February 2023) project and by ECMWF with an application called Infero (https://infero.readthedocs.io/en/latest/, last access: 7 February 2023). These projects both seek to develop a framework which can be used by researchers to develop ML solutions to modelling problems in Python and then integrate them directly into the existing codebase of the physical model (e.g. the Unified Model at the UK Met Office). The approach used is to directly expose the compiled code underpinning the Python modules within the physical model code.

The representation and solving of the partial differential equations (PDEs) governing the fluid flow and dynamical processes in the oceans and atmosphere can be considered the backbone of weather and climate models. The solvers used to find solutions to these equations are typically iterative and must solve the governing equations of the model dynamics on every time step and at every grid point. There has been growing interest in using ML to facilitate speedups and computational cost reductions in the preconditioning and execution of these solvers. Preconditioners are used to reduce the number of iterations required for a solver to converge on a solution and usually do so by inverting parts of the linear problem. Many earlier studies focused on using ML to select the best preconditioner and/or PDE solver from a set of possible choices (e.g. Holloway and Chen, 2007; Kuefler and Chen, 2008; George et al., 2008; Peairs and Chen, 2011; Huang et al., 2016; and Yamada et al., 2018). Ackmann et al. (2020) approached the preconditioner part of the system more directly, using a variety of ML methods to directly predict the preconditioner of a linear solver rather than using a standard preconditioner. Rizzuti et al. (2019) focused on the solver, using ML to apply corrections to a traditional iterative solver for the Helmholtz equation. Going a step further, a number of studies have used ML to replace the linear solver entirely (Ladický et al., 2015; Yang et al., 2016; Tompson et al., 2017). The representation of the fluid equations in a gridded model poses a challenge because of the inability to resolve fine features in their solution. This leads to the use of coarse-grained approximations to the actual equations, which aim to accurately represent longer-wavelength dynamics while properly accounting for unresolved smaller-scale features. Bar-Sinai et al. (2019) trained an NN to optimally discretize the PDEs based on actual solutions to the known underlying equations. They showed that their method is highly accurate, allowing them to integrate in time a collection of non-linear equations in 1 spatial dimension at resolutions 4× to 8× coarser than was possible with standard finite-difference methods.
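To make the role of a preconditioner concrete, the following is a minimal sketch of a conjugate gradient solver with an optional preconditioner. It is not taken from any of the studies cited above. The test matrix, the right-hand side, and the choice of a Jacobi (diagonal) preconditioner are illustrative assumptions, and the function name `conjugate_gradient` is hypothetical. In the ML-based approaches described above, the hand-written `apply_M_inv` would in principle be replaced by a learned approximation.

```python
import numpy as np

def conjugate_gradient(A, b, apply_M_inv=None, tol=1e-8, max_iter=1000):
    """Solve A x = b for a symmetric positive-definite A.

    apply_M_inv applies an approximate inverse of A (the preconditioner)
    to a vector; if None, no preconditioning is used.
    Returns the solution and the number of iterations taken.
    """
    if apply_M_inv is None:
        apply_M_inv = lambda r: r
    x = np.zeros_like(b)
    r = b.copy()                 # residual b - A x for the initial guess x = 0
    z = apply_M_inv(r)           # preconditioned residual
    p = z.copy()                 # initial search direction
    rz = r @ z
    b_norm = np.linalg.norm(b)
    for k in range(1, max_iter + 1):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x = x + alpha * p
        r = r - alpha * Ap
        if np.linalg.norm(r) <= tol * b_norm:
            return x, k
        z = apply_M_inv(r)
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, max_iter

# Assumed test problem: a tridiagonal operator whose diagonal varies over
# four orders of magnitude, which makes the system poorly conditioned.
n = 200
diag = 2.0 + np.logspace(0, 4, n)
A = np.diag(diag) - np.diag(np.ones(n - 1), 1) - np.diag(np.ones(n - 1), -1)
b = np.ones(n)

x_plain, iters_plain = conjugate_gradient(A, b)
# Jacobi preconditioner: invert only the diagonal part of the linear problem.
x_jacobi, iters_jacobi = conjugate_gradient(A, b, apply_M_inv=lambda r: r / diag)

print("iterations without preconditioner:", iters_plain)
print("iterations with Jacobi preconditioner:", iters_jacobi)
```

Both calls solve the same system to the same tolerance; the preconditioned call inverts only the diagonal of the operator yet needs fewer iterations on this problem, which is the effect the cited studies aim to obtain or improve with ML.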

Building on this, Kochkov et al. (2021) developed an ML-based method to accurately calculate the time evolution of solutions to non-linear PDEs which used grids an order of magnitude coarser than is traditionally required to achieve the same degree of accuracy. They used convolutional NNs to discover discretized versions of the equations (as in Bar-Sinai et al., 2019) and applied this method selectively to the components of traditional solvers most affected by coarse resolution, with each NN being equation-specific. They utilized the property that the dynamics of the PDEs were localized, combined with the convolutional layers of their NN enforcing translation invariance†, to perform their training simulations on small but high-resolution domains, making the training set affordable to produce. An interesting feature of their training approach, which is growing in popularity, was the inclusion of the numerical solver in the training loss function: the loss function was defined as the cumulative pointwise error between the predicted and ground truth values over the training period. In this way, the NN model could see its own outputs as inputs, ensuring an internally consistent training process. This had the effect of improving the predictive performance of the model over longer timescales, in terms of both accuracy and stability. Finally, the authors demonstrated that their models produced generalizable properties (i.e. although the models were trained on small domains, they produced accurate simulations over larger domains with different forcing and Reynolds number). They showed that this generalization property arose from consistent physical constraints being enforced by their chosen method.
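The idea of including the solver in the training loss can be illustrated with a small sketch. This is not the implementation of Kochkov et al. (2021): the toy diffusion solver, the single scalar parameter `theta` standing in for a neural network, and the grid search standing in for gradient-based training are all assumptions made for brevity. What the sketch does share with the approach described above is the structure of the loss: the corrected solver is unrolled over several steps, each prediction is fed back as the next input, and the pointwise errors are accumulated.

```python
import numpy as np

def laplacian(u):
    """Second difference on a periodic 1D grid."""
    return np.roll(u, 1) - 2.0 * u + np.roll(u, -1)

def solver_step(u, nu=0.1):
    """Toy explicit diffusion step (stand-in for a coarse numerical solver)."""
    return u + nu * laplacian(u)

def corrected_step(u, theta):
    """Solver step plus a hypothetical learned correction with parameter theta."""
    return solver_step(u) + theta * laplacian(u)

def unrolled_loss(theta, u0, truth):
    """Cumulative mean squared pointwise error over an unrolled trajectory.

    truth has shape (n_steps, n_points). The model output at each step is
    used as the input to the next step, so errors compound as in a forecast.
    """
    u = u0
    total = 0.0
    for target in truth:
        u = corrected_step(u, theta)
        total += np.mean((u - target) ** 2)
    return total

# Assumed "ground truth": the same scheme run with a larger diffusivity (0.15),
# so the correction that closes the gap is theta = 0.05.
x = np.linspace(0.0, 1.0, 64, endpoint=False)
u0 = np.sin(2 * np.pi * x) + 0.5 * np.sin(6 * np.pi * x)
truth = []
u = u0
for _ in range(20):
    u = solver_step(u, nu=0.15)
    truth.append(u)
truth = np.stack(truth)

# Grid search in place of gradient descent, to keep the sketch dependency-free.
thetas = np.linspace(0.0, 0.1, 11)
losses = np.array([unrolled_loss(t, u0, truth) for t in thetas])
best_theta = thetas[np.argmin(losses)]
print("best theta:", best_theta)
```

In a real setting the correction would be an NN and the loss would be differentiated through the unrolled solver steps, which requires the solver itself to be written in a differentiable framework.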

An alternative to using ML to discover discretized versions of the PDEs is to instead use NNs to learn the evolution operator of the underlying unknown PDE, a method often referred to as a DeepONet†. The evolution operator maps the solution of a PDE forwards in time and completely characterizes the solution evolution of the underlying unknown PDE. Because it operates on the PDE itself rather than on a particular discretization, it is scale invariant† and so bypasses the restriction of other methods that must be trained for a specific discretization or grid scale. Interest in, and the degree of sophistication of, DeepONets has grown rapidly in recent years (e.g. Lu et al., 2019; Wu and Xiu, 2020; Bhattacharya et al., 2020; Li et al., 2020a, b, c; Nelsen and Stuart, 2021; Patel et al., 2021; Wang et al., 2021; Lanthaler et al., 2022) to the point where the method is showing promising speedups: 3× faster than traditional solvers in the case of Wang et al. (2021).

The application of ML to the solving of PDEs and the preconditioning of PDE solvers has been a fruitful avenue of research to date. It has led to innovations which have proven useful even outside of the immediate field (e.g. Pathak et al., 2022, adapted innovations from DeepONets to use in fully ML-based weather models – this is discussed further in the next section). This is likely in part because there are many areas of engineering and science which are active in progressing relevant research, leading to a greater overall pace of innovation. ML-based PDE solvers and preconditioners have not yet been tested in a physical weather and climate model. There are few theoretical reasons why this could not be done, and, if effective, it could yield significant computational efficiencies for traditional physical model architectures. This poses an interesting avenue for further research.

The shift from using ML to emulate or replace parametrization schemes to using ML to replace the entire GCM has been made plausible by the increasing volume of training data available. The focus in this section will be on the challenge of completely replacing a GCM with an ML model.

There has been a flurry of activity in the use of ML for nowcasting (e.g. Ravuri et al., 2021); however, since the focus of this review is on weather and climate applications, these studies will not be elaborated on.

5.1 Early work – 1D deterministic models

Work on the use of ML to predict chaotic time-domain systems initially focused on 1D problems, including 1D Lorenz systems (e.g. Karunasinghe and Liong, 2006; Vlachas et al., 2018). Of particular interest is Vlachas et al. (2018), who used long short-term memory networks (LSTMs†), which are well-suited to complex time domain problems. Convolutional LSTMs (ConvLSTMs), which combine convolutional layers with an LSTM mechanism, were introduced in the meteorological domain by Shi et al. (2015) for precipitation nowcasting. They have since seen wide adoption in other areas (e.g. Yuan et al., 2018; Moishin et al., 2021; Kelotra and Pandey, 2020). Their success in other domains suggests that revisiting their utility for weather and climate modelling could be worthwhile.

5.2 Moving to spatially extended deterministic ML-based models

Replacing a GCM entirely with an ML alternative was first suggested and tested in a spatially resolved global configuration by Dueben and Bauer (2018), although for this study they only sought to predict a single variable (geopotential height at 500 hPa) on a 6° grid. Scher (2018) trained a CNN to predict the next model state of a GCM based on the complete state of the model at the previous step (i.e. an emulator of the GCM). Since this work was intended to be a proof of concept, the authors used a highly simplified GCM with no seasonal or diurnal cycle, no ocean, no orography, a resolution of ∼ 625 km in the horizontal, and 10 vertical levels. Nonetheless, their ML model showed impressive capabilities; it was able to predict the complete model state several time steps ahead and when run in an iterative way (i.e. by feeding the model outputs back as new inputs) was able to produce a stable climate run with the same climate statistics as the GCM, with no long-term drift (even though no conservation properties were explicitly built into the CNN). Scher and Messori (2019) then extended this work but continued the proof-of-concept approach. They investigated the ability of NNs to make skilful forecasts iteratively a day at a time to a lead time of a few days for GCMs of varying complexity and explored a combination of other factors, including number of training years, the effects of model retuning, and the impact of a seasonal cycle on NN model accuracy and stability.

Weyn et al. (2019) aimed to predict a limited number of variables, focusing on the NWP to medium-range time domain. They trained a CNN to predict 500 hPa geopotential height and 300 to 700 hPa geopotential thickness over the Northern Hemisphere out to a 14 d lead time, showing better skill out to 3 d than persistence†, climatology†, and a dynamics-based barotropic vorticity model but not better than an operational full-physics weather prediction model.
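The persistence and climatology baselines referred to here are simple to compute, and a short sketch may help readers who are new to forecast verification. The example below is an illustration on synthetic data, not a reproduction of any cited study: the AR(1) series, the train/test split, and the use of a single long-term mean as the climatology (operational climatologies usually vary with season and time of day) are all assumptions.

```python
import numpy as np

def rmse(forecast, truth):
    """Root-mean-square error between two equally shaped arrays."""
    return float(np.sqrt(np.mean((forecast - truth) ** 2)))

def persistence_forecast(series, lead):
    """Persistence: the forecast for time t + lead is the value observed at t."""
    return series[:-lead]

def climatology_forecast(train_series, n):
    """Climatology: always forecast the long-term mean of past data."""
    return np.full(n, train_series.mean())

# Synthetic stand-in for a daily anomaly time series: an AR(1) process.
rng = np.random.default_rng(42)
n_total, phi = 20000, 0.9
series = np.zeros(n_total)
noise = rng.standard_normal(n_total)
for t in range(1, n_total):
    series[t] = phi * series[t - 1] + noise[t]

train, test = series[: n_total // 2], series[n_total // 2 :]

scores = {}
for lead in (1, 30):
    truth = test[lead:]
    scores[lead] = {
        "persistence": rmse(persistence_forecast(test, lead), truth),
        "climatology": rmse(climatology_forecast(train, truth.size), truth),
    }
    print(lead, scores[lead])
```

On a series with memory, persistence is hard to beat at short lead times and climatology is hard to beat at long lead times, which is why a skilful model is expected to outperform both across the lead times of interest.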

Weyn et al. (2020) then improved on this significantly, with a deep U-Net-style CNN trained to predict four variables (geopotential height at 500 and 1000 hPa, 300 to 700 hPa geopotential thickness, and 2 m temperature) globally to a 14 d lead time. A major innovation in this study was their use of a cubed-sphere grid, which minimized distortions for planar convolution algorithms while also providing closed boundary conditions for the edges of the cube faces. Additionally, they extended their previous work to include sequence prediction techniques, making skilful predictions possible to longer lead times. Their improved model outperformed persistence and a coarse-resolution comparator (a T42 spectral resolution version of the ECMWF IFS model, with 62 vertical levels and ∼ 2.8° horizontal resolution) to the full 14 d lead time but was not as skilful as a higher-resolution comparator (a T63 spectral resolution version of the IFS model with 137 vertical levels and ∼ 1.9° horizontal resolution) or the operational sub-seasonal-to-seasonal (S2S) version of the ECMWF IFS.

Clare et al. (2021) tackled a shortcoming of many of the ML weather and climate models developed to this point, namely that most were deterministic, limiting their potential utility. To address this, they trained an NN to predict full probability density functions of geopotential height at 500 hPa and temperature at 850 hPa at 3 and 5 d lead times, producing a probabilistic forecast which was comparable in accuracy to Weyn et al. (2020).

Choosing to focus on improved skill rather than the question of probabilistic vs. deterministic models, Rasp and Thuerey (2021) developed a ResNet model trained to predict geopotential height, temperature, and precipitation to a 5 d lead time and assessed it against the same set of physical models as Weyn et al. (2020). Their model was close to being as skilful as the T63 spectral resolution version of the IFS model, and had better skill to the 5 d lead time than Weyn et al. (2020).

Keisler (2022) took an ambitious step forward, training a graph neural network† (GNN) model to predict six physical variables on 13 atmospheric levels on a 1° horizontal grid, which the author claims is ∼ 50–2000 times larger than the number of physical quantities predicted by the models in Rasp and Thuerey (2021) and Weyn et al. (2020). The model worked by iteratively predicting the state of the six variables 6 h into the future (i.e. the output of each model time step was the input into the next time step) to a total lead time of 6 d. The author showed that the model outperformed both Rasp and Thuerey (2021) and Weyn et al. (2020) in the variables common to all three studies and suggested that the gain in skill seen over previous studies was due to the use of more channels† of information, as well as the higher spatial and temporal resolution of the model. Finally, the author showed that the model was more skilful than NOAA's Global Forecast System (GFS) physical model to 6 d lead time but not as skilful as ECMWF IFS.

Lam et al. (2022) also used GNNs to build their ML-based weather and climate model, GraphCast. This model was the most skilful ML-based weather and climate model at the time of writing this review. While the first ML-based weather and climate model to claim to exceed the skill of a numerical model was Pangu-Weather (Bi et al., 2022; described in greater detail in the following subsection), GraphCast exceeded the skill of both the ECMWF deterministic operational forecasting system, HRES, and also Pangu-Weather. Furthermore, Lam et al. (2022) paid particular attention to evaluating their model and HRES against appropriate measures and included existing model assessment scorecards from ECMWF to evaluate them. GraphCast capitalized on the ability of GNNs to model arbitrary sparse interactions by adopting a high-resolution multi-scale mesh representation of the input and output parameters. It was trained on the ECMWF ERA5 reanalysis archive to produce predictions of five surface variables and six atmospheric variables, each at 37 vertical pressure levels, on a 0.25° grid. It made predictions on a 6-hourly time step and was run autoregressively to produce predictions to a 10 d lead time. The authors demonstrated that GraphCast was more accurate than HRES on 90.0 % of the 2760 variable and lead time combinations they evaluated.
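Running a model autoregressively, as described for both Keisler (2022) and GraphCast, amounts to a short loop. The sketch below is a generic illustration and not code from either study: `autoregressive_rollout` and `toy_step` are hypothetical names, and `toy_step` is a placeholder for a trained one-step model. It also makes the step counts explicit: a 6 h step gives 24 model applications for a 6 d forecast and 40 for a 10 d forecast.

```python
import numpy as np

def autoregressive_rollout(step_fn, initial_state, lead_time_hours, step_hours=6):
    """Apply a one-step model repeatedly, feeding each output back as input.

    Returns an array of shape (n_steps, *initial_state.shape) holding the
    predicted state at every step out to the requested lead time.
    """
    n_steps = lead_time_hours // step_hours
    states = []
    state = initial_state
    for _ in range(n_steps):
        state = step_fn(state)   # output of this step is the next input
        states.append(state)
    return np.stack(states)

def toy_step(state):
    """Placeholder for a trained model: slightly damps the field each step."""
    return 0.99 * state

# Assumed toy state: one variable on a coarse latitude-longitude grid.
initial_state = np.ones((32, 64))

forecast_6d = autoregressive_rollout(toy_step, initial_state, lead_time_hours=6 * 24)
forecast_10d = autoregressive_rollout(toy_step, initial_state, lead_time_hours=10 * 24)
print(forecast_6d.shape, forecast_10d.shape)
```

Because each step consumes the previous prediction, any error made early in the rollout is carried forward, which is one reason long-lead stability is treated as a key test for these models.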

5.3 Ensemble generation with ML-based models

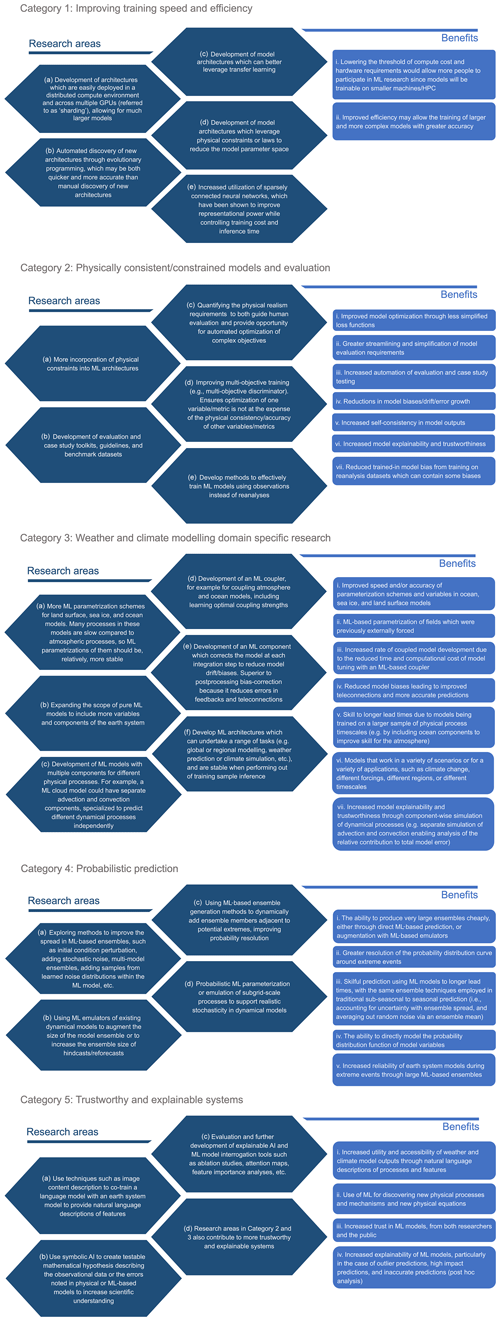

A common criticism of ML approaches to weather and climate prediction is the difficulty of representing uncertainty and/or the tails of the distribution of predicted parameters. One common method to represent the range of possible outcomes (including extremes) under different sources of uncertainty is through a well-calibrated ensemble of predictions. There are a growing number of examples where ensemble generation is considered, many of which fall into the category of full-model replacement.