the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

SymPKF (v1.0): a symbolic and computational toolbox for the design of parametric Kalman filter dynamics

Olivier Pannekoucke

Philippe Arbogast

Recent research in data assimilation has led to the introduction of the parametric Kalman filter (PKF): an implementation of the Kalman filter, whereby the covariance matrices are approximated by a parameterized covariance model. In the PKF, the dynamics of the covariance during the forecast step rely on the prediction of the covariance parameters. Hence, the design of the parameter dynamics is crucial, while it can be tedious to do this by hand. This contribution introduces a Python package, SymPKF, able to compute PKF dynamics for univariate statistics and when the covariance model is parameterized from the variance and the local anisotropy of the correlations. The ability of SymPKF to produce the PKF dynamics is shown on a nonlinear diffusive advection (the Burgers equation) over a 1D domain and the linear advection over a 2D domain. The computation of the PKF dynamics is performed at a symbolic level, but an automatic code generator is also introduced to perform numerical simulations. A final multivariate example illustrates the potential of SymPKF to go beyond the univariate case.

- Article

(5087 KB) - Full-text XML

- BibTeX

- EndNote

The Kalman filter (KF) (Kalman, 1960) is one of the backbones of data assimilation. This filter represents the dynamics of a Gaussian distribution all along the analysis and forecast cycles and takes the form of two equations representing the evolution of the mean and of the covariance of the Gaussian distribution.

While the equations of the KF are simple linear algebra, the large dimension of linear space encountered in the realm of data assimilation makes the KF impossible to handle, and this is particularly true for the forecast step. This limitation has motivated some approximation of covariance matrix to make the KF possible. For instance, in the ensemble method (Evensen, 2009), the covariance matrix is approximated by a sample estimation, whereby the time evolution of the covariance matrix is then deduced from the forecast of each individual sample. In the parametric Kalman filter (PKF) (Pannekoucke et al., 2016, 2018b, a), the covariance matrix is approximated by a parametric covariance model; the time evolution of the matrix is deduced from the time integration of the parameters' evolution equations.

One of the major limitations for the PKF is the design of the parameter evolution equations. Although not difficult from a mathematical point of view, this step requires the calculation of many terms that are difficult to calculate by hand and may involve errors in the calculation. To facilitate the derivation of the parametric dynamics and certify the correctness of the resulting system a symbolic derivation of the dynamics would be welcome.

The goal of the package SymPKF 1.01 is to facilitate the computation of the PKF dynamics for a particular class of covariance model, the VLATcov model, which is parameterized by the variance and the anisotropy. The symbolic computation of the PKF dynamics relies on a computer algebra system (CAS) able to handle abstract mathematical expressions. A preliminary version has been implemented with Maxima2 (Pannekoucke, 2021a). However, in order to create an integrated framework that would include the design of the parametric system, as well as its numerical evaluation, the symbolic Python package SymPy (Meurer et al., 2017) has been preferred for the present implementation. In particular, SymPKF comes with an automatic code generator to provide an end-to-end exploration of the PKF approach from the computation of the PKF dynamics to their numerical integration.

The paper is organized as follows. The next section provides the background on data assimilation and introduces the PKF. Section 3 focuses on the PKF for univariate VLATcov models in the perspective of symbolic computation by a CAS. Then, the package SymPKF is introduced in Sect. 4 from its use on the nonlinear diffusive advection (the Burgers equation) over a 1D domain. A numerical example illustrates the use of the automatic code generator provided in SymPKF. Then, the example of the linear advection over a 2D domain shows the ability of SymPKF to handle 2D and 3D domains. The section ends with a simple illustration of a multivariate situation, which also shows that SymPKF applies to a system of prognostic equations. The conclusion is given in Sect. 5.

2.1 Context of the numerical prediction

Dynamics encountered in geosciences are given as a system of partial differential equations (PDEs):

where 𝒳(t,x) is the state of the system and denotes either a scalar field or multivariate fields in a coordinate system , where d is the dimension the geographical space, ∂𝒳 represents the partial derivatives with respect to the coordinate system at any order, with the convention that order zero denotes the field 𝒳 itself, and ℳ denotes the trend of the dynamics. A spatial discretization (e.g., by using finite differences, finite elements, finite volumes, spectral decomposition) transforms Eq. (1) into

where, this time, 𝒳(t) is a vector, and ℳ denotes the discretization of the trend in Eq. (1). Thereafter, 𝒳 can be seen either as a collection of continuous fields with dynamics given by Eq. (1) or a discrete vector of dynamics as in Eq. (2).

Because of the sparsity and the error of the observations, the forecast 𝒳f is only an estimation of the true state 𝒳t, which is known to within a forecast error defined by . This error is often modeled as an unbiased random variable, 𝔼[ef]=0. In the discrete formulation of the dynamics in Eq. (2), the forecast error covariance matrix is given by Pf=𝔼[ef(ef)T], where the superscript T denotes the transpose operator. Since this contribution is focused on the forecast step, hereafter the upper script f is removed for the sake of simplicity.

We now detail how the error covariance matrix evolves during the forecast by considering the formalism of the second-order nonlinear Kalman filter.

2.2 Second-order nonlinear Kalman filter

A second-order nonlinear Kalman filter (KF2) is a filter that extends the Kalman filter (KF) to nonlinear situations in which the error covariance matrix evolves tangent-linearly along the trajectory of the mean state and the dynamics of this mean are governed by the fluctuation–mean interacting dynamics (Jazwinski, 1970; Cohn, 1993). Hence, we first state the dynamics of the mean under the fluctuation–mean interaction, then the dynamics of the error covariance. Note that the choice of the following presentation is motivated by the perspective of using a computer algebra system to perform the computation.

2.2.1 Computation of the fluctuation–mean interaction dynamics

Because of the uncertainty in the initial condition, the state 𝒳 is modelized as a Markov process , where ω stands for the stochasticity, while 𝒳 evolves by Eq. (1). Hence, ω lies within a certain probability space , where ℱ is σ algebra on Ω (a family of subsets of Ω, which contains Ω and which is stable for the complement and the countable union) and P is a probability measure (see, e.g., Øksendal, 2003, chap.2). is an ℱ-measurable function wherein denotes the Borel σ algebra on ℝn, where the integer n is either the dimension of the multivariate field 𝒳(t,x) or the dimension of its discretized version 𝒳(t). The connection between the Markov process and the parameter dynamics is obtained using the Reynolds averaging technique (Lesieur, 2007, chap. 4).

To perform the Reynolds averaging of Eq. (1), the first step is to replace the random field by its Reynolds decomposition . In this modeling of the random state, 𝔼[𝒳] is the ensemble average or the mean state; e is an error or a fluctuation to the mean, and it is an unbiased random field, 𝔼[e]=0. Then, Eq. (1) reads as

where η is a control of magnitude introduced to facilitate Taylor's expansion when using a computer algebra system. At the second order, the Taylor's expansion in η of Eq. (3) reads

where ℳ′ and ℳ′′ are two linear operators; the former (the latter) refers to the tangent-linear model (the Hessian), and both are computed with respect to the mean state 𝔼[𝒳]. The first-order expansion is deduced from Eq. (4a) by setting η2=0, which then reads as

By setting η to 1, the dynamics of the ensemble average are calculated at the second order from the expectation of Eq. (4a) that reads as

where denotes the tensor product of the partial derivatives with respect to the spatial coordinates, i.e., terms such as for any positive integers (k,m). Here, we have used the assumption that the partial derivative commutes with the expectation, , and that 𝔼[e]=0. Because the expectation is a projector, , the expectation of is itself. The second term of the right-hand side makes the retro-action of the error appear in the ensemble-averaged dynamics. Hence, Eq. (5) gives the dynamics of the error–mean interaction (or fluctuation–mean interaction).

Note that the tangent-linear dynamics along the ensemble-averaged dynamics in Eq. (5) are obtained as the difference between the first-order Taylor's expansion in Eq. (4b) and its expectation, and they read as

Now it is possible to detail the dynamics of the error covariance from the dynamics of the error, which tangent-linearly evolve along the mean state 𝔼[𝒳].

2.2.2 Computation of the error covariance dynamics

In the discretized form, the dynamics of the error in Eq. (6) read as the ordinary differential equation (ODE):

where M stands for the tangent-linear (TL) model evaluated at the mean state 𝔼[𝒳]. So the dynamics of the error covariance matrix, P=𝔼[eeT], are given by the ODE:

(MT is the adjoint of M) or its integrated version

where Mt←0 is the propagator associated with the time integration of Eq. (7), initiated from the covariance P0.

2.2.3 Setting of the KF2

Gathering the dynamics of the ensemble mean given by the fluctuation–mean interaction in Eq. (5) and the covariance dynamics in Eq. (8) leads to the second-order closure approximation of the extended KF, which is the forecast step equations of the KF2.

Similarly to the KF, the principal limitation of the KF2 is the numerical cost associated with the covariance dynamics in Eq. (8): living in a discrete world, the numerical cost of Eq. (8) dramatically increases with the size of the problem. As an example, for the dynamics of a simple scalar field discretized with n grid points, the dimension of its vector representation is n, while the size of the error covariance matrix scales as n2, leading to a numerical cost of Eq. (8) between 𝒪(n2) and 𝒪(n3).

We now introduce the parametric approximation of covariance matrices, which aims to reduce the cost of the covariance dynamics in Eq. (8).

2.3 Formulation of the PKF prediction

The parametric formulation of covariance evolution stands as follows. If P(𝒫) denotes a covariance model featured by a set of parameters , then there is a set featuring the forecast error covariance matrix so that approximates the forecast error covariance , i.e., . Note that a parameter pi can be a scalar or a field, e.g., a variance field.

Hence, starting from the initial condition so that , if the dynamics of the parameters are known, then it is possible to approximately determine by without solving Eq. (8) explicitly. This approach constitutes the so-called parametric Kalman filter (PKF) approximation introduced by Pannekoucke et al. (2016, 2018a) (P16, P18).

In practice, the parametric covariance models considered in the PKF are such that the number of parameters in 𝒫 is much lower than the number of coefficients required to represent the full covariance P(𝒫). For instance, for the dynamics of a scalar field discretized with n grid points, as introduced in Sect. 2.2.3, the total number of parameters in 𝒫 should be of same order as n, e.g., 2n or 3n, so that the cost to predict the evolution of the parameters would represent 2 or 3 times the cost to predict the evolution of the scalar field. Said differently, the cost to predict the parameters should scale in 𝒪(n), which is much lower than the computation of Eq. (8) in 𝒪(n2) - 𝒪(n3).

The cost of the PKF can be compared with other low-rank methods such as the reduced-rank Kalman filter (Fisher, 1998) or the ensemble Kalman filter (Evensen, 2009), for which an ensemble size of 100 members is often encountered depending on the dimension of the unstable subspace and to limit the amount of sampling noise. Hence, when each forecast is made at full resolution the cost of these approaches is 100 times the cost of a prediction, which is larger than the cost we expect for the PKF (see P16, P18). Note that low-rank and ensemble methods often consider the computation of the dynamics at a lower resolution, which leads to a lower cost than the 100 forecast at full resolution. The PKF is computed at the full resolution and is free from sampling noise.

But the frugality of the covariance model is not the only criterion. For instance, the first variational data assimilation systems considered a covariance model based on the spectral diagonal assumption in spectral space (Courtier et al., 1998; Berre, 2000). This covariance model reads as , where S denotes the spectral transform with * the conjugate transpose operator, and Σ is the diagonal matrix of standard deviation, i.e., the square root of the variance field V. In this model, the set of parameters 𝒫s is given by the grid points and the spectral variances, . If the shape of Ps(𝒫s) is n2, the number of parameters 𝒫s for this covariance model is 2n (n variances in grid points, stored as the standard deviation in the diagonal of Σ; n variances in spectral, stored in the diagonal of D), which is quite economical. However, the resulting correlation functions are homogeneous (there is the same correlation function in each point), which is enough to represent climatologically stationary background error statistics but not the flow-dependent statistics as existing in the KF. While it is possible to write the equations for the dynamics of the spectral variances (e.g., for the linear waves), the limitation that the spectral diagonal approach can only model homogeneous correlations motivated the introduction of other covariance models. For example, the covariance model based on the diagonal assumption in wavelet space (Fisher, 2004; Pannekoucke et al., 2007) can model heterogeneous correlations at a low memory cost. However, the dynamics of the wavelet variances are much more difficult to develop because of the redundancy of the wavelet transform on the sphere.

Hence, a covariance model adapted for the PKF should be able to represent realistic correlations and be such that the dynamics of the parameters can be computed, e.g., a covariance model defined by parameters in grid points. To do so, we now focus on the PKF applied to a particular family of covariance models, whose parameters are defined in grid points by the variance and the anisotropy fields: , where g will denotes the local anisotropy tensor of the local correlation function.

This part introduces a particular family of covariance models parameterized by the fields of variances and of the local anisotropy tensor: the VLATcov models (Pannekoucke, 2021b). What makes this covariance model interesting is that its parameters are related to the error field, and thus it is possible to determine the dynamics of the parameters. To introduce VLATcov models, we first present the diagnosis of the variance and of the local anisotropy tensor; then we present two examples of VLATcov models, and we end the section with a description of the dynamics of the parameters.

3.1 Definition of the fields of variance and of local anisotropy tensor

From now, we will focus on the forecast error statistics, so the upper script f is removed for the sake of simplicity. Moreover, for a function f, when there is no confusion, the value of f at a point x is written either as f(x) or as fx.

The forecast error being unbiased, 𝔼[e]=0, its variance at a point x is defined as

When the error is a random differentiable field, the anisotropy of the two-point correlation function is featured from the second-order expansion,

by the local metric tensor g(x) and defined as

where , e.g.,

The metric tensor is a symmetric positive definite matrix, and it is a 2×2 (3×3) matrix in a 2D (3D) domain.

Note that it is useful to introduce the local aspect tensor (Purser et al., 2003), defined as the inverse of the metric tensor:

where the superscript −1 denotes the matrix inverse. The aspect tensor at the point x is geometrically interpreted as an ellipse whose shape coincides with that of the local correlation function.

What makes the metric tensor attractive, either at a theoretical or at a practical level, is that it is closely related to the normalized error by

(see, e.g., Pannekoucke, 2021b, for details).

Hence, using the notation introduced in Sect. 2.3, a VLATcov model is a covariance model, P(𝒫), characterized by the set of two parameter fields, , given by the variance field and by the anisotropy field – the latter being defined either by the metric tensor field g or by the aspect tensor field s – i.e., or . Said differently, any VLATcov model reads as P(V,g) or P(V,s).

To put some flesh on the bones, two examples of VLATcov models are now presented.

3.2 Examples of VLATcov models

We first consider the covariance model based on the heterogeneous diffusion operator of Weaver and Courtier (2001), which is used in variational data assimilation to model heterogeneous correlation functions, e.g., for the ocean or for air quality. This model has the property that, under the local homogenous assumption (when the spatial derivatives are negligible), the local aspect tensors of the correlation functions are twice the local diffusion tensors (Pannekoucke and Massart, 2008; Mirouze and Weaver, 2010). Hence, by defining the local diffusion tensors as half the local aspect tensors, the covariance model based on the heterogeneous diffusion equation is a VLATcov model.

Another example of a heterogeneous covariance model is the heterogeneous Gaussian covariance model:

where ν is a field of symmetric positive definite matrices, and denotes the matrix determinant. is a particular case of the class of covariance models deduced from Theorem 1 of Paciorek and Schervish (2004). Again, this covariance model has the property that, under local homogenous assumptions, the local aspect tensor is approximately given by ν, i.e., for any point x,

Hence, as for the covariance model based on the diffusion equation, by defining the field ν as the aspect tensor field, the heterogeneous Gaussian covariance model is a VLATcov model (Pannekoucke, 2021b).

At this stage, all the pieces of the puzzle are put together to build the PKF dynamics. We have covariance models parameterized from the variance and the local anisotropy, which are both related to the error field: knowing the dynamics of the error leads to the dynamics of the VLATcov parameters. This is now detailed.

3.3 PKF prediction step for VLATcov models

When the dynamics of the error e are well approximated from the tangent-linear evolution in Eq. (6), the connection between the covariance parameters and the error, represented in Eqs. (9) and (13), makes it possible to establish the prediction step of the PKF (Pannekoucke et al., 2018a), which reads as the dynamics of the ensemble average (at the second-order closure),

coupled with the dynamics of the variance and the metric,

where it remains to replace the dynamics of the error (and its normalized version ) from Eq. (6) and where the property that the expectation operator and the temporal derivative commutes, , has been used to obtain Eq. (16b) and (16c).

Following the discussion in Sect. 2.3, the set of Eq. (16) is at the heart of the numerical sobriety of the parametric approach since the cost of the prediction of the parameter scales like 𝒪(n). In contrast to the matrix dynamics of the KF, the PKF approach is designed for the continuous world, leading to PDEs for the parameter dynamics in place of ODEs in Eq. (8) for the full matrix dynamics. Moreover, the dynamics of the parameters shed light on the nature of the processes governing the dynamics of covariances, and it does not require any adjoint of the dynamics (Pannekoucke et al., 2016, 2018a).

Note that Eq. (16) can be formulated in terms of aspect tensors thanks to the definition in Eq. (12): since sg=I, its time derivative leads to the dynamics , and then

where it remains to replace occurrences of g by s−1 in the resulting dynamics of the mean, the variance, and the aspect tensor.

Hence, the PKF forecast step for a VLATcov model is given by either the system in Eq. (16) (in metric) or by its aspect tensor formulation thanks to Eq. (17). Whatever the formulation considered, it is possible to carry out the calculations using a formal calculation language. However, even for simple physical processes, the number of terms in formal expressions can become very large; e.g., it is common to have to manipulate expressions with more than 100 terms. Thus, any strategy that simplifies the assessment of PKF systems in advance can quickly become a significant advantage.

In the following section, we present the splitting method that allows the PKF dynamics to be expressed by bringing together the dynamics of each of the physical processes, calculated individually.

3.4 The splitting strategy

When there are several processes in the dynamics in Eq. (1), the calculation of the parametric dynamics can be tedious even when using a computer algebra system. To better use digital resources, a splitting strategy can be introduced (Pannekoucke et al., 2016, 2018a).

While the theoretical background is provided by the Lie–Trotter formula for Lie derivatives, the well-known idea of time splitting is easily taken from a first-order Taylor expansion of an Euler numerical scheme.

The computation of dynamics,

over a single time step δt can be done in two steps following the numerical scheme

where at order δt, this scheme is equivalent to , which is the Euler step of Eq. (18). Because f1 and f2 can be viewed as vector fields, the fractional scheme, joining the starting point (at t) to the end point (at t+δt), remains to go through the parallelogram formed by the sum of the two vectors along its sides. Since there are two paths joining the extreme points, starting the computation by f2 is equivalent to starting by f1 (at order δt); this corresponds to the commutativity of the diagram formed by the parallelogram.

Appendix A shows that the dynamics given by Eq. (18) imply dynamics of the error, the variance, the metric, and the aspect written as a sum of trends. Hence, it is possible to apply a splitting for all these dynamics.

As a consequence of the calculation of the parametric dynamics, calculating the parametric dynamics of Eq. (18) is equivalent to separately calculating the parametric dynamics of and , then bringing together the two parametric dynamics into a single one by summing the trends for the mean, the variance, the metric, or the aspect dynamics. This splitting applies when there are more than two processes and appears to be a general method to reduce the complexity of the calculation.

3.5 Discussion and intermediate conclusion

However, although the calculation of the system in Eq. (16) is straightforward, as it is similar to the calculation of Reynolds equations (Pannekoucke et al., 2018a), it is tedious because of the many terms involved, and there is a risk of introducing errors during the calculation by hand.

Then, once the dynamics of the parameters are established, it remains to design a numerical code to test whether the uncertainty is effectively well represented by the PKF dynamics. Again, the design of a numerical code is not necessarily difficult, but with numerous terms the risk of introducing an error is important.

To facilitate the design of the PKF dynamics and the numerical evaluation, the package SymPKF has been introduced to perform the VLATcov parameter dynamics and to generate a numerical code used for the investigations (Pannekoucke, 2021c). The next section introduces and details this tool.

In order to introduce the symbolic computation of the PKF for the VLATcov model, we consider an example: the diffusive nonlinear advection in the Burgers equation, which reads

where u stands for the velocity field and corresponds to a function of the time t and the space of coordinate x and where κ is a diffusion coefficient (constant here). This example illustrates the workflow leading to the PKF dynamics. It consists of defining the system of equations in SymPy, then computing the dynamics with Eq. (16); we now detail these two steps.

4.1 Definition of the dynamics

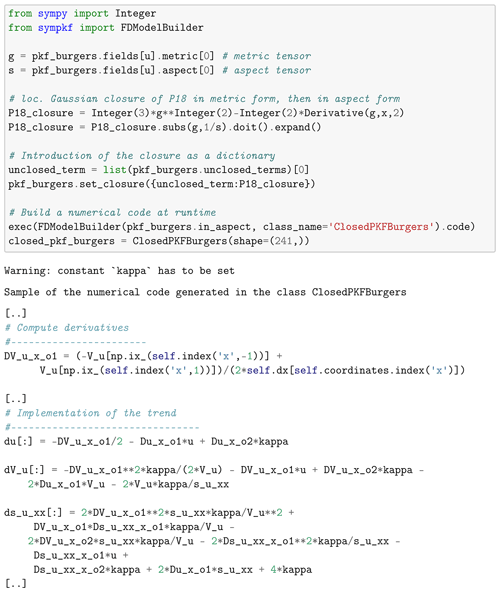

The definition of the dynamics relies on the formalism of SymPy as shown in

Fig. 1. The coordinate system is first defined as instances of the

class Symbols. Note that the time is defined as sympkf.t, while

the spatial coordinate is left to the choice of the user, here x.

Then, the function u is defined as an instance of the class Function as a

function of (t,x).

Figure 1Sample of code and Jupyter notebook outputs for the definition of the Burgers dynamics using SymPKF.

In this example, the dynamics consist of a single equation defined as an instance of

the class Eq, but in the general situation in which the dynamics are

given as a system of equations, the dynamics have to be represented as a Python list

of equations.

A preprocessing of the dynamics is then performed to determine several important quantities

to handle the dynamics: the prognostic fields

(functions for which a time derivative is present), the diagnostic fields (functions

for which there is no time derivative in the dynamics), the constant functions (functions

that only depend on the spatial coordinates), and the constants (pure scalar terms that

are not a function of any coordinate). This preprocessing is performed when

transforming the dynamics as an instance of the class PDESystem

and whose default string output delivers a summary of the dynamics:

for the Burgers equation, there is only one prognostic function, u(t,x),

and one constant, κ.

The prognostic quantities being known, it is then possible to perform the computation of the PKF dynamics, as discussed now.

4.2 Computation of the VLATcov PKF dynamics

Thanks to the preprocessing, we are able to determine the VLATcov parameters

needed to compute the PKF dynamics, which are the variance and the anisotropy tensor

associated with the prognostic fields. For the Burgers equation, the VLATcov parameters

are the variance Vu and the metric tensor or its associated

aspect tensor . Note that, in SymPKF, the VLATcov parameters

are labeled by their corresponding prognostic fields to facilitate their identification.

This labeling is achieved when the dynamics are transformed as an instance of the class

SymbolicPKF. This class is at the core of the computation of the PKF dynamics from

Eq. (16).

As discussed in Sect. 2.2.1, the PKF dynamics rely on the second-order

fluctuation–mean interaction dynamics wherein each prognostic function is replaced by

a stochastic counterpart. Hence, the constructor of SymbolicPKF

converts each prognostic function as a function of an additional

coordinate, ω∈Ω. For the Burgers equation, u(t,x) becomes .

Since the computation of the second-order fluctuation–mean interaction dynamics relies

on the expectation operator, an implementation of this expectation operator has been

introduced in SymPKF: it is defined as the class Expectation built

by inheritance from the class sympy.Function to leverage the computational

facilities of SymPy. The implementation of the class Expectation

is based on the linearity of the mathematical expectation operator with respect

to deterministic quantities and its commutativity

with partial derivatives and integrals with respect to coordinates different from ω,

e.g., for the Burgers equation .

Note that is a function of (t,x) only: the expectation operator

converts a random variable into a deterministic variable.

Then, the symbolic computation of the second-order fluctuation–mean interaction dynamics

in Eq. (16a) is performed, thanks to SymPy, by following the steps as described in

Sect. 2.2.1. In particular, the computation also leads to the tangent-linear

dynamics of the error in Eq. (6), from which it is possible to compute the dynamics of

the variance in Eq. (16b) and of the metric tensor in Eq. (16c)

(or its associated aspect tensor version).

Applying these steps and the appropriate substitutions, this is achieved

when calling the in_metric or in_aspect Python property

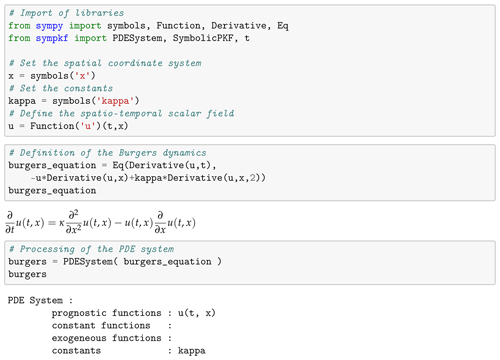

of an instance of the class SymbolicPKF. This is shown for the Burgers equation

in Fig. 2, where the background computation of the PKF dynamics

leads to a list of the three coupled equations corresponding to the mean,

the variance, and the aspect tensor, similar to the system in Eq. (22) first obtained

by Pannekoucke et al. (2018a).

Figure 2Sample of code and Jupyter notebook outputs: systems of partial differential equations given in metric and in aspect forms produced by SymPKF when applied to the Burgers equation (Eq. 20).

Hence, from SymPKF, for the Burgers equation, the VLATcov PKF dynamics given in the aspect tensor read as

where su,xx is the single component of the aspect tensor su in 1D domains. Note that in the output of the PKF equations, as reproduced in Eq. (21), the expectation in the dynamics of the mean is replaced by the prognostic field; for the Burgers equation, 𝔼[u](t,x) is simply denoted by u(t,x).

While the Burgers equation only contains two physical processes, i.e., the nonlinear advection and the diffusion, the resulting PKF dynamics in Eq. (21) make numerous terms appear, which justifies the use of symbolic computation, as mentioned above. The computation of the PKF dynamics leading to the metric and to the aspect tensor formulation takes about 1 s of computation (Intel Core i7-7820HQ CPU at 2.90 GHz × 8).

In this example, the splitting strategy has not been considered to simplify the computation of the PKF dynamics. However, it can be done by considering the PKF dynamics for the advection and the diffusion , and computed separately, then merged to find the PKF dynamics of the full Burgers equation. For instance, Fig. 3 shows the PKF dynamics for the advection (first cell) and for the diffusion (second cell); the output can be traced back in Eq. (2), e.g., by the terms in κ for the diffusion.

Figure 3Illustration of the splitting strategy that can be used to compute the PKF dynamics and applied here for the Burgers equation: PKF dynamics of the Burgers equation can be obtained from the PKF dynamics of the advection (first cell) and of the diffusion (second cell).

Thanks to the symbolic computation using the expectation operator, as implemented

by the class Expectation, it is possible to handle terms such as

during the computation of the PKF dynamics.

The next section details how these terms are handled during the computation and

the closure issue they bring.

4.3 Comments on the computation of the VLATcov PKF dynamics and the closure issue

4.3.1 Computation of terms and their connection to the correlation function

An important point is that terms such as 𝔼[ε∂αε], e.g., in Eq. (21) are directly connected to the correlation function whose Taylor expansion is written as

However, during their computation, the VLATcov PKF dynamics make the terms appear, with , where for any α, ∂α denotes the derivative with respect to the multi-index , with αi denoting the derivative order with respect to the ith coordinate xi of the coordinate system and where the sum of all derivative order is denoted by . The issue it that these terms in are not directly connected to the Taylor expansion in Eq. (22).

The interesting property of these terms is that they can be reworded as

spatial derivatives of terms in the form 𝔼[ε∂γε].

More precisely, any term can be written

from derivative of terms in 𝔼[ε∂γε], where ,

and the term (see Appendix B for

the proof).

So, to replace any term in by

terms in 𝔼[ε∂γε], where ,

a substitution dictionary is computed in SymPKF and stored as the variable

subs_tree. The computation of this substitution dictionary is performed

thanks to a dynamical programming strategy. Thereafter, the integer

is called the order of the term .

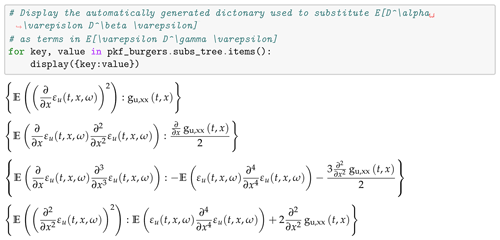

Figure 4 shows the substitution dictionary computed for

the Burgers equation. It appears that terms of order lower than 3 can be explicitly

written from the metric (or its derivatives), while terms of order larger than 4 cannot: this is known as the closure issue (Pannekoucke et al., 2018a).

Figure 4Substitution dictionary computed in SymPKF to replace terms such as by terms in 𝔼[ε∂γε], where .

The term , which features long-range correlations, cannot be related to the variance or to the metric and has to be closed. We detail this point in the next section.

4.3.2 Analytical and data-driven closure

A naïve closure for the PKF dynamics in Eq. (21) would be to replace the unknown term by zero. However, in the third equation that corresponds to the aspect tensor dynamics, the coefficient −3κ of the diffusion term being negative, it follows that the dynamics of su numerically explode at an exponential rate. Of course, because the system represents the uncertainty dynamics of the Burgers equation in Eq. (20) that is well posed, the parametric dynamics should not explode. Hence, the unknown term is crucial: it can balance the negative diffusion to stabilize the parametric dynamics.

For the Burgers equation, a closure for has been previously proposed (Pannekoucke et al., 2018a), given by

where the symbol ∼ is used to indicate that this is not an equality but a proposal of closure for the term in the left-hand side and that leads to the closed system

The closure in Eq. (23) results from a local Gaussian approximation of the correlation function. Previous numerical experiments have shown that this closure is well adapted to the Burgers equation (Pannekoucke et al., 2018a). But the approach that has been followed to find this closure is quite specific, and it would be interesting to design a general way to find such a closure.

In particular, it would be interesting to search for a generic way to design closures that leverage the symbolic computation, which could be plugged with the PKF dynamics

computed from SymPKF at a symbolic level. To do so, we propose an

empirical closure

that leverages a data-driven strategy to hybridize machine learning

with physics, as proposed by Pannekoucke and Fablet (2020) with their neural network

generator PDE-NetGen.

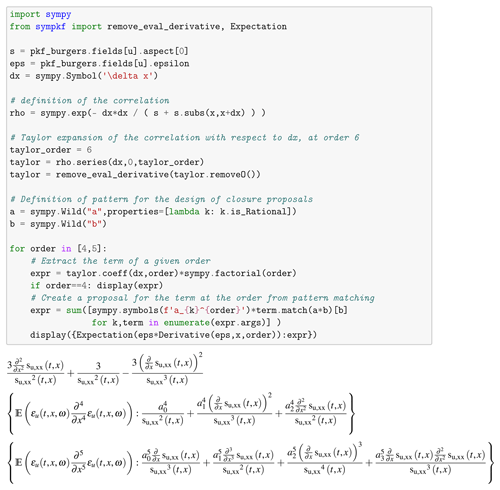

The construction of the proposal relies on the symbolic computation shown in Fig. 5.

Figure 5Example of a symbolic computation leading to a proposal for the closure of the unknown terms of order 4 and 5.

The first step is to consider an analytical approximation for the correlation function. For the illustration, we consider the local correlation function to be well approximated by the quasi-Gaussian function

Then, the second step is to perform the computation of the Taylor's expansion

of Eq. (22) at a symbolic level. This is done thanks to SymPy

with the method series applied to Eq. (25) for

δx near the value 0 and at a given order; e.g., for the illustration

expansion is computed as the sixth order in Fig. 5.

Then, the identification with the Taylor's expansion in Eq. (22) leads to the closure

While it looks like the closure in Eq. (23), the coefficients are not the same. But this suggests that the closure of can be expanded as

where represents three unknown reals. A data-driven strategy can be considered

to find an appropriate value of a4 from experiments. This has been

investigated by using the automatic generator of a neural network PDE-NetGen,

which bridges the gap between the physics and the machine learning

(Pannekoucke and Fablet, 2020) and with which the training has led to the value

. Since this proposal is deduced from

symbolic computation, it is easy to build some proposals for higher-order unknown terms

as is shown in Fig. 5 for the term

.

Whatever closure has been obtained in an analytical or an empirical way, it remains to compute the closed PKF dynamics to assess their performance. To do so a numerical implementation of the system of partial differential equations has to be introduced. As for the computation of the PKF dynamics, the design of a numerical code can be tedious, with a risk of introducing errors in the implementation due to the numerous terms occurring in the PKF dynamics. To facilitate the research on the PKF, SymPKF comes with a Python numerical code generator, which provides an end-to-end investigation of the PKF dynamics. This code generator is now detailed.

4.4 Automatic code generation for numerical simulations

While compiled language with appropriate optimization should be important for industrial applications, we chose to implement a pure Python code generator, which offers a simple research framework for exploring the design of PKF dynamics. It would have been possible to use a code generator already based on SymPy (see, e.g., Louboutin et al., 2019), but with such code generators being domain-specific, it was less adapted to the investigation of the PKF for arbitrary dynamics. Instead, we consider a finite-difference implementation of partial derivatives with respect to spatial coordinates. The default domain to perform the computation is the periodic unit square with the dimension of the number of spatial coordinates. The length of the domain can be specified along each direction. The domain is regularly discretized along each direction, while the number of grid points can be specified for each direction.

The finite difference takes the form of an operator ℱ that approximates any partial derivate at a second order of consistency: for any multi-index α, , where 𝒪 is Landau's big O notation. For any f, the notation means that is finite. The operator ℱ computed with respect to independent coordinates commutes, e.g., , where ∘ denotes the composition, but it does not commute for dependent coordinates, e.g., . The finite difference of the partial derivative with respect to the multi-index is computed sequentially, e.g., . The finite difference of order α with respect to a single spatial coordinate is the centered finite difference based on α+1 points.

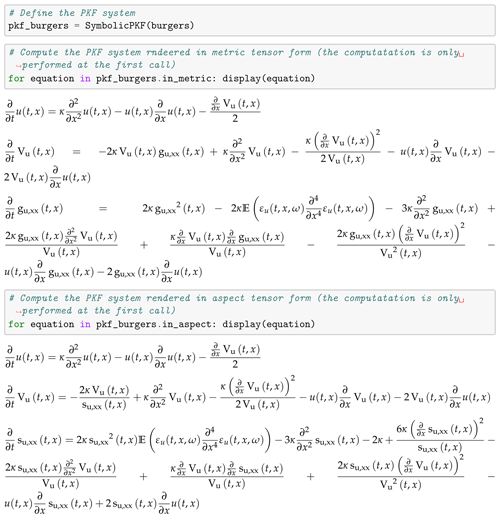

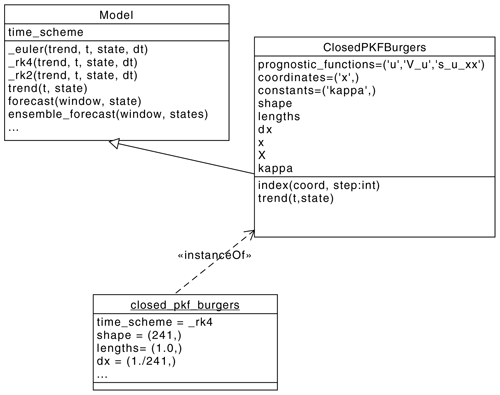

For instance, Fig. 6 shows how to close the PKF dynamics for

the Burgers equation following P18 and how to build a

code from an instance of the class sympkf.FDModelBuilder: it creates the class

ClosedPKFBurgers.

In this example, the code is rendered from templates thanks to Jinja3;

then it is executed at runtime.

Note that the code can also be written in an appropriate Python module for adapting the

code to a particular situation or to check the correctness of the generated code.

At the end, the instance closed_pkf_burgers of the class

ClosedPKFBurgers is created, raising a warning to indicate that the

value of constant κ has to be specified before performing a numerical simulation.

Note that it is possible to set the value of kappa as a keyword argument in the

class ClosedPKFBurgers.

Figure 6 also shows a sample of the generated code

with the implementation of the computation of the first-order partial derivative

∂xVu, which appears as a centered finite difference.

Then, the sample of code shows how the partial derivatives are used to compute the

trend of the system of partial differential equations in Eq. (24).

The numerical integration is handled through the inheritance

mechanism: the class ClosedPKFBurgers inherits the integration

time loop from the class sympkf.Model as described by the unified modeling language (UML) diagram shown in Fig. 7.

In particular, the class Model contains several time schemes, e.g., a fourth-order

Runge–Kutta scheme.

Note that in the present implementation of SymPKF, only explicit time schemes are considered,

but it could be possible to leverage the symbolic computation to implement other

schemes more adapted to a given PDE, e.g., an implicit scheme for the transport or

the diffusion or a high-order exponential time-differencing method (Kassam and Trefethen, 2005)

with which the linear and the nonlinear parts would be automatically determined

from the symbolic computation.

The details of the instance closed_pkf_burgers

of the class ClosedPKFBurgers make it appear that

the closed system in Eq. (24) will be integrated by using a RK4 time scheme

on the segment [0,D] (here D=1) with periodic boundaries and discretized by 241

points.

Figure 7UML diagram showing the inheritance mechanism implemented in

SymPKF: the class

ClosedPKFBurgers inherits from the class Model,

which implements several time schemes. Here, closed_pkf_burgers

is an instance of the class ClosedPKFBurgers.

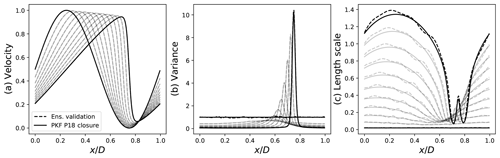

Thanks to the end-to-end framework proposed in SymPKF, it is possible to perform a numerical simulation based on the PKF dynamics in Eq. (23). To do so, we set κ=0.0025 and consider the simulation starting from the Gaussian distribution of mean with Umax=0.5 and of covariance matrix

where Vh=0.01Umax and . The time step of the fourth-order Runge–Kutta scheme is dt=0.002. The evolution predicted from the PKF is shown in Fig. 8 (solid lines). This simulation illustrates the time evolution of the mean (panel a) and of the variance (panel b); panel (c) represents the evolution of the correlation length scale defined from the aspect tensor as . Note that at time 0, the length scale field is L(x)=lh. For the illustrations, the variance (the length scale) is normalized by its initial value Vh (lh).

Figure 8Illustration of a numerical simulation of the PKF dynamics in Eq. (23) (solid line), with the mean (a), the variance (b), and the correlation length scale (c), which is defined from the component su,xx of the aspect tensor by . An ensemble-based validation of the PKF dynamics is shown as a dashed line. (Pannekoucke and Fablet, 2020, see their Fig. 7)

In order to show the skill of the PKF applied to the

Burgers equation, when using the closure of P18, an ensemble validation is now performed.

Note that the code generator of SymPKF can be used for arbitrary dynamics, e.g.,

the Burgers equation itself. Hence, a numerical code solving the Burgers equation is

rendered from its symbolic definition. Then an ensemble of 1600 forecasts is computed

starting from an ensemble of initial errors at time 0. The ensemble of initial

errors is sampled from the Gaussian distribution

of zero mean and covariance matrix .

Note that the ensemble forecasting implemented in SymPKF as the method

Model.ensemble_forecast (see Fig. 7)

leverages the multiprocessing tools of Python

to use the multiple cores of the CPU when present. On the computer used for the

simulation, the forecasts are performed in parallel on eight cores.

The ensemble estimation of the mean, the variance, and the length scale is shown in

Fig. 8 (dashed lines). Since the ensemble is finite, sampling

noise is visible, e.g., in the variance at the initial time that is not strictly equal

to Vh. In this simulation, it appears that

the PKF (solid line) coincides with the ensemble estimation (dashed lines), which shows

the ability of the PKF to predict the forecast error covariance dynamics.

Note that the notebook corresponding to the Burgers experiment is available in

the example directory of SymPKF.

While this example shows an illustration of SymPKF in a 1D domain, the package also applies in 2D and 3D domains, as presented now.

4.5 Illustration of dynamics in a 2D domain

In order to illustrate the ability of SymPKF to apply in a 2D or 3D domain, we consider the linear advection of a scalar field by a stationary velocity field , which reads as the partial differential equation

As for the Burgers equation, the definition of the dynamics relies on SymPy

(not shown but similar to the definition of the Burgers equation as given in

Fig. 1).

This leads to preprocessing the dynamics by creating the instance advection

of the class PDESystem,

which transforms the equation into a system of partial differential equations.

In particular, the procedure will diagnose the prognostic functions of dynamics, here the function c. Then it identifies the constant functions, which can depend on space but not on time: here, these are the components of the velocity (u, v). The process also identifies exogenous functions and constants, of which there are none here.

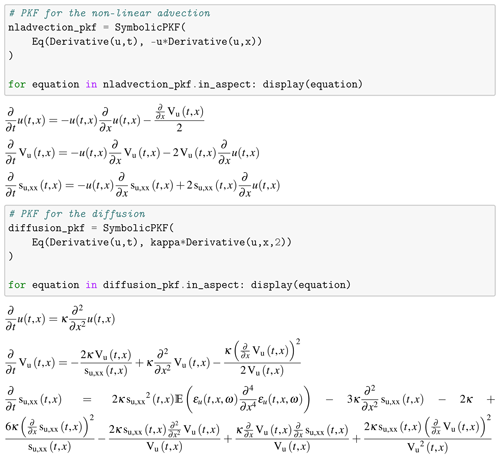

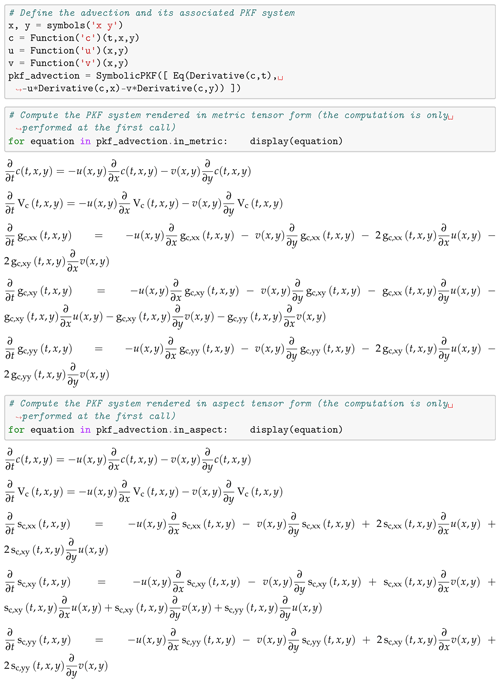

The calculation of the parametric dynamics is handled by the class SymbolicPKF

as shown in the first cell in Fig. 9.

The parametric dynamics are a property of the instance pkf_advection of the

class SymbolicPKF, and when it is called, the parametric dynamics are computed

once and for all.

The parametric dynamics formulated in terms of metric are first computed;

see the second cell.

For the 2D linear advection, the parametric dynamics are a system of

five partial differential equations, as is shown in the output of the second cell:

the dynamics of the ensemble average 𝔼[c], which outputs as c for the

sake of simplicity (first equation), the dynamics of the variance

(second equation), and the dynamics of the local metric tensor (last three equations).

In compact form, the dynamics are given by the system

which corresponds to the 2D extension of the 1D dynamics first found by Cohn (1993) (Pannekoucke et al., 2016) and validates the computation performed in SymPKF. Due to the linearity of the linear advection in Eq. (29), the ensemble average in Eq. (30a) is governed by the same dynamics as in Eq. (29). While both the variance in Eq. (30b) and the metric are advected by the flow, the metric is also deformed by the shear in Eq. (30c). This deformation more commonly appears in the dynamics written in aspect tensor form, which is given by

where Eq. (31c) is similar to the dynamics of the conformation tensor in viscoelastic flow (Bird and Wiest, 1995; Hameduddin et al., 2018).

Figure 9Sample of code and Jupyter notebook outputs: system of partial differential equations produced by SymPKF when applied to the linear advection in Eq. (29).

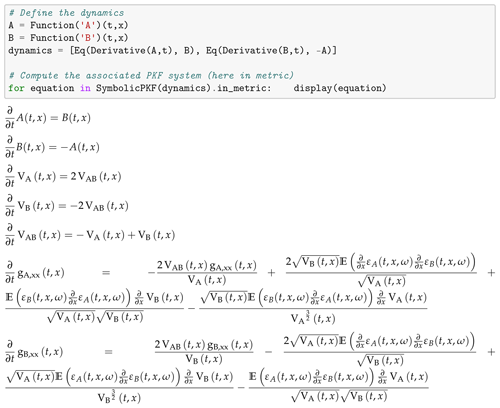

Figure 10Output of the computation by SymPKF of the PKF dynamics for the simple multivariate periodic chemical reaction, corresponding to the right-hand side of Eq. (32).

We do not introduce any numerical simulation of the PKF dynamics in Eq. (30) or Eq. (31), but interested readers are referred to the 2D numerical PKF assimilation cycles of Pannekoucke (2021b), which have been made thanks to SymPKF.

This example illustrates a 2D situation and shows the multidimensional capabilities of SymPKF. Similarly to the simulation conducted for the Burgers equation, it is possible to automatically generate a numerical code able to perform numerical simulations of the dynamics in Eq. (31) (not shown here). Hence, this 2D domain example showed the ability of SymPKF to apply in dimensions larger than 1D.

Before concluding, we would like to present a preliminary application of SymPKF in a multivariate situation.

4.6 Towards the PKF for multivariate dynamics

SymPKF can be used to compute the prediction of the variance and the anisotropy in a multivariate situation.

Note that one of the difficulties with the multivariate situation is that the number of equations increases linearly with the number of fields and the dimension of the domain; e.g., for a 1D (2D) domain and two multivariate physical fields, there are two ensemble-averaged fields, two variance fields, and two (six) metric fields. Of course this is no not a problem when using a computer algebra system as done in SymPKF.

To illustrate the multivariate situation, only a very simple example is introduced. Inspired from chemical transport models encountered in air quality, we consider the transport over a 1D domain of two chemical species, whose concentrations are denoted by A(t,x) and (B(t,x), advected by the wind u(x). For the sake of simplicity, the two species interact following periodic dynamics as defined by the coupled system

Thanks to the splitting strategy, the PKF dynamics due to the advection have already been detailed in the previous section (see Sect. 4.5), so we can focus on the chemical part of the dynamics, which is given by the processes on the right-hand side of Eq. (32). The PKF of the chemical part is computed thanks to SymPKF and shown in Fig. 10. This time, and as expected, multivariate statistics appear in the dynamics. Here, the dynamics of the cross-covariance VAB=𝔼[eAeB] are given by the fifth equation. The coupling brings up unknown terms, e.g., the term in the sixth equation of the output shown in Fig. 10. Note that by taking into account the multivariate situation with the dynamics of the cross-covariance, the multivariate PKF hybridizes the continuous nature of the multivariate fields with the matrix form in Eq. (8a), which corresponds here to the dynamics of the variances (VA,VB) and the cross-covariance VAB.

To go further, some research is still needed to explore the dynamics and the modeling of the multivariate cross-covariances. A possible direction is to take advantage of the multivariate covariance model based on the balance operator as often introduced in variational data assimilation (Derber and Bouttier, 1999; Ricci et al., 2005). Note that such multivariate covariance models have recently been considered for the design of the multivariate PKF analysis step (Pannekoucke, 2021b). Another way is to consider a data-driven strategy to learn the physics of the unknown terms from a training based on ensembles of forecasts (Pannekoucke and Fablet, 2020).

To conclude, this example shows the potential of SymPKF to tackle the multivariate situation. Moreover, the example also shows that SymPKF is able to perform the PKF computation for a system of partial differential equations. However, all the equations should be prognostic; SymPKF is not able to handle diagnostic equations.

This contribution introduced the package SymPKF that can be used to conduct the research on the parametric Kalman filter prediction step for covariance models parameterized by the variance and the anisotropy (VLATcov models). SymPKF provides an end-to-end framework: from the equations of dynamics to the development of a numerical code.

The package has been first introduced by considering the nonlinear diffusive advection dynamics in the Burgers equation. In particular, this example shows the ability of SymPKF to handle abstract terms, e.g., the unclosed terms formulated with the expectation operator. The expectation operator implemented in SymPKF is a key tool for the computation of the PKF dynamics. Moreover, we showed how to handle a closure and how to automatically render numerical codes.

For univariate situations, SymPKF applies in a 1D domain as well as in 2D and 3D domains. This has been shown by considering the computation of the PKF dynamics for the linear advection equation on a 2D domain.

A preliminary illustration with multivariate dynamics showed the potential of SymPKF to handle the dynamics of multivariate covariance. But this point has to be further investigated, and this constitutes the main perspective of development. Moreover, to perform a multivariate assimilation cycle with the PKF, the multivariate formulation of the PKF analysis state is needed. A first investigation of the multivariate PKF assimilation has been proposed by Pannekoucke (2021b).

In its present implementation, SymPKF is limited to computation with prognostic equations. It is not possible to consider dynamics based on diagnostic equations, while these are often encountered in atmospheric fluid dynamics, e.g., the geostrophic balance. This constitutes another topic of research development for the PKF, facilitated by the use of symbolic exploration.

Note that the expectation operator as introduced here can be used to compute Reynolds equations encountered in turbulence. This opens new perspectives for the use of SymPKF for other applications that could be interesting, especially for automatic code generation.

In this section we show that using a splitting strategy is possible for the design of the parametric dynamics. For this, it is enough to show that given dynamics written as

the dynamics of the error, the variance, the metric, and the aspect all write as a sum of trends depending on each process f1 and f2. We show this starting from the dynamics of the error.

Due to the linearity of the derivative operator, the TL dynamics resulting from Eq. (A1) are written as

where and denote the differential of the two functions, which can be written as the sum of two trends and , depending exclusively on f1 and f2, respectively. For the variance's dynamics, , substitution by Eq. (A2) leads to

where and depend exclusively on f1 and f2, respectively. Then the standard deviation dynamics, obtained by differentiating σ2=V as ,

read as the sum of two trends and , depending exclusively on f1 and f2, respectively. It results that the dynamics of the normalized error , deduced from the time derivative of e=σε, , read as

and also expand as the sum of two trends and , again depending exclusively on f1 and f2, respectively. For the metric terms , we deduce that the dynamics expand as

with and where each partial trend depends exclusively on f1 and f2, respectively. To this end, the dynamics of the aspect tensor s are deduced from Eq. (17), which expands as

where and only depend on f1 and f2, respectively.

To conclude, the computation of the parametric dynamics for Eq. (A1) can be performed from the parametric dynamics of and calculated separately, then merged together to obtain the dynamics of the variance in Eq. (A3), of the metric in Eq. (A6), and of the aspect tensors in Eq. (A7).

In this section we proof the property.

-

Property 1. Any term with can be related to the correlation expansion term 𝔼[ε∂γε], where , and the term .

-

Proof. The derivative with respect to a zero αi is the identity operator. Note that the multi-index forms a semi-group since for two multi-indexes α and β we can form the multi-index .

Now Property 1 can be proven considering the following recurrent process, when assuming that the property is true for all patterns of degree strictly lower than the degree .

Without loss of generality we assume αi>0 and denote , where δij is the Kronecker symbol (δii=1, ). From the formula

and from the commutativity of the expectation operator and the partial derivative with respect to the coordinate system, the result is that

considering the terms of the left-hand side. On one hand, we observe that the degree of the first term is decreasing to ; from the recurrence assumption, can be expanded as terms of the form 𝔼[ε∂γε]. On the other hand, the degree of the second term remains the same, , but with a shift of the derivative order. This shift of the order can be done again following the same process, leading after iterations to the term .

-

The SymPKF package is free and open-source. It is distributed under the CeCILL-B free software license. The source code is provided through a GitHub repository at https://github.com/opannekoucke/sympkf (last access: 22 March 2021). A snapshot of SymPKF is available at https://doi.org/10.5281/zenodo.4608514 (Pannekoucke, 2021c). The data used for the simulations presented here are generated at runtime when using the Jupyter notebooks.

OP introduced the symbolic computation of the PKF dynamics, and OP and PA imagined an end-to-end framework for the design of the PKF dynamics from the equation of the dynamics to the numerical simulation thanks to an automatic code generation. OP developed the codes.

The authors declare that they have no conflict of interest.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

We would like to thank Sylwester Arabas and the two anonymous referees for their fruitful comments, which have contributed to improving the paper. The UML class diagram has been generated from UMLlet (Auer et al., 2003).

This research has been supported by the French national program LEFE/INSU (Étude du filtre de KAlman PAramétrique, KAPA).

This paper was edited by Sylwester Arabas and reviewed by two anonymous referees.

Auer, M., Tschurtschenthaler, T., and Biffl, S.: A Flyweight UML Modelling Tool for Software Development in Heterogeneous Environments, in: Proceedings of the 29th Conference on EUROMICRO, EUROMICRO '03, pp. 267–272, IEEE Computer Society, Washington, DC, USA, 1–6 September 2003, https://doi.org/10.1109/EURMIC.2003.1231600, 2003. a

Berre, L.: Estimation of Synoptic and Mesoscale Forecast Error Covariances in a Limited-Area Model, Mon. Weather Rev., 128, 644–667, 2000. a

Bird, R. B. and Wiest, J. M.: Constitutive Equations for Polymeric Liquids, Annu. Rev. Fluid Mech., 27, 169–193, https://doi.org/10.1146/annurev.fl.27.010195.001125, 1995. a

Cohn, S.: Dynamics of Short-Term Univariate Forecast Error Covariances, Mon. Weather Rev., 121, 3123–3149, https://doi.org/10.1175/1520-0493(1993)121<3123:DOSTUF>2.0.CO;2, 1993. a, b

Courtier, P., Andersson, E., Pailleux, J., Vasiljević, W. H., Hamrud, D., Hollingsworth, M., Rabier, A. F., and Fisher, M.: The ECMWF implementation of three-dimensional variational assimilation (3D-Var). I: Formulation, Q. J. Roy. Meteor. Soc., 124, 1783–1807, 1998. a

Derber, J. and Bouttier, F.: A reformulation of the background error covariance in the ECMWF global data assimilation system, Tellus A, 51, 195–221, https://doi.org/10.3402/tellusa.v51i2.12316, 1999. a

Evensen, G.: Data Assimilation: The Ensemble Kalman Filter, Springer-Verlag Berlin Heidelberg, https://doi.org/10.1007/978-3-642-03711-5, 2009. a, b

Fisher, M.: Development of a simplified Kalman filter, Tech. Rep., 260, ECMWF, https://doi.org/10.21957/vz40cqca4, 1998. a

Fisher, M.: Generalized frames on the sphere, with application to background error covariance modelling, in: Proc. ECMWF Seminar on ”Recent developments in numerical methods for atmospheric and ocean modelling”, edited by ECMWF, pp. 87–102, Reading, UK, 2004. a

Hameduddin, I., Meneveau, C., Zaki, T. A., and Gayme, D. F.: Geometric decomposition of the conformation tensor in viscoelastic turbulence, J. Fluid Mech., 842, 395–427, https://doi.org/10.1017/jfm.2018.118, 2018. a

Jazwinski, A.: Stochastic Processes and Filtering Theory, Academic Press, New York, 1970. a

Kalman, R. E.: A New Approach to Linear Filtering and Prediction Problems, Journal of Basic Engineering, 82, 35–45, https://doi.org/10.1115/1.3662552, 1960. a

Kassam, A.-K. and Trefethen, L.: Fourth-order time-stepping for stiff PDEs, SIAM J. Sci. Comput., 26, 1214–1233, 2005. a

Lesieur, M.: Turbulence in Fluids, Springer, p. 558, ISBN 978-1-4020-6434-0, 2007. a

Louboutin, M., Lange, M., Luporini, F., Kukreja, N., Witte, P. A., Herrmann, F. J., Velesko, P., and Gorman, G. J.: Devito (v3.1.0): an embedded domain-specific language for finite differences and geophysical exploration, Geosci. Model Dev., 12, 1165–1187, https://doi.org/10.5194/gmd-12-1165-2019, 2019. a

Meurer, A., Smith, C. P., Paprocki, M., Čertík, O., Kirpichev, S. B., Rocklin, M., Kumar, A., Ivanov, S., Moore, J. K., Singh, S., Rathnayake, T., Vig, S., Granger, B. E., Muller, R. P., Bonazzi, F., Gupta, H., Vats, S., Johansson, F., Pedregosa, F., Curry, M. J., Terrel, A. R., Roučka, Š., Saboo, A., Fernando, I., Kulal, S., Cimrman, R., and Scopatz, A.: SymPy: symbolic computing in Python, PeerJ Computer Science, 3, e103, https://doi.org/10.7717/peerj-cs.103, 2017. a

Mirouze, I. and Weaver, A. T.: Representation of correlation functions in variational assimilation using an implicit diffusion operator, Q. J. Roy. Meteor. Soc., 136, 1421–1443, https://doi.org/10.1002/qj.643, 2010. a

Øksendal, B.: Stochastic Differential Equations, Springer Berlin Heidelberg, https://doi.org/10.1007/978-3-642-14394-6, 2003. a

Paciorek, C. and Schervish, M.: Nonstationary Covariance Functions for Gaussian Process Regression, Advances Neural Information Processing Systems, 16, 273–280, https://proceedings.neurips.cc/paper/2003/file/326a8c055c0d04f5b06544665d8bb3ea-Paper.pdf (last access: 22 September 2021), 2004. a

Pannekoucke, O.: CAC-PKF-M (v0.1): Computer-aided calculation of PKF dynamics with Maxima, Zenodo [code], https://doi.org/10.5281/ZENODO.4708316, 2021a. a

Pannekoucke, O.: An anisotropic formulation of the parametric Kalman filter assimilation, Tellus A, 73, 1–27, https://doi.org/10.1080/16000870.2021.1926660, 2021b. a, b, c, d, e, f

Pannekoucke, O.: SymPKF: a symbolic and computational toolbox for the design of parametric Kalman filter dynamics, Zenodo [code], https://doi.org/10.5281/zenodo.4608514, 2021c. a, b

Pannekoucke, O. and Fablet, R.: PDE-NetGen 1.0: from symbolic partial differential equation (PDE) representations of physical processes to trainable neural network representations, Geosci. Model Dev., 13, 3373–3382, https://doi.org/10.5194/gmd-13-3373-2020, 2020. a, b, c, d

Pannekoucke, O. and Massart, S.: Estimation of the local diffusion tensor and normalization for heterogeneous correlation modelling using a diffusion equation, Q. J. Roy. Meteor. Soc.., 134, 1425–1438, https://doi.org/10.1002/qj.288, 2008. a

Pannekoucke, O., Berre, L., and Desroziers, G.: Filtering properties of wavelets for local background-error correlations, Q. J. Roy. Meteor. Soc., 133, 363–379, 2007. a

Pannekoucke, O., Ricci, S., Barthelemy, S., Ménard, R., and Thual, O.: Parametric Kalman Filter for chemical transport model, Tellus, 68, 31547, https://doi.org/10.3402/tellusa.v68.31547, 2016. a, b, c, d, e

Pannekoucke, O., Bocquet, M., and Ménard, R.: Parametric covariance dynamics for the nonlinear diffusive Burgers equation, Nonlin. Processes Geophys., 25, 481–495, https://doi.org/10.5194/npg-25-481-2018, 2018a. a, b, c, d, e, f, g, h, i, j

Pannekoucke, O., Ricci, S., Barthelemy, S., Ménard, R., and Thual., O.: Parametric Kalman filter for chemical transport models – Corrigendum, Tellus A, 70, 1–2, https://doi.org/10.1080/16000870.2018.1472954, 2018b. a

Purser, R., Wu, W.-S., Parrish, D., and Roberts, N.: Numerical aspects of the application of recursive filters to variational statistical analysis. Part II: Spatially inhomogeneous and anisotropic general covariances, Mon. Weather Rev., 131, 1536–1548, https://doi.org/10.1175//2543.1, 2003. a

Ricci, S., Weaver, A. T., Vialard, J., and Rogel, P.: Incorporating State-Dependent Temperature–Salinity Constraints in the Background Error Covariance of Variational Ocean Data Assimilation, Mon. Weather Rev., 133, 317–338, https://doi.org/10.1175/mwr2872.1, 2005. a

Weaver, A. and Courtier, P.: Correlation modelling on the sphere using a generalized diffusion equation, Q. J. Roy. Meteor. Soc., 127, 1815–1846, https://doi.org/10.1002/qj.49712757518, 2001. a

https://github.com/opannekoucke/sympkf, last access: 22 September 2021.

https://maxima.sourceforge.io/, last access: 22 September 2021.

https://jinja.palletsprojects.com/en/2.11.x/, last access: 22 September 2021.

- Abstract

- Introduction

- Description of the PKF

- PKF for VLATcov models

- Symbolic computation of the PKF for VLATcov

- Conclusions

- Appendix A: Splitting for the computation of the parametric dynamics

- Appendix B: Computation of terms

- Code and data availability

- Author contributions

- Competing interests

- Disclaimer

- Acknowledgements

- Financial support

- Review statement

- References

- Abstract

- Introduction

- Description of the PKF

- PKF for VLATcov models

- Symbolic computation of the PKF for VLATcov

- Conclusions

- Appendix A: Splitting for the computation of the parametric dynamics

- Appendix B: Computation of terms

- Code and data availability

- Author contributions

- Competing interests

- Disclaimer

- Acknowledgements

- Financial support

- Review statement

- References