the Creative Commons Attribution 4.0 License.

ibicus: a new open-source Python package and comprehensive interface for statistical bias adjustment and evaluation in climate modelling (v1.0.1)

Fiona Raphaela Spuler

Jakob Benjamin Wessel

Edward Comyn-Platt

James Varndell

Chiara Cagnazzo

Statistical bias adjustment is commonly applied to climate models before using their results in impact studies. However, different methods based on a distributional mapping between observational and model data can change the simulated trends as well as the spatiotemporal and inter-variable consistency of the model, and are prone to misuse if not evaluated thoroughly. Despite the importance of these fundamental issues, researchers who apply bias adjustment currently do not have the tools at hand to compare different methods or evaluate the results sufficiently to detect possible distortions. Because of this, widespread practice in statistical bias adjustment is not aligned with recommendations from the academic literature. To address the practical issues impeding this, we introduce ibicus, an open-source Python package for the implementation of eight different peer-reviewed and widely used bias adjustment methods in a common framework and their comprehensive evaluation. The evaluation framework introduced in ibicus allows the user to analyse changes to the marginal, spatiotemporal and inter-variable structure of user-defined climate indices and distributional properties as well as any alteration of the climate change trend simulated in the model. Applying ibicus in a case study over the Mediterranean region using seven CMIP6 global circulation models, this study finds that the most appropriate bias adjustment method depends on the variable and impact studied, and that even methods that aim to preserve the climate change trend can modify it. These findings highlight the importance of use-case-specific selection of the method and the need for a rigorous evaluation of results when applying statistical bias adjustment.

Even though climate models have greatly improved in recent decades, simulations of present-day climate using both global and regional climate models still exhibit biases (Vautard et al., 2021). This means that there are systematic discrepancies between the statistics of the model output and the statistics of the observational distribution (Maraun, 2016). These discrepancies between the two distributions become especially relevant when using the output of climate models for local impact studies, which often require a focus on specific threshold metrics such as dry days; for example, when running hydrological (Hagemann et al., 2011) or crop models (Galmarini et al., 2019).

To account for and potentially correct these biases, it has become common practice to post-process climate models using statistical bias adjustment before using their output for impact studies. The idea behind statistical bias adjustment is to calibrate a statistical transfer function between the observed and climate model distributions of a chosen variable. A variety of statistical bias adjustment methods have been developed and published in recent years, ranging from simple adjustments to the mean to trend-preserving adjustments by quantile and further multivariate adjustments (Michelangeli et al., 2009; Li et al., 2010; Cannon et al., 2015; Vrac and Friederichs, 2015; Maraun, 2016; Switanek et al., 2017; Lange, 2019, and more). While this paper focuses primarily on methods that are applied at each grid cell individually, the use of multivariate methods is further discussed in Sect. 5.

Despite widespread use both within the scientific community (see, for example, IPCC, 2021, 2022) as well as by climate service providers and practitioners (see, for example, climate scenarios used by central banks across the world, NGFS, 2021), bias adjustment is known to suffer from fundamental issues. These issues have been highlighted, among others, by Maraun et al. (2017), who show that bias adjustment not only has limited potential to correct misrepresented physical processes in the climate model, but it can also introduce new artefacts and destroy the spatiotemporal and inter-variable consistency of the climate model. To avoid misuse, Maraun et al. (2017) recommend the evaluation of non-calibrated aspects, the development of process-informed bias adjustment methods based on an understanding of climate model errors, and the selection of climate models that represent the large-scale patterns and feedback relevant to the impact sufficiently well.

We argue that the remedies mentioned above are not common practice due to practical issues with statistical bias adjustment. As Ehret et al. (2012), Maraun (2016) and Casanueva et al. (2020) highlight, different bias adjustment approaches are appropriate for different use cases. However, methods that exist in the academic literature are published either only as papers, as bias-adjusted datasets (Dumitrescu et al., 2020; Mishra et al., 2020; Navarro-Racines et al., 2020; Xu et al., 2021, and more), or as stand-alone packages across multiple programming languages (Iturbide et al., 2019; Lange, 2021b; Vrac and Michelangeli, 2021; Cannon, 2023, and more), often without accompanying evaluation or evaluation frameworks. This leaves users who are not necessarily experts in these methods with limited options for choosing the bias adjustment method most appropriate for their use case and for evaluating the results sufficiently to detect issues.

In this paper, we introduce ibicus, an open-source Python package for the implementation, comparison and evaluation of bias adjustment for climate model outputs. The contribution of ibicus is twofold. Firstly, it introduces a unique unified interface to apply eight different peer-reviewed and widely used bias adjustment methodologies. The implemented methods include scaled distribution mapping (Switanek et al., 2017), Cumulative Distribution Function transform (CDFt) (Michelangeli et al., 2009), quantile delta mapping (Cannon et al., 2015) and ISIMIP3BASD (Lange, 2019). Secondly, it develops an evaluation framework for assessing distributional properties and user-defined climate indices (covering, but not limited to, the ETCCDI (Expert Team on Climate Change Detection and Indices) indices – Zhang et al., 2011) along not only marginal but also temporal, spatial and multivariate dimensions. Applying ibicus in a case study over the Mediterranean region, we find that the most appropriate method indeed depends on the variable and impact studied and that the evaluation of spatiotemporal metrics can identify issues with bias adjustment that would not be found when only marginal, i.e. calibrated, aspects are evaluated. Further, we find that even methods that aim to preserve the trend of the climate model can modify it, and that bias adjustment modifies the overall climate model ensemble spread.

The remainder of this paper is structured as follows. Section 2 gives an introduction to statistical bias correction methodologies, and Sect. 3 presents ibicus, covering both the details of the different bias adjustment methodologies and evaluation metrics implemented as well as the software design of the package. In Sect. 4, we present the results of the case study, and we draw conclusions in Sect. 5.

2.1 Statistical bias adjustment of climate models

Climate model biases can be defined as “systematic difference between a simulated climate statistic and the corresponding real-world climate statistic” (Maraun, 2016). These biases mostly stem from the imperfect representation of physical processes such as orographic drag, convection or land–atmosphere interactions. This leads to the incorrect representation of features such as the mean and variance of observed temperature or the spatial properties of extreme rainfall over a certain area.

Bias adjustment methods for climate models have their origin in methods developed for the post-processing of numerical weather prediction (NWP) models. The rationale is to calibrate a statistical transfer function between model simulations and observations over the historical period, which is then applied to the model simulation for the period of interest, often in the future. However, in contrast to NWP models, there is no direct correspondence between the time series of observations and the climate model in historical simulations. This means that typical regression-based approaches used for NWP are not applicable. Rather, properties of the statistical distribution of the two variables, such as the mean or quantiles, are mapped to each other when bias-adjusting climate models. Furthermore, the magnitude of the biases in climate models can be much larger, whereas NWP forecasts are tightly constrained by recent observations.

The most common approaches to the bias adjustment of climate models include a simple adjustment of the mean (linear scaling), a mapping of the two entire cumulative distribution functions (quantile mapping), or more advanced methods that also aim to preserve the trend projected in the climate model (such as CDFt or ISIMIP3BASD). Most of these methods, however, should rather be seen as method families that have some core characteristics – quantile mapping, for example, always implements a correction in all quantiles – as well as some interchangeable components, such as their handling of dry days, that they might share with other methods. The distinction between core characteristics and interchangeable components varies from method to method, as will be discussed in more detail in the description of the software package. An alternative approach, often termed the delta change method, adjusts the historical observations to incorporate the climate model trend (see, for example, Olsson et al., 2009; Willems and Vrac, 2011; Maraun, 2016). The practice of using bias adjustment methods to also downscale the climate model has been criticized in various publications (von Storch, 1999; Maraun, 2013; Switanek et al., 2022), so this paper focuses on the bias adjustment of climate models purely for the purpose of reducing biases at constant resolution.
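The two simplest approaches named above can be sketched for a single grid cell as follows. This is a minimal illustration using only NumPy, not the ibicus implementation: the function names are hypothetical, linear scaling is shown in its additive form, and the quantile mapping is a plain empirical variant without any treatment of dry days or extrapolation beyond the historical range.

```python
import numpy as np

def linear_scaling(obs, cm_hist, cm_future):
    """Additive mean adjustment: remove the historical mean bias from the model."""
    return cm_future - (np.mean(cm_hist) - np.mean(obs))

def empirical_quantile_mapping(obs, cm_hist, cm_future):
    """Map model values through the historical model CDF onto observed quantiles."""
    obs_sorted = np.sort(obs)
    hist_sorted = np.sort(cm_hist)
    # Plotting positions used as empirical non-exceedance probabilities
    p_hist = (np.arange(hist_sorted.size) + 0.5) / hist_sorted.size
    p_obs = (np.arange(obs_sorted.size) + 0.5) / obs_sorted.size
    # Probability of each model value under the historical model distribution
    p = np.interp(cm_future, hist_sorted, p_hist)
    # Observed value at the same probability (values outside the range are clamped)
    return np.interp(p, p_obs, obs_sorted)

# Synthetic temperature-like data (K): the model is too warm and too variable
rng = np.random.default_rng(0)
obs = rng.normal(280.0, 3.0, size=5000)
cm_hist = rng.normal(282.0, 4.5, size=5000)
cm_future = rng.normal(285.0, 4.5, size=5000)

ls_future = linear_scaling(obs, cm_hist, cm_future)
qm_future = empirical_quantile_mapping(obs, cm_hist, cm_future)
qm_hist = empirical_quantile_mapping(obs, cm_hist, cm_hist)
```

Applied to the historical model data itself, the quantile mapping reproduces the observed distribution, whereas linear scaling only matches the mean. Because `np.interp` clamps values outside the historical range, new extremes in the future period are not extrapolated here; how to handle them is one of the interchangeable components discussed above.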

The use of bias adjustment methods has become standard practice in academic climate impact studies and, increasingly, outside of academia (in national assessment reports or other climate services). For example, the ISIMIP3BASD methodology (Lange, 2019) is the only bias adjustment method implemented as a standard pre-processing step in the Inter-Sectoral Impact Model Intercomparison Project (ISIMIP) impact modelling framework that is used in the climate risk scenarios published by central banks (NGFS, 2021). However, applying statistical bias adjustment to climate models raises a number of important considerations and issues, which we categorize into fundamental and practical issues for the purpose of this paper.

2.2 Fundamental issues with statistical bias adjustment and evaluation

Climate model biases in statistics at the grid-cell level can stem from larger-scale biases of the model, such as biases in larger drivers such as El Niño, the lack of local feedback to these drivers or the misplacement of storm tracks in a region. However, univariate statistical bias adjustment methods are only as capable as their assumptions and input data and therefore correct only the impact these larger-scale biases have on the distribution of the variables at grid cell level (Maraun et al., 2017).

Univariate bias adjustment might also deteriorate the spatial, temporal or multivariate structure of the climate model. This is particularly problematic for compound events which have been argued to be of particularly high societal relevance due to their elevated impacts and neglect in standard extreme event evaluation approaches (Zscheischler et al., 2018, 2020). As this issue will not be detected in location-wise cross-validation approaches, it is necessary to evaluate bias-adjusted data with a particular focus on spatial, temporal and multi-variable components (Maraun et al., 2017; Maraun and Widmann, 2018a).

Furthermore, bias adjustment can modify the climate change trends simulated by the model, in particular those of threshold-sensitive climate indices such as dry days (Dosio, 2016; Casanueva et al., 2020). This holds in general for non-trend-preserving methods, but can also be the case for trend-preserving methods such as ISIMIP3BASD. Reasons for the modification of the trend by “trend-preserving” methods can be traced to the underlying statistical method and assumptions, such as the specific treatment of values between a variable bound and another threshold, or parametric and non-parametric distribution fits used in different stages of the bias adjustment.

To justify any kind of trend modification by the bias adjustment method, it is necessary to make an assumption about how present-day bias relates to biases in the future period (Christensen et al., 2008). This can be based on the assumption that climate model biases are stationary in time (Gobiet et al., 2015): for example, based on this assumption, Ivanov et al. (2018) developed a theoretical model to justify future trend modifications by the bias adjustment method based on present-day biases. However, Chen et al. (2015) and Hui et al. (2019) show that while temperature biases can be approximated as stationary, precipitation biases cannot. Similarly, Van de Velde et al. (2022) show a clear impact of non-stationarity on bias adjustment, in particular for precipitation. Trend-preserving bias adjustment methods, on the other hand, assume, at least to some degree, that the raw climate model trend constitutes our best available knowledge for subsequent impact studies. In line with this, Maraun et al. (2017) argue that the modification of the trend of a climate model based purely on statistical reasoning is not defendable and should rather be based on physical process understanding and reasoning about the large-scale drivers involved.

Some options are available to cope with these fundamental issues in impact studies. The first is to discard climate models that misrepresent large-scale circulation relevant to the problem at hand. The second is to conduct a careful evaluation of multivariate aspects of the bias-adjusted climate model to identify potential artefacts and discard methods that introduce these before proceeding with the impact study. The third is to develop process-informed multivariate bias adjustment methods that, for example, include large-scale covariates such as weather patterns (Maraun et al., 2017; Verfaillie et al., 2017; Manzanas and Gutiérrez, 2019). These more elaborate methods require even more careful case-by-case model selection and evaluation.

2.3 Practical issues with bias adjustment and the availability of open-source software

Attempts to address these fundamental issues and improve the application of bias adjustment are impeded by a number of practical issues.

The first practical issue is that the comparison of different bias adjustment methods and their adaptation to a specific application is not easily achieved by a user. This is because the code to implement different methodologies is published, if at all, across different software packages and languages, impeding interoperability. Users also have the option of downloading already bias-adjusted datasets, which improves ease of access but does not allow for any custom adjustments (Dobor et al., 2015; Famien et al., 2018; Dumitrescu et al., 2020; Xu et al., 2021). The second practical issue is that available software packages are not accompanied by evaluation methods beyond marginal aspects. As the evaluation of bias adjustment is not straightforward, this makes it difficult for a user to detect artefacts or identify improper results by assessing multivariate properties of the climate model, rendering bias adjustment prone to misuse (Maraun et al., 2017).

These practical issues jeopardize the current implementation of statistical bias adjustment. Addressing these issues does not solve the more fundamental issues but can improve common practice and enhance transparency.

An example of good practice is the MIdAS package, which introduces a new bias adjustment method that is compared to other methods in Berg et al. (2022). However, even though the package is, in principle, extendable, other methods are not implemented in practice and an adjustable evaluation framework has not been developed.

To address the practical issues outlined in the previous section, we introduce ibicus, an open-source Python package for the bias adjustment of climate models and evaluation thereof. ibicus introduces a unified, modular software architecture within which eight state-of-the-art peer-reviewed and widely used bias-adjustment methodologies are implemented. This enables researchers to apply different methods through a common interface and to modify components of the methods, such as the treatment of dry days, based on the region and impact of interest. The code implementation of each methodology is based on the cited academic publication as well as available accompanying code that was re-written and modularized to fit the developed interface. Consistency with the original implementation was ensured through rigorous testing and correspondence with the authors of the different methodologies. The package provides an extensive evaluation framework covering spatial, temporal and multivariate aspects. As part of this, we develop a generalized threshold metric class that allows the user to evaluate frequently used climate metrics, such as frost days or dry days, as well as to define their own threshold metrics targeted to the specific impact study. The spatiotemporal evaluation of threshold metrics enables the user to detect artefacts and evaluate compound events before and after bias adjustment. ibicus is designed to be flexible and easy to use, facilitating both the “off the shelf” use of methods as well as their customization and allowing its use in notebook environments all the way up to integration with high-performance computing (HPC) packages such as Dask (Rocklin, 2015). This section provides an overview of the key features of ibicus. A more complete user guide and tutorials can be found on the documentation page of the package.

3.1 Data input

Bias adjustment requires observational data and climate model simulations for the same historical period, as well as climate model simulations for the (future) period of interest. ibicus operates on a numerical level, taking three-dimensional (time, latitude, longitude) NumPy arrays as input and returning arrays of the same shape and type. This choice was made to ensure interoperability with different geoscientific computing packages such as Xarray (Hoyer and Hamman, 2017) or Iris (Met Office, 2010) as well as operation in different computing environments and integration with Dask (Rocklin, 2015).

3.2 Bias adjustment

ibicus represents each bias adjustment methodology as a class which inherits generic functionalities from a base “debiaser” class, such as the common initialization interface and a function applying the debiaser in parallel over a grid of locations. The base debiaser class makes the package easily extendable, as a new bias adjustment methodology can inherit these generic functionalities and requires only the specification of a function which applies the methodology for a given location (“apply_location”).

Each debiaser object is initialized separately for each variable and requires several class parameters. These are specific to the bias adjustment methodology and include parameters such as the distribution used for a parametric fit or the type of trend preservation applied. For a number of methodology-variable combinations, default settings exist that are described in the documentation. Default settings are labelled “experimental” if they have not been published in the peer-reviewed literature but are proposed by the package authors after extensive evaluation. Users can, and are encouraged to, modify these parameters even when default settings exist in order to adapt the method to a given use case. For example, if precipitation extremes are of special interest, the user could choose to modify the parametric fit for this variable, as the gamma distribution – an often-used default – might underestimate precipitation extremes (Katz et al., 2002). After initialization, each debiaser object has an “apply” method to apply the bias adjustment to climate model data. This takes three-dimensional NumPy arrays of observations and of historical and future climate model simulations as input, together with optional date information for running windows. The apply function can be run in parallel to speed up execution and integrates with Dask for deployment in HPC environments.
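The design described above, in which a base class handles the loop over grid cells and each methodology only supplies a location-wise function, can be illustrated with the following sketch. It is not ibicus source code: the class names `SketchDebiaser` and `SketchLinearScaling` are hypothetical, the loop is serial rather than parallel, and only the method names `apply` and `apply_location` are taken from the text.

```python
from abc import ABC, abstractmethod

import numpy as np

class SketchDebiaser(ABC):
    """Hypothetical base class: iterates over the grid, delegates to apply_location."""

    @abstractmethod
    def apply_location(self, obs, cm_hist, cm_future):
        """Adjust the 1-D future time series at a single grid cell."""

    def apply(self, obs, cm_hist, cm_future):
        # Inputs are (time, lat, lon). The time lengths may differ between
        # inputs, but the spatial grid must be identical.
        if not (obs.shape[1:] == cm_hist.shape[1:] == cm_future.shape[1:]):
            raise ValueError("obs, cm_hist and cm_future must share the same grid")
        out = np.empty_like(cm_future)
        for i, j in np.ndindex(*cm_future.shape[1:]):
            out[:, i, j] = self.apply_location(
                obs[:, i, j], cm_hist[:, i, j], cm_future[:, i, j]
            )
        return out

class SketchLinearScaling(SketchDebiaser):
    """A new method only needs to define the location-wise adjustment."""

    def apply_location(self, obs, cm_hist, cm_future):
        return cm_future - (cm_hist.mean() - obs.mean())

# Synthetic inputs on a 2 x 3 grid with different period lengths
rng = np.random.default_rng(1)
obs = rng.normal(280.0, 3.0, size=(100, 2, 3))
cm_hist = rng.normal(283.0, 3.0, size=(120, 2, 3))
cm_future = rng.normal(286.0, 3.0, size=(90, 2, 3))

adjusted = SketchLinearScaling().apply(obs, cm_hist, cm_future)
```

Keeping the grid iteration in the base class means that parallelization or chunked execution can be changed in one place without touching the individual methods.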

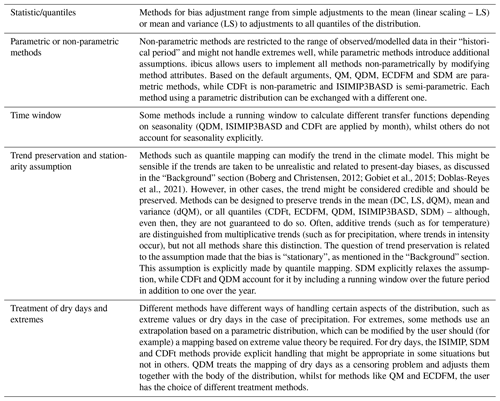

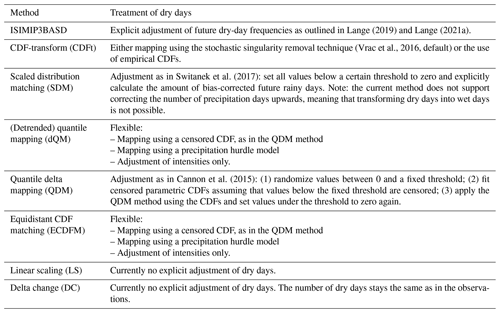

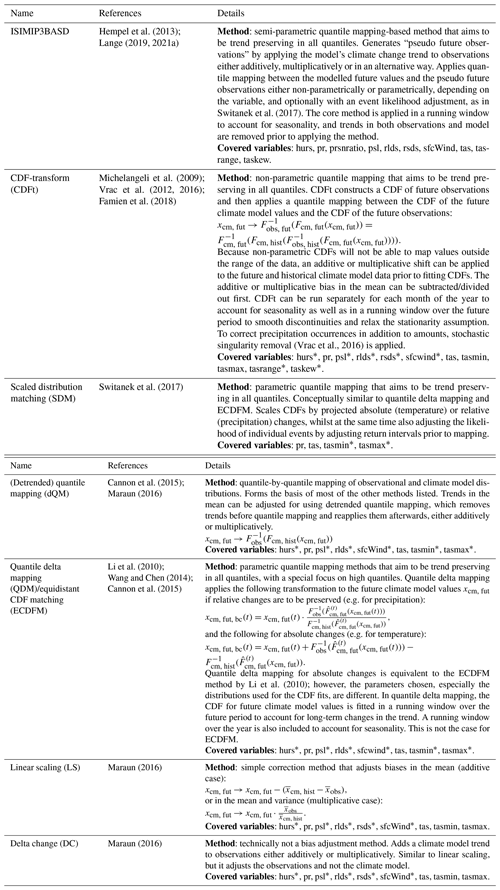

Table A1 provides an overview of the methodologies currently implemented in ibicus; these were chosen to cover some of the most widely used bias adjustment methods in current practice. These methods are based on different assumptions, making them suitable for different applications. For example, ISIMIP3BASD is a parametric trend-preserving quantile mapping which might be appropriate if the variable approximately follows a known parametric structure and the climate change trend in all quantiles is judged to be realistic. If these assumptions are not valid, a non-parametric method such as CDFt or a non-trend-preserving method such as quantile mapping might be more appropriate. Alternatively, if changes in extremes are of special interest, a parametric method based on extreme value theory might be adequate. As noted in the “Background” section, different methods should rather be viewed as method families that have core characteristics and interchangeable components in their ibicus implementation. An example of this is the treatment of dry days in different methods: while the treatment of dry days is entangled in the method design for SDM, CDFt and ISIMIP and cannot be changed by the user, QM methods allow for different treatments of dry days depending on the use case. Table 1 highlights further methodological considerations differentiating different method families. A detailed description of each individual component of each method is beyond the scope of this paper but can be found in the detailed ibicus software documentation provided online.

3.3 Evaluation

Physical consistency in space, time or between variables is not ensured when using univariate bias adjustment methods. Furthermore, the trend of the climate model might be modified, and the bias of some statistics or impact metrics might be increased through some bias adjustment methods – even if it is removed in certain quantiles. The ibicus evaluation framework offers a collection of tools to identify these issues and compare the performance of different bias adjustment methods for variables of interest, building on previous efforts such as the VALUE evaluation framework for statistical downscaling (Maraun et al., 2019).

3.3.1 Metrics and design

The evaluation framework consists of two components: (1) the evaluation of bias adjustment over a validation/testing period that enables a comparison of the bias-adjusted model with observations, and (2) the analysis of trend preservation between the validation period and the future period or between any two future periods. The latter component is necessary as bias adjustment methods can modify the climate change trend, even when using methods that are designed to preserve it, as demonstrated by the case study in Sect. 4. In the absence of evidence to the contrary, trend-preserving methods should be preferred, as statistical bias adjustment methods usually do not have an underlying physical reasoning for modifying a particular trend.
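The second component can be sketched as a comparison of the change in a statistic between two periods before and after bias adjustment. The snippet below is an illustration under assumptions: the function names are hypothetical, the trend is defined additively (future minus validation), and the "adjustments" are simple synthetic transformations rather than real bias adjustment methods.

```python
import numpy as np

def additive_trend(validation, future, statistic=np.mean):
    """Change in a statistic between two periods at each grid cell (time is axis 0)."""
    return statistic(future, axis=0) - statistic(validation, axis=0)

def trend_bias(raw_val, raw_fut, adj_val, adj_fut, statistic=np.mean):
    """Trend of the adjusted model minus trend of the raw model, per grid cell."""
    return (additive_trend(adj_val, adj_fut, statistic)
            - additive_trend(raw_val, raw_fut, statistic))

rng = np.random.default_rng(2)
raw_val = rng.normal(283.0, 3.0, size=(500, 2, 2))
raw_fut = rng.normal(287.0, 3.0, size=(500, 2, 2))

# A constant shift leaves the trend untouched
shift_bias = trend_bias(raw_val, raw_fut, raw_val - 2.0, raw_fut - 2.0)

# A multiplicative rescaling shrinks the trend by the same factor
raw_trend = additive_trend(raw_val, raw_fut)
scale_bias = trend_bias(raw_val, raw_fut, 0.8 * raw_val + 5.0, 0.8 * raw_fut + 5.0)
```

In this synthetic case the shift gives a trend bias of zero, while the rescaling removes 20 % of the raw trend at every grid cell. Passing a different `statistic`, for example `lambda x, axis: np.quantile(x, 0.95, axis=axis)`, applies the same comparison to a quantile.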

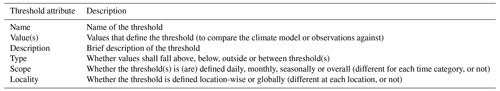

In both components of the evaluation framework, there are two kinds of metrics that can be evaluated using ibicus: statistical properties and threshold metrics. Statistical properties allow the user to compare properties of the observational distribution and the climate model distribution – such as the mean or different quantiles – before and after bias adjustment. Threshold-based climate indicators are often of special interest for climate impact studies – for example, frost days by time of year could be of interest for agricultural or biodiversity impacts – and are therefore metrics for which the success of bias adjustment is particularly desirable (Dosio et al., 2012; Dosio, 2016). A number of threshold metrics are implemented by default in the package. A new threshold metric can be specified by the user along the dimensions in Table 2. Accumulations such as monthly total precipitation can also be estimated. Using these definitions, the evaluation module covers but is not limited to the indices developed by the ETCCDI (Zhang et al., 2011), which are used in many application studies.
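A threshold metric of this kind reduces to counting the time steps on which a variable lies below or above a fixed value. The following sketch shows the idea with NumPy only; the function name and the `kind` argument are hypothetical and do not reproduce the ibicus threshold metric class, and precipitation is assumed to be given in mm per day.

```python
import numpy as np

def days_per_year(data, threshold, kind, years):
    """Mean annual number of days below or above a threshold at each grid cell.

    data: (time, lat, lon) array of daily values
    years: 1-D array giving the calendar year of each time step
    """
    if kind == "lower":
        hits = data < threshold
    elif kind == "higher":
        hits = data > threshold
    else:
        raise ValueError("kind must be 'lower' or 'higher'")
    return hits.sum(axis=0) / np.unique(years).size

# Two "years" of four days each at two grid cells (precipitation in mm per day)
years = np.array([2000] * 4 + [2001] * 4)
pr = np.empty((8, 1, 2))
pr[:, 0, 0] = [0.0, 0.5, 2.0, 12.0, 0.0, 0.0, 3.0, 15.0]
pr[:, 0, 1] = [5.0, 5.0, 5.0, 5.0, 0.2, 11.0, 11.0, 11.0]

dry_days = days_per_year(pr, 1.0, "lower", years)        # days with pr < 1 mm
very_wet_days = days_per_year(pr, 10.0, "higher", years)  # days with pr > 10 mm
```

The location-wise bias of such a metric is then the difference between the value computed from the (bias-adjusted) model and the value computed from observations over the same period.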

Since location-wise evaluation is not sufficient to decide whether a bias adjustment method is fit for the use case, the module offers the functionality to evaluate location-wise, as well as spatiotemporal and multivariate metrics both in terms of threshold metrics and statistical properties. Table 3 gives an overview of the implemented methods.

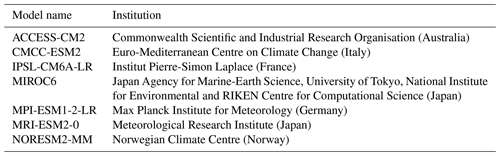

Table 3. Overview of evaluation categories implemented in ibicus. CDF is cumulative distribution function.

Finally, different bias adjustment methods rely on different assumptions, such as that certain parametric distributions provide suitable fits. The evaluation framework includes functions to assess the fits of parametric distributions and the seasonality of the variable to help the user make decisions on how to customize the bias adjustment method to their application.

We demonstrate the comparison and evaluation of different bias adjustment methods by applying ibicus over the Mediterranean. Rather than conducting a comprehensive evaluation for a single use case, our aim is to highlight the use-case dependency of the method choice more broadly and hence the necessity of targeted evaluation beyond marginal aspects. We, therefore, choose to limit this case study to the bias adjustment of global climate models, even though specific impact studies often, but not always (IPCC, 2021), use higher-resolution models over the target region.

4.1 Data and methods

We consider the Mediterranean region between 35–45° N latitude and from 18° W to 45° E longitude and apply bias adjustment to seven Coupled Model Intercomparison Project Phase 6 (CMIP6) models selected based on their use in previous studies in the Mediterranean region (Zappa and Shepherd, 2017; Babaousmail et al., 2022). The chosen models are ACCESS-CM2, CMCC-ESM2, IPSL-CM6A-LR, MIROC6, MPI-ESM1-2-LR, MRI-ESM2-0 and NORESM2-MM. Table B1 in the Appendix provides more details on these models. We use the historical runs as well as the SSP5-8.5 experiments. We compare four widely used bias adjustment methods that are implemented in ibicus: ISIMIP3BASD (Lange, 2019; applied, amongst others, by Jägermeyr et al., 2021, and Pokhrel et al., 2021, as well as in impact models run under the ISIMIP framework); scaled distribution mapping (Switanek et al., 2017; applied, amongst others, as a pre-processing step to assess changes in high-impact weather events over the UK in Hanlon et al., 2021); and quantile mapping (applied in impact studies such as Babaousmail et al., 2022) and linear scaling, which are used as reference methods. These four methods are applied to daily total precipitation (pr) and daily minimum near-surface air temperature (tasmin), chosen to cover two different types of variables (bounded vs. unbounded, different distributions, etc.) that are both highly relevant for many impact studies. The bias adjustment methods are used with their ibicus default settings for both variables (for more details, see Table A1 and the software documentation). This means that the ISIMIP and SDM methods provide an explicit adjustment of dry day frequencies, whilst for QM they are treated as censored and the method based on Cannon et al. (2015) is applied; LS provides no explicit adjustment, scaling all values. We use ERA5 reanalysis data (Hersbach et al., 2020) as an observational reference; these are conservatively regridded to match the resolution of the selected climate models.
The historical data ranges from 1 January 1959 to 31 December 2005, with the data from 1 January 1959 to 31 December 1989 serving as the historical/reference period and used as a training dataset and the subsequent period (1 January 1990 to 31 December 2005) used for validation purposes. Bias adjustment is applied to the validation period as well as the future period: 1 January 2080 to 31 December 2100.
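The split of the historical data into a training and a validation period can be expressed as two boolean masks over the time axis. This is a sketch under assumptions: it uses a standard Gregorian calendar via NumPy `datetime64`, whereas some climate models use other calendars (for example without leap days), and the data array is a placeholder.

```python
import numpy as np

# Daily time axis from 1 January 1959 to 31 December 2005 (end is exclusive)
time = np.arange("1959-01-01", "2006-01-01", dtype="datetime64[D]")

# Training (reference) period and validation period as described in the text
train = (time >= np.datetime64("1959-01-01")) & (time <= np.datetime64("1989-12-31"))
valid = (time >= np.datetime64("1990-01-01")) & (time <= np.datetime64("2005-12-31"))

# Placeholder (time, lat, lon) array standing in for historical model output
cm_hist_all = np.zeros((time.size, 2, 3))
cm_train = cm_hist_all[train]
cm_valid = cm_hist_all[valid]
```

The same masks would be applied to the observational reference, so that the bias adjustment is calibrated on the training period and evaluated against observations that were not used for calibration.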

We demonstrate four bespoke impact metrics that are related to daily minimum temperature and daily total precipitation and defined using the ibicus threshold metrics class:

- tasmin < 10 °C (283.15 K), which was chosen based on Droulia and Charalampopoulos (2022), who estimate climate impacts on viniculture, noting that grapevines are in their optimal photosynthesis zone above 10 °C.

- tasmin greater than the seasonal 95th percentile of the daily minimum temperature in each grid cell during the historical period (1959–1989). This can be an indicator of the impacts of heatwaves (Raei et al., 2018).

- Dry days (daily precipitation < 1 mm) and very wet days (daily precipitation > 10 mm) as two ETCCDI indices.
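Unlike the fixed thresholds, the seasonal percentile metric uses a threshold that differs by grid cell and season and is derived from a reference period. A possible way to compute it is sketched below; the function names, the definition of seasons as DJF, MAM, JJA and SON, and the use of linear interpolation in `np.quantile` are assumptions for illustration, not a description of the ibicus implementation.

```python
import numpy as np

def season_index(months):
    """Map calendar months 1-12 to seasons: 0 = DJF, 1 = MAM, 2 = JJA, 3 = SON."""
    return (np.asarray(months) % 12) // 3

def seasonal_exceedance_days(data, months, ref_data, ref_months, q=0.95):
    """Count days on which data exceeds the per-cell, per-season quantile of ref_data.

    data, ref_data: (time, lat, lon) arrays; months, ref_months: month of each time step.
    """
    seasons = season_index(months)
    ref_seasons = season_index(ref_months)
    count = np.zeros(data.shape[1:], dtype=int)
    for s in range(4):
        in_ref = ref_seasons == s
        in_data = seasons == s
        if not in_ref.any() or not in_data.any():
            continue
        # Threshold field of shape (lat, lon) for this season
        threshold = np.quantile(ref_data[in_ref], q, axis=0)
        count += (data[in_data] > threshold).sum(axis=0)
    return count

# Synthetic check: 1200 time steps with months cycling 1..12, so 300 per season
rng = np.random.default_rng(3)
months = np.tile(np.arange(1, 13), 100)
tasmin = rng.normal(283.0, 5.0, size=(1200, 2, 2))

# Evaluating the reference data against its own seasonal 95th percentile
self_count = seasonal_exceedance_days(tasmin, months, tasmin, months)
```

Dividing the returned count by the number of years in the evaluated period gives the metric in days per year, the unit used in the figures.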

4.2 Results

4.2.1 Evaluation of the location-wise bias in the validation period

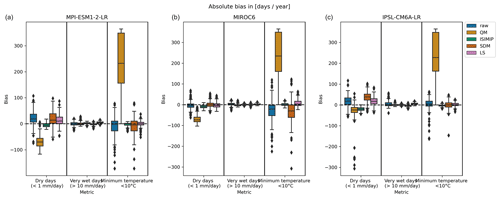

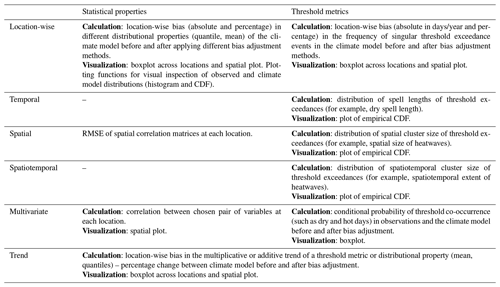

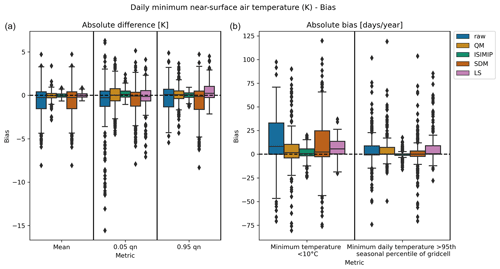

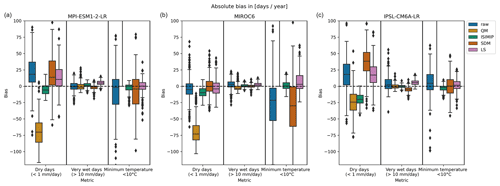

Figures 1–3 show the marginal bias of the climate model with respect to observations over the validation period before and after bias adjustment across locations in the study area.

Figure 1. Distribution across locations of the marginal minimum daily temperature bias of the ACCESS-CM2 climate model before bias adjustment (raw) and after applying the ISIMIP3BASD bias adjustment method (ISIMIP), quantile mapping (QM), scaled distribution mapping (SDM) and linear scaling (LS). (a) The distribution of the absolute bias (in K) in the mean and 0.05 and 0.95 quantiles. (b) The distribution of the absolute bias in the threshold metrics: minimum daily temperature below 10 °C and minimum daily temperature above the 95th seasonal percentile defined for this grid cell, both in units of d yr⁻¹. Bias (location-wise) is defined as the difference between the metric for the (bias-adjusted) climate model in the validation period and the metric for the observational data in the validation period (in each grid cell, metrics were calculated in the temporal dimension). This figure shows the standard ibicus output distribution of location-wise bias for a set of specified statistics and threshold metrics. The boxplot shows the median and the first and third quartiles as a box, the outer range (defined as Q1 − 1.5×IQR to Q3 + 1.5×IQR) as whiskers, and any points beyond this as diamonds.

Figure 2. Distribution of marginal bias across locations before bias adjustment (raw) and after applying the ISIMIP3BASD bias adjustment method (ISIMIP), quantile mapping (QM), scaled distribution mapping (SDM) and linear scaling (LS). Three climate models (MPI-ESM1-2-LR, MIROC6 and IPSL-CM6A-LR) and three threshold metrics – minimum daily temperature below 10 °C, dry days (defined as a total precipitation below 1 mm) and very wet days (defined as a total precipitation above 10 mm) – are evaluated. The bias in minimum temperature < 10 °C of the climate models after applying quantile mapping is particularly large, exceeding 300 %. For improved readability of the plot, we have omitted this bias adjustment–metric combination here, but we show the full plot in the Appendix.

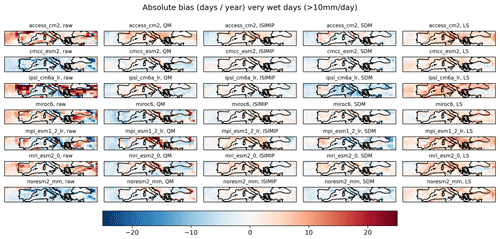

Figure 3. Spatial plot of marginal absolute bias in very wet days (defined as total precipitation above 10 mm), given in d yr−1. Results are shown for seven climate models (ACCESS-CM2, CMCC-ESM2, IPSL-CM6A-LR, MIROC6, MPI-ESM1-2-LR, MRI-ESM2-0 and NORESM2-MM) before bias adjustment (raw) and after applying the ISIMIP3BASD bias adjustment method (ISIMIP), quantile mapping (QM), scaled distribution mapping (SDM) and linear scaling (LS).

We find that most methods reduce but do not eliminate the marginal bias in the mean (shown for the ACCESS-CM2 model and minimum daily temperature in Fig. 1), although the size of the reduction varies: ISIMIP and linear scaling achieve larger reductions in the bias than quantile mapping or scaled distribution mapping. This result also holds for extremal quantiles and threshold metrics, and in certain instances we even observe a slight inflation of the raw climate model bias for both quantile mapping and scaled distribution mapping.
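The location-wise bias described in the caption of Fig. 1 can be illustrated with a minimal sketch. It uses only the Python standard library and toy numbers, and it is not the ibicus implementation: the function names `days_per_year_below` and `location_wise_bias` are hypothetical, and the data are invented for illustration. Each metric is computed along the temporal dimension of a grid cell, and the bias is the model metric minus the observational metric.

```python
from statistics import mean


def days_per_year_below(series, threshold, n_years):
    """Average number of days per year with values below the threshold."""
    return sum(1 for value in series if value < threshold) / n_years


def location_wise_bias(metric, obs_by_cell, cm_by_cell):
    """Per grid cell: metric of the model minus metric of the observations."""
    return [metric(cm) - metric(obs) for obs, cm in zip(obs_by_cell, cm_by_cell)]


# Toy validation-period data (K): two grid cells, four days, one "year".
# These values are invented for illustration only.
obs = [[280.0, 282.0, 284.0, 286.0], [270.0, 272.0, 274.0, 276.0]]
cm = [[282.0, 284.0, 286.0, 288.0], [268.0, 270.0, 272.0, 274.0]]

# Statistic computed along the temporal dimension of each cell.
mean_bias = location_wise_bias(mean, obs, cm)


# Threshold metric: days per year with minimum temperature below 10 degC (283.15 K).
def cold_days(series):
    return days_per_year_below(series, threshold=283.15, n_years=1)


cold_day_bias = location_wise_bias(cold_days, obs, cm)

print(mean_bias)      # bias in the mean (K), one value per grid cell
print(cold_day_bias)  # bias in cold days (d per year), one value per grid cell
```

The resulting lists hold one bias value per grid cell, which is the quantity whose distribution across locations is summarised in the boxplots.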

Furthermore, in Fig. 2 we see that the success of a bias adjustment method depends on the use case, meaning the variable, metric and climate model studied. While scaled distribution mapping somewhat reduces the median bias in dry days for two of the climate models, it inflates the bias in dry days for the third. Conversely, the method reduces the bias in the minimum temperature threshold metric for the IPSL-CM6A-LR model but inflates the bias in this metric for the MIROC6 model. ISIMIP3BASD, in turn, reduces the bias in dry days for the MPI-ESM1-2-LR model but increases it for the MIROC6 model. Quantile mapping performs reasonably well for the wet-day metric but quite badly for the dry-day and minimum temperature metrics. These differences in the performance of bias adjustment methods can be due to the assumptions made (a parametric distribution fit might not replicate the correct tail behaviour), the design of the method (whether it is tailored to a specific variable or whether event frequency adjustment is implemented) and the physical source of the bias in the climate model.

When investigating the spatial distribution of the bias (Fig. 3), we find that certain methods can homogenize the spatial pattern of the bias across climate models. For example, linear scaling (LS) shifts climate models to an overestimation of very wet days in similar regions, even for models like NORESM2-MM which previously underestimated these days. In other cases, methods can perform well in certain regions but not in others. Quantile mapping (QM) seems to perform reasonably well over the Iberian peninsula but has difficulties over Italy, especially for MPI-ESM1-2-LR, where a strong underestimation is shifted into a strong overestimation. This highlights the importance of investigating the spatial distribution of the marginal bias, as this varies across the different regions in the Mediterranean.

4.2.2 Evaluation of the bias in spatiotemporal characteristics in the validation period

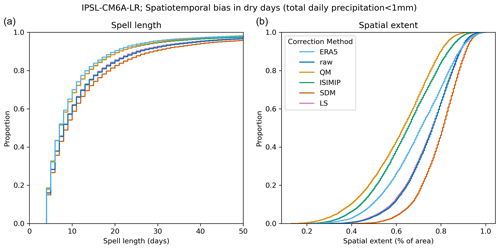

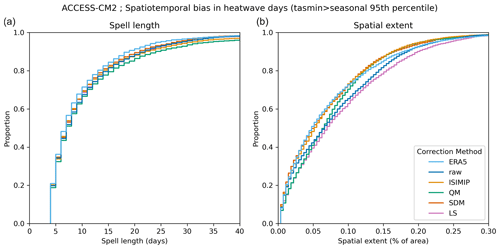

Moving on to the investigation of spatiotemporal characteristics, Figs. 4 and 5 show the cumulative distributions of spell length and spatial extent for the dry-day and minimum temperature heatwave days metrics, respectively. The plots depict the standard visualization output that the ibicus software package produces for this type of evaluation.

Figure 4. Cumulative distribution functions of spell length (a) and spatial extent of dry days (b). The spell length is defined as the length of a temporal sequence longer than 3 d during which a single grid cell exceeds the specified threshold. The spatial extent is defined as the fraction of cells exceeding the specified threshold, given that a single cell exceeds the threshold. These plots show the cumulative distribution functions of individual spell lengths and spatial extents at single points in time across the entire Mediterranean region in the observational data (ERA5) and in the climate model IPSL-CM6A-LR before bias adjustment (raw) and after applying the ISIMIP3BASD bias adjustment method (ISIMIP), quantile mapping (QM), scaled distribution mapping (SDM) and linear scaling (LS).

Figure 5. As Fig. 4, but investigating the threshold of minimum daily temperature exceeding its 95th seasonal percentile defined per grid cell for the climate model ACCESS-CM2.

The spatiotemporal characteristics investigated exhibit biases between the reanalysis data and raw climate model output. For example, it is ∼1.6 times more likely for a dry spell to exceed 20 d in the raw climate model IPSL-CM6A-LR compared to the reanalysis data.
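To make the definitions of spell length and spatial extent concrete, the following sketch computes both from boolean exceedance series and then compares exceedance probabilities, in the spirit of the ratio quoted above. It is a simplified illustration with invented data, not the ibicus code; the function names are hypothetical, and reading "longer than 3 d" as a strict inequality is an assumption.

```python
from itertools import groupby


def spell_lengths(exceeds, min_length=3):
    """Lengths of runs of consecutive True values that are longer than min_length."""
    lengths = []
    for flag, run in groupby(exceeds):
        n = len(list(run))
        if flag and n > min_length:  # strict inequality is an assumption
            lengths.append(n)
    return lengths


def spatial_extents(exceeds_by_time):
    """Fraction of exceeding cells at each time step with at least one exceedance."""
    return [sum(cells) / len(cells) for cells in exceeds_by_time if any(cells)]


def exceedance_probability(values, level):
    """Empirical probability that a value is larger than level (1 - CDF)."""
    return sum(1 for v in values if v > level) / len(values)


# One grid cell over 13 days: True marks a day that meets the threshold condition.
dry = [True] * 5 + [False] + [True] * 2 + [False] + [True] * 4
cell_spells = spell_lengths(dry)

# Three time steps over four grid cells.
extent = spatial_extents([
    [True, False, False, True],
    [False, False, False, False],
    [True, True, True, True],
])

# Hypothetical pooled spell lengths (days) for a raw model and for observations.
raw_spells = [4, 25, 30, 6]
obs_spells = [4, 5, 25, 7]
ratio = exceedance_probability(raw_spells, 20) / exceedance_probability(obs_spells, 20)

print(cell_spells, extent, ratio)
```

Pooling the spell lengths and spatial extents over all grid cells and time steps and taking their empirical CDFs would give curves of the kind shown in Figs. 4 and 5.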

We find that the bias in these spatiotemporal metrics can be reduced with some bias adjustment methods: for example, ISIMIP3BASD reduces the spell length bias for dry days, and scaled distribution mapping reduces the bias in both spell length and spatial extent for minimum temperature heatwave days. However, this result is again inconsistent across methods and variables, and different bias adjustment methods frequently appear to increase the spatiotemporal bias: scaled distribution mapping increases the bias in spell length and spatial extent of dry days, as do quantile mapping and ISIMIP3BASD when investigating the spatial extent.

These results are to some extent expected, as the selected methods are univariate methods, meaning they are calibrated location-wise and do not incorporate spatiotemporal information. However, the results highlight the need to evaluate how bias adjustment changes spatiotemporal characteristics, as these are often implicitly used in downstream impact studies.

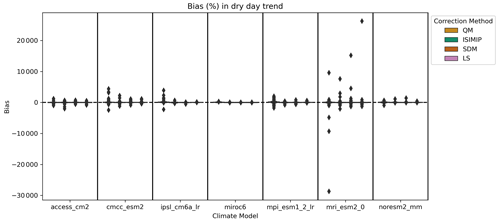

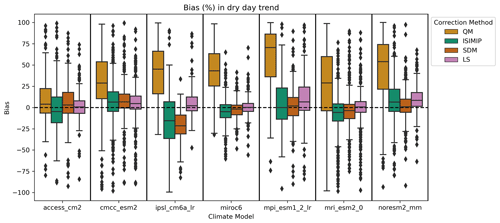

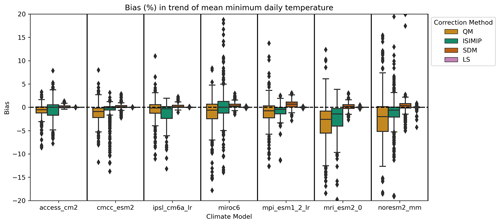

4.2.3 Evaluation of the climate change trend before and after bias adjustment

As mentioned in the “Background” section, the modification of the climate change signal through bias adjustment has been reported and discussed in various publications and has stimulated the development of methods that aim to preserve the climate signal.

Figure 6. Distribution of location-wise change in the additive climate trend in dry days introduced through the bias adjustment method, obtained by computing the additive trend between the validation period and the future period in both the raw and the bias-adjusted models and taking the percentage difference between the two trends. The magnitude of the raw projected change in dry days depends on the climate model and, across different locations, lies between 10 fewer and 30 more dry days on average per year.

Figure 7. As Fig. 6, but for the trend in mean minimum daily temperature. The magnitude of the raw projected change in mean minimum daily temperature again depends on the climate model and, across different locations, lies between 2 and 5 K.

In the analysis of the dry day trend, shown in Fig. 6, we find that a non-trend-preserving method such as quantile mapping significantly alters the climate change trend. The axes in Fig. 6 were limited to ±100 % for the sake of readability; however, a limited number of data points show even larger biases after applying quantile mapping. The unrestricted version of this plot can be found in the Appendix.
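The trend change plotted in Fig. 6 can be sketched as follows for a single location. This is a minimal illustration under stated assumptions: the function names are hypothetical, the numbers are invented, and normalising by the raw trend (including its sign) is one plausible reading of "percentage difference between the two trends" rather than a confirmed detail of the implementation.

```python
def additive_trend(validation_value, future_value):
    """Additive trend of a metric between the validation and the future period."""
    return future_value - validation_value


def trend_change_percent(raw_validation, raw_future, adj_validation, adj_future):
    """Percentage change of the additive trend introduced by bias adjustment.

    Assumption: the difference between the two trends is expressed
    relative to the trend of the raw model.
    """
    raw_trend = additive_trend(raw_validation, raw_future)
    adj_trend = additive_trend(adj_validation, adj_future)
    if raw_trend == 0:
        raise ValueError("raw trend is zero; the percentage change is undefined")
    return 100.0 * (adj_trend - raw_trend) / raw_trend


# Hypothetical dry days per year at one location.
change = trend_change_percent(
    raw_validation=100.0, raw_future=120.0,  # raw trend: +20 d per year
    adj_validation=90.0, adj_future=125.0,   # adjusted trend: +35 d per year
)
print(change)
```

Locations with a raw trend close to zero yield very large percentages under this definition, which is one reason why individual points can fall far outside a ±100 % axis range.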

We also find that methods that aim to preserve the trend, such as ISIMIP3BASD or scaled distribution mapping, modify it by up to 100 % at some locations. For the ISIMIP method, this is presumably due to the fact that the “future observations” through which the trend preservation is implemented are mapped using empirical CDFs, whereas the bias adjustment itself is parametric. It has been argued that the normal distribution for temperature or the gamma distribution for precipitation might not adequately capture the tail behaviour of these variables (Katz et al., 2002; Nogaj et al., 2006; Sippel et al., 2015; Naveau et al., 2016). This is particularly relevant when investigating the trends of high or low quantiles as well as threshold metrics that do not sit at the centre of the distribution. Additionally, for bounded variables such as precipitation, the frequency beyond two outer thresholds is adjusted separately in the ISIMIP3BASD methodology, which could lead to the change in the dry day trend shown in Fig. 6.

We find a much smaller change in the trend of the mean minimum daily temperature across methods, shown in Fig. 7. In fact, linear scaling barely modifies the trend at all, which is to be expected since the method only subtracts the mean bias from the future and the validation period, based on the strong assumption that the bias affects the mean only and is stationary over time.
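Why linear scaling leaves the additive trend in the mean untouched can be checked with a short sketch. It assumes the purely additive form of linear scaling described above, with a constant offset estimated in a calibration period; the function name and all values are hypothetical. Because the same offset is subtracted from the validation and the future series, it cancels when the two means are differenced.

```python
from statistics import mean


def linear_scaling(obs, cm_hist, cm_target):
    """Additive linear scaling: subtract the calibration-period mean bias."""
    mean_bias = mean(cm_hist) - mean(obs)
    return [value - mean_bias for value in cm_target]


# Invented temperature-like values for illustration only.
obs = [10.0, 12.0, 14.0]            # observations, calibration period
cm_hist = [13.0, 15.0, 17.0]        # model, calibration period
cm_validation = [14.0, 15.0, 16.0]  # model, validation period
cm_future = [17.0, 18.0, 19.0]      # model, future period

raw_trend = mean(cm_future) - mean(cm_validation)
adjusted_trend = (
    mean(linear_scaling(obs, cm_hist, cm_future))
    - mean(linear_scaling(obs, cm_hist, cm_validation))
)
print(raw_trend, adjusted_trend)
```

The cancellation holds for the mean only; trends in quantiles or threshold metrics are not guaranteed to be preserved by a constant shift.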

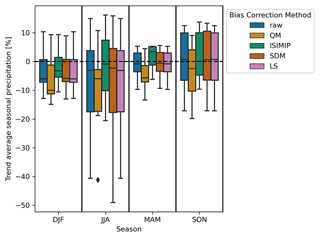

4.2.4 Evaluation of the variation in the climate model ensemble before and after bias adjustment

Figure 8 shows that the climate model ensemble spread of the trend in mean seasonal precipitation is modified in different ways by different bias adjustment methods, which is in line with previous findings in the literature (Maraun and Widmann, 2018b; Lafferty and Sriver, 2023). Interestingly, the variation (often interpreted as the uncertainty range) is not necessarily narrowed, as has been postulated by some authors (Ehret et al., 2012); it is even extended and shifted in some cases. From this finding, it follows that the range of uncertainty and possible worst-case scenarios analysed in subsequent impact studies might depend on the bias adjustment method used to pre-process the climate model.

Figure 8. Ensemble spread of seven selected climate models (ACCESS-CM2, CMCC-ESM2, IPSL-CM6A-LR, MIROC6, MPI-ESM1-2-LR, MRI-ESM2-0 and NORESM2-MM), showing the trend in average seasonal precipitation between the validation and future period without applying bias adjustment (raw) and after applying ISIMIP3BASD, quantile mapping and scaled distribution mapping.
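A change in ensemble spread of the kind shown in Fig. 8 can be quantified by comparing the range of the trend across models before and after adjustment. The sketch below uses hypothetical model names and invented trend values; it only illustrates how a spread can widen and shift at the same time.

```python
def ensemble_range(trend_by_model):
    """Lowest and highest trend across the models of an ensemble."""
    values = list(trend_by_model.values())
    return min(values), max(values)


# Invented precipitation trends (mm per day) for three hypothetical models.
raw = {"model_a": -0.25, "model_b": 0.0, "model_c": 0.25}
adjusted = {"model_a": -0.5, "model_b": -0.25, "model_c": 0.5}

raw_low, raw_high = ensemble_range(raw)
adj_low, adj_high = ensemble_range(adjusted)

raw_spread = raw_high - raw_low
adj_spread = adj_high - adj_low
print(raw_spread, adj_spread)
```

In this toy case the adjusted ensemble is wider than the raw one and its lower bound moves further than its upper bound, so both the width and the position of the range change.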

The interpretation of this shift in uncertainty is related to the previously discussed questions on trend preservation, namely whether the change in the climate model trend through a statistical bias adjustment method is justified or not. This issue was mentioned by Maraun and Widmann (2018b), who argued that a minimum requirement to justify a change in the uncertainty spread through bias adjustment should be a critical evaluation of the validity of the results and the assumptions of the underlying statistical model. Given the finding in the previous section, namely that the best bias adjustment method depends on the variable, region and impact studied, it follows that indiscriminately applying a bias adjustment method across regions and variables without evaluation can shift the spread of the results of subsequent impact studies in an unjustified manner.

Statistical bias adjustment is a useful method when working with climate models to understand future climate impacts. However, there are fundamental as well as practical issues in how bias adjustment is currently used both in academic research and by practitioners in the private and government sector. One practical issue impeding good practice is the availability of open-source software to compare different bias adjustment methods and evaluate non-calibrated aspects.

This paper demonstrates that the success of a bias adjustment method depends on the variable and impact studied, and that bias adjustment should therefore be evaluated for and adapted to the region and use case at hand. Depending on the climate model and variable of interest, different methods can reduce or increase biases by a large range and can impair spatiotemporal coherence or leave it relatively unaffected. This is non-systematic across bias adjustment methods, climate models and variables/metrics of interest. Furthermore, we find that even trend-preserving methods can modify the trend in statistical properties and climate indices, and each bias adjustment method changes the climate model ensemble spread slightly differently.

With the Python package ibicus, we aim to provide a resource to address some of these practical issues. For one, the evaluation framework allows users to evaluate non-calibrated aspects and identify potential issues in bias-adjusted data. Second, the common interface developed for different bias adjustment methods allows for a relatively easy comparison between different methods and the selection of the method most appropriate for the use case. Finally, the ibicus software implementation modularizes certain components of different methods, such as the treatment of dry days. This allows the user to examine the impact of detailed methodological choices for their application and select the most appropriate option, which has so far not been possible due to the dispersed implementations of different methodologies.

So far, the package has implemented univariate bias adjustment methods, meaning that the bias adjustment is calibrated and applied to each grid point separately. Multivariate bias adjustment methods that correct spatial, temporal or inter-variable structures next to marginal aspects have been published by, amongst others, Piani and Haerter (2012); Vrac and Friederichs (2015); Sippel et al. (2016); Cannon (2016, 2018); Vrac (2018); François et al. (2020). We have so far chosen to focus on univariate methods, as the need for careful model selection and evaluation becomes even more pertinent when using multivariate methods (Maraun et al., 2017; François et al., 2020; Van de Velde et al., 2022). Our aim was therefore to first establish a robust workflow and evaluation for widely used univariate methods, thereby addressing one of the key practical issues impeding more rigorous evaluation.

The package remains under active development and maintenance, and we would like to invite collaboration from the community to extend and further develop its functionalities. Aside from adding further methods, the modularity of the different methods can be further improved, enabling even more flexible use of different methods by the user. In addition, a systematic review of different available software tools and methods for bias adjustment could be of use to the community. Furthermore, the implications of bias adjustment for the outcomes of impact modelling studies could be examined based on the evaluation and comparison of different methods within the ibicus package. The ibicus evaluation can also be used as a starting point to further examine physical sources of climate model biases, which can inform improvements in the representation of physical processes within the climate model itself. Also, both the choice of validation period and the choice of observational dataset and the uncertainty therein have been shown to affect the results of bias adjustment (Casanueva et al., 2020). While this is not explicitly explored in this publication or package, the evaluation tools available through ibicus enable the investigation of these issues.

Finally, the results presented in this paper raise a number of important broader questions regarding the use and future development of bias adjustment methods. The finding that different bias adjustment methods lead to very different results raises the question of whether bias adjustment should be seen as an additional source of uncertainty, as suggested by Lafferty and Sriver (2023). However, the paper also shows that different methods perform better or worse depending on the region and variable studied, which constitutes a clear reason to evaluate and select the bias adjustment method targeted to the use case rather than viewing different methods as another source of uncertainty. This then raises questions about whether choosing a “standard” bias adjustment method to render results comparable is valid and useful in many applications. These questions can serve as a starting point both to reconsider the application of bias adjustment and to initiate the future development of methods suitable to address the different fundamental issues facing bias adjustment. Existing research avenues include approaches to post-process the entire climate model ensemble (Chandler, 2013; Rougier et al., 2013; Sansom et al., 2021) or conditioning the bias adjustment on specific relevant large-scale processes (Maraun et al., 2017; Verfaillie et al., 2017; Manzanas and Gutiérrez, 2019).

Table A1. Bias adjustment methods currently implemented in ibicus, with variables covered and details of their functioning. Here $x$ refers to observations $x_{\text{obs}}$ or climate model values during the historical/reference period $x_{\text{cm, hist}}$ or future period $x_{\text{cm, fut}}$, and $F$ refers to a cumulative distribution function (CDF) fitted either parametrically or non-parametrically. Covered variables indicate variables for which the bias adjustment method currently has default settings, and climatic variables with an * symbol are variables with experimental default settings. Those are settings that were not published in the peer-reviewed literature but were found to give good performance. The references given are the references used for the implementation of the method in the ibicus package.

The current version of ibicus is available from PyPI (https://pypi.org/project/ibicus/, last access: 5 February 2024) under the Apache License version 2.0, and is described in detail in https://ibicus.readthedocs.io/en/latest/ (last access: 5 February 2024). The source code is available via GitHub (https://github.com/ecmwf-projects/ibicus, last access: 5 February 2024). The exact version of ibicus used to produce the results used in this paper is archived on Zenodo (https://doi.org/10.5281/zenodo.8101898, Spuler and Wessel, 2023), as are input data and scripts to run ibicus and produce the plots for all the simulations presented in this paper (https://doi.org/10.5281/zenodo.8101842, Wessel and Spuler, 2023). The ERA5 and CMIP6 data used were accessed via the Copernicus Climate Data Store under the Copernicus licence: https://doi.org/10.24381/cds.143582cf (Hersbach et al., 2017) and https://doi.org/10.24381/cds.c866074c (Copernicus Climate Change Service, Climate Data Store, 2021) respectively.

JBW and FRS led the conceptualization, software development, methodology and formal analysis of the software package and case study, and they prepared and wrote the original draft of the paper, contributing equally to all steps outlined. ECP and CC led the project administration and supervision of the project, provided resources, and contributed to the writing of the paper by reviewing and editing. JV and ECP also contributed to the software development, and JV supported the project administration.

The contact author has declared that none of the authors has any competing interests.

Publisher’s note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

We thank the researchers who developed the different bias adjustment methods implemented in this package. In particular, we thank Matthew Switanek for responding to and engaging with our questions during the development period of the package and the discussion during EGU 2023, and Matthias Mengel for discussions on the results of the case study. Fiona Spuler and Jakob Wessel thank their PhD supervisors Marlene Kretschmer and Ted Shepherd, and Frank Kwasniok and Chris Ferro, for helpful discussions on the case study; and they thank Esperanza Cuartero for her support during the ECMWF Summer of Weather Code programme. We also thank Benjamin Aslan, Simonetta Spavieri, Cynthia Rodenkirchen and Philipp Breul for helpful input on the naming of the package. We acknowledge the World Climate Research Programme, which, through its Working Group on Coupled Modelling, coordinated and promoted CMIP6. We thank ECMWF for developing the ERA5 reanalysis.

Jakob Wessel is supported and funded by the Engineering and Physical Sciences Research Council, grant/award number: 2696930. Fiona Spuler is supported and funded by the University of Reading. The development of this package was funded under the ECMWF Summer of Weather Code 2022 Scholarship for Early-Career Researchers.

This paper was edited by Fabien Maussion and reviewed by Jorn Van de Velde and one anonymous referee.

Babaousmail, H., Hou, R., Ayugi, B., Sian, K. T. C. L. K., Ojara, M., Mumo, R., Chehbouni, A., and Ongoma, V.: Future changes in mean and extreme precipitation over the Mediterranean and Sahara regions using bias-corrected CMIP6 models, Int. J. Climatol., 42, 7280–7297, https://doi.org/10.1002/joc.7644, 2022.

Berg, P., Bosshard, T., Yang, W., and Zimmermann, K.: MIdASv0.2.1 – MultI-scale bias AdjuStment, Geosci. Model Dev., 15, 6165–6180, https://doi.org/10.5194/gmd-15-6165-2022, 2022.

Boberg, F. and Christensen, J. H.: Overestimation of Mediterranean summer temperature projections due to model deficiencies, Nat. Clim. Change, 2, 433–436, https://doi.org/10.1038/nclimate1454, 2012.

Cannon, A. J.: Multivariate Bias Correction of Climate Model Output: Matching Marginal Distributions and Intervariable Dependence Structure, J. Climate, 29, 7045–7064, https://doi.org/10.1175/JCLI-D-15-0679.1, 2016.

Cannon, A. J.: Multivariate quantile mapping bias correction: an N-dimensional probability density function transform for climate model simulations of multiple variables, Clim. Dynam., 50, 31–49, https://doi.org/10.1007/s00382-017-3580-6, 2018.

Cannon, A. J.: MBC: Multivariate Bias Correction of Climate Model Outputs, CRAN [code], https://cran.r-project.org/web/packages/MBC/index.html (last access: 5 February 2024), 2023.

Cannon, A. J., Sobie, S. R., and Murdock, T. Q.: Bias Correction of GCM Precipitation by Quantile Mapping: How Well Do Methods Preserve Changes in Quantiles and Extremes?, J. Climate, 28, 6938–6959, https://doi.org/10.1175/JCLI-D-14-00754.1, 2015.

Casanueva, A., Herrera, S., Iturbide, M., Lange, S., Jury, M., Dosio, A., Maraun, D., and Gutiérrez, J. M.: Testing bias adjustment methods for regional climate change applications under observational uncertainty and resolution mismatch, Atmos. Sci. Lett., 21, e978, https://doi.org/10.1002/asl.978, 2020.

Chandler, R. E.: Exploiting strength, discounting weakness: Combining information from multiple climate simulators, Philos. T. Roy. Soc. A, 371, 20120388, https://doi.org/10.1098/rsta.2012.0388, 2013.

Chen, J., Brissette, F. P., and Lucas-Picher, P.: Assessing the limits of bias-correcting climate model outputs for climate change impact studies, J. Geophys. Res.-Atmos., 120, 1123–1136, https://doi.org/10.1002/2014JD022635, 2015.

Christensen, J. H., Boberg, F., Christensen, O. B., and Lucas-Picher, P.: On the need for bias correction of regional climate change projections of temperature and precipitation, Geophys. Res. Lett., 35, L20709, https://doi.org/10.1029/2008GL035694, 2008.

Copernicus Climate Change Service, Climate Data Store: CMIP6 climate projections, Copernicus Climate Change Service (C3S) Climate Data Store (CDS) [data set], https://doi.org/10.24381/cds.c866074c, 2021.

Doblas-Reyes, F. J., Sörensson, A. A., Almazroui, M., Dosio, A., Gutowski, W. J., Haarsma, R., Hamdi, R., Hewitson, B., Kwon, W.-T., Lamptey, B. L., Maraun, D., Stephenson, T. S., Takayabu, I., Terray, L., Turner, A., and Zuo, Z.: Linking Global to Regional Climate Change, in: Climate Change 2021: The Physical Science Basis. Contribution of Working Group I to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change, edited by: Masson-Delmotte, V., Zhai, P., Pirani, A., et al., Cambridge University Press, Cambridge, United Kingdom and New York, NY, USA, 1363–1512, https://doi.org/10.1017/9781009157896.012, 2021.

Dobor, L., Barcza, Z., Hlásny, T., Havasi, Á., Horvath, F., Ittzés, P., and Bartholy, J.: Bridging the gap between climate models and impact studies: the FORESEE Database, Geosci. Data J., 2, 1–11, https://doi.org/10.1002/gdj3.22, 2015.

Dosio, A.: Projections of climate change indices of temperature and precipitation from an ensemble of bias-adjusted high-resolution EURO-CORDEX regional climate models, J. Geophys. Res.-Atmos., 121, 5488–5511, https://doi.org/10.1002/2015JD024411, 2016.

Dosio, A., Paruolo, P., and Rojas, R.: Bias correction of the ENSEMBLES high resolution climate change projections for use by impact models: Analysis of the climate change signal, J. Geophys. Res.-Atmos., 117, D17110, https://doi.org/10.1029/2012JD017968, 2012.

Droulia, F. and Charalampopoulos, I.: A Review on the Observed Climate Change in Europe and Its Impacts on Viticulture, Atmosphere, 13, 837, https://doi.org/10.3390/atmos13050837, 2022.

Dumitrescu, A., Amihaesei, V.-A., and Cheval, S.: RoCliB – bias-corrected CORDEX RCM dataset over Romania, Geosci. Data J., 262–275, https://doi.org/10.1002/gdj3.161, 2020.

Ehret, U., Zehe, E., Wulfmeyer, V., Warrach-Sagi, K., and Liebert, J.: HESS Opinions “Should we apply bias correction to global and regional climate model data?”, Hydrol. Earth Syst. Sci., 16, 3391–3404, https://doi.org/10.5194/hess-16-3391-2012, 2012.

Famien, A. M., Janicot, S., Ochou, A. D., Vrac, M., Defrance, D., Sultan, B., and Noël, T.: A bias-corrected CMIP5 dataset for Africa using the CDF-t method – a contribution to agricultural impact studies, Earth Syst. Dynam., 9, 313–338, https://doi.org/10.5194/esd-9-313-2018, 2018.

François, B., Vrac, M., Cannon, A. J., Robin, Y., and Allard, D.: Multivariate bias corrections of climate simulations: which benefits for which losses?, Earth Syst. Dynam., 11, 537–562, https://doi.org/10.5194/esd-11-537-2020, 2020.

Galmarini, S., Cannon, A., Ceglar, A., Christensen, O., de Noblet-Ducoudré, N., Dentener, F., Doblas-Reyes, F., Dosio, A., Gutierrez, J., Iturbide, M., Jury, M., Lange, S., Loukos, H., Maiorano, A., Maraun, D., McGinnis, S., Nikulin, G., Riccio, A., Sanchez, E., Solazzo, E., Toreti, A., Vrac, M., and Zampieri, M.: Adjusting climate model bias for agricultural impact assessment: How to cut the mustard, Climate Services, 13, 65–69, https://doi.org/10.1016/j.cliser.2019.01.004, 2019.

Gobiet, A., Suklitsch, M., and Heinrich, G.: The effect of empirical-statistical correction of intensity-dependent model errors on the temperature climate change signal, Hydrol. Earth Syst. Sci., 19, 4055–4066, https://doi.org/10.5194/hess-19-4055-2015, 2015.

Hagemann, S., Chen, C., Haerter, J. O., Heinke, J., Gerten, D., and Piani, C.: Impact of a Statistical Bias Correction on the Projected Hydrological Changes Obtained from Three GCMs and Two Hydrology Models, J. Hydrometeorol., 12, 556–578, https://doi.org/10.1175/2011JHM1336.1, 2011.

Hanlon, H. M., Bernie, D., Carigi, G., and Lowe, J. A.: Future changes to high impact weather in the UK, Climatic Change, 166, 50, https://doi.org/10.1007/s10584-021-03100-5, 2021.

Hempel, S., Frieler, K., Warszawski, L., Schewe, J., and Piontek, F.: A trend-preserving bias correction – the ISI-MIP approach, Earth Syst. Dynam., 4, 219–236, https://doi.org/10.5194/esd-4-219-2013, 2013.

Hersbach, H., Bell, B., Berrisford, P., Hirahara, S., Horányi, A., Muñoz-Sabater, J., Nicolas, J., Peubey, C., Radu, R., Schepers, D., Simmons, A., Soci, C., Abdalla, S., Abellan, X., Balsamo, G., Bechtold, P., Biavati, G., Bidlot, J., Bonavita, M., De Chiara, G., Dahlgren, P., Dee, D., Diamantakis, M., Dragani, R., Flemming, J., Forbes, R., Fuentes, M., Geer, A., Haimberger, L., Healy, S., Hogan, R. J., Hólm, E., Janisková, M., Keeley, S., Laloyaux, P., Lopez, P., Lupu, C., Radnoti, G., de Rosnay, P., Rozum, I., Vamborg, F., Villaume, S., and Thépaut, J.-N.: Complete ERA5 from 1940: Fifth generation of ECMWF atmospheric reanalyses of the global climate, Copernicus Climate Change Service (C3S) Data Store (CDS) [data set], https://doi.org/10.24381/cds.143582cf, 2017.

Hersbach, H., Bell, B., Berrisford, P., Hirahara, S., Horányi, A., Muñoz-Sabater, J., Nicolas, J., Peubey, C., Radu, R., Schepers, D., Simmons, A., Soci, C., Abdalla, S., Abellan, X., Balsamo, G., Bechtold, P., Biavati, G., Bidlot, J., Bonavita, M., De Chiara, G., Dahlgren, P., Dee, D., Diamantakis, M., Dragani, R., Flemming, J., Forbes, R., Fuentes, M., Geer, A., Haimberger, L., Healy, S., Hogan, R. J., Hólm, E., Janisková, M., Keeley, S., Laloyaux, P., Lopez, P., Lupu, C., Radnoti, G., de Rosnay, P., Rozum, I., Vamborg, F., Villaume, S., and Thépaut, J.-N.: The ERA5 global reanalysis, Q. J. Roy. Meteor. Soc., 146, 1999–2049, https://doi.org/10.1002/qj.3803, 2020.

Hoyer, S. and Hamman, J.: xarray: N-D labeled Arrays and Datasets in Python, Journal of Open Research Software, 5, 10, https://doi.org/10.5334/jors.148, 2017.

Hui, Y., Chen, J., Xu, C.-Y., Xiong, L., and Chen, H.: Bias nonstationarity of global climate model outputs: The role of internal climate variability and climate model sensitivity, Int. J. Climatol., 39, 2278–2294, https://doi.org/10.1002/joc.5950, 2019.

IPCC: Climate Change 2021: The Physical Science Basis. Contribution of Working Group I to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change, edited by: Masson-Delmotte, V., Zhai, P., Pirani, A., et al., Cambridge University Press, Cambridge, United Kingdom and New York, NY, USA, in press, https://doi.org/10.1017/9781009157896, 2021.

IPCC: Climate Change 2022: Impacts, Adaptation, and Vulnerability. Contribution of Working Group II to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change, edited by: Pörtner, H.-O., Roberts, D. C., Tignor, M., et al., Cambridge University Press, Cambridge, UK and New York, NY, USA, 3056 pp., https://doi.org/10.1017/9781009325844, 2022.

Iturbide, M., Bedia, J., Herrera, S., Baño-Medina, J., Fernández, J., Frías, M. D., Manzanas, R., San-Martín, D., Cimadevilla, E., Cofiño, A. S., and Gutiérrez, J. M.: The R-based climate4R open framework for reproducible climate data access and post-processing, Environ. Modell. Softw., 111, 42–54, https://doi.org/10.1016/j.envsoft.2018.09.009, 2019. a

Ivanov, M. A., Luterbacher, J., and Kotlarski, S.: Climate Model Biases and Modification of the Climate Change Signal by Intensity-Dependent Bias Correction, J. Climate, 31, 6591–6610, https://doi.org/10.1175/JCLI-D-17-0765.1, 2018. a

Jägermeyr, J., Müller, C., Ruane, A. C., Elliott, J., Balkovic, J., Castillo, O., Faye, B., Foster, I., Folberth, C., Franke, J. A., Fuchs, K., Guarin, J. R., Heinke, J., Hoogenboom, G., Iizumi, T., Jain, A. K., Kelly, D., Khabarov, N., Lange, S., Lin, T.-S., Liu, W., Mialyk, O., Minoli, S., Moyer, E. J., Okada, M., Phillips, M., Porter, C., Rabin, S. S., Scheer, C., Schneider, J. M., Schyns, J. F., Skalsky, R., Smerald, A., Stella, T., Stephens, H., Webber, H., Zabel, F., and Rosenzweig, C.: Climate impacts on global agriculture emerge earlier in new generation of climate and crop models, Nature Food, 2, 873–885, https://doi.org/10.1038/s43016-021-00400-y, 2021. a

Katz, R. W., Parlange, M. B., and Naveau, P.: Statistics of extremes in hydrology, Adv. Water Resour., 25, 1287–1304, https://doi.org/10.1016/S0309-1708(02)00056-8, 2002. a, b

Lafferty, D. C. and Sriver, R. L.: Downscaling and bias-correction contribute considerable uncertainty to local climate projections in CMIP6, npj Climate and Atmospheric Science, 6, 158, https://doi.org/10.1038/s41612-023-00486-0, 2023. a, b

Lange, S.: Trend-preserving bias adjustment and statistical downscaling with ISIMIP3BASD (v1.0), Geosci. Model Dev., 12, 3055–3070, https://doi.org/10.5194/gmd-12-3055-2019, 2019. a, b, c, d, e, f

Lange, S.: ISIMIP3b bias adjustment fact sheet, Tech. Rep., Inter-Sectoral Impact Model Intercomparison Project, https://www.isimip.org/documents/413/ISIMIP3b_bias_adjustment_fact_sheet_Gnsz7CO.pdf (last access: 5 February 2024), 2021a. a, b

Lange, S.: ISIMIP3BASD, Zenodo [code], https://doi.org/10.5281/zenodo.4686991, 2021b.

Li, H., Sheffield, J., and Wood, E. F.: Bias correction of monthly precipitation and temperature fields from Intergovernmental Panel on Climate Change AR4 models using equidistant quantile matching, J. Geophys. Res.-Atmos., 115, D10101, https://doi.org/10.1029/2009JD012882, 2010.

Manzanas, R. and Gutiérrez, J. M.: Process-conditioned bias correction for seasonal forecasting: a case-study with ENSO in Peru, Clim. Dynam., 52, 1673–1683, https://doi.org/10.1007/s00382-018-4226-z, 2019.

Maraun, D.: Bias Correction, Quantile Mapping, and Downscaling: Revisiting the Inflation Issue, J. Climate, 26, 2137–2143, https://doi.org/10.1175/JCLI-D-12-00821.1, 2013.

Maraun, D.: Bias Correcting Climate Change Simulations – a Critical Review, Current Climate Change Reports, 2, 211–220, https://doi.org/10.1007/s40641-016-0050-x, 2016.

Maraun, D. and Widmann, M.: Cross-validation of bias-corrected climate simulations is misleading, Hydrol. Earth Syst. Sci., 22, 4867–4873, https://doi.org/10.5194/hess-22-4867-2018, 2018a.

Maraun, D. and Widmann, M.: Statistical Downscaling and Bias Correction for Climate Research, Cambridge University Press, ISBN 978-1-108-34064-9, 2018b.

Maraun, D., Shepherd, T. G., Widmann, M., Zappa, G., Walton, D., Gutiérrez, J. M., Hagemann, S., Richter, I., Soares, P. M. M., Hall, A., and Mearns, L. O.: Towards process-informed bias correction of climate change simulations, Nat. Clim. Change, 7, 764–773, https://doi.org/10.1038/nclimate3418, 2017.

Maraun, D., Widmann, M., and Gutiérrez, J. M.: Statistical downscaling skill under present climate conditions: A synthesis of the VALUE perfect predictor experiment, Int. J. Climatol., 39, 3692–3703, https://doi.org/10.1002/joc.5877, 2019.

Met Office: Iris: A powerful, format-agnostic, and community-driven Python package for analysing and visualising Earth science data, Tech. Rep., Met Office, Exeter, Devon, https://scitools.org.uk/ (last access: 5 February 2024), 2010.

Michelangeli, P.-A., Vrac, M., and Loukos, H.: Probabilistic downscaling approaches: Application to wind cumulative distribution functions, Geophys. Res. Lett., 36, L11708, https://doi.org/10.1029/2009GL038401, 2009.

Mishra, V., Bhatia, U., and Tiwari, A. D.: Bias-corrected climate projections for South Asia from Coupled Model Intercomparison Project-6, Scientific Data, 7, 338, https://doi.org/10.1038/s41597-020-00681-1, 2020.

Navarro-Racines, C., Tarapues, J., Thornton, P., Jarvis, A., and Ramirez-Villegas, J.: High-resolution and bias-corrected CMIP5 projections for climate change impact assessments, Scientific Data, 7, 7, https://doi.org/10.1038/s41597-019-0343-8, 2020.

Naveau, P., Huser, R., Ribereau, P., and Hannart, A.: Modeling jointly low, moderate, and heavy rainfall intensities without a threshold selection, Water Resour. Res., 52, 2753–2769, https://doi.org/10.1002/2015WR018552, 2016.

NGFS: NGFS Climate Scenarios for central banks and supervisors, Tech. Rep., Network for Greening the Financial System, https://www.ngfs.net/en/ngfs-climate-scenarios-central-banks-and-supervisors-september-2022 (last access: 5 February 2024), 2021.

Nogaj, M., Yiou, P., Parey, S., Malek, F., and Naveau, P.: Amplitude and frequency of temperature extremes over the North Atlantic region, Geophys. Res. Lett., 33, L10801, https://doi.org/10.1029/2005GL024251, 2006.

Olsson, J., Berggren, K., Olofsson, M., and Viklander, M.: Applying climate model precipitation scenarios for urban hydrological assessment: A case study in Kalmar City, Sweden, Atmos. Res., 92, 364–375, https://doi.org/10.1016/j.atmosres.2009.01.015, 2009.

Piani, C. and Haerter, J. O.: Two dimensional bias correction of temperature and precipitation copulas in climate models, Geophys. Res. Lett., 39, L20401, https://doi.org/10.1029/2012GL053839, 2012.

Pokhrel, Y., Felfelani, F., Satoh, Y., Boulange, J., Burek, P., Gädeke, A., Gerten, D., Gosling, S. N., Grillakis, M., Gudmundsson, L., Hanasaki, N., Kim, H., Koutroulis, A., Liu, J., Papadimitriou, L., Schewe, J., Müller Schmied, H., Stacke, T., Telteu, C.-E., Thiery, W., Veldkamp, T., Zhao, F., and Wada, Y.: Global terrestrial water storage and drought severity under climate change, Nat. Clim. Change, 11, 226–233, https://doi.org/10.1038/s41558-020-00972-w, 2021.

Raei, E., Nikoo, M. R., AghaKouchak, A., Mazdiyasni, O., and Sadegh, M.: GHWR, a multi-method global heatwave and warm-spell record and toolbox, Scientific Data, 5, 180206, https://doi.org/10.1038/sdata.2018.206, 2018.

Rocklin, M.: Dask: Parallel Computation with Blocked algorithms and Task Scheduling, in: Proceedings of the 14th Python in Science Conference, edited by: Huff, K. and Bergstra, J., 130–136, https://doi.org/10.25080/Majora-7b98e3ed-013, 2015.

Rougier, J., Goldstein, M., and House, L.: Second-Order Exchangeability Analysis for Multimodel Ensembles, J. Am. Stat. Assoc., 108, 852–863, https://doi.org/10.1080/01621459.2013.802963, 2013.

Sansom, P. G., Stephenson, D. B., and Bracegirdle, T. J.: On Constraining Projections of Future Climate Using Observations and Simulations From Multiple Climate Models, J. Am. Stat. Assoc., 116, 546–557, https://doi.org/10.1080/01621459.2020.1851696, 2021.

Sippel, S., Zscheischler, J., Heimann, M., Otto, F. E. L., Peters, J., and Mahecha, M. D.: Quantifying changes in climate variability and extremes: Pitfalls and their overcoming, Geophys. Res. Lett., 42, 9990–9998, https://doi.org/10.1002/2015GL066307, 2015.

Sippel, S., Otto, F. E. L., Forkel, M., Allen, M. R., Guillod, B. P., Heimann, M., Reichstein, M., Seneviratne, S. I., Thonicke, K., and Mahecha, M. D.: A novel bias correction methodology for climate impact simulations, Earth Syst. Dynam., 7, 71–88, https://doi.org/10.5194/esd-7-71-2016, 2016.

Spuler, F. and Wessel, J.: ibicus v1.0.1, Zenodo [code], https://doi.org/10.5281/zenodo.8101898, 2023.

Switanek, M., Maraun, D., and Bevacqua, E.: Stochastic downscaling of gridded precipitation to spatially coherent subgrid precipitation fields using a transformed Gaussian model, Int. J. Climatol., 42, 6126–6147, 2022.

Switanek, M. B., Troch, P. A., Castro, C. L., Leuprecht, A., Chang, H.-I., Mukherjee, R., and Demaria, E. M. C.: Scaled distribution mapping: a bias correction method that preserves raw climate model projected changes, Hydrol. Earth Syst. Sci., 21, 2649–2666, https://doi.org/10.5194/hess-21-2649-2017, 2017.

Van de Velde, J., Demuzere, M., De Baets, B., and Verhoest, N. E. C.: Impact of bias nonstationarity on the performance of uni- and multivariate bias-adjusting methods: a case study on data from Uccle, Belgium, Hydrol. Earth Syst. Sci., 26, 2319–2344, https://doi.org/10.5194/hess-26-2319-2022, 2022.

Vautard, R., Kadygrov, N., Iles, C., Boberg, F., Buonomo, E., Bülow, K., Coppola, E., Corre, L., van Meijgaard, E., Nogherotto, R., Sandstad, M., Schwingshackl, C., Somot, S., Aalbers, E., Christensen, O. B., Ciarlo, J. M., Demory, M.-E., Giorgi, F., Jacob, D., Jones, R. G., Keuler, K., Kjellström, E., Lenderink, G., Levavasseur, G., Nikulin, G., Sillmann, J., Solidoro, C., Sørland, S. L., Steger, C., Teichmann, C., Warrach-Sagi, K., and Wulfmeyer, V.: Evaluation of the Large EURO-CORDEX Regional Climate Model Ensemble, J. Geophys. Res.-Atmos., 126, e2019JD032344, https://doi.org/10.1029/2019JD032344, 2021.

Verfaillie, D., Déqué, M., Morin, S., and Lafaysse, M.: The method ADAMONT v1.0 for statistical adjustment of climate projections applicable to energy balance land surface models, Geosci. Model Dev., 10, 4257–4283, https://doi.org/10.5194/gmd-10-4257-2017, 2017.

von Storch, H.: On the Use of “Inflation” in Statistical Downscaling, J. Climate, 12, 3505–3506, https://doi.org/10.1175/1520-0442(1999)012<3505:OTUOII>2.0.CO;2, 1999.

Vrac, M.: Multivariate bias adjustment of high-dimensional climate simulations: the Rank Resampling for Distributions and Dependences (R2D2) bias correction, Hydrol. Earth Syst. Sci., 22, 3175–3196, https://doi.org/10.5194/hess-22-3175-2018, 2018.

Vrac, M. and Friederichs, P.: Multivariate–Intervariable, Spatial, and Temporal–Bias Correction, J. Climate, 28, 218–237, https://doi.org/10.1175/JCLI-D-14-00059.1, 2015.

Vrac, M. and Michelangeli, P.-A.: CDFt: Downscaling and Bias Correction via Non-Parametric CDF-Transform, CRAN [code], https://cran.r-project.org/web/packages/CDFt/index.html (last access: 5 February 2024), 2021.

Vrac, M., Drobinski, P., Merlo, A., Herrmann, M., Lavaysse, C., Li, L., and Somot, S.: Dynamical and statistical downscaling of the French Mediterranean climate: uncertainty assessment, Nat. Hazards Earth Syst. Sci., 12, 2769–2784, https://doi.org/10.5194/nhess-12-2769-2012, 2012.

Vrac, M., Noël, T., and Vautard, R.: Bias correction of precipitation through Singularity Stochastic Removal: Because occurrences matter, J. Geophys. Res.-Atmos., 121, 5237–5258, https://doi.org/10.1002/2015JD024511, 2016.

Wang, L. and Chen, W.: Equiratio cumulative distribution function matching as an improvement to the equidistant approach in bias correction of precipitation, Atmos. Sci. Lett., 15, 1–6, https://doi.org/10.1002/asl2.454, 2014.

Wessel, J. and Spuler, F.: ibicus v1.0.1 – data and additional code for GMD submission, Zenodo [data set], https://doi.org/10.5281/zenodo.8101842, 2023.

Willems, P. and Vrac, M.: Statistical precipitation downscaling for small-scale hydrological impact investigations of climate change, J. Hydrol., 402, 193–205, https://doi.org/10.1016/j.jhydrol.2011.02.030, 2011.

Xu, Z., Han, Y., Tam, C.-Y., Yang, Z.-L., and Fu, C.: Bias-corrected CMIP6 global dataset for dynamical downscaling of the historical and future climate (1979–2100), Scientific Data, 8, 293, https://doi.org/10.1038/s41597-021-01079-3, 2021.

Zappa, G. and Shepherd, T. G.: Storylines of Atmospheric Circulation Change for European Regional Climate Impact Assessment, J. Climate, 30, 6561–6577, https://doi.org/10.1175/JCLI-D-16-0807.1, 2017.

Zhang, X., Alexander, L., Hegerl, G. C., Jones, P., Tank, A. K., Peterson, T. C., Trewin, B., and Zwiers, F. W.: Indices for monitoring changes in extremes based on daily temperature and precipitation data, WIREs Clim. Change, 2, 851–870, https://doi.org/10.1002/wcc.147, 2011.

Zscheischler, J., Westra, S., Van Den Hurk, B. J., Seneviratne, S. I., Ward, P. J., Pitman, A., Aghakouchak, A., Bresch, D. N., Leonard, M., Wahl, T., and Zhang, X.: Future climate risk from compound events, Nat. Clim. Change, 8, 469–477, https://doi.org/10.1038/s41558-018-0156-3, 2018.

Zscheischler, J., Martius, O., Westra, S., Bevacqua, E., Raymond, C., Horton, R. M., van den Hurk, B., AghaKouchak, A., Jézéquel, A., Mahecha, M. D., Maraun, D., Ramos, A. M., Ridder, N. N., Thiery, W., and Vignotto, E.: A typology of compound weather and climate events, Nature Reviews Earth and Environment, 1, 333–347, https://doi.org/10.1038/s43017-020-0060-z, 2020.
