the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Various ways of using empirical orthogonal functions for climate model evaluation

Rasmus E. Benestad

Abdelkader Mezghani

Julia Lutz

Andreas Dobler

Kajsa M. Parding

Oskar A. Landgren

We present a framework for evaluating multi-model ensembles based on common empirical orthogonal functions (common EOFs) that emphasize salient features connected to spatio-temporal covariance structures embedded in large climate data volumes. This framework enables the extraction of the most pronounced spatial patterns of coherent variability within the joint dataset and provides a set of weights for each model in terms of the principal components which refer to exactly the same set of spatial patterns of covariance. In other words, common EOFs provide a means for extracting information from large volumes of data. Moreover, they can provide an objective basis for evaluation that can be used to accentuate ensembles more than traditional methods for evaluation, which tend to focus on individual models. Our demonstration of the capability of common EOFs reveals a statistically significant improvement of the sixth generation of the World Climate Research Programme (WCRP) Climate Model Intercomparison Project (CMIP6) simulations in comparison to the previous generation (CMIP5) in terms of their ability to reproduce the mean seasonal cycle in air surface temperature, precipitation, and mean sea level pressure over the Nordic countries. The leading common EOF principal component for annually or seasonally aggregated temperature, precipitation, and pressure statistics suggests that their simulated interannual variability is generally consistent with that seen in the ERA5 reanalysis. We also demonstrate how common EOFs can be used to analyse whether CMIP ensembles reproduce the observed historical trends over the historical period 1959–2021, and the results suggest that the trend statistics provided by both CMIP5 RCP4.5 and CMIP6 SSP245 are consistent with observed trends. An interesting finding is also that the leading common EOF principal component for annually or seasonally aggregated statistics seems to be approximately normally distributed, which is useful information about the multi-model ensemble data.

- Article

(2842 KB) - Full-text XML

-

Supplement

(5739 KB) - BibTeX

- EndNote

The question of how to evaluate climate models is often complicated by large volumes of data. In many cases, it is the salient information about the meteorological phenomena, conditions, and states that they are designed to reproduce that we want to assess rather than details in individual grid boxes that are subject to surface parameterization and numerical algorithms associated with discrete mathematics, approximations, and statistical fluctuations. The climate models are expected to have an intrinsic minimum skilful scale that arises from discrete mathematics, approximations, and parameterization (Benestad et al., 2008). Furthermore, they are typically used to study trends and variability but are not expected to be directly synchronized or correlated in time with the particular timing of chaotic meteorological phenomena playing out in Earth's climate (Lorenz, 1963), such as the El Niño Southern Oscillation (Philander, 1989) or volcanic eruptions. Hence, one approach for evaluating them may relate to the spatio-temporal covariance structure embedded in the simulated output. An emphasis on the spatio-temporal covariance can also make use of the redundancy in the data and reduce the degrees of freedom in the data and hence minimize the required data volume needed for describing the output. Empirical orthogonal functions (henceforth EOFs) represent a mathematical technique that identifies the spatio-temporal covariance structure based on linear algebra and eigenfunctions (Lorenz, 1956; Preisendorfer, 1988; Wilks, 2006; Navarra and Simoncini, 2010; Joliffe, 1986; Hannachi, 2022). Their function may also be considered to be a way of reorganizing the information embedded within a data object X according to the decomposition

where information associated with salient covariance structures is moved to the top of the list. In this case, we distinguish between the concepts of data (merely a large set of numbers) and information (what the data represent or its statistical or mathematical properties). The matrix X contains a joint dataset and is , and the principal components in the matrix denoted by symbol V can be expressed as . The first segments X1 and V1 typically hold data from a reanalysis, and the others contain data from climate models. Moreover, the components of an EOF analysis have useful mathematical properties, where is an identity matrix and Λ is a diagonal matrix related to the eigenvalues. The technique may be regarded as a form of machine learning (ML) where EOFs are based on eigenfunctions and eigenvectors for which their mathematical properties simplify the analysis of the data (Wilks, 2006).

Flury (1984), Flury and Gautschi (1986), Sengupta and Boyle (1993, 1998), and Barnett (1999) proposed a variant of EOFs that they described as common empirical orthogonal functions (henceforth common EOFs) for model intercomparison. The common EOFs are mathematically identical to ordinary EOFs but involve two or more datasets combined on a common grid along the time axis. Hence, one segment of the time axis may represent reanalysis data, whereas another segment may contain climate model data that have been interpolated onto the same grid as the reanalysis. Keeping track of which time segment represents which dataset (in this case a reanalysis or a particular climate model) is essential for common EOFs to make sense. Benestad et al. (2008) described common EOFs and discussed their application in climate research, and common EOFs have been useful as a framework for empirical–statistical downscaling (Benestad et al., 2001), motivated by Barnett (1999). Benestad et al. (2017, 2019a) also provided demonstrations of how common EOFs can be applied to analyse ensembles of decadal forecasts. However, a general literature search with Google Scholar, the assessment reports of the Intergovernmental Panel on Climate Change (IPCC), and the documentation behind the ESMValTool (Eyring et al., 2020; Weigel et al., 2021) suggests that common EOFs are not widely used in the climate research community. The impression of a modest interest in common EOFs was also expressed in Benestad (2021) and is supported by the following quote from Hannachi et al. (2022): “To the best of our knowledge only two studies considered common EOFs, which go back more than two decades (Frankignoul et al., 1995; Sengupta and Boyle, 1998), which were based on the original Flury and Gautschi (1986) (FG86)'s algorithm”. However, we also know of a few additional cases where common EOFs were employed, e.g. those cited above, that were overlooked by Hannachi et al. (2022). A Google Scholar search for “common principal component analysis” (1680 hits, 1 December 2022) nevertheless suggests that common EOFs are discussed in scientific journals belonging to scientific disciplines other than climate science, such as statistics, biometrics, biology geology, and neuro-computing.

To demonstrate the merit of common EOFs, we present some examples of how they can be used to evaluate climate models in the context of large multi-model ensembles of global climate models (GCMs). One of our objectives was to evaluate GCM data that are used as predictors for empirical–statistical downscaling (ESD) over northern Europe, and we picked this as an example to demonstrate their utility and merit. While Hannachi et al. (2022) used common EOFs to compare individual models, we present an approach where they are used to compare different ensembles, such as CMIP5 RCP4.5 and CMIP6 SSP245, and to assess whether they provide a statistical representation that has a similar statistical population (Wilks, 2006, e.g. p. 72) as the reanalysis. In this case, they provided a framework in which we applied standard hypothesis testing and statistical tests.

Hannachi et al. (2022) provide a description of the mathematics behind common EOFs, which is also relevant for our analysis, but here we present a slightly different approach for applying common EOFs for the purpose of climate model evaluation and for assessing different model ensembles. Our method bears both similarities and differences to previous applications of common EOFs. In our case, we applied EOFs to one variable from different sources stacked in the space direction, as in Barnett (1999), which is similar to a tensor decomposition where the space–time matrices of the individual projections are stacked along a third (model) direction (Cichocki et al., 2015). Our approach with data stacked from different models along the time direction had a similar function as a third model dimension because spatio-temporal covariance matrices only involve time and space. It is also possible to stack data along the space axis as the multi-variate EOFs described in Sanderson et al. (2015), where all monthly values of any one projection were stacked in the space direction instead of the time direction; however, this is only meaningful if the data are expected to contain synchronous temporal variations. Another example of using such mixed-field EOFs is found in Benestad et al. (2002), who combined standardized surface air temperature and mean sea level pressure and used EOFs of these combined fields as predictors in empirical–statistical downscaling. The different CMIP runs are not expected to be synchronized, as the regional variations are both pronounced and of a chaotic–stochastic nature.

We used singular value decomposition (SVD) (Becker et al., 1988) on a joint data matrix (multiple datasets stacked along the temporal axis) rather than the step-wise algorithm for a set of covariance matrices described by Hannachi et al. (2022). Hence, we obtained identical spatial maps and eigenvalues for all models in the joint data matrix but different statistical properties (e.g. amplitude and mean) for the different segments of the principal components that represented different models. Both these variants have been referred to as common EOFs, and for the lack of a better term, we will use the term common EOFs for the analytical framework presented herein. In our case, we used the approach described in Benestad et al. (2019a), where common EOFs were used to represent an ensemble of decadal forecasts based on a single GCM. More specifically, we used common EOFs to illustrate how well GCMs reproduce the mean annual cycle in terms of the spatio-temporal covariance structure compared to the ERA5 reanalysis (Hersbach et al., 2020). We also present another example where we used common EOFs to assess how well the GCMs simulate the interannual variability in terms of the annual mean surface air temperature, precipitation, and mean sea level pressure. A third way of applying EOFs in model evaluation is as a framework for comparing trends simulated by different GCMs, where they highlight salient features in the trend structure. In all these cases, we used common EOFs to evaluate both CMIP5 (Meehl et al., 2005; Taylor et al., 2012) and CMIP6 (Eyring et al., 2016) ensembles in a joint analysis. One complication was the varying number of simulations carried out with one model set-up, as some GCMs produced numerous simulations in the CMIP ensembles, whereas others only produced a few. To make the evaluation as objective as possible, we only selected one simulation from each GCM, filtering the data based on the ensemble member label (r1i1p1 for CMIP5, r1i1p1f1 for CMIP6), using runs that spanned the period 1850–2100, and only the emission scenarios RCP4.5 and SSP245 (in this case, we only used data for the period in common with the ERA5 reanalysis, specifically 1959–2021). We also repeated the analysis on slightly different spatial domains to assess the robustness of our results. In this evaluation, we computed common EOFs for a joint dataset of 35 CMIP5 RCP4.5 runs and 40 CMIP6 SSP245 runs (75 runs in total for surface air temperature (TAS), but not all of these were available for monthly precipitation (PR) and sea level pressure (PSL)). To cope with the vast amount of data, each model run was represented in terms of monthly mean seasonal cycle (12 calendar months each) and also annually or seasonally aggregated statistics (63 spatial maps for each run, 1 for each year in the period 1959–2021). In this case, the term aggregated statistics refers to the mean estimate for TAS and PSL and the sum for PR (total precipitation over the year or season).

Common EOFs can be used to evaluate individual GCMs against the ERA5 reanalysis through the estimation of the difference in the mean , standard deviation σ, and lag-1 autocorrelation r1 estimated for the different segments of the principal components (PCs) representing different datasets (5.1 in the Supplementary data of Benestad et al., 2016). Here, we evaluated how well the models are able to reproduce the mean seasonal cycle in the TAS, monthly PR totals, and the mean sea level pressure (PSL) over a region spanning the Nordic countries (55–72∘ N, 5∘ W–45∘ E). We repeated the same analysis in four other domains to assess the robustness of our results, and those findings can be found in the Supplement, available from Figshare (Benestad, 2022). The model performance was gauged by taking the root-mean-square error (RMSE) of the leading principal component that accounts for most of the variance, using ERA5 as a reference. We also applied common EOFs to the annual and seasonal mean TAS, annual and seasonal total PR, and annual and seasonal mean PSL to diagnose their interannual variability and how well it was reproduced in the CMIP ensembles. Moreover, we applied the analysis separately to the full year (January–December) and each of the four seasons: winter (DJF), spring (MAM), summer (JJA), and autumn (SON). The skill metric of the models' reproduction of the interannual variation in the said annually or seasonally aggregated statistics involved rank statistics and the assumption that any rank is equally probable if the weights of the PC representing the ERA5 reanalysis belongs to the same statistical population as the ensemble of GCMs. We used a two-sided Kolmogorov–Smirnov test (Wilks, 2006) to compare the empirical distribution of the rank statistics against a uniform distribution representing the case for which all ranks have the same probability. We also used Monte Carlo simulations to represent a perfect case as a reference for the rank analysis of the annual and seasonal means. In these simulations, we used the same number of years and a statistical sample with the same size as the ensemble in question and picked a fixed realization as a surrogate for the reanalysis and the rest to represent the ensemble. In these Monte Carlo simulations, the reanalysis and ensemble belonged to the same statistical population by design.

Finally, we made a data matrix with columns consisting of spatial maps (the 2D matrix orientation of the data was reordered into a 1D vector) with linear trend estimates over 1959–2021, with one column for each GCM in the respective CMIP multi-model ensemble, in addition to a corresponding map with trend estimates derived from the ERA5 reanalysis. The EOFs of this joint data matrix were used to assess the differences in reproducing the main aspects of the historical trends among the GCMs and reanalysis.

The analysis presented here was carried out using the R package esd, version 1.10.15 (Benestad et al., 2015), within the R environment, version 4.2.2 (R Core Team, 2023). Essential data and R code (an R markdown script and its output in PDF format) used for these computations are available in the Supplement and as free open-source material from Figshare in order to enhance the transparency and reproducibility of these results: https://figshare.com/articles/dataset/Common_EOFs_for_model_evaluation/21641756 (last access: 23 May 2023). The Figshare repository can be cited as Benestad (2022) and is archived as a combination of the R markdown script, the PDF file (Supplement for this paper), and a set of R binary data files stored as separate files for the respective RCP45 and SSP245 scenarios and for the three different parameters TAS, PR, and PSL. The data files contain 72–75 different GCM runs in addition to ERA5, and the total data volume of all these files is 1.9 GB. While the processing of the data stored in this repository was carried out on powerful Linux servers and the job for all combinations of seasons and regions took roughly 22 h to complete, the R code provided was run on a 64 bit HP Elitebook 850 G8 laptop with Ubuntu 18.04.6 LTS with 32 Gb memory.

It is possible to test the common EOF framework for cases with bad data to see how the results turn out for when the ensemble does not reproduce the properties of the reference. In this case, we simulated such a case by replacing the ERA5 TAS with ERA5 PR, keeping the ensemble, as it were (TAS), so that the reference and ensemble consisted of different types of variables (Supplement). The mismatch could be seen in the amplitude of the PCs representing the reference and ensemble, as well as in the leading EOFs representing a lower fraction of the variance. The EOFs were dominated by the ensemble, and in general, it may be necessary to include several PCs in the evaluation when the leading EOFs do not account for most of the variance. It is also important to keep in mind that the PCs' variance fractions may depend on the spatial domain covered by the data grid.

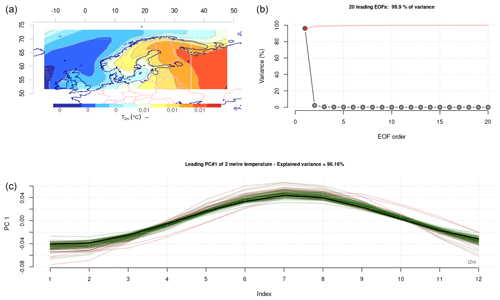

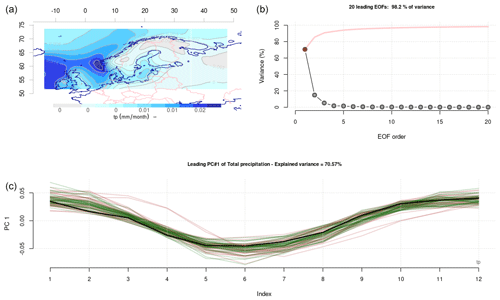

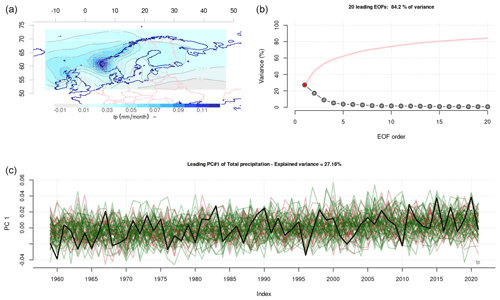

Figure 1Common EOFs which present the covariance structure for model simulations of the annual mean cycle in TAS. Panel (a) presents the spatial covariance structure of the leading mode, (b) indicates the variance associated with 20 leading modes, and (c) shows the leading PC for the multi-model ensemble. The black curve represents the ERA5 reanalysis, whereas the red curves represent CMIP5, and the green curves represent CMIP6.

2.1 Results

2.1.1 Evaluation of the simulated mean seasonal cycle

Figure 1 presents the leading common EOF for the mean seasonal cycle in the surface air temperature (TAS) over the Nordic countries. The spatial map (panel a) shows the structure of the most dominant covariance pattern of the seasonal cycle, and the eigenvalues (panel b) suggest that this mode dominates the seasonal behaviour completely. Both pattern and eigenvalues were estimated from the joint dataset that involved ERA5, the CMIP5 RCP4.5 ensemble, and the CMIP6 SSP245 ensemble. The spatial patterns (U in Eq. 1 shown in Fig. 1a) and the eigenvalues (Λ in Eq. 1 presented in Fig. 1b) are common for all models, and only the corresponding principal components (PCs, represented by the matrix V in Eq. 1) in panel c of Fig. 1 show the differences between the reanalysis and the GCMs from the CMIP5 and CMIP6 ensembles. These differences are visible as scattered brown and green curves. It is important to keep in mind that individual EOFs may not necessarily be associated with a clear physical meaning, especially the higher-order ones, as the different modes are designed to be orthogonal to each other (Ambaum et al., 2001; Huth and Beranová, 2021). However, they are useful mathematical concepts that enable more-efficient work with large data volumes and make it easier to extract salient information from data, but sometimes, they may nevertheless provide insights into physical phenomena within the analysed domain. In our analysis, they ensured a set of indices for all GCMs which were related to a common covariance structure within the joint dataset, and we used them to evaluate the mean seasonal cycle estimated over the period 1959–2021. Our evaluation was based on the root-mean-square error (RMSE) between the leading PC representing the corresponding mean seasonal cycle in TAS from ERA5 and the joint set of 75 GCMs from both CMIP5 RCP4.5 (35 members) and CMIP6 SSP245 GCMs (40 members). The results of this evaluation are presented in Table 1, and a Wilcoxon rank sum (also known as Mann–Whitney) test (Wilks, 2006) was applied to the two sets of RMSE scores representing CMIP5 and CMIP6 respectively. Our results indicated that the CMIP6 simulations had a better score, and the difference with CMIP5 was statistically significant at the 5 % confidence level. Hence, the CMIP6 models were more skilful at reproducing the mean seasonal cycle in TAS in the Nordic region. The difference in skill is also visible in panel c of Fig. 1, which shows that the curves for CMIP5 (brown) were less tightly clustered around ERA5 (black) than those for CMIP6 (green). The leading mode accounted for 96 % of the variance, which suggests that all GCMs produced a seasonal cycle with a similar spatial covariance structure (panel a, Fig. 1).

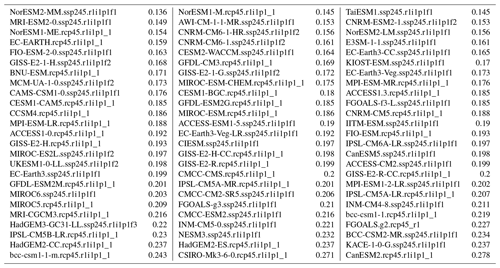

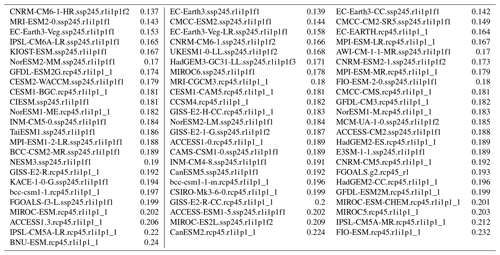

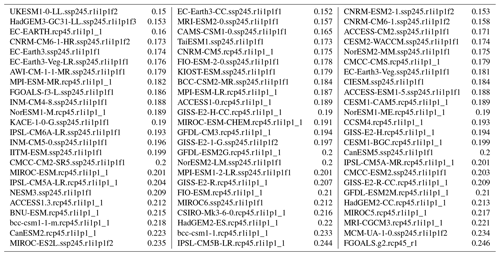

Table 1GCMs ranked according to their RMSE score for their TAS mean seasonal cycle common-EOF results. A Wilcoxon rank sum test with continuity correction data gave W=533.5, p value = 0.03892 for the alternative hypothesis that the true location shift is less than 0. See the Supplement for details behind the calculations.

Table 2GCMs ranked according to their RMSE score for their PR mean seasonal cycle common-EOF results. A Wilcoxon rank sum test with continuity correction data returned W=303.5, p value = 0.0001545 for the alternative hypothesis that the true location shift is less than 0.

Table 3GCMs ranked according to their RMSE score for their PSL mean seasonal cycle common-EOF results. A Wilcoxon rank sum test with continuity correction data gave W=296, p value = for the alternative hypothesis that the true location shift is less than 0.

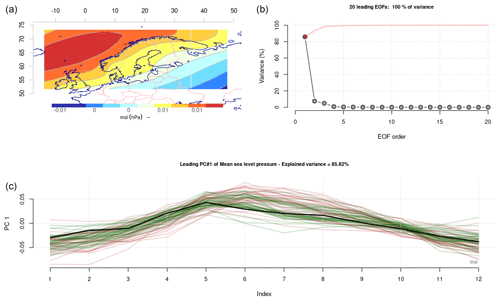

Figure 2Same as Fig. 1 but for the mean annual cycle in precipitation.

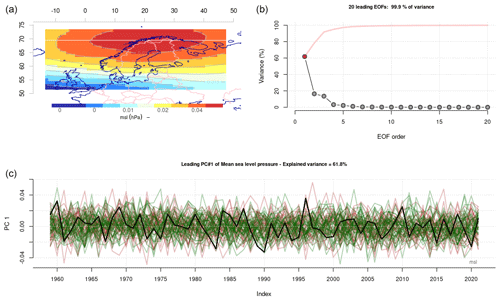

Figure 3Same as Fig. 1 but for the mean annual cycle in sea level pressure.

We repeated the evaluation of the climate models' ability to reproduce the mean seasonal cycle in PR (Fig. 2 and Table 2) and PSL (Fig. 3 and Table 3). The number of available CMIP results for PR was slightly different to that of TAS at the time of the analysis, and our ensembles consisted of 33 members from CMIP5 RCP4.5 and 37 from CMIP6 SSP245. The exact ensemble size was not critical for our demonstration, as our objective was to demonstrate the utility and merit of common EOFs for model evaluation. The eigenvalues for PR indicated that the leading mode accounted for a lower portion of the variance (71 %) than TAS, which may be due to variations in their ability to capture the typical spatial patterns in PR associated with different seasons. The greatest seasonal variations in PR can be seen near the west coast of Norway (panel a of Fig. 2). The leading mode for PSL, on the other hand, accounted for 86 % of the variance, and most GCMs reproduced a mean seasonal cycle that involved a northwest–southeast PSL gradient. The common EOFs for PSL were applied to 35 members from CMIP5 RCP4.5 and 37 from CMIP6 SSP245. The RMSE scores for PR and PSL are reported in Tables 2–3, and a Wilcoxon rank sum test indicated that the CMIP6 simulations constituted an improvement over those from the CMIP5 in terms of reproducing the mean seasonal cycle using the ERA5 reanalysis as a reference (statistically significant at the 5 % level).

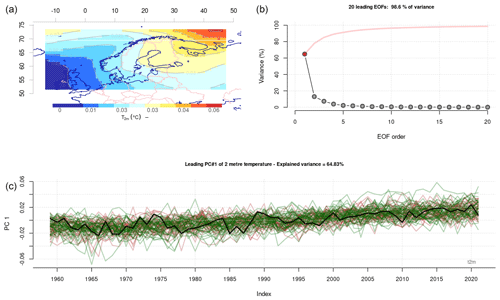

Figure 4Common EOFs which present the covariance structure for model simulations of the interannual variability in the annual mean TAS. Panel (a) presents the spatial covariance structure of the leading mode, (b) indicates the variance associated with 20 leading modes, and (c) shows the leading PC for the multi-model ensemble. The black curve represents the ERA5 reanalysis, whereas the red curves represent CMIP5 and the green curves CMIP6.

2.1.2 Evaluation of the simulated interannual variability

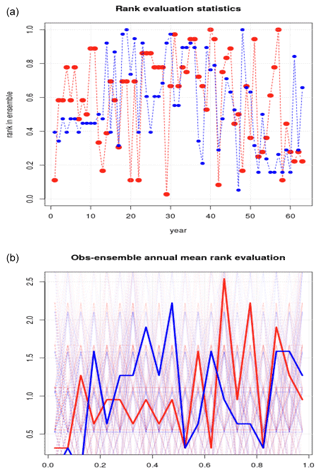

The results of the evaluation of the interannual variability in the annual mean TAS are shown in Fig. 4 in terms of the leading common EOF, with a map of the covariance connected to its interannual variability (panel a), eigenvalues (panel b), and time evolution (panel c). One striking observation is that the leading mode accounted for 65 % of the variance, with the five leading modes accounting for approximately 90 %, suggesting that most GCMs reproduced a similar covariance structure. We used a rank metric ℛ, where the PC weights for ERA5 were compared with the spread of the CMIP5 and CMIP6 ensembles in terms of their rank within each year and each ensemble. For the leading mode of the annual mean temperature shown in Fig. 4, both the CMIP5 and the CMIP6 produced ensemble results with a statistical population that was likely to be consistent with ERA5 data. In both cases, the two-sided Kolmogorov–Smirnov test indicated a high probability (p value) of ℛ belonging to a uniform distribution. A p value close to zero means that the data connected to the part of the leading PC representing ERA5 most likely belonged to a different statistical population than the respective CMIP ensemble (data from different segments of the same leading PC), whereas a p value near unity implies that ERA5 and the CMIP ensemble more likely belonged to the same statistical population. In our analysis, the Kolmogorov–Smirnov test for CMIP5 returned D=0.099206 with a p value of 0.5647, and the CMIP6 ℛ obtained D=0.11362 with a p value of 0.3902. Figure 5 provides a visualization of the rank metric ℛ on a year-by-year basis (panel a), as well as a histogram of the ranks for the TAS results shown in Fig. 4. It is evident from these plots that ℛ varies over the whole interval [0,1] and follows a distribution that is more or less uniform (flat structure), which we expect for ℛ if each rank is equally probable. Hence, for the annual mean TAS over the Nordic regions, both CMIP ensembles provided an approximate representation of the interannual variability seen in ERA5 and connected to the leading mode. A set of Monte Carlo simulations indicated that the ranking scores would fluctuate, even with ensembles that mimicked perfectly the statistical properties of the observations, due to the limited sample size.

Figure 5Rank statistics ℛ for the case presented in Fig. 4, where panel (a) shows the rank of ERA5 results within the multi-model ensemble spread on a year-to-year basis, whereas panel (b) shows histograms of the rank statistics together with results from Monte Carlo simulations of perfect cases (the y axis shows frequency, and the x axis shows the range of rank categories). Red marks CMIP5, whereas blue marks CMIP6. The Kolmogorov–Smirnov statistic D for CMIP5 was D=0.099206, with a p value of 0.5647, and the CMIP6 ℛ obtained D=0.11362 with a p value of 0.3902.

Figure 6Same as Fig. 4 but for the annual mean precipitation PR.

A corresponding assessment of the leading common EOF for PR (Fig. 6) indicated similar differences in statistical terms for both CMIP5 (D=0.11858, p value = 0.3384) and CMIP6 (D=0.13085, p value = 0.2309). The leading common EOF for annual PR representing variations along the west coast of Norway only accounted for 27 % of the variance, but the five leading modes accounted for approximately 60 %, suggesting that interannual variability in precipitation involves more-complicated anomalies and perhaps greater model differences. The low variance associated with the leading modes may suggest that the models produce different spatio-temporal covariance structures, i.e. that they produce different typical patterns of rainfall. It is also possible that a lower fraction of variance represented by the leading mode is due to smaller-scale spatial structures relative to the domain size when it comes to precipitation patterns. Hence, the common EOFs applied to annual PR revealed a more complicated situation where more than one mode dominates. In this case, the first five PCs represented the most salient properties in terms of PR spatio-temporal covariance, representing more than 60 % of the variance, and the high-order PCs were associated with negligible variance that typically represents numeric noise.

Figure 7Same as Fig. 4 but for the annual mean PSL.

Our assessment of how well the GCMs reproduced interannual variations in the annual mean PSL gave similar results as for TAS and PR. The leading mode was characterized by a centre of action over northern Scandinavia and accounted for 62 % of the variance (Fig. 7). The second and third modes were less important, but the fact that they had similar eigenvalues (16 %) suggests that they were degenerate, which refers to the two patterns being two aspects of the same mode (Wilks, 2006, p. 488). For PSL, ℛ was close to having a uniform distribution, and the test did not indicate a difference that was statistically significant at the 5 % level for either CMIP5 (D=0.13492, p value = 0.2016) or CMIP6 (D=0.14329, p value = 0.1504). In other words, both CMIP5 and CMIP6 seemed to roughly reproduce the annual mean circulation patterns over the Nordic region seen in the ERA5 data represented by the leading mode. The three leading modes accounted for approximately 94 % of the variance, suggesting that the GCMs reproduced a similar covariance structure, albeit with slight variations.

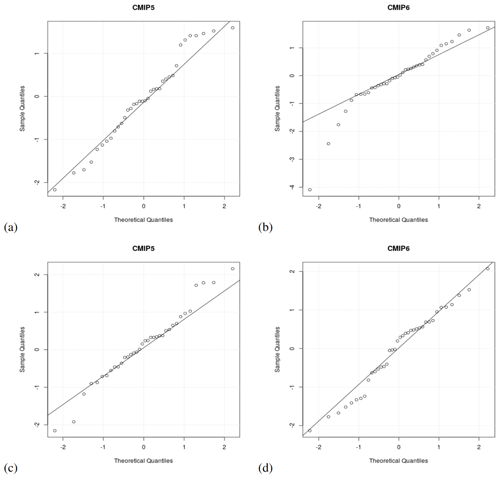

Figure 8Normal Q–Q Plots for CMIP5 RCP4.5 (a, c) and CMIP6 SSP245 (b, d) and for annual mean TAS (a, b) and winter mean TAS (c, d), showing variations in the nature of the ensemble distribution. The more pronounced deviation from a normal distribution for annual mean CMIP6 TAS in panel (b) was untypical for these results. Each data point represents the leading PC weight for 2021 for one GCM, i.e. the end points of the time series in the panel c of Figs. 4, 6, and 7.

We examined the nature of the multi-model ensemble distribution of the data of the leading PC for the annual aggregated statistics for the year 2022 and found them to be approximately normally distributed (Fig. 8) for most cases, both when it came to annual and seasonal timescales and for both CMIP5 RCP4.5 and CMIP6 SSP245. Only in a few cases did the data deviate substantially from the diagonal in the Q–Q plot, such as for the annual mean TAS (Fig. 8b), but this was not the typical outcome (Supplement, Benestad, 2022).

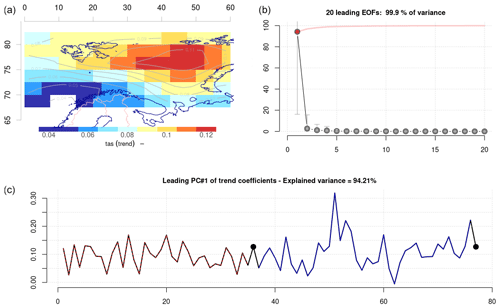

Figure 9Common EOFs which present the covariance structure for model simulations of trend maps in the annual mean TAS. Panel (a) presents the spatial covariance structure of the leading mode; (b) indicates the variance associated with 20 leading modes; and (c) shows the leading PC, where each weight represents a different member of the multi-model ensemble. The black symbols represent the ERA5 reanalysis, whereas red and blue curves mark CMIP5 and CMIP6 weights respectively. The units are dimensionless in (a) and (c), as the final numbers are a product of the eigenpattern, eigenvalue, and PC according to Eq. (1). The units of panel (b) are %.

2.1.3 Evaluation of the simulated historic trends

Figure 9 shows common EOFs that have been used to compare 1959–2021 trend maps from CMIP5 RCP4.5 (red curve in panel c) and CMIP6 SSP245 (blue curve in panel c) with ERA5 (black symbol), in this case over the Barents Sea region. Each ensemble member was represented by only one weight in the leading PC. The leading mode accounted for 94 % of the variance, suggesting that all models reproduced patterns with the strongest response in the northeast and the weakest response in the southwest, albeit with different amplitudes. The CMIP6 SSP245 (blue curve) indicated stronger variability between models than the CMIP5 RCP4.5 (red curve), suggesting a wider range of outcomes for the former and that the CMIP6 ensemble contained some more extreme models. It is nevertheless evident that the spread in both CMIP5 and CMIP6 embraced the results obtained with ERA5.

2.1.4 Assessment of robustness

The analyses of the mean seasonal cycle, the interannual variability, and historic trends were repeated for the said aggregated statistics for each of the winter, spring, summer and autumn seasons (Supplement), as one motivation behind this evaluation was to assess typical predictors used in empirical–statistical downscaling, which mainly involves seasonally aggregated statistics. We obtained similar results for the four different seasons (winter, spring, summer, and autumn). Moreover, the spatial domain (region) was chosen for the benefit of assessing the models before using them as input in downscaling exercises. The use of common EOFs as a framework for downscaling also provides a quality assessment (Benestad, 2001; Benestad et al., 2016), but extending them to larger multi-model ensembles provides a more comprehensive assessment of the entire ensemble. The repeated analysis for different spatial domains gave similar conclusions as those presented here for the 55–72∘ N, 5∘ W–45∘ E domain and the Barents Sea region.

2.2 Discussion

Linear algebra, eigenfunctions, and EOFs are well-established and versatile mathematical concepts, but we argue that there are still innovative ways of applying them in data analysis. In these demonstrations, they provided the basis for a framework, referred to as common EOFs, that enabled simple data comparisons, with an emphasis on the most salient features in the data. It is, in general, possible that the reference does not fall inside the ensemble spread for individual PCs, for which common EOFs would give low fractional variances for the leading modes and different amplitudes in the PCs. In a way, one could refer to the application of common EOFs as a kind of machine learning (ML) approach to big data, characterized by large data volumes, diverse sources, and speedy analysis.

Our demonstrations revealed a spread in the CMIP GCM ensembles that appeared to be consistent with the ERA5 interannual variability and a spread that was often close to being normally distributed. The different ensemble members were independent of each other and could be considered to be random in terms of their phase and timing, making the ensemble suitable for representing the non-deterministic natural variability. From a physical point of view, we know that these models reproduce chaotic and stochastic variability on decadal scales, and this is especially apparent if the ensemble is made up of simulations with one common model (Deser et al., 2012). For multi-model ensembles, there is also the additional component in terms of model differences. In one respect, we should indeed expect a strong interdependence between climate models, since they are built to represent the same physical system, but what we really desire is that the aspects that are not well-established and uncertain should involve different choices or methods so that they also provide a decent sample of the parameter space of unknowns. But in practice, different groups often copy others' attempts so that model uncertainties are not so well sampled (Boé, 2018). Nevertheless, the simulated stochastic–chaotic regional internal variability appears to be more pronounced, as both the time series in Figs. 4–7 seem to indicate, so these concerns are secondary in this case.

The independence between each model's representation of random variability, together with the ensemble spread being approximately normal, suggests that the salient information about future projections can be summarized by the following two parameters: the ensemble mean μe and the ensemble standard deviation σe. They can provide an estimate of a confidence interval μe±2σe, and if the ensemble spread is approximately normally distributed, we can use them to project a PDF for future aggregated TAS, PR, or PSL statistics on an annual or seasonal basis. This illustrates the difference between data and information, where the collection of time series for all ensemble members constitutes the data of the ensemble, whereas μe±2σe provides information about the ensemble. Hence, users of regional climate projections may not necessarily need to adapt their analysis to many individual simulations if they can get away with information about potential future outlooks in terms of a robust confidence interval. A PDF representing the ensemble distribution may also be used as a component of Bayesian inferences to estimate probabilities for e.g. heatwaves or heavy 24 h precipitation (Benestad et al., 2018, 2019b).

The common-EOF framework also suggested that CMIP6 models were better than CMIP5 models at reproducing the mean seasonal cycle in TAS, PR, and PSL over the Nordic region. Additionally, our analysis proposed that both CMIP5 RCP4.5 and CMIP6 SSP245 multi-model ensembles provide an approximate description of typical predictors on spatial domains relevant for empirical–statistical downscaling over the Nordic countries. The information about improved simulations in CMIP6 is in line with Lauer et al. (2022), who found that the total cloud cover, cloud water path, and cloud radiative effect were slightly better in the CMIP6 multi-model mean than in the CMIP5 ensemble mean in terms of mean bias, pattern correlation, and relative root-mean-square deviation. They also noted that an underestimation of cloud cover in stratocumulus regions is still a problem in CMIP6. The clouds simulated by the CMIP5 models were reported to be too few and too reflective over the Southern Ocean but were significantly improved in CMIP6.

The common EOF-approach and the esd tool (Benestad et al., 2015) represent a complement to already-existing analysis tools such as the GCMeval tool (Parding et al., 2020) or the Earth System Model Evaluation Tool (ESMValTool) (Eyring et al., 2020; Weigel et al., 2021). The latter performs common preprocessing operations and diagnostics that include tailored diagnostics and performance metrics for specific scientific applications.

It furthermore provides diagnostics for the mean annual cycle, pattern correlation, clustering, and EOFs, with RMSE estimates on a grid-by-grid basis (or spatial means of grid box estimates) rather than in terms of covariance structure, such as in Fig. 1 and Table 1. The ESMValTool also offers a regression of monthly mean geopotential heights onto the leading principal component monthly average to represent the northern annular mode (NAM) rather than a common-EOF approach similar to that presented in Fig. 4. It makes use of the Climate Variability Diagnostics Package (CVDP) that computes key metrics of internal climate variability in a set of user‐specific model simulations and observational datasets, providing spatial patterns and time series (Phillips et al., 2014).

Although it offers a large collection of diagnostics and performance metrics for atmospheric, oceanic, and terrestrial variables for the mean state, trends, and variability, it does not include common EOFs. While the ESMValTool is designed for the evaluation of climate model performance on a more individual basis rather than how well multi-model ensembles represent the world, the common-EOF framework proposed here can be used to assess whether the multi-model ensemble is fit for representing climate change and non-deterministic climate variability. Hence, the common-EOF framework can be designed to assess model results with a focus on their application for climate change adaptation.

The ESMValTool has been developed as a community effort currently involving more than 40 institutes, with a rapidly growing developer and user community. It offers more predefined functionalities than the esd package, but the esd package is more generic and flexible and also more geared towards empirical–statistical downscaling (ESD). ESD can also provide diagnostics about GCMs (Benestad, 2021), and the esd package is designed to deal with a more varied set of data types than just GCM output, as it has evolved from a previous open-source R library, namely clim.pact (Benestad, 2003). Both these tools can likely benefit from closer collaboration than in the past, as they seem to complement each other. Moreover, common EOFs make it easy to avoid matrices of many small maps (stamp collections) that are difficult to digest, since comparisons can be limited to time series and their differences in terms of statistics.

Finally, common EOFs also give a visual impression of simulated quality, as well as a framework for more-objective tests when applied to their principal components.

The results presented here for TAS represent a typical easy case where the variance is represented overwhelmingly by the first EOF and where ERA5 lies well in the middle of the ensemble distribution. The results for PR exhibit a more complicated situation in the interannual variation where higher-order PCs represent a greater fraction of the covariance structure, and in our case, the 20 leading modes merely accounted for about 80 % of the variance. For a more complete evaluation, the RMSE score metric em needs to include higher-order PCs, and according to Eq. (1), we get

where N is the number of modes, Λ contains the eigenvalues, and is the ith PC for model m in the ensemble references that consists of a time series over time period t. The leading EOFs are associated with higher fractions of variance and higher eigenvalues Λi, whereas the RMSE is less sensitive to higher-order ones with low Λi.

One choice regarding the use of this approach for evaluation is to include only the ensemble of projections in the EOF analysis and then to project one or several reference reanalyses onto these patterns. This variation of our approach would represent a cleaner approach not to meddle projections and references, especially if the projections involve other time periods and future outlooks. However, the exclusion of the reanalysis from estimating the EOFs would hardly make any appreciable difference with such vast ensembles as used here and with the same time coverage. In our case, the hypothesis was that the selected reanalysis and model data represent the same statistics, variable, region, and time period and hence should have similar properties. It is of course possible that the GCMs and reference differ so much so that the reference is outside the ensemble spread, which would indicate that they belong to different statistical populations. This matters for the first PCs representing a large fraction of variance but can be ignored for high-order PCs associated with negligible variance that represents numeric noise.

We present some demonstrations of how common EOFs can be applied in global climate model evaluation and use them to show that the CMIP6 SSP245 multi-model ensemble represents an improvement over CMIP5 RCP4.5 when it comes to reproducing the mean seasonal cycle in the near-surface temperature, precipitation, and mean sea level pressure over the Nordic countries. The analysis based on common EOFs also suggests that both CMIP ensembles are able to reproduce the interannual variability of these variables over the Nordic region and that they seem to embrace the observed historical trend seen in the ERA5 reanalysis. Common EOFs are not widely used within the climate research community, and we propose that they may benefit further research through innovative applications. A motivation for using common EOFs was to assess the value of multi-model ensembles of climate models for the application in climate service rather than focusing on single models. Hence, they were used to answer the question of whether the said CMIP multi-model ensembles are able to reproduce the observed statistics of the regional climate that are necessary for supporting climate change adaptation.

Both R markdown scripts with embedded R code, output in the PDF format, and data in R binary are available from Figshare (https://doi.org/10.6084/m9.figshare.21641756.v3; Benestad, 2022).

A couple of YouTube demonstrations on common EOFs are available from https://youtu.be/32mtHHAoq6k (Benestad, 2023a) and https://youtu.be/E01hthVL9pY (Benestad, 2023b).

The supplement related to this article is available online at: https://doi.org/10.5194/gmd-16-2899-2023-supplement.

REB conceptualized the work, carried out the analysis, and participated in the writing; KMP and AM contributed to the write up and the development of the esd package used to compute common EOFs and to carry out the analysis; JL, AD, and OAL contributed to the writing process.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Several datasets (CMIP5 and CMIP6) used in this work were obtained from the CMIP6 project hosted on the Earth System Grid Federation https://esgf-data.dkrz.de/projects/esgf-dkrz/ (last access: 25 May 2023) and from CMIP5 through the KNMI ClimateExplorer https://climexp.knmi.nl/start.cgi (last access: 25 May 2023). The ERA5 reanalysis was obtained through the Copernicus Climate Change Services (C3S) Climate Data Store (CDS): https://cds.climate.copernicus.eu/cdsapp#!/dataset/reanalysis-era5-single-levels-monthly-means?tab=form (last access: 25 May 2023) The analysis was implemented in the R environment (R Core Team, 2023) and R studio https://posit.co/downloads/ (last access: 25 May 2023).

This paper was edited by Axel Lauer and reviewed by Abdel Hannachi and one anonymous referee.

Ambaum, M. H. P., Hoskins, B. J., and Stephenson, D. B.: Arctic Oscillation or North Atlantic Oscillation?, J. Climate, 14, 3495–3507, https://doi.org/10.1175/1520-0442(2001)014<3495:AOONAO>2.0.CO;2, 2001. a

Barnett, T. P.: Comparison of Near-Surface Air Temperature Variability in 11 Coupled Global Climate Models, J. Climate, 12, 511–518, 1999. a, b, c

Becker, R. A., Chambers, J. M., and Wilks, A. R.: The new S language: a programming environment for data analysis and graphics, Wadsworth & Brooks/Cole computer science series, Wadsworth & Brooks/Cole Advanced Books & Software, Pacific Grove, Calif., ISBN 9780534091927, 9780534091934, 053409192X, 0534091938; OCLC Number (WorldCat Unique Identifier): 17677647, 1988. a

Benestad, R.: Common EOFs for model evaluation, Figshare [data set], https://doi.org/10.6084/M9.FIGSHARE.21641756.V3, 2022. a, b, c, d

Benestad, R.: Common EOFs for evaluation of geophysical data and global climate models, Youtube [video], https://youtu.be/32mtHHAoq6k, last access: 25 May 2023a. a

Benestad, R.: A brief presentation of common EOFs in R-studio, Youtube [video], https://youtu.be/E01hthVL9pY, last access: 25 May 2023b. a

Benestad, R., Sillmann, J., Thorarinsdottir, T. L., Guttorp, P., Mesquita, M. d. S., Tye, M. R., Uotila, P., Maule, C. F., Thejll, P., Drews, M., and Parding, K. M.: New vigour involving statisticians to overcome ensemble fatigue, Nat. Clim. Change, 7, 697–703, https://doi.org/10.1038/nclimate3393, 2017. a

Benestad, R., Caron, L.-P., Parding, K., Iturbide, M., Gutierrez Llorente, J. M., Mezghani, A., and Doblas-Reyes, F. J.: Using statistical downscaling to assess skill of decadal predictions, Tellus A, 71, 1652882, https://doi.org/10.1080/16000870.2019.1652882, 2019a. a, b

Benestad, R. E.: A comparison between two empirical downscaling strategies, Int. J. Climatol., 21, 1645–1668, dOI 10.1002/joc.703, 2001. a

Benestad, R. E.: clim.pact-V.1.0, KLIMA 04/03, met, P.O. Box 43 Blindern, 0313 Oslo, Norway, https://www.met.no (last access: 25 May 2023), 2003. a

Benestad, R. E.: A Norwegian Approach to Downscaling, Geosci. Model Dev. Discuss. [preprint], https://doi.org/10.5194/gmd-2021-176, 2021. a, b

Benestad, R. E., Sutton, R. T., Allen, M., and Anderson, D. L. T.: Interaction between Intraseasonal Kelvin waves and Tropical Instability waves in the Tropical Pacific, Geophys. Res. Lett., 28, 2041–2044, https://doi.org/10.1029/2000GL012563, 2001. a

Benestad, R. E., Hanssen-Bauer, I., and Førland, E. J.: Empirically downscaled temperature scenarios for Svalbard, Atmos. Sci. Lett., 3, 71–93, 2002. a

Benestad, R. E., Hanssen-Bauer, I., and Chen, D.: Empirical-statistical downscaling, World Scientific, 228, 2008. a, b

Benestad, R. E., Mezghani, A., and Parding, K. M.: esd V1.0, Zenodo [code], https://doi.org/10.5281/zenodo.29385, 2015. a, b

Benestad, R. E., Parding, K. M., Isaksen, K., and Mezghani, A.: Climate change and projections for the Barents region: what is expected to change and what will stay the same?, Environ. Res. Lett., 11, 054017, https://doi.org/10.1088/1748-9326/11/5/054017, 2016. a, b

Benestad, R. E., van Oort, B., Justino, F., Stordal, F., Parding, K. M., Mezghani, A., Erlandsen, H. B., Sillmann, J., and Pereira-Flores, M. E.: Downscaling probability of long heatwaves based on seasonal mean daily maximum temperatures, Adv. Stat. Clim. Meteorol. Oceanogr., 4, 37–52, https://doi.org/10.5194/ascmo-4-37-2018, 2018. a

Benestad, R. E., Parding, K. M., Erlandsen, H. B., and Mezghani, A.: A simple equation to study changes in rainfall statistics, Environ. Res. Lett., 14, 084017, https://doi.org/10.1088/1748-9326/ab2bb2, 2019b. a

Boé, J.: Interdependency in Multimodel Climate Projections: Component Replication and Result Similarity, Geophys. Res. Lett., 45, 2771–2779, https://doi.org/10.1002/2017GL076829, 2018. a

Cichocki, A., Mandic, D., De Lathauwer, L., Zhou, G., Zhao, Q., Caiafa, C., and Phan, H. A.: Tensor Decompositions for Signal Processing Applications: From two-way to multiway component analysis, IEEE Signal Processing Magazine, 32, 145–163, https://doi.org/10.1109/MSP.2013.2297439, 2015. a

Deser, C., Knutti, R., Solomon, S., and Phillips, A. S.: Communication of the role of natural variability in future North American climate, Nat. Clim. Change, 2, 775–779, https://doi.org/10.1038/nclimate1562, 2012. a

Eyring, V., Bony, S., Meehl, G. A., Senior, C. A., Stevens, B., Stouffer, R. J., and Taylor, K. E.: Overview of the Coupled Model Intercomparison Project Phase 6 (CMIP6) experimental design and organization, Geosci. Model Dev., 9, 1937–1958, https://doi.org/10.5194/gmd-9-1937-2016, 2016. a

Eyring, V., Bock, L., Lauer, A., Righi, M., Schlund, M., Andela, B., Arnone, E., Bellprat, O., Brötz, B., Caron, L.-P., Carvalhais, N., Cionni, I., Cortesi, N., Crezee, B., Davin, E. L., Davini, P., Debeire, K., de Mora, L., Deser, C., Docquier, D., Earnshaw, P., Ehbrecht, C., Gier, B. K., Gonzalez-Reviriego, N., Goodman, P., Hagemann, S., Hardiman, S., Hassler, B., Hunter, A., Kadow, C., Kindermann, S., Koirala, S., Koldunov, N., Lejeune, Q., Lembo, V., Lovato, T., Lucarini, V., Massonnet, F., Müller, B., Pandde, A., Pérez-Zanón, N., Phillips, A., Predoi, V., Russell, J., Sellar, A., Serva, F., Stacke, T., Swaminathan, R., Torralba, V., Vegas-Regidor, J., von Hardenberg, J., Weigel, K., and Zimmermann, K.: Earth System Model Evaluation Tool (ESMValTool) v2.0 – an extended set of large-scale diagnostics for quasi-operational and comprehensive evaluation of Earth system models in CMIP, Geosci. Model Dev., 13, 3383–3438, https://doi.org/10.5194/gmd-13-3383-2020, 2020. a, b

Flury, B. N.: Common Principal Components in k Groups, J. Am. Stat. A., 79, 892–898, https://doi.org/10.1080/01621459.1984.10477108, 1984. a

Flury, B. N. and Gautschi, W.: An Algorithm for Simultaneous Orthogonal Transformation of Several Positive Definite Symmetric Matrices to Nearly Diagonal Form, SIAM Journal on Scientific and Statistical Computing, 7, 169–184, https://doi.org/10.1137/0907013, 1986. a, b

Frankignoul, C., Février, S., Sennéchael, N., Verbeek, J., and Braconno, P.: An intercomparison between four tropical ocean models Thermocline variability, Tellus A, 47, 351, https://doi.org/10.3402/tellusa.v47i3.11522, 1995. a

Hannachi, A.: Patterns identification and data mining in weather and climate, Springer, Cham, oCLC: 1328009409, 2022. a

Hannachi, A., Finke, K., and Trendafilov, N.: Common EOFs: a tool for multi-model comparison and evaluation, Clim. Dynam., 60, 1689–1703, https://doi.org/10.1007/s00382-022-06409-8, 2022. a, b, c, d, e

Hersbach, H., Bell, B., Berrisford, P., Hirahara, S., Horanyi, A., Munoz‐Sabater, J., Nicolas, J., Peubey, C., Radu, R., Schepers, D., Simmons, A., Soci, C., Abdalla, S., Abellan, X., Balsamo, G., Bechtold, P., Biavati, G., Bidlot, J., Bonavita, M., Chiara, G., Dahlgren, P., Dee, D., Diamantakis, M., Dragani, R., Flemming, J., Forbes, R., Fuentes, M., Geer, A., Haimberger, L., Healy, S., Hogan, R. J., Holm, E., Janiskova, M., Keeley, S., Laloyaux, P., Lopez, P., Lupu, C., Radnoti, G., Rosnay, P., Rozum, I., Vamborg, F., Villaume, S., and Thepaut, J.: The ERA5 global reanalysis, Q. J. Roy. Meteor. Soc., 146, 1999–2049, https://doi.org/10.1002/qj.3803, 2020. a

Huth, R. and Beranová, R.: How to Recognize a True Mode of Atmospheric Circulation Variability, Earth Space Sci., 8, e2020EA001275, https://doi.org/10.1029/2020EA001275, 2021. a

Joliffe, I. T.: Principal Component Analysis, Springer Series in Statistics, Springer, https://doi.org/10.1007/b98835, 1986. a

Lauer, A., Bock, L., Hassler, B., Schröder, M., and Stengel, M.: Cloud Climatologies from Global Climate Models – A Comparison of CMIP5 and CMIP6 Models with Satellite Data, J. Climate, 36, 1–53, https://doi.org/10.1175/JCLI-D-22-0181.1, 2022. a

Lorenz, E.: Deterministic nonperiodic flow, J. Atmos. Sci., 20, 130–141, 1963. a

Lorenz, E. N.: Empirical Orthogonal Functions and Statistical Weather Prediction, Sci. rep. 1, Department of Meteorology, MIT, USA, Cambridge, Massachusetts, https://eapsweb.mit.edu/sites/default/files/Empirical_Orthogonal_Functions_1956.pdf (last access: 25 May 2023), 1956. a

Meehl, G. A., Covey, C., McAvaney, B., Latif, M., and Stouffer, R. J.: Overview of the Coupled Model intercomparison project, B. Am. Meteorol. Soc., 86, 89–93, 2005. a

Navarra, A. and Simoncini, V.: A guide to empirical orthogonal functions for climate data analysis, Springer, Dordrecht, New York, oCLC: ocn462919781, 2010. a

Parding, K. M., Dobler, A., McSweeney, C. F., Landgren, O. A., Benestad, R., Erlandsen, H. B., Mezghani, A., Gregow, H., Räty, O., Viktor, E., El Zohbi, J., Christensen, O. B., and Loukos, H.: GCMeval – An interactive tool for evaluation and selection of climate model ensembles, Climate Services, 18, 100167, https://doi.org/10.1016/j.cliser.2020.100167, 2020. a

Philander, S.: El Niño, La Niña, and the Southern Oscillation, Academic Press, N.Y., ISBN 9780080570983, 1989. a

Phillips, A. S., Deser, C., and Fasullo, J.: Evaluating Modes of Variability in Climate Models, Eos, Transactions American Geophysical Union, 95, 453–455, https://doi.org/10.1002/2014EO490002, 2014. a

Preisendorfer, R. W.: Principal Component Analysis in Meteorology and Oceanology, Elsevier Science Press, Amsterdam, ISBN-10 0444430148, ISBN-13 978-0444430144, 1988. a

R Core Team: R: A Language and Environment for Statistical Computing, R Foundation for Statistical Computing, Vienna, Austria, https://www.R-project.org/ (last access: 25 May 2023), 2023. a, b

Sanderson, B. M., Knutti, R., and Caldwell, P.: A Representative Democracy to Reduce Interdependency in a Multimodel Ensemble, J. Climate, 28, 5171–5194, https://doi.org/10.1175/JCLI-D-14-00362.1, 2015. a

Sengupta, S. and Boyle, J. S.: Using Common Principal Components in Comparing GCM Simulations, J. Climate, 11, 816–830, 1998. a, b

Sengupta, S. K. and Boyle, J. S.: Statistical Intercomparison of Global Climate Models: A Common Principal Component Approach, Tech. Rep. 13, PCMDI, Lawrence Livermore National Laboratory, California, USA, https://pcmdi.llnl.gov/report/ab13.html (last access: 25 May 2023), 1993. a

Taylor, K. E., Stouffer, R. J., and Meehl, G. A.: An Overview of CMIP5 and the Experiment Design, B. Am. Meteorol. Soc., 93, 485–498, https://doi.org/10.1175/BAMS-D-11-00094.1, 2012. a

Weigel, K., Bock, L., Gier, B. K., Lauer, A., Righi, M., Schlund, M., Adeniyi, K., Andela, B., Arnone, E., Berg, P., Caron, L.-P., Cionni, I., Corti, S., Drost, N., Hunter, A., Lledó, L., Mohr, C. W., Paçal, A., Pérez-Zanón, N., Predoi, V., Sandstad, M., Sillmann, J., Sterl, A., Vegas-Regidor, J., von Hardenberg, J., and Eyring, V.: Earth System Model Evaluation Tool (ESMValTool) v2.0 – diagnostics for extreme events, regional and impact evaluation, and analysis of Earth system models in CMIP, Geosci. Model Dev., 14, 3159–3184, https://doi.org/10.5194/gmd-14-3159-2021, 2021. a, b

Wilks, D. S.: Statistical methods in the atmospheric sciences, no. v. 91 in International geophysics series, Academic Press, Amsterdam, Boston, 2nd edn., ISBN 13 978-0-12-751966-1, ISBN 10 0-12-751966-1, 2006. a, b, c, d, e, f