the Creative Commons Attribution 4.0 License.

A circulation-based performance atlas of the CMIP5 and 6 models for regional climate studies in the Northern Hemisphere mid-to-high latitudes

Global climate models are a keystone of modern climate research. In most applications relevant for decision making, they are assumed to provide a plausible range of possible future climate states. However, these models were not originally developed to reproduce the regional-scale climate, which is where information is needed in practice. To overcome this dilemma, two general efforts have been made since their introduction in the late 1960s. First, the models themselves have been steadily improved in terms of physical and chemical processes, parametrization schemes, resolution and implemented climate system components, giving rise to the term “Earth system model”. Second, the global models' output has been refined at the regional scale using limited area models or statistical methods in what is known as dynamical or statistical downscaling. For both approaches, however, it is difficult to correct errors resulting from a wrong representation of the large-scale circulation in the global model. Dynamical downscaling also has a high computational demand and thus cannot be applied to all available global models in practice. Against this background, there is an ongoing debate in the downscaling community on whether to move away from the “model democracy” paradigm towards a careful selection strategy based on the global models' capacity to reproduce key aspects of the observed climate. The present study attempts to be useful for such a selection by providing a performance assessment of the historical global model experiments from CMIP5 and 6 based on recurring regional atmospheric circulation patterns, as defined by the Jenkinson–Collison approach. The latest model generation (CMIP6) is found to perform better on average, which can be partly explained by a moderately strong statistical relationship between performance and horizontal resolution in the atmosphere. A few models rank favourably over almost the entire Northern Hemisphere mid-to-high latitudes. Internal model variability has only a small influence on the model ranks. Reanalysis uncertainty is an issue in Greenland and the surrounding seas, the southwestern United States and the Gobi Desert but is otherwise generally negligible. In the course of the study, the prescribed and interactively simulated climate system components are identified for each applied coupled model configuration and a simple codification system is introduced to describe model complexity in this sense.


General circulation models (GCMs) are numerical models capable of simulating the temporal evolution of the global atmosphere or ocean. This is done by integrating the equations describing the conservation laws of physics along time as a function of varying forcing agents, starting with some initial conditions (AMS, 2020). If run in standalone mode, an atmospheric general circulation model (AGCM) is coupled with an indispensable land-surface model (LSM) only, whilst the remaining components of the extended climate system (also called “realms” in the nomenclature of the Earth System Grid Federation), including ocean, sea-ice and vegetation dynamics (depending on the model, also atmospheric chemistry, aerosols, ocean biogeochemistry and ice-sheet dynamics), are read in from static datasets instead of being simulated online (Gates, 1992; Eyring et al., 2016; Waliser et al., 2020). In these “atmosphere-only” experiments, the number of coupled realms is kept at a minimum in order to either isolate the sole atmospheric response to temporal variations in the aforementioned other components (Schubert et al., 2016; Brands, 2017; Deser et al., 2017) or to put all available computational resources into the proper simulation of the atmosphere, e.g. by augmenting the spatial and temporal resolution (Haarsma et al., 2016). This kind of experiment is traditionally hosted by the Atmospheric Model Intercomparison Project (AMIP) (Gates, 1992).

In a global climate model, interactions and feedbacks between the aforementioned realms are explicitly taken into account by coupling the AGCM and LSM with other component models. In the “ocean–atmosphere” configuration (AOGCM, for atmosphere–ocean general circulation model), the AGCM plus an LSM are coupled with an ocean general circulation model (OGCM) and a sea-ice model. Further model components representing the effects of vegetation, atmospheric chemistry, aerosols, ocean biogeochemistry and ice-sheet dynamics are then optionally included with the final aim to reach a representation of the climate system as comprehensive as possible with the current level of knowledge and available computational resources. However, due to the vast number of nonlinearly interacting processes, coupled climate models are prone to many error sources and model uncertainties, making it difficult to directly compare the simulated climate with the observed one (Watanabe et al., 2011; Yukimoto et al., 2011).

Since coupled model experiments are the best known approximation to the real climate system, they constitute the starting point of most climate change impact, attribution and mitigation studies. For use in impact studies, the coarse-resolution GCM output is usually downscaled with statistical or numerical models (Maraun et al., 2010; Jacob et al., 2014; Gutiérrez et al., 2013; San-Martín et al., 2016) or a combination thereof (Turco et al., 2011), in order to provide information on the regional to local scale where it can then be used for decision making.

While downscaling methods are able to imprint the effects of the local climate factors on the coarse-resolution GCM, the correction of errors inherited from a wrong representation of the large-scale atmospheric circulation is challenging (Prein et al., 2019). A physically consistent way to circumvent this “circulation error” is choosing a GCM (or group of GCMs) capable of realistically simulating the climatological statistics of the regional-scale circulation. This is why GCM selection has long been part of any careful downscaling approach applied in a climate change context (Hulme et al., 1993; Mearns et al., 2003; Brands et al., 2013; Fernandez-Granja et al., 2021). However, due to the availability of many GCMs from many different groups, this idea has been partly replaced by the “model democracy” paradigm discussed, e.g. in Knutti et al. (2017), where as many GCMs as possible are applied irrespective of their performance in present-day conditions (Jacob et al., 2014). In the recent past, the importance of careful model selection has been re-emphasized in the context of bias correction, which can be considered a special case of statistical downscaling (Maraun et al., 2017). It should also be remembered that GCMs by definition were not developed to realistically represent regional-scale climate features (Grotch and MacCracken, 1991; Palmer and Stevens, 2019) and that they have been pressed into this role during the last 3 decades due to the ever-increasing demand for climate information on this scale. Hence, finding a GCM capable of reproducing the regional atmospheric circulation in a systematic way, i.e. in many regions of the world, would be anything but expected.

In the present study, a total of 128 historical runs from 56 distinct GCMs (or GCM versions) of the fifth and sixth phases of the Coupled Model Intercomparison Project (CMIP5 and 6) are evaluated in terms of their capability to represent the present-day climatology of the regional atmospheric circulation as represented by the frequency of the 27 circulation types proposed by Lamb (1972). Based on the proposal in Jones et al. (2013) that this scheme can in principle be applied within a latitudinal band from 30° to 70° N, it is here used with a sliding coordinate system (Otero et al., 2017) running along the grid boxes of a 2.5° latitude–longitude grid covering the entire Northern Hemisphere mid-to-high latitudes.

In Sects. 2 and 3, the applied data, methods and software are described. In Sect. 4, the results of an overall model performance analysis including all 27 circulation types are presented. First, those regions are identified where reanalysis uncertainty might compromise the results of any GCM performance assessment based on a single reanalysis. Then, an atlas of overall model performance is provided for each participating model (Sect. 4.1 to 4.8). The present article file focuses on the evaluation with respect to ERA-Interim, complemented by pointing out deviations from the evaluation with respect to the Japanese 55-year Reanalysis (JRA-55) in the three relevant regions in the running text. The full atlas of the evaluation against JRA-55 is provided in the Supplement to this study (see “figs-refjra55” folder therein). In Sect. 4.9, the atlas is summarized, associations between the models' performance and their resolution in the atmosphere and ocean are drawn, and the role of internal model variability is assessed with 72 additional historical runs from a subgroup of 13 models. Finally, the results of a specific model performance evaluation for each circulation type are provided in Sect. 5, followed by a discussion of the main results and some concluding remarks in Sect. 6. For the sake of simplicity, the model performance atlas is grouped by the geographical location of the coupled models' coordinating institutions, having in mind that most model developments are actually international or even transcontinental collaborating efforts.

The study relies on 6-hourly instantaneous sea-level pressure (SLP) model data retrieved from the Earth System Grid Federation (ESGF) data portals (e.g. https://esgf-data.dkrz.de/projects/esgf-dkrz/, last access: 11 February 2022), whose digital object identifiers (DOIs) can be obtained following the references in Table 1. These model runs are evaluated against reanalysis data from ECMWF ERA-Interim (Dee et al., 2011) (https://apps.ecmwf.int/datasets/data/interim-full-daily/levtype=sfc/, last access: 11 February 2022) and the Japan Meteorological Agency (JMA) JRA-55 (Kobayashi et al., 2015) (https://rda.ucar.edu/datasets/ds628.0/, last access: 11 February 2022, https://doi.org/10.5065/D6HH6H41, Japan Meteorological Agency, 2013). In a first step, and in order to compare as many distinct models as possible, a single historical run was downloaded for each model for which the aforementioned data were available for the 1979–2005 period. If several historical integrations for a given model version were available, then the first member was chosen. In Sect. 4.9, it will be shown that the selection of alternative members from a given ensemble does not lead to substantial changes in the results. Out of the 31 models used in CMIP6, 26 were run with the “f1”, four with the “f2” and one with the “f3” forcing datasets (Eyring et al., 2016) (see Table 1). Not only version pairs from CMIP5 to CMIP6 are considered but also model versions having either no predecessor in CMIP5 or no successor in CMIP6. In the most favourable case, two versions of a given model are available for both CMIP5 and 6: a higher-resolution setup considering fewer realms (the AOGCM configuration), complemented by a more complex setup including more component models, usually run with a lower resolution than the AOGCM version.

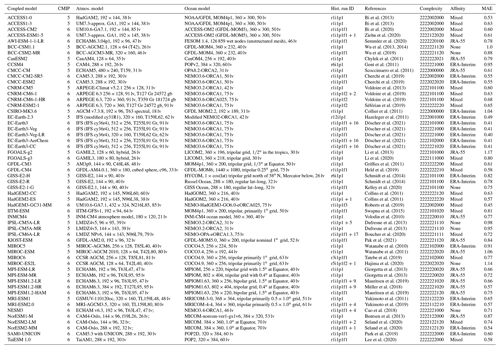

Table 1. Overview of the applied model experiments, including the abbreviations of the coupled models and their atmosphere and ocean components, their resolution expressed as number of longitudinal × latitudinal grid boxes (gb), number of vertical model levels (lv), run identifiers (complemented by Fig. 12 for more than one run), reference articles, model complexity codes as defined in Sect. 3.3, reanalysis affinity and median mean absolute error (MAE) with respect to ERA-Interim; Gr indicates Gaussian reduced grid; the ocean grids are described in Appendix A.

Reference articles listed in Table 1: Bi et al. (2013); Bi et al. (2013); Bi et al. (2020); Ziehn et al. (2020); Semmler et al. (2020); Wu et al. (2013, 2014); Wu et al. (2019); Chylek et al. (2011); Gent et al. (2011); Scoccimarro et al. (2011); Cherchi et al. (2019); Cherchi et al. (2019); Voldoire et al. (2013); Voldoire et al. (2019); Voldoire et al. (2019); Séférian et al. (2019); Collier et al. (2011); Hazeleger et al. (2011); Döscher et al. (2021); Döscher et al. (2021); Döscher et al. (2021); Döscher et al. (2021); Döscher et al. (2021); Li et al. (2013); Li et al. (2020); Griffies et al. (2011); Held et al. (2019); Schmidt et al. (2014); Schmidt et al. (2014); Kelley et al. (2020); Collins et al. (2011); Collins et al. (2011); Roberts et al. (2019); Swapna et al. (2015); Volodin et al. (2010); Dufresne et al. (2013); Dufresne et al. (2013); Boucher et al. (2020); Pak et al. (2021); Watanabe et al. (2010); Watanabe et al. (2011); Tatebe et al. (2019); Hajima et al. (2020); Giorgetta et al. (2013); Giorgetta et al. (2013); Mauritsen et al. (2019); Müller et al. (2018); Mauritsen et al. (2019); Yukimoto et al. (2011); Yukimoto et al. (2019); Cao et al. (2018); Bentsen et al. (2013); Seland et al. (2020); Seland et al. (2020); Park et al. (2019); Lee et al. (2020).

An overview of the 56 applied model versions is provided in Table 1. The table lists the component AGCMs and OGCMs, their horizontal and vertical resolution, the run specifications and the complexity codes described in Sect. 3.3.

For 13 selected models (ACCESS-ESM1, CNRM-CM6-1, HadGEM2-ES, EC-Earth3, IPSL-CM5A-LR, IPSL-CM6A-LR, MIROC-ES2L, MPI-ESM1-2-LR, MPI-ESM1-2-HR, MRI-ESM2, NorESM2-LM, NorESM2-MM, NESM3), a total of 72 additional historical integrations (between 1 and 17 additional runs per model) were retrieved from the respective ensembles in order to assess the effects of internal model variability. By definition of the experimental protocol followed in CMIP, ensemble spread relies on initialization from distinct starting dates of the corresponding pre-industrial control runs – or similar, shorter runs as, e.g. indicated in Roberts et al. (2019) – i.e. on “initial conditions uncertainty” (Stainforth et al., 2007).

3.1 Lamb weather types

The classification scheme used here is based on Hubert Horace Lamb's practical experience when grouping daily instantaneous SLP maps for the British Isles and interpreting their relationships with the regional weather (Lamb, 1972). This subjective classification scheme contained 27 classes and was brought to an automated and objective approach by Jenkinson and Collison (1977) in what is known as the “Lamb circulation type” or “Lamb weather type” (LWT) approach (Jones et al., 1993, 2013).

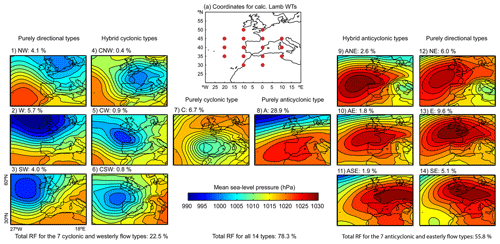

Figure 1. Illustrative example for the usage of the Lamb weather type approach over the central Iberian Peninsula. The coordinate system configured for this region and a subset of 14 types as well as their relative occurrence frequencies are shown. Note that in the present study, all 27 types originally defined in Lamb (1972) are being used. The figure is taken from Brands et al. (2014), courtesy of John Wiley and Sons, Inc.

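The spatial extension of the 16-point coordinate system defining this classification is 30° in longitude × 20° in latitude, with longitudinal and latitudinal increments of 10 and 5°, respectively (see Fig. 1 for an example over the Iberian Peninsula). The following numbers are placeholders for instantaneous SLP values (in hPa) at the corresponding location p (from west to east and north to south):

$$
\begin{array}{cccc}
       & p_1    & p_2    &        \\
p_3    & p_4    & p_5    & p_6    \\
p_7    & p_8    & p_9    & p_{10} \\
p_{11} & p_{12} & p_{13} & p_{14} \\
       & p_{15} & p_{16} &
\end{array}
$$

and the variables needed for classification are defined as follows:

$$
\begin{aligned}
W   &= 0.5\,(p_{12} + p_{13}) - 0.5\,(p_4 + p_5), \\
S   &= a\,\left[0.25\,(p_5 + 2p_9 + p_{13}) - 0.25\,(p_4 + 2p_8 + p_{12})\right], \\
F   &= \left(S^2 + W^2\right)^{1/2}, \\
Z_W &= b\,\left[0.5\,(p_{15} + p_{16}) - 0.5\,(p_8 + p_9)\right] - c\,\left[0.5\,(p_8 + p_9) - 0.5\,(p_1 + p_2)\right], \\
Z_S &= d\,\left[0.25\,(p_6 + 2p_{10} + p_{14}) - 0.25\,(p_5 + 2p_9 + p_{13}) - 0.25\,(p_4 + 2p_8 + p_{12}) + 0.25\,(p_3 + 2p_7 + p_{11})\right], \\
Z   &= Z_W + Z_S,
\end{aligned}
$$

where $a = 1/\cos(\phi)$, $b = \sin(\phi)/\sin(\phi - \delta\phi)$, $c = \sin(\phi)/\sin(\phi + \delta\phi)$ and $d = 1/(2\cos^2(\phi))$; ϕ is the central latitude and δϕ is the latitudinal distance. Here, W and S are the westerly and southerly flow components, F is the resultant flow, and Z is the total shear vorticity composed of its westerly (Z_W) and southerly (Z_S) parts. For the original British Isles setup (ϕ = 55° N, δϕ = 5°), these constants evaluate to approximately 1.74, 1.07, 0.95 and 1.52, in agreement with Jones et al. (1993).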

The 27 classes are then defined following Jones et al. (1993) and Jones et al. (2013):

- The direction of flow is $\tan^{-1}(W/S)$. Add 180° if W is positive. The appropriate direction is calculated on an eight-point compass allowing 45° per sector. Thus, as an example, a westerly flow would occur between 247.5 and 292.5°.

- If $|Z|$ is less than F, then the flow is essentially straight and corresponds to one of the eight purely directional types defined by Lamb: northeast (NE), east (E), SE, S, SW, W, NW, N.

- If $|Z|$ is greater than 2F, then the pattern is either strongly cyclonic (for Z>0) or anticyclonic (for Z<0), which corresponds to Lamb's pure cyclonic (PC) or anticyclonic type (PA), respectively.

- If $|Z|$ lies between F and 2F, then the flow is partly directional and either cyclonic or anticyclonic, corresponding to Lamb's hybrid types. There are eight directional–anticyclonic types (anticyclonic northeast (ANE), anticyclonic east (AE), ASE, AS, ASW, AW, ANW, AN) and another eight directional–cyclonic types (cyclonic northeast (CNE), cyclonic east (CE), CSE, CS, CSW, CW, CNW, CN).

- If F is less than 6 and $|Z|$ is less than 6, there is light indeterminate flow corresponding to Lamb's unclassified type U. The choice of 6 is dependent on the grid spacing and would need tuning if used with a finer grid resolution.
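The classification rules listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions and not the code used in the study: the function name `classify_lwt` is hypothetical, the flow and vorticity terms follow the Jenkinson–Collison equations as given in Jones et al. (1993), the direction is computed with `atan2` as a quadrant-safe stand-in for the "add 180°" rule, the unclassified type is tested first, and the boundary cases |Z| = F and |Z| = 2F are assigned to the hybrid types.

```python
import math

# Eight-point compass, 45 degrees per sector, starting at north.
DIRECTIONS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]


def classify_lwt(slp, lat_deg, dlat_deg=5.0, threshold=6.0):
    """Return the Lamb weather type for 16 SLP values (hPa), ordered p1..p16.

    lat_deg is the central latitude, dlat_deg the latitudinal increment and
    threshold the limit for the unclassified type (6 in the text above).
    """
    p = [None] + list(slp)  # shift to 1-based indexing to mirror p1..p16
    phi = math.radians(lat_deg)
    dphi = math.radians(dlat_deg)

    # Latitude-dependent constants (assumed form, see lead-in).
    a = 1.0 / math.cos(phi)
    b = math.sin(phi) / math.sin(phi - dphi)
    c = math.sin(phi) / math.sin(phi + dphi)
    d = 1.0 / (2.0 * math.cos(phi) ** 2)

    w = 0.5 * (p[12] + p[13]) - 0.5 * (p[4] + p[5])            # westerly flow
    s = a * (0.25 * (p[5] + 2 * p[9] + p[13])
             - 0.25 * (p[4] + 2 * p[8] + p[12]))                # southerly flow
    f = math.hypot(s, w)                                        # resultant flow
    zw = (b * (0.5 * (p[15] + p[16]) - 0.5 * (p[8] + p[9]))
          - c * (0.5 * (p[8] + p[9]) - 0.5 * (p[1] + p[2])))
    zs = d * (0.25 * (p[6] + 2 * p[10] + p[14])
              - 0.25 * (p[5] + 2 * p[9] + p[13])
              - 0.25 * (p[4] + 2 * p[8] + p[12])
              + 0.25 * (p[3] + 2 * p[7] + p[11]))
    z = zw + zs                                                 # total vorticity

    if f < threshold and abs(z) < threshold:
        return "U"                       # light indeterminate flow
    if abs(z) > 2.0 * f:
        return "PC" if z > 0 else "PA"   # pure cyclonic / anticyclonic

    # Direction the flow comes from, in degrees (270 = westerly).
    direction = (math.degrees(math.atan2(w, s)) + 180.0) % 360.0
    sector = DIRECTIONS[int(((direction + 22.5) % 360.0) // 45.0)]

    if abs(z) < f:
        return sector                    # purely directional type
    return ("C" if z > 0 else "A") + sector  # hybrid type
```

For instance, a field in which pressure decreases uniformly towards the north yields the westerly type, whereas a field with a deep low at the two central points yields the pure cyclonic type.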

An illustrative example for the results obtained from this scheme is provided in Fig. 1 for the case of the central Iberian Peninsula. Shown is the coordinate system and the composite SLP maps for a subset of 14 LWTs, as well as the respective relative occurrence frequencies, taken from Brands et al. (2014) (courtesy of John Wiley and Sons, Inc.).

Particularly since the 1990s, this classification scheme has been used in many other regions of the Northern Hemisphere (NH) mid-to-high latitudes (Trigo and DaCamara, 2000; Spellman, 2016; Wang et al., 2017; Soares et al., 2019). Since the LWTs are closely related to the local-scale variability of virtually all meteorological and many other environmental variables (Lorenzo et al., 2008; Wilby and Quinn, 2013), they constitute an overarching concept to verify GCM performance in present climate conditions and have been used so in a number of studies (Hulme et al., 1993; Osborn et al., 1999; Otero et al., 2017).

Here, for each model run and the ERA-Interim or JRA-55 reanalysis, the 6-hourly instantaneous SLP data from 1 January 1979 to 31 December 2005 are bilinearly interpolated to a regular latitude–longitude grid with a resolution of 2.5°. Then, the Lamb classification scheme is applied for each time instance and grid box, using a sliding coordinate system whose centre is displaced from one grid box to the next in a loop over all latitudes and longitudes of the aforementioned grid within a band from 35 to 70° N. Note that the geographical domain is cut at 35° N (and not at 30° N) because the various available reanalyses are known to produce comparatively large differences in their estimates for the “true” atmosphere when approaching the tropics (Brands et al., 2012, 2013). Also, since some models do not apply the Gregorian calendar but work with 365 or even 360 days per year, relative instead of absolute LWT frequencies are considered. Further, since HadGEM2-CC and HadGEM2-ES lack SLP data for December 2005, this month is likewise dropped from ERA-Interim or JRA-55 when compared with these models.
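The sliding coordinate system can be illustrated as follows. This is a minimal sketch rather than the study's implementation: the function name `lwt_points` is hypothetical, and the layout assumes the usual 16-point arrangement with rows at +10, +5, 0, -5 and -10° relative to the central latitude and columns at ±5 and ±15° relative to the central longitude, which matches the 30° × 20° extent given in Sect. 3.1. On a 2.5° grid, all offsets fall on grid points.

```python
def lwt_points(lat_c, lon_c):
    """Return the (lat, lon) pairs of p1..p16 for a centre at (lat_c, lon_c).

    Longitudes are wrapped to the range [0, 360).
    """
    inner = (-5.0, 5.0)                 # two-point rows (top and bottom)
    full = (-15.0, -5.0, 5.0, 15.0)     # four-point rows
    rows = [
        (10.0, inner),    # p1, p2
        (5.0, full),      # p3 .. p6
        (0.0, full),      # p7 .. p10
        (-5.0, full),     # p11 .. p14
        (-10.0, inner),   # p15, p16
    ]
    return [
        (lat_c + dlat, (lon_c + dlon) % 360.0)
        for dlat, dlons in rows
        for dlon in dlons
    ]


# Example: centre at 55 N, 5 W (355 E), i.e. the original British Isles setup.
points = lwt_points(55.0, 355.0)
```

Moving the centre from one grid box to the next and reading the SLP values at the 16 returned locations provides the input for the classification at that grid box.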

As mentioned above, the LWT approach has been successfully applied for many climatic regimes of the NH, including the extremely continental climate of central Asia (Wang et al., 2017), which confirms the proposal made in Jones et al. (2013) that the method in principle can be applied in a latitudinal band from 30 to 70° N. Here, a criterion is introduced to explicitly test this assumption. Namely, it is established that the LWT method should not be used at a given grid box if the relative frequency for any of the 27 types is lower than 0.1 % (i.e. 1.5 annual occurrences on average). Note that, already in its original formulation for the British Isles, some LWTs were found to occur with relative frequencies as small as 0.47 % (Perry and Mayes, 1998). This is why the 0.1 % threshold seems reasonable in the present study. If at a given grid box this criterion is not met in the LWT catalogue derived from ERA-Interim or alternatively JRA-55, then this grid box does not participate in the evaluation.
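The use of relative frequencies and the 0.1 % usage criterion can be sketched as below. The helper names `relative_frequencies` and `usage_criterion_met` are hypothetical and only illustrate the logic described in the text; the input is assumed to be a sequence of LWT labels for one grid box.

```python
from collections import Counter

_DIRS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]
# 8 directional + 2 pure + 8 anticyclonic hybrid + 8 cyclonic hybrid + U = 27
LWT_NAMES = (_DIRS + ["PA", "PC"]
             + ["A" + d for d in _DIRS]
             + ["C" + d for d in _DIRS]
             + ["U"])


def relative_frequencies(labels):
    """Relative frequency (in %) of each of the 27 types in a label series."""
    counts = Counter(labels)
    total = len(labels)
    return {name: 100.0 * counts[name] / total for name in LWT_NAMES}


def usage_criterion_met(freqs, min_percent=0.1):
    """True if no type is rarer than min_percent at this grid box."""
    return all(freqs[name] >= min_percent for name in LWT_NAMES)
```

Because the frequencies are normalized by the length of the series, catalogues derived from 360-day, 365-day and Gregorian calendars remain comparable.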

3.2 Applied GCM performance measures

To measure GCM performance, the mean absolute error (MAE) of the n=27 relative LWT frequencies obtained from a given model (m) with respect to those obtained from the reanalysis (o) is calculated at a given grid box:

$$
\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|m_i - o_i\right|
$$

The MAE is then used to rank the 56 distinct models at this grid box. The lower the MAE, the lower the rank and the better the model. After repeating this method for each grid box of the NH, both the MAE values and ranks are plotted for each individual model on a polar stereographic projection.
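The error measure and the ranking at a single grid box can be sketched as follows. The function names and the toy numbers are hypothetical (three types and two fictitious models instead of 27 types and 56 models), and ties are not treated specially in this sketch.

```python
def mean_absolute_error(model_freq, ref_freq):
    """MAE between modelled and reference relative LWT frequencies."""
    if len(model_freq) != len(ref_freq):
        raise ValueError("frequency vectors must have the same length")
    n = len(ref_freq)
    return sum(abs(m - o) for m, o in zip(model_freq, ref_freq)) / n


def rank_models(mae_by_model):
    """Map each model name to its rank (1 = lowest MAE = best model)."""
    ordered = sorted(mae_by_model, key=mae_by_model.get)
    return {name: position + 1 for position, name in enumerate(ordered)}


# Toy example with relative frequencies in percent (hypothetical values).
reference = [50.0, 30.0, 20.0]
models = {
    "model_a": [48.0, 31.0, 21.0],
    "model_b": [40.0, 40.0, 20.0],
}
errors = {name: mean_absolute_error(freq, reference)
          for name, freq in models.items()}
ranks = rank_models(errors)
```

In this toy case, `model_a` deviates by 2, 1 and 1 percentage points and therefore ranks ahead of `model_b`, which deviates by 10, 10 and 0.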

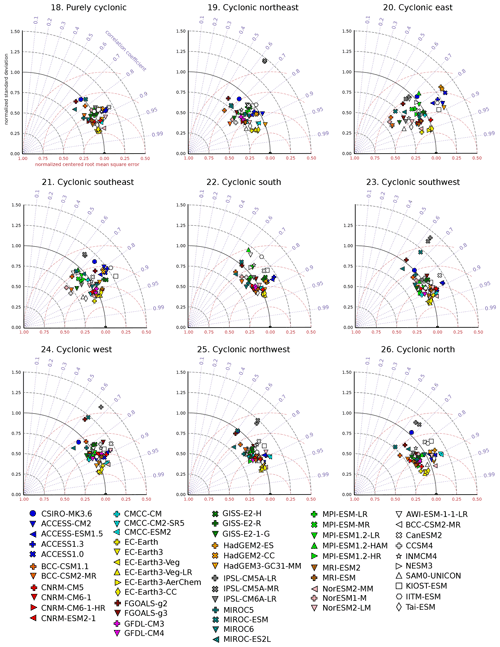

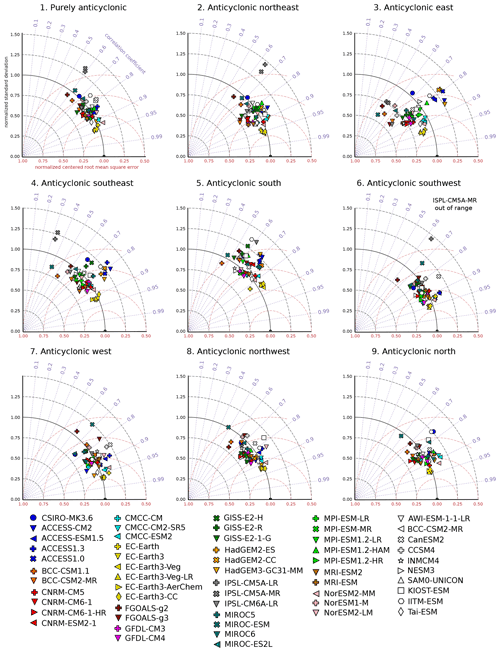

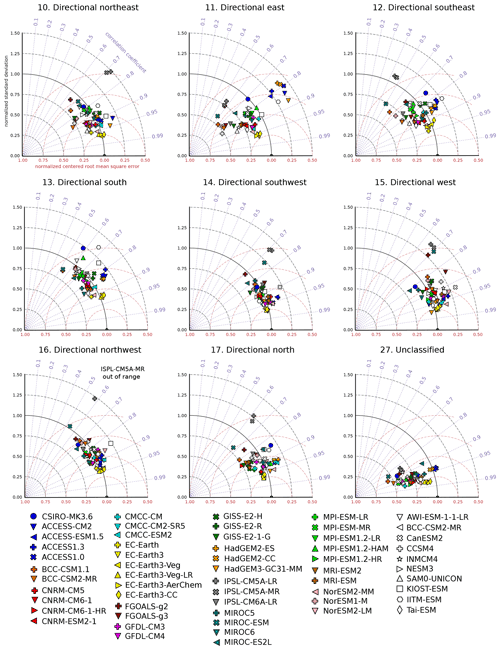

In addition to the MAE measuring overall performance, the specific model performance for each LWT is also assessed. This is done because, by definition of the MAE, errors occurring in the more frequent LWTs are penalized more than those occurring in the rare LWTs. Hence, a low MAE might mask errors in the least frequent LWTs. For an LWT-specific evaluation, the simulated frequency map for a given LWT and model is compared with the corresponding map from the reanalysis by means of the Taylor diagram (Taylor, 2001). This diagram compares the spatial correspondence of the simulated and observed (or “quasi-observed” since reanalysis data are used) frequency patterns by means of three complementary statistics. These are the Pearson correlation coefficient (r), the standard deviation ratio (ratio = σm/σo), with σm and σo being the standard deviation of modelled and observed frequency patterns, and the normalized centred root-mean-square error (CRMSE):

$$
\mathrm{CRMSE} = \frac{1}{\sigma_o}\sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(cm_i - co_i\right)^2}
$$

with n=2016 grid boxes covering the NH mid-to-high latitudes and cm and co the modelled and observed frequency patterns after subtracting their own mean value (i.e. both the minuend and subtrahend are anomaly fields; “c” refers to centred). Normalization by σo enables comparison with other studies using the same method.
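The three Taylor statistics can be computed from two flattened frequency maps as sketched below. This is an illustration under assumptions, not the routine used for the figures: the function name `taylor_statistics` is hypothetical, standard deviations are taken with a divisor of n, and the CRMSE is normalized by the reference standard deviation, following the usual Taylor (2001) convention.

```python
import math


def taylor_statistics(model, reference):
    """Return (r, ratio, crmse) for two equally long sequences of values."""
    if len(model) != len(reference):
        raise ValueError("patterns must have the same number of grid boxes")
    n = len(reference)
    mean_m = sum(model) / n
    mean_o = sum(reference) / n
    cm = [x - mean_m for x in model]        # centred model pattern
    co = [x - mean_o for x in reference]    # centred reference pattern

    sigma_m = math.sqrt(sum(x * x for x in cm) / n)
    sigma_o = math.sqrt(sum(x * x for x in co) / n)

    r = sum(x * y for x, y in zip(cm, co)) / (n * sigma_m * sigma_o)
    ratio = sigma_m / sigma_o
    crmse = math.sqrt(sum((x - y) ** 2 for x, y in zip(cm, co)) / n) / sigma_o
    return r, ratio, crmse
```

With these definitions the statistics satisfy crmse² = 1 + ratio² − 2 · ratio · r, which is the geometric relation that allows all three to be displayed in a single diagram.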

3.3 Model complexity in terms of considered climate system components

In addition to the model performance assessment, a straightforward approach is followed to describe the complexity of the coupled model configurations in terms of considered climate system components. The following 10 components are taken into account: (1) atmosphere, (2) land surface, (3) ocean, (4) sea ice, (5) vegetation properties, (6) terrestrial carbon-cycle processes, (7) aerosols, (8) atmospheric chemistry, (9) ocean biogeochemistry and (10) ice-sheet dynamics. An integer is assigned to each of these components depending on whether it is not taken into account at all (0), represented by an interactive model feeding back on at least one other component (2) or anything in between (1) including prescription from external files, semi-interactive approaches or components simulated online but without any feedback on other components.

As an example, MRI-ESM's complexity code is 2222122220, indicating interactive atmosphere, land-surface, ocean and sea-ice models, prescribed vegetation properties, interactive terrestrial carbon-cycle, aerosol, atmospheric chemistry and ocean biogeochemistry models, and no representation of ice-sheet dynamics. For each of the 56 participating coupled model configurations, the reference article(s) and source attributes inside the NetCDF files from ESGF were assessed in order to obtain an initial “best-guess” complexity code. This code was then sent by e-mail to the respective modelling group for confirmation or correction (see the Acknowledgements). Out of the 19 groups contacted within this survey, 18 confirmed or corrected the code and 1 did not answer. Among the 18 groups providing feedback, a single scientist from one group was not sure whether the proposed method is suitable to measure model complexity but did not reject it either. In light of the many participating scientists (up to three individuals per group were contacted to enhance the probability of a response), this is considered favourable feedback. The final codes are listed in Table 1, column 7. The sum of the integers is here taken as an estimator for the complexity of the coupled model configuration and is referred to as “complexity score” in the forthcoming text. In the light of various available definitions for the term “Earth system model” (Collins et al., 2011; Yukimoto et al., 2011; Jones, 2020), this is a flexible approach used as a starting point for further specifications in the future.
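The codification can be made concrete with a short sketch. The names `COMPONENTS`, `decode_complexity` and `complexity_score` are hypothetical and do not refer to the study's metadata function; the component order follows the enumeration given above.

```python
COMPONENTS = (
    "atmosphere",
    "land surface",
    "ocean",
    "sea ice",
    "vegetation properties",
    "terrestrial carbon-cycle processes",
    "aerosols",
    "atmospheric chemistry",
    "ocean biogeochemistry",
    "ice-sheet dynamics",
)


def decode_complexity(code):
    """Map a 10-digit complexity code to {component: 0, 1 or 2}."""
    if len(code) != len(COMPONENTS) or any(ch not in "012" for ch in code):
        raise ValueError("expected 10 digits, each being 0, 1 or 2")
    return {name: int(ch) for name, ch in zip(COMPONENTS, code)}


def complexity_score(code):
    """Sum of the ten integers, i.e. the complexity score of the text."""
    return sum(decode_complexity(code).values())


# Code quoted in the text for MRI-ESM.
mri_code = "2222122220"
mri_score = complexity_score(mri_code)
```

For the code quoted above, nine digits are non-zero (eight interactive components and one prescribed component), which gives a score of 17 out of a possible 20.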

Note that the here-defined complexity score only measures the number and treatment of the climate system components considered by a given coupled model configuration. It does not measure the comprehensiveness of the individual component models, nor the coupling frequency or treatment of the forcing datasets, among others. The score should thus be interpreted as an overarching and a priori indicator of climate system representativity and by no means can compete with in-depth studies treating model comprehensiveness for single climate system components (Séférian et al., 2020). For further details on the 56 coupled model configurations considered here, the interested reader is referred to the reference articles listed in Table 1, complemented by further citations in Sect. 4.

Along with other metadata including the names and versions of all component models and couplers, resolution details of the AGCMs and OGCMs and others, the complexity codes have been stored in the Python function get_historical_metadata.py contained in https://doi.org/10.5281/zenodo.4555367 (Brands, 2022).

3.4 Applied Python packages

The code for the present study relies on the Python v2.7.13 packages xarray v0.9.1 written by Hoyer and Hamman (2017) (https://doi.org/10.5281/zenodo.264282, Hoyer et al., 2017), NumPy v1.11.3 written by Harris et al. (2020) (https://github.com/numpy/numpy, last access: 11 February 2022), Pandas v0.19.2 written by McKinney (2010) (https://doi.org/10.5281/zenodo.3509134, Reback et al., 2022) and SciPy v0.18.11 written by Virtanen et al. (2020) (https://doi.org/10.5281/zenodo.154391, Virtanen et al., 2016), which are used here for I/O tasks and statistical analyses. The Matplotlib v2.0.0 package written by Hunter (2007) (https://doi.org/10.5281/zenodo.248351, Droettboom et al., 2017) and the Basemap v1.0.7 toolkit (https://github.com/matplotlib/basemap, last access: 11 February 2022) are applied for plotting, and the functions written by Gourgue (2020) (https://doi.org/10.5281/zenodo.3715535, Gourgue, 2020) are used for generating Taylor diagrams.

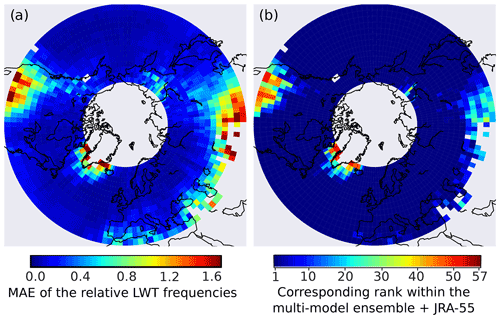

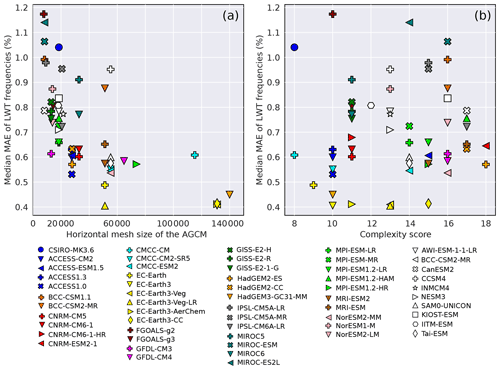

In Fig. 2, the MAE of JRA-55 with respect to ERA-Interim is mapped (panel a), complemented by the corresponding rank within the multi-model ensemble plus JRA-55 (panel b). In the ideal case, the MAE for JRA-55 is lower than for any of the 56 CMIP models, which means that the alternative reanalysis ranks first and that a change in the reference reanalysis does not influence the model ranking. This result is indeed obtained for a large fraction of the NH. However, in the Gobi Desert, Greenland and the surrounding seas, and particularly in the southwestern United States, substantial differences are found between the two reanalyses. Since different reanalyses from roughly the same generation are in principle equally representative of the “truth” (Sterl, 2004), the models are here evaluated twice in order to obtain a robust picture of their performance. In the present article file, the evaluation results with respect to ERA-Interim are mapped and deviations from the evaluation against JRA-55 in the three relevant regions are pointed out in the text. In the remaining regions, reanalysis uncertainty plays a minor role. Nevertheless, for the sake of completeness, the full atlas of the JRA-55-based evaluation was added to the Supplement to this study. For a quick overview of the results, Table 1 indicates whether a given model agrees more closely with ERA-Interim or JRA-55 in the three sensitive regions. In the following, this is referred to as “reanalysis affinity”.

Figure 2. Mean absolute error of the relative Lamb weather type frequencies from JRA-55 with respect to ERA-Interim (a), as well as the respective rank within the multi-model ensemble plus JRA-55 (b). The lower the rank, the lower the MAE and the closer the agreement between JRA-55 and ERA-Interim.

Figure 2 also shows that the LWT usage criterion defined in Sect. 3.1 is met almost everywhere in the domain, except in the high-mountain areas of central Asia (grey areas within the performance maps indicate that the criterion is not met). This region is governed by the monsoon rather than the turnover of dynamic low- and high-pressure systems the LWT approach was developed for. It is thus justified to use the approach over such a large domain.

Grouped by their geographical origin, Sects. 4.1 to 4.8 describe the composition of the 56 participating coupled models in terms of their atmosphere, land-surface, ocean and sea-ice models in order to make clear whether there are shared components between nominally different models that might explain common error structures. The names of all other component models are documented in the Python function get_historical_metadata.py contained in https://doi.org/10.5281/zenodo.4555367 (Brands, 2022), which provides a complete list of all participating component models. Then, the regional error and ranking details are provided. In Sect. 4.9, these results are summarized in a single boxplot and put into relation with the resolution setup of the atmosphere and ocean component models. The role of internal model variability is also assessed there.

The first result common to all models is the spatial structure of the absolute error expressed by the MAE. Namely, the models tend to perform better over ocean areas than over land and perform poorest over high-mountain areas, particularly in central Asia. Further regional details are documented in the following sections.

4.1 Model contributions from the United Kingdom and Australia

The atmosphere, land-surface and ocean dynamics in the Hadley Centre Global Environment Model version 2 (HadGEM2) are represented by the HadGAM2, MOSES2 and HadGOM2 models, respectively. Both the CC and ES model versions comprise interactive vegetation properties, terrestrial carbon-cycle processes, land carbon and ocean carbon-cycle processes and aerosols. The ES version also includes an interactive atmospheric chemistry which, in turn, is prescribed in the CC configuration, making it slightly less complex (Collins et al., 2011; The HadGEM2 Development Team, 2011). This centre's model contributions to CMIP6 are following the concept of seamless prediction (Palmer et al., 2008), in which lessons learned from short-term numerical weather forecasting are exploited for the improvement of longer-term predictions/projections up to climatic timescales, using a “unified” or “joint” model for all purposes (Roberts et al., 2019). For atmosphere and land-surface processes, these are the Unified Model Global Atmosphere 7 (UM-GA7) AGCM and the Joint UK Land Environment Simulator (JULES) (Walters et al., 2019). However, the specific CMIP6 model version considered here (HadGEM3-GC31-MM) is a very high-resolution AOGCM configuration comprising only one further interactive component (aerosols). In comparison with HadGEM2-ES and CC, HadGEM3-GC31-MM is therefore less complex.

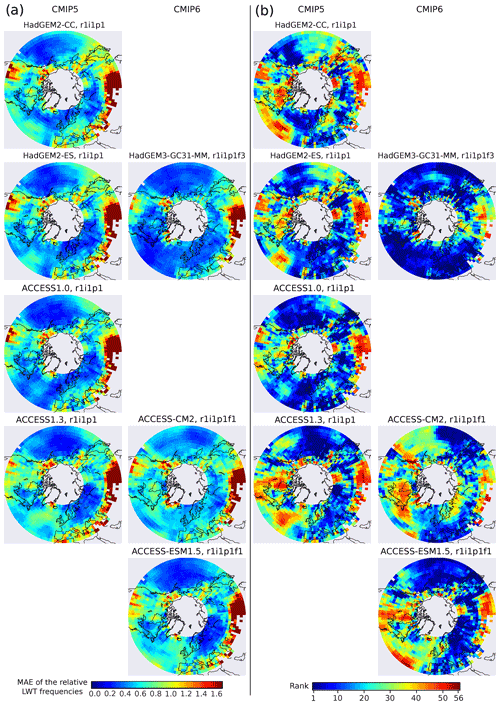

With nearly identical error and ranking patterns, consistent with their almost identical configuration, the two model versions used in CMIP5 (HadGEM2-CC and ES) already yield good to very good performance which, for the European sector, is in line with Perez et al. (2014) and Stryhal and Huth (2018). Only a close look reveals slightly lower errors for the ES version, particularly in a region extending from western France to the Ural Mountains (see Fig. 3). Both CMIP5 versions are outperformed by HadGEM3-GC31-MM. While HadGEM2-CC and ES rank very well in Europe and the central North Pacific only, HadGEM3-GC31-MM does so in virtually all regions of the NH mid-to-high latitudes except in central Asia. It is undoubtedly one of the best models considered here.

Figure 3. Mean absolute error of the relative Lamb weather type frequencies from the historical CMIP experiments with respect to ERA-Interim (column a), as well as the respective rank within the 56 distinct model versions outlined in Table 1 (column b). The lower the rank, the lower the MAE and the better the model. Results are for the Met Office Hadley Centre and ACCESS model families. Model pairs from CMIP5 and 6 are plotted next to each other. Results are for the 1979–2005 period.
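The two quantities mapped in Fig. 3 can be illustrated with a minimal Python sketch for a single grid box. It assumes that the MAE is the plain average, over all weather types, of the absolute difference between the modelled and reanalysis relative frequencies, and that rank 1 is assigned to the lowest MAE. The function names, the three-type example and the model labels are hypothetical and only serve the illustration; they are not taken from the study's code.

```python
def relative_frequencies(type_counts):
    """Convert occurrence counts per weather type into relative frequencies."""
    total = sum(type_counts)
    return [count / total for count in type_counts]


def mean_absolute_error(model_freq, ref_freq):
    """Average absolute difference between two frequency vectors."""
    if len(model_freq) != len(ref_freq):
        raise ValueError("frequency vectors must have the same length")
    diffs = [abs(m - r) for m, r in zip(model_freq, ref_freq)]
    return sum(diffs) / len(diffs)


def rank_models(mae_by_model):
    """Rank models by MAE: rank 1 is the lowest error.

    Ties are broken by insertion order (an arbitrary choice for this sketch).
    """
    ordered = sorted(mae_by_model, key=mae_by_model.get)
    return {name: position + 1 for position, name in enumerate(ordered)}


# Hypothetical counts for three weather types at one grid box.
reference = relative_frequencies([50, 30, 20])
counts = {
    "model_a": [40, 40, 20],
    "model_b": [50, 30, 20],
    "model_c": [20, 30, 50],
}
mae = {
    name: mean_absolute_error(relative_frequencies(c), reference)
    for name, c in counts.items()
}
ranks = rank_models(mae)
```

Applying the same two steps at every grid box would yield one MAE map and one rank map per model, which is the layout of columns (a) and (b) in the figure.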

While CSIRO-MK was an independently developed GCM of the Australian research community (Collier et al., 2011), the Community Climate and Earth System Simulator (ACCESS) depends to a large degree on the aforementioned models from the Met Office Hadley Centre. ACCESS1.0, the starting point for the new Australian coupled model configurations, makes use of the same atmosphere and land-surface components as HadGEM2 (see above) but is run in a less complex configuration. It is considered the “control” configuration of all further developments made by the Australian modelling group (Bi et al., 2013). ACCESS1.3 is the first step in this direction. Instead of HadGAM2, it uses a slightly modified version of the Met Office Global Atmosphere 1.0 (GA1) AGCM, coupled with the CABLE1.8 land-surface model developed by CSIRO. ACCESS-CM2 is the AOGCM version used in CMIP6, relying on the UM10.6-GA7.1 AGCM (also used in HadGEM3-GC31-MM) and the CABLE2.5 land-surface model (Bi et al., 2020). ACCESS-CM2, however, was run with a lower horizontal resolution in the atmosphere than HadGEM3-GC31-MM. Whereas the three aforementioned ACCESS versions only have interactive aerosols on top of the four AOGCM components, ACCESS-ESM1.5 additionally includes interactive land and ocean carbon-cycle processes and prescribed vegetation properties. It uses slightly older AGCM and LSM versions (UM7.3-GA1 and CABLE2.4) than ACCESS-CM2 and makes use of the ocean biogeochemistry model WOMBAT (Ziehn et al., 2020). All ACCESS models use the same ocean and sea-ice models (GFDL-MOM and CICE), which differ from those used in the HadGEM model family. The OASIS coupler (Valcke, 2006) is applied by both model families.

Within the ACCESS model family, version 1.0 performs best (see Fig. 3). The corresponding error and ranking patterns are virtually identical to those of HadGEM2-ES and HadGEM2-CC, which is due to the use of the same AGCM (HadGAM2) in these three models. The three more independent versions of ACCESS (1.3, CM2 and ESM1.5) roughly share the same error pattern, which differs from ACCESS1.0 in some regions. While they perform worse in the North Atlantic and western North Pacific, they do better in the eastern North Pacific off the coast of Japan and, in the case of ACCESS-CM2, also in the high-mountain areas of central Asia and over the Mediterranean Sea. In the latter two regions, the performance of ACCESS-CM2 is comparable to HadGEM3-GC31-MM. For the sake of completeness, the performance maps for CSIRO-MK3.6 have been included in the Supplement.

The two HadGEM2 versions and ACCESS1.3 compare better with JRA-55 in the southwestern US but tend towards ERA-Interim in the seas around Greenland and in the Gobi Desert. HadGEM3-GC31-MM, ACCESS1.0, ACCESS-CM2 and ACCESS-ESM1.5 have similar reanalysis affinities, except for tending towards JRA-55 in the seas around Greenland and for showing virtually no sensitivity in the Gobi Desert in the case of ACCESS-ESM1.5 (compare Fig. 3 with the “figs-refjra55/maps/rank” folder in the Supplement).
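One possible way to express such a reanalysis affinity numerically is sketched below in Python. It assumes that the affinity at a grid box is read off the difference between the rank obtained when evaluating against ERA-Interim and the rank obtained when evaluating against JRA-55, with the model leaning towards the reanalysis that yields the better (lower) rank. The function name and the `tolerance` parameter are hypothetical; the text itself relies on a visual comparison of the rank maps.

```python
def reanalysis_affinity(rank_vs_era, rank_vs_jra, tolerance=0):
    """Classify which reference reanalysis a model agrees with more closely.

    rank_vs_era: rank when evaluated against ERA-Interim (1 = best)
    rank_vs_jra: rank when evaluated against JRA-55 (1 = best)
    tolerance:   hypothetical rank difference below which the model is
                 treated as insensitive to the choice of reanalysis
    """
    difference = rank_vs_era - rank_vs_jra
    if difference > tolerance:
        # Lower (better) rank against JRA-55.
        return "JRA-55"
    if difference < -tolerance:
        # Lower (better) rank against ERA-Interim.
        return "ERA-Interim"
    return "insensitive"
```

For example, a model ranking 30th against ERA-Interim and 10th against JRA-55 at a given grid box would be classified as leaning towards JRA-55 there.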

4.2 Model contributions from North America

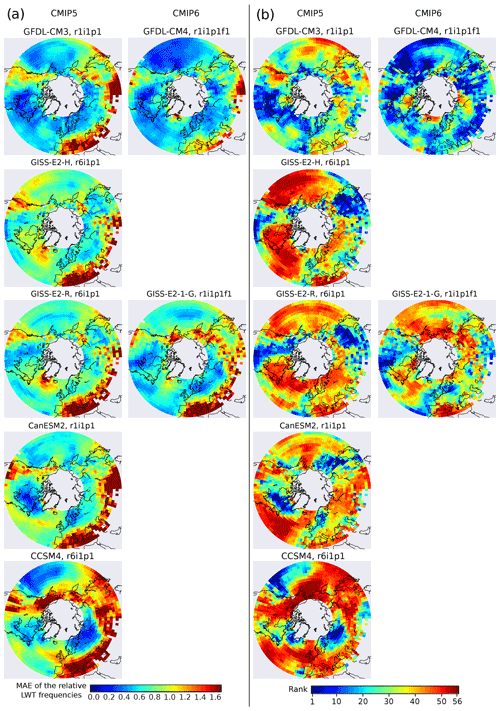

The Geophysical Fluid Dynamics Laboratory Climate Model 3 and 4 (GFDL-CM3 and CM4) are composed of in-house atmosphere, land-surface, ocean and sea-ice models and comprise interactive vegetation properties, aerosols and atmospheric chemistry (Griffies et al., 2011; Held et al., 2019). GFDL-CM4 also includes a simple ocean carbon-cycle representation which, however, does not feed back on other climate system components. From CM3 to CM4, the resolution was considerably increased, except for a reduction in the AGCM's vertical levels, and this pays off in terms of model performance (see Fig. 4). While GFDL-CM3 only ranks well in an area ranging from the Great Plains to the central North Pacific, GFDL-CM4 yields balanced results over the entire NH mid-to-high latitudes and is one of the best models considered here. Notably, GFDL-CM4 also performs well over central Asia and in an area ranging from the Black Sea to the Middle East, which is where most of the other models perform less favourably. Note also that GFDL's Modular Ocean Model (MOM) is the standard OGCM in all ACCESS models and is also used in the BCC-CSM model versions (see Table 1 for details).

Figure 4. As Fig. 3 but for the GFDL, GISS, CCCma and NCAR models.

All Goddard Institute for Space Studies model versions considered here are AOGCMs with prescribed vegetation properties, aerosols and atmospheric chemistry. The two CMIP5 versions are identical except for the ocean component: HYCOM was used in GISS-E2-H and Russell Ocean in GISS-E2-R (Schmidt et al., 2014). Russell Ocean was then developed into GISS Ocean v1 for use in GISS-E2.1-G (Kelley et al., 2020), the CMIP6 model version assessed here (note that the 6-hourly SLP data for the more complex model versions contributing to CMIP6 were not available from the ESGF data portals). All these versions comprise a relatively modest resolution for the atmosphere and ocean, and no refinement was undertaken from CMIP5 to 6. However, many parametrization schemes were improved. GISS-E2.1-G generally ranks better than its predecessors, except in eastern Siberia and China, where very good ranks are obtained by the two CMIP5 versions (see Fig. 4). The small differences between the results for GISS-E2-H and -R might stem from internal model variability (see also Sect. 4.9) and from the use of two distinct OGCMs. Unfortunately, all GISS-E2 model versions considered here are plagued by pronounced performance differences from one region to another, meaning that they are less balanced than, e.g. GFDL-CM4.

The National Center for Atmospheric Research (NCAR) Community Climate System Model 4 (CCSM4) is composed of the Community Atmosphere and Land Models (CAM and CLM), the Parallel Ocean Program (POP) and the Los Alamos Sea Ice Model (CICE), combined with the CPL7 coupler (Gent et al., 2011; Craig et al., 2012). The model version considered here was used in CMIP5 and includes interactive vegetation properties and land carbon-cycle processes, whereas aerosols are prescribed. During the course of the last decade, CCSM4 has been further developed into CESM1 and 2 (Hurrell et al., 2013; Danabasoglu et al., 2020) which, due to data availability issues, can unfortunately not be assessed here (the respective data for CESM2 are available but only for 15 out of the 27 considered years). However, CMCC-CM2 and NorESM2 are almost entirely made up of components from CESM1 and 2, respectively, and should thus also be indicative of the performance of the latter (see Sect. 4.8).

The Canadian Earth System Model version 2 (CanESM2) is composed of the CanAM4 AGCM, the CLASS2.7 land-surface model, the CanOM4 OGCM and the CanSIM1 sea-ice model (Chylek et al., 2011). It contributed to CMIP5 and comprises interactive vegetation properties, land and ocean carbon-cycle processes and aerosols, whilst the ice-sheet area is prescribed.

Results indicate a comparatively poor performance for both CCSM4 and CanESM2. Exceptions are found along the North American west coast and the Labrador Sea, where both models perform well; in the central to eastern subtropical Pacific and in northwestern Russia plus Finland, where CCSM4 performs well; and in Quebec, Scandinavia and eastern Siberia, where CanESM2 ranks well (see Fig. 4). As for the GISS models, both CCSM4 and CanESM2 are also plagued by large regional performance differences.

Regarding the models' reanalysis affinity, GFDL-CM3 tends towards ERA-Interim in the seas around Greenland and towards JRA-55 in the Gobi Desert, while being almost insensitive to reanalysis choice in the southwestern US (compare Fig. 4 with the “figs-refjra55/maps/rank” folder in the Supplement). GFDL-CM4 has similar reanalysis affinities but largely improves (by up to 20 ranks) in the southwestern US when evaluated against JRA-55. Results for GISS-E2-H and GISS-E2-R are slightly closer to ERA-Interim in the southwestern US and otherwise virtually insensitive to reanalysis choice. GISS-E2.1-G is virtually insensitive in all three regions. CanESM2 ranks consistently better if compared with JRA-55, with a striking improvement of up to 30 ranks in the southwestern US, and CCSM4 slightly tends towards ERA-Interim in all three regions.

4.3 Model contributions from France

The CMIP5 contributions from the Centre National de Recherches Météorologiques (CNRM) and Institut Pierre-Simon Laplace (IPSL) use the same OGCM and coupler, i.e. the Nucleus for European Modelling of the Ocean (NEMO) model (Madec et al., 1998; Madec, 2008) and OASIS, but differ in their remaining components. CNRM-CM5 comprises the ARPEGE AGCM, ISBA land-surface model and GELATO sea-ice model (Voldoire et al., 2013), whereas IPSL makes use of LMDZ, ORCHIDEE and LIM, respectively (Dufresne et al., 2013). For CNRM-CM6-1, these components were updated (Voldoire et al., 2019). All CNRM model versions considered here are AOGCMs with prescribed aerosols and atmospheric chemistry, except CNRM-ESM2-1 (Séférian et al., 2019), which additionally comprises interactive component models for vegetation properties, terrestrial carbon-cycle processes, aerosols, stratospheric chemistry and ocean biogeochemistry.

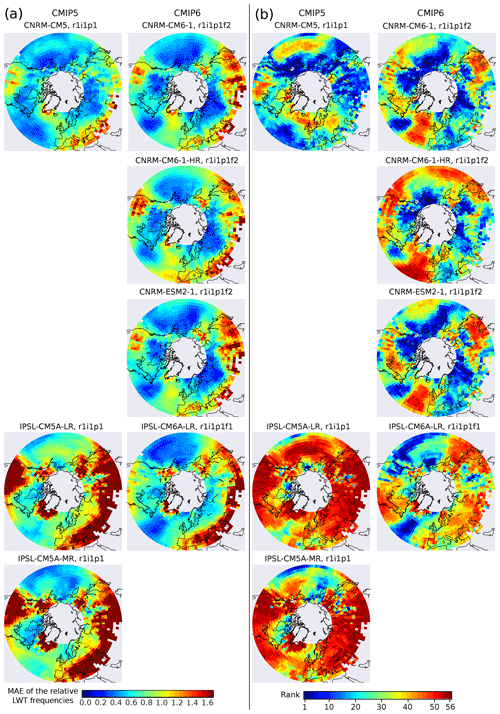

Within the CNRM model family, CNRM-CM5 is found to perform very well except in the central North Pacific, the southern US and in a subpolar belt extending from Baffin Island in the west to western Russia in the east (see Fig. 5). This includes good performance over the Rocky Mountains and central Asia. From CNRM-CM5 to CNRM-CM6-1, performance gains are obtained in the central North Pacific, the southern US, Scandinavia and western Russia which, however, are compensated by performance losses in the entire eastern North Atlantic and in an area covering Manchuria, the Korean Peninsula and Japan. A similar picture is obtained for CNRM-ESM2-1, whereas a performance loss is observed for CNRM-CM6-1-HR. This is surprising since, in addition to improved parametrization schemes, the model resolution in the atmosphere and ocean was considerably increased in the latter model version.

All IPSL-CM model versions participating in CMIP5 and 6 comprise interactive vegetation properties and terrestrial carbon-cycle processes, as well as prescribed aerosols and atmospheric chemistry. Ocean biogeochemistry processes are simulated online but do not feed back on other components of the climate system. A simple representation of ice-sheet dynamics was included in IPSL-CM6A-LR (Boucher et al., 2020; Hourdin et al., 2020; Lurton et al., 2020) but is absent in IPSL-CM5A-LR and MR (Dufresne et al., 2013). The two model versions used in CMIP5 have been run with a modest horizontal resolution in the atmosphere (LMDZ) and ocean (NEMO). This changed for the better in IPSL-CM6A-LR, where a more competitive resolution was applied and all component models were improved. The result is a considerable performance increase from CMIP5 to CMIP6. Whereas both IPSL-CM5A-LR and IPSL-CM5A-MR perform poorly, IPSL-CM6A-LR does much better virtually everywhere in the NH mid-to-high latitudes, a finding that is insensitive to the effects of internal model variability (see Sect. 4.9).

Figure 5. As Fig. 3 but for the CNRM and IPSL models.

The quite different results for the CNRM and IPSL models indicate that the common ocean component (NEMO) only marginally affects the simulated atmospheric circulation as defined here. All CNRM models, and also IPSL-CM6A-LR, tend towards ERA-Interim in the southwestern US and towards JRA-55 in the seas around Greenland and the Gobi Desert. IPSL-CM5A-LR and MR are virtually insensitive to reanalysis choice (compare Fig. 5 with the “figs-refjra55/maps/rank” folder in the Supplement).

4.4 Model contributions from China, Taiwan and India

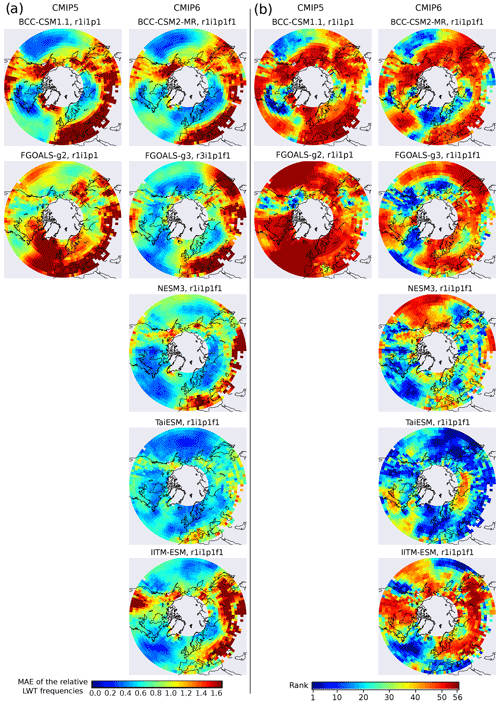

The Beijing Climate Center Climate System Model version 1.1 (BCC-CSM1.1) comprises the BCC-AGCM2.1 AGCM, originating from CAM3 and developed independently thereafter (Wu et al., 2008), the BCC-AVIM1.0 land-surface model developed by the Chinese Academy of Sciences (Jinjun, 1995), GFDL's MOM4-L40 ocean model and Sea Ice Simulator (SIS). For BCC-CSM2-MR, the coupled model version used in CMIP6 (Wu et al., 2019), the latest updates of the in-house models are used in conjunction with the CMIP5 versions of MOM and SIS (v4 and 2, respectively). Both BCC-CSM1.1 and BCC-CSM2-MR comprise interactive vegetation properties and terrestrial and oceanic carbon-cycle processes, while aerosols and atmospheric chemistry are prescribed. The MAE and ranking patterns of the two models are quite similar to those obtained from NCAR's CCSM4 (compare Figs. 6 and 4), which is likely due to the common origin of their AGCMs, meaning that the two BCC-CSM versions are likewise found to perform comparatively poorly in most regions of the NH mid-to-high latitudes. The similarity between both model families is astonishing since they only share the origin of their atmospheric component but rely on different land-surface, ocean and sea-ice models. This in turn means that the latter three components do not noticeably affect the simulated atmospheric circulation as defined here, which is in line with the large differences found for the French models in spite of using the same ocean model (see Sect. 4.3).

Figure 6. As Fig. 3 but for the BCC and FGOALS models, as well as for NESM3, TaiESM and IITM-ESM.

The Flexible Global Ocean-Atmosphere-Land System Model, Grid-point version 2 (FGOALS-g2) comprises an independently developed AGCM and OGCM (GAMIL2 and LICOM2), as well as CLM3 and CICE4-LASG for the land-surface and sea-ice dynamics, respectively (Li et al., 2013), all components being coupled with CPL6. Vegetation properties and aerosols are prescribed in this model configuration. For FGOALS-g3, the model version contributing to CMIP6, the AGCM was updated to GAMIL3, including convective momentum transport, stratocumulus clouds, anthropogenic aerosol effects and an improved boundary layer scheme as new features (Li et al., 2020). The OGCM and coupler were also updated (to LICOM3 and CPL7) and a modified version of CLM4.5 (called CAS-LSM) is used as a land-surface model, whereas the sea-ice model is practically identical to that used in the g2 version. In the g3 version, vegetation properties, terrestrial carbon-cycle processes and aerosols are prescribed. While FGOALS-g2 is one of the worst-performing models considered here, FGOALS-g3 performs considerably better, particularly over the northwestern and central North Atlantic Ocean, western North America and the North Pacific Ocean (see Fig. 6).

The Nanjing University of Information Science and Technology Earth System Model version 3 (NESM3) is a new CMIP participant and is entirely built upon component models from other institutions (Cao et al., 2018). Namely, the AGCM, land-surface model, coupling software and atmospheric resolution are adopted from MPI-ESM1.2-LR (see Sect. 4.6), whereas NEMO3.4 and CICE4.1 are taken from IPSL and NCAR, respectively (Cao et al., 2018). Vegetation properties and terrestrial carbon-cycle processes are interactive, whereas aerosols are prescribed. Due to the use of the same AGCM, the error and ranking patterns for NESM3 are similar to those obtained for MPI-ESM1.2-LR (compare Fig. 6 with Fig. 8). Exceptions are found over the central and western North Pacific, where NESM3 performs worse than MPI-ESM1.2-LR, and also over the eastern North Pacific, where NESM3 performs better. The similarity to MPI-ESM1.2-LR again indicates that the simulated LWT frequencies are determined by the AGCM rather than by other component models.

The Taiwan Earth System Model version 1 (TaiESM1) is run by the Research Center for Environmental Changes, Academia Sinica in Taipei. It is essentially identical to NCAR's Community Earth System Model version 1.2.2, including new physical and chemical parametrization schemes in its atmospheric component CAM5 (Lee et al., 2020). TaiESM1 comprises interactive vegetation properties, terrestrial carbon-cycle processes and aerosols. The model's performance is generally very good, except over northern Russia, northeastern North America and the adjacent northwestern Atlantic Ocean, and the error and ranking patterns are roughly similar to those of SAM0-UNICON (see Fig. 6), another CESM1 derivative, with TaiESM1 performing much better over Europe.

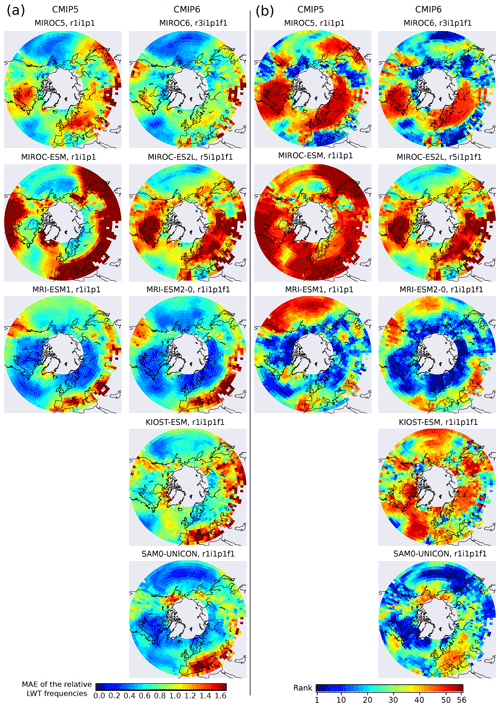

Figure 7. As Fig. 3 but for the MIROC and MRI models, as well as KIOST-ESM and SAM0-UNICON.

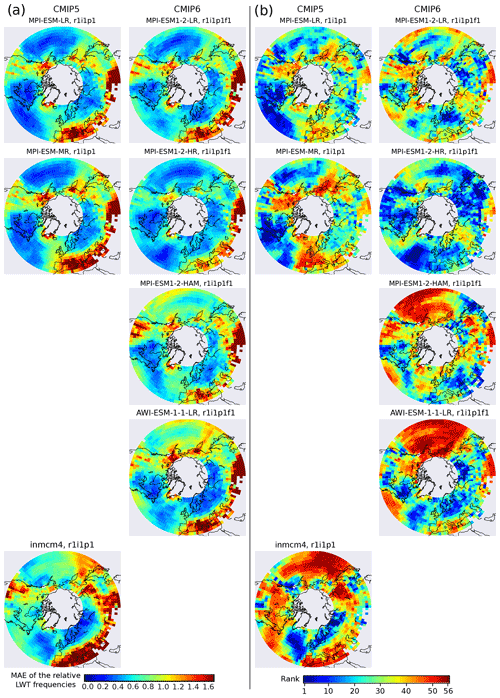

Figure 8. As Fig. 3 but for the MPI, AWI and INM models.

The Indian Institute of Tropical Meteorology Earth System Model (IITM-ESM) includes the National Centers for Environmental Prediction Global Forecast System (NCEP GFS) AGCM, the MOM4p1 OGCM, Noah LSM for land-surface processes and SIS sea-ice dynamics (Swapna et al., 2015). Vegetation properties and aerosols are prescribed and ocean biogeochemistry processes are interactive. The results for IITM-ESM reveal large regional performance differences. The model ranks well over the central North Atlantic Ocean, Mediterranean Sea, the US west coast and subtropical western North Pacific but performs poorly in most of the remaining regions.

The results for BCC-CSM1.1, BCC-CSM2-MR and NESM3 are virtually insensitive to reanalysis uncertainty. To the southwest of Lake Baikal, both FGOALS-g2 and g3 are in closer agreement with JRA-55 than with ERA-Interim (compare Fig. 6 with the “figs-refjra55/maps/rank” folder in the Supplement). Over southwestern North America, however, FGOALS-g3 yields higher ranks if compared with ERA-Interim. TaiESM1 compares more closely with ERA-Interim over the southwestern US and the subtropical North Atlantic Ocean. The effects of reanalysis uncertainty on the results for IITM-ESM are generally small, except over the southern US, where JRA-55 yields better results, and in the seas surrounding Greenland, where the model agrees more closely with ERA-Interim.

4.5 Model contributions from Japan and South Korea

The Model for Interdisciplinary Research on Climate (MIROC) has been developed by the Japanese Center for Climate System Research (CCSR), National Institute for Environmental Studies (NIES) and the Japan Agency for Marine-Earth Science and Technology (JAMSTEC). It comprises the Frontier Research Center for Global Change (FRCGC) AGCM and CCSR's Ocean Component Model (COCO), as well as its own land-surface (MATSIRO) and sea-ice models. MIROC5 and 6 comprise interactive aerosols and prescribed vegetation properties (Watanabe et al., 2010; Tatebe et al., 2019). MIROC-ESM and MIROC-ES2L are more complex configurations additionally including interactive terrestrial and ocean carbon-cycle processes, as well as interactive vegetation properties in the case of MIROC-ESM (Watanabe et al., 2011; Hajima et al., 2020). Results indicate a systematic performance increase from MIROC5 to MIROC6 in the presence of large performance differences from one region to another (see Fig. 7). Both models perform very well over the Mediterranean, northwestern North America and East Asia but perform poorly in northeastern North America and northern Eurasia. MIROC6 outperforms MIROC5 in the entire North Pacific basin including Japan, the Korean Peninsula and western North America and is also better in the central North Atlantic. The performance of the two more complex model versions is considerably lower, both ranking unfavourably if compared to the remaining GCM versions considered here.

The CMIP5 version of the Japanese Meteorological Research Institute Earth System Model (MRI-ESM1) comprises interactive component models for terrestrial carbon-cycle processes, aerosols, atmospheric photochemistry and ocean biogeochemistry, whereas vegetation properties are prescribed (Yukimoto et al., 2011). In the CMIP6 version (MRI-ESM2), terrestrial and ocean carbon-cycle processes are no longer interactive but prescribed from external files (Yukimoto et al., 2019). It is noteworthy that each model component and also the coupler have been originally developed by MRI, and the coupling applied in these models is particularly comprehensive (Yukimoto et al., 2011). The comparatively high model resolution applied in MRI-ESM1 was further refined in MRI-ESM2 by adding more vertical layers, particularly in the atmosphere (see Table 1). To the north of approximately 50° N, both model versions perform very well, except for Greenland and the surrounding seas in MRI-ESM1. Model performance decreases to the south of this line, particularly in the central to western Pacific basin including western North America, the subtropical North Atlantic to the west of the Strait of Gibraltar and the regions around Greenland and the Caspian Sea. It is in these “weak” regions where the largest performance gains are obtained from MRI-ESM1 to MRI-ESM2.

The Korea Institute of Ocean Science and Technology Earth System Model (KIOST-ESM) contains modified versions of GFDL-AM2.0 and CLM4 for atmosphere and land-surface dynamics, as well as GFDL-MOM5 and GFDL-SIS for ocean and sea-ice dynamics (Pak et al., 2021). The model has interactive representations for the vegetation properties and terrestrial carbon-cycle processes and works with prescribed aerosols. Its error and ranking patterns are similar to those obtained from GFDL-CM3 (using GFDL-AM3), with the weakest performance found in the same regions (the western US, Mediterranean Basin, Manchuria and central North Pacific). However, KIOST-ESM consistently performs worse than GFDL-CM3.

The Seoul National University Atmosphere Model version 0 with a Unified Convection Scheme (SAM0-UNICON) contributes for the first time to CMIP6 (Park et al., 2019). Its component models are identical to CESM1 in its AOGCM configuration plus interactive aerosols (Hurrell et al., 2013), including unique parametrization schemes for convection, stratiform clouds, aerosols, radiation, surface fluxes and planetary boundary layer dynamics (Park et al., 2019). Vegetation properties and terrestrial carbon-cycle processes are resolved interactively as well. Although the model components from CESM are used in SAM0-UNICON, CMCC-CM2-SR5 and NorESM2, a distinct error pattern is obtained for SAM0-UNICON (compare Fig. 7 with Fig. 10). This might be due to the use of different ocean models (see Table 1) or to the effects of the particular parametrization schemes mentioned above. Although the error magnitude of SAM0-UNICON is similar to that of CMCC-CM2-SR5, SAM0-UNICON exhibits weaker regional performance differences, making it the more balanced model of the two. In most regions of the NH mid-to-high latitudes, SAM0-UNICON yields better results than NorESM2-LM but is outperformed by NorESM2-MM.

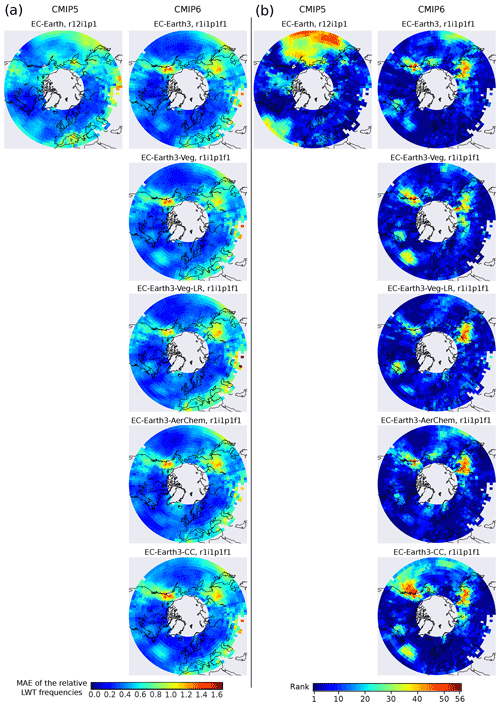

Figure 9. As Fig. 3 but for the EC-Earth models.

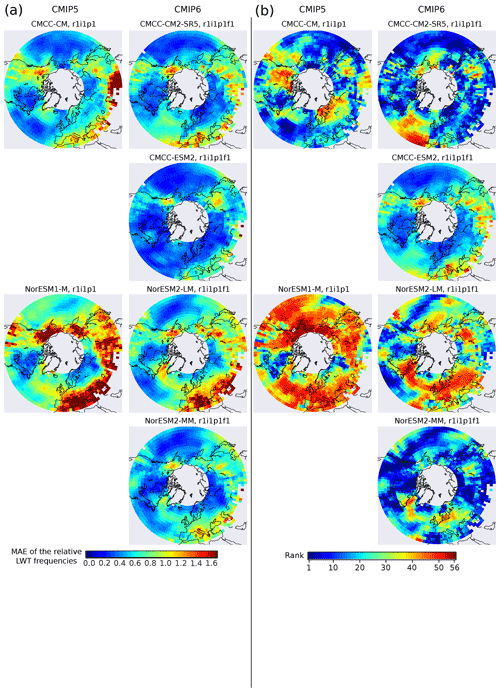

Figure 10. As Fig. 3 but for the CMCC and NorESM models.

The MRI models generally agree more closely with ERA-Interim than with JRA-55, which is surprising since JRA-55 was also developed at JMA (compare Fig. 7 with the “figs-refjra55/maps/rank” folder in the Supplement). For the MIROC family, a heterogeneous picture is obtained. While MIROC5 and MIROC-ESM clearly tend towards ERA-Interim and JRA-55, respectively, MIROC6 is closer to JRA-55 in the southwestern US and closer to ERA-Interim in the Gobi Desert and around Greenland. The results for MIROC-ES2L are virtually insensitive to the applied reference reanalysis. In the three main regions of reanalysis uncertainty, SAM0-UNICON is in closer agreement with ERA-Interim than with JRA-55. For KIOST-ESM, it is the other way around. Over the southwestern US and Gobi Desert, this model more closely resembles JRA-55.

4.6 Model contributions from Germany and Russia

The Max Planck Institute Earth System Model (MPI-ESM) is hosted by the Max Planck Institute for Meteorology (MPI-M) in Germany, with all component models developed independently. It comprises the ECHAM, JSBACH, and MPIOM models representing atmosphere, land-surface and terrestrial biosphere processes as well as ocean and sea-ice dynamics (Giorgetta et al., 2013; Jungclaus et al., 2013; Mauritsen et al., 2019). All model configurations interactively resolve vegetation properties as well as terrestrial and ocean carbon-cycle processes, the latter represented by the HAMOCC model, and are coupled with the OASIS software. In MPI-ESM1.2-LR and -HR, aerosols are additionally prescribed. The “workhorse” used for generating large ensembles and long control runs is the “LR” version applied in CMIP5 and 6 (MPI-ESM-LR and MPI-ESM1.2-LR, respectively). In this configuration, ECHAM (versions 6 and 6.3) is run with a horizontal resolution of 1.9° (T63) and 47 layers in the vertical, and MPIOM with a 1.5° resolution near the Equator and 40 levels in the vertical. In MPI-ESM-MR, the number of vertical layers in the atmosphere is doubled and the horizontal resolution in the ocean refined to 0.4° near the Equator. In MPI-ESM1.2, several atmospheric parametrization schemes have been improved and/or corrected, including radiation, aerosols, clouds, convection and turbulence, and the land-surface and ocean biogeochemistry processes have been made more comprehensive. Since the carbon cycle has not been run to equilibrium with MPI-ESM1.2-HR, this model version is considered unstable by its development team (Mauritsen et al., 2019). For MPI-ESM1.2-HAM, an aerosol and sulfur chemistry module, developed by a consortium led by the Leibniz Institute for Tropospheric Research, is coupled with ECHAM6.3 in a configuration that otherwise is identical to MPI-ESM1.2-LR (Tegen et al., 2019). Similarly, the Alfred Wegener Institute's AWI-ESM-1.1-LR makes use of its own ocean and sea-ice model FESOM but otherwise is identical to MPI-ESM1.2-LR (Semmler et al., 2020).

Results show that the vertical resolution increase in the atmosphere undertaken from MPI-ESM-LR to MR (the CMIP5 versions) sharpens the regional performance differences rather than contributing to an improvement (see Fig. 8). When switching from MPI-ESM-LR to MPI-ESM1.2-LR, i.e. from CMIP5 to 6 with constant resolution, the performance increases over Europe but decreases in most of the remaining regions. Notably, MPI-ESM-LR's good to very good performance in a zonal belt ranging from the eastern subtropical North Pacific to the eastern subtropical Atlantic is lost in MPI-ESM1.2-LR. This picture worsens for MPI-ESM1.2-HAM and AWI-ESM-1.1-LR, which, even more so than MPI-ESM-MR, are characterized by large regional performance differences and particularly unfavourable results over almost the entire North Pacific basin. However, systematic performance gains are obtained by MPI-ESM1.2-HR, indicating that a horizontal rather than vertical resolution increase in the atmosphere leads to better performance in this model family (recall that the sole vertical resolution increase from MPI-ESM-LR to MPI-ESM-MR worsens the results). In the “HR” configuration, MPI-ESM1.2 is one of the best-performing models considered here.

The atmosphere, land-surface, ocean and sea-ice components of the Institute of Numerical Mathematics, Russian Academy of Sciences model INM-CM4 were all developed independently (Volodin et al., 2010). This model comprises interactive vegetation properties and terrestrial carbon-cycle processes, as well as a simple ocean carbon model, including atmosphere–ocean fluxes, total dissolved carbon advection by oceanic currents and a prescribed biological pump (Evgeny Volodin, personal communication). INM-CM4 contributed to CMIP5, and an updated version (INM-CM4-8) is currently participating in CMIP6, but the 6-hourly SLP data are not available for this version, so it had to be excluded here. The resolution setup of INM-CM4 is comparable to other CMIP5 models, except for the very few vertical layers used in the atmosphere (see Table 1). As shown in Fig. 8, INM-CM4 performs well in the eastern North Atlantic, northern Europe and the Gulf of Alaska, moderately well over northern China and the Korean Peninsula and poorly over the remaining regions of the NH. It is thus marked by large performance differences from one region to another.

In the three main regions sensitive to reanalysis uncertainty, all model versions assessed in this section consistently tend towards JRA-55 (compare Fig. 8 with the “figs-refjra55/maps/rank” folder in the Supplement).

4.7 The joint European contribution EC-Earth

The EC-Earth consortium is a collaborative effort made by research institutions from several European countries. Following the idea of seamless prediction (Palmer et al., 2008), the atmospheric component used in the EC-Earth model is based on ECMWF's Integrated Forecasting System (IFS), complemented by the HTESSEL land-surface model and a new parametrization scheme for convection. NEMO and LIM constitute the ocean and sea-ice models; OASIS is the coupling software (Hazeleger et al., 2010, 2011). Starting from this basic AOGCM configuration, additional climate system components can be optionally added to augment the complexity of the model. Regarding the historical experiments for CMIP5 and 6, EC-Earth 2.3 (or simply EC-Earth) and 3 are classical AOGCM configurations, using prescribed vegetation properties and aerosols (in the case of EC-Earth3). EC-Earth3-Veg comprises interactive vegetation properties and terrestrial carbon-cycle processes, whereas aerosols are prescribed. EC-Earth3-AerChem incorporates the interactive aerosol model TM5 whilst vegetation properties are prescribed. EC-Earth3-CC contains interactive vegetation properties, terrestrial and ocean carbon-cycle processes. Aerosols are prescribed in this “carbon-cycle” model version. Already the model version used in CMIP5 (EC-Earth2.3) comprises a fine resolution in the atmosphere and ocean, except for the relatively few vertical layers in the ocean. This configuration was adopted and more ocean layers were added for what is named “low resolution” in CMIP6 (EC-Earth3-LR, EC-Earth3-Veg-LR). For the remaining configurations used in CMIP6 (EC-Earth3, EC-Earth3-Veg, EC-Earth3-AerChem, EC-Earth3-CC), the atmospheric resolution is further refined in the horizontal and vertical (Döscher et al., 2021).

Results reveal an already very good performance for EC-Earth2.3 in all regions except the North Pacific and subtropical central Atlantic (see Fig. 9), which is in line with Perez et al. (2014) and Otero et al. (2017). EC-Earth3 performs even better and does so irrespective of the applied model complexity or resolution. All the versions of this model rank very well in almost every region of the NH mid-to-high latitudes, including the central Asian high-mountain areas.

When evaluated against JRA-55 instead of ERA-Interim, the ranks for the EC-Earth model family consistently worsen by up to 20 ranks in the southwestern US and around the southern tip of Greenland but remain roughly constant in the Gobi Desert (compare Fig. 9 with the “figs-refjra55/maps/rank” folder in the Supplement). This worsening brings the EC-Earth family into closer agreement with the HadGEM models. Consequently, when evaluated against JRA-55, HadGEM3-GC31-MM draws level with EC-Earth3 as what is here found to be the “best model”.

4.8 Model contributions from Italy and Norway

The Centro Euro-Mediterraneo per i Cambiamenti Climatici (CMCC) models are mainly built upon component models from MPI, NCAR and IPSL. For CMCC-CM, ECHAM5 is used in conjunction with SILVA, a land-vegetation model developed in Italy (Fogli et al., 2009), and OPA8.2 (note that later OPA versions were integrated into the NEMO framework) plus LIM for ocean and sea-ice dynamics, respectively. The very high horizontal resolution in atmosphere (T159) is achieved at the expense of a low horizontal resolution in the ocean and comparatively few vertical layers in both realms, as well as by the fact that no further climate system components are considered by this model version (Scoccimarro et al., 2011). For the core model contributing to CMIP6 (CMCC-CM2), all of the aforementioned components except the OGCM were substituted by those available from CESM1 (Hurrell et al., 2013). For the model version considered here (CMCC-CM2-SR5), CAM5.3 is run in conjunction with CLM4.5. For ocean and sea-ice dynamics, NEMO3.6 (i.e. OPA's successor) and CICE are applied (Cherchi et al., 2019). The coupler changed from OASISv3 to CPLv7 (Valcke, 2006; Craig et al., 2012) and the interactive aerosol model MAM3 was included. CMCC-ESM2 is the most complex version in this model family, including the aforementioned aerosol model, activated terrestrial biogeochemistry in CLM4.5 and the use of BFM5.1 to simulate ocean biogeochemistry processes. Due to the completely distinct model setups, the error and ranking patterns substantially change from CMIP5 to 6 for this model family (see Fig. 10). While CMCC-CM performs relatively weak in northern Canada, Scandinavia and northwestern Russia, CMCC-CM2-SR5 does so in the North Atlantic, particularly to the west of the Strait of Gibraltar. In the remaining regions, very good ranks are obtained by both models. 
Notably, CMCC-CM2-SR5 is one of the few models performing well in the central Asian high-mountain ranges and also in the Rocky Mountains (except in Alaska). In most of the remaining regions, it is likewise one of the best models considered here. Note that this model, due to identical model components for all realms except the ocean, is a good estimator for the performance of CESM1, which unfortunately cannot be assessed here due to data availability issues. The error and ranking patterns of CMCC-ESM2 are similar to those of CMCC-CM2-SR5, yielding fewer regional differences and a much better performance over the central eastern North Atlantic Ocean. Hence, CMCC-ESM2 is not only the most sophisticated but also the best-performing model version in this family.

The Norwegian Earth System Model (NorESM) shares substantial parts of its source code with the NCAR model family (particularly with CCSM and CESM2). NorESM1-M, the standard model version used in CMIP5 (Bentsen et al., 2013), comprises the CAM4-Oslo AGCM – derived from CAM4 and complemented with the Kirkevag et al. (2008) aerosol module – CLM4 for land-surface processes, CICE4 for sea-ice dynamics and an ocean model based on the Miami Isopycnic Coordinate Ocean Model (MICOM) originally developed by NASA/GISS (Bleck and Smith, 1990). CPL7 is used as coupler. NorESM1-M contains interactive terrestrial carbon-cycle processes and aerosols, whereas vegetation properties are prescribed. From NorESM1 to NorESM2, the model components from CCSM were updated to CESM2.1 (Danabasoglu et al., 2020) whilst keeping the Norwegian aerosol module and modifying a number of parametrization schemes in CAM6-Nor with respect to CAM6 (Seland et al., 2020). Through the coupling of an updated MICOM version with the ocean biogeochemistry model HAMOCC, combined with the use of CLM5, the terrestrial and ocean carbon-cycle processes are interactively resolved in NorESM2. Vegetation properties and atmospheric chemistry are prescribed, and the coupler has been updated from CPL7 to CIME, which is also used in CESM2. In the present study, the basic configuration NorESM2-LM is evaluated together with NorESM2-MM, the latter using a much finer horizontal resolution in the atmosphere (see Table 1). The corresponding maps in Fig. 10 reveal low model performance for NorESM1-M with an error magnitude and spatial pattern similar to CCSM4. When switching to NorESM2-LM, i.e. to updated and extended component models and an almost identical resolution in the atmosphere and ocean, notable performance gains are obtained in most regions of the NH, except in a zonal band extending from Newfoundland to the Ural Mountains which, further to the east, re-emerges over the Baikal region. 
In the higher-resolution version (NorESM2-MM), these errors are further reduced to a large degree, with the overall effect of obtaining one of the best models considered here.

In the three regions of pronounced reanalysis uncertainties, CMCC-CM is in closer agreement with JRA-55, whereas CMCC-CM2-SR5 and CMCC-ESM2 are more similar to ERA-Interim, reflecting the profound change in the model components from CMIP5 to 6 (compare Fig. 10 with the “figs-refjra55/maps/rank” folder in the Supplement). For the NorESM family, different reanalysis affinities are obtained for the three regions. While NorESM1 is closer to JRA-55 in all of them, NorESM2-LM is closer to ERA-Interim in the southwestern US but closer to JRA-55 in the Gobi Desert. NorESM2-MM is generally less sensitive to reanalysis uncertainty, with some affinity to ERA-Interim in the southwestern United States.

4.9 Summary boxplot, role of model resolution, model complexity and internal variability

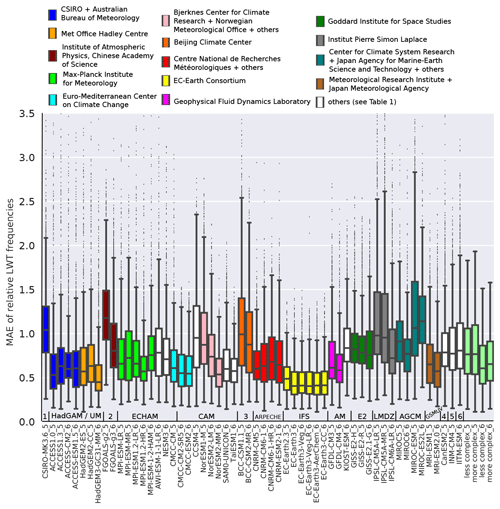

For each model version listed in Table 1, the spatial distribution of the pointwise MAE values can also be represented with a boxplot instead of a map, which allows for an overarching performance comparison visible at a glance (see Fig. 11 for the evaluation against ERA-Interim). Here, the standard configuration of the boxplot is applied. For a given sample of MAE values corresponding to a specific model, the box refers to the interquartile range (IQR) of that sample and the horizontal bar to the median. Whiskers are drawn at the 75th percentile + 1.5 × IQR and at the 25th percentile − 1.5 × IQR. All values outside this range are considered outliers (indicated by dots). Four additional boxplots are provided for the joint MAE samples of the more complex model versions (reaching a score ≥ 14) and the less complex versions used in CMIP5 and 6. In these four cases, outliers are not plotted for the sake of simplicity. The abbreviations of the coupled model configurations, as well as their participation in either CMIP5 or 6 (indicated by the final integer), are shown below the x axis. Along the x axis, the names of the coupled models' atmospheric components are also shown since some of them are shared by various research institutions (see also Table 1).
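The boxplot elements described above can be sketched in a few lines of Python. This is a minimal illustration, not the code used in the study: the function name `boxplot_stats`, the use of NumPy's default linear percentile interpolation and the sample values are all assumptions made for the example.

```python
import numpy as np

def boxplot_stats(mae):
    """Median, IQR, whisker limits and outliers of a 1-D sample of MAE values."""
    mae = np.asarray(mae, dtype=float)
    mae = mae[~np.isnan(mae)]                 # ignore missing grid boxes
    q25, median, q75 = np.percentile(mae, [25, 50, 75])
    iqr = q75 - q25                           # height of the box
    lower = q25 - 1.5 * iqr                   # lower whisker limit
    upper = q75 + 1.5 * iqr                   # upper whisker limit
    outliers = mae[(mae < lower) | (mae > upper)]
    return {"median": median, "iqr": iqr, "lower": lower,
            "upper": upper, "outliers": outliers}

# Hypothetical pointwise MAE values for one model (not taken from the study).
sample = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 30.0]
stats = boxplot_stats(sample)
print(stats)
```

Note that some plotting libraries draw the whiskers at the most extreme data value lying within these limits rather than at the limits themselves, so the drawn whisker length can differ slightly from `lower` and `upper`.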

Figure 11. Summary model performance plot; for each model version listed in Table 1, the pointwise MAE values are drawn with a boxplot instead of using a map. Four additional boxplots are provided for the less and the more complex model versions used in CMIP5 and 6, respectively (see text for details). Colours are assigned to the distinct coordinating research institutes, as indicated in the legend. The abbreviations of the coupled models, as well as their participation in either CMIP5 or 6 (indicated by the final integer), are shown below the x axis. Above this axis, the atmospheric component of each coupled model is shown in addition. Results are for the 1979–2005 period and with respect to ERA-Interim. AGCM abbreviations along the x axis are defined as follows: (1) MK3 AGCM, (2) GAMIL, (3) BCC-AGCM, (4) CanAM4, (5) unnamed and (6) IITM-GFSv1; the names of the remaining AGCMs are indicated in the figure.

Results indicate a performance gain for most model families when switching from CMIP5 to 6 (available model pairs are located next to each other in Fig. 11). The largest improvements are obtained for those models performing relatively poorly in CMIP5. Namely, FGOALS-g3 improves upon FGOALS-g2 (dark brown), NorESM2-LM and NorESM2-MM upon NorESM1-M (rose), BCC-CSM2-MR upon BCC-CSM1.1 (orange), MIROC6 upon MIROC5 (blue-green) and IPSL-CM6A-LR upon IPSL-CM5A-LR and IPSL-CM5A-MR (grey). GISS-E2-R-5 improves upon GISS-E2-H and GISS-E2-R (green) in terms of median performance but suffers slightly larger spatial performance differences as indicated by the IQR. The MPI (neon green), CMCC (cyan), GFDL (magenta) and MRI (brown) models already performed well in CMIP5 and further improve in CMIP6. Among the MPI models, however, an advantage over the two CMIP5 versions is only obtained when considering the high-resolution CMIP6 version (compare MPI-ESM1.2-HR with MPI-ESM-LR and MPI-ESM-MR). Contrary to the remaining models, the performance of the CNRM (red) models does not improve from CMIP5 to 6, which may be due to the fact that the CMIP5 version (CNRM-CM5) already performed very well. Remarkably, CNRM's high-resolution CMIP6 version (CNRM-CM6-1-HR) performs worst within this model family. Likewise, the ACCESS models (blue) do not improve if ACCESS1.0 instead of ACCESS1.3 is taken as the reference CMIP5 model.

The CMCC, HadGEM, and particularly the EC-Earth model families perform best overall, and all three exhibit a performance gain from CMIP5 to 6. NorESM2-MM also belongs to the best-performing models and largely improves upon NorESM2-LM and NorESM1. Remarkably, for four out of five possible comparisons, the more complex model version performs similarly to the less complex one (compare ACCESS-ESM1.5 with ACCESS-CM2, CMCC-ESM2 with CMCC-CM2-SR5, CNRM-ESM2-1 with CNRM-CM6-1-HR and EC-Earth3-CC with EC-Earth3). Only the MIROC family suffers a considerable performance loss when switching from less to more complexity, and only in this family is the AGCM's resolution considerably lower in the more complex configurations (compare MIROC-ESM with MIROC5 and MIROC-ES2L with MIROC6 in Fig. 11 and Table 1).

A virtual lack of outliers is another remarkable advantage of NorESM2-MM. MRI-ESM2 and GFDL-CM4 are also relatively robust to outliers but less so than NorESM2-MM. The smallest number of outliers among all models is obtained for EC-Earth, irrespective of the model version.

The model evaluation against JRA-55 reveals similar results (see “figs-refjra55/as-figure-10-but-wrt-jra55.pdf” in the Supplement), indicating that uncertain reanalysis data in the three relevant regions detected above do not substantially affect the hemisphere-wide statistics. What is noteworthy, however, is the slight but nevertheless visible performance loss for the EC-Earth model family, bringing EC-Earth3 approximately to the performance level of HadGEM3-GC31-MM. If evaluated against JRA-55, all EC-Earth model versions also exhibit more outliers. EC-Earth's affinity to ERA-Interim might be explained by the fact that this reanalysis was also built with ECMWF IFS.

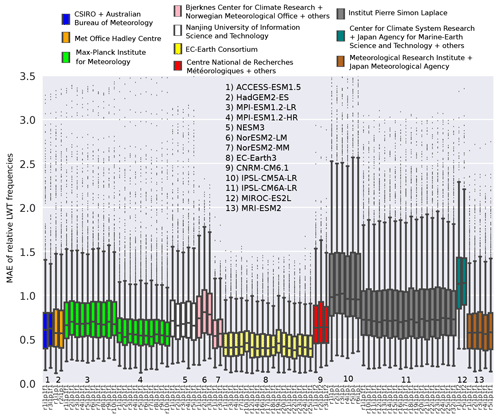

Table 2 provides the rank correlation coefficients (rs) between the median MAE with respect to ERA-Interim for each model, corresponding to the horizontal bars within the boxes in Fig. 11, and various resolution parameters of the atmosphere and ocean component models. Correlations are calculated separately for the zonal, meridional and vertical resolution represented by the number of grid boxes in the corresponding direction. Due to the presence of reduced Gaussian grids, longitudinal grid boxes at the Equator are considered. In addition, the 2-D mesh defined as the number of longitudinal grid boxes multiplied by the number of latitudinal grid boxes, as well as the 3-D mesh defined as the number of longitudinal grid boxes multiplied by the number of latitudinal grid boxes multiplied by the number of vertical layers, is taken into account in the analysis. Correlations are first calculated separately for the atmosphere and ocean, and, in the last step, the sizes of the atmosphere and ocean 3-D meshes are added to obtain the size of the combined atmosphere–ocean mesh. All dimensions are obtained from the source attribute inside the NetCDF files from ESGF or directly from the data array stored therein. Note that due to an unstructured grid in one ocean model, the breakdown in zonal and meridional resolution cannot be made in this realm.
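The mesh definitions and the rank correlation described above can be illustrated with a short Python sketch. It is not the code used in the study: the helper names `average_ranks` and `spearman_rs` are hypothetical, and the grid dimensions and median MAE values of the five models are invented for the example.

```python
import numpy as np

def average_ranks(values):
    """Rank a 1-D sample from 1 to n, assigning tied values their mean rank."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    ranks = np.empty(len(values), dtype=float)
    ranks[order] = np.arange(1, len(values) + 1)
    for v in np.unique(values):               # average the ranks of ties
        tie = values == v
        ranks[tie] = ranks[tie].mean()
    return ranks

def spearman_rs(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])

# Hypothetical atmosphere grids: (n_lon at the Equator, n_lat, n_lev, median MAE).
models = {
    "model_a": (96, 48, 26, 3.1),
    "model_b": (144, 90, 30, 2.6),
    "model_c": (192, 96, 47, 2.8),
    "model_d": (320, 160, 91, 1.9),
    "model_e": (512, 256, 91, 1.7),
}
n_lon, n_lat, n_lev, median_mae = (np.array(col) for col in zip(*models.values()))

mesh_2d = n_lon * n_lat        # 2-D mesh: longitudinal x latitudinal grid boxes
mesh_3d = mesh_2d * n_lev      # 3-D mesh: 2-D mesh x number of vertical layers

rs_2d = spearman_rs(mesh_2d, median_mae)
rs_3d = spearman_rs(mesh_3d, median_mae)
print(rs_2d, rs_3d)
```

With the median MAE as the performance measure, a finer mesh that goes along with lower errors yields a negative coefficient in this sketch. The combined atmosphere–ocean mesh would be obtained in the same way by adding the two 3-D mesh sizes before ranking.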

Table 2. Rank correlation coefficients between the median MAE values of the 56 models and various resolution parameters of the atmosphere and/or ocean component models. A significant relationship is indicated by an asterisk (α=0.01, two-tailed t test, H0= zero correlation). See text for more details.
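The caption names a two-tailed t test against zero correlation but does not spell out the test statistic. A common choice, assumed here, is t = r·sqrt((n − 2)/(1 − r²)) with n − 2 degrees of freedom. The sketch below evaluates this statistic for hypothetical input values; the function name `t_statistic` is likewise an assumption.

```python
import math

def t_statistic(r, n):
    """t statistic for H0 = zero correlation, given coefficient r and sample size n."""
    if n < 3:
        raise ValueError("at least three samples are needed")
    if abs(r) >= 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    return r * math.sqrt((n - 2) / (1.0 - r * r))

# Hypothetical example values (not taken from Table 2).
t_val = t_statistic(-0.5, 30)
print(t_val)
```

The null hypothesis is rejected if the absolute value of the statistic exceeds the two-tailed critical value of the t distribution with n − 2 degrees of freedom at the chosen α.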

As can be seen from Table 2, average model performance is more closely related to the horizontal than to the vertical resolution in the atmosphere. Associations with the ocean resolution are weaker, as expected, but nevertheless significant. Since the resolution increase for most models has gone hand in hand with improvements in the internal parameters (parametrization, model physics, bugs), it is difficult to say which of these two effects is more influential for model performance. However, most of the models undergoing a version change without resolution increase do not experience a clear performance gain either. This is observed for the three ACCESS versions using the same AGCM (i.e. GA in 1.3, CM2 and ESM1-5) and also for the three model versions from GISS, all comprising the same horizontal resolution in the atmosphere within their respective model family. Likewise, CNRM-CM6-1 and MPI-ESM1-2-LR even perform slightly worse than their predecessors (CNRM-CM5 and MPI-ESM-LR), meaning that the update is counterproductive for their performance (see Fig. 11). This suggests that resolution is likely more influential for performance than model updates as long as the latter are not too substantial. Interestingly, the relationship between the models' median performance and the horizontal mesh size of their atmospheric component is nonlinear (rs 0.72), with an abrupt shift towards better results at approximately 25 000 grid points (see Fig. 13a).

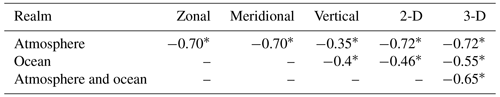

Figure 12. As Fig. 11 but considering 72 additional runs for a subset of 13 distinct coupled models. All available runs per model are taken into account, except for IPSL-CM6A-LR for which the analyses were stopped after considering 17 additional ensemble members. The colours referring to the coordinating research institute are identical to those in Fig. 11, except for the Nanjing University of Information Science and Technology, which is shown in white. Up to two ensembles per institute are shown, and the abbreviations of the individual coupled models are indicated by numbers. The exact run specifications are provided along the x axis.

Figure 13. Relationship between the median performance of the coupled model configuration and (a) the horizontal mesh size of the atmospheric component or (b) the coupled model complexity score described in Sect. 3.3. Model performance is with respect to ERA-Interim. CNRM-CM6-1-HR and CNRM-ESM2-1 are out of scale in panel (a) due to their very fine atmospheric resolution.