the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Automated geological map deconstruction for 3D model construction using map2loop 1.0 and map2model 1.0

Vitaliy Ogarko

Yohan de Rose

Mark Lindsay

Ranee Joshi

Agnieszka Piechocka

Lachlan Grose

Miguel de la Varga

Laurent Ailleres

Guillaume Pirot

At a regional scale, the best predictor for the 3D geology of the near-subsurface is often the information contained in a geological map. One challenge we face is the difficulty in reproducibly preparing input data for 3D geological models. We present two libraries (map2loop and map2model) that automatically combine the information available in digital geological maps with conceptual information, including assumptions regarding the subsurface extent of faults and plutons to provide sufficient constraints to build a prototype 3D geological model. The information stored in a map falls into three categories of geometric data: positional data, such as the position of faults, intrusive, and stratigraphic contacts; gradient data, such as the dips of contacts or faults; and topological data, such as the age relationships of faults and stratigraphic units or their spatial adjacency relationships. This automation provides significant advantages: it reduces the time to first prototype models; it clearly separates the data, concepts, and interpretations; and provides a homogenous pathway to sensitivity analysis, uncertainty quantification, and value of information studies that require stochastic simulations, and thus the automation of the 3D modelling workflow from data extraction through to model construction. We use the example of the folded and faulted Hamersley Basin in Western Australia to demonstrate a complete workflow from data extraction to 3D modelling using two different open-source 3D modelling engines: GemPy and LoopStructural.

- Article

(12996 KB) - Full-text XML

- BibTeX

- EndNote

The 3D description and quantification of geometries displayed by deformed rocks has a long history (Sopwith, 1834; Argand, 1911; Ramsay, 1967; Ragan, 2009); however, given the technologies available at the time, these were typically manual calculations extracted from photos or sketches. It has also long been recognized that a geological map and its legend provide more than just the distribution of lithological units but is also a compendium of many different types of information (Varnes, 1974; Bonham-Carter and Broome, 1998). Burns (1988) pioneered the analysis of maps in terms of the spatial and temporal relationships stored within, and Harrap (2001) defined a legend language with the aim of consistency checking both during and after map creation, especially when large, complex compilation maps were being created, and to focus on areas where a legend contradicts map relationships. Extracting information from digital geographic information system (GIS) maps was pioneered in the context of mineral prospectivity (Bonham-Carter, 1994) and more recently to validate the maps and analyse specific structures such as stratigraphic contacts and faults and even stratigraphic thicknesses (Fernández, 2005; Rauch et al., 2019; Kelka et al., 2020; Allmendinger, 2020). These 3D modelling packages often have basic data ingestion schemes that can import GIS data, for example the open-source package gemsis (https://github.com/cgre-aachen/gemgis, last access: 9 August 2021) is an example of a system to speed up ingestion of data into the GemPy 3D modelling platform, which assumes that the data are already in the fundamentally correct format (e.g. contact data have already been parsed to determine the base of the unit). Since their inception, 3D geological modelling platforms have varied in their use of primary observations and geologic knowledge to constrain the 3D model geometry (Wellmann and Caumon, 2018). At one extreme, the kinematic code Noddy (Jessell, 1981; Jessell and Valenta, 1986) almost exclusively uses a high-level synthesis of the understanding of structural evolution provided by the model builder to build the 3D model. Hybrid approaches that include kinematic descriptions with specific located observations are also possible (Moretti, 2008; Bigi et al., 2012; Laurent et al., 2013). In contrast, most current systems draw upon the interpolation of geological orientation and contact information to represent surfaces between observations in 3D using direct triangulation or interpolation of the data that can be directly observed or interpreted from geophysical data (Mallet, 1992; Houlding, 1994; Wu et al., 2005; Caumon et al., 2009). Approaches of this type are implemented in a range of commercial software packages (Calcagno et al., 2008; Cowan et al., 2003) and more recently open-source systems (de la Varga et al., 2019; Grose et al., 2020). In the earliest systems, the topological relationships between subsequent series and the relative age of faults in a fault network were enforced through the construction of surfaces representing presumed structural relationships (Mallet, 2002; Caumon et al., 2004, 2009). More recently, developments have been made in methods that combine observed data and topological and geological knowledge in an “implicit” approach (Lajaunie et al., 1997; Aug et al., 2005; Frank et al., 2007; Caumon et al., 2013; Calcagno et al., 2008; Hillier et al., 2014; de la Varga et al., 2019; Grose et al., 2021).

The first steps in these 3D modelling workflows are time-consuming, revolving around the extraction and decimation of the source data. These steps are for the most part irreproducible: two different geologists will produce different 3D models from the same source data, and even the same geologist building the model twice would be unable to exactly reproduce the same model. In addition, the tracking of the provenance of information and decisions leading to modelling choices is effectively impossible. In this study we present the first attempts at improving that part of the 3D modelling workflow related to the transformation from map data to first model, which is one of the most time-consuming parts (hours to days) of the pre-model-building process. As discussed in this paper, this transformation is not unique but depends on the parameters used to select which features to model and the methods of combining the source datasets. This may even involve combining maps with different legends (Colman-Sadd et al., 1997); however, to date we have not addressed this issue. This study is aimed at hard-rock regional modelling scenarios, which are generally data-poor compared to mines and sedimentary basins, and is part of the Loop project, a OneGeology consortium to build a new open-source framework for 3D geological modelling (Ailleres et al., 2018; http://loop3D.org, last access: 9 August 2021). The aim of the libraries described here is to provide 3D modelling systems with a unified method for accessing legacy digital geological data, either from local files or online data servers, and to extract the maximum geological information available for use as constraints on the 3D modelling process, as well as other studies. Indeed, much of the information extracted from the map (local stratigraphic information, the topology of fault networks, local stratigraphic offsets across faults, local formation thickness) helps in understanding the geology of the area even without building a 3D model. One might want to automate these currently manual data manipulations for many reasons, in particular for considerations of speed; reproducibility; and separation of data, concepts, and interpretations. Although the primary aim of this study was to provide information for 3D modelling workflows, some of the outputs may be useful for 2D analyses.

Jessell et al. (2014) consider four 3D geological modelling scenarios: local-scale (mine) models, regional-scale sedimentary basins, regional-scale hard-rock terranes, and large-scale (crustal or lithospheric) models. The present work is focused on the regional hard-rock terranes scenario, where the best predictor for the 3D geology of the subsurface is the information contained in a geological map and, if available, logged well data. Unfortunately, with the exception of basin settings, drill holes are often too shallow to provide constraints at the regional scale and also often lack stratigraphic information (see for example the GSWA Drillhole database, http://www.dmp.wa.gov.au/geoview, last access: 9 August 2021).

Starting from standard Geological Survey of Western Australia (GSWA) map products and by extracting primary (e.g. stratigraphic contact location) and secondary (e.g. local formation thickness) geometric information, as well as fault and stratigraphic topological relationships, we are able to export a complete input file for two open-source geomodelling packages (GemPy, de la Varga et al., 2016; LoopStructural, Grose et al., 2021). In principle, this workflow could be extended to work with other implicit modelling platforms, such as EarthVision (Mayoraz et al., 1992), Geomodeller (Calcagno et al., 2008), Gocad-SKUA (Mallet, 2004) and Leapfrog (Cowan et al., 2003), although the generated input dataset may contain data that are not considered in the modelling workflow proposed by some of these packages. The idea of extracting information to feed 3D modelling algorithms directly from other data sources such as satellite data has been previously demonstrated by Caumon et al. (2013) and Wellmann et al. (2019). A parallel study building libraries for automating information extraction from drill hole data is presented by Joshi et al. (2021), and thus this toolset will not be discussed further here. Similarly, although geological cross sections can be handled by similar methods to those that are described here, for simplicity's sake we will not discuss them here.

In addition to the map2model library, map2loop depends on, but is being developed independently of, a number of external open-source libraries and in particular draws heavily on Geopandas (to manage vector geospatial data; https://geopandas.org/, last access: 9 August 2021), Rasterio (to manage raster geospatial data; https://github.com/mapbox/rasterio, last access: 9 August 2021), Networkx (to manage network graphs; https://github.com/networkx/networkx, last access: 9 August 2021) and Shapely (to manage 2D computational geometry; https://github.com/Toblerity/Shapely, last access: 9 August 2021).

For clarity, we refer to “inputs” as the inputs to map2loop and map2model libraries and “augmented data” as the products of map2loop. The augmented data in turn form the inputs to the target 3D geological modelling engines. All temporary inputs and outputs from the related map2model library are wrapped within the map2loop library.

The information contained in a geological map falls into three categories of geometric data: positional data, such as the position of faults and intrusive and stratigraphic contacts; gradient data, such as the dips of contacts or faults; and finally spatial and temporal topological data, such as the age relationships between faults and stratigraphic units. As modellers we combine all of these direct observations with conceptual information: knowledge from nearby areas; our understanding of the tectonic history of the region, including assumptions regarding the subsurface geometry of faults and plutons; and generic geological knowledge (such as our understanding of pluton emplacement mechanisms) to provide sufficient constraints to build a 3D geological model. Often these concepts are communicated via geological cross sections supplied with the map; however, these are typically based on limited or no additional data as they combine the conceptual ideas mentioned above with local positional and gradient information derived from the map, although they can now routinely be validated using regional geophysical datasets such as gravity and magnetics (Spampinato et al., 2015; Martin et al., 2013). Even when we have seismic reflection data in basins, the role of conceptual biases cannot be ignored (Bond et al., 2007; Bond, 2015) In addition, the map will usually supply a stratigraphic column that provides a more direct but simplified representation of stratigraphic relationships.

In this study we draw inspiration from existing manual workflows and structural decision-making processes by developing a suite of algorithms that allow us to automatically deconstruct a geological map to recover the necessary positional, topological and gradient data as inputs to different 3D geological modelling codes. Some of the code simply reproduces the 3D modelling packages' abilities to import different datasets; however, much of it is dedicated to extracting information that is contained within the map but rarely extracted from it in a systematic fashion, as it can be rather tedious to do so, although systems such as GeolMapDataExtractor (GMDE) certainly help (Allmendinger, 2020).

The libraries described here retrieve information from GIS layers or online servers, clean and decimate the data if needed, and then go through a series of data analysis steps to extract information from GIS layers stored locally or on online servers. This information includes the local stratigraphy, the geometries of the basal contacts of units and faults, estimates of local offsets along faults, and estimates of local formation thickness. Once these and other information have been extracted, they are output as standard formats (Graph Meta Language (GML), csv, geotif and ESRI shapefile formats) so that the target 3D modelling systems can use them as they are.

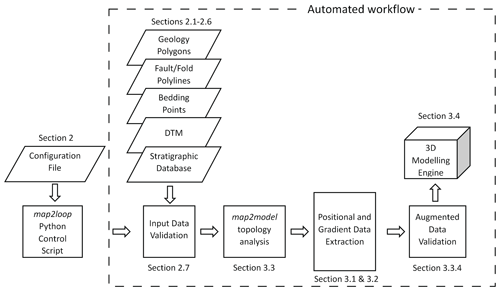

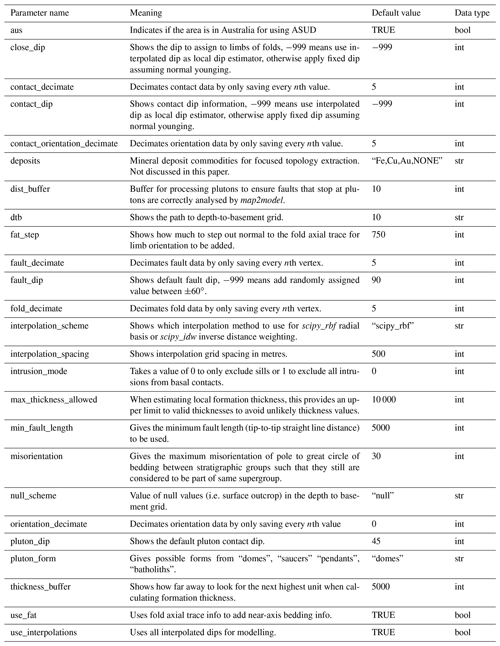

Once the input parameters are defined, it is important to emphasize that the entire workflow is automated so that all decisions about choices of parameters are made up front (see Table 1 for a list of these parameters) and the consequences of these decisions can be directly analysed in terms of the augmented outputs of the map2loop code or via the 3D models that can themselves are automatically built from these augmented outputs. Although it is a simplification, the overall workflow is shown in Fig. 2. Once the configuration file has been generated and the workflow control parameters have been defined in the map2loop control script, all further actions are fully automated, from accessing the input data, up to and including the construction of the 3D model using LoopStructural or GemPy.

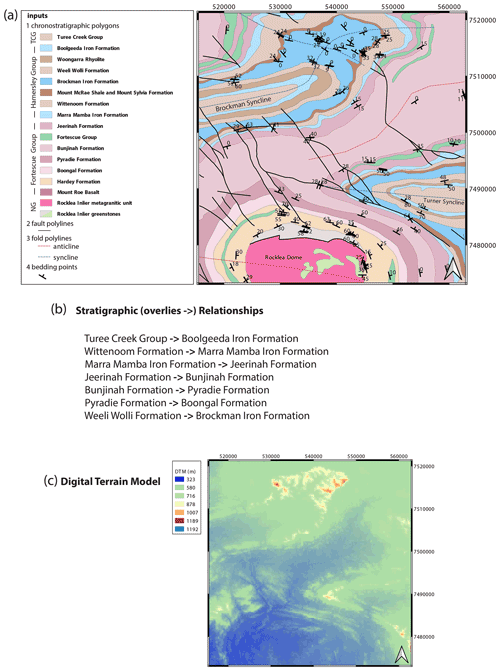

Figure 1The six types of inputs to map2loop. (a) The 1:500 000 interpreted bedrock geology of the Rocklea Dome region of Western Australia showing the different datasets used to create the 3D model. TCG stands for Turee Creek Group. NG indicates that there is no group defined by map, and thus each unit is its own group. The coordinates at the edge of the maps are provided as EPSG:28350 (GDA 94/MGA Zone 50). (b) The first seven entries of the binary stratigraphic relationships derived from the Australian Stratigraphic Units Database that relate to the test area (ASUD, Geoscience Australia and Australian Stratigraphy Commission, 2017, Australian Stratigraphic Units Database). (c) The SRTM digital terrain model is sourced directly from Geoscience Australia at http://services.ga.gov.au/gis/services/DEM_SRTM_1Second_over_Bathymetry_Topography/MapServer/WCSServer (last access: 9 August 2021).

Figure 2Automated workflow. Once the configuration file has been created and the workflow parameters (Table 1) have been defined in the map2loop control script, all steps within the dashed rectangle are fully automated, with no manual intervention. These automated steps are described in the associated sections, from accessing the data, through to and including the construction of the 3D geological model with LoopStructural or GemPy. Note that this is a schematic workflow, as individual steps need to be performed out of sequence for computational efficiency. A more detailed workflow is shown in Fig. 5.

In the example we present here, we use the 2016 1:500 000 Interpreted Bedrock Geology map of Western Australia and the WAROX outcrop database (Geological Survey of Western Australia, 2016) as the sources of the data needed to build a first-pass model of the region around the Rocklea Dome in the Hamersley Region of Western Australia (Fig. 1). The area consists of upright refolded folds of Archean and Proterozoic stratigraphy overlying an Archean basement cut by over 50 NW–SE trending faults that form a part of the Nanjilgardy Fault system (Thorne and Trendall, 2001).

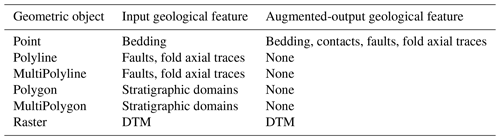

The map2loop library uses the Geopandas library to load data from several persistent formats (ESRI shapefiles, MapInfo tab files, JavaScript Object Notation (JSON) format files) and/or from a Web Feature Service (WFS). Geospatial data can be in any standard coordinate reference system (assuming a European Petroleum Survey Group (EPSG) code is supplied, http://epsg.io, last access: 9 August 2021). These libraries are used to load and transform the input geological geometries and attributes (Table 2).

Table 1Parameters that may be modified from their defaults prior to the automated workflow starting.

Table 2Geometric features imported and saved by map2loop and map2model. The geometric objects refer to specific Geopandas data objects.

In the following subsections, which are descriptions of the six sources of input data used by map2loop and map2model (Fig. 1), the terms are deliberately generic, as these two libraries uses a configuration file that allows the user to define which fields in the GIS layers or WFS servers contain which information. A Jupyter notebook (http://jupyter.org, last access: 9 August 2021) helps the user to create this HJSON format configuration file from the input layers (Utility 1 – Config file generator.ipynb). The minimum input data required to run map2loop are described in Appendix A.

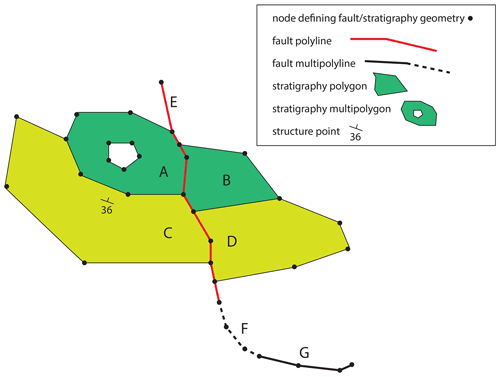

Figure 3Geometric elements used in geological maps. B, C and D are stratigraphic Polygons, defined by a sequence of the x,y locations of nodes. A is a MultiPolygon as it contains a hole, although MultiPolygons can also describe two unconnected Polygons. E is a fault Polyline. F and G are fault MultiPolylines that describe segments of the same fault (as does fault E in this case). The structure observation (bedding measurement) is of Point type. All geometric elements may possess multiple attributes and are converted to 3D equivalents by adding the information from the raster DTM.

2.1 Chronostratigraphic Polygon and MultiPolygon layer

This vector layer describes the geology polygons that have attributes defining their chronostratigraphic map. Although 3D geological models can be built from purely lithostratigraphic maps, the implicit modelling schemes targeted by map2loop assume some knowledge of the stratigraphy. The chronostratigraphic Polygon layer may also contain information on the surficial geology, but for more regional analysis this is either ignored by the map2loop library, or a map that provides interpreted bedrock geology can be used. A prototype system that accounts for thicker cover sequences is available, but not discussed further here. The layer may contain a mixture of single Polygons, MultiPolygons (sets of Polygons with the same non-spatial attributes), and or Polygons with holes (also stored as MultiPolygons, Fig. 3). We capitalize these terms as they refer to specific Geopandas data objects, rather than generic geometric descriptions. Each Polygon needs to contain the following elements:

-

a list of the ordered closed-loop x,y locations of the defining vertices;

-

a stratigraphic code or name at a lower hierarchical level (such as formation, member), which we will refer to as “units” (since the choice of stratigraphic resolution is up to the user and on a map Polygons will often have different levels of stratigraphic coding);

-

one or more higher-level stratigraphic definitions (such as group, supergroup, supersuite, province), which we will refer to as “groups”;

-

one or more lithological descriptions that help to determine if the unit is volcanic, a sill or other types of intrusions or other types of sedimentary rocks;

-

optionally (but importantly), the maximum and minimum estimated ages of the fine-scale stratigraphic unit.

In the case study presented here we use the 2016 1:500 000 Interpreted Bedrock Geology stratigraphic Polygons of Western Australia (Geological Survey of Western Australia, 2016). This map contains maximum and minimum estimates ages for each formation; however, they may share the same ranges within a group due to a lack of absolute geochronological constraints.

2.2 Fault Polyline and MultiPolyline layer

This vector layer describes the location, orientation and displacement information on mapped faults or narrow shear-zones at the surface. The layer may consist of a mixture of MultiPolylines (groups of Polylines with the same non-spatial attributes). MultiPolylines are subsequently disaggregated into distinct Polylines by the map2loop library to allow fault length and orientation analysis to be correctly performed. Faults shorter than a user-specified length can be filtered out to reduce model complexity.

Each Polyline needs to contain the following elements:

-

a list of the ordered open-loop x,y locations of the defining vertices,

-

a unique identifier so that the fault can be labelled in some way,

-

the dip and dip direction (or strike) of the fault can (optionally) be stored at its midpoint.

In the case study presented here, we use the 2016 1:500 000 Interpreted Bedrock Linear Features layer of Western Australia (Geological Survey of Western Australia, 2016) filtered by map2loop to extract the faults.

2.3 Fold axial trace Polyline layer

This vector layer describes the location and polarity (anticline vs. syncline) information on mapped fold axial traces, defined by the intersection of the fold axial surface and the surface of the Earth. The layer may consist of a mixture of Polylines and MultiPolylines (groups of Polylines with the same non-spatial attributes).

Each Polyline needs to contain the following elements:

-

a list of the ordered open-loop x,y locations of the defining vertices,

-

a unique identifier so that the fold axial trace can be labelled in some way,

-

the polarity of the fold axial trace (syncline, synform, anticline or antiform).

In the case study presented here, we use the 2016 1:500 000 Interpreted Bedrock Interpreted Bedrock Linear Features layer of Western Australia (Geological Survey of Western Australia, 2016) filtered by map2loop to extract the fold axial traces.

2.4 Bedding orientation point layer

This vector layer describes the local orientation of bedding and is often missing from map packages but can be found in the separate databases or original field notebooks. It could also be estimated by photointerpretation and/or three-point analysis.

The layer may consist of Points.

Each Point needs to contain the following elements:

-

a single x,y location of the defining Point;

-

dip information;

-

dip direction or strike information, which we will refer to as “azimuth” to avoid confusion;

-

the polarity of the bedding (upright or overturned).

In the case study presented here, we use the 2016 WAROX outcrop database (Geological Survey of Western Australia, 2016).

2.5 Reference stratigraphy

Some countries have developed national-level stratigraphic databases (such as the Australian Stratigraphic Units Database, ASUD, Geoscience Australia and Australian Stratigraphic Commission, 2017; https://asud.ga.gov.au/, last access: 9 August 2021) that allow access to detailed stratigraphic information at the formation level and above. The maximum–minimum ages for individual Polygons mentioned in Sect. 2.1 would typically be derived from such a database. This national-level stratigraphic information is typically non-spatial; however, assuming that the mapped chronostratigraphic Polygons share the same coding as the national database, we can use this to augment the stratigraphic relationships (such as “A overlies B”) once the topological analysis has been carried out by map2model, which in turn helps to define the local stratigraphy in the map area. The map2loop library currently uses a condensed extract from the ASUD database that defines neighbouring stratigraphic relationships as pairs (A overlies B) to refine the local stratigraphy (Fig. 1b).

2.6 Digital terrain model

This grid layer, usually derived from the SRTM (Shuttle Radar Topography Mission; Farr et al., 2007) or GDEM (Aster Global Digital Elevation Map; NASA/JPL, 2009) datasets (or a fusion of both), provides a uniform coverage of surface topography measurements over most of the continents. The map2loop library uses the Geoscience Australia server for 90 m coverage in Australia (Geoscience Australia, 2016) and the 1 km global coverage offered by the Pacific Islands Ocean Observing System (https://pae-paha.pacioos.hawaii.edu/thredds/dem.html?dataset=srtm30plus_v11_land, last access: 9 August 2021) server for coverage outside Australia, although there are a number of such servers now available, and the data are directly downloaded for the region of interest during the processing workflow. Local on-disk rasters of digital terrain models (DTMs) in geotif format may also be used.

In the case study presented here (Fig. 1c), we use the 90 m version served by Geoscience Australia (Geoscience Australia, 2016).

2.7 Validation of input data

Once the sources of data are defined, an automated initial verification of the data is performed to assure that the different information needed to perform the calculations is present. First it clips the data to the region of interest and then these new layers are checked to ensure that there is sufficient bedding data, as the algorithms we use require at least three orientations to interpolate a complete bedding orientation field. Then it checks to see if the geology Polygon file has any data in it. Empty layers can arise because of data path or projection errors, so there is no point continuing the calculations if there are no data and the program thus stops with an error statement. We also verify that each layer has all the fields described in the configuration file, and if required fields are again missing, the program stops. Warnings will be issued if empty values are found for required fields or optional fields are missing, in which case default values will be provided, but this will not stop program execution. Some data validations take place subsequently during calculations themselves, as they depend on an analysis of the values of features or secondary calculations as described below.

The map2loop and map2model libraries combine the inputs described in Sect. 2 in different combinations to produce a series of augmented outputs as csv, geotif and gml format files that can be used directly by the target 3D geological modelling systems or as sources of analysis for 2D studies. map2model performs a spatial and temporal topological analysis of the geological map, and map2loop further refines this analysis by including information from non-map sources, such as stratigraphic databases, acts as a wrapper for map2model and performs all other calculations.

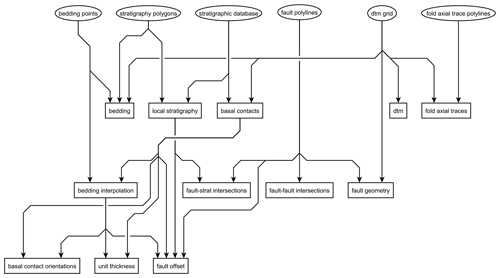

Figure 4Data flow from inputs (ellipses) provided by GIS map layers, web servers and stratigraphic databases. Augmented data (rectangles) are calculated by combining the inputs directly or incrementally during the map2loop workflow. The map2model code handles the topological analysis: fault–fault intersections, fault–stratigraphy intersections and local stratigraphic analysis; all other calculations are managed by map2loop.

This section outlines the high-level logic of how the different inputs are combined to produce information needed by the target 3DGM systems. As with the inputs to map2loop, the outputs are grouped by type: positional, gradient and topological outputs. The specific positional, gradient and topological outputs are in most cases calculated by combinations of the positional, gradient and topological inputs, and thus the ordering below does not reflect the order in which these augmented data are produced by the map2loop library in general, and reference is made to data calculated in later sections. Ordering the sections by order of calculation results would be useful to get an understanding of the specific data flow (Fig. 4), but also produces a rather confusing back and fourth in text form as some data are incrementally modified as the workflow progresses. Example pseudo-code for key calculations is included in Appendix B.

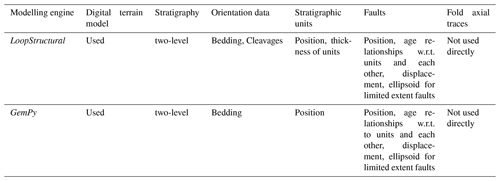

In the following sub-sections, we provide an overview of the different steps that the code automatically undertakes to extract augmented data from the input files. A summary of the specific outputs used by the 3D modelling engines used in this study is provided in Table 3.

3.1 Positional outputs

The first class of modelling constraints derived by the map2loop algorithms provide positional data. Positional outputs refer to information that defines the location of a feature, including the position of faults and intrusive and stratigraphic contacts. In this section we describe the combinations of data used to create these augmented data.

3.1.1 DTM

The online digital terrain model (DTM) servers described in Sect. 2.6 either provide the information at a fixed x,y spatial resolution or allow the client to subsample the data. For regional geological models a high-resolution topography model is usually not needed as the spatial resolution of 3D models is generally larger than the 30 m available from SRTM data, and thus a 90 m or even 1 km DTM is often sufficient for our needs. The map2loop library imports a subset of the global or national DTM, which are usually provided using a WGS84 projection. This is then reprojected using the Rasterio library to a metre or other non-degree based projection system. This distance-preserving coordinate system is appropriate for use by modelling packages that produce Cartesian models where the x, y and z coordinates use the same length units. The reprojected transformed DTM is stored as a geotif format file. Code is in development that will allow local geotif format DTM sources to be accessed.

3.1.2 Basal contacts

The map2loop library currently uses the convention that stratigraphic contacts are labelled by the overlying unit in the stratigraphy so that the contacts represent the bases of units, which we will refer to as basal contacts. Basal and intrusive contacts are calculated using the intersection of neighbouring Chronostratigraphic Polygons (Sect. 2.1). At the moment sill-like intrusive contacts are ignored, as they do not follow either massive pluton-like geometries or strict stratigraphic relationships but are instead the current subject of further study. Although stratigraphic lenses will be processed by map2loop, the 3D modelling packages we currently link to are unable to deal with these features except by inserting unconformities at the top of each lens, and this remains an open area for future studies. In order to determine the label of the resulting Polyline, we analyse the stratigraphic relationship between two Polygons using the information from the local stratigraphy calculated by the map2model library (Sect. 3.3.1):

-

if the two units are both volcano-sedimentary units, we label the basal contact with the unit name of the younger unit;

-

if one of the units is intrusive (not a sill) and the other has a volcano-sedimentary origin, we assign the intrusive unit name if the intrusion is younger than the volcano-sedimentary unit or the volcano-sedimentary unit if the intrusion is older;

-

if both units are intrusive (not sills), we assign the contact name to the younger unit.

If one or both of the units is a sill, we ignore the contact completely.

The x,y coordinates come from the intersection Polylines and can be decimated by taking every nth node; the z value comes from the DTM. Outputs from map2loop consist of the following elements:

-

a series of points,

-

a unique stratigraphic name for each Polyline,

-

the polarity of the contact for each point (relative direction of younging and dip direction; a value of 1 means they are in the same direction, and hence the bedding is the right way up; for overturned beds the value is 0).

3.1.3 Fault position and dimensions

Processing of fault geometries consists of extracting the x,y location of nodes from the fault Polylines (Sect. 2.2), combining them with the DTM to get z and calculating the distance between fault tips to define overall fault dimensions. A minimum fault length threshold can be applied so that very short fault segments, which will have little impact on the model, can be ignored. A decimation factor that only stores every nth node value can also be applied. If needed, prior to map2loop processing, we use FracG (Kelka et al., 2020) to recombine fault segments based on the coincidence of fault tip locations and similar fault trace orientations.

Outputs from map2loop consist of the following elements:

-

a series of points;

-

a unique code that can be used to create a name for each Polyline;

-

the dip, azimuth, and length of the fault for each fault Polyline.

3.1.4 Fold axial trace position and dimensions

Processing of fold axial trace geometries essentially consists of extracting the x,y location of nodes from fold Polylines (Sect. 2.3) and combining them with the DTM to get z. Fold polarity (anticline/syncline) is recovered and stored. A decimation factor that only stores every nth node can be applied. Outputs from map2loop consist of the following elements:

-

a series of points,

-

unique fold axial trace name for each Polyline,

-

the polarity of the fold for each fold axial trace Polyline.

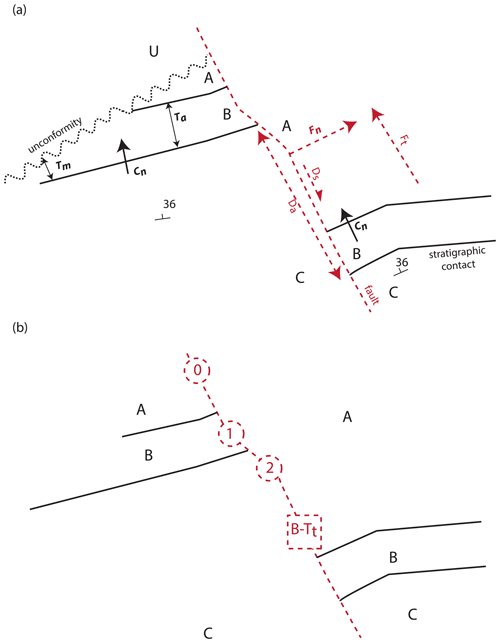

Figure 5Positional calculations. (a) Apparent unit thicknesses are calculated by calculating the normal distance from a contact (Ta) and are then transformed to “true” thicknesses by considering the local dip of the bedding. Apparent displacement is calculated by matching equivalent contacts across the fault, in this example the B–C contact (Da). This is then transformed to “true” displacement by assuming a down-dip slip vector. Finally, the downthrown direction is calculated by examining the cross product of the fault trace (Ft) and the dip direction of the strata multiplied by the displacement. See text for details. (b) If the direct calculation of fault displacement is not possible because equivalent contacts across the fault cannot be established, then a minimum displacement can be estimated by the stratigraphic offset in terms of unit thicknesses. In the example here, the dashed red square indicates that the fault locally separates units A and C, and thus the minimum displacement is the thickness of unit B, which we were able to calculate above. If the unit thickness is not calculable for some reason, the stratigraphic offset between units A–A, A–B and A–C indicate a stratigraphic offset of 0, 1 and 2 stratigraphic units, respectively (dashed red circles).

3.1.5 Local unit thickness

The local apparent thickness of units is calculated by finding the intersection of a line normal to the local tangent of a stratigraphic contact and the next stratigraphic contact (Fig. 5). Based on the stratigraphic relationship there are three possibilities.

-

If the next contact is the stratigraphically adjacent and higher contact, the distance is calculated (Ta) and stored as a local apparent thickness measurement.

-

If the next contact is stratigraphically higher but not stratigraphically adjacent, the distance is calculated and stored as the minimum apparent thickness (Tm).

-

In all other circumstances, no calculation is made.

True actual and minimum thicknesses can then be calculated from the apparent actual and minimum thicknesses as follows:

where Tt is the true dip, Ta is the apparent dip and θ is the dip of the bedding relative to the land surface (Fig. 5, Sect. 2.3.2).

As these calculations can potentially be made for each node of a stratigraphic contact, we often end up with multiple estimates per unit, for which we can calculate the aggregated information as follows.

-

If we have true actual thicknesses for a unit, we store the median and standard deviation of thicknesses and use the median of the actual thicknesses to calculate the local normalized thickness for each calculated node.

-

If we only have minimum thicknesses, we store the median and standard deviation of the minimum thicknesses and use the median of the normalized thicknesses to calculate the local normalized thickness for each calculated node.

-

If we have neither actual nor minimum thicknesses, (if needed) we use the median of the medians of thicknesses of all units as a rough estimate of the thickness, and no normalization is possible.

Outputs from map2loop consist of the following elements:

-

a series of points;

-

apparent, actual and minimum, and normalized actual and minimum thicknesses for each node (and error estimates where appropriate);

-

a table of summary thicknesses for all units.

3.1.6 Local fault displacement

We have implemented three distinct methods of estimating the displacement across faults, depending on data availability.

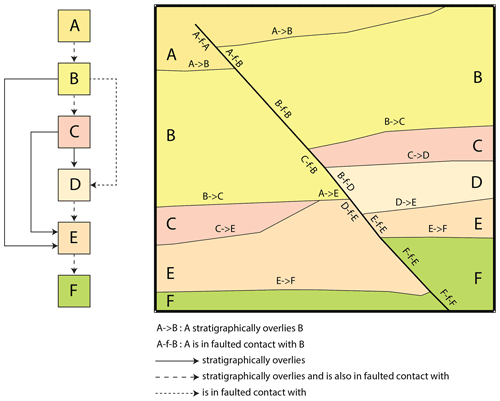

Figure 6Example topological relations extracted from the map by the map2model library. In this map we have six units (A–F) that are locally in contact with each other either by normal stratigraphic relationships (A–>B, signifying that A is younger) or separated by a fault (A–f–B, with no relative age significance). Once these individual binary relationships are aggregated by map2model into a single graph, specific pairs of units may be stratigraphic only (solid line), a combination of stratigraphic and fault relationships (long dashed line), or fault only (short dashed line). Intrusive relationships (not shown here) will also be extracted from the map where present. These relationships form the basis of our understanding of the local stratigraphic graph.

The most complete analysis of fault displacements is based on identifying equivalent stratigraphic contacts across a fault and measuring their apparent offset (Fig. 6a Da), assuming that these are not growth faults. If we combine this with the local interpolated estimates of dip and azimuth for the whole map (Sect. 3.2.4), and we know the orientation of the slip vector, we can calculate the true fault offset (Fig. 5a). Unfortunately, slip vectors are often hard to measure in the field and rarely recorded in geological maps. Given this, we can make an arbitrary assumption that the slip vector is down-dip (Ft), and then calculate the displacement based on the dip of the bedding and the dot product of the contact and fault trace normal as follows:

where Dt is the true displacement, Da is the apparent displacement, Cn is the 2D contact normal, Fn is the 2D fault normal and θ is the dip of the bedding. Since these are local estimates, we can have multiple estimates along the same fault, in which case even these poorly constrained displacement estimates are of interest, as the relative displacement pattern along the fault can still be determined. Where these displacement calculations can be made, we can also determine the local downthrown block by comparing the sense of displacement (dextral or sinistral) with the dip of the strata (Fig. 5h). Specifically, the downthrown direction is given by considering the cross product of the fault tangent, the contact normal and the sign of the relative offset as follows:

where W is the downthrow direction, Ft is the fault tangent, Cn is the contact normal and sgn(Ds) is the sign of the apparent displacement sense (positive is dextral). If W is negative, the downthrown direction is defined by the normal to the fault trace with a right-hand rule and by the opposite direction if the result is positive. The ability to match equivalent stratigraphic contacts across a fault depends on the type of geology, the scale of the project and the detail of the mapping.

A second level of displacement estimates can be made by comparing the stratigraphic offset across the fault, and thus if we have a stratigraphy going from older to younger of C–B–A and a fault locally separates unit A and unit C, then we can assume the offset has to be at least the thickness of units B. Thus, if we have estimates of unit thickness (see Sect. 3.1.5) then we can estimate minimum offset (Fig. 5b). If, for the same stratigraphy, the fault offsets the same unit A–A or stratigraphically adjacent units A–B, the conservative estimate of minimum displacement would be zero.

Finally, if we do not have unit thicknesses available, we can always simply record the stratigraphic offset in terms of number of units (Fig. 5b). Thus, in the original example above, an A–C relationship across a fault can be recorded as a stratigraphic offset of 2. The last two methods are not currently used in the automated workflow to determine fault offset; however, they do provide insights into which faults are the most important in a region.

3.2 Gradient outputs

The second class of modelling constraints derived by the map2loop algorithms provide gradient data. Gradient data in this context refers to information that defines the local orientation of a feature, such as the dips of stratigraphic contacts or faults. In this section we describe the combinations of data used to create these augmented data.

3.2.1 Bedding orientations

The orientation data produced by the map2loop library is derived from a combination of gradient and positional sources, specifically the bedding orientation point layer (x, y, dip, azimuth, polarity; Sect. 2.4), the DTM (z; Sect. 2.6) and the Chronostratigraphic Polygon layer (unit; Sect. 2.1). A filter is applied to remove observations where the dip is zero, as our experience has shown that this usually reflects a measurement where the dip was unknown, rather than a true dip of zero. Optionally, the number of points can be decimated based on taking every nth point from the layer. More sophisticated decimation procedures for orientation data, such as those described in Carmichael and Ailleres (2016), are the subject of current work. Internally, the code uses a dip direction convention, and thus if strike data are provided, we convert these to dip direction before calculation.

Secondary gradient information can be assigned along all the stratigraphic and intrusive contacts based on a series of simple assumptions.

-

The dip direction of all dips is assumed to be normal to the local tangent of the contact and are defined as zero at north and positive clockwise.

-

The dip can either be uniformly defined or, for the case of stratigraphic contacts, based on interpolated dips (see Sect. 2.2.4).

-

The azimuth of intrusive contacts for dome- or saucer-shaped bodies can be arbitrarily selected by choosing the polarity of the dips and the azimuth (domes have outward dips and inverse polarity, saucers have inward dips and normal polarity).

3.2.2 Fold orientation

If fold axial traces are available (and in areas with otherwise sparse bedding information), it can be useful to seed the model with extra orientation information that guides the anticline–syncline geometries.

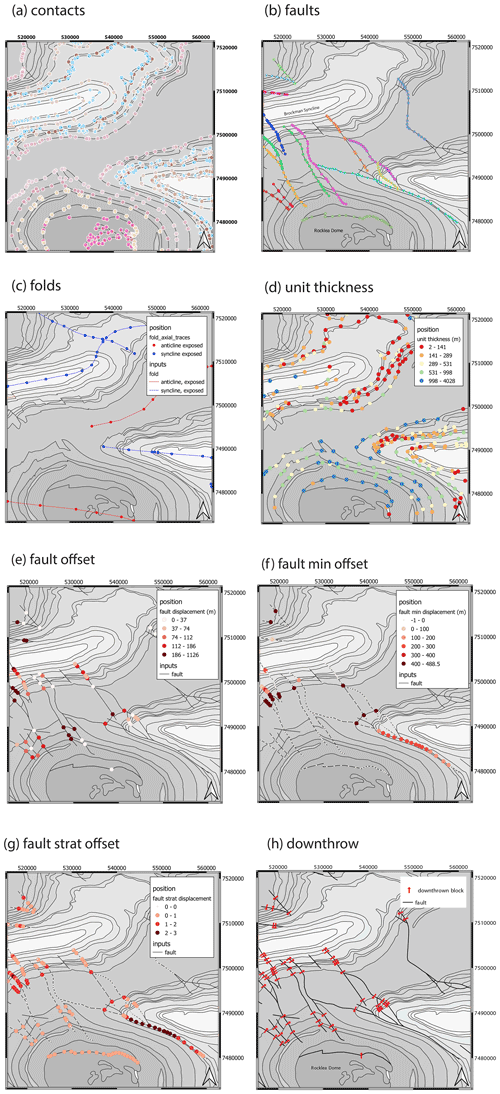

Figure 7Positional information derived from the map. (a) Basal contacts of stratigraphic units; colours are the same as Fig. 1. (b) Fault traces, with colours randomly assigned to each fault; only faults longer than a defined length, in this case 5 km, are processed. (c) Fold axial traces. (d) Local unit thicknesses. (e) Fault offset, assuming down-dip displacement. (f) Fault offset derived from minimum stratigraphic offset. (g) Stratigraphic fault offset. (h) Fault downthrown block direction.

For each fold, outputs from map2loop consist of the following elements:

-

positions,

-

a series of dip/azimuth pairs offset each side of the fold axial trace,

-

a stratigraphic unit for each position.

3.2.3 Fault orientation

If fault orientation data are available, either as numeric dip or azimuth (e.g. dip value: 75; azimuth value: 055) or in text form (e.g. dip values of “shallow”, “medium”, “steep”, “vertical” and azimuth values of “northeast”) then this is recovered and stored, otherwise the fault dip orientation is calculated from the fault tips, and the dip is set to a fixed value or is allowed to vary randomly between upper and lower limits. In the absence of other supporting information, the qualitative dip information assumes equally spaced dips between the shallowest and steepest term, and assumes that the shallowest term is not horizontal, so in the example above we would see the following values: “Shallow” = 22.5; “Medium” = 45; “Steep” = 67.5; and “Vertical” = 90.

For each fault, outputs from map2loop consist of the following elements (Fig. 7b):

-

positions of the endpoints and midpoint of the fault,

-

a dip–azimuth pair for each location.

3.2.4 Interpolated orientation field

It became apparent during the development of this library that obtaining an estimate of the dip from bedding everywhere in the map area was a necessary precursor to calculating important information such as unit thickness (Sect. 3.1.5), fault offset (Sect. 3.1.6) and the dips of contacts at arbitrary locations. In an attempt to retain more geological control over the sub-surface geometries, de Kemp (1998) used polynomial and hybrid B-spline interpolation techniques to extrapolate geological structure. All more recent 3D geological modelling packages involve generalized interpolants of one form or another (Wellmann and Caumon, 2018; see Grose et al., 2021, for a discussion of the strengths and weaknesses of the different interpolants). At the scale of the map, we observe that local bedding azimuth measurements are often relatively poor estimators of the map-scale orientation field. This occurs because the point observations record second-order structures, such as parasitic folds. In order to avoid these issues we have instead chosen to use the primary orientation data only for dip magnitudes, for which we have no alternative, and use the azimuth of stratigraphic contacts as the best estimator of the regional azimuth field. To this end we calculate a regular dip field using a multiquadratic radial basis function (RBF) of the primary orientation 3D direction cosines using the scipy library and separately use an RBF to interpolate the 2D contact azimuth direction cosines (lc, mc, Fig. 7d). Each set of orientations from structurally coherent “super-groups” (see Sect. 2.4) are interpolated separately. For each super-group, we then combine these into a single direction cosine (lo, mo, no i.e. the direction cosines of the interpolated bedding orientations), taking the no value from the interpolated 3D direction cosines and the lcmc terms from the 2D direction cosines and normalizing so that the vector has a length of 1. This gridded field is then available for the thickness and offset values as discussed above but could conceivably be used with appropriate caution as additional estimates of orientation in parts of the model where no direct observations are available or for cross-validation with known values.

3.3 Topological outputs

The third class of modelling constraints derived by the map2model algorithms provide the spatial and temporal topology of the map layers. Specifically, it creates network diagrams showing the stratigraphic relationships between units in the region of interest (Burns, 1988; Perrin and Rainaud, 2013; Thiele et al., 2016), network diagrams of the relationships between faults, and relationship tables showing whether a particular fault cuts a unit or group.

3.3.1 Local stratigraphy

The spatial and temporal relationships integrated into geological maps provide a key constraint for 3D geological modelling (Harrap, 2001; Perrin and Rainaud, 2013). At the scale of a map sheet, state- (or province-) or country-level stratigraphic legends are necessarily simplified models of the complex range of stratigraphic relationships. Since our aim is to build a model for an arbitrary geographic region, we need to be able to extract the local stratigraphic relationships rather than just relying on the high-level summaries. The map2loop library uses the map2model C++ library to extract local stratigraphic, structural and intrusive relationships from a geological map. map2model uses two of the layers sourced by map2loop: the chronostratigraphic Polygon layer (Sect. 2.1) and the fault Polyline layer (Sect. 2.2).

Shared contacts between Polygon-defining units, calculated by an intersection calculation that results in a Polyline, are labelled as either intrusive, stratigraphic or faulted based on the nature of the units either side of the contact and the presence or absence of a spatially coincident fault Polyline (Fig. 6). The logic is as follows:

-

if a Polyline between units coincides spatially with a fault Polyline, the Polyline is labelled as a fault contact;

-

if a Polyline is between one intrusive unit and a volcano-sedimentary unit, the Polyline is labelled intrusive if the intrusive unit is younger than the other unit or stratigraphic if it is older;

-

if the Polyline is between two intrusive units, the Polyline is labelled as intrusive.

Otherwise, the Polyline is labelled as stratigraphic.

The relative age of each unit is determined from the minimum and maximum ages supplied for each unit in the map, and if these are not available or they have the same age or age range, then no age relationship is assigned. The primary outputs from map2model are a series of network graphs in Graph Meta Language formal (GML) that can be visualized by the free but not open-source yEd package (https://www.yworks.com/products/yed, last access: 9 August 2021) or the open-source Gephi package (https://gephi.org/, last access: 9 August 2021). The map2model code provides graphs of all igneous, fault and stratigraphic contacts, and the stratigraphic relationship graph underpins the definition of local stratigraphy in the map2loop system.

As not all maps provide minimum and maximum age information, map2loop can optionally update the stratigraphic ordering by using a national or regional reference stratigraphic database (Sect. 2.5). Depending on the structure of the database, an age-sorted ordering of all units in the database or pairwise stratigraphic relationships such as “unit A overlies unit B”, have to be used to refine the ordering extracted from the map. Even after these progressive refinements, ambiguities in relative age of units usually remain. At the moment map2loop arbitrarily chooses one of the distinct stratigraphic orderings as the basis for its calculations, but clearly this is an important source of uncertainty that could be used stochastically to explore stratigraphic uncertainty.

We can reduce the uncertainty in the stratigraphic ordering that comes from lack of information in the map as to relative ages, or ambiguous relative map–age relationships, by considering one higher level of stratigraphy, which we will call “groups” but could be any higher rank of classification. This reduces the uncertainty, as the uncertainty in relative ages between groups is typically smaller than the relative ages of any two units if we ignore their group relationships.

Since map2loop is primarily aimed at implicit modelling schemes, there is a considerable advantage in reducing the number of stratigraphic groups that have to be interpolated separately, since the more orientation data we have for a structurally coherent set of units the better the interpolation. To this end we use the mplstereonet Python library to compare each group's best-fit girdle to bedding orientation data, and thus if their respective girdle orientations are within a user-defined value, they can be considered to be part of the same “super-group”.

The outputs of map2loop are a stratigraphic table (csv format) defining a distinct ordering of units and groups, plus a table of which groups form super-groups to be co-interpolated.

3.3.2 Fault–fault relationships

The intersection relationships between pairs of faults are calculated by map2model by analysing which faults terminate on another fault. This is assumed to represent an age relationship, with the fault that terminates assumed to be the older fault. The map2loop library converts this information into a table of binary relationships, such as Fault X truncates Fault Y, that are then compiled into a set of graphs of fault–fault relationships.

3.3.3 Fault–stratigraphy relationships

The intersection relationships between stratigraphic units and groups are calculated by the map2model library by analysing which geological Polygons have sections that are spatially coincident with faults. These are then converted by the map2loop library into two tables of the binary stratigraphic relationships: unit/group A is cut by/is not cut by fault X.

3.4 Validation of augmented data

Once the augmented data types have been calculated by map2loop and map2model, a final validation of the data is automatically performed so that there are no “orphan” data, for example orientation data for units that will not be modelled and a unit in the stratigraphy for which we have no contacts or orientations. Although this can obviously happen in nature, current modelling systems struggle with this concept, and thus we need to ensure that the model will actually build by removing unresolvable data.

3.5 3D modelling using map2loop and map2model augmented outputs

The two open-source modelling packages we have targeted use overlapping sources of information but distinct data formats to perform their modelling (Table 2). Some of the augmented data produced by the library are not (yet) explicitly required by any of the packages but are useful datasets for contextual regional analysis and can provide some guidance for studies unrelated to 3D modelling. A partner project led by the Geological Survey of Canada is developing a Knowledge Manager to support higher-level information as a geoscience ontology to provide conceptual frameworks for modelling, aggregated petrophysical data and other basic knowledge of relevance to 3D modelling workflows (Brodaric et al., 2009; Ma and Fox, 2013).

The outputs of map2loop and map2model described above provide all of the information required to build 3D geological models in GemPy (de la Varga et al., 2019) and LoopStructural (Grose et al., 2021).

The ability to generate all the necessary input data for a geological model from set of source layers in a matter of minutes demonstrates the potential for this approach to reduce the entry barrier for geologists who wish to make 3D models as part of their exploration or research programmes.

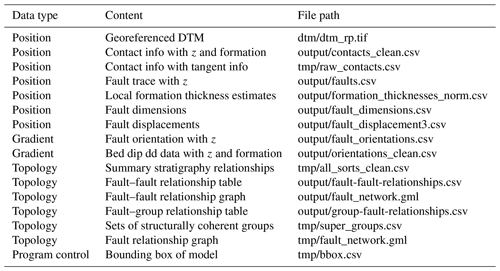

The results of the first stage of the automated workflow controlled by map2loop and including the map2model libraries are a set of augmented outputs that are both useful in their own right in terms of their ability to produce unbiased analyses of the map data, and as inputs the 3D modelling packages. A summary of all the files used by the 3D modelling engines generated by map2loop and map2model, together with file types, is given in Table 4.

Table 4Augmented outputs provided by map2loop and map2model. Many other outputs that are not described here are not currently used by the target modelling engines, and some simply provide debugging information.

4.1 Results of positional calculations

The positional information extracted from the various input data include the following results.

-

Basal contacts of stratigraphic units (Fig. 7a) that have been optionally decimated are found. Black lines show the original Polygon boundaries, and the coloured circles show the location of the base of the stratigraphic unit. Lines with no basal contacts are sills that are not yet handled by the code or the modelling engines

-

Fault traces, with colours randomly assigned to each fault and only using faults longer than a defined length (in this case 5 km) are processed (Fig. 7b) and optionally decimated. Some faults as mapped (near 56 000, 7 496 000) were ignored because they formed closed loops or were mapped with acute angles, which the modelling engines were not able to deal with properly and are in any case unlikely to be correctly drafted in this map.

-

Fold axial traces are found (Fig. 7c) and optionally decimated.

-

Local unit thicknesses are found as apparent, true and normalized thicknesses (each true thickness estimate divided by the median value for each unit) (Fig. 7d). In areas with sills, the code does not attempt to calculate thicknesses.

-

Fault offset is found, with both apparent and inferred true displacement assuming down-dip displacement (Fig. 7e).

-

Fault offset derived from minimum stratigraphic offset is found (Fig. 7f).

-

Stratigraphic fault offset is found (Fig. 7f).

-

Fault downthrown block direction found (Fig. 7g).

4.2 Results of gradient calculations

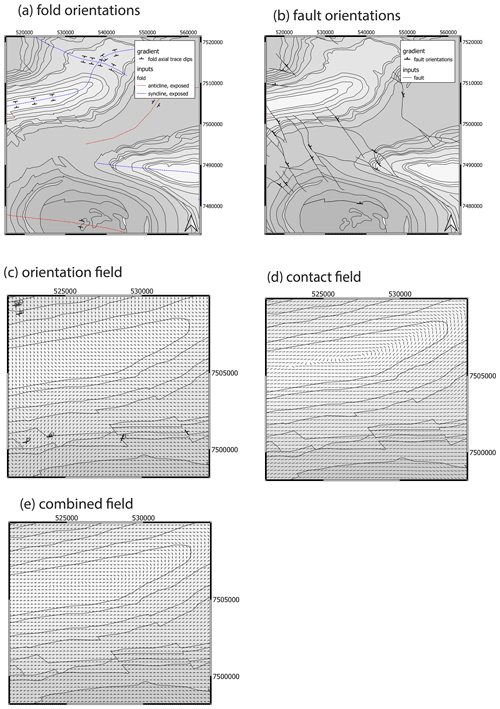

The gradient information extracted from the various input data include the following results.

-

Bedding orientations near fold axial traces are found (Fig. 8a).

-

Fault orientations (Fig. 8a) are found and optionally decimated. Fault mid-points are shown here, but the same values are also placed at each fault tip.

-

Interpolated orientation data are calculated as interpolated lc, mc and shown for a part of the NW area of the map (Fig. 8b).

-

Interpolated contact tangents, calculated as interpolated lo, mo, no direction cosines, are shown for a part of the NW area of the map (Fig. 8c).

-

Combined information from interpolated dips and interpolated contacts is shown for a part of the NW area of the map (Fig. 8d).

Figure 8Gradient information derived from map, zoomed into to Brockman syncline. (a) Bedding orientations near fold axial traces. (b) Fault orientations. (c) Interpolated orientation data, calculated as interpolated lc,mc. (d) Interpolated contact tangents, calculated as interpolated direction cosines. (e) Combined information from interpolated dips and interpolated contacts. The shown area in (c), (d) and (e) is the NW part of the map shown in (a) and (b).

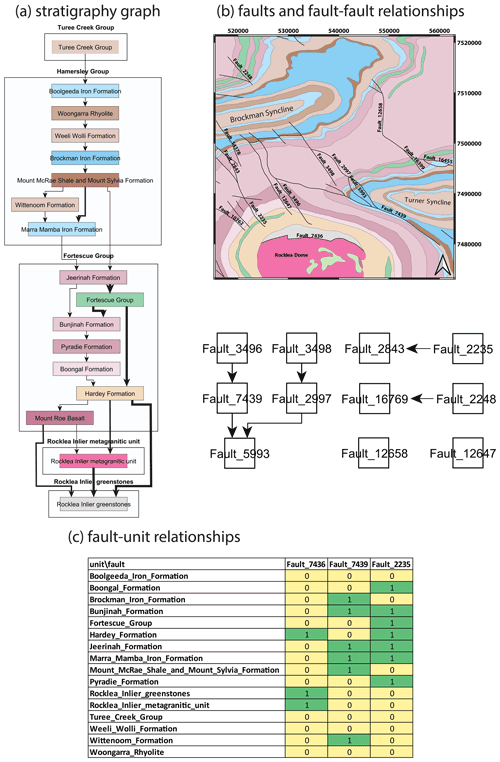

Figure 9Topological information derived from the map. (a) Stratigraphic age relationships extracted from map and ASUD. Arrows point to older units. The thickness of arrows is proportional to contact length. (b) Map with fault labels for faults longer than 5 km, and below the map the resulting fault–intersection relationships are shown. Arrows point to older faults. (c) Subset of fault–unit truncation relationships: green cells show stratigraphic units cut by faults, and yellow cells show that the unit is not cut by a given fault.

4.3 Results of topological calculations

The gradient information extracted from the various input data include the following results.

-

Stratigraphic ages relationships are extracted from the map and ASUD. Arrows point to older units. The thickness of the arrows is proportional to contact length (Fig. 9a).

-

A fault–intersection relationship graph is created (Fig. 9b).

-

A subset of fault-unit truncation relationships is given; the green cells show stratigraphic units that are cut by faults, and the yellows cells are not cut by faults (Fig. 9c).

4.4 Results of 3D model calculations

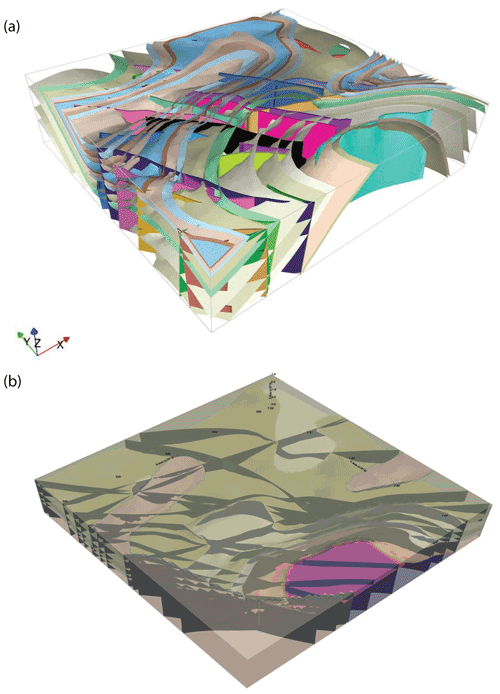

Once the automated data extraction has been completed, the augmented data are passed to the 3D modelling engines to automatically build the 3D geological model (Fig. 10). Note that two packages use different subsets of the available data, as well as different interpolation algorithms, and hence should not be expected to produce identical results. GemPy calculates limited-extent faults but currently displays them as extending across the model area. In both cases a first-pass 3D model that respects the major geological observations is produced.

The example map and associated data used in this paper took just over 3 min to deconstruct with map2loop and a further 4–15 min to build with the three target modelling engines running on a laptop computer with 32 GB of RAM and four i7 Intel Cores running at 1.8 GHz. The time taken to deconstruct a map depends on the number of features to be processed (polygons + polylines + points), with the slowest part of the calculation being the extraction of true fault displacements. The time for model construction increases systematically with the increase in resolution of the interpolation and isosurfacing calculations.

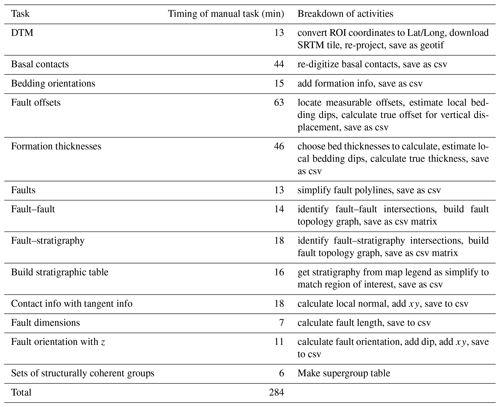

Table 5Time taken to manually reproduce the steps taken by the automated process. The addition of z values can be managed by the 3D modelling packages, and thus the time to perform this task manually is not included, except where it is needed in the calculation (calculation of true formation thickness).

There are currently no other codes that we are aware of that perform the same automated data extraction workflows presented here that are aimed at building regional 3D geological models, and thus questions of external code benchmarking are not possible; however, we have run a comparative experiment where one of the authors (Mark Jessell) extracted the information needed to provide the inputs for LoopStructural from the raw data sources and the timing results are shown in Table 5, and the time taken to extract the data manually (over 4.5 h) does not compare favourably with the automated workflow (3 min). For a one-off map we need to add around 20 min to the automated calculation time to set up the configuration file, but for any additional maps from the same map series, for which we can use the same configuration file, the start-up time is of the order of minutes.

Although the improved speed of data extraction is an advantage, the principal motivation for this study was to develop a system where the complete 3D modelling workflow, including data extraction, could be automated. This is crucial for sensitivity analysis, uncertainty quantification and value of information studies since all these approaches depend on our ability to perform stochastic simulations of the whole 3D modelling workflow, which is not possible if the first manual steps remain unquantified and subject to modeller bias.

The choices made by the map2loop and map2model code are inspired by the thought processes of geologists when manually building a 3D geological model from the same data. There are many small or large decisions and assumptions that are made when developing the model, and the discussion below highlights some of the areas where further work needs to be done to reproduce the manual workflow. In this paper we have used an example from Western Australia; however, similar examples for the Northern Territory, New South Wales, Victoria, Queensland, Tasmania and South Australia can be run using the map2loop library using copies of data stored on the Loop WFS server (https://geo.loop-gis.org/geoserver/web, last access: 9 August 2021).

5.1 Improvements to calculations

The aim of this study was to build an end-to-end workflow from raw map “data” to a 3D model, which we hope to build upon by refining the different steps, as discussed below.

5.1.1 Choice of data

The code as it stands provides limited filtering of the data via decimation and the use of a fault length filter for faults. There are many different reasons for building 3D geological models, and each reason may support a different selection of the available data to ensure critical elements in the 3D model are preserved. In the case of faults, for example, it may be the fault network itself which is important, either as a barrier to or pathway for fluid flow, or it may be the geometric consequences of faulting that are important, e.g. when the goal is to provide prior petrophysical models for geophysical inversions. Apart from fault length, these choices currently need to be made by deleting data at the source; however, a future implementation of “intelligent filtering” that makes clear the reasons for data selection would remove the hidden biases from these choices.

5.1.2 Calculation of unit thickness

The calculation of local unit thickness (Sect. 3.1.5) depends on the local estimate of apparent unit thickness, which is reasonably robust and has been validated by comparison with manual measurements, and also on the local estimate of the dip of the stratigraphy. This dip estimate comes from the application of the scipy radial basis function interpolation library, and in particular the multiquadratic radial basis function, which can be supplemented by a smoothing term. Other radial basis functions such as Gaussian and inverse are available, as well as other schemes such as inverse distance weighting and co-kriging, which all offer tuneable algorithms for estimating the local orientation field. We chose the multiquadratic RBF simply because our experience showed that for the types of geology that we started working on it produced “reasonable” results. It is likely that different geological scenarios may require optimized interpolation schemes (Jessell et al., 2014) as there is no unique solution to this problem.

5.1.3 Calculation of fault offset

The calculation of local fault offset also relies on the interpolated dip field, so the same remarks regarding geologically appropriate interpolators stated in the previous section apply. If we compare the local displacements along a fault, then we also must assume that the unit thickness is the same on both sides of the fault, but at least in general this can be tested directly. In addition, to properly estimate fault displacement, which we have validated by manual measurements, we need to know the fault displacement vector. One solution, not yet implemented, would be to calculate the relative displacement of lines of intersection of the same dipping stratigraphic units across fold axes either side of a fault.

5.1.4 Calculation of super-groups

The definition of super-groups for co-interpolation of bedding data is performed by comparing the orientation of best-fit girdles. This has a number of flaws. Firstly, disharmonic fold sequences may have the same orientation spread but different wavelengths and thus should not be interpolated together. Secondly, if a particular group is undeformed or lies on one limb of a fold, there may not be a well-developed girdle. A more robust analysis of fold structural information, which includes analysis of representative fold profiles, as described by Grose et al. (2019), would not only allow us to better identify coherent structural domains but would also provide the information needed to use the more sophisticated modelling schemes described in their work.

5.1.5 Choice of stratigraphic ordering

As described in Sect. 3.3.1, the stratigraphic ordering of units is derived from a combination of local observations drawn from the geology Polygon and fault Polyline layers, and a regional or national reference stratigraphy. This process does not generally lead to unique stratigraphic orderings, and at present we simply take the first sorted result from a sometimes long list of alternatives. A second unknown is the nature of the contact between different groups. We use the idea of super-groups to cluster structurally coherent domains, but we do not currently have a good solution to estimate the nature of stratigraphic discontinuities between structurally incoherent domains. The modelling systems we target allow for onlap and erode relationships, and Thiele et al. (2016) suggested the topological analysis of units to identify unique relationship characteristics between groups as a possible way forward, but this remains to be tested. Recent attempts at the automatic extraction of stratigraphic information from 3D seismic data (Bugge et al., 2019) could also constrain our systems in basin settings.

5.1.6 Analysis of fault–fault and fault–unit topology

The assumption that a fault or unit that truncates against another fault represents an age relationship is reasonable, but exceptions obviously exist in reactivated faults and growth faults. At the present time if a cycle in fault age relationships is discovered, e.g. Fault A cuts Fault B, Fault B cuts Fault C, and Fault C cuts Fault A, one of the age relationships is removed arbitrarily. A better approach may be to look at displacement, length or some other characteristic such as stratigraphic offset to make that decision. A further test may be the centrality of a fault, for which there are several methods (Freeman, 1977), e.g. those related to how many other faults are truncated by a specific fault. These fault–fault and fault–unit age relationships could provide further constraints on the overall stratigraphic ordering of units and show the structural history of a region which would both be valuable inputs to time-aware modelling systems such as LoopStructural.

5.2 Limitations in resulting 3D models

Given the complexity of the task, the limitations and somewhat arbitrary nature of some of the choices described above, it is perhaps surprising that we ever get a good 3D model out of the system. Conversely, there are a number of other reasons why having deconstructed a map we do not end up with a 3D model that meets our expectations or needs. When running the code over different types of geology, we need to distinguish between two types of results: firstly, has the code correctly and completely extracted the available data? Secondly, is this data sufficient to build a 3D geological model? Our experience from different geological terranes, including deformed basins such as Hamersley Basin, the Yilgarn Craton Granite–Greenstone Terranes and the igneous complexes in the South-West Terrane, all in Western Australia, is that the code provides the data we would expect as geologists, but in more complexly deformed terranes such as Granite–Greenstone belts, the 3D models do not live up to our mental images. Typically, the 3D fault networks look reasonable, but the stratigraphic surfaces only approximately match our expectations. These trials are limited by the lack of 3D “truth” at the regional scale, and thus it is hard to quantify these mismatches, as we can only compare against our prior concepts of the 3D geology with all the associated inherent biases. If forced to make a model in these regions, geologists will draw heavily on their expectations, so this form of modelling is not so much a test of their concepts as it is a realization of them. This opens a pathway to how to deal with conceptual uncertainty discussed below. It is beyond the scope of this study but very much a topic of interest that may in the future allow these codes to work in a wider range of geological settings.

It is conceivable that we could take these models as starting points for manual refinement of the models, either by adding additional “fictive” data so that the model better matches our pre-conceived notions as geologists or by exporting the model to a system where manual manipulation of the surfaces is possible. However, doing so defeats the aims of our approach as both approaches introduce modeller-specific biases and void any attempts to use stochastic analyses of alternate parameters, data or feature attribute choices.

5.2.1 Insufficient data

All geological maps are models, as even in areas of 100 % outcrop the map is the sum of hundreds of local observations and interpretations, and in most areas the gaps in outcrop mean that the map can only provide a subset of the potential surface information. It may well be that the surface map does not possess enough information to constrain a 3D model. In many regions, the surface of the Earth is covered by soils or surficial deposits (colluvium, alluvium, etc.) that prevent direct observation of the bedrock geology. In this case there is simply no map to deconstruct. As regional geophysical datasets became more widespread, interpreted maps of the top of bedrock started to be produced (such as the GSWA test case described here), together with estimates of the geometry of the cover–bedrock interface (Ailleres and Betts, 1998). map2loop contains example code showing how these may be combined to replace the surface geology as inputs for modelling but were not needed for the Hamersley test case. The integration of geophysics into the workflow is being developed by the Loop consortium (Giraud et al., 2021), and is beyond the scope of this paper, but could help to define subsurface orientations or even the (automatic) extraction of geological structures from geophysical data (Wu and Hale, 2015; Vasuki et al., 2017; Wellmann et al., 2017; Lindsay et al., 2020; Guo et al., 2021).

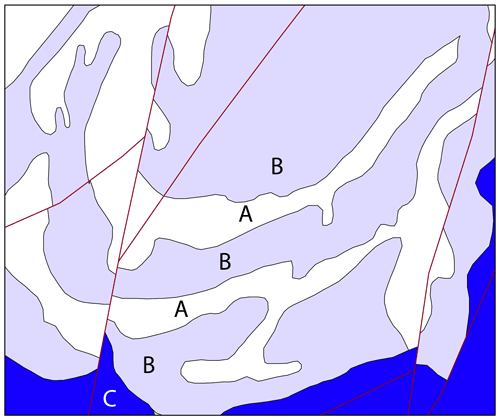

Even when surface geology maps are available, interpreted cross sections are usually added to constrain the 3D geology; however, even if they are constrained by, e.g. geophysical data, by direct interpretation of seismic data, or by gravity or magnetic validation, they are still usually less well constrained than the surface data. Even when seismic data are available, Bond et al. (2007) has shown that this prior experience is a significant source of bias for the interpreted section. Drill hole data are not currently incorporated into the workflow; however, the work of Joshi et al. (2021) goes some way to providing that possibility. Geophysically unconstrained cross sections drawn by geologists necessarily depend on two sources of information: the geology map, in which case a future map2loop could in principle provide the equivalent information, or by the geologists' prior experience, which is harder to codify and represents a significant future challenge. Many maps indicate a level of confidence in contacts and fault style via dashed lines, and whilst at present map2loop does not make use of this data, it will clearly be an important source of information when incorporating constraints during stochastic simulations. Not all maps follow a chronostratigraphic logic, for example for a map legend of C–B–A (in decreasing age, Fig. 11) a local area of the map may actually show up-sequence orderings of the type C–B–A–B–A–B, and in order for a 3D model to be built they would have to be recoded as C–B1–A1–B2–A2–B3–A3. Of course the repetition of the A–B may be due to deformation (folding of the sequence or thrust repetition); however, it often just represents a level of stratigraphic detail considered unimportant at the scale of the map or a deliberate avoidance of implying knowledge about the local stratigraphy.

Figure 11Example of lithological map descriptions that need recoding in order to work in a chronostratigraphic modelling workflow. Assuming that the repetition of units is not structurally controlled, the lithostratigraphic sequence C–B–A–B–A–B–A needs to be recoded as C–B1–A1–B2–A2–B3–A3.

In the early stages of mapping, the locations of contacts can be quite hard to define, so one approach would be to avoid the use of contacts altogether, and the SURFE package (Hillier et al., 2014; de Kemp et al., 2017) allows 3D model construction without pre-defined contact locations.

As has been mentioned earlier, in many areas the geology of interest is buried beneath regolith or basins, and thus a map-based approach may not be appropriate. Geologists are very good at building models in such data-poor areas, although validation of 3D geological models is often limited to sparse drilling. In this case it is easy to prove that the model is wrong, but it is much harder to say why.

A second consideration is the actual availability of the data in digital forms. Both within Australia and internationally, each geological survey has developed its own internal standards for storing and providing outcrop databases, and may do not provide this data at all, except with the map itself. As with the outcrop databases, each country around the world has made their own choices as to the development (or lack thereof) of a standard stratigraphy for the country and the public access to this data. One outcome of increased automation of information extraction from geological maps and other forms of geological data may be the need to establish “minimum data standards” so that the data needed for each type algorithm is made available.

5.2.2 Poor-quality data

The process of making a map, like any human endeavour, is subject to error, either because of the primary observation or due to the compilation of that information into map form, such as the closed loop fault shown in Fig. 8b. Some analysis of map logic can be made if the information in the input map or stratigraphy is incorrect, such as the fault cycles described in Sect. 3.3.2, although the choice of how to break the cycle is currently arbitrary, a future enhancement may compare the fault relationships with orientation information, for example, to make a better choice. If a 3D model fails to build using the deconstructed data, one may assume there are inconsistencies in the input data. The issue here is the modelling engine is unlikely to indicate which data are causing errors, and thus a more robust map validator that can identify potential issues prior to 3D model input and provide guidance to correction would be useful. At present, small mismatches between nodes in coincident Polygons and Polylines can be accommodated.

5.2.3 Incorrect deconstruction of the data

As discussed in Sect. 4.1, map2loop makes a number of simplifications during the deconstruction process. Estimates of fault displacement and unit thickness could be automatically checked for consistency along a contact or fault, which may improve the estimates fed to the modelling schemes.

5.2.4 Incomplete 3D modelling algorithms