the Creative Commons Attribution 4.0 License.

Evaluation of iterative Kalman smoother schemes for multi-decadal past climate analysis with comprehensive Earth system models

Javier García-Pintado

André Paul

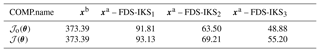

Paleoclimate reconstruction based on assimilation of proxy observations requires specification of the control variables and their background statistics. As opposed to numerical weather prediction (NWP), which is mostly an initial condition problem, the main source of error growth in deterministic Earth system models (ESMs) regarding the model low-frequency response comes from errors in other inputs: parameters for the small-scale physics, as well as forcing and boundary conditions. Also, comprehensive ESMs are non-linear and only a few ensemble members can be run in current high-performance computers. Under these conditions we evaluate two assimilation schemes, which (a) count on iterations to deal with non-linearity and (b) are based on low-dimensional control vectors to reduce the computational need. The practical implementation would assume that the ESM has been previously globally tuned with current observations and that for a given situation there is previous knowledge of the most sensitive inputs (given corresponding uncertainties), which should be selected as control variables. The low dimension of the control vector allows for using full-rank covariances and resorting to finite-difference sensitivities (FDSs). The schemes are then an FDS implementation of the iterative Kalman smoother (FDS-IKS, a Gauss–Newton scheme) and a so-called FDS-multistep Kalman smoother (FDS-MKS, based on repeated assimilation of the observations). We describe the schemes and evaluate the analysis step for a data assimilation window in two numerical experiments: (a) a simple 1-D energy balance model (Ebm1D; which has an adjoint code) with present-day surface air temperature from the NCEP/NCAR reanalysis data as a target and (b) a multi-decadal synthetic case with the Community Earth System Model (CESM v1.2, with no adjoint). In the Ebm1D experiment, the FDS-IKS converges to the same parameters and cost function values as a 4D-Var scheme. 
For a similar number of iterations to the FDS-IKS, the FDS-MKS results in slightly higher cost function values, which are still substantially lower than those of an ensemble transform Kalman filter (ETKF). In the CESM experiment, we include an ETKF with Gaussian anamorphosis (ETKF-GA) implementation as a potential non-linear assimilation alternative. For three iterations, both FDS schemes obtain cost function values that are close to each other and (with about half the computational cost) lower than those of the ETKF and ETKF-GA (which have similar cost function values). Overall, the FDS-IKS seems more adequate for the problem, with the FDS-MKS potentially more useful to damp increments in early iterations of the FDS-IKS.

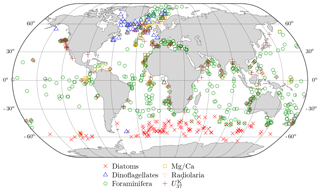

Earth system models (ESMs) to simulate the Earth system and global climate are usually developed using the present and recent historical climates as references, but climate projections indicate that future climates will lie outside these conditions. Paleoclimates very different from these reference states therefore provide a way to assess whether the ESM sensitivity to forcings is compatible with the evidence given by paleoclimatic records (Kageyama et al., 2018). Coupled atmosphere–ocean general circulation models (AOGCMs) and comprehensive ESMs have enabled the paleoclimate community to gain insights into internally generated and externally forced variability and to investigate climate dynamics, modes of variability (Ortega et al., 2015; Zanchettin et al., 2015), and regional processes in detail (PAGES 2k-PMIP3 group, 2015). However, AOGCMs and comprehensive ESMs demand high computational resources, which severely limits the length and number of affordable model integrations in current high-performance computers (HPCs). Thus, the last millennium ensemble with the Community Earth System Model (CESM) (Otto-Bliesner et al., 2016), which is still a considerable achievement, has only m=10 members for the full-forcing transient simulations for the years 850–2005 in the Common Era. Also, the multi-model ensemble in the Paleoclimate Model Intercomparison Project (PMIP) for experiments contributing to the Coupled Model Intercomparison Project (CMIP, since CMIP6) relies on coherent modelling protocols followed by the paleoclimate modelling teams in independent HPCs (Jungclaus et al., 2017). 
On the other hand, the gathering and analysis of existing and new paleoclimate proxy records to create multiproxy databases also relies on collective efforts focused on specific time spans, such as the global multiproxy database for temperature reconstructions of the Common Era (PAGES2K) (PAGES2k Consortium, 2017) or the Multiproxy Approach for the Reconstruction of the Glacial Ocean surface (MARGO) database (MARGO Project Members, 2009), which focuses on the Last Glacial Maximum (LGM), a period between 23 000 and 19 000 years before present (BP). The quantitative fusion of comprehensive ESMs and paleoclimate proxy observations should provide deeper insight into past climate low-frequency variability, which (here and throughout the article) we refer to as variability on timescales 30–50 years or longer (Christiansen and Ljungqvist, 2017). However, this fusion is hampered by the high computational demand of AOGCMs and comprehensive ESMs.

The issue of fusing data into models arises in scientific areas that enjoy a profusion of data and use costly models. In the geophysical community this is referred to as inverse methods and data assimilation (DA), whose aim is finding the best estimate of the state (the analysis) by combining information from the observations and from the numerical and theoretical knowledge of the underlying governing dynamical laws. Most known DA methods stem from Bayes' theorem (Lorenc, 1986), and each is made practical by making approximations (Bannister, 2017). In numerical weather prediction (NWP) the assimilation is mostly an initial condition problem. In contrast, the climate of a sufficiently long trajectory is typically much less sensitive to initial conditions, being essentially a sample of the underlying true model climate contaminated by a small amount of deterministic noise due to the finite integration interval (Annan et al., 2005b). The low-frequency errors in deterministic ESMs are therefore mostly dependent on model errors, including the parameters for the small-scale physics, and errors in forcings and boundary conditions.

DA has been used as a technique for low-frequency past climate field reconstruction (CFR) with real case studies, such as the assimilation of marine sediment proxies of sea surface temperature (SST) in a regional ocean model of the North Atlantic at the termination of the Younger Dryas (YD) cold interval (Marchal et al., 2016), and synthetic studies, such as the assimilation of tree-ring width into an atmospheric GCM (AGCM) (Acevedo et al., 2017), analysis of time-averaged observations (Dirren and Hakim, 2005), or evaluation of particle filters for paleodata assimilation (Dubinkina et al., 2011). Including model parameters as control variables, early work in climate analysis was done by Hargreaves and Annan (2002), who evaluated a Markov chain Monte Carlo (MCMC) method with a simple ESM. Later, the technique of state augmentation with model parameters (Friedland, 1969; Smith et al., 2011) and an ensemble Kalman filter (EnKF) (Evensen, 1994) was used by Annan et al. (2005a) and Annan et al. (2005b) in synthetic experiments with an Earth system model of intermediate complexity (EMIC) and with an AGCM coupled to a slab ocean, respectively. The additional issue of sparsity in paleoclimate proxies was addressed by Paul and Schäfer-Neth (2005) for climate field reconstructions with an EMIC and manual tuning. More recent applied work, in part motivated by the non-linearity of climate models, has used four-dimensional variational DA (4D-Var). Thus, Paul and Losch (2012) applied 4D-Var with a conceptual climate model, and Kurahashi-Nakamura et al. (2017) used 4D-Var with the Massachusetts Institute of Technology general circulation model (MITgcm) for ocean state estimation considering joint initial conditions, atmospheric forcings, and an ocean vertical diffusion coefficient as control variables to analyse the global ocean state in equilibrium conditions during the Last Glacial Maximum (LGM). 
We share the motivation of this recent work but put the focus on deterministic and comprehensive ESMs. As, in general, these models are not suited to automatic differentiation (AD) and the development of hand-coded tangent linear and adjoint models is out of reach (so, standard 4D-Var and related hybrid approaches such as En4DVar are not applicable), we seek assimilation strategies that take into account the non-linearity in ESMs and the computational constraints with current HPCs for low-frequency analysis.

Questions remain about how one should choose the control vector for the assimilation. Regarding its dimension, one possibility is to select a relatively high-dimensional control vector and to resort to ensemble methods, which involve a low-rank representation of covariances. An example is the (adjoint-free) iterative ensemble Kalman smoother (IEnKS) in Bocquet and Sakov (2014), which counts on iterations to deal with non-linearity. Also, the IEnKS has been evaluated in a synthetic study with the low-order model Lorenz-95 by state augmentation (Bocquet and Sakov, 2013). However, the ensemble (low-rank) covariances and sensitivities would be noisy because of the small ensemble size. An alternative is to resort to a low-dimensional control vector so that its error covariance is explicitly represented, and the low dimension allows for an estimation of the sensitivity of the observation space to the control vector by conducting individual perturbation experiments of the control variables: i.e. a finite-difference sensitivity (FDS) estimation. With respect to adjoint sensitivities, FDS estimation has the disadvantages that the computing cost is proportional to the dimension of the control vector and that the choice of the perturbations is critical. Too-small perturbations lead to numerical round-off errors, while too-large perturbations lead to truncation errors. An advantage is that FDS considers the full physics of the non-linear model.
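The perturbation trade-off in FDS estimation can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the function `fds_jacobian`, the two-parameter `toy_model`, and the perturbation sizes are hypothetical and are not taken from the experiments in this study. The sketch uses one-sided differences, so that a control vector of dimension q needs q perturbed runs plus one reference run.

```python
import numpy as np

def fds_jacobian(g, theta, deltas):
    """One-sided finite-difference Jacobian of g at theta (q perturbed runs + 1 reference run)."""
    theta = np.asarray(theta, dtype=float)
    y0 = np.asarray(g(theta), dtype=float)       # reference (unperturbed) run
    G = np.empty((y0.size, theta.size))
    for i, d in enumerate(deltas):               # one perturbed run per control variable
        pert = theta.copy()
        pert[i] += d
        G[:, i] = (np.asarray(g(pert), dtype=float) - y0) / d
    return G

# Hypothetical toy "model": two observed quantities driven by two parameters.
def toy_model(theta):
    a, b = theta
    return np.array([np.exp(a) * b, a + b ** 2])

theta0 = np.array([0.5, 2.0])
exact = np.array([[np.exp(0.5) * 2.0, np.exp(0.5)],
                  [1.0, 4.0]])                   # analytic Jacobian at theta0

# Largest Jacobian error for a too-large, a moderate, and a too-small perturbation.
errors = {d: np.abs(fds_jacobian(toy_model, theta0, [d, d]) - exact).max()
          for d in (1e-1, 1e-6, 1e-14)}
for d, err in errors.items():
    print(f"perturbation {d:g}: max error {err:.2e}")
```

In this toy case the moderate perturbation gives the smallest error: the large one is dominated by truncation error and the tiny one by floating-point round-off. With a comprehensive ESM the useful range is also bounded below by internal variability, which this deterministic toy does not represent.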

In any case, in a practical application of such a low-dimensional control vector approach (whose dimension would be imposed by computational constraints), the selection of the control variables should be carefully done. From all available model inputs, the selected control variables (given their respective background uncertainties) and model should try to explain most of the observed variability. In turn, this assumes (a) a general need to perform sensitivity analysis beforehand and (b) that the model has been previously comprehensively tuned. The exclusion of relatively less sensitive inputs from the control vector and the previous tuning would reduce possible compensation effects (i.e. that increments in the control vector due to the assimilation take partial responsibility for errors elsewhere). Nonetheless, some error compensation will always be present (for example, this is intrinsic to the common tuning of the coupled ESM, which follows tuning of individual components) and very difficult to deal with. A striking example is given by Palmer and Weisheimer (2011), who report how an inadequate representation of horizontal baroclinic fluxes resulted in a climate model error equal in magnitude and opposite to the systematic error caused by insufficiently represented vertical orographic wave fluxes. Thus, the selected control vector has the responsibility of embracing the model climate background uncertainty, and their updated values will likely compensate for non-accounted errors. For low-frequency climate analysis it is very likely that after some years of model integration, the modelled climate is less sensitive to (reasonable) initial conditions than to other possible inputs. Thus, one would generally select the most sensitive parameters for the model physics, forward operators, forcings, and boundary conditions for a given situation as a control vector.

Also, regarding initial conditions in a sequence of multi-decadal and longer data assimilation windows (DAWs) with transient forcings, there is no clear consensus about how one should approach the initialization at each DAW. For example, Holland et al. (2013) indicate that initialization had little impact (in general, limited to a couple of years) on Arctic and Antarctic sea ice predictability in the Community Climate System Model 3 (CCSM3) in a perfect-model framework. However, in a later synthetic study including assimilation with a perfect-model framework and an Earth system model of intermediate complexity (EMIC), Zunz et al. (2015) obtained a similar interannual predictability (∼3 years), but noted that the initialization for the DAW can still influence the state at multi-decadal timescales (although with a larger impact of external forcing). Among others, we mention these examples as sea ice has been related to changes in atmospheric circulation patterns and teleconnections with the tropical Pacific and Atlantic oceans (Marchi et al., 2018; Meehl et al., 2016), and these relatively fast climate dynamics can, for example, influence the onset or termination of glacial conditions and stronger climate changes. On the other hand, given the limited predictability at multi-decadal timescales (and to reduce computational costs), the reinitialization in paleo-DA has often been removed after the assimilation altogether, with a common initial integration being applied as background climate for a number of DAWs. This has been named offline assimilation (Acevedo et al., 2017; Hakim et al., 2016; Klein and Goosse, 2017; Steiger et al., 2014). The perspective of the offline approach can be modified when model parameters are included in the control vector because, as opposed to initial conditions, the impact of model parameters does not decay with the model integration. 
In general, updated model parameters as part of the assimilation and their physically consistent climate would then be used as (augmented) initial conditions for a subsequent DAW.

Throughout this study, all observations available during a data assimilation window (DAW) are assimilated in parallel. This has been termed four-dimensional data assimilation or asynchronous data assimilation and is also commonly referred to as the smoothing problem (Sakov et al., 2010). Here we choose the term smoother for the evaluated schemes, but they could just as well be termed asynchronous (or four-dimensional) filters. This study focuses on evaluating two assimilation schemes for low-frequency past climate reconstruction. They are based on finite-difference sensitivities (FDSs) and low-dimensional control vectors and rely on iterations to account for non-linearity. The schemes are then an FDS implementation of the iterative Kalman smoother (FDS-IKS, a Gauss–Newton scheme) and an alternative named the FDS-multistep Kalman smoother (FDS-MKS, based on repeated assimilation of the observations). Other paleoclimate assimilation issues, such as sparsity, measurement error characteristics (e.g. temporal autocorrelation), representation error, the development of forward operators for specific proxies (proxy system models) (Dee et al., 2016; Evans et al., 2013), and further complexities in the model–observation comparison (Goosse, 2016; Weitzel et al., 2018), are not addressed in this paper.

The rest of this article is organized as follows. In Sect. 2, within the broader context of the joint state-parameter estimation problem, we summarize the strong-constraint incremental 4D-Var formulation (Courtier et al., 1994) from a perspective for which the state vector is augmented with the model parameters to arrive, under given assumptions, at the formulation of the iterative Kalman smoother (IKS) scheme as implemented here. Then, we describe the sensitivity estimation and the two schemes, the FDS-IKS and the FDS-MKS, in a concise algorithmic format. The description of our implementation of the Gaussian anamorphosis (GA), which we applied along with an ensemble transform Kalman filter (ETKF-GA) as an alternative non-linear assimilation approach, is also included in Sect. 2. In Sect. 3 we conduct an experiment with a simple 1-D energy balance model (Ebm1D) and present-day NCEP/NCAR reanalysis surface air temperature as a target, and in Sect. 4 we conduct an identical twin experiment with CESM, assimilating MARGO-like data (MARGO uncertainties, timescales, and locations) as an example of a paleoclimate observing dataset. Ebm1D is amenable to automatic differentiation, so that we applied 4D-Var and ETKF as benchmark schemes. CESM lacks an adjoint, and we applied ETKF and ETKF-GA as benchmark schemes. The experimental set-up, results, and a discussion are given in each case. We finish with conclusions in Sect. 5.

2.1 Analysis approach

The problem is to estimate the mean state (seasonal and annual means) of a past climate along a time window for multi-decadal and longer timescales. From a variational perspective, in NWP this would be referred to as a four-dimensional variational data assimilation (4D-Var) problem, in which the initial conditions of a model integration are estimated subject to model dynamics and according to background and observation uncertainties within a data assimilation window (DAW). In NWP, the background (or prior) is normally given by a previous model forecast. In this article, time $t_k$ and its index $k$ measure time relative to the start of the DAW, which is $t_0$, using conventions similar to those of 4D-Var. We use notation as close as possible to that of Ide et al. (1997). We consider a discrete non-linear time-invariant dynamical system model in which $\mathbf{x}_k \in \mathbb{R}^n$ is the state vector at time $t_k$, and $\boldsymbol{\theta}_k \in \mathbb{R}^q$ is a vector of selected inputs in addition to initial conditions (model parameters, forcings, and boundary conditions). We assume a gridded system, in which specification of the model state and other inputs at time $t_k$ uniquely determines the model state at all future times, and that $\boldsymbol{\theta}_k$ is constant during the DAW (i.e. $\boldsymbol{\theta}_k = \boldsymbol{\theta}$ for all $k$). For example, within $\boldsymbol{\theta}$, one can include a constant error term (a bias to be estimated as part of the assimilation) in a prescribed transient radiative constituent (e.g. a CH4 time series).

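Then, we consider an augmented state vector $\mathbf{z}$,

$$\mathbf{z}_k = \begin{pmatrix} \mathbf{x}_k \\ \boldsymbol{\theta}_k \end{pmatrix} \in \mathbb{R}^{n+q}, \tag{1}$$

and an augmented deterministic non-linear dynamics operator $\mathcal{M}$ such that

$$\mathbf{z}_{k+1} = \mathcal{M}(\mathbf{z}_k). \tag{2}$$

Observations at time $t_k$ are represented by the vector $\mathbf{y}_k \in \mathbb{R}^{p_k}$ and related to the model state by

$$\mathbf{y}_k = \mathcal{H}_k(\mathbf{z}_k) + \boldsymbol{\epsilon}_k, \tag{3}$$

where $\mathcal{H}_k: \mathbb{R}^{n+q} \rightarrow \mathbb{R}^{p_k}$ is a deterministic non-linear observation operator that maps from the augmented state $\mathbf{z}_k$ to the observation space, and $\boldsymbol{\epsilon}_k \in \mathbb{R}^{p_k}$ is a realization of a noise process, which consists of measurement errors and representation errors (Janjić et al., 2017). We assume $\boldsymbol{\epsilon}_k$ is a Gaussian variable with mean $\mathbf{0}$ and covariance matrix $\mathbf{R}_k$. The error covariance matrix of a state $\mathbf{z}_k = (\mathbf{x}_k^{\mathrm{T}}, \boldsymbol{\theta}^{\mathrm{T}})^{\mathrm{T}}$, where the superscript “T” denotes matrix transposition, at any time $t_k$ within the DAW is

$$\mathbf{P}_k = \begin{pmatrix} \mathbf{P}_{xx_k} & \mathbf{P}_{x\theta_k} \\ \mathbf{P}_{x\theta_k}^{\mathrm{T}} & \mathbf{P}_{\theta\theta} \end{pmatrix}, \tag{4}$$

where $\mathbf{P}_{xx_k} \in \mathbb{R}^{n \times n}$ is the error covariance matrix of $\mathbf{x}_k$, $\mathbf{P}_{\theta\theta} \in \mathbb{R}^{q \times q}$ is the error covariance matrix of $\boldsymbol{\theta}$, and $\mathbf{P}_{x\theta_k} \in \mathbb{R}^{n \times q}$ is the error covariance between $\mathbf{x}_k$ and $\boldsymbol{\theta}$. The goal in 4D-Var is then to find the initial state $\mathbf{z}_0$ that minimizes a non-quadratic cost function given by

$$\mathcal{J}_1(\mathbf{z}_0) = \frac{1}{2} (\mathbf{z}_0 - \mathbf{z}_0^b)^{\mathrm{T}} \mathbf{P}_0^{-1} (\mathbf{z}_0 - \mathbf{z}_0^b) + \frac{1}{2} \left(\mathbf{y} - \hat{\mathcal{H}}(\mathbf{z}_0)\right)^{\mathrm{T}} \mathbf{R}^{-1} \left(\mathbf{y} - \hat{\mathcal{H}}(\mathbf{z}_0)\right), \tag{5}$$

where $\mathbf{z}_0^b$ is the background estimate of the initial augmented state. The first term (the background term, $\mathcal{J}_b$) measures the deviation between $\mathbf{z}_0^b$ and $\mathbf{z}_0$ in the L2 norm weighted by the inverse of the background-error covariance matrix $\mathbf{P}_0$. The second term (the observation term, $\mathcal{J}_o$) measures the deviation between $\mathbf{y}$ (where $\mathbf{y} = (\mathbf{y}_0^{\mathrm{T}}, \ldots, \mathbf{y}_K^{\mathrm{T}})^{\mathrm{T}} \in \mathbb{R}^p$, indicating all observations throughout the DAW) and its model equivalent in the L2 norm weighted by the inverse of the observation-error covariance matrix $\mathbf{R}$. $\hat{\mathcal{H}}$ is a generalized observation operator mapping from the augmented initial state to all the observations at any time in the DAW (i.e. $\hat{\mathcal{H}}: \mathbb{R}^{n+q} \rightarrow \mathbb{R}^{p}$). The maximum a posteriori estimation in Eq. (5) is also known as conditional mode estimation or the maximum of the conditional density. As presented by Lorenc (1986) from a Bayesian view, this is the maximum likelihood of the state under a Gaussian assumption for the various error terms. The cost function (Eq. 5) is subject to the states satisfying the non-linear dynamical system (Eq. 2) and is known as strong-constraint variational formulation, while the additional inclusion of a term for the model error would lead to a weak-constraint 4D-Var. The solution to the functional $\mathcal{J}_1(\mathbf{z}_0)$ is $\mathbf{z}_0^a$, with the resulting states along the DAW referred to as the analysis.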

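In general, an exact solution cannot be found. In the incremental formulation of 4D-Var, the solution to Eq. (5) is approximated by a sequence of minimizations of quadratic cost functions. Thus, incremental 4D-Var has first an outer loop, for which $\mathbf{z}_0^l$ provides the current approximation and initially for $l=1$, $\mathbf{z}_0^1 = \mathbf{z}_0^b$. The innovations are then given by the residual between the observations and the mapping of the initial state in the current approximation into observation space:

$$\mathbf{d}^l = \mathbf{y} - \hat{\mathcal{H}}(\mathbf{z}_0^l), \tag{6}$$

where the computation of the initial state mapped to observation space, $\hat{\mathcal{H}}(\mathbf{z}_0^l)$, has the form

$$\hat{\mathcal{H}}_k(\mathbf{z}_0^l) = \mathcal{H}_k\left(\mathcal{M}_{0 \rightarrow k}(\mathbf{z}_0^l)\right), \tag{7}$$

with $\mathcal{M}_{0 \rightarrow k}$ denoting $k$ successive applications of $\mathcal{M}$. Then, incremental 4D-Var has an inner loop, for which two approximations are conducted. The first is

$$\mathcal{H}_k(\mathbf{z}_k^l + \delta\mathbf{z}_k) \approx \mathcal{H}_k(\mathbf{z}_k^l) + \mathbf{H}_k \delta\mathbf{z}_k, \tag{8}$$

where $\mathbf{H}_k$ is the Jacobian of $\mathcal{H}_k(\bullet)$ evaluated at $\mathbf{z}_k^l$. $\mathbf{H}_k$ is referred to as the tangent linear operator in the DA literature. Second, the dynamical model is also linearized, obtaining the tangent linear model (TLM):

$$\mathcal{M}(\mathbf{z}_k^l + \delta\mathbf{z}_k) \approx \mathcal{M}(\mathbf{z}_k^l) + \mathbf{M}_k \delta\mathbf{z}_k, \tag{9}$$

so that $\delta\mathbf{z}_k \approx \mathbf{M}_{k-1} \cdots \mathbf{M}_0 \, \delta\mathbf{z}_0$, leading to the generalized linearization

$$\hat{\mathcal{H}}(\mathbf{z}_0^l + \delta\mathbf{z}_0) \approx \hat{\mathcal{H}}(\mathbf{z}_0^l) + \hat{\mathbf{H}}^l \delta\mathbf{z}_0, \tag{10}$$

where $\delta\mathbf{z}_0 = \mathbf{z}_0 - \mathbf{z}_0^l$ is the increment. By considering Eqs. (6) and (10), the generalized error term in Eq. (5) for all observations in the DAW can be expressed as

$$\mathbf{y} - \hat{\mathcal{H}}(\mathbf{z}_0) \approx \mathbf{d}^l - \hat{\mathbf{H}}^l \delta\mathbf{z}_0, \tag{11}$$

where $\hat{\mathbf{H}}^l$, which stacks the blocks $\mathbf{H}_k \mathbf{M}_{k-1} \cdots \mathbf{M}_0$ for all observation times, is the Jacobian of $\hat{\mathcal{H}}$ evaluated at $\mathbf{z}_0^l$. This approximation of $\mathbf{y} - \hat{\mathcal{H}}(\mathbf{z}_0)$ is introduced in Eq. (5), leading to a quadratic cost function with the increment $\delta\mathbf{z}_0$ as argument

$$\mathcal{J}_2(\delta\mathbf{z}_0) = \frac{1}{2} \left(\delta\mathbf{z}_0 - (\mathbf{z}_0^b - \mathbf{z}_0^l)\right)^{\mathrm{T}} \mathbf{P}_0^{-1} \left(\delta\mathbf{z}_0 - (\mathbf{z}_0^b - \mathbf{z}_0^l)\right) + \frac{1}{2} \left(\mathbf{d}^l - \hat{\mathbf{H}}^l \delta\mathbf{z}_0\right)^{\mathrm{T}} \mathbf{R}^{-1} \left(\mathbf{d}^l - \hat{\mathbf{H}}^l \delta\mathbf{z}_0\right). \tag{12}$$

The minimization of $\mathcal{J}_2(\delta\mathbf{z}_0)$ is the inner loop, which is conducted by gradient descent algorithms (Lawless, 2013) until it meets a given criterion, yielding an optimal $\delta\mathbf{z}_0^l$. Then, the outer loop takes control, whereby the estimate of the initial state is updated with the estimated increment $\mathbf{z}_0^{l+1} = \mathbf{z}_0^l + \delta\mathbf{z}_0^l$. Incremental 4D-Var has been shown to be an inexact Gauss–Newton method applied to the original non-linear cost function (Lawless et al., 2005).

In our context in this paper, we assume Gaussian statistics and a perfect-model framework except for the sources of model uncertainty in $\mathbf{z}_0$. Thus, the conditional mode given by the minimization of Eq. (12) is also the conditional mean (also called the minimum variance estimate) given by the explicit solution

$$\delta\mathbf{z}_0^l = (\mathbf{z}_0^b - \mathbf{z}_0^l) + \mathbf{K}^l \left(\mathbf{d}^l - \hat{\mathbf{H}}^l (\mathbf{z}_0^b - \mathbf{z}_0^l)\right), \tag{13}$$

where $\mathbf{K}^l$ is known as the Kalman gain matrix given by

$$\mathbf{K}^l = \mathbf{P}_0 \hat{\mathbf{H}}^{l\,\mathrm{T}} \left(\hat{\mathbf{H}}^l \mathbf{P}_0 \hat{\mathbf{H}}^{l\,\mathrm{T}} + \mathbf{R}\right)^{-1}. \tag{14}$$

So, the inner loop is omitted and the state vector is explicitly updated as

$$\mathbf{z}_0^{l+1} = \mathbf{z}_0^b + \mathbf{K}^l \left(\mathbf{y} - \hat{\mathcal{H}}(\mathbf{z}_0^l) - \hat{\mathbf{H}}^l (\mathbf{z}_0^b - \mathbf{z}_0^l)\right). \tag{15}$$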

Thus, like incremental 4D-Var, the iterative approach described by Eq. (15) gives an approximation to the conditional mode or maximum likelihood of the cost function (Eq. 5). Iterative methods have a long history for DA applications in non-linear systems. Jazwinski (1970) considers local (conducted over a single assimilation cycle) and global (conducted over several assimilation cycles) iterations of the extended Kalman filter (EKF). Local iterations of the Kalman filter are designed to deal with non-linear observation operators and non-Gaussian errors. The locally iterative (extended) Kalman filter (IKF) is a Gauss–Newton method for approximating a maximum likelihood estimate (Bell and Cathey, 1993), and actually it is algebraically equivalent to non-linear three-dimensional variational (3D-Var) analysis algorithms (Cohn, 1997). For the first loop, the IKF is identical to an EKF (Jazwinski, 1970). Later, Bell (1994) showed that the iterative Kalman smoother (IKS) represents a Gauss–Newton method to obtain an approximate maximum likelihood, as was shown later for incremental 4D-Var (Lawless et al., 2005). The IKF and the IKS circumvent the need for choosing a step size, which is sometimes a source of difficulty in descent methods. However, as with a Gauss–Newton method, not even local convergence is guaranteed. Equation (15) is actually akin to the formulation of the IKF, but generalized to a DAW, and it is therefore an IKS. It differs, though, from the IKS formulation in Bell (1994) in that Eq. (15) is a strong-constraint version (cost term for the model neglected) without the backward pass (Bell, 1994).
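As a minimal sketch of the Gauss–Newton character of such an iterated update, the snippet below applies the standard iterated Kalman update, z ← z_b + K [y − h(z) − H (z_b − z)], with H and K re-evaluated at the current iterate, to a hypothetical two-variable problem with an analytic Jacobian. The operator `h`, its Jacobian `jac`, and all numbers are assumptions made only for illustration. The check is that the gradient of the non-quadratic cost function vanishes at the converged estimate, and that a single iteration reproduces the EKF update.

```python
import numpy as np

def iks(zb, P0, y, R, h, jac, n_iter=25):
    """Iterate z <- zb + K (y - h(z) - H (zb - z)), with H and K re-evaluated at z."""
    z = zb.copy()
    for _ in range(n_iter):
        H = jac(z)                                        # Jacobian at the current iterate
        K = P0 @ H.T @ np.linalg.inv(H @ P0 @ H.T + R)    # Kalman gain
        z = zb + K @ (y - h(z) - H @ (zb - z))
    return z

def cost_gradient(z, zb, P0, y, R, h, jac):
    """Gradient of 0.5 (z-zb)' P0^-1 (z-zb) + 0.5 (y-h(z))' R^-1 (y-h(z))."""
    return np.linalg.solve(P0, z - zb) - jac(z).T @ np.linalg.solve(R, y - h(z))

# Hypothetical non-linear observation operator and its analytic Jacobian.
h = lambda z: np.array([z[0] ** 2, z[0] * z[1]])
jac = lambda z: np.array([[2.0 * z[0], 0.0], [z[1], z[0]]])

zb = np.array([1.2, 1.8])                 # background
P0 = 0.04 * np.eye(2)                     # background-error covariance
R = 0.01 * np.eye(2)                      # observation-error covariance
y = h(np.array([1.0, 2.0]))               # noise-free synthetic observations

z_first = iks(zb, P0, y, R, h, jac, n_iter=1)   # first loop: identical to an EKF update
z_a = iks(zb, P0, y, R, h, jac)
grad_norm = np.linalg.norm(cost_gradient(z_a, zb, P0, y, R, h, jac))
print(z_first, z_a, grad_norm)
```

No step size is chosen anywhere in the loop, which is the practical convenience noted above. The price is that convergence is not guaranteed for stronger non-linearity than in this mild toy case.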

Now, it remains to be seen how one would apply a scheme such as Eq. (15) for multi-decadal and longer-term paleoclimate analysis. In recent years there has been a growing effort toward the development of stochastic physical parameterizations in weather forecast and climate models. However, stochastic parameterization is still in its infancy in comprehensive ESMs. For deterministic parameterizations, on which we focus here, the model climate converges to its own dynamical attractor, and the climate of a sufficiently long model trajectory is typically much less sensitive to initial conditions than to other model inputs.

In general, ensemble methods rely on perturbations δz0 of the control vector to estimate the sensitivity matrix of the observation space to the control vector. Two general kinds of simulations and climate analysis are of interest to the paleoclimate community: the so-called equilibrium simulations and the transient ones. Equilibrium simulations are subject to solar forcing prescribed to a specific calendar year and fixed radiative constituents in the atmosphere, representing a situation when the past climate is considered stable. The goal is to evaluate the low-frequency seasonal and annual means and variability within these relatively long-term stable climate conditions (e.g. Eemian, LGM, or mid-Holocene). In these simulations, the model is integrated until it reaches equilibrium conditions. Typically, starting from a standard climatology (e.g. the World Ocean Atlas), it takes a few thousand years for an ESM integration to converge to its equilibrium (even in the deepest ocean) for stationary forcings. After an initial spin-up similar to the equilibrium conditions, transient simulations with ESMs then use the corresponding time-varying solar forcing and normally use prescribed time series of radiative atmospheric constituents reconstructed from observations for the time window of interest, as well as transient boundary conditions. From an assimilation perspective, irrespective of whether the analysis is for equilibrium or transient forcing, the perturbation of model parameters and other inputs introduces a shock at the start of the model integration (in some way analogous to shocks in unbalanced ocean–atmosphere coupled models initialized with uncoupled data assimilation in NWP). Beyond the initial oscillations, the model climatology in the first years of model integration is not consistent with the perturbed parameters. Thus, the estimation of the sensitivities can be spurious in the first years of model integration, and also the innovations do not result from the model climatology. 
It is convenient then to set up a model integration time threshold and to disregard sensitivities earlier than this time. For such a purpose, we loosely define a model quasi-equilibrium condition as the situation in which a model climatology is in reasonable physical consistency with the control vector (initial conditions plus model parameters, etc.). With equilibrium simulations, a quasi-equilibrium time tq can, for example, be evaluated based on the convergence on the maximum meridional overturning circulation, for which each ensemble member will converge towards its own attractor. By the time this convergence is reached, the correlations between the atmosphere, surface climate, ocean mixed layer, and (paleoclimate) observation space should be fully developed. Then, low-frequency climate means (annual and/or seasonal) after the integration time to quasi-equilibrium, tq, would be evaluated against the climate proxy database (e.g. MARGO for the mean annual and seasonal climate across the LGM) to obtain the sensitivity and the innovations at each iteration. This is the approach followed by the experiments in this study.

For the more general transient forcing situation, in a current DAW, the effects from a perturbed control vector and from transient forcing are entangled. As opposed to the equilibrium simulations, integration times here match physical forcing times. Observations earlier than a specified tq should now be disregarded, and quasi-equilibrium here would not refer to a model state, which will be transient as the forcings, but (as above) to a situation in which the model state is physically consistent with the input given in the control vector. At each DAW, one could first estimate tq by conducting an “equilibrium” simulation with forcings and boundary conditions prescribed to those at the start of the integration time (the DAW) and then use this estimate as a surrogate for the transient tq. This would, however, increase computations. Alternatively, one could set up a tq based on previous experience and tests for equilibrium forcings.

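In both cases, it is unlikely that errors in initial conditions are among the most sensitive ones out of all possible input errors for the evaluated integration times after quasi-equilibrium. Thus, for low-frequency past climate analysis, it should be generally acceptable to exclude $\mathbf{x}_0$ from the control variables, allowing for a reduced problem. In the following, we take this assumption. To simplify the notation, we then define $\mathcal{G}$ as a generalized (deterministic) observation operator mapping a vector $\boldsymbol{\theta}$ into the observation space: $\mathcal{G}: \mathbb{R}^q \rightarrow \mathbb{R}^p$, which follows

$$\mathbf{y}_k = \mathcal{G}_k(\boldsymbol{\theta}) + \boldsymbol{\epsilon}_k, \quad t_k > t_q, \tag{16}$$

where $t_q$ represents the model integration time to quasi-equilibrium. Instead of Eq. (5), a reduced problem is posed now by minimization of the non-quadratic cost function

$$\mathcal{J}(\boldsymbol{\theta}) = \frac{1}{2} (\boldsymbol{\theta} - \boldsymbol{\theta}^b)^{\mathrm{T}} \mathbf{P}_{\theta\theta}^{-1} (\boldsymbol{\theta} - \boldsymbol{\theta}^b) + \frac{1}{2} \left(\mathbf{y} - \mathcal{G}(\boldsymbol{\theta})\right)^{\mathrm{T}} \mathbf{R}^{-1} \left(\mathbf{y} - \mathcal{G}(\boldsymbol{\theta})\right). \tag{17}$$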

After the assimilation, a forward integration with the updated θa leads to its physically consistent climate estimate. The sensitivity matrix, or Jacobian of 𝒢, is noted as G. The trivial substitutions into the incremental formulation (Eq. 12) and its solution (Eq. 15), with estimation of G via finite differences, lead to the finite-difference sensitivity iterative Kalman smoother (FDS-IKS), which is summarized in Sect. 2.3. The finite-difference sensitivity multistep Kalman smoother (FDS-MKS), described in Sect. 2.4, is an alternative approach to deal with non-linearity.
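A minimal sketch of how these pieces could fit together for the reduced problem is given below. It is not the algorithm of Sect. 2.3: the function names, the zero-dimensional energy balance `toy_ebm` (a stand-in for an expensive model, with albedo and emissivity as control variables), the perturbation sizes, and the error statistics are all assumptions made for illustration. Each iteration costs one reference run plus one perturbed run per control variable.

```python
import numpy as np

SIGMA = 5.670374e-8   # Stefan-Boltzmann constant (W m-2 K-4)

def toy_ebm(theta, solar=(1361.0, 1340.0)):
    """Hypothetical zero-dimensional energy balance: equilibrium temperature (K)
    for albedo theta[0] and emissivity theta[1], one value per solar constant."""
    albedo, emissivity = theta
    s = np.asarray(solar)
    return (s * (1.0 - albedo) / (4.0 * emissivity * SIGMA)) ** 0.25

def cost(theta, theta_b, P, y, R, G_op):
    """Background plus observation terms of the reduced cost function."""
    db, do = theta - theta_b, y - G_op(theta)
    return 0.5 * db @ np.linalg.solve(P, db) + 0.5 * do @ np.linalg.solve(R, do)

def fds_iks(G_op, theta_b, P, y, R, deltas, n_iter=3):
    """Iterated Kalman update with a one-sided finite-difference sensitivity matrix."""
    theta, q = theta_b.copy(), theta_b.size
    for _ in range(n_iter):
        g0 = G_op(theta)                          # reference run at the current estimate
        G = np.empty((g0.size, q))
        for i in range(q):                        # one perturbed run per control variable
            pert = theta.copy()
            pert[i] += deltas[i]
            G[:, i] = (G_op(pert) - g0) / deltas[i]
        K = P @ G.T @ np.linalg.inv(G @ P @ G.T + R)
        theta = theta_b + K @ (y - g0 - G @ (theta_b - theta))
    return theta, n_iter * (q + 1)                # estimate and number of model runs

theta_b = np.array([0.32, 0.65])                  # background albedo and emissivity
P = np.diag([0.03 ** 2, 0.03 ** 2])               # full-rank background-error covariance
R = np.diag([0.5 ** 2, 0.5 ** 2])                 # observation-error covariance (K^2)
y = toy_ebm(np.array([0.30, 0.61]))               # noise-free synthetic observations

theta_a, n_runs = fds_iks(toy_ebm, theta_b, P, y, R, deltas=[0.01, 0.01])
j_b = cost(theta_b, theta_b, P, y, R, toy_ebm)
j_a = cost(theta_a, theta_b, P, y, R, toy_ebm)
print(theta_a, n_runs, j_b, j_a)
```

In this toy case the two observations constrain only the ratio (1 − albedo)/emissivity, so the analysis fits the observations without recovering the individual "true" parameters. This is a small-scale analogue of the compensation effects discussed in the introduction, and a reminder that the background covariance decides how an increment is shared among control variables.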

2.2 Background-error covariances and sensitivity estimation

The current implementation of variational assimilation is different in each operational NWP centre. A recent review of operational methods of variational and ensemble-variational data assimilation is given by Bannister (2017). However, for many geophysical models, codes are not suited to automatic differentiation and it is extremely complex to develop and maintain tangent linear and adjoint codes. This has motivated more recent research toward (adjoint-free) ensemble DA methods for high-dimensional models. Lorenc (2013) recommended using the term “EnVar” for variational methods using ensemble covariances. Thus, Liu et al. (2008) proposed (the later called) 4DEnVar as a way to estimate the generalized sensitivities of the observation space to initial conditions based on an ensemble of model integrations within a 4D-Var formulation. Gu and Oliver (2007) introduced a scheme called ensemble randomized maximum likelihood filter (EnRML) for online non-linear parameter estimation, which was later adapted as a smoother, the batch-EnRML, by Chen and Oliver (2012). The EnRML estimates sensitivities by multivariate linear regression between the ensemble model equivalent of the observations and the ensemble perturbations to the control vector. These are therefore mean ensemble sensitivities. The iterative EnKF (IEnKF) is similar to the EnRML, but instead uses an ensemble square root filter and rescales the ensemble perturbation (deflates) before and (inflates) after propagation of the ensemble as an alternative to estimate the sensitivities (Sakov et al., 2012). In this way the estimated sensitivities are more local about the current estimation. An extension to the IEnKF as a fixed-lag smoother led to the IEnKS in Bocquet and Sakov (2014). 
However, while in the 4D-Var approach the computing cost of the sensitivities with the adjoint method is independent of the dimension of the control vector in the cost function, in numerical estimates of the sensitivities the computing cost increases with the size of the control vector. The low-rank property of the ensemble covariances in ensemble methods means that sampling error problems will inevitably arise when the number of ensemble members is small in comparison with the size of the control vector. Instead, as indicated above, in the low-dimensional control vector schemes evaluated here, the background-error covariance is a full-rank explicit matrix at the expense of computations being linearly proportional to the size of the control vector.

While the relation between G and Pk is implied in the previous section, it is instructive to look at it in some detail. Let us consider the case of a specific observation time tk. The Kalman gain matrix for the components of the model input θ (disregarding the loop index, if any) can be expressed in the following two ways:

where the first way is the standard one in Kalman smoother expressions, including parameter estimation via state vector augmentation, and the second one is a parameter space formulation, which we apply here. Both are equivalent, but the covariance information in Pk has been transferred to Gk, or the sensitivity matrix, in the second expression. Let us further consider the case that at tk there is a single observation y of a state variable within the vector zk, and we focus on the representer matrix for a single parameter θi. The covariance between θi and the observation y is expressed in both cases as

which, as , indicates that the linear equality

is taken from a bottom-up approach in the parameter space formulation, in which all sources of uncertainty are specifically evaluated to compose the covariance. In our experiments with ETKF and ETKF-GA, with parameter augmentation, the first alternative in Eq. (19) is used, and the generalized sensitivity matrix G is not explicitly computed. So, for comparison with the finite-difference sensitivity (FDS) schemes, we estimate an ensemble-based average sensitivity matrix by solving the linear system that relates the matrix of random model parameter perturbations, drawn from Pθθ around the background values, to the resulting perturbations in the observation space. In an iterative approach the sensitivities would need to be evaluated for perturbations around the current estimate.
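As a compact sketch of why the two gain expressions coincide, assume (for illustration only) that all uncertainty in the state zk originates from the inputs θ and that the model is linearized, so that perturbations satisfy Hk δzk = Gk δθ. The symbols K_θ, P_{θz,k}, and H_k below are notation assumed for this sketch and may differ from the symbols used in Eq. (19):

```latex
\begin{align}
\mathbf{P}_{\theta z,k}\mathbf{H}_k^{\mathrm{T}}
  &= \mathbf{P}_{\theta\theta}\mathbf{G}_k^{\mathrm{T}},
  \qquad
  \mathbf{H}_k\mathbf{P}_k\mathbf{H}_k^{\mathrm{T}}
  = \mathbf{G}_k\mathbf{P}_{\theta\theta}\mathbf{G}_k^{\mathrm{T}}, \\
\mathbf{K}_{\theta}
  &= \mathbf{P}_{\theta z,k}\mathbf{H}_k^{\mathrm{T}}
     \left(\mathbf{H}_k\mathbf{P}_k\mathbf{H}_k^{\mathrm{T}}+\mathbf{R}_k\right)^{-1}
   = \mathbf{P}_{\theta\theta}\mathbf{G}_k^{\mathrm{T}}
     \left(\mathbf{G}_k\mathbf{P}_{\theta\theta}\mathbf{G}_k^{\mathrm{T}}+\mathbf{R}_k\right)^{-1}.
\end{align}
```

Under these assumptions the state-space form needs the (possibly very large) covariance Pk, whereas the parameter-space form needs only the small matrix Pθθ and the sensitivities Gk.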

Alternatively, finite-difference sensitivity (FDS) directly samples from the conditional probability density function (CPDF) of the perturbed variable, as the remaining control variables are kept at their current estimate. However, the computing requirements in FDS are linearly proportional to the size of the input vector, its numerical estimation of derivatives is only approximate, and the associated error can be unacceptably large if the finite-differencing step size is poorly chosen. Large perturbations increase the truncation error, which grows linearly with the perturbation magnitude, whereas for very small perturbations the accuracy of the differentiation degrades through loss of numerical precision (Dennis and Schnabel, 1996). It is possible to do more than one perturbation experiment by sampling from the CPDF for each parameter and estimating the sensitivity by univariate regression, which ameliorates the problem of non-optimal perturbations, and we include a few tests of this kind for the first experiment in this study (see Appendix A). However, it is currently computationally difficult to do more than one perturbation per control variable with comprehensive ESMs and long integrations (and one might as well resort to ensemble approaches, full-rank in this case, if more computations were possible). The sensitivity estimates by forward finite differences at a loop l (initially, the background) are then computed at each column as

where, for each parameter, δθi is a small perturbation (or variation) to the current approximation of θi, and the perturbation vector δθi is the zero vector 0∈ℝq with its ith element replaced by the scalar δθi. As indicated, the estimation of sensitivities by local finite-difference approximations results from sampling the conditional density function in the control vector space.
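The column-by-column forward difference described above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the experiments: `fds_jacobian`, `toy_model`, and the numerical values are hypothetical, and the perturbation of each input is taken as a small fraction (`sd_fac`) of an assumed background standard deviation.

```python
def fds_jacobian(model, theta, steps):
    """Forward finite-difference estimate of G = d(model)/d(theta).

    model : callable mapping a list of q inputs to a list of p outputs
    theta : current estimate of the control vector (length q)
    steps : perturbation size for each input (length q)
    Costs q + 1 model runs: one reference run plus one run per input.
    """
    y0 = model(theta)                      # unperturbed reference run
    columns = []
    for i, step in enumerate(steps):
        perturbed = list(theta)
        perturbed[i] += step               # perturb input i only
        yi = model(perturbed)
        columns.append([(a - b) / step for a, b in zip(yi, y0)])
    # columns[i] holds column i of G; transpose to a p x q list of rows
    return [list(row) for row in zip(*columns)]


def toy_model(theta):
    # Hypothetical stand-in for an expensive model integration
    a, b = theta
    return [a * a + b, 3.0 * b, a * b]


theta = [1.0, 2.0]
sd = [0.5, 0.2]                            # assumed background std devs
sd_fac = 0.001                             # perturbation = sd_fac * sd
G = fds_jacobian(toy_model, theta, [sd_fac * s for s in sd])
```

With five control variables, as in the first experiment, this pattern costs six model runs per evaluation of G, which is why the cost grows linearly with the size of the control vector.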

2.3 Finite-difference sensitivity iterative Kalman smoother

The algorithm we describe here, denoted as finite-difference sensitivity iterative Kalman smoother (FDS-IKS), is a Gauss–Newton scheme akin to the IKF and the IKS. The “FDS” acronym clarifies that the scheme (a) is expressed in terms of explicit sensitivities to all variables in the control vector and (b) estimates these local sensitivities numerically by individual perturbation experiments for each variable in the control vector. The scheme then uses a full-rank representation of the background-error covariance matrix (hence, it is not called an ensemble method). It is a smoother rather than a filter, as it assimilates (future) observations along a DAW to update the control variables, which apply from the start of the DAW. After the iterations are stopped (because a convergence criterion is met or a maximum number of iterations is reached), a model reintegration with the updated control vector θa leads to the analysis (or climate field reconstruction) over the DAW.

For any natural number l, the FDS-IKS provides the update

The sequences and are defined inductively as follows

where for notational convenience

and

Equations (22) and (25) show that, as in the IKS and incremental 4D-Var, the FDS-IKS uses the initial background-error statistics in all iterations. The updated error covariance is calculated only in the last iteration.
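To make the iteration concrete, the following Python sketch runs Gauss–Newton loops for a single control variable, so that every matrix collapses to a scalar. It is written in the parameter-space (normal-equation) form, which for the linearized problem is algebraically equivalent to a Kalman-gain update; it is an illustration under these assumptions, not a transcription of the paper's equations. The names `fds_iks_scalar` and `toy_model` and all numbers are hypothetical.

```python
import math


def fds_iks_scalar(model, theta_b, var_b, y, var_o, sd_fac=0.001,
                   max_iter=20, tol=1e-10):
    """Gauss-Newton iterations for one control variable (illustrative).

    Minimises J = 0.5*(theta - theta_b)^2 / var_b
                + 0.5*sum((y_i - model(theta)_i)^2 / var_o_i),
    with the sensitivity g = d model / d theta taken from one forward
    finite-difference run per iteration. The background variance var_b
    is kept fixed in every iteration.
    """
    theta = theta_b
    step = sd_fac * math.sqrt(var_b)        # perturbation size
    for _ in range(max_iter):
        h = model(theta)                    # reference run
        hp = model(theta + step)            # perturbed run
        g = [(a - b) / step for a, b in zip(hp, h)]
        # Gauss-Newton normal equations (scalar in the one-parameter case)
        curvature = 1.0 / var_b + sum(gi * gi / r for gi, r in zip(g, var_o))
        gradient = (theta - theta_b) / var_b - sum(
            gi * (yi - hi) / r for gi, yi, hi, r in zip(g, y, h, var_o))
        delta = -gradient / curvature
        theta += delta
        if abs(delta) < tol:
            break
    var_a = 1.0 / curvature                 # from the last linearisation
    return theta, var_a


def toy_model(theta):
    # Hypothetical non-linear response observed at two sites
    return [theta ** 2, math.exp(0.5 * theta)]


truth = 1.5
obs = toy_model(truth)                      # noise-free synthetic observations
theta_a, var_a = fds_iks_scalar(toy_model, theta_b=1.0, var_b=1.0,
                                y=obs, var_o=[0.01, 0.01])
```

Each loop costs two model runs here (reference plus one perturbation); with q control variables it would cost q + 1 runs, and the posterior variance is only formed once the loops end.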

2.4 Finite-difference sensitivity multistep Kalman smoother

Here, a multistep approach is conducted by inflating the observation-error covariance matrix R and recursively applying a standard Kalman smoother over the assimilation window with the inflated R and the same observations. The multistep idea of inflating R for repeated assimilation of the observations was proposed by Annan et al. (2005a) and further clarified and applied by Annan et al. (2005b) for an atmospheric GCM using the EnKF with parameter augmentation. Their approach is designed for steady-state cases, for which time-averaged climate observations corresponding to a long DAW can be assumed as constant along a sequence of smaller assimilation sub-windows into which the DAW is divided. The model parameters are then sequentially updated in small increments and the loss of balance in a more general non-linear model should be reduced with the multistep approach (Annan et al., 2005b). The inflation weights are such that in a linear case, after the predefined sequence of integrations and assimilations, the solution is identical to that of the single step scheme along the whole DAW.

The multistep strategy was termed multiple data assimilation (MDA) by Emerick and Reynolds (2013) in the context of ensemble smoothing and was then further developed by Bocquet and Sakov (2013, 2014) in their iterative ensemble Kalman smoother (IEnKS), whereby the weights for the inflation of R were applied in overlapping data assimilation windows (MDA IEnKS). We apply here the MDA strategy to a recursive formulation of the KF in terms of FDS estimates for the dual observation space to the control vector, and here we denote the scheme as finite-difference sensitivity multistep Kalman smoother (FDS-MKS). As the inflation of R results in a reduced influence of the observations at each iteration, the increments in the early iterations are relatively reduced with respect to the FDS-IKS, making the FDS-MKS potentially more stable. We note, however, that the inflation of R modifies the direction of the increment in non-linear cases. Thus, it does not converge to the same (local) minimum as the FDS-IKS (or 4D-Var), but to an approximate point in the control space. In contrast with the scheme in Annan et al. (2005b), the FDS-MKS considers recursive integration along the complete DAW (and non-overlapping DAWs). Thus, it is not restricted to steady-state conditions.

The scheme considers the total increment in the state vector that would result from the linear assimilation of one specific observation and alternatively conducts a recursive sequence of assimilations of the same observation whose sum of fractional increments equals the total increment. This is achieved by considering the observation-error variance at loop l to be the product of an inflation factor βl and the variance of the “complete” observation. As the (linear) increment is inversely proportional to the observation-error variance, for the total increment to be the same in both situations the inverse inflation factors must add up to 1 over the Nl loops. This leads to the constraint

$$\sum_{l=1}^{N_l} \frac{1}{\beta_l} = 1. \qquad (26)$$

Here, the sensitivity matrix G is estimated at each recursive step (iteration), similarly to the FDS-IKS. However, the error covariance Pθθ is also updated at each iteration. Hence, as indicated in Eq. (20), the covariance between any climatic variable and an input θi is also affected by the sensitivity of the climatic variable to other inputs. The FDS-MKS is a recursive direct method that attempts to solve the problem in a pre-specified finite sequence of Nl iterations:

The sequences and are defined inductively as follows

where for notational convenience

and

Regarding β, a possible step size approach is to set the inflation weight constant for all the iterations, which given Eq. (26) leads to β=Nl1, where 1 is the column vector of length Nl with all values set to 1 (that is, βl=Nl at every iteration). However, as the iterations proceed, the updated background covariance decreases, so the fractional increments get smaller. A more even distribution of fractional increments, with likely improved stability, can be obtained with inflation weights that decrease as the iterations proceed, so that the initial weights are relatively higher. Thus, among other possible solutions for the inflation factor at step l, here we adopt the expression

which satisfies the requirement of Eq. (26). A numerical advantage of inflating R for the multiple data assimilation approach is that it reduces the condition number of the matrix to be inverted in the assimilation at each iteration. A practical advantage of the FDS-MKS with respect to the FDS-IKS is that the number of iterations is predefined. With given computer resources and model computational throughput statistics, it is possible to evaluate how many ESM integrations are affordable and set the FDS-MKS inflation weights and computing schedule accordingly. This does not mean, though, that the FDS-IKS would not converge closer to the (local) minimum of the cost function with the same iterations. Also, without specific consideration of constraint (Eq. 26), the idea of inflating R has also been considered by previous studies as a mechanism to improve initial sampling for the EnKF (Oliver and Chen, 2008) and also to damp model changes at early iterations in Newton-like methods (Gao and Reynolds, 2006; Wu et al., 1999).
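The role of the constraint in Eq. (26) can be checked numerically in the linear case, where repeated assimilation with inflated observation error should reproduce the single-step update. The Python sketch below uses a scalar linear model for brevity. The constant schedule follows the text; the decreasing schedule is a hypothetical example that merely satisfies Eq. (26) and is not claimed to be the expression adopted in the paper. All function names and numbers are illustrative.

```python
def kalman_step(theta, var, g, y, var_o):
    """One scalar Kalman update for a linear model y = g * theta + noise."""
    gain = var * g / (g * g * var + var_o)
    return theta + gain * (y - g * theta), (1.0 - gain * g) * var


def multistep(theta, var, g, y, var_o, betas):
    """Assimilate the same observation len(betas) times with inflated error."""
    assert abs(sum(1.0 / b for b in betas) - 1.0) < 1e-12  # Eq. (26)
    for beta in betas:
        theta, var = kalman_step(theta, var, g, y, beta * var_o)
    return theta, var


n_steps = 3
constant = [float(n_steps)] * n_steps          # beta_l = N_l for every step
# Hypothetical decreasing schedule (not necessarily the paper's expression):
# inverse weights proportional to 1, 2, 4, ..., normalised to sum to one.
raw = [2.0 ** k for k in range(n_steps)]
decreasing = [sum(raw) / r for r in raw]       # [7.0, 3.5, 1.75]

single = kalman_step(1.0, 4.0, 2.0, 5.0, 0.5)
multi_const = multistep(1.0, 4.0, 2.0, 5.0, 0.5, constant)
multi_decr = multistep(1.0, 4.0, 2.0, 5.0, 0.5, decreasing)
```

In this linear setting both schedules return the same posterior mean and variance as the single update; in a non-linear model the sensitivity would be re-estimated between steps, and the schedules would generally give different results.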

2.5 Early-stopped iterations for the FDS-MKS

The computational cost of the ESM integrations is much higher than that of the assimilation steps as considered in the FDS-MKS for a low-dimensional control vector. In this study, we do not evaluate adaptive strategies for the planning of the weights in the FDS-MKS. However, the evolution of the increments in the control variables along the iterations could potentially be used to guide the size of βl at each loop and even to conduct an early stopping of the iterations. At each iteration, it is possible to compute the standard update using the corresponding pre-planned weight βl and simultaneously to compute an alternative update with early termination of the iterations by applying a completion weight that both terminates the iterations and fulfils the condition of Eq. (26) as an alternative to βl in Eq. (30):

Comparing the sequence of increments given by the fractional steps of the FDS-MKS with the corresponding early-stopped solutions, computed simultaneously, may support replanning of the weights or even a decision to stop the iterations early, taking the update obtained with the completion weight as the final solution.
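One reading of the completion weight that is consistent with Eq. (26) is that its inverse equals whatever remains of the unit sum after the weights already applied. The short Python sketch below encodes that reading; the function name and the example schedule are hypothetical, and the exact expression used in the paper may be written differently.

```python
def completion_weight(betas_used):
    """Inflation weight that completes Eq. (26) after the given steps.

    betas_used : weights already applied in previous iterations.
    Returns beta_c such that sum(1/beta for used) + 1/beta_c == 1.
    """
    remaining = 1.0 - sum(1.0 / b for b in betas_used)
    if remaining <= 0.0:
        raise ValueError("previous steps already exhaust the observation weight")
    return 1.0 / remaining


planned = [4.0, 4.0, 4.0, 4.0]                 # pre-planned constant schedule
# Early stop at the third iteration: two planned steps were applied, and the
# third uses the completion weight instead of planned[2].
beta_c = completion_weight(planned[:2])        # 1 / (1 - 0.5) = 2.0
```

Because the assimilation step is cheap relative to a model integration, both the planned update and the early-stopped update can be computed from the same set of model runs.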

2.6 ETKF and Gaussian anamorphosis

The ensemble Kalman filter (EnKF) was introduced by Evensen (1994). It makes it possible to apply the Kalman filter to high-dimensional discrete systems when the explicit storage and manipulation of the system-state error covariance is impossible or impractical. The EnKF methods may be characterized by the application of the analysis equations given by the Kalman filter to an ensemble of forecasts. One of the main differences among the several proposed versions of ensemble Kalman filters is how the analysis ensemble is chosen. Ensemble square root filters use deterministic algorithms to generate an analysis ensemble with the desired sample mean and covariance (Bishop et al., 2001; Tippett et al., 2003; Whitaker and Hamill, 2002). Here, in our experiments with global model parameters, we use the mean-preserving ensemble transform Kalman filter (ETKF), or “symmetric solution”, described by Ott et al. (2004) and also referred to as the “spherical simplex” solution by Wang et al. (2004). The mean-preserving ETKF is unbiased (Livings et al., 2008; Sakov and Oke, 2008).

Still, for the (En)KF to be optimal, three special conditions need to apply: (1) Gaussianity in the prior, (2) linearity of the observation operator, and (3) Gaussianity in the additive observational error density. In order to better deal with non-linearity, a number of studies have addressed the use of transformation of the model background and observation to obtain a Gaussian distribution such that the (En)KF can be applied under optimal conditions. This pre-processing transformation step is known as Gaussian anamorphosis (GA) (Chilès and Delfiner, 2012). The GA procedure was introduced into the context of data assimilation by Bertino et al. (2003) and has been applied for many years in the field of geostatistics (Deutsch and Journel, 1998; Matheron, 1973).

There is, however, no standard way to apply GA in the context of DA (Amezcua and Leeuwen, 2014). The process of GA involves transforming the state vector and observations {z,y} into new variables with Gaussian statistics. The (En)KF analysis is computed with the new variables, and the resulting analysis is mapped back into the original space. For the transformations, the GA makes use of the probability integral transform theorem.

In a theoretical framework and with simple experiments, Amezcua and Leeuwen (2014) evaluated several approaches using univariate GA transformations. As a key point, they found that when any of the above (1)–(3) conditions are violated the analysis step in the EnKF will not recover the exact posterior density in spite of any transformation. Also, they concluded that when ensemble sizes are small and knowledge of the conditional is not too precise, it is perhaps better to rely on independent marginal transformations for both a state variable x and observation y than on joint transforms. For field variables, one can consider them to have homogeneous distributions, so that each kind of model variable is transformed using the same monovariate anamorphosis function at all grid points of the model (Simon and Bertino, 2009, 2012), or apply local transformation functions at different grid points (Béal et al., 2010; Doron et al., 2011; Zhou et al., 2011). In both cases, the GA in these studies has been applied to the filtering problem. In the context of low-frequency past climate analysis, the temporal dimension has to be considered. For example, point (2) above would refer to the linearity in the generalized observation operator, which includes the model dynamics. Given the considerations in Amezcua and Leeuwen (2014), the sparsity of low-frequency paleoclimate records, and the lack of homogeneity in global ESM variables, here we follow the approach in Béal et al. (2010).

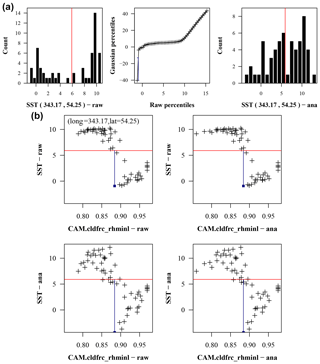

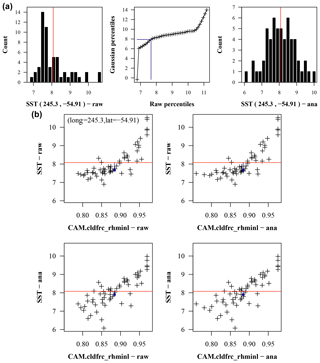

In our implementation of the ETKF we augmented the state vector with the model equivalent of the observations. We evaluated transformations of the control variables as well as transformations in sea surface temperature (SST) as observed variables. We transformed the control variables marginally. Regarding SST, due to sparsity and heterogeneity, we consider it not possible to estimate the marginal distribution of the low-frequency paleoclimate observations with enough confidence to support a transformation. Thus, in our experiments we estimated the marginal distribution of the model equivalent of the SST observations, as derived from the background ensemble, and also used the same transformation for the SST observations. The transformation then operates in the marginals in an independent way at each grid point:

where Pξ(ξ) denotes the cumulative distribution function (CDF) of the variable ξ, and the CDF in the transformed space is that of a Gaussian random variable. For comparison, Eq. (33) corresponds to transformation (c) in Amezcua and Leeuwen (2014). Tests with standard ETKF are included in the two experiments below. A test with ETKF including GA as just described is included in experiment 2, with CESM.

As indicated, here we use empirical cumulative distribution functions (CDFs) for the anamorphosis based on the background ensemble. The tails of the transformation function are likely to be used during the anamorphosis of the ensemble, and tail estimation can strongly affect the analysis. Here, we obtained linear tails following Simon and Bertino (2009, 2012), which consists of extrapolating to infinity the first and last segments of the interpolation function with the same slope. In practice, we just extrapolated in each direction until twice the original range of the Gaussian variable. Then, we truncated (only) the physical coordinate of the tail points in the transformation function to its physical bound where this was exceeded. Finally, we set the first two moments of the target Gaussian variable to those of the original ensemble (Bertino et al., 2003).
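A marginal empirical anamorphosis with linear tails can be sketched as follows in Python. This is a simplified illustration, not the code used in the experiments: it assumes mid-rank plotting positions for the empirical CDF and an ensemble without repeated values, it extrapolates the end segments without the range limit, and it omits the truncation to physical bounds. The function names and the sample ensemble are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def build_anamorphosis(ensemble):
    """Return (xs, zs): knots of a piecewise-linear map physical -> Gaussian."""
    xs = sorted(ensemble)                      # assumed to hold distinct values
    m = len(xs)
    target = NormalDist(mean(xs), stdev(xs))   # keep the first two moments
    # Empirical CDF at mid-rank plotting positions, strictly inside (0, 1)
    zs = [target.inv_cdf((k + 0.5) / m) for k in range(m)]
    return xs, zs


def transform(x, xs, zs):
    """Piecewise-linear interpolation; end segments extrapolate linearly."""
    # Pick the segment containing x, clamping to the first or last segment
    j = 0
    while j < len(xs) - 2 and x > xs[j + 1]:
        j += 1
    slope = (zs[j + 1] - zs[j]) / (xs[j + 1] - xs[j])
    return zs[j] + slope * (x - xs[j])


ensemble = [0.1, 0.4, 0.5, 0.9, 1.6, 2.5, 4.0, 7.5]   # skewed toy sample
xs, zs = build_anamorphosis(ensemble)
z_ens = [transform(x, xs, zs) for x in ensemble]       # to Gaussian space
x_back = [transform(z, zs, xs) for z in z_ens]         # inverse: swap knots
```

The inverse mapping reuses the same interpolation with the knot lists swapped, which is how an analysis computed in the transformed space would be mapped back to physical values.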

3.1 Model description

This experiment is based on a conceptual one-dimensional, south–north, energy balance model (Ebm1D; Paul, 2018), for which Paul and Losch (2012) (PL2012 hereafter) conduct a number of 4D-Var experiments. Ebm1D is based on (a) the difference between absorbed solar radiation Qabs and outgoing longwave radiation at the top of the atmosphere (TOA) on the one hand and (b) the divergence of the horizontal heat transport ΔFao on the other hand. In Ebm1D, the climate is expressed in terms of just the zonally averaged surface temperature Ts.

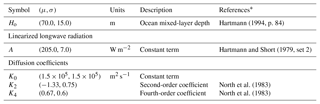

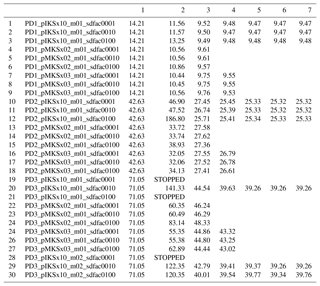

Table 1. 1-D energy balance model. PD1 tests. Parameter definition and first-guess values. Source cited in the table: North et al. (1983).

* References just for the mean values.

PL2012 evaluate several climate conditions and uncertain parameter scenarios, including present-day and Last Glacial Maximum (LGM) climate states. Then, with the model constrained by the present-day and LGM parameter estimates, they conduct climate projections under several CO2 forcing scenarios. We revisit their PD1 scenario: a present-day test with five (scalar) parameters, summarized in Table 1, as control variables. Here we summarize the model in relation to these parameters, and the reader is referred to PL2012 for a thorough description of all model parameters and related equations. The ocean mixed-layer depth, Ho, controls the effective heat capacity of the atmosphere–ocean system. A is a constant term in the calculation of the outgoing longwave radiation, which also depends linearly on the surface temperature and on the logarithm of the ratio of the actual atmospheric CO2 concentration to a reference value (Eq. 6 in PL2012). Meridional heat transport is treated as a diffusive process driven by latitudinal temperature gradients, whereby the horizontal heat transport depends linearly on a thermal diffusion coefficient Kao(x). This coefficient is a function of x=sinΦ, where Φ is latitude, and is defined by K0, K2, and K4, the remaining three parameters included in the control vector (Table 1).

3.2 Observations and cost function

As observations, we took surface air temperature (SAT) derived from the NCEP/NCAR reanalysis data (Kalnay et al., 1996). From the reanalysis data we first calculated global zonal means of SAT. Then, we obtained SAT means for present-day climate at each grid cell (i.e. latitude) for winter (January, February, and March; JFM) and summer (July, August, and September; JAS) in the Northern Hemisphere. These zonal averages of SAT, Ts, were the target for the analysis. The mean of the last 10 years, out of 100 years of model integration, was taken as the model equivalent of the observations. That is, each grid cell in the 1-D model has one observation and model equivalent for winter (JFM) and similarly for summer (JAS) in the cost function, defined by

where W is a diagonal matrix, whose diagonal is a vector of weights w∈ℝp given to the observations. This cost function can be written as 𝒥=𝒥b+𝒥o. The observational target and control variables are identical to those in PL2012, but they did not include a background term 𝒥b in the cost function. Like PL2012, we assumed that observation errors are uncorrelated (R is diagonal), with all observations having a standard deviation C. The explicit division of the norm for 𝒥o in terms of R⁻¹ and w facilitates the comparison of scenarios as a function of increasing observational weight.

3.3 Experimental set-up

Like PL2012, we set the grid resolution to 10°. We assumed a diagonal Pθθ, with standard deviations given in Table 1, which we considered reasonable. Other than the parametric uncertainty, we adopted a perfect-model assumption, which is overly optimistic in this specific case because, in addition to the 1-D Earth climate representation, the physics in the energy balance model is strongly simplified. While PL2012 also assume this perfect-model framework, they point to a number of specific structural model errors. Thus, the control variables will attempt to compensate for the unaccounted error in any of the evaluated estimation approaches.

For the PD1 tests, we made the observation weights w proportional to the area of the zonal band (i.e. decreasing toward the poles), and we compared the FDS-MKS, the FDS-IKS, the ETKF (m=60 members), and 4D-Var. We evaluated a two-step and a three-step FDS-MKS (a one-step FDS-MKS equals an FDS-EKS or a first iteration of the FDS-IKS). To evaluate the resilience of the FDS schemes to large perturbations, we conducted three tests with different perturbation sizes for each FDS scheme. In each one, the perturbation applied to each of the control variables was proportional to its background standard deviation by a factor SDfac, which took the values 0.001, 0.01, and 0.1. The evaluation of the cost function for the ETKF (as a smoother) was conducted with a single forward integration of the mean of the posterior control variables. In the 4D-Var minimization, like PL2012, we used a variable-memory quasi-Newton algorithm as implemented in M1QN3 by Gilbert and Lemaréchal (1989), and to compute the gradient we used a discrete adjoint approach with the tangent and adjoint codes generated automatically by the Transformation of Algorithms in Fortran (Giering and Kaminski, 1998; Giering et al., 2005). The number of simulations can be higher than the number of iterations as the minimizer M1QN3 takes a step size determined by a line search that sometimes reduces the initial unit step size (Gilbert and Lemaréchal, 1989). For 4D-Var, as a stopping criterion we required a relative precision on the norm of the gradient of the cost function of 10⁻⁴. For the assimilation in the ETKF and FDS tests we used rDAF (García-Pintado, 2018a) and rdafEbm1D (García-Pintado, 2018b), both working within the R environment (R Core Team, 2018).
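Area-proportional weights for zonal bands follow from the fact that the area of a latitude band on a sphere is proportional to the difference in the sine of its bounding latitudes. The Python sketch below computes such weights for a regular 10° grid. The function name is hypothetical, and the normalisation to a unit sum is an assumption made here for illustration; the scaling applied in the PD1 to PD3 scenarios may differ.

```python
import math


def zonal_band_weights(resolution_deg=10.0):
    """Area fraction of each latitude band on a regular grid, south to north."""
    n_bands = round(180.0 / resolution_deg)
    edges = [-90.0 + k * resolution_deg for k in range(n_bands + 1)]
    sines = [math.sin(math.radians(e)) for e in edges]
    # Band area on a sphere is proportional to the difference in sin(latitude)
    raw = [north - south for south, north in zip(sines[:-1], sines[1:])]
    total = sum(raw)                          # equals 2 for the full sphere
    return [r / total for r in raw]           # assumed normalisation: sum = 1


w = zonal_band_weights(10.0)
```

On this grid the polar bands receive roughly a tenth of the weight of the bands next to the Equator, so tropical misfits dominate the observational term of the cost function.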

We conducted a number of additional tests to compare the convergence of the FDS-IKS versus the FDS-MKS as higher weight is given to the observations. These were named PD2 and PD3, corresponding to and , respectively. As these weights increase, the effect of the regularization by 𝒥b decreases, and one can expect the convergence of the Gauss–Newton scheme (the FDS-IKS) to be more difficult. A few of these additional tests evaluate how and/or if the convergence of the FDS-IKS can be improved (mostly in a low-regularization situation) by increasing the number of perturbations per parameter (that is, the ensemble size). The results of these additional tests are briefly described here and expanded in Appendix A.

3.4 Results

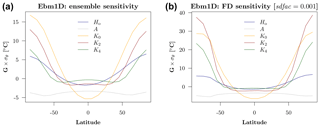

Here we provide a succinct summary of the estimation process. Broader explanation of the model climatology in relation to the control variables is given in PL2012. The background sensitivity of the 10-year mean surface temperature Ts to the control variables is shown in Fig. 1 in which, to ease comparison, the sensitivity matrix G is scaled by multiplying each of its columns by the assumed background standard deviation of the corresponding parameter. Figure 1a shows mean ensemble sensitivities estimated from the background ensemble for the ETKF, and Fig. 1b shows local finite-difference sensitivities (FDSs) estimated with perturbations using SDfac=0.001. Note that these background FDSs are identical for both the FDS-IKS and the FDS-MKS in all scenarios (PD1, PD2, and PD3) for the same SDfac. Each plot has its own scale to avoid flattened lines in the ensemble sensitivity plot. For the three control variables composing the thermal diffusion coefficient Kao, FDSs are more than twice as high as the corresponding mean ensemble sensitivities. However, the sensitivities to the ocean mixed-layer depth, Ho, and to the constant term A in the longwave radiation are quantitatively similar in both cases. In both, A is negatively correlated (as expected) with Ts at all latitudes but with a relatively low sensitivity, while the rest of the control variables show a rather neutral, but negative, sensitivity in the tropical belt and a positive sensitivity increasing toward the poles (nearly symmetrical off the Equator), with weaker scaled sensitivities for the ocean mixed-layer depth Ho. In both cases, additional plots (not shown) depict summer sensitivities similar to the corresponding winter ones. Also, FDSs with SDfac=0.01 (winter and summer) are very similar to those shown in Fig. 1b. FDSs with SDfac=0.1 are also very close to those in Fig. 1b, but slightly lower for the Kao components, toward those in Fig. 1a.

Figure 1. Ebm1D experiment. Background sensitivity of winter surface air temperature to the control variables estimated as (a) ensemble sensitivity (for the ETKF) and (b) finite-difference sensitivity (for the FDS-IKS and the FDS-MKS) with SDfac=0.001. Sensitivities are scaled by the standard deviation of the control variables, and each line refers to a column in G. Each plot uses its own scale to ease visualization.

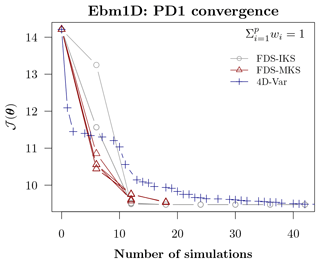

For the PD1 scenario, Fig. 2 summarizes the convergence, including the FDS-IKS, the two-step and three-step FDS-MKS, and 4D-Var; Table 2 compares the posterior control variables and the corresponding cost function values for all the evaluated schemes. For comparison, the convergence in Fig. 2 is scaled as a function of the number of simulations, which, for five control variables, is six simulations per iteration in the forward FDS schemes. Convergence details are given in Appendix A. For the PD1 scenario, 4D-Var took 141 simulations and 111 iterations to converge to the minimum with the convergence criterion indicated in Sect. 3.3. A relatively improved convergence by including the regularization term 𝒥b can be seen by comparison with PL2012, whose cost function only considered the 𝒥o term and took 236 simulations and 190 iterations to converge (Table 3 in PL2012). In any case, Fig. 2 shows that the 4D-Var convergence became apparently similar to that of the FDS-IKS tests from simulation 40 onwards. In Fig. 2, the three convergence series for the FDS-IKS with the three different perturbation factors (SDfac=0.001, 0.01, and 0.1) are represented with the same symbol. Starting with the same background cost function value 𝒥, the two series with the smaller perturbations (SDfac=0.001 and 0.01) show identical results, while the series with SDfac=0.1 has a higher cost after the first iteration but rejoins the other two series after the second iteration. However, the variations in SDfac had a very minor effect on the FDS-MKS schemes. For the FDS-MKS schemes, we focus now on the end of their corresponding iterations. The two-step FDS-MKS, for all SDfac values, gives final 𝒥 values that are close to but slightly higher than those of the FDS-IKS at the same number of iterations. The same happens with the three-step FDS-MKS with respect to the third iteration of the FDS-IKS. For 4D-Var, the convergence is slightly faster than for the FDS-IKS at simulation number six (first iteration of FDS-IKS), but it is slower after that.

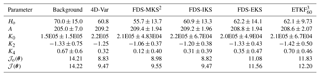

Table 2. Ebm1D PD1 tests. Parameter estimation and cost function values*.

* FDS schemes with SDfac=0.001. Values are identical for SDfac=0.01 and slightly different with a minor increase in cost function values for SDfac=0.1 (see Appendix A). 2 Three-step FDS-MKS. Details of cost function convergence for the two-step FDS-MKS shown in Appendix A. 3 ETKF subindex indicates the ensemble size. Cost function obtained by reintegration of the model with the mean updated parameters.

Figure 2. Ebm1D experiment. Convergence as a function of the number of simulations for the scenario PD1. Each iteration of the evaluated finite-difference schemes requires six simulations (background plus number of perturbations, with one perturbation per parameter). Two-step and three-step FDS-MKS are included. Each FDS scheme includes the convergence for the three perturbation tests (details in text).

Table 2 for the PD1 scenario shows that the posterior values of the control variables, as well as the corresponding cost function values, are nearly identical for 4D-Var and the FDS-IKS (values shown for SDfac=0.001 perturbations, but also similar for the higher SDfac values). The three-step FDS-MKS (also shown for SDfac=0.001) converges to relatively similar control variables. In this case, the FDS-EKS (first iteration of FDS-IKS) also obtained lower cost function values than the ETKF. Although both obtained similar values for Ho, A, and K0, in the ETKF case the values of K2 and K4 (which have a clearly non-linear relation with the surface air temperature) diverged from the minimum obtained by 4D-Var and the FDS-IKS. This is related to the background departure from the minimum and the mean sensitivities used by the ETKF. In general, one would not expect an FDS-EKS to perform better than an ETKF with denser sampling (bigger ensemble), as it did in this case. Table 2 also indicates the posterior standard deviations for the Kalman-based schemes. Off-diagonal values of the posterior covariance are not shown. There are no large differences among the posterior standard deviations of the Kalman-based schemes, with some values being higher in one scheme and others higher in a different scheme. In summary, the linear approaches (ETKF and FDS-EKS) obtained some reduction in the cost function values with respect to the background, but the rest of the schemes obtained substantially lower cost function values, with the FDS-IKS and 4D-Var converging to the same minimum and reaching the lowest 𝒥 values. It is possible that an alternative minimization for the strong-constraint 4D-Var would have converged faster. In any case, the FDS-IKS has been shown to converge fast in this experiment. Interestingly, Fig. 2 shows that the first fraction of all FDS-MKS schemes had a substantially lower cost value than either 4D-Var or any of the FDS-IKS tests. This, along with the resilience of the FDS-MKS to relatively high perturbations, supports the strategy of combining the FDS-MKS, in early iterations, with a Newton-like scheme such as the FDS-IKS, akin to Wu et al. (1999) or Gao and Reynolds (2006). Alternatively, one can conduct a line search along the direction given by the FDS-IKS increments at each iteration. Further details for this experiment are in Appendix A, focusing on the convergence of FDS-IKS versus FDS-MKS as the observational weight increases.
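As an illustration of the simulation count per iteration and of the line-search alternative mentioned above, the following is a minimal sketch and not the implementation used in this study. The function names (`fd_sensitivities`, `backtracking_line_search`), the toy model, and the simple step-halving rule are assumptions made for the example only.

```python
def fd_sensitivities(model, theta, sd, sdfac):
    """Finite-difference sensitivities of model outputs to control variables.

    model: callable mapping a list of control variables to a list of outputs.
    theta: current control vector; sd: background standard deviations.
    Returns (y0, G, n_sims), where G[i][j] approximates d y_i / d theta_j.
    """
    y0 = model(theta)          # background (unperturbed) simulation
    n_sims = 1
    G = [[0.0] * len(theta) for _ in y0]
    for j in range(len(theta)):
        delta = sdfac * sd[j]  # perturbation = SDfac x standard deviation
        perturbed = list(theta)
        perturbed[j] += delta
        yj = model(perturbed)  # one extra simulation per control variable
        n_sims += 1
        for i in range(len(y0)):
            G[i][j] = (yj[i] - y0[i]) / delta
    return y0, G, n_sims


def backtracking_line_search(cost, theta, increment, shrink=0.5, max_trials=10):
    """Shrink the step along `increment` until the cost decreases.

    Returns (new_theta, step); step is 0.0 if no trial reduced the cost.
    """
    j0 = cost(theta)
    step = 1.0
    for _ in range(max_trials):
        trial = [t + step * d for t, d in zip(theta, increment)]
        if cost(trial) < j0:
            return trial, step
        step *= shrink
    return list(theta), 0.0


# Toy usage with five control variables (hypothetical linear model).
toy_model = lambda th: [sum(th), th[0] - th[4]]
_, G, n_sims = fd_sensitivities(toy_model, [1.0, 2.0, 3.0, 4.0, 5.0], [1.0] * 5, 0.001)
print(n_sims)  # 6: background plus one perturbation per control variable
```

In a real application each evaluation of `cost` inside the line search would itself require a model simulation, so the line search adds to the simulation count of the iteration.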

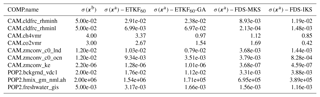

4.1 Experimental set-up

Experiment 2 is a synthetic test with the Community Earth System Model (CESM1.2), a deterministic ESM. The CESM component set used here comprises the Community Atmosphere Model version 4 (CAM4), the Parallel Ocean Program version 2 (POP2), the Community Land Model (CLM4.0), the Community Ice CodE (CICE 4) as a sea ice component, the River Transport Model (RTM), and the CESM flux coupler CPL7. The coupler computes interfacial fluxes between the various component models (based on state variables) and distributes these fluxes to all component models while ensuring the conservation of fluxed quantities. Land ice is set as a boundary condition, and the wave component is not active. The configuration uses pre-industrial forcings and it is a standard component set named B1850CN in the CESM1.2 list of compsets. We use a horizontal resolution regular finite-volume (FV) grid for the atmospheric and land components, an FV grid with a displaced pole centred at Greenland (version 7) for the ocean and sea ice components, and a 0.5∘ FV grid for the river run-off component (this is also a standard set of component grids with short name f45_g37 in CESM1.2). For comparison, this is a coarser resolution than that of the recent CESM Last Millennium Ensemble (Otto-Bliesner et al., 2016).

Here we focus on the analysis for a single DAW and equilibrium forcing and, where appropriate, introduce some comments regarding practical implementations for real cases, including the case of transient forcings. The identical twin assimilation experiment is designed to approach a case of past climate reconstruction with sparse observations, as is usual in pre-instrumental climate analysis. Specifically, we use the features of available observations of near-sea-surface temperature for the Last Glacial Maximum (LGM) from the MARGO database (MARGO Project Members, 2009). The LGM has received great attention in the paleoclimate community for its relevance to understanding climate feedbacks and future climate projections; specifically, the MARGO database has been extensively used for qualitative and quantitative model–data comparisons (e.g. Kageyama et al., 2006; Hargreaves et al., 2011; Waelbroeck et al., 2014) as well as in dynamical reconstruction, with the ocean model MITgcm and 4D-Var, of the upper-ocean conditions in the LGM Atlantic (Dail and Wunsch, 2014) and the global ocean (Kurahashi-Nakamura et al., 2017). For the purpose of this study, it is less important that the actual climate of the model matches that of the LGM than that the case study is realistic from the estimation point of view. Thus, we make use of the MARGO database characteristics (location, seasonality, and uncertainty), but conduct a synthetic experiment for pre-industrial climate conditions. To do so, before starting the experiment we spun up CESM for 1200 years starting from Levitus climatology with standard pre-industrial conditions to reach an equilibrium state. Then, we used the restart files from the end of the spin-up time to create a 60-year control simulation (as synthetic truth), in which, in addition to the pre-industrial forcings and boundary conditions, we added a flux term to the ocean, as detailed below.

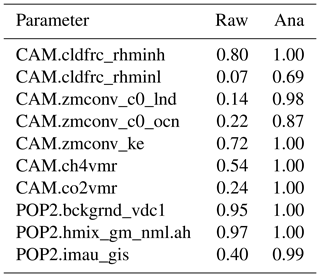

To create the background ensemble we perturbed a number of parameters for the (deterministic) physics in both the ocean and the atmosphere components, as well as input greenhouse gases and an additional influx of water into the North Atlantic Ocean. As indicated in the Introduction, the selected control variables have the responsibility of creating all the background uncertainty in a perfect-model scenario, and through the assimilation they will try to compensate for any unaccounted model error elsewhere. In a step-by-step approach, here all perturbed model parameters and forcings were included as control variables in the assimilation. An obvious (still synthetic) and very useful extension would be to perturb a wider set of model parameters and/or forcings and boundary conditions (e.g. various ice sheet configurations or alternative freshwater influx) and explicitly evaluate the compensation effect and climate reconstruction results by using subsets of the perturbed inputs as control vectors. Here, the selected parameters for model physics and radiative constituents are relevant to the global energy budget of the Earth system, but not necessarily the most sensitive model inputs for multi-decadal and longer scales. In real cases, the selection of control variables (if the control vector is to be kept low-dimensional) should be done carefully and generally based on previous global sensitivity analyses.

We included an influx of water into the North Atlantic from melting of the Greenland ice sheet (GIS), both in the true run and as a control variable. This flux was homogeneously distributed along the coast of Greenland and at the ocean surface, and it is appealing to explore as a control variable because the Atlantic meridional overturning circulation (AMOC) plays a critical role in maintaining the global ocean heat and freshwater balance. It is commonly acknowledged that North Atlantic deep water (NADW) formation is key in sustaining the AMOC (Delworth et al., 1997), while in turn freshwater flux in the North Atlantic, along with surface wind forcing, ocean tides, and convection, provides the energy for NADW formation (Gregory and Tailleux, 2011). Adding this freshwater flux (or freshwater hosing) makes the identification of the model parameters more complicated, but it is realistic to expect that current paleoclimate melting estimates may be biased, and it is useful to know how the evaluated schemes deal with this possibility. In real cases, flux terms have been used in paleoclimate modelling to account for model errors; they thus relax the perfect-model assumption in a parametric way. Here, the estimated flux term attempts to correct the mean state towards the observations along with the model parameters. As far as the authors know, this is the first experiment with a comprehensive ESM which attempts (even in a synthetic way) a joint flux and model parameter estimation for climate field reconstruction, as these are more commonly seen as competing strategies.

We initiated the background with biased control variables with respect to the truth and a zero-mean Greenland ice sheet freshwater flux. We used reasonable uncertainties in the control variables derived from previous publications. Separate analyses (weakly coupled assimilation) for different model components (atmosphere, ocean, land) may be inconsistent. In our set-up, all observations are allowed to directly impact model parameters from any component in the Earth system model. This is known as strongly coupled data assimilation. Both truth and background simulations were branched from the same initial conditions, which allowed us to use relatively short integration times (60 years) in the experiment. In a real case with steady-state forcings (e.g. estimation of real LGM climate state by assimilating the MARGO database), the model should be integrated even longer towards quasi-equilibrium to ensure that errors in the initial conditions will not affect the analysis (or they should be accounted for). Also, each model equivalent of the observations has to be mapped into the corresponding spatio-temporal domain of each paleoclimate proxy observation. Similar to the previous experiment, for the FDS schemes, we set the perturbations for each control variable as equal to their standard deviation multiplied by a perturbation factor SDfac. For computational reasons we only tested .
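The temporal part of the mapping from model output to a proxy observation can be sketched as follows. This is only an illustrative assumption about how such a mapping might look: the function name `seasonal_mean`, the layout of the monthly series, and the equal weighting of months are hypothetical, and the spatial part of the mapping (e.g. selection or interpolation of grid cells at the proxy location) is omitted.

```python
def seasonal_mean(monthly_values, months):
    """Average a monthly model series over the season a proxy represents.

    monthly_values: flat list of monthly values for whole years
                    (January first, 12 values per year).
    months: iterable of 1-based month numbers in the proxy season,
            e.g. (1, 2, 3) for a January-March proxy.
    All selected months are weighted equally (an assumption of this sketch).
    """
    if len(monthly_values) == 0 or len(monthly_values) % 12 != 0:
        raise ValueError("expected a non-empty series of whole years")
    season = set(months)
    if not season or not season <= set(range(1, 13)):
        raise ValueError("months must be a non-empty subset of 1..12")
    selected = [v for k, v in enumerate(monthly_values) if (k % 12) + 1 in season]
    return sum(selected) / len(selected)


# Toy usage: two identical years with values 1..12 for January..December.
two_years = [float(m) for m in range(1, 13)] * 2
print(seasonal_mean(two_years, (1, 2, 3)))       # January-March mean
print(seasonal_mean(two_years, range(1, 13)))    # annual mean
```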

The cost function was as in Eq. (17), in which the set of control variables used for the experiment is summarized in Table 3. Sections 4.2 and 4.3 give brief information on the atmospheric and ocean components of CESM as used in this experiment. For the rest of the model components we used default configurations for the indicated CESM compset. Given that adjoint codes are not available for CESM, here we alternatively tested an ETKF (with m=60 ensemble members) including Gaussian anamorphosis (ETKF-GA) as a possible non-linear approach, which has a negligible extra cost over a standard ETKF. We also evaluated the three-step FDS-MKS, the FDS-IKS (with three as the maximum number of iterations), and the ETKF, also with m=60. For all the assimilation analyses we used rDAF (García-Pintado, 2018a) and rdafCESM (García-Pintado, 2018c).
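To give an idea of what a Gaussian anamorphosis does, the sketch below applies a generic rank-based (empirical) normal-score transform to an ensemble of scalar values. It is not the specific transform implemented in rDAF, which is not described in this section; the function name `normal_scores` and the plotting-position formula (rank + 0.5)/m are assumptions of the example.

```python
from statistics import NormalDist


def normal_scores(ensemble):
    """Map ensemble values to standard-normal scores via their ranks.

    Each member is assigned the empirical probability (rank + 0.5) / m,
    which is then passed through the inverse standard-normal CDF.
    Ties are broken by member order (an arbitrary choice of this sketch).
    """
    m = len(ensemble)
    if m == 0:
        raise ValueError("ensemble must not be empty")
    order = sorted(range(m), key=lambda k: ensemble[k])
    std_normal = NormalDist()  # mean 0, standard deviation 1
    scores = [0.0] * m
    for rank, k in enumerate(order):
        probability = (rank + 0.5) / m   # strictly inside (0, 1)
        scores[k] = std_normal.inv_cdf(probability)
    return scores


# Toy usage: a skewed three-member ensemble.
print(normal_scores([30.0, 1.0, 2.0]))
```

The transformed values keep the ordering of the original members but have an approximately Gaussian marginal distribution, which is the property that a Kalman-type update relies on.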

4.2 CAM

We used the Community Atmosphere Model version 4 (CAM4) as an atmospheric global circulation model (AGCM) component. A comprehensive description of CAM4 can be found in Neale and Coauthors (2011). Precipitation and the associated latent heat release drive the Earth's hydrological cycle and atmospheric circulations, and many model processes in AGCMs, including deep and shallow convection and stratiform cloud microphysics and macrophysics, are responsible for the partitioning of precipitation through competition for moisture and cooperation for precipitation generation (Yang et al., 2013). Cumulus convection is a key process for producing precipitation; it is also key for redistributing atmospheric heat and moisture (Arakawa, 2004) and, consequently, the global radiative budget (Yang et al., 2013). Since AGCMs are unable to resolve the scales of convective processes, various convection parameterization schemes (CPSs) have been developed based on different types of assumptions. The CPS usually includes multiple tunable parameters, which are related to the sub-scale internal physics and are thought to have wide ranges of possible values (Yang et al., 2012). Also, the dependence of CPS parameters on model grid size and climate regime is an important issue for weather and climate simulations (Arakawa et al., 2011). In addition, AGCMs include parameterization of macrophysics, microphysics, and subgrid vertical velocity and cloud variability to simulate the subgrid stratiform precipitation.

Here we used CAM4 with the Zhang and McFarlane (1995) deep convection scheme and the Hack (1994) shallow convection scheme. For representation of stratiform microphysics we used the scheme by Rasch and Kristjánsson (1998), which is a single-moment scheme that predicts the mixing ratios of cloud droplets and ice. Regarding cloud emissivity, clouds in CAM4 are grey bodies with emissivities that depend on cloud phase, condensed water path, and the effective radius of ice particles. By default, the CAM4 physics package uses prescribed gases except for water vapour. In CAM4, the principal greenhouse gases whose longwave radiative effect is included are H2O, CO2, O3, CH4, N2O, CFC11, and CFC12. CO2 is assumed to be well mixed. As the use of prescribed species distributions is computationally less expensive than prognostic schemes, for long-term paleoclimate analysis we would generally favour the use of prescribed greenhouse gases, for example as given by the recently published 156 kyr history of atmospheric greenhouse gases by Köhler et al. (2017). Still, we would acknowledge that these emerging datasets have an associated uncertainty and that it is generally appropriate to include the most influential ones as control variables in the climate analysis so their errors can be estimated as part of the assimilation.

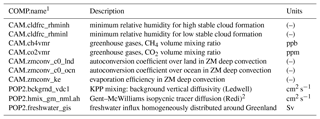

In this study, as perturbed parameters and control variables we selected parameters related to the ZM deep convection scheme and the relative humidity thresholds for low and high stable cloud formation. Also, within the radiative constituents, we included invariant surface values of CO2 and CH4 as control variables. Table 3 shows the control variables in both CAM and POP2, and Table 5 shows the true run values in column xt.

Table 3. CESM definition of control variables.

1 COMP.name: CESM component and parameter name.
2 We constrained POP2.hmix_gm_nml.ah_bolus to equal POP2.ah.hmix_gm_nml.ah in the background and updates.

This experiment is based on equilibrium simulations. Regarding real cases in transient conditions, θ could, for example, include a constant error term (i.e. a bias) for a transient greenhouse gas dataset used as a prescribed radiative constituent in the atmospheric model (Köhler et al., 2017). The estimated bias would be updated for successive DAWs. One could think of more complicated autocorrelated error models for the greenhouse gas dataset (García-Pintado et al., 2013), but it seems highly unlikely to us that available proxy datasets for low-frequency climate variability can constrain errors further than simple biases in GHG forcing. We did not evaluate any parameter related to the indirect effects of aerosols on cloud nucleation and autoconversion, even though the overall effect of aerosols on cloud albedo, cloud lifetime, and climate remains largely uncertain (Chuang et al., 2012).

4.3 POP2

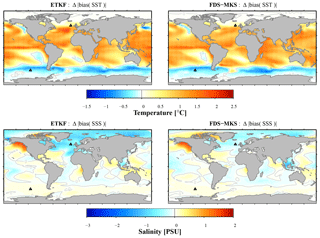

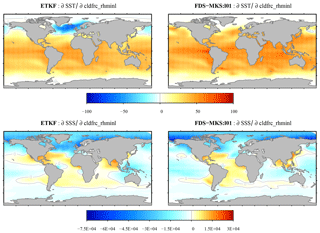

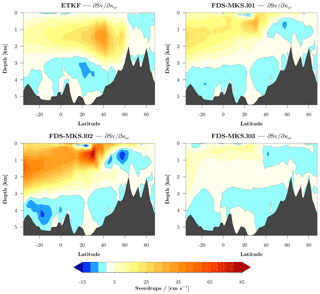

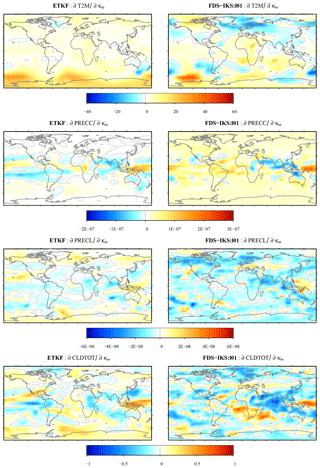

As an ocean component, we used POP2 (Smith et al., 2010). Subgrid-scale mixing parameterization includes horizontal diffusion and viscosity and vertical mixing. For horizontal diffusion we chose an anisotropic mixing of momentum and the Gent and McWilliams (1990) parameterization, which forces the mixing of tracers to take place along isopycnic surfaces, with activated submesoscale mixing. The main drawback of the Gent and McWilliams (1990) scheme is that it nearly doubles the running time with respect to simpler schemes. For vertical mixing, we chose the K-profile parameterization (KPP) of Large et al. (1994). In KPP mixing, the interior mixing coefficients (viscosity and diffusivity) are computed at all model interfaces on the grid as the sum of individual coefficients corresponding to a number of different physical processes. The first coefficients are denoted as background diffusivity κω and background viscosity υω (not to be confused with the “background” in assimilation terminology), which represent diapycnal mixing due to internal waves and other mechanisms in the mostly adiabatic ocean. Other coefficients are associated with shear instability mixing, convective instability, and diffusive convective instability. The background diffusivity is allowed to vary with depth, but here we assumed a depth-constant vertical diffusivity κω = bckgrnd_vdc1, where bckgrnd_vdc1 is a model input parameter. The model then computes υω = Pr κω, where Pr is the dimensionless Prandtl number (set to Pr = 10 in the model).
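The relation between the background coefficients and the summation of individual contributions can be written out as a small sketch. The function names and the numerical input are hypothetical and chosen for illustration only; in particular, the value 0.2 is not a CESM default, and the result carries the same units as the input diffusivity.

```python
PRANDTL_NUMBER = 10.0  # dimensionless value quoted in the text


def background_viscosity(background_diffusivity, prandtl=PRANDTL_NUMBER):
    """Background viscosity as the Prandtl number times the background diffusivity."""
    return prandtl * background_diffusivity


def interior_coefficient(background, shear, convective, diffusive_convective):
    """Interior mixing coefficient as the sum of the individual contributions."""
    return background + shear + convective + diffusive_convective


# Illustrative (made-up) depth-constant background diffusivity.
kappa_w = 0.2
upsilon_w = background_viscosity(kappa_w)
print(upsilon_w)
```

Because the viscosity is tied to the diffusivity through a fixed Pr, estimating bckgrnd_vdc1 as a control variable implicitly adjusts both background coefficients at once.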