the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

asQ: parallel-in-time finite element simulations using ParaDiag for geoscientific models and beyond

Joshua Hope-Collins

Abdalaziz Hamdan

Werner Bauer

Lawrence Mitchell

Colin Cotter

Modern high-performance computers are massively parallel; for many partial differential equation applications spatial parallelism saturates long before the computer's capability is reached. Parallel-in-time methods enable further speedup beyond spatial saturation by solving multiple time steps simultaneously to expose additional parallelism. ParaDiag is a particular approach to parallel-in-time methods based on preconditioning the simultaneous time step system with a perturbation that allows block diagonalisation via a Fourier transform in time. In this article, we introduce asQ, a new library for implementing ParaDiag parallel-in-time methods, with a focus on applications in the geosciences, especially weather and climate. asQ is built on Firedrake, a library for the automated solution of finite element models, and the PETSc library of scalable linear and nonlinear solvers. This enables asQ to build ParaDiag solvers for general finite element models and provide a range of solution strategies, making testing a wide array of problems straightforward. We use a quasi-Newton formulation that encompasses a range of ParaDiag methods and expose building blocks for constructing more complex methods. The performance and flexibility of asQ is demonstrated on a hierarchy of linear and nonlinear atmospheric flow models. We show that ParaDiag can offer promising speedups and that asQ is a productive testbed for further developing these methods.

- Article

(2764 KB) - Full-text XML

- BibTeX

- EndNote

In this article, we present asQ, a software framework for investigating the performance of ParaDiag parallel-in-time methods. We focus our attention on geophysical fluid models relevant to the development of simulation systems for oceans, weather, and climate. This library allows researchers to rapidly prototype implementations of ParaDiag for time-dependent partial differential equations (PDEs) discretised using Firedrake (Ham et al., 2023), selecting options for the solution strategy facilitated by the composable design of PETSc, the Portable, Extensible Toolkit for Scientific Computation (Balay et al., 2024). The goal of the paper is not to advocate for ParaDiag as superior to other methods but to demonstrate that asQ serves this purpose.

Parallel-in-time (PinT) is the name for the general class of methods that introduce parallelism in the time direction as well as in space. The motivation for PinT methods is that eventually it is not possible to achieve acceptable time to solution only through spatial domain decomposition as one moves to higher and higher fidelity solutions, so one would need to look to the time dimension for further speedups. Falgout and Schroder (2023) provided a quantitative argument that any sufficiently high-resolution time-dependent simulation will eventually require the use of time-parallel methods (the question is just when and how).

PinT methods have a long history, surveyed by Gander (2015), but the topic has really exploded since the late 1990s, with many algorithms being proposed including space–time-concurrent waveform relaxation multigrid (WRMG) (Vandewalle and Van de Velde, 1994), space–time multigrid (Horton and Vandewalle, 1995), parareal (Maday and Turinici, 2002), revisionist integral deferred correction (RIDC) (Christlieb et al., 2010), multigrid reduction in time (MGRIT) (Falgout and Schroder, 2023), parallel full approximation scheme in space and time (PFASST) (Emmett and Minion, 2012), ParaEXP (Gander and Güttel, 2013), and the subject of this work – ParaDiag. As we shall elaborate later with references, ParaDiag is a computational linear algebra approach to PinT, solving an implicit system for several time steps at once, using Fourier transforms in time to obtain a block diagonal system whose components can be solved independently and in parallel. A review of the most common forms of ParaDiag and important analysis results can be found in Gander et al. (2021).

Although there are a small number of software libraries for PinT methods, most PinT software implementations are written from scratch as small standalone codes or individual scripts; increased availability and use of PinT libraries could increase research productivity (Speck and Ruprecht, 2024). At the time of writing, there are only a small number of PinT libraries that are both available open-source and general in either problems or methods treated. XBraid (XBraid, LLNL, 2025) and pySDC (Speck, 2019) are mature reference implementations of MGRIT and spectral deferred corrections, written in C and Python, respectively. These frameworks are designed to be non-intrusive, so users can plug in existing serial-in-time code to quickly experiment with these PinT methods. SWEET (Schreiber, 2018) is a testbed for time integration of the shallow water equations, a model of geophysical flow. As opposed to implementing a single family of methods for any problem, SWEET implements many methods for a specific class of problem. Nektar++ is a spectral/hp element library primarily for fluid dynamics and hyperbolic models. The parareal algorithm was recently implemented and can be used with any of the models or time integration methods supported in Nektar++ (Xing et al., 2024). Finally, the only other general ParaDiag library that the authors are aware of is pyParaDiag (Čaklović et al., 2023; Čaklović, 2023), which implements ParaDiag for collocation time integration schemes with space–time parallelism. pyParaDiag implements a few common models, and users can implement drivers for both new linear or nonlinear models. In comparison to these libraries, asQ implements a particular class of method (ParaDiag), for a particular class of discretisation (finite elements) but for general PDEs.

For the purposes of later discussion, we briefly define two scaling paradigms when exploiting parallelism. Strong scaling is where a larger number of processors are used to obtain the same solution in a shorter wall-clock time. Weak scaling is where a larger number of processors are used to obtain a higher-resolution solution in the same wall-clock time.

The rest of this article is structured as follows. In Sect. 1.1 we survey the varying PinT approaches to geophysical fluids models in particular, which incorporate transport and wave propagation processes that exhibit the general challenge of PinT methods for hyperbolic problems (and hence we also discuss aspects of hyperbolic problems more broadly). In Sect. 1.2, we complete this introduction with a survey of previous research on the ParaDiag approach to PinT. Then, in Sect. 2 we review the basic ParaDiag idea and discuss the extension to nonlinear problems, which are more relevant to weather and climate, highlighting the wide range of choices that need to be made when using ParaDiag for these problems. In Sect. 3, we describe the asQ library and explain how it addresses the need to rapidly explore different options in a high-performance computing environment. In Sect. 4 we present some numerical examples to demonstrate that we have achieved this goal. Finally, in Sect. 5 we provide a summary and outlook.

1.1 Parallel-in-time methods for hyperbolic and geophysical models

The potential for PinT methods in oceans, weather, and climate simulation is attractive because of the drive to higher resolution. For example, the Met Office Science Strategy (The Met Office, 2022) and Bauer et al. (2015) highlight the need for global convection-permitting atmosphere models, eddy-resolving ocean models, eddy-permitting local area atmosphere models, and estuary-resolving shelf-sea models, to better predict hazards and extremes, which will require sub-10 km global resolution. In operational forecasting, the model needs to run to a particular end time (e.g. 10 simulation days) within a particular wall-clock time in order to complete the forecasting procedure in time for the next cycle (e.g. three wall-clock hours). Similarly, climate scientists have a requirement for simulations to complete within a feasible time for a model to be scientifically useful. To try to maintain the operational wall-clock limit when resolution is increased, weak scaling is used so that each time step at the higher spatial resolution can be completed in the same wall-clock time as the time step at the lower spatial resolution. However, even when this weak scaling is achievable, high spatial resolution yields dynamics (e.g. transport and waves) with higher temporal frequencies that should be resolved in the time step (and sometimes we are forced to resolve them due to stability restrictions in time-stepping methods). This means that more time steps are required at the higher resolution than the lower resolution. To satisfy the operational wall-clock limit, we now need to be able to strong scale the model to reduce the wall-clock time for each time step so that the same simulation end time can be reached without breaking the wall-clock time limit at the higher resolution. Achieving this scaling with purely spatial parallelism is very challenging because these models are already run close to the scaling limits. This motivates us to consider other approaches to time stepping such as PinT methods.

The challenge to designing effective PinT methods for these geophysical fluid dynamics models is that their equations support high-frequency wave components coupled to slow balanced motion that governs the large-scale flow, such as the fronts, cyclones, jets, and Rossby waves that are the familiar features of midlatitude atmospheric weather. The hyperbolic nature of these waves makes them difficult to treat efficiently using the classical parareal algorithm, as discussed in Gander (2015). The difficulty is that the errors are dominated by dispersion error, and there is a mismatch in the dispersion relation between coarse and fine models (Ruprecht, 2018). Similarly, De Sterck et al. (2024c) showed that standard MGRIT has deteriorated convergence for hyperbolic problems due to the removal of some characteristic components on the coarse grid if simple rediscretisation is used. However, De Sterck et al. (2023a) showed that a carefully modified semi-Lagrangian method can overcome this deficiency by ensuring that the coarse-grid operator approximates the fine-grid operator to a higher order of accuracy than it approximates the PDE, analogously to previous findings for MGRIT applied to chaotic systems (Vargas et al., 2023). Using this approach, De Sterck et al. (2023b, a) demonstrated real speedups for the variable coefficient scalar advection. Scalable iteration counts were obtained for nonlinear PDEs using a preconditioned quasi-Newton iteration (De Sterck et al., 2024a) and systems of linear and nonlinear PDEs (De Sterck et al., 2024b). Hamon et al. (2020) and Caldas Steinstraesser et al. (2024) have demonstrated parallel speedups for the nonlinear rotating shallow water equations (a prototypical highly oscillatory PDE for geophysical fluid dynamics), using multilevel methods.

For linear systems with pure imaginary eigenvalues (e.g. discretisations of wave equations), a parallel technique based on sums of rational approximations of the exponential function (referred to in later literature as rational exponential integrators (REXI)) restricted to the imaginary axis was proposed in Haut et al. (2016). The terms in the sum are mutually independent, so they can be evaluated in parallel. Each of these terms requires the solution of a problem that resembles a backward Euler integrator with a complex-valued time step. For long time intervals in the wave equation case, some of these problems resemble shifted Helmholtz problems with coefficients close to the negative real axis and far from the origin. These problems are known to be very unsuited to be solved by multigrid methods which are otherwise a scalable approach for linear time-stepping problems (Gander et al., 2015). Haut et al. (2016) avoided this by using static condensation techniques for higher-order finite element methods. This approach was further investigated and developed in the geophysical fluid dynamics setting using pseudospectral methods (including on the sphere) in Schreiber et al. (2018) and Schreiber and Loft (2019). In Schreiber et al. (2019), a related approach using coefficients derived from Cauchy integral methods was presented. In an alternative direction, ParaEXP (Gander and Güttel, 2013) provides a PinT mechanism for dealing with nonzero source terms for the linear wave equation, if a fast method for applying the exponential is available.

Multiscale methods are a different strategy to tackle the highly oscillatory components, whose phases are not tremendously important, but their bulk coupling to the large scale can be. Legoll et al. (2013) proposed a micro–macro parareal approach where the coarse propagator is obtained by averaging the vector field over numerical solutions with frozen macroscopic dynamics, demonstrating parallel speedups for test problems using highly oscillatory ordinary differential equations. Haut and Wingate (2014) proposed a different approach, based on previous analytical work (Schochet, 1994; Embid and Majda, 1998; Majda and Embid, 1998), in which the highly oscillatory PDE is transformed using operator exponentials to a nonautonomous PDE with rapidly fluctuating explicit time dependence. After averaging over the phase of this explicit time dependence, a slow PDE is obtained that approximates the transformed system under suitable assumptions. To obtain a numerical algorithm, the “averaging window” (range of phase values to average over) is kept finite, and the average is replaced by a sum whose terms can be evaluated independently in parallel (providing parallelisation across the method for the averaged PDE). Haut and Wingate (2014) used this approach to build a coarse propagator for the one-dimensional rotating shallow water equations. In experiments with a standard geophysical fluid dynamics test case on the sphere, Yamazaki et al. (2023) showed that the error due to averaging can actually be less than the time discretisation error in a standard method with the same time step size, suggesting that phase averaging might be used as a PinT method in its own right without needing a parareal iteration to correct it. Bauer et al. (2022) showed an alternative route to correcting the phase averaging error using a series of higher-order terms, which may expose additional parallelism.

1.2 Prior ParaDiag research

The software we present here is focused on α-circulant diagonalisation techniques for all-at-once systems, which have come to be known in the literature as “ParaDiag”. In this class of methods, a linear constant coefficient ODE (e.g. a discretisation in space of a linear constant coefficient PDE) is discretised in time, resulting in a system of equations with a tensor product structure in space and time. This system is then diagonalised in time, leading to a block diagonal system with one block per time step, which can each be solved in parallel before transforming back to obtain the solution at each time step. The first mathematical challenge is that the block structure in time is not actually diagonalisable for constant time step Δt because of the nontrivial Jordan normal form. In the original paper on diagonalisation in time, Maday and Rønquist (2008) tackled this problem by using time steps forming a geometric progression, which then allows for a direct diagonalisation. The main drawback is that the diagonalisation is badly conditioned for small geometric growth rate, which might otherwise be required for accurate solutions. McDonald et al. (2018) proposed to use a time-periodic (and thus diagonalisable) system to precondition the initial value system. This works well for parabolic systems but is not robust to mesh size for wave equations (such as those arising in geophysical fluid applications). Gander and Wu (2019) (following Wu (2018) in the parareal context) proposed a modification, in which the periodicity condition u(0)=u(T) is replaced by a periodicity condition in the preconditioner, with u0 the real initial condition, k the iteration index, and , with the resulting time block structure being called α-circulant. This system can be diagonalised by fast Fourier transform (FFT) in time after appropriate scaling by a geometric series. When , an upper bound can be shown for the preconditioner, which is independent of the mesh, the linear operator, and any parameters of the problem. In particular, it produces mesh-independent convergence for the wave equation. However, the diagonalisation is badly conditioned in the limit α→0 (Gander and Wu, 2019). Čaklović et al. (2023) addressed this by adapting α at each iteration.

The technique has also been extended to other time-stepping methods. Čaklović et al. (2023) provided a general framework for higher-order implicit collocation methods, using the diagonalisation of the polynomial integral matrix. The method can also be extended to higher-order multistep methods, such as backward difference formulae (BDF) methods (Danieli and Wathen, 2021; Gander and Palitta, 2024) or Runge–Kutta methods (Wu and Zhou, 2021; Kressner et al., 2023).

Moving to nonlinear PDEs, the all-at-once system for multiple time steps must now be solved using a Newton or quasi-Newton method. The Jacobian system is not a constant coefficient in general, so it must be approximated by some form of average, as first proposed by Gander and Halpern (2017). There are a few analytical results about this approach. Gander and Wu (2019) proved convergence for a fixed-point iteration for the nonlinear problem when an α-periodic time boundary condition is used. Čaklović (2023) developed a theory for convergence of quasi-Newton methods, presented later in Eq. (25).

Performance measurements for time-parallel ParaDiag implementations are still relatively sparse in the literature and have been predominantly for linear problems with small-scale parallelism. Goddard and Wathen (2019), Gander et al. (2021), and Liu et al. (2022) found very good strong scaling up to 32, 128, and 256 processors respectively for the heat, advection, and wave equations, with Liu et al. (2022) noting the importance of a fast network for multi-node performance due to the collective communications required in ParaDiag. Actual speedups vs. a serial-in-time method of 15× were obtained by Liu and Wu (2020) for the wave equation on 128 processors. A doubly time-parallel ParaDiag implementation was presented in Čaklović et al. (2023) for the collocation method with parallelism across both the collocation nodes and the time steps. For the heat and advection equations, they achieved speedups of 15–20× over the serial-in-time/serial-in-space method on 192 processors and speedups of 10× over the serial-in-time/parallel-in-space method on 2304 processors (with a speedup of 85× over the serial-in-time/serial-in-space method). As far as the authors are aware, the study of Čaklović (2023) is the only one showing speedups vs. a serial-in-time method for nonlinear problems, achieving speedups of 25× for the Allen–Cahn equations and speedups of 5.4× for the hyperbolic Boltzmann equation.

In this section we review the ParaDiag method. First, we discuss application to linear problems for which it was originally developed. Second, we discuss the adaptation to nonlinear problems which are of interest in many practical applications. We then use a simple performance model to highlight the requirements for ParaDiag to be an effective method.

ParaDiag is a method to accelerate the solution of an existing time integrator, so we will define the serial-in-time method first. In our exposition, we are interested in time-dependent (and initially linear with constant coefficient) PDEs combined with a finite element semi-discretisation in space:

where is the unknown, is the mass matrix, is the stiffness matrix arising from the discretisation of the spatial terms, is some forcing term not dependent on the solution, and Nx is the number of spatial degrees of freedom (DoFs). Throughout Sect. 2.1 and 2.2, lower-case Roman letters denote vectors (except t, which is reserved for time, and n and j, which are reserved for integers); upper-case Roman letters denote matrices (except N, which is reserved for integers); vectors and matrices defined over both space and time are in boldface; and Greek letters denote scalars. Equation (1) is solved on a spatial domain x∈Ω with appropriate boundary conditions on the boundary ∂Ω. We want to find the solution in the time range with T=NtΔt, starting from an initial condition u0=u(t0) at time t0. To achieve this, Eq. (1) is discretised in time using the implicit θ-method:

where the right-hand side is

where Δt is the time step size, is the time step index, un is the discrete solution at time , and is a parameter. ParaDiag is not restricted to the θ method, but this is currently the only method implemented in asQ (other methods may be added in the future).

As written, Eq. (2) is an inherently serial method, requiring the solution of an implicit system for each time step un+1 given un.

2.1 Linear problems

The ParaDiag method begins by constructing a single monolithic system for multiple time steps, called the “all-at-once system”. We illustrate an all-at-once system below for four time steps (Nt=4), created by combining Eq. (2) for , to obtain

where ⊗ is the Kronecker product. The all-at-once matrix (which is the Jacobian of the full all-at-once residual ) is written using Kronecker products of the mass and stiffness matrices with the two matrices . These are Toeplitz matrices which define the time integrator:

and the vector of unknowns for the whole time series is

The right-hand side includes the initial conditions:

Due to the properties of the Kronecker product, if B1 and B2 are simultaneously diagonalisable, then the Jacobian A is block-diagonalisable. A block-diagonal matrix can be efficiently inverted by solving each block separately in parallel. Note that when forming an space–time matrix using a Kronecker product, the matrix is always on the left of the Kronecker product, and the matrix is always on the right.

The original ParaDiag-I method introduced by Maday and Rønquist (2008) premultiplied Eq. (4) by , where Ix is the spatial identity matrix, and chose the time discretisation such that is diagonalisable. This gives a direct solution to Eq. (4), but diagonalisation is only possible if the time steps are distinct; Maday and Rønquist (2008) and Gander and Halpern (2017) used geometrically increasing time steps, which leads to large values of Δt and poor numerical conditioning for large Nt.

Here, we focus on an alternative approach, named ParaDiag-II. The Toeplitz matrices in Eqs. (5) and (6) are approximated by two α-circulant matrices:

so that the preconditioning operator is

where . The approximation properties of α-circulant matrices to Toeplitz matrices are well established (Gray et al., 2006) and are especially favourable for triangular or low-bandwidth Toeplitz matrices, as is the case here. The advantage of using Eq. (11) is that all α-circulant matrices are simultaneously diagonalisable with the weighted Fourier transform (Bini et al., 2007):

where , 𝔽 is the discrete Fourier transform matrix, and the eigenvalues in Dj are the weighted Fourier transforms of cj, the first column of Cj. The weighting matrix is , . Using the mixed product property of the Kronecker product, , the eigendecomposition Eq. (12) leads to the following factorisation of Eq. (11):

Using this block factorisation, the inverse can then be applied efficiently in parallel in three steps, detailed in Algorithm 1. Steps 1 and 3 correspond to a (weighted) FFT/IFFT in time at each spatial degree of freedom, which is “embarrassingly” parallel in space. Step 2 corresponds to solving a block-diagonal matrix, which is achieved by solving Nt complex-valued blocks with structure similar to the implicit problem required for Eq. (2). The blocks are independent, so Step 2 is “embarrassingly” parallel in time. Steps 1 and 3 require data aligned in the time direction, and Step 2 requires data aligned in the space direction. Switching between these two layouts corresponds to transposing a distributed array (similar transposes are required in parallel multidimensional FFTs). This requires all-to-all collective communication. The implications of these communications on efficient implementation will be discussed later.

Algorithm 1Algorithm for calculating for x with a given right-hand side b and the circulant preconditioner P Eq. (11) via block diagonalisation. In Step 2, each block of the block-diagonal matrix is solved with a preconditioned Krylov method.

The matrix P can then be used as a preconditioner for an iterative solution method for the all-at-once system in Eq. (4). The effectiveness of this preconditioner relies on how well P approximates A. McDonald et al. (2018) showed that the matrix P−1A has at least (Nt−1)Nx unit eigenvalues independent of Nt or discretisation and problem parameters. Although this is a favourable result, the values of the Nx non-unit eigenvalues may depend on the problem parameters, so a good convergence rate is not guaranteed. However, Gander and Wu (2019) proved that the convergence rate η of Richardson iterations for Eq. (4) preconditioned with Eq. (11) scales according to

when . Čaklović et al. (2021) proved the same bound for collocation time integration methods, and Wu et al. (2021) proved that Eq. (14) holds for any stable one-step time integrator.

This bound on the convergence rate in Eq. (14) implies that a very small α should be used. However, the roundoff error of the three-step algorithm above is 𝒪(Ntϵα−2), where ϵ is the machine precision (Gander and Wu, 2019), so for very small values of α the roundoff errors will become large. A value of around 10−4 is often recommended to provide a good convergence rate without suffering significant roundoff error (Gander and Wu, 2019). For improved performance, Čaklović et al. (2021) devised a method for adapting α through the iteration to achieve excellent convergence without loss of accuracy.

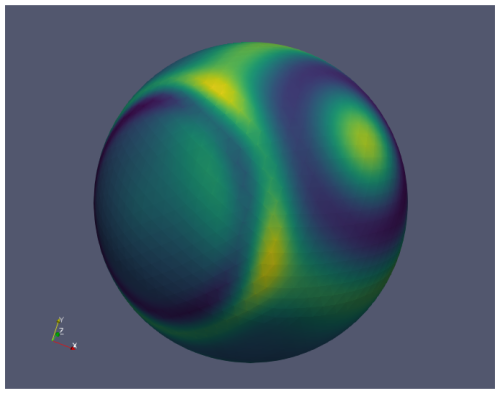

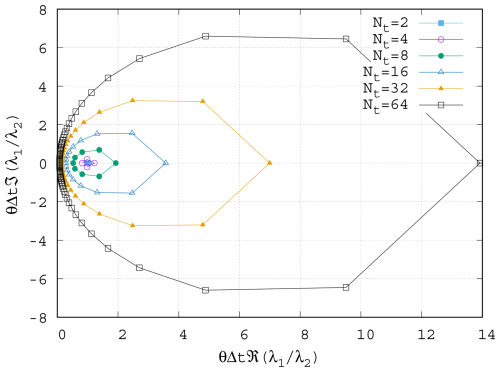

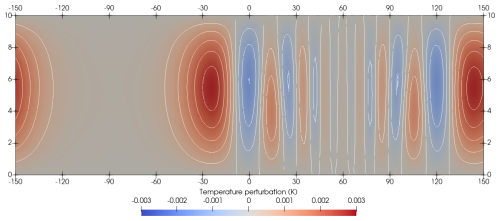

Figure 1 in the complex plane for varying Nt with θ=0.5 and . As Nt increases, the ψj values for low frequencies cluster towards the imaginary axis.

Before moving on to consider nonlinear problems, we will briefly discuss the complex-valued block systems in Step 2 of the algorithm above. We compare these blocks to the implicit linear system in the serial-in-time method in Eq. (2). For consistent naming convention, we refer to the linear system from the serial-in-time method as the “real-valued block” contrasted with the “complex-valued blocks” in the circulant preconditioner. In each case we need to solve blocks of the form

where (β1,β2) is in the serial-in-time method and in the parallel-in-time method, where λ1,j and λ2,j are the jth eigenvalue of C1 and C2 respectively. We have divided throughout by β2 to make comparison easier. The ratio of the coefficient on the mass matrix M in the parallel-in-time method to the coefficient in the serial-in-time method is

Figure 1 shows ψ plotted in the complex plane for increasing Nt. When Nt is small, ψj is clustered around unity, and the blocks in the parallel-in-time method are almost identical to those in the serial-in-time method. However, as Nt increases, ψj spreads further from unity in all directions. The magnitude of ψj for high-frequency modes increases, with a real part ≥1, which is comparable to a small (albeit complex) time step and is usually a favourable regime for iterative solvers. On the other hand, the ψj for low-frequency modes cluster closer and closer to the imaginary axis as Nt increases. This resembles the case of a very large and mostly imaginary time step (and hence a large, imaginary, Courant number), which is challenging for many iterative methods.

2.2 Nonlinear problems

The method as presented so far is designed for linear problems with constant coefficients. However, many problems of interest are nonlinear in nature or have non-constant coefficients. In this section we will show the all-at-once system for nonlinear problems and show how the ParaDiag method can be applied to such problems. We consider PDEs of the form

where the function may be nonlinear. The discretisation of Eq. (17) with the implicit θ method is analogous to Eq. (2) with Kun replaced by f(un,tn). The all-at-once system for the nonlinear PDE, analogous to Eq. (4), is

where is the concatenation of the function evaluations for the whole time series, which we show again for four time steps:

where the vector of time values t is

The right-hand side of Eq. (18) resembles Eq. (8) with Ku0 replaced by f(u0). This nonlinear system can be solved with a quasi-Newton method once a suitable Jacobian has been chosen. The exact Jacobian of Eq. (18) is

where is the derivative of f with respect to u and is a block-diagonal matrix with the nth diagonal block corresponding to the spatial Jacobian of f with respect to un, i.e. . Unlike K in the constant coefficient linear system in Eq. (4), the spatial Jacobian ∇uf varies at each time step either through nonlinearity, time-dependent coefficients, or both. As such, Eq. (21) cannot be written solely as a sum of Kronecker products like the Jacobian in Eq. (4), and we cannot immediately apply the same α-circulant trick as before to construct a preconditioner. First, we must choose some constant-in-time value for the spatial Jacobian. Gander and Halpern (2017) proposed to average the spatial Jacobians over all time steps . In our implementation, we use a constant-in-time reference state and reference time and evaluate the spatial Jacobian using these reference values (this choice is discussed further in Sect. 3.3). We also allow the preconditioner to be constructed from a different nonlinear function ; this allows for a variety of quasi-Newton methods. Using , we can construct an α-circulant preconditioner according to

The preconditioner in Eq. (22) can be used with a quasi-Newton–Krylov method for Eq. (18). The preconditioner in Eq. (22) has two sources of error: the α-circulant block as in the linear case and now also the difference between and ∇uf(un,tn) at each step. For a fixed , , and , we can define a function g which quantifies the “error” in the spatial Jacobian at a given t.

The Lipschitz constant of g with respect to u, over the time domain of interest, is the smallest κ such that

By analogy to Picard iterations for the Dahlquist equation with an approximate circulant preconditioner, Čaklović (2023, Theorem 3.6 and Remark 3.8) estimates the convergence rate η of the quasi-Newton method as

where NtΔt=T is the duration of the time window, and ϑ is a constant that depends on the time integrator (Čaklović, 2023). This result can be understood as stating that the convergence rate deteriorates as the nonlinearity of the problem gets stronger (κ increases) or as the all-at-once system encompasses a longer time window (T=NtΔt increases). The estimate implies that if α is small enough, then, for any moderate nonlinearity, the predominant error source will be the choice of reference state.

We now highlight the flexibility that expressing Eq. (18) as a preconditioned quasi-Newton–Krylov method provides. There are four main aspects, as follows.

-

At each Newton iteration, the Jacobian in Eq. (21) can be solved exactly (leading to a “true” Newton method) or inexactly (quasi-Newton). In the extreme case of the least exact Jacobian, the Jacobian is simply replaced by the preconditioner in Eq. (22), as in previous studies (Gander and Halpern, 2017; Gander and Wu, 2019; Čaklović, 2023; Wu and Zhou, 2021).

-

The nonlinear function used in the preconditioner in Eq. (22) does not need to be identical to the nonlinear function f in Eq. (18). The Jacobian in Eq. (21) has been written using f, but in general it could also be constructed from yet another function (not necessarily equal to either f or ). For example if Eq. (18) contains both linear and nonlinear terms, the Jacobian and/or preconditioner may be constructed solely from the linear terms, as in Čaklović (2023).

-

The Jacobian J(u,t) in Eq. (21) need not be linearised around the current Newton iterate u, but instead it could be linearised around some other time-varying state , e.g. some reduced-order reconstruction of u.

-

Usually the reference state for the preconditioner in Eq. (22) is chosen to be the time-average state and is updated at every Newton iteration; however, any constant-in-time state may be used (e.g. the initial conditions). This may be favourable if does not change between Newton iterations, and a factorisation of may be reused across multiple iterations.

2.3 Performance model

In this section we present a simple performance model for ParaDiag. The purpose of the model is firstly to identify the factors determining the effectiveness of the method and secondly to help us later demonstrate that we have produced a reasonably performant implementation in asQ. The model extends those presented in Maday and Rønquist (2008) and Čaklović et al. (2021) by a more quantitative treatment of the block solve cost. In Maday and Rønquist (2008) it is assumed that the cost of the block solves in the all-at-once preconditioner is identical to the block solves in the serial-in-time method. In Čaklović et al. (2021) the costs are allowed to be different, but the difference is not quantified. Here we assume that an iterative Krylov method is used to solve both the real- and complex-valued blocks and that, because of the variations in ψj in Eq .(16), the number of iterations required is different for the blocks in the circulant preconditioner and in the serial-in-time method. In the numerical examples we will see that accounting for the difference in the number of block iterations is essential for accurately predicting performance. We assume perfect weak scaling in space for the block iterations: the time taken per Krylov iteration for the complex-valued blocks in Step 2 to apply the preconditioner is the same as the time taken per Krylov iteration for the real-valued blocks in the serial-in-time method so long as twice the number of processors is used for spatial parallelism.

We start with a simple performance model for the serial-in-time method for Nt time steps of the system in Eq. (17) with Nx degrees of freedom (DoFs) in space. Each time step is solved sequentially using Newton's method, requiring a certain number of (quasi-)Newton iterations per time step where, at each Newton iteration, the real-valued block is solved (possibly inexactly) using a Krylov method. We assume that the block solves constitute the vast majority of the computational work in both the serial- and parallel-in-time methods and will revisit this assumption later. The cost of each time step is then proportional to the number of Krylov iterations ks per solve of the real-valued block and to the number of times ms the real-valued block must be solved per time step. For linear problems ms=1 and for nonlinear problems, ms is the number of Newton iterations. We assume that each Krylov iteration on the block requires work proportional to , where the exponent q determines the scalability in space; for example a textbook multigrid method has q=1, and a sparse direct solve of a 2D finite element matrix has ks=1 and (up to some upper limit on Nx). The cost of solving Nt time steps serial-in-time is therefore proportional to

The s subscript refers to “serial”(-in-time). If we parallelise only in space and assume weak scaling, then the number of processors is proportional to the number of spatial degrees of freedom, i.e. Ps∼Nx (this relation also holds once we have reached saturation when strong scaling). If the spatial parallelism weak scales perfectly with Nx, then the time taken to calculate the entire time series using the serial-in-time method is

from which in can be seen that Ts is linear in Nt.

The nonlinear all-at-once system in Eq. (18) is solved using a (quasi-)Newton–Krylov method, shown in Algorithm 2. At each Newton iteration, this requires first evaluating the nonlinear residual of Eq. (18) (lines 3 and 8). Then, the update δu is calculated by solving (possibly inexactly) a linear system with the all-at-once Jacobian J in Eq. (21) using an iterative Krylov method, referred to as the outer Krylov method (line 5). Each outer Krylov iteration requires calculating both a matrix–vector multiplication for the action of J on a vector and the application of the circulant preconditioner P in Eq. (22). The preconditioner is applied using Algorithm 1, which in Step 2 requires solving (possibly inexactly) each complex-valued block using a separate Krylov method, referred to as the inner Krylov method.1 The inner Krylov iterations may also be preconditioned, based on the structure of the blocks for the particular PDE being solved.

Algorithm 2Newton–Krylov algorithm to solve the all-at-once system in Eq. (18) to a tolerance ntol. The Krylov method approximately solves Jδu=r, preconditioned with P, to a tolerance ktol. P is applied using Algorithm 1.

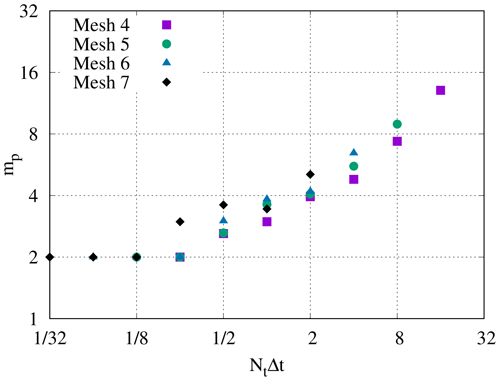

Assuming that the block solves constitute the vast majority of the computational load, we estimate the total work required by starting from this inner level of the Newton–Krylov algorithm. If kp inner Krylov iterations are required to solve each complex-valued block in Step 2 of Algorithm 1, and if we use the same solver as for the real-valued blocks so that the work per inner Krylov iteration is , then the work for a single block solve is proportional to . Each block is solved once per application of the circulant preconditioner, so the total work is the cost per block solve multiplied by the number of blocks Nt and by the total number of circulant preconditioner applications mp. This mp is the total over the entire Newton solve, i.e. the total number of outer Krylov iterations across all Newton iterations in Algorithm 2. (Note that for linear systems, where J reduces to A, mp≠1 but is equal to the number of outer Krylov iterations to solve A). Finally, this gives the following estimate for the total computational work to solve Eq. (18):

where the p subscript refers to “parallel”(-in-time), and the factor of 2 accounts for the blocks being complex-valued.

We revisit the assumption that Wp is dominated by the complex-valued block solves. The main computational components of the ParaDiag method are the evaluation of the residual of Eq. (18), the action of the all-at-once Jacobian in Eq. (21), solving the complex-valued blocks in the circulant preconditioner, the collective communications for the space–time data transposes, and the (I)FFTs. In the numerical examples we will see that, in practice, the contributions to the runtime profile of the (I)FFTs and evaluating the residual are negligible, and the contribution of the Jacobian action is only a fraction of that of the block solves, so we do not include these operations in the performance model. This leaves just the computational work of the block solves, and the time taken for the space–time transpose communications in Steps 1 and 3.

The parallel-in-time method is parallelised across both time and space, giving

The factor of 2 ensures that the number of floating point numbers per processor in the complex-valued block solves is the same as in the real-valued block solves. The time taken to complete the calculation is then

where Tc is the time taken for the collective communications in the space–time data transposes per preconditioner application. This leads to an estimate S of the speedup over the serial-in-time (but possibly parallel-in-space) method of

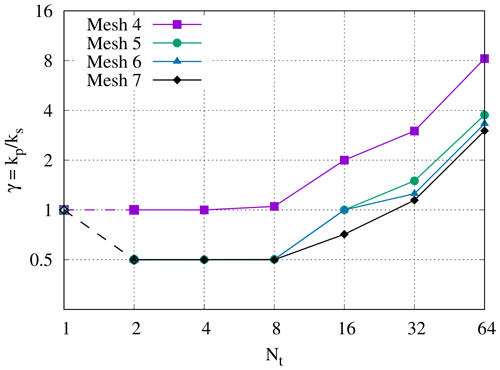

where

Here, γ quantifies how much more “difficult” the blocks in the circulant preconditioner are to solve than the blocks in the serial method, ω quantifies how much more “difficult” the all-at-once system is to solve than one time step of the serial method, and Tb is the time taken per block solve. If Tc≪Tb, then the speedup estimate simplifies to and depends solely on the “algorithmic scaling”, i.e. the dependence on Nt of the block and all-at-once system iteration counts. However, the speedup will suffer once Tc becomes non-negligible compared to Tb. Because both Tc and Tb are independent of mp, we would expect this to happen only for larger Nt, independently of how effective the circulant preconditioner is.

From the speedup estimate in Eq. (31), we can estimate the parallel efficiency as

Note that using as the processor count means that Eq. (33) estimates the efficiency of the time-parallelism independently of the efficiency of the space parallelism, just as Eq. (31) estimates the speedup over the equivalent serial-in-time method independently of the space parallelism.

In this section we introduce and describe asQ. First we state the aims of the library. Secondly, we take the reader through a simple example of solving the heat equation with asQ. Once the reader has a picture of the basic usage of asQ, we discuss how the library is structured to meet the stated aims. Next, we briefly describe the space–time Message Passing Interface (MPI) parallelism. Lastly, we describe the main classes in the library with reference to the mathematical objects from Sect. 2 that they represent.

asQ is open-source under the MIT licence and is available at https://github.com/firedrakeproject/asQ (last access: 14 July 2025). It can be installed by cloning or forking the repository and pip installing in a Firedrake virtual environment.2 It is also installed in the Firedrake Docker container available on Docker Hub.

3.1 Library overview

A major difficulty in the development and adoption of parallel-in-time methods is their difficulty of implementation. Adapting an existing serial-in-time code to be parallel-in-time often requires major overhauls from the top level, and many parallel-in-time codes are written from the ground up as small codes or individual scripts for developing new methods. The simplest implementation is often to hard code a specific problem and solution method, which then necessitates significant additional effort to test new cases in the future. Once a promising method is found, it must be tested at scale on a parallel machine to confirm whether it actually achieves real speedups. However, efficiently parallelising a code can be time-consuming in itself and is a related but distinct skill set to developing a good numerical algorithm.

In light of these observations, we state the three aims of the asQ library for being a productive tool for developing ParaDiag methods.

- 1.

It must be straightforward for a user to test out different problems – both equations sets and test cases.

- 2.

It must be straightforward for a user to try different solution methods. We distinguish between two aspects:

- a.

the construction of the all-at-once systems, e.g. specifying the form of the all-at-once Jacobian or the state to linearise the circulant preconditioner around and

- b.

the linear/nonlinear solvers used, e.g. the Newton method used for the all-at-once system or the preconditioning used for the linear block systems.

- a.

- 3.

The implementation must be efficient enough that a user can scale up to large-scale parallelism and get a realistic indication of the performance of the ParaDiag method.

Broadly, we attempt to fulfil these aims by building asQ on top of the Unified Form Language (UFL), Firedrake, and PETSc libraries and by following the design ethos of these libraries. The UFL (Alnæs et al., 2014) is a purely symbolic language for describing finite element forms, with no specification of how those forms are implemented or solved. UFL expressions can be symbolically differentiated, which allows for the automatic generation of Jacobians and many matrix-free methods. Firedrake is a Python library for the solution of finite element methods that takes UFL expressions and uses automatic code generation to compile high-performance C kernels for the forms. Firedrake integrates tightly with PETSc via petsc4py (Dalcin et al., 2011) for solving the resulting linear and nonlinear systems. PETSc provides a wide range of composable linear and nonlinear solvers that scale to massive parallelism (Lange et al., 2013; May et al., 2016), as well as mechanisms for creating new user-defined solver components, e.g. preconditioners. After the example script below we will discuss further how the three aims above are met.

We reinforce that asQ is not intended to be a generic ParaDiag library into which users can port their existing serial-in-time codes, as XBraid is for MGRIT or pySDC is for spectral deferred correction (SDC) and collocation methods. asQ requires a Firedrake installation and for the user to have some familiarity with basic Firedrake usage and to consider a modelling approach that is within the Firedrake paradigm (essentially a discretisation expressible in the domain-specific language UFL). In return for this restriction we gain all the previously mentioned benefits of Firedrake and PETSc for increasing developer productivity and computational performance. Restricting the scope to finite element methods means that a user does not need to implement spatial discretisations to experiment with temporal solution methods – this is in contrast to XBraid, pySDC, and pyParaDiag, which do not restrict the spatial discretisation at the cost of requiring user implementations. Additionally, we believe that for ParaDiag in particular it is important to have easy access to a wide range of linear solvers because the complex-valued coefficients on the block systems in Eq. (15) can impair the performance of iterative methods, so a range of strategies may have to be explored before finding a sufficiently efficient scheme. Contrast this with MGRIT or parareal, for example, where the inner solves are exactly the serial-in-time method, so existing solvers are often still optimal.

3.2 A heat equation example

The main components of asQ will be demonstrated through an example. We solve the heat equation over the domain Ω with boundary Γ:

where n is the normal to the boundary, ΓN is the section of the boundary with zero Neumann boundary conditions, ΓD is the section of the boundary with zero Dirichlet boundary conditions, and . Equation (34) will be discretised with a standard continuous Galerkin method in space. If V is the space of piecewise linear functions over the mesh, and V0 is the space of functions in V which are 0 on ΓD, the solution u∈V0 is the solution of the weak form:

The Neumann boundary conditions are enforced weakly by removing the corresponding surface integral, and the Dirichlet conditions are enforced strongly by restricting the solution to V0.

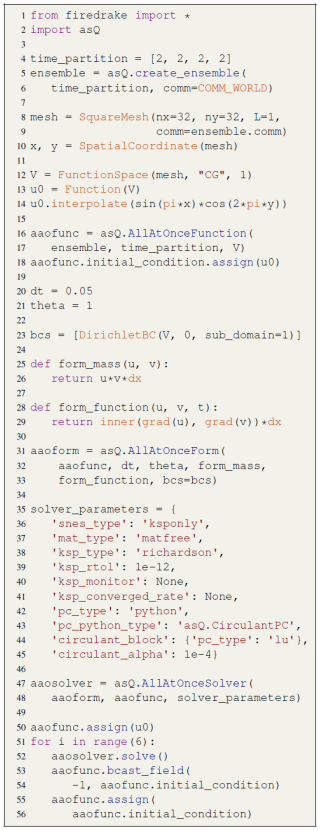

We will set up and solve an all-at-once system for the backward Euler method for eight time steps distributed over four spatial communicators. The code for this example is shown in Listing 1 and is available in the heat.py script in Hope-Collins et al. (2024b).

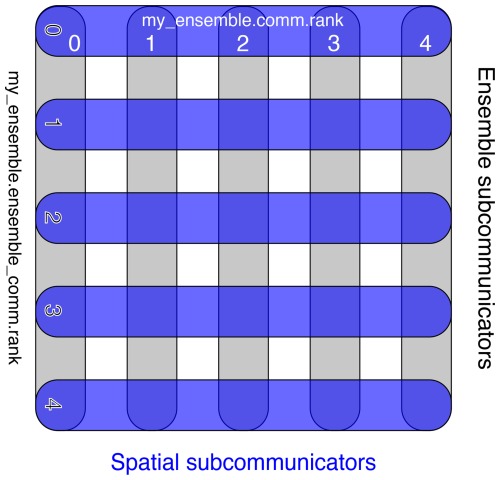

The first part of the script will import Firedrake and asQ and set up the space–time parallel partition. asQ distributes the time series over multiple processors in time, and each time step may also be distributed in space. Firedrake's Ensemble class manages this distribution by setting up a tensor product Px×Pt of MPI communicators for space and time parallelism (described in more detail later and illustrated in Fig. 2). The time parallelism is determined by the list time_partition on line 4. Here we request four processors in time, each holding two time steps for a total time series of eight time steps. We refer to all time steps on a single partition in time as a “slice” of the time series; here, we have four slices of two time steps each.

Listing 1Code for solving the heat equation with ParaDiag (available in Hope-Collins et al., 2024b). asQ and Firedrake components are highlighted in blue and orange respectively.

We do not need to provide the spatial partition when we create the Ensemble on line 5 because the total MPI ranks will automatically be distributed evenly in time. For example if the script is run with four MPI ranks, then each spatial communicator will have a single rank, but if the script is run with eight MPI ranks, then each spatial communicator will have two MPI ranks.

On line 8 we define the mesh for a square domain defined on the local spatial communicator ensemble.comm. The linear continuous Galerkin ("CG") function space V for a single time step is represented by the Firedrake FunctionSpace on line 12 and is used to create a Firedrake Function holding the initial conditions u0=sin(πx)cos(2πy).

Now we build the all-at-once system using asQ, starting with an AllAtOnceFunction on line 16. This class represents Eq. (7), a time series of finite element functions in V distributed in time over an Ensemble. In an AllAtOnceFunction each spatial communicator holds a slice of one or more time steps of the time series, in this example two time steps per communicator.

Next we need to define the all-at-once system itself. To represent the finite element form in Eq. (4) or Eq. (18) over the time series aaofunc, we use an AllAtOnceForm. Building this requires the time step Δt, the implicit parameter θ, the boundary conditions, and a way of describing the mass matrix M and the function f(u,t) (which for linear equations is just Ku). This is shown between lines 20 and 33. The time step Δt=0.05 gives a Courant number of around 13, and θ=1 gives the implicit Euler time-integration method. The Python functions form_mass and form_function each take in a function u and a test function v in V and return the UFL form for the mass matrix M and f(u,t) respectively. asQ uses these two Python functions to generate all of the necessary finite element forms for the all-at-once system in Eq. (18), the Jacobian in Eq. (21), and the circulant preconditioner in Eq. (22), while the user need only define them for the semi-discrete form of a single time step. The boundary conditions for a single time step are passed to the AllAtOnceForm in bcs and are applied to all time steps. For Eq. (36) we set ΓD to be the left boundary x=0 by passing the subdomain=1 argument to the DirichletBC, and for Eq. (35) Firedrake defaults to zero Neumann boundary conditions on all other boundaries. Given V and a set of Dirichlet boundary conditions, Firedrake will automatically create the restricted space V0. The initial conditions defined earlier conform to these boundary conditions.

We next need to specify how we solve for the solution of aaoform. In PETSc, linear and nonlinear solvers are specified using dictionaries of solver parameter options, here shown on lines 35 to 45. Nonlinear problems are solved using a SNES (Scalable Nonlinear Equations Solver), and linear problems are solved using a KSP (Krylov SPace method), which is preconditioned by a PC. Starting with the SNES, our problem is linear, so we use 'snes_type': 'ksponly' to perform one Newton iteration and then return the result. Assembling the entire space–time matrix would be very expensive and is unnecessary for ParaDiag, so asQ implements the Jacobian matrix-free, specified by the 'mat_type': 'matfree' option. We select the Richardson iterative method and require a drop in the residual of 12 orders of magnitude. The ksp_monitor and ksp_converged_rate options tell PETSc to print the residual at each Krylov iteration and the contraction rate upon convergence respectively. The Richardson iteration is preconditioned with the corresponding block circulant ParaDiag matrix, which is provided by asQ as a Python type preconditioner CirculantPC with the circulant parameter . Lastly, we need to specify how to solve the blocks in the preconditioner after the diagonalisation. The composability of PETSc solvers means that to specify the block solver we simply provide another dictionary of options with the 'circulant_block' key. The block solver here is just a direct LU factorisation but could be any other Firedrake or PETSc solver configuration.

The tight integration of Firedrake and asQ with PETSc means that a wide range of solution strategies are available through the dictionary of options. For example, we can change the Krylov method for the all-at-once system simply by changing the 'ksp_type' option. Rather than a direct method, an iterative method could be used for the block solves by changing the options in the circulant_block dictionary. Options can also be passed from the command line, in which case zero code changes are required to experiment with different solution methods.

The last all-at-once object we need to create is an AllAtOnceSolver on line 47, which solves aaoform for aaofunc using the specified solver parameters. If we want to solve for, say, a total of 48 time steps, then, for an all-at-once system of size Nt=8, we need to solve six windows of 8 time steps each, where the final time step of each window is used as the initial condition for the next window, shown on lines 51 to 56. After each window solve, we use the final time step as the initial condition for the next window. To achieve this, aaofunc.bcast_field(i, uout) on line 53 wraps MPI_Bcast to broadcast time step i across the temporal communicators so that all spatial communicators now hold a copy of time step i in uout (in this example, i is the Pythonic −1 for the last element, and uout is the initial_condition). After broadcasting the new initial conditions, every time step in aaofunc is then assigned the value of the new initial condition as the initial guess of the next solve. We have been able to set up and solve a problem parallel in time (and possibly parallel in space) in 56 lines of code.

We now return to the three requirements stated in the library overview and discuss how asQ attempts to meet each one.

- 1.

Straightforward to test different problems. This requirement is met by only requiring the user to provide UFL expressions for the mass matrix M and the function f(u,t): a Firedrake

FunctionSpacefor a single time step and a FiredrakeFunctionfor the initial conditions. These are all components that a user would already have, or would need anyway, to implement the corresponding serial-in-time method. From these components asQ can generate the UFL for the different all-at-once system components, which it then hands to Firedrake to evaluate numerically. Changing to a different problem simply requires changing one or more of the UFL expressions, the function space, or the initial conditions. - 2.

Straightforward to test different solution methods.

- a.

Requirement 2a is met by allowing users to optionally pass additional UFL expressions (

form_function) for constructing the different all-at-once system components (see theAllAtOnceSolver,CirculantPC, andAuxiliaryBlockPCdescriptions below). - b.

Requirement 2b is met through the use of PETSc's solver options interface. Changing between many different methods is as simple as changing some options strings. For more advanced users, novel methods can be written as bespoke petsc4py Python PCs. The use of UFL means that symbolic information is retained all the way down to the block systems, so solution methods that rely on certain structure can be applied without issue, for example Schur factorisations or additive Schwarz methods defined on topological entities.

- a.

- 3.

Efficient parallel implementation. This requirement involves both spatial and temporal parallelism. The spatial parallelism is entirely provided by Firedrake and PETSc. The temporal parallelism is implemented using a mixture of PETSc objects defined over the global communicator, and mpi4py calls via the Firedrake

Ensembleor via the mpi4py-fftw library (Dalcin and Fang, 2021). The temporal parallelism is discussed in more depth in Sect. 3.3 and is profiled in the examples in Sect. 4.

3.3 Space–time parallelism

As stated previously, time parallelism is only used once space parallelism is saturated due to the typically lower parallel efficiency. This means that any practical implementation of parallel-in-time methods must be space–time parallel. In terms of evaluating performance, full space–time parallelism is especially important for ParaDiag methods due to the need for all-to-all communication patterns, which can be significantly affected by network congestion.

The three steps in applying the block circulant preconditioner require two distinct data access patterns. The (I)FFTs in Steps 1 and 3 require values at a particular spatial degree of freedom (DoF) at all Nt time steps/frequencies, whereas the block solves in Step 2 require values for all spatial DoFs at a particular frequency. The data layout in asQ places a single slice of the time series on each spatial communicator. The slice length is assumed to be small and is usually just one, i.e. a single time step per spatial communicator, to maximise time parallelism during Step 2. This layout minimises the number of ranks per spatial communicator, hence reducing the overhead of spatial halo swaps during the block solve. However, parallel FFTs are not efficient with so few values per processor. Instead of using a parallel FFT, the space–time data are transposed so that each rank holds the entire time series for a smaller number of spatial DoFs. Each rank can then apply a serial FFT at each spatial DoF. After the transform the data are transposed back to their original layout. These transposes require all-to-all communication rounds, which are carried out over each partition of the mesh separately, so we have Px∼𝒪(Nx) all-to-all communications involving Pt∼𝒪(Nt) processors each, instead of a single all-to-all communication involving all PtPx processors. asQ currently uses the mpi4py-fftw library for these communications, which implements the transposes using MPI_Alltoallw and MPI derived data types.

Collective communication patterns, particularly Alltoall and its variants, do not scale well for large core counts compared to the point-to-point communications typical required for spatial parallelism. There are two common implementations of Alltoall in MPI: pairwise and Bruck (Netterville et al., 2022). The pairwise algorithm minimises the total communication volume but has communication complexity 𝒪(P). The Bruck algorithm has communication complexity 𝒪(log P) at the expense of a higher total communication volume, so it is only more efficient than the pairwise algorithm for latency bound communications (i.e. small message sizes). MPI implementations usually select which algorithm to use at runtime based on a variety of factors, primarily the number of processors in the communicator and the message size. The typical message size for ParaDiag is above the usual thresholds for the Bruck algorithm to be used. Therefore, we expect the communication time Tc in our performance model above to scale with 𝒪(Pt) and become a limiting factor on the parallel scaling for large Nt. It is worth noting that, although to a first approximation Tc should depend on Pt but not Px for constant DoFs/core, in practice network congestion may cause Tc to increase with Px even if Pt is fixed.

Figure 2Space–time parallelism using Firedrake's Ensemble class. ensemble.comm.size is Px=5, and ensemble.ensemble_comm.size is Pt=5. The dark-blue horizontal lines each represent a spatial communicator Ensemble.comm over which a mesh is partitioned. Every spatial communicator has the same number of ranks and the same mesh partitioning. The grey vertical lines represent communicators in time, Ensemble.ensemble_comm. These communicators are responsible for connecting the ranks on each spatial communicator with the same section of the mesh partition.

The space–time parallelism in asQ is implemented using Firedrake's Ensemble class, which splits a global communicator (usually COMM_WORLD) into a Cartesian product of spatial and temporal communicators. The resulting layout of communicators in the Ensemble is shown in Fig. 2. Firedrake objects for each time step are defined on each spatial communicator, and PETSc objects and asQ objects for the all-at-once system are defined over the global space–time communicator. The Ensemble provides wrappers around mpi4py calls for sending Firedrake Function objects between spatial communicators over the temporal communicators. The mpi4py-fftw library is used for space–time transposes separately across each temporal communicator. The spatial parallelism required is already fully abstracted away by Firedrake, so asQ need only implement the temporal parallelism on top of this. The Ensemble splits the global communicator such that each spatial communicator has a group of consecutive cores, which minimises the amount of off-node communication needed during the block solves. This is important if we assume that the space parallelism will already be at the strong scaling limit before time parallelism is used. We experimented briefly with grouping the ranks in each temporal communicator consecutively, but the performance was either unchanged or reduced in all cases that we tried compared to grouping the ranks in each spatial communicators.

As stated earlier, in asQ we construct the circulant preconditioner from the spatial Jacobian of a constant reference state (usually the time-averaged state), rather than the time average of the spatial Jacobians. The time average of the spatial Jacobian is optimal in the L2 norm, but using the reference state has two advantages. First, the communication volume of averaging the state is smaller than averaging the Jacobians. For scalar PDEs or low-order methods the difference is minor, but for systems of PDEs or high-order methods the difference can be substantial. Second, explicitly assembling the Jacobian prevents the use of matrix-free methods provided by Firedrake and PETSc for the block solves. Matrix-free methods have lower memory requirements than assembled methods, which is important for finite element methods because they are usually memory-bound. The matrix-free implementation in Firedrake (Kirby and Mitchell, 2018) also enables the use of block preconditioning techniques such as Schur factorisation by retaining symbolic information that is lost when assembling a matrix numerically. We also note that for quadratic nonlinearities (such as in the shallow water equations in primal form) the Jacobian of the average and the average of the Jacobian are identical. However, for cubic or higher nonlinearities (such as the compressible Euler equations in conservative form) the two forms are not identical.

3.4 asQ components

Now that we have seen an example of the basic usage of asQ, we give a more detailed description of each component of the library, with reference to which mathematical objects described in Sect. 2 they represent. First the components of the all-at-once system are described, then a variety of Python type PETSc preconditioners for both the all-at-once system and the blocks, and then the submodule responsible for the complex-valued block solves in the circulant preconditioner. There are a number of other components implemented in asQ, but these are not required for the numerical examples later, so they are omitted here (see Appendix C for a description of some of these components).

AllAtOnceFunction

The AllAtOnceFunction is a time series of finite element functions distributed over an Ensemble representing the vector u in Eq. (7) plus an initial condition. Each ensemble member holds a slice of the time series of one or more time steps, and time step i can be accessed on its local spatial communicator as a Firedrake Function using aaofunc[i]. Evaluation of the θ-method residual in Eq. (2) at a time step un+1 requires the solution at the previous time step un, so the AllAtOnceFunction holds time halos on each spatial communicator for the last time step on the previous slice. Some other useful operations are also defined, such as calculating the time average, linear vector space operations (e.g. axpy), and broadcasting particular time steps to all slices (bcast_field). Internally, a PETSc Vec is created for the entire space–time solution to interface with PETSc solvers.

AllAtOnceCofunction

In addition to functions in the primal space V, it is also useful to have a representation of cofunctions in the dual function space V*. asQ provides this dual object as an AllAtOnceCofunction, which represents a time series of the dual space V*. Cofunctions are used when assembling residuals of Eq. (4) or Eq. (18) over the time series and providing the right-hand side (the constant part) of linear or nonlinear systems. Both AllAtOnceFunction and AllAtOnceCofunction have a riesz_representation method for converting between the two spaces.

AllAtOnceForm

The AllAtOnceForm holds the finite element form for the all-at-once system Eq. (18) and is primarily responsible for assembling the residual of this form to be used by PETSc. It holds the Δt, θ, form_mass, form_function, and boundary conditions which are used for constructing the Jacobian and preconditioners. The contribution of the initial conditions to the right-hand side is automatically included in the system. After updating the time halos in the AllAtOnceFunction, assembly of the residual can be calculated in parallel across all slices. The AllAtOnceForm will also accept an α parameter to introduce the circulant approximation at the PDE level, such as required for the waveform relaxation iterative method of Gander and Wu (2019), which uses the modified initial condition .

AllAtOnceJacobian

The AllAtOnceJacobian represents the action of the Jacobian in Eq. (21) of an AllAtOnceForm on an all-at-once function. This class is rarely created explicitly by the user, but it is instead created automatically by the AllAtOnceSolver or LinearSolver classes described below and used to create a PETSc Mat (matrix) for the matrix–vector multiplications required for Krylov methods. As for assembly of the AllAtOnceForm, calculating the action of the AllAtOnceJacobian is parallel across all slices after the time halos are updated. The automatic differentiation provided by UFL and Firedrake means that the action of the Jacobian is computed matrix-free, removing any need to ever explicitly construct the entire space–time matrix. For a quasi-Newton method the Jacobian need not be linearised around the solution at the current iteration. The AllAtOnceJacobian has a single PETSc option for specifying alternate states to linearise around, including the time average, the initial conditions, or a completely user-defined state.

AllAtOnceSolver

The AllAtOnceSolver is responsible for setting up and managing the PETSc SNES for the all-at-once system defined by an AllAtOnceForm using a given dictionary of solver options. The nonlinear residual is evaluated using the AllAtOnceForm, and an AllAtOnceJacobian is automatically created. By default this Jacobian is constructed from the AllAtOnceForm being solved, but AllAtOnceSolver also accepts an optional argument for a different AllAtOnceForm to construct the Jacobian from, for example one where some of the terms have been dropped or simplified. Just like Firedrake solver objects, AllAtOnceSolver accepts an appctx dictionary containing any objects required by the solver which cannot go into a dictionary of options (i.e. not strings or numbers). For example for CirculantPC this could include an alternative form_function for or a Firedrake Function for in Eq. (22).

Callbacks can also be provided to the AllAtOnceSolver to be called before and after assembling the residual and the Jacobian, similar to the callbacks in Firedrake's NonlinearVariationalSolver. There are no restrictions on what these callbacks are allowed to do, but potential uses include updating solution-dependent parameters before each residual evaluation and manually setting the state around which to linearise the AllAtOnceJacobian.

Paradiag

In most cases, users will want to follow a very similar workflow to that in the example above: setting up a Firedrake mesh and function space, building the various components of the all-at-once system, and then solving one or more windows of the time series. To simplify this, asQ provides a convenience class Paradiag to handle this case. A Paradiag object is created with the following arguments:

paradiag = Paradiag(

ensemble=ensemble,

form_mass=form_mass,

form_function=form_function,

ics=u0, dt=dt, theta=theta,

time_partition=time_partition,

solver_parameters=solver_parameters),creating all the necessary all-at-once objects from these arguments. The windowing loop in the last snippet of the example above is also automated and can be replaced by

paradiag.solve(nwindows=6).

The solution is then available in paradiag.aaofunc, and the other all-at-once objects are similarly available. Callbacks can be provided to be called before and after each window solve, e.g. for writing output or collecting diagnostics.

SerialMiniApp

The SerialMiniApp is a small convenience class for setting up a serial-in-time solver for the implicit θ method and takes most of the same arguments as the Paradiag class.

serial_solver = SerialMiniApp( dt, theta, u0, form_mass, form_function, solver_parameters)

The consistency in the interface means that, once the user is satisfied with the performance of the serial-in-time method, setting up the parallel-in-time method is straightforward, with the only new requirements being the solver options and the description of the time parallelism (ensemble and time partition). Importantly, this also makes ensuring fair performance comparisons easier. In Sect. 4, all serial-in-time results are obtained using the SerialMiniApp class.

CirculantPC

The block α-circulant matrix in Eq. (22) is implemented as a Python type petsc4py preconditioner, which allows it to be applied matrix-free. By default the preconditioner is constructed using the same Δt, θ, form_mass, and form_function as the AllAtOnceJacobian and linearising the blocks around the time average . However, alternatives for all of these can be set via the PETSc options and the appctx. For example, an alternate can be passed in through the appctx, and could be chosen as the initial conditions via the PETSc options. Additional solver options can also be set for the blocks, as shown in the example above. The implementation of the complex-valued linear system used for the blocks can also be selected via the PETSc options: more detail on this below.

AuxiliaryRealBlockPC and AuxiliaryComplexBlockPC

These two classes are Python preconditioners for a single real- or complex-valued block respectively, constructed from an “auxiliary operator”, i.e. from a different finite element form than the one used to construct the block. These are implemented by subclassing Firedrake's AuxiliaryOperatorPC. Examples of when preconditioning the blocks with a different operator may be of interest include preconditioning the nonlinear shallow water equations with the linear shallow water equations or preconditioning a variable diffusion heat equation with the constant diffusion heat equation. This preconditioner can be supplied with any combination of alternative values for form_mass, form_function, boundary conditions, (Δt,θ) or (λ1,λ2) as appropriate, and , as well as PETSc options for how to solve the matrix resulting from the auxiliary operator.

complex_proxy

When PETSc is compiled, a scalar type must be selected (e.g. double-precision float, single-precision complex), and then only this scalar type is available. asQ uses PETSc compiled with a real-valued scalar because complex scalars would double the required memory access when carrying out real-valued parts of the computation, and not all of Firedrake's preconditioning methods are available in complex mode yet (notably geometric multigrid). However, this means that the complex-valued linear systems in the blocks of the CirculantPC must be manually constructed. The complex_proxy module does this by writing the linear system λAx=b with complex number , complex vectors and , and real matrix A as

Two versions of complex_proxy are available, both having the same API but different implementations. One implements the complex function space as a two-component Firedrake VectorFunctionSpace and one which implements the complex function space as a two-component Firedrake MixedFunctionSpace. The VectorFunctionSpace implementation is the default because for many block preconditioning methods it acts more like a true complex-valued implementation. The complex_proxy submodule API will not be detailed here, but example scripts are available in the asQ repository demonstrating its use for testing block preconditioning strategies without having to set up an entire all-at-once system.

We now present a set of numerical examples of increasing complexity in order to demonstrate two points, firstly that asQ is a correct and efficient implementation of the ParaDiag method and secondly that the ParaDiag method is capable of delivering speedups on relevant linear and nonlinear test cases from the literature. The correctness of asQ will be demonstrated by verifying the convergence rate in Eq. (14) from Sect. 2.1, and the efficiency of the implementation will be demonstrated by comparing actual wall-clock speedups to the performance model of Sect. 2.3.

In the current article we are interested only in speedup over the equivalent serial-in-time method: can ParaDiag accelerate the calculation of the solution to the implicit-θ method? We are not considering here the question of whether ParaDiag can beat the best of some set of serial-in-time methods (e.g. on error vs. wall-clock time). This question is the topic of a later publication.

The situation we consider is that we want a solution over a time interval of length T, which, for reasonable values of Δt, requires a large number of time steps . However, ParaDiag may be more efficient for all-at-once systems with Nt<NT, so we split the time series of NT time steps into Nw windows of time steps each. We then use ParaDiag to solve the all-at-once system for each window in turn, using the last time step of each window as the initial condition for the next window.

For linear constant-coefficient equations, for a given α the number of iterations required to solve each window is constant, so if both α α and Nt are fixed, then the time taken to solve each window is essentially constant. Likewise, in the serial-in-time method the time taken per time step is essentially constant. This means that we can solve a single window of a given α and Nt, or a single time step serial-in-time, and extrapolate the time taken to solve all NT time steps. In practice, network congestion and other hardware factors may affect the time taken, so in the linear examples below we actually solve five windows for each α and Nt combination and average the time taken – although we note that there was very little variation. For the nonlinear equations, both the serial- and parallel-in-time methods may require a different number of iterations per time step or window as the nonlinearity in the local solution evolves. For the nonlinear examples we calculate all NT time steps for both serial-in-time and parallel-in-time methods and use the total time taken.

In the performance model in Eq. (31) it is assumed that, once spatial parallelism is saturated, the number of cores is proportional to the number of time steps in the all-at-once system Nt. We have not found a situation in which there is a speedup advantage to having more time steps than cores by having multiple time steps per time slice. Therefore in all examples we keep the DoFs/core constant when varying Nt or Nx, which, when combined with the framing of scaling in terms of resolution and wall-clock time, leads to the following strong- and weak-scaling interpretations. For strong scaling in time, Δt and NT are fixed, and we attempt to decrease the total wall-clock time by increasing Nt and decreasing Nw. Although the number of DoFs being computed at any one time is increasing, with DoFs/core fixed (as it would be for traditional weak scaling in space), we call this strong scaling because the resolution is fixed. For weak scaling in time, Δt is decreased and NT is increased proportionally such that the final time T remains the same. We attempt to keep the wall-clock time fixed by keeping Nw fixed but increasing Nt so that we can use more cores. Usually – but not necessarily – Δx is decreased proportionally to Δt, in which case the number of cores used for spatial parallelism will also increase to keep the DoFs/core constant.

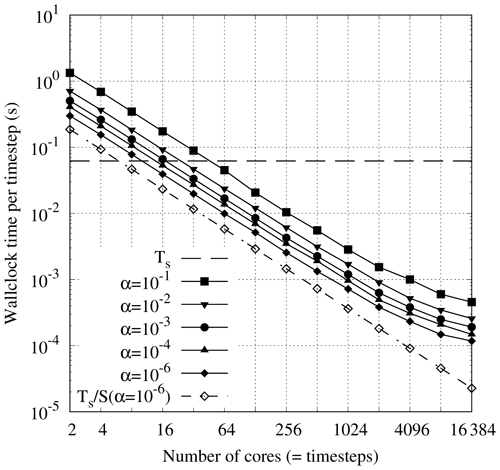

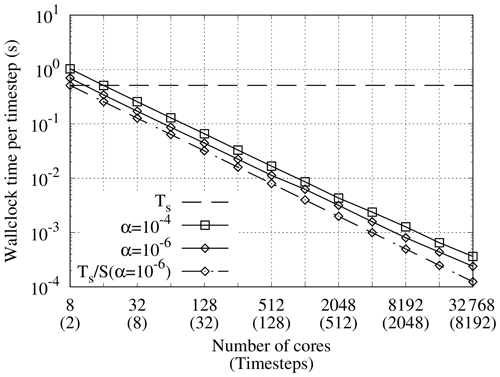

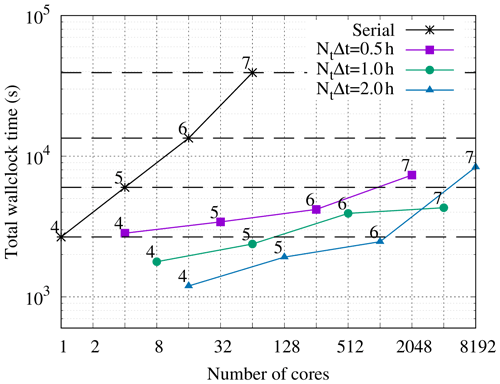

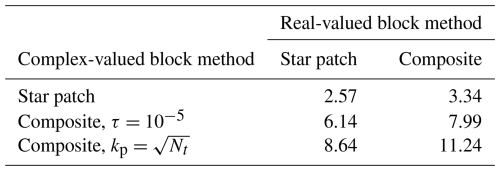

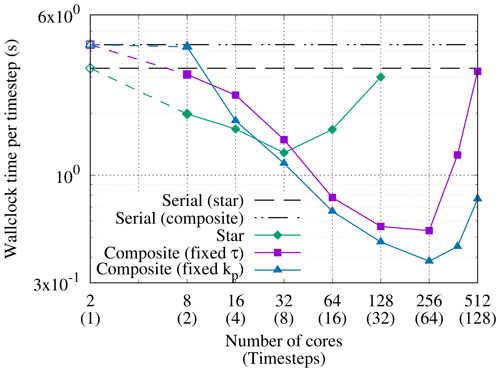

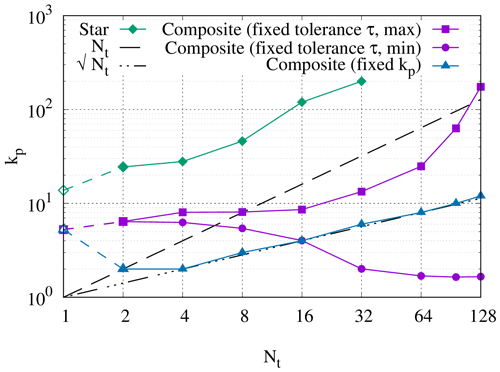

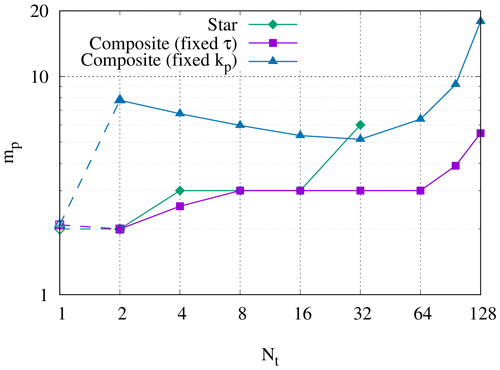

Figure 3Strong scaling in time of the linear scalar advection equation test case with Nx=1282 and σ=0.8 is demonstrated by measured wall-clock times per time step () for varying Nt and α. The wall-clock time for the serial-in-time method is plotted as the horizontal line Ts. The prediction of the performance model in Eq. (31) is shown for using the measured Ts and (assuming Tc=0). Maximum speedup of 528 achieved with and Nt=16384.

All results presented here were obtained on the ARCHER2 HPE Cray supercomputer at the EPCC (Beckett et al., 2024) using a Singularity container with asQ, Firedrake, and PETSc installed. ARCHER2 consists of 5860 nodes, each with 2 AMD EPYC 7742 CPUs with 64 cores per CPU for a total of 128 cores per node. The nodes are connected with an HPE Slingshot network. The EPYC 7742 CPUs have a deep memory hierarchy, with the lowest level of shared memory being a 16 MB L3 cache shared between four cores. During preliminary testing we found that, due to the memory-bound nature of finite element computation, the best performance was obtained by underfilling each node by allocating fewer than four cores per L3 cache. For the examples using the shallow water equations and the compressible Euler equations, we use 2 cores per L3 cache, giving a maximum of 64 cores per node. For the advection equation example, using a single core per L3 cache gave the best scaling due to the small size of the spatial domain and the use of LU for the block solves, giving a maximum of 32 cores per node. For all cases we have only one time step per Ensemble member to maximise the available time parallelism. Unless otherwise stated, we use twice as many cores per time step in the parallel-in-time method as in the serial-in-time method to account for the complex-valued nature of the blocks in the circulant preconditioner.