the Creative Commons Attribution 4.0 License.

SICNetseason V1.0: a transformer-based deep learning model for seasonal Arctic sea ice prediction by incorporating sea ice thickness data

Yibin Ren

Xiaofeng Li

Yunhe Wang

The Arctic sea ice suffers dramatic retreat in summer and fall, which has far-reaching consequences for the global climate and commercial activities. Accurate seasonal sea ice predictions help in understanding climate change and are crucial for planning commercial activities. However, seasonal prediction of the summer sea ice encounters a significant obstacle known as the spring predictability barrier (SPB): predictions made later than the date of melt onset (roughly May) demonstrate good skill in predicting summer sea ice, while predictions made during or earlier than May exhibit considerably lower skill. This study develops a transformer-based deep learning model, SICNetseason (V1.0), to predict the Arctic sea ice concentration on a seasonal scale. Including spring sea ice thickness (SIT) data in the model significantly improves the prediction skill at the SPB point. A 20-year (2000–2019) test demonstrates that the detrended anomaly correlation coefficient (ACC) of September sea ice extent (sea ice concentration >15 %) predicted by our model during May and April is improved by 7.7 % and 10.61 %, respectively, compared to the ACC predicted by the state-of-the-art dynamic model SEAS5 from the European Centre for Medium-Range Weather Forecasts (ECMWF). Compared with the anomaly persistence benchmark, the corresponding improvements are 41.02 % and 36.33 %. Our deep learning model significantly reduces prediction errors in terms of September's sea ice concentration on seasonal scales compared to SEAS5 and the anomaly persistence model (Persistence). The spring SIT data are key in optimizing the predictions around the SPB, contributing to an enhancement in ACC of more than 20 % in September's sea ice extent (SIE) for 4- to 5-month-lead predictions. Our model achieves good generalizability in predicting the September SIE of 2020–2023.

Arctic sea ice plays a significant role in the global climate because it modulates the thermal and dynamic exchanges between the ocean and the atmosphere (Ding et al., 2017; Kapsch et al., 2013; Liu et al., 2021a; Olonscheck et al., 2019). In recent decades, global warming has resulted in a dramatic retreat in Arctic sea ice during the summer and fall (Cao et al., 2017; Shu et al., 2022). This decline triggers a system-positive feedback mechanism that causes the Arctic's surface air temperature to increase 2–4 times faster than the global mean state, known as the Arctic amplification (AA) (England et al., 2021; Pithan and Mauritsen, 2014; Screen et al., 2013; Screen and Simmonds, 2010). AA accelerates sea ice decline, strengthening positive feedback (Jenkins and Dai, 2021; Kumar et al., 2010). If the situation is unchanged, climate models project that the Arctic will become ice-free during summer by the 2050s (Jahn et al., 2024; Kim et al., 2023). The dramatic Arctic sea ice loss has consequences for global climate (Francis and Vavrus, 2012) and commercial activities (Min et al., 2022). For example, it may weaken the stratospheric polar vortex in the winter, increasing extreme cold events in the Northern Hemisphere (Blackport et al., 2019; Cohen et al., 2014). Furthermore, the lower sea ice area during summer extends the navigability of the Arctic Passage to seasonal scales (Cao et al., 2022).

Sea ice predictions are helpful in better understanding global climate change and in supporting human activities in the Arctic (Lindsay et al., 2008; Merryfield et al., 2013). Therefore, sea ice prediction, commonly represented by parameters such as sea ice concentration (SIC) or sea ice extent (SIE, defined as the sum of a grid cell area where SIC > 15 %), has always attracted substantial efforts (Guemas et al., 2016; Stroeve and Notz, 2015). Various prediction systems are proposed, such as numerical (Chevallier et al., 2013; Liang et al., 2020; Mu et al., 2020; Wang et al., 2013; Yang et al., 2019; Zhang et al., 2008, 2022), statistical (Gregory et al., 2020; Wang et al., 2016, 2022; Yuan et al., 2016) and deep learning models (Jun Kim et al., 2020; Ren et al., 2022; Ren and Li, 2023). However, accurate sea ice prediction for Arctic summer remains challenging, particularly at seasonal or even longer scales (Zampieri et al., 2018; Blanchard-Wrigglesworth et al., 2015, 2023). One of the biggest challenges is the spring predictability barrier (SPB): predictions for summer sea ice made before or during the timing of melt onset show significantly lower skill than predictions made after the timing of melt onset (Bonan et al., 2019; Bushuk et al., 2020; Day et al., 2014; Zeng et al., 2023). Studies show that SPB is evident in nearly all of the fully coupled global climate models (GCMs) in Phase 5 of the Coupled Model Intercomparison Project (CMIP5), a crucial initiative providing climate projections to support essential climate research worldwide (Blanchard-Wrigglesworth et al., 2011; Tietsche et al., 2014). Thus, optimizing the predictions around the SPB is an urgent task for accurate summer sea ice predictions.

Experiments based on ensemble simulations reveal that the predictability of summer SIE is limited before spring due to the ice motion and growth in winter (Bushuk et al., 2020). However, the predictability increases rapidly after the melting processes in the spring (Bushuk et al., 2020). The satellite observations show that the spring sea ice thickness (SIT) correlates more with the summer SIE than the spring SIE (Landy et al., 2022). These findings indicate that the spring SIT may be a key factor in optimizing the predictions around the SPB (Bushuk et al., 2020). Recently, researchers assimilated the CryoSat-2 observed SIT data, the first summer SIT observations, into the Geophysical Fluid Dynamics Laboratory (GFDL) ocean–sea ice model and found that the prediction skill of September's SIC is improved significantly when the model is initialized with SIT anomalies in July and August (Zhang et al., 2023). This study further proves that the summer SIT data contribute to September's sea ice predictions. However, as the SPB flag is May for most studies, whether the predictions around the SPB could be optimized by including SIT data remains largely unknown.

Currently, numerical models are widely used in operational sea ice prediction, but they are inflexible and have been limited by the SPB (Msadek et al., 2014; Sigmond et al., 2013). Statistical models are good at long-term prediction but cannot model complex nonlinear relationships and also face SPB challenges. Deep learning models are more flexible than numerical models and more powerful than traditional statistical ones, and they have been successfully used in Earth prediction problems (Li et al., 2021; Reichstein et al., 2019). Researchers have successfully developed deep learning models to predict polar sea ice states from synoptic to sub-seasonal scales (Andersson et al., 2021; Dong et al., 2024; Li et al., 2024; Mu et al., 2023; Palerme et al., 2024; Ren et al., 2022; Ren and Li, 2023; Song et al., 2024; Wu et al., 2022; Yang et al., 2024; Zhu et al., 2023), bringing new potential to address the SPB problem and improve the seasonal prediction skill from a data-driven perspective.

This work develops a seasonal sea ice prediction model named SICNetseason (V1.0) to optimize the predictions around the SPB. SICNetseason is a transformer-based deep learning model with a physically constrained loss function based on SIC morphology. It takes historical SIC and SIT data as predictors and predicts the SIC of the following 6 months. The SIC data are the satellite-observed data from the National Snow and Ice Data Center (NSIDC) (DiGirolamo et al., 2022). The SICNetseason model is trained on data from the period 1979–2019 and is tested with data from the period 2000–2019 by means of a leave-1-year-out strategy. Data from the 4 most recent years, 2020–2023, are employed to verify the model's generalizability. Experiments demonstrate that our model significantly optimizes the SPB, with a higher detrended anomaly correlation coefficient (ACC) compared with the anomaly persistence model (Persistence) and the state-of-the-art dynamic model SEAS5 from the European Centre for Medium-Range Weather Forecasts (ECMWF) (Johnson et al., 2019). Our model significantly reduces the errors of September's SIC and SIE in 4- to 5-month-lead predictions. The spring SIT data are key in optimizing the predictions around the SPB. Our model generalized well in predicting the September SIE of 2020–2023. Finally, we compare our SICNetseason model with an IceNet-inspired U-Net model (Andersson et al., 2021). IceNet is a probability prediction model for Arctic SIE based on convolutional neural network (CNN) units and the U-Net architecture. The IceNet model achieved state-of-the-art performance in predicting the probability of SIE for 6 months (Andersson et al., 2021). Therefore, we construct an IceNet-inspired U-Net model as a comparison model.

2.1 Sea ice concentration data

The experiments use SIC data for 1979–2023. The SIC data are satellite observations obtained from the NSIDC. They are daily observations derived from the Nimbus-7 Scanning Multichannel Microwave Radiometer (SMMR) and the Defense Meteorological Satellite Program (DMSP) Special Sensor Microwave Imager (SSM/I and SSMIS) (DiGirolamo et al., 2022). The SIC data are provided on a North Polar Stereographic projection with a 25 km spatial resolution.

2.2 Sea ice thickness data

The SIT data are the reanalysis SIT from the Pan-Arctic Ice Ocean Modeling and Assimilation System (PIOMAS). PIOMAS is a numerical model with sea ice and ocean components, and it assimilates SIC and sea surface temperature (Zhang and Rothrock, 2003). PIOMAS SIT agrees well with in situ, airborne and satellite measurements (Schweiger et al., 2011). It is made up of daily data with an 18 km spatial resolution. Although PIOMAS generally overestimates thin ice and underestimates thick ice regions, it is widely adopted by Arctic studies (Collow et al., 2015; Kwok et al., 2020; Nakanowatari et al., 2022). The SIC and PIOMAS SIT data are converted to a Northern Polar Stereographic grid with 80 km resolution. The temporal resolution is 1 month.

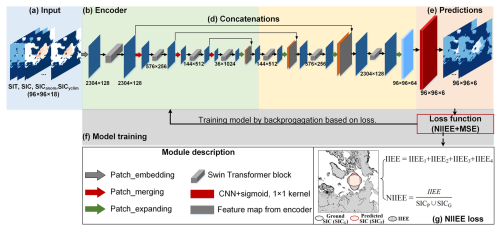

3.1 Framework of SICNetseason

The SICNetseason model is derived from a transformer-based U-Net deep learning model, Swin-UNet (Cao et al., 2023). It accepts a three-dimensional sea ice data sequence and predicts a three-dimensional SIC sequence for the future (Fig. 1). The inputs of the model are monthly mean fields. The predicted target is the SIC of the next 6 months. For example, if we make predictions in May, the 6-month predictions will cover the months from June to November.

Figure 1. Framework of the model SICNetseason. (a) Input consists of SIT of the last 3 months, SIC of the last 6 months, SIC anomaly of the last 3 months and SIC climatology of 6 target months. (b) The encoder comprises four Swin Transformer blocks and three patch-merging operators. (c) The decoder contains three Swin Transformer blocks and four patch-expanding operators. (d) Concatenations connect the feature maps from the encoder and the decoder module. (e) A CNN layer with a sigmoid activation transforms the feature map to the predicted SIC of 6-month leads. (f) Model training procedure. The loss function combines the normalized integrated ice edge error (NIIEE) and the mean square error (MSE).

The input for SICNetseason is a SIC and SIT sequence, composed of SIT of the last 3 months, SIC of the last 6 months, SIC anomaly of the last 3 months and SIC climatology of the 6 target months (Fig. 1a). We determine the length of the input factors by combining domain knowledge and manual-tuning experiments. The primary domain knowledge we considered is the spring–fall re-emergence mechanism. This occurs between pairs of months where the ice edge is in the same position, such as in May and December (Blanchard-Wrigglesworth et al., 2011; Day et al., 2014). The spring sea ice anomaly is positively correlated with fall sea ice anomalies, and there is also a weaker re-emergence between fall sea ice anomalies and anomalies of the following spring (Bushuk et al., 2015). Therefore, we set the initial input length of the SIC, SIT and SIC anomaly to 6 months. We then reduce the input length manually (from 6 to 1 in steps of 1) to find the best-matched length for each factor. The SIC climatology of the target months provides an essential mean state of the predicted SIC. It represents the monthly cycle signal that IceNet has considered (Andersson et al., 2021).
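The input composition described above can be sketched with NumPy. This is a minimal illustration, not the authors' code: the function name `build_input`, the channel order, the month-indexing convention (index 0 is a January) and the toy grid size are all assumptions made for the example.

```python
import numpy as np

def build_input(sic, sit, clim, t, n_sic=6, n_sit=3, n_anom=3, n_lead=6):
    """Stack predictors for a forecast issued at month index t (inclusive).

    sic, sit: arrays of shape (T, H, W) holding monthly means (SIC in 0-1).
    clim:     array of shape (12, H, W), monthly SIC climatology.
    Assumption: month index m falls in calendar month m % 12 (0 = January).
    """
    sit_hist = sit[t - n_sit + 1 : t + 1]            # SIT of the last 3 months
    sic_hist = sic[t - n_sic + 1 : t + 1]            # SIC of the last 6 months
    anom_idx = np.arange(t - n_anom + 1, t + 1)
    anom = sic[anom_idx] - clim[anom_idx % 12]       # SIC anomaly of the last 3 months
    target_idx = np.arange(t + 1, t + 1 + n_lead)
    clim_target = clim[target_idx % 12]              # climatology of the 6 target months
    return np.concatenate([sit_hist, sic_hist, anom, clim_target], axis=0)

# Toy data: 2 years of monthly fields on a 4 x 4 grid (random placeholders).
rng = np.random.default_rng(0)
sic = rng.random((24, 4, 4))
sit = rng.random((24, 4, 4)) * 3.0
clim = sic.reshape(2, 12, 4, 4).mean(axis=0)

# t = 16 is a May under the indexing assumption, so the targets are June-November.
x = build_input(sic, sit, clim, t=16)
print(x.shape)
```

With the lengths given in the text, the stack has 3 + 6 + 3 + 6 = 18 channels.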

The input is fed into the encoder to capture spatiotemporal correlations among SIC–SIT data sequences at different levels to form multi-scale correlation maps. The encoder comprises four Swin Transformer (Liu et al., 2021b) blocks and three patch-merging operators (Fig. 1b). A Swin Transformer block is a transformer unit integrated with shifted windows (Liu et al., 2021b). A transformer operator captures global dependencies through an attention mechanism. The shifted windows help the transformer operator capture local dependencies like the convolution operator. Therefore, local and global spatiotemporal dependencies among sea ice sequences can be captured. The patch-merging operator downscales the captured feature maps like the pooling layer in CNN models. The decoder upscales the feature maps through the patch-expanding operator and Swin Transformer blocks (Fig. 1c). The extracted correlation maps of the encoder and decoder are stacked to form fused spatiotemporal maps (Fig. 1d). A CNN layer transforms the decoded feature maps into an array with the same shape as the target SIC sequence (Fig. 1e). As the range of SIC is 0–1, we apply a sigmoid activation to the last feature map to constrain the predicted values to 0–1.
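To make the downscaling role of patch merging concrete, the following sketch follows the general Swin Transformer design: the four cells of each 2 × 2 neighbourhood are concatenated along the channel axis and then linearly projected. It is an illustration only. The function name, the random weights and the omission of layer normalization are assumptions, and it is not taken from the SICNetseason code.

```python
import numpy as np

def patch_merging(x, weight):
    """Halve the spatial size of a feature map and double its channels.

    x:      (H, W, C) feature map with even H and W.
    weight: (4 * C, 2 * C) linear projection (random here; learned in practice).
    """
    # The four interleaved sub-grids of every 2 x 2 neighbourhood.
    x0 = x[0::2, 0::2, :]
    x1 = x[1::2, 0::2, :]
    x2 = x[0::2, 1::2, :]
    x3 = x[1::2, 1::2, :]
    merged = np.concatenate([x0, x1, x2, x3], axis=-1)  # (H/2, W/2, 4C)
    return merged @ weight                               # (H/2, W/2, 2C)

rng = np.random.default_rng(0)
features = rng.random((8, 8, 4))
w = rng.standard_normal((16, 8))
out = patch_merging(features, w)
print(out.shape)
```

Unlike a pooling layer, no values are discarded before the projection, so the layer can learn which of the merged cells matter.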

During the training procedure, the loss is calculated between the predicted and ground-truth values. Then, the model's parameters are trained by minimizing the loss value iteratively. We will explain the loss function in the following section.

3.2 Integrated ice-edge-constrained loss function

For a deep learning model, the loss function is crucial during the training procedure as it guides the optimization of the model's parameters. Here, the loss is the difference between the predicted values and the ground-truth values from NSIDC. Generally, the mean square error (MSE) is a fundamental loss function for prediction tasks. However, the MSE measures the mean state for all predicted values and cannot reflect the spatial differences between two-dimensional SIC patterns. To address this issue, we propose a normalized integrated ice edge error (NIIEE) loss function that considers the spatial distribution of SIC to constrain the model's optimization (Fig. 1f).

The NIIEE loss is based on the integrated ice edge error (IIEE), an established metric for sea ice predictions. The IIEE represents the regions where the prediction model overestimates or underestimates the ice (Goessling et al., 2016). It measures the spatial similarity between two two-dimensional SIC patterns. Originally, the IIEE binarizes the SIC at a 15 % threshold to describe the SIE. For the SIC prediction here, we do not perform binarization. Let PSIC and GSIC represent the predicted and ground-truth SIC; the IIEE is calculated with Eq. (1). We normalize IIEE to the range of 0–1 to form the NIIEE loss with Eq. (2). If the NIIEE loss is 0 then the predicted SIC and the ground-truth SIC match spatially and numerically. The fundamental MSE loss has been demonstrated to be adequate for prediction tasks. If the number of all predicted values is N, the MSE is calculated with Eq. (3). We combine the NIIEE with MSE to be the loss function of SICNetseason. A constant scale factor, 0.01, is multiplied by NIIEE to balance its range with that of MSE; see Eq. (4).
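Since Eqs. (1)–(4) are not reproduced in this text, the following NumPy sketch shows one plausible reading of the loss and should not be taken as the authors' exact formulation. The assumptions are: the non-binarized IIEE is the sum of overestimated and underestimated SIC, the normalization divides by the sum of the cell-wise maximum of the two fields (which keeps the ratio within 0–1), and the two terms are combined by addition. Only the 0.01 scale factor comes from the text.

```python
import numpy as np

def iiee(pred, truth):
    """Non-binarized IIEE: overestimated plus underestimated SIC."""
    over = np.maximum(pred - truth, 0.0).sum()
    under = np.maximum(truth - pred, 0.0).sum()
    return over + under

def niiee(pred, truth, eps=1e-8):
    """IIEE normalized to 0-1. The denominator is an assumption of this sketch."""
    union = np.maximum(pred, truth).sum()
    return iiee(pred, truth) / (union + eps)

def mse(pred, truth):
    return np.mean((pred - truth) ** 2)

def combined_loss(pred, truth, scale=0.01):
    """MSE plus scaled NIIEE; additive combination is assumed."""
    return mse(pred, truth) + scale * niiee(pred, truth)

truth = np.array([[1.0, 0.8], [0.2, 0.0]])
pred = np.array([[0.9, 0.8], [0.4, 0.0]])
print(niiee(pred, truth), combined_loss(pred, truth))
```

A perfect prediction gives a loss of 0, and the NIIEE term approaches 1 when the predicted and observed ice do not overlap at all.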

4.1 Model training

The model is trained on a workstation with an NVIDIA Tesla V100 32 GB card. The training and test samples are constructed by step-by-step sliding. The testing period is 2000–2019. The leave-1-year-out strategy is adopted to train and evaluate our SICNetseason model. For example, if the testing year is 2000, the training set is from 1979 to 1999 and from 2001 to 2019. The leave-1-year-out strategy is widely adopted by statistical models to maximize the sample volume while obtaining a multi-year evaluation (Wang et al., 2022; Yuan et al., 2016). The validation set is 20 % of the training set. We set the batch size to 8 and the initial learning rate to 0.0001. We employ an early-stopping strategy to halt the training procedure when the validation loss no longer decreases. For each testing year, the model is trained three times to reduce random errors. The testing set is run on the three trained models, and the mean values are adopted as the final predictions. Data from the 4 most recent years, 2020–2023, are employed to verify the model's generalizability. Data from these 4 years do not participate in the training stage. They are fed into the trained models obtained by the leave-1-year-out strategy to obtain the predictions. The predictions are the mean values of the 20 trained models.
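The leave-1-year-out splitting can be sketched as a small generator. The function name and default arguments are illustrative choices, and the sketch covers only the year-level split, not the 20 % validation split, whose sampling method is not specified in the text.

```python
def leave_one_year_out(first_year=1979, last_year=2019, test_years=range(2000, 2020)):
    """Yield (test_year, train_years) pairs for a leave-1-year-out evaluation."""
    for test_year in test_years:
        # Every year in the record except the held-out testing year.
        train_years = [y for y in range(first_year, last_year + 1) if y != test_year]
        yield test_year, train_years

folds = list(leave_one_year_out())
test_year, train_years = folds[0]
print(test_year, train_years[0], train_years[-1], len(train_years))
```

Each of the 20 folds holds out 1 testing year and trains on the remaining 40 years of the 1979–2019 record.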

4.2 Evaluation metrics

The mean absolute error (MAE), binary accuracy (BACC) and detrended ACC are the evaluation metrics. The MAE is for SIC, and the other two metrics are for SIE. To calculate the metrics accurately, we use the maximum observed monthly SIE since 1979 to mask the predictions. Let p_i and g_i denote the predicted and true values of the ith grid cell, and let N be the number of validation grid cells. The MAE values are calculated with Eq. (5). The BACC at time t is 1 minus the ratio of the IIEE to the area of the activated grid cell region (the maximum observed SIE during 1979–2019) at t; see Eq. (6). The detrended ACC of SIE is the anomaly correlation coefficient of two detrended SIE series. Each SIE series has 20 elements from 2000 to 2019.
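The three metrics can be sketched as follows. This is a hedged illustration rather than the paper's Eqs. (5) and (6): the function names are hypothetical, a uniform cell area is used in the toy example, and the detrending is assumed to remove a least-squares linear trend, which the text does not state explicitly.

```python
import numpy as np

def mae(pred, truth, mask):
    """Mean absolute SIC error over the masked (active) grid cells."""
    return np.abs(pred - truth)[mask].mean()

def bacc(pred, truth, mask, cell_area=1.0, threshold=0.15):
    """Binary accuracy: 1 minus IIEE area divided by the active-region area."""
    p_ice = (pred > threshold) & mask
    g_ice = (truth > threshold) & mask
    iiee_area = (cell_area * (p_ice ^ g_ice)).sum()  # over- plus underestimated area
    active_area = (cell_area * mask).sum()
    return 1.0 - iiee_area / active_area

def detrended_acc(pred_sie, obs_sie):
    """Correlation of two SIE series after removing a linear trend (assumed)."""
    pred_sie = np.asarray(pred_sie, dtype=float)
    obs_sie = np.asarray(obs_sie, dtype=float)
    years = np.arange(len(obs_sie))

    def detrend(series):
        slope, intercept = np.polyfit(years, series, 1)
        return series - (slope * years + intercept)

    return np.corrcoef(detrend(pred_sie), detrend(obs_sie))[0, 1]

truth = np.array([[1.0, 1.0], [0.0, 0.0]])
pred = np.array([[1.0, 0.0], [0.0, 0.0]])
mask = np.ones((2, 2), dtype=bool)
print(mae(pred, truth, mask), bacc(pred, truth, mask))
```

In the toy case one of four active cells is misclassified, so the BACC is 0.75. Detrending matters because both series share the long-term decline in SIE, which would otherwise inflate the correlation.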

4.3 Model skill in seasonal predictions

We compare SICNetseason with Persistence and SEAS5 to validate our model's ability to optimize the predictions around the SPB. Persistence is the anomaly persistence model. It assumes that the anomaly is constant in time and estimates the target SIC values by adding the current anomaly to the climate mean state at the target time; it is widely adopted as a benchmark for sea ice prediction (Wang et al., 2016). SEAS5 is a seasonal forecast system from the ECMWF that shows excellent sea ice prediction skill (Johnson et al., 2019). A BACC value of 100 % indicates that the predicted SIE matches the observed SIE by 100 % spatially. The metrics are calculated for 20 testing years, 2000–2019, in a leave-1-year-out training–testing strategy. As the SPB affects predictions of the summer target months, we focus on the 4 summer months of June to September.
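The anomaly persistence benchmark described above reduces to a single line of arithmetic. In the sketch below the function name is hypothetical, and clipping the result to the physical 0–1 range is an assumption that the text does not mention.

```python
import numpy as np

def anomaly_persistence(sic_now, clim_now, clim_target):
    """Carry the current SIC anomaly forward onto the target-month climatology."""
    anomaly = sic_now - clim_now
    # Clipping keeps SIC within 0-1; this step is an assumption of the sketch.
    return np.clip(clim_target + anomaly, 0.0, 1.0)

sic_now = np.array([0.6, 0.6])      # current observed SIC in two grid cells
clim_now = np.array([0.5, 0.5])     # climatology of the current month
clim_target = np.array([0.3, 0.95]) # climatology of the target month
forecast = anomaly_persistence(sic_now, clim_now, clim_target)
print(forecast)
```

The first cell keeps its +0.1 anomaly on top of the target climatology, while the second cell would exceed 1 and is clipped.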

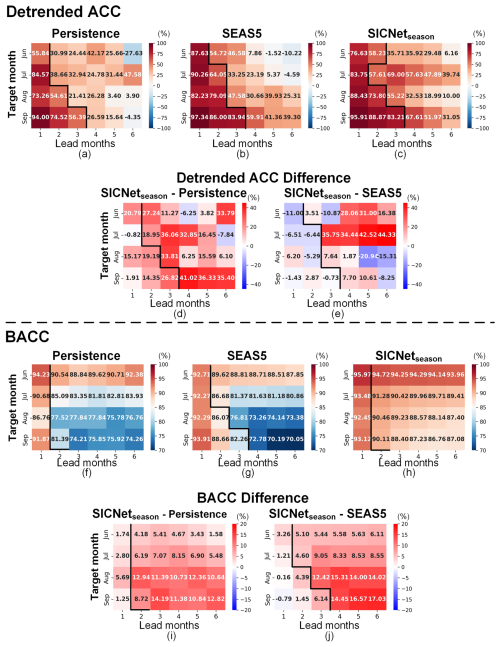

Figure 2 shows the detrended ACC and BACC of the target months, June–September, based on 6-month-lead predictions. As shown in Fig. 2a and b, the predictions of Persistence and SEAS5 show an apparent SPB: the detrended ACC drops sharply when the predictions are made earlier than May, with a maximum ACC gap between 2 adjacent lead months marked by black lines. Taking September as an example, the detrended ACC is 56.39 % for Persistence when the prediction is made in June (3-month lead). Then, it decreases to 26.59 % in May's prediction (4-month lead); see Fig. 2a. For SEAS5, the ACC of June's prediction is 83.94 %, which then drops to 59.91 % for May's prediction, forming a 24.03 % ACC gap; see Fig. 2b. Although the SICNetseason prediction also shows an SPB feature (see the black line in Fig. 2c), the ACC of May's prediction is improved to 67.61 %, and the ACC gap is reduced to 15.6 %. Furthermore, the ACC differences between SICNetseason and each of Persistence and SEAS5 are calculated. Compared with Persistence, SICNetseason improves the ACC in most predictions; see Fig. 2d. The ACC improvements along the SPB flag are more than 30 % on average (the lead months right on the black line in Fig. 2d). Compared with SEAS5, SICNetseason also improves the prediction skill at the SPB. When the target months are June and July, SICNetseason shows a much higher prediction skill than SEAS5 in 4- to 6-month-lead predictions; see Fig. 2e. For the target month of September, SICNetseason improves the ACC by 7.70 % or 10.61 % compared to SEAS5 when the prediction is made in May or April (4- or 5-month lead in Fig. 2e). For the target month of August or September, SICNetseason shows lower ACCs than SEAS5 when the prediction is made during or before March (5- or 6-month lead for August or September). However, for the predictions made adjacent to the SPB flag line, SICNetseason achieves larger ACCs than SEAS5 (values right along the black line in Fig. 2e). Therefore, SICNetseason optimizes the SPBs significantly compared to the well-known numerical model.
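The SPB marker used in this analysis, the maximum ACC decrease between 2 adjacent lead months, can be located with a few lines of NumPy. In the sketch below only the 3- and 4-month-lead values (83.94 % and 59.91 %, SEAS5 for September) come from the text. The remaining entries are hypothetical placeholders added so that the series has 6 leads, and they are not results from the paper.

```python
import numpy as np

def spb_gap(acc_by_lead):
    """Return (lead_before, lead_after, gap) for the largest ACC drop.

    acc_by_lead[k] is the ACC at a lead of k + 1 months.
    """
    acc = np.asarray(acc_by_lead, dtype=float)
    drops = acc[:-1] - acc[1:]          # decrease between adjacent lead months
    k = int(np.argmax(drops))
    return k + 1, k + 2, float(drops[k])

# Leads 1-6; values at leads 1, 2, 5 and 6 are hypothetical placeholders.
seas5_sep = [90.0, 88.0, 83.94, 59.91, 55.0, 50.0]
lead_before, lead_after, gap = spb_gap(seas5_sep)
print(lead_before, lead_after, round(gap, 2))
```

For this series the largest drop lies between the 3- and 4-month leads, matching the 24.03 % gap quoted for SEAS5.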

The BACC of SEAS5 also shows a similar SPB characteristic to the ACC. A sharp BACC drop occurred when the prediction was made during and before May; see the black line in Fig. 2g. The maximum BACC gaps of Persistence and SICNetseason occurred in the second lead month. However, the maximum BACC gap of SICNetseason is about 2 %, much lower than the 10 % gap of Persistence and SEAS5. Compared with Persistence and SEAS5, SICNetseason improves the BACC by more than 10 % in predicting the SIE of August and September (3 to 6-month-lead; see Fig. 2i and j).

Figure 2. Detrended ACC of SIE and BACC of SIE, as well as the differences between Persistence, SEAS5 and SICNetseason from June to September, averaged for 2000–2019. (a)–(c) Detrended ACC of three models. Each value is calculated from two detrended SIE series (predicted and observed). (d)–(e) Detrended ACC differences between SICNetseason and Persistence and SEAS5. (f)–(h) BACC of three models. Each BACC is a mean value of 20 testing years. (i)–(j) BACC differences between SICNetseason and Persistence and SEAS5. The black line indicates the SPB: a maximum decrease between 2 adjacent lead months. Red signifies a high value or an improvement in ACC and/or BACC, and blue signifies a decrease.

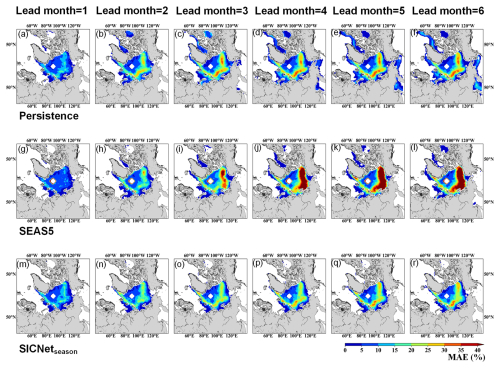

4.4 Performance in predicting SIC of September

As September's sea ice draws wider attention than other months, we calculate the MAE of the SIC of September predicted by three models. Figure 3 shows the spatial MAE of Persistence, SEAS5 and SICNetseason based on 6 lead months. The MAE in the three models is not very different for the first 2 lead months. When the lead month duration is 1, the MAE of SEAS5 is slightly better than that of Persistence and SICNetseason, indicating that the SEAS5 model performs well in monthly predictions. This result may be due to the good atmospheric initialization in SEAS5, which beat many machine learning and dynamical models in sub-seasonal-scale SIC prediction (Bushuk et al., 2024). However, when the lead month duration is longer than 3, the SEAS5 MAEs are much more than 45 % in the Pacific sector, mainly containing the Beaufort Sea, the Chukchi Sea, the East Siberian Sea and the Laptev Sea; see Fig. 3j–l. SICNetseason reduces the MAEs to 20 %–30 % for most regions in the Pacific Arctic; see Fig. 3q–r. Compared with Persistence, SICNetseason also reduces MAEs by 5 %–10 % in the four mentioned local seas; see Fig. 3d–f. Therefore, SICNetseason significantly reduces the SIC errors of September in seasonal-scale predictions (3- to 6-month lead).

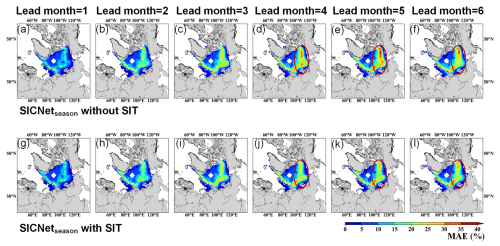

4.5 SIT contributions to seasonal predictions

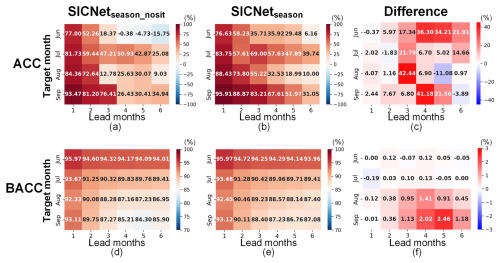

We further conduct a comparison experiment to validate the role of SIT data in seasonal predictions based on SICNetseason. The model without SIT as an input is named SICNetseason_nosit. The other settings for SICNetseason_nosit are the same as those for SICNetseason. The detrended ACC and BACC are shown in Fig. 4.

Without the SIT data as input, the model's prediction skill drops noticeably in 3- to 6-month-lead predictions; see Fig. 4a. For the target month of September, the detrended ACC is 76.41 % when the prediction is made in June (3-month lead). Then, the ACC drops to 26.43 % for May's prediction (4-month lead). By including SIT data as input, the ACC of May's prediction is improved by 41.18 % in the model SICNetseason; see Fig. 4c. For the target month of August, the ACC improvement for May's prediction (3-month lead) as a result of including SIT data is 42.44 %. Therefore, the SIT data are important for improving the model's prediction skill with regard to the SPB.

For the target months of August and September, the BACCs of SICNetseason_nosit show an apparent drop in 3- to 6-month-lead predictions. By including SIT data as the model's input, the BACC improvement is 0.95 % and 2.02 % for the target months of August and September for May's predictions; see Fig. 4f. Then, we calculate the MAE of the target month of September; see Fig. 5. The MAEs of the first 2 lead months are similar for the two models. When the lead month duration is larger than 3, the MAEs of SICNetseason_nosit in the Beaufort Sea, the East Siberian Sea and the Laptev Sea are 30 %–45 %, as shown by the red circles in Fig. 5d–f. By including SIT data, the MAEs in the three mentioned regions are reduced to 20 %–35 % by SICNetseason, as shown with the red circles in Fig. 5j–l. Therefore, including SIT data reduces the errors of September's SIC by more than 10 % in seasonal-scale predictions.

Figure 4. Detrended ACC of SICNetseason_nosit (a) and SICNetseason (b). (c) ACC difference obtained with SICNetseason minus SICNetseason_nosit. BACC of SICNetseason_nosit (d) and SICNetseason (e). (f) BACC difference as in (c). Red signifies a high value or an improvement in ACC and/or BACC, and blue signifies a decrease.

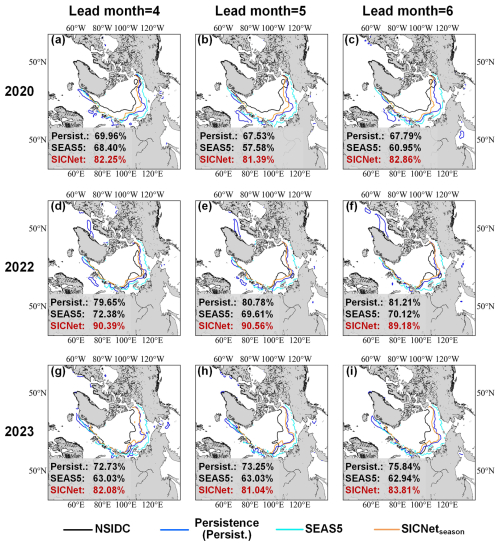

4.6 Generalizability in predicting the SIEs of 2020–2023

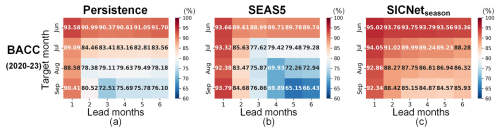

To verify our model's generalizability in predicting the SIEs of recent years, we employ the 20 trained models to predict the SIE of the 4 most recent years, 2020–2023. The 20 models are those trained for the testing years 2000–2019, as mentioned in the earlier sections. The data from the period 2020–2023 are “blind” for the models. The mean values of the 20 models' predictions are the final predictions. As the temporal span of 4 years is too short for calculating ACC, we use the BACC as the metric (Fig. 6). Compared with Persistence and SEAS5, SICNetseason achieves higher BACCs in predicting the SIEs of August and September. For the target month of September, the BACC of SICNetseason is 10 % higher than those of the other two models in 3- to 6-month-lead predictions.

We plot the observed and predicted September SIEs of 2020, 2022 and 2023 in Fig. 7. The September SIEs in 2020 and 2023 (4.0 × 10⁶ and 4.37 × 10⁶ km²) are the second and sixth lowest recorded in the Arctic since 1979. The SIE in September 2022 (4.90 × 10⁶ km²) is large relative to other years since 2015. We focus on the seasonal-scale predictions with 4- to 6-month leads. Our SICNetseason model shows obvious advantages over SEAS5 and Persistence. For predictions made during or before May, with lead months of 4 to 6, the BACCs of SICNetseason are much higher than those of Persistence and SEAS5; see Fig. 7. For May's prediction, our model achieves BACCs of 82.25 %, 90.39 % and 82.08 % in 2020, 2022 and 2023, more than 10 % higher than those of Persistence and SEAS5; see Fig. 7a, d and g. Therefore, the SICNetseason model achieves good generalizability in predicting the SIEs of 2020–2023.

Figure 6. BACC of 2020–2023. (a) Persistence, (b) SEAS5 and (c) SICNetseason. Each value is a mean value of the 4 testing years. The horizontal axis represents the 6 lead months, and the vertical axis represents the target months of June to September. Red signifies a high value or an improvement in ACC and/or BACC, and blue signifies a decrease.

4.7 Comparison with the representative deep learning model

We compare SICNetseason against a representative deep learning sea ice prediction model, the U-Net (IceNet-inspired) model. IceNet is a seasonal sea ice prediction model that performs state-of-the-art SIE probability prediction (Andersson et al., 2021). It is a CNN-based U-Net model for classification tasks, and it outputs the probability of three classes: open water (SIC ≤ 15 %), marginal ice (15 % < SIC < 80 %) and full ice (SIC ≥ 80 %). In contrast, our SICNetseason outputs continuous SIC values in the range 0 %–100 %. The IceNet inputs consist of 50 monthly mean variables, including SIC, 11 climate variables, statistical SIC forecasts and metadata. The original IceNet model has some unique designs in terms of inputs and training strategy. As we focus on the differences in model structures, we construct a U-Net (IceNet-inspired) model for comparison.

We set the inputs (including SIT data) of U-Net (IceNet-inspired) to the same ones as SICNetseason. The loss function is also set as the NIIEE together with the MSE. We set the output layer of U-Net (IceNet-inspired) as a sigmoid activation function to output continuous values of 0 %–100 %. We also change the number of CNN filters to make the number of training parameters in U-Net (IceNet-inspired) equal to that in SICNetseason, about 140 million. The training and testing settings of U-Net (IceNet-inspired) are the same as those of SICNetseason. U-Net (IceNet-inspired) is trained using the same leave-1-year-out strategy as SICNetseason. For example, if the testing year is 2019, the training set is made up of data from the period 1979–2018, and the testing data are from 2019. Then, the testing data are moved to 2018; the remaining data (1979–2017, 2019) make up the training set. For each training–testing pair, the model is trained three times to eliminate randomness, and the final prediction for the testing data is the mean value of the three models.

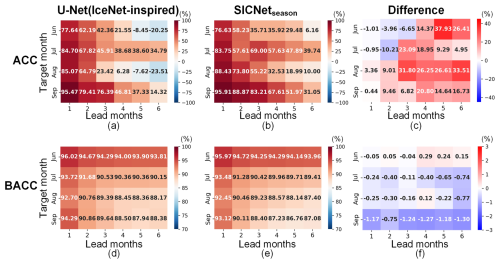

Figure 8. Detrended ACC of U-Net (IceNet-inspired) (a) and SICNetseason (b). (c) ACC difference obtained by SICNetseason minus U-Net (IceNet-inspired). BACC of U-Net (IceNet-inspired) (d) and SICNetseason (e). (f) BACC difference as in (c). Red signifies a high value or an improvement in ACC and/or BACC, and blue signifies a decrease.

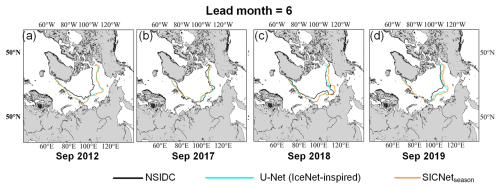

Figure 9. The predicted September SIEs of U-Net (IceNet-inspired) and SICNetseason at a 6-month lead: (a) 2012, (b) 2017, (c) 2018 and (d) 2019.

Figure 8 shows the detrended ACC and BACC, as well as the differences between the two models. Compared with the U-Net (IceNet-inspired) model, our SICNetseason model significantly improves the ACC for most predictions; see Fig. 8c. For the target months of August and September, the SPB feature is obvious in U-Net (IceNet-inspired): the maximum ACC gap is about 40 % and 30 %, respectively, for predictions made in May and June; see Fig. 8a. Our SICNetseason model narrows the ACC gap with an improvement of 31.8 % and 20.8 % for May's predictions; see Fig. 8c. The ACC improvements are also larger than 15 % for predictions made before May. Therefore, compared with the representative deep learning model U-Net (IceNet-inspired), our model achieves more skillful seasonal predictions by optimizing the predictions around the SPB.

Unlike the ACC values, the BACC values of U-Net (IceNet-inspired) are higher than those of SICNetseason for most predictions; see Fig. 8f. This result implies that U-Net (IceNet-inspired) depends more on SIE trends than SICNetseason. This difference can be attributed to the distinct fundamental units employed by the two models. The U-Net (IceNet-inspired) model is a CNN-based model, and the weight-sharing mechanism of convolutional kernels forces the model to capture the most “common” local dependencies spatially. Though representative, these common local dependencies tend to yield smoother model outputs. SICNetseason is a transformer-based model. The attention mechanism of the transformer can capture global dependencies without weight-sharing. As a result, “personalized” global dependencies are extracted, and the output is not smooth like the output of a CNN-based model. The common local dependencies have more apparent trend features than the personalized global dependencies. Figure 9 shows the September SIEs predicted by U-Net (IceNet-inspired) and SICNetseason at a 6-month lead. The SIEs of U-Net (IceNet-inspired) are smoother than those of SICNetseason. For 2012 and 2017, the SIE locations of the two models are very similar. For the other 2 years, the SIEs of U-Net (IceNet-inspired) match the observed SIEs better than those of SICNetseason. However, the SIEs of U-Net (IceNet-inspired) are over-smoothed and fail to capture some anomalous features, such as the SIE in September 2018; see Fig. 9c.

Therefore, our transformer-based SICNetseason is more skillful than the representative CNN-based model U-Net (IceNet-inspired) in improving the predictions around the SPB. SICNetseason exhibits a lower dependency on SIE trends and less smooth results than the CNN-based model.

This study develops a deep learning model, SICNetseason, to predict the Arctic SIC on a seasonal scale. The model is derived from a Swin-UNet architecture. It takes the historical SIC, SIT and SIC climatology of target months as inputs and predicts the SIC of the next 6 months. A spatially constrained loss function, NIIEE, is employed to train the model by considering the sea ice distribution. We employ a 20-year (2000–2019) testing set to validate the model's performance. The summer season, June to September, is the target period. The detrended ACC, BACC and MAE are used as metrics. Comparison experiments with Persistence and seasonal predictions of SEAS5 are made to validate our model's performance. In particular, an ablation experiment is carried out to investigate the role of SIT data in optimizing the predictions around the SPB. A generalizability experiment with data from the last 4 years, 2020–2023, is carried out, in which the seasonal predictions of September SIEs are analyzed. Finally, we discuss the advantages and disadvantages of our model and the typical CNN-based model, U-Net (IceNet-inspired). Given the mentioned efforts, our study draws the following conclusions.

First, our deep learning model, SICNetseason, is skillful in predicting the Arctic sea ice seasonally. Compared with the dynamic model SEAS5, SICNetseason significantly mitigates the SPB. The detrended ACC of September SIE predicted by SICNetseason in May and April is improved by 7.7 % and 10.61 %, respectively, over the ACC predicted by SEAS5. Compared with the anomaly persistence benchmark, the mentioned improvement is 41.02 % and 36.33 %, respectively. Our deep learning model significantly reduces the prediction errors of September's SIC on seasonal scales compared to SEAS5 and Persistence, with a 20 %–30 % reduction measured by MAE.
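For readers unfamiliar with the benchmark and error metric named above, the following minimal sketch shows one common formulation of an anomaly persistence forecast together with the MAE. It assumes that the anomaly of the initialization month, relative to that month's climatology, is carried forward unchanged and added to the climatology of the target month. The exact formulation used for Persistence in this study may differ, and the function names are hypothetical.

```python
def anomaly_persistence(init_value, init_clim, target_clim):
    """Carry the initial-month anomaly forward onto the target-month climatology."""
    return target_clim + (init_value - init_clim)


def mean_absolute_error(predicted, observed):
    """Mean of the absolute differences between paired values."""
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(observed)


# Illustrative (made-up) SIE values in 10^6 km^2.
# May SIE is 0.5 below its climatology, so September is forecast 0.5 below its own.
september_forecast = anomaly_persistence(init_value=12.0, init_clim=12.5, target_clim=5.0)

# MAE over three made-up prediction/observation pairs: (0 + 2 + 3) / 3.
mae = mean_absolute_error([1.0, 2.0, 3.0], [1.0, 4.0, 0.0])
```

For gridded SIC rather than a scalar SIE, the same anomaly would be applied cell by cell, and the result would typically need clipping to the valid 0–1 range.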

Second, the spring SIT data are key in optimizing the predictions around the SPB, contributing to a more than 20 % ACC enhancement in September's SIE for 4- to 5-month-lead predictions. By including SIT data, the MAEs in the Beaufort Sea, the East Siberian Sea and the Laptev Sea are reduced by more than 10 % compared with those without SIT data.

Third, our model achieves good generalizability in predicting the September SIEs of 2020–2023. When predicting the September SIE of 2020 and 2023 (the second and sixth lowest on record) in May, SICNetseason achieved a BACC of 82.25 % and 82.08 %, about 12 % and 10 % higher than Persistence and SEAS5.

Fourth, our transformer-based SICNetseason is more skillful than the CNN-based U-Net (IceNet-inspired) model in seasonal sea ice predictions. Our SICNetseason model narrows the ACC gap with an improvement of 31.8 % and 20.8 % for May's predictions compared to U-Net (IceNet-inspired). SICNetseason exhibits a lower dependency on SIE trends and less smooth results than the CNN-based model. This is due to the attention mechanism of the transformer operator extracting personalized global dependencies, while the CNN operator captures the most common local dependencies globally. The common local dependencies smooth the map and depend more on the trend than personalized ones.

The code, the exact input–output data and the saved well-trained weights of the developed model SICNetseason are available at https://doi.org/10.5281/zenodo.14561423 (renyibin-iocas, 2024).

All the authors designed the experiments and carried them out. YR developed and evaluated the model. XL designed the experiments and revised the paper. YW analyzed the experimental results.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

The authors acknowledge the valuable comments from reviewers and editors.

This work was supported by the National Science Foundation of China (grant nos. 42206202 and 42221005); Laoshan Laboratory Innovation Project (grant no. LSKJ202202302); and, in part, by the China–Portugal Xinghai Belt and Road (grant no. 2022YFE0204600).

This paper was edited by Christopher Horvat and reviewed by two anonymous referees.

Andersson, T. R., Hosking, J. S., Pérez-Ortiz, M., Paige, B., Elliott, A., Russell, C., Law, S., Jones, D. C., Wilkinson, J., Phillips, T., Byrne, J., Tietsche, S., Sarojini, B. B., Blanchard-Wrigglesworth, E., Aksenov, Y., Downie, R., and Shuckburgh, E.: Seasonal Arctic sea ice forecasting with probabilistic deep learning, Nat. Commun., 12, 1, https://doi.org/10.1038/s41467-021-25257-4, 2021.

Blackport, R., Screen, J. A., van der Wiel, K., and Bintanja, R.: Minimal influence of reduced Arctic sea ice on coincident cold winters in mid-latitudes, Nat. Clim. Chang., 9, 697–704, https://doi.org/10.1038/s41558-019-0551-4, 2019.

Blanchard-Wrigglesworth, E., Armour, K. C., Bitz, C. M., and Deweaver, E.: Persistence and inherent predictability of arctic sea ice in a GCM ensemble and observations, J. Climate, 24, 231–250, https://doi.org/10.1175/2010JCLI3775.1, 2011.

Blanchard-Wrigglesworth, E., Cullather, R. I., Wang, W., Zhang, J., and Bitz, C. M.: Model forecast skill and sensitivity to initial conditions in the seasonal Sea Ice Outlook, Geophys. Res. Lett., 42, 8042–8048, https://doi.org/10.1002/2015GL065860, 2015.

Blanchard-Wrigglesworth, E., Bushuk, M., Massonnet, F., Hamilton, L. C., Bitz, C. M., Meier, W. N., and Bhatt, U. S.: Forecast Skill of the Arctic Sea Ice Outlook 2008–2022, Geophys. Res. Lett., 50, 6, https://doi.org/10.1029/2022GL102531, 2023.

Bonan, D. B., Bushuk, M., and Winton, M.: A spring barrier for regional predictions of summer Arctic sea ice, Geophys. Res. Lett., 46, 5937–5947, https://doi.org/10.1029/2019GL082947, 2019.

Bushuk, M., Giannakis, D., and Majda, A. J.: Arctic Sea Ice Reemergence: The Role of Large-Scale Oceanic and Atmospheric Variability, J. Climate, 28, 5477–5509, https://doi.org/10.1175/JCLI-D-14-00354.1, 2015.

Bushuk, M., Winton, M., Bonan, D. B., Blanchard-Wrigglesworth, E., and Delworth, T. L.: A mechanism for the Arctic sea ice spring predictability barrier, Geophys. Res. Lett., 47, 13, https://doi.org/10.1029/2020GL088335, 2020.

Bushuk, M., Zhang, Y., Winton, M., Hurlin, B., Delworth, T., Lu, F., Jia, L., Zhang, L., Cooke, W., Harrison, M., Johnson, N. C., Kapnick, S., Mchugh, C., Murakami, H., Rosati, A., Tseng, K.-C., Wittenberg, A. T., Yang, X., and Zeng, A. F.: Mechanisms of Regional Arctic Sea Ice Predictability in Two Dynamical Seasonal Forecast Systems, J. Climate, 35, 4207–2431, https://doi.org/10.1175/JCLI-D-21, 2022.

Bushuk, M., Ali, S., Bailey, D. A., Bao, Q., Batté, L., Bhatt, U. S., Blanchard-Wrigglesworth, E., Blockley, E., Cawley, G., Chi, J., Counillon, F., Coulombe, P. G., Cullather, R. I., Diebold, F. X., Dirkson, A., Exarchou, E., Göbel, M., Gregory, W., Guemas, V., Hamilton, L., He, B., Horvath, S., Ionita, M., Kay, J. E., Kim, E., Kimura, N., Kondrashov, D., Labe, Z. M., Lee, W. S., Lee, Y. J., Li, C., Li, X., Lin, Y., Liu, Y., Maslowski, W., Massonnet, F., Meier, W. N., Merryfield, W. J., Myint, H., Acosta Navarro, J. C., Petty, A., Qiao, F., Schröder, D., Schweiger, A., Shu, Q., Sigmond, M., Steele, M., Stroeve, J., Sun, N., Tietsche, S., Tsamados, M., Wang, K., Wang, J., Wang, W., Wang, Y., Wang, Y., Williams, J., Yang, Q., Yuan, X., Zhang, J., and Zhang, Y.: Predicting September Arctic Sea Ice: A Multimodel Seasonal Skill Comparison, B. Am. Meteorol. Soc., 105, E1170–E1203, https://doi.org/10.1175/BAMS-D-23-0163.1, 2024.

Cao, H., Wang, Y., Chen, J., Jiang, D., Zhang, X., Tian, Q., and Wang, M.: Swin-Unet: Unet-Like Pure Transformer for Medical Image Segmentation, in: Computer Vision – ECCV 2022 Workshops, 205–218, 2023.

Cao, Y., Liang, S., Chen, X., He, T., Wang, D., and Cheng, X.: Enhanced wintertime greenhouse effect reinforcing Arctic amplification and initial sea-ice melting, Sci. Rep., 7, 8462, https://doi.org/10.1038/s41598-017-08545-2, 2017.

Cao, Y., Liang, S., Sun, L., Liu, J., Cheng, X., Wang, D., Chen, Y., Yu, M., and Feng, K.: Trans-Arctic shipping routes expanding faster than the model projections, Global Environ. Change, 73, 102488, https://doi.org/10.1016/j.gloenvcha.2022.102488, 2022.

Chevallier, M., Mélia, D. S. Y., Voldoire, A., Déqué, M., and Garric, G.: Seasonal forecasts of the pan-arctic sea ice extent using a GCM-based seasonal prediction system, J. Climate, 26, 6092–6104, https://doi.org/10.1175/JCLI-D-12-00612.1, 2013.

Cohen, J., Screen, J. A., Furtado, J. C., Barlow, M., Whittleston, D., Coumou, D., Francis, J., Dethloff, K., Entekhabi, D., Overland, J., and Jones, J.: Recent Arctic amplification and extreme mid-latitude weather, Nat. Geosci., 7, 627–637, https://doi.org/10.1038/ngeo2234, 2014.

Collow, T. W., Wang, W., Kumar, A., and Zhang, J.: Improving arctic sea ice prediction using PIOMAS initial sea ice thickness in a coupled ocean-atmosphere model, Mon. Weather Rev., 143, 4618–4630, https://doi.org/10.1175/MWR-D-15-0097.1, 2015.

Day, J. J., Tietsche, S., and Hawkins, E.: Pan-arctic and regional sea ice predictability: Initialization month dependence, J. Climate, 27, 4371–4390, https://doi.org/10.1175/JCLI-D-13-00614.1, 2014.

DiGirolamo, N. E., Parkinson, C. L., Cavalieri, D. J., Gloersen, P., and Zwally, H. J.: Sea ice concentrations from Nimbus-7 SMMR and DMSP SSM/I-SSMIS passive microwave data, Boulder, Colorado USA, NASA National Snow and Ice Data Center Distributed Active Archive Center, https://doi.org/10.5067/MPYG15WAA4WX, 2022.

Ding, Q., Schweiger, A., L'Heureux, M., Battisti, D. S., Po-Chedley, S., Johnson, N. C., Blanchard-Wrigglesworth, E., Harnos, K., Zhang, Q., Eastman, R., and Steig, E. J.: Influence of high-latitude atmospheric circulation changes on summertime Arctic sea ice, Nat. Clim. Chang., 7, 289–295, https://doi.org/10.1038/nclimate3241, 2017.

Dong, X., Yang, Q., Nie, Y., Zampieri, L., Wang, J., Liu, J., and Chen, D.: Antarctic sea ice prediction with a convolutional long short-term memory network, Ocean Model., 190, 102386, https://doi.org/10.1016/j.ocemod.2024.102386, 2024.

England, M. R., Eisenman, I., Lutsko, N. J., and Wagner, T. J. W.: The Recent Emergence of Arctic Amplification, Geophys. Res. Lett., 48, 15, https://doi.org/10.1029/2021GL094086, 2021.

Francis, J. A. and Vavrus, S. J.: Evidence linking Arctic amplification to extreme weather in mid-latitudes, Geophys. Res. Lett., 39, 6, https://doi.org/10.1029/2012GL051000, 2012.

Goessling, H. F., Tietsche, S., Day, J. J., Hawkins, E., and Jung, T.: Predictability of the Arctic sea ice edge, Geophys. Res. Lett., 43, 1642–1650, https://doi.org/10.1002/2015GL067232, 2016.

Gregory, W., Tsamados, M., Stroeve, J., and Sollich, P.: Regional september sea ice forecasting with complex networks and gaussian processes, Weather Forecast., 35, 793–806, https://doi.org/10.1175/WAF-D-19-0107.1, 2020.

Guemas, V., Blanchard-Wrigglesworth, E., Chevallier, M., Day, J. J., Déqué, M., Doblas-Reyes, F. J., Fučkar, N. S., Germe, A., Hawkins, E., Keeley, S., Koenigk, T., Salas y Mélia, D., and Tietsche, S.: A review on Arctic sea-ice predictability and prediction on seasonal to decadal time-scales, Q. J. Roy. Meteorol. Soc., 142, 546–561, https://doi.org/10.1002/qj.2401, 2016.

Jahn, A., Holland, M. M., and Kay, J. E.: Projections of an ice-free Arctic Ocean, Nat. Rev. Earth Environ., 5, 164–176, https://doi.org/10.1038/s43017-023-00515-9, 2024.

Jenkins, M. and Dai, A.: The Impact of Sea-Ice Loss on Arctic Climate Feedbacks and Their Role for Arctic Amplification, Geophys. Res. Lett., 48, 15, https://doi.org/10.1029/2021GL094599, 2021.

Johnson, S. J., Stockdale, T. N., Ferranti, L., Balmaseda, M. A., Molteni, F., Magnusson, L., Tietsche, S., Decremer, D., Weisheimer, A., Balsamo, G., Keeley, S. P. E., Mogensen, K., Zuo, H., and Monge-Sanz, B. M.: SEAS5: the new ECMWF seasonal forecast system, Geosci. Model Dev., 12, 1087–1117, https://doi.org/10.5194/gmd-12-1087-2019, 2019.

Kim, Y. J., Kim, H.-C., Han, D., Lee, S., and Im, J.: Prediction of monthly Arctic sea ice concentrations using satellite and reanalysis data based on convolutional neural networks, The Cryosphere, 14, 1083–1104, https://doi.org/10.5194/tc-14-1083-2020, 2020.

Kapsch, M. L., Graversen, R. G., and Tjernström, M.: Springtime atmospheric energy transport and the control of Arctic summer sea-ice extent, Nat. Clim. Chang., 3, 744–748, https://doi.org/10.1038/nclimate1884, 2013.

Kim, Y. H., Min, S. K., Gillett, N. P., Notz, D., and Malinina, E.: Observationally-constrained projections of an ice-free Arctic even under a low emission scenario, Nat. Commun., 14, 1, https://doi.org/10.1038/s41467-023-38511-8, 2023.

Kumar, A., Perlwitz, J., Eischeid, J., Quan, X., Xu, T., Zhang, T., Hoerling, M., Jha, B., and Wang, W.: Contribution of sea ice loss to Arctic amplification, Geophys. Res. Lett., 37, 21, https://doi.org/10.1029/2010GL045022, 2010.

Kwok, R., Cunningham, G. F., Kacimi, S., Webster, M. A., Kurtz, N. T., and Petty, A. A.: Decay of the Snow Cover Over Arctic Sea Ice From ICESat-2 Acquisitions During Summer Melt in 2019, Geophys. Res. Lett., 47, 12, https://doi.org/10.1029/2020GL088209, 2020.

Landy, J. C., Dawson, G. J., Tsamados, M., Bushuk, M., Stroeve, J. C., Howell, S. E. L., Krumpen, T., Babb, D. G., Komarov, A. S., Heorton, H. D. B. S., Belter, H. J., and Aksenov, Y.: A year-round satellite sea-ice thickness record from CryoSat-2, Nature, 609, 517–522, https://doi.org/10.1038/s41586-022-05058-5, 2022.

Li, X., Liu, B., Zheng, G., Ren, Y., Zhang, S., Liu, Y., Gao, L., Liu, Y., Zhang, B., and Wang, F.: Deep-learning-based information mining from ocean remote-sensing imagery, Natl. Sci. Rev., 7, 10, https://doi.org/10.1093/NSR/NWAA047, 2021.

Li, Y., Qiu, Y., Jia, G., Yu, S., Zhang, Y., Huang, L., and Lepparanta, M.: An Explainable Deep Learning Model for Daily Sea Ice Concentration Forecast, IEEE T. Geosci. Remote Sens., 62, 1–17, https://doi.org/10.1109/TGRS.2024.3386930, 2024.

Liang, X., Zhao, F., Li, C., Zhang, L., and Li, B.: Evaluation of ArcIOPS sea ice forecasting products during the ninth chinare-arctic in summer 2018, Adv. Polar Sci., 31, 14–25, https://doi.org/10.13679/j.advps.2019.0019, 2020.

Lindsay, R. W., Zhang, J., Schweiger, A. J., and Steele, M. A.: Seasonal predictions of ice extent in the Arctic Ocean, J. Geophys. Res.-Oceans, 113, C2, https://doi.org/10.1029/2007JC004259, 2008.

Liu, Z., Risi, C., Codron, F., He, X., Poulsen, C. J., Wei, Z., Chen, D., Li, S., and Bowen, G. J.: Acceleration of western Arctic sea ice loss linked to the Pacific North American pattern, Nat. Commun., 12, 12, https://doi.org/10.1038/s41467-021-21830-z, 2021a.

Liu, Z., Lin, Y., Cao, Y., Hu, H., Wei, Y., Zhang, Z., Lin, S., and Guo, B.: Swin Transformer: Hierarchical Vision Transformer using Shifted Windows, in: 2021 IEEE/CVF International Conference on Computer Vision (ICCV), 9992–10002, https://doi.org/10.1109/ICCV48922.2021.00986, 2021b.

Merryfield, W. J., Lee, W. S., Wang, W., Chen, M., and Kumar, A.: Multi-system seasonal predictions of Arctic sea ice, Geophys. Res. Lett., 40, 1551–1556, https://doi.org/10.1002/grl.50317, 2013.

Min, C., Yang, Q., Chen, D., Yang, Y., Zhou, X., Shu, Q., and Liu, J.: The Emerging Arctic Shipping Corridors, Geophys. Res. Lett., 49, 10, https://doi.org/10.1029/2022GL099157, 2022.

Msadek, R., Vecchi, G. A., Winton, M., and Gudgel, R. G.: Importance of initial conditions in seasonal predictions of Arctic sea ice extent, Geophys. Res. Lett., 41, 5208–5215, https://doi.org/10.1002/2014GL060799, 2014.

Mu, B., Luo, X., Yuan, S., and Liang, X.: IceTFT v1.0.0: interpretable long-term prediction of Arctic sea ice extent with deep learning, Geosci. Model Dev., 16, 4677–4697, https://doi.org/10.5194/gmd-16-4677-2023, 2023.

Mu, L., Nerger, L., Tang, Q., Loza, S. N., Sidorenko, D., Wang, Q., Semmler, T., Zampieri, L., Losch, M., and Goessling, H. F.: Toward a data assimilation system for seamless sea ice prediction based on the AWI climate model, J. Adv. Model Earth Syst., 12, 4, https://doi.org/10.1029/2019MS001937, 2020.

Nakanowatari, T., Inoue, J., Zhang, J., Watanabe, E., and Kuroda, H.: A New Norm for Seasonal Sea Ice Advance Predictability in the Chukchi Sea: Rising Influence of Ocean Heat Advection, J. Climate, 35, 2723–2740, https://doi.org/10.1175/JCLI-D-21-0425.1, 2022.

Olonscheck, D., Mauritsen, T., and Notz, D.: Arctic sea-ice variability is primarily driven by atmospheric temperature fluctuations, Nat. Geosci., 12, 430–434, https://doi.org/10.1038/s41561-019-0363-1, 2019.

Palerme, C., Lavergne, T., Rusin, J., Melsom, A., Brajard, J., Kvanum, A. F., Macdonald Sørensen, A., Bertino, L., and Müller, M.: Improving short-term sea ice concentration forecasts using deep learning, The Cryosphere, 18, 2161–2176, https://doi.org/10.5194/tc-18-2161-2024, 2024.

Pithan, F. and Mauritsen, T.: Arctic amplification dominated by temperature feedbacks in contemporary climate models, Nat. Geosci., 7, 181–184, https://doi.org/10.1038/ngeo2071, 2014.

Reichstein, M., Camps-Valls, G., Stevens, B., Jung, M., Denzler, J., Carvalhais, N., and Prabhat: Deep learning and process understanding for data-driven Earth system science, Nature, 566, 195–204, https://doi.org/10.1038/s41586-019-0912-1, 2019.

Ren, Y. and Li, X.: Predicting the Daily Sea Ice Concentration on a Subseasonal Scale of the Pan-Arctic During the Melting Season by a Deep Learning Model, IEEE T. Geosci. Remote Sens., 61, 1–15, https://doi.org/10.1109/TGRS.2023.3279089, 2023.

Ren, Y., Li, X., and Zhang, W.: A data-driven deep learning model for weekly sea ice concentration prediction of the Pan-Arctic during the melting season, IEEE T. Geosci. Remote Sens., 60, 1–19, https://doi.org/10.1109/TGRS.2022.3177600, 2022.

renyibin-iocas: renyibin-iocas/SICNetseason: SICNetseason code and input/output data, Zenodo, https://doi.org/10.5281/zenodo.14561423, 2024.

Schweiger, A., Lindsay, R., Zhang, J., Steele, M., Stern, H., and Kwok, R.: Uncertainty in modeled Arctic sea ice volume, J. Geophys. Res.-Oceans, 116, C8, https://doi.org/10.1029/2011JC007084, 2011.

Screen, J. A. and Simmonds, I.: The central role of diminishing sea ice in recent Arctic temperature amplification, Nature, 464, 1334–1337, 2010.

Screen, J. A., Simmonds, I., Deser, C., and Tomas, R.: The atmospheric response to three decades of observed arctic sea ice loss, J. Climate, 26, 1230–1248, https://doi.org/10.1175/JCLI-D-12-00063.1, 2013.

Shu, Q., Wang, Q., Arthun, M., Wang, S., Song, Z., Zhang, M., and Qiao, F.: Arctic Ocean Amplification in a warming climate in CMIP6 models, Sci. Adv., 8, eabn9755, https://doi.org/10.1126/sciadv.abn9755, 2022.

Sigmond, M., Fyfe, J. C., Flato, G. M., Kharin, V. V., and Merryfield, W. J.: Seasonal forecast skill of Arctic sea ice area in a dynamical forecast system, Geophys. Res. Lett., 40, 529–534, https://doi.org/10.1002/grl.50129, 2013.

Song, C., Zhu, J., and Li, X.: Assessments of Data-Driven Deep Learning Models on One-Month Predictions of Pan-Arctic Sea Ice Thickness, Adv. Atmos. Sci., 41, 1379–1390, https://doi.org/10.1007/s00376-023-3259-3, 2024.

Stroeve, J. and Notz, D.: Insights on past and future sea-ice evolution from combining observations and models, Glob. Planet. Change, 135, 119–132, https://doi.org/10.1016/j.gloplacha.2015.10.011, 2015.

Tietsche, S., Day, J. J., Guemas, V., Hurlin, W. J., Keeley, S. P. E., Matei, D., Msadek, R., Collins, M., and Hawkins, E.: Seasonal to interannual Arctic sea ice predictability in current global climate models, Geophys. Res. Lett., 41, 1035–1043, https://doi.org/10.1002/2013GL058755, 2014.

Wang, L., Yuan, X., Ting, M., and Li, C.: Predicting summer arctic sea ice concentration intraseasonal variability using a vector autoregressive model, J. Climate, 29, 1529–1543, https://doi.org/10.1175/JCLI-D-15-0313.1, 2016.

Wang, W., Chen, M., and Kumar, A.: Seasonal prediction of arctic sea ice extent from a coupled dynamical forecast system, Mon. Weather Rev., 141, 1375–1394, https://doi.org/10.1175/MWR-D-12-00057.1, 2013.

Wang, Y., Yuan, X., Bi, H., Bushuk, M., Liang, Y., Li, C., and Huang, H.: Reassessing seasonal sea ice predictability of the Pacific-Arctic sector using a Markov model, The Cryosphere, 16, 1141–1156, https://doi.org/10.5194/tc-16-1141-2022, 2022.

Wu, A., Che, T., Li, X., and Zhu, X.: Routeview: an intelligent route planning system for ships sailing through Arctic ice zones based on big Earth data, Int. J. Digit Earth, 15, 1588–1613, https://doi.org/10.1080/17538947.2022.2126016, 2022.

Yang, Q., Mu, L., Wu, X., Liu, J., Zheng, F., Zhang, J., and Li, C.: Improving Arctic sea ice seasonal outlook by ensemble prediction using an ice-ocean model, Atmos. Res., 227, 14–23, https://doi.org/10.1016/j.atmosres.2019.04.021, 2019.

Yang, Z., Liu, J., Song, M., Hu, Y., Yang, Q., and Fan, K.: Extended seasonal prediction of Antarctic sea ice using ANTSIC-UNet, EGUsphere [preprint], https://doi.org/10.5194/egusphere-2024-1001, 2024.

Yuan, X., Chen, D., Li, C., Wang, L., and Wang, W.: Arctic sea ice seasonal prediction by a linear markov model, J. Climate, 29, 8151–8173, https://doi.org/10.1175/JCLI-D-15-0858.s1, 2016.

Zampieri, L., Goessling, H. F., and Jung, T.: Bright Prospects for Arctic Sea Ice Prediction on Subseasonal Time Scales, Geophys. Res. Lett., 45, 9731–9738, https://doi.org/10.1029/2018GL079394, 2018.

Zeng, J., Yang, Q., Li, X., Yuan, X., Bushuk, M., and Chen, D.: Reducing the Spring Barrier in Predicting Summer Arctic Sea Ice Concentration, Geophys. Res. Lett., 50, 8, https://doi.org/10.1029/2022GL102115, 2023.

Zhang, J. and Rothrock, D. A.: Modeling Global Sea Ice with a Thickness and Enthalpy Distribution Model in Generalized Curvilinear Coordinates, Mon. Weather Rev., 131, 845–861, https://doi.org/10.1175/1520-0493(2003)131<0845:MGSIWA>2.0.CO;2, 2003.

Zhang, J., Steele, M., Lindsay, R., Schweiger, A., and Morison, J.: Ensemble 1-Year predictions of Arctic sea ice for the spring and summer of 2008, Geophys. Res. Lett., 35, 8, https://doi.org/10.1029/2008GL033244, 2008.

Zhang, Y.-F., Bushuk, M., Winton, M., Hurlin, B., Delworth, T., Harrison, M., Jia, L., Lu, F., Rosati, A., and Yang, X.: Subseasonal-to-Seasonal Arctic Sea Ice Forecast Skill Improvement from Sea Ice Concentration Assimilation, J. Climate, 35, 4233–4252, https://doi.org/10.1175/JCLI-D-21-0548.1, 2022.

Zhang, Y. F., Bushuk, M., Winton, M., Hurlin, B., Gregory, W., Landy, J., and Jia, L.: Improvements in September Arctic Sea Ice Predictions Via Assimilation of Summer CryoSat-2 Sea Ice Thickness Observations, Geophys. Res. Lett., 50, 24, https://doi.org/10.1029/2023GL105672, 2023.

Zhu, Y., Qin, M., Dai, P., Wu, S., Fu, Z., Chen, Z., Zhang, L., Wang, Y., and Du, Z.: Deep Learning-Based Seasonal Forecast of Sea Ice Considering Atmospheric Conditions, J. Geophys. Res.-Atmos., 128, 24, https://doi.org/10.1029/2023JD039521, 2023.