the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Great Lakes wave forecast system on high-resolution unstructured meshes

Ali Abdolali

Saeideh Banihashemi

Jose Henrique Alves

Aron Roland

Tyler J. Hesser

Mary Anderson Bryant

Jane McKee Smith

Wind-wave forecasts play a crucial role in the North American Great Lakes region towards ensuring the safety of communities, enhancement of the economy, and protection of property. Modeling wind waves in closed and relatively shallow basins with complex bathymetry like the Great Lakes is a challenge that is successfully tackled in part by using variable-resolution triangular unstructured meshes with no limits in terms of computational scalability and maximum resolution in the coastal areas. In this paper, we discuss recent advances in developing unstructured mesh capabilities as part of the spectral wave model WAVEWATCH III, in the context of National Oceanic and Atmospheric Administration (NOAA) operational requirements such as model robustness, efficiency, and accuracy. We revisit the history of developments leading to the transition from rectilinear to curvilinear grids and finally to an unstructured mesh version of NOAA's operational Great Lakes wave modeling system (GLWUv2.0). The article describes the development of the operational GLWUv2.0, from mesh design and scalability analysis to validation and verification for hindcast of storm cases and re-forecast using 4 months of retrospective simulations. In closed Great Lakes basins untouched by swell from distant sources, the atmospheric model's direct impact on wave behavior stands apart, showing reduced forecast accuracy over time, while maintaining consistent precision in accurately wind-hindcasted stormy conditions.

- Article

(17486 KB) - Full-text XML

- BibTeX

- EndNote

The North American Great Lakes play a crucial role in the social, economic, and environmental fabric of the United States and Canada, supporting the livelihoods and well-being of millions of people. The region is home to approximately 8 % of the US population (eight US states: Illinois, Indiana, Michigan, Minnesota, New York, Ohio, Pennsylvania, and Wisconsin) and 32 % of the Canadian population (two Canadian provinces: Ontario and Quebec). Local communities rely on the Great Lakes for various purposes, including drinking water, industrial and agricultural activities, transportation, and recreation. The region further supports a range of economic sectors, including manufacturing, tourism, fishing, and shipping. Great Lakes waterways enable the transportation of goods and raw materials, supporting trade and commerce within the region and beyond.

Accurate wind-wave forecasts play a crucial role in ensuring the safety of Great Lakes communities, protecting coastal properties, and facilitating smooth maritime operations. As described in Alves et al. (2014, 2023), the history of forecasting in the Great Lakes region dates back to the establishment of NOAA's Great Lakes Environmental Research Laboratory (GLERL) in 1974, which marked the beginning of systematic marine forecasting in this area. In collaboration with researchers from the Ohio State University, GLERL pioneered the development of the first wave forecasting system based on a parametric, first-generation wave model, specifically tailored for the Great Lakes during the early 1980s (Schwab et al., 1984). As advancements in wind-wave modeling progressed and third-generation models emerged in the late 1980s, GLERL, in partnership with NOAA's Environmental Modeling Center (EMC), successfully co-developed a next-generation forecast system. This system was integrated into NOAA's operational wave model framework, becoming an integral part of the suite of operational environmental forecast models at the National Centers for Environmental Prediction (NCEP).

Upon the release of the third-generation wave model WAVEWATCH III (The WAVEWATCH III®, 1999) at EMC, GLERL and NCEP initiated the development of the next generation of wave forecast system for the Great Lakes waves (GLW) in 2004, incorporating key requirements from forecasters and science operations officers (SOOs) from regional weather forecast offices (WFOs). About 2 years later in 2006, the first WW3-based GLW system was implemented operationally with 3-hourly intervals. The model grid was a single rectilinear grid with a resolution of ∼4 km, with coverage of all five major Great Lakes basins (Erie–Saint Clair, Ontario, Huron, Michigan, and Superior). Details are provided in Alves et al. (2014).

The next upgrade featuring a 2.5 km Lambert-conformal spatial grid took place in 2015, enhancing the forecast due to a better representation of the basin-wide geographical features, particularly during rapidly changing conditions (Alves and Chawla, 2015). This upgrade shed light on the importance of grid resolution and the need for the implementation of more accurate physics, especially in coastal environments (Alves et al., 2023). However, the existing system was not sufficiently computationally efficient to resolve coastal areas with a uniform resolution grid. In the meantime, the core WW3 model was equipped with unstructured mesh capabilities and more advanced nearshore physical parameterizations including, but not limited to, depth breaking, triad interaction, and reflection (Abdolali et al., 2020). The use of a single triangular mesh from coarse offshore to fine nearshore resolution instead of multiple inter-nested grids with different resolutions showed a substantial improvement in the workflow design, maintenance, computational efficiency, and accuracy.

In 2017, the GLW system was upgraded to an unstructured mesh (GLWUv1.0), with a 2.5 km resolution in deep water down to 250 m in the nearshore area. The added benefit as a result of better representation of wave transformation in the coastal areas was recognized by the forecasters in the regions within this implementation (Alves et al., 2023). GLWUv1.0 used the explicit numerical solver and the same parallelization algorithm as the structured and curvilinear grids, mainly due to operational limitations. The approach had several limitations including restrictions on time stepping due to resolution and grid size, as well as a maximum number of CPU threads dependent on the number of spectral components. With the advent of a new parallelization algorithm and an implicit numerical solver, the model was made more efficient and accurate on very large and fine-resolution meshes. The contrast lies in how domain decomposition (DD) surpasses card deck (CD) in scalability with a large number of CPUs. CD has a restricted maximum count of CPUs compared to the unlimited count in DD. Moreover, when dealing with finer-resolution meshes, the implicit solver in DD enables operation with larger CFL numbers, whereas the explicit solver in CD is limited by CFL <1, resulting in slower model performance (Abdolali et al., 2020).

With the availability of appropriate resources, a recent GLWU implementation enabled taking advantage of such features, with enhanced benefits that will be discussed below, providing a unique opportunity to improve the representation of nearshore wave transformation, incorporating water level and current effects and resolving complicated geometries in shallow water regions (Moghimi et al., 2020). In the past few decades, the noticeable increase in frequency and destructiveness of coastal storms has led to a growing recognition of the need to couple wave models with other Earth system models. This necessity extends beyond just public awareness and warning systems, and its benefits encompass applications such as hindcasts for climatologies, risk analyses for the insurance industry, and relevant data for tourism, investment sectors, and numerous other stakeholders. The coupling of atmospheric, ocean wave, surge, and hydrological models on high-resolution numerical grids has improved model accuracy by better representing nearshore and inland geometries and physics (Moghimi et al., 2020). This breakthrough has expanded the limits of WW3 to be dynamically coupled with storm surge, hydrological, ice, and atmospheric models and provides the opportunity to investigate nearshore wave climate (Bakhtyar et al., 2020). Moreover, the highly efficient WW3 model allowed researchers to evaluate uncertainty via ensemble modeling (Abdolali et al., 2021).

This paper focuses on the latest operational implementation of the Great Lakes wave model GLWUv2.0, but also discusses new features added to the wave model that benefit Earth system coupling and ensemble forecasting. The sections are arranged as follows. Section 2 provides an overview of the GLWUv2.0 implementation in operation in May 2023, including mesh design, forcing characteristics, the end-to-end workflow design based on the explicit solver in WW3, retrospective simulations for 2 summer and 2 winter months, and validation analysis. In Sect. 3, the performance of the unstructured WW3 was analyzed for 10 hindcasted windy conditions over the Great Lakes, highlighting the scalability of the domain decomposition algorithm and the effectiveness of the implicit solver on high-resolution meshes. The contrasts, shown in Sects. 2 and 3, manifest the lag between operation and the core model development front and define the path forward in the future of operational forecast. Concluding remarks and requirements for future validation studies as well as support of better wave forecasts, in particular in the winter season and coastal areas, are provided in Sect. 4.

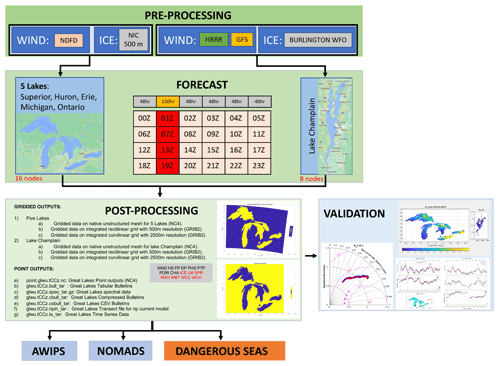

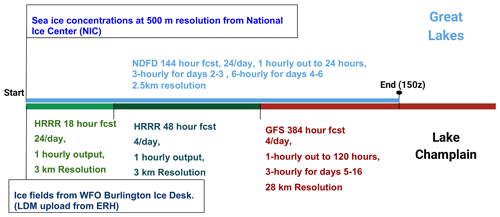

The Great Lakes Wave Model (GLWUv2.0) currently provides guidance to 12 weather forecast offices (WFOs) on six domains, including the five Great Lakes region plus Lake Champlain. The system is forced by NOAA operational wind sources, as described in Sect. 2.2. The Great Lakes wave forecast operates on an hourly basis, incorporating both short and long cycles, as depicted in Fig. 1. There are 20 short cycles, each running for 48 forecast hours. Additionally, there are four long cycles, which extend for 150 forecast hours and are scheduled at 01:00, 07:00, 13:00, and 19:00 Z. The core model, WAVEWATCH III, takes advantage of key features from the most recent advancements in the WW3v7 model package outlined above, with some restrictions due to NOAA operational requirements (NCO, 2022), such as end-to-end runtime, output formats, and an operational readiness test (ORT). These restrictions mandate the use of the explicit solver for the GLWUv2.0 implementation without incorporating water level and current velocity.

The GLWUv2.0 model resolves the wave energy density spectrum with frequencies between 0.05 and 1.055 Hz, divided into 32 frequency bands with a geometric increment factor of 1.1, and 36 directions with 10∘ increments. In addition, wave physics parameterizations include the Ardhuin et al. (2010) wind input and wave dissipation source term (ST4), the generalized multiple discrete (GMD) interaction approximation of Tolman (2014) for nonlinear wave–wave interaction (NL3, replacing the discrete interaction approximation – DIA – of Hasselmann et al., 1985), JONSWAP bottom friction (BT1; Hasselmann et al., 1973), and depth-limited breaking based on the Battjes–Janssen formulation (DB1) (Battjes and Janssen, 1978). In order to take into account ice, a simple ice blocking parameterization (IC0) with the discontinuous method is used, where a critical ice concentration in which the scheme switches between free propagation and blocking is . Due to NOAA operational requirements, a parallelization scheme known as “card deck” and the explicit numerical scheme are utilized, which limits performance due to restrictions on the number of allowed parallel threads, dependent on the discrete wave spectrum resolution, and on time stepping. In view of the latter, the global, spatial, spectral, and minimum source term WW3 time steps are set to 180, 60, 90, and 10 s, respectively.

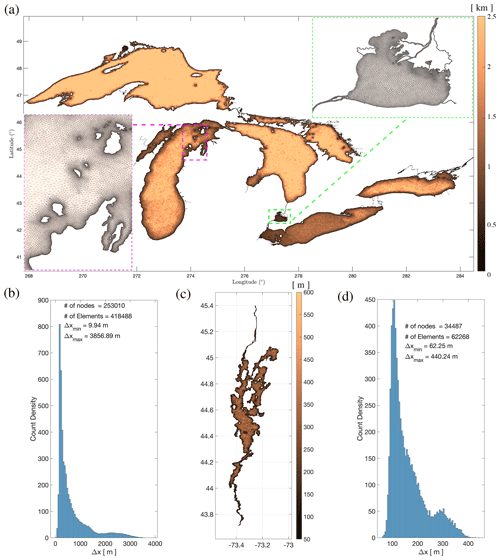

Figure 2Great Lake Wave Unstructured v2.0 mesh resolutions: (a, b) five Great Lakes and (c, d) Lake Champlain.

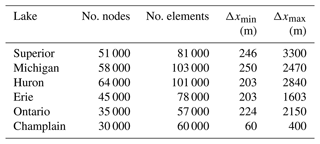

2.1 Unstructured mesh

In the GLWUv2.0, the G0 unstructured mesh is utilized for the five lakes with ∼253 000 nodes and ∼418 000 elements. A new feature in the latest implementation is the addition of a Lake Champlain mesh containing ∼30 000 nodes and ∼62 000 triangular elements, ranging in size from approximately 60 m in dynamic coastal regions to approximately 400 m in less dynamic offshore areas. The Great Lakes bathymetry collection compiles geological and geophysical data from five lake floors, including bathymetry and detailed maps sourced from over a century of soundings by various organizations like the US Army Corps of Engineers, NOAA, and the Canadian Hydrographic Service. NCEI/NOAA compiled unified topobathy data for Lake Erie and Saint Claire (NGDC, 1999a), Lake Huron (NGDC, 1999b), Lake Michigan (NGDC, 1996), Lake Ontario (NGDC, 1999c), and Lake Superior and provided public access to these data (https://www.ngdc.noaa.gov/mgg/greatlakes/, last access: 20 January 2021). The topobathymetric grid for the generation of the Lake Champlain mesh was obtained by refining the mesh developed by Environment and Climate Change Canada (ECCC), integrating data from 15 sources for a detailed two-dimensional hydrodynamic model. Covering from South Bay to the Poultney River in Whitehall, NY, and extending northward to Fryers Rapids near St. Jean, QC, it intricately maps 14 significant river inputs to the lake. This grid encompasses surrounding floodplains to simulate various inundation scenarios across the spectrum of water level fluctuations experienced within the region (Titze et al., 2023). Mesh resolution and corresponding histograms, highlighting the distribution of element size and significance of coastal elements in comparison to deepwater elements, are shown in Fig. 2, and the summary is provided in Table 1. Mesh systems were tested to ensure they resolved key morphological features in all domains while remaining computationally feasible in a real-time operational environment.

2.2 Forcing fields

The operational GLWUv2.0 forcing includes temporally variable wind speed and a stationary ice concentration, defined at the initialization time step and kept constant for the entire cycle. A combination of various sources is used for wind due to operational limitations such as availability and spatial and temporal coverage. A flowchart of the forcing fields is shown in Fig. 3.

The wind for lakes Superior, Michigan, Huron, Erie, and Ontario are extracted from the National Digital Forecast Database (NDFD) with a spatial resolution of 2.5 km and variable temporal resolution up to 150 h forecast (1-hourly out to 24 h, 3-hourly for days 2–3, 6-hourly for days 4–6). The NDFD is a combination of data from regional NWS weather forecast offices (WFOs) and the National Centers for Environmental Prediction (NCEP) models (Glahn and Ruth, 2003). The corresponding resolution of the sea ice concentration analysis for these five domains is 500 m (as opposed to 5 km in v1.0), obtained from the National Ice Center (NIC). The NIC data for the Great Lakes are created from daily ice analysis. The files contain information on ice conditions that are separated into total ice concentration, ice types with their respective concentrations, and ice floe size.

The Lake Champlain portion of the system is forced with atmospheric wind data from NCEP's High-Resolution Rapid Refresh (HRRR) with 3 km resolution for the first 2 d and switched to Global Forecast System (GFS) with 0.25∘ (∼ 27 km) resolution up to 150 h. The HRRR is a NOAA real-time 3 km resolution, hourly updated, cloud-resolving, convection-allowing atmospheric model, initialized by 3 km grids with 3 km radar assimilation. Radar data are assimilated in the HRRR every 15 min over a 1 h period, adding further detail to that provided by the hourly data assimilation from the 13 km radar-enhanced Rapid Refresh (Dowell et al., 2022). The Global Forecast System (GFSv16) from the National Centers for Environmental Prediction (NCEP) serves as a fundamental component in NCEP's operational numerical guidance suite for global climate modeling (NOAA, 2021). It offers both deterministic and probabilistic global forecasts, extending up to 16 d, and plays a key role by supplying initial and boundary conditions for NCEP's regional, ocean, and wave prediction models. The Lake Champlain ice coverage data are taken from the National Weather Service weather forecast office in Burlington, VT.

Figure 3Great Lake Wave Unstructured v2.0 atmospheric and ice forcing hierarchy. For wind, the National Digital Forecast Database (NDFD) and a combination of High-Resolution Rapid Refresh (HRRR) and Global Forecast System (GFSv16) are used for the five lakes and Lake Champlain, respectively. The ice is taken from the National Ice Center (NIC) and WFO Burlington help desk for the five lakes and Lake Champlain, respectively.

2.3 Workflow

The main characteristics of an end-to-end workflow in an operational environment are its robustness, predictability of potential unavailability of inputs with replaceable alternative options, and full automation, with no human intervention and following a strict runtime scheduling to ensure on-time delivery of guidance products. The three main steps of the GLWUv2.0 workflow, as shown in Fig. 1, are pre-processing, forecast, and post-processing jobs for two parallel jobs, one for the five Great Lakes and the other for Lake Champlain. The workflow runs every hour for 48 h in the short cycles and 150 h in long cycles four times a day (01:00, 07:00, 13:00, and 19:00 Z). Within the Great Lakes domain, the runtime is 24 min for the short cycle and 50 min for the long cycle. For Lake Champlain, the process completes in 16 min in the short cycle and 26 min in the long cycle.

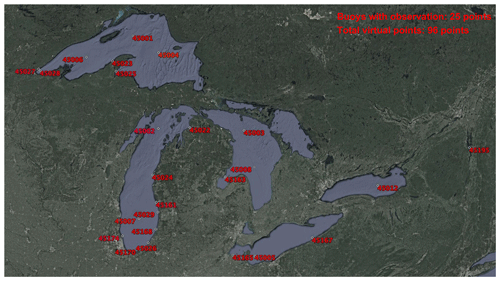

Figure 4The location of meteorological and wave observations, obtained from the National Data Buoy Center (NDBC) locations for model validation (© Google Earth, https://earth.google.com/web/, last access: 20 January 2021).

2.3.1 Pre-processing job

This serial job retrieves wind and ice inputs from other operational models like HRRR and GFS or analysis from NDFD, NIC, and the Burlington weather forecast office. In the case that the forcing for the current cycle is not available, a look-back option fills the forcing from the previous forecast cycles. If the ice field is not provided, the previous forecast cycle ice field is used. It is worth noting that the ice field is constant during the entire forecast job and initiated at the beginning of simulation. Within this step, the grib2 files are cropped for GLWU coverage, interpolated on the computational grid, and saved in NetCDF format. The WW3 pre-processing executable (ww3_prnc) converts the inputs into binary format. The runtimes for pre-processing step are 3 and 4 min for short and long cycles for the five lakes and 8 and 13 min for short and long cycles for Lake Champlain, respectively.

2.3.2 Forecast job

This parallel job performs two simulations for the five lakes (on 16 nodes, each node with 64 cores) and Lake Champlain (on eight nodes) simultaneously. Note that the reason for the separation into two domains is to load-balance between the two domains in which Lake Champlain requires more iterations (due to CFL criteria). The runtime for the five lakes is 9 and 24 min for short and long cycles, while Lake Champlain takes 5 and 10 min, respectively. Binary point and gridded outputs are stored during the simulation.

2.3.3 Post-processing job

This serial job generates NetCDF and grib2 outputs on 500 m and 2.5 km resolutions, while point outputs are generated in NetCDF format (spectral outputs and time series of wave statistics). These data are transferred to the Advanced Weather Interactive Processing System (AWIPS) and NOAA Operational Model Archive and Distribution System (NOMAD) for use by WFOs and the public. The runtime of this step is 12 and 22 min for short and long cycles for the five lakes and 3 min for both short and long cycles for the Lake Champlain.

2.3.4 Validation and verification job

Once the field and point outputs are prepared during the post-processing phase and real-time observations from buoys are gathered and subjected to quality checks, a comparative analysis of the results is conducted. This analysis involves generating statistical summaries, including time series plots for parameters such as wind speed, wind direction, significant wave height, peak period, and mean wave direction. Additionally, graphical representations such as linear regression plots and Taylor diagrams are generated, along with tables containing relevant statistics.

2.4 Validation and verification

The long cycle runs with 6 d re-forecast simulations (four times a day) for the GLWUv2.0 system are validated against in situ measurements at 25 locations, as shown in Fig. 4. The model performance has been evaluated during 2 summer months (June and July 2022) where observations were available. Note that the temporarily deployed buoy in Lake Champlain was available during this period, which was the criterion for the selection of the retrospective summertime run period.

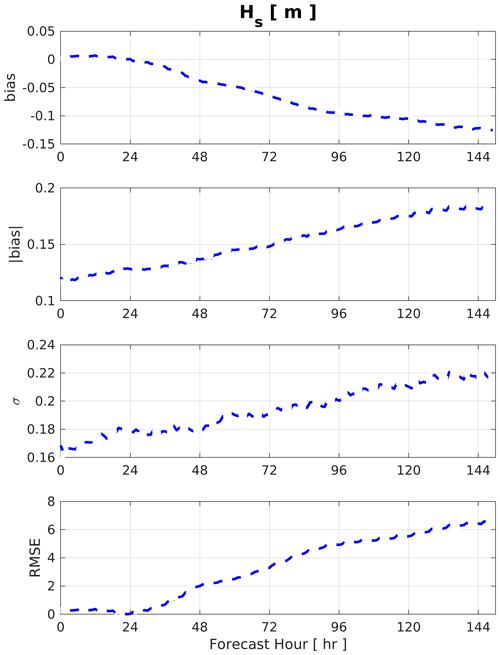

Figure 6Wave model performance for hourly Hs forecast versus lead time in terms of bias, absolute bias, standard deviation (σ), and root mean square error (RMSE).

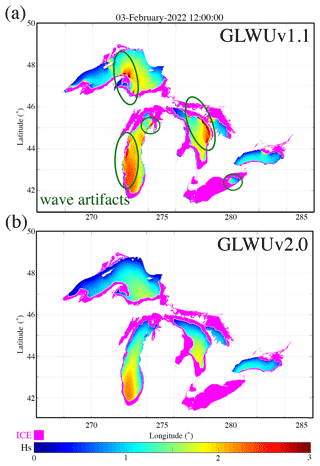

A total of 2 months of simulations (January and February 2022) were conducted to evaluate the model results that included the higher-resolution ice and assess model performance with previously reported observed wave artifacts at the ice edge by WFOs (Fig. 5). The buoys are removed during wintertime to avoid damage to the gauges; therefore, the forecasters qualitatively verified agreement of the model with their observations.

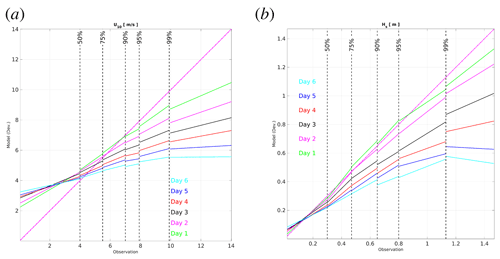

Figure 7Linear regression plot for wind speed (a) and significant wave height (b) for the daily forecast lead times, separated for < 50th, 75th, 90th, 95th, and > 99th percentiles.

The physical parameters in the wave model were tuned to minimize the statistical metrics of significant wave height, keeping the first forecast day close to the observations. As shown in Fig. 6, the model score in terms of bias, absolute bias, standard deviation, and root mean square error deteriorates with longer forecast lead time, which is introduced by the forcing field.

Looking closely at the trend between forcing wind and downstream wave model output in Fig. 7, the linear regression plot is shown for results separated for percentile ranges of < 50, 75, 90, 95, and > 99 for up to six daily forecasts. It is clearly shown that the wind (U10) was overestimated in NDFD for small values (<50 percentile) and then underestimated each day for larger values since the forecast was initiated. On the other hand, the wave model outputs were close to 1:1 for the first day with slight underestimation for the larger waves (panel b). Note that unless a dynamic forecast-lead-time-dependent correction is applied to the wind field, the wave models cannot be tuned for the whole duration of the forecast, and the results would deviate from observations as forecast lead time increases. The pattern propagation (underestimation or overestimation) from wind to wave model is due to the nature of wind-wave generation and the direct impact of wind on waves.

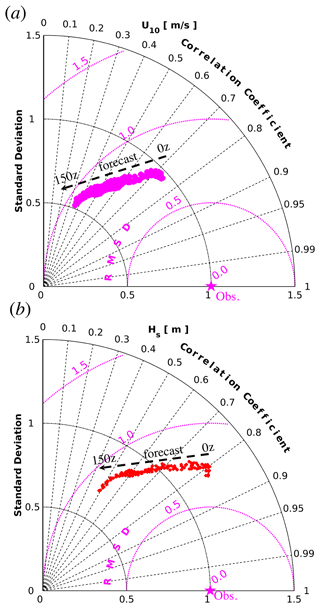

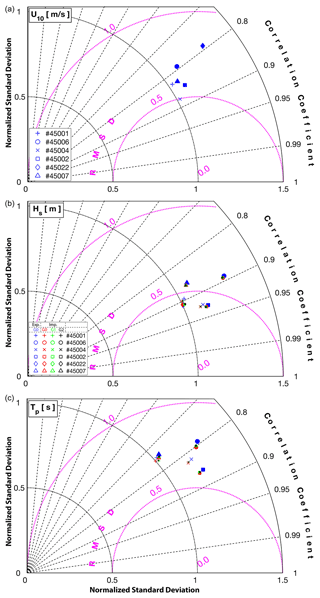

Last, the performance of the NDFD winds and WW3 waves at 25 in situ observations was assessed as a function of forecast lead time and summarized in the Taylor diagrams shown in Fig. 8, in terms of normalized standard deviation (σ), root mean square deviation (RMSD), and correlation coefficient (CC). For U10 (top panel), as forecast lead time passes, the RMSD increases from 0.7 to 0.9, while σ and CC drop from 0.98 and 0.74 to 0.5 and 0.32, respectively. On the other hand, a similar pattern is observed for Hs (bottom panel), with a slight change in RMSD (0.7–0.8), whereas σ and CC drop from 1.23 and 0.8 to 0.7 and 0.5, respectively.

In this section, key features of the core WW3 model left out from GLWUv2.0 due to operational restrictions are tested in support of future upgrades. The aim is to highlight the performance and efficiency benefits of new model features in meshes that have 1 order of magnitude, in the number of nodes, larger than the one used in operation (G0) with high resolution (∼ 5–10 m) in coastal regions.

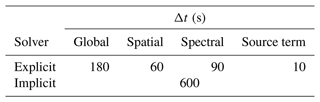

The model configuration for simulations closely resembles those outlined in Sect. 2, differing in the following aspects: the parallelization algorithm utilized here is domain decomposition instead of card deck, the implicit numerical solver is used in place of the explicit solver, and the time stepping is as summarized in Table 2. Note that the majority of spectral wave models utilize a first-order time–space method to solve the wave action equation (WAE). Implicit schemes are constrained to first-order time–space methods due to the Godunov theorem, limiting higher-order accuracy to nonlinear approaches and leading nonmonotonic methods to produce negative wave action and highly dispersive results with respect to the time step (Booij et al., 1999). Notably, experiments employing implicit schemes in nearshore environments have revealed a significant lag between the physical timescale variation and the stability timescales governed by explicit schemes (CFL), justifying the quasi-steady temporal variation in physical variables and validating the use of a first-order time stepping scheme. Janenko (1971) supports this approach due to the temporal scale mismatch between physical and stability time steps for application of implicit schemes to hyperbolic problems. Janssen (2007) argues against employing higher-order methods in geographical space, suggesting that adding numerical diffusion to counteract the garden sprinkler effect (GSE) degrades the solution and only affects distantly advected low-frequency waves (swell), such as those traveling from the Arctic to the Indian Ocean, a concern irrelevant to the current study. Consequently, our work establishes the groundwork for furthering higher-order methods by employing a first-order implicit scheme, representing the current state of scientific understanding in fully monotone integration of the WAE.

Table 2Model time steps for GLWUv2.0 with the explicit solver and experimental study with the implicit solver.

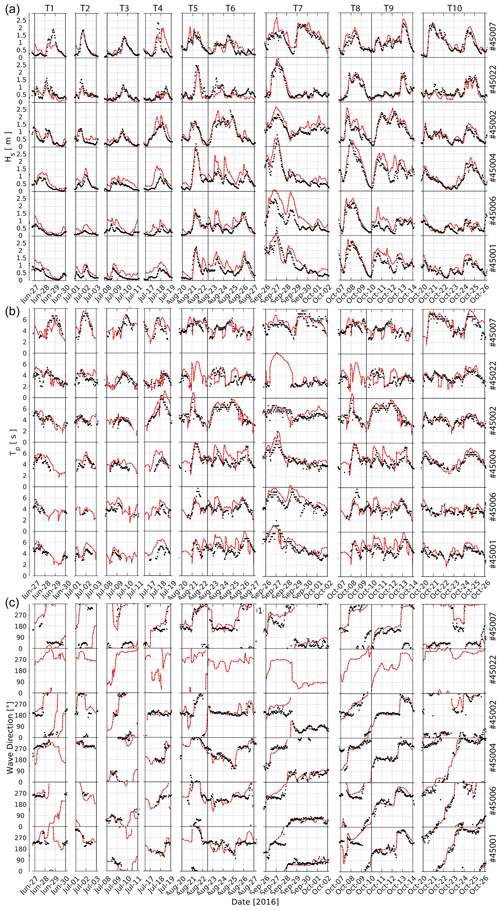

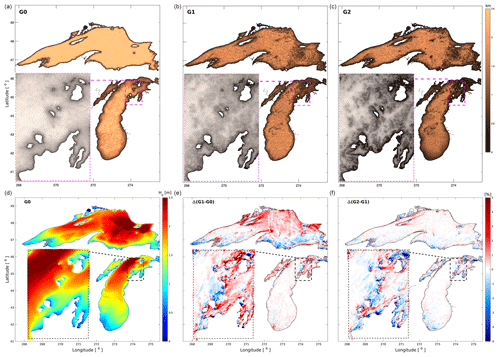

A validation study is performed on three unstructured meshes (G0, G1, and G2) for 10 stormy conditions () in the Great Lakes from 27 June to 26 October 2016. The atmospheric forcing is provided by the HWRF model (Tallapragada et al., 2015) covering Lake Michigan and Lake Superior. The G0 mesh, which is used in the operational GLWUv1.0 and GLWUv2.0 systems, is compared with two other meshes of higher resolutions. The top row of Fig. 9 shows the grid resolutions with an inset magnified view of the northeast side of Lake Michigan. The meshes are finer near the shore and at locations with irregular bathymetry to ensure that the complicated coastline and geometries, which have a significant influence on wave transformations, are better represented in the model.

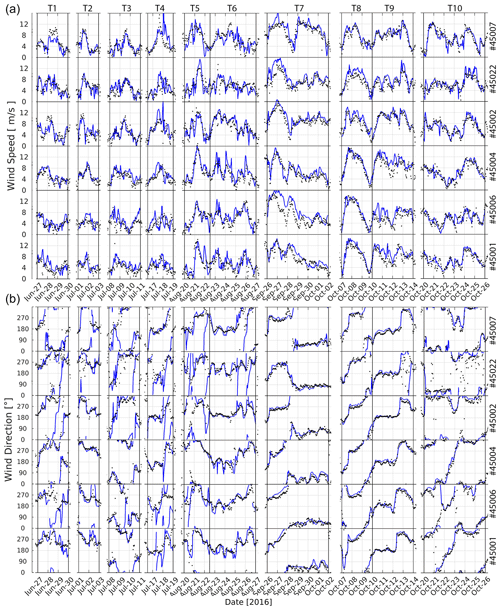

A snapshot of the significant wave height field on the G0 mesh and the percentage difference for G1 and G2 meshes are shown in the bottom row. In the bottom left-hand panel, the significant wave height is shown, which is extracted from simulations on the G0 grid. The middle panel illustrates the percentage changes between the G0 and G1 grids, while the right panel shows the percentage changes between the G1 and G2 grids. These changes indicate approximately a 5 % variation in the domain extent. These variations primarily occur in regions characterized by sharp gradients in bathymetry, where the higher-resolution meshes can effectively resolve the terrain with a sufficient number of elements. In addition to a quantitative comparison, the atmospheric and wave model outputs are validated at six available stationary buoy stations. The performance of the atmospheric and wave models at the six buoy locations is summarized in Taylor diagrams shown in Fig. 10, in terms of normalized standard deviation (σ), root mean square deviation (RMSD), and correlation coefficient (CC) for the observation and model outputs for wind speed (top panel), significant wave height (middle panel), and peak period (bottom panel).

Figure 9(a, b, c) Grid resolution for Lake Michigan and Lake Superior with a closer view of the northeast side of Lake Michigan for mesh G0 (∼250 000), G1 (∼750 000), and G2 (∼2.4 million); (d, e, f) A snapshot of significant wave height on mesh G0 (a, d). Percentage change in Hs field between G1 and G0 (b, e) and between G2 and G1 (c, f).

Figure 10Taylor diagram for wind speed – U10 (a), significant wave height – Hs (b), and peak period – Tp (c) representing modeled and collected data at buoy locations (blue: explicit on mesh G0; red: implicit on mesh G0; green: implicit on mesh G1; black: implicit on mesh G2) in terms of the Pearson correlation coefficient (CC), root mean square deviation (RMSD), and normalized standard deviation σ.

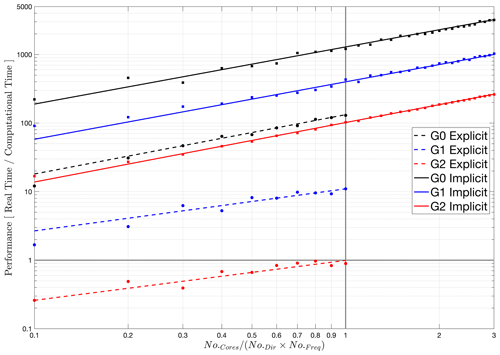

Figure 11Model performance (v7) on an HPC environment and scalability of WW3 models for an explicit numerical solver with card deck parallelization (dashed lines) as well as the implicit scheme with the domain decomposition parallelization algorithm (solid lines) for three unstructured grids with 250 000 (G0), 750 000 (G1), and 2.4 million (G2) nodes. The horizontal axes show the number of computational cores normalized by the number of frequencies and directions. The vertical solid line represents the CD limit (no.cores=no.dir×no.freq). The horizontal solid line represents real-time performance. The tests are performed on NCEP's HPC, Hera, equipped with a 2.60 GHz Intel Haswell CPU and 2.67 GB memory per core.

The wind speed has normalized standard deviations in the range of 1–1.3 and RMSD 0.5–0.8 with a correlation coefficient of nearly 0.8 for all the buoys. On the other hand and for all the grids, the significant wave height has σ=1–1.3, RMSD = 0.42–0.6, and CC = 0.86–0.94. The peak period has a similar normalized standard deviation, with RMSD = 0.6–0.81 and CC = 0.75–0.88. These results show a slight improvement for the intermediate- (G1) and high-resolution grids (G2) with the implicit scheme relative to the operational grid (G0) with the explicit scheme. Note that a significant improvement is not expected due to the deepwater location of the available buoys, which are not expected to benefit from higher resolutions nearer the coast. Time series of wind speed U10, wind direction (Fig. A1), significant wave height (Hs), peak period (Tp), and mean wave direction from buoy observations and HWRF/WW3 models in Fig. A2 are provided in the Supplement. Unlike the forecast (Sect. 2.4), where the upstream atmospheric model and downstream wave model diminish in accuracy with an increase in forecast lead time, the wind hindcast for 10 stormy conditions maintains its precision over time (Fig. A1). Consequently, the time series data for the significant wave height, peak period, and wave direction exhibit strong consistency throughout the entire simulations (Fig. A2). As depicted in the middle panel of Fig. A2, the peak period of young waves during severe conditions remains below 8 s. Notably, in closed basins like the Great Lakes where swell generated in distant regions does not exist, the immediate influence of local wind on waves is more evident, showcasing the significance of the upstream wind model's accuracy in the behavior of the wave model.

Our findings highlight a noteworthy improvement in model output achieved by increasing the grid resolution, with particularly remarkable enhancements observed in the nearshore region. The finer details of subscale geographical features that were previously absent in the coarse meshes are now captured in the higher-resolution simulations. This finding underscores the importance of higher-resolution grids in accurately representing coastal morphology features. These significant differences in model output hold the promise of qualitative improvements that can be of great importance for nearshore hazard forecasting and prediction. Forecasters in the region have a crucial need for such advancements to enhance their ability to predict and mitigate potential coastal hazards effectively.

However, to observe a more pronounced impact of mesh resolution, especially for the 10 stormy conditions studied, a comparable increase in atmospheric forcing resolution, coupled with changes in water level and current fields, becomes essential. The reason behind this requirement is that the wave climate in enclosed basins is primarily influenced by locally generated wind-seas. During this study, the same wind field was interpolated on all three grids without considering water levels and current fields. In addition, due to the lack of coastal observations, where the dominant waves might interact with the bottom, the statistics of the three simulations show nearly equal performances. Looking solely at the comparison plots with the existing in situ observations underestimates the importance of high-resolution bathymetric features because the gauges are far away from any geographical feature like offshore islands that would show the resolution effect on wave transformation interacting with those features (see the concluding remarks regarding the need for future improvements on high-resolution grids).

The model performance has been evaluated on NCEP's HPCs for the pre-existing parallelization algorithm in WW3 (CD) with its explicit equation solver and the newer domain decomposition (DD) algorithm with the implicit solver on three unstructured triangular meshes. The grid resolutions are compared in Fig. 9. The G0 mesh is designed for operational implementation. The criteria for the G0 mesh design were mainly computational efficiency and limited available resources in view of the requirements of the CD parallelization and explicit propagation schemes. Therefore, the G0 grid is relatively small with nearly 250 000 nodes and a minimum resolution of 250 m in coastal areas. In contrast, a moderate (G1 with 750 000 nodes) and a large grid (G2 with 2.40 million nodes) with ∼ 5 m minimum resolution were designed to distinguish the computational limits of each parallelization algorithm and solver scheme.

The scalability performance is shown in Fig. 11 in terms of nondimensional computational speed, revealing linear growth in the model performance for various model options and grids for implicit (solid lines) and explicit (dashed lines) schemes. It is clearly shown that the G0 grid (black lines) has 1 order of magnitude faster performance with the implicit scheme compared to the explicit scheme and faster than real-time computation for any given number of CPU cores for both solver schemes. However, increasing the number of grid nodes to 750 000 and decreasing the minimum resolution to 5 m led to a significant slowdown in the model performance for the explicit solvers (dashed blue) due to the ineffectiveness of CD parallelization and model CFL constraint embedded in the explicit scheme on triangular unstructured grids.

Such limitations have confronted WW3 users applying larger grids with very high resolution for a long time. The shown slowdown in performance is more evident for the G2 mesh (dashed red), where the model computational wall time dropped under real time (horizontal solid line). On the other hand, the implicit scheme (solid lines) allows resolution of the physical processes in nearshore regions with the prescribed higher grid resolution in an efficient way. For example, the G2 mesh with the DD scheme has nearly the same performance as the G0 mesh with the explicit scheme, in spite of having nearly 10 times more nodes. In addition, the DD algorithm does not have any limit in terms of the number of CPU core allocations, unlike the CD algorithm. Therefore, larger grids can be distributed on a larger number of computational cores, free from the NSPEC limit (number of spectral components) imposed in the CD algorithm (vertical solid line). This computational performance breakthrough is a valuable contribution from our work to research and operational applications using the WW3 model.

This article presents an overview of the implementation of GLWUv2.0, encompassing workflow design and validation studies for the duration of 4 months of re-forecast, so-called retro-respective simulations. The validation includes a qualitative comparison during the summer season and a qualitative analysis for the ice season when no buoys were available in the Great Lakes. The article also acknowledges the limitations and challenges encountered in the operational environment, which restricted the utilization of the most advanced components of the wave model. Model tuning focused on minimizing statistical metrics for significant wave height, aligning initial forecasts closely with observations. However, as forecast lead time increased, the model's accuracy decreased due to higher uncertainty in the forcing field. The relationship between wind and wave model outputs showed discrepancies, with wind being consistently overestimated for smaller wind speeds and underestimated for larger wind speeds as the forecast progressed. The impact of forecast lead time on NDFD winds and WW3 waves was analyzed through Taylor diagrams, indicating a decrease in accuracy over time for both wind and wave forecasts in terms of deviation and correlation coefficient.

In addition to GLWUv2.0 implementations, the model scalability was evaluated on three unstructured meshes, with 250 000 (G0), 750 000 (G1), and 2.4 million (G2) nodes with minimum resolutions of 250, 20, and 5 m, respectively. The higher-resolution meshes show a 5 % variation in domain extent, primarily resolving sharp bathymetric gradients more effectively. The comparison at buoy stations reveals slight improvements in wave prediction for higher-resolution grids. However, significant improvements were not expected due to buoy locations being distant from coastlines. The scalability analysis shows the efficiency of the implicit numerical solver as opposed to the explicit solver, constrained by CFL criteria. In addition, the limitless domain decomposition (DD) parallelization will support the use of large meshes in operational environments, where simulation time mandates the design process. In order to show the accuracy of the model during storm conditions, the aforementioned unstructured meshes were compared during 10 selected stormy conditions.

The article explores studies that offer insights into future improvements in wave forecasting. It emphasizes the need for refining grid resolution and modernizing numerical methods to achieve significant enhancements. Additionally, considering coastal-scale phenomena and interactions with other Earth system models such as circulation, sea ice, atmosphere, and hydrological models is crucial for a comprehensive understanding of coastal dynamics. The role of coastal observations in model validation is highlighted, requiring remote sensing techniques such as coastal altimetry and new in situ data collection technologies such as drifter buoys and HF radars, particularly during the ice season when conventional field observation is not possible. Improving our understanding of the wave climate in diverse environments (i.e., interacting with ice, vegetation, rocky beaches, and coastal structures) through more observations and with more accurate numerical models will help to better evaluate and design coastal protection with nature-based solutions, so-called engineering with nature, to improve coastal resilience.

4.1 Future development

GLWUv2.0 features seven additional field outputs in order to be utilized in the Dangerous Seas Project over the Great Lakes Region. This project is a bilateral collaborative agreement between NOAA (EMC-OPC) and Environment and Climate Change Canada (ECCC) to enhance scientific and operational cooperation between Canada and the United States. In this effort, a dangerous sea is defined as a combination of wave height, period, steepness, breaking waves, crossing seas, and rapidly changing sea state (time rate of change) that causes navigational risk (speed and course) and/or leads to the potential loss of a vessel, cargo, or crew. Mariners should avoid dangerous seas at all costs. The project has started with the Great Lakes, a basin responding mostly to the wind impact on the water surface, and will migrate the work to the open ocean where wave patterns are more complex. Additional details on this will be made available in a separate article in the future.

Figure A1Atmospheric model validation at the buoy locations: HWRF (blue) versus observations (black) for (a) wind speed and (b) wind direction.

The current version of the GLWUv2.0 modeling system, including the workflow, the WW3 model, and input files to produce the results shown in this paper, can be accessed at the Zenodo archive at https://doi.org/10.5281/zenodo.8341987 (Abdolali, 2023) under the Lesser GNU Public License v3.

AA: conceptualization, methodology, WW3 code development, workflow development and implementation, data curation, visualization, validation, writing (original draft). SB: methodology, workflow development and implementation, data curation, visualization, validation, writing (review and editing). JHA: WW3 code development, workflow development and implementation, data curation, writing (review and editing). AR: WW3 code development, writing (review and editing). TJH: WW3 code development, writing (review and editing). MAB: WW3 code development, writing (review and editing). JMS: WW3 code development, writing (review and editing).

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

The authors wish to thank Robert E. Jensen for the fruitful discussions on the future of wave modeling and observations in the Great Lakes area. The authors acknowledge NOAA's Great Lakes Environmental Research Laboratory (GLERL) for providing the bathymetry and coastline of the Lake Champlain and buoy no. 45195 observations, Greg Mann from the National Weather Service (Detroit office) for providing 10 storm cases, the forecast officers at the Great Lakes WFOs for their feedback during the evaluation period (particularly during ice season when eyewitnesses were the only source of validation), and the EMC Engineering and Implementation Branch as well as NCEP Central Operation (NCO) for their help during GLWUv2.0 transition to operations.

This paper was edited by Simone Marras and reviewed by two anonymous referees.

Abdolali, A.: Great Lakes Wave Unstructured v2.0, Zenodo [code and data], https://doi.org/10.5281/zenodo.8341987, 2023. a

Abdolali, A., Roland, A., van der Westhuysen, A., Meixner, J., Chawla, A., Hesser, T. J., Smith, J. M., and Sikiric, M. D.: Large-scale hurricane modeling using domain decomposition parallelization and implicit scheme implemented in WAVEWATCH III wave model, Coast. Eng., 157, 103656, https://doi.org/10.1016/j.coastaleng.2020.103656, 2020. a, b

Abdolali, A., van der Westhuysen, A., Ma, Z., Mehra, A., Roland, A., and Moghimi, S.: Evaluating the accuracy and uncertainty of atmospheric and wave model hindcasts during severe events using model ensembles, Ocean Dynam., 71, 217–235, https://doi.org/10.1007/s10236-020-01426-9, 2021. a

Alves, J.-H., Tolman, H., Roland, A., Abdolali, A., Ardhuin, F., Mann, G., Chawla, A., and Smith, J.: NOAA’s Great Lakes Wave Prediction System: A Successful Framework for Accelerating the Transition of Innovations to Operations, B. Am. Meteorol. Soc., 104, E837–E850, 2023. a, b, c

Alves, J. H. G. and Chawla, A.: Forecasting Wind-Waves at the North American Great Lakes, Research activities in atmospheric and oceanic modelling. CAS/JSC Working Group on Numerical Experimentation, Report No. 12, 8 (1–3), https://library.wmo.int/idurl/4/39792 (last access: 9 May 2023), 2015. a

Alves, J. H. G., Chawla, A., Tolman, H. L., Schwab, D., Lang, G., and Mann, G.: The operational implementation of a Great Lakes wave forecasting system at NOAA/NCEP, Weather Forecast., 29, 1473–1497, 2014. a, b

Ardhuin, F., Rogers, E., Babanin, A. V., Filipot, J.-F., Magne, R., Roland, A., van der Westhuysen, A., Queffeulou, P., Lefevre, J.-M., Aouf, L., and Collard, F.: Semiempirical Dissipation Source Functions for Ocean Waves. Part I: Definition, Calibration, and Validation, J. Phys. Oceanogr., 40, 1917–1941, https://doi.org/10.1175/2010JPO4324.1, 2010. a

Bakhtyar, R., Maitaria, K., Velissariou, P., Trimble, B., Mashriqui, H., Moghimi, S., Abdolali, A., Van der Westhuysen, A. J., Ma, Z., Clark, E. P., and Flowers, T.: A New 1D/2D Coupled Modeling Approach for a Riverine-Estuarine System Under Storm Events: Application to Delaware River Basin, J. Geophys. Res.-Oceans, 125, e2019JC015822, https://doi.org/10.1029/2019JC015822, 2020. a

Battjes, J. A. and Janssen, J.: Energy loss and set-up due to breaking of random waves, Coast. Eng., 1978, 569–587, https://doi.org/10.1061/9780872621909.034, 1978. a

Booij, N., Ris, R. C., and Holthuijsen, L. H.: A third-generation wave model for coastal regions: 1. Model description and validation, J. Geophys. Res.-Oceans, 104, 7649–7666, 1999. a

Dowell, D. C., Alexander, C. R., James, E. P., Weygandt, S. S., Benjamin, S. G., Manikin, G. S., Blake, B. T., Brown, J. M., Olson, J. B., Hu, M., et al.: The High-Resolution Rapid Refresh (HRRR): An hourly updating convection-allowing forecast model. Part I: Motivation and system description, Weather Forecast., 37, 1371–1395, 2022. a

Glahn, H. R. and Ruth, D. P.: The new digital forecast database of the National Weather Service, B. Am. Meteorol. Soc., 84, 195–202, 2003. a

Hasselmann, K., Barnett, T. P., Bouws, E., Carlson, H., Cartwright, D. E., Enke, K., Ewing, J., Gienapp, A., Hasselmann, D., Kruseman, P., et al.: Measurements of wind-wave growth and swell decay during the Joint North Sea Wave Project (JONSWAP), Ergaenzungsheft zur Deutschen Hydrographischen Zeitschrift, Reihe A, 1–95, https://hdl.handle.net/21.11116/0000-0007-DD3C-E (last access: 9 May 2023), 1973. a

Hasselmann, S., Hasselmann, K., Allender, J., and Barnett, T.: Computations and parameterizations of the nonlinear energy transfer in a gravity-wave specturm. Part II: Parameterizations of the nonlinear energy transfer for application in wave models, J. Phys. Oceanogr., 15, 1378–1391, https://doi.org/10.1175/1520-0485(1985)015<1378:CAPOTN>2.0.CO;2, 1985. a

Janenko, N. N.: The method of fractional steps, vol. 160, Springer, ISBN 978-3-642-65108-3, 1971. a

Janssen, P.: Progress in ocean wave forecasting, ECMWF Technical Memoranda, p. 27, https://doi.org/10.21957/yt6pxl7kp, 2007. a

Moghimi, S., Van der Westhuysen, A., Abdolali, A., Myers, E., Vinogradov, S., Ma, Z., Liu, F., Mehra, A., and Kurkowski, N.: Development of an ESMF Based Flexible Coupling Application of ADCIRC and WAVEWATCH III for High Fidelity Coastal Inundation Studies, J. Mar. Sci. Eng., 8, 5, https://doi.org/10.3390/jmse8050308, 2020. a, b

NCO: NCO High Performance Computing (HPC) Implementation Standards, version 11.0.0, NCEP Central Operations, https://www.nco.ncep.noaa.gov/idsb/implementation_standards/#:~:text=ImplementationStandards.v11.0.0.pdf (last access: 9 May 2023), 2022. a

NGDC: Bathymetry of Lake Michigan, National Geophysical Data Centre [data set], https://doi.org/10.7289/V5B85627, 1996. a

NGDC: Bathymetry of Lake Erie and Lake St. Clair, National Geophysical Data Centre [data set], https://doi.org/10.7289/V5KS6PHK, 1999a. a

NGDC: Bathymetry of Lake Huron, National Geophysical Data Centre [data set], https://doi.org/10.7289/V5G15XS5, 1999b. a

NGDC: Bathymetry of Lake Ontario, National Geophysical Data Centre [data set], https://doi.org/10.7289/V56H4FBH, 1999c. a

NOAA: NOAA, 2021: Upgrade NCEP Global Forecast Systems (GFS) to v16: Effective March 17, 2021. Service Change Notice 21-20, Updated, National Weather Service Headquarters, Silver Spring MD, https://www.weather.gov/media/notification/pdf2/scn21-20gfs_v16.0_aac.pdf (last access: 9 May 2023), 2021. a

Schwab, D. J., Bennett, J. R., Liu, P. C., and Donelan, M. A.: Application of a simple numerical wave prediction model to Lake Erie, J. Geophys. Res., 89, 3586–3592, 1984. a

Tallapragada, V., Kieu, C., Trahan, S., Zhang, Z., Liu, Q., Wang, W., Tong, M., Zhang, B., and Strahl, B.: Forecasting tropical cyclones in the western North Pacific basin using the NCEP operational HWRF: Real-time implementation in 2012, Weather Forecast., 30, 1355–1373, 2015. a

The WAVEWATCH III® Development Group (WW3DG): User manual and system documentation of WAVEWATCH III® version 6.07, Tech. Note 333, NOAA/NWS/NCEP/MMAB, College Park, MD, USA, 326 pp. + Appendices, 2019. a

Titze, D., Beletsky, D., Feyen, J., Saunders, W., Mason, L., Kessler, J., Chu, P., and Lee, D.: Development and skill assessment of a real-time hydrologic-hydrodynamic-wave modeling system for Lake Champlain flood forecasting, Ocean Dynam., 73, 231–248, https://doi.org/10.1007/s10236-023-01550-2, 2023. a

Tolman, H.: A genetic optimization package for the Generalized Multiple DIA in WAVEWATCH III, Tech. Note 289, NOAA/NWS/NCEP/MMAB, ver. 1.4, 22 pp., https://doi.org/10.1029/95JC03219, 2014. a