the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

ICON-Sapphire: simulating the components of the Earth system and their interactions at kilometer and subkilometer scales

Cathy Hohenegger

Peter Korn

Leonidas Linardakis

René Redler

Reiner Schnur

Panagiotis Adamidis

Jiawei Bao

Swantje Bastin

Milad Behravesh

Martin Bergemann

Joachim Biercamp

Hendryk Bockelmann

Renate Brokopf

Nils Brüggemann

Lucas Casaroli

Fatemeh Chegini

George Datseris

Monika Esch

Geet George

Marco Giorgetta

Oliver Gutjahr

Helmuth Haak

Moritz Hanke

Tatiana Ilyina

Thomas Jahns

Johann Jungclaus

Marcel Kern

Daniel Klocke

Lukas Kluft

Tobias Kölling

Luis Kornblueh

Sergey Kosukhin

Clarissa Kroll

Junhong Lee

Thorsten Mauritsen

Carolin Mehlmann

Theresa Mieslinger

Ann Kristin Naumann

Laura Paccini

Angel Peinado

Divya Sri Praturi

Dian Putrasahan

Sebastian Rast

Thomas Riddick

Niklas Roeber

Hauke Schmidt

Uwe Schulzweida

Florian Schütte

Hans Segura

Radomyra Shevchenko

Vikram Singh

Mia Specht

Claudia Christine Stephan

Jin-Song von Storch

Raphaela Vogel

Christian Wengel

Marius Winkler

Florian Ziemen

Jochem Marotzke

Bjorn Stevens

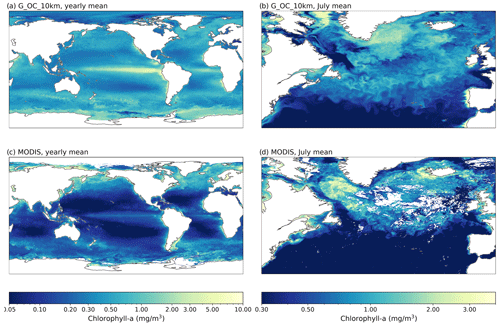

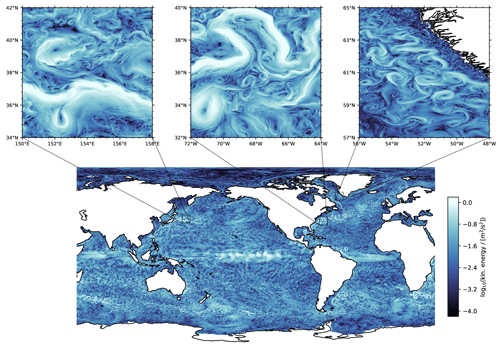

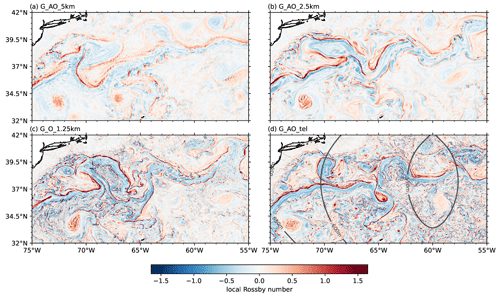

State-of-the-art Earth system models typically employ grid spacings of O(100 km), which is too coarse to explicitly resolve main drivers of the flow of energy and matter across the Earth system. In this paper, we present the new ICON-Sapphire model configuration, which targets a representation of the components of the Earth system and their interactions with a grid spacing of 10 km and finer. Through the use of selected simulation examples, we demonstrate that ICON-Sapphire can (i) be run coupled globally on seasonal timescales with a grid spacing of 5 km, on monthly timescales with a grid spacing of 2.5 km, and on daily timescales with a grid spacing of 1.25 km; (ii) resolve large eddies in the atmosphere using hectometer grid spacings on limited-area domains in atmosphere-only simulations; (iii) resolve submesoscale ocean eddies by using a global uniform grid of 1.25 km or a telescoping grid with the finest grid spacing at 530 m, the latter coupled to a uniform atmosphere; and (iv) simulate biogeochemistry in an ocean-only simulation integrated for 4 years at 10 km. Comparison of basic features of the climate system to observations reveals no obvious pitfalls, even though some observed aspects remain difficult to capture. The throughput of the coupled 5 km global simulation is 126 simulated days per day employing 21 % of the latest machine of the German Climate Computing Center. Extrapolating from these results, multi-decadal global simulations including interactive carbon are now possible, and short global simulations resolving large eddies in the atmosphere and submesoscale eddies in the ocean are within reach.

- Article

(16514 KB) - Full-text XML

- BibTeX

- EndNote

Earth system models (ESMs) have evolved over the years to become complex tools aiming to simulate the flow of energy and matter across the main components – ocean, land, atmosphere, cryosphere – of the Earth system, with their distinctive ability to simulate an interactive carbon cycle (Flato, 2011). ESMs, for instance, allow tracking how water evaporates from the ocean, precipitates over continents, boosts net primary productivity, returns as freshwater via runoff into the salty ocean, and affects the spatial distribution of marine organisms before reevaporating and restarting the cycle anew. Yet ESMs operate on grid spacings of O(100 km). In the atmosphere, this is too coarse to explicitly represent the vertical transport of energy and water due to atmospheric convection. Convection is the main mechanism by which radiative energy is redistributed vertically and the main production mechanism of rain in the tropics (Stevens and Bony, 2013). In the ocean, a grid spacing of O(100 km) is too coarse to explicitly represent mesoscale eddies. Mesoscale eddies control the uptake of heat and carbon by the deep ocean and change the simulated nature of the ocean from a smooth laminar to a chaotic turbulent flow, structuring the large-scale circulation along the way (Hewitt et al., 2017). Moreover, a grid spacing of O(100 km) can only crudely represent the effect of the heterogeneous land surface, bathymetry, and coastal as well as ice shelves on the flow of energy and matter. A grid spacing of 10 km would at least allow an explicit representation of deep convection in the atmosphere (“storm-resolving” model, see Hohenegger et al., 2020) and mesoscale eddies in the ocean (“eddy-rich” model, see Hewitt et al., 2020), and it would capture much of Earth's heterogeneity. In this study, we present the new configuration of the ICOsahedral Nonhydrostatic (ICON) model, called ICON-Sapphire, developed at the Max Planck Institute for Meteorology for the simulation of the components of the Earth system and their interactions at kilometer and subkilometer scales on global and regional domains.

The first climate models were developed to represent atmospheric processes on the global scale and were successively extended to incorporate more components of the Earth system (e.g., Phillips, 1956; Smagorinsky, 1963; Manabe et al., 1965; GARP, 1975). ESMs participating in the last assessment report (AR6) can now represent dynamical ice sheets, terrestrial and marine vegetation that grows and dies, atmospheric chemistry, carbon, nitrogen, sulfur, and phosphorus cycles, and more traditional physical processes that are at play in the atmosphere, at the land surface, and in the ocean (see Sect. 1.5 in Chen et al., 2021). Given the finiteness of computer resources, the increase in the degree of complexity has come at the cost of increases in resolution. The approximations imposed by the limited resolution are thought to be a reason for well-known biases. In the tropics, it still rains many hours too early, the locations of the rain belts are misplaced, and too little rain falls over continents, with implications for the representation of the biosphere, which depends crucially on the precipitation distribution (Fiedler et al., 2020; Tian and Dong, 2020). Prominent biases persist in sea surface temperature (SST), such as warm biases in the upwelling regions at the western coasts of continents, cold tongue biases in the tropical Atlantic and Pacific, and a subpolar cold bias in the North Atlantic (Keelye et al., 2012; Richter and Tokinaga, 2020). State-of-the-art ESMs struggle not only to capture the mean spatial distribution of precipitation and surface temperature, but also to replicate their associated extremes (Wehner et al., 2020). These biases limit confidence in regional and dynamical aspects of climate change (Shepherd, 2014; Lee et al., 2022b). As several of these biases involve interactions between the components of the Earth system, resolving such biases may not be possible by increasing the resolution of just one component.

Flavors of atmospheric models operating at kilometer and subkilometer scales exist. Global atmosphere-only climate simulations at such resolutions have been pioneered by the Nonhydrostatic ICosahedral Atmospheric Model (NICAM) group (Satoh et al., 2005; Tomita et al., 2005; Miura et al., 2007) and typically employ a grid spacing of 14 km, allowing multi-decadal integration periods. The recent DYnamics of the Atmospheric general circulation Modeled On Non-hydrostatic Domains (DYAMOND) intercomparison project demonstrated that global kilometer-scale simulations in short time periods (40 d) are now possible with a range of atmospheric models, with a grid spacing as fine as 2.5 km (Stevens et al., 2019). Independent of these efforts, kilometer grid spacings have been used for regional climate modeling activities on multi-decadal timescales on domain sizes that have evolved from small Alpine regions (Grell et al., 2000; Hohenegger et al., 2008) to continents (Ban et al., 2014; Liu et al., 2017; Stratton et al., 2018). The feasibility of hectometer simulations, wherein not only deep convection but also shallow convection can be represented explicitly, has been demonstrated for the monthly timescale and for domains the size of Germany (Stevens et al., 2020a). Experience gained from simulating the atmosphere at kilometer scales (see, e.g., reviews by Prein et al., 2015; Satoh et al., 2019; Hohenegger and Klocke, 2020; Schär et al., 2020) has robustly shown improvements in the representation of the diurnal cycle, organization, and propagation of convective storms. Furthermore, a more realistic representation of extremes, orographic precipitation, and blocking is obtained, and interactions between mesoscale circulation triggered by surface heterogeneity and convection become visible.

As with atmosphere-only models, ocean-only global simulations have been conducted using grid spacings as fine as ∘ for short timescales (e.g., Rocha et al., 2016; Qiu et al., 2018; Flexas et al., 2019). Coupled models typically employ grid spacings of 1∘, but high-resolution ocean models with grid spacings of ∘ (Haarsma et al., 2016) and even ∘ (Hewitt et al., 2016) have been coupled to low-resolution atmospheres. Notably, Chang et al. (2020) conducted a 500-year simulation with a grid spacing of 0.1∘ for the ocean and 0.25∘ for the atmosphere. Through the use of limited or nested domains, ocean simulations that can explicitly represent submesoscale eddies by making use of hectometer grid spacings have been conducted for specific current systems (e.g., Capet et al., 2008; Gula et al., 2016; Jacobs et al., 2016; Chassignet and Xu, 2017; Trotta et al., 2017). Submesoscale eddies have a horizontal size of 100 m to 10 km. With the increase in computational power, such simulations have become more affordable and now allow for intercomparison exercises (Uchida et al., 2022). As reviewed by Hewitt et al. (2017) and Chassignet and Xu (2021), ocean models with a grid spacing of ∘ and finer lead to a better representation of upwelling regions as well as to a more realistic positioning and penetration of boundary currents; these are features which lead to a reduction of associated SST biases. Such models also benefit from a better representation of the interactions between mesoscale motions and bathymetry when channels and straits become resolvable. Furthermore, resolving ocean eddies reduces subsurface ocean model drift and affects the ocean heat budget, since mesoscale eddies act to transport heat upwards, whereas the mean flow transports heat downwards (Wolfe et al., 2008; Morrison et al., 2013; Griffies et al., 2015; von Storch et al., 2016). Even submesoscale eddies are thought to be potentially climate-relevant as they promote a re-stratification of the mixed layer, impacting atmosphere–ocean interactions (Fox-Kemper et al., 2011), and additionally have been shown to strengthen the mesoscale and large-scale circulation when present in models (e.g., Schubert et al., 2021).

All these developments have paved the way to the ultimate step: resolving the flow of energy and matter by both small- and large-scale phenomena, on climate timescales, and across all components of the Earth system: in other words, resolving convective storms in the atmosphere, mesoscale eddies, and marginally submesoscale eddies in the ocean on global domains with a surface of the Earth that retains most of its heterogeneity. Regional climate model simulations have shown that feedbacks between the land and the atmosphere can potentially be of distinct strength and sign in simulations that explicitly represent convection compared to simulations that parameterize convection, although uncertainties remain due to the use of prescribed lateral boundary conditions (Hohenegger et al., 2009; Leutwyler et al., 2021). Something similar may be true concerning interactions between the various components of the Earth system, making the development of kilometer-scale ESMs particularly interesting.

In this paper, we present the new ICON-Sapphire configuration. Sapphires are blue, like the blue end of the visible light spectrum associated with short wavelengths. Likewise, ICON-Sapphire is designed to resolve small spatial scales, with targeted grid spacings finer than 10 km. Moreover, ICON-Sapphire can in principle be employed as an ESM able to simulate the biogeochemical processes both on land and in the ocean that influence the flow of carbon. To illustrate the intended abilities of ICON-Sapphire, we present results of key simulations: (i) a 1-year global coupled 5 km simulation and its counterpart integrated for 2 months at 2.5 km to resolve the ocean–atmosphere system on multi-decadal timescales with deep convection and mesoscale ocean eddies; (ii) a hectometer-scale atmosphere-only simulation conducted on a limited domain to resolve large eddies in the atmosphere; (iii) a coupled global simulation with an ocean grid spacing of 12 km refined to 530 m over the North Atlantic, as well as an uncoupled global simulation with an ocean grid spacing of 1.25 km, both to explicitly represent submesoscale eddies in the ocean; and (iv) a 10 km 4-year global ocean-only simulation including ocean biogeochemistry to demonstrate the ESM ability of ICON-Sapphire. ICON-Sapphire was also run in a coupled configuration with a grid spacing of 1.25 km for 5 d, but given the shortness of the integration period, results of this simulation are not presented here. The overall goal of the paper is threefold: the first is to describe ICON-Sapphire, thus serving as a reference for future, more comprehensive studies; the second is to show that such ultrahigh-resolution simulations are technically feasible, and the third is to investigate to what extent basic features of the Earth system can be reproduced using a configuration wherein most of the relevant climate processes are represented explicitly, thus also highlighting potential remaining shortcomings of such configurations.

It is generally thought that a throughput of 1 simulated year per day (SYPD), or at least not fewer than 100 simulated days per day (SDPD), is needed for a climate model to be useful, meaning that simulations spanning many decades can be run in a reasonable amount of time (Neumann et al., 2019; Schär et al., 2020). This has long prevented the use of kilometer grid spacings. The use of Graphics Processing Units (GPUs) instead of the conventional Central Processing Units (CPUs) provides a performance increase, bringing kilometer-scale simulations close to the target, as first demonstrated by Fuhrer et al. (2018) for a nearly global atmosphere-only climate simulation. Giorgetta et al. (2022) ported the atmosphere version of ICON-Sapphire to GPUs, making ICON-Sapphire versatile and well adapted to the new generation of supercomputers. This brings multi-decadal ESM simulations at kilometer scales and global large eddy simulations on short timescales within reach, as discussed in this paper.

The outline of the paper is as follows. Section 2 describes the ICON-Sapphire configuration. This includes a description of its main components, their coupling, I/O, and workflow. Section 3 lists the throughput of different configurations. Section 4 illustrates key simulation examples, with an emphasis on the results from the fully coupled ICON-Sapphire configuration run at 5 and 2.5 km. Section 5 summarizes the results and highlights some future development priorities.

The ICON model was originally developed by the Max Planck Institute for Meteorology (MPI-M) for coarse-resolution (grid spacings of O(100 km)) climate simulations and by the German Weather Service (DWD) for weather forecasts. The DWD configuration, called ICON-NWP (Numerical Weather Prediction), only simulates the atmosphere together with a simple representation of land surface processes, whereas the MPI-M configuration includes all components traditionally used in ESMs, especially biogeochemistry on land and in the ocean. In the atmosphere, both model configurations share the same dynamical core and tracer transport scheme but rely on distinct physical parameterizations. The ICON dynamical core is based on the work of Gassmann and Herzog (2008) and Wan et al. (2013) (see Zängl et al., 2015), whereas the coarse-resolution climate configuration of ICON, called ICON-ESM, is presented in Jungclaus et al. (2022). Parallel to the development of this coarse-resolution configuration, MPI-M has developed its new Sapphire configuration, targeting grid spacings of 10 km and finer, including the ability to be used as a true ESM with interactive carbon on multi-decadal timescales.

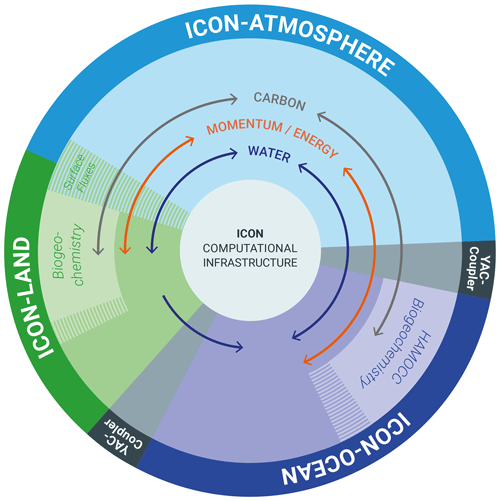

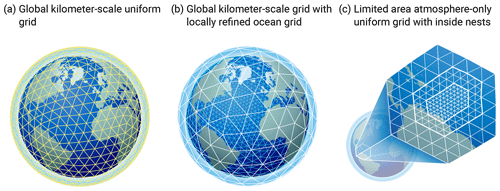

As presented in more detail in the next sections, the Earth system in ICON-Sapphire is split into three main components: atmosphere, land, and ocean, with the ocean physical part including sea ice (see Fig. 1). Ocean biogeochemistry is simulated by the HAMburg Ocean Carbon Cycle (HAMOCC) model, whereas all land surface processes, including biogeochemistry, are simulated by the Jena Scheme for Biosphere–Atmosphere Coupling in Hamburg (JSBACH). Interactions between the atmosphere and the ocean are handled by the Yet Another Coupler (YAC), whereas, due to the choice of implicit coupling, the land is directly coupled to the atmosphere via the turbulence routine. The biogeochemical tracers in the ocean are transported by the same numerical routines as for temperature and salinity, and carbon is exchanged with the atmosphere. The atmosphere component of ICON-Sapphire can be run globally or on limited domains, both supporting only uniform grids (see Fig. 2). Higher resolution is achieved by nesting multiple simulations with successively finer grid spacings; these are simulations which communicate through the boundaries of their respective domains (see Sect. 4.2 for a practical example). The ocean component of ICON-Sapphire can only be run globally but can make use of a nonuniform grid, which allows smoothly refining the grid spacing in one simulation, thus zooming into a region of interest to achieve higher resolution.

Figure 2Grid configurations supported by ICON-Sapphire: (a) global coupled kilometer-scale simulations with a uniform grid in the atmosphere and ocean, (b) global coupled kilometer-scale simulations with a uniform grid in the atmosphere and refined ocean grid over a specific region (telescope), with white for the atmosphere grid and blue for the ocean grid, and (c) atmosphere-only large eddy simulations over limited domains with the possibility of using inside nests to consecutively refine the resolution.

2.1 Atmosphere

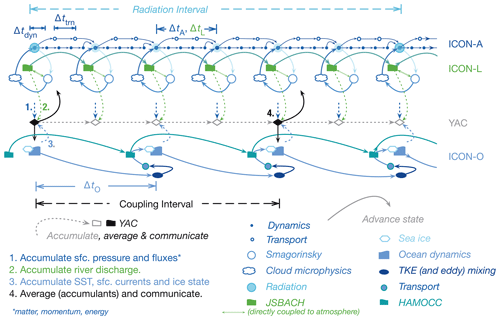

The evolution of the atmosphere is simulated by solving the 3D nonhydrostatic version of the Navier–Stokes equations and conservation laws for mass and thermal energy on the sphere; see Eqs. (3)–(6) in Zängl et al. (2015). The equations are integrated in time with a two-time-level predictor–corrector scheme. Due to the presence of sound waves, the dynamics are sub-stepped so that dynamic adjustments happen at temporal increments 5 times smaller than physical adjustments, as schematically illustrated in Fig. 3. The spatial discretization is performed on an icosahedral–triangular C grid. On such a grid, the prognostic atmospheric variables are the horizontal velocity component normal to the triangle edges, the vertical wind component on cell faces, the virtual temperature, and the full air density including liquid and solid hydrometeors. The placing of these variables on the grid is illustrated in Fig. 4 of Giorgetta et al. (2018). The grid can be global or restricted to a limited area (see Fig. 2a, c). The limited-area implementation follows Reinert et al. (2022). The configuration uses a simple one-way or two-way nesting strategy at the boundary. Boundary conditions can be prescribed from larger-scale simulations (or reanalyses) or set to doubly periodic, as recently employed in Lee et al. (2022a) following Dipankar et al. (2015). In addition, there is the possibility to use inside nests. In this case, all the simulations are integrated at the same time but fine scales can be simulated over specific regions. In the vertical, a terrain-following hybrid sigma z coordinate (the Smooth LEvel VErtical coordinate, SLEVE) is used (Leuenberger et al., 2010) with a Rayleigh damping layer in the top levels after Klemp et al. (2008) using a Rayleigh damping coefficient of 1 s−1.

The ICON-Sapphire configuration only retains parameterizations for the physical processes, which unequivocally cannot be represented by the underlying equations at kilometer scales. Those are radiation, microphysics, and turbulence. It may be argued that other processes, like shallow convection, subgrid-scale cloud cover, and orographic drag, should still be parameterized at kilometer scales (Frassoni et al., 2018). We nevertheless refrain from including further or more complex parameterizations. First, it is especially important to have a slim code base that can be easily ported on emerging new computer architectures to fully exploit exascale computers given that the main roadblock preventing climate simulations at these fine grid spacings is computer resources (Palmer and Stevens, 2019; Schär et al., 2020). Second, parameterizations generally do not converge to a solution as the grid spacing is refined, unlike the basic model equations given appropriate discretization algorithms (Stevens and Lenschow, 2001). Along similar lines, underresolved processes at kilometer scales may look more similar to their resolved version than what a parameterization may predict. Hohenegger et al. (2020) showed that many large-scale bulk properties of the atmosphere, such as mean precipitation amount, already start to converge with a grid spacing of 5 km. Third, the need for parameterizations depends upon the intended use of the model. For a weather forecast model, it is important to simulate the atmospheric state as realistically as possible. In this sense, parameterizations may be viewed as a high-level way to tune a model. But this is not the goal of the developed ICON-Sapphire configuration, which is used as a tool to understand the Earth system and its susceptibility to change. In this context, the use of minimalistic physics also helps us understand which climate properties depend upon details of the flow that remain underresolved or parameterized at kilometer scales.

The calling order of the three parameterizations is illustrated in Fig. 3 together with the coupling between dynamics and physics. Radiation is parameterized by the Radiative Transfer for Energetics RRTM for General circulation model applications-Parallel (RTE-RRTMGP) scheme (Pincus et al., 2019). It uses the two-stream, plane-parallel method for solving the radiative transfer equations. The RRTMGP part of the scheme employs a k distribution for computing the optical properties based on profiles of temperature, pressure, and gas concentration. Gaseous constituents and aerosols are prescribed (see Sect. 2.5). The RTE part of the scheme then computes the radiative fluxes using the independent-column approximation in plane-parallel geometry. There are 16 bands in the longwave and 14 bands in the shortwave, and the spectrum is sampled using 16 g points per band. The RTE-RRTMGP scheme can be employed on both GPU and CPU architectures, unlike its predecessor PSrad (Pincus and Stevens, 2013). All simulations presented in this study were nevertheless performed with PSrad as, at first, the RTE-RRTMGP scheme was unexpectedly slow on CPUs. This issue has now been solved and all future simulations with ICON-Sapphire will employ RTE-RRTMGP. Differences between the two schemes are the use of a more recent spectroscopy and roughly twice as many g points in RTE-RRTMGP, although a version with a reduced number of g points, similar to the one used with PSrad, exists for RTE-RRTMGP as well. Due to the high computational cost of radiation schemes in general, radiation is called less frequently than the other parameterizations (see Fig. 3).

For the parameterization of microphysical processes, two schemes employed in the ICON-NWP configuration have been implemented in ICON-Sapphire. There is the option to choose between a one-moment (Baldauf et al., 2011) and a two-moment (Seifert and Beheng, 2006) scheme. All the simulations of this study were conducted using the one-moment scheme. The one-moment scheme predicts the specific mass of water vapor, cloud water, rain, cloud ice, snow, and graupel. In addition, the two-moment scheme predicts the specific numbers of all hydrometeors and also includes hail as one supplementary hydrometeor category. Saturation adjustment is called twice, before and after calling the microphysical scheme. All hydrometeors (number and mass) are advected by the dynamics, but only cloud water and ice are mixed by the turbulence scheme and are optically active. Condensation requires the full grid box to be saturated. ICON-Sapphire does not use a parameterization for subgrid-scale clouds, leading to a binary cloud cover of 0 or 1 at each grid point.

Turbulence is handled by the Smagorinsky scheme (Smagorinsky, 1963) with modifications by Lilly (1962). The implementation and validation of the Smagorinsky scheme are described in Lee et al. (2022a), following Dipankar et al. (2015). The Smagorinsky scheme is the scheme of reference for parameterizing turbulence in large eddy simulations as it includes horizontal turbulent mixing. Strictly speaking, it was not designed for applications at kilometer scales as it assumes that most of the turbulent eddies can be represented explicitly by the flow (e.g., Bryan et al., 2003). It has nevertheless been successfully used with kilometer grid spacings in both idealized and realistically configured simulations (e.g., Klemp and Wilhelmson, 1978; Langhans et al., 2012; Bretherton and Khairoutdinov, 2015). The surface fluxes are computed according to Louis (1979) using the necessary information provided by the land surface scheme; see Sect. 2.2 for a description of the coupling between the land surface and the atmosphere.

2.2 Land

Land processes are simulated by the JSBACH land surface model version 4. It provides the lower boundary conditions for the atmosphere over land, namely albedo, roughness length, and the necessary parameters to compute latent and sensible heat fluxes from similarity theory. The latter are part of solving the surface energy balance equation, which is, together with the multi-soil thermal layers, implicitly coupled to the atmospheric diffusion equations for vertical turbulent transport following the Richtmyer and Morton numerical scheme (Richtmyer and Morton, 1967). A multi-layer soil hydrology scheme is used for the prognostic computation of soil water storage (Hagemann and Stacke, 2015). Surface runoff and subsurface drainage are collected in rivers and are routed into the ocean by the hydrological discharge model of Hagemann and Dümenil (1997) with river directions determined by the steepest descent (Riddick, 2021). The physical, biogeophysical, and biogeochemical processes included in JSBACH are described in Reick et al. (2021) for JSBACH version 3.2. These processes are leaf phenology, dynamical vegetation, photosynthesis, carbon and nitrogen cycles, natural disturbances of vegetation by wind and fires, and natural and anthropogenic land cover change. New features in JSBACH 4 are the inclusion of freezing and melting of water in the soil, a multi-layer snow scheme (Ekici et al., 2014; de Vrese et al., 2021), and the possibility to compute the soil properties as a function of the soil water and organic matter content. Land surface processes are called at the same time as the main atmospheric processes (see Fig. 3) and integrated on the same grid. From an infrastructure point of view, JSBACH 4 has been newly designed in a Fortran 2008 object-oriented, modular, and flexible way.

Given the targeted horizontal resolution of ICON-Sapphire, there is no subgrid-scale heterogeneity in vegetation type. Only one vegetated tile is used, characterized by a vegetation ratio that gives the area coverage of vegetation over that tile, implicitly accounting for the presence of bare soil on the vegetated tile. A land cell can nevertheless be split between lake and vegetated or be fully covered by a glacier. Lakes are fractional but are not allowed in coastal cells. The surface temperature of lakes is computed by a simple mixed layer scheme including ice and snow on lakes (Roeckner et al., 2003). In coastal regions, fractional land is permitted.

In this study, JSBACH has been used in a simplified configuration that only interactively simulates the physical land surface processes, as in weather forecasts or in traditional general circulation models. Leaf phenology is prescribed following a monthly climatology. Interactive leaf phenology has been employed in Lee et al. (2022a) with idealized radiative convective equilibrium simulation conducted using a grid spacing of 2.5 km. Also, the hydrological discharge model was turned off. Initialization of the hydrological discharge model reservoirs using remapped values taken from a prior MPI-ESM simulation failed. With this approach, unrealistically large discharge values occurred during the first 3 months, indicative of a poor initialization. Moreover, the discharge of a river is dumped into the uppermost ocean layer in a single cell, which is an unrealistic assumption with kilometer grid spacings. By running an offline version of the hydrological model to quasi-equilibrium to obtain better initial states of the reservoirs and by redistributing the discharge over multiple ocean cells, the mentioned problems could be solved. Newer simulations conducted with ICON-Sapphire include river discharge.

2.3 Ocean

The ocean model ICON-O (see Korn, 2018; Korn et al., 2022) solves the hydrostatic Boussinesq equations, the classical set of dynamical equations for global ocean dynamics. The time stepping is performed using a semi-implicit Adams–Bashforth-2 scheme in which the dynamics of the free surface are discretized implicitly. ICON-O evolves the state vector consisting of the horizontal velocity normal to the triangle edges, surface elevation, potential temperature, and salinity. The UNESCO-80 formulation is employed as equation of state to compute density given potential temperature, salinity, and depth. ICON-O uses, as the atmosphere, an icosahedral–triangular C grid. However, in contrast to the atmosphere, the global grid can be locally refined to create a “computational telescope” (Fig. 2b) that zooms into a region of interest. The numerics of ICON-O share similarities to the atmosphere component but also have important differences. Both components use a mimetic discretization of discrete differential operators, but ICON-O uses the novel concept of Hilbert-space compatible reconstructions to calculate volume and tracer fluxes on the staggered ICON grid (see Korn, 2018). The transport of the oceanic tracers potential temperature and salinity is performed using a flux-corrected transport scheme with a Zalesak limiter and a piecewise-parabolic reconstruction in the vertical direction, analogous to what is done for the atmospheric tracers. The numerical scheme of ICON-O allows for generalized vertical coordinates: of particular importance here are the depth-based z and z* coordinates. In the z-coordinate system, only the sea surface height varies in time. Since the surface height is added to the thickness of the top grid cell, the combination of a thin surface grid cell with a strong reduction of sea surface height can lead to a negative layer thickness, creating a model blow-up. This problem is avoided by using the z* coordinate. In this case, the changing domain of the ocean is mapped onto a fixed domain but with vertical coordinate surfaces that change in time. For the simulations presented in this study, the z coordinate was used.

Given the fine resolution of ICON-Sapphire, only a subset of the parameterizations available in ICON-O and described in detail in Sect. 2.2 of Korn et al. (2022) are used, namely a parameterization for vertical turbulent mixing and one for velocity dissipation. The parameterization of turbulent vertical mixing relies on a prognostic equation for turbulent kinetic energy and implements the closure suggested by Gaspar et al. (1990), wherein a mixing length approach for the vertical mixing coefficient for velocity and oceanic tracers is used. For velocity dissipation (or friction), either a “harmonic” Laplace, a “biharmonic” iterated Laplace operator, or a combination of the two can be used. The viscosity parameter can be set to a constant value, scaled by geometric grid quantities such as edge length of triangular area, or computed in a flow-dependent fashion following the modified Leith closure in which the viscosity is determined from the modulus of vorticity and the modulus of divergence (Fox-Kemper and Menemenlis, 2008). The calling order between dynamics, physics, and transport is illustrated in Fig. 3.

The sea ice model is part of ICON-O. It consists of a dynamic and a thermodynamic component. Sea ice thermodynamics describe freezing and melting by a single-category, zero-layer formulation (Semtner, 1976). The current sea ice dynamics are based on the sea ice dynamics component of the Finite-Element Sea Ice Model (FESIM) (Danilov et al., 2015). The sea ice model solves the momentum equation for sea ice with an elastic–viscous–plastic (EVP) rheology. The configuration of the sea ice model with an EVP rheology and single-category instead of multi-category sea ice thermodynamics was chosen because of computational efficiency considerations. Recent work (e.g., Wang et al., 2016) suggests that the EVP rheology provides at a high resolution of 4.5 km a good compromise between physical realism and computational efficiency. Since ICON-O and FESIM use different variable staggering, a wrapper is needed to transfer variables between the ICON-O grid and the sea ice dynamics component (see Korn et al., 2022). A new sea ice dynamics model has been developed to bypass these limitations (see Mehlmann et al., 2021; Mehlmann and Korn, 2021) and will be employed in the future.

The ocean biogeochemistry component is provided by HAMOCC6 (Ilyina et al., 2013). It simulates at least 20 biogeochemical tracers in the water column, following an extended nutrient, phytoplankton, zooplankton, and detritus approach, also including dissolved organic matter, as described in Six and Maier-Reimer (1996). It also simulates the upper sediment by 12 biologically active layers and a burial layer to represent the dissolution and decomposition of inorganic and organic matter as well as the diffusion of pore water constituents. The co-limiting nutrients consist of phosphate, nitrate, silicate, and iron. A fixed stoichiometry for all organic compounds is assumed. Phytoplankton is represented by bulk phytoplankton and diazotrophs (nitrogen fixers). Particulate organic matter (POM) is produced by zooplankton grazing on bulk phytoplankton and enters the detritus pool. Export production is separated explicitly into CaCO3 and opal particles. The POM sinking speed is calculated using the Microstructure, Multiscale, Mechanistic, Marine Aggregates in the Global Ocean (M4AGO) scheme (Maerz et al., 2020). The time stepping and horizontal grid are as in ICON-O.

2.4 Coupling

For the coupling between the atmosphere and the ocean (see Figs. 1 and 3), we use YAC (Hanke et al., 2016) version 2.4.2. This version has been completely rewritten. In particular, each MPI process now only has to provide its own local data to the coupler without any information about MPI processes containing neighboring partitions of the horizontal grid. Furthermore, the internal workload is now evenly distributed among all source and target MPI processes.

The atmosphere provides to the ocean the zonal and meridional components of the wind stress separately over ocean and sea ice given their different drag coefficients, the surface freshwater flux as rain and snow over the whole grid cell, and evaporation over the ocean fraction of the cell, shortwave and longwave radiation, latent and sensible heat fluxes over the ocean, sea ice surface and bottom melt potentials, the 10 m wind speed, and sea level pressure. The ocean provides the sea surface temperature, the zonal and meridional components of velocity at the sea surface, the ice and snow thickness, and ice concentration. The interpolation of the wind is done using the simple one-nearest-neighbor method when the grid of the ocean and of the atmosphere matches. For more complex geometries, the wind is interpolated using Bernstein–Bézier polynomials following Liu and Schumaker (1996). We use the so-called interpolation stack technique of YAC and apply a four-nearest-neighbor interpolation to fill target cells with incomplete Bernstein–Bézier interpolation stencils. All other fields are interpolated with a first-order conservative remapping. The land–sea mask is determined by the ocean grid. The coupler and our coupling strategy are designed to conserve fluxes.

2.5 Input/output

External data and initial conditions are interpolated onto the horizontal grid at a preprocessing step. Vertical interpolation is done online during the model simulation. Concerning the land surface, required external physical parameters are based on the same data set (Hagemann, 2002; Hagemann and Stacke, 2015) as used in the past in the MPI-ESM (Mauritsen et al., 2019). These data sets have an original grid spacing of 0.5∘ × 0.5∘. For experiments at kilometer scales, this is not optimal and work to derive these parameters directly from high-resolution data sets is underway. The orography is nevertheless derived from the Global Land One-km Base Elevation Project (GLOBE), which has a nominal resolution of 30′′, so about 1 km. Likewise, the bathymetry has a 30′′ resolution, as provided by the Shuttle Radar Topography Mission (SRTM30_PLUS) data set (Becker et al., 2009). In the atmosphere, for the simulations presented in this study, global mean concentrations of greenhouse gases are set to their values of the simulated year, with values taken from Meinshausen et al. (2017). Ozone varies spatially on a monthly timescale. The data set is taken from the input data sets for model intercomparison projects (input4MIPS) with a resolution of 1.875∘ by 2.5∘ (latxlon), and the year 2014 is chosen as default. Aerosols are specified from the climatology of Kinne (2019), which provides monthly data on a 1∘ grid.

Initial conditions for the atmosphere are derived from the European Centre for Medium-Range Weather Forecasts (ECMWF) operational analysis for the chosen start date. Initial conditions for soil moisture, soil and surface temperatures, and snow cover are also derived from the ECMWF analysis. The soil is not spun up as it is unclear how to best do this in global climate storm-resolving simulations. Analysis of the output of the global coupled 5 km simulation nevertheless reveals that four of the five soil layers are already equilibrated after 7 simulation months in the northern extratropics, defined from 30 to 60∘ N, and three of them in the tropics in the sense that the continuous drying of the soil since simulation start has stopped. In contrast, all the ocean prognostic variables are spun up. The ocean initial state is taken from a spun-up ocean model run with climatological forcing. As the spin-up methodology slightly differs between the different experiments presented in this study, it is described for each simulation individually in more detail in Sect. 4 where the simulation results are presented.

ICON-Sapphire uses asynchronous parallel input/output because efficient parallel I/O is the main performance bottleneck. Moreover, the size of a single 3D variable is too big to fit into the memory of one CPU. The model calculations are carried out in parallel and the output is distributed across the local memories of the cluster's CPUs. The employed approach is to hide the overhead introduced by the output behind the computation (“I/O forwarding”). By using dedicated I/O processes, the simulations can be further integrated in time while dedicated CPUs handle the I/O. Moreover, reading and writing files in parallel greatly reduces the time spent on I/O (see Sect. 3).

One challenge of kilometer-scale simulations is the amount of output generated. At 2.5 km, the atmosphere contains 83 886 080 cells at each of the 90 vertical levels of our set-up, and the ocean contains 59 359 799 cells and 112 levels. The output has mostly been analyzed using Jupyter notebooks and Python, with which efficient ways to analyze the output directly on the unstructured grid have been developed. As part of ICON-Sapphire activities and of the next Generation Earth Modelling Systems (nextGEMS) project, an e-book has been developed as a community effort, providing information on the simulations as well as hints and example scripts. The e-book can be found at https://easy.gems.dkrz.de (last access: 17 January 2023). An important tool for selecting a subset of the output or in general for reformatting and transforming variables is the Climate Data Operators (CDO), which are developed at MPI-M (Schulzweida, 2022). Calculations are performed in CDO with double precision, whereas the output format of ICON-Sapphire simulations (see next section) is single precision. To reduce the memory requirement, single-precision 32 bit data are now mapped directly in memory without conversion. This reduces the memory requirement for memory-intensive operations by a factor of 2. New operators have also been created to support unstructured grids, e.g., selcircle, selregion, maskregion, and sellonlatbox; see the latest release of the CDO documentation for more information (Schulzweida, 2022).

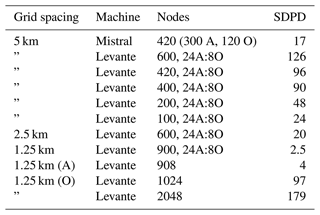

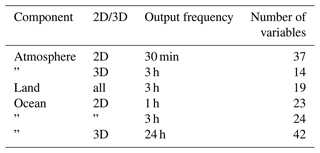

Table 1 focuses on the performance of the global coupled simulations conducted with ICON-Sapphire as those are the type of simulations that ultimately should be integrated on multi-decadal timescales, and Table 2 summarizes the type of variables outputted for one simulation. In ICON terminology (Giorgetta et al., 2018), the considered grid spacings are R2B9 and R2B10, which approximately correspond to grid spacings of 5 and 2.5 km, respectively. Note that on the unstructured ICON grid, all cells have approximately the same area, and the grid spacing in kilometers corresponds to the square root of the mean cell area. The specific settings of the simulations are summarized in Table 3 and described in more detail in the next section when we present the simulation results. For the 5 km simulation, we write about 40 TB of output per month (uncompressed) using NetCDF-4 and 32 bit as output format. The output storage increases to 135 TB per month for the 2.5 km simulation, making it clear that new output strategies have to be developed for longer-term simulations.

The production simulations have been performed on the supercomputer Levante of the German Climate Computing Center (DKRZ). Levante was ranked 86 in the top 500 list in November 2021 (time of installation) and replaced the previous machine Mistral. Table 1 indicates that ICON-Sapphire scales very well with node number. Increasing the node number by a factor of 6, from 100 to 600 nodes, leads to a factor of 5.25 increase in simulated days per day (SDPD) for the simulation with 5 km grid spacing on Levante. The scaling starts breaking when using more than 400 nodes. Going from the old machine Mistral to the new machine Levante leads to a 5.6× increase in SDPD for the same number of nodes. There are two reasons for this increase. From a technical point of view, we expect a gain in throughput by roughly a factor of 4 from the increase in the number of processors per node (128 on Levante versus 32 on Mistral). The additional gain is attributed to a more economic use of resources due to the introduction of asynchronous ocean output, which became available only recently.

Making use of these various advantages, Table 1 reveals that a throughput of 126 SDPD is achieved on Levante for a grid spacing of 5 km when using 600 nodes, or 21 % of the compute partition. This is slightly larger than the minimum throughput of 100 SDPD considered to be needed for climate simulations to be useful (see introduction). With a grid spacing of 2.5 km, a throughput of 20 SDPD is obtained on 600 nodes. On the one hand, it is slightly better than the theoretically expected 8-fold decrease in performance due to the doubling in grid spacing. On the other hand, it is presently still too small to allow conducting climate simulations at such a high resolution. At best, a throughput of 100 SDPD would be obtained if using the whole Levante and assuming a perfect scaling.

Instead of CPUs, GPUs can be used to obtain a better throughput. GPUs are available on the supercomputer JUWELS Booster at the Jülich Supercomputing Centre. One JUWELS Booster node is about 0.05 PFlops compared to 0.003 PFlops for one Levante node in terms of their linpack benchmarks. Hence, in theory, we would expect a performance increase of 17 going from Levante to JUWELS Booster, giving a throughput of almost 1SYPD for the 2.5 km configuration. Giorgetta et al. (2022) compared atmosphere-only 5 km simulations conducted with ICON on JUWELS Booster (with GPU) and on Levante (with CPU) using a slightly different model configuration than our ICON-Sapphire set-up, consisting of a different turbulence scheme and using RTE-RRTMGP as radiation scheme. In their study, the performance increase is closer to 8 (see their Table 3). This would mean that 1 SYPD would be feasible on a 68 PFlops machine, a machine 1.5 times the size of JUWELS Booster. Such a throughput would now be possible on petascale machines such as Europe's most powerful supercomputer LUMI in Finland with 150 PFlops. GPUs are interesting not only because of their performance increase but also because of their low energy consumption. JUWELS Booster gets about 50 PFlops per MW, whereas Levante gets about 4.6 PFlops per MW. So even if JUWELS Booster is less productive than expected from the number of PFlops per node, it still does 5 times as much throughput per watt than Levante.

A grid spacing of 1.25 km (R2B11) is the highest grid spacing that has currently been tested with the coupled ICON-Sapphire configuration. It has been integrated for 5 d, with a throughput of 2.5 SDPD (see Table 1). This number could be improved by a better load balancing between the ocean and atmosphere as currently the ocean waits upon the atmosphere. The atmosphere-only version of ICON-Sapphire has been run at 1.25 km on Levante on 908 nodes for 3 d, giving a throughput of 4 SDPD. Also, an ocean-only version of ICON-Sapphire has been run at 1.25 km on Levante. The throughput is 97 SDPD on 1024 nodes and 179 SDPD on 2048 nodes, which is 15 % short of the expected perfect scaling (194 SDPD on 2048 nodes). These numbers indicate that the ocean also begins to become a bottleneck to reach 1 SYPD with a grid spacing of 1.25 km. Although these numbers are by far too low to allow multi-decadal simulations, even on a machine like LUMI, seasonal timescales are in principle already possible on a global scale and with a grid spacing of 1.25 km using ICON-Sapphire.

Table 1Performance of global coupled configuration runs with a grid spacing of 5 and 2.5 km, called G_AO_5km and G_AO_2.5km in Table 3 summarizing the setting of all simulations, and with a grid spacing of 1.25 km. The performance at 1.25 km is also given for an atmosphere-only (A) and ocean-only (O) configuration as the throughput of the coupled simulation has not been fully optimized yet. Under nodes, “A” stands for atmosphere and “O” for ocean. On Levante, the A and O numbers give the load balancing, and on Mistral they directly give the number of processors. The specifications of Mistral and Levante nodes are as follows. Mistral node: 2x 18-core Intel Xeon E5-2695 v4 (Broadwell) 2.1GHz, connected with Fourteen Data Rate (FDR) Infiniband; Levante node: 2x AMD 7763 CPU; 128 cores.

Table 2Overview of the output of the simulation with a grid spacing of 5 km, called G_AO_5km in Table 3.

In this section, we present key examples of simulations conducted with ICON-Sapphire to illustrate its intended abilities. The simulation settings are summarized in Table 3. As will be shown, the simulated fields agree to a satisfactory degree with expectations and observations, even though we identify aspects of the climate system that remain problematic to capture and would require further tuning of ICON-Sapphire for improvement. In addition, the versatility and good scalability of ICON-Sapphire allow its use in a variety of set-ups at the forefront of exascale climate computing. Extrapolating from these results, ICON-Sapphire now allows Earth system simulations with interactive carbon with a grid spacing of 10 km for multi-decadal timescales. At the other end of the spectrum, ICON-Sapphire allows studying the effects of submesoscale eddies in the ocean by employing its telescope feature and large eddies in the atmosphere by employing its limited-area ability on the transport of energy and matter.

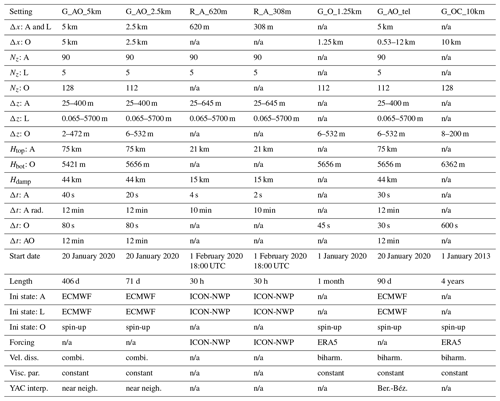

Table 3Summary of simulation settings. More information on the employed external data is in Sect. 2.5 and on the ocean spin-up in Sect. 4 in the respective subsections. Abbreviations A, L, O, and C are for atmosphere, land, ocean, and carbon; n/a is for not applicable, Δx for the horizontal grid spacing, Δz for the vertical grid spacing, Nz for the number of vertical levels, Htop for the domain top, Hbot for the ocean bottom, Hdamp for the height at which damping starts in the atmosphere, and Δt for the time step. Note that in the atmosphere and on land, Δz increases with height, which is not the case in the ocean where the top layer has a thickness of 7 m. “Forcing” indicates the simulation providing the lateral boundary conditions in the limited-area atmosphere-only simulations and the simulation providing the atmospheric forcing in the ocean-only simulations. Given the strong vertical stretching in the upper atmosphere, the indicated Δz in the atmosphere is for layers below 14 km.

4.1 Simulating the coupled ocean–atmosphere system globally on seasonal timescales at 5 and 2.5 km

In this section, we present results from global coupled simulations conducted with ICON-Sapphire employing a uniform grid spacing of 5 and 2.5 km, referred to as G_AO_5km and G_AO_2.5km for global atmosphere–ocean simulation with the suffix indicating the grid spacing (see Table 3 and Fig. 2a). The length of the simulation periods is unique, with G_AO_5km integrated for more than 1 year from 20 January 2020 to 28 February 2021 and G_AO_2.5km for 72 d from 20 January 2020 to 30 April 2020 to at least cover the time period of the new winter DYAMOND intercomparison study. The necessary initial and external data are listed in Sect. 2.5. The ocean is spun up by conducting an ocean-only simulation. We start with a grid spacing of 10 km and use the Polar science center Hydrographic Climatology 3.0 (PHC) observational data set (Steele et al., 2001) to initialize all ocean fields. That simulation is run for 1 year 25 times using the Ocean Model Intercomparison Project (OMIP) atmospheric forcing by Röske (2006). Then the simulation is forced from 1948 to 1999 by the National Centers for Environmental Prediction (NCEP) reanalysis (Kalnay et al., 1996) and from 2000 to 2009 by the ECMWF reanalysis ERA5 (Hersbach et al., 2020). The obtained state serves as a new initial state for a 5 km ocean-only simulation forced by ERA5 and integrated over the time period 2010 to 20 January 2020. This end state is interpolated onto the 2.5 km grid to obtain the initial state of G_AO_2.5km. In all the simulations, sea surface salinity is relaxed towards observed 10 m salinity of the PHC data set with a time constant of 3 months. Through this initialization technique, the ocean is spun up and already entails mesoscale eddies without having to use a multi-decadal spin-up at 2.5 km, which is computationally too expensive. The conducted long spin-up gives some confidence that the ocean model is working correctly. Comparing the last full month of the spin-up (December 2019) to the Ocean Reanalysis System 5 (ORAS5, Zuo et al., 2019), the global mean SST bias is −0.53 K. The SST is consistently too cold except along 60∘ S, north of 50∘ N, and along the western coast of North America. Due to the relaxation, the salinity is well captured except in the Arctic Ocean, with the latter showing a bias of more than 1 g kg−1.

Despite the uniqueness of the integration period for such simulations, the shortness of the integration period makes it challenging to validate the simulations as we cannot expect to reproduce one particular observation year. As such, we will not only consider the mean climatology from observations, but include year-to-year variability in the analysis. Also, the integration period remains too short to demonstrate at the outset that the ocean model works correctly given its slow dynamics. As a consequence, we will focus the ocean validation on variables that couple more strongly to the atmosphere and react fast. The ocean validation merely intends to show that no obvious bias exists, as for many scientific questions, e.g., related to the seasonal migration of tropical rain belts, effects of instability waves on air–sea fluxes, or interactions between ocean, atmosphere, and ice as shown in some of the examples below, an integration period of 1 year is sufficient. Despite the long experience of running ICON at low resolution in a coupled mode (Jungclaus et al., 2022), it is not a given that the ocean works and couples correctly to the atmosphere. In fact, several bugs were discovered during the development phase of the coupled high-resolution configuration, in particular bugs related to the momentum coupling between the ocean and atmosphere.

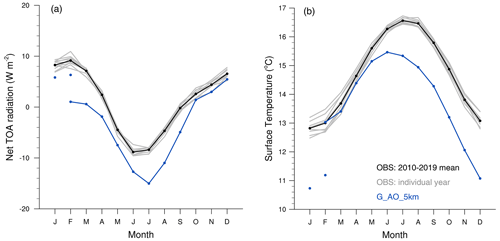

Figure 4Seasonal cycle in (a) net TOA radiation and (b) surface temperature from observations and G_AO_5km. Observations in (a) are from CERES-EBAF (Clouds and the Earth's Radiant Energy System–Energy Balanced and Filled, Loeb et al., 2018) version 4.1 with a grid spacing of 1∘ and in (b) from HadCRUT (Hadley Centre/Climatic Research Unit Temperature, Morice et al., 2021) version 5.0 with a grid spacing of 5∘. For G_AO_5km, the solid marked line shows the months in 2020 and the two dots in 2021.

4.1.1 5 km results

As ESMs aim to represent the flow of energy and matter in the Earth system, we start our validation with energy. Figure 4a displays the seasonal cycle in the net top-of-the-atmosphere (TOA) energy. Too much energy leaves the Earth system in G_AO_5km during the first half of the year, by 3 to more than 6 W m−2 compared to observations. The simulation adjusts with time and better matches the observations from September 2020 to February 2021 with a remaining underestimation between 1 and 3 W m−2. Overall, this leads to a yearly imbalance of −4 W m−2 in G_AO_5km. This imbalance results from an overly high reflection of shortwave radiation by 7.3 W m−2, which is partly compensated for by corresponding outgoing longwave radiation that is too weak by 3.3 W m−2. The bias in shortwave radiation is due to too much reflection over oceans (11 W m−2), whereas the land shows too little reflection (by 3.7 W m−2). The differences are especially prominent over tropical oceans in regions of shallow convection and stratocumulus, which exhibit cloud cover that is too large (not shown). This is expected from storm-resolving simulations whose grid spacings remain too coarse to properly resolve shallow convection (e.g., Hohenegger et al., 2020; Stevens et al., 2020a). The consequence is a spurious and strong increase in cloud cover the coarser the grid spacing is. In contrast, the bias in outgoing longwave radiation is more prominent over the extratropics, especially over the Northern Hemisphere extratropics, with a deviation of 6 W m−2 against 3 W m−2 in the Southern Hemisphere extratropics and 2 W m−2 in the tropics. The simulated outgoing longwave radiation that is too weak is in agreement with the Northern Hemisphere extratropics being much colder (and cloudier) than observations, a reflection of their larger land fraction (see further below).

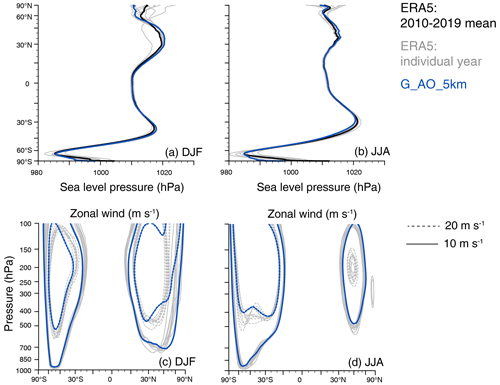

Figure 5Meridional cross-sections of zonally averaged sea level pressure (hPa) for (a) DJF and (b) JJA as well as height–latitude cross-sections of zonal wind velocity (m s−1) for (c) DJA and (d) JJA from ERA5 (grid spacing of 30 km) and G_AO_5km. In G_AO_5km, DJF is the mean from December 2020 to February 2021.

As explained in Mauritsen et al. (2012), it is common practice in ESMs to tune the radiation balance to adjust uncertain parameters related to unresolved processes. Given the obtained yearly imbalance of −4 W m−2, this need to adjust parameter values remains true at 5 km. However, known tuning knobs of coarse-resolution simulations, such as entrainment rates of convective parameterizations or cloud inhomogeneity parameters, either do not exist anymore or were not as efficient as at low resolution. A promising route to tune the TOA imbalance in ICON-Sapphire, which is currently under investigation, is to slightly adapt the mixing formulation in the Smagorinsky scheme. Our current formulation sets the eddy diffusivities to zero if the Richardson number is greater than the eddy Prandtl number. This cutoff unrealistically inhibits mixing because of well-known limitations of the Smagorinsky scheme in simulating the transition to turbulence (Porte-Agel et al., 2000) and because of a failure to incorporate the effect of moist processes. As a result, over cold and moist surfaces, insufficient ventilation of the boundary layer occurs, causing moisture to build up and resulting in excessive low clouds. In ongoing experiments, we have explored adding a small amount of background mixing at interfaces between saturated and unsaturated layers where the equivalent potential temperature decreases upward, mimicking the effects of buoyancy reversal (Mellado, 2017). Low clouds respond sensitively to this background mixing. Adapting the amount of this background mixing provides a convenient tuning knob on the quantity of low clouds and their influence on the top-of-the-atmosphere energy budget.

Complicating the issue, several areas where energy is not conserved have been identified: the dynamical core unphysically extracts energy from the flow at a rate of about 8 W m−2, and precipitation is an unphysical source of energy. The former has been documented by Gassmann (2013). The latter arises because hydrometeors are assumed to have the temperature of the cell in which they are found. Because this assumption neglects the cooling of the air that accompanies the precipitation through a stratified atmosphere, it acts as an internal energy source of roughly (and coincidentally) equal magnitude as the dynamic sink. This explains why these energy leaks, which are also present in ICON-ESM, were not discovered previously. Moreover, minor energy leaks related to phase changes in the constant volume grid not conserving internal energy, as well as to an inconsistent formulation of the turbulent fluxes, have been discovered. Fixes for all of these problems have been identified and are being implemented.

Given that the Earth system is losing too much energy, a cooling of the global mean surface temperature is expected (Fig. 4b). The mean values are 286.5 K in G_AO_5km against 287.9 K in observations. Biases are stronger over land than over oceans, with a mean bias of −1.7 K over land versus −0.6 K over oceans, likely reflecting the smaller heat capacity of the land surface (see also Fig. 5f in Mauritsen et al., 2022, for an overview of the spatial distribution of temperature biases during JJA).

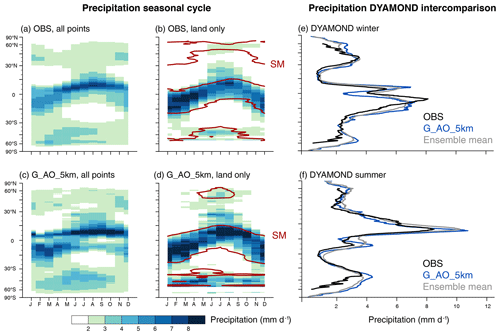

Figure 6Seasonal evolution of zonal mean precipitation (shading) from (a–b) observations and (c–d) G_AO_5km. In panels (b) and (d), only land points are included with the soil moisture (SM) contour overlaid in brown (1.5 cm in b and 1 cm in d given their distinct magnitude). Observations are from the Global Precipitation Climatology Project (GPCP, grid spacing of 2.5∘, Adler et al., 2018) and from the European Space Agency Climate Change Initiative (ESACCI, Dorigo et al., 2017) soil moisture (grid spacing of 0.25∘) averaged over the years 2010–2019. For G_AO_5km the January and February values are from 2021. Panels (e)–(f) show the zonal mean precipitation from (e) the DYAMOND winter and (f) DYAMOND summer intercomparison study including the ensemble mean of participating models.

ICON-Sapphire explicitly represents the modes of energy transport. In the atmosphere, storms (baroclinic eddies) dominate the energy transport in the extratropics, whereas atmospheric convection dominates the vertical energy transport in the tropics. We thus now investigate the representation of the large-scale circulation and precipitation. Figure 5a and b show meridional cross-sections of zonally averaged sea level pressure in G_AO_5km and reanalysis for winter and summer. Except for sea level pressure over Antarctica that is too low, the simulated sea level pressure lies within the internal variability from the reanalysis. The location and strength of the midlatitude low-pressure systems and of the subtropical high-pressure systems match ERA5, and their expected seasonal migration and intensification is reproduced. Similarly good agreement is obtained by looking at the jets (see Fig. 5c–d). Both the location and intensity of the jet in the winter hemisphere lie within internal variability. The position of the jet in the summer hemisphere is also well captured, but the simulated jet is weaker than the jet in the reanalysis, consistently so in the Northern Hemisphere.

Figure 6 shows the observed and simulated seasonal cycle in precipitation. The bands of enhanced precipitation within the extratropical storm tracks can be recognized together with their seasonal cycle. Averaged over the midlatitudes, defined from 30∘ poleward, precipitation amounts to 2.37 mm d−1 in G_AO_5km against 2.31 mm d−1 for the 10-year observational mean and is larger than any of the observed yearly mean values by at least 0.03 mm d−1. Likewise, the migration of the tropical rain belt is generally captured in the zonal mean (see Fig. 6a and c), although the Equator stands out in G_AO_5km with too little precipitation. This leads to the formation of a double Intertropical Convergence Zone (ITCZ) over the western Pacific with two parallel precipitation bands; the southern band is too zonal, reaching too far east and remaining south of the Equator even during boreal summer (see Fig. 6a and c). This bias is linked to SSTs that are too low at the Equator, as shown in Segura et al. (2022) in their detailed assessment of the representation of tropical precipitation in G_AO_5km. Over land (Fig. 6b and d), in contrast, the seasonal migration of the tropical rain belt is very well reproduced, but the amounts are overestimated. Tropical mean precipitation amounts over land are 3.6 mm d−1 in G_AO_5km and 3.11 mm d−1 in observations for the 10-year mean, with no observed yearly value larger than 3.30 mm d−1.

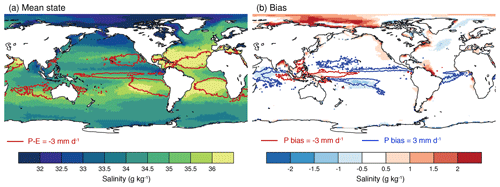

Figure 7Salinity (g kg−1) from G_AO_5km as (a) a temporal mean and (b) bias relative to observations overlaid with contour lines representing P−E in (a) and precipitation bias in (b). Observations from PHC (grid spacing of 1∘) for salinity and from GPCP for precipitation. For G_AO_5km, the time period 1 March 2020 to 28 February 2021 is considered.

Hence, ICON-Sapphire overestimates precipitation amounts at least over the tropics. This remains true in the global mean, with an overestimation by 0.4 mm d−1. The overestimation is indicative of a radiative cooling of the atmosphere that is too strong. However, even on the observational side, existing discrepancies between observed net radiation and observed precipitation suggest that precipitation amounts may in reality be larger than what observational precipitation data sets are suggesting (Stephens et al., 2012). Despite these apparent discrepancies, the simulated precipitation is close to the mean precipitation as derived from the ensemble of storm-resolving models participating in the DYAMOND intercomparison study, as shown in Fig. 6e and f. As the DYAMOND models employ prescribed SST derived from ECMWF analysis, the good agreement gives us confidence in the behavior of G_AO_5km. The most noticeable difference, which is clearly out of the ensemble mean, is the underestimation of precipitation at the Equator during DYAMOND winter.

Explicitly representing convection is important not only for explicitly representing one of the main modes of energy transport in the tropics but also for explicitly simulating the transport of water that is important for the biosphere. The brown contour line in Fig. 6b and d depicts the seasonal cycle in soil moisture. Not surprisingly, in the tropics, it matches the migration of the tropical rain belt very well, as also found in observations. In the northern extratropics, the moistening of the soil during summertime seems consistent with the simulated enhanced precipitation, but is inconsistent with observations. This inconsistency may be related to the difficulty of measuring soil moisture during winter due to the presence of ice and snow. In any case, the hydrological budget over land is closed in ICON-Sapphire. As a second main discrepancy, soil moisture is consistently lower in G_AO_5km than in observations and gets drier with time.

The effect of precipitation on the ocean is investigated by comparing salinity and precipitation minus evaporation (P−E) patterns as a sanity check (Fig. 7a). As expected, P−E imprints itself on salinity to a first order (Fig. 7a). Areas with evaporation much stronger than precipitation are more salty. Biases in salinity are small with values smaller than 0.5 g kg−1 except in the western Arctic (Fig. 7b). The latter bias was already present in the spin-up. Interestingly, and even though we are comparing climatological to yearly values and observations from different sources, there seems to be a good correlation in the tropics between precipitation biases and salinity biases, especially in areas receiving too much precipitation. Positive localized salinity biases can also be recognized at the mouth of big rivers, like the Amazon, the Mississippi, or the Congo, which is a signature of not having freshwater discharge into the salty ocean (see Sect. 2.2). Being related to the absence of river discharge and to the simulated precipitation pattern, the pattern of the salinity bias in the tropics is distinct from the one at the end of the spin-up period.

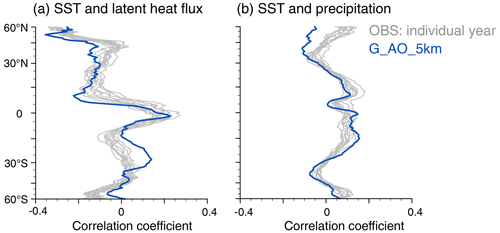

Figure 8Meridional cross-section of zonally averaged correlation between SST and (a) latent heat flux and between SST and (b) precipitation. Observed SST from the Optimum Interpolation SST (OISST, grid spacing 0.25∘, Reynolds et al., 2018), latent heat flux from the Objectively Analyzed air–sea flux (OAFlux_V3, grid spacing 1∘), and precipitation from the Integrated Multi-satellitE Retrievals for Global Precipitation Measurement (IMERG, grid spacing 0.1∘). Daily values are used.

The coupling between the ocean and the atmosphere is further investigated in Fig. 8 by computing correlations between SST, latent heat flux, and precipitation at each grid point and averaging these values zonally. As shown in Wu et al. (2006), the investigated coarse-resolution climate model simulations struggle to capture basic features of air–sea interactions, with an especially widespread positive correlation between SST and precipitation, whereas observations show clear meridional differences in the correlation between SST and precipitation (see their Fig. 3). As shown by Fig. 8, G_AO_5km reproduces the order of magnitude of the correlation as well as its meridional variations between SST and latent heat flux and between SST and precipitation. The main bias is a positive correlation between SST and latent heat flux in the Southern Hemisphere subtropics, which is at odds with the observed negative correlation. Concerning precipitation, even though the strength of the simulated coupling between SST and precipitation is within observed individual years, it tends to fall either on the weak or on the strong side. The exception is in the higher northern latitudes that have a negative correlation between SST and precipitation, which is opposite to the positive correlation seen in observations.

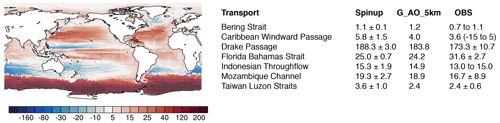

Figure 9Barotropic streamfunction (Sv) with associated major transports that can be computed from the streamfunction. Observations for the historic period are taken from Table J2 of Griffies et al. (2016) except for the Drake Passage, which is taken from a more recent estimate (Donohue et al., 2016). In the table, the spin-up values are averaged over the years 2015–2021 with 1 standard deviation indicated.

The dynamical coupling between the atmosphere and the ocean is reflected in the wind-driven circulation, which responds fairly fast when coupling the ocean component to the atmosphere component. This circulation is described by the barotropic streamfunction (see Fig. 9) with positive values indicating clockwise flows. G_AO_5km simulates the main features of the wind-driven circulation: the subtropical and subpolar gyres in the North Atlantic and the strong circumpolar currents in the Southern Ocean. When considering the mass transports through major transects, the simulated transports compare reasonably well with those found in observations. Compared to the mean transport obtained in the spin-up, the transport, with the exception of the Bering Strait, is weaker, with values out of 1 standard deviation for all the passages but the Indonesian Throughflow and the Mozambique Channel. Except for the Florida–Bahamas strait, the weaker transport of G_AO_5km is in better agreement with observations. The weaker transport is consistent with weaker wind stress in G_AO_5km compared to the spin-up simulation, also expressed in a weaker barotropic streamfunction (not shown).

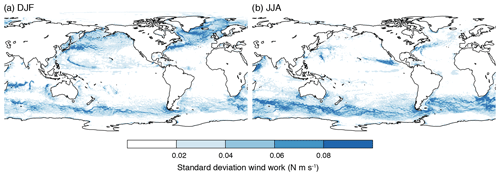

The dynamical coupling between the atmosphere and the ocean is also reflected in the variability of wind work (Fig. 10), which is defined as the product of surface wind stress and surface ocean currents (Wunsch, 1998). In eddy-rich regions such as the Gulf Stream, the Kuroshio current, the Agulhas Return Current, the Brazil–Malvinas confluence, and the Southern Ocean, strong dynamic interaction is seen year-round and is stronger in their respective winter hemisphere. The shift in direction associated with monsoonal winds induces the seasonal (boreal summer) Great Whirl off the Somali coast that is also associated with pronounced ocean–atmosphere interactions, which may affect the Indian summer monsoon (Seo, 2017). While this has been simulated in a regional coupled model (Seo et al., 2007), global storm-resolving configurations like G_AO_5km enable us to investigate even larger-scale effects. Tropical cyclone tracks are also visible, for instance off the coast of Central America in the eastern Pacific in JJA. The total wind work displayed by Fig. 10 is 3.21 TW. In comparison, Flexas et al. (2019) estimated 4.22 TW for their ∘ simulation.

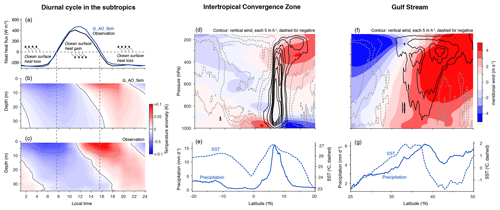

Figure 11(a–c) Composite diurnal cycle of the upper-ocean daily temperature anomaly in G_AO_5km as well as in observations with associated net heat flux at the ocean surface. Observations are from ship data for the net heat flux and from three Slocum gliders for the temperature. Observations and G_AO_5km are averaged between 57–58∘ W and 11.5–14.∘ N and from 21 January–29 February 2020. Panel (d) shows meridional cross-sections of wind together with meridional variations in SST and precipitation in (e) from G_AO_5km averaged over February 2020 through the central–eastern Pacific ITCZ. Panels (f)–(g) are like panels (d)–(e) but over the Gulf Stream. In (g) the SST Equator-to-pole meridional gradient is removed. Zonal averages are taken between 150 and 90∘ W in (d)–(e) and between 60 and 20∘ W in (f)–(g).

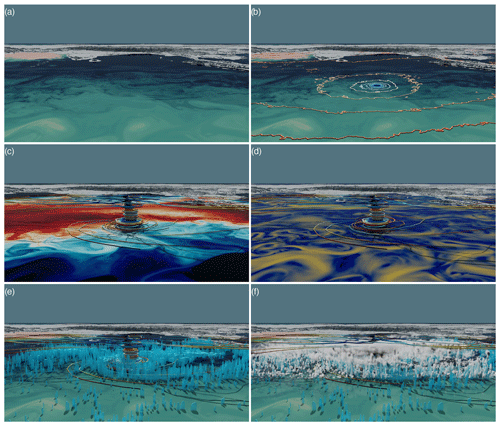

Figure 12A low-pressure system in the Southern Ocean and associated large- and small-scale features, visualized from G_AO_2.5km with (a) salinity, (b) salinity (shading) and sea level pressure (contour lines), (c) wind (streamlines) and surface temperature (shading), (d) wind (streamlines) and ocean velocity (shading), (e) wind (streamlines) and rain (blue shading), and (f) wind (streamline), rain (blue), and cloud (white). The field of view is towards the Bay of Bengal. The tip of India and Sri Lanka can be recognized.

As a final illustration of the coupling between the ocean and the atmosphere, which, for the first time, may not be blurred by the use of a convective parameterization, we show in Fig. 11 three examples taken from different regimes: a subsiding subtropical region coincident with the area of operation of the EUREC4A (ElUcidating the RolE of Clouds–Circulation Coupling in Climate) field campaign (Stevens et al., 2021), a region of deep convection with the Pacific Intertropical Convergence Zone (ITCZ), and the Gulf Stream, the latter motivated by the findings of Minobe et al. (2008). G_AO_5km reproduces the observed diurnal cycle of net heat flux and temperature very well (Fig. 11a, b, c). The onset of ocean cooling and warming phases around 21:00 and 11:00 local time as well as the development of a diurnal warm layer during daytime are well represented in G_AO_5km compared to the observed data. We nevertheless note that the simulated surface warming and cooling do not propagate far enough into the ocean, potentially pointing to too little mixing. Ocean-only simulations conducted at a grid spacing of 5 km indeed reveal a strong sensitivity of the mixed layer depth to the chosen mixing parameter in the turbulent kinetic energy (TKE) mixing scheme, with mixed layers that are generally too shallow over the tropical Atlantic. The cross-section through the ITCZ (Fig. 11d, e) reveals features that can hardly be reproduced with coarse-resolution ESMs: a narrow region of about 5∘ of strong ascent around 6∘ N with associated boundary layer wind convergence and upper-level divergence at the top of the deep convection at around 300 hPa. Precipitation peaks below the convergence, a region which coincides with high SST and strong SST gradients, which are two features favorable for the development of deep convection. Figure 11e also reveals the previously mentioned low SSTs and dip in precipitation at the Equator. Finally, the atmospheric cross-section taken over the Gulf Stream (Fig. 11f, g) also reveals enhanced precipitation under the area of strong vertical motion. The latter sits on the top of the warm water of the Gulf Stream, albeit slightly shifted northward, blending with the jet location.

4.1.2 2.5 km results

The global ocean–atmosphere coupled simulation with a grid spacing of 2.5 km (G_AO_2.5km) employs the same set-up as G_AO_5km, except for its finer grid spacing and, accordingly, reduced time step, as well as for a slightly distinct arrangement of the vertical levels in the ocean (see Table 3). We now show four examples illustrating small-scale features embedded in larger-scale structures that such a model configuration aims to resolve. We also chose the four examples to illustrate interactions involving different components of the Earth system, which is rendered possible with the design of ICON-Sapphire.

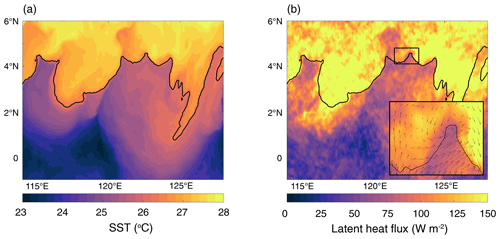

Figure 13Breaking of a tropical instability wave in the Pacific in G_AO_2.5km with (a) SST and (b) latent heat flux. The black line shows the 26.3∘C isoline. Snapshot for 5 February 2020 at 23:00. The inset in (b) shows a blow-up of the region enclosed by the rectangle at about 4∘ N and 122∘E.

Figure 12 focuses on the Southern Ocean. On the small scale, ocean eddies and filaments of enhanced velocity (Fig. 12d) imprint themselves on the salinity (Fig. 12a) and SST (Fig. 12c) fields, as expected. Superimposed on this variability, on the large scale, cold and salty water encounters warm and fresh water, leading to the formation of a large-scale SST front. At this front, a low-pressure system (Fig. 12b) develops with its associated circling wind (shown as streamlines), precipitation, and clouds (Fig. 12e, f). But even in this large-scale low-pressure system, the precipitation is neither randomly nor uniformly distributed but aligns with the circling streamlines, forming mesoscale bands of precipitation. Comparing Fig. 12c and f, perhaps surprisingly, a cooling of the surface below clouds and a freshening of the ocean below precipitation are not noticeable.

Figure 13 illustrates the breaking of tropical instability waves. Tropical instability waves already develop in ocean models with a grid spacing of 0.25∘ (Jochum et al., 2005); the finer grid spacing nevertheless allows resolving the submesoscale structure along the wave cusps with the development of secondary fronts. These secondary fronts rotate counterclockwise, while the tropical instability waves break clockwise. The imprint of these secondary fronts in the surface flux, as shown in Fig. 13b for latent heat, is noteworthy.

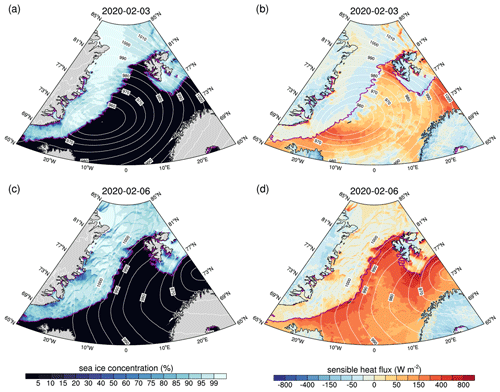

Figure 14Sea ice breakup by a polar low and the effect on sensible heat flux in G_AO_2.5km. Daily mean values are shown. The white contour lines are for sea level pressure (hPa), and the magenta line is for the extent of the sea ice (15 % concentration).

In Fig. 14, the interactions between the ocean, sea ice, and atmosphere are illustrated. The passage of a polar low leads to the formation of narrow leads a few kilometers wide and polynyas; compare Fig. 14c after the passage and Fig. 14a before the passage. The sea ice breakup results from the strong wind stress exerted by the southerly winds on the back of the polar low. By resolving these small-scale linear kinematic features in the sea ice, heat is released from leads and polynyas, whereas there is no or negative heat flux over thicker ice (compare Fig. 14c and d). Positive heat fluxes over leads and polynyas are to be expected and have been measured, for example, during the Surface Heat Budget of the Arctic Ocean (SHEBA) field experiment (Overland et al., 2000). Using a development version of ICON-Sapphire run at 5 km, Gutjahr et al. (2022) also illustrated the interactions between the polar low, katabatic storms, and dense water formation in the Irminger Sea.

Lastly, as expected for such a resolution, G_AO_2.5km can resolve mesoscale circulations induced by surface heterogeneity, which is most easily seen over islands, and their impacts on convection (not shown).

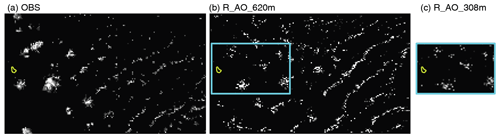

4.2 Resolving large eddies in the atmosphere