the Creative Commons Attribution 4.0 License.

MSDM v1.0: A machine learning model for precipitation nowcasting over eastern China using multisource data

Chaohui Chen

Eastern China is one of the most economically developed and densely populated areas in the world. Due to its special geographical location and climate, eastern China is affected by different weather systems, such as monsoons, shear lines, typhoons, and extratropical cyclones. These systems make the rainfall rate in the near future difficult to predict precisely. Traditional physics-based methods such as numerical weather prediction (NWP) tend to perform poorly on nowcasting problems due to the spin-up issue. Moreover, various meteorological stations are distributed in this region, generating a large amount of observation data every day, which have great potential for application to data-driven methods. Thus, it is important to train a data-driven model from scratch that is suitable for the specific weather situation of eastern China. However, due to the high degrees of freedom and nonlinearity of machine learning algorithms, it is difficult to add physical constraints. Therefore, with the intention of using various kinds of data as a proxy for physical constraints, we collected three kinds of data (radar, satellite, and precipitation data) in the flood season from 2017 to 2018 in this area and preprocessed them into tensors (256×256) that cover eastern China with a domain of 23.0–35.8° N, 113.0–125.8° E. The developed multisource data model (MSDM) combines the optical flow, random forest, and convolutional neural network (CNN) algorithms. It treats the precipitation nowcasting task as an image-to-image problem, which takes radar and satellite data with an interval of 30 min as inputs and predicts radar echo intensity with a lead time of 30 min. To reduce the smoothing caused by convolutions, we use the optical flow algorithm to predict satellite data in the following 120 min. The predicted radar echoes from the MSDM together with satellite data from the optical flow algorithm are recursively fed back into the MSDM to achieve a 120 min lead time. 
The MSDM predictions are comparable to those of other baseline models with a high temporal resolution of 6 min. To solve blurry image problems, we applied a modified structural similarity (SSIM) index as a loss function. Furthermore, we use the random forest algorithm with predicted radar and satellite data to estimate the rainfall rate, and the results outperform those of the traditional, nonlinear radar reflectivity factor and rainfall rate (Z–R) relationships that use logarithmic functions. The experiments confirm that machine learning with multisource data provides more reasonable predictions and reveals a better nonlinear relationship between radar echo and precipitation rate. Apart from developing complicated machine learning algorithms, exploiting the potential of multisource data will yield more improvements.

In recent years, deep learning (DL) and machine learning (ML) have achieved great advances with big data. Tremendous amounts of meteorological data are produced every day, which suits these novel data-driven artificial intelligence (AI) approaches well. Quantitative precipitation nowcasting (QPN) using radar echo extrapolation (REE) has recently become popular (Tran and Song, 2019). Precipitation nowcasting predicts rainfall intensity in the following few hours. Based on various data with high spatiotemporal resolutions, AI precipitation prediction can be relatively accurate compared to traditional numerical weather prediction (NWP) methods. U-Net (Ronneberger et al., 2015) is a well-known network designed for image segmentation, and its core components are downsampling, upsampling, and skip connections. It can efficiently achieve high accuracy with a small number of samples. Agrawal et al. (2019) treated precipitation nowcasting as an image-to-image problem. They employed U-Net (Ronneberger et al., 2015) to predict the change in radar echo for QPN, which is superior to High-Resolution Rapid Refresh (HRRR) numerical prediction from the National Oceanic and Atmospheric Administration (NOAA) when the prediction time is within 6 h. Sønderby et al. (2020) proposed a neural weather model (NWM) called MetNet that uses axial self-attention (Ho et al., 2019) to discover weather patterns from radar and satellite data. MetNet can predict the next 8 h of precipitation in 2 min intervals with a resolution of 1 km. Shi et al. (2015) treated precipitation nowcasting as a problem of predicting spatiotemporal sequences and modified the fully connected long short-term memory (FC-LSTM) by replacing the fully connected (matrix multiplication) operations with convolution operations in the input-to-state and state-to-state transitions. They believe that cloud movement is highly uniform in some areas, and convolutions can capture these local characteristics. 
Therefore, the convolution operation in the input transformations and recurrent transformations of their proposed convolutional LSTM (ConvLSTM) helps to handle the spatial correlations. Furthermore, they apply the same modification to the gated recurrent unit (GRU) and notice that convolution is location-invariant and focuses on only a fixed location because its hyperparameters (kernel size, padding, dilation) are fixed. However, in the QPN problem, a specific location of cloud clusters continuously changes over time. Hence, Shi et al. (2017) proposed a trajectory GRU (TrajGRU) that uses a subnetwork to output a location-variant connection structure before state transitions. The dynamically changed connections help TrajGRU capture the trajectory of cloud clusters more accurately than previous methods. In the field of video prediction, Wang et al. proposed various recurrent neural networks (RNNs) based on LSTM. For example, they designed PredRNN (Wang et al., 2018) with a cascaded dual-memory structure and gradient highway unit, which strengthens the power for modeling short-term dynamics and alleviates the vanishing gradient problem, respectively. In addition, to capture spatial characteristics through recurrent state transitions, Wang et al. (2019a) integrated 3D convolutions inside LSTM units and proposed Eidetic 3D LSTM (E3D-LSTM). Moreover, Wang et al. (2019b) designed the memory-in-memory (MIM) network to handle higher-order nonstationarity of spatiotemporal data. By using differential signals, MIM can model the nonstationary properties between adjacent recurrent states. However, their work is based on a slight modification of existing techniques that demand massive computing resources for model training and has not been applied to big meteorological data.

Computer vision techniques have long been used in object detection, video prediction, and human motion prediction. Tran and Song (2019) used image quality assessment techniques as a new loss function instead of the common mean squared error (MSE), which misled the process of training and generated blurry images. Ayzel et al. (2019) designed an advanced model based on the multiple optical flow algorithm for QPN, but it still performs poorly in the prediction of the onset and decay of precipitation systems because optical flow methods simply calculate the position and velocity of the radar echo with a constant velocity rather than consider the changing intensity of radar echo.

On the one hand, the current massive amounts of data are underutilized. On the other hand, scientists in the field of machine learning focus on pursuing high accuracy by increasing the complexity of models based on a single source of data. Given this background, from the perspective of atmospheric science, we build a multisource data model (MSDM) with the aim of fully using multisource observation data (for example, radar reflectivity, infrared satellite data, and rain gauge data) and find suitable machine learning algorithms (for example, deep neural network, optical flow, and random forest algorithms) for each type of data that can ensure accuracy while saving computing resources. In addition, due to the high degrees of freedom and nonlinearity of neural networks, it is difficult to apply physical constraints to these machine learning models. Hence, we hope that multisource data will function as a proxy for physical constraints to guide the model during the training process. The main advantage of MSDM lies in its transferability: any machine learning model and observation data can be incorporated into the model. For example, wind speed data can be a proxy for dynamic constraints, and temperature data can function as a proxy for thermodynamic constraints. Due to the limit of computing resources, the aim of this paper is not to achieve a higher resolution or prediction accuracy but to propose a method combining machine learning and deep learning with radar echo data, satellite data, and automatic ground observation data to achieve physically reasonable QPN.

Section 2 introduces the related work about the use of machine learning and deep-learning models for radar and precipitation. The dataset, models, and methods used in this study are described in Sect. 3. Section 4 shows the results. Section 5 draws conclusions and discusses some possible future work.

2.1 Machine learning

There is a large volume of published studies describing the use of ML for radar and precipitation. Logistic regression, as one of the simple ML algorithms, has been used to improve the forecast of precipitation probability (Vislocky and Young, 1989). Kuligowski and Barros (1998) use neural networks to postprocess NWP output and forecast precipitation in the next 6 h. Lakshmanan et al. (2014) use neural networks to improve quality control of weather radar data. K-means clustering is a form of unsupervised learning, which is used for the classification of precipitation (Yang and Deng, 2010). Hwang et al. (2019) modify this kind of clustering algorithm and train two nonlinear regression models to improve the subseasonal forecast of temperature and precipitation. Support vector machine (SVM) uses kernels to transform data to the nonlinear space, which has been applied to forecast tornadoes (Adrianto et al., 2009), predict precipitation in tropical cyclones (Wei, 2012), and train precipitation estimation models (Huang et al., 2015). Decision tree algorithms are widely used in classification and regression tasks. Gagne et al. (2009) use the decision tree to classify storm types based on radar observations. Loken et al. (2019) calibrate the ensemble precipitation forecast via random forest. Hill et al. (2020) use random forests to predict the probability of severe weather across the United States. Mao and Sorteberg (2020) use random forest to train a binary model to improve the accuracy of radar-based precipitation nowcasts. Bayesian techniques are an important branch of ML. Todini (2001) use them to improve the radar precipitation estimation. Fox and Wikle (2005) propose a quantitative precipitation nowcast scheme based on a Bayesian hierarchical model. Chandra et al. (2021) use Bayesian machine learning to reconstruct annual precipitation from climate-sensitive lithologies and improve the predictive accuracy of global circulation models (GCM) at a low computational cost.

2.2 Deep learning

Deep learning (DL; LeCun et al., 2015) has gained popularity in meteorology recently. The existing literature on the application of DL to radar and precipitation is extensive. Foresti et al. (2019) train artificial neural networks (ANN) on a 10-year archive of radar images in Switzerland to nowcast the growth and decay of precipitation. Sadeghi et al. (2019) apply convolutional neural networks (CNNs) together with the same kind of data to estimate precipitation, which shows great improvement compared with baseline models. Yan et al. (2021) introduce a flow-deformation network (FDNet) that captures the motion of the optical flow field and the deformation of radar echoes at the same time. The ML community tends to treat nowcasting problems as the prediction of spatiotemporal sequences. Chen et al. (2020) use ConvLSTM for nowcasting and early warning of heavy rainfall. Ran et al. (2021) use Faster-RCNN (Ren et al., 2016) to identify precipitation clouds for Doppler weather radar. Deep neural networks have also been applied to reduce the bias and false alarms of satellite-based precipitation products (Tao et al., 2016). Tao et al. (2017) design two DL models that incorporate satellite data from infrared and water vapor channels to identify precipitation and that significantly outperform the operational product. Yo et al. (2020) propose a volume-to-point framework for radar-based quantitative precipitation estimation (QPE), which can automatically detect the movement and evolution of precipitation systems. Ravuri et al. (2021) present a deep generative model to eliminate the blurry nowcast at longer lead times. As for data fusion, Chandra and Kapoor (2020) design a Bayesian transfer learning framework to provide an approach for handling multiple sources of data. Veillette et al. (2020) use satellite data, radar images, and lightning flash data to synthesize weather radar imagery. Miao et al. (2020) treat the nowcasting problem as a computer vision task and propose a multimodal graph framework to model different data jointly.

3.1 Dataset

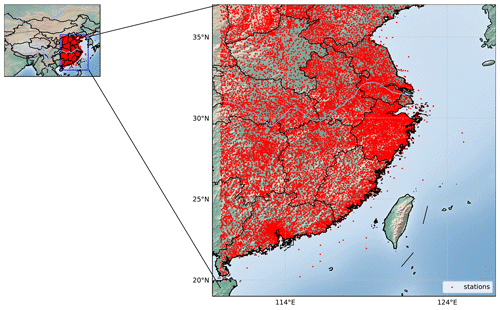

The spatial and temporal distribution characteristics of precipitation are related to many factors, such as the terrain, atmospheric circulation, and climatic conditions. To train a deep-learning model that can capture the precipitation characteristics of eastern China, we collected multisource observation data of the flood season (May to September) for a total of 306 d from 2017 to 2018. Due to the missing radar data from 1 to 9 May and 26 to 30 September 2018, there are only 292 d of radar data in total. The missing data are obtained by interpolating the data from adjacent moments. Among the data, the precipitation data of regional automatic weather stations (AWSs) in eastern China with a time interval of 10 min are shown in Fig. 1.

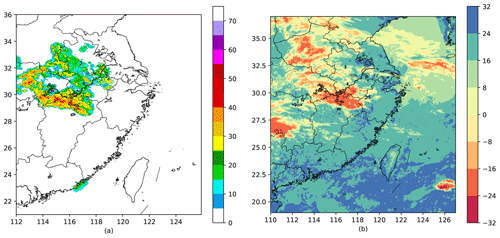

The weather radar data (resolution of ) have been preprocessed into the combined reflectivity: the latitude range is from 21.0 to 36.0° N, the longitude range is from 112.0 to 125.9° E, and the data are available every 6 min (Fig. 2a). The Himawari 8 satellite brightness temperature data (resolution ) for channels 07–16 are used with a latitude range of 19–37° N, a longitude range of 110–127° E, and a time interval of 30 min (Fig. 2b). The links for the datasets are as follows.

- Radar data can be found at the following link: http://data.cma.cn/data/detail/dataCode/J.0012.0003.html (last access: 27 December 2019).

- AWS data can be found at the following link: http://data.cma.cn/data/detail/dataCode/A.0012.0001.html (last access: 27 December 2019).

- Himawari 8 satellite data can be found at the following link: http://www.cr.chiba-u.jp/databases/GEO/H8_9/FD/index.html (last access: 27 December 2019).

3.2 Model description

3.2.1 Model architecture

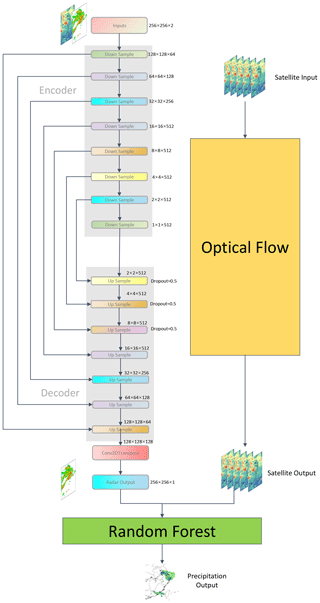

To incorporate multisource data, we designed an MSDM with three parts: deep learning, optical flow, and random forest (Fig. 3). The deep-learning part of the MSDM is inspired by the state-of-the-art U-Net (Ronneberger et al., 2015) designed for image segmentation. It follows an encoder–decoder structure in which the encoder has eight downsampling blocks and the decoder has seven upsampling blocks. Each downsampling block in the encoder consists of Conv2D, batch normalization, and leaky rectified linear unit (LeakyReLU) activation layers. Each upsampling block in the decoder includes transposed convolutional, batch normalization, dropout of 0.5 (applied to the first three blocks), and ReLU activation layers. In each convolutional layer, the step size parameter (stride) is set to 2, and padding is set to “same”. The kernel size varies between 4×4 and 2×2 to extract the spatial characteristics at different scales. The batch normalization layer helps to avoid the vanishing gradient problem and improves the convergence speed. We use dropout to randomly discard some information with a probability of 50 % to prevent overfitting. The activation function adds nonlinearity to each block and allows the model to better learn the nonlinear relationship between the input and target. Transposed convolutional layers replace the upsampling layers of U-Net to increase the resolution of the images. As in U-Net, skip connections between the encoder and decoder mitigate exploding and vanishing gradients during training.
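As a quick check of the encoder–decoder geometry described above, the following sketch traces the spatial size of a 256×256 input through eight stride-2 downsampling blocks and seven stride-2 upsampling blocks. It assumes that "same" padding halves the size (rounding up) in each downsampling block and doubles it in each upsampling block, and that one further stride-2 transposed convolution restores the input resolution; this last layer and the function names are assumptions made for illustration.

```python
import math

def encoder_sizes(size, n_blocks=8, stride=2):
    """Spatial size after each stride-2, 'same'-padded convolution."""
    sizes = []
    for _ in range(n_blocks):
        size = math.ceil(size / stride)  # 'same' padding: out = ceil(in / stride)
        sizes.append(size)
    return sizes

def decoder_sizes(size, n_blocks=7, stride=2):
    """Spatial size after each stride-2, 'same'-padded transposed convolution."""
    sizes = []
    for _ in range(n_blocks):
        size = size * stride  # 'same' padding: out = in * stride
        sizes.append(size)
    return sizes

enc = encoder_sizes(256)       # sizes after the eight downsampling blocks
dec = decoder_sizes(enc[-1])   # sizes after the seven upsampling blocks
final = dec[-1] * 2            # assumed final transposed convolution
print(enc, dec, final)
```

Under these assumptions the bottleneck is a 1×1 feature map, and the seven upsampling blocks reach 128×128, so one more stride-2 layer is needed to return to 256×256.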

Both transposed convolutional layers and upsampling layers increase the resolution of images, but we replace the latter with the former because transposed convolutions are learnable. Upsampling layers use an interpolation method (for example, nearest-neighbor interpolation, bilinear interpolation, and bicubic interpolation) to rescale the input image to a desired size with a higher resolution. These interpolation methods are preset, so there is little room for the network to learn. The transposed convolution (deconvolution) operation is not a predefined interpolation method; it has learnable parameters that convert the output to the original image resolution. Through training, the model learns an optimal upsampling method instead of relying on a preset one.
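The difference between a preset interpolation and a learnable transposed convolution can be illustrated in one dimension. The sketch below is not the MSDM implementation; it is a minimal pure-Python example in which the kernel values are hypothetical stand-ins for learned weights.

```python
def upsample_nearest(x, factor=2):
    """Fixed interpolation: repeat every sample `factor` times."""
    return [v for v in x for _ in range(factor)]

def conv_transpose_1d(x, kernel, stride=2):
    """1-D transposed convolution: each input sample scatters a scaled
    copy of the kernel into the output, `stride` positions apart."""
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, v in enumerate(x):
        for k, w in enumerate(kernel):
            out[i * stride + k] += v * w
    return out

signal = [1.0, 3.0, 2.0]

# With the kernel [1, 1], the transposed convolution reproduces
# nearest-neighbor upsampling exactly.
fixed = conv_transpose_1d(signal, [1.0, 1.0])

# Any other kernel gives a different upsampling rule; in a network these
# weights are learned during training (the values here are hypothetical).
learned = conv_transpose_1d(signal, [0.75, 0.25])

print(upsample_nearest(signal), fixed, learned)
```

Nearest-neighbor upsampling is thus one special case of what a stride-2 transposed convolution can represent, which is why replacing the fixed layer does not remove any capability.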

In the deep-learning part, the MSDM takes the array with a shape of , which represents the height, width, and channel of the image. Radar and satellite grid point data are at different channels. The output of this part is a predicted radar image 30 min later with a shape of . The optical flow part takes five consecutive satellite frames as input to extrapolate the satellite image in the following 2 h. Subsequently, the predicted radar image and satellite image will be used in two parts. First, it will flow into the random forest part to estimate the precipitation rate. Second, it will be recursively used as the input of the deep-learning part to achieve a lead time of 2 h.

The reasons why we do not predict precipitation directly using deep learning are as follows. (1) The precipitation data we collected are irregular site data, which are distributed only on land and do not include precipitation over the sea (Fig. 1). The combined radar reflectivity (Fig. 2a) and Himawari 8 satellite data (Fig. 2b) are regular grid point data and include data over the sea. The spatial distributions of these three types of data are inconsistent, so it is impossible to build a feature–label correspondence to directly predict precipitation. (2) Using shapefiles to extract radar echo or satellite data on land would confine the edge of the echo to the land, which defeats the purpose of extrapolation. (3) We hope to improve the transferability of the MSDM so that it can integrate kinds of data other than grid point data; the method used to process precipitation data can then be applied to other station observations in daily operation. (4) We believe that deep learning efficiently extracts the long-period trend in precipitation, but it cannot capture the transient characteristics of precipitation. Therefore, for each rainfall event, we use random forest to model the nonlinear relationship between multisource data to capture its unique characteristics.

3.2.2 Reference models

Optical flow method

We first employed rainymotion v0.1, an optical flow model proposed by Ayzel et al. (2019), to evaluate the performance of the optical flow algorithm for tracking and extrapolating radar echoes on our dataset. It performs poorly on the radar echo data when the lead time is up to 60 min. However, it performs better on satellite data, which are recorded every 30 min. We believe that cloud layer motion is dominated by advection; thus, the optical flow method can better simulate its motion characteristics. Additionally, the temporal resolution of satellite data is coarser (30 min), so we can directly obtain the sequence of four frames of the following 2 h through one prediction rather than iterative prediction. Optical flow can predict such short sequences quickly and shows great advantages in saving computing resources and avoiding error accumulation. In addition, the main drawback of the convolution operation is that it smooths the characteristics of the image, and the level of smoothness increases when applying convolutions recursively in deep-learning models. Therefore, to ease the smoothing of radar echoes and preserve more details of precipitation systems, we use the satellite data predicted by the optical flow component of our model.

ConvLSTM

ConvLSTM (Shi et al., 2015) is a traditional model for the QPN problem. Hence, we compare our model with ConvLSTM to see whether the model with multisource data performs well when we simply formulate QPN as an image-to-image problem rather than a spatiotemporal sequence problem (Eq. 1).

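$$\hat{X}_{t+1}, \dots, \hat{X}_{t+5} = \mathrm{ConvLSTM}(X_{t-4}, \dots, X_{t}) \tag{1}$$

Tensor $X_t$ represents the radar echo map in the shape of 256×256 at time $t$, and tensor $\hat{X}$ represents the model prediction result.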

U-Net

U-Net (Ronneberger et al., 2015) was employed by Agrawal et al. (2019) for QPN. They treat the problem as an image-to-image problem (Eq. 2) to forecast the precipitation in the next hour.

$$\hat{X}_{t+5} = \mathrm{U\text{-}Net}(X_{t}) \tag{2}$$

Tensors $X_t$ and $\hat{X}$ are the same as in Eq. (1); we use the U-Net architecture to predict the radar image 30 min later in comparison to the MSDM to demonstrate that the combination of multisource data is better than single-source data.

3.3 Training and evaluation method of the MSDM

The model that we designed is a modified U-Net model (Fig. 3). We use the radar and satellite data as inputs, and the output is the intensity of the radar echo after 0.5 h (Eq. 3). The two kinds of data were fed into the encoder and then concatenated by skip connections and flowed into the decoder and transposed convolutional layer (Fig. 3).

$$\hat{X}_{t+5} = \mathrm{MSDM}(X_{t}, Y_{t}) \tag{3}$$

The MSDM uses weather radar echo data $X_t$ and Himawari 8 satellite brightness temperature data $Y_t$ to predict the radar echo map at time $t+5$. After the first round of prediction, we combine $\hat{X}_{t+5}$ from our model with the prediction $\hat{Y}_{t+5}$ from optical flow for further prediction. During preprocessing, the weather radar data and Himawari 8 satellite brightness temperature data are extracted, which cover the area of 23.0–35.8° N, 113.0–125.8° E with a 256×256 window. Then, the values of these data Z are transformed into pixels P by Eq. (4):

To improve the image quality, we apply a modified structural similarity index (SSIM) (Wang et al., 2004) as the loss function, which is helpful to solve blurry image problems. The loss function for the predicted image and ground truth is defined by Eq. (5):

where $y_{\mathrm{pred}}$ is the predicted image, $y_{\mathrm{true}}$ is the ground truth, and $\mu_{\mathrm{pred}}$ and $\mu_{\mathrm{true}}$ are the average values of $y_{\mathrm{pred}}$ and $y_{\mathrm{true}}$, respectively. $\sigma_{\mathrm{pred}}^2$ and $\sigma_{\mathrm{true}}^2$ are the variances of $y_{\mathrm{pred}}$ and $y_{\mathrm{true}}$, respectively. $\sigma_{\mathrm{pred,true}}$ is the cross-correlation of $y_{\mathrm{pred}}$ and $y_{\mathrm{true}}$. $C_1$ and $C_2$ are small positive constants. In each calculation, a 3×3 window is taken from the image and then slid across it. Finally, the average value over all windows is taken as the global SSIM.
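For reference, the standard SSIM of Wang et al. (2004) is written below, with $\mu$ denoting the window means, $\sigma^2$ the variances, and $\sigma_{\mathrm{pred,true}}$ the cross-correlation of the predicted image and the ground truth. The exact modification used for the MSDM loss is not reproduced here; a common choice, assumed purely for illustration, is to minimize one minus the mean SSIM over all $N$ windows.

```latex
\mathrm{SSIM}(y_{\mathrm{pred}}, y_{\mathrm{true}}) =
\frac{\left(2\mu_{\mathrm{pred}}\mu_{\mathrm{true}} + C_1\right)
      \left(2\sigma_{\mathrm{pred,true}} + C_2\right)}
     {\left(\mu_{\mathrm{pred}}^2 + \mu_{\mathrm{true}}^2 + C_1\right)
      \left(\sigma_{\mathrm{pred}}^2 + \sigma_{\mathrm{true}}^2 + C_2\right)},
\qquad
\mathcal{L}_{\mathrm{assumed}} = 1 - \frac{1}{N}\sum_{k=1}^{N}\mathrm{SSIM}_k
```

The SSIM equals 1 only when the two windows are identical, so a loss of this form reaches zero for a perfect prediction.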

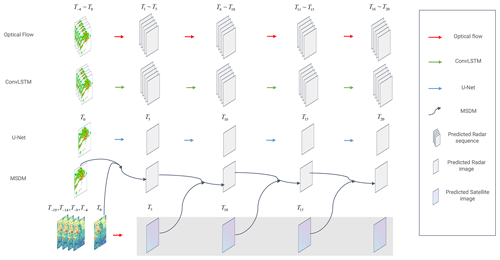

To evaluate our model, a comparison was made between the optical flow method, ConvLSTM, and U-Net methods. Due to limits on computational resources, we use a few frames to predict the results for 0.5 h. Following this, the output results are used to iteratively predict the radar echo in the next 0.5 h to achieve a lead time of 2 h (Fig. 4). For the baseline sequence-to-sequence models (ConvLSTM, optical flow), we use the first five frames (T−4–T0) to predict a sequence of the next five frames (T1–T5) and use this result to iteratively predict the remaining three sequences (T6–T10, T11–T15, T16–T20). For image-to-image models (U-Net, MSDM), we use frame T0 to predict frame T5 and use this prediction as input to iteratively predict the following frames (T10, T15, T20).
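The recursive scheme for the image-to-image models can be sketched as follows. This is a simplified illustration under stated assumptions: `rollout` and `toy_step` are hypothetical names, the stand-in model is not a trained network, and the satellite input that the MSDM receives at each step is omitted.

```python
def rollout(step, first_input, n_steps=4, stride=5):
    """Apply an image-to-image model recursively.

    `step` maps the frame at index t to a prediction for index t + stride;
    each prediction is fed back as the next input.
    Returns a dict {frame_index: predicted_frame}.
    """
    predictions = {}
    frame, t = first_input, 0
    for _ in range(n_steps):
        frame = step(frame)
        t += stride
        predictions[t] = frame
    return predictions

def toy_step(frame):
    """Stand-in model (hypothetical): weakens every pixel by 10 % per step,
    only to make the recursion visible."""
    return [[0.9 * v for v in row] for row in frame]

t0 = [[40.0, 20.0], [0.0, 10.0]]   # toy 2x2 "radar echo" at T0
preds = rollout(toy_step, t0)
print(sorted(preds))               # frame indices T5, T10, T15, T20
```

With frames every 6 min, a stride of five frames corresponds to 30 min, and four recursive steps give the 2 h lead time. Any error in an early prediction is carried into all later steps, which is the error accumulation discussed for the iterative models.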

3.4 Performance evaluation

The MSDM is trained with our dataset on Google Colab Pro with TensorFlow-GPU-2.2.0 and executed on an NVIDIA Tesla P100 GPU (16 GB). In total, 240 d of data are used for training, 26 d are used for validation, and 26 d are used for testing. All the models are compiled with the Adam optimizer, and the learning rate is set at 0.001. To avoid overfitting, we apply the early stopping strategy to monitor the loss in the validation set. We use several metrics to evaluate the model's performance on the test set, i.e., the critical success index (CSI, Eq. 6), Heidke skill score (HSS, Eq. 7), false alarm ratio (FAR, Eq. 8) (Woo and Wong, 2017), and root-mean-square error (RMSE), and we use the SSIM to evaluate the structural similarity between the generated image and target image.

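$$\mathrm{CSI} = \frac{\text{hits}}{\text{hits} + \text{misses} + \text{false alarms}} \tag{6}$$

$$\mathrm{HSS} = \frac{2\,(\text{hits} \times \text{correct negatives} - \text{misses} \times \text{false alarms})}{(\text{hits} + \text{misses})(\text{misses} + \text{correct negatives}) + (\text{hits} + \text{false alarms})(\text{false alarms} + \text{correct negatives})} \tag{7}$$

$$\mathrm{FAR} = \frac{\text{false alarms}}{\text{hits} + \text{false alarms}} \tag{8}$$

where the correct negatives, hits, misses, and false alarms are determined by the threshold value. Woo and Wong (2017) provide more details about these metrics. We applied six thresholds of 0.1, 1, 5, 10, 25, and 40 dBZ to calculate the CSI, HSS, and FAR. To stress the importance of areas with large radar reflectivity, we assign a weight w(threshold) (Eq. 9) to different thresholds and calculate the weighted CSI and HSS (the larger the better).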

We set all the weights to 1 for the FAR (the smaller the better) because we believe that the influence of false alarms of every threshold is the same. The RMSE is used to evaluate the global error of the predicted radar image. For the SSIM, we set the Gaussian filter size to 3×3 and the width to 1.5 to evaluate the local structural similarity between the generated image and target image.
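The threshold-based scores can be computed from contingency counts as in the sketch below. It uses the standard definitions of the CSI, HSS, and FAR, treats a pixel as an event when its value is at or above the threshold (an assumption), and takes the threshold weights as an argument; the weight values in the example are hypothetical and are not those of Eq. (9).

```python
def contingency(pred, obs, threshold):
    """Count hits, misses, false alarms, and correct negatives for one threshold.
    `pred` and `obs` are flat sequences of reflectivity values (dBZ)."""
    hits = misses = false_alarms = correct_neg = 0
    for p, o in zip(pred, obs):
        p_yes, o_yes = p >= threshold, o >= threshold  # assumed event definition
        if p_yes and o_yes:
            hits += 1
        elif o_yes:
            misses += 1
        elif p_yes:
            false_alarms += 1
        else:
            correct_neg += 1
    return hits, misses, false_alarms, correct_neg

def csi(h, m, f, c):
    return h / (h + m + f) if (h + m + f) else 0.0

def far(h, m, f, c):
    return f / (h + f) if (h + f) else 0.0

def hss(h, m, f, c):
    denom = (h + m) * (m + c) + (h + f) * (f + c)
    return 2.0 * (h * c - m * f) / denom if denom else 0.0

def weighted_score(score, pred, obs, weights):
    """Weighted average of a score over thresholds; `weights` maps threshold -> weight."""
    total = sum(weights.values())
    return sum(w * score(*contingency(pred, obs, t)) for t, w in weights.items()) / total

pred = [0, 12, 30, 45, 3, 0]             # toy predicted reflectivity
obs = [0, 8, 28, 41, 0, 6]               # toy observed reflectivity
example_weights = {10: 1.0, 25: 2.0}     # hypothetical weights
print(weighted_score(csi, pred, obs, example_weights))
print(weighted_score(far, pred, obs, {10: 1.0, 25: 1.0}))  # equal weights for the FAR
```

Returning 0 when a denominator vanishes is a convenience of this sketch; an operational evaluation may prefer to exclude such cases from the average.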

4.1 REE

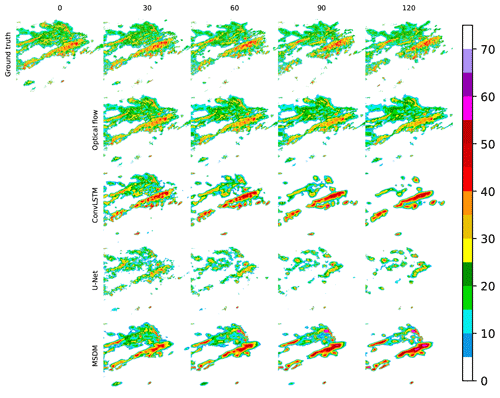

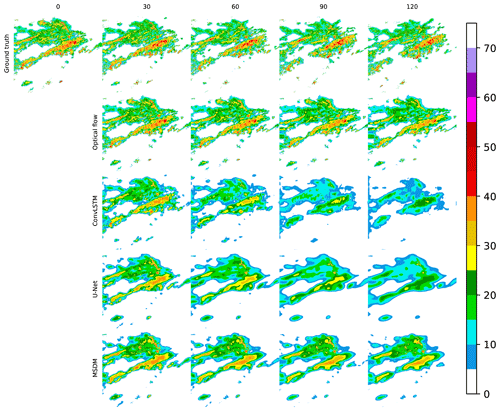

In the region we selected over eastern China, the radar echo and precipitating cloud system change little between two adjacent frames (6 min). Therefore, the results of all the models are shown every 30 min (Fig. 5). The input of optical flow and ConvLSTM is a sequence of five frames before time 0, and the output is a sequence of five frames in the following 0.5 h. The input of U-Net is a single frame of the radar echo data at time 0, and the input of the MSDM includes a frame of satellite data and a frame of the radar echo data. When the output of the first 30 min is obtained, we take it as the input in place of the real data for further prediction. After the first prediction step, the satellite data fed into the MSDM are those extrapolated by the optical flow algorithm, which is used because cloud movements are dominated by advective motion.

Figure 5. Illustrations of the observed radar echo and the radar echo simulated by the optical flow, ConvLSTM, U-Net, and MSDM. For the optical flow and ConvLSTM, we select one frame every 0.5 h for comparison with other models. Each model was trained with the modified SSIM. The date and time are set to 7 September 2018, 00:00 UTC.

Figure 6. Illustrations of the observed radar echo and the radar echo simulated by the optical flow, ConvLSTM, U-Net, and MSDM. For the optical flow and ConvLSTM, we select one frame every 0.5 h for comparison with other models. Each model was trained with the MSE. The date and time are set to 7 September 2018, 00:00 UTC.

We present the comparison of four models trained with different loss functions. Figure 5 shows that the models trained with the modified SSIM predict many large-value areas of radar echo because the SSIM can extract the local structural similarity through the training process. In contrast, Fig. 6 shows that models trained with the MSE tend to smooth the details of radar echo and seldom predict large radar echo values because the large-value area is only a small part of the entire echo, and the MSE will ignore these areas when it optimizes errors on a global scale. Hence, the modified SSIM shows its advantage when compared with the conventional loss function in the REE task.

The radar echoes predicted by the ConvLSTM, U-Net, and MSDM decay in the following 2 h, while those predicted by the optical flow method remain stable. Thus, the optical flow method can perfectly predict the edge and shape of the radar echo, which is the reason why it obtains the highest average weighted CSI at a lead time of 30 min (Table 1) on the testing set. However, the fatal weakness of the optical flow method is that it simply predicts radar echo movement from previous images without predicting radar echo decay and initiation, which causes its accuracy to decrease over time (Table 1), and thus the FAR keeps increasing (Table 3). In addition, it employs an algorithm called a corner detector (Ayzel et al., 2019) to identify special points from previous frames and track the movement of these points. When it extrapolates the tail of the radar echo, it cannot find corresponding points from previous images because the tail of the radar echo at this moment was in a position outside the radar image of previous frames. Consequently, unreasonable shapes exist in the tail of the predicted radar echo. In Fig. 5, we find that ConvLSTM performs the best for the strong echoes, but it cannot maintain the shape of the echo. Additionally, there exists a phenomenon in which only the strong-echo area is increasing, while the weak-echo area is continuously decreasing, which is contradictory according to fluid continuity theory. The ConvLSTM captures the temporal features from previous frames, which strengthens the intensity, but it cannot properly predict the initiation and decay of the whole system, because it predicts lower values for weak-echo areas and makes fewer false alarms in these areas, which comprise the majority of radar echoes. This could explain why it obtains the lowest FAR in the last hour (Table 3).

ConvLSTM is prone to error accumulation due to iterative training and requires massive computing resources (Yu et al., 2018). Therefore, we use a convolutional neural network (CNN) as a substitute to treat REE as an image-to-image problem. U-Net, along with our MSDM, can generally simulate the motion of the radar echo while maintaining its outline, but the MSDM with satellite data can avoid radar echo decay through iterations. The MSDM has comparable performance with baseline models and outperforms other models in the short-term period (Tables 1 and 2). We believe it retains the merits of the optical flow method, which can maintain the shape of the radar echo, and it has the ability to predict the strong-echo area from U-Net. The MSDM performs poorly when the lead time is longer than 90 min because the cumulative error from the two kinds of data was larger than either of them individually. In addition, satellite data may provide more details that the radar echo may not contain, for example, data over the sea; instead, these details may be treated as noise or false alarms, and thus the accuracy will decrease.

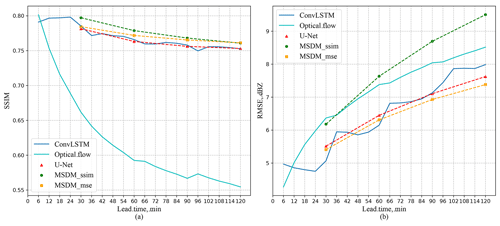

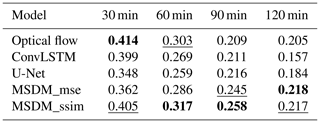

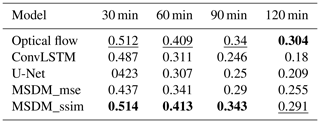

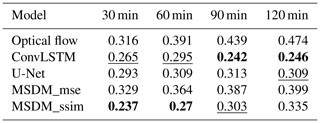

Tables 1 and 2 show the weighted average CSI and HSS on the test set with different thresholds (0.1, 1, 5, 10, 25, 40; unit: dBZ). The two metrics are used to evaluate the performance of each model (the higher the better). From Table 1, we notice that optical flow method achieves the best score when the lead time is 30 min, which shows its great advantage in short-term forecasting. However, its long-term predictions are not accurate due to a lack of simulation of the radar echo evolution. ConvLSTM performs poorly because it only increases the strong echo and neglects the prediction of low-value areas. Hence, even though it obtains high scores on large reflectivity areas, its weighted CSI and HSS are still lower than those of the other models. U-Net also performs poorly due to its inability to handle temporal correlations and the absence of key spatial information. The MSDMs with different loss functions (MSE and SSIM) perform well in long-term forecasting. The SSIM can capture the structural similarities of radar images, while the MSE can calculate the global errors. However, SSIM is prone to error accumulation through iterative prediction. Therefore, in Table 1, MSDM_ssim ranks best for lead times of 60 and 90 min, while MSDM_mse ranks best for other lead times. Satellite data add more spatial information for the MSDM to learn and set physical constraints on it. Therefore, the MSDM scores best in the first three moments of the weighted HSS. Regarding the FAR, the MSDM still performs best in the first two moments due to its reasonable prediction of the shape and intensity of the radar echoes. ConvLSTM ranks best in the last two moments because it forecasts only strong echoes of a few areas, which greatly reduces the probability of false alarms.

Table 1. Weighted average CSI on the test set with different thresholds (0.1, 1, 5, 10, 25, 40; unit: dBZ). The larger the score, the better. The best scores are highlighted in bold, and the second-best scores are underlined.

Table 2. Weighted average HSS on the test set with different thresholds (0.1, 1, 5, 10, 25, 40; unit: dBZ). The larger the score, the better. The best scores are highlighted in bold, and the second-best scores are underlined.

Table 3. Average FAR on the test set with different thresholds (0.1, 1, 5, 10, 25, 40; unit: dBZ). The smaller the score, the better. The best scores are highlighted in bold, and the second-best scores are underlined.
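As an illustration of how such scores are commonly obtained, the following minimal sketch computes the CSI, HSS, and FAR from a 2×2 contingency table at each reflectivity threshold and then averages them. The standard textbook definitions are used; the function names and the equal weights in the usage note are assumptions for illustration, since the weighting scheme is not specified in this section.

```python
import numpy as np

# Reflectivity thresholds (dBZ) taken from the table captions.
THRESHOLDS = (0.1, 1, 5, 10, 25, 40)


def contingency(pred, obs, threshold):
    """Return (hits, misses, false alarms, correct negatives) at one threshold."""
    p = np.asarray(pred) >= threshold
    o = np.asarray(obs) >= threshold
    hits = int(np.sum(p & o))
    misses = int(np.sum(~p & o))
    false_alarms = int(np.sum(p & ~o))
    correct_negatives = int(np.sum(~p & ~o))
    return hits, misses, false_alarms, correct_negatives


def csi(h, m, f, c):
    """Critical success index: hits / (hits + misses + false alarms)."""
    d = h + m + f
    return h / d if d else float("nan")


def far(h, m, f, c):
    """False alarm ratio: false alarms / (hits + false alarms)."""
    d = h + f
    return f / d if d else float("nan")


def hss(h, m, f, c):
    """Heidke skill score for a 2x2 contingency table."""
    num = 2.0 * (h * c - m * f)
    den = (h + m) * (m + c) + (h + f) * (f + c)
    return num / den if den else float("nan")


def weighted_score(metric, pred, obs, weights):
    """Weighted mean of a metric over THRESHOLDS, skipping undefined values.

    `weights` is a hypothetical per-threshold weighting, not the paper's.
    """
    scores = np.array([metric(*contingency(pred, obs, t)) for t in THRESHOLDS])
    w = np.asarray(weights, dtype=float)
    valid = ~np.isnan(scores)
    return float(np.sum(scores[valid] * w[valid]) / np.sum(w[valid]))
```

For example, `weighted_score(csi, pred, obs, [1, 1, 1, 1, 1, 1])` gives an equally weighted CSI. Thresholds at which a metric is undefined (no events forecast or observed) are left out of the average in this sketch.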

We calculate the SSIM and RMSE between the predicted radar echoes of the four models and the ground truth on the test set (Fig. 7). The optical flow model achieves the lowest SSIM (Fig. 7a), which means that its predictions are structurally the least similar to the ground truth. MSDM_ssim obtains the highest SSIM but the worst RMSE because it focuses on local features and ignores the minimization of the global error. ConvLSTM, U-Net, and MSDM_mse are trained with the MSE loss function and therefore achieve a lower RMSE. We believe that when the SSIM is used as the loss function, the model generates more reasonable predictions with proper shapes, but at the cost of poor performance on global evaluation metrics such as the mean absolute error (MAE) and RMSE. Moreover, we notice that the ConvLSTM model produces larger errors in the first frame of each sequence than the other models. This phenomenon may result from the inability of LSTM to handle cumulative error, which is magnified by iterative prediction.
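To make the contrast between the two metrics concrete, the sketch below computes the RMSE and a simplified SSIM. It is an illustration only: the SSIM here uses whole-image statistics in a single window, whereas the formulation of Wang et al. (2004) averages the index over local windows, and the `data_range` value of 70 dBZ in the usage note is an assumed dynamic range, not one stated in this section.

```python
import numpy as np


def rmse(pred, truth):
    """Root-mean-square error over all grid points."""
    pred = np.asarray(pred, dtype=float)
    truth = np.asarray(truth, dtype=float)
    return float(np.sqrt(np.mean((pred - truth) ** 2)))


def global_ssim(pred, truth, data_range):
    """Single-window SSIM computed from whole-image means and variances.

    Simplification: the usual SSIM is averaged over sliding local windows.
    """
    pred = np.asarray(pred, dtype=float)
    truth = np.asarray(truth, dtype=float)
    c1 = (0.01 * data_range) ** 2  # stabilizing constants from Wang et al. (2004)
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = pred.mean(), truth.mean()
    var_x, var_y = pred.var(), truth.var()
    cov = np.mean((pred - mu_x) * (truth - mu_y))
    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

Calling `global_ssim(pred, truth, data_range=70.0)` returns 1 for identical fields and smaller values as the structure diverges, while `rmse` penalizes every grid-point difference regardless of structure.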

4.2 QPN

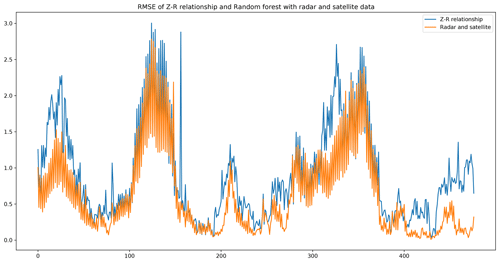

Previous works seem to pay little attention to QPN after they achieve good performance on REE tasks. Researchers tend to use an empirical formula to calculate the precipitation rate from the radar echo predicted by the models. Shi et al. (2015) employed the Z–R relationship ($Z = 10\log a + 10b\log R$) to calculate the rainfall, where Z represents the radar echo (dBZ), R represents the rainfall rate (mm h−1), and a and b are two constants that are calculated based on the statistical data of specific regions. We believe that this empirical formulation cannot adequately describe the nonlinear relationship between the radar echo intensity and the rainfall rate. Therefore, random forest regression is used to describe this relationship. The weather radar data and precipitation data from 1 h before the prediction time are used for training. The procedure is as follows. First, the automatic stations and the grid points in which they lie are identified. Then, the radar and satellite data at these grid points, together with the corresponding rainfall rates observed at the stations, are used to train the random forest model. Finally, the learned nonlinear relationship is used to predict the rainfall rate an hour later.
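For reference, the empirical conversion can be sketched as follows, assuming the Z–R relationship takes the common logarithmic form Z = 10 log10(a) + 10 b log10(R) with Z in dBZ. The default coefficients a = 200 and b = 1.6 are the widely quoted Marshall–Palmer values, used here only as placeholders; they are not the regional constants used in this study.

```python
import numpy as np


def rain_rate_from_dbz(dbz, a=200.0, b=1.6):
    """Invert Z = 10*log10(a) + 10*b*log10(R) for the rain rate R (mm/h).

    a and b are placeholder (Marshall-Palmer) coefficients, not the paper's.
    """
    dbz = np.asarray(dbz, dtype=float)
    z_linear = 10.0 ** (dbz / 10.0)  # reflectivity factor in linear units
    return (z_linear / a) ** (1.0 / b)


def dbz_from_rain_rate(rate, a=200.0, b=1.6):
    """Forward Z-R relationship: reflectivity (dBZ) from rain rate (mm/h)."""
    rate = np.asarray(rate, dtype=float)
    return 10.0 * np.log10(a) + 10.0 * b * np.log10(rate)
```

Because the mapping depends only on two fixed constants, every grid point with the same reflectivity receives the same rain rate, which is the limitation that motivates the data-driven regression described above.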

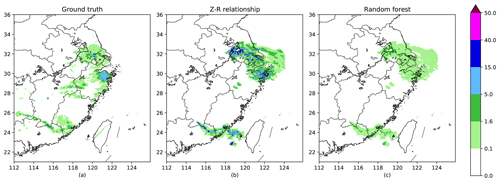

Figure 8. (a) Ground truth interpolated from site points (mm h−1). (b) Rainfall rate calculated by the Z–R relationship (mm h−1). (c) Rainfall rate calculated by the random forest model (mm h−1).

Figure 8 shows the results of the Z–R relationship and random forest model. Since the precipitation data on the grid points are obtained by interpolation and might have errors, we did not make a quantitative comparison for the whole dataset. However, this example shows that the Z–R relationship tends to overestimate the rain intensity. For example, the Z–R relationship predicts many areas with precipitation rates larger than 15 mm h−1, but there are few areas that reach the value on the ground truth. Figure 9 shows the RMSEs of 480 QPN samples using different methods and data. When we use the radar and satellite data as input, the random forest model shows its superiority for the QPN task. Its RMSEs are lower than those of the Z–R relationship in most of the samples. Therefore, we believe that multisource data have great potential to make the results more precise.
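The random forest step for QPN can be sketched with scikit-learn as below. Everything about the data is an assumption for illustration: the samples are synthetic, the satellite feature is a hypothetical brightness-temperature channel, and the hyperparameters are arbitrary rather than those of the MSDM code.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)

# Synthetic stand-ins for station-collocated samples (not real observations):
# radar reflectivity (dBZ) and a hypothetical satellite brightness temperature (K).
n_samples = 500
radar = rng.uniform(0.0, 55.0, n_samples)
satellite = rng.uniform(190.0, 300.0, n_samples)
features = np.column_stack([radar, satellite])

# Hypothetical nonlinear target: a Z-R-like curve modulated by cloud-top temperature.
rain = (10.0 ** (radar / 10.0) / 200.0) ** (1.0 / 1.6)
rain = rain * (1.0 + 0.5 * (300.0 - satellite) / 110.0)
rain = np.clip(rain + rng.normal(0.0, 0.2, n_samples), 0.0, None)

# Fit on the first 400 samples and evaluate on the remaining 100.
train, test = slice(0, 400), slice(400, None)
model = RandomForestRegressor(n_estimators=100, random_state=0)
model.fit(features[train], rain[train])

pred = model.predict(features[test])
rmse_rf = float(np.sqrt(np.mean((pred - rain[test]) ** 2)))
```

In practice, each row of `features` would hold the radar and satellite values at a grid point containing a station, and `rain` would hold the rainfall rates observed at that station.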

5.1 Discussion

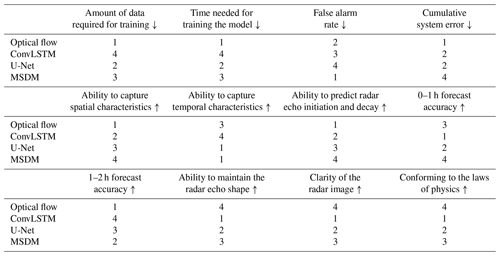

In Table 4, we evaluate four models in terms of 12 performance indicators (amount of data required for training, time needed for training the model, false alarm rate, cumulative system error, ability to capture spatial and temporal characteristics, ability to predict the radar echo initiation and decay, 0–1 h forecast accuracy, 1–2 h forecast accuracy, ability to maintain the radar echo shape, clarity of the radar image, conformation to the laws of physics). We use the mark “↓” to represent where scores are judged via the principle of “the lower the better” and the mark “↑” to represent where scores are judged via “the higher the better”. Subsequently, we discuss and summarize the advantages and limitations of the models and their combinations.

Here, lower values are better for the first four indicators (amount of data required for training, time needed for training the model, false alarm rate, cumulative system error), and higher values are better for the last eight indicators (ability to capture spatial and temporal characteristics, ability to predict the radar echo initiation and decay, 0–1 h forecast accuracy, 1–2 h forecast accuracy, ability to maintain the radar echo shape, clarity of the radar image, conformation to the laws of physics). Table 4 shows that every model has both advantages and disadvantages, which we discuss in turn below.

5.1.1 Optical flow

The advantages of the optical flow algorithm are as follows: (1) it has the fewest parameters and takes the least time to train; (2) the amount of data required for training the model is small, and as few as two radar images can be used to extrapolate the radar echo; (3) it maintains the shape of the radar echo very well, and the prediction result is closest to the real echo (therefore, its MSE is the smallest); and (4) it is suitable for the extrapolation of advective precipitation from 0 to 1 h in the future.

The disadvantages of the optical flow algorithm are as follows: (1) it cannot extract features of the evolution process of the radar echo; (2) except for advective precipitation, it performs poorly in precipitation situations (e.g., convective precipitation and typhoon precipitation) in which the radar reflectivity changes rapidly in a short period of time, and it has essentially no forecasting ability for large-value areas of radar echo; and (3) the tail of the echo cannot be extrapolated due to the lack of previous data. As a result, the longer the lead time, the more irregular the shape of the echo at the tail.
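The behavior described above can be illustrated with a deliberately simplified extrapolation in which the whole field moves with one constant displacement per step. This is an assumption for illustration: a real optical flow method estimates a dense motion field from consecutive images, and the function names below are hypothetical.

```python
import numpy as np


def advect(field, dy, dx, fill=0.0):
    """Shift a 2-D field by an integer displacement (dy, dx) in grid cells.

    Cells on the inflow side receive `fill` because no upstream data exist.
    """
    field = np.asarray(field, dtype=float)
    out = np.full_like(field, fill)
    h, w = field.shape
    if abs(dy) >= h or abs(dx) >= w:
        return out  # everything has left the domain
    src_y = slice(max(0, -dy), min(h, h - dy))
    src_x = slice(max(0, -dx), min(w, w - dx))
    dst_y = slice(max(0, dy), min(h, h + dy))
    dst_x = slice(max(0, dx), min(w, w + dx))
    out[dst_y, dst_x] = field[src_y, src_x]
    return out


def extrapolate(field, dy, dx, steps):
    """Apply the same displacement repeatedly and return every frame."""
    frames = []
    current = np.asarray(field, dtype=float)
    for _ in range(steps):
        current = advect(current, dy, dx)
        frames.append(current)
    return frames
```

Two properties of the sketch mirror the points listed above: echo intensities are only moved, never strengthened or weakened, so initiation and decay cannot be represented; and the inflow edge stays empty, so the trailing part of the echo becomes less reliable as the lead time grows.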

5.1.2 ConvLSTM

The advantages of ConvLSTM are as follows: (1) it can extract the spatial characteristics of echoes while capturing the time characteristics efficiently; (2) it can simulate the initiation and decay of radar echo better than optical flow; and (3) it is the best for the prediction of long time periods and large-value areas of radar echo.

The disadvantages of ConvLSTM are as follows: (1) there are many parameters, many matrix operations, and various gating structures in ConvLSTM, and thus its training speed is the slowest among the four models; (2) it overestimates (underestimates) the large-value (low-value) radar echo, which does not conform to the fluid continuity theory; and (3) it predicts the worst echo shape in that there is no transition between the large-echo area and the non-echo area, which is far from the true echo and provides no guidance for operational forecasting. For example, a forecaster cannot issue an early warning of heavy precipitation for one place while being unable to say whether neighboring areas will have rain.

5.1.3 U-Net

The advantages of U-Net are as follows: (1) it is an efficient CNN that has relatively few parameters and can achieve high accuracy with a small amount of data; (2) it is capable of capturing the spatial characteristics of radar echoes and predicting the evolution of echoes; and (3) the forecasting effect is very good for the next one or two frames.

The disadvantages of U-Net are as follows: (1) it is unable to extract the temporal characteristics of changes in the radar echo; (2) the convolution operation will smooth the characteristics of the radar echo so that the shape of the predicted echo will change and deviate from the true one; and (3) the error accumulates through iterative training and prediction.

5.2 Conclusions

As a conventional QPN method, the optical flow method has played a certain role in the forecasting of advective precipitation. However, it performs poorly in the prediction of convective precipitation due to the simplicity of its algorithm and its inability to exploit existing big data (Woo and Wong, 2017). In contrast, deep learning shows great advantages in processing vast amounts of data. By using convolution and LSTM structures, deep-learning algorithms are better at capturing spatiotemporal correlations. Nevertheless, recurrent networks (represented by ConvLSTM) for predicting spatiotemporal sequences are widely known to be difficult to train and are computationally expensive (Yu et al., 2018). Compared with traditional spatiotemporal sequence tasks in the field of machine learning, such as Moving MNIST (Modified National Institute of Standards and Technology) prediction, human position prediction, and traffic flow prediction, the REE task has a specific background and physical constraints. Therefore, merely obtaining predictions with higher scores does not reflect the quality of the results. Wang et al. (2018, 2019a, b) designed state-of-the-art models to capture comprehensive correlations between spatiotemporal sequences. However, when we apply them to the physics-based tasks represented by REE and QPN, we must evaluate their predictions from the perspective of atmospheric science. A prediction is useful as a reference only when it is physically reasonable, not merely when it scores highly. However, it is difficult to apply physical constraints to neural networks due to their high degree of freedom and nonlinearity. Hence, we input more kinds of data as features into the network with the intention that it can obtain more information through feature interaction. Therefore, we collect multisource data and design an MSDM. When the model errs and tends to predict radar reflectivity that is too low, the incorporated satellite data counterbalance this tendency.
We hope the multisource data function as another form of model constraint. Solving the sequence-to-sequence problem is computationally expensive, so we treat the QPN as an image-to-image problem and design the MSDM based on a CNN (U-Net) with high efficiency and few parameters. The main advantage of the MSDM is its transferability: apart from satellite data, other data (wind speed, pressure, temperature, etc.) can be used as input into the model in the future. Wind speed data could add dynamic constraints, and temperature data could add thermodynamic constraints. To further save computational resources, we use optical flow to predict the sequence of satellite data with the assumption that the cloud cluster is dominated by convective movement. This approach is adopted by an operational nowcasting system to estimate convective cloud movement (Shi et al., 2017). Subsequently, we use the satellite data predicted by optical flow and the radar reflectivity predicted by the MSDM as input for iterative prediction to achieve a lead time of 2 h. After predicting the radar echo, we replace the empirical formula (Z–R relationship) with a random forest model to estimate the rainfall rate. We believe that deep-learning models capture the long-term trend in precipitation, so a complementary algorithm is needed to capture real-time dynamic characteristics; random forest regression is well suited to short-term prediction with small samples. Therefore, we trained a random forest regressor using radar and precipitation data from 1 h prior. Subsequently, the learned nonlinear relationships were applied to estimate the precipitation rate from radar reflectivity.
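The iterative prediction described in this paragraph can be sketched as a simple rollout loop. The sketch assumes that one radar frame and one satellite frame are consumed per 30 min step, which may differ from the actual input layout of the MSDM; `toy_step_model` is a placeholder for the trained network, not the MSDM itself.

```python
import numpy as np


def rollout(radar_now, satellite_frames, step_model):
    """Iterate a one-step (30 min) model to reach longer lead times.

    radar_now: latest observed radar echo (2-D array).
    satellite_frames: satellite fields valid at the start of each step; the
        first is observed and the rest are assumed to come from optical flow.
    step_model: callable (radar, satellite) -> radar echo 30 min later.
    """
    radar = np.asarray(radar_now, dtype=float)
    predictions = []
    for satellite in satellite_frames:
        # Each predicted radar frame is fed back as input to the next step.
        radar = step_model(radar, np.asarray(satellite, dtype=float))
        predictions.append(radar)
    return predictions


def toy_step_model(radar, satellite):
    """Placeholder for a trained network: blends the two inputs."""
    return 0.9 * radar + 0.1 * satellite
```

With four satellite frames (valid at 0, 30, 60, and 90 min), `rollout` returns radar predictions for lead times of 30, 60, 90, and 120 min. Because each step consumes the previous prediction, any error in either input propagates to all later lead times.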

In conclusion, the MSDM combines the merits of optical flow and U-Net: it maintains the pattern of the radar echo and predicts its initiation and decay. The results predicted by the MSDM also contain more details that U-Net cannot produce. Whereas ConvLSTM overestimates the strong echo and underestimates the weak echo, the MSDM shows great potential in predicting areas of both strong and weak radar echo. We conducted an experiment using random forest for QPN, which obtained better results than the Z–R relationship. This finding suggests that the empirical formula is not suitable for all areas. We believe that by combining multisource data, the radar echoes predicted by the MSDM can provide more details and are subject to more physical constraints than those predicted from single-source observations. The MSDM not only learns the long-term trend through deep learning but also incorporates real-time dynamic characteristics captured by the optical flow and random forest models. Hence, the prediction from the MSDM is more physically reasonable and more useful as a reference.

Methods that estimate the precipitation rate more precisely also exist. For example, Wu et al. (2020) used a graph convolutional regression network to capture more spatial characteristics of precipitation. For future work, we believe that the predictions could be made more accurate with recurrent neural networks (RNNs) and gated recurrent units (GRUs). Additionally, the estimation of the precipitation rate should consider the influence of the terrain and of different scales. We will perform further experiments on these factors.

The source code and pretrained model of MSDM are available at https://doi.org/10.5281/zenodo.4749183 (Li, 2021).

For more information about the underlying datasets, see Sect. 3.1.

Conceptualization was performed by DL, YL, and CC. DL contributed to the methodology, software, and investigation, as well as the preparation of the original draft. CC contributed to the resources and data curation, as well as visualizations and project administration. YL contributed to the review and editing of the manuscript, supervision of the project, and funding acquisition. All authors have read and agreed to the published version of the manuscript.

The authors declare that they have no conflict of interest.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This research has been supported by the National Natural Science Foundation of China (grant no. 41875060).

This paper was edited by Rohitash Chandra and reviewed by Patrick Armand and three anonymous referees.

Agrawal, S., Barrington, L., Bromberg, C., Burge, J., Gazen, C., and Hickey, J.: Machine Learning for Precipitation Nowcasting from Radar Images [cs, stat], arXiv [preprint], arXiv:1912.12132, December 2019.

Ayzel, G., Heistermann, M., and Winterrath, T.: Optical flow models as an open benchmark for radar-based precipitation nowcasting (rainymotion v0.1), Geosci. Model Dev., 12, 1387–1402, https://doi.org/10.5194/gmd-12-1387-2019, 2019.

Adrianto, I., Trafalis, T. B., and Lakshmanan, V.: Support vector machines for spatiotemporal tornado prediction, Int. J. Gen. Syst., 38, 759–776, https://doi.org/10.1080/03081070601068629, 2009.

Chandra, R. and Kapoor, A.: Bayesian neural multi-source transfer learning, Neurocomputing, 378, 54–64, https://doi.org/10.1016/j.neucom.2019.10.042, 2020.

Chandra, R., Cripps, S., Butterworth, N., and Muller, R. D.: Precipitation reconstruction from climate-sensitive lithologies using Bayesian machine learning, Environ. Model. Softw., 139, 105002, https://doi.org/10.1016/j.envsoft.2021.105002, 2021.

Chen, L., Cao, Y., Ma, L., and Zhang, J.: A Deep Learning-Based Methodology for Precipitation Nowcasting With Radar, Earth Space Sci., 7, e2019EA000812, https://doi.org/10.1029/2019EA000812, 2020.

Foresti, L., Sideris, I. V., Nerini, D., Beusch, L., and Germann, U.: Using a 10-Year Radar Archive for Nowcasting Precipitation Growth and Decay: A Probabilistic Machine Learning Approach, Weather Forecast., 34, 1547–1569, https://doi.org/10.1175/WAF-D-18-0206.1, 2019.

Fox, N. I. and Wikle, C. K.: A Bayesian Quantitative Precipitation Nowcast Scheme, Weather Forecast., 20, 264–275, https://doi.org/10.1175/WAF845.1, 2005.

Gagne, D. J., McGovern, A., and Brotzge, J.: Classification of Convective Areas Using Decision Trees, J. Atmos. Ocean. Tech., 26, 1341–1353, https://doi.org/10.1175/2008JTECHA1205.1, 2009.

Hill, A. J., Herman, G. R., and Schumacher, R. S.: Forecasting Severe Weather with Random Forests, Mon. Weather Rev., 148, 2135–2161, https://doi.org/10.1175/MWR-D-19-0344.1, 2020.

Huang, B.-J., Tseng, T.-H., and Tsai, C.-M.: Rainfall Estimation in Weather Radar Using Support Vector Machine, in: Intelligent Information and Database Systems, vol. 9011, edited by: Nguyen, N. T., Trawiński, B., and Kosala, R., Springer International Publishing, Cham, 583–592, https://doi.org/10.1007/978-3-319-15702-3_56, 2015.

Hwang, J., Orenstein, P., Cohen, J., Pfeiffer, K., and Mackey, L.: Improving Subseasonal Forecasting in the Western U.S. with Machine Learning [cs, stat], arXiv [preprint], arXiv:1809.07394, May 2019.

Ho, J., Kalchbrenner, N., Weissenborn, D., and Salimans, T.: Axial Attention in Multidimensional Transformers, arXiv [preprint], arXiv:1912.12180, December 2019.

Kuligowski, R. J. and Barros, A. P.: Localized Precipitation Forecasts from a Numerical Weather Prediction Model Using Artificial Neural Networks, Weather Forecast., 13, 1194–1204, https://doi.org/10.1175/1520-0434(1998)013<1194:LPFFAN>2.0.CO;2, 1998.

Lakshmanan, V., Karstens, C., Krause, J., and Tang, L.: Quality Control of Weather Radar Data Using Polarimetric Variables, J. Atmos. Ocean. Tech., 31, 1234–1249, https://doi.org/10.1175/JTECH-D-13-00073.1, 2014.

LeCun, Y., Bengio, Y., and Hinton, G.: Deep learning, Nature, 521, 436–444, https://doi.org/10.1038/nature14539, 2015.

Li, D.: MSDM v1.0: A machine learning model for precipitation nowcasting over East China using multisource data, Zenodo, https://doi.org/10.5281/zenodo.4749183, 2021.

Loken, E. D., Clark, A. J., McGovern, A., Flora, M., and Knopfmeier, K.: Postprocessing Next-Day Ensemble Probabilistic Precipitation Forecasts Using Random Forests, Weather Forecast., 34, 2017–2044, https://doi.org/10.1175/WAF-D-19-0109.1, 2019.

Mao, Y. and Sorteberg, A.: Improving Radar-Based Precipitation Nowcasts with Machine Learning Using an Approach Based on Random Forest, Weather Forecast., 35, 2461–2478, https://doi.org/10.1175/WAF-D-20-0080.1, 2020.

Miao, K., Wang, W., Hu, R., Zhang, L., Zhang, Y., Wang, X., and Nian, F.: Multimodal Semisupervised Deep Graph Learning for Automatic Precipitation Nowcasting, Math. Probl. Eng., 2020, 1–9, https://doi.org/10.1155/2020/4018042, 2020.

Ran, Y., Wang, H., Tian, L., Wu, J., and Li, X.: Precipitation cloud identification based on faster-RCNN for Doppler weather radar, EURASIP J. Wirel. Commun. Netw., 2021, 19, https://doi.org/10.1186/s13638-021-01896-5, 2021.

Ravuri, S., Lenc, K., Willson, M., Kangin, D., Lam, R., Mirowski, P., Athanassiadou, M., Kashem, S., Madge, S., Prudden, R., Mandhane, A., Clark, A., Brock, A., Simonyan, K., Hadsell, R., Robinson, N., Clancy, E., and Mohamed, S.: Skillful Precipitation Nowcasting using Deep Generative Models of Radar, arXiv [preprint], arXiv:2104.00954, April 2021.

Ren, S., He, K., Girshick, R., and Sun, J.: Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks [cs.CV], arXiv [preprint], arXiv:1506.01497v3, January 2016.

Ronneberger, O., Fischer, P., and Brox, T.: U-Net: Convolutional Networks for Biomedical Image Segmentation, in: Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, vol. 9351, edited by: Navab, N., Hornegger, J., Wells, W. M., and Frangi, A. F., Springer International Publishing, Cham, 234–241, https://doi.org/10.1007/978-3-319-24574-4_28, 2015.

Sadeghi, M., Asanjan, A. A., Faridzad, M., Nguyen, P., Hsu, K., Sorooshian, S., and Braithwaite, D.: PERSIANN-CNN: Precipitation Estimation from Remotely Sensed Information Using Artificial Neural Networks–Convolutional Neural Networks, J. Hydrometeorol., 20, 2273–2289, https://doi.org/10.1175/JHM-D-19-0110.1, 2019.

Shi, X., Chen, Z., Wang, H., Yeung, D.-Y., Wong, W., and Woo, W.: Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting, arXiv [preprint], arXiv:1506.04214, September 2015.

Shi, X., Gao, Z., Lausen, L., Wang, H., Yeung, D.-Y., Wong, W., and Woo, W.: Deep Learning for Precipitation Nowcasting: A Benchmark and A New Model, arXiv [preprint], arXiv:1706.03458, October 2017.

Sønderby, C. K., Espeholt, L., Heek, J., Dehghani, M., Oliver, A., Salimans, T., Agrawal, S., Hickey, J., and Kalchbrenner, N.: MetNet: A Neural Weather Model for Precipitation Forecasting, arXiv [preprint], arXiv:2003.12140, March 2020.

Tao, Y., Gao, X., Hsu, K., Sorooshian, S., and Ihler, A.: A Deep Neural Network Modeling Framework to Reduce Bias in Satellite Precipitation Products, J. Hydrometeorol., 17, 931–945, https://doi.org/10.1175/JHM-D-15-0075.1, 2016.

Tao, Y., Gao, X., Ihler, A., Sorooshian, S., and Hsu, K.: Precipitation Identification with Bispectral Satellite Information Using Deep Learning Approaches, J. Hydrometeorol., 18, 1271–1283, https://doi.org/10.1175/JHM-D-16-0176.1, 2017.

Todini, E.: A Bayesian technique for conditioning radar precipitation estimates to rain-gauge measurements, Hydrol. Earth Syst. Sci., 5, 187–199, https://doi.org/10.5194/hess-5-187-2001, 2001.

Tran, Q.-K. and Song, S.: Computer Vision in Precipitation Nowcasting: Applying Image Quality Assessment Metrics for Training Deep Neural Networks, Atmosphere, 10, 244, https://doi.org/10.3390/atmos10050244, 2019.

Veillette, M. S., Samsi, S., and Mattioli, C. J.: SEVIR: A Storm Event Imagery Dataset for Deep Learning Applications in Radar and Satellite Meteorology, 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, Canada, 6–12 December 2020, 11 pp., 2020.

Vislocky, R. L. and Young, G. S.: The use of perfect prog forecasts to improve model output statistics forecasts of precipitation probability, Weather Forecast., 4, 202–209, 1989.

Wang, Y., Gao, Z., Long, M., Wang, J., and Yu, P. S.: PredRNN: Towards A Resolution of the Deep-in-Time Dilemma in Spatiotemporal Predictive Learning [cs, stat], arXiv [preprint], arXiv:1804.06300, November 2018.

Wang, Y., Jiang, L., Yang, M.-H., Li, L.-J., Long, M., and Fei-Fei, L.: Eidetic 3D LSTM: A Model for Video Prediction and Beyond, International Conference on Learning Representations, Ernest N. Morial Convention Center, New Orleans, 6–9 May 2019, 14 pp., 2019a.

Wang, Y., Zhang, J., Zhu, H., Long, M., Wang, J., and Yu, P. S.: Memory In Memory: A Predictive Neural Network for Learning Higher-Order Non-Stationarity from Spatiotemporal Dynamics [cs, stat], arXiv [preprint], arXiv:1811.07490, April 2019b.

Wang, Z., Bovik, A. C., Sheikh, H. R., and Simoncelli, E. P.: Image Quality Assessment: From Error Visibility to Structural Similarity, IEEE Trans. Image Process., 13, 600–612, https://doi.org/10.1109/TIP.2003.819861, 2004.

Wei, C.-C.: Wavelet Support Vector Machines for Forecasting Precipitation in Tropical Cyclones: Comparisons with GSVM, Regression, and MM5, Weather Forecast., 27, 438–450, https://doi.org/10.1175/WAF-D-11-00004.1, 2012.

Woo, W. and Wong, W.: Operational Application of Optical Flow Techniques to Radar-Based Rainfall Nowcasting, Atmosphere, 8, 48, https://doi.org/10.3390/atmos8030048, 2017.

Wu, Y., Tang, Y., Yang, X., Zhang, W., and Zhang, G.: Graph Convolutional Regression Networks for Quantitative Precipitation Estimation, IEEE Geosci. Remote Sensing Lett., 1–5, https://doi.org/10.1109/LGRS.2020.2994087, 2020.

Yan, B.-Y., Yang, C., Chen, F., Takeda, K., and Wang, C.: FDNet: A Deep Learning Approach with Two Parallel Cross Encoding Pathways for Precipitation Nowcasting [cs.LG], arXiv [preprint], arXiv:2105.02585, 22 pp., 2021.

Yang, L. and Deng, M.: Based on k-Means and Fuzzy k-Means Algorithm Classification of Precipitation, in: 2010 International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 29–31 October 2010, 218–221, https://doi.org/10.1109/ISCID.2010.72, 2010.

Yo, T.-S., Su, S.-H., Chu, J.-L., Chang, C.-W., and Kuo, H.-C.: A deep learning approach to radar-based QPE, Earth Space Sci., 8, e2020EA001340, https://doi.org/10.1029/2020EA001340, 2021.

Yu, B., Yin, H., and Zhu, Z.: Spatio-Temporal Graph Convolutional Networks: A Deep Learning Framework for Traffic Forecasting [cs.LG], arXiv [preprint], arXiv:1709.04875, July 2018.