the Creative Commons Attribution 4.0 License.

Documenting numerical experiments in support of the Coupled Model Intercomparison Project Phase 6 (CMIP6)

Charlotte Pascoe

Bryan N. Lawrence

Eric Guilyardi

Martin Juckes

Karl E. Taylor

Numerical simulation, and in particular simulation of the earth system, relies on contributions from diverse communities, from those who develop models to those involved in devising, executing, and analysing numerical experiments. Often these people work in different institutions and may be working with significant separation in time (particularly analysts, who may be working on data produced years earlier), and they typically communicate via published information (whether journal papers, technical notes, or websites). The complexity of the models, experiments, and methodologies, along with the diversity (and sometimes inexact nature) of information sources, can easily lead to misinterpretation of what was actually intended or done. In this paper we introduce a taxonomy of terms for more clearly defining numerical experiments, put it in the context of previous work on experimental ontologies, and describe how we have used it to document the experiments of the sixth phase of the Coupled Model Intercomparison Project (CMIP6). We describe how, through iteration with a range of CMIP6 stakeholders, we rationalized multiple sources of information and improved the clarity of experimental definitions. We demonstrate how this process has added value to CMIP6 itself by (a) helping those devising experiments to be clear about their goals and their implementation, (b) making it easier for those executing experiments to know what is intended, (c) exposing interrelationships between experiments, and (d) making it clearer for third parties (data users) to understand the CMIP6 experiments. We conclude with some lessons learnt and how these may be applied to future CMIP phases as well as other modelling campaigns.

Climate modelling involves the use of models to carry out simulations of the real world, usually as part of an experiment aimed at understanding processes, testing hypotheses, or projecting some future climate system behaviour. Executing such simulations requires an explicit understanding of experiment definitions including knowledge of how the model must be configured to correctly execute the experiment. This is often not trivial, especially when those executing the simulation were not party to the discussions defining the experiment. Analysing simulation data also requires at least minimal knowledge of both the models used and the experimental protocol to avoid drawing inappropriate conclusions. This again can be non-trivial, especially when the analysts are not close to those who designed and/or ran the experiments.

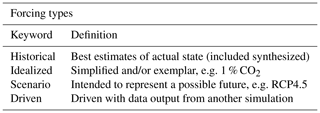

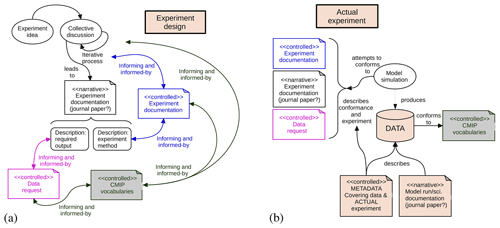

Figure 1. (a) The process of defining an “experiment” involves multiple steps, interactions, and component descriptions. In the simplest case, ideas are iterated leading to some sort of final description (white boxes), but at scale, there is a need to control the structure used to document the experiments (blue) and their intended output (data request, magenta), and such structure needs to utilize controlled vocabularies (shaded). (b) The realization of an experiment is carried out by a model simulation which produces data, but in practice simulations often deviate in detail from the experiment protocol, and such deviations themselves need to be recognizable; how well a simulation conforms to the protocol is a key element of the documentation. In both (a) and (b) the ≪narrative≫ and ≪controlled≫ notation indicates the key characteristics of the two types of documentation: the former in scientific prose for human readers, the latter more structured for consumption both by humans and automated machinery.

Traditionally numerical experiment protocols have appeared in the published literature, often alongside analysis. This approach has worked for years, since mostly the same individuals designed the experiment, ran the simulations, and carried out the analysis. However, as model inter-comparison has become more germane to the science, there has been growing separation between designers, executors, and analysts. This separation has become acute with the advent of the sixth phase of the Coupled Model Intercomparison Project (CMIP6, Eyring et al., 2016). With dozens of models and experiments, dozens of modelling centres engaged, and hundreds of output variables, it is no longer possible for all modellers to fully digest all the nuances of all the experiments which they are required to execute. Simulations are now carried out for direct application within specific model inter-comparison projects (or MIPs), for reuse between MIPs, and often with an explicit requirement that they be made available to support serendipitous analysis. Much of such reuse is by people who have no intimate knowledge of either the model or the experiment.

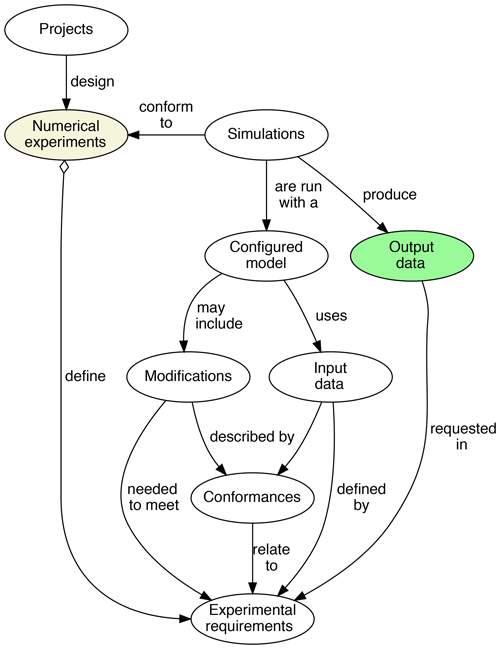

Figure 2. Simulation workflow in which experimental requirements (termed “Numerical requirements”) play a central role.

This increasing separation within the workflow, and between individuals and communities, leads to an increased necessity for information transfer, both between people and across time (often analysts are working years after those who designed the experiments have moved on). In this paper we introduce the “design” component of the Earth System Documentation (ES-DOC) project ontology, intended to aid in this information transfer by supporting both those designing experiments (especially those with inter-experiment dependencies) and those who try to execute and/or understand what has been executed. This ontology provides a structure and vocabulary for building experiment descriptions which can be easily viewed, shared, and understood. It is not intended to supplant journal articles, rather to provide recipes which can be reused (by those running models) and understood by analysts as an introduction to the experiment designs. We explain how it was deployed in support of CMIP6, how it has added value to the CMIP6 process, and how we expect it to be used in the future based on lessons learnt thus far.

We begin by describing key elements of simulation workflows and introduce a formal vocabulary for describing the experiments and the simulations. We provide some examples of ES-DOC-compliant experiment descriptions and then present some of the experiment linkages which can be understood from the use of our canonical experiment descriptions. Our experiences in gathering information and the linkages (and some of the missing links) required to define and document CMIP6 experiments expose opportunities for improving future MIP designs, which we present in the “Summary and further work” section.

In this section we introduce the key concepts involved in designing experiments and describing simulation workflows. We describe how this has evolved from previous work and differs from other work with which we are familiar.

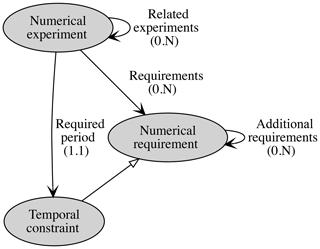

Figure 3. NumericalExperiments are designed and governed by MIPs. Each numerical experiment is defined by NumericalRequirements, including a mandatory constraint setting out the required period of the numerical experiment. Numerical requirements may have complicated internal structures (see Fig. 4). In both this and the next figure, arrows and their labels use the Unified Modelling Language (UML) syntax to describe the relationships between the entities named in the bubbles. UML provides a standard way to visualize the components of a system and how they relate to each other; different styles of arrow denote different types of relationship. The UML relationships used here and in Fig. 4 are described in Table 1 of Hassell et al. (2017); a short primer on UML concepts can be found in Appendix A of Hassell et al. (2017).

2.1 Experiment definition

The process of defining numerical experiments is potentially complex (Fig. 1a). It begins with an idea and often entails an iterative community discussion which results in the final experimental definition and documentation. In the simplest cases, such documentation may be prose, in a paper or a journal article, but when many detailed requirements are in play and/or many experiments and individuals are involved, it is helpful to structure the documentation – both to ensure that key steps are recorded and to aid in the inter-comparison of methodology between experiments (especially the automatic generation of tables and views). Key requirements include being very specific about imposed experimental conditions and the required output.

Once the experiments are defined (Fig. 1a), modelling groups realize the experiments in the form of simulations which attempt to conform to the specifications of the experiment and which produce the desired output (Fig. 1b).

In both generic experiment documentation and in defining data requests, it is helpful to utilize controlled vocabularies so that unambiguous machine navigable links can exist between the design documentation, simulation execution, data production, and the analysis outcomes.

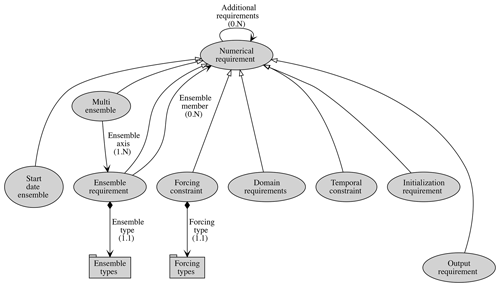

Figure 4. NumericalRequirements govern the structure of a numerical experiment covering constraints on duration (TemporalConstraint), the domain covered (DomainRequirement, e.g. global or a regional bounding box), any forcings (ForcingConstraint, such as particular greenhouse gas concentrations), OutputRequirements (e.g. the CMIP6 data request), and a complicated interplay of potential EnsembleRequirements (see text). Controlled vocabularies are necessary for EnsembleTypes, ForcingTypes, and NumericalRequirementScopes. Indices associated with the connectors indicate the numerical nature of the relationships; e.g. a NumericalRequirement can have anywhere from zero to many (0.N) additional requirements, whereas an EnsembleRequirement can have only one (1.1) EnsembleType.

2.2 Key concepts

The requisite controlled vocabulary for a numerical simulation workflow requires addressing the actions and artefacts of the workflow summarized in Fig. 2, in which we see Projects (e.g. MIPs) design NumericalExperiments and define their NumericalRequirements. (In this section we use italics to denote specific concepts in the ES-DOC taxonomy.) Experiment definitions are adopted by modelling groups who use a model to run Simulations, with Output Data requirements (“data requests”) being one of the many experimental requirements. A simulation is run with a Configured Model, using a configuration which will include details of InputData and may include Modifications required to conform to the experiment requirements. Not all of the configuration will be related to the experiment; aspects of the workflow and computing environment may also need to be configured. In practice, simulations can deviate in detail from the experiment protocol; that is, they do not conform exactly to the requirements. A key part of a simulation description, then, is the set of Conformance descriptions which indicate how the simulation conforms to the experimental requirements. In this paper we are limiting our attention to the definition of the Experiment and its Requirements, with application to CMIP6 and the relationship between the MIPs and those requirements. We address other parts of the workflow elsewhere.

As noted above, a project has certain scientific objectives that lead it to define one or more NumericalExperiments. We describe the rules for performing the numerical experiments as NumericalRequirements (Fig. 3). Both NumericalExperiments and NumericalRequirements may be nested and the former may also explicitly identify specific related experiments which may provide dependencies or other scientific context such as heritage. For example, an experiment from which initialization fields are obtained is referred to as the parent experiment. Nested requirements are used to bundle requirements together for easy reuse across experiments. (An example of a nested requirement can be seen in the Appendix, where Table A1 shows how all the components which go into a common CMIP6 pre-industrial solar particle forcing are bundled together. We will see later that in CMIP6, many implicit relationships arise from common requirements.)
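The nesting and parent relationships described above can be pictured with a small Python sketch. The class names echo the ES-DOC concepts, but the attributes, the `flatten` helper, and the example names are simplified assumptions made for illustration; they are not the actual ES-DOC classes or the content of Table A1.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class NumericalRequirement:
    """Hypothetical, simplified stand-in for an ES-DOC requirement."""
    name: str
    # Nested requirements let a bundle be reused across experiments.
    additional_requirements: List["NumericalRequirement"] = field(default_factory=list)

    def flatten(self) -> List[str]:
        """Return the names of this requirement and everything nested in it."""
        names = [self.name]
        for child in self.additional_requirements:
            names.extend(child.flatten())
        return names


@dataclass
class NumericalExperiment:
    """Hypothetical, simplified stand-in for an ES-DOC experiment."""
    name: str
    requirements: List[NumericalRequirement] = field(default_factory=list)
    # The parent is the experiment from which initialization fields are obtained.
    parent: Optional["NumericalExperiment"] = None

    def all_requirement_names(self) -> List[str]:
        names: List[str] = []
        for requirement in self.requirements:
            names.extend(requirement.flatten())
        return names


# Illustrative bundle; the component names are placeholders.
bundle = NumericalRequirement(
    "forcing-bundle",
    [NumericalRequirement("component-a"), NumericalRequirement("component-b")],
)
control = NumericalExperiment("control-like", [bundle])
child = NumericalExperiment("child-like", [bundle], parent=control)

# Requirements common to both experiments expose an implicit relationship.
shared = set(control.all_requirement_names()) & set(child.all_requirement_names())
```

Because both experiments reference the same bundle object, comparing their flattened requirement names is enough to reveal that they are related, even though neither names the other through its requirements.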

The experiment description itself includes attributes covering the scientific objective and the experiment rationale addressing the following questions: what is this experiment for and why is it being done?

2.3 Requirements

The NumericalRequirements are the set of instructions required to configure a model and provide prescribed input needed to execute a simulation that conforms to a NumericalExperiment. These instructions include (Fig. 4) specifications such as the start date, simulation period, ensemble size, and structure (if required), any forcings (e.g. external boundary conditions such as the requirement to impose a 1 % increase in carbon dioxide over 100 years), initialization requirements (e.g. whether the model should be “spun-up” or initialized from the output of a simulation from another experiment), and domain requirements (for limited area models). A scope keyword from a controlled vocabulary can be used to indicate whether the requirement is reused elsewhere, e.g. in the specifications for related experiments.

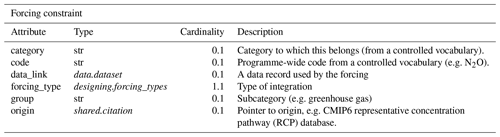

Each requirement carries a number of optional attributes and may contain mandatory attributes, as shown in Tables 1 and 2 for a ForcingConstraint.

Table 1. ES-DOC controlled structure for describing a forcing constraint: each attribute has a name, a Python data type (those in italics are other ES-DOC types), a cardinality (0.1 means either zero or one, 1.1 means one is required) and a description.
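To make the cardinality notation concrete, the following minimal sketch checks a record against a list of (attribute, Python type, cardinality) entries. The attribute names in `SCHEMA` and the `check` function are hypothetical and chosen only for illustration; they do not reproduce the actual rows of Table 1 or any ES-DOC tooling.

```python
from typing import Any, Dict, List, Tuple

# (attribute name, Python type, cardinality): hypothetical entries, not Table 1 itself.
SCHEMA: List[Tuple[str, type, str]] = [
    ("name", str, "1.1"),          # exactly one is required
    ("forcing_type", str, "1.1"),  # exactly one is required
    ("description", str, "0.1"),   # zero or one
    ("keywords", str, "0.N"),      # zero to many
]


def check(record: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the record conforms."""
    problems = []
    for attr, py_type, cardinality in SCHEMA:
        lower, upper = cardinality.split(".")
        value = record.get(attr)
        # Normalise to a list so every cardinality is checked the same way.
        if value is None:
            values = []
        elif isinstance(value, list):
            values = value
        else:
            values = [value]
        if len(values) < int(lower):
            problems.append(f"{attr}: required ({cardinality}) but missing")
        if upper != "N" and len(values) > int(upper):
            problems.append(f"{attr}: at most {upper} value(s) allowed")
        for item in values:
            if not isinstance(item, py_type):
                problems.append(f"{attr}: expected {py_type.__name__}")
    return problems
```

A record that supplies both mandatory attributes passes with an empty problem list, while omitting `forcing_type` yields a single "required" message.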

2.4 Related work

The ES-DOC vocabulary is an evolution of the “Metafor” system (Lawrence et al., 2012; Guilyardi et al., 2013; Moine et al., 2014), developed to support the fifth CMIP phase (CMIP5). Metafor was intended to provide the structured vocabulary and tools to allow those contributing simulations to CMIP5 to document their models and simulations. In that context, Metafor was a qualified success; useful information was collected, but the tools were not able to be fully tested before use and were found to be difficult to use by those providing the documentation content. Such difficulties resulted in documentation generally arriving too late to be of use to the target audience: scientists analysing the data. The lessons learnt from that exercise were baked into the ES-DOC project which has superseded Metafor, leading to a much improved ontology, better tooling, and improved viewing of the resulting documentation (https://es-doc.org, last access: 2 March 2020).

The ES-DOC controlled vocabulary is an instance of an ontology (“a formal specification of a shared conceptualization”, Borst, 1997). There is considerable literature outlining the importance of such ontologies in establishing common workflow patterns with the goal of improving reproduction of results and reuse of techniques (whether they be traditional laboratory experiments or in silico) and explicitly calling out the failure of published papers as a medium to provide all the details of experiment requirements (e.g. Vanschoren et al., 2012, in the context of reproducible machine learning).

The description of ontologies is often presented in the context of establishing provenance for specific workflows and often only retrospectively. Work supporting scientific workflows has mainly been concerned with execution and analysis phases, with little attention paid to the composition phase of workflows (Mattoso et al., 2010), let alone the more abstract goals.

For the “conception phase” of workflow design, a controlled vocabulary introduced by Mattoso et al. (2010) as part of their proposed description of “experiment life cycles” directly maps to our work on experiment descriptions (discussed in this paper). In their view, the conception phase potentially consists of an abstract workflow, describing what should be done (but without specifying how), and a concrete workflow, binding abstract workflows to specific resources (models, algorithms, platforms, etc). ES-DOC respects that split with an explicit separation of design (experiment descriptions) and simulation (the act of using a configured model in an attempt to produce data conforming to the constraints of an experiment).

The notion of “an experiment” also needs attention, since the experiments described here are even more abstract than the notion of “a workflow” and cover a wider scope than that often attributed to an experiment. Dictionary definitions of “scientific experiment” generally emphasize the relationship between hypothesis and experiment (e.g. “An experiment is a procedure carried out to support, refute, or validate a hypothesis. Experiments provide insight into cause-and-effect by demonstrating what outcome occurs when a particular factor is manipulated.”, Wikipedia contributors, 2018). In this context “factor” has a special meaning, a factor generally being one of a few input variables; but in numerical modelling there can be a multiplicity of such factors, leading to difficulties in formal experimental definition and consistency of results (Zocholl et al., 2018 in the context of big data experiments).

The first formal attempt to define a generic ontology of experiments (as opposed to workflows) appears to be that of Soldatova and King (2007), who also expressly identify the limitations of natural language alone for precision and disambiguation. Key components of their ontology include the notions of experimental classification, design, results, and their relationships, but it is not obvious how this ontology can be used to guide either conception or implementation. To specify more fully the abstract conception phase of workflow with more generic experiment concepts, da Cruz et al. (2012) build on Mattoso et al. (2010) with much the same aim as Soldatova and King (2007); however, they introduce many elements in common with ES-DOC, and one could imagine some future mapping between these ontologies (although there is not yet any clear use case for this).

With the advent of simulation, another type of experiment (beyond those defined earlier) is possible: the simulation (and analysis) of events which cannot be measured empirically, such as predictions of the state of a system influenced by factors which cannot be replicated (or which may be hypothetical, such as the climate on a planet with no continents). For climate science, the most important of these is of course the future; experiments can be used to predict possible futures (scenarios).

In this form of experiment, ES-DOC implicitly defines two classes of “controllable factor”: those controlled by the experiment design (and defined in NumericalRequirements, in particular, by constraints) and those which are controlled by experiment implementation (the actual modelling system). Only the former are discussed here. Possibly because most of the existing work does not directly address this class of experiment, there is no similar clear split along these lines in the literature we have seen.

The rationale and need for CMIP6 were introduced in Meehl et al. (2014), and the initial set of MIPs which arose are documented in Eyring et al. (2016). In this section we discuss a little of the history leading to CMIP6 in terms of how the documentation requirement has evolved; we discuss the interaction of various players in the specification of the experiments and how that has led to the ES-DOC descriptions of the CMIP6 experiments and their important forcing constraints.

3.1 History

Global model inter-comparison projects have a long history, with pioneering efforts beginning in the late 1980s (e.g. Cess et al., 1989; Gates et al., 1999). The first phase of CMIP was initiated in the mid 1990s (Meehl et al., 1997). CMIP1 involved only a handful of modelling groups, but participation grew with each succeeding phase of CMIP. Phase six (CMIP6, underway now) will involve dozens of institutions, including all the major climate modelling centres and many smaller modelling groups. Throughout the CMIP history, there has been a heavy reliance on CMIP results in the preparation of Intergovernmental Panel on Climate Change (IPCC) reports – CMIP1 diagnostics were linked to IPCC diagnostics and the timing of CMIP phases has been associated with the IPCC timelines.

With each phase, more complexity has been introduced. CMIP1 had four relatively simple goals: to investigate differences in the models’ response to increasing atmospheric CO2, to document mean model climate errors, to assess the ability of models to simulate variability, and to assess flux adjustment (Sausen et al., 1988). CMIP6 continues to address the first three of these objectives (flux adjustment being rarely used in modern models), but with a broader emphasis on past, present, and future climate in a variety of contexts covering process understanding, suitability for impacts and adaptation, and climate change mitigation.

In CMIP5 and again in CMIP6, there was a substantial increase in the number and scope of experiments. This has led to a new organizational framework in CMIP6 involving the distributed management of a collection of quasi-independently constructed model inter-comparison projects, which were required to meet requirements and expectations set by the overall coordinating body (the CMIP Panel) before they were “endorsed” as part of CMIP6. These MIPs were designed in the context of both increasing scope and wider-spread interest and the growth of two important constituencies: (1) those designing “diagnostic MIPs”, which do not require new experiments, but rather request specific output from existing planned experiments to address specific interests, and (2) the even wider group of downstream users who use the CMIP data opportunistically, having little or no direct contact with either the MIP designers or the modelling groups who ran the experiments.

With the increasing complexity, size, and scope of CMIP came a requirement to improve the documentation of the activity, from experiment specification to data output. CMIP5 addressed this in three ways: by documenting the experiment design in a detailed specification paper (Taylor et al., 2011); by improving documentation of metadata requirements and data layout to improve access to, and interpretation of, simulation output; and by requiring model participants to exploit the Metafor system (Sect. 2.4) to describe their models and simulations. ES-DOC use is now required for the documentation of CMIP6 models and ensembles (Balaji et al., 2018).

3.2 Documentation and the MIP design process

The overview of the experiment design process given in Fig. 1 can be directly applied to the way many of the CMIP6 MIPs were designed. For CMIP6 the iterative process involved the CMIP Panel, the CMIP6-endorsed MIPs, the CMIP team at the Program for Climate Model Diagnosis and Intercomparison (PCMDI), the ES-DOC team, and the development of the data request (Juckes et al., 2020). The discussion revolved around interpreting and clarifying the MIP requirements in terms of data and experiment setup, as initially described by endorsed MIP leaders in their proposals to the CMIP Panel and later in a special issue of Geoscientific Model Development (GMD). The ES-DOC community worked towards additional precision in the experiment descriptions (in accordance with the structure described in Sect. 2.2) and sought opportunities for synergy between MIPs. The CMIP6 team at PCMDI developed the necessary common controlled cross-experimental CMIP vocabularies (the CMIP6-CV). The data request was an integral part of the process, since some MIPs were dependent on data produced in other MIPs, and in all cases the data were the key interface between the aspirations of the MIP and the community of analysts who need to deliver the science.

The semantic structure of the data request was developed in parallel to the development of the CMIP6 version of ES-DOC; each had to deal with a distinctive range of complex expectations and requirements. Hence ES-DOC has not yet fully defined or populated the OutputRequirement shown in Fig. 4. Similarly, the data request was not able to fully exploit ES-DOC experiment descriptions. A future development will bring these together and make use of the relationships between MIPs and between their output requirements and objectives. However, despite some semantic differences, there was communication between all parties throughout the definition phase.

The initial ES-DOC documentation was generated from a range of sources and then iterated with (potentially) all parties involved, which provided both challenges and opportunities. An example of the challenge was keeping track of material through changing nomenclature. Experiment names were changed, experiments were discarded, and new experiments were added. In one case an experiment ensemble was formed from a set of hitherto separate experiments. Conversely, a key opportunity was the ability to influence MIP design to add focus and clarity, including influencing those very names. For example, the names of experiments which applied sea surface temperature (SST) anomalies for positive and negative phases of ocean oscillation states were changed from “plus” to “pos” and “minus” to “neg” to better reflect the nature of the forcing and the relationship between experiment objectives and names.

The ES-DOC documentation process also raised a number of discrepancies and duplications, which were sorted out by conversations mediated by PCMDI. Many of the latter arose from independent development within MIPs of what eventually became shared experiments between those MIPs. For example, not all shared experiment opportunities were identified as such by the MIP teams, and it was the iterative process and the consolidated ES-DOC information which exposed the potential for shared experimental design (and significant savings in computational resources).

A specific example of such a saving occurred with ScenarioMIP and CDRMIP, which both included climate change overshoot scenario experiments that examine the influence of CO2 removal (negative net emissions) from 2040 to 2100 following unmitigated baseline scenarios through to 2040. As originally conceived, the ScenarioMIP experiment (ssp534-over) utilized the year 2040 from the CMIP6 updated RCP8.5 for initialization, but the CDRMIP equivalent (esm-ssp534-over) requested initialization in 2015 from the esm-historical experiment. In developing the ES-DOC descriptions of these experiments it was apparent that CDRMIP could follow the ScenarioMIP example and initialize from the C4MIP experiment esm-ssp585 in 2040 and avoid 25 years of unnecessary simulation (by multiple groups). This is now the recommended protocol.
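The size of this saving follows from simple arithmetic on the two initialization years given above. The sketch below only restates that arithmetic; the function name and the idea of multiplying by a number of simulations are illustrative assumptions, and no actual count of participating groups is implied.

```python
ORIGINAL_START = 2015  # CDRMIP's original request: initialize from esm-historical
REVISED_START = 2040   # recommended protocol: initialize from esm-ssp585


def years_saved(n_simulations: int = 1) -> int:
    """Simulated years avoided across n_simulations runs of esm-ssp534-over."""
    if n_simulations < 0:
        raise ValueError("n_simulations must be non-negative")
    return (REVISED_START - ORIGINAL_START) * n_simulations


saved_per_simulation = years_saved()
```

Each simulation avoids 25 model years, so the total saving scales linearly with the number of groups and ensemble members that run the experiment.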

Discrepancies also arose from the parallel nature of the workflow. For example, specifications could vary between what was published in a CMIP6-endorsed MIP's GMD paper and what had been agreed by the MIP authors with the data request and/or the PCMDI team with the controlled vocabulary. On occasion ES-DOC publication exposed such issues, resulting in revisions all round. This process required the sustained attention of representatives of each of these groups and eventually resulted in a system relying on Slack (https://slack.com/, last access: 2 March 2020) to notify all involved of updates but usually requiring initiation by a human who has identified an issue. However, synchronicity was and is a problem, with quite different timescales involved in each of the processes. For example, the formal literature itself evolved and so version control has been important – all current ES-DOC documents cite the literature as it was during the design phase and will be updated as necessary. A rather late addition to the taxonomies supported by both ES-DOC and PCMDI was support for aliases, to try and minimize issues arising from parallel naming conventions for experiments. The use of aliases addressed the documentation and specification issues associated with experiment names evolving or being specified differently within a MIP and the wider CMIP6. For example some GeoMIP experiments have very different names in the GeoMIP GMD paper and in the CMIP6-CV, e.g. “G1extSlice1” vs. “piSST-4xCO2-solar”.
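One way to picture alias support is as a lookup that maps every known name of an experiment to a single canonical name. The sketch below uses the GeoMIP pair quoted above; treating the CMIP6-CV name as the canonical one, along with the `build_lookup` and `resolve` helpers, is an assumption made for illustration and does not describe the ES-DOC or PCMDI implementation.

```python
from typing import Dict, List

# Canonical name (assumed here to be the CMIP6-CV name) -> aliases used elsewhere.
ALIASES: Dict[str, List[str]] = {
    "piSST-4xCO2-solar": ["G1extSlice1"],
}


def build_lookup(aliases: Dict[str, List[str]]) -> Dict[str, str]:
    """Map every canonical name and alias to its canonical name."""
    lookup: Dict[str, str] = {}
    for canonical, names in aliases.items():
        for name in [canonical, *names]:
            # An alias pointing at two different experiments would be ambiguous.
            if lookup.get(name, canonical) != canonical:
                raise ValueError(f"ambiguous experiment name: {name}")
            lookup[name] = canonical
    return lookup


def resolve(name: str, lookup: Dict[str, str]) -> str:
    """Return the canonical experiment name, or raise KeyError if unknown."""
    if name not in lookup:
        raise KeyError(f"unknown experiment name: {name}")
    return lookup[name]


LOOKUP = build_lookup(ALIASES)
```

With such a table, documentation keyed on either name resolves to the same experiment description, which is the practical effect that aliases are meant to achieve.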

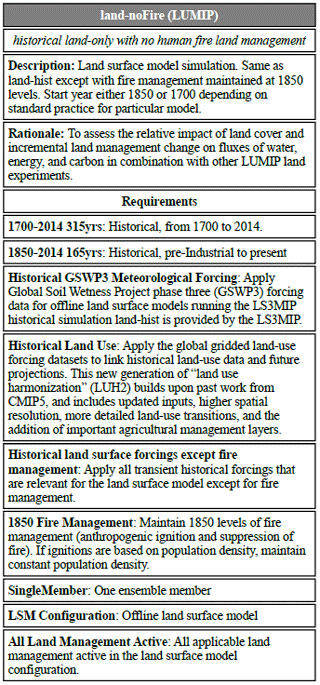

This process had other outcomes too: LUMIP originally had a set of experiments that were envisaged to address the impact of particular behaviours such as “grass crops with human fire management”. Some of these morphed to become entirely the opposite of their original incarnation, such as “land-noFIRE”, where the experiment requires no human fire management (see Table A2). Rather than building experiments that simulate the effect of including a phenomenon, LUMIP constrained this suite of experiments in terms of the phenomena that were removed from the model. This change prompted a discussion about how then to describe experiments that are built around the concept of missing out one or more processes. For instance, with a suite of experiments that require that the land scheme is run without phenomenon A, then without phenomenon B, without phenomenon C, and finally without phenomenon D, can we define the individual experiments in the suite with the form “not A but with B, C, and D” and “not B but with A, C, and D”, as in the case of an experiment where one forcing constraint might be set to pre-industrial levels whilst the rest of the forcing constraints are set to present-day conditions? It turns out that there is not yet much uniformity about how land models are set up; each is very different, so it only makes sense for LUMIP to constrain this suite of experiments in terms of the phenomenon that is removed. That is, the experiments should simply be described with the anti-pattern “not A” and “not B”. It has become clear that the way an experiment's forcing constraints are framed depends to some extent on the maturity and uniformity of the models that are expected to run the simulations.
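The difference between the two framings can be sketched in a few lines of Python. The function names and the phenomenon labels A to D are hypothetical and follow the wording of the discussion above; they are not part of the ES-DOC vocabulary.

```python
from typing import Dict, FrozenSet, Iterable


def removed_only(removed: Iterable[str]) -> FrozenSet[str]:
    """'not A' form: record only what is switched off."""
    return frozenset(removed)


def fully_enumerated(removed: Iterable[str], known: Iterable[str]) -> Dict[str, bool]:
    """'not A but with B, C, and D' form: needs an agreed list of phenomena."""
    removed_set = frozenset(removed)
    known_set = frozenset(known)
    unknown = removed_set - known_set
    if unknown:
        raise ValueError(f"phenomena not in the agreed list: {sorted(unknown)}")
    # True means the phenomenon is included in the simulation.
    return {name: name not in removed_set for name in sorted(known_set)}


not_a = removed_only(["A"])
not_a_explicit = fully_enumerated(["A"], ["A", "B", "C", "D"])
```

The fully enumerated form only works when every model shares the same list of phenomena, which is why the "not A" form suits a suite of experiments run by land models that differ widely in their setup.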

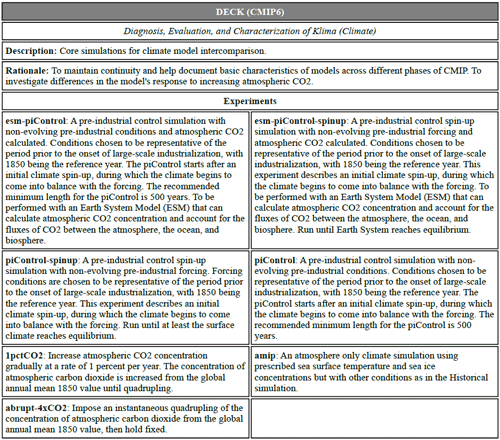

Table 3. The experiments within the DECK, as described in ES-DOC. The content of this table, like all the ES-DOC tables in this paper, was generated directly from the online documentation using a Python script (details in the Appendix). The choice of content to display was made in the Python code; other choices could be made (e.g. see https://documentation.es-doc.org/cmip6/mips/deck, last access: 2 March 2020).

Table 4. The modelling CMIP6 experiments as introduced in Eyring et al. (2016). This list does not include CORDEX or the diagnostic MIPs, which are not currently included in the ES-DOC MIP documentation.

3.3 Forcing constraints in practice

Somewhat naively, the initial concepts for ForcingConstraints anticipated the description of forcing in terms of specific input boundary conditions or, perhaps, specific modifications needed to models – this was how they were described for the CMIP5 documentation. The ES-DOC semantics introduced for CMIP6 are more inclusive and allowed a wider range of possible forcing constraints. For example, in CMIP5 the infamous Metafor questionnaire asked modellers to describe how they implemented solar forcing. In CMIP6, the approach to solar forcing requirements was outlined in the literature (Matthes et al., 2017), and the resulting requirements are found in rather more precise forcing constraints (with additional related requirements), an example of which appears in Table A1. The ES-DOC documentation now provides a checklist of important requirements and a route to the literature for both those implementing the experiments and those interpreting their results. Modellers can now use this information both in setting up their simulations and in documenting that setup. A discussion of how the latter is done for CMIP6 (and how it builds on lessons learnt from the generally poor experiences interacting with an excessively long and complicated CMIP5 questionnaire) will appear elsewhere.

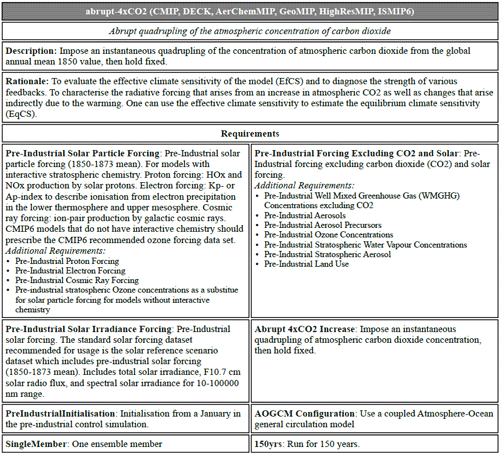

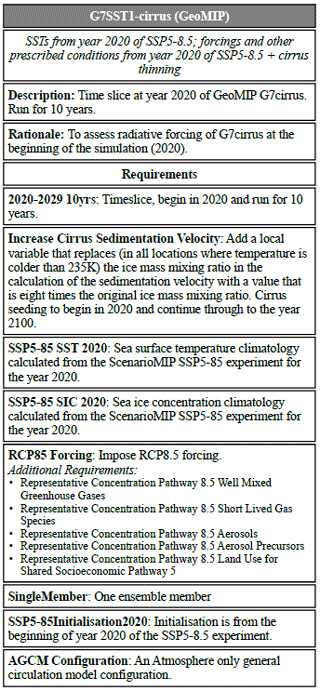

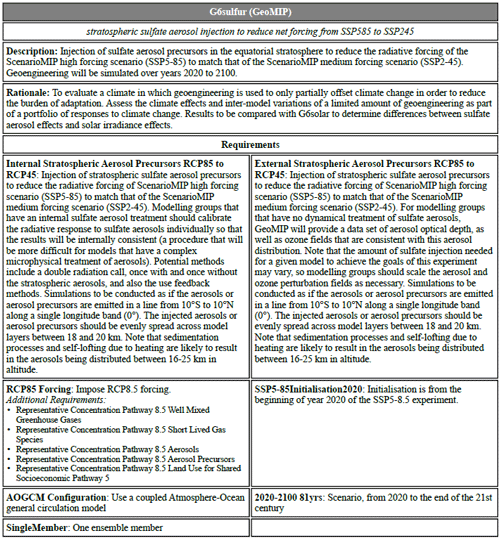

Increasing precision is evident throughout CMIP6 and in the documentation. In some cases, rather than asking after the fact how something was done in a model, the experiment definition describes what is expected, as in the GeoMIP experiment G7SST1-cirrus (Table A3) where explicit modelling instructions are provided. However, where appropriate, experiments still leave it open to modelling groups to choose their own methods of implementing constraints, e.g. the reduction in aerosol forcing described in GeoMIP experiment G6sulfur (Table A4).

CMIP6 is more than just an assemblage of unrelated MIPs. One of the beneficial outcomes of the formal documentation of CMIP6 within ES-DOC has been a clearer understanding of the dependencies of MIPs on each other and of experiments on shared forcing constraints. In this section we provide an ES-DOC-generated overview of CMIP6 and discuss elements of commonality and how these interact with the burden on modellers of documenting how their simulation conformed (or did not) to the experiment requirements.

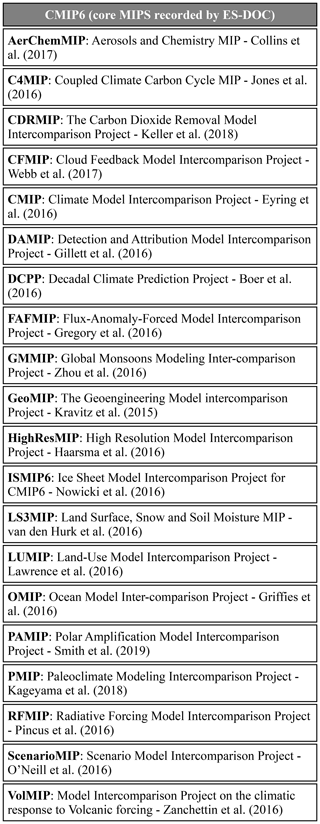

Figure 5. CMIP6 MIPs and experiments. Individual MIPs are represented by large purple dots. Lines connect each MIP to the experiments that are related to it, which are shown as smaller blue dots. Some widely used experiments are labelled, such as piControl, historical, amip, ssp245, and ssp585, which are used by numerous MIPs within CMIP6.

4.1 An overview of CMIP6 via ES-DOC

At the heart of the current CMIP process is a central suite of experiments known as the DECK (Diagnosis, Evaluation, and Characterization of Klima; Eyring et al., 2016). The DECK includes a pre-industrial control under 1850 conditions, an atmosphere-only AMIP simulation with imposed historical sea surface temperatures, and two idealized CO2 forcing experiments where in one CO2 is increased by 1 % per year until reaching 4 times the original concentration, while in the other CO2 is abruptly increased to 4 times the original concentration. Variants of most of these fundamental experiments have been core to CMIP since the beginning, and now within the DECK there is a second variant of the pre-industrial control designed to test the relatively new earth system models which respond to internally calculated CO2 concentrations as opposed to responding to externally imposed CO2 concentration (Table 3). Completion of the suite of DECK experiments is intended to serve as an entry card for model participation in the CMIP exercise. The CMIP panel are responsible for the DECK design and definition, which should evolve only slowly over future phases of CMIP and will enable cross-generational model comparisons. CMIP is also responsible for the “historical” experiments, but the definition of these will change as better forcing data become available and as the historical period extends forward in time.

Table 4 provides a summary of most of the CMIP6-endorsed MIPs as of December 2018, with the DECK incorporated in CMIP as discussed above. This table was auto-generated from the ES-DOC experiment repository (see the Code availability section). It does not include the MIPs that have not originated any of the CMIP6 experiments but instead focus on and use CMIP6 output for various purposes: the Coordinated Regional Climate Downscaling Experiment (CORDEX; Gutowski Jr. et al., 2016) and the three diagnostic MIPs – DynVarMIP (Dynamics and Variability MIP; Gerber and Manzini, 2016), SIMIP (Sea Ice MIP; Notz et al., 2016), and VIACSAB (Vulnerability, Impacts, Adaptation and Climate Services Advisory Board; Ruane et al., 2016) – as these are not yet included in ES-DOC. There are of course many other “non-endorsed” MIPs such as ISA-MIP (the Interactive Stratospheric Aerosol MIP; Timmreck et al., 2018), which could also be documented with the ES-DOC system at some future time.

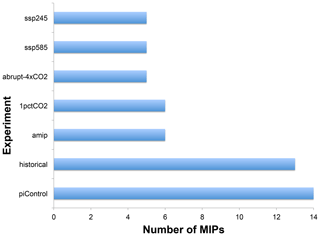

4.2 Common experiments

Figure 5 shows the sharing of experiments between MIPs. The importance of piControl, historical, AMIP, key scenario experiments (ssp245 and ssp585), and the idealized experiments (1pctCO2 and abrupt-4xCO2) is clear. These seven experiments form part of the protocol for many of the CMIP6 MIPs (Fig. 7). The scope of the historical and piControl experiments is demonstrated by their connections to MIPs on the far edges of the plot in all directions.

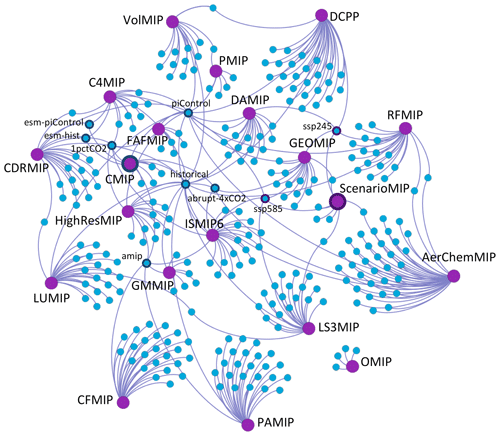

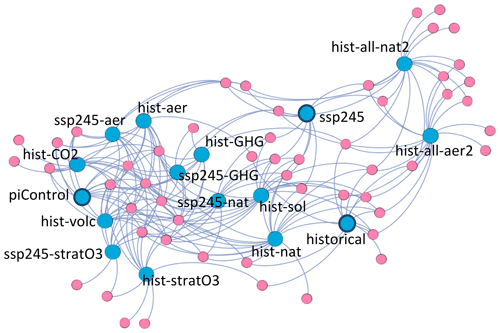

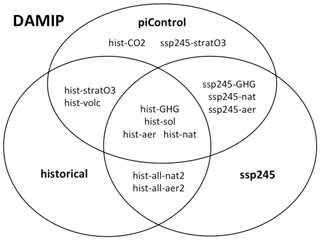

Figure 6. DAMIP experiments and forcing constraints. Individual experiments are represented by large blue dots. Lines connect each experiment to related forcing constraints, represented by pink dots. An example of a forcing constraint might be a constraint on atmospheric composition such as a requirement for a particular concentration of atmospheric carbon dioxide. In this figure three experiments are shown with dark blue borders (piControl, historical, and ssp245); these are experiments that are required by DAMIP but are not defined by DAMIP. The forcing constraints for these three “external” experiments are used extensively by the DAMIP experiment suite.

Figure 7. The most-used CMIP6 experiments in terms of the number of model inter-comparison projects (MIPs) to which they contribute.

There are other shared experiments too, which bring MIPs together around shared scientific goals: land-hist is jointly defined and shared by LUMIP and LS3MIP; past1000, defined by PMIP, forms part of VolMIP; piClim-control, defined by RFMIP, forms part of AerChemMIP; and dcppC-forecast-addPinatubo, defined by DCPP, forms part of VolMIP. By contrast, OMIP stands alone, sharing no experiments with other MIPs.

4.3 Common forcing

Experiments share forcing constraints, just as MIPs share experiments. Figure 6 shows the interdependence of the DAMIP experiments on common forcing constraints. Experiments are grouped near each other when they share forcing constraints. The dense network shown reflects the similarity of experiments within DAMIP and arises from a common design pattern or protocol in numerical experiment construction: a new experiment is a variation on a previous experiment with one (or a few) forcing changes. It is of course this “perturbation experiment” pattern which provides much of the strength of simulation in exposing causes and effects in the real world.

Unique modifications appear in Fig. 6 as forcing constraint nodes that are only connected to one or two experiments, which is also why the alternative forcing experiments hist-all-nat2 and hist-all-aer2 are placed further from the main body of the DAMIP network – they share fewer forcing constraints with the other experiments. However, they themselves are similar to each other as between them they share a number of unique forcing constraints.
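The structure behind such a network can be sketched with plain Python sets. The experiment and constraint names below are placeholders chosen for illustration and are not the real DAMIP definitions; the two helper functions are likewise hypothetical.

```python
from itertools import combinations

# Illustrative data only: each experiment maps to the forcing constraints
# it uses. The perturbed experiments differ from "base" by one constraint.
experiments = {
    "base": {"ghg", "aerosol", "solar", "volcanic"},
    "perturbed_ghg": {"ghg_alt", "aerosol", "solar", "volcanic"},
    "perturbed_aerosol": {"ghg", "aerosol_alt", "solar", "volcanic"},
}


def shared_constraints(exps):
    """Return the constraints shared by each pair of experiments."""
    return {
        (a, b): exps[a] & exps[b]
        for a, b in combinations(sorted(exps), 2)
    }


def unique_constraints(exps):
    """Return, per experiment, the constraints no other experiment uses."""
    unique = {}
    for name, constraints in exps.items():
        others = set().union(*(c for n, c in exps.items() if n != name))
        unique[name] = constraints - others
    return unique


pairs = shared_constraints(experiments)
only_once = unique_constraints(experiments)
```

In a graph layout, pairs with large intersections are drawn close together, while experiments with many entries in `only_once` drift towards the edge of the network.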

The importance of the perturbation experiment pattern is further emphasized in DAMIP by noting that the three external experiments (piControl, historical and ssp245) account for 62 % of the DAMIP forcing constraints; five of the DAMIP experiments can be completely described by forcing constraints associated with these external experiments – being different assemblies of the same “forcing building blocks”. The key role of these building blocks is exposed by placing the DAMIP experiments into sets according to which of those external experiments is used for forcing constraints (Fig. 8).

Figure 8. A view of DAMIP with experiments placed in sets according to the forcing constraints they share with the external experiments: piControl, historical, and ssp245.

This framing of shared forcing constraints exposes some apparent anomalies. Why, for example, is hist-CO2 not in the historical set? The reasons for these apparent anomalies expose the framing of the experiments. In the historical experiment, greenhouse gas forcing is a single constraint, which includes CO2 and other well-mixed greenhouse gases. By contrast, hist-CO2 varies only CO2, with the other well-mixed greenhouse gases constrained to pre-industrial levels (and hence uses the piControl forcing constraints for those, with its own CO2 forcing constraint).
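A minimal sketch of this kind of set membership follows. All constraint names are invented for illustration, and the two MIP experiments are simplified stand-ins rather than the real DAMIP definitions. The point is only that an experiment with its own CO2 constraint cannot reuse a single, coarser greenhouse gas constraint.

```python
# Placeholder constraint sets for two "external" experiments. The historical
# greenhouse gas forcing is one coarse constraint covering all gases.
external = {
    "piControl": {"pi_co2", "pi_other_ghg", "pi_aerosol"},
    "historical": {"hist_ghg", "hist_aerosol"},
}

# Placeholder experiments defined within a MIP.
mip_experiments = {
    # Assembled entirely from external building blocks.
    "aerosol_only": {"pi_co2", "pi_other_ghg", "hist_aerosol"},
    # Needs its own CO2 constraint, so it shares nothing with "historical".
    "co2_only": {"own_co2", "pi_other_ghg", "pi_aerosol"},
}


def external_sets(constraints, external):
    """Names of external experiments that share at least one constraint."""
    return sorted(name for name, ext in external.items() if constraints & ext)


def fully_external(constraints, external):
    """True if every constraint comes from some external experiment."""
    return constraints <= set().union(*external.values())


def external_fraction(mip_experiments, external):
    """Fraction of distinct MIP constraints that are external ones."""
    used = set().union(*mip_experiments.values())
    return len(used & set().union(*external.values())) / len(used)


membership = {n: external_sets(c, external) for n, c in mip_experiments.items()}
fraction = external_fraction(mip_experiments, external)
```

Splitting the coarse historical constraint into per-gas constraints would move the CO2-only experiment into the historical set, at the cost of more constraints to document.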

It would have been possible to avoid this sort of anomaly by constructing finer constraints in the case of historical, but this would have been at the cost of simplicity of understanding (and greater multiplicity in reporting as discussed below). There is a necessary balance between clear guidance on experiment requirements and reuse of such constraints to expose relationships between experiments.

4.4 Forcing constraint conformance

One of the goals of the constraint formalism is to minimize the burden on modelling groups: both the burden of executing the CMIP6 experiments and the burden of documenting how the experiments were carried out (that is, populating the concrete part of the experiment definition, using the language of Mattoso et al., 2010, as discussed in Sect. 2.4). By clearly identifying commonalities between experiments, modelling groups can implement constraints once and reuse both the implementation and documentation across experiments.

Constraint “conformance” documentation is intended to provide clear targets for interpreting the differences between simulations carried out with different models. Given that differing constraints often define differing experiments, understanding why models give different results can be aided by understanding differences in constraint implementation (in those cases where there is implementation flexibility). Section 3.3 discussed some aspects of this from a constraint definition perspective.

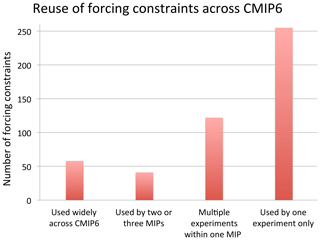

One can then ask, how much reuse of constraints is possible? Figure 9 shows that a few forcing constraints are reused widely across CMIP6. These are the forcing constraints associated with the DECK and historical experiments and the prominent scenario experiments from ScenarioMIP which are used by numerous MIPs (Fig. 5). It is with these forcing constraints that many deep connections between MIPs are made. From a practical perspective the wide application of these forcing constraints allows for considerable streamlining of the documentation burden on CMIP6 modelling groups. Beyond this core we see a smaller group of forcing constraints that are used by a few MIPs. For the most part these are forcing constraints associated with the less prominent scenario experiments from ScenarioMIP. The remainder of the forcing constraints are specific to just one MIP, and of these, 265 are only used once by a single experiment. Although this last group of forcing constraints is large in number, many groups will only make use of them if they happen to run the specific experiments to which they pertain.
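Reuse of this kind can be tallied from a list of (MIP, experiment, forcing constraint) associations. The sketch below assumes such a list is available. The entries shown are invented placeholders, not ES-DOC content, and the function names are hypothetical.

```python
# Illustrative (mip, experiment, constraint) associations.
triples = [
    ("MIP-1", "exp-a", "c-common"),
    ("MIP-2", "exp-b", "c-common"),
    ("MIP-3", "exp-c", "c-common"),
    ("MIP-1", "exp-a", "c-shared"),
    ("MIP-2", "exp-d", "c-shared"),
    ("MIP-3", "exp-c", "c-single"),
]


def mips_per_constraint(triples):
    """Count the distinct MIPs that use each forcing constraint."""
    mips = {}
    for mip, _experiment, constraint in triples:
        mips.setdefault(constraint, set()).add(mip)
    return {constraint: len(names) for constraint, names in mips.items()}


def experiments_per_constraint(triples):
    """Count the distinct experiments that use each forcing constraint."""
    exps = {}
    for mip, experiment, constraint in triples:
        exps.setdefault(constraint, set()).add((mip, experiment))
    return {constraint: len(names) for constraint, names in exps.items()}


reuse = mips_per_constraint(triples)
used_once = [c for c, n in experiments_per_constraint(triples).items() if n == 1]
```

Constraints with a high count in `reuse` are those whose conformance needs documenting only once per model, while `used_once` lists those that matter only to groups running one particular experiment.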

4.5 Temporal constraints

History suggests there has been – and continues to be – divergent understanding of instructions for the expected duration of simulations (temporal constraints), often manifested as “off by one” differences in the number of years of simulation. Such errors hamper statistical inter-comparison between simulations and can result in unnecessary effort (often expensive in human and computer time). The CMIP6 experiments have not been immune to this issue. Temporal constraints in the CMIP6 controlled vocabulary are defined in terms of a start year and a minimum length of simulation expressed in years. However, the publications by the CMIP6-endorsed MIPs often also include an end year which can be inconsistent with the minimum simulation length as described by the CMIP6-CV. The divergence in understanding generally occurs in the interpretation of the dates implied by a given start year and end year, specifically whether they refer to the beginning of January or the end of December.
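The ambiguity is easy to reproduce. The following Python sketch compares the two readings of an end year and derives explicit bounds from a start year and a length. The function names are hypothetical, the years are used purely as an example, and a Gregorian calendar starting on 1 January is assumed.

```python
from datetime import date


def length_end_exclusive(start_year, end_year):
    """Years simulated if the run stops at the beginning of January of end_year."""
    return end_year - start_year


def length_end_inclusive(start_year, end_year):
    """Years simulated if the run stops at the end of December of end_year."""
    return end_year - start_year + 1


def check_end_year(start_year, end_year, min_length):
    """Test a published end year against a minimum length under both readings."""
    return {
        "end_exclusive": length_end_exclusive(start_year, end_year) >= min_length,
        "end_inclusive": length_end_inclusive(start_year, end_year) >= min_length,
    }


def explicit_bounds(start_year, length_years):
    """Unambiguous first and last day for a run of whole calendar years."""
    start = date(start_year, 1, 1)
    end = date(start_year + length_years - 1, 12, 31)
    return start, end


check = check_end_year(1850, 2014, 165)
bounds = explicit_bounds(1850, 165)
```

Here the same start year, end year, and minimum length are consistent under one reading and one year short under the other, which is the discrepancy that explicit start and end dates remove.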

A significant effort has been made by ES-DOC to identify these discrepancies and instigate their correction. ES-DOC temporal constraints unambiguously specify a start date, end date, and length for simulations and are a mandatory part of the ES-DOC experiment documentation. Despite these steps, there are still many cases where the MIPs of CMIP6 might have coordinated yet further and used the same temporal constraints for different experiments with essentially the same temporal requirements, such as those that begin in the present day and run to the end of the 21st century. These differences provide scope for further rationalization in future experiments and/or CMIP phases, leading to further simplification in analysis and savings in computer time.

The need for structured documentation constrained by controlled descriptive terminology is not always well understood by all parties involved in creating content. While structured scientific metadata has an important role in science communication, it exacts a cost in time, energy, and attention. This cost causes friction in the scientific process even though it can provide the information necessary for investigators to reach a common understanding across barriers arising from distance in space, time, institutional location, or disciplinary background. The balance between this “metadata friction” and the potential benefit in ameliorating the “scientific friction” barriers is difficult to achieve (Edwards et al., 2011). Solutions need to be iterative and achieve a balance between ease of information collection and structures which support handling information at scale and being able to support multi-disciplinary cross walks in meaning. However, with the right information in place, it is possible to provide traceable, documented answers to questions about experiment protocols that could otherwise elicit different answers from different individuals over time. Overall this can result in a reduction in the support load on all parties from those who designed the experiment to those who manage the simulation data.

In this paper we have introduced the ES-DOC structures for experimental design and shown their application in CMIP6. We have introduced a formal taxonomy for experimental definition based around collections of climate modelling projects (MIPs), experiments, and numerical requirements and, in particular, constraints of one form or another. These provide structure for the formal definition of the experiment goals, design, and method. The conformance, model, and simulation definitions (to be fully defined elsewhere) will provide the concrete expression of how the experiments were executed.

The construction of ES-DOC descriptions of CMIP6 experiments has been carried out mostly by the ES-DOC team, using published material, but often as part of the iterative discussions which specified the CMIP6 MIPs. These iterative discussions, led by the MIP teams, with coordination provided at various stages by the CMIP panel and PCMDI, have improved on previous MIP exercises, albeit with a larger increase in process and still with opportunities for imprecision, duplication of design effort, and unnecessary requirements for participants. The ES-DOC experiment definitions provided another route to internal review of the design and aided in identifying and removing some of the imprecision, duplication of effort, and simulation requirements. However, there is still scope for improving the design phase.

Earlier involvement of formal documentation would have facilitated more interaction between the MIP design teams by requiring more information to be shared earlier. Doing so in the future might allow more common design patterns, and perhaps more experiment and simulation reuse between MIPs, reducing the burden of carrying out the simulations and of storing the results. This potential gain would need to be evaluated and weighed against the potential process burden, but it can be seen that the ES-DOC experiment, requirement, or constraint definitions are relatively lightweight yet communicate significant precision of objective and method. Early involvement of formal documentation is important for building a culture of engagement. Our experience with the CMIP6 MIPs indicates that the process of providing detailed information about experiments was perceived in a positive way by groups when the intervention occurred early in the experiment life cycle. These groups also had a sense of ownership of their content. In contrast, groups who engaged later in the experiment life cycle were more likely to perceive the documentation effort as yet another burden.

Sharing of experiments and constraints is clearly common within CMIP6, but there remain opportunities for improvement in this regard. Section 2.4 outlines a set of important relationships between the MIPs and MIP dependency on key experiments – most of which are in the CMIP (and the DECK) sub-project. Such sharing introduces extra problems of governance: who owns the shared experiment definition? In the case of the dependencies on the DECK, this is clear (it is the CMIP panel), but for other cases it is not so clear. For example, both LS3MIP and LUMIP needed a historical land experiment, and it was obvious it should be shared. In this case (and hopefully most cases) the solution was amicable, resulting in the following description:

Start year either 1850 or 1700 depending on standard practice for particular model. This experiment is shared with the LS3MIP; note that LS3MIP expects the start year to be 1850.

Although clear, this is not really ideal for downstream users (either those who may run the simulations in the wrong order, or those analysts doing inter-comparison). If sharing is to be enhanced in future CMIP exercises, then the early identification of synergies (and the resolution of any inconsistencies and related governance issues) will be necessary.

The sharing and visualization of constraint dependencies (Sect. 4.3) provides a route to both efficient execution and better understanding of experimental structure. In the case of DAMIP there is clear value to the interpretation of the MIP goals in terms of the forcing constraints, and this sort of analysis could both be extended to other MIPs and used during future design phases. While there is a trade-off between granularity of forcing and the burden of conformance documentation, with CMIP6 this trade-off was never explicitly considered. In the future it is possible that such consideration may in fact improve experimental design. We believe it will be easier for both the MIP designers and participants to be confident that they have requested, understood, and/or executed experiments that will meet their scientific objectives.

ES-DOC remains a work in progress. It is fair to say that there was no wide community acceptance of the burden of documentation for CMIP5, but this was in part because of the tooling available then. With the advent of CMIP6, the tooling is much enhanced and available much earlier in the cycle, but both the underlying semantic structure and tooling can and will be improved. There is clearly an opportunity for convergence between the data request and ES-DOC, and there will undoubtedly be much community feedback to take on board!

ES-DOC is not intended to apply only to CMIP exercises. We believe the preciseness and self-consistency ES-DOC imposes on experiment design documentation should be of use even when only one or a few models generate related simulations. One such target will be the sharing of national resources to deliver extraordinarily large and expensive simulations (in time, resource, and energy) where individuals and small communities could not justify the expense without sharing goals and outputs. Realizing such sharing opportunities is often impaired by insufficient communication and documentation. We believe the ES-DOC methodology can go some way towards capitalizing on these opportunities and will become essential as we contemplate using significant portions of future exascale machines.

All the underlying ES-DOC code is publicly available at https://github.com/es-doc (last access: 21 March 2020; ES-DOC, 2016). The full CMIP6 documentation is available online at https://search.es-doc.org/ (last access: 21 March 2020; ES-DOC, 2015). The ES-DOC documentation of the CMIP6 experiments can be found in the ES-DOC GitHub repository at https://github.com/ES-DOC/esdoc-docs/blob/master/cmip6/experiments/spreadsheet/experiments.xlsx (last access: 24 March 2020; ES-DOC, 2017). The code to extract and produce the ES-DOC tables in this paper is available online at https://github.com/bnlawrence/esdoc4scientists (last access: 2 March 2020; Lawrence, 2019). Figures 5 and 6 were produced using content (in the form of triples) generated from ES-DOC and imported into gephi (https://gephi.org/legal/, last access: 2 March 2020; Gephi, 2008) with manual annotations.

To improve readability, a number of examples are provided in this Appendix, rather than where first referenced in the main text.

All these tables are produced by a Python script. The ES-DOC pyesdoc library is used to obtain the documents and instantiate them as Python objects with access to CIM attributes via instance attributes with CIM property names. These can then be used to populate HTML tables described using jinja2 templates which are then converted to PDF for inclusion in the document using the weasyprint package. This methodology is more fully described in the code (Lawrence, 2019).
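The attribute-to-table step can be illustrated with the Python standard library alone. This sketch is not the script used for the paper: it does not call the ES-DOC library, a templating engine, or a PDF converter. The `Experiment` class and its fields are hypothetical stand-ins for a document object whose attributes carry CIM property names.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class Experiment:
    """Hypothetical stand-in for a document object with CIM-like attributes."""
    name: str
    long_name: str
    description: str


def to_html_table(doc, properties):
    """Render the chosen attributes of one document as a two-column HTML table."""
    rows = "\n".join(
        f"  <tr><th>{escape(prop)}</th><td>{escape(str(getattr(doc, prop)))}</td></tr>"
        for prop in properties
    )
    return f"<table>\n{rows}\n</table>"


# The list of properties plays the role of the "choice of content to display".
doc = Experiment("example-exp", "Example experiment", "Placeholder <description> text")
html_table = to_html_table(doc, ["name", "description"])
```

Changing the list of properties passed to `to_html_table` changes what the table shows without touching the underlying document, which mirrors the way the choice of displayed content is made in code.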

Table A1. The abrupt-4xCO2 experiment is integral to a number of MIPs. (Not all properties are shown; see http://documentation.es-doc.org/cmip6/experiments/abrupt-4xCO2, last access: 2 March 2020, for more details.)

Table A2. This is an experiment that has an anti-forcing “Historical land surface forcings except fire management” (note also two temporal constraint options “Start year either 1850 or 1700 depending on standard practice for particular model.”). See https://documentation.es-doc.org/cmip6/experiments/land-NoFire, last access: 2 March 2020, for more information.

Table A3. The “Increase Cirrus Sedimentation Velocity” forcing constraint is very precise about the change to be made: “Add a local variable that replaces (in all locations where temperature is colder than 235K) the ice mass mixing ratio in the calculation of the sedimentation velocity with a value that is eight times the original ice mass mixing ratio”. See https://documentation.es-doc.org/cmip6/experiments/g7sst1-cirrus, last access: 2 March 2020, for more information.

Table A4. GeoMIP is clear about what the forcing should achieve (reduction in radiative forcing from rcp8.5 to rcp4.5) but leaves it open to the modelling groups to choose a method that best suits their aerosol scheme. See https://documentation.es-doc.org/cmip6/experiments/g6sulfur, last access: 2 March 2020, for more information.

The supplement related to this article is available online at: https://doi.org/10.5194/gmd-13-2149-2020-supplement.

CP represented ES-DOC in discussions with CMIP6 experiment designers, collecting information and influencing design. MJ was responsible for the data request. KET led the PCMDI involvement in experiment coordination. EG and BNL led various aspects of ES-DOC at different times. BNL and CP wrote the bulk of this paper, with contributions from the other authors.

The authors declare that they have no conflict of interest.

Clearly the CMIP6 design really depends on the many scientists involved in designing and specifying the experiments under the purview of the CMIP6 panel. The use of ES-DOC to describe experiments depends heavily on the tool chain, much of which was designed and implemented by Mark Morgan under the direction of Sébastien Denvil (CNRS/IPSL). Paul Durack was instrumental in the support for CMIP6 vocabularies at PCMDI. Most of the ES-DOC work described here has been funded by national capability contributions to NCAS from the UK Natural Environment Research Council (NERC) and by the European Commission under FP7 grant agreement 312979 for IS-ENES2. The writing of this paper was part funded by the European Commission via H2020 grant agreement no. 824084 for IS-ENES3. Work by Karl E. Taylor was performed under the auspices of the US Department of Energy (USDOE) by Lawrence Livermore National Laboratory under contract DE-AC52-07NA27344 with support from the Regional and Global Modeling Analysis Program of the USDOE's Office of Science.

This research has been supported by the UK Natural Environment Research Council (NERC) (grant National capability contributions to the National Centre for Atmospheric Science (NCAS)), the Seventh Framework Programme (grant no. IS-ENES2 (312979)), the U.S. Department of Energy, Office of Science (grant no. DE-AC52-07NA27344), and the European Commission Horizon 2020 (EC funded project: IS-ENES3 (824084)).

This paper was edited by Juan Antonio Añel and reviewed by Ron Stouffer and one anonymous referee.

Balaji, V., Taylor, K. E., Juckes, M., Lawrence, B. N., Durack, P. J., Lautenschlager, M., Blanton, C., Cinquini, L., Denvil, S., Elkington, M., Guglielmo, F., Guilyardi, E., Hassell, D., Kharin, S., Kindermann, S., Nikonov, S., Radhakrishnan, A., Stockhause, M., Weigel, T., and Williams, D.: Requirements for a global data infrastructure in support of CMIP6, Geosci. Model Dev., 11, 3659–3680, https://doi.org/10.5194/gmd-11-3659-2018, 2018.

Boer, G. J., Smith, D. M., Cassou, C., Doblas-Reyes, F., Danabasoglu, G., Kirtman, B., Kushnir, Y., Kimoto, M., Meehl, G. A., Msadek, R., Mueller, W. A., Taylor, K. E., Zwiers, F., Rixen, M., Ruprich-Robert, Y., and Eade, R.: The Decadal Climate Prediction Project (DCPP) contribution to CMIP6, Geosci. Model Dev., 9, 3751–3777, https://doi.org/10.5194/gmd-9-3751-2016, 2016.

Borst, W. N.: Construction of Engineering Ontologies for Knowledge Sharing and Reuse, Ph.D. thesis, University of Twente, Centre for Telematics and Information Technology (CTIT), Enschede, 1997.

Cess, R. D., Potter, G. L., Blanchet, J. P., Boer, G. J., Ghan, S. J., Kiehl, J. T., Treut, H. L., Li, Z.-X., Liang, X.-Z., Mitchell, J. F. B., Morcrette, J.-J., Randall, D. A., Riches, M. R., Roeckner, E., Schlese, U., Slingo, A., Taylor, K. E., Washington, W. M., Wetherald, R. T., and Yagai, I.: Interpretation of Cloud-Climate Feedback as Produced by 14 Atmospheric General Circulation Models, Science, 245, 513–516, https://doi.org/10.1126/science.245.4917.513, 1989.

Collins, W. J., Lamarque, J.-F., Schulz, M., Boucher, O., Eyring, V., Hegglin, M. I., Maycock, A., Myhre, G., Prather, M., Shindell, D., and Smith, S. J.: AerChemMIP: quantifying the effects of chemistry and aerosols in CMIP6, Geosci. Model Dev., 10, 585–607, https://doi.org/10.5194/gmd-10-585-2017, 2017.

da Cruz, S. M. S., Campos, M. L. M., and Mattoso, M.: A Foundational Ontology to Support Scientific Experiments, in: Proceedings of the VII International Workshop on Metadata, Ontologies and Semantic Technology (ONTOBRAS-MOST), edited by: Malucelli, A. and Bax, M., Recife, Brazil, 2012.

Edwards, P. N., Mayernik, M. S., Batcheller, A. L., Bowker, G. C., and Borgman, C. L.: Science Friction: Data, Metadata, and Collaboration, Soc. Stud. Sci., 41, 667–690, https://doi.org/10.1177/0306312711413314, 2011.

ES-DOC: ES–DOC Documentation Search, available at: https://search.es-doc.org/ (last access: 21 March 2020), 2015.

ES-DOC: Earth Science – Documentation, available at: https://github.com/es-doc (last access: 21 March 2020), 2016.

ES-DOC: esdoc–docs, available at: https://github.com/ES-DOC/esdoc-docs/blob/master/cmip6/experiments/spreadsheet/experiments.xlsx (last access: 24 March 2020), 2017.

Eyring, V., Bony, S., Meehl, G. A., Senior, C. A., Stevens, B., Stouffer, R. J., and Taylor, K. E.: Overview of the Coupled Model Intercomparison Project Phase 6 (CMIP6) experimental design and organization, Geosci. Model Dev., 9, 1937–1958, https://doi.org/10.5194/gmd-9-1937-2016, 2016.

Gates, W. L., Boyle, J. S., Covey, C., Dease, C. G., Doutriaux, C. M., Drach, R. S., Fiorino, M., Gleckler, P. J., Hnilo, J. J., Marlais, S. M., Phillips, T. J., Potter, G. L., Santer, B. D., Sperber, K. R., Taylor, K. E., and Williams, D. N.: An Overview of the Results of the Atmospheric Model Intercomparison Project (AMIP I), B. Am. Meteorol. Soc., 80, 29–56, https://doi.org/10.1175/1520-0477(1999)080<0029:AOOTRO>2.0.CO;2, 1999.

Gerber, E. P. and Manzini, E.: The Dynamics and Variability Model Intercomparison Project (DynVarMIP) for CMIP6: assessing the stratosphere–troposphere system, Geosci. Model Dev., 9, 3413–3425, https://doi.org/10.5194/gmd-9-3413-2016, 2016.

Gillett, N. P., Shiogama, H., Funke, B., Hegerl, G., Knutti, R., Matthes, K., Santer, B. D., Stone, D., and Tebaldi, C.: The Detection and Attribution Model Intercomparison Project (DAMIP v1.0) contribution to CMIP6, Geosci. Model Dev., 9, 3685–3697, https://doi.org/10.5194/gmd-9-3685-2016, 2016.

Gregory, J. M., Bouttes, N., Griffies, S. M., Haak, H., Hurlin, W. J., Jungclaus, J., Kelley, M., Lee, W. G., Marshall, J., Romanou, A., Saenko, O. A., Stammer, D., and Winton, M.: The Flux-Anomaly-Forced Model Intercomparison Project (FAFMIP) contribution to CMIP6: investigation of sea-level and ocean climate change in response to CO2 forcing, Geosci. Model Dev., 9, 3993–4017, https://doi.org/10.5194/gmd-9-3993-2016, 2016.

Griffies, S. M., Danabasoglu, G., Durack, P. J., Adcroft, A. J., Balaji, V., Böning, C. W., Chassignet, E. P., Curchitser, E., Deshayes, J., Drange, H., Fox-Kemper, B., Gleckler, P. J., Gregory, J. M., Haak, H., Hallberg, R. W., Heimbach, P., Hewitt, H. T., Holland, D. M., Ilyina, T., Jungclaus, J. H., Komuro, Y., Krasting, J. P., Large, W. G., Marsland, S. J., Masina, S., McDougall, T. J., Nurser, A. J. G., Orr, J. C., Pirani, A., Qiao, F., Stouffer, R. J., Taylor, K. E., Treguier, A. M., Tsujino, H., Uotila, P., Valdivieso, M., Wang, Q., Winton, M., and Yeager, S. G.: OMIP contribution to CMIP6: experimental and diagnostic protocol for the physical component of the Ocean Model Intercomparison Project, Geosci. Model Dev., 9, 3231–3296, https://doi.org/10.5194/gmd-9-3231-2016, 2016.

Guilyardi, E., Balaji, V., Lawrence, B., Callaghan, S., Deluca, C., Denvil, S., Lautenschlager, M., Morgan, M., Murphy, S., and Taylor, K. E.: Documenting Climate Models and Their Simulations, B. Am. Meteorol. Soc., 94, 623–627, https://doi.org/10.1175/BAMS-D-11-00035.1, 2013.

Gutowski Jr., W. J., Giorgi, F., Timbal, B., Frigon, A., Jacob, D., Kang, H.-S., Raghavan, K., Lee, B., Lennard, C., Nikulin, G., O'Rourke, E., Rixen, M., Solman, S., Stephenson, T., and Tangang, F.: WCRP COordinated Regional Downscaling EXperiment (CORDEX): a diagnostic MIP for CMIP6, Geosci. Model Dev., 9, 4087–4095, https://doi.org/10.5194/gmd-9-4087-2016, 2016.

Haarsma, R. J., Roberts, M. J., Vidale, P. L., Senior, C. A., Bellucci, A., Bao, Q., Chang, P., Corti, S., Fučkar, N. S., Guemas, V., von Hardenberg, J., Hazeleger, W., Kodama, C., Koenigk, T., Leung, L. R., Lu, J., Luo, J.-J., Mao, J., Mizielinski, M. S., Mizuta, R., Nobre, P., Satoh, M., Scoccimarro, E., Semmler, T., Small, J., and von Storch, J.-S.: High Resolution Model Intercomparison Project (HighResMIP v1.0) for CMIP6, Geosci. Model Dev., 9, 4185–4208, https://doi.org/10.5194/gmd-9-4185-2016, 2016.

Hassell, D., Gregory, J., Blower, J., Lawrence, B. N., and Taylor, K. E.: A data model of the Climate and Forecast metadata conventions (CF-1.6) with a software implementation (cf-python v2.1), Geosci. Model Dev., 10, 4619–4646, https://doi.org/10.5194/gmd-10-4619-2017, 2017.

Jones, C. D., Arora, V., Friedlingstein, P., Bopp, L., Brovkin, V., Dunne, J., Graven, H., Hoffman, F., Ilyina, T., John, J. G., Jung, M., Kawamiya, M., Koven, C., Pongratz, J., Raddatz, T., Randerson, J. T., and Zaehle, S.: C4MIP – The Coupled Climate–Carbon Cycle Model Intercomparison Project: experimental protocol for CMIP6, Geosci. Model Dev., 9, 2853–2880, https://doi.org/10.5194/gmd-9-2853-2016, 2016.

Juckes, M., Taylor, K. E., Durack, P. J., Lawrence, B., Mizielinski, M. S., Pamment, A., Peterschmitt, J.-Y., Rixen, M., and Sénési, S.: The CMIP6 Data Request (DREQ, version 01.00.31), Geosci. Model Dev., 13, 201–224, https://doi.org/10.5194/gmd-13-201-2020, 2020.

Kageyama, M., Braconnot, P., Harrison, S. P., Haywood, A. M., Jungclaus, J. H., Otto-Bliesner, B. L., Peterschmitt, J.-Y., Abe-Ouchi, A., Albani, S., Bartlein, P. J., Brierley, C., Crucifix, M., Dolan, A., Fernandez-Donado, L., Fischer, H., Hopcroft, P. O., Ivanovic, R. F., Lambert, F., Lunt, D. J., Mahowald, N. M., Peltier, W. R., Phipps, S. J., Roche, D. M., Schmidt, G. A., Tarasov, L., Valdes, P. J., Zhang, Q., and Zhou, T.: The PMIP4 contribution to CMIP6 – Part 1: Overview and over-arching analysis plan, Geosci. Model Dev., 11, 1033–1057, https://doi.org/10.5194/gmd-11-1033-2018, 2018.

Keller, D. P., Lenton, A., Scott, V., Vaughan, N. E., Bauer, N., Ji, D., Jones, C. D., Kravitz, B., Muri, H., and Zickfeld, K.: The Carbon Dioxide Removal Model Intercomparison Project (CDRMIP): rationale and experimental protocol for CMIP6, Geosci. Model Dev., 11, 1133–1160, https://doi.org/10.5194/gmd-11-1133-2018, 2018.

Kravitz, B., Robock, A., Tilmes, S., Boucher, O., English, J. M., Irvine, P. J., Jones, A., Lawrence, M. G., MacCracken, M., Muri, H., Moore, J. C., Niemeier, U., Phipps, S. J., Sillmann, J., Storelvmo, T., Wang, H., and Watanabe, S.: The Geoengineering Model Intercomparison Project Phase 6 (GeoMIP6): simulation design and preliminary results, Geosci. Model Dev., 8, 3379–3392, https://doi.org/10.5194/gmd-8-3379-2015, 2015.

Lawrence, B.: Bnlawrence/Esdoc4scientists: Tools for Creating Publication Ready Tables from ESDOC Content, https://doi.org/10.5281/zenodo.2593445, 2019.

Lawrence, B. N., Balaji, V., Bentley, P., Callaghan, S., DeLuca, C., Denvil, S., Devine, G., Elkington, M., Ford, R. W., Guilyardi, E., Lautenschlager, M., Morgan, M., Moine, M.-P., Murphy, S., Pascoe, C., Ramthun, H., Slavin, P., Steenman-Clark, L., Toussaint, F., Treshansky, A., and Valcke, S.: Describing Earth system simulations with the Metafor CIM, Geosci. Model Dev., 5, 1493–1500, https://doi.org/10.5194/gmd-5-1493-2012, 2012.

Lawrence, D. M., Hurtt, G. C., Arneth, A., Brovkin, V., Calvin, K. V., Jones, A. D., Jones, C. D., Lawrence, P. J., de Noblet-Ducoudré, N., Pongratz, J., Seneviratne, S. I., and Shevliakova, E.: The Land Use Model Intercomparison Project (LUMIP) contribution to CMIP6: rationale and experimental design, Geosci. Model Dev., 9, 2973–2998, https://doi.org/10.5194/gmd-9-2973-2016, 2016.

Matthes, K., Funke, B., Andersson, M. E., Barnard, L., Beer, J., Charbonneau, P., Clilverd, M. A., Dudok de Wit, T., Haberreiter, M., Hendry, A., Jackman, C. H., Kretzschmar, M., Kruschke, T., Kunze, M., Langematz, U., Marsh, D. R., Maycock, A. C., Misios, S., Rodger, C. J., Scaife, A. A., Seppälä, A., Shangguan, M., Sinnhuber, M., Tourpali, K., Usoskin, I., van de Kamp, M., Verronen, P. T., and Versick, S.: Solar forcing for CMIP6 (v3.2), Geosci. Model Dev., 10, 2247–2302, https://doi.org/10.5194/gmd-10-2247-2017, 2017.

Mattoso, M., Werner, C., Travassos, G. H., Braganholo, V., Ogasawara, E., Oliveira, D. D., Cruz, S. M. S. D., Martinho, W., and Murta, L.: Towards Supporting the Life Cycle of Large Scale Scientific Experiments, Int. J. Business Proc. Integr. Manage., 5, 79, https://doi.org/10.1504/IJBPIM.2010.033176, 2010.

Meehl, G. A., Boer, G. J., Covey, C., Latif, M., and Stouffer, R. J.: Intercomparison Makes for a Better Climate Model, Eos, Trans. Am. Geophys. Union, 78, 445–451, https://doi.org/10.1029/97EO00276, 1997.

Meehl, G. A., Moss, R., Taylor, K. E., Eyring, V., Stouffer, R. J., Bony, S., and Stevens, B.: Climate Model Intercomparisons: Preparing for the Next Phase, Eos, Trans. Am. Geophys. Union, 95, 77–78, https://doi.org/10.1002/2014EO090001, 2014.

Moine, M.-P., Valcke, S., Lawrence, B. N., Pascoe, C., Ford, R. W., Alias, A., Balaji, V., Bentley, P., Devine, G., Callaghan, S. A., and Guilyardi, E.: Development and exploitation of a controlled vocabulary in support of climate modelling, Geosci. Model Dev., 7, 479–493, https://doi.org/10.5194/gmd-7-479-2014, 2014.

Notz, D., Jahn, A., Holland, M., Hunke, E., Massonnet, F., Stroeve, J., Tremblay, B., and Vancoppenolle, M.: The CMIP6 Sea-Ice Model Intercomparison Project (SIMIP): understanding sea ice through climate-model simulations, Geosci. Model Dev., 9, 3427–3446, https://doi.org/10.5194/gmd-9-3427-2016, 2016.

Nowicki, S. M. J., Payne, A., Larour, E., Seroussi, H., Goelzer, H., Lipscomb, W., Gregory, J., Abe-Ouchi, A., and Shepherd, A.: Ice Sheet Model Intercomparison Project (ISMIP6) contribution to CMIP6, Geosci. Model Dev., 9, 4521–4545, https://doi.org/10.5194/gmd-9-4521-2016, 2016.

O'Neill, B. C., Tebaldi, C., van Vuuren, D. P., Eyring, V., Friedlingstein, P., Hurtt, G., Knutti, R., Kriegler, E., Lamarque, J.-F., Lowe, J., Meehl, G. A., Moss, R., Riahi, K., and Sanderson, B. M.: The Scenario Model Intercomparison Project (ScenarioMIP) for CMIP6, Geosci. Model Dev., 9, 3461–3482, https://doi.org/10.5194/gmd-9-3461-2016, 2016.

Pincus, R., Forster, P. M., and Stevens, B.: The Radiative Forcing Model Intercomparison Project (RFMIP): experimental protocol for CMIP6, Geosci. Model Dev., 9, 3447–3460, https://doi.org/10.5194/gmd-9-3447-2016, 2016.

Ruane, A. C., Teichmann, C., Arnell, N. W., Carter, T. R., Ebi, K. L., Frieler, K., Goodess, C. M., Hewitson, B., Horton, R., Kovats, R. S., Lotze, H. K., Mearns, L. O., Navarra, A., Ojima, D. S., Riahi, K., Rosenzweig, C., Themessl, M., and Vincent, K.: The Vulnerability, Impacts, Adaptation and Climate Services Advisory Board (VIACS AB v1.0) contribution to CMIP6, Geosci. Model Dev., 9, 3493–3515, https://doi.org/10.5194/gmd-9-3493-2016, 2016.

Sausen, R., Barthel, K., and Hasselmann, K.: Coupled Ocean-Atmosphere Models with Flux Correction, Clim. Dynam., 2, 145–163, https://doi.org/10.1007/BF01053472, 1988.

Smith, D. M., Screen, J. A., Deser, C., Cohen, J., Fyfe, J. C., García-Serrano, J., Jung, T., Kattsov, V., Matei, D., Msadek, R., Peings, Y., Sigmond, M., Ukita, J., Yoon, J.-H., and Zhang, X.: The Polar Amplification Model Intercomparison Project (PAMIP) contribution to CMIP6: investigating the causes and consequences of polar amplification, Geosci. Model Dev., 12, 1139–1164, https://doi.org/10.5194/gmd-12-1139-2019, 2019.

Soldatova, L. and King, R. D.: An Ontology of Scientific Experiments, J. Roy. Soc. Interface, 3, 795–803, https://doi.org/10.1098/rsif.2006.0134, 2007.

Taylor, K. E., Stouffer, R. J., and Meehl, G. A.: A Summary of the CMIP5 Experiment Design, Tech. rep., PCMDI, Livermore, 2011.

Timmreck, C., Mann, G. W., Aquila, V., Hommel, R., Lee, L. A., Schmidt, A., Brühl, C., Carn, S., Chin, M., Dhomse, S. S., Diehl, T., English, J. M., Mills, M. J., Neely, R., Sheng, J., Toohey, M., and Weisenstein, D.: The Interactive Stratospheric Aerosol Model Intercomparison Project (ISA-MIP): motivation and experimental design, Geosci. Model Dev., 11, 2581–2608, https://doi.org/10.5194/gmd-11-2581-2018, 2018.

van den Hurk, B., Kim, H., Krinner, G., Seneviratne, S. I., Derksen, C., Oki, T., Douville, H., Colin, J., Ducharne, A., Cheruy, F., Viovy, N., Puma, M. J., Wada, Y., Li, W., Jia, B., Alessandri, A., Lawrence, D. M., Weedon, G. P., Ellis, R., Hagemann, S., Mao, J., Flanner, M. G., Zampieri, M., Materia, S., Law, R. M., and Sheffield, J.: LS3MIP (v1.0) contribution to CMIP6: the Land Surface, Snow and Soil moisture Model Intercomparison Project – aims, setup and expected outcome, Geosci. Model Dev., 9, 2809–2832, https://doi.org/10.5194/gmd-9-2809-2016, 2016.

Vanschoren, J., Blockeel, H., Pfahringer, B., and Holmes, G.: Experiment Databases, Mach. Learn., 87, 127–158, https://doi.org/10.1007/s10994-011-5277-0, 2012.

Webb, M. J., Andrews, T., Bodas-Salcedo, A., Bony, S., Bretherton, C. S., Chadwick, R., Chepfer, H., Douville, H., Good, P., Kay, J. E., Klein, S. A., Marchand, R., Medeiros, B., Siebesma, A. P., Skinner, C. B., Stevens, B., Tselioudis, G., Tsushima, Y., and Watanabe, M.: The Cloud Feedback Model Intercomparison Project (CFMIP) contribution to CMIP6, Geosci. Model Dev., 10, 359–384, https://doi.org/10.5194/gmd-10-359-2017, 2017.

Wikipedia contributors: Experiment – Wikipedia, The Free Encyclopedia, 2018.

Zanchettin, D., Khodri, M., Timmreck, C., Toohey, M., Schmidt, A., Gerber, E. P., Hegerl, G., Robock, A., Pausata, F. S. R., Ball, W. T., Bauer, S. E., Bekki, S., Dhomse, S. S., LeGrande, A. N., Mann, G. W., Marshall, L., Mills, M., Marchand, M., Niemeier, U., Poulain, V., Rozanov, E., Rubino, A., Stenke, A., Tsigaridis, K., and Tummon, F.: The Model Intercomparison Project on the climatic response to Volcanic forcing (VolMIP): experimental design and forcing input data for CMIP6, Geosci. Model Dev., 9, 2701–2719, https://doi.org/10.5194/gmd-9-2701-2016, 2016.

Zhou, T., Turner, A. G., Kinter, J. L., Wang, B., Qian, Y., Chen, X., Wu, B., Wang, B., Liu, B., Zou, L., and He, B.: GMMIP (v1.0) contribution to CMIP6: Global Monsoons Model Inter-comparison Project, Geosci. Model Dev., 9, 3589–3604, https://doi.org/10.5194/gmd-9-3589-2016, 2016.

Zocholl, M., Camossi, E., Jousselme, A.-L., and Ray, C.: Ontology-Based Design of Experiments on Big Data Solutions, in: Proceedings of the Posters and Demos Track of the 14th International Conference on Semantic Systems (SEMPDS), edited by: Khalili, A. and Koutraki, M., Vol. 2198, p. 4, ceur-ws.org, Vienna, Austria, 2018.

https://www.wcrp-climate.org/wgcm-cmip/cmip-panel, last access: 2 March 2020

https://pcmdi.llnl.gov/CMIP6/, last access: 2 March 2020

https://www.geosci-model-dev.net/special_issue590.html, last access: 2 March 2020

https://pypi.org/project/pyesdoc/, last access: 2 March 2020

http://jinja.pocoo.org/, last access: 2 March 2020

https://weasyprint.org/, last access: 2 March 2020