the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

The Lagrangian particle dispersion model FLEXPART version 10.4

Espen Sollum

Henrik Grythe

Nina I. Kristiansen

Massimo Cassiani

Sabine Eckhardt

Delia Arnold

Don Morton

Rona L. Thompson

Christine D. Groot Zwaaftink

Nikolaos Evangeliou

Harald Sodemann

Leopold Haimberger

Stephan Henne

Dominik Brunner

John F. Burkhart

Anne Fouilloux

Jerome Brioude

Anne Philipp

Petra Seibert

Andreas Stohl

The Lagrangian particle dispersion model FLEXPART in its original version in the mid-1990s was designed for calculating the long-range and mesoscale dispersion of hazardous substances from point sources, such as those released after an accident in a nuclear power plant. Over the past decades, the model has evolved into a comprehensive tool for multi-scale atmospheric transport modeling and analysis and has attracted a global user community. Its application fields have been extended to a large range of atmospheric gases and aerosols, e.g., greenhouse gases, short-lived climate forcers like black carbon and volcanic ash, and it has also been used to study the atmospheric branch of the water cycle. Given suitable meteorological input data, it can be used for scales from dozens of meters to global. In particular, inverse modeling based on source–receptor relationships from FLEXPART has become widely used. In this paper, we present FLEXPART version 10.4, which works with meteorological input data from the European Centre for Medium-Range Weather Forecasts (ECMWF) Integrated Forecast System (IFS) and data from the United States National Centers of Environmental Prediction (NCEP) Global Forecast System (GFS). Since the last publication of a detailed FLEXPART description (version 6.2), the model has been improved in different aspects such as performance, physicochemical parameterizations, input/output formats, and available preprocessing and post-processing software. The model code has also been parallelized using the Message Passing Interface (MPI). We demonstrate that the model scales well up to using 256 processors, with a parallel efficiency greater than 75 % for up to 64 processes on multiple nodes in runs with very large numbers of particles. The deviation from 100 % efficiency is almost entirely due to the remaining nonparallelized parts of the code, suggesting large potential for further speedup. A new turbulence scheme for the convective boundary layer has been developed that considers the skewness in the vertical velocity distribution (updrafts and downdrafts) and vertical gradients in air density. FLEXPART is the only model available considering both effects, making it highly accurate for small-scale applications, e.g., to quantify dispersion in the vicinity of a point source. The wet deposition scheme for aerosols has been completely rewritten and a new, more detailed gravitational settling parameterization for aerosols has also been implemented. FLEXPART has had the option of running backward in time from atmospheric concentrations at receptor locations for many years, but this has now been extended to also work for deposition values and may become useful, for instance, for the interpretation of ice core measurements. To our knowledge, to date FLEXPART is the only model with that capability. Furthermore, the temporal variation and temperature dependence of chemical reactions with the OH radical have been included, allowing for more accurate simulations for species with intermediate lifetimes against the reaction with OH, such as ethane. Finally, user settings can now be specified in a more flexible namelist format, and output files can be produced in NetCDF format instead of FLEXPART's customary binary format. In this paper, we describe these new developments. Moreover, we present some tools for the preparation of the meteorological input data and for processing FLEXPART output data, and we briefly report on alternative FLEXPART versions.

- Article

(3916 KB) - Full-text XML

- BibTeX

- EndNote

Multi-scale offline Lagrangian particle dispersion models (LPDMs) are versatile tools for simulating the transport and turbulent mixing of gases and aerosols in the atmosphere. Examples of such models are the Numerical Atmospheric-dispersion Modelling Environment (NAME) (Jones et al., 2007), the Stochastic Time-Inverted Lagrangian Transport (STILT) model (Lin et al., 2003), the Hybrid Single-Particle Lagrangian Integrated Trajectory (HYSPLIT) model (Stein et al., 2015) and the FLEXible PARTicle (FLEXPART) model (Stohl et al., 1998, 2005). LPDMs are stochastic models that compute trajectories for a large number of notional particles that do not represent real aerosol particles but points moving with the ambient flow. The trajectories represent the transport by mean flow as well as turbulent, diffusive transport by unresolved parameterized subgrid-scale transport processes (e.g., turbulence, meandering, deep convection, etc.) and can also include gravitational settling. Each particle carries a certain mass, which can be affected by loss processes such as radioactive decay, chemical loss, or dry and wet deposition.

The theoretical basis for most currently used atmospheric particle models was laid down by Thomson (1987). He introduced the criterion to formulate Lagrangian stochastic models that produce particle trajectories consistent with predefined Eulerian probability density functions in physical and velocity space. Rodean (1996) and Wilson and Sawford (1996) provided detailed descriptions of the theory and formulation of LPDMs in constant density flows and under different atmospheric stability conditions. Stohl and Thomson (1999) extended this to flows with vertically variable air density. An important characteristic of LPDMs is their ability to run backward in time in a framework that is theoretically consistent with both the Eulerian flow field and LPDM forward calculations. This was discussed by Thomson (1987, 1990), further developed by Flesch et al. (1995), and extended to global-scale dispersion by Stohl et al. (2003) and Seibert and Frank (2004). The more practical aspects and efficiency of LPDMs were discussed by Zannetti (1992) and Uliasz (1994). A history of their development was provided by Thomson and Wilson (2013).

Lagrangian models exhibit much less numerical diffusion than Eulerian or semi-Lagrangian models (e.g., Reithmeier and Sausen, 2002; Cassiani et al., 2016), even though some numerical errors also arise in the discretization of their stochastic differential equations (Ramli and Esler, 2016). Due to their low level of numerical diffusion, tracer filaments generated by dispersion in the atmosphere (Ottino, 1989) are much better captured in Lagrangian models than in Eulerian models. It has been noticed, for instance, that Eulerian models have difficulties simulating the fine tracer structures created by intercontinental pollution transport (Rastigejev et al., 2010), while these are well preserved in LPDMs (e.g., Stohl et al., 2003). Furthermore, in Eulerian models a tracer released from a point source is instantaneously mixed within a grid box, whereas Lagrangian models are independent of a computational grid and can account for point or line sources with potentially infinitesimal spatial resolution. When combined with their capability to run backward in time, this means that LPDMs can also be used to investigate the history of air parcels affecting, for instance, an atmospheric measurement site (e.g., for in situ monitoring of atmospheric composition).

The computational efficiency of LPDMs depends on the type of application. One important aspect is that their computational cost does not increase substantially with the number of species transported (excluding aerosol particles with different gravitational settling, for which trajectories deviate from each other), making multispecies simulations efficient. On the other hand, the computational time scales linearly with the number of particles used, while the statistical error in the model output decreases only with the square root of the particle density. Thus, it can be computationally costly to reduce statistical errors, and data input/output can require substantial additional resources. Generally, a high particle density can be achieved with a small number of released particles in the vicinity of a release location, where statistical errors, relative to simulated concentrations, are typically small. However, particle density and thus the relative accuracy of the results decrease with distance from the source. Methods should therefore be used to reduce the statistical error (e.g., Heinz et al., 2003), such as kernels or particle splitting, and it is important to quantify the statistical error.

1.1 The Lagrangian particle dispersion model FLEXPART

One of the most widely used LPDMs is the open-source model FLEXPART, which simulates the transport, diffusion, dry and wet deposition, radioactive decay, and 1st-order chemical reactions (e.g., OH oxidation) of tracers released from point, line, area or volume sources, or filling the whole atmosphere (Stohl et al., 1998, 2005). FLEXPART development started more than 2 decades ago (Stohl et al., 1998) and the model has been free software ever since it was first released. The status as a free software is formally established by releasing the code under the GNU General Public License (GPL) Version 3. However, the last peer-reviewed publication describing FLEXPART (version 6.2) was published as a technical note about 14 years ago (Stohl et al., 2005). Since then, while updates of FLEXPART's source code and a manual were made available from the web page at https://flexpart.eu/ (last access: 30 October 2019), no citable reference was provided. In this paper, we describe FLEXPART developments since Stohl et al. (2005), which led to the current version 10.4 (subsequently abbreviated as v10.4).

FLEXPART can be run either forward or backward in time. For forward simulations, particles are released from one or more sources and concentrations (or mixing ratios) are determined on a regular latitude–longitude–altitude grid. In backward mode, the location where particles are released represents a receptor (e.g., a measurement site). Like in the forward mode, particles are sampled on a latitude–longitude–altitude grid, which in this case corresponds to potential sources. The functional values obtained represent the source–receptor relationship (SRR) (Seibert and Frank, 2004), also called source–receptor sensitivity (Wotawa et al., 2003) or simply emission sensitivity, and are related to the particles' residence time in the output grid cells. Backward modeling is more efficient than forward modeling for calculating SRRs if the number of receptors is smaller than the number of (potential) sources. Seibert and Frank (2004) explained in detail the theory of backward modeling, and Stohl et al. (2003) gave a concrete backward modeling example. FLEXPART can also be used in a domain-filling mode whereby the entire atmosphere is represented by (e.g., a few million) particles of equal mass (Stohl and James, 2004). The number of particles required for domain-filling simulations, not unlike those needed for other types of simulations, depends on the scientific question to be answered. For instance, a few million particles distributed globally are often enough to investigate the statistical properties of air mass transport (e.g., monthly average residence times in a particular area that is not too small) but would not be enough for a case study of airstreams related to a particular synoptic situation (e.g., describing flow in the warm conveyor belt of a particular cyclone).

FLEXPART is an offline model that uses meteorological fields (analyses or forecasts) as input. Such data are available from several different numerical weather prediction (NWP) models. For the model version described here, v10.4, data from the European Centre for Medium-Range Weather Forecasts (ECMWF) Integrated Forecast System (IFS) and data from the United States National Centers of Environmental Prediction (NCEP) Global Forecast System (GFS) can be used. Common spatial resolutions for IFS depending on the application include at 3 h (standard for older products, e.g., ERA-Interim), at 1 h (standard for newer products, e.g., ERA5) and at 1 h (current ECMWF operational data). The ECMWF IFS model currently has 137 vertical levels. NCEP GFS input files are usually used at horizontal resolution, with 64 vertical levels and 3 h time resolution. NCEP GFS input files are also available at and horizontal resolution. Other FLEXPART model branches have been developed for input data from various limited-area models, for example the Weather Research and Forecasting (WRF) meteorological model (Brioude et al., 2013) and the Consortium for Small-scale Modeling (COSMO) model (Oney, 2015), which extend the applicability of FLEXPART down to the meso-gamma scale. Notice that the turbulence parameterizations of FLEXPART are valid at even smaller scales. Another FLEXPART model version, FLEXPART–NorESM/CAM (Cassiani et al., 2016), uses the meteorological output data generated by the Norwegian Earth System Model (NorESM1-M) with its atmospheric component CAM (Community Atmosphere Model). The current paper does not document these other model branches, but most share many features with FLEXPART v10.4 and some are briefly described in Appendix C. A key aspect of these model branches is the ability to read meteorological input other than that from ECMWF or NCEP.

1.2 FLEXPART and its history

FLEXPART's first version (v1) was a further development of the trajectory model FLEXTRA (Stohl et al., 1995) and was coded in Fortran 77. It provided gridded output of concentrations of chemical species and radionuclides. Its meteorological input data were based on the ECMWF-specific GRIB-1 (gridded binary) format. The model was first applied in an extensive validation study using measurements from three large-scale tracer experiments (Stohl et al., 1998). A deposition module was added in version 2. Version 3 saw improvements in performance and the addition of a subgrid-scale terrain effects parameterization. In v3.1 the output format was optimized (sparse matrix) and mixing ratio output could optionally be produced. It also allowed for the output of particle positions. Furthermore, a density correction was added to account for decreasing air density with height in the boundary layer (Stohl and Thomson, 1999). Further v3 releases included the addition of a convection scheme (Seibert et al., 2001) based on Emanuel and Živković-Rothman (1999), the option to calculate mass fluxes across grid cell faces and age spectra, and free format input (v3.2). The preliminary convection scheme of v3.2 was revised in v4 (see Forster et al., 2007). In v5 the output unit of backward calculations was changed to seconds and improvements in the input/output handling were made. Comprehensive validation of these early FLEXPART versions was done during intercontinental air pollution transport studies at the end of the 1990s and early 2000s (Stohl and Trickl, 1999; Forster et al., 2001; Spichtinger et al., 2001; Stohl et al., 2002, 2003). Special developments were also made in order to extend FLEXPART's forecasting capabilities for large-scale field campaigns (Stohl et al., 2004). Version 6.0 saw corrections to the numerics in the convection scheme, the addition of a domain-filling option used, for instance, in water cycle studies (Stohl and James, 2004) and the possibility to use nested output. Version 6.2, which added the ability to model sources and receptors in both mass and mixing ratio units (Seibert and Frank, 2004), is currently the last version described in a publication (Stohl et al., 2005). A separate sister model branch (v6.4) was adapted to run with NCEP GFS meteorological input data. The current paper describes the most important model developments since v6.2 (for ECMWF) and v6.4 (for GFS).

Version 8.0 unified the model branches based on ECMWF IFS and NCEP GFS input data in one source package but still required the building of two different executables. Importantly, Fortran 90 constructs were introduced in parts of the code, such as initial support for dynamic memory allocation. Furthermore, a global land use inventory was added, allowing for more accurate dry deposition calculations everywhere on the globe (before, land use data were provided only for Europe). The reading of the – at the time – newly introduced GRIB-2 format with the ECMWF grib_api library was implemented in v8.2. An option to calculate the sensitivity to initial conditions in backward model runs (in addition to the emission sensitivity calculations) was also implemented in v8.2. Version 8 was also the first version that distinguished between in-cloud and below-cloud scavenging for washout, relying on simple diagnostics for clouds based on grid-scale relative humidity. With a growing number of parameters defining removal processes, each species was given its own definition file, whereas in previous versions the properties for all species were contained in a single file. The gravitational settling scheme was improved in v8.2.1 (Stohl et al., 2011), and this is briefly described in this paper in section 2.3.

For v9, the code was transformed to the Fortran 90 free-form source format. The option to read the run specifications from Fortran namelists instead of the standard input files was introduced, as described in Sect. 5 of this paper. This change was motivated by the resulting greater flexibility, in particular with regard to setting default values, optional arguments, when new developments require adding new parameters and when specifying parameter lists. In addition, an option to produce output in compressed NetCDF 4 format was provided (see Sect. 6.3). Another option to write some model output only for the first vertical layer to save storage space for inverse modeling applications was also introduced (Thompson and Stohl, 2014) (see Sect. 2.6).

1.3 FLEXPART version 10.4

For v10.4 of FLEXPART, described in this paper, several more changes and improvements were made. First, an optional new scheme applying more realistic skewed rather than Gaussian turbulence statistics in the convective atmospheric boundary layer (ABL) was developed (Sect. 2.1). Second, the wet deposition scheme for aerosols was totally revised (Grythe et al., 2017), introducing dependencies on aerosol size, precipitation type (rain or snow), and distinguishing between in-cloud and below-cloud scavenging (see Sect. 2.4). The code now also allows for the reading of three-dimensional (3-D) cloud water fields from meteorological input files. Third, a method to calculate the sensitivity of deposited quantities to sources in backward mode was developed (Sect. 2.5) Fourth, chemical reactions with the hydroxyl radical (OH) are now made dependent on the temperature and vary sub-monthly (Sect. 2.7). Fifth, large parts of the code were parallelized using the Message Passing Interface (MPI), thus facilitating a substantial speedup for certain applications (see Sect. 3), and the code was unified so that a single executable can now use both ECMWF and GFS input data. Sixth, a dust mobilization scheme that can be run as a FLEXPART preprocessor was developed (Sect. 2.8). Seventh, the software used to retrieve data from the ECMWF has been modernized and can now also be used by scientists from non-ECMWF member states (Sect. 5.2.1). Finally, a testing environment was created that allows users to verify their FLEXPART installation and compare results (Sect. 7).

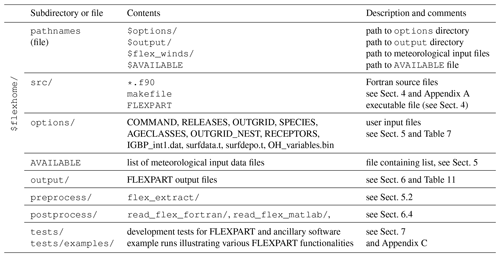

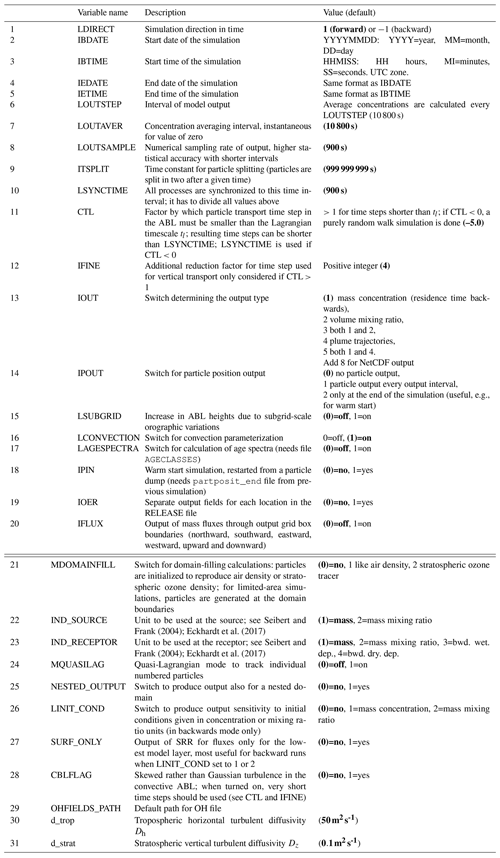

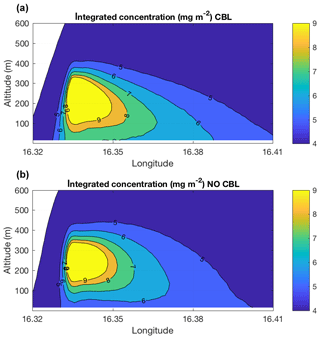

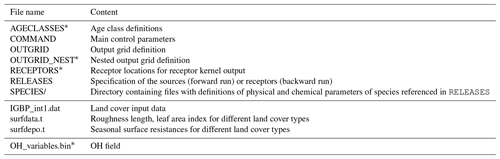

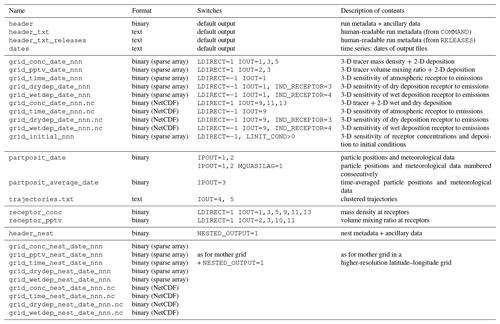

Despite the many changes and additions, in large part the operation of FLEXPART v10.4 still resembles the original version 1 design. Throughout this paper, we avoid repeating information on aspects of the model that have not changed since earlier model descriptions. The paper should therefore always be considered together with the publications of Stohl et al. (1998, 2005). To provide the necessary context for the rest of this paper, we provide a brief overview of the FLEXPART v10.4 directory structure in Table 1. The source code is contained in directory src. The pathnames of the input and output directories are stated in the file pathnames read by the FLEXPART executable. The directory options contains the parameters that define a run in files such as COMMAND (e.g., start and end times of the simulation, output frequency, etc.), RELEASES (definition of the particle releases), OUTGRID (output grid specifications) and others. All the output is written in a directory unique for each run. There are also other directories containing the model testing environment and example runs, as well as preprocessing and post-processing software (see Table 1).

Sensu stricto FLEXPART consists of the (Fortran) source files required to build an executable, not including external libraries such as those needed for input reading. The makefiles and the sample input as provided in the “options” may also be included under this term. However, in order to do real work with FLEXPART, one also needs to obtain meteorological input data (in the case of the ECMWF this is not trivial, so the flex_extract package is provided), and one needs to do post-processing. This is the reason why we include a selection of such tools here.

This section gives an overview of the main updates of the model physics and chemistry since the last published FLEXPART version, v6.2 (Stohl et al., 2005). Some developments have been published already separately, and in such cases we keep the description short, focusing on technical aspects of the implementation in FLEXPART that are important for model users or demonstrating applications not covered in the original papers.

2.1 Boundary layer turbulence

Subgrid-scale atmospheric motions unresolved by the meteorological input data need to be parameterized in FLEXPART. This is done by adding stochastic fluctuations based on Langevin equations for the particle velocity components (Stohl et al., 2005). In the ABL, the stochastic differential equations are formulated according to the well-mixed criteria proposed by Thomson (1987). Until FLEXPART version 9.2, the Eulerian probability density functions (PDFs) for the three velocity components were assumed to be three independent Gaussian PDFs. However, for the vertical velocity component, the Gaussian turbulence model is well suited only for stable and neutral conditions. In the convective ABL (CBL), turbulence is skewed since a larger area is occupied by downdrafts than by updrafts (e.g., Stull, 1988; Luhar and Britter, 1989). In such conditions, the Gaussian turbulence model is not appropriate for sources within the ABL, as it cannot reproduce the observed upward bending of plumes from near-ground sources or the rapid downward transport of plumes from elevated sources (Venkatram and Wyngaard, 1988). However, the Gaussian approximation has negligible influence once the tracer is mixed throughout the whole ABL.

Cassiani et al. (2015) developed an alternative Langevin equation model for the vertical particle velocity including both skewed turbulence and a vertical density gradient, which is now implemented in FLEXPART v10.4. This scheme can be activated by setting the switch CBL to 1 in the file COMMAND. In this case, the time step requirement for numerical stability is much more stringent than for the standard Gaussian turbulence model (typically, values of CTL=10 and IFINE=10 are required, also set in the file COMMAND). Therefore, also considering that the computation time required for each time step is about 2.5 times that of the standard Gaussian formulation, the CBL option is much more computationally demanding and not recommended for large-scale applications. However, for studies of tracer dispersion in the vicinity of individual point sources, the CBL option is essential to reproduce the characteristic features of CBL dispersion (Weil, 1985), while the additional computational burden remains tolerable.

Figure 1 shows a comparison between two simulations of dispersion from an elevated source, with the skewed and with the Gaussian turbulence model. It can be seen that the maximum time-averaged ground-level concentration is about 30 % higher for the skewed turbulence parameterization. This is the result of the plume centerline tilting downward to the surface in the vicinity of the source for the skewed turbulence case due to downdrafts being more frequent than updrafts. The plume also spreads faster in this case. These results are similar to those obtained by others (e.g., Luhar and Britter, 1989).

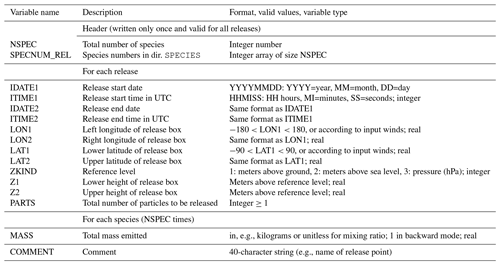

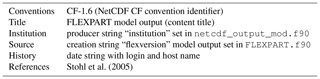

Figure 1Comparison of FLEXPART results obtained with the skewed turbulence parameterization (a) and with the Gaussian turbulence parameterization (b). Shown are the tracer concentrations integrated over all latitudes as a function of altitude and longitude. The simulations used a point source emitting 100 kg of tracer per hour for a period starting on 1 July 2017 at 12:00 UTC and ending at 13:30 UTC. The source was located near Vienna (Austria) at 47.9157∘ N and 16.3274∘ E, 250 m above ground level. Results are averaged for the time period 12:40 to 13:00 UTC. Notice that the maximum ground-level concentration in panel (a) (ca. 7 mg m−2) is about 30 % higher than in panel (b) (5 mg m−2).

It is important to note that the CBL formulation smoothly transits to a standard Gaussian formulation when the stability changes towards neutral (Cassiani et al., 2015). However, the actual equation solved inside the model for the Gaussian condition is still different from the standard version as actual particle velocities rather than the scaled ones are advanced (see, e.g., Wilson et al., 1981; Rodean, 1996). Full details of the CBL implementation can be found in Cassiani et al. (2015).

To date, FLEXPART has mainly been used for large-scale applications. With this new CBL option, FLEXPART is now also well suited for the simulation of small-scale tracer dispersion or for the inverse modeling of point source emissions from near-field measurements – at least if the resolved winds are representative of the situation considered. In fact, to our knowledge FLEXPART is the only particle model considering both skewness in the vertical velocity distribution and vertical gradients in air density. Both these effects are particularly important in deep CBLs and can be additive with respect to simulated ground-level concentrations.

2.2 Turbulent diffusivity above the boundary layer

Above the atmospheric boundary layer, turbulent fluctuations can be represented with a turbulent diffusivity. The value of the diffusivity tensor controls the size and lifetimes of the filamentary structures caused by advection. Diffusivities are converted into velocity scales using , where i is the direction. This corresponds to a mean diffusive displacement of , characteristic of Brownian motion. For i, only the vertical (v) and horizontal (h) directions are considered. The value of the vertical diffusivity Dz is related to the value of the horizontal diffusivity Dh by the square of the typical atmospheric aspect ratio for tracer structures κ≈100–250 (Haynes and Anglade, 1997).

FLEXPART uses by default a constant vertical diffusivity Dz=0.1 m2 s−1 in the stratosphere, following Legras et al. (2003), whereas a horizontal diffusivity Dh=50 m2 s−1 is used in the free troposphere. In general in the atmosphere, the values of the turbulent diffusivity depend on time and location, showing in particular seasonal, latitudinal and altitude variability: e.g., m2 s−1 in the stratosphere (Balluch and Haynes, 1997) and Dh=104 m2 s−1 (Pisso et al., 2009) in the troposphere. The values can be modified by the user in the COMMAND file (namelist variables d_trop and d_strat, in m2 s−1). As mentioned above, Dh≈κ2Dz, and therefore both values can be used interchangeably.

In FLEXPART version 6.2, the stratosphere and troposphere were distinguished based on a threshold of 2 pvu (potential vorticity units), with a maximal height of 18 km in the tropics and a minimal height of 5 km elsewhere. Such a threshold is well suited to midlatitudes, but it can differ from the thermal tropopause in the polar regions and close to the Equator. In FLEXPART 10.4, the thermal tropopause definition is used and is calculated using the lapse rate definition (Hoinka, 1997).

2.3 Gravitational settling

Gravitational settling of aerosols is implemented in FLEXPART as a process that changes the particle trajectories. The settling velocity is determined at each time step and added to the vertical wind velocity. In simulations in which a particle represents several species, all species are transported with the settling velocity of the first species. If this is not intended, simulations for the different species must be run separately. Gravitational settling velocities are also used in the calculation of dry deposition.

In older FLEXPART versions, gravitational settling was calculated using a single dynamic viscosity of air. With FLEXPART 8.2.1, the gravitational settling calculation was generalized to higher Reynolds numbers and it takes into account the temperature dependence of dynamic viscosity. This is done in subroutine get_settling.f90 in an iterative loop, wherein both the Reynolds number and the settling velocity are determined (Naeslund and Thaning, 1991). For initialization of the loop, Stokes' law and a constant viscosity estimate is used. The dynamic viscosity is calculated as a function of temperature using the formula of Sutherland (1893). A spherical shape of the particles is assumed in the settling calculation, which could be further extended in the future to allow for more complex particle shapes. For particle sizes of about 10 µm, settling velocities in the new FLEXPART version are not much different from earlier versions using the old settling calculation, typically less than 20 %. However, the differences are largest in the cold upper troposphere, implying, for instance, changes in the residence time of volcanic ash particles at heights relevant for aviation. The residence times in the upper troposphere are increased with the new scheme, but the effect is not particularly large, typically on the order of 20 %.

2.4 Wet deposition

In FLEXPART, the calculation of wet scavenging is divided into three parts. First, where scavenging occurs and which form it takes is determined (e.g., below- or within-cloud scavenging). Second, the scavenging coefficient is determined. Third, the actual removal of particle mass is calculated.

With respect to the first part, it is important to understand that wet scavenging occurs only in the presence of clouds and where precipitation occurs. In air columns without clouds, above the top of the clouds, and where neither the large-scale nor the convective precipitation rate exceeds 0.01 mm h−1, no scavenging occurs. To quickly know where a particle is located relative to the clouds, in subroutines verttransform_ecmwf.f90 andverttransform_gfs.f90 each grid cell is categorized as being in a cloud-free column, above a cloud, inside a cloud or below a cloud. This cloud definition has been completely revised compared to earlier versions and is described in Sect. 2.4.1.

With respect to the second step, the scavenging coefficient Λ (s−1) is determined in subroutine get_wetscav.f90. After a series of updates, in particular Grythe et al. (2017), FLEXPART now distinguishes between below-cloud and in-cloud scavenging and also has different parameterizations of Λ for gases and particles. For the latter, it also distinguishes between liquid-phase and ice-phase states. This yields in total six different parameterizations for Λ, described in Sect. 2.4.2 and 2.4.3.

In the third step, the removal of particle mass due to wet deposition is calculated. It takes the form of an exponential decay process (McMahon, 1979),

where m is the particle mass (kg) (it can also be a mass mixing ratio, depending on settings in file COMMAND). This removal of particle mass and corresponding accumulation of deposition at the surface is calculated in subroutine wetdepo.f90 and has not been changed since earlier versions.

2.4.1 Definition of clouds, cloud water content and precipitation

The location of clouds, the total cloud column water content and phase, and precipitation intensity and phase are needed in the calculation of the wet scavenging. Therefore, a three-dimensional cloud mask is defined in subroutine verttransform_ecmwf.f90 (or verttransform_gfs.f90 for GFS data). In previous FLEXPART versions, the cloud definition scheme was very simple and based on relative humidity (RH). In grid columns with precipitation, grid cells with RH >80 % were defined as in-cloud, and those with RH <80 % were set as below-cloud up to the bottom of the uppermost layer with RH >80 %. This was appropriate for the first version of FLEXPART, as the ECMWF had a similarly simple definition of clouds and more detailed information was not available from the ECMWF archives at the time.

If no cloud information is available from the meteorological data, the old RH-based scheme is still used in FLEXPART. However, nowadays, specific cloud liquid water content (CLWC; kg kg−1) and specific cloud ice water content (CIWC; kg kg−1) are available as 3-D fields in meteorological analyses from the ECMWF, and NCEP also provides the 3-D cloud water (liquid plus ice) mixing ratio (CLWMR; kg kg−1), from here on referred to as qc. A cloudy grid cell is defined when qc>0. FLEXPART v10.4 can ingest the ECMWF CLWC and CIWC either separately or as a sum (qc=CLWC+CIWC). However, to save storage space, we recommend retrieving only the sum, qc, from the ECMWF, as the relative fractions of ice and liquid water can be parameterized quite accurately using Eq. (4).

The column cloud water (cl; kg m−2), which is needed for the in-cloud scavenging parameterization, is calculated by integrating qc over all vertical z levels:

where ρair(z) is the density of the air in the grid cell, and Δz is the vertical extent of the grid cell. In older FLEXPART versions, cl was parameterized based on an empirical equation given in Hertel et al. (1995) using the subgrid (see below for a description of how subgrid is defined) surface precipitation rate Is (mm h−1). While such a parameterization is not needed anymore if qc is available, it is still activated in the case that cloud water input data are missing. However, in order to ensure that cl from the parameterization is statistically consistent with the cloud data, we derived the modified expression

using a regression analysis between existing cloud and precipitation data.

Precipitation is not uniform within a grid cell. To account for subgrid variability, it is assumed that precipitation is enhanced within a subgrid area and that no precipitation (and thus no scavenging) occurs outside this subgrid area. The subgrid area fraction and precipitation rate (Is) are estimated from the grid-scale precipitation rate (It) based on values tabulated in Hertel et al. (1995). This parameterization of subgrid variability is used for all scavenging processes in FLEXPART and maintained from previous FLEXPART versions as described in Stohl et al. (2005).

The precipitation phase needed for the below-cloud scavenging scheme is simply based on ambient grid-scale temperature, with snow occurring below 0 ∘C and rain above. For cloud water, cl, we assume a temperature-dependent mixture of liquid and solid particles, for which the liquid fraction (αL) is calculated based on the local temperature T,

where C and ∘C. For T>TL, αL=1 and for T<TI, αL=0. Even when CLWC and CIWC are available as separate fields, we derive the liquid fraction (αL) of cloud water from the local temperature. The ice fraction αI is 1−αL. Comparisons have shown that CLWC is very accurately reproduced by αLqc.

The cloud information should be linearly interpolated like the other variables, and situations in which the diagnosed cloud is incompatible with the precipitation rate (be it because of interpolation or because of convective precipitation accompanied by too shallow or lacking grid-scale clouds) need to receive special treatment. This is planned for a version upgrade in the near future in conjunction with a better interpolation scheme for precipitation (see Hittmeir et al., 2018). In certain cases, the deposition calculation of FLEXPART might be improved by using higher-resolution precipitation data from other sources such as from radar observations (Arnold et al., 2015); however, as the precipitation and ECMWF cloud data, and also the precipitation and wind fields, may not match, this does not guarantee better results.

2.4.2 Below-cloud scavenging

For gases, the scavenging coefficient, Λ, for below-cloud scavenging is calculated as described in Asman (1995),

where the scavenging parameters A and B depend on the chemical properties of the gas and are specified in the SPECIES_nnn file as described in Sect. 5.1.3 (nnn represents the species number (0–999) used for the simulation). In older FLEXPART versions, this scheme was used also for aerosols; however, Grythe et al. (2017) developed a new aerosol scavenging scheme that is implemented in FLEXPART v10.4 and briefly summarized here.

The relevant processes of collision and attachment of ambient aerosol particles to falling precipitation depend mainly on the relationship between the aerosol and hydrometeor size and type (rain or snow) as well as to a lesser degree on the density and hygroscopicity of the aerosol. In FLEXPART v10.4, the dependence of scavenging on the sizes of both the aerosol and falling hydrometeors are taken into account by the schemes of Laakso et al. (2003) for rain and Kyrö et al. (2009) for snow. Both schemes follow the equation

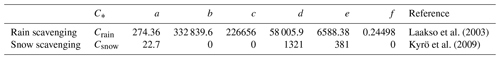

where , dp is the particle dry diameter provided in the SPECIES_nnn file, dp0=1 m, Λ0=1 s−1 and I0=1 mm h−1. Coefficients for factors a–f are different for rain and snow scavenging and are given in Table 2. The C* values are collection efficiencies that reflect the properties of the aerosol and must be given for both rain () and snow scavenging () in the SPECIES_nnn file. Notice that by setting Csnow=0, below-cloud scavenging by snowfall is switched off (similarly, Crain=0 for rain).

Laakso et al. (2003)Kyrö et al. (2009)2.4.3 In-cloud scavenging

For in-cloud scavenging of both aerosols and gases, Λ is calculated as described in Grythe et al. (2017):

where is the cloud water replenishment factor, which was determined empirically in Grythe et al. (2017) (there it was named icr), and Si is proportional to the in-cloud scavenging ratio, which is derived differently for gases and aerosols.

For gases, , where H is Henry's constant (describing the solubility of the gas and specified in the SPECIES_nnn file), R is the gas constant and T is temperature. Notice that this is applied for both liquid-phase and ice clouds.

For aerosols, the in-cloud scavenging is dominated by activated particles forming cloud droplets or ice nuclei. Those may eventually combine to form a hydrometeor that falls out of the cloud, thus removing all aerosol particles contained in it. Therefore, Si depends on the nucleation efficiency (Fnuc) and cl:

Fnuc describes how efficiently the aerosol particles are activated as cloud droplet condensation nuclei (CCN) or ice nuclei (IN):

where the relative abundances of the liquid and ice phase are accounted for by the factor αL. Values for the efficiencies, CCNeff and INeff, are available from the literature for many different types of aerosols (e.g., black carbon, mineral dust particles or soluble particles) and some have been collected in SPECIES_nnn files distributed with FLEXPART (see Sect. 5.1.3). The CCNeff and INeff values are set for an aerodynamic particle radius of 1 µm, but CCN and IN efficiencies increase with increasing particle size. The in-cloud parameterization takes this into account. For further details on the wet scavenging scheme used in FLEXPART, see Grythe et al. (2017).

2.4.4 Influence of wet scavenging on the aerosol lifetime

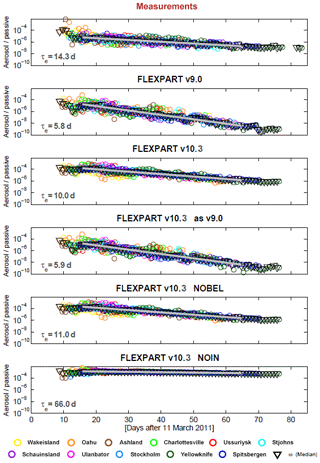

Aerosol wet scavenging controls the lifetime of most aerosols. In Fig. 2, we compare modeled e-folding lifetimes from a number of FLEXPART simulations using different model versions and switching off in-cloud and below-cloud scavenging in FLEXPART v10.4 with measured lifetimes. The parameter settings in FLEXPART used for these comparisons were the same as used by Grythe et al. (2017). To derive aerosol lifetimes in a consistent way from both measurements and model simulations, a radionuclide attached to ambient aerosol and a noble gas radionuclide were used. Kristiansen et al. (2016) used the same method to compare many different aerosol models, and we refer to their paper for more details on the method. For our model simulations, several size bins of aerosols were used, though total concentrations and lifetimes are largely controlled by 0.4 and 0.6 µm particles (see Grythe et al., 2017). E-folding lifetimes increase from 5.8 to 10.0 d between FLEXPART v9 and v10.4. A simulation performed with v10.4 but which emulated the in-cloud scavenging of v9 showed that the difference is mainly due to the decreased in-cloud scavenging in the new removal scheme compared to the old one. Notice that the lifetime obtained with v10.4 is much closer to the observation-based lifetimes. Turning off the below-cloud removal has a relatively small effect, increasing the lifetime to 11 d, whereas turning off the in-cloud removal extends the lifetime to the unrealistic value of 66 d (see bottom two panels in Fig. 2). This highlights the dominant role of in-cloud removal for accumulation-mode particles in FLEXPART.

Figure 2Aerosol lifetimes estimated from the decrease in radionuclide ratios (aerosol-bound 137Cs and noble gas 133Xe as a passive tracer) with time after the Fukushima nuclear accident, as measured and modeled at a number of global measurement stations. For details on the method, see Kristiansen et al. (2016). E-folding lifetimes, τe, are estimated based on fits to the data and reported in each panel. In the top panel, the observed values are shown and in subsequent panels from the top, modeled values are given for FLEXPART v9, FLEXPART v10.4, FLEXPART v10.4 with parameter settings to emulate removal as in v9, FLEXPART v10.4 with no below-cloud removal and FLEXPART v10.4 with no in-cloud removal.

Notice that compared to older versions of FLEXPART, the SPECIES_nnn files now include additional parameters related to the wet deposition scheme. Old input files, therefore, need to be updated for use with FLEXPART v10.4. The required format changes are detailed in Sect. 5.1.3.

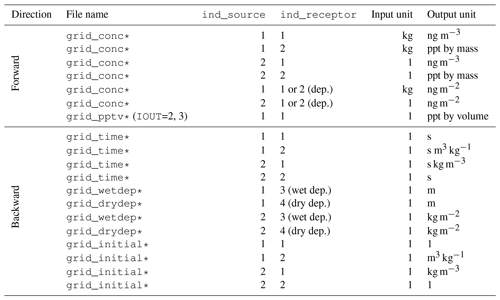

2.5 Source–receptor matrix calculation of deposited mass backward in time

When running FLEXPART forward in time for a depositing species with a given emission flux (kilograms per release as specified in file RELEASES), the accumulated wet and dry deposition fluxes (ng m−2) are appended to the FLEXPART output files (grid_conc_date and/or grid_pptv_date, for which date represents the date and time in format YYYYMMDDhhmmss; see Sect. 6) containing the atmospheric concentration and/or volume mixing ratio output. The deposition is always given in mass units, even if atmospheric values are given in mixing ratio units. In contrast to concentration values, deposition quantities are accumulated over the time of the simulation, so the deposited quantities generally increase during a simulation (except when radioactive decay is activated, which also affects deposited quantities and can decrease them).

As discussed in Sect. 1, running FLEXPART backward in time for calculating SRRs is more efficient than running it forward if the number of (potential) sources is larger than the number of receptors. For atmospheric concentrations (or mixing ratios), the backward mode has been available from the very beginning and in an improved form since FLEXPART v5 (Stohl et al., 2003; Seibert and Frank, 2004). This has proven very useful for the interpretation of ground-based, shipborne or airborne observations (e.g., to characterize sources contributing to pollution plumes). Furthermore, the inversion scheme FLEXINVERT (Thompson and Stohl, 2014) that is used to determine the fluxes of greenhouse gases is based on backward simulations. However, there are also measurements of deposition on the ground, e.g., in precipitation samples or ice cores, and for this type of measurement no backward simulations were possible until recently. Therefore, Eckhardt et al. (2017) introduced the option to calculate SRR values in backward mode also for wet and dry deposition, and a first application to ice core data was presented by McConnell et al. (2018). It is anticipated that quantitative interpretation of ice core data will be a major application of the new backward mode, which is efficient enough to allow for the calculation of, for example, 100 years of seasonally resolved deposition data in less than 24 h of computation time.

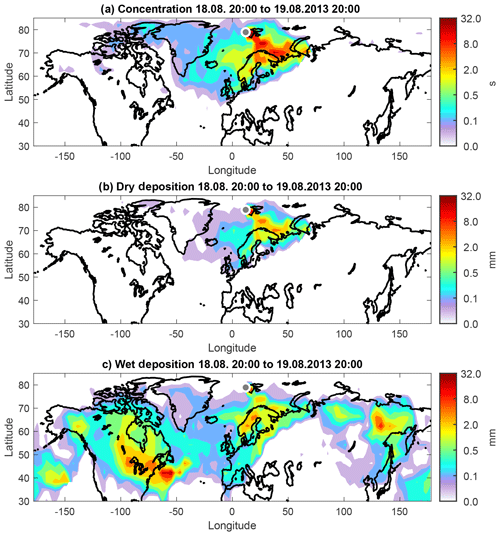

We illustrate the different backward modes and explain the required settings with an example. The calculations were run for a single receptor location, Ny-Ålesund in Spitsbergen (78.93∘ N, 11.92∘ E) and for the 24 h period from 18 August 2013 at 20:00 UTC to 19 August 2013 at 20:00 UTC. SRR values are calculated for the atmospheric concentration averaged over the layer 0–100 m a.g.l., as well as for wet and dry deposition. The substance transported is black carbon (BC), which is subject to both dry and wet deposition. Backward simulations for wet and dry deposition must always be run separately. In order to obtain SRR values for total deposition, results for wet and dry deposition need to be summed.

The backward mode is activated by setting the simulation direction, LDIRECT in file COMMAND (see Sect. 5), to −1. The three simulations are obtained by consecutively setting IND_RECEPTOR to 1 (for concentration), 3 (wet deposition) and 4 (dry deposition). IND_SOURCE is always set to 1, meaning that the sensitivities (SRR values) are calculated with respect to physical emissions in mass units. A complete list of possible options is reported in Table 1 of Eckhardt et al. (2017).

Figure 3 shows the resulting SRR (i.e., emission sensitivity) fields for the concentration as well as dry and wet deposition at the receptor. Dry deposition occurs on the Earth's surface, and therefore particles are released in a shallow layer adjacent to the surface. Its height is consistent with the shallow depth over which dry deposition is calculated in forward mode (user settings for the release height are ignored for dry deposition backward calculations). Dry deposition rates are the product of the surface concentration and the deposition velocity. Therefore, the SRR fields for surface concentration (Fig. 3a) and dry deposition (Fig. 3b) show similar patterns, in this case indicating high sensitivity for sources over Scandinavia and northwestern Russia. The differences in the spatial patterns are mainly due to temporal variability in the dry deposition velocity at the receptor caused by varying meteorological conditions (e.g., stability) and surface conditions during the 24 h release interval.

Figure 3Source–receptor relationships (for emissions occurring in the lowest 100 m a.g.l.) for black carbon observed at Ny-Ålesund in Svalbard for a 24 h period starting on 18 August 2013 at 20:00 UTC. The sensitivities were calculated for (a) concentrations (s) in the layer 0–100 m a.g.l., (b) dry deposition (mm) and (c) wet deposition (mm).

Wet deposition, on the other hand, can occur anywhere in the atmospheric column from the surface to the top of the precipitating cloud. FLEXPART automatically releases particles in the whole atmospheric column (again, user settings for the release height are ignored), but particles for which no scavenging occurs (e.g., those above the cloud top or when no precipitation occurs) are immediately terminated. Therefore, and because of the vertical variability of the scavenging process, the sensitivity for the deposited mass can deviate significantly from the sensitivity corresponding to surface concentration. Here (Fig. 3c), the sensitivity is high over Scandinavia and northwestern Russia, as was already seen for surface air concentrations and dry deposition. However, in addition, sources located in North America and eastern Siberia also contribute strongly to wet deposition. The maximum over the ocean close to the North American east coast is likely due to lifting in a warm conveyor belt, followed by fast transport at high altitude.

Concentration, dry deposition and wet deposition at the receptor can be calculated from the SRR fields shown in Fig. 3 as follows.

Here, c is the modeled concentration (in kg m−3), dd the dry deposition rate and dw the wet deposition rate (both in kg m−2 s−1). In this specific case with only a single scalar receptor, the source–receptor matrix degenerates to a vector of the SRR values, one for each of the three types of receptor (mc for concentration in units of seconds, md for dry deposition and mw for wet deposition, both in units of meters). In order to obtain the concentration or the deposition rates, these vectors need to be multiplied with the vector of emissions q (kg m−3 s−1). If the total deposition is desired, the deposition rates dd and dw can be multiplied with the receptor time interval ΔTr, in our case 86 400 s (24 h). Note that this is the period during which particles are released according to the specification of the RELEASES file. The emission fluxes must be volume averages over the output grid cells specified in the OUTGRID file, typically surface emission fluxes (in kg m−2 s−1) divided by the height of the lowermost model layer.

2.6 Sensitivity to initial conditions

Backward simulations with FLEXPART in the context of inverse modeling problems typically track particles for several days up to a few weeks. This is sufficient to estimate concentrations at the receptor only for species with atmospheric lifetimes shorter than this period. Many important species (e.g., greenhouse gases such as methane) have considerably longer lifetimes. For such long-lived species, most of the atmospheric concentration variability is still caused by emission and loss processes occurring within the last few days before a measurement because the impact of processes occurring at earlier times is smoothed out by atmospheric mixing. This leads to a relatively smooth “background” (in time series analyses sometimes also called a baseline) that is often a dominant fraction of the total concentration but that does not vary much with time, with short-term fluctuations on top of it. The signal of the regional emissions around the measurement site is mostly contained in the short-term concentration fluctuations but in order to use it in inverse modeling, the background still needs to be accounted for, as otherwise no direct comparison to measurements is possible.

One simple method is to estimate the background from the measurements as, e.g., in Stohl et al. (2009). A better approach is to use a concentration field taken from a long-term forward simulation with an Eulerian model or with FLEXPART itself, especially if nudged to observations (Groot Zwaaftink et al., 2018), as an initial condition for the backward simulation. This field needs to be interfaced with the FLEXPART backward simulation by calculating the receptor sensitivity to the initial conditions (see Eqs. 2–6 in Seibert and Frank, 2004). For instance, for a 10 d backward simulation, the concentration field needs to be sampled at those points in time and space when and where each particle trajectory terminates 10 d back in time. Furthermore, it is necessary to quantify the effects of deposition or chemical loss during the backward simulation on this background (the factor p(0) in Seibert and Frank, 2004). For example, chemical reactions with hydroxyl radicals will reduce initial concentrations of methane en route to the receptor, even though this is not much during a 10 d period.

Since version 8.2, FLEXPART has provided an option to quantify the influence of initial conditions on the receptor in backward simulations, which is activated with the switch LINIT_COND in file COMMAND. Then, gridded fields containing the sensitivities to background mixing ratios (or concentrations, depending on user settings for the switch LINIT_COND in file COMMAND) are produced and stored in the output files grid_initial_nnn (nnn stands for the species number) on the same 3-D grid as the regular output, defined in the files OUTGRID and OUTGRID_NEST. In this case, a concentration would be calculated as

where mi denotes the sensitivity to the initial condition and cb the background concentration when and where particles are terminated.

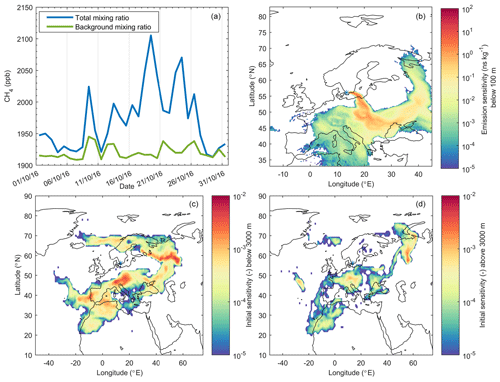

Figure 4 shows an example of the use of the sensitivities of receptor mixing ratios (here, of methane) to both surface emissions and initial conditions. The panel (b) shows the sensitivity to surface emissions on one particular day, and the panels (c) and (d) show the sensitivity to initial conditions below and above 3000 m for the same day. Both results are from an 8 d backward simulation from one receptor site in Sweden. It can be seen that the sensitivity to emissions is highest close to the station, but there is also substantial sensitivity to emission uptake over large parts of central and eastern Europe. The particles terminated 8 d before arrival at the receptor in a roughly croissant-shaped area covering large parts of Europe and the North Atlantic, as indicated by the sensitivity to initial conditions. Most of the sensitivity is located below 3000 m but there is also some influence from higher levels. Notice that only two layers are shown in Fig. 4, whereas the real model output has much higher vertical resolution.

Figure 4Example of FLEXPART 8 d backward runs for methane from a site in southern Sweden (Hyltemossa) demonstrating the combined use of sensitivities to emissions and initial conditions. (a) Time series of methane background mixing ratios and total mixing ratios in October 2016. (b) Sensitivity of the methane mixing ratio at Hyltemossa on 19 October 2016 to methane emissions at the surface. (c) Sensitivity of the methane mixing ratio at Hyltemossa on 19 October 2016 to methane initial conditions below 3000 m. (d) Sensitivity of the methane mixing ratio at Hyltemossa on 19 October 2016 to methane initial conditions above 3000 m. Blue asterisks on the maps mark the receptor location.

The sensitivity to initial conditions was interfaced with a domain-filling methane forward simulation as described in Groot Zwaaftink et al. (2018) (not shown), while the emission sensitivity was interfaced with an emission inventory for methane (not shown), as given by Eq. (11). This was done for daily simulations throughout 1 month, thus generating a time series of background mixing ratios (from the first term in Eq. 11 only) and total mixing ratios (Fig. 4a). The latter include the contributions from emissions during the 8 d backward simulation. It can be seen that the methane background advected from 8 d back varies relatively little between about 1910 and 1940 ppbv, while the emission contributions vary from 0 (on 29 October) to about 200 ppbv (on 19 October, the date for which the sensitivity plots are shown).

In practical applications for inverse modeling, source–receptor sensitivities are often only needed at the surface (as most emissions occur there), while sensitivities to the background are needed in 3-D. By setting the option SURF_ONLY to 1 in the COMMAND file, the regular output files grid_time_date_nnn containing the source–receptor sensitivities will include only the first vertical level as defined in the file OUTGRID, while the full vertical resolution is retained in grid_initial_nnn files containing the sensitivities to the initial conditions. Since the data amounts stored in the grid_time_date_nnn files can be much larger than in the grid_initial_nnn files, this is a highly efficient way to save storage space. This setup also interfaces directly with the inverse modeling package FLEXINVERT (Thompson and Stohl, 2014). An application can be found in Thompson et al. (2017) wherein initial conditions were taken from a gridded observation product. A further output option, which was also introduced for practical considerations of inverse modeling, is the LINVERSIONOUT option in the file COMMAND. If LINVERSIONOUT is set to 1, then the grid_time_date_nnn and grid_initial_nnn files are written per release with a time dimension of footprints instead of the default per footprint with a time dimension of releases. Since inverse modeling assimilates atmospheric observations and each observation is represented by a single release, it is computationally more efficient to read in the grid files separated by release. This output format also interfaces directly with FLEXINVERT.

2.7 Chemical reactions with the hydroxyl radical (OH)

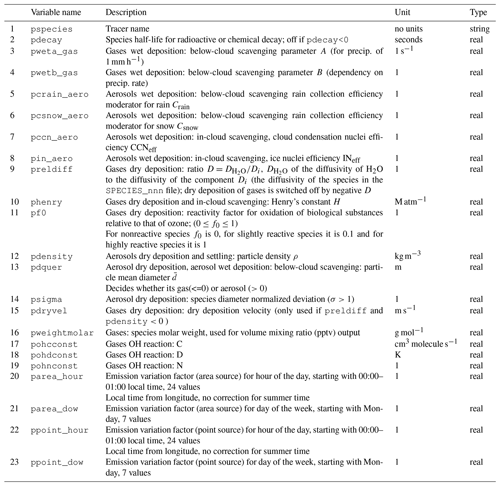

The hydroxyl (OH) radical reacts with many gases and is the main cleansing agent in the atmosphere. While it is involved in highly nonlinear atmospheric chemistry, for many substances (e.g., methane) a simplified linear treatment of loss by OH is possible using prescribed OH fields. For this, monthly averaged resolution OH fields for 17 atmospheric layers are used in FLEXPART. The fields were obtained from simulations with the GEOS-Chem model (Bey et al., 2001) and are read from the file OH_variables.bin by the subroutine readOHfield.f90.

Tracer mass is lost by reaction with OH if a positive value for the OH reaction rate is given in the file SPECIES_nnn. In FLEXPART v10.4, the OH reaction scheme was modified to account for (i) hourly variations in OH and (ii) the temperature dependence of the OH reaction rate (Thompson et al., 2015). This makes the chemical loss calculations more accurate, especially for substances with shorter lifetimes (of the order of weeks to months), for example ethane. Hourly OH fields are calculated from the stored monthly fields by correcting them with the photolysis rate of ozone calculated with a simple parameterization for cloud-free conditions based on the solar zenith angle (gethourlyOH.f90):

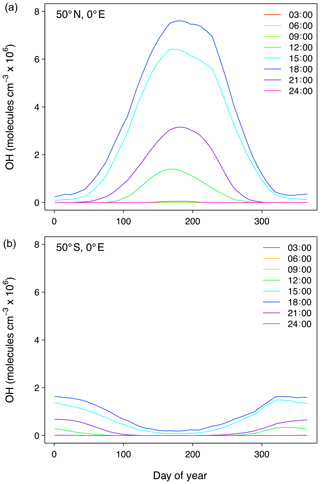

where j represents the hourly photolysis rates calculated for all 3-D locations in the field, while j* represents the corresponding monthly mean rates, precalculated and stored in file OH_variables.bin together with the monthly mean fields OH* (see Sect. 5.1.8). The motivation for this is that OH production closely follows the production of O(1D) by the photolysis of ozone, allowing for this simple parameterization of OH variability. At any time, two hourly OH fields are in memory and are interpolated to the current time step. Figure 5 shows the annual and daily variation of OH for two locations as obtained with this simple parameterization.

Figure 5Annual and daily OH concentration variation as obtained with the simple parameterization based on photolysis rates of ozone for two locations, one in the Northern Hemisphere (a) and one in the Southern Hemisphere (b). Line labels correspond to the time of day.

The OH reaction rate κ (s−1) is calculated in ohreaction.f90 using the temperature-dependent formulation

where C, N and D are species-specific constants (assigned in the SPECIES_nnn files), T is the absolute temperature, and [OH] the OH concentration (Atkinson, 1997). As the OH concentration in file OH_variables.bin is given in units of molecules per cubic centimeter, the unit of C needs to be in cubic centimeters per molecule per second (cm3 molecule−1 s−1). The mass m of a given species after reaction with OH is determined as

where Δt′ is the reaction time step (given by lsynctime).

Backwards compatibility with the former temperature-independent specification of the OH reaction (version 9 and before) can be achieved by setting the constant N in the SPECIES_nnn file to zero. The constants C and D can be derived from the former parameters as follows:

and

where A is the activation energy, R is the gas constant and κr is the former OH reaction rate (referring to Tr=298 K), which were specified in the SPECIES_nnn file for earlier versions.

OH fields other than those provided with the model code have been tested in FLEXPART. These fields may have higher spatial and temporal resolution (e.g., Fang et al., 2016), which is important for chemical species with short lifetimes. Users are required to modify readOHfield.f90 and gethourlyOH.f90 to read in other OH fields and be aware that expressions of the OH reaction rate or reaction with OH might differ from those in the above equations. If this is the case users need to modify ohreaction.f90, too.

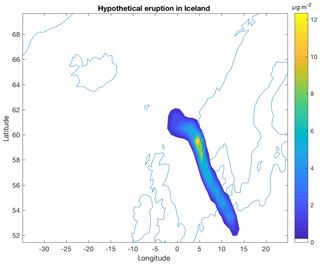

2.8 Dust mobilization scheme

Desert dust is a key natural aerosol with relevance for both climate and air quality. FLEXPART has been used earlier with preprocessors to initialize dust amounts from wind speed and surface properties following Tegen and Fung (1994) (Sodemann et al., 2015). Now a dust mobilization routine has been included as a preprocessing tool in FLEXPART v10.4. The scheme, called FLEXDUST, was developed to simulate mineral dust transport with FLEXPART in forward or backward simulations (Groot Zwaaftink et al., 2016). This module runs independently from FLEXPART and produces gridded output of mineral dust emissions as well as input files (RELEASES) that can be used for FLEXPART simulations of atmospheric transport. It can thus be considered a preprocessing (for forward simulations) or post-processing tool (for backward simulations) for FLEXPART v10.4.

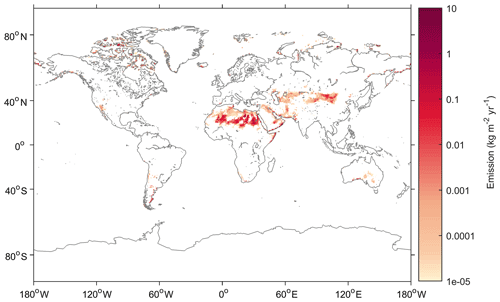

In FLEXDUST, emission rates are estimated according to the emission scheme proposed by Marticorena and Bergametti (1995). We thereby assume that sandblasting occurs in the case that sand is present and a minimum threshold based on the size-dependent threshold friction velocity following Shao and Lu (2000) can be applied. The following are used as input for the model: ECMWF operational analysis or ERA-Interim reanalysis data, Global Land Cover by National Mapping Organizations version 2 (Tateishi et al., 2014), and and sand and clay fractions from the Global Soil Data Task (2014). Erodibility is enhanced in topographic depressions, and dust emissions are modified by soil moisture and snow cover. The module includes high-latitude dust sources in the Northern Hemisphere. These sources are rarely included in global dust models, even though they appear important for the climate system and substantially contribute to dust in the Arctic (Bullard et al., 2016; Groot Zwaaftink et al., 2016). Icelandic deserts in particular are known to be highly active, and a high-resolution surface type map for Iceland can therefore be included in FLEXDUST simulations (Arnalds et al., 2016; Groot Zwaaftink et al., 2017). Like in FLEXPART, nested meteorological fields can be used for specific regions of interest. The size distribution of emitted dust follows Kok (2011), is independent of friction velocity and is by default represented by 10 size bins. This can be changed depending on known properties or assumptions of dust sources. The dust particles are assumed to be spherical in FLEXPART. An example of annual mean dust emissions from 1990 to 2012 calculated with FLEXDUST driven with ERA-Interim meteorology is shown in Fig. 6. Further details on FLEXDUST, including model evaluation, are given by Groot Zwaaftink et al. (2016). The source code is available from the git repository: https://git.nilu.no/christine/flexdust.git (last access: 30 October 2019).

Figure 6Average annual dust emission for the period 1990–2012 estimated with FLEXDUST driven with ERA-Interim meteorology.

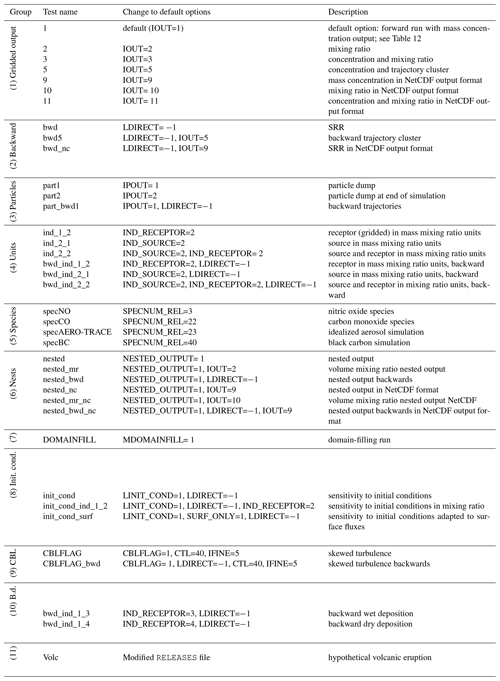

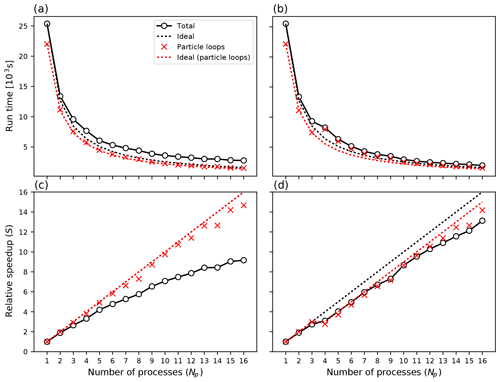

Figure 7Computational time (a, b) and speedup (c, d) for up to 16 processes on a single node. In panels (a, c), all processes read meteorological input data, whereas in panels (b, d), a dedicated process reads and distributes input data for Np≥4.

In a Lagrangian model like FLEXPART, particles move totally independently of each other. This facilitates efficient parallelization of the code. The most simple and often most effective way is running several instances of the model in parallel. For example, if the model is to be run backwards (for 10 d, for example) at regular intervals from a measurement site for a year, one could run the model separately, in parallel, for monthly subperiods. The total computation time of the 12 monthly processes together is nearly the same as if the model is run as one process for the whole year. Some overhead in processing input data occurs because, in the above example, 10 extra days of data per process are needed to calculate trajectories 10 d back into the preceding month. One disadvantage of that approach is that the memory needed for holding the meteorological input data and the model output fields is multiplied. However, this overhead is often small; thus, this approach has been used very often by FLEXPART users in the past.

Even if a task cannot easily be decomposed into runs for different periods or sources, trivial parallelization is still possible if a large number of particles is desired, for example in a domain-filling simulation for which tens of millions of particles may be used. The strategy in this case would be to assign a fraction of the particles to each run. Note that different random seeds should be used for each run, which requires a manual change and recompilation of the code.

As a user-friendly alternative, FLEXPART v10.4 has been parallelized using standard parallelization libraries. Common parallelization libraries are Open Multi-Processing (OpenMP; http://www.openmp.org/, last access: 30 October 2019), which is designed for multicore processors with shared memory, and Message Passing Interface (MPI, 2015) for distributed memory environments. Examples of other Lagrangian particle models that have been parallelized are NAME (Jones et al., 2007) and FLEXPART–WRF (Brioude et al., 2013), which use a hybrid approach (OpenMP+MPI). For FLEXPART v10.3 we decided to use a pure MPI approach for the following reasons.

-

It is simpler to program than a hybrid model and more flexible than a pure OpenMP model.

-

While OpenMP in principle may be more effective in a shared memory environment, MPI can often perform equally well or better provided there is not excessive communication between the processes.

-

MPI offers good scalability and potentially low overhead when running with many processes.

3.1 Implementation

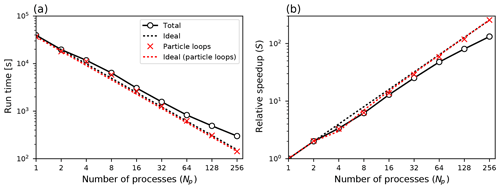

The FLEXPART code contains several computational loops over all the particles in the simulation, which is where most of the computational time is spent for simulations with many particles. The basic concept behind our parallel code closely resembles the “trivial parallelization” concept described above. When launched with a number of processes, Np, each process will separately calculate how many particles to release per location, attempting to achieve an approximately even distribution of particles among the processes while keeping the total number of particles the same as for a simulation with the serial version. Each running process will generate an independent series of random numbers and separately calculate trajectories and output data for its set of particles. Explicit communications between processes are only used when the output fields are combined at the master process (MPI rank 0) using MPI_Reduce operations, before writing the output. Also, in the case in which the output of all individual particle properties is desired (option IPOUT1 = 1 or 2 in file COMMAND), we let each process append its data to the same file. We thus avoid the costly operation of transferring particle properties between processes. The performance of the implementation is discussed in Sect. 3.2 (see Fig. 7).

Some parts of the code are not simply loops over particles, most notably the routines for reading and transforming the input meteorological data. It follows that the performance gain of using parallel FLEXPART in general is better for simulations with a larger number of particles. We have, however, implemented a feature whereby instead of having each MPI process read and process the same input data, one dedicated MPI process is set aside for this purpose. When the simulation time t lies in the interval between wind field time Ti and Ti+1, all other processes calculate particle trajectories, while this dedicated process ingests input fields from time Ti+2. At simulation time the dedicated “reader process” will distribute the newest data to the other processes and immediately start reading fields for time Ti+3, while the other processes continue doing trajectory calculations. A hard-coded integer (read_grp_min in file mpi_mod.f90) is used to set the minimum number of total MPI processes for which this separate process will be reserved for reading input data. For the examples shown in Sect. 3.2 a value of 4 was used (Figs. 7 and 8).

3.2 Performance aspects

To assess the performance of the parallel code we performed three scaling experiments of various size on different computational platforms.

3.2.1 40 million particles, single 16-core node

In the following we present the results from running the code on a machine equipped with an Opteron 6174 processor with 16 cores. Compilation was done using gfortran version 4.9.1 and OpenMPI version 1.8.3. For the experiment, 40 million particles were released and propagated 48 h forward in time. We ran with this setup with an increasing number of processes, from 1 to 16. All time measurements in the code were made with the MPI_wtime() subroutine.

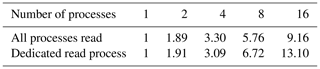

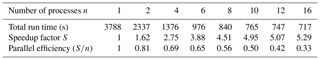

For the first experiment, every process separately processed the meteorological input data. Figure 7a and c show the CPU time Tn used in the case of n processes and the relative speedup factor . Time and speedup shown for “particle loops” includes the three most computationally demanding particle loops (integration of the Langevin equation, wet deposition and concentration calculations), but, in addition, FLEXPART contains a few smaller loops over particles that exhibit similar performance improvements. We see that for 40 million particles, the loops over particles take the largest share, at least 87 % of the total time when run with one process. Close-to-perfect speedup is expected and observed for these loops (compare results for “particle loops” and “ideal (particle loops)” in Fig. 7a and c). The major bottleneck for overall performance in this case is that each process reads the same input files from disk, thus forcing the others to wait. This bottleneck causes the speedup to deviate substantially from the ideal situation when more than a few processes are used (compare results for “total” and “ideal” in Fig. 7a and c).

Next we repeated the experiment above but set aside a dedicated process for reading the meteorological data whenever n≥4. The results are shown in Fig. 7b and d. Numerical values for the speedup factors for selected numbers of processes are given in Table 3. We observed that with n≥7 there was consistently a benefit to setting aside the dedicated reader process, whereas for n<7 it was more effective to have all processes read data and thus an extra process available for doing the trajectory calculations. These results will of course vary with the resolution of the input data, the number of particles and the system on which the program is run.

3.2.2 500 million particles, multiple 16-core nodes

We performed a larger-scale experiment at the Abel computer cluster1 using up to 256 cores on 16 nodes with Intel Xeon E5-2670 CPUs. For each node, up to 16 cores were used, and then the number of nodes was determined by the total number of processes launched. The FLEXPART setup was similar to the previous single-node experiment, but we increased the number of particles to 500 million and reduced the simulated time to 12 h. Compilation was done with Intel Fortran v16.0.1 and OpenMPI v1.10.2.

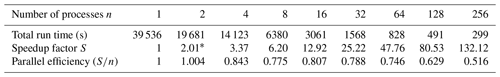

Run time and speedup factors are shown in Table 4 and Fig. 8. As before we see essentially perfect speedup of the computationally intensive parts (the particle loops), which is expected. Table 4 also gives the parallel efficiency, which is seen decreasing for larger Np. This is partly due to the increased cost of MPI communications and also because the nonparallel parts of the code have relatively higher impact. With 256 processes there are only about 2 million particles per process and the CPU time is not as clearly dominated by the particle loops as when 500 million particles all run in one process. In addition, the initialization of the code (allocation of arrays, reading configuration files) takes around 20 s for this run, which is significant given a total run time of 299 s. Thus, parallel efficiency would increase for longer simulation times and/or for simulations with more particles per process, i.e., realistic cases that are more likely to be run with such a large number of processes.

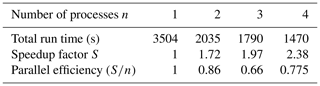

3.2.3 900 000 particles, laptop and single 16-core node

Finally, we examined a small-scale experiment in which we released 900 000 particles and simulated 15 d of transport. The performance was tested on two systems; a ThinkPad P52s laptop (Intel i7-8550U CPU with four cores; results in Table 5) and a machine equipped with an AMD Opteron 6386 SE processor (16 cores; results in Table 6). With this relatively lower number of particles it is not surprising to see that the parallel efficiency is lower than in the preceding examples. Still, we see that a speedup of 2.38 on a 4-core laptop and 5.25 on a 16-core machine is attainable. We also note that for practical applications, users would likely use the serial version for applications with so few particles and, if there are many such runs to be done, use trivial parallelization by submitting many separate serial runs in parallel. The parallelization feature is most useful for cases with a very large number of particles that cannot so easily be split in many separate runs, such as domain-filling simulations.

3.3 Validation

In order to ensure that the parallel version produces results with the same accuracy as the serial version, we have performed a set of tests and validation experiments. A direct comparison between the versions can only be performed in statistical terms because FLEXPART uses Gaussian-distributed random numbers for calculating the turbulent velocities of the particles. For the parallel version we let each process independently calculate a set of random numbers, which leads to small numeric differences (arising from the random “noise”) between the versions.

To confirm that the only source of differences between the serial and parallel code is in the random number generation, we first observe that when the parallel executable is run using only one process, it produces results identical to the serial version. This is as expected, as the first MPI process (rank 0) always uses the same random number seeds as the serial version.

Next, we have done tests in which all random numbers are set to zero in both codes, corresponding to switching off the turbulent displacements, and we run the parallel version using multiple processes. The outputs from the serial and parallel versions of the code when run this way are identical except for small differences due to round-off errors (e.g., in concentration calculations – these round-off errors are typically larger in the serial version due to the larger number of particles).

FLEXPART is usually used in a Linux environment, which we also assume for the following instructions. However, the model has also been implemented successfully under MacOS and MS Windows. The default Fortran compiler for FLEXPART v10.4 is gfortran, but ifort, Absoft and PGI compilers have been used as well.

4.1 Required libraries and FLEXPART download

As the meteorological data from numerical weather prediction models are usually distributed in GRIB format, a library for reading GRIB data is required. It is recommended to use ecCodes (https://software.ecmwf.int/wiki/display/ECC, last access: 30 October 2019), the primary GRIB encoding–decoding package used at the ECMWF (recent enough versions of its predecessor grib_api, no longer supported after 2018, can also be used). Data in GRIB-2 format can be compressed. If this is the case for the input data, the jasper library is needed2. If it is desired to produce FLEXPART output in the NetCDF format, the NetCDF Fortran Library (https://www.unidata.ucar.edu/software/netcdf/, last access: 30 October 2019) is also required.

In order to obtain the FLEXPART source code, download the appropriate v10.4 tarball from the FLEXPART website3 and unpack it.

tar -xvf flexpart10.4.tar

To obtain the latest available model version, clone the FLEXPART git repository from the FLEXPART community website.

git clone https://www.flexpart.eu/ gitmob/flexpart

This repository mirrors https://transport.nilu.no (https://git.nilu.no/flexpart/flexpart/-/releases, last access: 18 November 2019). Additional mirrors exist, e.g., at GitHub (https://github.com/flexpart/flexpart, last access: 30 October 2019) and BitBucket (https://bitbucket.org/flexpart/flexpart, last access: 30 October 2019). See the “Code and data availability” section for additional information. After unpacking the tarball or cloning the repository, a local directory structure as shown in Table 1 is created.

The directory src contains the code and a makefile. The makefile needs to be adapted to the compiler and libraries present on the local system. Appendix A4 describes these steps in detail, including manual installation of the libraries. This was tested for Ubuntu 16.04.3 as well as 18.04.3 LTS Linux and MacOS (OS versions 14.5.0 and 18.6.0). Both a serial and a parallel executable can be built from the FLEXPART v10.4 source files.

4.2 Compiling and running the serial version

After correctly setting the library paths in the makefile, the command make produces the executable called FLEXPART. It can be executed from the command line by ./FLEXPART and then expects a file pathnames to exist in the current working directory. This file contains information on where input data are located and where output data shall be produced (see Sect. 5). Note that pathnames is expected in the directory from which FLEXPART is started, which can be different from where the executable file is located. A different name of a pathnames file can be also given as an argument. FLEXPART can thus be invoked according to the following generic syntax:

path_to_flexpart/flexpart_executable path_to_pathnames/pathnames_file.