the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

The Parcels v2.0 Lagrangian framework: new field interpolation schemes

Philippe Delandmeter

Erik van Sebille

With the increasing number of data produced by numerical ocean models, so increases the need for efficient tools to analyse these data. One of these tools is Lagrangian ocean analysis, where a set of virtual particles is released and their dynamics are integrated in time based on fields defining the ocean state, including the hydrodynamics and biogeochemistry if available. This popular methodology needs to adapt to the large variety of models producing these fields at different formats.

This is precisely the aim of Parcels, a Lagrangian ocean analysis framework designed to combine (1) a wide flexibility to model particles of different natures and (2) an efficient implementation in accordance with modern computing infrastructure. In the new Parcels v2.0, we implement a set of interpolation schemes to read various types of discretized fields, from rectilinear to curvilinear grids in the horizontal direction, from z to s levels in the vertical direction and using grid staggering with the Arakawa A, B and C grids. In particular, we develop a new interpolation scheme for a three-dimensional curvilinear C grid and analyse its properties.

Parcels v2.0 capabilities, including a suite of meta-field objects, are then illustrated in a brief study of the distribution of floating microplastic in the northwest European continental shelf and its sensitivity to various physical processes.

- Article

(8330 KB) - Full-text XML

-

Supplement

(92368 KB) - BibTeX

- EndNote

Numerical ocean modelling has evolved tremendously in the past decades, producing more accurate results, with finer spatial and temporal resolutions (Prodhomme et al., 2016). With the accumulation of very large data sets resulting from these simulations, the challenge of ocean analysis has grown. Lagrangian modelling is a powerful tool to analyse flows in several fields of engineering and physics, including geophysics and oceanography (van Sebille et al., 2018).

While Lagrangian modelling can be used to simulate the flow dynamics itself (e.g. Monaghan, 2005), most of the modelling effort in geophysical fluid dynamics is achieved with an Eulerian approach. Lagrangian methods can, in turn, be used to analyse the ocean dynamic given the flow field from an Eulerian model. The flow field can also be taken from land-based measurement such as high-frequency radar (Rubio et al., 2017) and satellite imagery that measures altimetry (Holloway, 1986) or directly computes the currents using Doppler radar (Ardhuin et al., 2018).

Lagrangian analysis simulates the pathways of virtual particles, which can represent water masses, tracers such as temperature, salinity, or nutrients, or particulates like seagrass (e.g. Grech et al., 2016), kelp (e.g. Fraser et al., 2018), coral larvae (e.g. Thomas et al., 2014), plastics (e.g. Lebreton et al., 2012; Onink et al., 2019), fish (e.g Phillips et al., 2018), icebergs (e.g. Marsh et al., 2015), etc. It is used at a wide range of temporal and spatial scales, e.g. for the modelling of plastic dispersion, from coastal applications (e.g. Critchell and Lambrechts, 2016) to regional (e.g. Kubota, 1994) or global scales (e.g. Maximenko et al., 2012).

The method consists in advancing, for each particle, the coordinates and other state variables by first interpolating fields of interest, such as the velocity or any tracer, at the particle position and integrating in time the ordinary differential equations defining the particle dynamics.

A number of tools are available to track virtual particles, with diverse characteristics, strengths and limitations, including Ariane (Blanke and Raynaud, 1997), TRACMASS (Döös et al., 2017), CMS (Paris et al., 2013) and OpenDrift (Dagestad et al., 2018). An extensive list and description of Lagrangian analysis tools is provided in van Sebille et al. (2018). One of the tools is Parcels.

Parcels (“Probably A Really Computationally Efficient Lagrangian Simulator”) is a framework for computing Lagrangian particle trajectories (http://www.oceanparcels.org, last access: 10 March 2019, Lange and van Sebille, 2017). The main goal of Parcels is to process the continuously increasing number of data generated by the contemporary and future generations of ocean general circulation models (OGCMs). This requires two important features of the model: (1) not to be dependent on one single format of fields and (2) to be able to scale up efficiently to cope with up to petabytes of external data produced by OGCMs. In Lange and van Sebille (2017), the concept of the model was described and the fundamentals of Parcels v0.9 were stated. Since this version, the model essence has remained the same, but many features were added or improved, leading to the current version 2.0. Among all the developments, our research has mainly focused on developing and implementing interpolation schemes to provide the possibility to use a set of fields discretized on various types of grids, from rectilinear to curvilinear in the horizontal direction, with z or s levels in the vertical direction, and using grid staggering with A, B and C Arakawa staggered grids (Arakawa and Lamb, 1977). In particular an interpolation scheme for curvilinear C grids, which was not defined in other Lagrangian analysis models, was developed for both z and s levels.

In this paper, we detail the interpolation schemes implemented into Parcels, with special care in the description of the new curvilinear C-grid interpolator. We describe the new meta-field objects available in Parcels for easier and faster simulations. We prove some fundamental properties of the interpolation schemes. We then validate the new developments through a study of the sensitivity of floating microplastic dispersion and 3-D passive particle distribution on the northwest European continental shelf and discuss the results.

To simulate particle transport in a large variety of applications, Parcels relies on two key features: (1) interpolation schemes to read external data sets provided on different formats and (2) customizable kernels to define the particle dynamics.

The interpolation schemes are necessary to obtain the field value at the particle location. They have been vastly improved in this latest version 2.0. Section 2.1 describes the interpolation of fields and Sect. 2.2.1 their structure in Parcels.

The kernels are already available since the earliest version of Parcels (Lange and van Sebille, 2017). They are the core of the model, integrating particle position and state variables. The built-in kernels and the development achieved are briefly discussed in Sect. 2.2.2.

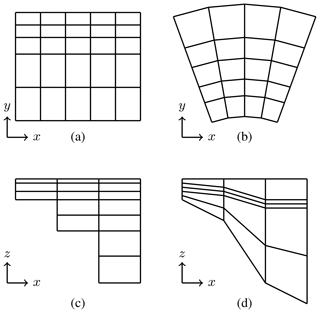

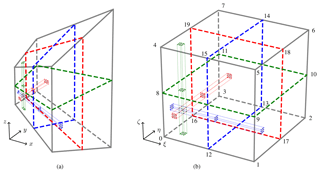

2.1 Field interpolation

External data sets are provided to Parcels as a set of fields. Each field is discretized on a structured grid that provides the node locations and instants at which the field values are given. It is noteworthy that the fields in a field set are not necessarily based on the same grid. In the horizontal plane, rectilinear (Fig. 1a) and curvilinear (Fig. 1b) grids are implemented. Three-dimensional data are built as the vertical extrusion of the horizontal grid, using either z levels (Fig. 1c) or s levels (Fig. 1d). A three-dimensional mesh is a combination of a rectilinear or a curvilinear in the horizontal direction mesh with either z or s levels in the vertical. Another class of meshes are the unstructured grids (e.g. Lambrechts et al., 2008), which are not yet supported in Parcels.

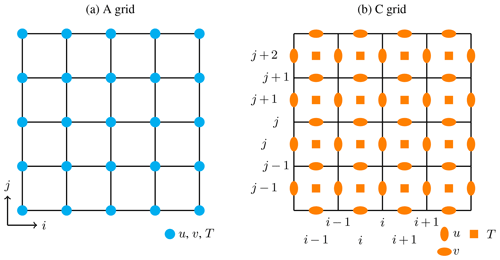

Fields can be independent from each other (e.g. water velocity from one data set and wind stress from a different data set) and interpolated separately. Often, fields come from the same data set, for example when they result from a numerical model, and form a coherent structure that must be preserved in Parcels; an example is the zonal and meridional components of the velocity field. A coherent field structure is discretized on the same grid, but the variables are not necessarily distributed evenly, leading to a so-called staggered grid (Arakawa and Lamb, 1977). The various types of staggered grids have their pros and cons (Cushman-Roisin and Beckers, 2011). Parcels reads the more popular staggered grids occurring in geophysical fluid dynamics: A, B and C grids. While A and C are fundamentally different (Fig. 2), the B grid can be considered a hybrid configuration in between the A and C grids. D and E grids are less common and are not implemented in the framework so far. The methods to interpolate fields on the A, C and B grids are presented in this section.

Figure 1 Grid discretizations processed by Parcels. In the horizontal plane: (a) rectilinear, (b) curvilinear; in the vertical: (c) z levels and (d) s levels. Four combinations of horizontal and vertical grids are possible to form a three-dimensional mesh.

2.1.1 A grid

The A grid is the un-staggered Arakawa's grid: zonal velocity (u), meridional velocity (v) and tracers (T) are collocated (Fig. 2a). This grid is used in many reanalysis data sets for global currents (e.g. Globcurrent, Rio et al., 2014) or tidal dynamics (e.g. TPXO, Egbert and Erofeeva, 2002), as well as regridded products such as the OGCM for the Earth simulator (OFES; Sasaki et al., 2008) or data sets on platforms like the Copernicus Marine Environment Monitoring Service. In this section we first consider the two-dimensional case before describing three-dimensional fields.

2-D field

In a two-dimensional context, field f is interpolated in cell (j,i) where the particle is located, with the bilinear Lagrange polynomials and the four nodal values Fn with , surrounding the cell, resulting in the following expression:

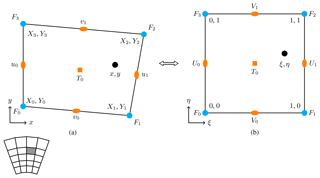

ξ, η are the relative coordinates in the unit cell (Fig. 3b), corresponding to the particle-relative position in the physical cell (Fig. 3a). (Xn,Yn) are the coordinates of the cell vertices. The two-dimensional Lagrange polynomials are the bilinear functions

Note that in a rectilinear mesh, solving Eq. (2) reduces to the usual solutions:

Figure 2 Arakawa's staggered grids (Arakawa and Lamb, 1977): (a) A grid and (b) C grid. In the C grid, i and j represent the variable column and row indexing in arrays where the variables are stored. The indexing of the C grid follows the NEMO notations (Madec and the NEMO team, 2016).

3-D field

To read three-dimensional fields, for both z and s levels, the scheme interpolates the eight nodal values located on the hexahedron vertices using the trilinear Lagrangian polynomials :

ξ and η are obtained as in the 2-D case, using the first four vertices of the hexahedron. The vertical relative coordinate is obtained as

with

The interpolation results in

2.1.2 C grid

In a C-grid discretization, the velocities are located on the cell edges and the tracers are at the middle of the cell (Fig. 2b). One could attempt to interpolate zonal and meridional velocities as if they would be on two different A grids, shifted from half a cell, but this would not be in accordance with the C-grid formulation: in particular, such an interpolation would break impermeability at the coastal boundary conditions. Instead, we use the interpolation principle based on the scheme used in the Lagrangian model TRACMASS (Jönsson et al., 2015; Döös et al., 2017), but generalized to curvilinear meshes.

The tracer is computed as a constant value all over the cell, in accordance with the mass conservation schemes of C grids. The formulations for the two-dimensional and three-dimensional velocities consist of four steps:

-

define a mapping between the physical cell and a unit cell (as for the A grid, Fig. 3);

-

compute the fluxes on the unit cell interfaces, as a function of the velocities on the physical cell interfaces;

-

interpolate those fluxes to obtain the relative velocity;

-

transform the relative velocity to the physical velocity.

Step 1 consists in applying Eq. (2) for the 2-D case and Eqs. (2) and (4) for 3-D fields. The other three steps are defined below, for both 2-D and 3-D fields.

2-D field

The velocities at the edges of cell (j, i) are (, , , ) (Fig. 2b). This indexing was chosen to be consistent with the notation used by the NEMO model (Madec and the NEMO team, 2016). For readability, they will be renamed in this section to (u0, u1, v0, v1), using local indices (Fig. 3a) instead of the global indices . The velocity at (x, y) is not obtained by linear interpolation but, like in finite-volume schemes, it is approximated by linearly interpolating the fluxes (U0, U1, V0, V1) through the cell edges (Fig. 3b), which read (Step 2)

where L03, L12, L01 and L23 are the edge lengths. Secondly, J2-D(ξ,η), the Jacobian matrix of the transformation from the physical cell to the unit cell (Fig. 3), is defined:

The determinant of the Jacobian matrix, which will be called Jacobian, is computed: . The Jacobian defines the ratio between an elementary surface in the physical cell and the corresponding surface in the unit cell. The relative velocity in the unit cell is defined as (Step 3)

Finally (Step 4), the velocity is obtained by transforming the relative velocity (Eq. 9) to the physical coordinate system

3-D field

The three-dimensional interpolation on C grids is different for z and s levels.

For z levels, the horizontal and vertical directions are independent. The horizontal velocities in cell (k, j, i) are thus interpolated as in the 2-D case, using the data at level k: (, , , ), and the vertical velocity is interpolated as

with , and . The 3-D indexing is again consistent with NEMO notation.

For s levels, the three velocities must be interpolated at once. The three-dimensional interpolation is similar to its two-dimensional version, but it is not the straightforward extension, which would linearly interpolate the fluxes as in Eq. (9) and divide this result by the Jacobian. Indeed, in 2-D the interpolation scheme is built specifically such that a uniform velocity field is exactly interpolated by Eq. (10) (see demonstration in Sect. 3.1). This is made possible since when developing the right-hand side of Eq. (10), one obtains a numerator which is a bilinear function of ξ and η and a denominator which is the Jacobian, precisely bilinear in ξ and η. Following a similar approach in 3-D would lead to a trilinear numerator, but the Jacobian J3-D is a tri-quadratic function of the coordinates ξ, η and ζ:

The interpolation order must then be increased as well to a quadratic function. Here, as in 2-D, the velocities u, v and w are still derived from the coordinate transformation (Step 4):

but this time the relative velocities interpolate the fluxes using quadratic Lagrangian functions (Step 3):

Figure 4 Fluxes used for 3-D interpolation on a C grid for (a) the physical cell and (b) the unit cell. The node indices in (b) are used to define the faces in Eq. (16).

Step 2 is more complex than in the 2-D case. The fluxes interpolated in Eq. (14) are the product of the velocities with the face Jacobian , as illustrated in Fig. 4. The Jacobian is defined as follows (Weisstein, 2018):

with Mj,i(J3-D), the j,i minor of J3-D, i.e. the determinant of the Jacobian matrix J3-D from which row j and column i were removed, leading to a 2×2 matrix. Fluxes through a face that is normal to ξ, η or ζ in the unit cell are computed with Jacobian , or , respectively. Using the node indices defined in Fig. 4b, the Jacobians and their respective fluxes then read

It is important to note another difference between the 2-D and the 3-D approaches here. While in 2-D, the fluxes were simply the product of the velocity and the edge length and were independent from ξ and η, this is not the case in 3-D anymore. The Jacobian is a function of the relative coordinates. This is because an interface will not be evenly distorted from the physical to the unit cell. In fact, the 2-D is a particular case of the 3-D, where the edge length corresponds to the edge Jacobian, which is independent of the relative coordinates.

Finally, the velocities u0, u1, v0, v1, w0 and w1 are provided by the grid, but this is not the case for u½, v½ and w½. For ocean applications under the Boussinesq approximation, mass conservation is reduced to volume conservation (Cushman-Roisin and Beckers, 2011), leading to the continuity equation. Using this equation, the flux through faces (in blue in Fig. 4), (in red) and (in green) can be computed if the mesh is fixed. Otherwise, for example with vertically adaptive grids, the change in volume of the mesh must be considered, which is currently not implemented in Parcels. Ignoring this term in vertically adaptive grids would lead to errors on the order of 1 % (Kjellsson and Zanna, 2017).

Here we write the development for face . The two other faces follow the same process. Let us consider the hexahedron ; the Jacobians referring to it will be noted J*. The flux going through reads

from which flux U½ can be computed:

then corresponds to the flux through the physical face and U½ is the flux that should be interpolated, which results from transformed to u½, the velocity at the physical face, before computing the flux in the relative cell interface. It is noteworthy that .

For compressible flows, fluxes , and cannot be computed using only half of the hexahedron. But since density is constant throughout the entire element, the actual flux can be computed as the average between the flux going through face using hexahedron and the one using hexahedron .

2.1.3 B grid

The B grid is a combination between the A and C grids. It is used by OGCMs such as MOM (Griffies et al., 2004) or POP (Smith et al., 2010).

For two-dimensional fields, the velocity nodes are located on the cell vertices as in an A grid and the tracer nodes are at the centre of the cells as in a C grid. The velocity field is thus interpolated exactly as for an A grid (Eq. 1), and the tracer is like in a C grid, constant over the cell.

For a three-dimensional cell, the tracer node is still at the centre of the cell and the field is constant; the four horizontal velocity nodes are located at the middle of the four vertical edges; the two vertical velocity nodes are located at the centre of the two horizontal faces (Wubs et al., 2006). The horizontal component of the interpolated velocity in the cell thus only varies as a function of ξ and η and the vertical component is a function of only ζ:

with the local indices (u0, u1, u2, u3) corresponding to global indices (, , , ) and similarly for the v field. w0 and w1 correspond to and and tracer t0 to . In Parcels v2.0, this field interpolation is only available for z levels.

2.2 Implementation into Parcels

2.2.1 Fields

General structure

Parcels relies on a set of objects, combined in a FieldSet, to interpolate different quantities at the particle location.

As explained in Sect. 2.1, a field is discretized on a grid. In Parcels v2.0, four grid objects are defined: RectilinearZGrid, RectilinearSGrid, CurvilinearZGrid and CurvilinearSGrid.

The main variables of the grids are the time, depth, latitude and longitude coordinates. Longitude and latitude are defined as vectors for rectilinear grids and 2-D arrays for curvilinear ones. The depth variable is defined as a vector for z-level grids. A two-dimensional horizontal grid is simply a RectilinearZGrid or a CurvilinearZGrid in which the depth variable is empty. For s levels, the depth is defined as a 3-D array or a 4-D array if the grid moves vertically in time. The time variable can be empty for steady-state fields. Note that models such as HYCOM using hybrid grids combining z and σ levels are treated by Parcels as general RectilinearSGrid or CurvilinearSGrid objects, for which the depth of the s levels is provided.

A Field has an interp_method attribute, which is set to linear for fields discretized on an A grid,

bgrid_velocity, bgrid_w_velocity, and bgrid_tracer for horizontal and vertical velocity and tracer fields on a B grid, and cgrid_velocity and cgrid_tracer for velocity and tracer on a C grid. Note that a nearest interpolation method is also available, which should not be used for B and C grids.

Parcels can load field data from various input formats. The most common approach consists in reading netCDF files using the FieldSet.from_netcdf() method or one of its derivatives. However, Python objects such as xarray or numpy arrays can also be loaded using FieldSet.from_xarray_dataset() or FieldSet.from_data(), respectively.

Loading a long time series of data often requires a significant memory allocation, which is not always available on the computer. The previous Parcels version circumvented the problem by loading the data step by step. Using the deferred_load flag, which is set by default, this process is fully automated in v2.0 and allows the use of long time series while under the hood the time steps of the data are loaded only when they are strictly necessary for computation.

Meta-field objects

In Parcels, a variety of other objects enable us to easily read a field. In this section, we describe the new objects recently added to the framework.

The first object is the VectorField, which jointly interpolates the two or three components of a vector field such as velocity. This object is not only convenient but necessary since u and v fields are both required to interpolate the zonal and meridional velocity in the C-grid curvilinear discretization.

Another useful object is the SummedField, which is a list of fields that can be summed to create a new field. The fields of the SummedField do not necessarily share the same grid. For example, this object can be used to create a velocity which is the sum of surface water current and Stokes drift. This object has no other purpose than greatly simplifying the kernels defining the particle dynamics.

The fields do not necessarily have to cover the entire region of interest. If a field is interpolated outside its boundary, an ErrorOutOfBounds is raised, which leads to particle deletion except if this error is processed through an appropriate kernel.

A sequence of various fields covering different regions, which may overlap or not, can be interpolated under the hood by Parcels with a NestedField. In this case, the fields composing the NestedField must represent the same physical quantity.

The NestedField fields are ranked to set the priority order in which they must be interpolated.

Available data are not always provided with the expected units. The most frequent example is the velocity given in metres per second while the particle position is in degrees. The same problem occurs with diffusivities in square metres per second. UnitConverter objects allow to convert automatically the units of the data. The two examples mentioned above are defined into Parcels and other UnitConverter objects can be implemented by the user for other transformations.

2.2.2 Kernels

The kernels define the particle dynamics (Lange and van Sebille, 2017).

Various built-in kernels are already available in Parcels.

AdvectionRK4, AdvectionRK45 and AdvectionEE implement the Runge–Kutta 4, Runge–Kutta–Fehlberg and explicit Euler integration schemes for advection.

While other explicit discrete time schemes can be defined, analytical integration schemes (Blanke and Raynaud, 1997; Chu and Fan, 2014) are not yet available in Parcels.

BrownianMotion2D and SpatiallyVaryingBrownianMotion2D implement different types of Brownian motion available as kernels. Custom kernels can be defined by the user for application-dependent dynamics.

The kernels are implemented in Python but are executed in C for efficiency (Lange and van Sebille, 2017) even if a full Python mode is also available. However, the automated translation of the kernels from Python to C somehow limits the freedom in the syntax of the kernels. For advanced kernels, the possibility to call a user-defined C library is available in version 2.0.

3.1 Uniform velocity on a 2-D C grid

In this section, we prove that the C-grid interpolation exactly preserves a uniform velocity in a quadrilateral. To do so, let us define a uniform velocity and a quadrilateral with x coordinates and y coordinates . On such an element, the velocities u0, u1, v0 and v1 are the scalar product of u and n, the unit vector normal to the edge, and the fluxes U0, U1, V0 and V1 are the velocities multiplied by the edge lengths, leading to

Therefore, developing Eq. (9) results in

and then applying Eq. (10)

independently from ξ and η. For the 3-D case, the same result is obtained numerically. It can be evaluated using the simple Python C-grid interpolator code available at https://doi.org/10.5281/zenodo.3253697 (Delandmeter, 2019b).

3.2 z- and s-level C-grid compatibility

As mentioned above, the horizontal and vertical directions in grids using z levels are completely decoupled, such that horizontal velocity can be computed as for a 2-D field, and vertical interpolation is computed linearly. But a z-level grid is a particular case of an s-level grid. We show that the 3-D C-grid interpolator reduces to the simpler z-level C grid when and .

First, for z levels, it is noteworthy that

and similarly for , , , J3-D is

and . All the fluxes through the vertical faces reduce to the product of the velocity, the horizontal edge length and the element height, as for U0:

The fluxes through the horizontal faces are

Therefore, the inner fluxes result in

and similarly for V½ and W½. The first two lines of Eq. (13) reduce to Eq. (10) and the third line is

Finally using Eq. (19), Eq. (14) becomes

from which the first two lines correspond to Eq. (9) and the third line combined with Eq. (20) reduces to Eq. (11).

Microplastic (MP) is transported through all marine environments and has been observed in large quantities at both coastlines (e.g. Browne et al., 2011) and open seas (e.g. Barnes et al., 2009), at the surface and the seabed. It represents potential risks to the marine ecosystem that cannot be ignored (Law, 2017). At a global scale, high concentrations are reported in the subtropics (Law et al., 2010) but also in the Arctic (Obbard et al., 2014). Various studies have already modelled the accumulation of MP in the Arctic (van Sebille et al., 2012, 2015; Cózar et al., 2017), highlighting the MP transport from the North Atlantic and the North Sea. Meanwhile, at smaller scales, other studies have focused on marine litter in the southern part of the North Sea (Neumann et al., 2014; Gutow et al., 2018; Stanev et al., 2019) and have included diffusion and wind drift in their model as well as used a higher resolution.

Here we study how the modelled accumulation of floating MP in the Arctic depends on the incorporation of physical processes and model resolutions used for the southern part of the North Sea. Parcels is used to evaluate the sensitivity of the floating MP distribution under those constraints. To do so, virtual floating MP particles are released off the Rhine and Thames estuaries and tracked for 3 years. The floating MP distribution is then compared with the trajectories of passive 3-D particles, which are not restricted to stay at the sea surface. Note that this section is not meant as a comprehensive study of the MP transport off the North Sea, but rather an application of the new features implemented into Parcels, in both two and three dimensions.

4.1 Input data

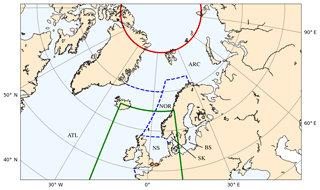

We study the influence of the different physical processes impacting surface currents like density- and wind-driven currents, tidal residual currents, and Stokes drift, but also the impact of mesh resolution and diffusion. The data come from various data sets (Fig. 5), described in this section. Links to access the data are provided in the code and data availability sections.

4.1.1 NEMO

The main data we use are ORCA0083-N006 and ORCA025-N006, which are standard set-ups from NEMO (Madec and the NEMO team, 2016), an ocean circulation model forced by reanalysis and observed flux data: the Drakkar forcing set (Dussin et al., 2016). The forcings consist of wind, heat and freshwater fluxes at the surface.

The data are available globally at resolutions of 1∕4∘ (ORCA025-N006) and 1∕12∘ (ORCA0083-N006). They are discretized on an ORCA grid (Madec and Imbard, 1996), a global ocean tripolar grid, which is curvilinear. The mesh is composed of 75 z levels and the variables are positioned following a C grid. The temporal resolution is 5 d.

4.1.2 Northwest shelf reanalysis

The northwest shelf reanalysis (Mahdon et al., 2017) is an ocean circulation flow data set based on the Forecasting Ocean Assimilation Model 7 km Atlantic Margin Model, which is a coupling of NEMO for the ocean with the European Regional Seas Ecosystem Model (Blackford et al., 2004). The reanalysis contains tidal residual currents.

The data are freely available on the Copernicus Marine Environment Monitoring Service (CMEMS). They have a resolution of about 7 km (1∕9∘ long × 1∕15∘ lat), from (40∘ N, 20∘ W) to (65∘ N, 13∘ E), with a temporal resolution of 1 d. The data are originally computed on a C grid, but are re-interpolated on the tracer nodes to form an A-grid data set with z levels, which is available on CMEMS.

The data, which will be referred to as NWS, do not cover the entire modelling region, such that a NestedField is used to interpolate it within the available region (green zone in Fig. 5), and we use the NEMO data for particles outside that region.

4.1.3 WaveWatch III

Stokes drift, i.e. the surface residual current due to waves, was obtained from WaveWatch III (Tolman, 2009), which was run using wind forcings from the NCEP Climate Forecast System Reanalysis (CFSR; Saha et al., 2010). The data have a spatial resolution of 1∕2∘, extending until 80∘ N, and a temporal resolution of 3 h.

Figure 5 Spatial coverage of the OGCM data used to study North Sea microplastic transport. NEMO data are available globally. NWS data are available for the North Sea region (green boundary) and WaveWatch III data are available south of 80∘ N (red boundary). The particle releasing locations are the Rhine and Thames estuaries (orange dots) and the region is split into six zones: North Sea (NS), Skagerrak and Kattegat (SK), Baltic Sea (BS), Norwegian Coast (NOR), Arctic Ocean (ARC), and Atlantic Ocean (ATL), which are separated by the dashed blue lines.

4.2 Simulations

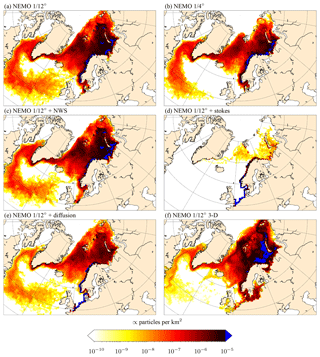

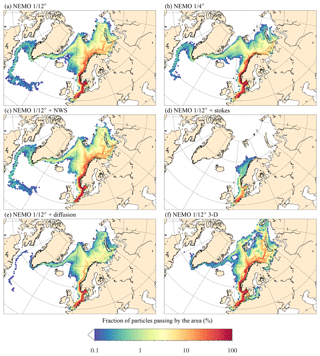

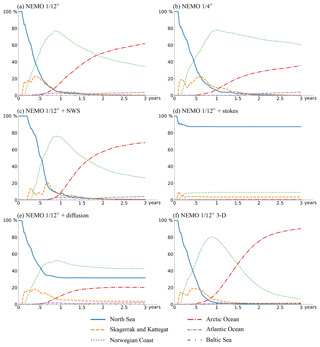

Six simulations are run in the following configurations: (a) NEMO hydrodynamics at a 1∕12∘ resolution, (b) NEMO at a 1∕4∘ resolution, (c) NWS hydrodynamics in the North Sea nested into NEMO 1∕12∘, (d) NEMO 1∕12∘ coupled with WaveWatch III Stokes drift, (e) NEMO 1∕12∘ with diffusion and finally (f) NEMO hydrodynamics at a 1∕12∘ resolution in which the particles are not constrained to the sea surface.

Every day of the year 2000, 100 particles are released in the mouth of the Thames estuary and 100 more particles in the mouth of the Rhine, before being tracked for 3 years. For the two 2-D runs, (a) to (e), the particles are released at the sea surface and follow the horizontal surface currents. For the 3-D run (f), the particles are released at 0.1, 0.5 and 1 m depths and follow the 3-D NEMO flow field.

The diffusion, which parametrizes the unresolved processes, is modelled as a stochastic zero-order Markov model (van Sebille et al., 2018). The diffusion parameter is proportional to the mesh size, exactly as in the study of North Sea marine litter by Neumann et al. (2014):

with D0=1 m2 s−1 the reference diffusivity, l the square root of the mesh size and l0=1 km. This formulation leads to the same order of magnitude diffusivity as the constant value of Gutow et al. (2018).

The beaching of MP is non-negligible in the North Sea (Gutow et al., 2018) even if it is still poorly understood and often ignored (Neumann et al., 2014) or resulting from the low-resolution current and wind conditions (Gutow et al., 2018). Here we distinguish two flow types, the first based on NEMO and NWS data, which have impermeable boundary conditions at the coast, and the second which includes Stokes drift and diffusion, thus allowing beaching.

For numerical reasons, due to the integration time step of 15 min and the Runge–Kutta 4 scheme, it is theoretically possible that particles beach even with NEMO or NWS data. This could happen for example in a region of coastal downwelling since the particles are forced to stay at the surface and could be constantly transported towards the coast. The particle dynamics are thus implemented using separate kernels. At each time step, the particle position is first updated following NEMO or NWS advection. Then the particle is checked to still be located in a wet cell, otherwise it is pushed back to the sea using an artificial current. In a second step, the Stokes or diffusion kernels are run, where if the particle beaches, it stops moving. In a final step, the particle age is updated. The kernel code as well as all the scripts running and post-processing the simulations are available at https://doi.org/10.5281/zenodo.3253693 (Delandmeter, 2019a).

To compare the simulations, the Parcels raw results, consisting of particle position, age and beaching status exported every 2 d, are post-processed into the following maps and budgets.

The particle density (Fig. 6) is computed as the number of particles per square kilometre, averaged over the third year of particle age. Note that the absolute value of the concentration is not particularly meaningful since it is simply proportional to the number of particles released.

To analyse the particle path, the ocean is discretized into cells of 1∕4∘ longitude × 1∕8∘ latitude resolution, and for each cell the fraction of particles that has visited the cell at least once is computed (Fig. 7).

To study the temporal dynamics of the particles, the region is divided into six zones (Fig. 5): North Sea, Skagerrak and Kattegat, Baltic Sea, Norwegian Coast, Arctic Ocean, and Atlantic Ocean, and the evolution of the distribution of the particles in those zones is computed (Fig. 8). The time axis represents the particle age in years.

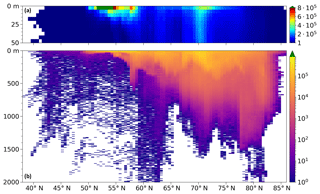

Finally, the integrated vertical distribution (Fig. 9) of the particles as a function of the latitude is computed for the 3-D run. For this profile, the domain is divided into bins of 0.5∘ latitude by 5 m depth and every 2 d each particle is mapped to the cell it belongs to, leading to the integrated vertical distribution. Note the linear scale for the upper 50 m depth and the logarithmic scale for the full vertical profile.

Animations showing the particle dynamics are available in the article Supplement.

Figure 6 Density of floating microplastic (a–e) and passive 3-D particles (f), averaged over the third year of particle age, for the different simulation scenarios: (a) NEMO 1∕12∘, (b) NEMO 1∕4∘, (c) NWS nested into NEMO 1∕12∘, (d) NEMO 1∕12∘ coupled with Stokes drift from WaveWatch III, (e) NEMO 1∕12∘ coupled with diffusion, (f) NEMO 1∕12∘ 3-D. Note the logarithmic scale.

Figure 7 Fraction of floating microplastic (a–e) and passive 3-D particles (f) originally released in the Thames and Rhine estuaries reaching the domain. It is computed for each cell of a 1∕4∘ longitude × 1∕8∘ latitude grid as the proportion of the particles that have visited the cell at least once. Note the logarithmic scale.

Figure 8 Evolution of the distribution of floating microplastic (a–e) and passive 3-D particles (f) in the six zones (Fig. 5) as a function of particle age.

4.3 Results

The results show various minor and major differences between the scenarios.

While NEMO 1∕12∘ and NEMO 1∕4∘ show similar dynamics for the first year (Fig. 8), the Norwegian fjords have a higher trapping role in the 1∕4∘ resolution run, even if the plastic does not beach in both runs. This increased particle trapping could be a consequence of the lower data resolution that results in a reduced horizontal velocity shear, while a strong shear layer behaves as a barrier isolating the coast from the open waters (Delandmeter et al., 2017). This is also observed in the Supplement animations, in which the main particle path is observed further from the coast for the NEMO 1∕12∘ run. As a consequence of the trapping, the amount or MP reaching the Arctic is reduced in NEMO 1∕4∘. This run also produces significantly lower densities north of 80∘ N. Although none of the runs resolve coastal dynamics, because of the low temporal and space resolution and the lack of tides, they show important differences. Since no validation was achieved for those MP simulations, there is no reason to argue that the 1/12∘ resolution is high enough to simulate MP dynamics, but this resolution is similar to other studies of plastic litter in the region (Gutow et al., 2018).

The main differences of using NWS result from the dynamics during the first year, when the particles are located south of 65∘ N, explaining the lack of differences in the Arctic region in Figs. 6a and c and 7a and c. The residence time in the North Sea is increased relative to NEMO 1∕12∘, and different peak events occur in the Skagerrak and Kattegat, resulting in final concentrations of 68 % in the Arctic and 27 % on the Norwegian coast, respectively 7 % higher and 8 % lower than with NEMO 1∕12∘ (Fig. 8).

Including Stokes drift has a major impact on MP dynamics in the northwest European continental shelf, due to prevailing westerly winds (Gutow et al., 2018), with close to 90 % of the plastic staying in the North Sea, 9 % beaching on the Norwegian coast and less than 0.25 % reaching the Arctic. Particles beach very quickly, with 90 % in less than 3.5 months and 99 % within 10 months. While those numbers are not validated here, we can still point out that even if Stokes drift has an important contribution to surface dynamics for large scales (Onink et al., 2019), using it on smaller scales needs proper validation. Especially the boundary condition should be treated with care. It has a large impact in this application where the particles are released next to the coast.

The parametrization of sub-grid scales and diffusion is still an important field of research in the Lagrangian community, but it is generally agreed upon that it cannot be neglected. In this application, we observe how adding diffusion impacts the fate of MP. The amount of MP reaching the Arctic is reduced by 68 % compared to NEMO 1∕12∘, with large accumulation in the North Sea and on the Norwegian coast, but not in the Skagerrak and Kattegat. Overall, the proportion of beached particles increases linearly to 73 % during the first year, before slowly reaching 83 % during the next 2 years.

Maintaining the MP at the surface is a strong assumption: biofouling, degradation and hydrodynamics affect the plastic depth, which impacts its lateral displacement. In the 3-D particle run (Fig. 6f), we do not take all the processes driving MP vertical dynamics, whose parameterizations are currently being developed by the community, but simply model the path of passive particles following the three-dimensional current. Passive particles do not accumulate along the coast like floating MP (Fig. 6f). This results in an increased transport towards the Arctic (Figs. 7f and 8f). There are overall higher concentrations in the Arctic, including north of 80∘ N and in the eastern part of the Kara Sea. Around 1 % of the passive particles end up in the Baltic Sea, crossing the Skagerrak and Kattegat using favourable deeper currents (Gustafsson, 1997), while such transport was negligible for floating MP plastic. The vertical distribution of the particles is also analysed (Fig. 9): the majority of the particle, originally released in shallow waters, is still observed close to the surface: 21 %, 63 % and 97 % of the particle records are above 10, 100 and 500 m depth, respectively. The concentration vertical gradient decreases while the latitude increases, with almost no gradient in the polar region. Passive particles have a significantly different path than surface ones, highlighting the importance of better understanding the vertical dynamics of the plastic to improve the accuracy of its distribution modelling.

This brief study of the sensitivity of North Sea floating MP distribution is an illustration of how Parcels is used to gather and compare flow fields from a multitude of data sets in both two and three dimensions, which was made possible by the development of the different field interpolation schemes and meta-field objects. To validate the MP dynamics observed, it is essential to couple such a numerical study with an extensive field study.

Parcels, a Lagrangian ocean analysis framework, was considerably improved since version 0.9, allowing us to read data from multiple fields discretized on different grids and grid types. In particular, a new interpolation scheme for curvilinear C grids was developed and implemented into Parcels v2.0. This article described this new interpolation as well as the other schemes available in Parcels, including A, B, and C staggered grids, rectilinear and curvilinear horizontal meshes, and z and s vertical levels. Numerous features were implemented, including meta-field objects, which were described here.

Parcels v2.0 was used to simulate the dynamics of the northwest European continental shelf floating microplastic, virtually released during 1 year off the Thames and Rhine estuaries, before drifting towards the Arctic, and the sensitivity of this transport to various physical processes and numerical choices such as mesh resolution and diffusion parametrization. While those simulations do not provide a comprehensive study of microplastic dynamics in the area, they highlight key points to consider and illustrate the interest of using Parcels for such modelling.

The next step in Parcels development will involve increasing the model efficiency and developing a fully parallel version of the Lagrangian framework.

The code for Parcels is licensed under the MIT licence and is available through GitHub at https://www.github.com/OceanParcels/parcels (last access: 10 March 2019). Version 2.0 described here is archived at Zenodo at https://doi.org/10.5281/zenodo.3257432 (van Sebille et al., 2019). More information is available on the project web page at http://www.oceanparcels.org (last access: 10 March 2019).

Independently of Parcels, a simple Python code also implements all the C-grid interpolation schemes developed in this paper. It is available at https://doi.org/10.5281/zenodo.3253697 (Delandmeter, 2019b).

All the scripts running and post-processing the North Sea MP simulations are available at https://doi.org/10.5281/zenodo.3253693 (Delandmeter, 2019a).

The NEMO N006 data are kindly provided by Andrew Coward at NOC Southampton, UK, and can be downloaded at http://opendap4gws.jasmin.ac.uk/thredds/nemo/root/catalog.html (last access: 10 March 2019).

Northwest shelf reanalysis data are provided by the Copernicus Marine Environment Monitoring Service (CMEMS). They can be downloaded at http://marine.copernicus.eu/services-portfolio/access-to-products/?option=com_csw&view=details&product_id=NORTHWESTSHELF_REANALYSIS_PHY_004_009 (last access: 10 March 2019).

WaveWatch III data come from the Ifremer Institute, France. They can be downloaded at ftp://ftp.ifremer.fr/ifremer/ww3/HINDCAST/GLOBAL/ (last access: 10 March 2019).

The supplement related to this article is available online at: https://doi.org/10.5194/gmd-12-3571-2019-supplement.

PD and EvS developed the code and wrote the paper jointly.

The authors declare that they have no conflict of interest.

Philippe Delandmeter and Erik van Sebille are supported through funding from the European Research Council (ERC) under the European Union Horizon 2020 research and innovation programme (grant agreement no. 715386, TOPIOS). The North Sea microplastic simulations were carried out on the Dutch National e-Infrastructure with the support of SURF Cooperative (project no. 16371). This study has been conducted using EU Copernicus Marine Service Information.

We thank Henk Dijkstra for the fruitful discussions, Andrew Coward for providing the ORCA0083-N006 and ORCA025-N006 simulation data and the IMMERSE project from the European Union Horizon 2020 research and innovation programme (grant agreement no. 821926).

This research has been supported by the European Commission (TOPIOS (grant no. 715386)).

This paper was edited by Robert Marsh and reviewed by Joakim Kjellsson and Knut-Frode Dagestad.

Arakawa, A. and Lamb, V. R.: Computational design of the basic dynamical processes of the UCLA general circulation model, Gen. Circ. Model. Atmos., 17, 173–265, 1977. a, b, c

Ardhuin, F., Aksenov, Y., Benetazzo, A., Bertino, L., Brandt, P., Caubet, E., Chapron, B., Collard, F., Cravatte, S., Delouis, J.-M., Dias, Frederic Dibarboure, G., Gaultier, L., Johannessen, J., Korosov, A., Manucharyan, G., Menemenlis, D., Menendez, M., Monnier, G., Mouche, A., Nouguier, F., Nurser, G., Rampal, P., Reniers, A., Rodriguez, E., Stopa, J., Tison, C., Ubelmann, C., Van Sebille, E., and Xie, J.: Measuring currents, ice drift, and waves from space: the Sea surface KInematics Multiscale monitoring (SKIM) concept, Ocean Sci., 14, 337–354, 2018. a

Barnes, D. K., Galgani, F., Thompson, R. C., and Barlaz, M.: Accumulation and fragmentation of plastic debris in global environments, Philos. Trans. Roy. Soc. B,, 364, 1985–1998, 2009. a

Blackford, J., Allen, J., and Gilbert, F. J.: Ecosystem dynamics at six contrasting sites: a generic modelling study, J. Mar. Syst., 52, 191–215, 2004. a

Blanke, B. and Raynaud, S.: Kinematics of the Pacific equatorial undercurrent: An Eulerian and Lagrangian approach from GCM results, J. Phys. Oceanogr., 27, 1038–1053, 1997. a, b

Browne, M. A., Crump, P., Niven, S. J., Teuten, E., Tonkin, A., Galloway, T., and Thompson, R.: Accumulation of microplastic on shorelines woldwide: sources and sinks, Environ. Sci. Technol., 45, 9175–9179, 2011. a

Chu, P. C. and Fan, C.: Accuracy progressive calculation of Lagrangian trajectories from a gridded velocity field, J. Atmos. Ocean. Technol., 31, 1615–1627, 2014. a

Cózar, A., Martí, E., Duarte, C. M., García-de Lomas, J., Van Sebille, E., Ballatore, T. J., Eguíluz, V. M., González-Gordillo, J. I., Pedrotti, M. L., Echevarría, F., Troublè, R., and Irigoien, X.: The Arctic Ocean as a dead end for floating plastics in the North Atlantic branch of the Thermohaline Circulation, Sci. Adv., 3, e1600582, https://doi.org/10.1126/sciadv.1600582, 2017. a

Critchell, K. and Lambrechts, J.: Modelling accumulation of marine plastics in the coastal zone; what are the dominant physical processes?, Estuarine, Coast. Shelf Sci., 171, 111–122, 2016. a

Cushman-Roisin, B. and Beckers, J.-M.: Introduction to geophysical fluid dynamics: physical and numerical aspects, Vol. 101, Academic press, 2011. a, b

Dagestad, K.-F., Röhrs, J., Breivik, Ø., and Ådlandsvik, B.: OpenDrift v1.0: a generic framework for trajectory modelling, Geosci. Model Dev., 11, 1405–1420, https://doi.org/10.5194/gmd-11-1405-2018, 2018. a

Delandmeter, P.: OceanParcels/Parcelsv2.0PaperNorthSeaScripts: Parcelsv2.0PaperNorthSeaScripts, Zenodo, https://doi.org/10.5281/zenodo.3253693, 2019a. a, b

Delandmeter, P.: OceanParcels/parcels_cgrid_interpolation_schemes: Parcels v2.0 C-grid interpolation schemes, Zenodo, https://doi.org/10.5281/zenodo.3253697, 2019b. a, b

Delandmeter, P., Lambrechts, J., Marmorino, G. O., Legat, V., Wolanski, E., Remacle, J.-F., Chen, W., and Deleersnijder, E.: Submesoscale tidal eddies in the wake of coral islands and reefs: satellite data and numerical modelling, Ocean Dynam., 67, 897–913, 2017. a

Döös, K., Jönsson, B., and Kjellsson, J.: Evaluation of oceanic and atmospheric trajectory schemes in the TRACMASS trajectory model v6.0, Geosci. Model Dev., 10, 1733–1749, https://doi.org/10.5194/gmd-10-1733-2017, 2017. a, b

Dussin, R., Barnier, B., Brodeau, L., and Molines, J.-M.: The making of the Drakkar Forcing Set DFS5, Tech. rep., DRAKKAR/MyOcean, 2016. a

Egbert, G. D. and Erofeeva, S. Y.: Efficient inverse modeling of barotropic ocean tides, J. Atmos. Ocean. Technol., 19, 183–204, 2002. a

Fraser, C. I., Morrison, A. K., Hogg, A. M., Macaya, E. C., van Sebille, E., Ryan, P. G., Padovan, A., Jack, C., Valdivia, N., and Waters, J. M.: Antarctica’s ecological isolation will be broken by storm-driven dispersal and warming, Nat. Clim. Change, 8, 704–708, 2018. a

Grech, A., Wolter, J., Coles, R., McKenzie, L., Rasheed, M., Thomas, C., Waycott, M., and Hanert, E.: Spatial patterns of seagrass dispersal and settlement, Div. Distrib., 22, 1150–1162, 2016. a

Griffies, S., Harrison, M., Pacanowski, R., and Rosati, A.: A technical guide to mom4 gfdl ocean group technical report no. 5, NOAA, Geophys. Fluid Dynam. Laboratory, 339 pp., 2004. a

Gustafsson, B.: Interaction between Baltic Sea and North Sea, Deutsche Hydrogr. Z., 49, 165–183, 1997. a

Gutow, L., Ricker, M., Holstein, J. M., Dannheim, J., Stanev, E. V., and Wolff, J.-O.: Distribution and trajectories of floating and benthic marine macrolitter in the south-eastern North Sea, Mar. Pollut. Bull., 131, 763–772, 2018. a, b, c, d, e, f

Holloway, G.: Estimation of oceanic eddy transports from satellite altimetry, Nature, 323, 243–244, 1986. a

Jönsson, B., Döös, K., and Kjellsson, J.: TRACMASS: Lagrangian trajectory code, https://doi.org/10.5281/zenodo.34157, 2015. a

Kjellsson, J. and Zanna, L.: The impact of horizontal resolution on energy transfers in global ocean models, Fluids, 2, 45, https://doi.org/10.3390/fluids2030045, 2017. a

Kubota, M.: A mechanism for the accumulation of floating marine debris north of Hawaii, J. Phys. Oceanogr., 24, 1059–1064, 1994. a

Lambrechts, J., Comblen, R., Legat, V., Geuzaine, C., and Remacle, J.-F.: Multiscale mesh generation on the sphere, Ocean Dynam., 58, 461–473, 2008. a

Lange, M. and van Sebille, E.: Parcels v0.9: prototyping a Lagrangian ocean analysis framework for the petascale age, Geosci. Model Dev., 10, 4175–4186, https://doi.org/10.5194/gmd-10-4175-2017, 2017. a, b, c, d, e

Law, K. L.: Plastics in the marine environment, Ann. Rev. Mar. Sci., 9, 205–229, 2017. a

Law, K. L., Morét-Ferguson, S., Maximenko, N. A., Proskurowski, G., Peacock, E. E., Hafner, J., and Reddy, C. M.: Plastic accumulation in the North Atlantic subtropical gyre, Science, 329, 1185–1188, 2010. a

Lebreton, L.-M., Greer, S., and Borrero, J. C.: Numerical modelling of floating debris in the world’s oceans, Mar. Pollut. Bull., 64, 653–661, 2012. a

Madec, G. and Imbard, M.: A global ocean mesh to overcome the North Pole singularity, Clim. Dynam., 12, 381–388, 1996. a

Madec, G. and the NEMO team: NEMO ocean engine, Tech. rep., Note du Pôle modélisation, Inst. Pierre Simon Laplace, 2016. a, b, c

Mahdon, R., McConnell, N., and Tonani, M.: Product user manual for North-West Shelf Physical Reanalysis Products NORTHWESTSHELF_REANALYSIS_PHYS_004_009 and NORTHWESTSHELF_REANALYSIS_BIO_004_011, available at: http://cmems-resources.cls.fr/documents/PUM/CMEMS-NWS-PUM-004-009-011.pdf (last access: 10 March 2019), 2017. a

Marsh, R., Ivchenko, V. O., Skliris, N., Alderson, S., Bigg, G. R., Madec, G., Blaker, A. T., Aksenov, Y., Sinha, B., Coward, A. C., Le Sommer, J., Merino, N., and Zalesny, V. B.: NEMO–ICB (v1.0): interactive icebergs in the NEMO ocean model globally configured at eddy-permitting resolution, Geosci. Model Dev., 8, 1547–1562, https://doi.org/10.5194/gmd-8-1547-2015, 2015. a

Maximenko, N., Hafner, J., and Niiler, P.: Pathways of marine debris derived from trajectories of Lagrangian drifters, Mar. Pollut. Bull., 65, 51–62, 2012. a

Monaghan, J. J.: Smoothed particle hydrodynamics, Rep. Progr. Phys., 68, 1703–1759, 2005. a

Neumann, D., Callies, U., and Matthies, M.: Marine litter ensemble transport simulations in the southern North Sea, Mar. Pollut. Bull., 86, 219–228, 2014. a, b, c

Obbard, R. W., Sadri, S., Wong, Y. Q., Khitun, A. A., Baker, I., and Thompson, R. C.: Global warming releases microplastic legacy frozen in Arctic Sea ice, Earth's Fut., 2, 315–320, 2014. a

Onink, V., Wichmann, D., Delandmeter, P., and Van Sebille, E.: The role of Ekman currents, geostrophy and Stokes drift in the accumulation of floating microplastic, J. Geophys. Res.-Oceans, 124, 1474–1490, https://doi.org/10.1029/2018JC014547, 2019. a, b

Paris, C. B., Helgers, J., Van Sebille, E., and Srinivasan, A.: Connectivity Modeling System: A probabilistic modeling tool for the multi-scale tracking of biotic and abiotic variability in the ocean, Environ. Modell. Softw., 42, 47–54, 2013. a

Phillips, J. S., Gupta, A. S., Senina, I., van Sebille, E., Lange, M., Lehodey, P., Hampton, J., and Nicol, S.: An individual-based model of skipjack tuna (Katsuwonus pelamis) movement in the tropical Pacific ocean, Progr. Oceanogr., 164, 63–74, 2018. a

Prodhomme, C., Batté, L., Massonnet, F., Davini, P., Bellprat, O., Guemas, V., and Doblas-Reyes, F.: Benefits of increasing the model resolution for the seasonal forecast quality in EC-Earth, J. Climate, 29, 9141–9162, 2016. a

Rio, M.-H., Mulet, S., and Picot, N.: Beyond GOCE for the ocean circulation estimate: Synergetic use of altimetry, gravimetry, and in situ data provides new insight into geostrophic and Ekman currents, Geophys. Res. Lett., 41, 8918–8925, 2014. a

Rubio, A., Mader, J., Corgnati, L., Mantovani, C., Griffa, A., Novellino, A., Quentin, C., Wyatt, L., Schulz-Stellenfleth, J., Horstmann, J., Lorente, P., Zambianchi, E., Hartnett, M., Fernandes, C., Zervakis, V., Gorringe, P., Melet, A., and Puillat, I.: HF radar activity in European coastal seas: next steps toward a pan-European HF radar network, Front. Mar. Sci., 4, 8, https://doi.org/10.3389/fmars.2017.00008, 2017. a

Saha, S., Moorthi, S., Pan, H.-L. et al.: The NCEP climate forecast system reanalysis, B. Am. Meteorol. Soc., 91, 1015–1058, 2010. a

Sasaki, H., Nonaka, M., Masumoto, Y., Sasai, Y., Uehara, H., and Sakuma, H.: An eddy-resolving hindcast simulation of the quasiglobal ocean from 1950 to 2003 on the Earth Simulator, in: High resolution numerical modelling of the atmosphere and ocean, 157–185, Springer, 2008. a

Smith, R., Jones, P., Briegleb, B., Bryan, F., Danabasoglu, G., Dennis, J., Dukowicz, J., Eden, C., Fox-Kemper, B., Gent, P., Hecht, M., Jayne, S., Jochum, M., Large, W., Lindsay, K., Maltrud, M., Norton, N., Peacock, S., Vertenstein, M., and Yeager, S.: The parallel ocean program (POP) reference manual ocean component of the community climate system model (CCSM) and community earth system model (CESM), Rep. LAUR-01853, 141, 1–140, 2010. a

Stanev, E., Badewien, T., Freund, H., Grayek, S., Hahner, F., Meyerjürgens, J., Ricker, M., Schöneich-Argent, R., Wolff, J., and Zielinski, O.: Extreme westward surface drift in the North Sea: Public reports of stranded drifters and Lagrangian tracking, Continent. Shelf Res., 177, 24–32, 2019. a

Thomas, C. J., Lambrechts, J., Wolanski, E., Traag, V. A., Blondel, V. D., Deleersnijder, E., and Hanert, E.: Numerical modelling and graph theory tools to study ecological connectivity in the Great Barrier Reef, Ecol. Modell., 272, 160–174, 2014. a

Tolman, H. L.: User manual and system documentation of WAVEWATCH III TM version 3.14, Tech. rep., MMAB Contribution, 2009. a

van Sebille, E., England, M. H., and Froyland, G.: Origin, dynamics and evolution of ocean garbage patches from observed surface drifters, Environ. Res. Lett., 7, 044040, https://doi.org/10.1088/1748-9326/7/4/044040, 2012. a

van Sebille, E., Wilcox, C., Lebreton, L., Maximenko, N., Hardesty, B. D., Van Franeker, J. A., Eriksen, M., Siegel, D., Galgani, F., and Law, K. L.: A global inventory of small floating plastic debris, Environ. Res. Lett., 10, 124006, https://doi.org/10.1088/1748-9326/10/12/124006, 2015. a

van Sebille, E., Griffies, S. M., Abernathey, R. et al.: Lagrangian ocean analysis: Fundamentals and practices, Ocean Modell., 68, 49–75, 2018. a, b, c

van Sebille, E., Delandmeter, P., Lange, M., Rath, W., Phillips, J. S., Simnator101, pdnooteboom, Kronborg, J., Thomas-95, Wichmann, D., nathanieltarshish, Busecke, J., Edwards, R., Sterl, M., Walbridge, S., Kaandorp, M., hart-davis, Miron, P., Glissenaar, I., Vettoretti, G., and Ham, D. A.: OceanParcels/parcels: Parcels v2.0.0: a Lagrangian Ocean Analysis tool for the petascale age, Zenodo, Version v2.0.0, https://doi.org/10.5281/zenodo.3257432, 2019. a

Weisstein, E. W.: Surface Parameterization, available at: http://mathworld.wolfram.com/SurfaceParameterization.html (last access: 10 March 2019), from MathWorld – A Wolfram Web Resource, 2018. a

Wubs, F. W., Arie, C., and Dijkstra, H. A.: The performance of implicit ocean models on B-and C-grids, J. Comput. Phys., 211, 210–228, 2006. a

- Abstract

- Introduction

- Parcels v2.0 development

- Validation

- Simulating the sensitivity of northwest European continental shelf floating microplastic distribution

- Conclusions

- Code and data availability

- Author contributions

- Competing interests

- Acknowledgements

- Financial support

- Review statement

- References

- Supplement

stuffsuch as plankton and plastic around. In the latest version 2.0 of Parcels, we focus on more accurate interpolation schemes and implement methods to seamlessly combine data from different sources (such as winds and currents, possibly in different regions). We show that this framework is very efficient for tracking how microplastic is transported through the North Sea into the Arctic.

stuffsuch as plankton and...

- Abstract

- Introduction

- Parcels v2.0 development

- Validation

- Simulating the sensitivity of northwest European continental shelf floating microplastic distribution

- Conclusions

- Code and data availability

- Author contributions

- Competing interests

- Acknowledgements

- Financial support

- Review statement

- References

- Supplement