the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Introducing Iterative Model Calibration (IMC) v1.0: a generalizable framework for numerical model calibration with a CAESAR-Lisflood case study

Chayan Banerjee

Clinton Fookes

Gregory Hancock

Thomas Coulthard

In geosciences, including hydrology and geomorphology, the reliance on numerical models necessitates the precise calibration of their parameters to effectively translate information from observed to unobserved settings. Traditional calibration techniques, however, are marked by poor generalizability, demanding significant manual labor for data preparation and the calibration process itself. Moreover, the utility of machine-learning-based and data-driven approaches is curtailed by the requirement for the numerical model to be differentiable for optimization purposes, which challenges their generalizability across different models. Furthermore, the potential of freely available geomorphological data remains underexploited in existing methodologies. In response to these challenges, we introduce a generalizable framework for calibrating numerical models, with a particular focus on geomorphological models, named Iterative Model Calibration (IMC). This approach efficiently identifies the optimal set of parameters for a given numerical model through a strategy based on a Gaussian neighborhood algorithm. Through experiments, we demonstrate the efficacy of IMC in calibrating the widely used landscape evolution model CAESAR-Lisflood (CL). The IMC process substantially improves the agreement between CL predictions and observed data (in the context of gully catchment landscape evolution), surpassing both uncalibrated and manual approaches.

- Article

(3702 KB) - Full-text XML

- BibTeX

- EndNote

Parameters of numerical (e.g., geomorphic) models play a crucial role in predicting their behavior. These models are usually calibrated based on observations at known data points or settings. However, it is often necessary to forecast how the system would behave at test data points or settings where direct observations are not possible.

A qualitative calibration approach involves a manual comparison of model and field data, making it time-consuming and less likely to reveal the optimal model parameter configuration. On the other hand, a quantitative calibration of a numerical model involves assessing the model's error using statistics and is more suitable for complicated models with many parameters. Recently, there has been renewed interest in developing such automatic calibration routines to explore a model's parameter space (Becker et al., 2019; Brunetti et al., 2022; Beck et al., 2018; Tsai et al., 2021). Still, a large number of conventional approaches suffer from limitations like calibration of selective parameters, poor generalizability, extensive manual components in data pre-processing and model calibration, and restrictive assumptions like differentiable and learning-data-driven surrogate numerical models.

We propose a novel calibration algorithm: Iterative Model Calibration (IMC). The IMC is a fully automated calibration approach, which needs minimal manual interference and requires minimal data pre-processing. The method operates on a simple but effective concept of Gaussian-guided iterative parameter search. The process calibrates a defined list of parameters sequentially (high to low priority), with one parameter being adjusted at a time, keeping others fixed. The parameter values are sampled from a Gaussian neighborhood surrounding the latest parameter value. The model's output due to each predicted parameter is then compared to the observed ground truth data, and an error is calculated. This error serves as a fitness measure and a minimum threshold for finalizing the value corresponding to that particular parameter.

In the following segment, we present a brief review of conventional approaches for calibrating geoscientific numerical models, specifically concerning landscape evolution models (LEMs) such as CAESAR-Lisflood (CL). Some qualitative calibration strategies concentrate on one or a few chosen model parameters for calibration. For example, in Ramirez et al. (2022), the focus was on the “m value” of CL's hydrology model (TOPMODEL), which is responsible for controlling the change in soil moisture storage for ungauged primary sub-catchments. They used a three-step approach: first, they ran a 5-year simulation of the CL model with a 1 km spatial resolution. Second, they repeated this process for a secondary sub-catchment, using the same rainfall input and calibrated parameters, lumped and spatially distributed. Lastly, they ran the calibrated primary sub-catchment hydrological model, which had spatially distributed m values, for a crucial short-term (3 h) extreme weather event, obtaining a simulated discharge from the primary sub-catchment.

In a study by Peleg et al. (2020), the hydrological TOPMODEL parameter m and Courant number were calibrated through selective calibration. This was done by finding an optimal fit between simulated hydrographs of 14 d and observed hydrographs. While carrying out this calibration, a number of parameters were manually set, with the help of published data from nearby locations and domain knowledge. In another work by Wang et al. (2023), CL calibration was carried out at selected locations by reproducing the geomorphic changes and water depth driven by an extreme rainfall event. The parameter settings were set manually, based on domain knowledge and research data. Feeney et al. (2020) started with choosing CL parameter values from prior published literature. They then tested various combinations of the values to satisfy the two equations utilized in the lateral erosion algorithm in CL. Additionally, during calibration, they modified one parameter at a time while keeping the others constant. Skinner et al. (2018) employed the Morris method on the CL model in two diverse catchments to discern the impact of parameters on model behavior. Though centered on sensitivity analysis, this work indirectly aids model calibration by pinpointing key parameters for effective adjustments, thereby refining the calibration process.

The tool described in Beck et al. (2018) serves to calibrate the Lisflood hydrological model for designated catchment areas, deliberately omitting the upstream catchment region. It employs a genetic algorithm, LEAP, for the calibration process and is developed using Python. Nevertheless, a considerable amount of manual pre-processing of the input files, specifically scripts, is necessary prior to initiating calibration runs. In contrast to previous approaches, Tsai et al. (2021) proposed a data-driven differentiable parameter learning (dPL) framework. This approach involves a parameter estimation module that maps raw input data to model parameters. These parameters are then fed into a differentiable model or its surrogate, such as a neural-network-based model. Differentiability allows for gradient calculation with respect to model variables or parameters, facilitating the discovery of hidden relationships in high-dimensional data through variable optimization. However, many physical or numerical models are not fully differentiable. Re-implementing a non-differentiable model into a differentiable one demands significant domain knowledge (Shen et al., 2023). Alternatively, a differentiable model can be developed from data using neural networks as surrogate models (Tang et al., 2020; McCabe et al., 2023), but this method requires extensive, often costly, field data collection and may struggle without specific historical data. These challenges limit the applicability and generalization of differentiable models and data-driven surrogates to complex numerical models like CL. A number of approaches leverage machine learning (ML) algorithms and general optimization algorithms for calibration. Brunetti et al. (2022) introduce a hybrid strategy calibration approach for hydrological models, combining precision ML algorithms like Marquardt–Levenberg with comprehensive learning particle swarm optimization (CLPSO). Central to this approach is an objective function aimed at reducing the gap between HYDRUS model forecasts and empirical observations.

To sum up, the calibration of numerical models is hindered by reliance on extensive domain knowledge and manual tuning, and the high cost of data collection for ML approaches restricts their effectiveness and applicability. The expertise needed for model differentiation further limits widespread usage, underscoring the demand for adaptable and data-efficient calibration strategies in geoscientific modeling. A large number of conventional calibration techniques are tailored for hydrological models and have access to their wealth of data from global networks. But they fall short for geomorphological models (Abbaspour et al., 2004; Jetten et al., 2003) due to a lack of diverse and accessible data such as DEMs and information on soil, sediment, vegetation, and geology. This data scarcity undermines traditional calibration methods and hampers the use of newer data-driven ML in geomorphology, which depends on large datasets for accuracy. Our calibration approach aims to leverage limited DEM data to effectively calibrate geomorphological models, addressing a critical gap in current methodologies.

The IMC algorithm introduces the following unique contributions:

-

Highly customizable approach. Due to the simplicity of the underlying process of iterative-error-based search and parameter calibration, the algorithm is adaptable to any numerical model. Besides depending on the application, input–output files and loss functions may be customized and substituted with ease.

-

Capable of calibrating a large number of parameters. The IMC is highly scalable and can calibrate for any number of numeric valued parameters of numerical models.

-

Minimal manual involvement requirement with a complete automated process. Apart from minimal data pre-processing and parameter initialization, IMC can run without any human supervision.

-

Generalizable for any numerical model. The algorithm does not have any restrictions regarding the type of numerical model. Being gradient-free, our approach requires neither the differentiability of the numerical model nor a neural-network-based surrogate. With its generalization, it can be used as an add-on module and patched with any numerical model for calibration.

In the following sections we elaborate on the IMC algorithm for calibrating numerical models, specifically targeting geomorphological models. We showcase the effectiveness of IMC by applying it to the landscape evolution model CAESAR-Lisflood in the context of gully erosion modeling. The rest of the paper is structured as follows: Sect. 2 introduces the foundational concepts of model calibration techniques and establishes a general mathematical framework for addressing the problem. Section 3.1 is dedicated to a comprehensive exposition of our proposed IMC algorithm, including a detailed description of the algorithm itself and a discussion on each component of the IMC, referenced against the functional diagram shown in Fig. 2. In Sect. 3.2, we offer a concise rationale for choosing mean square error (MSE) as the metric for performance evaluation in our IMC algorithm. Section 4 outlines our case study, including the problem statement, details about the study location, and a discussion of the calibration results, supported by various tables and figures. In Sect. 5, we present a factual comparison of different calibration methods reviewed in this study against our IMC, complemented by an in-depth experimental analysis and additional experiments. The paper concludes with Sect. 6, where we summarize our findings and suggest promising directions for future enhancements to our work.

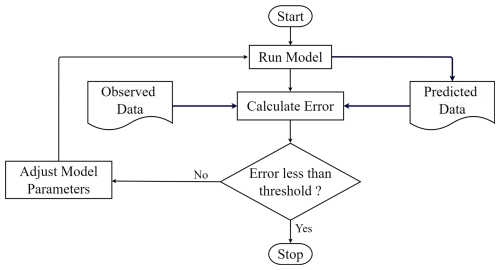

Calibration is an essential process in which the parameters of a model are adjusted to ensure that its output matches the observed historical data. The objective is to determine a set of parameter values enabling the model to produce data similar to the studied system (Oreskes et al., 1994; Gupta et al., 1998; Beven, 2006). Usually, a single fitness or loss value is sought to summarize the relationship between the predicted and observed data. As shown in Fig. 1, the model's parameters are adjusted repeatedly until the difference between the model output and the observed data is reduced below a certain threshold. Once a predetermined level of accuracy or error is attained, the calibration process is concluded, and the model is deemed effective in simulating the real system or scenario.

When it comes to simple models, adjusting parameters and calculating errors is usually straightforward. However, numerical geomorphic models, e.g., landscape evolution models, are more complex and have many configurable parameters. These model parameters can often have inter-related nonlinear effects on the model's behavior, making it challenging to anticipate how the model will behave with new parameter configurations (Skinner et al., 2018; Tucker and Hancock, 2010; Coulthard et al., 2007; Braun and Willett, 2013). As a result, doing trial-and-error matching of a model's parameters to specific field conditions is often complex, intricate, and time-consuming.

Furthermore, LEMs often exhibit equifinality, where diverse parameter sets yield similar outcomes, highlighting the complexity of interpreting these models (Phillips, 2003). This phenomenon suggests multiple evolutionary pathways can lead to comparable landscapes, challenging model solution uniqueness and necessitating meticulous calibration and validation efforts (Beven and Freer, 2001). Additionally, equifinality may result in seemingly accurate landscape representations for incorrect reasons, pointing to the oversimplification of geomorphic processes (Lane et al., 1999).

Here we introduce mathematical notation to explain the calibration mechanism in general. Let p and θ denote the vectors of constant and calibration input parameters of dimension d1 and d2 respectively of a certain numerical model M. Constant input parameters stay the same over the whole calibration process, while the calibration parameters are sequentially optimized by the IMC algorithm. Also, let S represent a collection of all input data, typically constituting DEMs, rainfall and soil data, etc. Formally we can describe the mapping of the constant and calibration input parameters and input data to the expected model output as follows:

where ξ represents the inherent randomness in the output of the numerical model, which is the uncertainty or variability that arises due to certain features within the simulation process. Sources of inherent randomness include system variability, incomplete knowledge, model imperfections, and numerical approximations. Here, the output of the numerical model is denoted by y(.), which is a function of constant and calibration input parameters as well as input data. When calibrating a certain numerical model (M), we assume we have certain information available to us:

-

the n observations of the real system (e.g., natural processes) response , corresponding to n initial condition data ;

-

the n outputs generated by the numerical model for n given input (initial condition) data and constant and calibration parameter vectors, i.e., .

The objective of the calibration algorithm is to iteratively search for the unknown true calibration parameter vector θ*, which is the θ that parameterizes the numerical model to best match the observation of the real system or physical process. This naive calibration approach or direct calibration may be typically formulated as the following optimization problem:

where the goal is to find θ such that it minimizes the above loss L(.). The loss is calculated considering the observed response x and the model-generated output .

3.1 Details of IMC algorithm

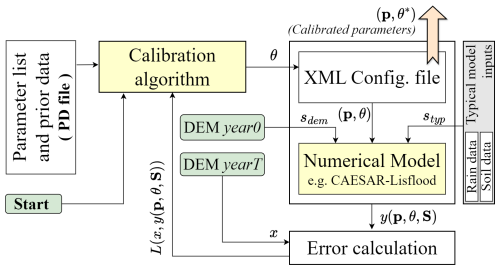

Figure 2 presents a high-level overview of the interface of the IMC (proposed calibration algorithm) with the numerical model and their connection with other components and operations.

Figure 2The calibration algorithm with a LEM numerical model presented here works in the following way. Firstly, the algorithm reads information regarding parameters from a PD file and updates the parameter suggestions in the XML file. Then, the numerical model reads the parameter values from the XML file and generates the output. The generated and target data are then compared, and the error is calculated based on a loss function. This loss is fed back to the algorithm, which uses it to set or update its loss threshold. The algorithm uploads a new parameter suggestion in the XML. This cycle continues until a stopping criterion is reached.

The following list briefly introduces the primary components of the setup:

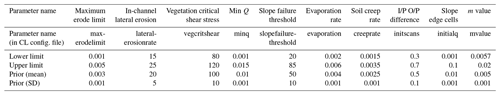

-

The parameter list and prior data (PD) file contains a list of all the parameters θ that need to be calibrated. Along with the list, the file also contains prior best-known values of these parameters, the value's lower–upper limits (upθ,lwθ), and standard deviation σθ values, which are used to range the Gaussian search neighborhood. For more details on the contents and structure of this file, refer to the appendix and Table A1.

-

The calibration algorithm is the proposed IMC algorithm that initiates by reading the calibrated parameter list, corresponding prior best-known values, value limits, and constraints (from the PD file) and outputs a new parameter value. This parameter value is then passed on to the XML configuration file, which updates its parameter vector and forwards it to the numerical model. The IMC later reads the error calculated from comparing the model output and observed data.

-

The XML configuration file holds the intermediate parameter values after being generated by the calibration algorithm. The numerical model reads the updated parameter vector from this file and generates simulated results accordingly. After the completion of the calibration process, the same file serves as the output, since the final calibrated values of the parameters are updated to the file.

-

The numerical model (M) is the model whose parameters are being calibrated. The model loads the constant and calibrated parameter vector sets (p,θ) along with input data and generates output . Here sdem refers to the initial-year DEM (i.e., DEM year 0), and styp represents all the other types of typical data inputs that are loaded by the model, e.g., rainfall data and soil data.

-

The error calculation is based on a predetermined loss function. This module compares the observed system response (x) with the model's output and quantifies the difference or similarity between them through a numerical value or score. We used mean square error (MSE) as the error-generating function, which is represented as follows:

where and are the ground truth and model-predicted 2D numeric arrays respectively of dimension i×j.

In the below explanation and the algorithm that follows, we have relaxed the dependence of y on input data S from the notations, but it is understood that outputs are with respect to these inputs.

In the IMC algorithm, each model parameter is numerically adjusted through a search process within its latest Gaussian neighborhood. A Gaussian neighborhood refers to the local region around a current parameter value, defined by the spread of the Gaussian distribution (typically within 1 standard deviation of the mean). Initially, the mean and standard deviation are set as prior values, establishing a Gaussian distribution for each parameter. This distribution guides the exploration of parameter space during the calibration process. For each parameter θi∈θ where , the algorithm conducts a series of searches to find the optimal parameter value. Specifically, it performs J×C rounds of searching, where J is the number of iterations for each parameter, and C is the number of rounds in each iteration. The optimal parameter search is represented by rounds, where a model parameter value from its latest Gaussian neighborhood is selected and tested in the numerical model. Here, the parameter refers to the specific value being tested to see how well it performs. An iteration consists of a set of such rounds (=C), representing multiple parameter searches. At the end of each iteration, if a better numerical model parameter is found that reduces the loss (beyond a certain threshold), the mean of its Gaussian distribution is updated. This update process refines the distribution, improving the chances of selecting better numerical model parameters in future rounds. Therefore, the number of iterations represents the number of instances (for each parameter) where the Gaussian distribution's parameter is considered for an update.

The calibration is sequential, and while calibrating for a certain parameter, say θi, all other θ\θi parameter values are kept constant. Each jth iteration (where ) runs multiple rounds of random searches in the Gaussian neighborhood of the last best-known parameter value. The Gaussian neighborhood is determined by the parameter's best-known value (known as prior information or passed on from previous iteration) and its fixed standard deviation , i.e., . A randomly sampled data point (γ) from this neighborhood serves as the parameter value for the current round. It is also ensured that the sampled value γ is well within the upper and lower value limits of the current parameter, i.e., .

Each iteration also keeps track of the best parameter value across its C rounds, based on the minimum loss scored . Besides a minimum loss threshold, ℒ is also maintained across all iterations and parameters. After each iteration, if , then its corresponding best parameter is saved as the best value of the current parameter θi, i.e., , and the minimum loss threshold is updated, i.e., . The whole process is elaborated as an algorithm as follows.

Algorithm 1The complete IMC algorithm.

3.2 Choosing LEM performance evaluation metric

Assessing model performance is crucial for accurately depicting geomorphic changes. Choosing the right evaluation metrics, like the MSE of DEMs, is an efficient metric since it directly measures topographic accuracy, a fundamental aspect of landscape studies.

LEM performance can be evaluated through various lenses, including erosion and deposition rates, sediment yield, hydrological accuracy, and more. These metrics serve to assess different facets of landscape dynamics and processes simulated by the model (Hancock and Willgoose, 2001; Tucker and Slingerland, 1997; Skinner et al., 2018; Barnhart et al., 2020; Skinner and Coulthard, 2023). Each metric focuses on specific attributes of landscape evolution, from the quantification of sediment transport to the replication of hydrological responses under varying climatic conditions. Notably, topographic accuracy emerges as a fundamental criterion, as it encapsulates the geomorphological fidelity of model simulations in replicating real-world landscapes (Temme and Schoorl, 2009).

The rationale for employing MSE between observed and LEM-estimated DEMs as a metric lies in its direct quantification of the discrepancy in topographical features. This approach allows for a granular assessment of model performance in simulating the spatial configuration of landscapes. Given the critical role of topography in governing hydrological and geomorphic processes, the accuracy of DEM simulations directly influences the reliability of LEM outputs in representing erosion patterns, sediment transport, and hydrological dynamics.

Moreover, the use of MSE aligns with the principle of evaluating model efficiency through quantitative measures that provide clear benchmarks for improvement (Nash and Sutcliffe, 1970). By quantifying errors in elevation across the landscape, MSE offers a comprehensive overview of model performance in capturing the intricate details of terrain morphology.

Additionally, the comparison of DEMs through MSE facilitates the identification of systematic biases or inaccuracies in model simulations, guiding further calibration and refinement of LEM parameters (Beven and Binley, 1992). This aspect is particularly crucial in landscape evolution modeling, where the spatial distribution of elevation changes significantly influences erosion and sedimentation processes.

4.1 Problem statement

Our primary objective is to calibrate the numerical model (here CL) using geomorphological data from 2 distinct years, 2019 and 2021, including DEMs and soil and rainfall data. IMC calibration aims to enhance the model's reliability by ensuring its outputs closely match observed data. Achieving this alignment is essential for gaining accurate insights into landscape evolution dynamics. Additional objectives include comparing our calibration method with existing approaches to highlight its broader applicability and reduced human effort. We aim to conduct experiments with varying calibration run lengths to assess their impact on calibration quality, focusing on erosion volume and spatial accuracy. Furthermore, we seek to evaluate the efficiency of the proposed IMC algorithm in re-estimating known parameter values from deliberate perturbations, demonstrating its accuracy and robustness.

4.2 Study area and data

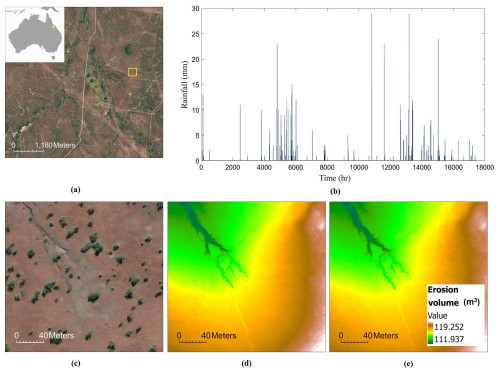

The study area is a gully catchment region situated 20 km to the east of Mount Abbot National Park (Scientific) in the Bowen Basin region of Northern Queensland at a location 20°13′ S, 147°33′20′′ E; see Fig. 3. For hourly rainfall data (see Fig. 3b), we used pluviometer readings from the Ernest Creek pluvio of Burdekin Basin, Queensland (WMIP, 2024), between the dates 1 July 2019–2021. The DEMs are collected using airborne laser scanning (ALS) by the Department of Agriculture, Water and the Environment, Australia, under project names Bogie 2019 and Strathbogie 2021 and are hosted on an online repository (ELVIS, 2024). The required DEMs are downloaded from the mentioned source with the following specifications: resolution, 0.5 m; vertical accuracy, ±0.15 m at 67 % CI; and horizontal accuracy, ±0.3 m at 67 % CI. For ease of computation, we used a downsampled version (i.e., 1 m) of the original DEMs in all our experiments.

We chose gully erosion in Australia as a case study due to its environmental significance, the availability of extensive data, and the unique challenges posed by Australia's climate and soil. The study aims to inform local policymakers and land managers, fill research gaps, and develop targeted strategies for erosion mitigation. Additionally, the insights gained from this specific context can illustrate the framework's adaptability and transferability to other regions facing similar environmental challenges.

Figure 3Study site information. (a) Satellite image of the Bowen Basin, with the yellow box highlighting the study location. Inset shows the location of the Bowen Basin (yellow star) in Australia. (b) Hourly rainfall data between July 2019–2021, pluviometer reading. (Source: basemap and data provided by Esri and its Community Map contributors. The pluviometer reading is from the Ernest Creek pluvio of Burdekin Basin, Queensland (WMIP, 2024), between the dates 1 July 2019–2021). (c) Magnified (zoomed-in) view of the study region. (d) Observed DEM of the year 2019 in color map, used as CL’s input. (e) Observed 2021 DEM in color map.

4.3 Calibration experiments and results

In the following sections, we introduce the study area and present the essential parameters and settings used for running IMC in CL parameter calibration. Additionally, we provide comparative results from the experiments, including CL with uncalibrated parameters, CL with manually calibrated parameters, and CL with manually calibrated and IMC-calibrated parameters.

4.3.1 Calibration details and experimental setup

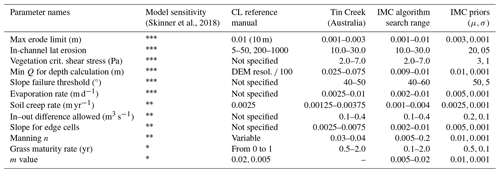

We present Table 1, which summarizes essential information regarding the primary parameters of the CL numerical model, including numerical values from existing literature. Additionally, the table shows the prior values used to initialize the IMC for each parameter to be calibrated in the PD file. In the IMC's calibration process, the loss function is very important. As mentioned in Sect. 4, we consider the MSE of ground truth target data and CL-predicted data in image format, for calculation of errors in each round. We explore different forms of ground truth and CL-predicted data (such as DEMs and DEM of difference, i.e., DoD) and show how they can be purposed for specific experimentation.

(Skinner et al., 2018)Table 1Primary CL parameters, their manually calibrated values, values from the literature, and their model's sensitivity. Sensitivity scoring uses asterisks (*) to indicate the impact of parameters on model outcomes, from very high () to low (*'). The table also presents the default parameter and prior values assumed by the IMC algorithm.

Our primary experiments investigate the effectiveness of the IMC approach in calibrating the parameters of CL, with a particular focus on accurately predicting erosion volume. This is important because erosion volume impacts landform stability, environmental health, and cost-effectiveness and is significant for landform design and risk assessment. We use the input and predicted DEMs (i.e., DEM year0, DEM yearT, and ) to generate the target and predicted difference of DEMs, i.e., DoD and , as follows:

In order to focus the calibration on the erosion volume, we multiplied the DoDs by a mask (), which can be defined as follows:

where (e,f) represents a location on a DoD, and val(e,f) represents the signed magnitude of that data point. The final DoDs can then be written as

In later experiments (Sect. 5.3), we also investigated the accuracy of IMC-based calibration of CL's default parameters. In the experiments we try to estimate a single parameter at a time from a perturbed value, keeping all other parameters fixed. In that context we have simply considered the following:

See the relevant section for more details on the experiments.

The code for the proposed IMC algorithm with CL as a case study, along with the data used in this study, is archived for easy accessibility (Banerjee, 2024).

4.3.2 Calibration results

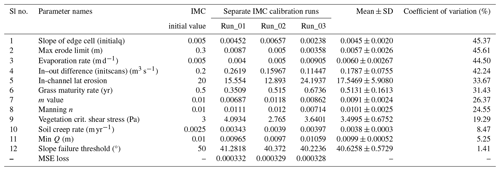

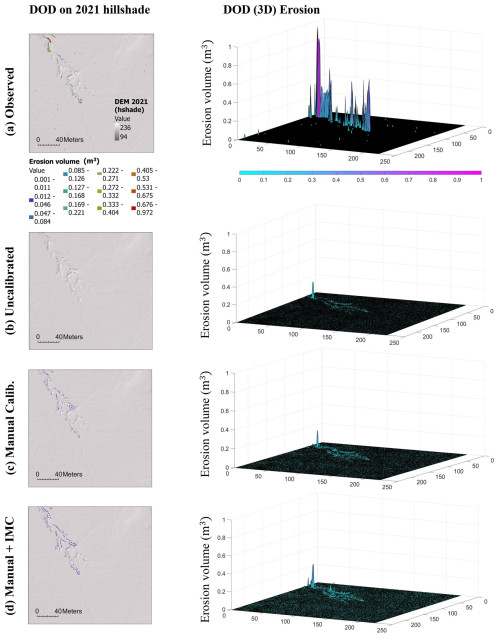

In this section, we present and discuss the results of the calibration process. Comparative results are presented in Table 2 and Fig. 4, highlighting the differences between the CL model results obtained using different variations in calibrated and uncalibrated parameter sets. For the uncalibrated set, we consider the default CL parameters and simply adapt them to our study area and DEM dimension. In the manual calibration set, we use existing literature-based knowledge of parametric values with respect to the study area and update the default CL parameter set. Finally, in the manual + IMC set (also referred to as IMC for brevity), we start or initialize the IMC calibration process with the manual calibration set data. Additionally, Table 3 provides comprehensive results of the IMC calibration process for all CL parameters, across three separate calibration runs of the same length (5×5).

In detail, Table 2 numerically shows that IMC-based calibration of CL parameters encourages the CL to predict future erosion volume with substantial accuracy as compared to the CL's results with uncalibrated and manually calibrated parameters. We also show that using only basic knowledge of the value range of parameters of the study region, two temporally separated DEMs (i.e., 2019 and 2021), and the rainfall data over this period, the IMC can calibrate the CL parameters, evident by its prediction of the target erosion volume. The target erosion volume is derived from the difference between the 2021 DEM and the 2019 DEM.

Table 2Comparison of total erosion volume and corresponding MSE loss: observed data vs. CL using uncalibrated, manually calibrated, and IMC-calibrated parameters. The results presented below are from three separate calibration runs, each with fixed-length runs (5×5) and taking around 5 h. Due to the stochastic nature of the calibration process, the mean values are reported along with their standard deviations (mean ± SD).

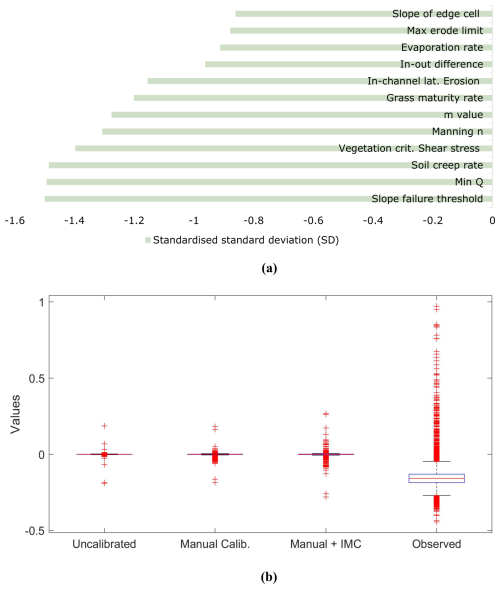

Table 3CL parameters calibrated via IMC across three separate calibration runs of a fixed length (5×5), denoted as Run_01, Run_02, and Run_03. The IMC initial value column presents the parameter initialization value for each run. The last two columns display the mean with standard deviation and the coefficient of variation (CV). The CV, a standardized measure of dispersion, is defined as the ratio of the standard deviation to the mean, expressed as a percentage. It is useful for comparing the relative variability of parameters with different units or scales. High variability is observed in parameters 1 to 4, indicated by higher CV values (see also Fig. 5a). The concluding row showcases MSE loss, which identifies Run03 as the optimal calibration run.

Figure 4The left column shows the DoD between the 2019 and 2021 DEMs, focusing only on erosion volume. This erosion data are overlaid on the 2021 DEM hillshade to provide spatial context. Areas with nearly zero erosion volume are shown as transparent to highlight regions with more significant erosion. The right column presents corresponding 3D plots of the DoD, focusing on erosion volume. Compared to all other approaches, the manual + IMC calibration (d) shows the closest resemblance of erosion volume to the observed (a), both spatially and volumetrically (DEM source: ELVIS, 2024).

In Fig. 4, we further elaborate on the numerical results presented in Table 2 through extensive visual comparison. Here, we compare the CL's prediction of erosion volume using three different sets of parameters: uncalibrated, manual, and manual + IMC. The results demonstrate that the combination of basic manual calibration with the automated IMC process significantly enhances CL's accuracy in predicting the target erosion volume.

In Fig. 5b we present a detailed side-by-side comparison of DoD value distributions across various calibration settings, benchmarking them against observed DoD values. To effectively summarize statistical variations in DoD, we use boxplots representing 1D vectors derived from flattened 2D DoD arrays. These boxplots offer a concise visual summary of central tendencies, spread, and outlier behavior across calibration settings, allowing us to assess how each calibration scenario aligns with observed DoD and identify systematic biases or deviations. The comparison of DoD values across different calibration settings reveals improvements with the manual + IMC approach, which better approximates observed DoD values. The uncalibrated model's DoD shows a narrow whisker range () and a median of −0.000080, reflecting minimal variability (IQR =0.001214) and suggesting underestimation of observed terrain changes. In contrast, in the manually calibrated model, the median shifts to 0.000024 and broadens the IQR to 0.002129, capturing more terrain variability. The manual + IMC approach further refines the model, with a near-zero median (−0.000001) and expanded IQR (0.002826), increasing sensitivity to subtle changes. While observed data display a much wider IQR (0.056000) and whisker range (), reflecting significant erosion, the widened whiskers () in manual + IMC (133.44 % broader than uncalibrated and 33.02 % broader than manual calibration approaches) show improved robustness, thus enabling reflection of real-world geomorphic changes more accurately, improving the sensitivity to natural variations and localized changes in terrain.

Figure 5(a) Comparison of parameter variability across three calibration runs, using standardized standard deviation (SD). Standardized SD represents the z score of the standard deviation of each parameter's repeated experiment (three individual calibration) values. This metric quantifies how many standard deviations a parameter's variability deviates from the mean variability of all parameters, facilitating a direct comparison of consistency and stability among different parameters. Lower standardized SD values indicate parameters with variability below the average, signifying higher consistency, while higher standardized SD values indicate greater variability relative to the repeated experiment average. (b) Comparison of DoD data distribution across various calibration settings of CL parameters. The uncalibrated CL data cluster near zero, demonstrating low accuracy. In contrast, manual calibration – especially when combined with IMC – enhances the results, capturing greater variability in the data.

5.1 Comparison with existing calibration approaches

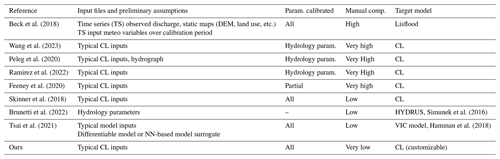

The majority of calibration approaches surveyed so far calibrates for specific and partial parameters only, involves a considerable human effort towards parameter value selection/customization (Wang et al., 2023; Peleg et al., 2020; Ramirez et al., 2022; Feeney et al., 2020), and operates for a particular type of numerical model, e.g., Lisflood (Beck et al., 2018), CAESAR-Lisflood (CL) (Wang et al., 2023; Peleg et al., 2020; Ramirez et al., 2022; Feeney et al., 2020), HYDRUS (Brunetti et al., 2022), and Victoria (Tsai et al., 2021).

The usability and generalizability of a certain approach directly depend on the set of input data required during parameter calibration. The requirement of data in addition to the ones used by the target numerical model increases the complexity to adapt the calibration for different settings and adds a heavy overhead. Table 4 summarizes the differences between the existing calibration approaches for LEMs, specifically CL.

Beck et al. (2018)Wang et al. (2023)Peleg et al. (2020)Ramirez et al. (2022)Feeney et al. (2020)Skinner et al. (2018)Brunetti et al. (2022)Simunek et al. (2016)Tsai et al. (2021)Hamman et al. (2018)

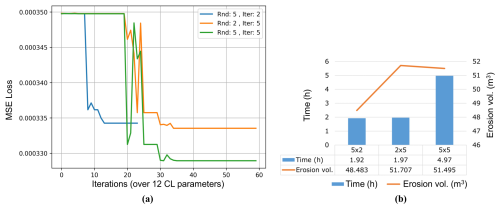

Figure 6Calibration run/duration. Panel (a) shows comparison of error or calibration losses for different lengths of calibration runs ( run for 1 m resolution DEM. Panel (b) shows a side-by-side comparison of the total time taken by different calibration runs and the erosion volume achieved (target being 49.551).

5.2 Experimental analysis

In this section we discuss experiments with different lengths of calibration runs, which are equal to the total rounds () of calibration operated per parameter (see Fig. 6); refer to Sect. 3.1 for an explanation of the terms round and iteration. It is important to understand that the quality of calibration of CL parameters using IMC would be reflected through a couple of quantities: first, the proximity of the predicted and the observed DoDs in terms of the total volume of erosion (numerically). Second, both volumetric and spatial similarity of the erosion and its location of occurrence are quantifiable by the MSE loss.

Moreover, the similarity of total erosion volume of the predicted and observed DoDs/DEMs does not alone guarantee actual similarity, and they still may be far apart if their MSEs are substantially different. This phenomenon can be seen in Fig. 6b, where the calibrations with a shorter calibration (i.e., 2×5 and 5×2) duration have a close enough erosion volume to the observed but show higher MSE. This portrays that the parameter exploration has been inadequate, and due to the selection of sub-optimal parameters, the end resulting erosion volume, though numerically similar, is spatially misplaced or distributed on the surface.

5.3 Further experiments: evaluating IMC's efficiency in CL parameter re-estimation

In this experiment, we want to show how accurate and efficient IMC is at re-estimating known (referred to as benchmark) parameter values for CL software after deliberately changing them. These known parameter values are the default settings provided with the CL software distribution. We use the default CL parameters, the initial DEM (as DEMyear0), and other provided data and create a future (or DEMyearT) DEM. Next, we use these two DEMs to re-estimate the parameters with IMC, starting from their deliberately perturbed versions. We intend to show that IMC can accurately return to the known parameter values.

To ensure the experiment remains both insightful and manageable, we focus on two key parameters: maximum erode limit and lateral erosion rate. They are selected due to CL's pronounced sensitivity to these, as seen in Skinner et al. (2018) and listed in Table 1. In this experiment, we start with producing a target DEM (i.e., DEMyearT, where T=2 years) entirely using CL's default parameters and dataset (provided with distribution, Coulthard et al., 2024). Next, we individually alter each of these two key parameters mentioned earlier, maintaining the rest at their original values. Subsequently, we employed the IMC algorithm to accurately estimate the true values of these parameters from their altered states.

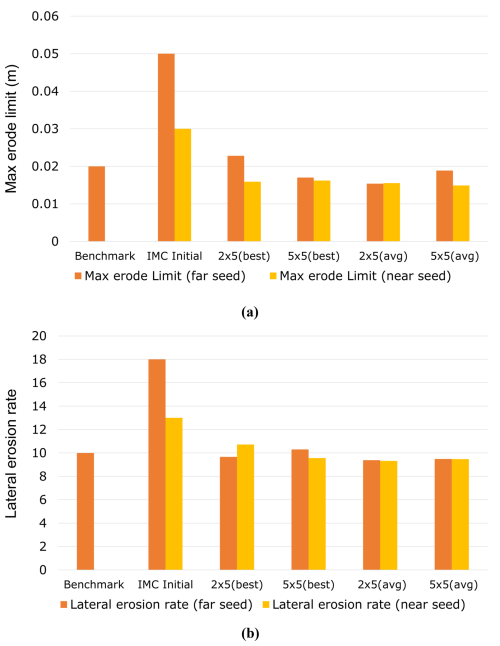

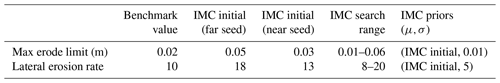

Figure 7Estimating known numerical values (benchmark) of CL parameters from their deliberately perturbed versions. (a) Estimation of max erode limit parameter and (b) estimation of lateral erosion rate parameter. Benchmark refers to the known CL parameter value, and IMC initial is the perturbed version of the same, from where the IMC starts calibrating. The IMC is run at different lengths ( repeatedly, and the best and average (of the three separate calibration runs) of estimated parameter values are presented.

Table 5Calibration data regarding CL known parameter re-estimation experiment, detailed in Sect. 5.3.

The parameters are estimated through individual IMC calibration runs, which are repeated three times to account for the stochastic nature of the process. The mean value of the repeated runs is calculated and presented alongside the best value, which is closest to the observed. Refer to Fig. 7 for a visual representation of these data.

At the beginning of each calibration, we set the values of the maximum erode limit and lateral erosion rate parameters to their respective IMC initial values, which are deliberately perturbed from observed values. We conducted the experiments using IMC initial values selected from positions both proximal (termed near seed) and distal (termed far seed) relative to the observed values of each parameter. This approach was designed to affirm IMC's effectiveness irrespective of the initial proximity of the IMC initial values to their observed counterparts.

The experimental outcomes are detailed in Fig. 7, with corresponding calibration data provided in Table 5. These results illustrate IMC's capability to accurately re-estimate the true values of both the parameters. Specifically, for the maximum erode limit, we observe a minimum absolute error of 0.0028 (), with the best-estimated value being 0.0228 (2×5 (best)) compared to the observed value of 0.02. In the case of the lateral erosion rate, the minimum absolute error recorded was 0.302, where the best-estimated value reached 10.302 (5×5 (best)), closely aligning with the observed value of 10.

The slight deviations in accurately estimating the observed parameter values can potentially be linked to the sensitivity of the MSE loss function to noise, wherein minor discrepancies could be amplified into seemingly larger differences. Moreover, the intricate nonlinear relationship between a parameter in the CL model and its resultant geomorphic output can occasionally lead IMC into local optima traps. These challenges could be mitigated by adopting a tailored loss function specifically designed to capture the complex geomorphological dynamics more effectively. Additionally, incorporating strategies such as stochastic perturbation and advanced optimization techniques may facilitate overcoming the hurdles of local minima, thereby enhancing the fidelity of parameter estimation in geomorphological simulations.

This study introduces a versatile, adaptable, and scalable calibration algorithm for numerical models, demonstrated through its application in calibrating the landscape evolution model CAESAR-Lisflood.

The outcome of this calibration is the generation of geomorphic data for a gully catchment landscape evolution scenario, with significantly closer predictions to observed data compared to uncalibrated and manual approaches.

The proposed calibration technique is adaptable to various numerical models and requires minimal extra input beyond conventional CL inputs. However, it has its limitations. Although erosion volumes are similar to target patterns in both space and volume, discrepancies remain. Specifically, the manual + IMC approach tends to spread erosion volume across the study area in small amounts, affecting calibration precision. Additionally, the calibration process is inherently stochastic, resulting in non-unique, varying parametric vectors across calibration sessions, even under identical conditions. We used mean square error (MSE) for its ease and ability to emphasize large errors, widely applied in areas such as computer vision. However, MSE's equal treatment of all data points overlooks differences in regional importance, potentially resulting in high MSE scores that fail to reflect true perceptual resemblance.

In future work, the development of a custom loss function tailored to intricately capture the dynamic complexities present in geomorphic imagery is proposed. Such advancement aims to refine the measure of similarity between modeled and real landscapes, resulting in a more accurate and precise loss function. This enhancement is anticipated to significantly improve calibration accuracy within geomorphological modeling. It is important to highlight that our IMC framework offers flexibility and can readily accommodate alternative evaluation metrics, should they better suit the user's specific requirements.

However, exploring the applicability and effectiveness of the IMC approach in calibrating other physical or numerical models beyond the CL model warrants investigation. Assessing the IMC method's performance across diverse geomorphic environments, spanning various geographical locations and temporal scales, is crucial. Such comprehensive evaluation will illuminate the strengths and potential limitations of the IMC approach when applied to specific geomorphic contexts or environmental settings. Additionally, it would be intriguing to create a synthetic final landscape or DEM. Investigating how the IMC method autonomously calibrates CL or other numerical geomorphic models to achieve this predetermined end state could offer novel insights into the method's predictive capabilities and its utility in forward modeling geomorphological changes.

A1 Parameter list preparation and value selection

In Table A1, we present the exact structure of the PD file for reference. The names of all the parameters that need to be calibrated are included in the top row. In the second row, we include the names of these parameters as represented in the CL configuration XML file; e.g., the parameter max erode limit is represented using “maxerodelimit”. The next two rows present the numeric upper and lower limits of the IMC search for a certain parameter. Finally, the last two rows present the prior (μ,σ) and (mean, SD) values that define the Gaussian distribution from where the IMC starts its search. The prior (mean), also called the IMC initial values, can be adjusted with the help of values published in the literature. The prior (SD) value is set on intuition and may be updated based on the search space and the scale of values for a certain parameter.

A2 Procedure to set up the calibration

A2.1 Data preparation

The DEMs should be aligned, have the same resolution, and have all no-data values set to −9999. One can use the setnull tool from ArcGis Pro for this purpose. We tested with DEM rasters that have been converted to Esri ASCII text files (with .txt extension).

A2.2 Initializing the calibration

As mentioned before, the XML file serves as a read–write center for the calibration algorithm and the numerical algorithm. Thus, we follow the following two-step process for initiating the calibration process:

-

Prepare your template XML and take care of all warnings. Open a CL (orig.) exe and load the template XML file. Next, browse and select each of the relevant DEM and rainfall time series data files. Finally, save the changes back to the XML template and load the data to check for warnings.

Some parameters also need to be adjusted depending on the data/DEM and the temporal separation between DEM year 0 and DEM year T. Calculate the hour (h) and minute (min) equivalent of the time difference between the two DEMs. Update parameter “Save file every min” with minutes and all other time parameters on the “Files” page on CL (orig.). Next, on the “Numerical” page, update “max run duration” with hours+1. For example, in the case where DEM year 0 (July 2019) and DEM year T (July 2021), i.e., a difference of 3 years, so h=17544 and min=1 052 640.

Resolve all the warnings and exit.

These changes can also be made directly in the template XML file through an XML editor, but using the CL GUI is more efficient and error-free.

-

Use updated template XML. Now this template XML is updated with the relevant file locations and other data relevant to the experiment. It should be placed in the Calibration-alg. package, and calibration may be initiated from the console.

Source code is maintained on GitHub at https://github.com/cbanerji/IMC (last access: 16 December 2024), and the exact version used in this study (including executable code, data, and other relevant files) is archived on Zenodo at https://doi.org/10.5281/zenodo.12747679 (Banerjee, 2024).

CB led the research on the topic and designed and implemented the IMC algorithm, making substantial contributions to the conception and execution of the study. KN, whose vision inspired this work, provided critical insights, played a significant role in writing the manuscript draft, and contributed to substantial revisions that enhanced the intellectual content. CF, GH, and TC significantly contributed through their insightful feedback and thorough review of both the technical content and the overall paper. Their rigorous assessments were crucial in ensuring the accuracy, validity, and robustness of the research article.

The contact author has declared that none of the authors has any competing interests.

Publisher’s note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

This research has been supported by Advance Queensland Industry Research Fellowships (grant no. AQIRF024-2021RD4).

This paper was edited by Andy Wickert and reviewed by Jorge Ramirez and Christopher Feeney.

Abbaspour, K. C., Johnson, C. A., and van Genuchten, M. T.: Estimating uncertain flow and transport parameters using a sequential uncertainty fitting procedure, Vadose Zone J., 3, 1340–1352, https://doi.org/10.2136/vzj2004.1340, 2004. a

Banerjee, C.: Iterative Model Calibration (IMC), Zenodo [code and data set], https://doi.org/10.5281/zenodo.12747679, 2024. a, b

Barnhart, K. R., Tucker, G. E., Doty, S. G., Shobe, C. M., Glade, R. C., Rossi, M. W., and Hill, M. C.: Inverting topography for landscape evolution model process representation: 1. Conceptualization and sensitivity analysis, J. Geophys. Res.-Earth, 125, e2018JF004961, https://doi.org/10.1029/2018JF004961, 2020. a

Beck, H., Hirpa, F. A., Lorini, V., Lorenzo, A., and Tomer, S. K.: LISFLOOD Hydrological Model Calibration Tool, Joint Research Centre (JRC), Princeton; European Commission, https://ec-jrc.github.io/lisflood-calibration/1_introduction/ (last access: 2 January 2024), 2018. a, b, c, d

Becker, R., Koppa, A., Schulz, S., Usman, M., aus der Beek, T., and Schueth, C.: Spatially distributed model calibration of a highly managed hydrological system using remote sensing-derived ET data, J. Hydrol., 577, 123944, https://doi.org/10.1016/j.jhydrol.2019.123944, 2019. a

Beven, K.: A manifesto for the equifinality thesis, J. Hydrol., 320, 18–36, 2006. a

Beven, K. and Binley, A.: The future of distributed models: Model calibration and uncertainty prediction, Hydrol. Process., 6, 279–298, 1992. a

Beven, K. and Freer, J.: Equifinality, data assimilation, and uncertainty estimation in mechanistic modelling of complex environmental systems using the GLUE methodology, J. Hydrol., 249, 11–29, 2001. a

Braun, J. and Willett, S. D.: A very efficient O(n), implicit and parallel method to solve the stream power equation governing fluvial incision and landscape evolution, Geomorphology, 180-181, 170–179, 2013. a

Brunetti, G., Stumpp, C., and Simunek, J.: Balancing exploitation and exploration: A novel hybrid global-local optimization strategy for hydrological model calibration, Environ. Modell. Softw., 150, 105341, https://doi.org/10.1016/j.envsoft.2022.105341, 2022. a, b, c, d

Coulthard, T. J., Kirkby, M. J., and Macklin, M. G.: Modelling geomorphic response to environmental change in an upland catchment, Hydrol. Process., 21, 2895–2913, 2007. a

Coulthard, T. J., Neal, J. C., Bates, P. D., Ramirez, J., de Almeida, G. A., and Hancock, G. R.: CAESAR Lisflood Download-1.9j, Sourceforge [code], https://sourceforge.net/projects/caesar-lisflood/, last access: 28 February 2024. a

ELVIS: ELVIS-Elevation and Depth – Foundation Spatial Data, ELVIS [data set], https://elevation.fsdf.org.au/, last access: 19 January 2024. a, b

Feeney, C. J., Chiverrell, R. C., Smith, H. G., Hooke, J. M., and Cooper, J. R.: Modelling the decadal dynamics of reach-scale river channel evolution and floodplain turnover in CAESAR-Lisflood, Earth Surf. Proc. Land., 45, 1273–1291, 2020. a, b, c, d

Gupta, H. V., Sorooshian, S., and Yapo, P. O.: Toward improved calibration of hydrologic models: Multiple and noncommensurable measures of information, Water Resour. Res., 34, 751–763, 1998. a

Hamman, J. J., Nijssen, B., Bohn, T. J., Gergel, D. R., and Mao, Y.: The Variable Infiltration Capacity model version 5 (VIC-5): infrastructure improvements for new applications and reproducibility, Geosci. Model Dev., 11, 3481–3496, https://doi.org/10.5194/gmd-11-3481-2018, 2018. a

Hancock, G. R. and Willgoose, G. R.: The use of a landscape simulator in the validation of the SIBERIA landscape evolution model: transient landforms, Earth Surf. Proc. Land., 27, 1321–1334, https://doi.org/10.1002/esp.414, 2001. a

Jetten, V., Govers, G., and Hessel, R.: Erosion models: quality of spatial predictions, Hydrol. Process., 17, 887–900, https://doi.org/10.1002/hyp.1131, 2003. a

Lane, S. N., Richards, K. S., and Chandler, J. H.: Landform monitoring, modelling, and analysis, John Wiley & Sons, ISBN 978-0-471-96977-8, 1999. a

McCabe, M., Blancard, B. R.-S., Parker, L. H., Ohana, R., Cranmer, M., Bietti, A., Eickenberg, M., Golkar, S., Krawezik, G., Lanusse, F., Pettee, M., Tesileanu, T., Cho, K., and Ho, S.: Multiple physics pretraining for physical surrogate models, arXiv [preprint], https://doi.org/10.48550/arXiv.2310.02994, 2023. a

Nash, J. and Sutcliffe, J.: River flow forecasting through conceptual models part I – A discussion of principles, J. Hydrol., 10, 282–290, 1970. a

Oreskes, N., Shrader-Frechette, K., and Belitz, K.: Verification, validation, and confirmation of numerical models in the earth sciences, Science, 263, 641–646, 1994. a

Peleg, N., Skinner, C., Fatichi, S., and Molnar, P.: Temperature effects on the spatial structure of heavy rainfall modify catchment hydro-morphological response, Earth Surf. Dynam., 8, 17–36, 2020. a, b, c, d

Phillips, J. D.: Sources of nonlinearity and complexity in geomorphic systems, Prog. Phys. Geog., 27, 1–23, https://doi.org/10.1191/0309133303pp340ra, 2003. a

Ramirez, J. A., Mertin, M., Peleg, N., Horton, P., Skinner, C., Zimmermann, M., and Keiler, M.: Modelling the long-term geomorphic response to check dam failures in an alpine channel with CAESAR-Lisflood, Int. J. Sediment Res., 37, 687–700, 2022. a, b, c, d

Shen, C., Appling, A. P., Gentine, P., Bandai, T., Gupta, H., Tartakovsky, A., Baity-Jesi, M., Fenicia, F., Kifer, D., Li, L., et al.: Differentiable modelling to unify machine learning and physical models for geosciences, Nature Reviews Earth & Environment, 4, 552–567, 2023. a

Simunek, J., Van Genuchten, M. T., and Sejna, M.: Recent developments and applications of the HYDRUS computer software packages, Vadose Zone J., 15, 1–25, https://doi.org/10.2136/vzj2016.04.0033, 2016. a

Skinner, C. J. and Coulthard, T. J.: Testing the sensitivity of the CAESAR-Lisflood landscape evolution model to grid cell size, Earth Surf. Dynam., 11, 695–711, 2023. a

Skinner, C. J., Coulthard, T. J., Schwanghart, W., Van De Wiel, M. J., and Hancock, G.: Global sensitivity analysis of parameter uncertainty in landscape evolution models, Geosci. Model Dev., 11, 4873–4888, https://doi.org/10.5194/gmd-11-4873-2018, 2018. a, b, c, d, e, f

Tang, M., Liu, Y., and Durlofsky, L. J.: A deep-learning-based surrogate model for data assimilation in dynamic subsurface flow problems, J. Comput. Phys., 413, 109456, https://doi.org/10.1016/j.jcp.2020.109456, 2020. a

Temme, A. and Schoorl, J.: Quantitative modeling of landscape evolution, Neth. J. Geosci., 88, 207–224, 2009. a

Tsai, W.-P., Feng, D., Pan, M., Beck, H., Lawson, K., Yang, Y., Liu, J., and Shen, C.: From calibration to parameter learning: Harnessing the scaling effects of big data in geoscientific modeling, Nat. Commun., 12, 5988, https://doi.org/10.1038/s41467-021-26107-z, 2021. a, b, c, d

Tucker, G. and Slingerland, R.: Drainage basin responses to climate change, Water Resour. Res., 33, 2031–2047, https://doi.org/10.1029/97WR00409, 1997. a

Tucker, G. E. and Hancock, G. R.: Modelling landscape evolution, Earth Surf. Proc. Land., 35, 28–50, 2010. a

Wang, D., Wang, M., Liu, K., and Xie, J.: An assessment of short–medium-term interventions using CAESAR-Lisflood in a post-earthquake mountainous area, Nat. Hazards Earth Syst. Sci., 23, 1409–1423, https://doi.org/10.5194/nhess-23-1409-2023, 2023. a, b, c, d

WMIP, Q. G.: Water monitoring information, QLD,, https://water-monitoring.information.qld.gov.au/, last access: 19 January 2024. a, b

- Abstract

- Introduction

- Preliminaries of model calibration

- Iterative Model Calibration (IMC)

- Case study: Calibration of LEMs for predicting gully evolution

- Comparisons and experimental analysis

- Conclusions

- Appendix A

- Code and data availability

- Author contributions

- Competing interests

- Disclaimer

- Financial support

- Review statement

- References

- Abstract

- Introduction

- Preliminaries of model calibration

- Iterative Model Calibration (IMC)

- Case study: Calibration of LEMs for predicting gully evolution

- Comparisons and experimental analysis

- Conclusions

- Appendix A

- Code and data availability

- Author contributions

- Competing interests

- Disclaimer

- Financial support

- Review statement

- References