the Creative Commons Attribution 4.0 License.

Development of a high-resolution coupled SHiELD-MOM6 model – Part 1: Model overview, coupling technique, and validation in a regional setup

Kun Gao

Brandon G. Reichl

Lauren Chilutti

Lucas Harris

Rusty Benson

Niki Zadeh

Jing Chen

Jan-Huey Chen

Cheng Zhang

We present a new high-resolution coupled atmosphere–ocean model, SHiELD-MOM6, which integrates the Geophysical Fluid Dynamics Laboratory (GFDL) advanced atmospheric model, the System for High-resolution modeling for Earth-to-Local Domains (SHiELD), the Modular Ocean Model version 6 (MOM6), and the Sea Ice Simulator (SIS2). The model leverages the Flexible Modeling System (FMS) coupler and its innovative exchange grid to enable a robust and scalable two-way interaction between the atmosphere and ocean. The atmospheric component is built on the non-hydrostatic Finite-Volume Cubed-Sphere Dynamical Core (FV3) with the latest version of the SHiELD physics parameterization suite, while the ocean component is the latest version of MOM, supporting kilometer-scale, high-resolution and regional applications. Validation of this new coupled model is demonstrated through a suite of experiments, including idealized hurricane simulations and a realistic North Atlantic case study featuring Hurricane Helene of 2024. By analyzing the storm intensity, its structure, and its effects on ocean phenomena such as upwelling and sea-level changes, the results reveal that air–sea interactions are effectively captured. Scalability tests further confirm the model's computational efficiency. This work establishes a unified modular cornerstone for advancing high-resolution coupled modeling with significant implications for weather forecasting and climate research.

With increasingly large computing resources, advancing weather and climate models to higher resolutions has become both feasible and a natural evolution in model development. This progression enables a more accurate representation of key processes from the explicit simulation of small-scale convection, which often interacts with larger mesoscale atmospheric dynamics, to essential air–sea exchanges and oceanic processes. These improvements are vital for enhancing forecast skill and refining climate projections. Moreover, accurately simulating weather and climate extremes requires models that capture the full spectrum of underlying physical mechanisms, deepening our understanding of event formation, intensity, and impacts. Such capabilities are essential for comprehensive risk assessment and the development of effective mitigation strategies.

Building on decades of advancements in numerical modeling at the Geophysical Fluid Dynamics Laboratory (GFDL), this work introduces the SHiELD-MOM6 model, a high-resolution coupled system that seamlessly integrates GFDL's advanced atmospheric model, SHiELD, with the Modular Ocean Model version 6 (MOM6) and the Sea Ice Simulator (SIS2). By coupling these components, the model provides a robust framework for investigating air–sea interactions at fine scales, offering new insights into extreme weather events and climate variability by exploring key processes such as tropical cyclone dynamics, storm surges, oceanic heat transport, and sea ice variability.

Coupling the atmospheric and oceanic components presents inherent challenges due to their differing grid geometries, numerical schemes, and time-stepping protocols. To overcome these obstacles, the SHiELD-MOM6 model employs the Flexible Modeling System (FMS) full coupler along with its innovative exchange grid framework (Balaji et al., 2006). This approach facilitates the robust two-way transfer of prognostic variables, fluxes, and key physical processes between the grid of the atmospheric model and that of the ocean model. In doing so, the framework preserves the conservation of mass, heat, and momentum across scales while accurately capturing the feedback mechanisms critical to any physical phenomenon. This coupling technique has been used in GFDL's flagship models such as SPEAR, CM4, and ESM4 (Delworth et al., 2020; Held et al., 2019; Dunne et al., 2020) and their predecessors for many years, demonstrating its reliability in simulating complex earth system processes across scales.

This paper is organized as follows. Section 2 describes the primary components of the model, including detailed overviews of the infrastructure layer and the atmosphere and ocean components. Section 3 outlines the coupling methodology, including the science framework and software infrastructure. This emphasizes the role of the FMS full coupler and exchange grid in coupling the model components and details the fluxes exchanged between atmosphere and ocean. Section 4 presents the validation experiments, discussing both idealized hurricane simulation and a realistic simulation of Hurricane Helene of September 2024. Section 5 details the model scalability and computational performance. Section 6 summarizes the key findings and outlines current and future development efforts.

In this section, we present the main components used to build the SHiELD-MOM6 model. GFDL's infrastructure layer, the Flexible Modeling System (FMS), is presented first. Second is the atmosphere model SHiELD, based on the Finite-Volume Cubed-Sphere Dynamical Core (FV3). Last are GFDL's Modular Ocean Model version 6 (MOM6) and the Sea Ice Simulator version 2 (SIS2). References for each component can be found in the model documentation or through the links in Appendix A.

2.1 Infrastructure layer

The Flexible Modeling System (FMS) was one of the first modeling frameworks developed to facilitate the construction of coupled models and has been under continued development since 1998 at GFDL. It is a software environment that supports the efficient development, construction, execution, and scientific interpretation of atmospheric, oceanic, and climate system models written in Fortran for high performance computing (HPC) systems. This framework allows an efficient development of numerical algorithms and computational tools across various high-end computing architectures using common user-friendly representations of the underlying platforms. It supports distributed and shared memory systems, as well as high-performance architectures. At GFDL, scientific groups can simultaneously develop new physics and algorithms, coordinating periodically through this framework. FMS does not determine model configurations and parameter settings or choose among various options, as these require scientific research. The development of new earth system model components, from a science perspective, lies outside its scope; however, the collaborative software review process for contributed model components remains a vital part of the framework in promoting scientifically sound implementation and model behavior. FMS includes the following:

- Message Passing Interface (MPI) domain decomposition: This provides software infrastructure for the seamless and efficient utilization of MPI libraries for scalable parallel computations.

- Software infrastructure: This provides tools for parallelization, I/O, data exchange between model grids, time-stepping orchestration, makefiles, and sample run scripts, insulating users from machine-specific details.

- Standardized interfaces: This ensures standardized interfaces between component models, coordinates diagnostic calculations, and prepares input data. It includes common pre-processing and post-processing software when necessary.

2.2 Atmosphere components

The atmospheric component model we use in the regional coupled system is the System for High-resolution modeling for Earth-to-Local Domains (SHiELD), which was built and has been continuously developed at GFDL as an advanced model for a broad range of applications (Harris et al., 2020).

SHiELD employs FV3, a non-hydrostatic finite-volume cubed-sphere dynamical core that has been in development at GFDL for almost three decades (Lin and Rood, 1996, 1997; Lin, 2004; Putman and Lin, 2007; Harris and Lin, 2013; Chen et al., 2013; Harris et al., 2016; Mouallem et al., 2022, 2023; Santos et al., 2025). It is used in many weather and climate models for a wide range of applications, from short-term weather forecasts to centuries-long climate simulations, moving nest hurricane forecasts, chemical and aerosol transport modeling, cloud-resolving modeling, and so on (Cheng et al., 2024; Ramstrom et al., 2024; Harris et al., 2023; Bolot et al., 2023; Merlis et al., 2024a, b). FV3 solves the hydrostatic or non-hydrostatic compressible Euler equations on a gnomonic cubed-sphere grid with a Lagrangian vertical coordinate. The algorithm is fully explicit except for fast vertically propagating sound and gravity waves which are solved by the semi-implicit method. The long time step of the solver also serves as the physics time step. Within each long time step, the user can specify the number of vertical remapping loops, during which subcycled tracer advection is performed. Additionally, the number of acoustic time steps per remapping loop can be set, defining an acoustic time step in which sound and gravity wave processes are advanced and thermodynamic variables are advected. Coupling with other components, such as the ocean, will occur at intervals corresponding to a multiple of the long time step (physics time step).
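The nesting of time steps described above can be made concrete with a small sketch. The class and field names below (`TimeStepping`, `n_remap`, `n_acoustic`, `coupling_steps`) are hypothetical and chosen only for illustration; they are not taken from the FV3 or FMS source, and the numerical values in the usage note are arbitrary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimeStepping:
    """Illustrative time-step hierarchy: long step > remapping loop > acoustic step."""

    dt_long: float    # long (physics) time step, in seconds
    n_remap: int      # vertical remapping loops per long time step
    n_acoustic: int   # acoustic time steps per remapping loop

    @property
    def dt_remap(self) -> float:
        # Duration of one vertical remapping loop (tracer advection is subcycled here).
        return self.dt_long / self.n_remap

    @property
    def dt_acoustic(self) -> float:
        # Duration of one acoustic step (sound and gravity waves are advanced here).
        return self.dt_remap / self.n_acoustic

    def coupling_steps(self, dt_coupling: float) -> int:
        """Return how many long steps fit in one coupling interval.

        Raises ValueError if the interval is not a whole multiple of the long step.
        """
        ratio = dt_coupling / self.dt_long
        if ratio < 1 or abs(ratio - round(ratio)) > 1e-9:
            raise ValueError("coupling interval must be a multiple of the long time step")
        return round(ratio)
```

For example, `TimeStepping(dt_long=90.0, n_remap=2, n_acoustic=5)` gives a remapping interval of 45 s and an acoustic step of 9 s, and a 360 s coupling interval then spans four long steps.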

The detailed description of the solver's horizontal and vertical Lagrangian discretizations can be found in Lin and Rood (1996, 1997) and Lin (2004). FV3's numerics are extensively described in the aforementioned references and will not be repeated here. However, its versatility and computational efficiency make it a strong foundation for a variety of atmospheric modeling applications, including high-resolution weather forecasting and climate simulations.

The physics parameterizations in SHiELD were originally adopted from the Global Forecast System (GFS) physics package but have been significantly updated. Currently, we use the GFDL microphysics scheme (Zhou et al., 2019), the eddy-diffusivity mass-flux (EDMF) boundary layer scheme (Zhang et al., 2015), the scale-aware simplified Arakawa–Schubert (SAS) of Han et al. (2017), the Noah land surface model of Ek et al. (2003) or Noah-MP of Niu et al. (2011), and a modified version of the mixed-layer ocean of Pollard et al. (1973). Three major SHiELD configurations are being heavily tested and updated continuously: (a) global SHiELD (Harris et al., 2020; Zhou et al., 2024), (b) T-SHiELD (Gao et al., 2021, 2023), and (c) C-SHiELD (Harris et al., 2019; Kaltenbaugh et al., 2022). SHiELD can also be configured differently depending on the application of interest; for example, S-SHiELD for seasonal to subseasonal prediction is being developed. Notably, all SHiELD configurations share the same codebase, executable, and pre/post-processing tools, adhering to the unified modeling philosophy of “one code, one executable, one workflow”. In this work, we employ a regional configuration of SHiELD based on the limited-area configuration of FV3 (Black et al., 2021), which has been widely utilized in both research and operational settings. This setup has demonstrated skill in providing accurate forecasts of up to 60 h with minimal computational resources (Black et al., 2021).

2.3 Ocean components

The Modular Ocean Model version 6 (MOM6) and the Sea Ice Simulator version 2 (SIS2), developed at GFDL, provide a robust framework for simulating ocean and sea ice processes with high accuracy and computational efficiency (Adcroft et al., 2019). MOM6 employs a finite-volume approach on a C-grid, enabling the conservation of mass, heat, and tracers while allowing for flexibility in resolving complex oceanic features, such as boundary currents, mesoscale eddies, and thermohaline circulations. Its vertical Lagrangian remapping algorithm allows the usage of any vertical coordinate to remap horizontal layers to a Eulerian reference, implicitly resolving advection and effectively eliminating the Courant–Friedrichs–Lewy (CFL) restriction in the vertical direction (similar to FV3) (see Griffies et al., 2020). MOM6 is highly configurable, supporting applications ranging from idealized studies to high-resolution global simulations and earth system models.

SIS2 complements MOM6 by simulating the dynamics and thermodynamics of sea ice, including the growth, melt, deformation, and ridging processes of ice. It incorporates advanced parameterizations to model sea ice interactions with the ocean and atmosphere, such as brine rejection, surface albedo, and momentum fluxes. These capabilities allow SIS2 to capture the essential feedback mechanisms between sea ice, ocean circulation, and atmospheric forcing.

The coupling of MOM6 and SIS2 through the FMS framework enables the seamless integration of ocean and sea ice dynamics with atmospheric processes. The exchange grid facilitates conservative and accurate flux exchanges, ensuring a realistic representation of interfacial processes, such as heat and momentum transfer. The inclusion of SIS2 enhances the model's ability to simulate polar and high-latitude phenomena, such as sea ice extent variability and its impact.

The physical model configuration of MOM6 closely follows that of Adcroft et al. (2019). We make a few changes, shifting from a hybrid vertical coordinate designed for climate simulation to a telescoping-resolution z* vertical coordinate that yields relatively fine grid spacing in the upper ocean (see Reichl et al., 2024, their Fig. 1). The surface vertical grid spacing is 2 m in this configuration, increasing to 10 m at about 100 m depth. The ocean initial condition imposes a 20 m mixed-layer depth everywhere, with a 31 °C mixed-layer temperature and a gradient of 0.05 °C m−1 below. Vertical mixing in the ocean surface boundary layer is described by Reichl and Hallberg (2018), including a wind-speed-dependent Langmuir turbulence parameterization following Reichl and Li (2019) and Li et al. (2017). Stratified shear-driven mixing is parameterized following Jackson et al. (2008). While MOM6 can be configured to work with open boundary conditions, we do not employ them since they have little impact on the simulations presented here.
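As a reading aid, the idealized initial temperature profile can be written as a piecewise function of depth. This is a minimal sketch, not MOM6 code: the function name is hypothetical, and it assumes that the stated gradient means temperature decreases linearly with depth below the mixed layer.

```python
MIXED_LAYER_DEPTH_M = 20.0      # initial mixed-layer depth (from the text)
MIXED_LAYER_TEMP_C = 31.0       # initial mixed-layer temperature (from the text)
GRADIENT_C_PER_M = 0.05         # temperature gradient below the mixed layer (from the text)


def initial_temperature(depth_m: float) -> float:
    """Return the initial temperature (deg C) at a given depth (m, positive downward).

    Assumption: temperature is uniform in the mixed layer and decreases
    linearly with depth below it.
    """
    if depth_m < 0.0:
        raise ValueError("depth must be non-negative")
    if depth_m <= MIXED_LAYER_DEPTH_M:
        return MIXED_LAYER_TEMP_C
    return MIXED_LAYER_TEMP_C - GRADIENT_C_PER_M * (depth_m - MIXED_LAYER_DEPTH_M)
```

Under this assumption, water 100 m below the base of the mixed layer starts 5 °C colder than the surface, which is the reservoir that mixing and upwelling can later bring toward the surface.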

This section outlines the coupling methodology. The first subsection thoroughly examines the variables exchanged that underpin the primary physical processes essential to the coupling procedure. The second subsection details the technical framework and implementation strategy that facilitate an efficient exchange and interaction among model components. It is worth mentioning that the land component is still coupled through the SHiELD physics suite rather than at the full coupler level. Work is currently in progress to integrate GFDL's latest land model at the coupler level.

3.1 Physical processes

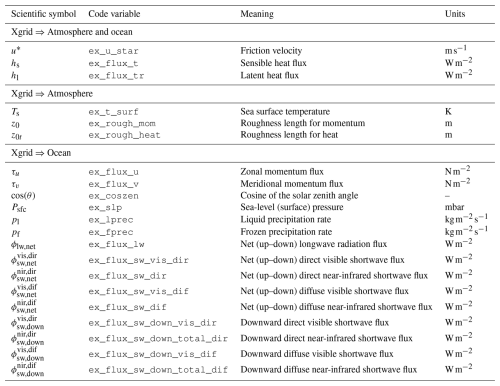

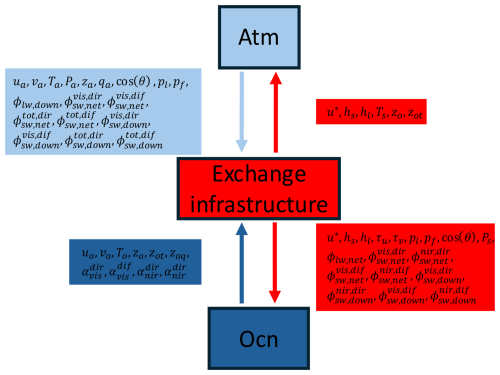

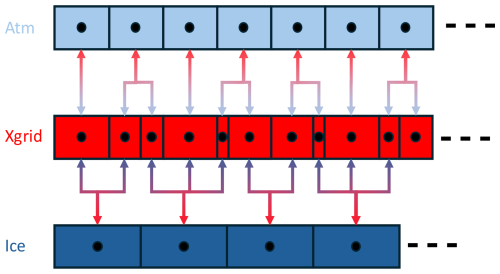

As discussed in the previous section, any variable or parameter can be projected between the native grids of model components and the exchange grid. In the current model, several dynamic and physical variables from both the atmosphere and ocean are mapped onto the exchange grid (called Xgrid hereafter), where relevant quantities are computed and then projected back to each component, as shown in Fig. 1.

Figure 1. Key variable exchanges through the atmosphere, exchange grid, and ocean components. A full description is shown in Tables 1 and 2.

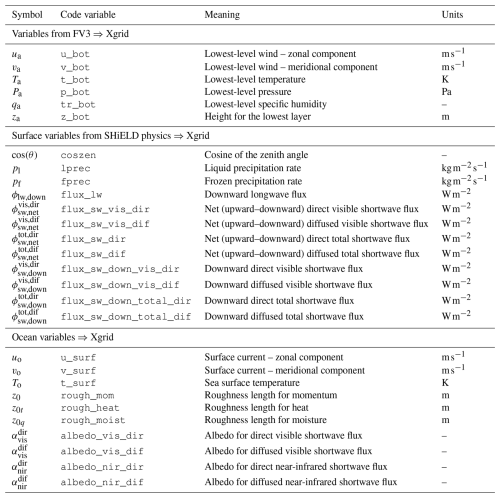

Table 1 details the atmosphere- and ocean-related variables projected onto the exchange grid. Atmosphere variables are categorized into dynamic and physics variables. The dynamic variables from FV3 include wind components at the lowest level, surface pressure, temperature, sea-level pressure, lower-layer height, and tracer data. The physics variables, which reflect surface-level outputs from the model's physics suite, encompass different shortwave and longwave radiation fluxes, liquid and frozen precipitation rates, and the cosine of the zenith angle. The ocean-related variables include surface parameters (at the shallowest model level) such as surface current components, sea surface temperature, and various roughness factors for momentum, heat, and moisture. In addition, albedos and their directional fluxes in both visible and near-infrared wavelengths are passed through the coupler, which are crucial for the energy balance between the ocean surface and the atmosphere.

Table 1. Summary of the key atmosphere and ocean variables projected onto the Xgrid. Atmosphere variables are categorized into dynamic variables (output from the FV3 dynamical core) and physics variables (surface-level outputs from the physics suite).

In the GFDL FMS coupler (described in the following section), air–sea fluxes of momentum, heat, and moisture (u*, Hs, Hl) are computed using a bulk aerodynamic method. These fluxes are essential for transferring energy and mass between the atmosphere and ocean components, such as SHiELD and MOM6. At the heart of this calculation is the Monin–Obukhov similarity theory (MOST), which accounts for the impact of atmospheric stability on the surface exchange coefficients for momentum, heat, and moisture (Held, 2001). These coefficients are then used in the bulk formulas to calculate the fluxes, based on input fields like near-surface air properties, sea surface temperature, and surface ocean currents.
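The general shape of a bulk aerodynamic calculation is sketched below. This is not the FMS implementation: the exchange coefficients are held constant here purely for illustration, whereas the coupler derives them from Monin–Obukhov similarity theory as a function of stability. The function name, argument names, constants, and default coefficient values are all assumptions, and fluxes are taken as positive from ocean to atmosphere.

```python
import math

RHO_AIR = 1.2        # air density, kg m-3 (assumed constant)
CP_AIR = 1004.0      # specific heat of air, J kg-1 K-1
L_VAP = 2.5e6        # latent heat of vaporization, J kg-1


def bulk_fluxes(u_air, v_air, u_ocn, v_ocn, t_air, sst, q_air, q_sat_sst,
                cd=1.2e-3, ch=1.1e-3, ce=1.1e-3):
    """Bulk air-sea fluxes with constant exchange coefficients (illustrative only).

    Winds and currents are in m s-1, temperatures in K, humidities in kg kg-1.
    The wind is taken relative to the surface current.
    """
    du = u_air - u_ocn
    dv = v_air - v_ocn
    speed = math.hypot(du, dv)                 # relative wind speed
    return {
        "tau_x": RHO_AIR * cd * speed * du,    # zonal wind stress, N m-2
        "tau_y": RHO_AIR * cd * speed * dv,    # meridional wind stress, N m-2
        "u_star": math.sqrt(cd) * speed,       # friction velocity, m s-1
        "sensible": RHO_AIR * CP_AIR * ch * speed * (sst - t_air),    # W m-2
        "latent": RHO_AIR * L_VAP * ce * speed * (q_sat_sst - q_air),  # W m-2
    }
```

Because every flux scales with the relative wind speed and with an air–sea difference, a cooler sea surface directly reduces the sensible and latent heat supplied to the atmosphere, which is the feedback examined in the validation experiments.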

Table 2 summarizes the variables computed on the exchange grid and projected back to the atmosphere and ocean. For the atmosphere, this includes friction velocity and ocean surface temperature; sensible and latent heat fluxes; and roughness lengths for momentum, heat, and moisture. For the ocean, sensible and latent heat fluxes, friction velocity, zonal and meridional momentum fluxes, precipitation rates, and several shortwave and longwave fluxes are considered.

3.2 Software framework

The SHiELD_build system was initially developed by the Modeling Systems Division (MSD) at GFDL in 2015. It was then known as the fv3GFS_build system before being renamed to SHiELD_build in 2020. It employed a simplified version of the FMS coupler, which is used by other GFDL models such as SPEAR, CM4, and ESM4. The official repository on GitHub has been actively maintained, and today it supports various model workflows, including a SOLO core FV3; SHiELD; SHiELD employing the full coupler; SHiELD and MOM6; and SHiELD, MOM6, and WaveWatch III (under current development). The system offers multiple compilation modes and compiler options based on model configurations, including Intel, GNU, and NVHPC compilers. The main workflow is to compile model components into libraries, starting from the underlying infrastructure layer, such as the NCEP and FMS libraries, and proceeding to the other model components, such as the atmosphere and ocean, which are then linked through the FMS coupler to produce the final executable. For a code overview, please refer to Appendix A.

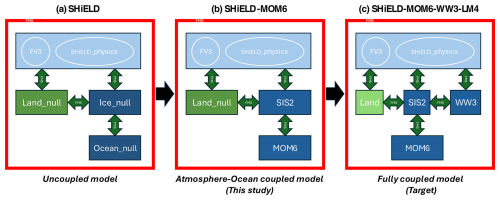

The FMS full coupler is a fundamental infrastructure layer serving as the main program driver and coupling component models: atmosphere, ocean, ice, and land. All GFDL models utilize this driver, even those running an individual model component such as AMIP (Atmospheric Model Intercomparison Project) or OMIP (Ocean Model Intercomparison Project) configurations. For example, in an AMIP configuration, the atmospheric model is run using observed or forced sea surface temperatures and sea ice as boundary conditions, without coupling to a physically based ocean model that integrates in time. This setup is used to assess the performance of atmospheric models and to understand how the simulated climate responds to the prescribed conditions. The FMS full coupler supports this configuration by utilizing null modules for ocean, ice, and land. An illustrative schematic is shown in Fig. 2. The FV3 dynamical core computes prognostic dynamic quantities in the atmosphere; SHiELD_physics drives the physics tendencies from radiation, planetary boundary layer (PBL), precipitation, etc.; and FMS represents the libraries described in Sect. 2.1. The other null components, including ocean_null, ice_null and land_null, represent no-op modules to satisfy the full coupler requirements. For an OMIP configuration, the null modules of the ocean and ice are replaced by the corresponding source codes of the ocean and ice, accompanied by a null module for the atmosphere.

Figure 2. Schematic of the SHiELD model component infrastructure, showing the configuration utilizing the FMS full coupler (a), the updated configuration incorporating MOM6 and SIS2 for the ocean and ice components (b), and the future target model including land and wave model components (c). Red components represent the FMS infrastructure layer, and striped diagonal boxes represent null components.
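The role of null components can be illustrated with a toy driver. The sketch below is written in Python for brevity and does not reproduce the FMS coupler interfaces, which are Fortran; the class names `NullOcean` and `SlabOcean`, the `run_driver` function, and the simple relaxation used for the heat flux are all hypothetical. The point is only that the driver calls the same interface whether the ocean is a no-op with a prescribed SST or a component that evolves in time.

```python
RHO_WATER = 1025.0   # sea water density, kg m-3 (assumed)
CP_WATER = 3990.0    # sea water specific heat, J kg-1 K-1 (assumed)


class NullOcean:
    """No-op ocean: satisfies the driver interface and keeps a prescribed SST."""

    def __init__(self, sst):
        self.sst = sst

    def step(self, heat_flux_into_ocean, dt):
        pass  # nothing evolves; boundary conditions stay as prescribed


class SlabOcean:
    """Toy stand-in for a dynamic ocean: a slab whose SST responds to heat flux."""

    def __init__(self, sst, depth_m=20.0):
        self.sst = sst
        self.depth_m = depth_m

    def step(self, heat_flux_into_ocean, dt):
        self.sst += heat_flux_into_ocean * dt / (RHO_WATER * CP_WATER * self.depth_m)


def run_driver(ocean, n_steps, dt, t_air=300.0, exchange=50.0):
    """Advance the ocean with a flux relaxing SST toward a fixed air temperature."""
    for _ in range(n_steps):
        heat_flux_into_ocean = -exchange * (ocean.sst - t_air)  # W m-2
        ocean.step(heat_flux_into_ocean, dt)
    return ocean.sst
```

With `NullOcean` the SST returned by the driver is unchanged, which mirrors an AMIP-style run; swapping in `SlabOcean` lets the surface cool without any change to the driver loop.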

It should be noted that, previously, SHiELD did not utilize the FMS full coupler; however, it has now been fully integrated with other GFDL models using the complete FMS coupler infrastructure, as detailed in Mouallem (2024).

In the coupled SHiELD and MOM6/SIS2, the ocean and ice null components are replaced by the MOM6 and SIS2 source codes, fulfilling the coupler requirements for the ocean and ice modules. This process is illustrated in the center schematic of Fig. 2. To achieve a consistent two-way coupling between the atmosphere and ocean, dynamic variables from FV3 and physics variables from SHiELD's physics must be accurately passed from the atmosphere to the exchange grid; meanwhile, ocean variables projected onto the exchange grid must be properly passed into the atmosphere dynamics and physics suite. The ultimate goal is to develop a fully coupled model, including comprehensive components of the land and wave models, extending the setup presented in this paper.

It is important to note that the ice model, represented by SIS2 here, is required for the full coupler to enable complete ocean–atmosphere coupling, as atmospheric fluxes projected onto the exchange grid must first pass through the ice layer before reaching the ocean component and vice versa. Consequently, from an infrastructure standpoint, SIS2 is included in this setup even though no ice is present in the simulations shown later on. Additionally, achieving a fully realistic configuration including realistic ice will require further development.

This design choice reflects the broader architecture of the GFDL coupled model framework, where the atmosphere communicates with the land and sea ice components via the exchange grid. It is worth noting that the ice and ocean run on the same grid, eliminating the need for interpolation when exchanging fluxes between them. From a physical standpoint, when sea ice is present, it acts as a barrier between the atmosphere and ocean (modulating fluxes). Therefore, atmospheric fluxes have to pass through this layer before being passed to the ocean and vice versa. This ensures a realistic representation of air–ice–ocean interactions. To keep the algorithm simple and consistent, the same flux route is used even over open water; in this case, however, there are no physical atmosphere–ice or ice–ocean interactions. This approach keeps the algorithm consistent without introducing additional computational cost.

The GFDL exchange grid has been a main component of FMS used to facilitate data exchange between different model components, obeying scientific principles and maximizing computing efficiency (Balaji et al., 2006). Each component is discretized in a different way on a different grid depending on the science and computational requirements; for example, FV3 employs a cubed sphere grid, and the ice and ocean utilize a tripolar grid. Figure 3 presents a schematic representation of the exchange process between the atmosphere and ice. The process begins with projecting relevant variables onto the exchange grid: lower-layer atmospheric variables and surface-layer ocean variables, as indicated by the light-blue and mauve sides of the double arrows for the atmosphere and ice, respectively. Next, fluxes and physical processes within the surface boundary layer are computed on this intermediate grid. Finally, the updated quantities are mapped back to their respective native grids, with the projection directions represented by the red side of the double arrow. This coupling framework is highly flexible, allowing flux exchanges to occur at a user-defined time step, which should be a multiple of the model component time steps. Notably, the exchange process maintains conservation properties, making it suitable for applications ranging from short-term weather forecasting to long-term climate prediction.

Figure 3. Schematic of a one-dimensional exchange grid and communication map between the atmosphere and ice components at different resolutions. The red sides of the arrow indicate the step where variables are projected from the exchange grid. The light-blue and mauve sides of the arrows represent the projection of variables onto the exchange grid from the atmosphere or ice components, respectively.
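The one-dimensional picture can be reproduced with a few lines of code. This is a minimal sketch of the exchange-grid idea, not the FMS implementation: the function names are hypothetical, both grids are assumed to cover the same interval, and fields are treated as piecewise constant within each cell. Exchange cells are the overlaps between cells of the two parent grids; fluxes are computed per exchange cell and averaged back with overlap lengths as weights, which preserves the integrated flux on both parent grids.

```python
def build_exchange_grid(edges_a, edges_b):
    """Return exchange cells as (index_a, index_b, overlap_length) tuples."""
    cells = []
    for i in range(len(edges_a) - 1):
        for j in range(len(edges_b) - 1):
            lo = max(edges_a[i], edges_b[j])
            hi = min(edges_a[i + 1], edges_b[j + 1])
            if hi > lo:
                cells.append((i, j, hi - lo))
    return cells


def from_exchange_grid(x_values, x_cells, side, n_cells):
    """Length-weighted average of exchange-cell values onto a parent grid.

    side = 0 maps to grid A, side = 1 maps to grid B.
    """
    total = [0.0] * n_cells
    length = [0.0] * n_cells
    for value, cell in zip(x_values, x_cells):
        k = cell[side]
        total[k] += value * cell[2]
        length[k] += cell[2]
    return [t / l for t, l in zip(total, length)]


def integral(values, edges):
    """Integrate a piecewise-constant field over its grid."""
    return sum(v * (edges[k + 1] - edges[k]) for k, v in enumerate(values))


# Two non-nested grids over the same interval (arbitrary illustrative values).
atm_edges, ocn_edges = [0.0, 3.0, 6.0], [0.0, 2.0, 4.0, 6.0]
t_air, sst = [300.0, 298.0], [302.0, 301.0, 299.0]

x_cells = build_exchange_grid(atm_edges, ocn_edges)
# A toy flux proportional to the air-sea temperature difference, per exchange cell.
x_flux = [10.0 * (sst[j] - t_air[i]) for i, j, _ in x_cells]

flux_on_atm = from_exchange_grid(x_flux, x_cells, 0, len(t_air))
flux_on_ocn = from_exchange_grid(x_flux, x_cells, 1, len(sst))
```

Integrating `flux_on_atm` over the atmosphere grid and `flux_on_ocn` over the ocean grid gives the same total, which is the conservation property the exchange grid is designed to provide.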

4.1 Idealized doubly periodic simulations

We perform idealized simulations of an axisymmetric hurricane following the initial condition of Reed and Jablonowski (2012). No steering flow is prescribed and a constant f-plane is imposed over the whole domain. The computational domain is similar to that of Gao et al. (2024), employing a square doubly periodic domain of 1000 km × 1000 km and a resolution of 2 km centered at 20° N. Different from Gao et al. (2024), we do not employ telescopic nesting and only consider the top parent grid as the computational domain. The initial vortex has a maximum wind of 20 m s−1 at 125 km radius. The physical parameterization is consistent with the nested domain of the T-SHiELD configuration, as in Gao et al. (2021, 2023). We use the GFDL single-moment five-category microphysics scheme following Zhou et al. (2022); a turbulent kinetic energy-based eddy diffusivity mass flux (TKE-EDMF) boundary layer scheme, as in Han and Bretherton (2019); the Rapid Radiative Transfer Model for General Circulation Models radiation scheme, described in Iacono et al. (2008); and the scale-aware deep and shallow convection parameterizations in Han et al. (2017). For simplicity, and to validate the coupling workflow, we employ a matching ocean grid in terms of domain size and resolution. The ocean model configuration is as described in Sect. 2.3.
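Two numbers implied by this setup are easy to derive: the constant Coriolis parameter of an f-plane at 20° N and the number of grid cells along each side of the domain. The sketch below uses the standard definition f = 2Ω sin(φ); the function names are hypothetical, and the value of Earth's rotation rate is a commonly used constant rather than one quoted in the text.

```python
import math

OMEGA = 7.292e-5  # Earth's rotation rate, rad s-1 (standard value, assumed here)


def coriolis_parameter(lat_deg: float) -> float:
    """Coriolis parameter f = 2 * Omega * sin(latitude), in s-1."""
    return 2.0 * OMEGA * math.sin(math.radians(lat_deg))


def cells_per_side(domain_km: float, dx_km: float) -> int:
    """Number of grid cells along one side of a square domain."""
    n = domain_km / dx_km
    if abs(n - round(n)) > 1e-9:
        raise ValueError("domain size must be a whole multiple of the grid spacing")
    return round(n)
```

Calling `coriolis_parameter(20.0)` gives roughly 5 × 10−5 s−1, and `cells_per_side(1000.0, 2.0)` gives 500 cells per side for both the atmosphere and the matching ocean grid.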

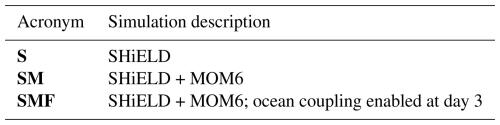

First, we run the standalone SHiELD model for 9 d, with the results denoted as S in Table 3. The second case, SM, couples SHiELD with MOM6 throughout the simulation. In the third case, we initially run the standalone SHiELD model for 3 d to spin up the tropical cyclone (TC) close to the rapid intensification phase, then introduce a dynamic MOM6 ocean just as the storm approaches full intensity. This case is referred to as SMF.

Table 3. Acronyms and descriptions for the three simulations: standalone SHiELD, SHiELD coupled with MOM6, and SHiELD and MOM6 restarted from standalone SHiELD on day 3.

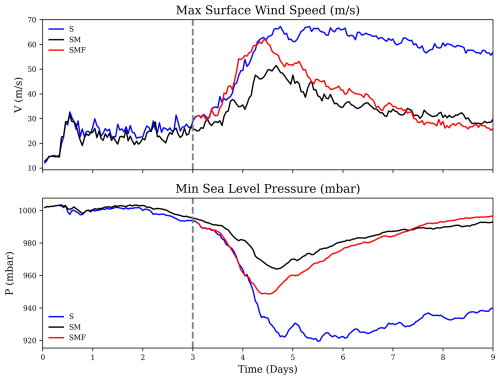

Figure 4 shows the time evolution of the maximum surface wind speed and minimum sea-level pressure for the simulations listed in Table 3. As observed, there is an initial transient period of approximately 3 d, after which the hurricane intensifies, reaching its peak just before day 5, as indicated by the S curve. In the SM simulation, dynamic ocean coupling enables surface cooling beneath the storm, which limits heat and enthalpy fluxes into the atmosphere and ultimately weakens the storm. In contrast, the S case uses a prescribed constant sea surface temperature, preventing ocean cooling and thus sustaining a stronger storm. Case SMF initiates from case S at day 3 and slowly converges to case SM just before the 6th day. This demonstrates the progressive adjustment of the coupled system, highlighting the role of air–sea interactions in regulating storm intensity and further validating the atmosphere–ocean coupling mechanism as the system evolves toward a dynamically consistent state dictated by energy exchanges between the hurricane and the ocean.

Figure 4. Time series of simulated maximum surface wind speed and minimum sea-level pressure for the simulations S, SM, and SMF, listed in Table 3.

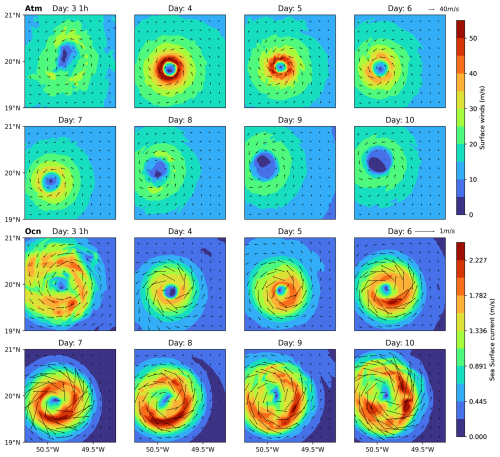

Figure 5 presents the time evolution of surface winds (top two rows) and sea surface currents (bottom two rows) from days 3 to 10 for the SMF simulation. The atmospheric response exhibits an intensifying hurricane, with maximum surface wind speeds peaking between day 4 and day 5, followed by a gradual weakening. The wind field structure maintains a well-defined circulation throughout the simulation, with a distinct eye forming during peak intensity. In the ocean response, strong surface currents develop in conjunction with the atmospheric forcing, with peak currents observed starting on the 7th day. The currents exhibit a cyclonic structure, intensifying in response to the storm's wind stress and progressively evolving as the system reaches a more dynamically coupled state. The emergence of asymmetries in the ocean currents after day 7 highlights the increasing role of oceanic processes such as eddy formation and energy dissipation. As expected, due to the Coriolis effect, these currents are deflected to the right, resulting in a net outward flow away from the center. This outward transport induces upwelling, bringing colder water from deeper layers to the surface. Combined with wind-induced vertical mixing in the ocean surface boundary layer, this leads to a cooling effect observable in the temperature profile shown next. This figure underscores the strong two-way interaction between the atmosphere and ocean, where momentum and energy exchange drive the evolution of both the storm and the oceanic circulation.

Figure 5. Two-dimensional time evolution snapshots of surface winds and sea surface currents for simulation SMF. Black arrows correspond to localized velocity vectors.

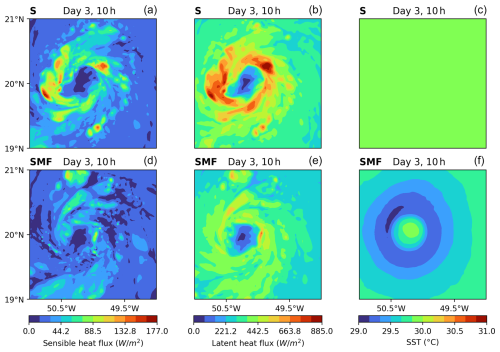

Figure 6 shows two-dimensional snapshots of sensible and latent heat fluxes and sea surface temperature (SST) at t=10 h into day 3 for simulations S and SMF. The top row corresponds to S, where the SST is held constant throughout the simulation, while the bottom row represents SMF, which includes ocean feedback mechanisms. Both the sensible and latent heat fluxes are more intense in S than in SMF. This difference is primarily attributed to the prescribed constant SST in S, which maintains a sustained and continuous supply of heat to the atmosphere. In contrast, SMF shows a reduction in both fluxes due to SST cooling induced by oceanic upwelling, which brings colder subsurface water to the surface. The cooling effect is clear in the SST panel for SMF, where a well-defined cold wake forms beneath the storm. This behavior is discussed in the subsequent figures.

Figure 6. Two-dimensional snapshots of sensible and latent heat fluxes and sea surface temperature at t=10 h into day 3 for simulations S (a–c) and SMF (d–f).

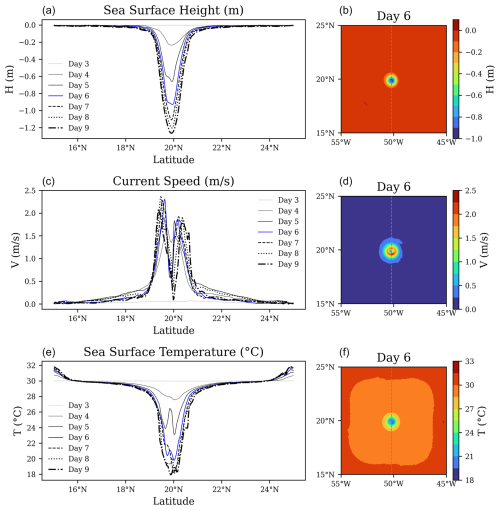

Figure 7 illustrates the ocean response to the hurricane from days 3 to 9, showing the daily evolution of key surface variables: sea surface height (SSH), current speed, and sea surface temperature (SST) in a latitudinal cross-section at the storm's center. The left panels depict the temporal evolution of these variables on each day, while the right panels show spatial snapshots on day 6. The SSH (top panel) exhibits a pronounced depression at the storm's center, which deepens over time in response to the intensifying low pressure, mirroring the hurricane's intensification phase. The middle panel shows the evolution of surface current speed, with peak currents forming near the storm's core and intensifying through day 9. The bottom panel illustrates the SST response, where significant cooling is observed beneath the storm, driven by wind-induced mixing and upwelling of colder subsurface water. The strong correlation between SSH, current speed, and SST highlights the dynamic coupling between the ocean and the atmosphere, reinforcing the role of ocean feedback in modulating storm intensity.

Figure 7. Time evolution of sea surface height (a, b), sea surface current speed (c, d), and sea surface temperature (e, f) along the latitude section passed by the storm center in the SMF domain (a, c, e) from day 3 to day 9. Corresponding two-dimensional spatial snapshots of these variables on day 6 are shown on the right (b, d, f).

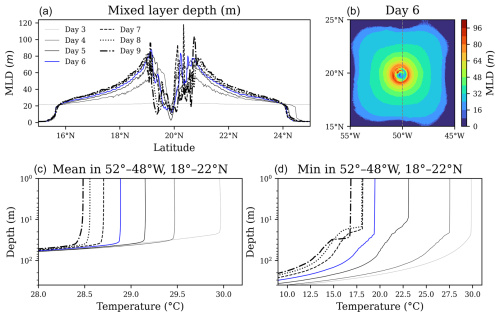

Figure 8 illustrates the evolution of two key subsurface quantities, the ocean mixed-layer depth (MLD) and temperature, in response to the simulated hurricane. The mixed layer deepens progressively as the storm intensifies, with the most pronounced deepening occurring at the storm's core around days 8 and 9. This indicates strong vertical mixing induced by hurricane-driven wind stress and turbulent processes. The top-right panel provides a spatial snapshot of MLD on day 6, revealing a well-defined radial structure with the deepest mixing concentrated near the storm's center.

Figure 8. (a, b) Time evolution of the mixed-layer depth along a central latitude cross-section from day 3 to day 9, with a two-dimensional spatial snapshot of day 6 shown on the right. (c, d) The ocean temperature minimum and mean as a function of depth from day 3 to day 9.

The bottom panel represents the subsurface ocean temperature minimum and mean as a function of depth and time for a 4° box section at the domain's center. A progressive subsurface cooling is evident for both quantities, with the thermocline deepening over time as mixing entrains colder subsurface water. This further explains the evolution of the ocean surface cooling trend seen in the previous figures.

4.2 Realistic simulations

In this section, we consider a realistic domain spanning the North Atlantic region and simulate Hurricane Helene to analyze the ocean response. The model is initialized at 00:00Z on 26 September 2024, when Hurricane Helene was still a Category 1 hurricane before undergoing rapid intensification to a Category 4 storm. The atmospheric initial conditions are taken from a GFS analysis. Two cases are considered: in the first, the ocean is initialized at rest with a constant SST, while in the second, the ocean is initialized from the GLORYS dataset (GLORYS12V1, 2018; Lellouche et al., 2021). The atmosphere component runs at a 1 km resolution, while the ocean component runs at a 3 km resolution to further validate the coupling process. The land surface model employed is NOAH-MP, which serves as the default land model in SHiELD. It is worth noting again that the coupling to the land model here is done through the SHiELD_physics suite and not at the full coupler level.

The goal of this analysis is to assess the coupling framework's performance qualitatively rather than to provide an accurate forecast of Helene's track and intensity, particularly in the first case. By using a quiescent ocean with constant SST, we can isolate the atmospheric effects on the ocean and evaluate the coupled model's behavior without additional environmental complexities.

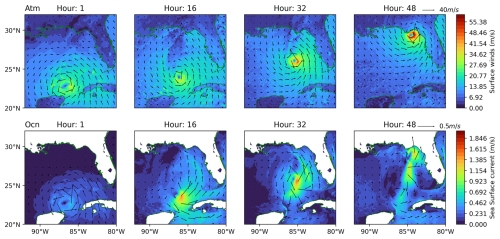

Figure 9 illustrates the interaction between atmospheric surface winds (top row) and ocean surface currents (bottom row). Initially, a symmetric wind field surrounds the storm, but as the hurricane intensifies, wind asymmetries emerge, particularly near the coastline. The strong hurricane surface winds generate rapid responses in the ocean, leading to the formation of strong currents. The ocean surface currents display a classic hurricane-induced structure (Bender et al., 1993), with intensified flow on the right-hand side of the storm track due to inertial resonance (Price, 1981). As the storm moves northward, ocean currents become more pronounced along the continental shelf, highlighting the role of bathymetric effects in modulating the ocean response.

Figure 9. Two-dimensional time evolution snapshots of surface winds and sea surface currents for Hurricane Helene with an idealized ocean. Black arrows correspond to localized velocity vectors.

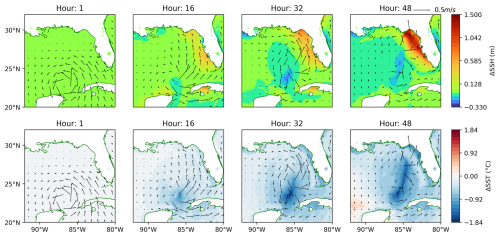

Figure 10 presents the evolution of SSH and SST in response to the hurricane at 16 h intervals, shown relative to their initial values. The SSH panels (top row) indicate a developing storm surge along the northern Gulf Coast, with significant sea-level anomalies forming at the storm center. Over time, the SSH field shows a pronounced dip directly under the storm, corresponding to the atmospheric pressure drop as seen in the idealized test case, while coastal regions experience positive SSH anomalies indicative of a storm surge. The SST panels (bottom row) reveal strong ocean cooling along the hurricane's path, driven by wind-induced mixing and upwelling. This cooling intensifies as the storm strengthens, particularly in regions where wind stress is highest, which is also in line with the behavior seen in the idealized test case.

Figure 10. Two-dimensional time evolution snapshots of sea surface height (top) and temperature (bottom) compared to their initial values for Hurricane Helene with an idealized ocean. Black arrows correspond to localized velocity vectors.

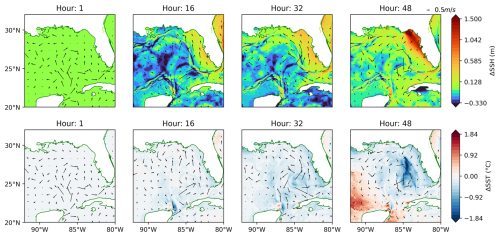

Figure 11 shows the evolution of the SSH and SST anomalies from a GLORYS-initialized ocean in response to the same atmosphere. Compared to the idealized case shown in Fig. 10, the realistic ocean features mesoscale structures that influence the spatial patterns of both SSH and SST anomalies. The SSH response still exhibits a developing storm surge along the northern Gulf Coast. SST changes are still dominated by wind-driven mixing and upwelling and exhibit a cooling pattern along the hurricane's path. These responses are consistent with those observed in the idealized ocean setup. A more detailed comparative analysis is beyond the scope of this study. Overall, these results demonstrate that the coupling framework performs reliably in a realistic setting, reproducing key oceanic responses to hurricane forcing.

Figure 11. As for Fig. 10 but for a realistic ocean.

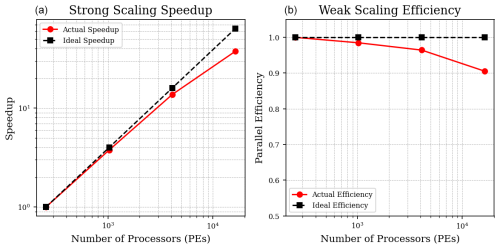

Parallel efficiency is a key factor for the performance of coupled ocean–atmosphere models, particularly when simulating large-scale high-resolution problems. There are already reports on the scalability of standalone SHiELD and standalone MOM6. In this section, we investigate the parallel efficiency of the coupled model SHiELD-MOM6, focusing on three main objectives: (a) validating the scalability of the full coupled model, (b) demonstrating that the FMS coupler and exchange grid can effectively handle massively parallel simulations, and (c) assessing the additional computational overhead of the coupling process.

We evaluate the parallel speedup of the SHiELD-MOM6 system under varying numbers of CPU cores. Strong scaling is investigated by simulating a fixed-size problem with different core configurations, and weak scaling by simulating increasingly larger problems while maintaining a constant number of grid cells per processing element.
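The two scaling measures can be written explicitly. The definitions below are the standard ones and are stated here as an assumption, since the text does not give formulas. The symbols are introduced for illustration only: T(p) is the wall-clock time on p processing elements and p_0 is the smallest PE count, used as the reference.

```latex
% Strong scaling: total problem size is fixed while p increases
S(p) = \frac{T(p_0)}{T(p)}, \qquad
S_{\mathrm{ideal}}(p) = \frac{p}{p_0}, \qquad
E_{\mathrm{strong}}(p) = \frac{p_0\,T(p_0)}{p\,T(p)}

% Weak scaling: grid cells per PE are fixed, so total size grows with p
E_{\mathrm{weak}}(p) = \frac{T(p_0)}{T(p)}
```

Under these definitions, ideal strong scaling gives a speedup that grows linearly with p, while ideal weak scaling keeps the run time constant, so both efficiencies equal 1.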

These tests were performed on the supercomputer GAEA, operated by the National Climate-Computing Research Center located at the Oak Ridge National Laboratory (ORNL). The C6 cluster partition of GAEA is an HPE-EX Cray X3000 system with 2048 compute nodes (2 × AMD EPYC 9654 2.4 GHz base, 96 cores per socket), HPE Slingshot Interconnect, 384 GB DDR4 per node, 584 TB totaling an expected peak of 11.21 PF.

For the strong scaling, we consider the idealized doubly periodic simulations shown in Sect. 4, with a fixed, larger domain of 1024×1024 grid cells. Scaling tests were conducted using various processor layouts: 8×16, 16×32, 32×64, and 64×128, which correspond to 128×64, 64×32, 32×16, and 16×8 grid cells per processing element, respectively. Additionally, two cores were allocated per task. In these tests, the atmosphere and ocean were run serially on the same set of processors. The coupling time step is unchanged and is set to match the atmosphere physics time step (or the long atmosphere time step). The strong scaling performance of the model is illustrated in Fig. 12, which shows the achieved speedup factor as a function of the number of processing elements (PEs). The actual speedup (red) is compared to the ideal linear scaling (black), with the run using the smallest number of PEs taken as the reference. The results demonstrate near-ideal scaling up to 16 000, after which the actual speedup begins to deviate from the ideal case. Despite this deviation, the model maintains strong parallel efficiency, demonstrating substantial computational gains with increasing processor count.

In addition to strong scaling, we also performed a weak scaling analysis in which we fixed the grid cell count per PE at 32×16 and increased the number of PEs up to 16 000. The results indicate that the model maintains high efficiency (>90%). The slight decrease in efficiency at higher processor counts can be attributed to increased communication overhead and load imbalance. Overall, the strong and weak scaling results demonstrate the model's excellent parallel performance, making it well suited to large-scale simulations on high-performance computing architectures. Additional work is needed to assess model scalability when the atmosphere and ocean components run concurrently on separate processor sets. This includes identifying an efficient processor distribution strategy that optimizes load balance for practical applications.
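The correspondence between the strong-scaling processor layouts and the per-PE subdomain sizes quoted above can be checked with a few lines of arithmetic. The sketch below assumes that one PE corresponds to one task and that each task uses two cores, as stated in the text. The function name and the table layout are hypothetical, and no timing data are involved.

```python
# Fixed horizontal domain used in the strong-scaling tests (grid cells)
NX, NY = 1024, 1024

# Processor layouts quoted in the text
LAYOUTS = [(8, 16), (16, 32), (32, 64), (64, 128)]

# "Two cores were allocated per task" (assumed: one task per PE)
CORES_PER_TASK = 2


def decompose(nx, ny, layout):
    """Return (tasks, cores, cells_x, cells_y) for one processor layout."""
    px, py = layout
    if nx % px or ny % py:
        raise ValueError("layout does not divide the domain evenly")
    tasks = px * py
    return tasks, tasks * CORES_PER_TASK, nx // px, ny // py


rows = [decompose(NX, NY, layout) for layout in LAYOUTS]
ref_tasks = rows[0][0]  # smallest configuration serves as the reference

for (px, py), (tasks, cores, cx, cy) in zip(LAYOUTS, rows):
    ideal = tasks / ref_tasks  # ideal linear speedup relative to reference
    print(f"{px}x{py}: {tasks} tasks, {cores} cores, "
          f"{cx}x{cy} cells per PE, ideal speedup {ideal:.0f}x")
```

Each fourfold increase in task count reduces the subdomain by a factor of four, so the smallest subdomain (16×8 cells) leaves relatively little computation per PE compared with the halo exchange it requires. This is consistent with the communication overhead noted in the text.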

In this work, we have developed and validated the new coupled SHiELD-MOM6 model, which advances our ability to simulate complex interactions between the atmosphere and ocean. Built upon the robust frameworks of GFDL's FMS, FV3-based SHiELD atmospheric model, MOM6 ocean model, and SIS2 Sea Ice Simulator, the coupled system achieves a two-way atmosphere–ocean integration through the FMS coupler and exchange grid. During implementation, we ensured that the coupling method provides a conservative transfer of dynamical and physical variables between the atmosphere and ocean, so that the essential exchanges of momentum, heat, and moisture are accurately represented.

The idealized and realistic simulations highlight the new system's capabilities. In the idealized hurricane test case, the model successfully captured key features of the storm development, including its intensification phase, the evolution of storm structure, and corresponding ocean responses such as surface current adjustment and wind-induced upwelling. Similarly, realistic simulations of Hurricane Helene of September 2024 demonstrated the model's capability to reproduce complex phenomena such as storm surge development, coastal current modulation, and significant sea surface temperature changes driven by air–sea interactions.

Additionally, scalability tests on high-performance computing systems revealed that the SHiELD-MOM6 model is not only scientifically robust but also computationally efficient within the range of configurations tested. The effective parallel performance achieved through the optimized coupling strategy and exchange grid paves the way for its application in operational settings, ranging from short-term weather forecasts to extended future climate simulations.

Overall, the new SHiELD-MOM6 model represents a major advancement in coupled model development at GFDL. Its flexible and modular design, combined with state-of-the-art numerical frameworks and infrastructure, provides a solid foundation for future studies and forecasts of severe weather systems such as hurricanes, which require an accurate representation of air–sea interactions. Current development efforts include integrating additional model components such as WaveWatch III for wave dynamics, further refinement of physical parameterizations, and extensive validation against observational datasets. These improvements will further enhance our understanding of air–sea interactions and contribute to more accurate forecasting and climate prediction efforts.

This work highlights the critical role of unified modeling approaches in addressing the inherent complexities of coupled systems and sets a foundation for continued progress in model development for weather and climate science.

The official code repositories are all on GitHub under NOAA-GFDL. The main branches are up to date and continuously under development. The source code for the main components discussed in Sect. 2 is available as follows:

- https://github.com/NOAA-GFDL/GFDL_atmos_cubed_sphere (last access: 22 September 2025)
- https://github.com/NOAA-GFDL/FMScoupler (last access: 22 September 2025)
- https://github.com/NOAA-GFDL/FMS (last access: 22 September 2025)
- https://github.com/NOAA-GFDL/atmos_drivers (last access: 22 September 2025)
- https://github.com/NOAA-GFDL/SHiELD_physics (last access: 22 September 2025)
- https://github.com/NOAA-GFDL/land_null (last access: 22 September 2025)
- https://github.com/NOAA-GFDL/ice_param (last access: 22 September 2025)
- https://github.com/NOAA-GFDL/MOM6 (last access: 22 September 2025)
- https://github.com/NOAA-GFDL/SIS2 (last access: 22 September 2025)

The source code for the build component is under:

- https://github.com/NOAA-GFDL/SHiELD_build (last access: 22 September 2025)

SHiELD-MOM6 is under active development and can be built and run from the latest official releases from the NOAA-GFDL GitHub repository as listed in Appendix A. The simulation files and source code for the static version of the model used in this study are available at https://doi.org/10.5281/zenodo.15178709 (Mouallem, 2025).

JM and KG developed the source code and model coupling. BGR, JC, and CZ contributed to the configuration and setup of the ocean component. LC, RB, and NZ contributed to the build system and exchange grid. JC prepared the realistic ocean initial conditions. JM ran the simulations. JM and KG prepared the initial draft of the manuscript. All authors participated in discussions during various stages of the model development and evaluation and contributed to the writing of the finalized paper.

The contact author has declared that none of the authors has any competing interests.

The statements, findings, conclusions, and recommendations are those of the author(s) and do not necessarily reflect the views of the National Oceanic and Atmospheric Administration or the U.S. Department of Commerce.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

We thank Jacob Steinberg for their review and useful comments that improved the quality of the paper.

This research has been supported by the National Oceanic and Atmospheric Administration (grant nos. NA18OAR4320123, NA23OAR4320198, and NA23OAR4050432I), the NOAA Research Global-Nest Initiative, and the Bipartisan Infrastructure Law (BIL). Joseph Mouallem, Kun Gao, Cheng Zhang and Jing Chen are supported under the awards NA18OAR4320123, NA23OAR4320198, and NA23OAR4050432I from the National Oceanic and Atmospheric Administration, U.S. Department of Commerce.

This paper was edited by Riccardo Farneti and reviewed by two anonymous referees.

Adcroft, A., Anderson, W., Balaji, V., Blanton, C., Bushuk, M., Dufour, C. O., Dunne, J. P., Griffies, S. M., Hallberg, R., Harrison, M. J., Held, I. M., Jansen, M. F., John, J. G., Krasting, J. P., Langenhorst, A. R., Legg, S., Liang, Z., McHugh, C., Radhakrishnan, A., Reichl, B. G., Rosati, T., Samuels, B. L., Shao, A., Stouffer, R., Winton, M., Wittenberg, A. T., Xiang, B., Zadeh, N., and Zhang, R.: The GFDL Global Ocean and Sea Ice Model OM4.0: Model Description and Simulation Features, J. Adv. Model. Earth Sy., 11, 3167–3211, https://doi.org/10.1029/2019MS001726, 2019.

Balaji, V., Anderson, J., Held, I., Winton, M., Durachta, J., Malyshev, S., and Stouffer, R. J.: The Exchange Grid: A mechanism for data exchange between Earth System components on independent grids, in: Parallel Computational Fluid Dynamics 2005, edited by: Deane, A., Ecer, A., McDonough, J., Satofuka, N., Brenner, G., Emerson, D. R., Periaux, J., and Tromeur-Dervout, D., Elsevier, Amsterdam, 179–186, https://doi.org/10.1016/B978-044452206-1/50021-5, 2006.

Bender, M. A., Ginis, I., and Kurihara, Y.: Numerical simulations of tropical cyclone-ocean interaction with a high-resolution coupled model, J. Geophys. Res.-Atmos., 98, 23245–23263, https://doi.org/10.1029/93JD02370, 1993.

Black, T. L., Abeles, J. A., Blake, B. T., Jovic, D., Rogers, E., Zhang, X., Aligo, E. A., Dawson, L. C., Lin, Y., Strobach, E., Shafran, P. C., and Carley, J. R.: A Limited Area Modeling Capability for the Finite-Volume Cubed-Sphere (FV3) Dynamical Core and Comparison With a Global Two-Way Nest, J. Adv. Model. Earth Sy., 13, e2021MS002483, https://doi.org/10.1029/2021MS002483, 2021.

Bolot, M., Harris, L. M., Cheng, K.-Y., Merlis, T. M., Blossey, P. N., Bretherton, C. S., Clark, S. K., Kaltenbaugh, A., Zhou, L., and Fueglistaler, S.: Kilometer-scale global warming simulations and active sensors reveal changes in tropical deep convection, npj Climate and Atmospheric Science, 6, 209, https://doi.org/10.1038/s41612-023-00525-w, 2023.

Chen, X., Andronova, N., Leer, B. V., Penner, J. E., Boyd, J. P., Jablonowski, C., and Lin, S. J.: A control-volume model of the compressible Euler equations with a vertical Lagrangian coordinate, Mon. Weather Rev., 141, 2526–2544, https://doi.org/10.1175/MWR-D-12-00129.1, 2013.

Cheng, K.-Y., Lin, S.-J., Harris, L., and Zhou, L.: Supercells and Tornado-Like Vortices in an Idealized Global Atmosphere Model, Earth and Space Science, 11, e2023EA003368, https://doi.org/10.1029/2023EA003368, 2024.

Delworth, T. L., Cooke, W. F., Adcroft, A., Bushuk, M., Chen, J.-H., Dunne, K. A., Ginoux, P., Gudgel, R., Hallberg, R. W., Harris, L., Harrison, M. J., Johnson, N., Kapnick, S. B., Lin, S.-J., Lu, F., Malyshev, S., Milly, P. C., Murakami, H., Naik, V., Pascale, S., Paynter, D., Rosati, A., Schwarzkopf, M., Shevliakova, E., Underwood, S., Wittenberg, A. T., Xiang, B., Yang, X., Zeng, F., Zhang, H., Zhang, L., and Zhao, M.: SPEAR: The Next Generation GFDL Modeling System for Seasonal to Multidecadal Prediction and Projection, J. Adv. Model. Earth Sy., 12, e2019MS001895, https://doi.org/10.1029/2019MS001895, 2020.

Dunne, J. P., Horowitz, L. W., Adcroft, A. J., Ginoux, P., Held, I. M., John, J. G., Krasting, J. P., Malyshev, S., Naik, V., Paulot, F., Shevliakova, E., Stock, C. A., Zadeh, N., Balaji, V., Blanton, C., Dunne, K. A., Dupuis, C., Durachta, J., Dussin, R., Gauthier, P. P. G., Griffies, S. M., Guo, H., Hallberg, R. W., Harrison, M., He, J., Hurlin, W., McHugh, C., Menzel, R., Milly, P. C. D., Nikonov, S., Paynter, D. J., Ploshay, J., Radhakrishnan, A., Rand, K., Reichl, B. G., Robinson, T., Schwarzkopf, D. M., Sentman, L. T., Underwood, S., Vahlenkamp, H., Winton, M., Wittenberg, A. T., Wyman, B., Zeng, Y., and Zhao, M.: The GFDL Earth System Model Version 4.1 (GFDL-ESM 4.1): Overall Coupled Model Description and Simulation Characteristics, J. Adv. Model. Earth Sy., 12, e2019MS002015, https://doi.org/10.1029/2019MS002015, 2020.

Ek, M. B., Mitchell, K. E., Lin, Y., Rogers, E., Grunmann, P., Koren, V., Gayno, G., and Tarpley, J. D.: Implementation of Noah land surface model advances in the National Centers for Environmental Prediction operational mesoscale Eta model, J. Geophys. Res.-Atmos., 108, https://doi.org/10.1029/2002JD003296, 2003.

Gao, K., Harris, L., Zhou, L., Bender, M., and Morin, M.: On the Sensitivity of Hurricane Intensity and Structure to Horizontal Tracer Advection Schemes in FV3, J. Atmos. Sci., 78, 3007–3021, https://doi.org/10.1175/JAS-D-20-0331.1, 2021.

Gao, K., Harris, L., Bender, M., Chen, J.-H., Zhou, L., and Knutson, T.: Regulating Fine-Scale Resolved Convection in High-Resolution Models for Better Hurricane Track Prediction, Geophys. Res. Lett., 50, e2023GL103329, https://doi.org/10.1029/2023GL103329, 2023.

Gao, K., Mouallem, J., and Harris, L.: What Are the Finger-Like Clouds in the Hurricane Inner-Core Region?, Geophys. Res. Lett., 51, e2024GL110810, https://doi.org/10.1029/2024GL110810, 2024.

GLORYS12V1: Global Ocean Reanalysis Products (GLORYS12V1), Copernicus Marine Environment Monitoring Service (CMEMS), https://doi.org/10.48670/moi-00021, 2018.

Griffies, S. M., Adcroft, A., and Hallberg, R. W.: A Primer on the Vertical Lagrangian-Remap Method in Ocean Models Based on Finite Volume Generalized Vertical Coordinates, J. Adv. Model. Earth Sy., 12, e2019MS001954, https://doi.org/10.1029/2019MS001954, 2020.

Han, J. and Bretherton, C. S.: TKE-Based Moist Eddy-Diffusivity Mass-Flux (EDMF) Parameterization for Vertical Turbulent Mixing, Weather Forecast., 34, 869–886, https://doi.org/10.1175/WAF-D-18-0146.1, 2019.

Han, J., Wang, W., Kwon, Y. C., Hong, S.-Y., Tallapragada, V., and Yang, F.: Updates in the NCEP GFS Cumulus Convection Schemes with Scale and Aerosol Awareness, Weather Forecast., 32, 2005–2017, https://doi.org/10.1175/WAF-D-17-0046.1, 2017.

Harris, L., Zhou, L., Lin, S. J., Chen, J. H., Chen, X., Gao, K., Morin, M., Rees, S., Sun, Y., Tong, M., Xiang, B., Bender, M., Benson, R., Cheng, K. Y., Clark, S., Elbert, O. D., Hazelton, A., Huff, J. J., Kaltenbaugh, A., Liang, Z., Marchok, T., Shin, H. H., and Stern, W.: GFDL SHiELD: A Unified System for Weather-to-Seasonal Prediction, J. Adv. Model. Earth Sy., 12, 1–25, https://doi.org/10.1029/2020MS002223, 2020.

Harris, L., Zhou, L., Kaltenbaugh, A., Clark, S., Cheng, K.-Y., and Bretherton, C.: A Global Survey of Rotating Convective Updrafts in the GFDL X-SHiELD 2021 Global Storm Resolving Model, J. Geophys. Res.-Atmos., 128, e2022JD037823, https://doi.org/10.1029/2022JD037823, 2023.

Harris, L. M. and Lin, S. J.: A two-way nested global-regional dynamical core on the cubed-sphere grid, Mon. Weather Rev., 141, 283–306, https://doi.org/10.1175/MWR-D-11-00201.1, 2013.

Harris, L. M., Lin, S.-J., and Tu, C.: High-Resolution Climate Simulations Using GFDL HiRAM with a Stretched Global Grid, J. Climate, 29, 4293–4314, https://doi.org/10.1175/JCLI-D-15-0389.s1, 2016.

Harris, L. M., Rees, S. L., Morin, M., Zhou, L., and Stern, W. F.: Explicit Prediction of Continental Convection in a Skillful Variable-Resolution Global Model, J. Adv. Model. Earth Sy., 11, 1847–1869, https://doi.org/10.1029/2018MS001542, 2019.

Held, I.: The Monin-Obukhov Module for FMS, Copernicus Marine Environment Monitoring Service (CMEMS), https://github.com/NOAA-GFDL/FMS/tree/main/monin_obukhov (last access: 22 September 2025), 2001.

Held, I. M., Guo, H., Adcroft, A., Dunne, J. P., Horowitz, L. W., Krasting, J., Shevliakova, E., Winton, M., Zhao, M., Bushuk, M., Wittenberg, A. T., Wyman, B., Xiang, B., Zhang, R., Anderson, W., Balaji, V., Donner, L., Dunne, K., Durachta, J., Gauthier, P. P. G., Ginoux, P., Golaz, J.-C., Griffies, S. M., Hallberg, R., Harris, L., Harrison, M., Hurlin, W., John, J., Lin, P., Lin, S.-J., Malyshev, S., Menzel, R., Milly, P. C. D., Ming, Y., Naik, V., Paynter, D., Paulot, F., Ramaswamy, V., Reichl, B., Robinson, T., Rosati, A., Seman, C., Silvers, L. G., Underwood, S., and Zadeh, N.: Structure and Performance of GFDL's CM4.0 Climate Model, J. Adv. Model. Earth Sy., 11, 3691–3727, https://doi.org/10.1029/2019MS001829, 2019.

Iacono, M. J., Delamere, J. S., Mlawer, E. J., Shephard, M. W., Clough, S. A., and Collins, W. D.: Radiative forcing by long-lived greenhouse gases: Calculations with the AER radiative transfer models, J. Geophys. Res.-Atmos., 113, https://doi.org/10.1029/2008JD009944, 2008.

Jackson, L., Hallberg, R., and Legg, S.: A Parameterization of Shear-Driven Turbulence for Ocean Climate Models, J. Phys. Oceanogr., 38, 1033–1053, https://doi.org/10.1175/2007JPO3779.1, 2008.

Kaltenbaugh, A., Harris, L., Cheng, K.-Y., Zhou, L., Morin, M., and Stern, W.: Using GFDL C-SHiELD for the prediction of convective storms during the 2021 spring and summer, NOAA technical memorandum OAR GFDL, 2022-001, https://doi.org/10.25923/ednx-rm34, 2022.

Lellouche, J.-M., Greiner, E., Bourdallé-Badie, R., Garric, G., Melet, A., Drévillon, M., Bricaud, C., Hamon, M., Le Galloudec, O., Regnier, C., Candela, T., Testut, C.-E., Gasparin, F., Ruggiero, G., Benkiran, M., Drillet, Y., and Le Traon, P.-Y.: The Copernicus Global 1/12° Oceanic and Sea Ice GLORYS12 Reanalysis, Front. Earth Sci., 9, https://doi.org/10.3389/feart.2021.698876, 2021.

Li, Q., Fox-Kemper, B., Breivik, Ø., and Webb, A.: Statistical models of global Langmuir mixing, Ocean Model., 113, 95–114, https://doi.org/10.1016/j.ocemod.2017.03.016, 2017.

Lin, S. J.: A “vertically Lagrangian” finite-volume dynamical core for global models, Mon. Weather Rev., 132, 2293–2307, https://doi.org/10.1175/1520-0493(2004)132<2293:AVLFDC>2.0.CO;2, 2004.

Lin, S.-J. and Rood, R. B.: Multidimensional Flux-Form Semi-Lagrangian Transport Schemes, Mon. Weather Rev., 124, 2046–2070, https://doi.org/10.1175/1520-0493(1996)124<2046:MFFSLT>2.0.CO;2, 1996.

Lin, S.-J. and Rood, R. B.: An explicit flux-form semi-Lagrangian shallow-water model on the sphere, Q. J. Roy. Meteor. Soc., 123, 2477–2498, https://doi.org/10.1002/qj.49712354416, 1997.

Merlis, T. M., Cheng, K.-Y., Guendelman, I., Harris, L., Bretherton, C. S., Bolot, M., Zhou, L., Kaltenbaugh, A., Clark, S. K., Vecchi, G. A., and Fueglistaler, S.: Climate sensitivity and relative humidity changes in global storm-resolving model simulations of climate change, Science Advances, 10, eadn5217, https://doi.org/10.1126/sciadv.adn5217, 2024a.

Merlis, T. M., Guendelman, I., Cheng, K.-Y., Harris, L., Chen, Y.-T., Bretherton, C. S., Bolot, M., Zhou, L., Kaltenbaugh, A., Clark, S. K., and Fueglistaler, S.: The Vertical Structure of Tropical Temperature Change in Global Storm-Resolving Model Simulations of Climate Change, Geophys. Res. Lett., 51, e2024GL111549, https://doi.org/10.1029/2024GL111549, 2024b.

Mouallem, J.: Running SHiELD with GFDL's FMS full coupler infrastructure, NOAA technical memorandum OAR GFDL, 2024-002, https://doi.org/10.25923/ezfm-az21, 2024.

Mouallem, J.: Development of a High-Resolution Coupled SHiELD-MOM6 Model. Part I – Model Overview, Coupling Technique, and Validation in a Regional Setup [data set and code], https://doi.org/10.5281/zenodo.15178709, 2025.

Mouallem, J., Harris, L., and Benson, R.: Multiple same-level and telescoping nesting in GFDL's dynamical core, Geosci. Model Dev., 15, 4355–4371, https://doi.org/10.5194/gmd-15-4355-2022, 2022.

Mouallem, J., Harris, L., and Chen, X.: Implementation of the Novel Duo-Grid in GFDL's FV3 Dynamical Core, J. Adv. Model. Earth Sy., 15, e2023MS003712, https://doi.org/10.1029/2023MS003712, 2023.

Niu, G.-Y., Yang, Z.-L., Mitchell, K. E., Chen, F., Ek, M. B., Barlage, M., Kumar, A., Manning, K., Niyogi, D., Rosero, E., Tewari, M., and Xia, Y.: The community Noah land surface model with multiparameterization options (Noah-MP): 1. Model description and evaluation with local-scale measurements, J. Geophys. Res.-Atmos., 116, https://doi.org/10.1029/2010JD015139, 2011.

Pollard, R. T., Rhines, P. B., and Thompson, R. O. R. Y.: The deepening of the wind-mixed layer, Geophysical Fluid Dynamics, 4, 381–404, https://doi.org/10.1080/03091927208236105, 1973.

Price, J. F.: Upper Ocean Response to a Hurricane, J. Phys. Oceanogr., 11, 153–175, https://doi.org/10.1175/1520-0485(1981)011<0153:UORTAH>2.0.CO;2, 1981.

Putman, W. M. and Lin, S. J.: Finite-volume transport on various cubed-sphere grids, J. Comput. Phys., 227, 55–78, https://doi.org/10.1016/j.jcp.2007.07.022, 2007.

Ramstrom, W., Zhang, X., Ahern, K., and Gopalakrishnan, S.: Implementation of storm-following nest for the next-generation Hurricane Analysis and Forecast System (HAFS), Front. Earth Sci., 12, https://doi.org/10.3389/feart.2024.1419233, 2024.

Reed, K. A. and Jablonowski, C.: Idealized tropical cyclone simulations of intermediate complexity: A test case for AGCMs, J. Adv. Model. Earth Sy., 4, https://doi.org/10.1029/2011MS000099, 2012.

Reichl, B. G. and Hallberg, R.: A simplified energetics based planetary boundary layer (ePBL) approach for ocean climate simulations, Ocean Model., 132, 112–129, https://doi.org/10.1016/j.ocemod.2018.10.004, 2018.

Reichl, B. G. and Li, Q.: A Parameterization with a Constrained Potential Energy Conversion Rate of Vertical Mixing Due to Langmuir Turbulence, J. Phys. Oceanogr., 49, 2935–2959, https://doi.org/10.1175/JPO-D-18-0258.1, 2019.

Reichl, B. G., Wittenberg, A. T., Griffies, S. M., and Adcroft, A.: Improving Equatorial Upper Ocean Vertical Mixing in the NOAA/GFDL OM4 Model, Earth and Space Science, 11, e2023EA003485, https://doi.org/10.1029/2023EA003485, 2024.

Santos, L. F., Mouallem, J., and Peixoto, P. S.: Analysis of finite-volume transport schemes on cubed-sphere grids and an accurate scheme for divergent winds, J. Comput. Phys., 522, 113618, https://doi.org/10.1016/j.jcp.2024.113618, 2025.

Zhang, J. A., Nolan, D. S., Rogers, R. F., and Tallapragada, V.: Evaluating the Impact of Improvements in the Boundary Layer Parameterization on Hurricane Intensity and Structure Forecasts in HWRF, Mon. Weather Rev., 143, 3136–3155, https://doi.org/10.1175/MWR-D-14-00339.1, 2015.

Zhou, L., Lin, S.-J., Chen, J.-H., Harris, L. M., Chen, X., and Rees, S. L.: Toward Convective-Scale Prediction within the Next Generation Global Prediction System, B. Am. Meteorol. Soc., 100, 1225–1243, https://doi.org/10.1175/BAMS-D-17-0246.1, 2019.

Zhou, L., Harris, L., and Chen, J.-H.: The GFDL Cloud Microphysics Parameterization, NOAA technical memorandum OAR GFDL, 2022-002, https://doi.org/10.25923/pz3c-8b96, 2022.

Zhou, L., Harris, L., Chen, J.-H., Gao, K., Cheng, K.-Y., Tong, M., Kaltenbaugh, A., Morin, M., Mouallem, J., Chilutti, L., and Johnston, L.: Bridging the Gap Between Global Weather Prediction and Global Storm-Resolving Simulation: Introducing the GFDL 6.5 km SHiELD, J. Adv. Model. Earth Sy., 16, e2024MS004430, https://doi.org/10.1029/2024MS004430, 2024.