the Creative Commons Attribution 4.0 License.

Multi-year simulations at kilometre scale with the Integrated Forecasting System coupled to FESOM2.5 and NEMOv3.4

Xabier Pedruzo-Bagazgoitia, Tobias Becker, Sebastian Milinski, Irina Sandu, Razvan Aguridan, Peter Bechtold, Sebastian Beyer, Jean Bidlot, Souhail Boussetta, Willem Deconinck, Michail Diamantakis, Peter Dueben, Emanuel Dutra, Richard Forbes, Rohit Ghosh, Helge F. Goessling, Ioan Hadade, Jan Hegewald, Thomas Jung, Sarah Keeley, Lukas Kluft, Nikolay Koldunov, Aleksei Koldunov, Tobias Kölling, Josh Kousal, Christian Kühnlein, Pedro Maciel, Kristian Mogensen, Tiago Quintino, Inna Polichtchouk, Balthasar Reuter, Domokos Sármány, Patrick Scholz, Dmitry Sidorenko, Jan Streffing, Birgit Sützl, Daisuke Takasuka, Steffen Tietsche, Mirco Valentini, Benoît Vannière, Nils Wedi, Lorenzo Zampieri, Florian Ziemen

We report on the first multi-year kilometre-scale global coupled simulations using ECMWF's Integrated Forecasting System (IFS) coupled to both the NEMO and FESOM ocean–sea ice models, as part of the H2020 Next Generation Earth Modelling Systems (nextGEMS) project. We focus mainly on an unprecedented IFS-FESOM coupled setup, with an atmospheric resolution of 4.4 km and a spatially varying ocean resolution that reaches locally below 5 km grid spacing. A shorter coupled IFS-FESOM simulation with an atmospheric resolution of 2.8 km has also been performed. A number of shortcomings in the original numerical weather prediction (NWP)-focused model configurations were identified and mitigated over several cycles collaboratively by the modelling centres, academia, and the wider nextGEMS community. The main improvements are (i) better conservation properties of the coupled model system in terms of water and energy budgets, which also benefit ECMWF's operational 9 km IFS-NEMO model; (ii) a realistic top-of-the-atmosphere (TOA) radiation balance throughout the year; (iii) improved intense precipitation characteristics; and (iv) eddy-resolving features in large parts of the mid- and high-latitude oceans (finer than 5 km grid spacing) to resolve mesoscale eddies and sea ice leads. New developments at ECMWF for a better representation of snow and land use, including a dedicated scheme for urban areas, were also tested on multi-year timescales. We provide first examples of significant advances in the realism and thus opportunities of these kilometre-scale simulations, such as a clear imprint of resolved Arctic sea ice leads on atmospheric temperature, impacts of kilometre-scale urban areas on the diurnal temperature cycle in cities, and better propagation and symmetry characteristics of the Madden–Julian Oscillation.

Current state-of-the-art climate models with typical spatial resolutions of 50–100 km still rely heavily on parametrizations for under-resolved processes, such as deep convection, the effects of sub-grid orography and gravity waves in the atmosphere, or the effects of mesoscale eddies in the ocean. The emerging new generation of kilometre-scale climate models can explicitly represent and combine several of these energy-redistributing small-scale processes and physical phenomena that were historically approximated or even neglected in coarse-resolution models (Palmer, 2014). The advantage of kilometre-scale models thus lies in their ability to more directly represent phenomena such as tropical cyclones (Judt et al., 2021) or the atmospheric response to small-scale features in the topography, for example, mountains, orography gradients, lakes, urban areas, and cities. The distribution and intensity (and particularly the extremes) of precipitation (Judt and Rios-Berrios, 2021), winds, and potentially also temperature will be different at improved spatial resolution. Importantly, features of deep convection start to be explicitly resolved at kilometre-scale resolutions. This does not only improve the local representation of the diurnal cycle, convective organization, and the propagation of convective storms (Prein et al., 2015; Satoh et al., 2019; Schär et al., 2020) but can also impact the large-scale circulation (Gao et al., 2023). Ultimately, the replacement of parametrizations by explicitly resolved atmospheric dynamics is also expected to narrow the still large uncertainty range of cloud-related feedbacks and thus climate sensitivity (Bony et al., 2015; Stevens et al., 2016).

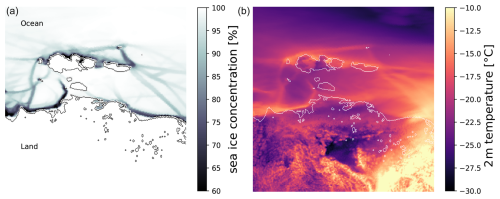

Kilometre-scale resolutions are also particularly beneficial for the ocean, where mesoscale ocean eddies (Frenger et al., 2013), leads opening up in the sea ice cover, and the response of oceanic heat transport to the presence of narrow canyons (Morrison et al., 2020) can be studied directly. The small scales in the ocean, in particular mesoscale ocean eddies, have large-scale impacts on climate and control the distribution of nutrients, heat uptake, and carbon cycling (Hogg et al., 2015). Eddies also play an important role in the comprehensive response of the climate system to warming (Hewitt et al., 2022; Rackow et al., 2022; Griffies et al., 2015). In addition to the influence of mesoscale ocean features on the predictability of European weather downstream of the Gulf Stream area (Keeley et al., 2012), it has been proposed that higher-resolution simulations can enhance the representation of local heterogeneities in the sea ice cover (Hutter et al., 2022). Via their impact on small-scale ocean features such as eddies, atmospheric storms can impact deep water formation in the Labrador Sea (Gutjahr et al., 2022), an ocean region of global significance because of its role in the meridional overturning circulation of the ocean. Coupled ocean–atmosphere variability patterns such as the El Niño–Southern Oscillation (ENSO), the largest signal of interannual variability on Earth, may also benefit from kilometre-scale resolutions since ENSO-relevant ocean mesoscale features (Wengel et al., 2021) and westerly wind bursts should be better resolved.

High-resolution simulations pose significant challenges in terms of numerical methods, data management, storage, and analysis (Schär et al., 2020). To exploit the potential of kilometre-scale modelling, it is essential to develop scalable models that can run efficiently on large supercomputers and take advantage of the next generation of exascale computing platforms (Bauer et al., 2021; Taylor et al., 2023). Global atmosphere-only climate simulations at kilometre scale were pioneered by the NICAM group (Nonhydrostatic ICosahedral Atmospheric Model) almost 2 decades ago. On sub-seasonal to seasonal timescales, a global aqua-planet configuration at 3.5 km resolution was performed (Tomita et al., 2005), and the Madden–Julian Oscillation (MJO) was realistically reproduced at 7 and 3.5 km resolutions (Miura et al., 2007). In the last decade, the NICAM group, as well as the European Centre for Medium-Range Weather Forecasts (ECMWF), has run simulations on climate timescales at around 10–15 km spatial resolution. In particular, 14 km resolution 30-year AMIP (Kodama et al., 2015) and HighResMIP simulations (Kodama et al., 2021) were performed with NICAM. During Project Athena, the climate and seasonal predictive skill of ECMWF's Integrated Forecasting System was analysed at resolutions up to 10 km based on many 13-month simulations (totalling several decadal simulations), complemented with a 48-year AMIP-style simulation with future time slices at 15 km resolution (Jung et al., 2012). Recently, the NICAM group presented 10-year AMIP simulations at 3.5 km using an updated NICAM version (Takasuka et al., 2024). 
Other modelling groups around the world have also increased their model resolution towards the kilometre scale, and many participated in the recent DYAMOND intercomparison project (DYnamics of the Atmospheric general circulation Modeled On Non-hydrostatic Domains) with a grid spacing as fine as 2.5 km, simulations running over 40 d, and some of them already coupled to an ocean (Stevens et al., 2019).

While different modelling groups push global atmosphere-only simulations towards unprecedented resolutions (e.g. 220 m resolution in short simulations with NICAM), another scientific frontier has emerged around running kilometre-scale simulations on multi-year timescales, coupled to an equally refined ocean model. Indeed, in recent years, several kilometre-scale simulations have been run on up to monthly and seasonal timescales (Stevens et al., 2019; Wedi et al., 2020) but not many beyond these timescales and not yet with a kilometre-scale ocean (Miyakawa et al., 2017). This is because even the most efficient high-resolution coupled models that are currently available require substantial computing resources to run, and the comprehensive and diverse code bases are also challenging to adapt to the latest computing technologies. As a result, the number of simulations and realizations that can be performed is limited, making it difficult to calibrate and optimize the model settings. Coarser-resolution models have been tuned for decades to be relatively reliable on the spatial scales that they can resolve and to match well the historical period for which high-quality observations are available. Nevertheless, this is often achieved by compensating errors, which cannot necessarily be expected to work similarly in a warming climate. These models also have some long-standing biases that can locally be larger than the interannual variability or the climate change signal (Rackow et al., 2019; Palmer and Stevens, 2019). The lack of explicitly simulated small-scale features is one likely source for these long-standing biases in weather and climate models (Schär et al., 2020). Coarser-resolution models also struggle with answering some important climate questions, such as the behaviour of extreme events in a warmer world and the impact of climate changes at the regional scale.

The European H2020 Next Generation Earth Modelling Systems (nextGEMS) project aims to build a new generation of eddy- and storm-resolving global coupled Earth system models to be used for multi-decadal climate projections at kilometre scale. By providing globally consistent information at scales where extreme events and the effects of climate change matter and are felt, global kilometre-scale multi-decadal projections will support the increasing need to provide localized climate information to inform local adaptation measures. The nextGEMS models build upon models that are also operationally used for numerical weather prediction (NWP): ICON, which is jointly developed by DWD and MPI-M (Hohenegger et al., 2023), and the Integrated Forecasting System (IFS) of ECMWF, coupled to the NEMO and FESOM ocean models. The nextGEMS project revolves around a series of hackathons, in which the simulations performed with the two models are examined in detail by an international community of more than 100 participants, followed by new model development iterations or “cycles”. The nextGEMS models have been (re-)designed for scalability and portability across different architectures (Satoh et al., 2019; Schulthess et al., 2019; Müller et al., 2019; Bauer et al., 2020, 2022) and lay the foundation for the Climate Change Adaptation Digital Twin developed in the EU's Destination Earth initiative (DestinE).

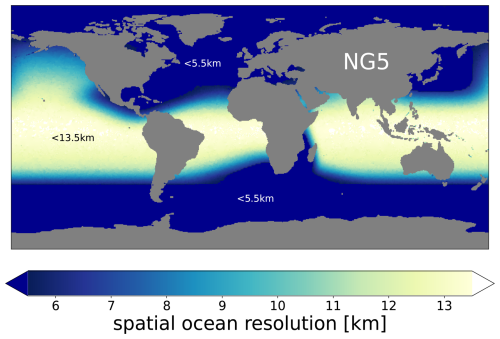

The operational NWP system at ECMWF uses an average 9 km grid spacing for the atmosphere coupled to an ocean at 0.25° spatial resolution (NEMO v3.4), which translates to a horizontal grid spacing of about 25 km along the Equator. While many coupled effects such as the atmosphere–ocean interactions during tropical cyclone conditions (Mogensen et al., 2017) can be realistically simulated at this resolution, ocean eddies in the mid-latitudes are still only “permitted” due to their decreasing size with latitude (Hallberg, 2013). This setup is far from our goal to explicitly resolve mesoscale ocean eddies all around the globe (Sein et al., 2017). In this study, we therefore focus mainly on configurations in which kilometre-scale versions of IFS (the main one at 4.4 km grid spacing in the atmosphere and land) are coupled to the FESOM2.5 ocean–sea ice model at about 5 km grid spacing, developed by the Alfred Wegener Institute, Helmholtz Centre for Polar and Marine Research (AWI). These configurations allow us to resolve many essential climate processes directly, for example mesoscale ocean eddies and sea ice leads in large parts of the mid- and high-latitude ocean, atmospheric storms, and certain small-scale features in the topography and land surface. We also test new developments of the IFS carried out in recent years at ECMWF to improve the representation of snow cover, land surface, and cities worldwide.

This paper documents the coupled kilometre-scale model configurations with the Integrated Forecasting System in Sect. 2. The technical and scientific model improvements, carried out along the nextGEMS model development cycles based on feedback by the nextGEMS community, are presented in Sect. 3. A first set of emerging advances stemming from the kilometre-scale character of the simulations is presented in Sect. 4, and more in-depth process studies will be the focus of dedicated future work. The paper closes with a summary and discussion of future steps in Sect. 5.

2.1 The Integrated Forecasting System and its coupling to NEMO and FESOM

The Integrated Forecasting System (IFS) is a spectral-transform atmospheric model with two-time-level semi-implicit, semi-Lagrangian time stepping (Temperton et al., 2001; Hortal, 2002; Diamantakis and Váňa, 2021). It is coupled to other Earth system components (land, waves, ocean, sea ice). Here it is used in version Cy48r1 (https://www.ecmwf.int/en/publications/ifs-documentation, last access: 7 November 2024), which has been used for operational forecasts at ECMWF since July 2023, with modifications that are detailed in this study. In its operational configuration (“oper”), the atmospheric component is coupled to the NEMO v3.4 ocean model. The octahedral reduced Gaussian grid (short “octahedral grid”) with a cubic (spectral) truncation (TCo) is used in the IFS (Malardel et al., 2016). The cubic truncation with the TCo grid implies higher effective resolution and better efficiency than the former linear truncation. It acts as a numerical filter without the need for expensive de-aliasing procedures, requires little diffusion, and produces small total mass conservation errors for medium-range forecasts; see Wedi (2014), Wedi et al. (2015), and Malardel et al. (2016) for further discussion. A hybrid, pressure-based vertical coordinate is used, which is a monotonic function of pressure and depends on the surface pressure (Simmons and Strüfing, 1983). The vertical coordinate follows the terrain at the lowest level and relaxes to a pure pressure-level vertical coordinate system in the upper part of the atmosphere. The vertical discretization scheme is a finite-element method using cubic B-spline basis functions (Vivoda et al., 2018; Untch and Hortal, 2004).
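The behaviour of such a hybrid coordinate can be illustrated with a short sketch. Hybrid pressure-based coordinates are commonly written as p = A + B·p_s at the layer interfaces, with B = 1 at the surface and B = 0 aloft. The coefficient values below are invented for illustration and are not the IFS level definition.

```python
import numpy as np

def half_level_pressure(a, b, surface_pressure):
    """Pressure (Pa) at layer interfaces of a hybrid coordinate: p = A + B * p_s."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return a + b * surface_pressure

# Hypothetical coefficients for 5 interfaces, ordered from model top to surface.
# B = 0 near the top (pure pressure levels), B = 1 at the surface (terrain-following).
A = np.array([0.0, 5000.0, 15000.0, 8000.0, 0.0])  # Pa
B = np.array([0.0, 0.0, 0.15, 0.70, 1.0])          # dimensionless

p_sea = half_level_pressure(A, B, 101325.0)  # column with sea-level surface pressure
p_mtn = half_level_pressure(A, B, 70000.0)   # column over high terrain

print("sea-level column:", p_sea)
print("mountain column: ", p_mtn)
```

In this toy setup the two uppermost interfaces are identical in both columns, while the lowest interface coincides with the local surface pressure.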

The atmosphere component of the IFS has a full range of parametrizations described in detail in ECMWF (2023a, b). The moist convection parametrization, originally described in Tiedtke (1989), is based on the mass-flux approach, and represents deep, shallow, and mid-level convection. For deep convection the mass flux is determined by removing a modified Convective Available Potential Energy (CAPE) over a given timescale (Bechtold et al., 2008, 2014), taking into account an additional dependence on total moisture convergence and a grid resolution dependent scaling factor to reduce the cloud-base mass flux further at grid resolutions higher than 9 km (Becker et al., 2021). The sub-grid cloud and precipitation microphysics scheme is based on Tiedtke (1993) and has since been substantially upgraded with separate prognostic variables for cloud water, cloud ice, rain, snow, and cloud fraction and an improved parametrization of microphysical processes (Forbes et al., 2011; Forbes and Ahlgrimm, 2014). The parametrization of sub-grid turbulent mixing follows the eddy-diffusivity–mass-flux (EDMF) framework, with a K-diffusion turbulence closure and a mass-flux component to represent the non-local eddy fluxes in unstable boundary layers (Siebesma et al., 2007; Köhler et al., 2011). The orographic gravity wave drag is parametrized following Lott and Miller (1997) and Beljaars et al. (2004) and a non-orographic gravity wave drag parametrization is described in Orr et al. (2010). The radiation scheme is described in Hogan and Bozzo (2018, ecRad). Full radiation computations are calculated on a coarser grid every hour, with approximate updates for radiation–surface interactions every time step at the model resolution.

The IFS land model ECLand (Boussetta et al., 2021) runs on the model grid and is fully coupled to the atmosphere through an implicit flux solver. ECLand represents the surface processes that interact with the atmosphere in the form of fluxes. The ECLand version in this work contains, among others, a four-layer soil scheme, a lake model, an urban model, a simple vegetation model, a multi-layer snow scheme, and a vast range of global maps describing the surface characteristics. A wave model component is provided by ecWAM to account for sea-state-dependent processes in the IFS (ECMWF, 2023c). The wave model runs on a reduced lat–long 0.125° grid with 36 frequencies and 36 directions. This means that the distance between latitudes is 0.125°, and the number of points per latitude is reduced polewards in order to keep the actual distance between grid points roughly equal to the spacing between two consecutive latitudes. The frequency discretization is such that ocean waves with periods between 1 and 28 s are represented.
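The poleward reduction of points in the wave grid can be sketched as follows. The cosine-of-latitude rule used here is an assumption chosen for illustration; the exact rule applied in ecWAM is not given in the text.

```python
import math

def points_per_latitude(lat_deg, dlat_deg=0.125):
    """Number of longitude points on a latitude circle, chosen so that the zonal
    spacing stays close to the meridional spacing dlat_deg (illustrative rule)."""
    n_equator = round(360.0 / dlat_deg)
    n = round(n_equator * math.cos(math.radians(lat_deg)))
    return max(n, 1)

def zonal_spacing_km(lat_deg, n_points, radius_km=6371.0):
    """Distance in km between neighbouring points along a latitude circle."""
    circumference = 2.0 * math.pi * radius_km * math.cos(math.radians(lat_deg))
    return circumference / n_points

for lat in (0.0, 30.0, 60.0, 80.0):
    n = points_per_latitude(lat)
    print(f"lat {lat:5.1f}: {n:5d} points, spacing {zonal_spacing_km(lat, n):.2f} km")
```

With this rule the number of points halves between the Equator and 60° latitude, while the physical spacing along the latitude circle stays nearly constant.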

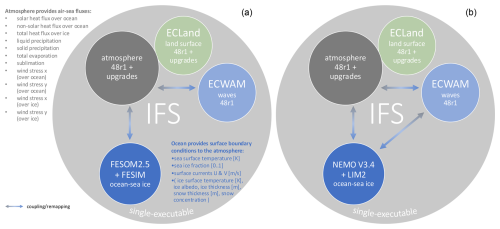

For the purpose of nextGEMS and other related projects such as the DestinE Climate Change Adaptation Digital Twin, where an IFS-NEMO configuration with a ° ocean (NEMO v4) is also applied, the complementary IFS-FESOM model option was developed. We coupled the Finite VolumE Sea ice-Ocean Model, FESOM2 (Danilov et al., 2017; Scholz et al., 2019; Koldunov et al., 2019; Sidorenko et al., 2019), to IFS (see details below). Instead of using a coupler for this task, as for the OpenIFS-FESOM (Streffing et al., 2022), the alternative adopted here is to follow the strategy for IFS-NEMO coupling, where the ocean and IFS models are integrated into a single executable and share a common time-stepping loop (Mogensen et al., 2012). In this sequential coupling approach (akin to the model physics–dynamics and land surface coupling that occurs every model time step), the atmosphere advances for 1 h (length of the coupling interval), and fluxes are passed as upper boundary conditions to the ocean. It then in turn advances for 1 h, up to the same checkpoint. The following atmospheric step then uses updated surface ocean fields as lower boundary conditions for the next coupling interval (Mogensen et al., 2012). Note that there is no need to introduce a lag of one coupling time step because the ocean and atmosphere models run sequentially and not overlapping in parallel. A study into the effect of model lag on flux/state convergence by Marti et al. (2021) found that sequential instead of parallel coupling reduces the error nearly to the fully converged solution.
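The sequential coupling within a shared time loop can be sketched with two toy components. The class names, the flux formula, and the constants are hypothetical placeholders and do not correspond to the IFS or FESOM interfaces; only the ordering of the calls and the 1 h coupling interval follow the description above.

```python
from dataclasses import dataclass

COUPLING_INTERVAL_S = 3600  # 1 h coupling interval, as described in the text

@dataclass
class ToyAtmosphere:
    time_s: int = 0

    def advance(self, sst, seconds):
        """Advance with the ocean surface state as lower boundary condition
        and return an air-sea heat flux (hypothetical relaxation towards 290 K)."""
        self.time_s += seconds
        return 10.0 * (290.0 - sst)

@dataclass
class ToyOcean:
    sst: float = 285.0
    time_s: int = 0

    def advance(self, heat_flux, seconds):
        """Advance with the atmospheric flux as upper boundary condition."""
        self.time_s += seconds
        self.sst += 1.0e-6 * heat_flux * seconds  # hypothetical heat capacity

def run_coupled(atm, ocn, n_intervals):
    """Single loop: the atmosphere steps first, then the ocean catches up to the
    same checkpoint, so no lag of one coupling step is introduced."""
    log = []
    for _ in range(n_intervals):
        flux = atm.advance(ocn.sst, COUPLING_INTERVAL_S)
        ocn.advance(flux, COUPLING_INTERVAL_S)
        log.append((atm.time_s, ocn.time_s, ocn.sst))
    return log

log = run_coupled(ToyAtmosphere(), ToyOcean(), n_intervals=3)
```

At the end of every coupling interval both components have reached the same model time, and the next atmospheric step already sees the updated surface state.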

In the operational IFS, a sea ice thickness of 1.5 m and no snow cover are currently assumed for the computation of the conductive heat flux on the atmospheric side wherever sea ice is present in the ocean model. Our initial implementation for the multi-year simulations carried out in nextGEMS does not yet deviate from this assumption of the operational configuration, in which the atmosphere only “sees” the sea ice fraction computed by the ocean–sea ice model. There are more consistent options available to couple the simulated sea ice albedo, ice surface temperature, and ice and snow thickness from the ocean models to the atmospheric component (Mogensen et al., 2012), and those will also be considered in future setups.

Figure 1. Coupling of the Integrated Forecasting System (IFS) components in (a) IFS-FESOM and (b) IFS-NEMO in nextGEMS configurations. Coupling between the unstructured FESOM grid and the Gaussian grid of the atmosphere is via pre-prepared remapping weights in SCRIP format (Jones, 1999). Direct coupling between the surface wave model (ECWAM) and the ocean is only implemented in IFS-NEMO at the moment; in IFS-FESOM, the ocean and waves only interact indirectly via the atmosphere. ECWAM and the atmosphere have their own set of remapping weights for direct coupling, while ECLand and the atmosphere are more closely coupled to each other.

The oceans provide surface boundary conditions to the atmosphere (sea surface temperature, sea ice concentration, zonal and meridional surface currents), while the atmospheric component provides air–sea fluxes to the ocean models (as listed in Fig. 1). The exchange between the different model grids is implemented as a Gaussian distance-weighted interpolation for both directions. Since the implementation accepts any weight files as long as they are provided in SCRIP format (Jones, 1999), future setups will explore other interpolation strategies, such as the use of conservative remapping weights for the air–sea fluxes to ensure better flux conservation. River runoff for the ocean models is taken from climatology; for IFS-FESOM, the runoff from the COREv2 (Large and Yeager, 2009) flux dataset is applied based on Dai et al. (2009).
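Remapping with pre-computed weights amounts to a sparse matrix–vector product. The sketch below builds Gaussian distance weights as (destination, source, weight) triplets, similar in spirit to the address and weight arrays of a SCRIP file, and applies them to a field. Planar coordinates, the kernel width `sigma`, and the number of neighbours `k` are simplifying assumptions, not the settings used in the coupled model.

```python
import numpy as np

def gaussian_weights(src_xy, dst_xy, sigma, k=4):
    """Return 0-based (dst_index, src_index, weight) triplets using the k nearest
    source points of each destination point and a Gaussian distance kernel."""
    dst_idx, src_idx, wts = [], [], []
    for j, point in enumerate(dst_xy):
        d2 = np.sum((src_xy - point) ** 2, axis=1)
        nearest = np.argsort(d2)[:k]
        w = np.exp(-d2[nearest] / (2.0 * sigma ** 2))
        w /= w.sum()  # normalise so that the weights of each destination sum to 1
        dst_idx.extend([j] * len(nearest))
        src_idx.extend(nearest.tolist())
        wts.extend(w.tolist())
    return np.array(dst_idx), np.array(src_idx), np.array(wts)

def apply_weights(field_src, dst_idx, src_idx, wts, n_dst):
    """Apply the sparse remapping: out[d] = sum over links of w * field_src[s]."""
    out = np.zeros(n_dst)
    np.add.at(out, dst_idx, wts * field_src[src_idx])
    return out

# Toy source grid (5 x 5 points) and three destination points.
src = np.array([[x, y] for x in range(5) for y in range(5)], dtype=float)
dst = np.array([[0.5, 0.5], [2.2, 3.1], [4.0, 4.0]])
d, s, w = gaussian_weights(src, dst, sigma=1.0)
sst_on_dst = apply_weights(np.full(len(src), 3.0), d, s, w, len(dst))
```

Normalised weights of this kind reproduce a constant field exactly but do not guarantee that area-integrated fluxes are conserved, which is the motivation for exploring conservative remapping weights.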

In order to couple FESOM with IFS, the existing single-executable coupling interface (i.e. the set of Fortran subroutines) between IFS and NEMO (Mogensen et al., 2012) has been extracted and newly implemented directly in the FESOM source code (Rackow et al., 2023c). From the perspective of the atmospheric component, after linking, FESOM and NEMO thus appear to IFS virtually identical in terms of provided fields and functionality in forecast runs with IFS. Clear gaps and differences to the operational configuration with NEMO v3.4 remain in terms of ocean data assimilation capabilities (NEMOVAR), ocean initial condition generation, and missing surface ocean-wave coupling (Fig. 1). However, these differences do not critically impact the multi-year simulations for nextGEMS described in this study or multi-decadal simulations planned for nextGEMS and DestinE.

2.2 Performed nextGEMS runs and cycles

The nextGEMS project relies on several model development cycles, in which the high-resolution models are run and improved based on community feedback from the analysis of successive runs. In an initial set of kilometre-scale coupled simulations (termed “Cycle 1”), the models were integrated for 75 d, starting on 20 January 2020 (Table 1). For Cycle 1, ECMWF's IFS in Cy47r3 (Cy46r1 for IFS-FESOM) was run at 9 km (TCo1279 in Gaussian octahedral grid notation) and 4.4 km (TCo2559) global spatial resolution. The runs at 9 km were performed with the deep convection parametrization, while at 4.4 km, the IFS was run with and without the deep convection parametrization. The underlying ocean models NEMO and FESOM2.1 had been run on an eddy-permitting 0.25° resolution grid in this initial model cycle (ORCA025 for NEMO and a triangulated version of this for FESOM, tORCA025). Based on the analysis by project partners during a hackathon organized in Berlin in October 2021, several key issues were identified both in the runs with IFS and in those runs with ICON (Hohenegger et al., 2023).

As will be detailed below, the IFS has been significantly improved for the longer “Cycle 2” simulations based on IFS Cy47r3 (Pedruzo-Bagazgoitia et al., 2022a; Wieners et al., 2023), where a 2.8 km simulation (TCo3999) has also been performed. For the purpose of nextGEMS Cycle 2 and 3, an ocean grid with up to 5 km resolution (“NG5”) has been introduced for the FESOM model, which is eddy-resolving in most parts of the global ocean (see Appendix B). The NG5 ocean has been spun up for a duration of 5 years in stand-alone mode, with ERA5 atmospheric forcing (Hersbach et al., 2020) until 20 January 2020. In contrast, NEMO performs active data assimilation to estimate ocean initial conditions for 20 January 2020.

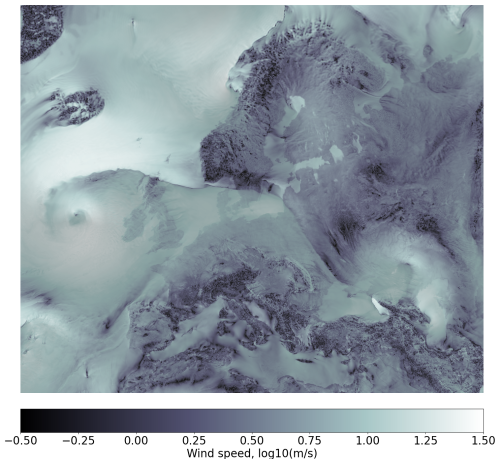

Based on feedback from the second hackathon in Vienna in 2022, “Cycle 3” simulations based on IFS Cy48r1 for the third hackathon in Madrid (June 2023) have been further improved. The ocean has been updated to FESOM2.5 (Rackow et al., 2023c) and run coupled for up to 5 years (see Fig. 2 for an example wind speed snapshot at 4.4 km resolution). In Sect. 3, we will detail the series of scientific improvements in the atmosphere, ocean, and land components of IFS-NEMO/FESOM that were performed to address the identified key issues and how these successive steps result in a better representation of the coupled physical system.

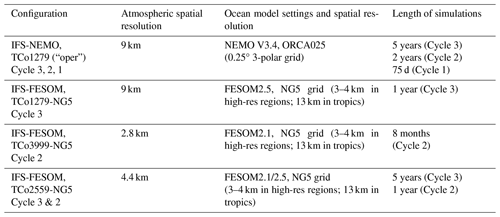

Table 1. nextGEMS configurations of the IFS and coupled simulations analysed in this study. The Gaussian octahedral grid notations TCo1279, TCo2559, and TCo3999 refer to 9, 4.4, and 2.8 km global atmospheric spatial resolution, respectively. The simulations were performed with constant greenhouse gas forcing from the year 2020 (CO2 = 413.72 ppmv, CH4 = 1914.28 ppbv, N2O = 331.80 ppbv, CFC11 = 857.38 pptv, CFC12 = 497.10 pptv), prognostic ozone, no volcanic aerosols, and the CAMS aerosol climatology (Bozzo et al., 2020).
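The correspondence between the TCo notation and the quoted grid spacings can be checked with a short calculation. The sketch assumes the octahedral layout of 20 points on the latitude circle nearest each pole, increasing by 4 points per latitude towards the Equator, and defines the mean spacing as the square root of the mean area per grid point. Both are assumptions made for this illustration and are not taken from the table.

```python
import math

EARTH_RADIUS_KM = 6371.0

def octahedral_points(truncation):
    """Total number of points of the octahedral grid paired with TCo<truncation>."""
    n = truncation + 1      # latitude circles between pole and Equator
    return 4 * n * (n + 9)  # sum of (4*i + 16) for i = 1..n, over both hemispheres

def mean_spacing_km(truncation):
    """Square root of the mean surface area per grid point, in km."""
    area = 4.0 * math.pi * EARTH_RADIUS_KM ** 2
    return math.sqrt(area / octahedral_points(truncation))

for t in (1279, 2559, 3999):
    print(f"TCo{t}: {octahedral_points(t):>10d} points, ~{mean_spacing_km(t):.1f} km")
```

Under these assumptions the three grids come out at roughly 8.8, 4.4, and 2.8 km, consistent with the nominal 9, 4.4, and 2.8 km quoted in the caption.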

2.3 Technical refactoring for the FESOM2.5 ocean–sea ice model code

Prior to the start of nextGEMS, FESOM had been parallelized with MPI only and was shown to scale well on processor counts beyond 100 000 (Koldunov et al., 2019). In order to fully support hybrid MPI-OpenMP parallelization in the single-executable framework with IFS, numerous non-iterative loops in the ocean model code were rewritten with the release of FESOM version 2.5. The FESOM model has also been significantly refactored in other aspects over recent years to support coupling with IFS. In the single-executable coupled system, the IFS initializes the MPI communicator (Mogensen et al., 2012) and passes it to the ocean model for initialization of FESOM. In particular, FESOM's main routine has been split into three cleanly defined steps, namely the initialization, time-stepping, and finalization steps. This was a necessary step for the current single-executable coupled model strategy at ECMWF, where the ocean is called and controlled from within the atmospheric model. The single-executable configuration is a necessary condition for coupled data assimilation at ECMWF. The adopted strategy means that some IFS-NEMO developments can be directly applied also to IFS-FESOM configurations. Similar to what is done for the wave and atmosphere components of the IFS, we implemented a fast “memory dump” restart mechanism for FESOM. This has the advantage that the whole coupled model can be quickly restarted as long as the parallel distribution (number of MPI tasks and OpenMP threads) does not change during the simulation.
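The constraint on the parallel distribution can be illustrated with a minimal sketch of a raw-state restart file that records the layout it was written with. The file format, function names, and the use of `pickle` are assumptions for illustration only and do not reflect the FESOM implementation.

```python
import pickle
from pathlib import Path

def write_restart(path, state, n_mpi_tasks, n_omp_threads):
    """Dump the in-memory state together with the parallel layout it belongs to."""
    payload = {"layout": (n_mpi_tasks, n_omp_threads), "state": state}
    Path(path).write_bytes(pickle.dumps(payload))

def read_restart(path, n_mpi_tasks, n_omp_threads):
    """Reload the state, refusing to continue if the parallel layout has changed,
    because a raw memory dump is only meaningful for an identical decomposition."""
    payload = pickle.loads(Path(path).read_bytes())
    if payload["layout"] != (n_mpi_tasks, n_omp_threads):
        raise ValueError(
            f"restart written for layout {payload['layout']}, "
            f"but run uses {(n_mpi_tasks, n_omp_threads)}"
        )
    return payload["state"]
```

A dump of this kind is fast because no gathering or regridding of the distributed fields is needed, at the price of being tied to one decomposition.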

2.4 Model output and online diagnostics

One of the concerns for the scientific evaluation of multi-year high-resolution simulations is the need to read large volumes of output from the global parallel filesystem. This is required for certain processing tasks, such as the computation of monthly averages in a climate context and regridding to regular meshes, so that the relevant information can be easily analysed and visualized. One way to mitigate this burden is to move these computations closer to where the data are produced and process the data in memory. Many of these computations are currently not possible in the IFS code, so starting in Cycle 3 we used MultIO (Sármány et al., 2024), a set of software libraries that provide, among other functionalities, user-programmable processing pipelines that operate on model output directly. IFS has its own Fortran-based I/O server that is responsible for aggregating geographically distributed three-dimensional information and creating layers of horizontal two-dimensional fields. It passes these pre-aggregated fields directly to MultIO for the on-the-fly computation of temporal means and data regridding.
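The idea of computing temporal means in memory, without storing the high-frequency fields, can be sketched with a running-mean accumulator. This is a generic illustration and not the MultIO API; the class and method names are hypothetical.

```python
import numpy as np

class RunningMean:
    """Accumulate a temporal mean field by field, keeping only one field in memory."""

    def __init__(self, shape):
        self.count = 0
        self.mean = np.zeros(shape)

    def update(self, field):
        """Incorporate one new field using an incremental mean update."""
        self.count += 1
        self.mean += (np.asarray(field, dtype=float) - self.mean) / self.count

    def result(self):
        if self.count == 0:
            raise ValueError("no fields accumulated")
        return self.mean.copy()

# Feed 24 hourly toy fields and obtain their mean without storing them all.
acc = RunningMean((2, 3))
for hour in range(24):
    acc.update(np.full((2, 3), float(hour)))
daily_mean = acc.result()
```

The same pattern extends to monthly means: the accumulator is reset at the start of each month and its result is written out at the end.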

One of the key benefits of this approach is that with the in-memory computation of, for example, monthly statistics, the requirement of storage space may be reduced significantly. Higher-frequency data may only be required for the computation of these statistics and as such would not need to be written to disk at all. For the nextGEMS runs in this study, however, the decision was taken to make use of MultIO mostly for user-convenience, i.e. to produce post-processed output in addition to the native high-frequency output. The computational overhead associated with this (approximately 15 % in this case) is more than offset by the increased productivity gained from much faster and easier evaluation of high-resolution climate output, particularly in the context of hackathons with a large number of participants. As a result, the MultIO pipelines have been configured to support the following five groups of output:

- hourly or 6-hourly output (depending on variable) on native octahedral grids;
- hourly or 6-hourly output (depending on variable), interpolated to regular (coarser) meshes for ease of data analysis (the MultIO configuration uses parts of the functionality of the Meteorological Interpolation and Regridding package (MIR), ECMWF's open-source re-gridding software, to be able to execute this in memory);
- monthly means for all output variables on native grids;
- monthly means for all output variables on regular (coarser) meshes, interpolated by MultIO calling MIR;
- all fields encoded or re-encoded in GRIB by MultIO calling ECCODES, an open-source encoding library.

At the end of each pipeline, all data are streamed to disk, more specifically to the Fields DataBase (FDB; Smart et al., 2017), an indexed domain-specific object store for archival and retrieval – according to a well-defined schema – of meteorological and climate data. This mirrors the operational setup at ECMWF. For the nextGEMS hackathons, all simulations and their GRIB data in the corresponding FDBs have been made available in Jupyter Notebooks (Kluyver et al., 2016) via intake catalogs (https://intake.readthedocs.io/en/latest/, last access: 7 November 2024) using gribscan. The gribscan tool scans GRIB files and creates Zarr-compatible indices (Kölling et al., 2024).

Figure 2. Wind speed snapshot over Europe as simulated by the IFS with a 4.4 km spatial resolution in the atmosphere. The wind speed map is overlaid with a map of the zonal wind component in a grey-scale colour map for further shading, which has been made partly transparent. The figure does not explicitly plot land. Nevertheless, the high-resolution simulation clearly exposes the continental land masses and orographic details due to larger surface friction and hence smaller wind speeds (darker areas depict lower wind speeds). The image is a reproduction with Cycle 3 data of the award-winning entry by Nikolay Koldunov for 2022's Helmholtz Scientific Imaging Contest, https://helmholtz-imaging.de/about_us/overview/index_eng.html (last access: 7 November 2024).

This section details model developments for the atmosphere (Sect. 3.1); ocean, sea ice, and wave (Sect. 3.2); and land (Sect. 3.3) components of IFS-FESOM and NEMO in the different cycles of nextGEMS. Following a short overview of identified key issues and developments at the beginning of each section, we present how those successive development steps translate to a better representation of the coupled physical system.

3.1 Atmosphere

3.1.1 Key issues and model developments

Water and energy imbalances

At the first nextGEMS hackathon, large water and energy imbalances were identified as key issues in the Cycle 1 simulations, which led to large biases in the top-of-the-atmosphere (TOA) radiation balance. If run for longer than the 75 d of Cycle 1, e.g. multiple years, this would lead to a strong drift in global mean 2 m temperature. Analysis confirmed that most of the energy imbalance in the IFS was related to water non-conservation and that this issue gets worse (i) when spatial resolution is increased and (ii) when the parametrization of deep convection is switched off (hereafter “Deep Off”). This is because the semi-Lagrangian advection scheme used in the IFS does not conserve the mass of advected tracers, e.g. the water species (see Appendix A). While this issue was acknowledged to be detrimental to the accuracy of climate integrations, it had so far been thought small enough not to affect significantly the quality of numerical weather forecasts, which span timescales ranging from a few hours to seasons ahead. To address the problem of water non-conservation in the IFS, a tracer global mass fixer was activated in nextGEMS Cycle 2 for water vapour and all prognostic hydrometeors (cloud liquid, ice, rain, and snow); Appendix A describes the mass fixer approach in more detail. The tracer mass fixer ensures global mass conservation, but it cannot guarantee local mass conservation. However, it estimates where the mass conservation errors are larger and inserts larger corrections in such regions, which is often beneficial for local mass conservation and accuracy (see Diamantakis and Agusti-Panareda, 2017). Adding tracer mass fixers to a simulation increases the computational cost by a few percent (typically less than 5 %). Water and energy conservation in Cycle 1 versus Cycle 2 is discussed in Sect. 3.1.2.
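The principle of a global mass fixer that places larger corrections where errors are likely larger can be illustrated with a minimal sketch. This is not the IFS implementation (see Appendix A and Diamantakis and Agusti-Panareda, 2017). The function name `fix_global_mass`, the use of the difference between a high-order and a low-order interpolated field as a local error estimate, and the toy numbers are assumptions made purely for illustration.

```python
def fix_global_mass(q_high, q_low, cell_mass, target_mass):
    """Restore the global tracer mass after a non-conserving advection step.

    q_high      : advected mixing ratio from a high-order interpolation
    q_low       : same field from a low-order interpolation (assumed available)
    cell_mass   : air mass of each grid cell
    target_mass : global tracer mass before advection
    """
    current = sum(q * m for q, m in zip(q_high, cell_mass))
    deficit = target_mass - current
    # Assumed error estimate: cells where the two interpolations disagree
    # most receive the largest share of the correction.
    weights = [abs(qh - ql) for qh, ql in zip(q_high, q_low)]
    norm = sum(w * m for w, m in zip(weights, cell_mass))
    if norm == 0.0:
        # No error estimate available: fall back to a uniform rescaling.
        scale = target_mass / current
        return [q * scale for q in q_high]
    lam = deficit / norm
    return [q + lam * w for q, w in zip(q_high, weights)]


# Toy example: advection created 0.2 units of spurious tracer mass.
q_high = [1.0, 2.2, 3.0, 0.9]
q_low = [1.0, 2.0, 2.9, 0.9]
cell_mass = [1.0, 1.0, 2.0, 1.0]
q_fixed = fix_global_mass(q_high, q_low, cell_mass, target_mass=9.9)
fixed_mass = sum(q * m for q, m in zip(q_fixed, cell_mass))
```

In this sketch the global mass is restored exactly, while cells in which both interpolations agree are left untouched. Local conservation is not guaranteed, and a production scheme would additionally need to keep the corrected field non-negative.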

Top-of-the-atmosphere radiation balance

To reduce drift in global mean surface temperature, it is essential that the global top-of-the-atmosphere (TOA) radiation imbalance is small. In the nextGEMS Cycle 2 simulation at 4.4 km resolution coupled to FESOM2.1 (Table 1), the TOA net imbalance, relative to observed fluxes from the CERES-EBAF product (Loeb et al., 2018), had been about +3 W m−2 (positive values indicate downward fluxes), resulting from a +5 W m−2 shortwave imbalance that was partly balanced by a −2 W m−2 longwave imbalance. Because of anthropogenic greenhouse gas emissions, CERES shows a +1 W m−2 imbalance. Due to the larger TOA imbalance, the nextGEMS Cycle 2 simulations warmed too much, by about 1 K over the course of 1 year (see Sect. 3.1.3). Thus, addressing the TOA radiation imbalance was a major development focus in preparation for the 5-year integration in nextGEMS Cycle 3.

On top of IFS 48r1, in Cycle 3, we used a combination of model changes targeting a reduced TOA radiation imbalance, mostly affecting cloud amount. Changes that increased the fraction of low clouds are (i) a change restricting the detrainment of mid-level convection to the liquid phase; (ii) a reduction of cloud edge erosion following Fielding et al. (2020); and (iii) a reduction of the cloud inhomogeneity, which increases cloud amount as it reduces the rate of accretion. This change is in line with nextGEMS's kilometre-scale resolutions as cloud inhomogeneity is expected to be smaller at high resolutions. High clouds were increased in areas with strong deep convective activity by (iv) decreasing a threshold that limits the minimum size of ice effective radius, in agreement with observational evidence and (v) changing from cubic to linear interpolation for the departure point interpolation of the semi-Lagrangian advection scheme for all moist species except water vapour. The resulting TOA balance in Cycle 3 is discussed in Sect. 3.1.3.

Representation of intense precipitation and convective cells

Precipitation has many important roles in the climate system. It is not only important for the water cycle over land and ocean, but also provides a source of energy to the atmosphere, as heat is released when water vapour condenses and rain forms, which balances radiative cooling. Precipitation is also often associated with mesoscale or large-scale vertical motion, and the corresponding overturning circulation is crucial for the horizontal and vertical redistribution of moisture and energy within the atmosphere.

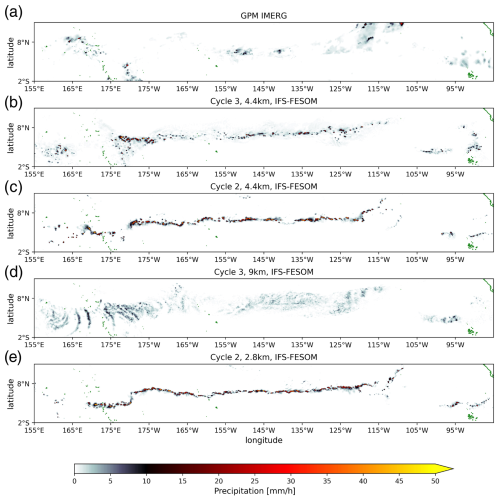

In kilometre-scale simulations in which the deep convection parametrization is switched off (e.g. Cycle 2 at 4.4 and 2.8 km resolution), convective cells tend to be too localized and too intense, and they lack organization into larger convective systems (e.g. Crook et al., 2019; Becker et al., 2021). The tropical troposphere also gets too warm and too dry, and these mean biases as well as biases that concern the characteristics of mesoscale organization of convection also affect the larger scales, for instance zonal-mean precipitation and the associated large-scale circulation. For example, with deep convection parametrization off in Cycle 2 (Deep Off), the Intertropical Convergence Zone (ITCZ) often organizes into a continuous and persistent line of deep convection over the Pacific at 5° N (see Fig. D1 in Appendix D), and the zonal-mean precipitation at 5° N is strongly overestimated.

To address these issues, instead of switching the deep convection scheme off completely, we have reduced its activity by reducing the cloud-base mass flux in Cycle 3. The cloud-base mass flux is the key ingredient of the convective closure, and depends on the convective adjustment timescale τ, which ensures a transition to resolved convection at high resolution via an empirical scaling function that depends on the grid spacing (discussed in more detail in Becker et al., 2021). To significantly reduce the activity of the deep convection scheme in Cycle 3, we use the value of the empirical scaling function that is by default used at 700 m resolution (TCo15999) already at 4.4 km resolution (TCo2559), which corresponds to a reduction of the empirical value that determines the cloud-base mass flux by a factor of 6 compared to its value at 9 km resolution. Precipitation characteristics in Cycle 3 vs Cycle 2 are discussed in Sect. 3.1.4.

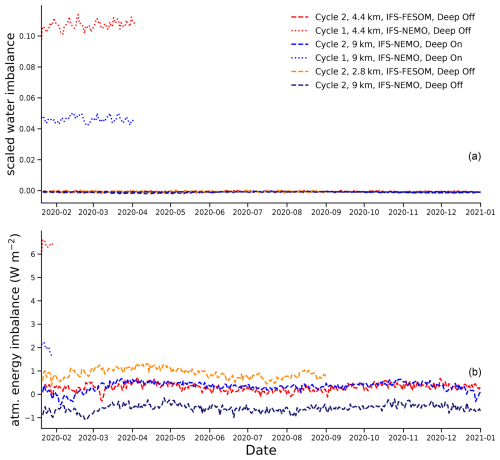

3.1.2 Improvements of mass and energy conservation in Cycle 2 vs Cycle 1

To address the water non-conservation mentioned in Sect. 3.1.1, tracer mass fixers for all moist species were introduced in Cycle 2. Figure 3 shows that the Cycle 1 simulations with the IFS have an artificial source of water in the atmosphere. This artificial source is responsible for 4.6 % of total precipitation in the 9 km simulation with deep convection parametrization switched on (hereafter “Deep On”), which is also used for ECMWF's operational high-resolution 10 d forecasts, and for 10.7 % at 4.4 km with Deep Off. Further analysis after the hackathon by the modelling teams at ECMWF has shown that about 50 % of the artificial atmospheric water source is created as water vapour. The additional water vapour not only affects the radiation energy budget of the atmosphere but can also cause energy non-conservation when heat is released through condensation. The other 50 % of water is created as cloud liquid, cloud ice, rain, or snow. This is related to the higher-order interpolation in the semi-Lagrangian advection scheme introduced for cloud liquid, cloud ice, rain, and snow in IFS Cycle 47r3, which can result in spurious maxima and minima, including negative values, which are then clipped to remain physical. It turns out that the spurious minima are in excess of the spurious maxima, and by clipping them, the mass of cloud liquid, cloud ice, rain, and snow is effectively increased. When activating global tracer mass fixers, global water non-conservation is essentially eliminated (about 0.1 %) in the Cycle 2 simulations (Fig. 3).
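Why clipping acts as a mass source can be seen in a toy example. The numbers below are invented for illustration and only mimic a field in which a higher-order interpolation has produced undershoots that outweigh the overshoots.

```python
def clip_negative(q):
    """Set unphysical negative mixing ratios to zero."""
    return [max(value, 0.0) for value in q]


def total_mass(q, cell_mass):
    """Globally integrated tracer mass."""
    return sum(value * mass for value, mass in zip(q, cell_mass))


# Hypothetical field after interpolation: one overshoot (+0.02 above 1.0)
# and two undershoots (-0.03 and -0.02 below 0.0).
q_interp = [0.0, -0.03, 1.02, 1.0, -0.02, 0.0]
cell_mass = [1.0] * len(q_interp)

mass_before = total_mass(q_interp, cell_mass)
mass_after = total_mass(clip_negative(q_interp), cell_mass)
spurious_source = mass_after - mass_before  # mass added by clipping alone
```

The clipped field contains more tracer mass than the unclipped one, by exactly the sum of the removed negative values. A global mass fixer compensates for this source after the fact.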

On a global scale, the total energy budget of the atmosphere can be defined as

where T is temperature, and qv, qi, and qs are water vapour, cloud ice, and snow. Together, these terms describe the change in vertically integrated frozen moist static energy over time, while the last term on the left-hand side of the equation is the change in vertically integrated kinetic energy (KE). Sources and sinks of the atmosphere's total energy are Fs and Fq, the surface turbulent sensible and latent heat fluxes; the TOA and surface net radiative shortwave and longwave fluxes; and (Ls0−Lv0)Ps, the energy required to melt snow at the surface. Note that dissipation is not a source or sink of total energy.

Using this equation to calculate the global energy budget imbalance in Fig. 3, the Cycle 1 simulation with 9 km resolution has an atmospheric energy imbalance of 2.0 W m−2, and this imbalance increased to 6.4 W m−2 at 4.4 km resolution with Deep Off. In Cycle 2, the energy budget imbalance due to the mass conservation of water species is substantially smaller, having reduced to less than 1 W m−2. This remaining imbalance can be related to the explicit and semi-implicit dynamics because they are still non-conserving, for example causing an error in surface pressure, as well as the mass fixers. The remaining imbalance could be removed by adding a total energy fixer to the model.

As a result of activating the tracer mass fixers for all moist species, the overestimate of mean precipitation reduces, and the troposphere gets slightly colder and drier. While these changes are dominated on climate timescales by the effects that energy conservation has on global mean temperature, they can have a significant impact on timescales of numerical weather prediction. Indeed, the discussed setup with improved water and energy conservation is part of ECMWF's recent operational IFS upgrade in June 2023 (48r1) because it improves the skill scores of the operational weather forecasts (ECMWF Newsletter 172, 2022).

Figure 3. (a) Daily-mean water non-conservation and (b) daily-mean atmospheric energy imbalance, as a function of lead time for Cycle 1 and Cycle 2 simulations. Water non-conservation is computed as the daily change in globally integrated total water, taking account of surface evaporation and precipitation, as a fraction of the daily precipitation. The atmospheric energy imbalance is calculated with Eq. (1).
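The water non-conservation diagnostic described in the caption can be written down as a short sketch. The function name and the sign convention (evaporation counted positive into the atmosphere, precipitation positive out of it) are assumptions, and the input values are invented.

```python
def water_nonconservation(total_water_start, total_water_end,
                          evaporation, precipitation):
    """Budget residual as a fraction of daily precipitation.

    All arguments are global means accumulated over one day in the same
    units (e.g. kg m-2). Assumed signs: evaporation is positive into the
    atmosphere, precipitation is positive out of it.
    """
    storage_change = total_water_end - total_water_start
    residual = storage_change - (evaporation - precipitation)
    return residual / precipitation


# Invented example: storage grows by 0.02 kg m-2 although surface fluxes
# alone would remove 0.10 kg m-2, so 0.12 kg m-2 is unaccounted for.
fraction = water_nonconservation(25.00, 25.02, evaporation=2.9,
                                 precipitation=3.0)
# fraction is about 0.04, i.e. 4 % of the daily precipitation
```

A positive value indicates an artificial source of atmospheric water, and a value of zero indicates a closed budget.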

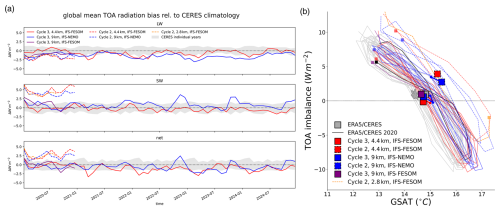

3.1.3 Realistic TOA radiation balance and surface temperature evolution in Cycle 3

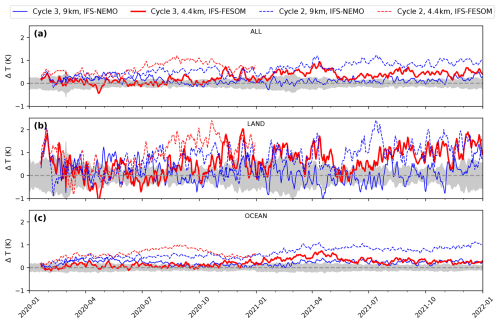

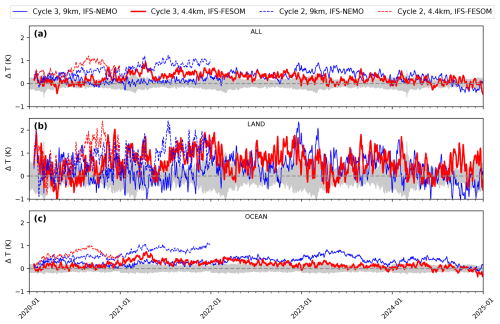

Due to the model changes detailed in Sect. 3.1.1, the nextGEMS Cycle 3 simulations with the IFS have a TOA radiation imbalance that is within observational uncertainty, with respect to the net, shortwave, and longwave fluxes, at all resolutions (Fig. 4). This is not only true for the annual mean value, but also for the annual cycle of TOA imbalance (figure-of-eight shape in Fig. 4). As a result, the global mean surface temperature in the Cycle 3 simulations is in close agreement with the ERA5 reanalysis (Hersbach et al., 2020) and stays in close agreement over the 5 years of coupled simulations (Figs. 5 and C1 in Appendix C). Going from Cycle 2 to Cycle 3, the warming over time is no longer evident in IFS-FESOM and IFS-NEMO (Fig. 5). Differences in local warming over the Southern Ocean in the two models are further discussed in Sect. 3.2.2.

Figure 4. Global-mean TOA radiation deviation from the CERES climatology in the 5-year-long nextGEMS simulations and global-mean TOA imbalance as a function of global-mean surface air temperature (GSAT). (a) Grey shading shows the climatological range of individual CERES years. Due to the free-running nature of the nextGEMS simulations, variations within the grey envelope are to be expected even in the absence of any bias. (b) Grey lines show the climatological range of individual CERES years (2001–2020) over ERA5 GSAT data (Hersbach et al., 2020). Thin lines are tracing monthly mean values, with a small square marking the final month for each simulation. Big squares depict annual means (dashed for Cycle 2, solid for Cycle 3), and for multi-year simulations, thick solid lines are tracing annual means for each year, with the big square marking the last simulated annual mean.

Locally, some of the persistent TOA radiation biases in Cycle 2 are also still evident in Cycle 3, for example a positive shortwave bias along coastlines in stratocumulus regions, while other biases, for example associated with deep convective activity over the Maritime Continent, have significantly reduced (not shown).

3.1.4 Improved precipitation characteristics in Cycle 3 vs Cycle 2 and larger-scale impacts

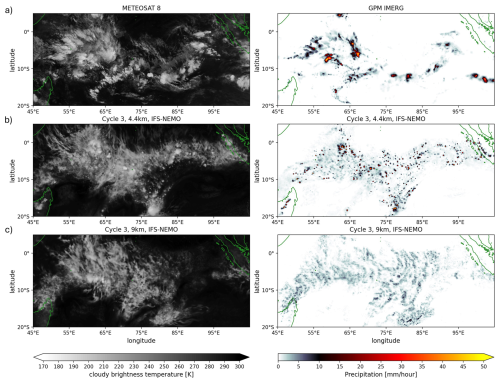

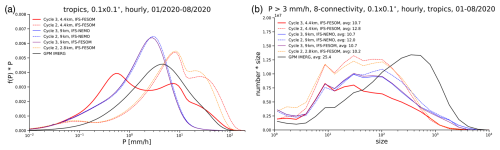

Snapshots of cloudy brightness temperature and precipitation over the Indian Ocean (Fig. 6) illustrate that after 12 d of simulation in Cycle 3, there are biases in the characteristics of precipitating deep convection compared to satellite observations, even after the developments for Cycle 3 (see Sect. 3.1.1) were introduced. The observations show multiple mesoscale convective systems (MCSs), which are associated with strong precipitation intensities and large anvil clouds. Neither the baseline 9 km Cycle 3 simulation nor the 4.4 km simulation manages to represent the MCSs as observed. At 9 km, the convective cells are not well defined, with widespread areas of weak precipitation. Indeed, precipitation intensity is underestimated in this setup, rarely exceeding 10 mm h−1 (Fig. 7a). Instead of organizing into MCSs, hints of spurious gravity waves initiated from parametrized convective cells can be seen in the precipitation snapshot, emanating in different directions.

Figure 6. Snapshot for 31 January 2020 at 21:00 UTC of infrared brightness temperature (left) and hourly precipitation rate (right) over the Indian Ocean, from (a) observations (Meteosat 8 SEVIRI channel 9 and GPM IMERG) and on forecast day 12 of (b) IFS-NEMO 4.4 km and (c) 9 km (third row) simulations. The simulations use the nextGEMS Cycle 3 setup except that they are run with a satellite image simulator and, for technical reasons, are coupled to NEMO V3.4 (ORCA025) here.

However, at 4.4 km resolution, the deep convection scheme is much less active, as the cloud-base mass flux has been reduced by a factor of 6 compared to its value at 9 km (see Sect. 3.1.1). Compared to the Cycle 2 simulations with Deep Off, the tropical troposphere is colder and more humid. This setup also features more realistic precipitation intensities, and particularly the strong precipitation of more than 10 mm h−1 is close to the satellite retrieval GPM IMERG (Fig. 7a), while the Cycle 2 simulations with Deep Off overestimate and with Deep On underestimate intense precipitation. In contrast, weak precipitation of 0.1 to 1 mm h−1 is most strongly overestimated at 4.4 km resolution in Cycle 3. This is mostly precipitation that stems from the weakly active deep convection scheme. Solutions of how to reduce this drizzle bias are being worked on, for example, through an increase in the rain evaporation rate.

A related issue is that the size of convective cells is too small, as illustrated by the size distribution of connected grid cells with precipitation exceeding 3 mm h−1 (Fig. 7b). The average size of a precipitation cell is rather similar in all simulations and only about half the value of that in GPM IMERG. While GPM IMERG has a substantial number of precipitation cells that exceed a size of 10³ grid points, which for example would correspond to a precipitation object of 5°×2°, this size is almost never reached in the IFS simulations. The baseline simulations reach this size more often than the higher-resolution simulations but mainly in association with the spurious gravity waves, not because an MCS would be correctly represented. In summary, the representation of intense precipitation has been improved from Cycle 2 to Cycle 3, but that has not led to more realistic precipitation cell sizes. Even though it is possible that GPM IMERG overestimates precipitation cell size, cloudy brightness temperature shows the same issue (Fig. 6). Work with other models (e.g. ICON, NICAM, SCREAM) has also shown that an underestimation of precipitation cell size is a common issue in global kilometre-scale resolution simulations, in some models even leading to “popcorn” convection, and will require more attention in the future.
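The cell-size diagnostic, i.e. counting connected grid cells above a precipitation threshold with diagonal neighbours included, can be sketched as a flood fill. The function name and the toy field are illustrative assumptions. For simplicity the sketch treats the domain as a plain rectangle and does not wrap around in longitude.

```python
from collections import deque


def precipitation_cell_sizes(rain, threshold=3.0):
    """Sizes (in grid cells) of 8-connected regions with rain > threshold.

    rain is a 2-D list of hourly precipitation rates (mm h-1) on a regular
    grid; diagonal neighbours count as connected.
    """
    n_rows = len(rain)
    n_cols = len(rain[0]) if n_rows else 0
    seen = [[False] * n_cols for _ in range(n_rows)]
    sizes = []
    for i in range(n_rows):
        for j in range(n_cols):
            if seen[i][j] or rain[i][j] <= threshold:
                continue
            # Breadth-first search over one connected precipitation object.
            seen[i][j] = True
            queue = deque([(i, j)])
            size = 0
            while queue:
                r, c = queue.popleft()
                size += 1
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if (0 <= rr < n_rows and 0 <= cc < n_cols
                                and not seen[rr][cc]
                                and rain[rr][cc] > threshold):
                            seen[rr][cc] = True
                            queue.append((rr, cc))
            sizes.append(size)
    return sizes


# Toy field: a diagonal pair, an isolated cell, and a vertical pair.
# The value of exactly 3.0 does not exceed the threshold and is ignored.
rain = [
    [5.0, 0.0, 0.0, 3.5],
    [0.0, 4.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 6.0],
    [3.0, 0.0, 0.0, 7.0],
]
sizes = precipitation_cell_sizes(rain)
mean_size = sum(sizes) / len(sizes)
```

On a 0.1° grid, an object of 5°×2° corresponds to 50 × 20 = 1000 connected grid cells in this counting.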

Figure 7. (a) Frequency times bin intensity of hourly precipitation intensity in the tropics (30° S–30° N), conservatively interpolated to a 0.1° grid from January to August 2020. Following Berthou et al. (2019), the bins are exponential, meaning that the area under the curve represents the contribution of that intensity range to the mean. (b) Histogram of precipitation cell size times bin size, using a similar approach to that in (a). The precipitation cell size is defined as the number of connected grid cells on a 0.1° grid (also considering diagonal neighbours) where precipitation exceeds 3 mm h−1, counting cells in the whole tropics (30° S–30° N), again from January to August 2020. The average precipitation cell size is given in the legend. The observational estimate is from GPM IMERG.
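A histogram of this kind can be sketched as follows. The bin edges, the growth factor, and the use of the geometric bin centre as the "bin intensity" are assumptions for illustration; they are not taken from Berthou et al. (2019) or from the analysis behind Fig. 7.

```python
import bisect
import math


def intensity_contributions(rates, first_edge=0.1, factor=2.0, n_bins=6):
    """Frequency times bin intensity on exponentially spaced bins.

    rates holds hourly precipitation rates (mm h-1), dry hours included.
    Returns the bin centres and, for each bin, the frequency of occurrence
    multiplied by the bin centre.
    """
    edges = [first_edge * factor ** k for k in range(n_bins + 1)]
    counts = [0] * n_bins
    for rate in rates:
        if edges[0] <= rate < edges[-1]:
            counts[bisect.bisect_right(edges, rate) - 1] += 1
    # Assumed representative intensity: geometric mean of the bin edges.
    centres = [math.sqrt(lo * hi) for lo, hi in zip(edges[:-1], edges[1:])]
    total = len(rates)
    contributions = [centre * count / total
                     for centre, count in zip(centres, counts)]
    return centres, contributions


# Invented sample: two dry hours and four wet hours.
rates = [0.0, 0.0, 0.15, 0.3, 0.5, 3.0]
centres, contributions = intensity_contributions(rates)
```

Summing the returned contributions approximates the mean precipitation rate, which is why each bin can be read as the share of the mean that falls in that intensity range.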

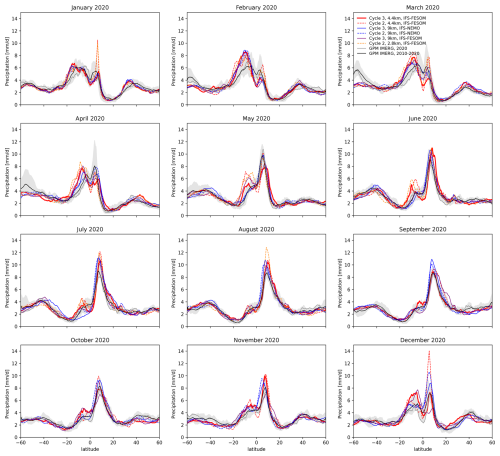

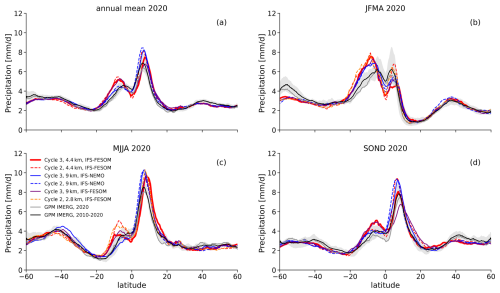

As already mentioned in Sect. 3.1.1, the characteristics of mesoscale organization of convection also affect the larger scales. For example, in Cycle 2 simulations with Deep Off, the ITCZ often organizes into a continuous and persistent line of deep convection over the Pacific at 5° N (see Fig. D1 in Appendix D), and as a consequence, the zonal-mean precipitation is strongly overestimated. This bias improved significantly from Cycle 2 to Cycle 3, when switching from a setup with no deep convection scheme in Cycle 2 (at 2.8 and 4.4 km resolution) to a setup with reduced cloud-base mass flux in Cycle 3 (at 4.4 km). While the peak of precipitation around 5° N was overestimated by a factor of 2 during individual winter months in the 2.8 and 4.4 km Cycle 2 runs (see Fig. D2 in Appendix D), the 4.4 km Cycle 3 run shows a strongly reduced bias, and the peak at 5° N is thus perfectly aligned with the GPM IMERG observations during September–December (Fig. 8d). The 9 km baseline run did not change significantly from Cycle 2 to Cycle 3, but it also shows some small improvements with regard to the overestimation of the precipitation peak at 5° N.

Comparing the FESOM and NEMO runs, it is striking that all FESOM runs overestimate precipitation in the Southern Hemisphere tropics around 10° S, hinting at a biased large-scale circulation, while NEMO runs show some good agreement with observations. The different seasons (Fig. 8b–d) show an overestimation of precipitation at 10° S only during January–April in the NEMO runs, while FESOM runs overestimate precipitation at 10° S during most of the year. Additionally, the FESOM runs also slightly underestimate precipitation at the Equator (particularly during January–April), hinting at a double ITCZ bias, which is a common issue in coupled simulations at kilometre-scale resolutions during boreal winter, e.g. in ICON (Hohenegger et al., 2023). Compared to ICON and other global coupled kilometre-scale models that contributed to the DYAMOND model intercomparison project (Stevens et al., 2019), the zonal-mean precipitation biases in IFS nextGEMS Cycle 3 are of similar nature to and in part smaller than in the other models.

Figure 8. Zonal-mean precipitation in nextGEMS Cycle 2 and 3, averaged over (a) the year 2020 and for different 4-month periods in 2020, (b) January–April, (c) May–August, and (d) September–December. Observations are from GPM IMERG for the year 2020 and for the 2010–2020 climatological period, indicating the climatological range of individual years via the grey shading.

3.1.5 Stratospheric Quasi-Biennial Oscillation

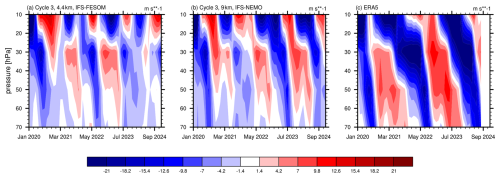

The Quasi-Biennial Oscillation (QBO) in the equatorial stratospheric winds is driven by momentum deposited by breaking small-scale convectively generated gravity waves (GWs) and large-scale Kelvin and Rossby-gravity waves (e.g. Baldwin et al., 2001). The QBO can have a downward influence on the troposphere (e.g. Scaife et al., 2022), and it is thus important to simulate it well in seasonal and decadal prediction models. As kilometre-scale models explicitly resolve GWs to a large extent, they have the potential to better simulate the QBO than lower-resolution models (e.g. CMIP), which fully rely on GW parametrizations. However, GW parametrizations are often tuned to get a good QBO in lower-resolution models (Garfinkel et al., 2022; Stockdale et al., 2022), and at higher resolution the resolved GW forcing can be overestimated with less freedom for tuning. For example, whether parametrized deep convection is switched on or off has a large impact on resolved GWs, with fully resolved convection generating more than 2 times stronger GW forcing (Stephan et al., 2019; Polichtchouk et al., 2021) and a QBO period that is – as a result – too short.

Figure 9. Time evolution of monthly-mean zonal winds, averaged over the equatorial band 10° S–10° N, for (a) the 9 km Cycle 3 simulation with IFS-NEMO, (b) the 4.4 km Cycle 3 simulation with IFS-FESOM, and (c) the ERA5 reanalysis for reference.

We find that the QBO is reasonably well simulated in the nextGEMS Cycle 3 simulations at 9 km and even at kilometre-scale (4.4 km) resolution (Fig. 9). The periodicity is reasonable, peaking at around 20 months at 30 hPa for both simulations (calculated by performing fast Fourier transform (FFT) on the monthly time series). This can probably be further improved by tuning the strength of parametrized non-orographic GW drag, which is still on with reduced magnitude in both 9 and 4.4 km simulations, reduced to 70 % and 35 %, respectively, compared to that at 28 km resolution.
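Estimating the dominant period from a monthly time series with a Fourier transform can be sketched as follows. The sketch uses a plain discrete Fourier transform from the standard library to stay self-contained (in practice a routine such as `numpy.fft.rfft` would normally be used), and the synthetic wind series with a 20-month period is an invented stand-in for the 30 hPa equatorial zonal wind.

```python
import cmath
import math


def dominant_period_months(series):
    """Period (in months) of the strongest spectral peak of a monthly series."""
    n = len(series)
    mean = sum(series) / n
    anomalies = [value - mean for value in series]
    best_k, best_power = None, -1.0
    # Scan all non-zero frequencies up to the Nyquist frequency.
    for k in range(1, n // 2 + 1):
        coeff = sum(a * cmath.exp(-2j * math.pi * k * t / n)
                    for t, a in enumerate(anomalies))
        power = abs(coeff) ** 2
        if power > best_power:
            best_k, best_power = k, power
    return n / best_k


# Synthetic 10-year monthly wind series (m s-1) with a 20-month oscillation.
wind = [20.0 * math.sin(2.0 * math.pi * t / 20.0) for t in range(120)]
period = dominant_period_months(wind)
```

Because the transform only resolves the periods n/k for integer k, the period estimate from a series of only a few years is necessarily coarse.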

In the lower stratosphere below 40 hPa, the amplitude of the QBO, however, is underestimated (compare panels a–b to panel c in Fig. 9), especially for the eastward phase. This deficiency is also observed in many lower-resolution models (Bushell et al., 2022). We hypothesize that the overall reasonable QBO simulation at kilometre-scale resolution might partly be due to the parametrization for deep convection being still “slightly on” in the Cycle 3 simulations with IFS, as detailed in the previous section.

3.2 Ocean, sea ice, and waves

3.2.1 Key issues and model developments

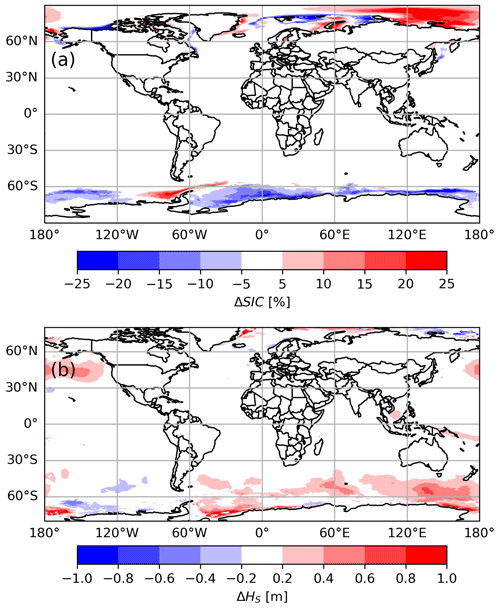

From a model development point of view, one of the main purposes of the nextGEMS Cycle 3 simulations was to set up and test a fully coupled global model that runs over multiple years and still does not show drift in global mean surface temperature and other main climate characteristics, prior to performing the final multi-decadal integrations foreseen in nextGEMS. To improve the general ocean state, an eddy-resolving ocean grid had been introduced already from Cycle 2 onwards. To reduce the drift further (Fig. 5), in particular over the Southern Ocean where the model in Cycle 2 had still shown a strong warming over the ocean with time compared to the ERA5 range for 2020–2021, the FESOM ocean component has been updated to the latest release version 2.5, and coupling between the ocean and atmosphere has been improved.

Warm biases over the ocean

The warming ocean in Cycle 2 leads to an overall warming of the atmosphere as well. The 4.4 km IFS-FESOM simulations in Cycle 2 with 5 km resolution in the ocean had shown a warming over the Southern Ocean in winter and year-round in the tropics. For Cycle 3, the latter has been significantly improved by tuning the TOA balance and using partially active parametrized convection, while the former has been solved by a combination of different factors, namely (i) improvements in the consistency of the heat flux treatment between the atmosphere and ocean/sea ice component; (ii) heat being taken from the ocean in order to melt snow falling into the ocean, which had been overlooked before; (iii) the activation of a climatological runoff/meltwater flux around Antarctica (COREv2; Large and Yeager, 2009); and (iv) a general update from FESOM2.1 to FESOM2.5 (Rackow et al., 2023c, https://github.com/FESOM/fesom2/releases/tag/2.5/, last access: 7 November 2024). The resulting more realistic temperature evolution in Cycle 3 is discussed in Sect. 3.2.2.

Ocean currents, eddy variability, and the mixed layer

The eddy-permitting ocean grid in Cycle 1 simulations with IFS-FESOM can impact not just the temperature evolution but also the simulated eddy variability, mean currents, and details of the simulated mixed layer, which all evolve on sub-5-year timescales and are thus relevant to the longer-term performance of a coupled model. An analysis of the resulting simulated ocean state, including mesoscale eddy statistics and the mixed layer, with the final ocean eddy-resolving IFS-FESOM simulations in Cycle 3, is presented in Sect. 3.2.3.

Sea ice performance

In Cycle 1 and 2, the sea ice representation in IFS-FESOM showed prominent deviations from the observed seasonal cycle in the Ocean and Sea Ice Satellite Application Facility (OSI-SAF) dataset. This could mainly be addressed by correcting the shortwave flux over ice with the release of FESOM version 2.5. The resulting sea ice performance in Cycle 3 is discussed in Sect. 3.2.4.

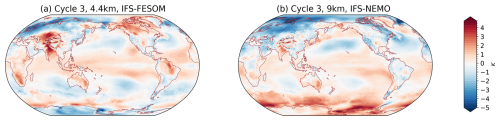

3.2.2 Improved Southern Ocean temperature evolution

As already mentioned in Sect. 3.1.3, IFS-FESOM simulations in Cycle 2 (TCo2559 and NG5 grid in the ocean) had shown a warming over the Southern Ocean in winter and year-round in the tropics. For Cycle 3, the improvement in IFS-FESOM 4.4 km is particularly evident when comparing to the operational 9 km IFS setup with NEMO V3.4. While the Southern Ocean shows a similar magnitude of anomalies in IFS-FESOM TCo2559-NG5 in year 5 compared to the first year, there appears to be an increase in anomalies over time in IFS-NEMO (Fig. 10). This has been confirmed in a second set of IFS-FESOM simulations at TCo399 resolution (28 km) and on the tORCA025 ocean grid (not shown).

3.2.3 Simulated ocean state in terms of currents, eddy variability, and mixed layer

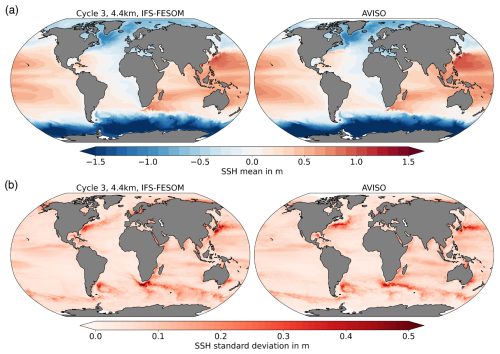

Daily sea surface height (SSH) data are taken from the IFS-FESOM outputs and compared with the AVISO multi-satellite altimeter data of daily gridded absolute dynamic topography, representing the observed SSH (Pujol et al., 2016). While ocean eddy variability in the 4.4 km IFS-FESOM Cycle 3 simulation and AVISO can be diagnosed from the standard deviation of sea surface height, the structure of (geostrophic) mean currents is diagnosed here from the time-mean SSH.
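Both diagnostics reduce to per-grid-point statistics over the time dimension, as in the following minimal sketch. The function name, the flat list layout of the fields, and the use of the population standard deviation are assumptions, and the numbers are invented.

```python
import math


def ssh_mean_and_std(daily_ssh):
    """Time mean and standard deviation of SSH at every grid point.

    daily_ssh is a list of daily fields, each a flat list of SSH values (m)
    with one entry per grid point.
    """
    n_days = len(daily_ssh)
    n_points = len(daily_ssh[0])
    means, stds = [], []
    for p in range(n_points):
        values = [day[p] for day in daily_ssh]
        mean = sum(values) / n_days
        # Population variance (division by n_days) is an assumed choice.
        variance = sum((v - mean) ** 2 for v in values) / n_days
        means.append(mean)
        stds.append(math.sqrt(variance))
    return means, stds


# Three days, two grid points: a quiescent point and an eddy-rich point.
daily_ssh = [[1.0, 0.0], [1.0, 2.0], [1.0, 4.0]]
mean_ssh, std_ssh = ssh_mean_and_std(daily_ssh)
```

Gradients of the time-mean field indicate the geostrophic mean currents, while large standard deviations mark regions of strong eddy activity.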

Figure 11. Mean ocean currents and eddy variability expressed (a) as the time mean of the daily sea surface height (SSH) and (b) as the standard deviation of daily SSH data. The left column shows data from the Cycle 3 simulation of the IFS-FESOM model, while the right column shows AVISO multi-satellite altimeter data. In AVISO, the global mean SSH is removed from each grid point. AVISO data consist of the time period 2017–2021, while 2020–2024 is used for IFS-FESOM.

Both the time mean and variability of SSH show excellent agreement between the simulation and observations from AVISO (Fig. 11). The position of the main gyres and the gradient of SSH are well reproduced, indicating a good performance in terms of position and strength of the main ocean currents. Ocean eddy variability is also very similar to the eddy-resolving NG5 grid that has been introduced for IFS nextGEMS simulations (see Fig. B1 in Appendix B). However, while there are positive indications, the North Atlantic Current as a northward extension of the Gulf Stream still underestimates SSH variability over the north-west corner. Moreover, Agulhas rings forming at the southern tip of Africa seem to follow a too narrow, static path compared to observations.

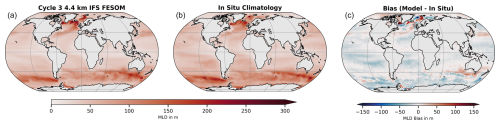

Mixed-layer depth (MLD) is calculated using a density threshold criterion of 0.03 kg m−3 from the 10 m depth value. The in situ MLD climatology dataset produced by de Boyer Montégut et al. (2004) and de Boyer Montégut (2023) is based on about 7.3 million casts/profiles of temperature and salinity measurements made at sea between January 1970 and December 2021. While the qualitative agreement between the 4.4 km IFS-FESOM Cycle 3 simulation and observations is excellent (Fig. 12), IFS-FESOM underestimates MLD across most of the ocean areas, with values not exceeding 0–50 m. The largest biases are in the North Atlantic sector, which aligns with MLD bias results from stand-alone FESOM simulations (Treguier et al., 2023) for a 10–50 km ocean grid. Specifically, FESOM overestimates (deepens) MLD in the Labrador Sea, over the Reykjanes Ridge, and in the Norwegian Sea, while underestimating MLD in the Irminger Sea and the Greenland Sea.
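The density threshold criterion can be sketched for a single profile as follows. The function names, the linear interpolation between model levels, and the example profile are assumptions for illustration, and the sketch presumes a stably stratified profile with density increasing with depth.

```python
def interpolate(x, xs, ys):
    """Linear interpolation of ys(xs) at x; xs must be increasing."""
    for k in range(1, len(xs)):
        if xs[k - 1] <= x <= xs[k]:
            frac = (x - xs[k - 1]) / (xs[k] - xs[k - 1])
            return ys[k - 1] + frac * (ys[k] - ys[k - 1])
    raise ValueError("x lies outside the profile")


def mixed_layer_depth(depths, densities, reference_depth=10.0,
                      threshold=0.03):
    """Depth (m) where density exceeds its reference-depth value by threshold.

    depths are in metres (increasing downwards), densities in kg m-3.
    Returns the deepest level if the threshold is never reached.
    """
    target = interpolate(reference_depth, depths, densities) + threshold
    for k in range(1, len(depths)):
        if depths[k] <= reference_depth:
            continue
        if densities[k] >= target:
            # Interpolate between the two levels bracketing the target.
            frac = (target - densities[k - 1]) / (densities[k] - densities[k - 1])
            return depths[k - 1] + frac * (depths[k] - depths[k - 1])
    return depths[-1]


# Invented profile: well mixed down to 20 m, stratified below.
depths = [0.0, 10.0, 20.0, 30.0, 50.0]
densities = [1025.00, 1025.00, 1025.01, 1025.05, 1025.20]
mld = mixed_layer_depth(depths, densities)  # close to 25 m
```

In this example the target density of 1025.03 kg m−3 lies halfway between the values at 20 m and 30 m, so the interpolated MLD falls midway between those levels.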

Overall, the distribution of MLD in IFS-FESOM is comparable to the stand-alone lower-resolution FESOM ocean simulations. In coupled models, we could typically expect larger biases than presented here, although the relatively short 5-year period of the Cycle 3 simulation may not be sufficient to fully develop the MLD biases. In particular, IFS-FESOM does not show open-ocean convection in the Southern Ocean's Weddell Sea, which is a common bias in CMIP models.

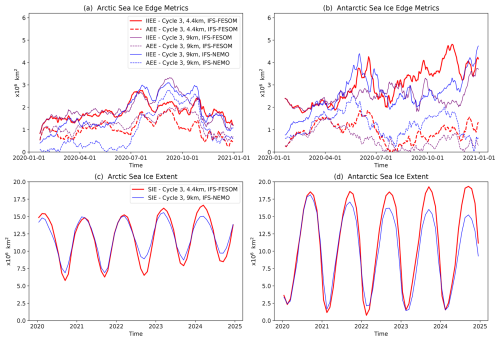

3.2.4 Integrated sea ice performance metrics

The performance of the nextGEMS Cycle 3 simulations is analysed in terms of the sea ice extent and sea ice edge position (Fig. 13). The integrated ice edge error (IIEE), the absolute extent error (AEE), and the sea ice extent (SIE) metrics are used for comparing the model simulations and daily 2020 remote-sensing sea ice concentration observations from the Ocean and Sea Ice Satellite Application Facility (OSI SAF). Specifically, the recently released Global Sea Ice Concentration climate data record (SMMR/SSMI/SSMIS), release 3 (OSI-450-a; OSI SAF, 2022) is considered in our analysis. The IIEE is a positively defined metric introduced by Goessling et al. (2016), and it is commonly used for evaluating the correctness of the sea ice edge position in Arctic and Antarctic sea ice predictions (Zampieri et al., 2018, 2019). We compute the IIEE by summing the areas where the model overestimates and underestimates the observed sea ice edge, here defined by the 15 % sea ice concentration contour. The SIE is the hemispherically integrated area where the sea ice concentration is larger than 15 %. Finally, the AEE represents the absolute difference in the hemispheric SIE of models and observations, therefore not accounting for errors arising from a different distribution of the ice edge in the two sets.
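The three metrics can be computed from gridded concentrations as in the sketch below. The function name, the flat list layout, the use of concentrations as fractions between 0 and 1, and the toy values are assumptions, and both fields are presumed to share one grid.

```python
def sea_ice_edge_metrics(model_conc, obs_conc, cell_area, threshold=0.15):
    """SIE, IIEE, and AEE from two sea ice concentration fields.

    Concentrations are fractions (0 to 1) on a common grid; cell_area holds
    the area of each grid cell in arbitrary but consistent units.
    """
    over = under = model_extent = obs_extent = 0.0
    for m, o, area in zip(model_conc, obs_conc, cell_area):
        model_ice = m > threshold
        obs_ice = o > threshold
        if model_ice:
            model_extent += area
        if obs_ice:
            obs_extent += area
        if model_ice and not obs_ice:
            over += area   # model has ice where observations have none
        elif obs_ice and not model_ice:
            under += area  # model misses observed ice
    return {
        "SIE_model": model_extent,
        "SIE_obs": obs_extent,
        "IIEE": over + under,
        "AEE": abs(over - under),
    }


# Toy hemisphere with five grid cells.
model_conc = [0.9, 0.5, 0.1, 0.0, 0.2]
obs_conc = [0.95, 0.1, 0.6, 0.0, 0.3]
cell_area = [1.0, 2.0, 3.0, 1.0, 1.0]
metrics = sea_ice_edge_metrics(model_conc, obs_conc, cell_area)
```

Here the extents differ by only one area unit, while the IIEE amounts to five units, which illustrates how the AEE can hide compensating errors in the position of the ice edge.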

Figure 13. (a) Arctic daily integrated ice edge error (IIEE; solid lines) and absolute extent error (AEE; dashed lines) for three different Cycle 3 simulations. Panel (b) is the same as panel (a) but for Antarctic sea ice. The IIEE and AEE metrics are computed by comparing the three model runs against remote-sensing sea ice concentration observations from OSI-SAF. Panels (c) and (d) show the Arctic and Antarctic sea ice extent for two different Cycle 3 simulations from 2020 until the end of 2024.

All model configurations show substantial errors in representing the initial state. In the Arctic, the error grows in the first simulation days in response to the active coupling between the sea ice components and the IFS atmospheric model (Fig. 13a). In the Antarctic, an initial error growth takes place for the IFS-NEMO model configuration, while modest error mitigation is seen for the two IFS-FESOM configurations (Fig. 13b). The latter feature suggests that a coupled setup could be better suited to represent the Antarctic sea ice processes in the FESOM models, at least for this specific instance. Both in the Arctic and Antarctic, the initial error of the IFS-NEMO configuration is substantially lower than that of the IFS-FESOM configurations. This behaviour is expected since NEMO performs active data assimilation, while the sea ice in FESOM is only constrained by the ERA5 atmospheric forcing (Hersbach et al., 2020) imposed during the ocean–sea ice model spinup. In the Antarctic, the initial error differences diminish quickly, and, after a couple of months, the errors of IFS-NEMO and IFS-FESOM are similar. In the Arctic, IFS-NEMO exhibits residual prediction skill over IFS-FESOM in late spring, 4–6 months after the initialization, possibly due to a more accurate description of the Arctic Ocean heat content influenced by the use of proper ocean data assimilation techniques. After the initialization, the pan-hemispheric sea ice model performance is similar for the three configurations, and attributing the error differences to the use of different model resolution or complexity is not obvious, confirming previous findings (e.g. Streffing et al., 2022; Selivanova et al., 2024). Overall, the model errors for the first year of simulations are in line with state-of-the-art seasonal prediction systems (Johnson et al., 2019; Mu et al., 2020, 2022), showing similar features in terms of seasonal error growth.

When considering longer timescales (5-year simulations), model drifts are visible for the IFS-NEMO configuration and, to a lesser extent, for the IFS-FESOM setup. In particular, the NEMO setup appears to progressively lose the winter sea ice cover in the Southern Ocean (Fig. 13d). This behaviour is not compatible with the observed interannual variability of the Antarctic sea ice, and it is likely due to near-surface warming, which does not affect the IFS-FESOM setup. Our hypothesis is that the different initialization strategies for FESOM and NEMO account for some of the discrepancies in the multi-year drift between IFS-NEMO and IFS-FESOM. We found that active data assimilation improved the model performance for the initial months, while an uncoupled ocean spinup might be preferable for minimizing the drift towards the ocean model's equilibrium state during the 5-year coupled simulation. In the Arctic, the sea ice extent tends to increase progressively in both the FESOM and NEMO setups, with an additional damping of the seasonal cycle for NEMO (Fig. 13c). The different multi-year drift regimes of NEMO and FESOM could also be attributed to the differing complexity of the underlying sea ice models. The more sophisticated physical parametrizations of the NEMOv3.4 configuration could respond more strongly to the active coupling with the IFS than those of the FESOM setups.

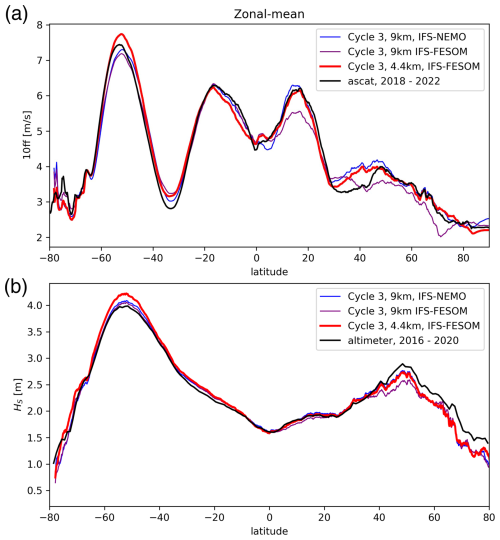

3.2.5 Wind and waves

As described in Sect. 2, the IFS includes an active two-way coupling between the atmosphere and ocean waves. Surface wind stress generates ocean surface waves, and in turn those waves modulate the wind stress. The increase in resolution from 9 to 4.4 km for the IFS-FESOM simulations results in significant increases in wind speed in the storm tracks (∼ 50° S and ∼ 45° N; Fig. 14a), most likely due to the increased ability to resolve the intense winds in extratropical cyclones. This increased resolution appears to be particularly important for the Southern Ocean, as the 4.4 km simulation is the only one of the three simulations that achieves winds of realistic intensity in this area. We also note a significant improvement in the trade winds (∼ 15° N) for the 4.4 km IFS-FESOM simulation.

Figure 14. Zonal means of 10 m wind speed “10ff” over ocean (a) and significant wave height (b) in nextGEMS Cycle 3. Observations in black are from Copernicus Marine Service for wind speed (“ascat”; scatterometer combined with ERA5), and the ESA-CCI (v3) cross-calibrated altimeter record for wave height (“altimeter”).

The waves in the storm tracks are also significantly larger (Fig. 14b). The increased wind is likely partly responsible for this increase. A second factor likely playing a role is the change in fetch, i.e. the area of ocean over which the wind contributes to wave growth. A notable decrease in mean sea ice concentration (more than 10 %) takes place in the 4.4 km simulation (Fig. E1a), thereby freeing up the ocean surface there for wave growth. These changes can be seen directly in the wave field in the corresponding areas (Fig. E1b). These waves then continue to grow with the wind as they propagate into the Southern Ocean, thereby contributing to the larger waves seen in this region. For the NH storm track, this points to an improvement with respect to altimeter observations, but for the Southern Ocean the 4.4 km simulation now somewhat overestimates the waves.
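The zonal means over ocean shown in Fig. 14 can be sketched as follows. The example assumes that the field has already been interpolated to a regular latitude–longitude grid, on which all cells of one latitude row have the same area so that a plain average along longitude is sufficient; the function name and the toy data are hypothetical, and the actual post-processing used for the figure may differ.

```python
import numpy as np


def zonal_mean_over_ocean(field, ocean_mask):
    """Average a (nlat, nlon) field along longitude over ocean points only.

    ocean_mask is a boolean (nlat, nlon) array, True over ocean.
    Latitude rows without any valid ocean point are returned as NaN.
    """
    field = np.asarray(field, dtype=float)
    valid = np.asarray(ocean_mask, dtype=bool) & np.isfinite(field)

    # Sum only over valid ocean points and count them per latitude row.
    total = np.where(valid, field, 0.0).sum(axis=1)
    count = valid.sum(axis=1)

    # Avoid dividing by zero for rows that contain no ocean.
    return np.where(count > 0, total / np.maximum(count, 1), np.nan)


# Toy example: two latitude rows, the second one entirely land.
wind = np.array([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
ocean = np.array([[True, False, True], [False, False, False]])
print(zonal_mean_over_ocean(wind, ocean))
```

Restricting the average to ocean points matters for the comparison with scatterometer and altimeter products, which provide no data over land.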

3.3 Land

Performing simulations at the kilometre scale inherently brings a richer picture of the atmosphere and ocean in terms of small-scale features, as more scales become explicitly resolved. To gain the full benefit of the resolution over land, it is important that the surface information is also at an equivalent or finer resolution. Therefore, work at ECMWF in recent years has been directed at providing the IFS surface model ECLand (Boussetta et al., 2021) with global surface ancillary information at a resolution of 1 km or finer and at including additional processes that become relevant at those scales. These developments were always aimed at improving the operational IFS and therefore focused on timescales from days to a few months. nextGEMS simulations present a timely opportunity to test these changes in parallel before they become operational and to assess their impact when fully coupled on multi-annual timescales. Most of the developments in this section are described in more detail by Boussetta et al. (2021). In this section, nextGEMS Cycle 2 and Cycle 3 refer to IFS CY48r1 (ECMWF, 2023b) and CY49r1 (scheduled for 2024), respectively.

3.3.1 Kilometre-scale surface information

An improved land–water mask was included for nextGEMS Cycle 2. The original source belonging to the Joint Research Centre (JRC) had a nominal resolution of 30 m. The mask was further improved by including glacier data and new land–water and lake fraction masks. In parallel, lake depth data were improved (Boussetta et al., 2021).

Further changes to the land–water mask were tested in nextGEMS Cycle 3. The land use–land cover (LU/LC) maps used before nextGEMS Cycle 3 were based on GLCCv1.2 data (Loveland et al., 2000), which are derived from observations from the Advanced Very High Resolution Radiometer (AVHRR) covering the period 1992–1993. They had a nominal resolution of about 1 km. In nextGEMS Cycle 3, we used new maps, based on ESA-CCI, which exploit the high resolution of recent remote-sensing products down to 300 m and will pave the way for observation-based time-varying LU/LC maps in the future. Compared to the GLCCv1.2-based maps, these maps lead to a more realistic overall increase in low vegetation cover at the expense of high vegetation cover. The new conversion from ESA-CCI to the Biosphere-Atmosphere Transfer Scheme (BATS) vegetation types used by ECLand also reduces the presence of ambiguous vegetation types like “interrupted forest” or “mixed forest”. In addition, work has been done on upgrading the leaf area index (LAI) seasonality and its disaggregation into low- and high-vegetation LAI. This reduces, among other things, the previously found overestimation of total LAI during March–April–May (MAM) and September–October–November (SON). This revised description of the vegetation will also be used in the next operational IFS cycle (49R1), and an initial implementation and evaluation is presented in Nogueira et al. (2021).

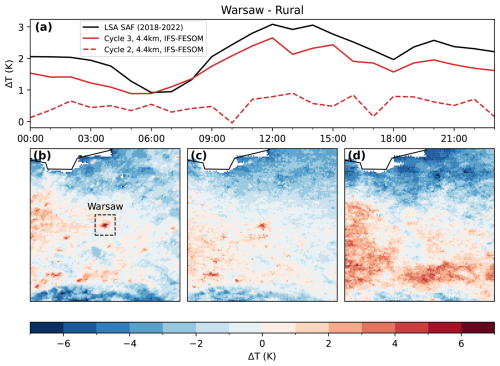

The thermodynamic effects of urban environments emerge at the surface as models refine resolution down to the kilometre scale, and the rural–urban contrast sharpens. To determine where to activate the urban processes at the surface, a global map of urban land cover is used in our nextGEMS Cycle 3 simulations. This map, based on information provided by ECOCLIMAP-SG at an initial 300 m horizontal resolution (McNorton et al., 2023; Faroux et al., 2013), will also be used in the next operational IFS cycle 49R1.

3.3.2 Kilometre-scale surface processes

The presence of the fine spatial information described above opens the path to simulate relevant kilometre-scale processes and interactions. In particular, the representation of snow, 2 m temperature, and urban areas was improved, as explained in the following.

A newly developed multi-layer snow scheme (Arduini et al., 2019) was implemented in IFS CY48r1 and was already used in nextGEMS Cycle 2, replacing the existing bulk-layer snow scheme. The new scheme dynamically varies the number of snow model layers depending on the snow depth and provides snow temperature, density, liquid water content, and albedo as prognostic variables. In addition, snow and frozen soil parameters were modified for improved river discharge (Zsoter et al., 2022) and permafrost extent (Cao et al., 2022). An additional upgrade in nextGEMS Cycle 3 was a package of changes to ECLand, which will be included in the next operational IFS cycle (49R1). This package contains an improved post-processing of 2 m temperature, reducing the warm bias that occasionally occurs under very stable conditions. It also contains a significant upgrade to the representation of the near-surface impact of urban areas. For this purpose, the urban scheme developed in ECLand was activated. This scheme considers the urban environment as an interface connecting the sub-surface soil and the atmosphere above (McNorton et al., 2021, 2023). The urban tile comprises both a canyon and a roof fraction. In terms of energy and moisture storage, the uppermost soil layer is not specific to the tile but represents a grid-cell average. This results in a weighted average that accounts for both urban and non-urban environments. The albedo and emissivity values used in radiation exchange computations (McNorton et al., 2021, 2023) are determined based on the assumption of an “infinite canyon”, taking into account “shadowing”. The roughness length for momentum and heat follows the model proposed by Macdonald et al. (1998) and varies according to urban morphology. Simplified assumptions regarding snow clearing and run-off are incorporated based on literature estimates (e.g. Paul and Meyer, 2001).
Illustrative examples of urban cover characteristics and the impact of accounting for urbanized areas in Cycle 3 vs Cycle 2 simulations are highlighted in Sect. 4.3.
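To illustrate how a roughness length can vary with urban morphology, the sketch below evaluates the morphometric relations of Macdonald et al. (1998) for the displacement height and the momentum roughness length as functions of mean building height, plan area fraction, and frontal area index. The coefficient values (`alpha`, `beta`, `c_d`) are the ones commonly quoted for staggered obstacle arrays and are assumptions here; the function name is hypothetical, and the values and implementation used in ECLand may differ.

```python
import math


def macdonald_roughness(height, lambda_p, lambda_f,
                        alpha=4.43, beta=1.0, c_d=1.2, kappa=0.4):
    """Return (displacement height, roughness length) in the units of height.

    height:   mean building height
    lambda_p: plan area fraction of buildings (0-1)
    lambda_f: frontal area index of buildings
    alpha, beta, c_d: empirical coefficients (assumed staggered-array values)
    kappa:    von Karman constant
    """
    # Displacement height: d/H = 1 + alpha**(-lambda_p) * (lambda_p - 1)
    d_over_h = 1.0 + alpha ** (-lambda_p) * (lambda_p - 1.0)

    # Argument of the exponential in the roughness length relation.
    inner = 0.5 * beta * (c_d / kappa ** 2) * (1.0 - d_over_h) * lambda_f
    if inner <= 0.0:
        # No frontal area (or fully built-up plan area): no form drag.
        return d_over_h * height, 0.0

    # z0/H = (1 - d/H) * exp(-inner**(-1/2))
    z0_over_h = (1.0 - d_over_h) * math.exp(-1.0 / math.sqrt(inner))
    return d_over_h * height, z0_over_h * height


# Example: 10 m buildings covering a quarter of the grid cell.
d, z0 = macdonald_roughness(10.0, lambda_p=0.25, lambda_f=0.25)
print(f"d = {d:.2f} m, z0 = {z0:.2f} m")
```

In this formulation the roughness length first grows with building density and then decreases again as the displacement height approaches the building height, which is the qualitative dependence on morphology referred to above.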

In this section, we will highlight three examples of notable advances in the Cycle 3 4.4 km nextGEMS simulations that emerge due to the kilometre-scale character of our simulations. Besides successes in the representation of the Madden–Julian Oscillation (MJO), an important variability pattern that is linked to the monsoons, we also provide examples of small-scale air–sea ice interactions in the Arctic and touch on atmospheric impacts due to the new addition of kilometre-scale cities in the IFS. We expect more in-depth process studies as part of ongoing analyses within the nextGEMS community and as part of dedicated future work.

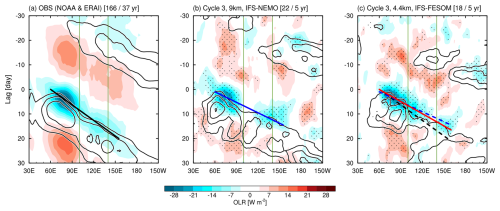

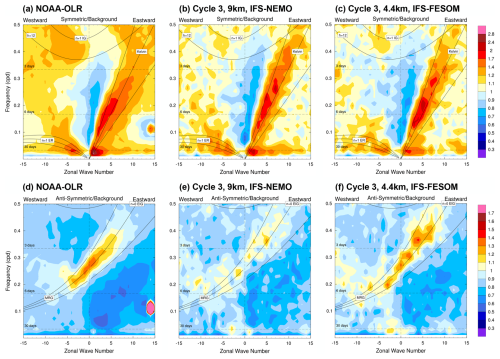

4.1 MJO propagation and spectral characteristics of tropical convection

The MJO is a dominant intraseasonal variability mode in the tropics, characterized by slow eastward propagation of large-scale convective envelopes over the Indo-Pacific Warm Pool (Madden and Julian, 1972). The MJO convection and circulations have profound impacts on weather and climate variability globally (Zhang, 2013); it is therefore important to reproduce the MJO in global circulation models (GCMs) targeting seasonal-to-decadal simulations. A well-represented MJO is also indicative of a better tropical or global circulation. Because the reproducibility of the MJO is highly sensitive to the treatment of cumulus convection (e.g. Hannah and Maloney, 2011), many conventional GCMs that adopt cumulus parametrizations, which have uncertainties in the estimation of cumulus mass fluxes and moistening and heating rates, still struggle to simulate important MJO characteristics such as amplitudes, propagation speeds, and occurrence frequencies (e.g. Ling et al., 2019; Ahn et al., 2020; Chen et al., 2022). Kilometre-scale simulations might alleviate this issue through a more accurate representation of moist processes, as suggested by the first successful MJO hindcast simulation with NICAM (Miura et al., 2007), although physical processes other than convection also play a role in skilful MJO simulations (Yano and Wedi, 2021).

Figure 15 illustrates the MJO propagation characteristics in the Cycle 3 4.4 km IFS-FESOM simulation in comparison with the observations and the 9 km IFS-NEMO simulation, using the MJO event-based detection method (Suematsu and Miura, 2018; Takasuka and Satoh, 2020). Note that the observational reference is derived from the interpolated daily outgoing longwave radiation (OLR) from the NOAA polar-orbiting satellite (Liebmann and Smith, 1996) and the ERA-Interim reanalysis (Dee et al., 2011) for the period 1982–2018. While the 9 km simulation already performs very well and both the 9 and 4.4 km simulations reproduce the overall eastward propagation of MJO convection coupled with zonal winds (Fig. 15b and c), the 4.4 km simulation improves further on amplitudes and propagation speeds. Specifically, MJO convective envelopes in the 4.4 km simulation remain continuously organized when they propagate into the Maritime Continent (see OLR anomalies in 100–120° E), and their propagation speeds are slower than in the 9 km simulation and thus closer to those observed. We hypothesize that kilometre-scale resolutions and partially resolved convection can better represent convective systems around complex land–sea distributions and topography. Nevertheless, the 4.4 km simulation still retains several biases compared to the observed MJOs, such as much faster propagation and weaker convection amplitudes to the east of 120° E (i.e. the eastern part of the Maritime Continent).

Figure 15. Propagation characteristics of MJO convection and circulations composited from (a) observations, (b) IFS 9 km simulation with NEMO, and (c) IFS 4.4 km simulation with FESOM. Time–longitude diagrams of lagged-composite intraseasonal OLR (shading) and 850 hPa westerly wind anomalies (contours) averaged over 10° N–10° S. Contour interval is 0.5 m s⁻¹, with zero contours omitted. Stippling in (b) and (c) denotes statistical significance of OLR anomalies at the 90 % level (all shading in (a) satisfies this significance). The number of detected MJO cases is denoted at the top of the figures together with analysis periods. Green lines indicate the longitudinal range over the Maritime Continent, and black, blue, and red lines indicate the centre of MJO convective envelopes for the observations, for the 9 km simulation, and for the 4.4 km simulation, respectively.
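The construction of a lagged-composite time–longitude diagram such as those in Fig. 15 can be sketched as follows. The example assumes that intraseasonally filtered daily anomalies and the reference days of the detected MJO events are already available; the event detection itself (Suematsu and Miura, 2018; Takasuka and Satoh, 2020) and the significance test are not reproduced. The function name, the lag window, and the cosine-latitude weighting are illustrative choices rather than the authors' implementation.

```python
import numpy as np


def lagged_composite(anom, lats, event_days, max_lag=30, lat_bound=10.0):
    """Composite a daily anomaly field around a set of event days.

    anom:       (ntime, nlat, nlon) daily anomalies (e.g. filtered OLR)
    lats:       (nlat,) latitudes in degrees
    event_days: time indices of the reference day of each event
    Returns (lags, composite) with composite of shape (2*max_lag+1, nlon).
    """
    anom = np.asarray(anom, dtype=float)
    lats = np.asarray(lats, dtype=float)

    # Meridional average over the equatorial band, weighted by cos(latitude).
    band = np.abs(lats) <= lat_bound
    weights = np.cos(np.deg2rad(lats[band]))
    equatorial = np.average(anom[:, band, :], axis=1, weights=weights)

    # Cut a window of +/- max_lag days around each event that fits the record.
    ntime = equatorial.shape[0]
    segments = [
        equatorial[day - max_lag: day + max_lag + 1]
        for day in event_days
        if day - max_lag >= 0 and day + max_lag < ntime
    ]
    if not segments:
        raise ValueError("no event has a complete lag window in the record")

    lags = np.arange(-max_lag, max_lag + 1)
    return lags, np.mean(segments, axis=0)


# Toy example: 10 days, 3 latitudes, 4 longitudes, value equal to the day index.
toy = np.arange(10.0)[:, None, None] * np.ones((10, 3, 4))
lags, comp = lagged_composite(toy, [-5.0, 0.0, 5.0], event_days=[3, 6], max_lag=2)
print(lags, comp[:, 0])
```

Plotting `comp` with longitude on the horizontal axis and `lags` on the vertical axis gives a diagram in which eastward propagation appears as a tilted band of anomalies.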