the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Accelerating models for multiphase chemical kinetics through machine learning with polynomial chaos expansion and neural networks

Matteo Krüger

Aryeh Feinberg

Marcel Müller

Ulrich Pöschl

Ulrich K. Krieger

The heterogeneous chemistry of atmospheric aerosols involves multiphase chemical kinetics that can be described by kinetic multi-layer models (KMs) that explicitly resolve mass transport and chemical reactions. However, KMs are computationally too expensive to be used as sub-modules in large-scale atmospheric models, and the computational costs also limit their utility in inverse-modeling approaches commonly used to infer aerosol kinetic parameters from laboratory studies. In this study, we show how machine learning methods can generate inexpensive surrogate models for the kinetic multi-layer model of aerosol surface and bulk chemistry (KM-SUB) to predict reaction times in multiphase chemical systems. We apply and compare two common and openly available methods for the generation of surrogate models, polynomial chaos expansion (PCE) with UQLab and neural networks (NNs) through the Python package Keras. We show that the PCE method is well suited to determining global sensitivity indices of the KMs, and we demonstrate how inverse-modeling applications can be enabled or accelerated with NN-suggested sampling. These qualities make them suitable supporting tools for laboratory work in the interpretation of data and the design of future experiments. Overall, the KM surrogate models investigated in this study are fast, accurate, and robust, which suggests their applicability as sub-modules in large-scale atmospheric models.

- Article

(3236 KB) - Full-text XML

-

Supplement

(389 KB) - BibTeX

- EndNote

An accurate description of the heterogeneous chemistry of atmospheric particles requires explicit coupling of mass transport with chemical reactions (Pöschl et al., 2007; Kolb et al., 2010; Shiraiwa et al., 2014). Especially for particles containing secondary organic matter, field and laboratory experiments during the last decade showed severe transport limitations that affect chemical reactivity (Shiraiwa et al., 2011; Kuwata and Martin, 2012; Berkemeier et al., 2016). While the elementary processes are well understood, kinetic multi-layer models (KMs) describing mass transport and chemical reactions at the gas–particle interface and throughout the particle bulk are computationally expensive due to the need for spatial resolution within the particles (Pöschl et al., 2007; Shiraiwa et al., 2012; Roldin et al., 2014; Berkemeier et al., 2017; Semeniuk and Dastoor, 2020; Dou et al., 2021). For use in global or regional models, the KMs would have to be evaluated for every grid cell, time step, and particle class (size and composition). This computational volume makes the application of KM extremely costly, if not outright impossible.

A second complicating factor for KMs is the multitude of chemical and physical input parameters, such as transport parameters or chemical reaction rate coefficients, which are often poorly constrained or unknown. Thus, in a laboratory setting, KMs are often used in an inverse-modeling approach, in which model parameters are deduced or constrained with experimental data using global optimization (Berkemeier et al., 2017; Tikkanen et al., 2019; Berkemeier et al., 2021; Wei et al., 2021; Milsom et al., 2022). However, due to the inherently coupled nature of the underlying physical and chemical processes, input parameters are often ill constrained; i.e., their numerical value cannot be uniquely determined (Berkemeier et al., 2017). This is particularly problematic when extrapolating the KMs to conditions outside the calibration range, where the calculation outcome can depend strongly on previously insensitive and thus unconstrained parameters (or combinations of parameters). Fit ensembles, i.e., arrays of multiple solutions from repeated execution of a global optimization algorithm, can be utilized to propagate the uncertainty of the global fit to conditions outside the calibration range (Berkemeier et al., 2021). Solving the inverse problem is a complex task that becomes computationally more expensive with an increasing number of uncertain model input parameters, often requiring >105 model simulations (Xu et al., 2018). In some cases, this can be prohibitively expensive to do with a full model, and the problem is exacerbated when acquiring or evaluating fit ensembles.

Computationally inexpensive surrogate models can replace KMs in specialized tasks and help solve the issue of computational cost. These surrogate models are trained on a dataset consisting of a wide range of kinetic input parameters and the associated calculated outputs until they reproduce the KM output with the desired accuracy. Surrogate-based optimization methods are an active field of research (Booker et al., 1999; Vu et al., 2017; Xu et al., 2018). Some studies use an iterative approach, wherein the surrogate model is used to constrain the likely parameter space, and the full model is run within this likely parameter space to refine the surrogate model. Here, we illustrate the generation of surrogate models by introducing two suitable machine learning methods, namely artificial neural networks (NNs) through the Python package Keras (Gulli and Pal, 2017) and polynomial chaos expansion (PCE) with UQLab (Marelli and Sudret, 2014).

Artificial NNs represent a group of common machine learning algorithms. Their functionality is inspired by biological brains, where complex computational processes are based on comparably simple interactions of large numbers of interconnected nodes or neurons (Kröse and van der Smagt, 1996). Neural networks are commonly organized in layers, where an individual neuron obtains signals from neurons in the previous layer and maps them to a single new signal that is passed to neurons of the following layer (Almeida, 2001; Popescu et al., 2009). By systematic variation of the numerical weights of individual neuron operations, the so-called training, an NN can increase its predictive accuracy. The exact mathematical operations that are performed by neurons in specific layers and the arrangement of such layers (architecture of the NN) are determined by so-called hyperparameters. Hyperparameters can be adapted to obtain an NN that is specialized for a specific task, input data structure, or output type (Bishop, 1994; Sadeeq and Abdulazeez, 2020).

In the atmospheric sciences, NNs are used for air quality prediction, function approximation, and pattern recognition tasks (Gardner and Dorling, 1998), but their application as surrogate models for computationally expensive KMs is less well researched. Recently, popular applications of machine learning in atmospheric chemistry and physics include quantitative structure–activity relationship (QSAR) models that map molecular structures to compound properties as an alternative to time-consuming laboratory experiments or quantum mechanical calculations (Lu et al., 2021; Lumiaro et al., 2021; Galeazzo and Shiraiwa, 2022; Krüger et al., 2022; Xia et al., 2022). Holeňa et al. (2010) used surrogate models in computationally costly evolutionary optimization and successfully enhanced this approach with the application of NNs. Tripathy and Bilionis (2018) used an NN to create surrogate models for expensive high-dimensional uncertainty quantification. Other recent applications of NNs as surrogate models address chemical and process engineering (Cavalcanti et al., 2021; Esche et al., 2022) or materials science (Allotey et al., 2021). Machine-learning-based surrogate models have also found application as modules in geoscientific models, including large-scale atmospheric chemistry, transport, and climate models, to reduce computational cost in very demanding tasks such as atmospheric convection (O'Gorman and Dwyer, 2018), gas-phase and heterogeneous chemistry (Keller and Evans, 2019; Kelp et al., 2020; Sturm and Wexler, 2022), or aerosol and cloud microphysics (Rasp et al., 2018; Harder et al., 2022). These surrogate models function either as parameterizations for subgrid processes or replace the chemical integrator.

The second method applied in this work is polynomial chaos expansion (PCE), a method commonly used for uncertainty quantification (Sudret, 2008). In the PCE approach, the full model is represented as a series of suitably built, multivariate, and orthonormal polynomial functions (Marelli and Sudret, 2014). Surrogate models using PCE methods have been developed mainly within engineering fields (Ghanem and Spanos, 2003; Sudret, 2008). Several recent environmental chemistry investigations have applied PCE surrogate modeling, particularly because of its suitability for global sensitivity analysis problems (Thackray et al., 2015; Feinberg et al., 2020). The goal of global sensitivity analysis is to apportion the uncertainty in model output into contributions from the uncertainties of different model input variables, additionally considering interacting effects between input parameter uncertainties (Saltelli et al., 2008). The results from the sensitivity analysis indicate which are the most influential input parameters that should be further constrained and may therefore be a useful tool in designing or prioritizing laboratory experiments.

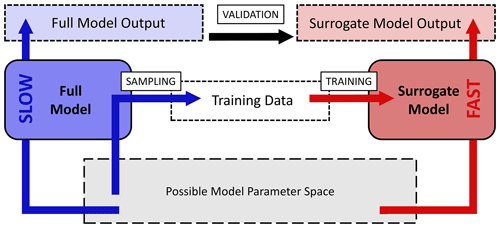

The surrogate-modeling workflow employed in this study is shown in Fig. 1. To acquire a fast-computing surrogate model for the computationally expensive KMs, training data are first acquired by sampling outputs of the full model from the possible model parameter space. The surrogate models are trained with Keras and UQLab on this data and are validated by comparison with a test dataset of full model output.

Figure 1Workflow chart for the surrogate-modeling process employed in this study. The possible or desired model parameter space (gray) is sampled with the slow-computing full model (blue) to acquire training data consisting of model input–output pairs. Training data are used for training of a fast-computing surrogate model (red). Surrogate models are validated by comparison of full-model output and surrogate-model output.

2.1 Kinetic multi-layer model KM-SUB

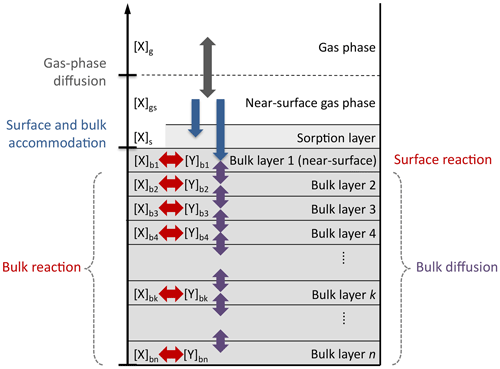

In this study, we employ the kinetic multi-layer model of aerosol surface and bulk chemistry (KM-SUB; Shiraiwa et al., 2010), but the statistical methods could be used with any process model. KM-SUB describes mass transport and chemical reaction at the surface and in the bulk of aerosol particles by solving a set of ordinary differential equations. The model explicitly treats gas diffusion, surface and bulk accommodation of gas molecules, surface–bulk exchange, and bulk diffusion, as well as chemical reaction at the surface and in the bulk of aerosol particles. For a schematic depiction of the processes and compartments of KM-SUB, see Fig. B1.

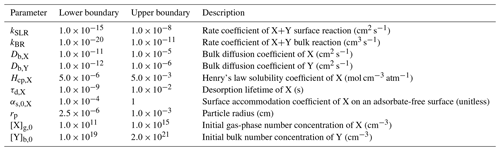

For the model calculations in this study, we chose a general model scenario of a single volatile reactant X (e.g., OH, O3, NO3) reacting with a single non-volatile reactant Y at the surface and in the bulk of the aerosol particle. The input parameters of KM-SUB resulting from this scenario include initial concentrations, reaction rate coefficients, and diffusion coefficients (Table 1). The outputs of KM-SUB are concentration profiles over space and time, but in this study, we summarized KM-SUB output as the total number of Y in a single aerosol particle at time t (NY,t). To minimize data storage requirements, we reduce the full KM-SUB time series to three output values, the time required to reach 90 %, 50 % (i.e., the chemical half-life), and 10 % of NY,0 by interpolation of the primary model output. The inputs and outputs of KM-SUB are then log-transformed. For the NN application, all input parameters and model outputs are additionally normalized to the interval [0:1]. Outputs are normalized by dividing by the longest time recorded to reach 10 % of NY,0.

Table 1KM-SUB input parameters with lower and upper boundaries and fit parameters to the laboratory dataset.

For each input parameter of KM-SUB, individual parameter boundaries are defined, representing a wide array of reactants and scenarios that can be found in either the atmosphere or in laboratory experiments (Table 1). As these ranges cover orders of magnitude, they are assumed to follow log-uniform probability distributions. The parameter space includes liquid to semisolid particles (as expressed by the reactant diffusivities) from 50 nm to 100 µm in size. Reaction rate coefficients range from reactivity close to the diffusion limit, typical for the OH radical (1×1011 cm3 s−1), down to reactions that are 9 orders of magnitude slower, and they may be associated with reactions involving ozone. The volatile reactant X is given a large variability in terms of partitioning properties (as expressed by surface accommodation coefficient αs,0 and desorption lifetime τd) and solubility properties (as expressed by the Henry’s law coefficient), each varying over several orders of magnitude. The initial concentration of non-volatile reactant Y ranges from 1019 to 2×1021 cm−3, which, for an organic substance with a molar mass of 250 g mol−1, corresponds roughly to a molar fraction from 0.5 % in relation to pure particles. The concentration of X in the gas phase is held constant over a simulation and is varied between simulations from a few parts per billion (1011 molec. cm−3) to about 200 parts per million (5×1015 molec. cm−3). For the explicit treatment of gas diffusion, we assume a temperature of 298 K and a fixed diffusion coefficient of 0.14 cm2 s−1.

2.2 Acquisition of training data

The KM is used to generate a training dataset for the surrogate models by randomly sampling parameters in log-uniform space within their associated boundaries. The number of KM samples obtained in this study is about 4.3×106 and required supercomputing. A random set of 1000 samples is removed from the dataset and withheld from model training for the visualization and validation of fully trained surrogate models. We refer to this set of data as test data.

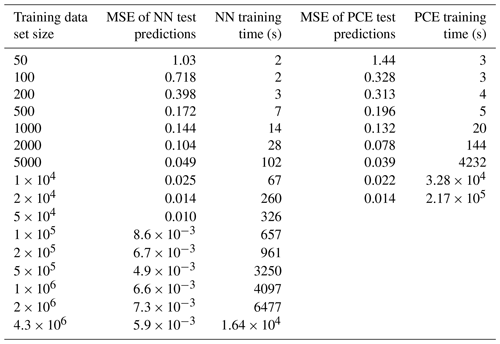

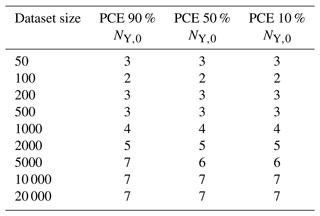

As not only the computational effort of sampling training data but also the time required for surrogate-model creation increases with the size of the training dataset, the surrogate-model performance is tested on different fractions of the total training dataset in order to find an optimal or sufficient computational expense for a given application (Table 2). Note that the PCE method is only applied to the first nine fractions (50–20 000) due to the computational expense of the method at higher training-set sizes.

2.3 Neural network (NN)

The neural network architecture employed in this study is a multi-layer perceptron (MLP), in which nodes are organized in consecutive layers. MLPs are characterized by a chosen number of so-called hidden layers that connect the visible input and output layers. Each node in a layer is connected with each node in the previous and following layers (fully connected layers). We test MLPs consisting of up to five hidden layers with variable numbers of neurons to determine a network architecture that suits the specified task. A detailed mathematical description of MLP functionality and architecture is given in Appendix A1. The processes of hyperparameter tuning, tested ranges, and suggested values for individual hyperparameters are described in Appendix A2. We apply 5-fold cross-validation to avoid over-fitting of the trained models during hyperparameter tuning (Stone, 1974; Wong and Yeh, 2020).

2.4 Polynomial chaos expansion (PCE)

The PCE surrogate-modeling approach will be briefly summarized here. For more technical descriptions, the reader can refer to Sudret (2008) and Le Gratiet et al. (2017). The principle behind PCE is that the model output Z is decomposed into an infinite series as follows (Ghanem and Spanos, 2003):

where M is the number of model input variables, α is a multi-index that defines the variable components of the polynomials, yα refers to coefficients, and ψα refers to orthonormal polynomials of either one input variable (representing first-order effects) or multiple input variables (representing interacting effects). The type of orthonormal polynomial in Eq. (1) depends on the probability distribution of the input parameters, with uniform probability distributions being represented by Legendre polynomials and Gaussian probability distributions being represented by Hermite polynomials (Xiu and Karniadakis, 2002). In practice, Eq. (1) is truncated by restricting the maximum degree of the polynomials. We calculate PCE coefficients (yα) using the implementation of least-angle regression (Blatman and Sudret, 2010) from the open-source MATLAB-based software UQLab (Marelli and Sudret, 2014). This software allows degree-adaptive calculation of the PCE, meaning that PCE models can be constructed from degree 1 to a maximum selected degree, which we set to 14. If the cross-validation error of the model does not decrease over two steps in degree, the algorithm stops, and the PCE with the lowest cross-validation error is selected. All PCEs calculated for this study are equal to or below degree 7 (Table A1).

2.5 Global sensitivity analysis

In global sensitivity analysis, Sobol’ indices describe the contribution of uncertainty from each input parameter and interactions between input parameters (Sobol', 2001). The variance D of the model output Z is decomposed into partial variances as follows:

i.e., the sum of first-order partial variances (Di), second order partial variances (Dij), and higher order terms. Sobol’ indices (S) are calculated by normalizing the partial variances by the total variances, e.g., for the first-order contribution of ith input parameter and for the contribution of the interaction between the ith and jth input parameters to the model uncertainty. In order to summarize the overall influence of a specific input parameter, including interactions, a total Sobol’ index () can be calculated:

Given the similarities between the PCE and Sobol’ decompositions, the Sobol’ sensitivity indices can be calculated analytically from the PCE coefficients rather than with Monte Carlo sampling (Sudret, 2008). This eliminates a potentially computationally expensive step of the sensitivity analysis process using other surrogate models.

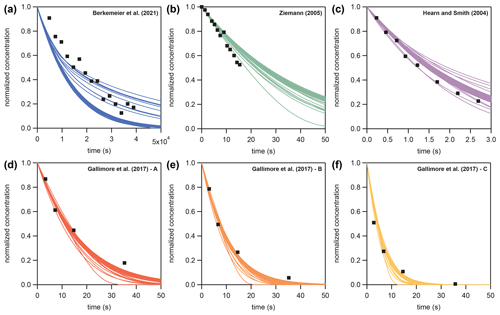

2.6 Acquisition of fit ensembles

With the trained NN model, we illustrate and test the application of surrogate models in inverse-modeling approaches with KM-SUB. Six sets of experimental data of the well-studied oleic acid ozonolysis heterogeneous reaction system (Hearn and Smith, 2004; Ziemann, 2005; Gallimore et al., 2017; Berkemeier et al., 2021) are used to determine kinetic parameter sets that minimize the mean squared (absolute) logarithmic error (MSLE) between model and experiments. More details about the specific optimization problem can be found in Appendix B.

where N is the number of experimental datasets, n is the number of data points in each set, zij is the model output, and yij is the value for experiment i and data point j. As this optimization problem does not offer a unique solution (Berkemeier et al., 2021), the aim is not to find a best-fitting parameter set but rather to find a fit ensemble, i.e., an array of parameter sets that all yield a sufficient agreement of the associated KM-SUB outputs with the experimental data. The fit ensemble then not only represents the ranges to which kinetic input parameters could be constrained but is also a means of assessing the uncertainty associated with the KM-SUB model fit when extrapolating the model to environmental conditions outside the calibration range (Berkemeier et al., 2021). For both purposes, the number of model fits in the ensemble must be sufficiently large to fully grasp the remaining model flexibility. The process of determining such a large set of fits can be computationally expensive. A surrogate model can either fully replace the KM or assist in the fitting process by suggesting sampling points.

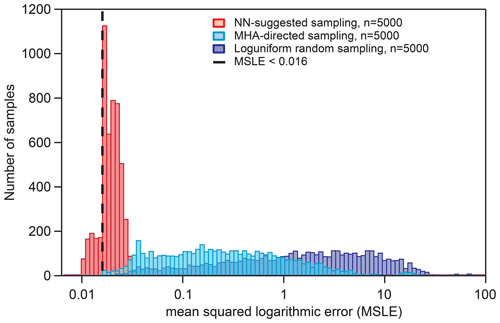

In this study, we evaluate the benefits of surrogate-model-supported sampling by comparing the distribution of KM-SUB output MSLE for three different sampling approaches within the parameter boundaries presented in Table 1. These approaches are

-

random log-uniform sampling,

-

Metropolis–Hastings algorithm (MHA)-directed sampling,

-

NN-suggested sampling.

We choose an MSLE of 0.016 as representing sufficient agreement between model and experiment. For NN-suggested sampling, we perform a random log-uniform screening of the NN surrogate model in batches of 10 000 samples until we find 5000 NN-suggested fits with MSLE <0.016; we then feed these pre-sampled parameter sets into KM-SUB. We refer to KM-SUB outputs with an MSLE below 0.016 as fits.

As a directed-sampling approach, we apply the Metropolis–Hastings algorithm (MHA), a common Markov chain Monte Carlo method to sample multivariate distributions with high numbers of dimensions (Chib and Greenberg, 1995; Robert and Casella, 1999). We determine the maximum step size of the MHA by basic testing on smaller subsets, and we find that a step size of 0.1 is a good compromise between a high acceptance ratio and sufficient exploration of the entire parameter space. Here, step size is defined as the maximal parameter variation as a fraction of the total logarithmic parameter space. For comparability of the aggregate computational effort, each sampling is performed on an 11th Generation Intel(R) Core(TM) i5-1145G7 CPU with 2.6 GHz.

2.7 Hardware and software tools

Training-data acquisition with KM-SUB was performed in MATLAB on the high-performance computing system Cobra at the Max Planck Computing and Data Facility (MPCDF). Model training of the NN was performed in Python 3.6 using the packages Keras 2.3.0 (Chollet et al., 2015), TensorFlow 1.14.0 (Abadi et al., 2015), scikit-learn 0.22.1 (Pedregosa et al., 2011), NumPy 1.18.1 (Harris et al., 2020), and pandas 0.25.3 (McKinney et al., 2010). Each model training was conducted on one NVIDIA GeForce GTX 1080 Ti on the high-performance computing cluster Mogon of the Johannes Gutenberg University Mainz. For the PCE and sensitivity analysis, we use the MATLAB-based software UQLab 1.3 (Marelli and Sudret, 2014), which provides a framework for surrogate modeling and uncertainty quantification. We performed PCE calculations on ETH Zurich's high-performance computing cluster Euler, using four CPUs per PCE calculation and up to 45 GB of memory for the largest sample size (20 000).

To determine training times of the NN and PCE models, the required time for sample loading and file writing is disregarded, and only the true training time is reported. For the PCE method, the time to reach 90 %, 50 %, and 10 % of the initial amount of Y, NY,0, is calculated by three separate models, and training times are added to yield a combined training time for each training sample size. For the NN method, one model can be set to return multiple values as output; thus, a single model is used for each dataset to predict all three output values collectively.

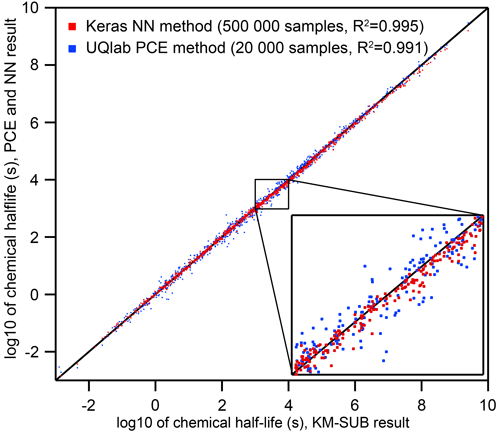

Figure 2Comparison of the two surrogate models predicting the chemical half-life for heterogeneous chemistry on aerosol particles for a wide range of KM-SUB output (N=1000 – test dataset not part of the training dataset). The surrogate models were trained on 20 000 (PCE) and 500 000 (NN) KM-SUB data samples, respectively. Training times of models with this complexity fall below an upper feasibility range on a personal computer within a few days of time. The inset shows a magnified section and spans from chemical half-lives of 103 s (≈15 min) to 104 s (≈3 h), a common range for laboratory experiments.

3.1 Surrogate-model training, accuracy, and speed

Neural networks (NNs) and polynomial chaos expansion (PCE) are used to emulate the reaction time of a multiphase chemical system in KM-SUB. Table 2 displays the test set errors and training times of surrogate models with the NN and PCE methods as a function of training-dataset size. The best surrogate models achieve mean square errors (MSEs) for logarithmic reaction times of 0.0049 for the NN method and 0.0137 for the PCE method. This corresponds to correlation coefficients R2 of 0.995 and 0.991, respectively. Figure 2 shows that these optimal versions for both surrogate models track the chemical half-life in the test dataset remarkably well. The MSE of test predictions is very similar between both approaches for the same training-dataset size. Error variance of the five cross-validation NN models for the unseen test data is very low at , indicating little to no over-fitting. We found no significant correlations between surrogate-model error and the values of the 10 model input parameters (Fig. S1 in the Supplement).

For dataset sizes above 2000, the PCE model requires much more training time than the NN model. However, note that these training times of individual NN models disregard the necessity of hyperparameter tuning. While hyperparameter tuning is not required in an already established application, the total computation times of NN surrogate-model training and hyperparameter tuning can be 2 orders of magnitude larger, depending on the extent of the hyperparameter tuning that is performed. Hence, the use of an NN method is advisable when a large amount of training data are easily available and when model accuracy is of high importance.

The PCE method, on the other hand, is limited in terms of training-dataset size (≤20 000) as a result of the calculation time and memory requirements in MATLAB. The PCE method is thus a good choice if the training dataset is small or if its acquisition is time limiting and when time-consuming hyperparameter tuning is not desired.

Both surrogate models calculate new output data orders of magnitude faster than the full model, KM-SUB. The computation time of KM-SUB lies on the order of a few seconds per model run, while both the PCE and NN methods can generate large arrays of 10 000 individual surrogate-model solutions in under 1 s.

3.2 Prediction of chemical loss and half-life

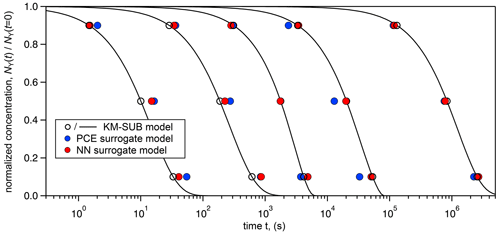

Figure 3 visualizes the accuracy of the surrogate models (training-set sizes 20 000 for PCE method and 500 000 for NN method) by generating five concentration–time curves from various input parameter combinations and comparing them to the full KM-SUB model. Input parameter sets were arbitrarily selected from the test set so that the results were spaced out homogeneously across KM-SUB chemical half-lives. We see that, over the wide range, both surrogate models closely represent the KM output, with the NN method slightly outperforming the PCE method as result of the larger training-set size.

Note that both methods are able to produce relatively good surrogate models (MSE ≈0.1) from only 1000 training-data samples (Table 2), which, depending on the user's application, may already be accurate enough. We conclude that KM-SUB is a rather well-behaved model and that it is suitable for these surrogate-modeling techniques.

Figure 3Comparison of time-dependent output of the surrogate models (PCE method – blue markers; NN method – red markers) with KM-SUB model output (solid black lines) for five arbitrarily chosen KM-SUB runs spanning seconds to weeks of reaction time. The surrogate models' predicted time for depletion of 10 %, 50 %, and 90 % of reactant Y in the aerosol phase. KM-SUB output at these three stages is highlighted with open black markers.

3.3 Global sensitivity analysis with surrogate models

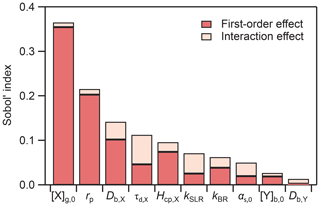

An advantage of using a PCE surrogate model is that the Sobol' sensitivity indices can be extracted analytically (Sudret, 2008). We present the global sensitivity analysis for the 50 % lifetime (i.e., the chemical half-life) PCE model in Fig. 4. We can differentiate between first-order effects of a model input parameter, wherein the parameter alone influences the output, and interaction effects, wherein combinations of parameter values influence the output. In Fig. 4, first-order effects dominate the total effect, accounting for 88 % of the model variance. Using the total Sobol' indices (ST) as a metric, we can assess the overall influence of individual model parameters on the uncertainty of the model output. The input parameters with the largest influence on the chemical half-life of Y are the initial gas-phase concentration of X ([X]g,0, ST=0.36) and the radius of the particle (rp, ST=0.22). Certain parameters have a very low influence (ST≤0.05) on the chemical half-life, including the accommodation coefficient (αs,0,X), the initial concentration of Y ([Y]b,0), and the bulk diffusion coefficient of Y (Db,Y). This means that variations in these parameters will, in many cases, not have a large effect on the chemical half-life, indicating that it will be difficult to constrain these parameters with measurements. Sensitivity analysis is thus a useful tool to understand model behavior and to identify parameters which have the largest influence on model output.

Figure 4Results of global sensitivity analysis showing Sobol' sensitivity indices for the chemical half-life PCE model.

It has to be noted that a low global sensitivity across the entire input parameter space does exclude the possibility that pockets in the parameter space exist where either of these parameters are very influential. Constraining the input parameter space to smaller subsets can constrain the model to special kinetic regimes or limiting cases that exhibit characteristic profiles of parameter sensitivity (Berkemeier et al., 2013).

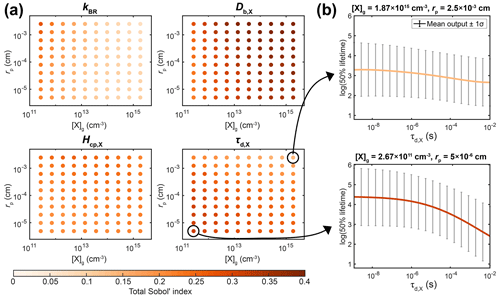

In most laboratory experiments, the particle radius and the initial concentration of X are known values. By fixing these parameters in the sensitivity analysis, a substantial fraction of the model variance is eliminated, and other unknown parameters account for a more significant fraction of the overall model variance. To demonstrate how the importance of parameters varies over different experimental conditions, we conducted sensitivity analyses by sampling the PCE surrogate model for specified values of [X]g,0 and rp (Fig. 5a). Certain input parameters are consistently important across the range of experimental conditions, e.g., oxidant diffusivity (Db,X) and solubility (Hcp,X). Other parameters, including kBR and τd,X, have varying influences depending on the experimental conditions. For example, at a high [X]g,0 and for large rp, the total Sobol' index of τd,X is 0.14. Accordingly, the upper panel of Fig. 5b shows that the chemical half-life of Y only decreases slightly with increasing τd,X. In contrast, at low [X]g,0 and for small rp, the total Sobol' index increases to 0.31. In the lower panel of Fig. 5b, the chemical half-life of Y shows a stronger dependence on τd,X. This can be understood because, for small particles, surface processes are more important, and the surface concentration of X depends on its lifetime for desorption, especially at low gas-phase concentrations. This information could be potentially useful for an experimental researcher, as it shows that experiments at low [X]g,0 and small rp could be more helpful for constraining τd,X than experiments under other experimental conditions.

These calculations would have been very time consuming when carried out with the full KM. Hence, the combination of surrogate modeling and sensitivity analysis is a helpful yet underutilized tool for designing experiments that are best suited to constraining certain model parameters.

Figure 5Detailed sensitivity analysis with the PCE method as a function of experimental conditions, i.e., the gas-phase concentration of X ([X]g,0) and particle radius (rp). (a) Total Sobol' indices of four KM input parameters: bulk reaction rate coefficient of X and Y (kBR), bulk diffusion coefficient of X (Db,X), solubility coefficient of X (Hcp,X), and desorption lifetime of X (τd,X). (b) Relationship between the value of τd,X and the chemical half-life of Y for two selected experimental conditions.

3.4 NN-supported global optimization

Utilizing the NN surrogate model, we illustrate the accelerated acquisition of parameter sets associated with KM-SUB outputs in good agreement with experimental data, which is the key step in inverse-modeling and optimization approaches. While uncertainty is introduced by surrogate models, their predictions can be obtained orders of magnitude faster than regular KM-SUB calculations. The uncertainty introduced by the NN method can be minimized by additional sampling of a much smaller number of parameter sets with the KM. Re-sampling of NN-suggested solutions with the KM can avoid collection of false-positive fits (i.e., meeting the conditions for a fit in the NN model but not in KM-SUB), and sampling in close vicinity of NN-suggested solutions might avoid false-negative fits (i.e., not meeting the conditions for a fit in the NN model but in KM-SUB).

We perform random parameter sampling in log-uniform space using the boundaries presented in Table 1, and we find about 5000 NN-suggested fits in 1.84×107 parameter sets (0.027 % acceptance), requiring a total of 13 847 s (<4 h). A comparable calculation with KM-SUB would take years on a desktop computer or days on a supercomputer. In contrast, re-sampling of the NN-suggested fits with KM-SUB to avoid false-positive fits is time-consuming but feasible. The time required for sampling 5000 kinetic parameter sets (i.e., 5000×6 runs in KM-SUB) on a desktop computer ranges from 51 646 s (≈14 h) for NN-suggested sampling to 103 530 s (≈29 h) for random log-uniform sampling. The differences may be a result of the fraction of parameter sets where differential equation calculations of the KM require a very long time to terminate. They are often associated with very long reaction times and thus with large MSLEs.

Figure 6Distribution of KM-SUB output MSLEs for three different sampling methods in comparison with six sets of experimental data, as described in Sect. 2.6. The dashed vertical line represents the threshold used for the acquisition of NN-suggested fits (MSLE <0.016). The maximum step size for the MHA-directed sampling is 0.1.

Figure 6 shows the distributions of KM-SUB output MSLE for three different sampling methods: log-uniform random sampling, MHA-directed sampling, and NN-suggested sampling (Sect. 2.6). The NN-suggested sampling method greatly outperforms both random and MHA-directed sampling. The number (fraction) of KM-SUB outputs with an MSLE <0.016 is 1602 (32.04 %) for NN-suggested sampling, 21 (0.42 %) for directed KM-SUB sampling, and 3 (0.06 %) for random sampling.

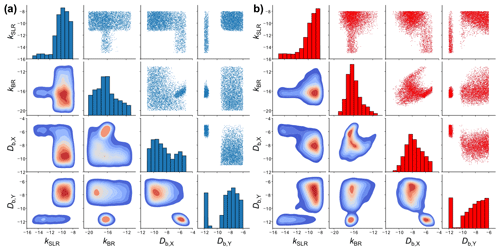

Figure 7Scatter plot matrices of the fitting parameter space of 5000 fits to six experimental datasets of the ozonolysis of oleic acid aerosols (Appendix B) obtained with (a) KM-SUB and (b) the NN surrogate model. Shown are four out of seven optimized kinetic parameters. The diagonal elements are histograms showing the distributions of the individual fit parameter densities. The off-diagonal elements are scatter plots (top right) or densities (bottom left) of solutions for all possible combinations of two kinetic parameters. The KM-SUB fit ensemble originates from the application of the MHA with a step size of 0.1 and the NN fit ensemble from log-uniform random sampling.

Figure 7 compares the fitting parameter space of 5000 fits obtained with KM-SUB (panel a) and the NN surrogate model (panel b), exemplary for four kinetic parameters in a so-called scatter plot matrix. The off-diagonal elements in each matrix show bivariate scatter plots (top right) or density plots (bottom left) depicting the relationship of two kinetic parameters within the fit ensemble. The diagonal elements are histograms showing frequency distributions of the individual parameters. The two scatter plot matrices show a clear resemblance in terms of the fit parameter spaces between the surrogate model and the original KM. Much like the scatter plots of the original-model fits, the scatter plots of the surrogate-model fits can be used to identify areas that will not produce a fit to experimental data. For example, there are no fits with a slow surface reaction rate coefficient (kSLR) and a high oxidant solubility (Hcp,X). However, some features in the scatter plots of the surrogate model deviate from those in the scatter plots of the original KM. We can visually identify areas in the scatter plots that indicate false-positive fits, i.e., areas that are only occupied in the plots for the surrogate model. An absence of density in other areas, compared to the plots for the original model, suggests the existence of false-negative fits.

Whether it is worthwhile to train a surrogate model for a given optimization task depends strongly on the complexity of the KM and the difficulty of the optimization problem. For every application, there is a break-even point where the computational expense of training a surrogate model is compensated for by the acceleration of the optimization task(s). In this study, the computational effort required to obtain the training data for the best-performing surrogate model (500 000 KM-SUB sample runs) would only find ∼350 fits if we had directed this initial sampling effort into fit acquisition using only KM-SUB. This is due to the very low fraction of fits (0.42 %) without the aid of surrogate models and because KM-SUB has to be evaluated six times, once for each laboratory dataset. Thus, if the uniqueness of an optimization result must be determined, large amounts of laboratory data are available, or simply, if global optimization of the same model is required on a regular basis, training of a surrogate model for this task quickly becomes worthwhile.

In this study, we illustrate the application of artificial neural networks (NNs) and polynomial chaos expansion (PCE) to generate fast surrogate models for computationally expensive kinetic models (KMs). As a template KM, we use the kinetic multi-layer model of aerosol surface and bulk chemistry (KM-SUB; Shiraiwa et al., 2010), but the presented methods can equally be applied to other process models. To reduce data storage requirements in sampling and to simplify emulation, the complex model output of KM-SUB, i.e., the concentration profiles of all reactants over space and time, is reduced to the reaction time of the system to reach a certain reaction progress, as this is a typical observable in laboratory experiments. We note that other derivatives of KM-SUB model output, such as the uptake coefficient of the reactant gas to the aerosol surface, could be chosen depending on the target application of the surrogate model. Emulation of the entire KM-SUB output may be feasible and could be facilitated by data compression methods such as auto-encoders, singular-value decomposition, or principal component analysis.

Our findings suggest that, after an initial investment of computational effort for training-data sampling and model training, both methods yield models with very good correlations to KM-SUB outputs (R2>0.99). Furthermore, we provide examples for the application of such surrogate models for inverse modeling and kinetic parameter optimization: global sensitivity analysis with the PCE method and acceleration of global optimization with the NN method. The results indicate that surrogate models can aid in costly optimization tasks or help to select environmental system parameters for experiments that significantly constrain KM solution space and thus global fit uncertainty.

It is important to note that errors of surrogate models are not simply based on a random deviation of surrogate-model predictions from the values of the original KM but on a divergence of the predicted parameter hyper-surface in specific areas, for instance where training data are sparse. False-positive fits, i.e., parameter sets with associated surrogate-model predictions in better agreement with experimental data as the delineated KM output, can simply be eliminated by re-sampling the parameter sets in question with the KM (Fig. 6). On the other hand, false-negative fits and their implications for inverse-modeling approaches are much more difficult to address. While optimization hyper-surfaces can be scanned relatively quickly with a surrogate model, this is not the case for the much slower KM. Scatter plot matrices of the fitting parameter space are a valid means of identifying areas that are occupied by false-negative fits, but a proper comparison (Fig. 7) requires computationally costly sampling with the KM.

Another potential application of surrogate models for KM is their utilization as modules in large-scale chemical transport models. As such models often require many calls of the respective module, direct use of models such as KM-SUB, where calculation time is on the order of seconds, is not feasible. Trained, predictive surrogate models, however, can easily be integrated into existing modeling programs. This potentially allows the coupling of small-scale kinetic process models with large-scale chemical transport models for the simulation of weather, pollution, and climate. Kelp et al. (2022) recently demonstrated acceleration of a global model with an online-learned NN as a chemistry module. The machine learning models presented in this study could be embedded in existing FORTRAN code in a similar fashion.

A1 Neural network architecture

A multi-layer perceptron (MLP) represents a complex, non-linear function that maps an input to an output vector. Each individual node in an MLP represents a non-linear function, mapping from the sum of its inputs to an output, which is passed to the following interconnected nodes. Connections between nodes are associated with weights that are optimized during training in order to reduce model output error in comparison with the dataset values. For this purpose, an optimization algorithm is used to minimize a previously defined loss function based on the final model output. In their entirety, these weights determine the output of the MLP based on a specific input, and their adaptation, based on the training data, represents the learning process. The following equations show the principal mathematical functionality of neurons in an MLP, as elaborated upon in Kröse and van der Smagt (1996):

where sk(t) is the effective input of a neuron k at time t, wjk is the weight between neuron j and k, and yj(t) is the activation of the previous neuron j. This equation represents the input of a single computational node in the NN, which is based on the activation of connected previous nodes and the associated (trained or initialized) weights. Θk(t) represents an offset term. Of this so-called propagation rule, different adaptations have been proposed (Feldman and Ballard, 1982).

This equation introduces the activation function of neuron k (Fk) that maps the neuron input sk(t) and the current activation yk(t) of the neuron to a new activation value. A common type of the activation function is a sigmoid-like function, as shown in the following equation:

The definition of the input and activation functions of neurons determines the output of any NN given a specific input and a set of weights. NN model training or learning describes the process of iterative modification of weights in order to shift the output in a desired way. In most cases, this desired shift is a reduction of error towards the associated predictable values in the underlying population associated with the training data. If the model is well fitted to the training data but predicts further data of the same population with much larger error, it is referred to as over-fitted. Over-fitting describes overall ill generalization of an NN model. A common learning rule for nodes, the so-called perceptron learning rule, is shown in the following equation:

Table A1Employed polynomial degree of the three PCE models (90 %, 50 %, and 10 % lifetime) as function of training-dataset size.

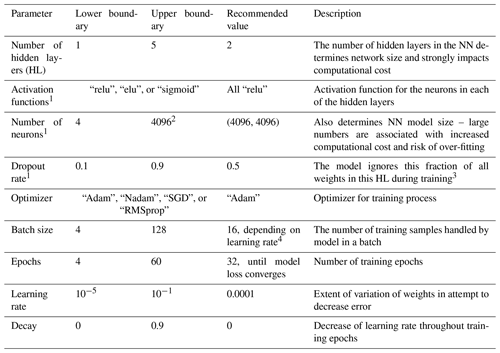

Table A2Descriptions and tested ranges for neural network hyperparameters used in the Python package Keras, as well as the recommendation based on our best-performing model.

1 Must be set for each individual HL. 2 Larger numbers of neurons per layer lead to over-fitting and, with the hardware setup in this study, memory limitations on the computational cluster. 3 A random fraction of weights obtained in previous training, determined in size by this parameter, is not considered during the current training. This handicap or restriction ensures that the model is not capable of just saving or learning all the inputs and associated outputs in the training dataset throughout multiple training epochs (as this would be over-fitting). 4 A larger batch size decreases training time and requires higher learning rates.

In order to adjust the weights, the output of the NN is compared with the associated training-data values. If the prediction is inaccurate, the modification Δwi is applied. For this iterative adjustment to be target-oriented, an optimizer is necessary to reduce the prediction error of the NN during training. Different optimizers are commonly used in machine learning applications, such as simple gradient methods like stochastic gradient descent (SGD), where an estimate of the gradient (the direction of the steepest descent) along with a selected step size determines the variation of input parameters in the current step. As information in a feed-forward NN, like an MLP, is only passed in one direction, a method called back propagation is used to determine the direction and amount of weight adjustment in previous NN layers based on the error of the final prediction. More in-depth explanations, definitions, and examples for back propagation and optimization throughout the learning process can be found in Rumelhart et al. (1995) and Hecht-Nielsen (1992); for further information regarding MLPs and NNs in general, see Almeida (2001) or Popescu et al. (2009).

A2 Hyperparameter tuning

Comprehensive hyperparameter tuning is conducted every time a surrogate model is trained on different training data. In this study, we focus on the investigation of dataset sizes and training times. For this reason and because our application of NN is not very common and only a small amount of information regarding successful model architectures and hyperparameters is available, only basic, plain network architectures are tested (i.e., MLPs with up to five fully connected hidden layers and up to 4096 neurons in each of the layers). We perform hyperparameter tuning in three steps, aiming for an optimization of number of layers, layer activation functions, learning rate, and batch size in the first step; number of neurons in each layer in the second step; and dropout rate in the third step. For each step, we apply an adapted grid search where multiple well-performing hyperparameter sets from the previous step are extended by variation of the additionally optimized hyperparameter of the current step.

We performed relatively comprehensive hyperparameter tuning with 60 to 120 hyperparameter sets for each data subset, with each tested set resulting in five models for the individual cross-validation folds. Sets of hyperparameters that lead to well-performing models can, to some extent, be adopted for approaches with similar preconditions regarding the number of inputs and outputs or training-dataset size. For a similar approach, we recommend a basic hyperparameter tuning with at least 10 hyperparameter sets and 5-fold cross-validation. The best models are selected by the average test set error of the five models for each of the cross-validation folds using the mean squared error. The ranges of hyperparameters tested in this study are listed in Table A2 along with the hyperparameter values of the best-performing models for large datasets.

Besides NNs from the Keras package, other deep-learning algorithms tested for this study are the random forest regressor, the decision tree regressor, the SGD regressor, the ridge regressor, least absolute shrinkage and selection operator (LASSO), logistic regression, and the MLP regressor, provided by the Python library scikit-learn (Pedregosa et al., 2011). As most of the tested algorithms did not perform very well in basic tests, we focus on Keras as a common and versatile tool for neural network application.

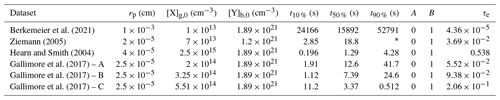

In Sect. 3.4, KM-SUB and the NN surrogate model are applied to six experimental datasets of the ozonolysis of oleic acid aerosols – these are available in the literature (Hearn and Smith, 2004; Ziemann, 2005; Gallimore et al., 2017; Berkemeier et al., 2021). These datasets comprise flow tube, environmental chamber, and single-particle levitation techniques and are a subset of data investigated earlier by Berkemeier et al. (2021), omitting the studies that investigated particles with a sodium chloride core or in which the particle size was not measured. The experimental datasets are converted to normalized concentrations (NY,t and NY,0) and are further simplified by fitting a mono-exponential decay () and evaluating the reaction time at which 10 %, 50 %, and 90 % of oleic acids are consumed. Table B1 shows the environmental parameters (particle radius rp, ozone concentration [X]g,0, and initial oleic acid concentration [Y]b,0), the derived reaction times, and the mono-exponential fit parameters. The remaining seven KM-SUB input parameters listed in Table 1 are optimized. Figure B2 shows all datasets alongside a fit ensemble of 50 KM-SUB fits with a fit correlation MSLE of less than 0.016.

Figure B1Compartments and processes of the kinetic multi-layer model of aerosol surface and bulk chemistry (KM-SUB).

Table B1Model parameters for the global optimization of six oleic acid ozonolysis datasets.

* Too far outside data range.

| KM | Kinetic multi-layer model |

| KM-SUB | Kinetic multi-layer model of aerosol |

| surface and bulk chemistry | |

| MHA | Metropolis–Hastings algorithm |

| MLP | Multi-layer perceptron |

| MSE | Mean square error |

| MSLE | Mean squared (absolute) logarithmic error |

| NN | Neural network |

| PCE | Polynomial chaos expansion |

All training data, as well as the source code used for obtaining NN and PCE models, are archived on Zenodo (https://doi.org/10.5281/zenodo.7214880; Berkemeier et al., 2022).

The supplement related to this article is available online at: https://doi.org/10.5194/gmd-16-2037-2023-supplement.

TB and UKK conceived the study. All authors designed the research. TB (KM-SUB model), MK (NN model), and AF and MM (PCE model) wrote the code and performed the simulations. All authors discussed and interpreted the calculation results. TB and MK led the writing of the paper and the overall design of graphics and tables. AF and MM co-led the writing and graphics for the sections applying PCE models. All authors contributed to the writing and editing of the paper.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The authors thank Coraline Mattei and Jake Wilson for the helpful discussions. We thank Paul Ziemann, Geoffrey Smith, and Peter Gallimore for providing the published data in tabulated form. The authors gratefully acknowledge the computing time granted on the supercomputer Mogon at Johannes Gutenberg University Mainz (https://hpc.uni-mainz.de/, last access: 11 April 2023) and on the supercomputer Cobra at the Max Planck Computing and Data Facility (https://www.mpcdf.mpg.de/, last access: 11 April 2023).

This work was funded by the Max Planck Society (MPG) and supported by ETH Zurich through the ETH Research Grant (grant no. ETH-03 17-2). Matteo Krüger was supported by the Max Planck Graduate Center with the Johannes Gutenberg University (MPGC). Aryeh Feinberg acknowledges financial support from ETH Zurich (grant no. ETH-39 15-2).

The article processing charges for this open-access publication were covered by the Max Planck Society.

This paper was edited by Po-Lun Ma and reviewed by two anonymous referees.

Abadi, M., Agarwal, A., Barham, P., Brevdo, E., Chen, Z., Citro, C., Corrado, G. S., Davis, A., Dean, J., Devin, M., Ghemawat, S., Goodfellow, I., Harp, A., Irving, G., Isard, M., Jia, Y., Jozefowicz, R., Kaiser, L., Kudlur, M., Levenberg, J., Mané, D., Monga, R., Moore, S., Murray, D., Olah, C., Schuster, M., Shlens, J., Steiner, B., Sutskever, I., Talwar, K., Tucker, P., Vanhoucke, V., Vasudevan, V., Viégas, F., Vinyals, O., Warden, P., Wattenberg, M., Wicke, M., Yu, Y., and Zheng, X.: TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems, [code], https://www.tensorflow.org/ (last access: 11 April 2023), 2015. a

Allotey, J., Butler, K. T., and Thiyagalingam, J.: Entropy-based active learning of graph neural network surrogate models for materials properties, J. Chem. Phys., 155, 174116, https://doi.org/10.1063/5.0065694, 2021. a

Almeida, L. B.: Multilayer Perceptrons, in: The Algebraic Mind: Integrating Connectionism and Cognitive Science, The MIT Press, https://doi.org/10.7551/mitpress/1187.003.0004, 2001. a, b

Berkemeier, T., Huisman, A. J., Ammann, M., Shiraiwa, M., Koop, T., and Pöschl, U.: Kinetic regimes and limiting cases of gas uptake and heterogeneous reactions in atmospheric aerosols and clouds: a general classification scheme, Atmos. Chem. Phys., 13, 6663–6686, https://doi.org/10.5194/acp-13-6663-2013, 2013. a

Berkemeier, T., Steimer, S. S., Krieger, U. K., Peter, T., Pöschl, U., Ammann, M., and Shiraiwa, M.: Ozone uptake on glassy, semi-solid and liquid organic matter and the role of reactive oxygen intermediates in atmospheric aerosol chemistry, Phys. Chem. Chem. Phys., 18, 12662–12674, https://doi.org/10.1039/C6CP00634E, 2016. a

Berkemeier, T., Ammann, M., Krieger, U. K., Peter, T., Spichtinger, P., Pöschl, U., Shiraiwa, M., and Huisman, A. J.: Technical note: Monte Carlo genetic algorithm (MCGA) for model analysis of multiphase chemical kinetics to determine transport and reaction rate coefficients using multiple experimental data sets, Atmos. Chem. Phys., 17, 8021–8029, https://doi.org/10.5194/acp-17-8021-2017, 2017. a, b, c

Berkemeier, T., Mishra, A., Mattei, C., Huisman, A. J., Krieger, U. K., and Pöschl, U.: Ozonolysis of Oleic Acid Aerosol Revisited: Multiphase Chemical Kinetics and Reaction Mechanisms, ACS Earth Space Chem., 5, 3313–3323, https://doi.org/10.1021/acsearthspacechem.1c00232, 2021. a, b, c, d, e, f, g, h

Berkemeier, T., Krüger, M., Feinberg, A., Müller, M., Pöschl, U., and Krieger, U.: Generation of surrogate models with artificial neural networks and polynomial chaos expansion (training data and source code), Zenodo [code, data set], https://doi.org/10.5281/zenodo.7214880, 2022. a

Bishop, C. M.: Neural networks and their applications, Rev. Sci. Instrum., 65, 1803–1832, 1994. a

Blatman, G. and Sudret, B.: Adaptive sparse polynomial chaos expansion based on least angle regression, J. Comput. Phys., 230, 2345–2367, https://doi.org/10.1016/j.jcp.2010.12.021, 2010. a

Booker, A. J., Dennis, J. E., Frank, P. D., Serafini, D. B., Torczon, V., and Trosset, M. W.: A rigorous framework for optimization of expensive functions by surrogates, Struct. Multidiscip. O., 17, 1–13, 1999. a

Cavalcanti, F. M., Kozonoe, C. E., Pacheco, K. A., and de Brito Alves, R. M.: Application of artificial neural networks to chemical and process engineering, IntechOpen, https://doi.org/10.5772/intechopen.96641, 2021. a

Chib, S. and Greenberg, E.: Understanding the Metropolis-Hastings algorithm, Am. Stat., 49, 327–335, 1995. a

Chollet, F. et al.: Keras, [code], https://github.com/fchollet/keras (last access: 11 April 2023), 2015. a

Dou, J., Alpert, P. A., Corral Arroyo, P., Luo, B., Schneider, F., Xto, J., Huthwelker, T., Borca, C. N., Henzler, K. D., Raabe, J., Watts, B., Herrmann, H., Peter, T., Ammann, M., and Krieger, U. K.: Photochemical degradation of iron(III) citrate/citric acid aerosol quantified with the combination of three complementary experimental techniques and a kinetic process model, Atmos. Chem. Phys., 21, 315–338, https://doi.org/10.5194/acp-21-315-2021, 2021. a

Esche, E., Weigert, J., Rihm, G. B., Göbel, J., and Repke, J.-U.: Architectures for neural networks as surrogates for dynamic systems in chemical engineering, Chem. Eng. Res. Des., 177, 184–199, 2022. a

Feinberg, A., Maliki, M., Stenke, A., Sudret, B., Peter, T., and Winkel, L. H. E.: Mapping the drivers of uncertainty in atmospheric selenium deposition with global sensitivity analysis, Atmos. Chem. Phys., 20, 1363–1390, https://doi.org/10.5194/acp-20-1363-2020, 2020. a

Feldman, J. A. and Ballard, D. H.: Connectionist Models and Their Applications: Introduction, Cogn. Sci., 6, 205–254, https://doi.org/10.1207/s15516709cog0901_1, 1982. a

Galeazzo, T. and Shiraiwa, M.: Predicting glass transition temperature and melting point of organic compounds via machine learning and molecular embeddings, Environ. Sci. Atmos., 2, 362–374, https://doi.org/10.1039/D1EA00090J, 2022. a

Gallimore, P., Griffiths, P., Pope, F., Reid, J., and Kalberer, M.: Comprehensive modeling study of ozonolysis of oleic acid aerosol based on real-time, online measurements of aerosol composition, J. Geophys. Res.-Atmos., 122, 4364–4377, 2017. a, b, c, d, e

Gardner, M. W. and Dorling, S. R.: Artificial neural networks (the multilayer perceptron) – a review of applications in the atmospheric sciences, Atmos. Environ., 32, 2627–2636, https://doi.org/10.1016/S1352-2310(97)00447-0, 1998. a

Ghanem, R. G. and Spanos, P. D.: Stochastic finite elements: a spectral approach, Courier Corporation, ISBN 10 0486428184, ISBN 13 9780486428185, 2003. a, b

Gulli, A. and Pal, S.: Deep learning with Keras, Packt Publishing Ltd, ISBN 10 1787128423, ISBN 13 9781787128422, 2017. a

Harder, P., Watson-Parris, D., Stier, P., Strassel, D., Gauger, N. R., and Keuper, J.: Physics-informed learning of aerosol microphysics, Environ. Data Sci., 1, e20, https://doi.org/10.1017/eds.2022.22, 2022. a

Harris, C. R., Millman, K. J., van der Walt, S. J., Gommers, R., Virtanen, P., Cournapeau, D., Wieser, E., Taylor, J., Berg, S., Smith, N. J., Kern, R., Picus, M., Hoyer, S., van Kerkwijk, M. H., Brett, M., Haldane, A., del Río, J. F., Wiebe, M., Peterson, P., Gérard-Marchant, P., Sheppard, K., Reddy, T., Weckesser, W., Abbasi, H., Gohlke, C., and Oliphant, T. E.: Array programming with NumPy, Nature, 585, 357–362, https://doi.org/10.1038/s41586-020-2649-2, 2020. a

Hearn, J. D. and Smith, G. D.: Kinetics and product studies for ozonolysis reactions of organic particles using aerosol CIMS, J. Phys. Chem. A, 108, 10019–10029, 2004. a, b, c

Hecht-Nielsen, R.: Theory of the backpropagation neural network, in: Neural networks for perception, 65–93, Elsevier, https://doi.org/10.1016/B978-0-12-741252-8.50010-8, 1992. a

Holeňa, M., Linke, D., Rodemerck, U., and Bajer, L.: Neural networks as surrogate models for measurements in optimization algorithms, in: International Conference on Analytical and Stochastic Modeling Techniques and Applications, Cardiff, UK, 14–16 June 2010, 351–366, Springer, https://doi.org/10.1007/978-3-642-13568-2_25, 2010. a

Keller, C. A. and Evans, M. J.: Application of random forest regression to the calculation of gas-phase chemistry within the GEOS-Chem chemistry model v10, Geosci. Model Dev., 12, 1209–1225, https://doi.org/10.5194/gmd-12-1209-2019, 2019. a

Kelp, M. M., Jacob, D. J., Kutz, J. N., Marshall, J. D., and Tessum, C. W.: Toward Stable, General Machine-Learned Models of the Atmospheric Chemical System, J. Geophys. Res.-Atmos., 125, e2020JD032759, https://doi.org/10.1029/2020JD032759, 2020. a

Kelp, M. M., Jacob, D. J., Lin, H., and Sulprizio, M. P.: An online-learned neural network chemical solver for stable long-term global simulations of atmospheric chemistry, J. Adv. Model. Earth Sy., 14, e2021MS002926, https://doi.org/10.1029/2021MS002926, 2022. a

Kolb, C. E., Cox, R. A., Abbatt, J. P. D., Ammann, M., Davis, E. J., Donaldson, D. J., Garrett, B. C., George, C., Griffiths, P. T., Hanson, D. R., Kulmala, M., McFiggans, G., Pöschl, U., Riipinen, I., Rossi, M. J., Rudich, Y., Wagner, P. E., Winkler, P. M., Worsnop, D. R., and O' Dowd, C. D.: An overview of current issues in the uptake of atmospheric trace gases by aerosols and clouds, Atmos. Chem. Phys., 10, 10561–10605, https://doi.org/10.5194/acp-10-10561-2010, 2010. a

Kröse, B. and van der Smagt, P.: An Introduction to Neural Networks, The University of Amsterdam, https://www.infor.uva.es/~teodoro/neuro-intro.pdf (last access: 11 April 2023), 1996. a, b

Krüger, M., Wilson, J., Wietzoreck, M., Bandowe, B. A. M., Lammel, G., Schmidt, B., Pöschl, U., and Berkemeier, T.: Convolutional neural network prediction of molecular properties for aerosol chemistry and health effects, Nat. Sci., 2, e20220016, https://doi.org/10.1002/ntls.20220016, 2022. a

Kuwata, M. and Martin, S. T.: Phase of atmospheric secondary organic material affects its reactivity, P. Natl. Acad. Sci. USA, 109, 17354–17359, 2012. a

Le Gratiet, L., Marelli, S., and Sudret, B.: Metamodel-based sensitivity analysis: polynomial chaos expansions and Gaussian processes, in: Handbook of Uncertainty Quantification, 1289–1325, Springer, https://doi.org/10.1007/978-3-319-12385-1_38, 2017. a

Lu, J., Zhang, H., Yu, J., Shan, D., Qi, J., Chen, J., Song, H., and Yang, M.: Predicting rate constants of hydroxyl radical reactions with alkanes using machine learning, J. Chem. Inf. Model., 61, 4259–4265, 2021. a

Lumiaro, E., Todorović, M., Kurten, T., Vehkamäki, H., and Rinke, P.: Predicting gas–particle partitioning coefficients of atmospheric molecules with machine learning, Atmos. Chem. Phys., 21, 13227–13246, https://doi.org/10.5194/acp-21-13227-2021, 2021. a

Marelli, S. and Sudret, B.: UQLab: A framework for uncertainty quantification in Matlab, in: Vulnerability, uncertainty, and risk: quantification, mitigation, and management, 2554–2563, American Society of Civil Engineers, [code], https://doi.org/10.1061/9780784413609.257, 2014. a, b, c, d

McKinney, W. et al.: Data structures for statistical computing in python, in: Proceedings of the 9th Python in Science Conference, Austin, TX, 28 June–3 July 2010, [code], 445, 51–56, https://doi.org/10.25080/Majora-92bf1922-00a, 2010. a

Milsom, A., Squires, A. M., Ward, A. D., and Pfrang, C.: The impact of molecular self-organisation on the atmospheric fate of a cooking aerosol proxy, Atmos. Chem. Phys., 22, 4895–4907, https://doi.org/10.5194/acp-22-4895-2022, 2022. a

O'Gorman, P. A. and Dwyer, J. G.: Using Machine Learning to Parameterize Moist Convection: Potential for Modeling of Climate, Climate Change, and Extreme Events, J. Adv. Model. Earth Syst., 10, 2548–2563, https://doi.org/10.1029/2018MS001351, 2018. a

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., and Duchesnay, E.: Scikit-learn: Machine learning in Python, J. Mach. Learn. Res., 12, 2825–2830, 2011. a, b

Popescu, M.-C., Balas, V. E., Perescu-Popescu, L., and Mastorakis, N.: Multilayer perceptron and neural networks, WSEAS Trans. Circuits Syst., 8, 579–588, 2009. a, b

Pöschl, U., Rudich, Y., and Ammann, M.: Kinetic model framework for aerosol and cloud surface chemistry and gas-particle interactions – Part 1: General equations, parameters, and terminology, Atmos. Chem. Phys., 7, 5989–6023, https://doi.org/10.5194/acp-7-5989-2007, 2007. a, b

Rasp, S., Pritchard, M. S., and Gentine, P.: Deep learning to represent subgrid processes in climate models, P. Natl. Acad. Sci. USA, 115, 9684–9689, https://doi.org/10.1073/pnas.1810286115, 2018. a

Robert, C. P. and Casella, G.: The Metropolis-Hastings Algorithm, in: Monte Carlo statistical methods, 231–283, Springer, https://doi.org/10.1007/978-1-4757-3071-5_6, 1999. a

Roldin, P., Eriksson, A. C., Nordin, E. Z., Hermansson, E., Mogensen, D., Rusanen, A., Boy, M., Swietlicki, E., Svenningsson, B., Zelenyuk, A., and Pagels, J.: Modelling non-equilibrium secondary organic aerosol formation and evaporation with the aerosol dynamics, gas- and particle-phase chemistry kinetic multilayer model ADCHAM, Atmos. Chem. Phys., 14, 7953–7993, https://doi.org/10.5194/acp-14-7953-2014, 2014. a

Rumelhart, D. E., Durbin, R., Golden, R., and Chauvin, Y.: Backpropagation: The basic theory, in: Backpropagation: Theory, architectures and applications, 1–34, Lawrence Erlbaum Hillsdale, NJ, USA, ISBN 0-8058-1259-8, 1995. a

Sadeeq, M. A. and Abdulazeez, A. M.: Neural networks architectures design, and applications: A review, in: 2020 International Conference on Advanced Science and Engineering (ICOASE), Duhok, Iraq, 23–24 December 2020, IEEE, 199–204, https://doi.org/10.1109/ICOASE51841.2020.9436582, 2020. a

Saltelli, A., Ratto, M., Andres, T., Campolongo, F., Cariboni, J., Gatelli, D., Saisana, M., and Tarantola, S.: Global sensitivity analysis: the primer, John Wiley & Sons, ISBN 978-0-470-05997-5, 2008. a

Semeniuk, K. and Dastoor, A.: Current state of atmospheric aerosol thermodynamics and mass transfer modeling: A review, Atmosphere, 11, 156, https://doi.org/10.3390/atmos11020156, 2020. a

Shiraiwa, M., Pfrang, C., and Pöschl, U.: Kinetic multi-layer model of aerosol surface and bulk chemistry (KM-SUB): the influence of interfacial transport and bulk diffusion on the oxidation of oleic acid by ozone, Atmos. Chem. Phys., 10, 3673–3691, https://doi.org/10.5194/acp-10-3673-2010, 2010. a, b

Shiraiwa, M., Ammann, M., Koop, T., and Pöschl, U.: Gas uptake and chemical aging of semisolid organic aerosol particles, P. Natl. Acad. Sci. USA, 108, 11003–11008, 2011. a

Shiraiwa, M., Pfrang, C., Koop, T., and Pöschl, U.: Kinetic multi-layer model of gas-particle interactions in aerosols and clouds (KM-GAP): linking condensation, evaporation and chemical reactions of organics, oxidants and water, Atmos. Chem. Phys., 12, 2777–2794, https://doi.org/10.5194/acp-12-2777-2012, 2012. a

Shiraiwa, M., Berkemeier, T., Schilling-Fahnestock, K. A., Seinfeld, J. H., and Pöschl, U.: Molecular corridors and kinetic regimes in the multiphase chemical evolution of secondary organic aerosol, Atmos. Chem. Phys., 14, 8323–8341, https://doi.org/10.5194/acp-14-8323-2014, 2014. a

Sobol', I. M.: Global sensitivity indices for nonlinear mathematical models and their Monte Carlo estimates, Math. Comput. Simulat., 55, 271–280, https://doi.org/10.1016/S0378-4754(00)00270-6, 2001. a

Stone, M.: Cross-validatory choice and assessment of statistical predictions, J. R. Stat. Soc. B, 36, 111–133, 1974. a

Sturm, P. O. and Wexler, A. S.: Conservation laws in a neural network architecture: enforcing the atom balance of a Julia-based photochemical model (v0.2.0), Geosci. Model Dev., 15, 3417–3431, https://doi.org/10.5194/gmd-15-3417-2022, 2022. a

Sudret, B.: Global sensitivity analysis using polynomial chaos expansions, Reliab. Eng. Syst. Safe., 93, 964–979, https://doi.org/10.1016/j.ress.2007.04.002, 2008. a, b, c, d, e

Thackray, C. P., Friedman, C. L., Zhang, Y., and Selin, N. E.: Quantitative Assessment of Parametric Uncertainty in Northern Hemisphere PAH Concentrations, Environ. Sci. Technol., 49, 9185–9193, https://doi.org/10.1021/acs.est.5b01823, 2015. a

Tikkanen, O.-P., Hämäläinen, V., Rovelli, G., Lipponen, A., Shiraiwa, M., Reid, J. P., Lehtinen, K. E. J., and Yli-Juuti, T.: Optimization of process models for determining volatility distribution and viscosity of organic aerosols from isothermal particle evaporation data, Atmos. Chem. Phys., 19, 9333–9350, https://doi.org/10.5194/acp-19-9333-2019, 2019. a

Tripathy, R. K. and Bilionis, I.: Deep UQ: Learning deep neural network surrogate models for high dimensional uncertainty quantification, J. Comput. Phys., 375, 565–588, 2018. a

Vu, K. K., d'Ambrosio, C., Hamadi, Y., and Liberti, L.: Surrogate-based methods for black-box optimization, Int. T. Oper. Res., 24, 393–424, 2017. a

Wei, J., Fang, T., Lakey, P. S., and Shiraiwa, M.: Iron-Facilitated Organic Radical Formation from Secondary Organic Aerosols in Surrogate Lung Fluid, Environ. Sci. Technol., 56, 7234–7243, https://doi.org/10.1021/acs.est.1c04334, 2021. a

Wong, T.-T. and Yeh, P.-Y.: Reliable accuracy estimates from k-fold cross validation, IEEE T. Knowl. Data En., 32, 1586–1594, https://doi.org/10.1109/TKDE.2019.2912815, 2020. a

Xia, D., Chen, J., Fu, Z., Xu, T., Wang, Z., Liu, W., Xie, H.-B., and Peijnenburg, W. J.: Potential application of machine-learning-based quantum chemical methods in environmental chemistry, Environ. Sci. Technol., 56, 2115–2123, 2022. a

Xiu, D. and Karniadakis, G. E.: The Wiener–Askey polynomial chaos for stochastic differential equations, SIAM J. Sci. Comput., 24, 619–644, 2002. a

Xu, H., Zhang, T., Luo, Y., Huang, X., and Xue, W.: Parameter calibration in global soil carbon models using surrogate-based optimization, Geosci. Model Dev., 11, 3027–3044, https://doi.org/10.5194/gmd-11-3027-2018, 2018. a, b

Ziemann, P. J.: Aerosol products, mechanisms, and kinetics of heterogeneous reactions of ozone with oleic acid in pure and mixed particles, Faraday Discuss., 130, 469–490, 2005. a, b, c

- Abstract

- Introduction

- Methods

- Results and discussion

- Conclusions

- Appendix A: Neural networks

- Appendix B: Oleic acid ozonolysis datasets

- Appendix C: Abbreviations

- Code and data availability

- Author contributions

- Competing interests

- Disclaimer

- Acknowledgements

- Financial support

- Review statement

- References

- Supplement

- Abstract

- Introduction

- Methods

- Results and discussion

- Conclusions

- Appendix A: Neural networks

- Appendix B: Oleic acid ozonolysis datasets

- Appendix C: Abbreviations

- Code and data availability

- Author contributions

- Competing interests

- Disclaimer

- Acknowledgements

- Financial support

- Review statement

- References

- Supplement