the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

The Multiple Snow Data Assimilation System (MuSA v1.0)

Esteban Alonso-González

Kristoffer Aalstad

Mohamed Wassim Baba

Jesús Revuelto

Juan Ignacio López-Moreno

Joel Fiddes

Richard Essery

Simon Gascoin

Accurate knowledge of the seasonal snow distribution is vital in several domains including ecology, water resources management, and tourism. Current spaceborne sensors provide a useful but incomplete description of the snowpack. Many studies suggest that the assimilation of remotely sensed products in physically based snowpack models is a promising path forward to estimate the spatial distribution of snow water equivalent (SWE). However, to date there is no standalone, open-source, community-driven project dedicated to snow data assimilation, which makes it difficult to compare existing algorithms and fragments development efforts. Here we introduce a new data assimilation toolbox, the Multiple Snow Data Assimilation System (MuSA), to help fill this gap. MuSA was developed to fuse remotely sensed information that is available at different timescales with the energy and mass balance Flexible Snow Model (FSM2). MuSA was designed to be user-friendly and scalable. It enables assimilation of different state variables such as the snow depth, SWE, snow surface temperature, binary or fractional snow-covered area, and snow albedo and could be easily upgraded to assimilate other variables such as liquid water content or snow density in the future. MuSA allows the joint assimilation of an arbitrary number of these variables, through the generation of an ensemble of FSM2 simulations. The characteristics of the ensemble (i.e., the number of particles and their prior covariance) may be controlled by the user, and it is generated by perturbing the meteorological forcing of FSM2. The observational variables may be assimilated using different algorithms including particle filters and smoothers as well as ensemble Kalman filters and smoothers along with their iterative variants. We demonstrate the wide capabilities of MuSA through two snow data assimilation experiments. First, 5 m resolution snow depth maps derived from drone surveys are assimilated in a distributed fashion in the Izas catchment (central Pyrenees). Furthermore, we conducted a joint-assimilation experiment, fusing MODIS land surface temperature and fractional snow-covered area with FSM2 in a single-cell experiment. In light of these experiments, we discuss the pros and cons of the assimilation algorithms, including their computational cost.

- Article

(4820 KB) - Full-text XML

- BibTeX

- EndNote

The snow cover has a profound effect on the water cycle (García-Ruiz et al., 2011) and ecosystems (Lin and West, 2022) of high-latitude and mountain regions. It represents a natural reservoir of freshwater resources, sustaining crop irrigation, hydropower generation, and drinking water supply to a fifth of humanity (Barnett et al., 2005). In addition, the ski industry is an important economic driver in many mountain areas. As a consequence, good knowledge of the snow cover properties has a strong scientific, societal, and economic value (Sturm et al., 2017).

Due to the harsh environmental conditions that often prevail in snow-dominated areas, in situ monitoring of the snowpack based on automatic devices and weather stations is both costly and logistically challenging. In addition, due to the spatiotemporal variability in the snowpack (López-Moreno et al., 2011), even dense monitoring networks may suffer from a lack of representativeness (Molotch and Bales, 2006; Cluzet et al., 2022). Yet, estimating SWE spatial distribution is important to make accurate predictions of snowmelt runoff in alpine catchments. Satellite remote sensing provides spatial information about snow-related variables including (i) snow cover spatial extent at various spatiotemporal scales (Aalstad et al., 2020; Gascoin et al., 2019; Hall et al., 2002; Hüsler et al., 2014), (ii) snow depth (Lievens et al., 2019; Marti et al., 2016), (iii) albedo (Kokhanovsky et al., 2020), and (iv) land surface temperature (Bhardwaj et al., 2017). However, the direct estimation of key variables such as the snow water equivalent (SWE) or density by means of remote sensing techniques remains challenging (Dozier et al., 2016). The only remote sensing tools that have shown some potential to retrieve SWE are passive microwave sensors. Unfortunately, their coarse resolution and the fact that they tend to saturate above a certain SWE threshold prevent their usage over mountainous regions or areas with a thick snowpack (Luojus et al., 2021).

Numerical models can estimate the distribution of SWE at different spatiotemporal scales using meteorological information derived from automatic weather stations (Essery, 2015; Liston and Elder, 2006a) or atmospheric models (Alonso-González et al., 2018; Wrzesien et al., 2018). Nonetheless, such snowpack models exhibit a number of limitations that may cause strong biases in the SWE simulations (Wrzesien et al., 2017). Snowpack model uncertainties originate partly from their simplified representation of physical processes (Günther et al., 2019; Fayad and Gascoin, 2020) but most importantly from errors in the meteorological forcing (Raleigh et al., 2015). In this context, the fusion of remote sensing products with snowpack models using data assimilation is key to improve snowpack simulations (Girotto et al., 2020; Largeron et al., 2020).

Several remotely sensed products may be used to update snowpack models. The fractional snow-covered area (FSCA) from optical sensors was the first product to be assimilated (Clark et al., 2006; Durand et al., 2008; Kolberg and Gottschalk, 2006). FSCA assimilation remains extensively used in both distributed (Margulis et al., 2016) and semi-distributed models (Thirel et al., 2013) due to the long time series of snow-covered area (SCA) observations and the development of new higher-resolution products (Baba et al., 2018). It is possible to further improve snowpack simulations by assimilating snow depths (Deschamps-Berger et al., 2022; Smyth et al., 2020). The assimilation of remotely sensed surface reflectances may also be beneficial (Charrois et al., 2016; Cluzet et al., 2020; Revuelto et al., 2021b), but further research is needed on this topic to demonstrate advantages over assimilating derived higher-level products such as FSCA and albedo.

Whereas snowpack models are increasingly available as open-source software and remote sensing products as open data, to our knowledge there is no standalone open-source application to develop multiple snow data assimilation experiments. This specific issue was highlighted by Fayad et al. (2017) as a strong limitation to advancing knowledge on the snow cover in regions which receive less attention from the mainstream research community. In addition, some data assimilation frameworks are based on highly specific implementations tied to operational constraints (Cluzet et al., 2021). This situation prevents the development of reproducible snow data assimilation studies and challenges the comparison of the performance of different snow data assimilation algorithms.

This is why we have developed a new open-source data assimilation toolbox, the Multiple Snow Data Assimilation System (MuSA). MuSA is an ensemble-based snow data assimilation tool. It enables the fusion of multiple observations with a physically based snowpack model while taking into account various sources of forcing and measurement uncertainty. It is an open-source collaborative project entirely written in the Python programming language. It should facilitate the development of snow data assimilation experiments, as well as the generation of snowpack reanalyses and near-real-time snowpack monitoring. It was designed with a modular structure to foster collaborative development, allowing advanced users to seamlessly implement new features with minimal effort. In the following sections, we describe the features of MuSA and show different examples of its usage, assimilating remote sensing products of different spatiotemporal resolutions.

The core of MuSA is an energy and mass balance of the snowpack model, Flexible Snow Model (FSM2; Essery, 2015). FSM2 has several options for the parameterizations of key processes related to the energy and mass balance of the snowpack. The most complex configuration is chosen by default in MuSA, leading to a more detailed simulation of the internal snowpack processes. Albedo is computed from the age of the snow, decreasing its value as snow ages and increasing it with fresh snowfalls. Thermal conductivity of the snowpack is computed as a function of the snow density. Snow density is computed considering overburden and thermal metamorphism. Turbulent energy fluxes are computed as a function of atmospheric stability. Meltwater percolation in the snowpack is computed using the gravitational drainage. Although this is the default configuration, it is possible to choose any other FSM2 setup, which may result in slight performance differences in terms of both the computational cost and the accuracy of the model (Günther et al., 2019). As envisaged in Westermann et al. (2022), the potential to run multiple model configurations leaves the door open for pursuing rigorous model comparison using the model evidence framework (MacKay, 2003) in the cryospheric sciences.

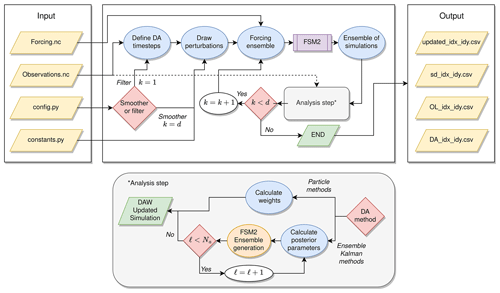

MuSA was designed as a Python program encapsulating the FSM2 Fortran code (Fig. 1). It handles the forcing and initial files, as well as the FSM2 runs and outputs, by internally generating the required ensemble of simulations from simple configuration (config.py) and constants (constants.py) files that should be filled by the user to both configure the MuSA environment and define the priors, respectively. Then, it solves most of the challenges of ensemble-based snow data assimilation frameworks for the user, as it internally handles the input/output (I/O) and parallelization while keeping track of the ensembles that should flow in different ways depending on the chosen data assimilation (DA) algorithm. The data assimilation algorithms are independently implemented on a grid cell basis, allowing both single-cell and parallel spatially distributed simulations. The outputs of MuSA consist of the posterior mean snow simulation from FSM2 (updated_idx_idy.csv), the posterior standard deviations of the FSM2 ensemble (sd_idx_idy.csv), information related to the posterior perturbation parameters and the observations (DA_idx_idy.csv), and the original simulation without perturbation (OL_idx_idy.csv). Additionally, it is possible to store the posterior ensemble of every cell.

Figure 1MuSA internal workflow. k, d, ℓ, Na, DAW, idx, and idy refer to the timing of the analysis step, number of observation times, current assimilation cycle number, total number of assimilation cycles, data assimilation window, longitude cell index, and latitude cell index, respectively.

The following data assimilation algorithms are currently implemented in MuSA:

-

particle filter (PF; Gordon et al., 1993; van Leeuwen et al., 2019);

-

ensemble Kalman filter (EnKF; Evensen, 1994; van Leeuwen, 2020);

-

ensemble Kalman filter with multiple data assimilation (EnKF-MDA; Emerick and Reynolds, 2012);

-

particle batch smoother (PBS; Margulis et al., 2015), a batch smoother variant of the PF;

-

ensemble smoother (ES; van Leeuwen and Evensen, 1996), a batch smoother variant of the EnKF;

-

ensemble smoother with multiple data assimilation (ES-MDA; Emerick and Reynolds, 2013).

In its current version, MuSA is able to assimilate the following variables:

-

SWE [mm]

-

snow depth [m]

-

land surface temperature [K]

-

fractional snow-covered area [–]

-

albedo [–]

-

sensible heat flux to the atmosphere [W m−2]

-

latent heat flux to the atmosphere [W m−2].

We expect to provide support for even more variables in the future. Note that the ensemble Kalman schemes involving multiple data assimilation are iterative schemes (Stordal and Elsheikh, 2015; van Leeuwen et al., 2019). For the PF, several standard resampling algorithms (see Li et al., 2015, for a review) are available in MuSA, namely bootstrapping, residual resampling, stratified resampling, and systematic resampling. In addition to these standard resampling techniques, we have also implemented a heuristic approach based on redrawing from a normal approximation to the posterior, which is loosely inspired by more advanced particle methods (Särkkä, 2013; van Leeuwen et al., 2019). This redrawing from the approximate posterior generates new samples of perturbation parameters at each assimilation step. In the case of complete degeneracy, where all the weight is on one particle, this redrawing technique instead uses the prescribed prior standard deviations for generating perturbation parameters, corrected by a scaling factor (to be selected by the user, fixed to 0.3 by default) to avoid overdispersing the ensemble.

The inputs of MuSA are composed of (i) gridded meteorological forcing to generate the ensemble of FSM2 simulations and (ii) gridded observations which are assimilated. Optionally, it is possible to provide a mask, covering areas where simulations should not be implemented in order to save computational resources or restrict the runs to a specific area inside the provided domain. These inputs must be provided as files encoded in the Network Common Data Form (netCDF; Rew and Davis, 1990) format. MuSA is able to handle different observational products as long as the meteorological forcing and the observations share the same geometry (i.e., the same number of cells in the longitudinal and latitudinal dimensions). As in many other distributed snowpack models, there is currently no communication between grid cells in MuSA. This makes MuSA easily parallelizable as each cell is simulated and updated completely independently of the others (i.e., embarrassingly parallel). Different parallelization schemes are already implemented, including multiprocessing for single-node runs and message passing interface (MPI) and Portable Batch System job arrays, to provide support for computing clusters. Due to the embarrassingly parallel nature (no communication between tasks) of the MuSA computational problem, other parallelization schemes can easily be implemented. All the data processing is done by default in the temporary file system, but the user can choose any other location such as a specific temporary folder in a multi-node cluster or a random access memory drive in a local workstation to speed up the I/O processes.

2.1 Ensemble generation

The DA algorithms implemented in MuSA all require a prior ensemble of simulations to represent uncertainty. The number of ensemble members (or, equivalently, particles; van Leeuwen, 2009), which we denote by Ne, should be specified by the user, as this drastically affects both the computational cost of the experiments and the performance of the data assimilation algorithms. To generate an ensemble of snowpack state trajectories, MuSA perturbs the meteorological forcing to run an ensemble of FSM2 simulations. An arbitrary number of forcing variables can be perturbed. The perturbation of the forcing is performed by drawing spatially independent random perturbation parameters from a normal distribution or lognormal distribution. The prior standard deviation and mean of these distributions should be specified by the user. MuSA supports normal or lognormal probability functions to generate additive or multiplicative perturbations, respectively, depending on the physical bounds of the variable to be perturbed. Additive perturbations are typically used for air temperature. Multiplicative perturbations are recommended for precipitation to avoid negative values as well as for shortwave radiation to avoid negative values and positive nighttime values.

Once the forcing is perturbed, MuSA partitions the precipitation between the liquid and solid phase. To estimate the phase of the precipitation, two different approaches are implemented. The simpler approach is based on a logistic function of the 2 m air temperature (Kavetski and Kuczera, 2007). A more complex psychrometric energy balance method is also implemented in MuSA. This approach uses the relative humidity and 2 m air temperature to infer the surface temperature of the falling hydrometeors, from which the phase of the precipitation can be estimated (Harder and Pomeroy, 2013).

2.2 Meteorological forcing

The variables required as forcing to run MuSA include downwelling (i.e., incoming) shortwave and longwave radiation [W m−2], total (sum of liquid and solid) precipitation , surface atmospheric pressure [Pa], 2 m air temperature [K], relative humidity [%], and 10 m wind speed [m s−1]. In the current version of MuSA, the forcing must be provided in the netCDF format at an hourly time step in a grid-based geometry, without any specific requirement regarding the native spatial resolution. The most likely candidates to be used as meteorological forcing for MuSA will be the outputs of atmospheric simulations or automatic weather station data. These include atmospheric reanalyses, such as ERA5 (Hersbach et al., 2020) or MERRA-2 (Gelaro et al., 2017), and regional climate model outputs (Alonso-González et al., 2021) as well as the outputs of different downscaling approaches driven by the aforementioned datasets (e.g., Fiddes and Gruber, 2014; Gutmann et al., 2016; Havens et al., 2017; Fiddes et al., 2022; Liston and Elder, 2006b). Other sources of information include automatic weather stations or gridded products derived from the interpolation of point-scale meteorological information. The forcing variables should be provided in the International System of Units, but it is possible to perform simple unit transformations internally to facilitate the preprocessing of different forcing data sources, using the following affine transformation.

where ϕk (for ) is a forcing time series provided by the user with units Φ and ψk with converted units Ψ is the transformed forcing time series after scaling by a with units Ψ Φ−1 and translating by b with units Ψ.

Each grid cell is solved independently, which includes the reading of the forcing that occurs along the time dimension. Otherwise, each process would have to store considerably more data in memory, leading to more costly I/O operations that would slow down the run time. Even so, just reading along the time dimension can come with a considerable computational cost if the time dimension is large. To alleviate this, the time spent reading the forcing can be reduced by setting the chunk (a subset of the file to be read or written as a single I/O operation) of the netCDF forcing files along the time dimension. To speed up the subsequent relaunching of the simulations when smoothing and filtering, MuSA generates an intermediate binary file with the forcing information needed to run a complete simulation for each grid cell.

2.3 Observations and masked cells

MuSA is able to assimilate an arbitrary number of different observations that can be irregularly distributed in time and space. It is also possible to perform joint-assimilation experiments that assimilate more than one variable type at the same time. A temporally and spatially constant scalar corresponding to the assumed observation error variance must be provided for each type of observation that is to be assimilated. This assumption implies a diagonal observation error covariance matrix, R, which is tantamount to assuming that observation errors are uncorrelated in both time and space. Note that this formulation allows the user to account for differences in observation error that arise in the case when a variable is observed by multiple sensors with varying accuracy. By modifying the likelihood, it would also be possible to account for non-Gaussian observation errors (Fletcher and Zupanski, 2006; Fowler and van Leeuwen, 2013), but this is not yet supported in MuSA. The time step of the observations does not have to be the same as that of the forcing so that the observations can be irregularly spaced in time. Also, it is not necessary that the observations of the different variables occur at the same time step, allowing the assimilation of products from different satellite platforms with different orbits. Optionally, a binary mask can be provided in a separate file to delineate the basin of interest. If provided, MuSA will skip the cells that are not covered by the mask. Again, the mask must be provided on the same grid as the forcing and the observations. Many remotely sensed products exhibit gaps due to the presence of clouds or failures in the remote sensing devices. As a general rule we do not recommend filling the gaps of the observed series. These filled data would exhibit a different error variance than the direct estimations from the remote sensors, introducing uncertainties which are not compatible with the data assimilation process. MuSA will internally handle the gaps in the observations, provided that the netCDF files are generated using the aforementioned conventions, assimilating just the time steps where information is available.

Data assimilation (DA) is the exercise of fusing uncertain information from observations and (typically) geoscientific models (Evensen et al., 2022; Reich and Cotter, 2015). The DA schemes used in MuSA vary in their approach to state estimation as well as in the underlying assumptions and algorithms employed. In this section we provide an overview of these schemes as well as the underlying theory. Readers well versed in DA could consider skipping this entire section or at least skipping to Sect. 3.4 and 3.5 where the algorithms are presented.

3.1 Bayesian inference

In line with most modern approaches to DA (Wikle and Berliner, 2007; Carrassi et al., 2018; van Leeuwen et al., 2019), the assimilation schemes used in MuSA are built on the foundation of Bayesian inference (e.g., Lindley, 2000; MacKay, 2003; Särkkä, 2013; Nearing et al., 2016). In this approach, the target quantity is the posterior distribution p(x|y), the probability of the model state x∈ℝm given the observations y∈ℝd, which can be inferred using Bayes' rule:

in which p(y|x), the probability of the observed data given a model state, is called the likelihood and p(x), the probability of the state before considering the observations, is known as the prior. According to the likelihood principle, the likelihood should be seen as a function of the model state and not of the fixed observations. So it is implicitly understood that the state is as a vector of random variables, while the observation vector is deterministic. In this Bayesian interpretation, rather than being narrowly synonymous with frequency, probability is a broad measure of uncertainty. The evidence (or marginal likelihood) in the denominator of Eq. (2) is given by

where the range of integration is understood to be over all possible values of x, namely the support of the prior. This evidence is just a function of the observations, so it simply serves as a normalizing constant in this incarnation of Bayes' theorem, which we can rewrite as

to emphasize that the posterior is proportional to the product of the likelihood (what the data tell us) and the prior (what we believed before considering the data). These are usually probability density rather than mass functions as we tend to deal with continuous variables in DA. Since the posterior must integrate to 1, we can safely ignore the evidence term as long as we are just dealing with the usual first level of inference where we fit a specific model. The evidence is nonetheless vital in the second level of inference where we compare models (see MacKay, 2003), but we do not pursue that here. Thus, we will henceforth use the notation Z=p(y) to emphasize that the evidence simply serves as a normalizing constant in this overview.

To get the Bayesian engine to run and infer the posterior, we need to specify the two input distributions on the right-hand side of Eq. (2), namely the prior and the likelihood. To construct the likelihood, we specify an observation (or equivalently measurement, data-generating, forward) model of the form

where x⋆ contains the true values of the state variables, ℋ(⋅) is the observation operator which maps from state to observation space, and ϵ is the observation error. Often, as is the case in MuSA, the observation operator picks out predicted observations, i.e., the state variables that correspond to observations, from the full state vector. As is commonly done in DA (Carrassi et al., 2018), we assume that the observation errors are additive, are unbiased, and follow a Gaussian distribution; i.e., , where R is the observation error covariance matrix. Thereby the likelihood becomes

where is a normalizing constant, (⋅)T denotes the transpose, denotes the predicted observations, and we have used conditional on x being true. Roughly speaking, the likelihood can be seen as a model misfit term which quantifies how likely the actual observations are given a particular model state. The states with a higher likelihood will correspond to those with predicted observations closer to the actual observations. The next ingredient is the prior distribution over states, p(x), which can be specified based on initial beliefs, which may include physical bounds, expert opinion, “objective” defaults (using, e.g., maximum entropy), or knowledge from earlier analyses (Lindley, 2000; MacKay, 2003; Banner et al., 2020). For the latter, consider the case of assimilating two conditionally independent observations y1 and y2. Then the posterior can be obtained using either batch or recursive estimation (Särkkä, 2013). The batch approach involves solving directly for

while for the recursive approach we note that

so we can split up the batch solution by first conditioning on y1 to obtain an intermediate posterior; then we use this as a prior when updating with y2 to obtain the full posterior as follows:

This recursive approach will give the same answer as the batch approach, but it can often be helpful to split the inference into smaller chunks, especially for dynamic problems. We can extend recursive estimation to an arbitrary number of observations as long as they are conditionally independent.

In theory, evaluating the posterior simply involves taking the product of two terms. Naïvely, this suggests that we can estimate the posterior through a simple grid approximation (MacKay, 2003). Unfortunately, inferring the posterior is usually akin to looking for a needle in a haystack due to the curse of dimensionality (Snyder et al., 2008), which manifests itself when we have a high dimensional state space and/or highly informative data. As such, we need to adopt efficient algorithms when performing DA in practice. Roughly speaking, the most widely used Bayesian DA schemes can be split into two major computational approaches: variational techniques and Monte Carlo methods (Carrassi et al., 2018). Despite success in operational meteorology (Bannister, 2017), the variational approach has received less attention in cryospheric applications (Dumont et al., 2012), since it involves gradient terms that are difficult to implement and has not yet shown any clear gains in performance over Monte Carlo. As such, in MuSA we restrict ourselves to Monte Carlo methods where the naming reflects that these approaches rely on using (pseudo)random numbers to sample from probability distributions (MacKay, 2003).

3.2 Prediction, filtering, and smoothing

The subset of Monte Carlo methods used in MuSA can be split into two classes: those derived from the EnKF (Evensen, 1994) and those derived from the PF (Gordon et al., 1993). These can be further subdivided into filters and smoothers depending on how time dynamics are handled (Carrassi et al., 2018). As such, it is instructive to appreciate the differences between stochastic prediction, filtering, and smoothing (see Jazwinski, 1970; Särkkä, 2013) as well as how these are implemented in MuSA. To do so, we introduce the following notation: we consider discrete points in time for , where t0 is the initial time and Δt is the model time step and we let xk=x(tk) denote the state at a given time. We employ analogous notation for the observations yk, but (without loss of generality) we assume that the initial time is unobserved. Note that in practice we usually do not have observations at every model time step; we have just assumed this here to simplify notation. The extension of filters and smoothers to the more realistic case with sparse observations in time is straightforward and merely requires a slight change in indexing so that filtering and smoothing only take place using predicted observations at observation times.

In DA, prediction involves estimating the future state given past observations: , where and ℓ<k. The archetypal example of this is the familiar task of weather forecasting through ensemble-based numerical weather prediction (Bauer et al., 2015). In the context of prediction, the concepts of future and past are just relative to one another and not necessarily representative of real time. So one can also make predictions of the past, as long as any observations considered are from the even more distant past. Since prediction is a step in both filtering and smoothing, we explain how to implement it probabilistically. Prediction from k−1 to k can be formulated as follows:

where ℳ(⋅) is the dynamical model (FSM2 in MuSA) and is the additive model error term which we assume to be independent in time and follow a zero-mean Gaussian distribution with covariance matrix Q. These assumptions can be relaxed without loss of generality, but their convenience and broad justifiability mean that they are often employed (Carrassi et al., 2018). Crucially, the above prediction step produces Markovian (memoryless) dynamics where the current state depends only on the previous state and noise. The Markov property is crucial in making the filtering and smoothing problems tractable. It implies , which lets us factorize and simplify distributions such as the full prior as follows:

where the transition density is Gaussian of the form . Applying this recursively we obtain

Using this kind of factorization together with marginalization also helps us construct the marginal predictive distribution , where ℓ<k as follows:

This is the Chapman–Kolmogorov equation (Särkkä, 2013), which can be applied recursively to obtain the predictive distribution at the current time step using the transition density together with previous predictive distributions.

From prediction we move to filtering, which is the estimation of the current state given current and past observations: . This is the problem solved by sequential DA where an archetypal example is the initialization of numerical weather predictions as new observations become available to delay the effects of chaos (Bauer et al., 2015). To construct the filtering distribution we first re-introduce our Gaussian observation model into the dynamical context and make the usual assumption that the current observations are conditionally independent of both the observation and state histories (Särkkä, 2013), resulting in the dynamic likelihood

where denotes the predicted observations and we have added a time index to the normalizing constant (Ak) and the observation error covariance matrix (Rk) to emphasize that both the number and types of observations may vary in time. By combining Markovian state dynamics with a conditionally independent observation model, we end up with a state-space or hidden Markov model (Cappé et al., 2005) where the states at each time step are hidden (or latent) because they are not observable due to measurement error. The filtering distribution is obtained by combining the predictive distribution (which serves as the prior) and the dynamic likelihood through Bayes' theorem:

As such, prediction and filtering can be applied one after the other in time to sequentially obtain the filtering distributions of interest for all integration time steps . That is, starting from the initial prior p(x0), we run the dynamical model to k=1 to obtain the transition density in the product p(x1|x0)p(x0) and marginalize to obtain the predictive distribution p(x1), which we use as the prior when assimilating the observations y1 to estimate the filtering distribution p(x1|y1). We continue this cycle, running the dynamical model to k=2 to obtain and marginalizing to obtain p(x2|y1), which we use as the prior when assimilating y2 to arrive at . This filtering process of “online” assimilation as observations become available sequentially in time is appealing for operational forecasting, since it can continue indefinitely with low memory requirements while outputting the filtering and prediction distributions of interest. In practice when using Monte Carlo methods, we do not operate with the distributions themselves but rather with an ensemble of samples from these. Typically, due to the often prohibitively large size of a full spatiotemporal ensemble, one would only store summary statistics such as the posterior mean and standard deviation of the state for each point in space and time as the outputs of the analysis.

In addition to filtering, we are also able to solve some smoothing problems in MuSA. The key difference between filtering and smoothing is that the latter considers future observations; i.e., the (marginal) smoothing distribution is where ℓ>k. Several types of smoothing problems exist (Cosme et al., 2012; Särkkä, 2013), namely fixed-lag smoothing ( with l constant), which is equivalent to filtering but where the state is lagged relative to the observations; fixed-point smoothing (k is fixed) where the posterior at a fixed point in time (such as an initial condition) is conditioned on both past and future observations; and fixed-interval smoothing (ℓ is fixed) where we estimate the posterior for points in a time interval given all observations in that interval. In MuSA we focus on a form of the fixed-interval smoothing known as batch smoothing, in which the states within the time interval, henceforth called the data assimilation window (DAW), are updated in a single batch using all observations such that ℓ=n.

The batch-smoothing approach has been shown to be especially well suited for reanalysis-type problems in land and snow data assimilation, since it allows information from observations to propagate backward in time (Dunne and Entekhabi, 2005; Durand et al., 2008). We will restrict our attention to strong-constraint batch smoothing with indirect updates (Evensen, 2019; Evensen et al., 2022) where it is assumed that the dynamical model is perfect (i.e., no model error η) and that all uncertainty stems from parameters that are constant within the DAW. This approach is adopted for both simplicity and the fact that it ensures that the updated states are dynamically consistent with the physics in the model. It also has the advantage of implicitly ensuring that state variables such as snow depth or SWE remain within their physically non-negative bounds. In this approach, an ensemble of realizations of the model are run forward for an entire DAW during which all the observations and the corresponding predicted observations from the model are stored. At the end of the DAW, these observations are all assimilated simultaneously in a batch update to provide samples from the posterior parameter distribution. For seasonal snow the water year becomes a natural choice for the DAW. With this choice, potentially highly informative observations made during the ablation season are able to inform the preceding accumulation season (Margulis et al., 2015). This is crucial in peak SWE reconstruction, which is of great interest to snow hydrologists (Dozier et al., 2016).

3.3 Consistency

A potential problem in DA is that the stochastic perturbations and updates that are imposed on the model and the resulting dynamics may lead to inconsistent model states. We can define (at least) two different types of inconsistencies: (i) dynamical inconsistency and (ii) physical inconsistency where model states and/or parameters violate their physical bounds.

Dynamical inconsistency with respect to the underlying deterministic model can enter into the DA exercise either through stochastic model error terms in prediction steps or through the assimilation step itself. In the case of weak-constraint DA, where imperfections in the model are represented by stochastic model error terms, this kind of weak inconsistency exists by design and is a feature rather than a bug. In particular, the dynamical inconsistency created by the stochastic terms is meant to explicitly account for model uncertainty arising for example due to unresolved processes (Palmer, 2019). As such, the open-loop (no DA) stochastic model dynamics will be inconsistent with a deterministic version of the model. Arguably calling such a stochastic model dynamically inconsistent is somewhat of a misnomer, since it aspires to be more consistent with reality than a purely deterministic version of the same model.

The assimilation step itself can also introduce dynamical inconsistencies, particularly when applying filtering algorithms (Dunne and Entekhabi, 2005). For the EnKF these manifest as sawtooth-like patterns (jumps) in the dynamics at each assimilation step due to the fact that the Kalman-based analysis actually moves the ensemble from being samples of the prior to being (approximate) samples from the posterior. For the PF dynamical inconsistency also enters at the assimilation step whenever resampling is performed. The resampling step tends to kill off unpromising low-weight particles while reproducing more promising higher-weight particles. Since this evolutionary step is implemented sequentially in time when filtering, it will also introduce discontinuities into the dynamics of the model ensemble. In the case of both the EnKF and the PF, the discontinuity introduced at the assimilation step is an expected result of filtering. If one wishes to avoid this behavior, one should instead turn to smoothing algorithms.

Physical inconsistency can also enter through both stochastic perturbations and the assimilation step itself. Many snowpack state variables (and potential observables) have a relatively limited dynamic range and are either lower bounded, e.g., snow depth and SWE are non-negative, or double bounded, e.g., snow albedo and FSCA are physically confined to the range [0,1]. This means that directly applying assimilation routines which assume unbounded (e.g., Gaussian) distributions on the prior, likelihood, or model error can lead to physical inconsistencies in snow DA.

A simple but naïve solution is to just explicitly enforce state variables to lie within their physical bounds using minimum and/or maximum functions. Unfortunately, this is the same as truncating the underlying probability distributions and may degrade the performance of the assimilation scheme due to a strong violation of assumptions. Although this is a major concern for the EnKF-based schemes, which make explicit assumptions about Gaussianity, in practice it may also impact the PF where Gaussian distributions remain a convenient choice. A better way to deal with issues around physical inconsistency is to apply transformations to bounded random variables. Typically, these transformations will map such random variables from the bounded physical space to an unbounded space that can accommodate Gaussian distributions through Gaussian anamorphosis techniques (Bertino et al., 2003). This can be carried out using transforms that have been empirically constructed or using analytic functions. In MuSA, following Aalstad et al. (2018), we employ analytical Gaussian anamorphosis where we use a logarithm transform for variables that are physically lower bounded and a logit transform for variables that are physically double bounded. The corresponding inverse functions, i.e., the exponential and logistic transforms, can be used to map these unbounded variables back to the bounded physical space. For bounded variables we will often construct the prior distributions using these transforms. For example, assigning a lognormal prior can ensure that a variable never exceeds its lower bounds. In such a case the assimilation step takes place in the unbounded log-transformed space where this variable is normally (i.e., Gaussian) distributed, while the model integration takes place in the bounded physical space, which can be recovered using the exponential transform, where the variable is lognormally (i.e., log-Gaussian) distributed.

A key step taken in MuSA to reduce inconsistencies in snow data assimilation is to split the state vector in two: , where denotes parameters and denotes internal model states. Only the parameters, which can include both internal model parameters and forcing perturbation parameters, are assumed to be random variables in MuSA. This approach corresponds to the so-called forcing formulation (as opposed to the model-state formulation) of the DA problem (Evensen et al., 2022). The internal model states are taken to be deterministic given these parameters. Such a setup is tantamount to assuming that all the uncertainty in the model dynamics stems from the forcing data and internal parameters. This assumption is largely in line with previous findings (Raleigh et al., 2015), particularly for intermediate-complexity snow models such as FSM2 (Günther et al., 2019). This is gradually becoming a relatively standard setup in snow data assimilation experiments (Margulis et al., 2015; Magnusson et al., 2017; Aalstad et al., 2018; Alonso-González et al., 2021; Cluzet et al., 2020, 2022) although it has arguably not been as properly formalized as elsewhere in the DA literature (Evensen, 2019; Evensen et al., 2022). Through such a split we ensure that internal model states remain both dynamically and physically consistent given the parameters. The parameters, in turn, are made physically consistent by applying analytical Gaussian anamorphosis transforms in the DA scheme. As such, we will let u denote anamorphosed parameters that have undergone a forward transform to the unbounded space. It is implicitly assumed that these are inverse-transformed to the physical space before they are passed to FSM2 to help evolve the internal states forward in time.

In the filtering algorithms in MuSA, both the stochastic parameters and the conditionally deterministic internal states are dynamic. The parameter dynamics evolve according to simple jitter (Farchi and Bocquet, 2018) of the following form:

where we recall that and that the parameters are defined in the unbounded transformed space. Probabilistically, this corresponds to the Markovian transition density . The internal state variables, on the other hand, evolve according to the full dynamical model (FSM2), which also depends on the dynamic parameters uk through . These dynamics correspond to the transition density (e.g., Evensen, 2018)

where δ(⋅) is the Dirac delta function, which emphasizes that the internal state is not only Markovian but also conditionally deterministic given the parameters. This just formalizes that the only uncertainty in the internal state dynamics stems from the parameters to which we assign an initial prior p(u0). Without loss of generality and to simplify the presentation of the theory, we assume that we are dealing with seasonal snow, so the initial prior for the internal snow states, p(v0), is known: v0=ν0. Thus , since the annual integration period starts at the beginning of the water year where we assume that a snowpack has not yet formed, so internal snow state variables are either 0 (snow depth, SWE) or undefined (e.g., snow surface temperature). Together with the dynamic likelihood p(yk|uk), these distributions can be used to construct the target marginal filtering distribution , which we can estimate with ensemble Kalman or particle-filtering algorithms. By combining this marginal distribution with appropriate densities, we can also estimate the joint filtering distribution from which we can compute posterior expectations for the internal states of interest. The derivation of this distribution is relatively analogous to that for the smoother below but involves more steps and is thus not included herein. In practice, (approximate) samples from this joint filtering distribution are obtained from the posterior ensemble after each observation time and the subsequent assimilation step while running the filtering schemes in MuSA.

For the smoothing algorithms in MuSA, we instead assume that the dynamical model is perfect, so the components of u are constant (i.e., time-invariant) but uncertain parameters, which can be either internal parameters or related to the forcing, while still denotes the dynamic internal state variables with initial state v0 at t0 and final state vn at tn, which is the end of what is now an annual DAW. The prediction step with the assumed perfect dynamical model then becomes , which implies the following transition density:

This is just a formal way of denoting that the only uncertainty in the dynamics stems from time-invariant but uncertain parameters to which we assign a prior. As noted by Evensen (2019), when these perturbation parameters are interpreted as errors, this can be viewed as a limiting case of perfect time correlation where the errors become constant biases. The lack of dynamics in the perturbation parameters is thus akin to assuming that errors in the forcing are constant in time within a particular water year. This simplifying assumption imposes a longer memory in the system than with jitter and facilitates the propagation of information backward in time using smoothers. Given this transition density, the full prior for the entire DAW can be factorized as follows:

where as before is the prior for the initial condition of the state. The full posterior then becomes

which can be marginalized to obtain the marginal posterior for the parameters

where the batch likelihood is

in which contains the predicted observations for all observation time steps and , where is the batch observation error covariance matrix, which is a block diagonal matrix containing the observation error covariance matrices for all observation time steps. Note that since the state v0:n is conditionally deterministic given u, it is not included in the batch likelihood. By combining the batch likelihood with the prior for the parameters, we have all that we need to estimate the posterior for the parameters . The main difference from the filtering distribution is that all the data are assimilated in a single batch to update static parameters. Once we have obtained the posterior for the parameters, due to the strong constraint, we recall that we can easily recover the joint posterior for the states and parameters from which we can compute posterior expectations for the internal states of interest. In practice, the steps involved in estimating the posterior and subsequent expectations depend on which batch-smoothing algorithm is used.

3.4 Particle methods

Particle methods (van Leeuwen, 2009; Särkkä, 2013), also known as sequential Monte Carlo methods (Cappé et al., 2005; Chopin and Papaspiliopoulos, 2020), are appealing, since they impose few assumptions, can be relatively easy to derive, and are simple to implement in their basic form. In principle, they can be used to solve any Bayesian inference problem, including the aforementioned filtering and smoothing problems. The so-called bootstrap filter described by Gordon et al. (1993) arguably marks the introduction of particle filters to the wider scientific community. Nonetheless, the underlying idea of sequential importance resampling (SIR) was already well known in the statistics literature from which it emerged (see Smith and Gelfand, 1992, and references therein). For a more up-to-date perspective, van Leeuwen et al. (2019) provide a comprehensive review of particle methods for geoscientific data assimilation, including a discussion of the state of the art and promising avenues for further developments.

Particle methods have rapidly become the most widely adopted approach to data assimilation within the snow science community. The PF, in particular, has become increasingly popular in snow data assimilation studies. This began with the seminal work of Leisenring and Moradkhani (2011) and Dechant and Moradkhani (2011) showing how the PF can outperform the EnKF in snow data assimilation applications, assimilating SNOTEL SWE and passive microwave data, at least if relatively simple models and a large ensemble are used. Charrois et al. (2016) subsequently applied the PF in synthetic (twin) experiments that assimilated MODIS-like reflectance data into the Crocus snow model for a site in the French Alps. Magnusson et al. (2017) showed how snow depth assimilation using the PF improved the estimation of several variables, including SWE and snowmelt runoff, in an intermediate-complexity snow model for many sites across the Alps. The study of Baba et al. (2018) applied the PF to assimilate novel SCA satellite retrievals from Sentinel-2, demonstrating marked improvements in snowpack simulations across the sparsely instrumented High Atlas Mountains of Morocco. Piazzi et al. (2018) investigated the potential of performing a joint assimilation of several snowpack variables obtained from ground-based observations at three sites in the Alps using the PF, noting that degeneracy was more likely to occur for this setup than in more typical uni-variate snow data assimilation experiments. Smyth et al. (2019) assimilated monthly snow depth observations into an intermediate-complexity snow model with the PF at a well-instrumented site in the California Sierra Nevada, improving both snow density and SWE estimates with promising implications for the assimilation of snapshots of snow depth retrieved from airborne and spaceborne platforms. These results were confirmed by Deschamps-Berger et al. (2022), who showed that the assimilation of a single snow depth map per season is sufficient to improve the simulated spatial variability in the snowpack. Recent efforts (Cantet et al., 2019; Cluzet et al., 2021, 2022; Odry et al., 2022) have focused on the challenge of spatially propagating snowpack information from observed to unobserved locations when assimilating in situ snow depth and SWE data using the PF.

Another line of studies has adopted particle smoothing schemes, particularly the particle batch smoother (PBS; Margulis et al., 2015), for snow reanalysis. Here a batch of remotely sensed, typically FSCA, observations are assimilated simultaneously to weight (assign probability mass to) an ensemble of model trajectories for the entire water year. Margulis et al. (2016) used the PBS to perform a 90 m resolution snow reanalysis for the California Sierra Nevada covering the entire Landsat era from 1985 to 2015. Cortés and Margulis (2017) applied a similar setup to conduct a snow reanalysis for the extratropical Andes. Aalstad et al. (2018) compared the performance of the PBS with ensemble-Kalman-based smoothers when assimilating FSCA data from Sentinel-2 and MODIS for sites in the high Arctic and found that the PBS markedly outperformed non-iterative ensemble-Kalman-based smoothers in line with Margulis et al. (2015). Baldo and Margulis (2018) developed a multi-resolution snow reanalysis framework using the PBS and demonstrated, through tests in a basin in Colorado, that this could match the performance of the original single-resolution approach at a fraction of the computational cost. In DA experiments in the Swiss Alps, Fiddes et al. (2019) showed how clustering offers an alternative promising avenue for speeding up snow DA with the PBS, allowing for hyper-resolution reanalyses. Alonso-González et al. (2021) carried out a snow reanalysis over the sparsely instrumented Lebanese mountains by forcing FSM2 with quasi-dynamically downscaled meteorological reanalysis data and subsequently assimilating MODIS-based FSCA retrievals with the PBS. Liu et al. (2021) recently performed an 18-year 500 m resolution snow reanalysis for High Mountain Asia by using the PBS to jointly assimilate MODIS and Landsat FSCA data. Although all of these studies have focused on using FSCA data for snow reanalysis, other remotely sensed retrievals could also be considered. For example, the work of Margulis et al. (2019) has shown that the PBS can also be used to assimilate infrequent lidar-based snow depth retrievals with marked improvements in the estimation of snowpack-related variables.

Importance sampling is key to understanding these particle methods. Despite what the name may suggest, this is actually not an approach to directly generate samples from a target distribution of interest. Instead, it allows us to estimate expectations of functions with respect to a target distribution by drawing from another distribution that it is easier to sample from (MacKay, 2003). In DA, the posterior is the target distribution of interest, and the expectation of some function g(x) with respect to the posterior is defined as follows (Särkkä, 2013):

where the expectation E[⋅] of g(x)=x yields the posterior mean and the expectation of yields the posterior variance . If we could generate Ne independent samples from the posterior, , we could estimate the expectation in Eq. (23) numerically using direct Monte Carlo integration as follows:

where the x(i) with denotes Ne independent samples from the posterior. Thanks to the law of large numbers and the central limit theorem, we know that this approximation will converge almost surely to the true expectation as Ne→∞ with a standard error inversely proportional to (Chopin and Papaspiliopoulos, 2020). Unfortunately, we are rarely able to generate independent samples directly from the posterior.

In importance sampling, we instead use a proposal (or importance) distribution q(x) that we know how to sample from, which must have at least the same support as the posterior (i.e., q(x)>0 wherever ). Then, by multiplying the integrand with , we can solve Eq. (23) using importance-sampling-based Monte Carlo integration:

where x(i) now denotes samples from the proposal and we have defined the normalized weights . Following the nomenclature of Chopin and Papaspiliopoulos (2020), these weights are normalized in the sense that their expectation with respect to q(x) equals 1. A hurdle remains in that we only know the posterior up to an unknown normalizing constant (i.e., the evidence Z), so in practice we can only evaluate the un-normalized posterior. Recalling the definition of the evidence in Eq. (3), we can nonetheless use importance sampling to approximate it as follows:

where ) is the un-normalized posterior and we have defined the un-normalized weights , which no longer have an expectation of 1 with respect to q(x). Using the samples x(i)∼q(x), this evidence approximation, and that , we can now solve Eq. (23) as follows:

where, letting w(i)=w(x(i)) for economy, the auto-normalized weights are given by with the property that . Note that with these auto-normalized weights, q(x) also only needs to be known up to a normalizing constant Zq, since any such constants cancel out in the auto-normalization step. Furthermore, direct Monte Carlo integration can be seen as a special case of importance sampling where the proposal is the target distribution itself which leads to uniformly equal weights with a value of .

Mathematically, importance sampling is tantamount to a particle representation of the posterior through a sum of weighted Dirac delta functions centered on the sampled states x(i)∼q(x) of the form (Särkkä, 2013). We can see this by recalling that the Dirac delta has the properties that and , such that by inserting the particle representation in Eq. (23) we have

which is equal to the result in Eq. (27). This particle representation is helpful, since we can conceptualize distributions as consisting of a set of particles (or points) in state space whose probability masses are given by their weights.

The catch with importance sampling is that, unless the proposal is nearly identical to the target distribution, all the probability mass tends to collapse onto just a few particles as the dimensions of a problem increase (MacKay, 2003). This is the so-called degeneracy problem of particle methods, which is closely tied to the aforementioned curse of dimensionality (Farchi and Bocquet, 2018; Snyder et al., 2008). One way to partly circumvent degeneracy in a sequential setting is to employ resampling techniques (see Li et al., 2015) where a new set of equally weighted particles is drawn based on the weights (i.e., probability masses) of the existing particles. Effectively, resampling tends to reproduce particles with a higher weight while removing particles with a lower weight. Resampling thus provides obvious links between particle methods and more heuristic genetic algorithms, since the weights can be interpreted as a kind of “fitness” (Chopin and Papaspiliopoulos, 2020). The standard metric (e.g., Särkkä, 2013) for monitoring symptoms of degeneracy is the effective sample size

where a healthy ensemble of particles would have Neff=Ne, while a completely degenerated ensemble has Neff=1. This metric can be used to adaptively determine the need for resampling based on a requirement that the ratio stays above some “healthy” threshold. Although resampling ensures that weight is more evenly spread among the particles, the occurrence of several identical particles results in sample impoverishment, which can in the worst case also lead to a degenerate representation of the posterior. Particle diversity can nonetheless often be rejuvenated implicitly through stochastic terms (jitter) in the dynamical model or more explicitly with a few iterations of Markov chain Monte Carlo (MCMC; Gilks and Berzuini, 2001).

Having outlined importance sampling and resampling, the final step is to tie these steps together with the sequential aspect of particle methods. In the context of both particle filtering and smoothing, importance sampling can be applied sequentially in time as observations become available to the state-space model. For the particle filter, this occurs by first drawing particles from the initial prior and then for performing SIR:

-

Propagate the particles forward in time through the dynamical model from time k−1 to k where new observations are available.

-

Calculate the auto-normalized weights given the current observations.

-

Resample the particles, possibly only if the ratio is below some threshold.

The recipes for implementing most flavors of particle smoothers tend to be a little bit more involved (Särkkä, 2013). Fortunately, the particle batch smoother (PBS) in MuSA is relatively straightforward, since in practice it is equivalent to standard sequential importance sampling (SIS), which is just SIR without the resampling step. As such, SIS is quite prone to degeneracy. Nonetheless, an advantage with SIS is that, due to the absence of resampling, the dynamical state history of each particle will be completely consistent with the dynamical model. For the PBS we are only interested in the final (i.e., at time tn) weights , which, when attached to the full state histories, give us an approximation of the full posterior rather than the filtering distribution . This is advantageous in snow data assimilation, since it allows information from observations during the ablation season to propagate backward in time and influence states in the preceding accumulation season. The final weights can be computed either sequentially using SIS or in a batch update, since these are equivalent when the dynamics are Markovian and the observations are conditionally independent in time. The batch approach used in the PBS is appealing, since it can be wrapped around the model, allowing the dynamics to evolve freely for the whole data assimilation window from t0 to tn without the need for I/O interruptions in the time integration and typically resulting in marked run time acceleration compared to sequential approaches.

Now that we have introduced the particle methods, what remains is to describe their implementation in MuSA and in particular the choice of proposal distribution. For simplicity, we adopt the standard and simplest approach for particle methods, which is to use the prior as the proposal (van Leeuwen and Evensen, 1996). Note that this is analogous to what was done in the seminal bootstrap filter of Gordon et al. (1993) and is to our knowledge the only approach considered so far for snow data assimilation. Thereby, recalling that and inserting for q(x)=p(x), then , which gives us the following simple expression for the auto-normalized weights:

which is simply the normalized likelihood of the prior particles x(i)∼p(x). With the usual Gaussian likelihood employed in MuSA, these weights are given by

where denotes the predicted observations for the ith particle. In practice, to ensure numerical stability, we first compute the natural logarithm of the weights by using the log-sum-exp trick to avoid potential overflow and minimize the effects of underflow (Murphy, 2022; Chopin and Papaspiliopoulos, 2020). Subsequently, the weights can be diagnosed by taking the exponential of these stable logarithms.

In the current version of MuSA we apply resampling at each observation time step for the PF independently of what the effective sample size is. Furthermore the state x is split into parameters u and internal states v. The PF in MuSA is then implemented as described in the pseudocode in Algorithm 1. For clarity, this pseudocode omits details such as the log-sum-exp trick (Murphy, 2022) and the way that resampling is performed given that there are several options available (Li et al., 2015).

Algorithm 1Particle filter.

Note that the particles will all have a posterior weight equal to due to the resampling which happens at each observation time step. Combined with the transition density, these weights form the prior for the next observation time. This explains why no weights from the prior enter into the posterior weight calculation, since these are all equal and so cancel out in the auto-normalization. For the PF, the DAW can be understood to be the time interval between two neighboring observation times. The prior mean and variance of the internal states can be obtained by taking the (unweighted) ensemble means and variances of the particle trajectories in the DAW before the resampling step. The posterior means and variances of the internal states can be obtained by taking the (unweighted) ensemble mean and variance of the particle trajectories in the DAW after the resampling step.

In MuSA, the PBS is even more straightforward to implement than the PF. Pseudocode for the PBS algorithm as implemented in MuSA is outlined in Algorithm 2. Here too we have emphasized clarity in the pseudocode and omit details from the actual code such as the log-sum-exp trick.

Algorithm 2Particle batch smoother.

For the PBS, the DAW is the entire water year. The prior mean and variance of the internal states can be obtained by taking the (unweighted) ensemble means and variances of the particle trajectories in the DAW. The posterior means and variances of the internal states are obtained by taking the weighted ensemble mean and variance of the particle trajectories in the DAW.

3.5 Ensemble Kalman methods

The ensemble Kalman filter (EnKF) was originally proposed by Evensen (1994) as a Monte Carlo version of the original Kalman filter (KF; see Jazwinski, 1970; Särkkä, 2013). The original KF assumes that all distributions involved in filtering are Gaussian and that both the dynamical and the observation models are linear. These assumptions come on top of the usual filtering assumptions of Markovian state dynamics and conditionally independent observations. If all these assumptions are met, the exact filtering distribution turns out to be a Gaussian one of the form and there is a set of closed-form (i.e., analytical) equations for the mean mk and covariance Pk, which completely defines this Gaussian filtering distribution, known as the KF equations (Särkkä, 2013). Geoscientific models are almost never linear, so these equations can usually not be applied directly in practice. Even when they can, the need to explicitly store and update the state covariance matrix Pk with dimensions m×m can be computationally prohibitive in high dimensional problems typical in geoscience (Carrassi et al., 2018).

There exist several modified versions of the KF such as the extended KF (EKF) based on Taylor series approximations and the unscented KF (UKF) based on the unscented transform that can both partly circumvent the linearity assumption (Särkkä, 2013). Since both the EKF and the UKF also require an explicit covariance matrix update and are considerably more challenging to implement than the KF, they have only rarely been used for geoscientific DA. Instead, since its introduction by Evensen (1994), it is the EnKF that has become one of the methods of choice for DA. The reasons for this are that the EnKF is relatively straightforward to implement and understand (Katzfuss et al., 2016), it can handle non-linear models, the state covariance evolves implicitly with the ensemble, and it has proven to work well in very high dimensional operational applications in both meteorology and oceanography (see Carrassi et al., 2018, for a review). Although it makes the same Gaussian linear assumptions as the KF, in practice it can often still function well when these assumptions are strongly violated thanks to techniques such as Gaussian anamorphosis (Bertino et al., 2003) and iterations (Emerick and Reynolds, 2013). The ensemble Kalman approach can also be applied to solve smoothing problems as first shown by van Leeuwen and Evensen (1996). These smoothers, particularly the so-called ensemble smoother (ES), play an important role in solving reanalysis problems that arise in both land surface and snow data assimilation (Dunne and Entekhabi, 2005; Durand and Margulis, 2006).

The EnKF was one of the first schemes to be used for snow data assimilation. Slater and Clark (2006) used the EnKF to assimilate SWE data into a conceptual snow model at several sites in Colorado, leading to marked improvements in SWE estimates compared to both the control model runs and interpolated observations. Clark et al. (2006) used a snow depletion curve to assimilate synthetic satellite retrievals of FSCA into a conceptual snow hydrology model of a catchment in Colorado, leading to minor improvements in simulated streamflow. Durand and Margulis (2006) jointly assimilated synthetic satellite retrievals of brightness temperature and albedo into the SSiB3 model using the EnKF at a site in the California Sierra Nevada to yield marked improvements compared to the open loop. Andreadis and Lettenmaier (2006) used the EnKF to assimilate MODIS FSCA into the VIC model for the Snake River basin in the Pacific Northwest region of the USA, improving model estimates of both FSCA and SWE.

Following on from these initial studies there have been several more recent snow DA applications using the EnKF. In a synthetic experiment in northern Colorado, De Lannoy et al. (2010) showed how the EnKF can be used to assimilate coarse-scale passive microwave SWE observations into high-resolution runs of the Noah land surface model, leading to large reductions in error in SWE estimation compared to the open loop. In a follow-up study, De Lannoy et al. (2012) jointly assimilated real MODIS FSCA and AMSR-E SWE retrievals into the Noah model to demonstrate the complementary nature of these types of satellite observations for SWE estimation. Magnusson et al. (2014) used the EnKF to assimilate data from ground-based stations in the Swiss Alps and showed that assimilating fluxes (snowmelt and snowfall), rather than directly assimilating SWE data, improved the SWE estimation. The study of Huang et al. (2017) demonstrated that the assimilation of in situ SWE data into a hydrological model with the EnKF could improve streamflow simulations for several basins in the western USA. Stigter et al. (2017) performed a joint assimilation of in situ snow depth data and FSCA satellite retrievals with the EnKF to optimize parameters and subsequently estimate the climate sensitivity of SWE and snowmelt runoff for a Himalayan catchment. More recently, Hou et al. (2021) showed how machine learning techniques can be used to construct empirical observation operators to assimilate satellite-based FSCA with the EnKF.

The ensemble smoother (ES), the batch smoother version of the EnKF, has also been used extensively for snow DA, mainly for reanalysis. Durand et al. (2008) were the first to demonstrate the value of the ES for snow reanalysis by noting that it could be used as a new approach to solve the traditional problem of snow reconstruction. They showed substantial improvements in SWE estimation for the posterior compared to the prior after having assimilated synthetic FSCA observations into SSiB3. A key advantage of the ES over the EnKF is that it allows information to propagate backward in time, so observations made in the ablation season can update the preceding accumulation season. Following up this study with a real experiment assimilating Landsat FSCA retrievals into SSiB3 using the ES at a basin in the California Sierra Nevada, Girotto et al. (2014a) demonstrated explicitly how snow reanalysis using the ES is a more robust probabilistic generalization of the traditional deterministic approach to snow reconstruction. Subsequently, Girotto et al. (2014b) applied the same ES snow reanalysis framework to produce a multi-decadal high-resolution snow reanalysis for the Kern River watershed in the California Sierra Nevada. Oaida et al. (2019) used the ES to assimilate MODIS FSCA observations into the VIC model to produce a kilometer-scale snow reanalysis over the entire western USA. Aalstad et al. (2018) introduced an iterative version of the ES, the ensemble smoother with multiple data assimilation (ES-MDA; Emerick and Reynolds, 2013), for snow reanalysis and showed how this could outperform both the ES and the PBS when assimilating FSCA retrievals at sites on the high-Arctic Svalbard archipelago. In this iterative scheme the prior ensemble moves gradually to the posterior ensemble through a tempering procedure (see Stordal and Elsheikh, 2015, and references therein). The iterations thus mitigate the impact of the linearity assumption inherent in ensemble Kalman methods (Evensen, 2018), typically leading to marked improvements compared to the ES for non-linear models without the risk of degeneracy associated with particle methods as the curse of dimensionality rears its head (Snyder et al., 2008; Pirk et al., 2022).

A derivation of the KF equations, which the EnKF and ES are largely based on, is beyond the scope of this work. Full multivariate Bayesian derivations of these equations can be found on p. 197 of Jazwinski (1970) and in Sect. 4.3 of Särkkä (2013). The EnKF itself is thoroughly derived in Evensen (2009) and Evensen et al. (2022). There are actually several variants of the ensemble Kalman analysis step (see Carrassi et al., 2018). In MuSA we use the so-called stochastic rather than deterministic (or square-root) implementation. This stochastic formulation adds perturbations to the predicted observations to ensure adequate ensemble spread and consistency with the underlying Bayesian theory (Burgers et al., 1998; van Leeuwen, 2020).

Here we present the equations for both the stochastic EnKF (van Leeuwen, 2020) and the ES (van Leeuwen and Evensen, 1996), as well as their iterative versions based on the multiple data assimilation (MDA) scheme of Emerick and Reynolds (2012, 2013), in the form that they are used in MuSA. The iterative versions tend to perform better than their non-iterative counterparts for non-linear problems (Evensen, 2018). Let Na denote the number of assimilation cycles (iterations) performed in a pseudotime (rather than model) time. For the standard EnKF and ES we set Na=1, while with their iterative variants Na>1, typically Na=4 (Emerick and Reynolds, 2013; Aalstad et al., 2018). The superscript ℓ is used to index these iterations. Let denote the mp×Ne parameter matrix containing the ensemble () of parameter vectors u(i)(ℓ) for iteration ℓ. Recall that the subset of these parameters that are physically bounded have undergone the relevant analytic transformations for Gaussian anamorphosis, and the corresponding inverse transforms are applied back to physical space when these are passed through the dynamical model (see Aalstad et al., 2018). Similarly, let denote the predicted observation matrix containing the ensemble of predicted observations . Adopting this notation, the stochastic ensemble Kalman methods in MuSA are implemented as described in the pseudocode in Algorithm 3. For clarity, this pseudocode also omits details such as how the matrix inversion for the ensemble Kalman gain in Eq. (32) is carried out. For this we follow the pseudoinverse and rescaling approach outlined in Appendix A of Emerick and Reynolds (2012).

Algorithm 3Ensemble Kalman analysis with multiple data assimilation.

Recall that for Na=1 we recover the non-iterative stochastic EnKF and ES, while for Na>1 we are using iterative versions of these schemes that involve multiple data assimilation with inflated observation errors. The term “multiple data assimilation” refers to the assimilation of the same data multiple times rather than an assimilation of different types of data (joint assimilation). We speak of inflated observation errors, since the role of the coefficients α(ℓ) is to inflate the observation error covariance R in the Kalman gain Kℓ as well as the observation error term . This inflation is tantamount to tempering the likelihood as discussed by Stordal and Elsheikh (2015) and van Leeuwen et al. (2019), which explains why these iterative schemes perform better than their non-iterative counterparts for non-linear models in that they involve a more gradual transition from the prior to the posterior. Despite what the name might suggest, this multiple data assimilation approach does not actually violate the consistency of Bayesian inference by using the data more than once due to the way the observation error inflation is constructed, particularly due to the constraint that . Simply stated, this constraint ensures consistent results with a linear model, since by construction we obtain the same result by assimilating the data once with the original uninflated (α=1) observation errors as we do assimilating the same data multiple times with inflated (α>1) observation errors. With a non-linear model, practice has shown that these iterations of the ensemble Kalman analysis ensure that the approximate posterior is closer to the true posterior than if a more conventional single uninflated iteration is used (Evensen, 2018). It is possible to satisfy the constraint on the α(ℓ) with both uniform and non-uniform inflation coefficients (Evensen, 2019). For simplicity, following Emerick and Reynolds (2013), we currently opt for the former in MuSA by setting α(ℓ)=Na for all ℓ values by default while allowing for non-uniform coefficients as an option.

To keep the notation as simple as possible, we have not explicitly introduced time indices for these ensemble Kalman methods. Instead we can simply note that for the iterative and non-iterative EnKF, the loop above runs inside another outer loop that runs across all observation times. That is to say, the ℓ loop is run several times sequentially for multiple DAWs where each window is from one observation time to the next, such that the posterior parameters and internal states obtained at ℓ=Na for the current DAW that ran up to the current observation time become the initial prior at ℓ=0 for the next DAW that runs from the current observation time up to the next observation time. Recall that the parameters also undergo jitter dynamics when filtering.

For the non-iterative and iterative ensemble batch Kalman smoothers used in MuSA, namely the ES and the ES-MDA, there is no longer an outer time loop encapsulating the iterations. Instead, the DAW is the entire water year. In these smoother schemes, the parameters do not undergo any jitter. As such, the entire prior and posterior ensemble of state trajectories for a given water year will be consistent with the prior and posterior ensemble of static parameters for the entire water year. Recall that each water year is typically updated independently in MuSA, which currently focuses on seasonal snow.

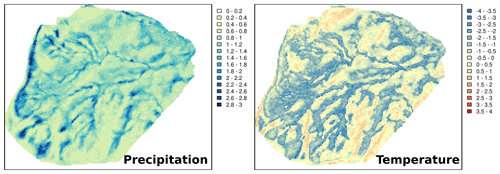

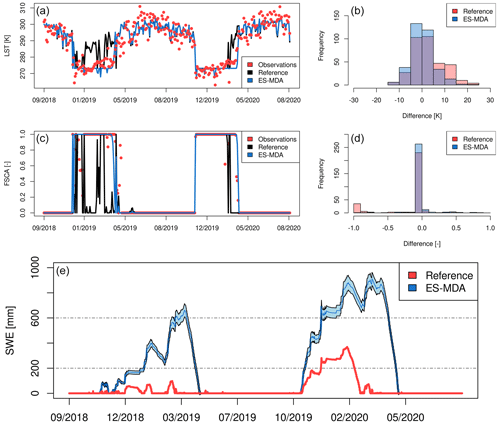

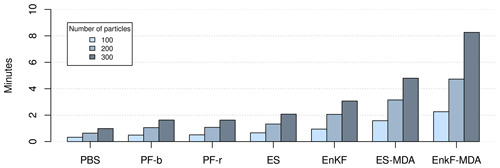

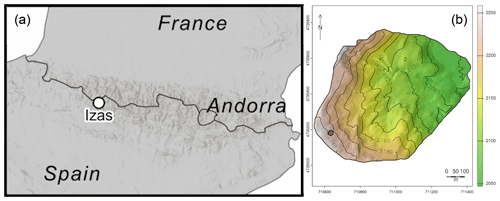

In the following section, we illustrate the capabilities of MuSA using a case study and perform a benchmarking of the implemented data assimilation algorithms. We developed two data assimilation experiments in the 55 ha Izas experimental catchment (Revuelto et al., 2017) in the Spanish Pyrenees (see Fig. 2). The first experiment shows the capabilities of MuSA to assimilate hyper-resolution products in both a single-cell and distributed fashion, whereas the second demonstrates the capabilities to develop joint-assimilation experiments. Note that all the variables that we assimilate in these experiments are state variables in FSM2. We generated hourly meteorological forcing at 5 m resolution over the Izas catchment using the MicroMet meteorological distribution system (Liston and Elder, 2006b) to downscale the ERA5 atmospheric reanalysis (Hersbach et al., 2020).

Figure 2Location of the Izas experimental basin in the Pyrenees (a) and topography (b). The red circle indicates the location of the cell used for the intercomparison of algorithms described below.

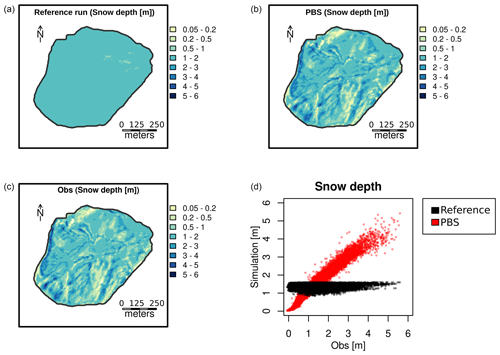

4.1 Single-cell and distributed assimilation of drone-based snow depth retrievals