the Creative Commons Attribution 4.0 License.

Massive-Parallel Trajectory Calculations version 2.2 (MPTRAC-2.2): Lagrangian transport simulations on graphics processing units (GPUs)

Paul F. Baumeister

Zhongyin Cai

Jan Clemens

Sabine Griessbach

Gebhard Günther

Yi Heng

Mingzhao Liu

Kaveh Haghighi Mood

Olaf Stein

Nicole Thomas

Bärbel Vogel

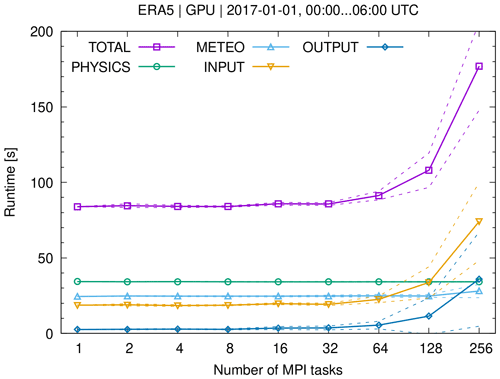

Lagrangian models are fundamental tools to study atmospheric transport processes and for practical applications such as dispersion modeling for anthropogenic and natural emission sources. However, conducting large-scale Lagrangian transport simulations with millions of air parcels or more can become rather numerically costly. In this study, we assessed the potential of exploiting graphics processing units (GPUs) to accelerate Lagrangian transport simulations. We ported the Massive-Parallel Trajectory Calculations (MPTRAC) model to GPUs using the open accelerator (OpenACC) programming model. The trajectory calculations conducted within the MPTRAC model were fully ported to GPUs, i.e., except for feeding in the meteorological input data and for extracting the particle output data, the code operates entirely on the GPU devices without frequent data transfers between CPU and GPU memory. Model verification, performance analyses, and scaling tests of the Message Passing Interface (MPI) – Open Multi-Processing (OpenMP) – OpenACC hybrid parallelization of MPTRAC were conducted on the Jülich Wizard for European Leadership Science (JUWELS) Booster supercomputer operated by the Jülich Supercomputing Centre, Germany. The JUWELS Booster comprises 3744 NVIDIA A100 Tensor Core GPUs, providing a peak performance of 71.0 PFlop s$^{-1}$. As of June 2021, it is the most powerful supercomputer in Europe and listed among the most energy-efficient systems internationally. For large-scale simulations comprising $10^8$ particles driven by the European Centre for Medium-Range Weather Forecasts' fifth-generation reanalysis (ERA5), the performance evaluation showed a maximum speed-up of a factor of 16 due to the utilization of GPUs compared to CPU-only runs on the JUWELS Booster. In the large-scale GPU run, about 67 % of the runtime is spent on the physics calculations, conducted on the GPUs. Another 15 % of the runtime is required for file I/O, mostly to read the large ERA5 data set from disk. Meteorological data preprocessing on the CPUs also requires about 15 % of the runtime. Although this study identified potential for further improvements of the GPU code, we consider the MPTRAC model ready for production runs on the JUWELS Booster in its present form. The GPU code provides a much faster time to solution than the CPU code, which is particularly relevant for near-real-time applications of a Lagrangian transport model.

Lagrangian transport models are frequently applied to study chemical and dynamical processes of the Earth's atmosphere. They have important practical applications in modeling and assessing the dispersion of anthropogenic and natural emissions from local to global scale, for instance, for air pollution (Hirdman et al., 2010; Lee et al., 2014), nuclear accidents (Becker et al., 2007; Draxler et al., 2015), volcanic eruptions (Prata et al., 2007; D'Amours et al., 2010; Stohl et al., 2011), or wildfires (Forster et al., 2001; Damoah et al., 2004). Concerning studies of atmospheric dynamics of the free troposphere and stratosphere, Lagrangian transport models have been used, for instance, to assess the circulation of the troposphere and stratosphere (Bowman and Carrie, 2002; Konopka et al., 2015; Ploeger et al., 2021), stratosphere–troposphere exchange (Wernli and Bourqui, 2002; James et al., 2003; Stohl et al., 2003), mixing of air (Konopka et al., 2005, 2007; Vogel et al., 2011), and large-scale features of the atmospheric circulation, such as the polar vortex (Grooß et al., 2005; Wohltmann et al., 2010) or the Asian monsoon anticyclone (Bergman et al., 2013; Vogel et al., 2019; Legras and Bucci, 2020).

A wide range of Lagrangian transport models has been developed for research studies and operational applications during the past decades (Draxler and Hess, 1998; McKenna et al., 2002b, a; Lin et al., 2003; Stohl et al., 2005; Jones et al., 2007; Stein et al., 2015; Sprenger and Wernli, 2015; Pisso et al., 2019). While Eulerian models represent fluid flows in the atmosphere based on the flow between regular grid boxes of the model, Lagrangian models represent the transport of trace gases and aerosols based on large sets of air parcel trajectories following the fluid flow. Both approaches have distinct advantages and disadvantages. Lagrangian models are particularly suited to study fine-scale structures, filamentary transport, and mixing processes in the atmosphere, as their spatial resolution is not inherently limited to the resolution of Eulerian grid boxes and numerical diffusion is particularly low for these models. However, Lagrangian transport simulations may become rather costly because subgrid-scale processes, such as diffusion and mesoscale wind fluctuations, need to be represented in a statistical sense, i.e., by adding stochastic perturbations to large sets of air parcel trajectories.

In this study, the Massive-Parallel Trajectory Calculations (MPTRAC) model is applied to exploit the potential of conducting Lagrangian transport simulations on graphics processing units (GPUs). MPTRAC was first described by Hoffmann et al. (2016), discussing Lagrangian transport simulations for volcanic eruptions with different meteorological data sets. Heng et al. (2016) and Liu et al. (2020) discussed inverse transport modeling to estimate volcanic sulfur dioxide emissions from the Nabro eruption in 2011 based on large-scale simulations with MPTRAC. Wu et al. (2017, 2018) conducted case studies on global transport of volcanic sulfur dioxide emissions of Sarychev Peak in 2009 and Mount Merapi in 2010. Cai et al. (2021) studied the Raikoke volcanic eruption in June 2019. Zhang et al. (2020) applied MPTRAC to study aerosol variations in the upper troposphere and lower stratosphere over the Tibetan Plateau. Smoydzin and Hoor (2021) used the model to assess the contribution of Asian emissions to upper tropospheric carbon monoxide over the remote Pacific. Hoffmann et al. (2017) presented an intercomparison of meteorological analyses and trajectories in the Antarctic lower stratosphere with Concordiasi superpressure balloon observations. Rößler et al. (2018) investigated the accuracy and computational efficiency of different integration schemes to solve the trajectory equation with MPTRAC. Based on the given applications, MPTRAC is designed primarily to study large-scale atmospheric transport in the free troposphere and stratosphere. Applications of the model to the planetary boundary layer are limited as MPTRAC lacks more sophisticated parameterizations of turbulence and mixing required for that region.

The idea of using specialized computation units for scientific computing goes back to the early 1980s when co-processors like Intel's 8087 and 8231 were introduced. It is more than 20 years since graphics processing units (GPUs) were leveraged for non-graphical general-purpose calculations (Hoff et al., 1999) and a decade since the first GPU-accelerated cluster appeared on the top 500 list of supercomputers (Dongarra et al., 2021). Today, 6 of the 10 fastest supercomputers on the top 500 list and 9 out of 10 on the green 500 list are GPU-accelerated machines. Most of the pre-exascale systems (CINECA, 2020; CSC, 2020), and likely the first exascale machine will be accelerated by GPUs (DOE, 2019a, b; LLNL, 2020). Besides energy efficiency, the software and hardware maturity contributed to the popularity of GPUs. GPUs are not only interesting high-performance computing (HPC) research topics anymore but also essential workhorses for modern scientific computing.

Large-scale and long-term Lagrangian transport simulations for climate studies or inverse modeling applications can become very compute-intensive (Heng et al., 2016; Liu et al., 2020). The benefits of parallel computing to accelerate Lagrangian transport simulations have been assessed in several studies (Jones et al., 2007; Brioude et al., 2013; Pisso et al., 2019). The aim of the present study is to investigate the potential of using GPUs for accelerating Lagrangian transport simulations, a topic first explored by Molnár et al. (2010). Related efforts to solve the advection–diffusion equation for a 3-D time-dependent Eulerian model on GPUs have been reported by de la Cruz et al. (2016). The Centre for Global Environmental Research at the National Institute for Environmental Studies, Japan, published online a Lagrangian particle dispersion model based on parts of the FLEXible PARTicle (FLEXPART) model (Stohl et al., 2005; Pisso et al., 2019), reporting that performance can be improved by more than 20 times by using a multi-core CPU with an NVIDIA GPU (CGER, 2016).

GPUs bear the potential to not only calculate the solutions of Lagrangian transport problems more quickly but also obtain them in a much more energy-efficient manner. For this study, we ported our existing Lagrangian transport model MPTRAC to GPUs by means of the open accelerator (OpenACC) programming model. Next to offloading calculations to GPUs, the code is also capable of distributing computing tasks employing the Message Passing Interface (MPI) over the compute nodes and the Open Multi-Processing (OpenMP) over the CPU cores of a heterogeneous supercomputer. A detailed evaluation and performance assessment of MPTRAC on GPUs was conducted on the Jülich Wizard for European Leadership Science (JUWELS) system (Jülich Supercomputing Centre, 2019) at the Jülich Supercomputing Centre, Germany.

Lagrangian transport simulations are often driven by global meteorological reanalyses or forecast data sets. The MPTRAC model has been used with the National Centers for Environmental Prediction and National Center for Atmospheric Research (NCEP/NCAR) reanalysis 1 (Kalnay et al., 1996), the National Aeronautics and Space Administration (NASA) Modern-Era Retrospective analysis for Research and Applications (Rienecker et al., 2011; Gelaro et al., 2017) as well as the European Centre for Medium-Range Weather Forecasts (ECMWF) ERA-Interim reanalysis (Dee et al., 2011) and operational analyses. More recently, we also applied ECMWF's fifth-generation reanalysis, ERA5 (Hersbach et al., 2020), to drive simulations with the MPTRAC model. Providing hourly data at 31 km horizontal resolution on 137 vertical levels from the surface up to 0.01 hPa (about 80 km of height) on a global scale, ERA5 poses a particular challenge for Lagrangian transport modeling due to the large amount of data that needs to be handled (Hoffmann et al., 2019). In this study, we conducted the model verification and performance analyses of MPTRAC with ERA5 data, as this is the data set we intend to mainly use in future works, to benefit from high resolution and other improvements of the forecasting model and data assimilation scheme. It also needs to be taken into account that the production of ERA-Interim stopped in August 2019 in favor of ERA5.

We provide a comprehensive description of the MPTRAC model in Sect. 2 of this paper. Next to describing the different implemented algorithms and the requirements and options for model input and output data, we discuss the parallelization strategy and the porting of the code to GPUs in more detail. Model verification as well as performance and scaling analyses are discussed in Sect. 3. As the CPU code of MPTRAC was already verified in earlier studies, we mainly focus on the direct comparisons of CPU and GPU calculations in the present work. The model evaluation covers comparisons of both individual kinematic trajectory calculations and global simulations of synthetic tracer distributions, including the effects of diffusion, convection, and chemical lifetime. We assessed the performance and scaling of all three components of the MPI–OpenMP–OpenACC hybrid parallelization on a single device and in a multi-GPU setup. Section 4 provides the summary and conclusions of this study.

2.1 Overview on model features and code structure

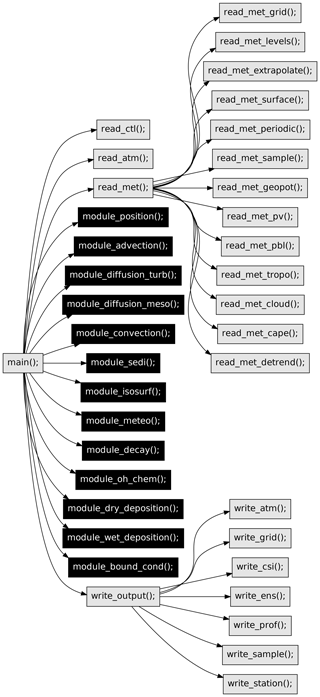

Figure 1 provides a simplified overview of the code structure and most of the features of the MPTRAC model. The call graph shown here was derived automatically from the C code of MPTRAC by means of the cflow tool, but it has been edited and strongly simplified in order to present only the most relevant features. Following the input–process–output (IPO) pattern, the call graph reveals the principal input functions (read_*), the processing functions (module_*), and the output functions (write_*) of MPTRAC.

Figure 1. Call graph of the most relevant functions of the MPTRAC model. The individual functions are sorted following the IPO approach; see text for details. Black boxes highlight parts of the code that are ported to GPUs.

Three main input functions are available, i.e., read_ctl to read the model control parameters, read_atm to read the initial particle data, and read_met to read the meteorological input data. The read_met function further splits into functions that deal directly with reading of the meteorological data files (read_met_grid, read_met_levels, and read_met_surface), extrapolation and sampling of the meteorological data (read_met_extrapolate, read_met_periodic, read_met_sample, and read_met_detrend), and the calculation of additional meteorological variables (read_met_geopot, read_met_pv, read_met_pbl, read_met_tropo, read_met_cloud, and read_met_cape), also referred to as meteorological data preprocessing in this work. Although the additional meteorological variables are often available directly via the meteorological input data files, the MPTRAC model allows users to recalculate these data consistently from different meteorological input data sets. The MPTRAC model input data are further discussed in Sect. 2.2.

The processing functions of MPTRAC provide the capabilities to calculate kinematic trajectories of the particles using given ω velocities (module_advection) and to add stochastic perturbations to the trajectories to simulate the effects of diffusion and subgrid-scale wind fluctuations (module_diffusion_turb and module_diffusion_meso). The functions module_convection and module_sedi may alter the particle positions along the trajectories by simulating the effects of unresolved convection and sedimentation. The function module_isosurf allows us to vertically constrain the particle positions to different types of isosurfaces. The function module_position forces the particles to remain within the boundaries of the model domain. The function module_meteo allows us to sample the meteorological data along the trajectories at fixed time intervals. The functions module_decay, module_oh_chem, module_dry_deposition, module_wet_deposition, and module_bound_cond affect the mass or volume mixing ratios of the particles based on a given e-folding lifetime, chemical decomposition by the hydroxyl radical, dry and wet deposition, or by enforcing boundary conditions, respectively. Most of the chemistry and physics modules are presented in more detail in Sect. 2.3.

Finally, the model output is directed via a generic function (write_output) towards individual functions that can be used to write particle data (write_atm), gridded data (write_grid), or ensemble data for groups of particles (write_ens). The functions write_csi, write_prof, write_sample, and write_station can be used to sample the model data in specific manners to enable comparisons with observational data, such as satellite measurements or data from measurement stations. The model output is further discussed in Sect. 2.4.

All the processing functions (module_*) are highlighted in Fig. 1 to indicate that they have been ported to GPUs in the present work. Porting these processing functions to GPUs is the natural choice as these functions typically require most of the computing time, in particular for simulations with many particles. The input and output functions cannot easily be ported to GPUs as the input and output operations are directed over the CPUs and utilize CPU memory in the first place. The approach used for porting the physics and chemistry modules to GPUs and the parallelization strategy of MPTRAC are discussed in more detail in Sect. 2.5.

2.2 Model input data

2.2.1 Initial air parcel positions

In order to enable trajectory calculations, MPTRAC requires an input file providing the initial positions of the air parcels. The initial positions are defined in terms of time, log-pressure height, longitude, and latitude. Internally, MPTRAC applies pressure as its vertical coordinate. For convenience, the initial pressure $p$ is specified in terms of a log-pressure height $z$ obtained from the barometric formula, $p(z) = p_0 \exp(-z/H)$, with a fixed reference pressure $p_0 = 1013.25$ hPa and scale height $H = 7$ km. Longitude and latitude refer to a spherical Earth approximation, with a constant radius of curvature of $R_E = 6367.421$ km.
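As a minimal sketch of this conversion, the two helper functions below map between pressure and log-pressure height. They assume the barometric formula $p = p_0 \exp(-z/H)$ with the constants quoted above; the function names are hypothetical and not taken from the MPTRAC source.

```python
import math

P0_HPA = 1013.25  # reference pressure quoted in the text (hPa)
H_KM = 7.0        # scale height quoted in the text (km)


def pressure_from_height(z_km):
    """Pressure (hPa) at log-pressure height z (km): p = p0 * exp(-z / H)."""
    return P0_HPA * math.exp(-z_km / H_KM)


def height_from_pressure(p_hpa):
    """Log-pressure height (km) for pressure p (hPa): z = H * ln(p0 / p)."""
    return H_KM * math.log(P0_HPA / p_hpa)


# Example: pressure at 11.25 km log-pressure height, and the round trip back.
p = pressure_from_height(11.25)
print(p, height_from_pressure(p))
```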

Internally, MPTRAC uses the time in seconds since 1 January 2000, 00:00 UTC as its time coordinate. Tools are provided along with the model to convert the internal time from and to UTC time format. The internal time step Δt of the model simulations can be adjusted via a control flag. MPTRAC allows for arbitrary start and end times of the trajectories by adjusting (shortening) the initial and the final time steps to synchronize with the internal model time steps Δt. A control flag is provided to specify whether the model should conduct the trajectory calculations forward or backward in time.
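The internal time coordinate and the shortening of the first and last time steps can be illustrated with the sketch below. It is not the code of the tools shipped with MPTRAC: the function names are hypothetical, leap seconds are ignored (an assumption, following Python's `datetime`), and only forward calculations are covered.

```python
import math
from datetime import datetime, timedelta, timezone

EPOCH = datetime(2000, 1, 1, tzinfo=timezone.utc)  # reference time from the text


def to_model_time(utc):
    """Seconds since 1 January 2000, 00:00 UTC."""
    return (utc - EPOCH).total_seconds()


def to_utc(seconds):
    """Inverse of to_model_time."""
    return EPOCH + timedelta(seconds=seconds)


def step_lengths(t_start, t_end, dt):
    """Forward time steps from t_start to t_end.

    Every step ends on a multiple of dt, so only the first and the last
    step can be shorter than dt.
    """
    steps = []
    t = t_start
    while t < t_end:
        t_next = min((math.floor(t / dt) + 1) * dt, t_end)
        steps.append(t_next - t)
        t = t_next
    return steps


print(to_model_time(datetime(2017, 1, 1, tzinfo=timezone.utc)))
print(step_lengths(100, 500, 180))
```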

Unless the initial positions of the trajectories are derived from measurements, a set of tools provided with MPTRAC can be used to create initial air parcel files. The tool atm_init creates a regular grid of air parcel positions in time, log-pressure height, longitude, and latitude. Around each grid point and time, the tool can create random distributions of multiple particles by applying either uniform distributions with given widths or normal distributions with given standard deviations. The total mass of all air parcels can be specified, which is distributed equally over all air parcels. Alternatively, a fixed volume mixing ratio for each air parcel can be specified. With this tool, initializations for a wide range of sources (e.g., point source, vertical column, surface, or volume source) with spontaneous or continuous emissions can be created.

A tool to modify air parcel positions is atm_split. It can be used to create a larger set of air parcel positions based on randomized resampling from a given, smaller set of air parcel positions. It splits the initial small set into a larger set of air parcels. The total number of air parcels that is desired for the large set can be specified, which is helpful because it provides control over the computational time needed for a simulation. The probability by which an air parcel is selected during the resampling can be weighted by its mass. The total mass of the initial air parcels can be retained or adjusted during the resampling process. During the resampling process, uniform or Gaussian stochastic perturbations in space and time can be applied to the air parcel positions. These stochastic perturbations can be used to spread the air parcels over a larger volume, which might represent the measurement volume covered by an instrument, for example. Finally, a vertical weighting function can be applied in the resampling process in order to redistribute the air parcel positions to follow the vertical sensitivity of a satellite sounder, for instance.
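The mass-weighted resampling described above might look roughly like the following sketch. It is not the atm_split implementation: the function name, the dictionary layout of a parcel, and the restriction to a Gaussian perturbation in height are assumptions made for illustration only.

```python
import random


def split_parcels(parcels, n_out, sigma_z_km=0.0, seed=0):
    """Resample `parcels` into `n_out` parcels, weighted by mass.

    Each parcel is a dict with the keys t, z, lon, lat, and m (hypothetical
    layout). The total mass is retained and spread equally over the output.
    """
    rng = random.Random(seed)
    total_mass = sum(p["m"] for p in parcels)
    # Selection probability is proportional to the parcel mass.
    picks = rng.choices(parcels, weights=[p["m"] for p in parcels], k=n_out)
    out = []
    for p in picks:
        q = dict(p)
        # Gaussian perturbation of the log-pressure height (km).
        q["z"] = p["z"] + rng.gauss(0.0, sigma_z_km)
        q["m"] = total_mass / n_out
        out.append(q)
    return out


seeds = [
    {"t": 0.0, "z": 10.0, "lon": 0.0, "lat": 0.0, "m": 3.0},
    {"t": 0.0, "z": 12.0, "lon": 5.0, "lat": 5.0, "m": 1.0},
]
large_set = split_parcels(seeds, 1000, sigma_z_km=0.5)
print(len(large_set), sum(q["m"] for q in large_set))
```

Fixing the output size `n_out` up front is what gives the user control over the cost of the subsequent simulation.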

2.2.2 Requirements and preprocessing of meteorological input data

Next to the particle positions, the other main input data required by MPTRAC are meteorological data from a global forecasting or reanalysis system. At a minimum, MPTRAC requires 3-D fields of temperature $T$, zonal wind $u$, and meridional wind $v$. The vertical velocity $\omega$, specific humidity $q$, ozone mass mixing ratio, cloud liquid water content (CLWC), cloud rain water content (CRWC), cloud ice water content (CIWC), and cloud snow water content (CSWC) are optional but often needed for specific applications. The model also requires the surface pressure $p_s$ or log-surface pressure ($\ln p_s$) and the geopotential height at the surface $Z_g$ to be provided as 2-D fields. Optionally, near-surface temperature $T_{2\,\mathrm{m}}$ and near-surface winds ($u_{10\,\mathrm{m}}$, $v_{10\,\mathrm{m}}$) can be ingested from the input files.

The model requires the input data in terms of separate NetCDF files at regular time steps. The meteorological data have to be provided on a regular horizontal latitude × longitude grid. The model internally uses pressure p as its vertical coordinate; i.e., the meteorological data should be provided on pressure levels. However, the model can also ingest meteorological data on other types of vertical levels, e.g., hybrid sigma vertical coordinates η as used by ECMWF's Integrated Forecast System (IFS). In this case, the model requires p as a 3-D coordinate on the input model levels in order to facilitate vertical interpolation of the data to a given list of pressure levels for further use in the model.

Once the mandatory and optional meteorological input variables have been read in from disk, MPTRAC provides the capability to calculate additional meteorological variables from the input data. This includes the options to calculate geopotential heights, potential vorticity, the tropopause height, cloud properties such as cloud layer depth and total column cloud water, the convective available potential energy (CAPE), and the planetary boundary layer (PBL). Having the option to calculate these additional meteorological data directly in the model helps to reduce the disk space needed to save them as input data. This is particularly relevant for large input data sets such as ERA5. It is also an advantage in terms of consistency, if the same algorithms can be applied to infer the additional meteorological variables from different meteorological data sets. The algorithms and selected examples on the meteorological data preprocessing are presented in the Supplement of this paper.

2.2.3 Boundary conditions for meteorological data

The upper boundary of the model is defined by the lowest pressure level of the meteorological input data. The lower boundary of the model is defined by the 2-D surface pressure field ps at a given time. If an air parcel leaves the pressure range covered by the model during the trajectory calculations, it will be pushed back to the lower or upper boundary of the model, respectively. Therefore, the particles will follow the horizontal wind and slide along the surface or the upper boundary until they encounter an up- or downdraft, respectively. For the surface, this approach follows Draxler and Hess (1997). The next release of the MPTRAC model will provide a new option to alternatively reflect particles at the top and bottom boundaries, in order to avoid accumulation of particles at the boundaries.

We recognize that pressure might not be the best choice for the vertical coordinate of a Lagrangian model, in particular for the boundary layer, because a broad range of surface pressure variations needs to be represented appropriately by a fixed set of pressure levels. However, as the scope of MPTRAC is on applications covering the free troposphere and the stratosphere, we consider the limitation of having reduced vertical resolution in terms of pressure levels near the surface acceptable for now. The next release of MPTRAC will allow the user to choose between pressure and the isentropic-sigma hybrid coordinate (ζ) of Mahowald et al. (2002) as vertical coordinate.

For global meteorological data sets, MPTRAC applies periodic boundary conditions in longitude. During the trajectory calculations, air parcels may sometimes cross either the pole or the longitudinal boundary of the meteorological data. If an air parcel crosses the North or South Pole, its longitude will be shifted by 180° and the latitude will be recalculated to match the ±90° latitude range, accordingly. If an air parcel leaves the longitude range, it will be repositioned by a shift of 360° to fit the range again. The model works with meteorological data provided at either −180° … +180° or 0° … 360° of longitude. Following Stohl et al. (2005), we mirror data from the western boundary at the eastern boundary of the model, in order to achieve full coverage of the 360° longitude range and to avoid extrapolation of the meteorological input data.
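The boundary handling described in this section can be sketched in a few lines. The code below is an illustration under stated assumptions, not the module_position implementation: the function names are hypothetical, longitudes follow the −180° … +180° convention, and a parcel is assumed to overshoot a pole by less than 180° within one step.

```python
def clamp_pressure(p, p_top, p_surf):
    """Push a parcel back to the upper (p_top) or lower (p_surf) boundary."""
    return min(max(p, p_top), p_surf)


def wrap_position(lon, lat):
    """Map (lon, lat) in degrees back into [-180, 180) x [-90, 90]."""
    # Crossing a pole: mirror the latitude and shift the longitude by 180 deg.
    if lat > 90.0:
        lat = 180.0 - lat
        lon += 180.0
    elif lat < -90.0:
        lat = -180.0 - lat
        lon += 180.0
    # Leaving the longitude range: shift by a multiple of 360 deg.
    lon = (lon + 180.0) % 360.0 - 180.0
    return lon, lat


print(wrap_position(10.0, 91.0))
print(clamp_pressure(1100.0, 0.01, 1013.0))
```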

2.2.4 Interpolation and sampling of meteorological data

In MPTRAC, 4-D linear interpolation is applied to sample the meteorological data at any given position and time. Although higher-order interpolation schemes may achieve better accuracy, linear interpolation is considered a standard choice in Lagrangian particle dispersion models (Bowman et al., 2013). The approach requires that two time steps of the meteorological input data are kept in memory at each time. Higher-order interpolation schemes in time would require more time steps of the input data and would therefore require more memory.

Following the memory layout of the data structures used for the meteorological input data in our code and to make efficient use of memory caches, the linear interpolations are conducted first in pressure, followed by latitude and longitude, and finally in time. Interpolations can be conducted for a single variable or all the meteorological data at once. Two separate index look-up functions are implemented for regularly gridded data (longitude and latitude) and for irregularly gridded data (pressure). Interpolation weights are kept in caches to improve efficiency of the calculations.
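The two kinds of index look-up and the order of the interpolation steps can be illustrated with a reduced example that interpolates first in pressure and then in time. This is a sketch only: the function names are hypothetical, the pressure levels are assumed to be sorted in ascending order, the horizontal dimensions are omitted, and no caching of weights is shown.

```python
import bisect


def locate_regular(x0, dx, n, x):
    """Cell index on a regular grid x0, x0 + dx, ... (constant-time look-up)."""
    i = int((x - x0) / dx)
    return min(max(i, 0), n - 2)


def locate_irregular(levels, x):
    """Cell index on an ascending, irregular grid (bisection look-up)."""
    i = bisect.bisect_right(levels, x) - 1
    return min(max(i, 0), len(levels) - 2)


def interp_linear(x0, x1, y0, y1, x):
    """Linear interpolation between (x0, y0) and (x1, y1)."""
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def sample(levels, times, field, p, t):
    """Interpolate field[k][j] (time step k, level j) to pressure p and time t.

    Only the two time steps bracketing t are needed in memory.
    """
    j = locate_irregular(levels, p)
    at_p = [
        interp_linear(levels[j], levels[j + 1], field[k][j], field[k][j + 1], p)
        for k in (0, 1)
    ]
    return interp_linear(times[0], times[1], at_p[0], at_p[1], t)


levels = [100.0, 200.0, 500.0, 1000.0]  # hPa, illustrative values
times = [0.0, 3600.0]                   # s, two time steps in memory
field = [[1.0, 2.0, 5.0, 10.0], [2.0, 4.0, 10.0, 20.0]]
print(sample(levels, times, field, 350.0, 1800.0))
```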

For various studies, it is interesting to investigate the sensitivity of the results with respect to the spatial and temporal resolution of the meteorological input data. For instance, Hoffmann et al. (2019) assessed the sensitivity of Lagrangian transport simulations for the free troposphere and stratosphere with respect to downsampled versions of the ERA5 reanalysis data set. To enable such studies, we implemented an option for spatial downsampling of the meteorological input data. The downsampling is conducted in two steps. In the first step, the full resolution data are smoothed by applying triangular filters in the respective dimensions. Smoothing of the data is required in order to avoid aliasing effects. In the second step, the data are downsampled by keeping only every $n$th grid point in longitude, latitude, and pressure, respectively.
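A one-dimensional sketch of the two-step procedure is given below. The filter half-width is tied to the downsampling stride here, which is an assumption; the text does not specify the filter width, and the function name is hypothetical.

```python
def downsample(values, stride):
    """Smooth with a triangular filter, then keep every stride-th point."""
    n = len(values)
    smoothed = []
    for i in range(n):
        acc = 0.0
        wsum = 0.0
        for k in range(-stride + 1, stride):
            j = i + k
            if 0 <= j < n:
                w = 1.0 - abs(k) / stride  # triangular weights, peak at k = 0
                acc += w * values[j]
                wsum += w
        # Normalizing by the weights actually used handles the grid edges.
        smoothed.append(acc / wsum)
    return smoothed[::stride]


print(downsample([float(i) for i in range(10)], 2))
```

In a 3-D field, the same filter would be applied along each dimension in turn, with a separate stride per dimension.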

Note that downsampling has not been implemented rigorously for the time domain. Nevertheless, the user can specify the time interval at which the meteorological input data should be ingested. If filtering of the downsampled data is required, downsampling in time needs to be done externally by the user by applying tools such as the Climate Data Operators (CDO) (Schulzweida, 2014) to preprocess the input data of different time steps accordingly. In any case, it needs to be carefully considered whether the meteorological input data represent instantaneous or time-averaged diagnostics at the given time steps of the data.

2.3 Chemistry and physics modules

2.3.1 Advection

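The advection of an air parcel, i.e., the position $\mathbf{x}(t)$ at time $t$ for a given wind and velocity field $\mathbf{v}(\mathbf{x}, t)$, is given by the trajectory equation,

$$\frac{d\mathbf{x}}{dt} = \mathbf{v}(\mathbf{x}, t). \tag{1}$$

Here, the position $\mathbf{x}$ is provided in a meteorological coordinate system, $\mathbf{x} = (\lambda, \phi, p)$, with longitude $\lambda$, latitude $\phi$, and pressure $p$. The velocity vector $\mathbf{v} = (u, v, \omega)$ is composed of the zonal wind component $u$, the meridional wind component $v$, and the vertical velocity $\omega$, respectively. Based on its accuracy and computational efficiency for trajectory calculations (Rößler et al., 2018), we apply the explicit midpoint method to solve the trajectory equation,

$$\mathbf{x}(t + \Delta t) = \mathbf{x}(t) + \Delta t \, \mathbf{v}\left(\mathbf{x}(t) + \frac{\Delta t}{2} \mathbf{v}\big(\mathbf{x}(t), t\big),\; t + \frac{\Delta t}{2}\right). \tag{2}$$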
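The explicit midpoint method evaluates the wind once at the start position to find a provisional midpoint, and a second time at that midpoint to take the full step. A generic sketch is given below. It assumes that the hypothetical callback `wind(x, t)` already returns tendencies in the same units as the coordinates, so the conversion from wind speeds to angular tendencies is not shown.

```python
def midpoint_step(x, t, dt, wind):
    """One explicit midpoint step for dx/dt = wind(x, t).

    x is a tuple of coordinates; wind returns a tuple of tendencies.
    """
    v0 = wind(x, t)
    x_mid = tuple(xi + 0.5 * dt * vi for xi, vi in zip(x, v0))
    v_mid = wind(x_mid, t + 0.5 * dt)
    return tuple(xi + dt * vi for xi, vi in zip(x, v_mid))


def rotation(x, t):
    """Illustrative test flow: solid-body rotation in a plane."""
    return (-x[1], x[0])


pos = (1.0, 0.0)
for step in range(100):
    pos = midpoint_step(pos, step * 0.01, 0.01, rotation)
print(pos)
```

The method needs two wind evaluations per step, which is the trade-off against the cheaper but less accurate explicit Euler step.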

The model time step Δt controls the trade-off between the accuracy of the solution and the computational effort. Similar to the Courant–Friedrichs–Lewy (CFL) condition, Δt should, in general, be selected such that the steps remain smaller than the grid box size of the meteorological data. MPTRAC will issue a warning if $\Delta t > R_E \, \Delta\lambda_\mathrm{met} / u_\mathrm{max}$, for a longitudinal grid spacing $\Delta\lambda_\mathrm{met}$ of the meteorological data, the mean radius of Earth $R_E$, and a maximum zonal wind speed $u_\mathrm{max}$. A default time step Δt=180 s has been selected to match the effective horizontal resolution of Δx≈31 km of ECMWF's ERA5 reanalysis.
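The CFL-like criterion can be checked with a few lines of arithmetic: a parcel moving at the maximum zonal wind speed should not cross more than one grid box per time step. In the sketch below, the function name, the grid spacing of 0.3°, and the maximum wind speed of 150 m s$^{-1}$ are assumed example values chosen for illustration, not settings confirmed by the text.

```python
import math

RE_M = 6367.421e3  # radius of Earth from Sect. 2.2.1, in metres


def max_time_step(dlon_deg, u_max):
    """Largest time step (s) for which u_max * dt stays within one grid box."""
    return RE_M * math.radians(dlon_deg) / u_max


dt = 180.0                              # default model time step (s)
dt_limit = max_time_step(0.3, 150.0)    # assumed example values
if dt > dt_limit:
    print("warning: time step exceeds the CFL-like limit")
print(dt_limit)
```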

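Calculations of the numerical solution of the trajectory equation require coordinate transformations between Cartesian coordinate distances $(\Delta x, \Delta y, \Delta z)$ and spherical coordinate distances $(\Delta\lambda, \Delta\phi, \Delta p)$,

$$\Delta\lambda = \frac{\Delta x}{R_E \cos\phi}, \qquad \Delta\phi = \frac{\Delta y}{R_E}, \qquad \Delta p = -\frac{p}{H_0}\,\Delta z. \tag{3}$$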

The vertical coordinate transformation between $\Delta p$ and $\Delta z$ uses an average scale height of $H_0 = 7$ km. However, note that the vertical transformation is currently not needed to solve Eq. (2), because the vertical velocity is already provided in terms of pressure change, $\omega = dp/dt$, in the meteorological data. The transformation is reported here for completeness, as it is required in other parts of the model. For convenience, we assume $\lambda$ and $\phi$ to be given in radians; i.e., scaling factors of $\pi/180°$ and $180°/\pi$ to convert from degrees to radians, and vice versa, do not need to be introduced here.

The coordinate transformation between Δλ and Δx in Eq. (3) bears numerical problems due to the singularities near the poles. Although this problem might be solved by means of a change to a more suitable coordinate system at high latitudes, we implemented a rather simple solution. Near the poles, for $|\phi| > \phi_\mathrm{max}$, we discard the longitudinal distance Δλ calculated from Eq. (3) and use Δλ=0 instead. The high-latitude threshold was set to $\phi_\mathrm{max} = 89.999°$, a distance of about 110 m from the poles, following an assessment of its effect on trajectory calculations conducted by Rößler (2015).
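The transformations and the high-latitude threshold can be sketched as follows. The sketch assumes the standard relations on a sphere, $\Delta\lambda = \Delta x / (R_E \cos\phi)$, $\Delta\phi = \Delta y / R_E$, and $\Delta p = -p \, \Delta z / H_0$; the function names are hypothetical, and angles are handled in degrees for readability.

```python
import math

RE_KM = 6367.421       # radius of Earth (km)
H0_KM = 7.0            # average scale height (km)
PHI_MAX_DEG = 89.999   # high-latitude threshold (deg)


def dx_to_dlon(dx_km, lat_deg):
    """Zonal distance (km) to longitude increment (deg); zero near the poles."""
    if abs(lat_deg) > PHI_MAX_DEG:
        return 0.0
    return math.degrees(dx_km / (RE_KM * math.cos(math.radians(lat_deg))))


def dy_to_dlat(dy_km):
    """Meridional distance (km) to latitude increment (deg)."""
    return math.degrees(dy_km / RE_KM)


def dz_to_dp(dz_km, p_hpa):
    """Vertical distance (km) to pressure increment (hPa) at pressure p."""
    return -dz_km * p_hpa / H0_KM


print(dx_to_dlon(31.0, 0.0), dx_to_dlon(31.0, 60.0), dx_to_dlon(31.0, 89.9995))
```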

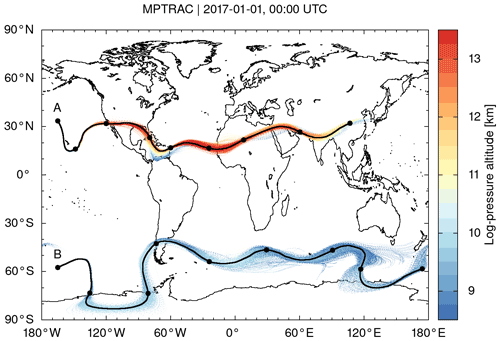

As an example, Fig. 2 shows the results of kinematic trajectory calculations for particles located in the vicinity of the Northern Hemisphere subtropical jet (case A) and the Southern Hemisphere polar jet (case B) in January 2017 using ERA5 data for input. The trajectories were launched at (33.5° N, 165° W) at 11.25 km log-pressure altitude for the subtropical jet and at (57.5° S, 165° W) at 8.5 km of log-pressure altitude for the polar jet on 1 January 2017, 00:00 UTC, respectively. The trajectory seeds are located close to the horizontal wind speed maxima at 165° W in the upper troposphere and lower stratosphere at the given time. In both cases, the trajectories nearly circle the Earth during the time period of 8 d covered by the trajectory calculations. Significant horizontal meandering and height oscillations with the jet streams are revealed. The trajectories were calculated with the explicit midpoint method and a default integration time step of 180 s. Varying the time step between 45, 90, 180, 360, and 720 s did not reveal any significant deviations of the results (not shown).

Figure 2. Trajectory calculations for the Northern Hemisphere subtropical jet (case A) and the Southern Hemisphere polar jet (case B) from 1 January 2017, 00:00 UTC, to 9 January 2017, 00:00 UTC. Trajectories were launched at positions A and B at log-pressure altitudes of 11.25 and 8.5 km, respectively. Calculations used hourly ERA5 horizontal winds and vertical velocity data for input. Black curves show trajectories calculated without diffusion. Black dots indicate 24 h time intervals. Colored dots show calculations for two sets of 1000 trajectories for cases A and B with turbulent diffusion and subgrid-scale wind fluctuations being considered.

2.3.2 Turbulent diffusion

Rather complex parametrizations of atmospheric diffusivity are available for the planetary boundary layer (e.g., Draxler and Hess, 1997; Stohl et al., 2005), which have not been implemented in MPTRAC, so far. Much less is known about the diffusivities in the free troposphere and in the stratosphere, which are in the scope of our model. In MPTRAC, the effects of atmospheric diffusion are simulated by adding stochastic perturbations to the positions x of the air parcels at each time step Δt of the model,

This method requires a vector ξ of random variates to be drawn from the standard normal distribution for each air parcel at each time step. The vector of diffusivities D is composed of the horizontal diffusivity Dx and the vertical diffusivity Dz. The model allows us to specify Dx and Dz separately for the troposphere and stratosphere as control parameters. Following the choices made for the FLEXPART model (Stohl et al., 2005), default values of Dx=50 m2 s−1 and Dz=0 are selected for the troposphere, and Dx=0 and Dz=0.1 m2 s−1 are selected for the stratosphere. Diffusivities will therefore change if an air parcel crosses the tropopause. A smooth transition between tropospheric and stratospheric diffusivities is created by linear interpolation of Dx and Dz within a ±1 km log-pressure altitude range around the tropopause.
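The diffusion step can be sketched in Python as follows. The sketch assumes the common random-walk form, in which the displacement equals sqrt(2 D Δt) times a standard normal variate, and a linear blend of the diffusivities within ±1 km of the tropopause. All function and parameter names are hypothetical and do not correspond to identifiers in the MPTRAC code.

```python
import math
import random


def diffusivity(z, z_trop, d_trop, d_strat, half_width=1.0):
    """Blend tropospheric and stratospheric diffusivity (m^2/s) linearly within
    +/- half_width (km, log-pressure altitude) around the tropopause z_trop."""
    w = (z - (z_trop - half_width)) / (2.0 * half_width)
    w = min(1.0, max(0.0, w))  # 0 = pure troposphere, 1 = pure stratosphere
    return (1.0 - w) * d_trop + w * d_strat


def diffusion_step(d, dt, rng=random):
    """Random displacement (m) for diffusivity d (m^2/s) and time step dt (s)."""
    return math.sqrt(2.0 * d * dt) * rng.gauss(0.0, 1.0)
```

With this form, the variance of the displacement per time step is 2 D Δt, so the accumulated spread of an ensemble grows linearly with time for constant D.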

2.3.3 Subgrid-scale wind fluctuations

In addition to turbulent diffusion, the effects of unresolved subgrid-scale winds, also referred to as mesoscale wind perturbations, are considered. The starting point for this approach is the separation of the horizontal wind and vertical velocity vector v into the grid-scale mean v̄ and the subgrid-scale perturbations v′,

$$\mathbf{v} = \bar{\mathbf{v}} + \mathbf{v}' .$$

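It is further assumed that the mean wind v̄ is given by the coarse-grid meteorological data, whereas the wind perturbations v′ need to be parameterized. The subgrid-scale wind perturbations are calculated by means of the Langevin equation,

$$v_i'(t+\Delta t) = r\,v_i'(t) + \sqrt{(1-r^2)\,(f\sigma_i)^2}\;\xi_i, \qquad r = 1 - \frac{2\,\Delta t}{\Delta t_\mathrm{met}} .$$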

From a mathematical point of view, this is a Markov chain or a random walk, which adds temporally correlated stochastic perturbations to the winds over time. The degree of correlation depends on the correlation coefficient r, and therefore on the time step Δt of the model and the time step Δtmet of the meteorological data. The variance (fσi)2 of the random component added at each time step depends on the grid-scale variance of the horizontal wind and vertical velocity data and a scaling factor f, which is used for downscaling to the subgrid scale. The scaling factor f needs to be specified as a control parameter of the model. The default value is f=40 %, following a choice made for the FLEXPART model (Stohl et al., 2005). For each air parcel, the grid-scale variance is calculated from the neighboring eight grid boxes and two time steps of the meteorological data. To make computations more efficient, the grid-scale variance of each parcel is kept in a cache. As before, ξ is a vector of random variates to be drawn from the standard normal distribution.
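The temporally correlated perturbations described above can be sketched as a first-order autoregressive update. The sketch assumes a correlation coefficient of the form r = 1 − 2Δt/Δtmet and a random component with standard deviation sqrt(1 − r²) f σ; both are assumptions chosen to be consistent with the description, and the function names are hypothetical.

```python
import math
import random


def correlation(dt, dt_met):
    """Assumed correlation coefficient, r = 1 - 2 * dt / dt_met."""
    return 1.0 - 2.0 * abs(dt) / dt_met


def update_perturbation(v_prime, sigma, f, dt, dt_met, rng=random):
    """Advance one wind perturbation component by one model time step.

    v_prime: current perturbation (m/s), sigma: grid-scale standard
    deviation (m/s), f: scaling factor for downscaling to the subgrid scale.
    """
    r = correlation(dt, dt_met)
    noise = math.sqrt(1.0 - r * r) * f * sigma * rng.gauss(0.0, 1.0)
    return r * v_prime + noise
```

With this choice of the noise amplitude, the stationary variance of the perturbations equals (fσ)² regardless of the time step, which is the main reason for the sqrt(1 − r²) factor.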

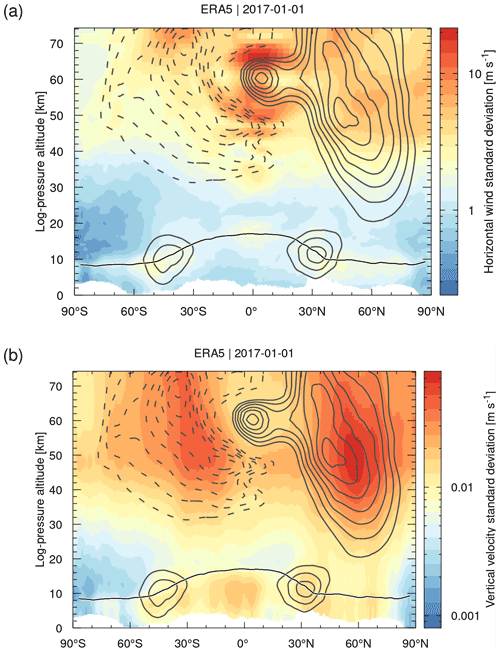

Figure 3 shows zonal means of the grid-scale standard deviations of the horizontal wind and the vertical velocity of ERA5 data for 1 January 2017. Although they are treated separately in the parametrization, we here combined the zonal and meridional wind components for convenience, noting that the standard deviations of the horizontal wind are largely dominated by the zonal wind component. The grid-scale standard deviations of the horizontal wind become as large as 20 m s−1 in the tropical mesosphere. The vertical velocity standard deviations are largest (up to 0.08 m s−1) at the Northern Hemisphere midlatitude stratopause. Standard deviations are generally much lower in the troposphere and the lower stratosphere. However, some pronounced features of the horizontal wind (up to 3 m s−1) are found at the tropopause in the tropics and at northern and southern midlatitudes and for the vertical velocities (up to 0.02 m s−1) in the tropical and midlatitude upper troposphere. The parameterized subgrid-scale wind fluctuations vary significantly with time and location and the atmospheric background conditions.

Figure 3. The contour surfaces show the zonal mean standard deviations of (a) the grid-scale horizontal wind and (b) the grid-scale vertical velocity on 1 January 2017 as calculated by MPTRAC from ERA5 data. Gray curves show positive (solid) and negative (dashed) zonal mean zonal wind at levels of ±20, 30, 40, 50, 60, 70, 80 m s−1, respectively. The black curve shows the zonal mean dynamical tropopause.

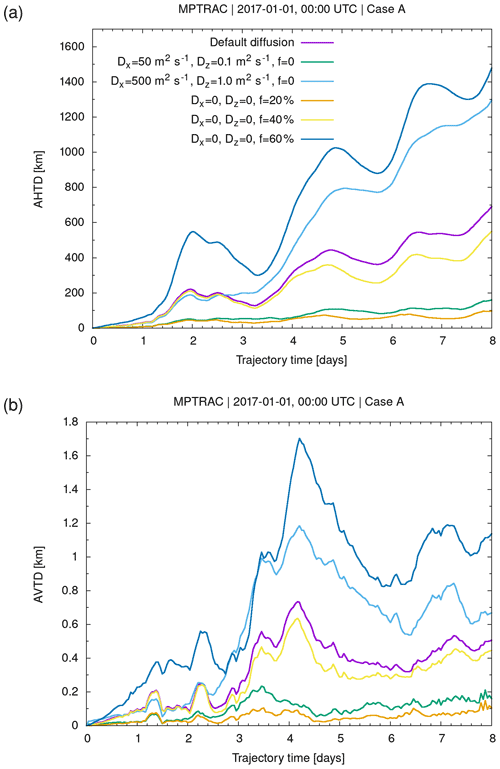

Figure 2 illustrates the effects of the simulated turbulent diffusion and the subgrid-scale winds on the trajectories calculated for the jet streams. Sets of 1000 particles were released at the same starting point for both cases. The trajectory sets reveal significant horizontal and vertical spread over time. The spread mostly increases in regions where the jets are meandering and where notable horizontal and vertical wind shears are present. We calculated absolute horizontal and vertical transport deviations (AHTDs and AVTDs; see Sect. 2.4.3) in order to quantify the effects of simulated diffusion and subgrid-scale wind fluctuations on the jet stream trajectory calculations. Results for case A, the Northern Hemisphere subtropical jet, are shown in Fig. 4. The analysis of the transport deviations showed that the subgrid-scale wind fluctuations were the major source of the spread of the particles. After 8 d of trajectory time, the AHTDs and AVTDs due to the subgrid-scale winds alone (i.e., Dx=Dz=0 and f=40 %) were about 550 km and 450 m, respectively. In contrast, the corresponding transport deviations due to the constant diffusivities only (i.e., f=0, Dx=50 m2 s−1 in the troposphere, and Dz=0.1 m2 s−1 in the stratosphere) were about 150 km for the AHTDs and 200 m for the AVTDs.

Figure 4. Absolute horizontal transport deviations (AHTDs, a) and absolute vertical transport deviations (AVTDs, b) between trajectory calculations with and without simulated diffusion and subgrid-scale winds. Statistics refer to case A presented in Fig. 2. The simulations use different parameter choices of the diffusivities Dx and Dz and the scaling factor f for the subgrid-scale variances (see plot key). Default parameters (purple curve) are Dx=50 m2 s−1 in the troposphere, Dz=0.1 m2 s−1 in the stratosphere, and f=40 %.

Note that several studies with Lagrangian models provided estimates of atmospheric diffusivities (Legras et al., 2003, 2005; Pisso et al., 2009), but the data required for the free troposphere and stratosphere for the parametrizations are typically still not well constrained. In addition, in earlier work, we found that the parametrizations of diffusion and subgrid-scale winds applied here may yield different particle spread for different meteorological data sets, even if the same parameter choices are applied (Hoffmann et al., 2017). As an example, Fig. 4 shows results of sensitivity tests on the parameter choices for the diffusion and subgrid-scale wind parametrizations. The parameter choices may need careful tuning for each individual meteorological data set. However, this also opens the possibility for potentially interesting considerations regarding sensitivities to the relative importance of diffusion and subgrid wind fluctuations.

2.3.4 Convection

The spatial resolution of global meteorological input data is often too coarse to allow for explicit representation of convective up- and downdrafts. Although the downdrafts may occur on larger horizontal scales, the updrafts are usually confined to horizontal scales below a few kilometers. Furthermore, convection occurs on timescales of a few hours; i.e., hourly ERA5 data may better represent convection than 6-hourly ERA-Interim data. A parametrization to better represent unresolved convective up- and downdrafts in global simulations was implemented in MPTRAC, which is similar to the convection parametrization implemented in the Hybrid Single-Particle Lagrangian Integrated Trajectory (HYSPLIT) and Stochastic Time-Inverted Lagrangian Transport (STILT) models (Draxler and Hess, 1997; Gerbig et al., 2003). In the present study, the convection parametrization was applied for synthetic tracer simulations as discussed in Sect. 3.3.

The convection parametrization requires as input the convective available potential energy (CAPE) and the equilibrium level from the meteorological data. These data need to be interpolated to the horizontal position of each air parcel. If the interpolated CAPE value is larger than a threshold CAPE0, which is an important control parameter of the parametrization, it is assumed that the up- and downdrafts within the convective cloud are strong enough to yield a well-mixed vertical column. The parametrization will randomly redistribute the air parcels in the vertical column between the surface and the equilibrium level, which is taken as an estimate of the cloud-top height. In order to achieve a well-mixed column, the random distribution of the particles needs to be vertically weighted by air density.

The globally applied threshold CAPE0 can be set to a value near zero, implying that convection will always take place everywhere below the equilibrium level. This approach is referred to as the “extreme convection method”. It will provide an upper limit to the effects of unresolved convection. On the contrary, completely switching off the convection parametrization will provide a lower limit for the effects of unresolved convection. Intermediate states can be simulated by selecting other specific values of the threshold CAPE0, which is an important tuning parameter of the parametrization. The Supplement of this paper provides some guidance on how different choices of CAPE0 can affect the simulated convection.
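A minimal Python sketch of the redistribution step is given below. It assumes hydrostatic balance, under which the air mass between two levels is proportional to their pressure difference, so that a density-weighted random position corresponds to a pressure drawn uniformly between the surface and the equilibrium level. The function and argument names are hypothetical.

```python
import random


def convective_mixing(p, cape, p_surf, p_el, cape0, rng=random):
    """Return the parcel pressure (hPa) after the convection step.

    p: parcel pressure, cape: interpolated CAPE (J/kg), p_surf: surface
    pressure, p_el: pressure at the equilibrium level, cape0: threshold.
    """
    if cape > cape0 and p_el <= p <= p_surf:
        # Uniform in pressure = uniform in mass for a hydrostatic column.
        return p_el + (p_surf - p_el) * rng.random()
    return p  # no convection, or parcel located above the equilibrium level
```

Setting `cape0` close to zero mimics the extreme convection method, whereas a very large `cape0` effectively switches the parametrization off.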

The extreme convection parametrization implemented here is arguably a rather simple approach, as it relies only on CAPE and the equilibrium level from the meteorological input data. Nevertheless, first tests showed that this parametrization significantly improves transport patterns in the free troposphere. We also conducted experiments to further improve the parametrization by considering additional parameters such as the convective inhibition (CIN) to improve the onset of convection. More sophisticated convection parametrizations have been developed and implemented in Lagrangian transport models during recent years (Forster et al., 2007; Brinkop and Jöckel, 2019; Konopka et al., 2019; Wohltmann et al., 2019). These parametrizations consider additional meteorological variables such as convective mass fluxes and detrainment rates that originate from external convective parameterizations applied in state-of-the-art forecasting models. Future work may look at evaluating the extreme convection parametrization and implementing more advanced convection parametrizations in MPTRAC.

2.3.5 Sedimentation

In order to take into account the gravitational settling of particles, the sedimentation velocity vs needs to be calculated. Once vs is known, it can be used to calculate the change of the vertical position of the particles over the model time step Δt. In MPTRAC, vs is calculated for spherical particles following the method described by Jacobson (1999). In the first step, we calculate the density ρ of dry air,

$$\rho = \frac{p}{R\,T},$$

from pressure p, temperature T, and the specific gas constant R of dry air. Next, the dynamic viscosity of air,

$$\eta = 1.8325\times10^{-5}\,\mathrm{kg\,m^{-1}\,s^{-1}}\;\frac{416.16\,\mathrm{K}}{T+120\,\mathrm{K}}\left(\frac{T}{296.16\,\mathrm{K}}\right)^{3/2},$$

and the thermal velocity of an air molecule,

$$\bar{v} = \sqrt{\frac{8\,R\,T}{\pi}},$$

are used to calculate the mean free path of an air molecule,

$$\lambda = \frac{2\,\eta}{\rho\,\bar{v}},$$

as well as the Knudsen number for air,

$$Kn = \frac{\lambda}{r_p},$$

where rp refers to the particle radius. The Cunningham slip-flow correction is calculated from

$$G = 1 + Kn\left[1.249 + 0.42\,\exp\left(-\frac{0.87}{Kn}\right)\right].$$

Finally, the sedimentation velocity is obtained by means of Stokes' law and from the slip-flow correction,

$$v_s = \frac{2\,r_p^2\,g\,(\rho_p-\rho)}{9\,\eta}\,G, \tag{16}$$

with particle density ρp and conventional standard gravitational acceleration g. Note that rp and ρp can be specified individually for each air parcel. A larger set of parcels can be used to represent a size distribution of aerosol or cloud particles.
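The chain of calculations can be sketched in Python as follows. The numerical coefficients for the viscosity and the slip-flow correction are the standard values given by Jacobson (1999) and are stated here as assumptions; the function name and the SI unit conventions are choices made for this sketch only.

```python
import math

R_DRY = 287.058  # specific gas constant of dry air, J kg^-1 K^-1
G0 = 9.80665     # standard gravitational acceleration, m s^-2


def sedimentation_velocity(p, T, r_p, rho_p):
    """Sedimentation velocity (m/s) of a spherical particle.

    p: pressure (Pa), T: temperature (K), r_p: particle radius (m),
    rho_p: particle density (kg/m^3).
    """
    rho = p / (R_DRY * T)                                     # density of dry air
    eta = 1.8325e-5 * (416.16 / (T + 120.0)) * (T / 296.16) ** 1.5  # viscosity
    v_th = math.sqrt(8.0 * R_DRY * T / math.pi)               # thermal velocity
    lam = 2.0 * eta / (rho * v_th)                            # mean free path
    kn = lam / r_p                                            # Knudsen number
    g = 1.0 + kn * (1.249 + 0.42 * math.exp(-0.87 / kn))      # slip-flow correction
    return 2.0 * r_p ** 2 * G0 * (rho_p - rho) * g / (9.0 * eta)
```

For example, `sedimentation_velocity(1.0e5, 288.0, 5.0e-6, 1000.0)` yields a velocity of a few millimeters per second for a particle of 10 µm diameter near the surface; the velocity increases at lower pressure because the slip-flow correction grows with the Knudsen number.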

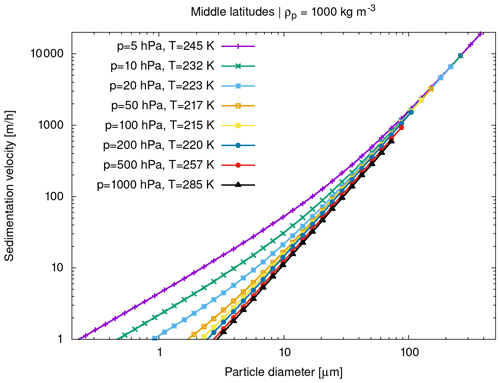

Figure 5 shows sedimentation velocities calculated by Eq. (16) for different particle diameters as well as pressure levels and mean temperatures at midlatitude atmospheric conditions. A standard particle density of ρp=1000 kg m−3 was considered. The sedimentation velocity vs scales nearly linearly with ρp; i.e., increasing ρp by a factor of 2 will also increase vs by nearly the same factor. Note that the overall sensitivity to temperature T is much weaker than the sensitivity to pressure p (not shown). Sedimentation velocities are shown here up to particle Reynolds number Rep≤1, for which vs is expected to have accuracies better than 10 % (Hesketh, 1996). Larger particles will require additional corrections, which have not been implemented.

2.3.6 Wet deposition

Wet deposition causes the removal of trace gases and aerosol particles from the atmosphere within or below clouds by mixing with suspended water and subsequent washout through rain, snow, or fog. In the first step, it is determined whether an air parcel is located below a cloud top. The cloud-top pressure pct is determined from the meteorological data as the highest vertical level where cloud water or ice (i.e., CLWC, CRWC, CIWC, or CSWC) is present. For numerical efficiency, the level pct is inferred at the time when the meteorological input data are processed. During the model run, pct can therefore be determined quickly by interpolation from the preprocessed input data. Likewise, the cloud-bottom pressure pcb is determined quickly from precalculated data.

In the second step, the wet deposition parametrization determines an estimate of the subgrid-scale precipitation rate Is, which is needed to calculate the scavenging coefficient Λ. We estimated Is (in units of mm h−1) from the total column cloud water cl (in units of kg m−2) by means of a correlation function reported by Pisso et al. (2019), which is based on a regression analysis using existing cloud and precipitation data. At the same time as determining pct and pcb, the total column cloud water cl is calculated by vertically integrating the cloud water content data when the meteorological input data are processed. During the model run, cl can therefore also be determined quickly by horizontal interpolation of the preprocessed input data to the locations of the air parcels. For efficiency, the parametrization of wet deposition is not evaluated further if Is falls below a lower limit.

In the third step, it is inferred whether the air parcel is located within or below the cloud because scavenging coefficients will be different under these conditions. The position of the air parcel within or below the cloud is determined by interpolating the cloud water content to the position of the air parcel and by testing whether the interpolated values are larger than zero.

In the fourth step, the scavenging coefficient Λ is calculated based on the precipitation rate Is,

$$\Lambda = a\,I_s^{\,b} .$$

For aerosol particles, the constants a and b need to be specified as control parameters for the “within-cloud” and “below-cloud” cases, respectively. Typical values, such as b=0.79, are used in the HYSPLIT and Next-Generation Atmospheric Dispersion Model (NAME) models (Draxler and Hess, 1997; Webster and Thomson, 2014). For gases, wet deposition depends upon their solubility and the scavenging coefficient can be calculated from

$$\Lambda = \frac{H\,R\,T\,I_s}{\Delta z_c},$$

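where H is Henry's law constant, R is the universal gas constant, and Δzc is the depth of the cloud layer, which we calculate from pct and pcb. Henry's law constant is obtained from

$$H(T) = H^\ominus \exp\left[-\frac{\Delta_\mathrm{sol}H}{R}\left(\frac{1}{T} - \frac{1}{T^\ominus}\right)\right].$$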

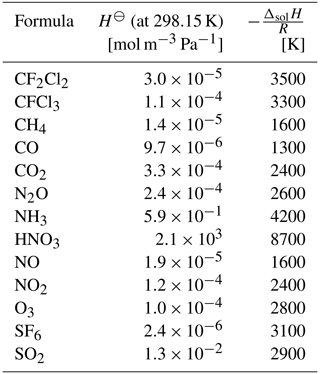

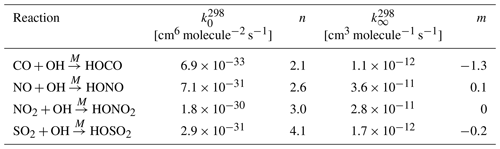

The constants H⊖ and −ΔsolH/R, with the enthalpy of dissolution ΔsolH, at the reference temperature T⊖=298.15 K need to be specified as control parameters. Values for a wide range of species are tabulated by Sander (2015). The values of selected species of interest are summarized in Table 1 and included as default parameters in MPTRAC.

Finally, once the scavenging coefficient Λ for the gases or aerosol particles within or below a cloud is determined, an exponential loss of mass m over the time step Δt is calculated,

$$m(t+\Delta t) = m(t)\,\exp\left(-\Lambda\,\Delta t\right).$$
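The steps of the wet deposition parametrization that follow the estimate of Is can be sketched in Python. The sketch assumes a power law Λ = a Is^b for aerosol particles, the form Λ = H R T Is / Δzc for gases, the temperature dependence of Henry's law constant in the convention of Sander (2015), and an exponential mass loss. The unit conventions and all names are assumptions of this sketch, and no default coefficients are implied.

```python
import math

R_GAS = 8.314462618  # universal gas constant, J mol^-1 K^-1
T_REF = 298.15       # reference temperature, K


def scavenging_aerosol(i_s, a, b):
    """Scavenging coefficient (1/s) from precipitation rate i_s (mm/h)."""
    return a * i_s ** b


def henry_constant(T, h_ref, coef):
    """Henry's law constant at temperature T (K).

    h_ref: value at T_REF, coef: -Delta_sol H / R in K.
    """
    return h_ref * math.exp(coef * (1.0 / T - 1.0 / T_REF))


def scavenging_gas(i_s, T, h, dz_c):
    """Scavenging coefficient (1/s) for a gas.

    i_s: precipitation rate (mm/h), h: Henry's law constant
    (mol m^-3 Pa^-1), dz_c: depth of the cloud layer (m).
    """
    i_s_si = i_s / 3.6e6  # convert mm/h to m/s
    return h * R_GAS * T * i_s_si / dz_c


def wet_deposition(mass, lam, dt):
    """Mass remaining after one time step dt (s) with scavenging coefficient lam."""
    return mass * math.exp(-lam * dt)
```

A caller would select the within-cloud or below-cloud constants first, compute the scavenging coefficient, and then apply `wet_deposition` once per time step and air parcel.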

2.3.7 Dry deposition

Dry deposition leads to a loss of mass of aerosol particles or trace gases by gravitational settling or chemical and physical interactions with the surface in the dry phase. In the parametrization implemented in MPTRAC, dry deposition is calculated for air parcels located in the lowermost Δp=30 hPa layer above the surface. This corresponds to a layer width of Δz≈200 m at standard conditions.

For aerosol particles, the deposition velocity vdep will be calculated as described in Sect. 2.3.5 as a function of surface pressure p and temperature T as well as particle radius rp and particle density ρp. For trace gases, the deposition velocity vdep needs to be specified as a control parameter. Currently, this parameter is set to a constant value across the globe for each trace gas. For future applications with a stronger focus on the boundary layer, vdep will need to vary geographically to account for dependence on the surface characteristics and atmospheric conditions.

For both particles and gases, we calculate the loss of mass based on the deposition velocity vdep, the model time step Δt, and the surface layer width Δz from

$$m(t+\Delta t) = m(t)\,\exp\left(-\frac{\Delta t\,v_\mathrm{dep}}{\Delta z}\right).$$
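As a minimal sketch, the dry deposition step can be written in Python as shown below, assuming an exponential loss of mass with rate vdep/Δz for parcels in the lowermost 30 hPa. The function names and default values are illustrative.

```python
import math


def in_surface_layer(p, p_surf, dp=30.0):
    """True if the parcel pressure p (hPa) lies within dp above the surface."""
    return p >= p_surf - dp


def dry_deposition(mass, v_dep, dt, dz=200.0):
    """Mass remaining after time step dt (s) for deposition velocity
    v_dep (m/s) and surface layer width dz (m)."""
    return mass * math.exp(-v_dep * dt / dz)
```

For a deposition velocity of 1 cm s−1 and Δz=200 m, the e-folding time of the mass within the surface layer is 2×10^4 s, i.e., about 5.6 h.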

Note that both the dry deposition and the wet deposition parametrization were implemented only very recently and need further testing and evaluation in future work. They will also have to be compared to more advanced parametrizations (e.g., Webster and Thomson, 2011, 2014). The parametrizations are described here for completeness, but they were not included in the model verification and performance analysis in Sect. 3.

2.3.8 Hydroxyl chemistry

In this section, we discuss the MPTRAC module that is used to simulate the loss of mass of a chemical species by means of reaction with the hydroxyl radical. The hydroxyl radical (OH) is an important oxidant in the atmosphere, causing the decomposition of many gas-phase species. The oxidation of different gas-phase species with OH can be classified into two main categories, bimolecular reactions (e.g., reactions of CH4 or NH3), and termolecular reactions (e.g., CO or SO2).

For bimolecular reactions, the rate constant is calculated from the Arrhenius law,

$$k(T) = A\,\exp\left(-\frac{E}{R\,T}\right), \tag{23}$$

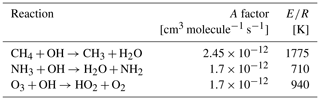

where A refers to the Arrhenius factor, E to the activation energy, and R to the universal gas constant. For bimolecular reactions, the Arrhenius factor A and the ratio E/R need to be specified as control parameters. The reaction rate coefficients for various gas-phase species can be found in the Jet Propulsion Laboratory (JPL) data evaluation (Burkholder et al., 2019). Table 2 lists bimolecular reaction rate coefficients for some species of interest, which were also implemented as default values directly into MPTRAC.
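The Arrhenius law can be evaluated directly from the two control parameters. In the following Python sketch, the function name and the argument `e_over_r` (the ratio E/R in K) are hypothetical, and the numbers in the usage example are placeholders rather than evaluated rate coefficients.

```python
import math


def k_bimolecular(T, a, e_over_r):
    """Bimolecular rate constant k(T) = A * exp(-(E/R) / T).

    T: temperature (K), a: Arrhenius factor (cm^3 molecule^-1 s^-1),
    e_over_r: ratio of activation energy and gas constant (K).
    """
    return a * math.exp(-e_over_r / T)


# Placeholder values for illustration only, not taken from any data evaluation.
k_example = k_bimolecular(250.0, 2.0e-12, 1500.0)
```

Specifying E/R rather than E avoids any ambiguity about the units of the gas constant and matches the way such coefficients are commonly tabulated.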

Table 2. Bimolecular reaction rate coefficients for the hydroxyl radical. See Burkholder et al. (2019) for reference.

Termolecular reactions require an inert component M (e.g., N2 or O2) to stabilize the excited intermediate state. Therefore, the reaction rate shows a temperature and pressure dependence and changes smoothly between the low-pressure-limiting rate k0 and high-pressure-limiting rate k∞. Based on the molecular density of air,

$$[M] = \frac{N_A\,p}{R\,T},$$

with Avogadro constant NA, the effective second-order rate constant k is calculated from

$$k = \frac{k_0\,[M]}{1 + k_0\,[M]/k_\infty}\; 0.6^{\left\{1 + \left[\log_{10}\left(k_0\,[M]/k_\infty\right)\right]^2\right\}^{-1}} . \tag{25}$$

The low- and high-pressure limits of the reaction rate constant are given by

$$k_0 = k_0^{298}\left(\frac{T}{298\,\mathrm{K}}\right)^{-n}, \qquad k_\infty = k_\infty^{298}\left(\frac{T}{298\,\mathrm{K}}\right)^{-m} .$$

The constants k0^298 and k∞^298 at the reference temperature of 298 K and the exponents n and m need to be specified as control parameters. The exponents can be set to zero in order to neglect the temperature dependence of the low- or high-pressure limits of k0 and k∞. The termolecular reaction rate coefficients implemented directly into MPTRAC are listed in Table 3.

Table 3. Termolecular reaction rate coefficients for the hydroxyl radical. See Burkholder et al. (2019) for reference.

Based on the bimolecular reaction rate k=k(T) from Eq. (23) or the termolecular reaction rate from Eq. (25), the loss of mass of the gas-phase species over time is calculated from

$$m(t+\Delta t) = m(t)\,\exp\left(-k\,[\mathrm{OH}]\,\Delta t\right).$$

The hydroxyl radical concentrations [OH] are obtained by bilinear interpolation from the monthly mean zonal mean climatology of Pommrich et al. (2014). This approach is suitable for global simulations covering at least several days, as hydroxyl concentrations may vary significantly between day- and nighttime as well as with the local atmospheric composition.
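The termolecular rate constant and the resulting loss of mass can be sketched in Python. The sketch assumes the pressure-dependent fall-off expression with a broadening factor of 0.6 as used in the JPL data evaluations, power-law temperature dependencies of k0 and k∞ relative to 298 K, and an exponential loss with rate k[OH]. Function names and unit conventions are assumptions, and the values in the test case are placeholders.

```python
import math

N_A = 6.02214076e23  # Avogadro constant, mol^-1
R_GAS = 8.314462618  # universal gas constant, J mol^-1 K^-1


def molecular_density(p, T):
    """Molecular density of air (molecules cm^-3) from p (Pa) and T (K)."""
    return N_A * p / (R_GAS * T) * 1.0e-6


def k_termolecular(T, m_air, k0_298, n, kinf_298, m):
    """Effective second-order rate constant (cm^3 molecule^-1 s^-1)."""
    k0 = k0_298 * (T / 298.0) ** (-n)      # low-pressure limit
    kinf = kinf_298 * (T / 298.0) ** (-m)  # high-pressure limit
    x = k0 * m_air / kinf
    return k0 * m_air / (1.0 + x) * 0.6 ** (1.0 / (1.0 + math.log10(x) ** 2))


def oh_loss(mass, k, oh, dt):
    """Mass remaining after time step dt (s) for OH concentration oh
    (molecules cm^-3) and rate constant k (cm^3 molecule^-1 s^-1)."""
    return mass * math.exp(-k * oh * dt)
```

A useful consistency check is the case k0[M]=k∞, for which the expression reduces to 0.3 k∞.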

2.3.9 Exponential decay

A rather generic module was implemented in MPTRAC to simulate the loss of mass of an air parcel over a model time step Δt due to any kind of exponential decay process, e.g., chemical loss or radioactivity,

$$m(t+\Delta t) = m(t)\,\exp\left(-\frac{\Delta t}{t_e}\right).$$

The e-folding lifetime te of the species needs to be specified as a control parameter. As typical lifetimes may differ, we implemented an option to specify separate lifetimes for the troposphere and stratosphere. A smooth transition between the tropospheric and stratospheric lifetime is created within a ±1 km log-pressure altitude range around the tropopause.
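A minimal Python sketch of the decay module is shown below. It assumes that the transition between the tropospheric and stratospheric lifetime is a linear blend in log-pressure altitude, analogous to the treatment of the diffusivities; the text only states that the transition is smooth, so this is an assumption. All names are hypothetical.

```python
import math


def lifetime(z, z_trop, te_trop, te_strat, half_width=1.0):
    """E-folding lifetime (s) at log-pressure altitude z (km), blended
    linearly within +/- half_width (km) around the tropopause z_trop."""
    w = (z - (z_trop - half_width)) / (2.0 * half_width)
    w = min(1.0, max(0.0, w))  # 0 = troposphere, 1 = stratosphere
    return (1.0 - w) * te_trop + w * te_strat


def decay(mass, te, dt):
    """Mass remaining after time step dt (s) for e-folding lifetime te (s)."""
    return mass * math.exp(-dt / te)
```

Applying `decay` repeatedly with a fixed lifetime is equivalent to a single application over the total elapsed time, so the result does not depend on the model time step.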

2.3.10 Boundary conditions

Finally, a module was implemented to impose constant boundary conditions on particle mass or volume mixing ratio. At each time step of the model, all particles that are located within a given latitude and pressure range can be assigned a constant mass or volume mixing ratio as defined by a control parameter of the model. Next to specifying fixed pressure ranges in the free troposphere, stratosphere, or mesosphere to define the boundary conditions, it is also possible to specify a fixed pressure range with respect to the surface pressure, in order to define a near-surface layer.

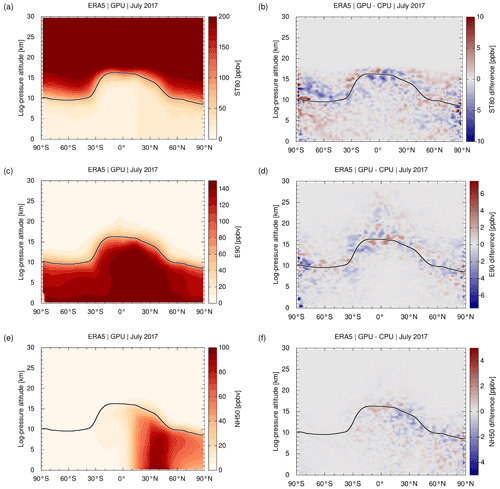

The boundary condition module can be used to conduct synthetic tracer simulations. For example, the synthetic tracer E90 (Prather et al., 2011; Abalos et al., 2017) is emitted uniformly at the surface and has a constant e-folding lifetime of 90 d throughout the entire atmosphere. In our study, we implemented this by assigning a constant volume mixing ratio of 150 ppb to all air parcels residing in the lowermost 30 hPa pressure range above the surface. With its 90 d lifetime, the tracer E90 quickly becomes well mixed in the troposphere. However, the lifetime is much shorter than typical timescales of stratospheric transport. The tracer E90 therefore exhibits sharp gradients across the tropopause. Being a passive tracer in the upper troposphere and lower stratosphere region, E90 is evaluated in studies related to the chemical tropopause and stratosphere–troposphere exchange.
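The surface boundary condition for E90 can be sketched in Python as follows. The representation of air parcels as dictionaries with keys `p`, `ps`, and `vmr` is purely illustrative and not the data structure used in MPTRAC; the 90 d decay would be handled separately by the exponential decay module.

```python
def apply_surface_boundary(parcels, vmr_surface=150e-9, dp=30.0):
    """Assign a constant volume mixing ratio to all parcels located in the
    lowermost dp (hPa) above the surface.

    Each parcel is a dict with pressure 'p' (hPa), surface pressure 'ps'
    (hPa), and volume mixing ratio 'vmr' (mol/mol).
    """
    for parcel in parcels:
        if parcel["p"] >= parcel["ps"] - dp:
            parcel["vmr"] = vmr_surface
    return parcels
```

Calling this function at every model time step keeps the near-surface layer at 150 ppb, whereas parcels that leave the layer retain their last assigned value until it is reduced by the decay.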

Another example of a synthetic tracer is ST80, which is defined by a constant volume mixing ratio of 200 ppb above 80 hPa and a uniform, fixed 25 d e-folding lifetime in the troposphere (Eyring et al., 2013). Like E90, the tracer ST80 is of interest in investigating stratosphere–troposphere exchange. The Northern Hemisphere synthetic tracer (NH50) is considered to assess interhemispheric gradients in the troposphere. It is defined by a surface layer volume mixing ratio of 100 ppb over 30 to 50° N and a uniform 50 d e-folding lifetime (Eyring et al., 2013). Simulation results of E90, ST80, and NH50 obtained with the CPU and GPU code of MPTRAC will be discussed in Sect. 3.3.

2.4 Model output data

2.4.1 Sampling of meteorological data

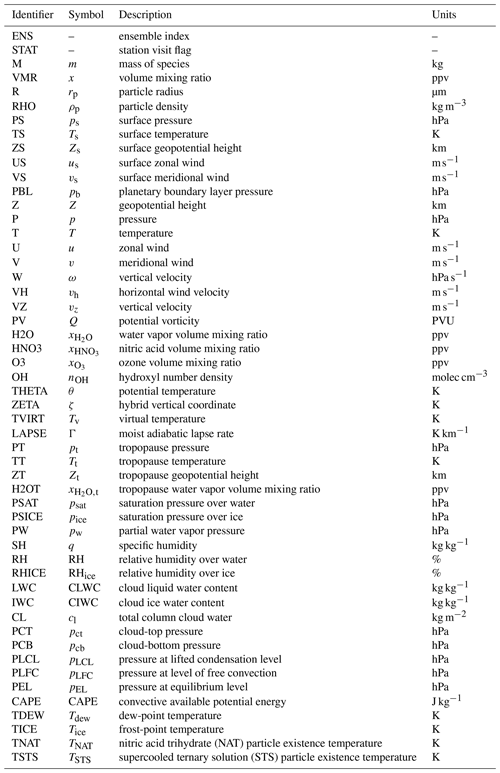

For diagnostic purposes, it is often necessary to obtain meteorological data along the trajectories. However, typically only a limited subset of the meteorological variables is of interest. Therefore, we introduced the concept of “quantities”, allowing the user to select which variables should be calculated and stored in the output data files. In addition to selecting the desired types of model output, the user needs to specify a list of the quantities to be provided as output via control parameters. In total, about 50 output variables are implemented in MPTRAC (Table 4). In addition to the predefined output variables, it is possible to include user-defined variables. The user-defined variables are typically not modified during the simulations, but they are useful to store information that is required for further analyses. For example, the starting time and position of the particles can be stored as user-defined variables, and they can later be used to calculate the trajectory time or the distance from the origin.

A distinct module was implemented into MPTRAC that is used to sample the meteorological data along the trajectories. This module can become demanding in terms of computing time, as it requires the interpolation of up to 10 3-D variables and 16 2-D variables of meteorological data that are either read in from the meteorological input data files or calculated during the preprocessing of the meteorological data. However, the computing time needs of this module are typically strongly reduced by the fact that meteorological data along the trajectories are usually not required at every model time step but only at user-defined output intervals.

In addition to the option of sampling the meteorological data along the trajectories, we also implemented tools to provide direct output of the meteorological data. This includes tools to extract maps, zonal mean cross sections, and vertical profiles on pressure or isentropic levels. These tools allow for time averaging over multiple meteorological data files. Another tool is available to sample the meteorological data based on a list of given times and positions, without involving any trajectory calculations. Altogether, these tools provide great flexibility in exploiting meteorological data in many applications.

2.4.2 Output from Lagrangian transport simulations

At present, MPTRAC offers seven output options, referred to as “atmospheric output”, “grid output”, “CSI output”, “ensemble output”, “profile output”, “sample output”, and “station output”. Atmospheric output files can be generated at user-defined time intervals, which need to be integer multiples of the model time step Δt. They are the most comprehensive type of output because they contain the time, location, and the values of all user-defined quantities of each individual air parcel.

As the atmospheric output can easily become too large for further analyses, in particular if many air parcels are involved, the output of gridded data was implemented. This output will be generated by integrating over the mass of all parcels in regular longitude × latitude × log-pressure height grid boxes. From the total mass per grid box and the air density, the column density and the volume mixing ratio of the tracer are calculated. Alternatively, if the volume mixing ratio per air parcel is specified, the mean volume mixing ratio per grid box is reported. In the vertical, it is possible to select only a single layer for the grid output, in order to obtain total column data. Similarly, by selecting only one grid box in longitude, it is possible to calculate zonal means.
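The accumulation of parcel mass on a regular grid can be sketched in Python as follows. The tuple layout of the parcels, the grid origin at 180° W and 90° S, and the function names are assumptions of this sketch; the area of a longitude × latitude box on a sphere is included because it is needed to convert mass to column density.

```python
import math
from collections import defaultdict


def grid_mass(parcels, dlon, dlat, dz):
    """Sum the mass of all parcels per longitude x latitude x height box.

    parcels: iterable of (lon, lat, z, mass) tuples with lon and lat in
    degrees and z in km. Returns a dict mapping box indices to total mass.
    """
    grid = defaultdict(float)
    for lon, lat, z, mass in parcels:
        key = (math.floor((lon + 180.0) / dlon),
               math.floor((lat + 90.0) / dlat),
               math.floor(z / dz))
        grid[key] += mass
    return dict(grid)


def box_area(lat0, lat1, dlon, radius=6371.0e3):
    """Area (m^2) of a box spanning dlon degrees between latitudes lat0 and lat1."""
    return (radius ** 2 * math.radians(dlon)
            * (math.sin(math.radians(lat1)) - math.sin(math.radians(lat0))))
```

Dividing the total mass of a box by `box_area` gives the column density; choosing a single vertical layer that covers the entire height range yields total column data.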

Another type of output that we used in several studies (Hoffmann et al., 2016; Heng et al., 2016) is the critical success index (CSI) output. This output is produced by analyzing model and observational data on a regular grid. The analysis is based on a 2×2 contingency table of model predictions and observations. Here, predictions and observations are counted as “yes” if the model column density or the observed variable exceeds a user-defined threshold. Otherwise, they are counted as “no”. Next to the CSI, the counts allow us to calculate the probability of detection (POD) and the false alarm rate (FAR), which are additional skill scores that are often considered in model verification. More recently, the CSI output was extended to also include the equitable threat score (ETS), the linear and rank-order correlation coefficients, the bias, the root mean square (rms) difference, and the mean absolute error. A more detailed discussion of the skill scores is provided by Wilks (2011).
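The skill scores derived from the 2×2 contingency table can be sketched in Python. The sketch assumes the common definitions CSI = hits/(hits + misses + false alarms), POD = hits/(hits + misses), and FAR = false alarms/(hits + false alarms); the function name and the flat lists of grid box values are illustrative.

```python
def skill_scores(model, obs, model_thresh, obs_thresh):
    """Compute CSI, POD, and FAR from paired model and observation values."""
    hits = misses = false_alarms = 0
    for m, o in zip(model, obs):
        m_yes, o_yes = m > model_thresh, o > obs_thresh
        if m_yes and o_yes:
            hits += 1
        elif o_yes:
            misses += 1
        elif m_yes:
            false_alarms += 1

    def ratio(num, den):
        # Scores are undefined if the denominator is zero.
        return num / den if den > 0 else float("nan")

    return {
        "CSI": ratio(hits, hits + misses + false_alarms),
        "POD": ratio(hits, hits + misses),
        "FAR": ratio(false_alarms, hits + false_alarms),
    }
```

Grid boxes in which neither the model nor the observations exceed the thresholds do not enter any of the three scores, which makes them suitable for localized plumes covering only a small part of the grid.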

Another option to condense comprehensive particle data is provided by means of the ensemble output. This type of output requires a user-defined ensemble index value to be assigned to each air parcel. Instead of the individual air parcel data, the ensemble output will contain the mean positions as well as the means and standard deviations of the quantities selected for output for each set of air parcels having the same ensemble index. The ensemble output is of interest if, for instance, tracer dispersion from multiple point sources needs to be quantified by means of a single model run.

The profile output of MPTRAC is similar to the grid output as it creates vertical profiles from the model data on a regular longitude × latitude horizontal grid. However, the vertical profiles contain not only volume mixing ratios of the species of interest but also profiles of pressure, temperature, water vapor, and ozone as inferred from the meteorological input data. This output is compiled with the intention to be used as input for a radiative transfer model, in order to simulate satellite observations for the given model output. In combination with real satellite observations, this output can be used for model validation but also as a basis for radiance data assimilation.

The sample output of MPTRAC was implemented most recently. It allows the user to extract model information on a list of given locations and times, by calculating the column density and volume mixing ratio of all parcels located within a user-specified horizontal search radius and vertical height range. For large numbers of sampling locations and air parcels, this type of output can become rather time-consuming. It requires an efficient implementation and parallelization because it needs to be tested at each time step of the model whether an air parcel is located within a sampling volume or not. The numerical effort scales linearly with both the number of air parcels and the number of sampling volumes. The sample output was first applied in the study of Cai et al. (2021) to sample MPTRAC data directly on TROPOspheric Monitoring Instrument (TROPOMI) satellite observations.

Finally, the station output collects the data of air parcels that are located within a search radius around a given location (latitude, longitude). The vertical position is not considered here; i.e., the information of all air parcels within the vertical column over the station is collected. In order to avoid double counting of air parcels over multiple time steps, the quantity STAT (Table 4) has been introduced, which keeps track of whether an air parcel has already been accounted for in the station output or not. We used this type of output in studies estimating volcanic emissions from satellite observations using the backward-trajectory method (Hoffmann et al., 2016; Wu et al., 2017, 2018).

By default, all output functions of MPTRAC create data files in an ASCII table format. This type of output is usually simple to understand and usable with many tools for data analysis and visualization. However, in the case of large-scale simulations, it is desirable to use more efficient file formats. Therefore, an option was implemented to write particle data to binary output files. Likewise, reading particle data from a binary file is much more efficient than from an ASCII file. The binary input and output is an efficient way to save or restore the state of the model during intermediate steps of a workflow. Another interesting option of output is to pipe the data directly from the model to a visualization tool. This will keep the output data in memory and directly forward it from MPTRAC to the visualization tool. This option has been successfully tested for the particle and the grid output in combination with the graphing utility gnuplot (Williams and Kelley, 2020).

2.4.3 Trajectory statistics

For diagnostic purposes, it is often helpful to calculate trajectory statistics. The tool atm_stat can calculate the mean, standard deviation, skewness, kurtosis, median, absolute deviation, median absolute deviation, minimum, and maximum of the positions and variables of air parcel data sets. The calculations can cover the entire set or a selection of air parcels, based on the ensemble variable or extracted by means of the tool atm_select. The tool atm_select allows the user to select air parcels based on a range of parcels, a log-pressure height × longitude × latitude box, and a search radius around a given geolocation. The trajectory statistics are calculated by applying the algorithms of the GNU Scientific Library (Gough, 2003).

The tool atm_dist allows us to calculate statistics of the deviations between two sets of trajectories. Foremost, this includes the AHTDs and AVTDs (Kuo et al., 1985; Rolph and Draxler, 1990; Stohl, 1998) over time.
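In the form commonly used in the cited literature, the two deviations at time $t$ can be written as follows. The symbols are assumptions of this sketch rather than the notation of the model documentation: $N$ is the number of trajectory pairs, and $(X_i, Y_i, Z_i)$ and $(x_i, y_i, z_i)$ denote the horizontal Cartesian coordinates and the log-pressure altitude of air parcel $i$ in the first and second data set, respectively.

```latex
\mathrm{AHTD}(t) = \frac{1}{N} \sum_{i=1}^{N}
  \sqrt{\left[X_i(t) - x_i(t)\right]^2 + \left[Y_i(t) - y_i(t)\right]^2},
\qquad
\mathrm{AVTD}(t) = \frac{1}{N} \sum_{i=1}^{N}
  \left| Z_i(t) - z_i(t) \right| .
```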

Here, the horizontal distances are calculated approximately by converting the geographic longitudes and latitudes of the particles to Cartesian coordinates, followed by calculation of the Euclidean distance between the Cartesian coordinates. Vertical distances are calculated based on conversion of particle pressure to log-pressure altitude using the barometric formula.

Next to AHTDs and AVTDs, the tool atm_dist can calculate the relative horizontal and vertical transport deviations (RHTDs and RVTDs), which relate the absolute transport deviations to the horizontal and vertical path lengths of individual trajectories. It can also be used to quantify the mean absolute and relative deviations as well as the absolute and relative bias of meteorological variables or the relative tracer conservation errors of dynamical tracers over time. The definitions of these quantities and more detailed descriptions are provided by Hoffmann et al. (2019). In this study, we mostly present absolute transport deviations to evaluate the differences between CPU and GPU trajectory calculations (Sect. 3.2).

2.5 Parallelization strategy

Lagrangian particle dispersion models are well suited for parallel computing as large sets of air parcel trajectories can be calculated independently of each other. The workload is “embarrassingly parallel” or “perfectly parallel” as little to no effort is needed to separate the problem into a number of independent parallel computing tasks. In this section, we discuss the MPI–OpenMP–OpenACC hybrid parallelization implemented in MPTRAC. The term “hybrid parallelization” refers to the fact that several parallelization techniques are employed in a simulation at the same time.

MPI is a communication protocol and standardized interface to enable point-to-point and collective communication between computing tasks. MPI provides high performance, scalability, and portability. It is considered a leading approach for high-performance computing. The OpenMP application programming interface supports multi-platform shared-memory multiprocessing programming for various programming languages and computing architectures. It consists of a set of compiler directives, library routines, and environment variables that are used to distribute the workload over a set of computing threads on a compute node. An application built with an MPI–OpenMP hybrid approach can run on a compute cluster, such that OpenMP is used to exploit the parallelism of all hardware threads within a multi-core node while MPI is used for parallelism between the nodes. Open accelerators (OpenACC) is a programming model to enable parallel programming of heterogeneous CPU–GPU systems. As in OpenMP, the source code of the program is annotated with “pragmas” to identify the areas that should be accelerated by using GPUs. In combination with MPI and OpenMP, OpenACC can be used to conduct multi-GPU simulations.

The GPU parallelization is a new feature since the release of version 2.0 of MPTRAC. Various concepts for employing GPUs are available for scientific high-performance computing. The simplest option is to replace standard computing libraries by GPU-enabled versions, which are provided by the vendors of the GPU hardware such as the NVIDIA company. A prominent example of such a library, which was ported to GPUs, is the Basic Linear Algebra Subprograms (BLAS) library for matrix–matrix, matrix–vector, and vector–vector operations. However, as MPTRAC does not employ the BLAS library, this simple parallelization technique was not considered helpful for porting our model to GPUs. At the other end of the options for GPU computing, the Compute Unified Device Architecture (CUDA) is a dedicated application programming model for GPUs. The CUDA programming model is often considered to be the most flexible and allows for the most detailed control over the GPU hardware. However, CUDA requires solid knowledge of GPU technology, and rewriting legacy code in CUDA can be error-prone and time-consuming (Li and Shih, 2018).

OpenACC can help to overcome the practical difficulties of CUDA and reduces the coding workload for developers significantly. We considered OpenACC to be a more practical choice for porting MPTRAC to GPUs as the code of the model should remain understandable and maintainable by students and domain scientists who may not be familiar with the details of more complex GPU programming concepts such as CUDA. By using OpenACC, we found that we were able to maintain a single code base of the MPTRAC model rather than having to develop a CPU and a GPU version of the model independently of each other. Besides ease of maintenance and portability of the code, the common code base is considered a significant advantage to check for bit-level agreement of CPU and GPU computations and reproducibility of simulation results.

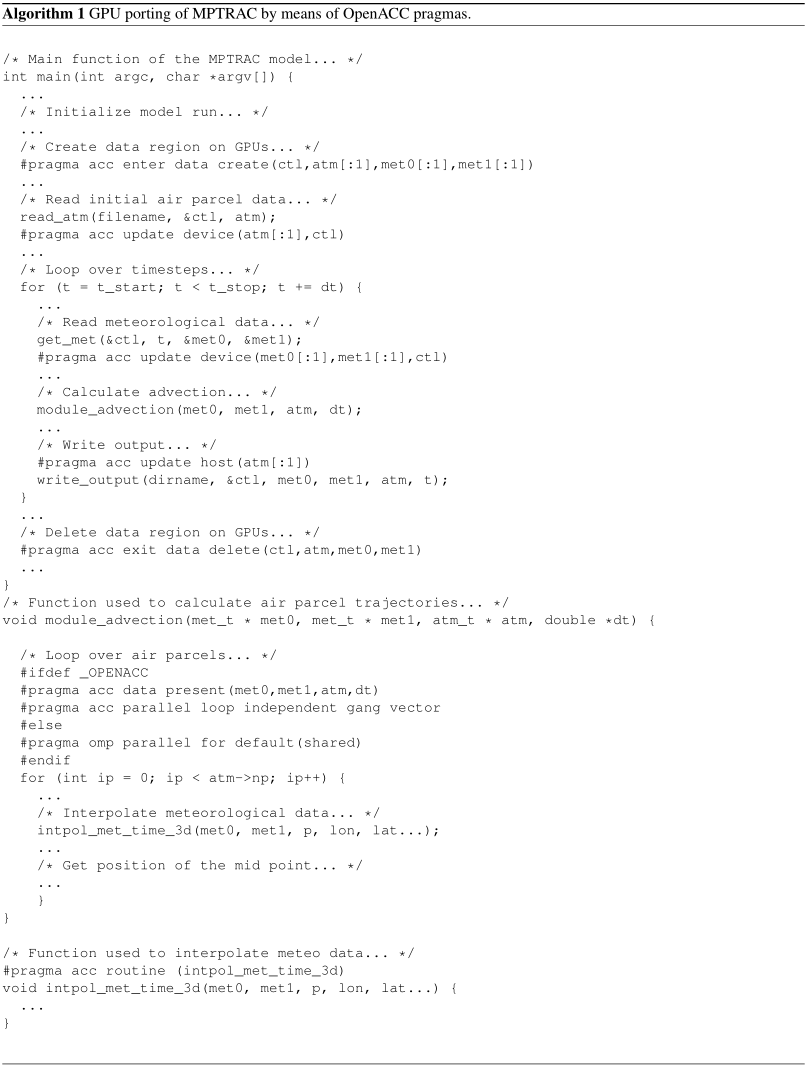

Algorithm 1 illustrates the GPU porting of the C code of MPTRAC by means of OpenACC pragmas. Two important aspects need to be considered. First, the pragma #pragma acc parallel loop independent gang vector is used to distribute the iterations of the loops over the particles across the compute elements of the GPU device. In this example, the loop over the particles within the advection module used to calculate the trajectories is offloaded to GPUs. For compute kernels that are used inside of a GPU-parallelized code block, the compiler will create both a CPU and a GPU version of the kernel. In this example, the pragma #pragma acc routine (intpol_met_time_3d) instructs the compiler to create CPU and GPU code for the function used to interpolate meteorological data, as this function is used both within and outside of the advection module. Algorithm 1 also shows that if the code is compiled without GPU support (i.e., if the preprocessor macro _OPENACC is not defined), the fall-back option is to apply OpenMP parallelization for the compute loops on the CPUs (#pragma omp parallel for default(shared)). The OpenMP parallelization was already implemented in an earlier, CPU-only version of MPTRAC.
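The pattern described for the particle loop can be sketched as follows. This is a simplified illustration, not the advection module of MPTRAC: the functions `zonal_drift` and `advect` are hypothetical, the interpolation of meteorological data is replaced by a constant drift, and a simple Euler step is used. Unlike the model code, the sketch attaches a `copy` clause to the loop because it has no enclosing data region. It also uses the unnamed form `#pragma acc routine seq` placed directly before the function definition, instead of the named form quoted above.

```c
/* Hypothetical stand-in for the interpolation of meteorological data:
   returns a constant zonal drift in degrees of longitude per second. */
#ifdef _OPENACC
#pragma acc routine seq
#endif
static double zonal_drift(double lon, double lat) {
  (void)lon;
  (void)lat;
  return 1e-4;
}

/* Advance the longitudes of np air parcels by one Euler step of length
   dt [s]. With OpenACC the loop is offloaded to the GPU; otherwise
   OpenMP distributes it over the CPU threads. Without either, the
   pragmas are ignored and the loop runs serially. */
void advect(double *lon, const double *lat, int np, double dt) {
#ifdef _OPENACC
#pragma acc parallel loop independent gang vector \
  copy(lon[0:np]) copyin(lat[0:np])
#else
#pragma omp parallel for default(shared)
#endif
  for (int ip = 0; ip < np; ip++)
    lon[ip] += dt * zonal_drift(lon[ip], lat[ip]);
}
```

Because each iteration touches only the data of its own particle, the `independent` clause is justified, and the same loop body serves the GPU, the multi-threaded CPU, and the serial build.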

The second aspect of GPU porting by means of OpenACC is concerned with the data management. In principle, the data required for a calculation need to be copied from CPU to GPU memory before the calculation and need to be copied back from GPU to CPU memory after the computation has finished. Although NVIDIA's “CUDA Unified Memory” technique ensures that these data transfers can be done automatically, frequent data transfers can easily become a bottleneck of the code. Therefore, we implemented additional pragmas to instruct the compiler when a data transfer is actually required.

The pragma #pragma acc enter data create(ctl,atm[:1],met0[:1],met1[:1]) in Algorithm 1 creates a data region and allocates memory for the model control parameters (ctl), air parcel data (atm), and meteorological data (met0, met1) on the GPU. The data region is deleted and the GPU memory is freed by #pragma acc exit data delete(ctl,atm,met0,met1) at the end of the main function. Within the data region, the associated data remain present in GPU memory. The pragma #pragma acc update device(atm[:1],ctl) is used to explicitly copy data from CPU to GPU memory, whereas #pragma acc update host(atm[:1]) is used to copy data from GPU to CPU memory. In the MPTRAC model, a single large data region was created around the main loop over the time steps of the model. As a consequence, the calls to all physics and chemistry modules operate entirely on data residing in GPU memory. Data transfers between CPUs and GPUs are needed only for updating the meteorological data or for writing model output.
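The data management described above can be sketched with a reduced example. The structure `atm_t`, the constant `NP_MAX`, and the function `run` are hypothetical and much simpler than the corresponding parts of MPTRAC. The sketch only shows the order of the pragmas: allocate on the device once, copy the input once, keep the data resident during the time loop, and copy the result back once. Compilers without OpenACC support ignore the pragmas and execute the same code on the CPU.

```c
#define NP_MAX 1000 /* hypothetical maximum number of air parcels */

/* Hypothetical, strongly reduced air parcel data structure. */
typedef struct {
  int np;             /* number of air parcels */
  double lon[NP_MAX]; /* longitudes [deg] */
} atm_t;

/* Run nsteps time steps of length dt [s] with a constant drift,
   keeping the air parcel data in GPU memory during the time loop. */
void run(atm_t *atm, int nsteps, double dt) {
  const int np = atm->np; /* local copy of the loop bound */

  /* Allocate GPU memory and copy the initial state to the device. */
#pragma acc enter data create(atm[:1])
#pragma acc update device(atm[:1])

  for (int t = 0; t < nsteps; t++) {
    /* The data are already present on the GPU; no transfer here. */
#pragma acc parallel loop independent gang vector present(atm[:1])
    for (int ip = 0; ip < np; ip++)
      atm->lon[ip] += dt * 1e-4;
  }

  /* Copy the final state back to the host and free the GPU memory. */
#pragma acc update host(atm[:1])
#pragma acc exit data delete(atm)
}
```

In a full model, an additional `update host` would be issued inside the time loop only at output times, and an `update device` only when new meteorological data have been read.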

In total, about 60 pragmas had to be implemented in the code to parallelize the computational loops and to handle the data management to facilitate the GPU porting of MPTRAC by means of OpenACC. Code parts that were ported to GPUs are highlighted in the call graph shown in Fig. 1. In addition to the OpenACC pragmas, some further code changes were necessary to enable the GPU porting. In the original CPU code, the GNU Scientific Library (GSL) was applied to conduct a number of tasks, for instance, to compute statistics of data arrays. As the GSL has not been ported to GPUs, the corresponding functions had to be rewritten without usage of the GSL. The GSL was also used to generate random numbers. Here, we employed NVIDIA's CUDA random number generation library (cuRAND) as an efficient replacement for distributed random number generation on GPUs. Also, we found that some standard math library functions were not available with Portland Group, Inc. (PGI)'s C compiler; e.g., a replacement had to be implemented for the math function fmod used to calculate the floating-point modulo. For earlier versions of the PGI C compiler, we also found a severe bug when large data structures (>2 GB) were transferred from CPU to GPU memory. Nevertheless, despite a number of technical issues, we consider the porting of MPTRAC to GPUs by means of OpenACC to be straightforward and would recommend it for other applications.
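A replacement for fmod of the kind mentioned above can be written with a truncating cast. The following function is a sketch under assumptions, not necessarily the replacement used in MPTRAC, and the name `fmod_sketch` is hypothetical. It returns a remainder with the sign of the dividend, as fmod does, but it is only valid while the quotient x / y fits into an int, and it may differ from fmod in the last bits for large quotients.

```c
/* Floating-point modulo without the math library: subtract the
   truncated quotient times the divisor. Assumes y != 0 and that
   x / y fits into an int. */
double fmod_sketch(double x, double y) {
  return x - (double)(int)(x / y) * y;
}
```

A typical use in a transport model is wrapping longitudes, e.g., `fmod_sketch(lon, 360.0)`, where the quotient is small and the limitation on its range is harmless.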

3.1 Description of the GPU test system and software environment

The model verification and performance analysis described in this section were conducted on the JUWELS system (Jülich Supercomputing Centre, 2019). The JUWELS system is considered a major target system for running Lagrangian transport simulations with MPTRAC in future work. The JUWELS system is composed of a CPU module, referred to as the JUWELS Cluster, and a GPU module, referred to as the JUWELS Booster. The JUWELS Booster and the JUWELS Cluster can be used jointly as a heterogeneous, modular supercomputer, or they can be used independently of each other. The JUWELS Booster was used as a test system in this study.

The JUWELS Booster consists of 936 compute nodes, each equipped with four NVIDIA A100 Tensor Core GPUs. Each NVIDIA A100 GPU comprises 6912 INT32 and 6912 FP32 compute cores as well as 3456 FP64 compute cores, the latter being most relevant for us because most calculations in MPTRAC are conducted at double precision. It also features 432 tensor cores, providing 8× peak speed-up for mixed-precision matrix multiplication compared to standard FP32 precision operations. The numbers of compute elements listed here indicate the large degree of parallelism that a compute problem needs to provide in order to utilize the GPU fully; in the Lagrangian transport simulations, this parallelism is provided by the large number of particles. The A100 GPUs are equipped with 40 GB of HBM2e memory per device, providing 1555 GB s−1 of memory bandwidth, and are interconnected by the third-generation NVLink, a lossless, high-bandwidth, low-latency shared-memory interconnect. The A100 GPU supports the new Compute Capability 8.0. NVIDIA (2020) provides a detailed description of the Ampere architecture.

The JUWELS Booster GPUs are hosted by AMD EPYC Rome 7402 CPUs. Each compute node of the Booster comprises two CPU sockets, four non-uniform memory access (NUMA) domains per CPU, and six compute cores per NUMA domain, which provide two-way simultaneous multithreading. Up to 48 physical threads or 96 virtual threads can be executed on the CPUs of a compute node. The compute nodes are equipped with 512 GB DDR4-3200 RAM. They are connected by a Mellanox HDR200 InfiniBand ConnectX 6 network in a DragonFly+ topology. CPUs, GPUs, and network adapters are connected via two PCIe Gen 4 switches with 16 PCIe lanes going to each device. A 350 GB s−1 network connection to the IBM Spectrum Scale (GPFS) parallel file system is implemented. As of November 2020, the JUWELS Booster yields a peak performance (Rpeak) of 70.98 PFlop s−1 (see https://www.top500.org/lists/top500/2020/11/, last access: 21 May 2021).

The software environment on JUWELS is based on the CentOS 8 Linux distribution. Compute jobs are managed by the Slurm batch system with ParTec's ParaStation resource management. The JUWELS Booster software stack comprises the CUDA-aware ParTec ParaStation MPI and the NVIDIA HPC Software Development Kit (SDK), as used in this work. In particular, we used the PGI C Compiler (PGCC) version 21.5, which more recently was rebranded and integrated as NVIDIA C compiler (NVC) in NVIDIA's HPC SDK, and the GNU C compiler (GCC) version 10.3.0 to compile the GPU and CPU code, respectively. We applied the compile flag for strong optimization (-O3) for both GCC and PGCC. For other standard compile flags, please refer to the makefile in the MPTRAC code repository. MPTRAC was built with the NetCDF library version 4.7.4 and HDF5 library version 1.12.0 for file I/O. GSL version 2.7 was used for generating random numbers on the CPUs. The cuRAND library from the CUDA Toolkit release 11.0 was applied for random number generation on the GPU devices.

3.2 Comparison of kinematic trajectory calculations

In this section, we discuss comparisons of kinematic trajectory calculations conducted with the CPU and GPU versions of the MPTRAC model. For the CPU simulations, we considered binaries created with two different C compilers. The CPU code compiled with GCC is considered here as a reference, as this compiler has been used in most of the previous work and earlier studies with MPTRAC. The second version of the CPU binaries as well as the GPU binaries were compiled with PGCC, which is the recommended compiler for GPU applications on the JUWELS Booster.

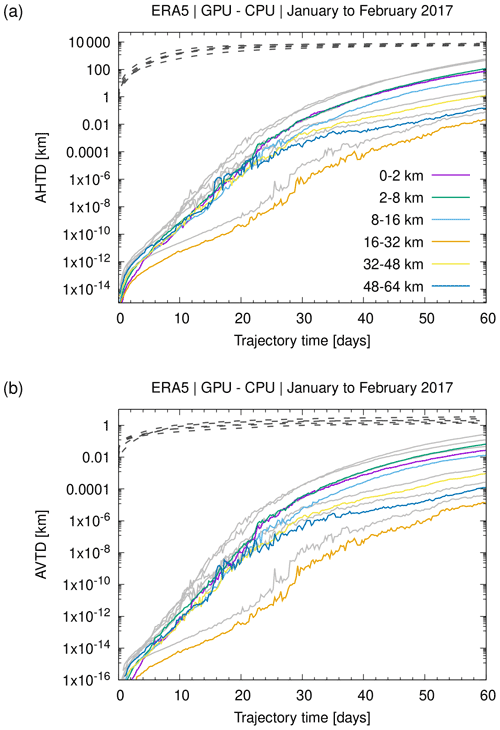

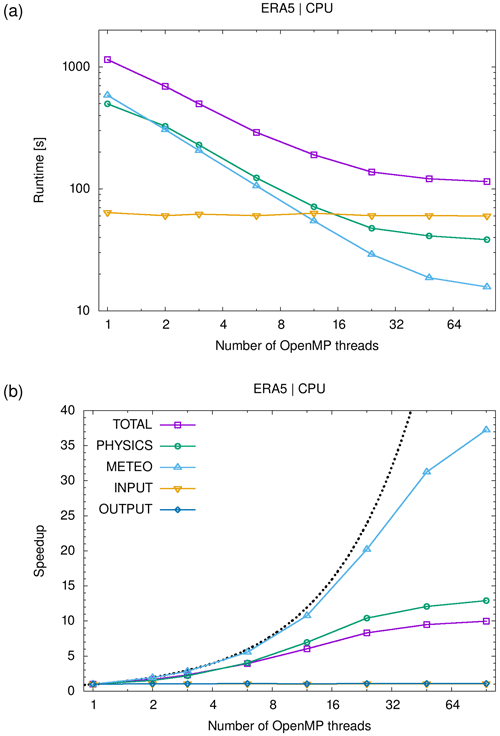

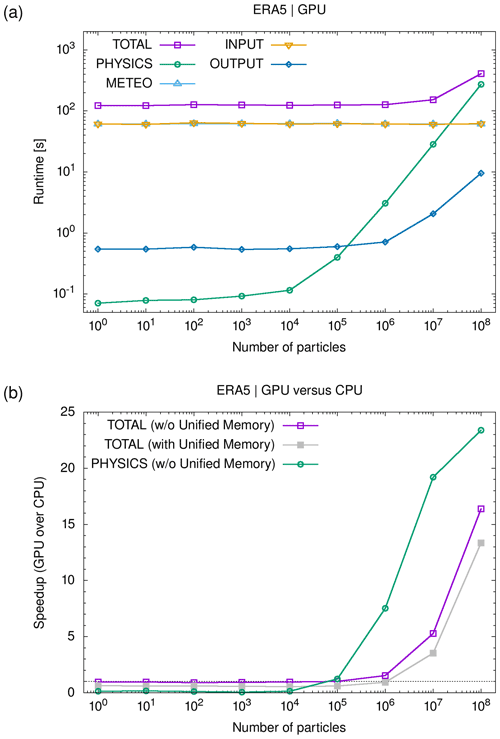

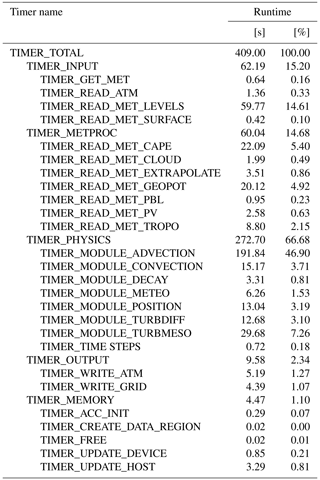

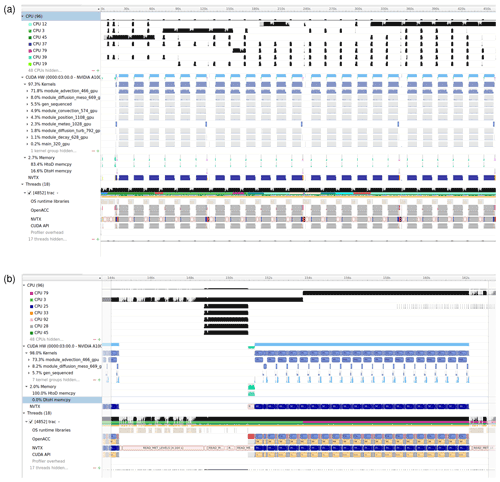

We first focus on comparisons of kinematic trajectories that were calculated with horizontal wind and vertical velocity fields of the ERA5 reanalysis. The calculations were conducted without subgrid-scale wind fluctuations, turbulent diffusion, or convection, as these parametrizations rely on different random number generators (implemented in the GSL and cuRAND libraries for the CPU and GPU code, respectively) and on different parallelization approaches for random number generation. Individual trajectories calculated with these modules activated are therefore not directly comparable to each other. They can only be compared in a statistical sense, as in Sect. 3.3.