the Creative Commons Attribution 4.0 License.

GAN–argcPredNet v1.0: a generative adversarial model for radar echo extrapolation based on convolutional recurrent units

Yan Liu

Jinbiao Zhang

Cong Luo

Siyu Tang

Huihua Ruan

Qiya Tan

Yunlei Yi

Xiutao Ran

Precipitation nowcasting plays a vital role in preventing meteorological disasters, and Doppler radar data act as an important input for nowcasting models. When using the traditional extrapolation method, it is difficult to model highly nonlinear echo movements. The key challenge of the nowcasting mission lies in achieving high-precision radar echo extrapolation. In recent years, machine learning has made great progress in the extrapolation of weather radar echoes. However, most models neglect the multi-modal characteristics of radar echo data, resulting in blurred and unrealistic prediction images. This paper aims to solve this problem by utilizing the features of a generative adversarial network (GAN), which can enhance multi-modal distribution modeling, and designs the radar echo extrapolation model GAN–argcPredNet v1.0. The model is composed of an argcPredNet generator and a convolutional neural network discriminator. In the generator, a gate controlling the memory and output is designed in the rgcLSTM component, thereby reducing the loss of spatiotemporal information. In the discriminator, the model uses a dual-channel input method, which enables it to strictly score according to the true echo distribution, and it thus has a more powerful discrimination ability. Experiments on a radar dataset from Shenzhen, China, show that the radar echo hit rate (probability of detection; POD) and critical success index (CSI) increase by an average of 21.4 % and 19 %, respectively, compared with rgcPredNet under different rainfall intensity thresholds, and the false alarm rate (FAR) decreases by an average of 17.9 %. We also found a problem when comparing the result graphs with the evaluation indices: with the recursive prediction method, the prediction result gradually deviates from the true value over time. In addition, the accuracy of high-intensity echo extrapolation is relatively low. Both issues are worthy of further investigation, and we will continue to conduct research in these two directions.

Precipitation nowcasting refers to the prediction and analysis of rainfall in the target area over a short period of time (0–6 h) (Bihlo, 2019; Luo et al., 2020). The important data needed for this work come from Doppler weather radar with high temporal and spatial resolution (Wang et al., 2007). Relevant departments can issue early warning information through accurate nowcasting to avoid loss of life and destruction of infrastructure (Luo et al., 2021). However, this task is extremely challenging due to its very low tolerance for time and position errors (Sun et al., 2014).

The existing nowcasting systems mainly include two types: numerical weather prediction (NWP) and radar echo extrapolation (Chen et al., 2020). The widely used optical flow method has several problems, such as its poor capture quality in regions of fast echo change, the high complexity of the algorithm and its low efficiency (Shangzan et al., 2017). Since echo extrapolation can be considered a time series image-prediction problem, these shortcomings of the optical flow method are expected to be solved by using a recurrent neural network (RNN) (Giles et al., 1994).

With the continuous development of deep learning, more and more neural networks have been applied to the field of nowcasting. Forecast models such as ConvLSTM and EBGAN-Forecaster show that the extrapolation effect of this new approach is better than that of the optical flow method (Shi et al., 2015; Chen et al., 2019). However, these models still have the problem of blurred and unrealistic prediction images (Tian et al., 2020; Xie et al., 2020; Jing et al., 2019). One of the main reasons is that radar echo maps are typically multi-modal data acquired by multiple sensors and different stations; some algorithms ignore this feature of radar echo maps and use the mean square error or mean absolute error as the loss function, which is better suited to a unimodal distribution.

This paper proposes the generative adversarial network-argcPredNet (GAN–argcPredNet) model, which aims to solve this problem through the GAN's ability to strengthen multi-modal data modeling. The generator adopts the same deep coding–decoding method as PredNet to establish a prediction model and uses a new convolutional long short-term memory (LSTM) structure as the predictive neuron, which can effectively reduce the loss of spatiotemporal information compared with rgcLSTM. A deep convolutional network is used as the discriminator to classify the distribution, and a dual-channel input mechanism is used to strictly judge the distribution of real radar echo images. Finally, based on the weather radar echo dataset, the generator and the discriminator are alternately trained to make the extrapolated radar echo map more realistic and precise.

2.1 Sequence prediction networks

The essence of radar echo image extrapolation is the problem of sequence image prediction, which can be solved by implementing an end-to-end sequence learning method (Shi et al., 2015; Sutskever et al., 2014). ConvLSTM introduces a convolution operation into the conversion of the internal data state of the LSTM, effectively utilizing the spatial information of the radar echo data (Shi et al., 2015). However, since the location-invariant nature of the convolutional recurrent structure is inconsistent with natural motion and change, the trajectory gated recurrent unit (TrajGRU) has also been proposed as a solution (Shi et al., 2017). A GRU (gated recurrent unit), as a kind of recurrent neural network, performs a similar function to LSTM but is computationally cheaper (Group, 2017). Similarly, ConvGRU introduces convolution operations inside the GRU to enhance the sparse connectivity of the model unit and is used to learn video spatiotemporal features (Ballas et al., 2015). The RainNet network learns the movement and evolution of radar echoes based on the U-Net convolutional network for extrapolation prediction (Ayzel et al., 2020). PredNet is based on a deep coding framework and adds error units to each network layer that can transmit error signals, similarly to the structure of the human brain (Lotter et al., 2016). In order to increase the depth of the network and the connections between modules, Skip-PredNet further introduces skip connections and uses ConvGRU as the core prediction unit. Experiments show that its effect is better than the TrajGRU benchmark (Sato et al., 2018). Although these networks can achieve echo prediction, their extrapolated images are both blurred and unrealistic.

2.2 GAN-based radar echo extrapolation

The generative adversarial network (GAN) consists of two parts: a generator and a discriminator (Goodfellow et al., 2014). A GAN can be an effective model for generating images. Using an additional GAN loss, a model can better achieve multi-modal data modeling, and each of its outputs will be clearer and more realistic (Lotter et al., 2016). Multiple complementary learning strategies show that generative adversarial training can maintain the sharpness of future frames and solve the problem of lack of clarity in prediction (Michael et al., 2015). In this regard, researchers built a generative adversarial network to solve the problem of extrapolated image blur, using adversarial training to extrapolate more detailed radar echo maps (Singh et al., 2017). Similarly, an adversarial network with ConvGRU as the core was proposed, mainly to solve the problem of ConvGRU's inability to achieve multi-modal data modeling (Tian et al., 2020). Other researchers, working from the idea of a four-level pyramid convolution structure, proposed four pairs of models forming a generative adversarial network for radar echo prediction (Chen et al., 2019). It should be noted that training of the traditional GAN is unstable, which can leave the model unable to learn. Therefore, the design of the nowcasting model should be based on a stable and optimized GAN.

In this section, we describe the model both overall and in detail. Section 3.1 introduces the overall structure and training process of the model. In Sect. 3.2, we describe the structure of the argcPredNet generator and focus on the argcLSTM neuron. In Sect. 3.3, we introduce the design of the discriminator and the loss function of the model.

3.1 GAN–argcPredNet model overview

Radar echo extrapolation refers to the prediction of the dissipation and distribution of future echoes based on the existing radar echo sequence diagram. If the problem is formulated, then each echo map can be regarded as a tensor, i.e., X ∈ R^(W×H×C), where W, H and C represent the width, height and number of channels, respectively, and R represents the feature area. If M represents the length of a given sequence of echo maps and N represents the number of future maps to be predicted as the most likely changes, this problem can be expressed as Eq. (1). This paper sets the input sequence length M and output sequence length N to 5 and 7, respectively.
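Eq. (1) itself is not reproduced in this text. A hedged reconstruction, assuming the paper follows the standard sequence-forecasting formulation of Shi et al. (2015), is given below; the symbol X_t for the echo map at time t and the tilde for predicted maps are notation assumed here, not taken from the source.

```latex
\tilde{X}_{t+1}, \ldots, \tilde{X}_{t+N}
  = \underset{X_{t+1}, \ldots, X_{t+N}}{\arg\max}\;
    P\left(X_{t+1}, \ldots, X_{t+N} \mid X_{t-M+1}, \ldots, X_{t}\right),
\qquad X_t \in \mathbb{R}^{W \times H \times C}
```

With M = 5 and N = 7, this reads as conditioning on five observed maps and predicting the following seven.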

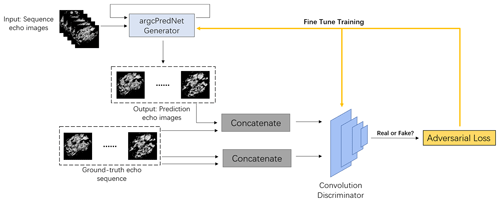

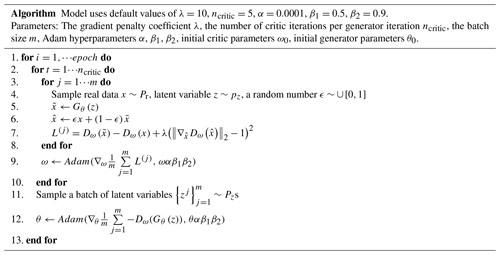

Unlike other forecasting models, GAN–argcPredNet uses WGAN-gp (Wasserstein generative adversarial network with gradient penalty) as a predictive framework. The model solves the problem of training instability through gradient penalty measures (Gulrajani et al., 2017). Our model mainly includes two parts: a generator and a discriminator. As shown in Fig. 1, the generator is composed of argcPredNet, which is responsible for learning the potential features of the data and simulating the data distribution to generate prediction samples. Following this, the predicted samples and the real samples are input into the discriminator, which is trained to judge the real data as true and the predicted data as false. Finally, we train on the adversarial loss with the Adam optimizer: the parameters of the discriminator are updated at every step, while the loss function of the generator is optimized, and the generator parameters updated, once every five discriminator updates. The algorithm flow is shown in Table 1 (Gulrajani et al., 2017).
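The alternating update schedule can be illustrated with a minimal sketch. It assumes the usual WGAN-gp reading of the text, namely five discriminator updates per generator update; the function names and the callback interface are hypothetical and stand in for the real Keras training steps, which are not shown here.

```python
from typing import Callable, List

N_CRITIC = 5  # discriminator updates per generator update, as stated in the text


def train_schedule(num_steps: int,
                   update_discriminator: Callable[[int], None],
                   update_generator: Callable[[int], None],
                   n_critic: int = N_CRITIC) -> None:
    """Run the alternating schedule: D at every step, G every n_critic steps."""
    for step in range(1, num_steps + 1):
        update_discriminator(step)
        if step % n_critic == 0:
            update_generator(step)


def demo_schedule(num_steps: int = 10) -> List[str]:
    """Record the order of updates instead of training real networks."""
    log: List[str] = []
    train_schedule(num_steps,
                   lambda s: log.append(f"D{s}"),
                   lambda s: log.append(f"G{s}"))
    return log
```

Calling `demo_schedule(10)` records ten discriminator updates and two generator updates, the latter after steps 5 and 10.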

3.2 argcPredNet generator

3.2.1 argcLSTM

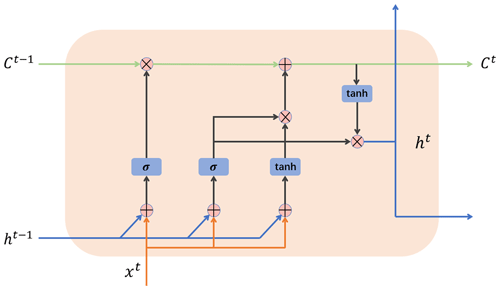

The internal structure of the argcLSTM neuron used in the model is shown in Fig. 2. In order to provide better feature-extraction capabilities, the structure contains two trainable gating units: the forget gate f(t) and the input gate g(t). The latter can calculate the weight of the current state independently and complete the feature retention of the input information. The peephole connection from the unit state to the forget gate is removed. This operation does not have a big impact on the result, but it removes redundant parameters. The complete definition of the argcLSTM unit is as follows (Eqs. 2–6).

In Eqs. (2)–(6), ∗ represents the convolution operation; ∘ represents the Hadamard product; σ represents the sigmoid nonlinear activation function; f(t) and g(t) represent the forget gate and update gate, respectively; and x(t), h(t), and C(t) represent the input, hidden state, and unit state at time t, respectively.

3.2.2 argcPredNet

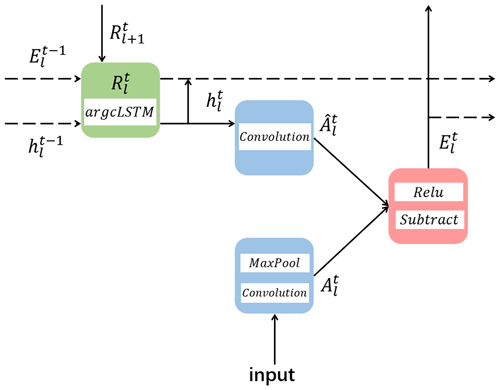

The argcPredNet generator has the same structure as PredNet, which is composed of a series of repeatedly stacked modules, with a total of three layers. The difference is that argcPredNet uses argcLSTM as the prediction unit. As shown in Fig. 3, each layer of the module contains the following four units: the input convolutional layer (Al), the recurrent representation layer (Rl), the prediction convolutional layer (Âl) and the error representation layer (El).

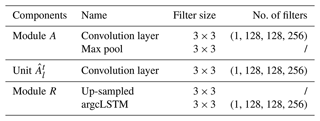

The recurrent representation layer Rl uses the argcLSTM recurrent unit, which is used to generate the prediction of the next frame and the input of Âl and Al+1. The network uses error calculation, where El outputs an error representation, and the error representation is passed forward through the convolutional layer to become the input of the next layer Al+1. The hidden state of the recurrent unit is updated according to the outputs of El and Rl at the previous time step and the up-sampled Rl+1. For Al, the input of the lowest layer, namely A0, is set to the actual sequence itself. When l>0, the input of Al is the lower error signal El−1 after convolution, rectified linear unit (ReLU) activation and max pooling. The complete update rules are shown in Eqs. (7) to (10). The specific parameter settings of the generator are shown in Table 2. The numbers 1, 128, 128 and 256 represent the number of filters used from the first layer to the fourth layer.
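Eqs. (7) to (10) are not reproduced in this text. For orientation, the update rules of the original PredNet (Lotter et al., 2016) are sketched below with the recurrent unit replaced by argcLSTM. This assumes that argcPredNet keeps the PredNet rules unchanged apart from the recurrent unit; the ordering and exact notation of the paper's own equations may differ.

```latex
\begin{align}
A_l^t &=
  \begin{cases}
    x_t, & l = 0 \\
    \mathrm{MaxPool}\left(\mathrm{ReLU}\left(\mathrm{Conv}\left(E_{l-1}^t\right)\right)\right), & l > 0
  \end{cases} \\
\hat{A}_l^t &= \mathrm{ReLU}\left(\mathrm{Conv}\left(R_l^t\right)\right) \\
E_l^t &= \left[\mathrm{ReLU}\left(A_l^t - \hat{A}_l^t\right);\;
               \mathrm{ReLU}\left(\hat{A}_l^t - A_l^t\right)\right] \\
R_l^t &= \mathrm{argcLSTM}\left(E_l^{t-1},\; R_l^{t-1},\;
               \mathrm{UpSample}\left(R_{l+1}^t\right)\right)
\end{align}
```

Here x_t is the input frame at time t, and the error unit concatenates the positive and negative parts of the prediction error along the channel axis.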

3.3 Discriminator and loss

3.3.1 Convolutional discriminator

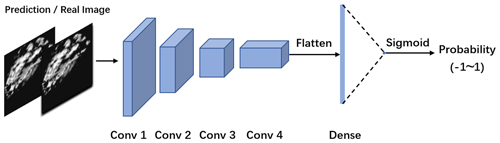

The purpose of the discriminator is to recognize images, which is similar to the purpose of a classifier. In the GAN–argcPredNet model, a double-channel convolutional neural network (DC-CNN) is designed for discrimination. The process is shown in Fig. 4. It is a four-layer convolution model with a dual-channel input method.

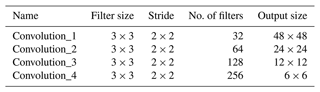

The DC-CNN extracts a pair of images from the three pairs of images, inputs them to the fully connected layer through a four-layer convolution transformation and finally generates a probability output through the sigmoid function, indicating the likelihood that the input image is a real image. When the input is a real image, the discriminator will maximize the probability, and thus the value will approach 1. If the input is a generator-synthesized image, the discriminator will minimize the probability, and thus the value will approach 0, the lower bound of the sigmoid output. The specific parameter settings of the discriminator are shown in Table 3.

3.3.2 Loss function

The generative adversarial network relies on the distribution of simulated data to generate images. It can retain more echo details, thereby realizing the modeling of multi-modal data. A gradient penalty term is added to GAN–argcPredNet, and the loss function of the discriminator is shown in Eq. (11).

The generator has the following loss function (Eq. 12):

The model has the following maximum–minimum loss function (Eq. 13):

where x̃ represents a generated sample, Pg represents the distribution of generated samples, x represents a real sample and Pr represents the distribution of real samples. The third term is the penalty item of the gradient penalty mechanism. In the penalty term, x̂ represents a new sample formed by random sampling between the real data and the generated data, and Px̂ represents the distribution of these randomly sampled points. λ is a hyperparameter that represents the coefficient of the penalty term, and its value in the model is set to 10.
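Eqs. (11) to (13) are not reproduced in this text. The standard WGAN-gp objective of Gulrajani et al. (2017), on which the model is stated to be based, has the form below. This is a reference sketch under the assumption that the paper uses that objective unchanged; D and G denote the discriminator and generator, and the paper's own sign conventions and arrangement of terms may differ.

```latex
\begin{align}
L_D &= \mathbb{E}_{\tilde{x} \sim P_g}\left[D(\tilde{x})\right]
     - \mathbb{E}_{x \sim P_r}\left[D(x)\right]
     + \lambda\, \mathbb{E}_{\hat{x} \sim P_{\hat{x}}}
       \left[\left(\left\lVert \nabla_{\hat{x}} D(\hat{x}) \right\rVert_2 - 1\right)^2\right] \\
L_G &= -\,\mathbb{E}_{\tilde{x} \sim P_g}\left[D(\tilde{x})\right] \\
\min_G \max_D\;&
       \mathbb{E}_{x \sim P_r}\left[D(x)\right]
     - \mathbb{E}_{\tilde{x} \sim P_g}\left[D(\tilde{x})\right]
     - \lambda\, \mathbb{E}_{\hat{x} \sim P_{\hat{x}}}
       \left[\left(\left\lVert \nabla_{\hat{x}} D(\hat{x}) \right\rVert_2 - 1\right)^2\right] \\
\hat{x} &= \epsilon\, x + (1 - \epsilon)\, \tilde{x}, \qquad \epsilon \sim U[0, 1]
\end{align}
```

The last line spells out the random interpolation between real and generated samples on which the gradient penalty is evaluated, with λ = 10 as stated in the text.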

In order to verify the effectiveness of the model, the paper uses the radar echo data from January to July 2020 in Shenzhen, China, to conduct experiments on the four models of ConvGRU, rgcPredNet, argcPredNet, and GAN–argcPredNet. All experiments are implemented in Python and based on the Keras deep-learning library with TensorFlow as the back end for model training and testing.

4.1 Dataset description

This experiment uses the radar echo data of Shenzhen, China. The dataset is made up of rain images after quality control. The reflectivity range is 0–80 dBZ, the amplitude limit is between 0 and 255, and the data are collected every 6 min, with a total of one layer. The height above sea level is 3 km. A total of 600 000 echo images were collected, of which 550 000 were used as the training set and 50 000 were used as the test set. Each set of data contained 12 consecutive images. The horizontal resolution of the radar echo maps is 0.01° (about 1 km), the number of grids is 501×501 (i.e., an area of about 500 km × 500 km) and the image resolution is 96×96 pixels.
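As a small illustration of how the 12-image sets relate to the input and output lengths, the sketch below splits one set into 5 input frames and 7 target frames and counts how many sets the test images yield. It assumes that sets are non-overlapping and that the first 5 frames are the inputs; neither detail is stated in the text, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")

M_INPUT = 5                   # input sequence length (from the text)
N_OUTPUT = 7                  # output sequence length (from the text)
SEQ_LEN = M_INPUT + N_OUTPUT  # 12 consecutive images per set


def split_sequence(frames: Sequence[Frame]) -> Tuple[List[Frame], List[Frame]]:
    """Split one 12-frame set into (inputs, targets); assumes inputs come first."""
    if len(frames) != SEQ_LEN:
        raise ValueError(f"expected {SEQ_LEN} frames, got {len(frames)}")
    return list(frames[:M_INPUT]), list(frames[M_INPUT:])


def count_sequences(num_images: int, seq_len: int = SEQ_LEN) -> int:
    """Number of complete non-overlapping sets (assumption: no overlap)."""
    return num_images // seq_len
```

Under these assumptions, `count_sequences(50000)` gives 4166 complete sets, which agrees with the "more than 4000 test sets" mentioned in the results.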

4.2 Evaluation metrics

In order to evaluate the accuracy of the model for precipitation nowcasting, the experiment uses three evaluation indicators: the critical success index (CSI, Eq. 14), the false alarm rate (FAR, Eq. 15) and the hit rate (probability of detection, POD; Eq. 16).

In the formulas, TP indicates that both the predicted value and the true value reach the specified threshold, FN means that the true value reaches the specified threshold but the predicted value does not, and FP indicates that the true value does not reach the specified threshold but the predicted value does.
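The three scores can be computed from these counts with the standard definitions CSI = TP/(TP+FN+FP), FAR = FP/(TP+FP) and POD = TP/(TP+FN). The sketch below is a minimal pure-Python version; it assumes that "reaching" a threshold means greater than or equal to it and returns NaN when a denominator is zero, and the function names are illustrative only.

```python
from typing import Dict, Sequence, Tuple


def contingency_counts(pred: Sequence[float],
                       truth: Sequence[float],
                       threshold: float) -> Tuple[int, int, int]:
    """Count (TP, FN, FP) over paired pixel values for one threshold."""
    tp = fn = fp = 0
    for p, t in zip(pred, truth):
        p_hit = p >= threshold  # assumption: "reach" means >= threshold
        t_hit = t >= threshold
        if p_hit and t_hit:
            tp += 1
        elif t_hit:
            fn += 1
        elif p_hit:
            fp += 1
    return tp, fn, fp


def _ratio(num: int, den: int) -> float:
    """Safe division: NaN when the score is undefined."""
    return num / den if den > 0 else float("nan")


def nowcast_scores(tp: int, fn: int, fp: int) -> Dict[str, float]:
    """Standard CSI, FAR and POD from contingency counts."""
    return {
        "CSI": _ratio(tp, tp + fn + fp),
        "FAR": _ratio(fp, tp + fp),
        "POD": _ratio(tp, tp + fn),
    }
```

For example, with predictions `[1.0, 0.2, 3.0, 0.7, 0.1]`, observations `[0.6, 0.9, 0.1, 2.0, 0.0]` and a threshold of 0.5, the counts are TP = 2, FN = 1 and FP = 1, giving CSI = 0.5, FAR = 1/3 and POD = 2/3.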

4.3 Results

The experiment comprehensively evaluates the prediction accuracy of precipitation with different thresholds. The radar reflectivity and rainfall intensity refer to the Z–R relationship (Shi et al., 2017). The calculation formula is as follows:

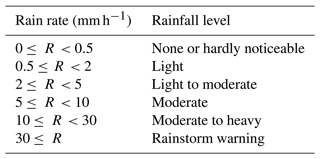

In this paper, a is set to 58.53, b is set to 1.56, Z represents the intensity of radar reflectivity, R represents the intensity of rainfall, and the corresponding relationship between rainfall and rainfall level is given in Table 4 (Shi et al., 2017).
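The conversion can be sketched as follows, assuming the Z–R relationship has the usual form Z = a·R^b with Z in linear units, so that reflectivity in dBZ equals 10·log10(a) + 10·b·log10(R). This is the form used by Shi et al. (2017) with the same constants; the function names are illustrative.

```python
import math

A = 58.53  # Z-R coefficient a (from the text)
B = 1.56   # Z-R exponent b (from the text)


def rain_rate_to_dbz(rain_rate_mm_h: float) -> float:
    """Convert rain rate R (mm/h, must be > 0) to reflectivity in dBZ."""
    if rain_rate_mm_h <= 0:
        raise ValueError("rain rate must be positive")
    return 10.0 * math.log10(A) + 10.0 * B * math.log10(rain_rate_mm_h)


def dbz_to_rain_rate(dbz: float) -> float:
    """Invert the relation: reflectivity in dBZ to rain rate R (mm/h)."""
    return 10.0 ** ((dbz - 10.0 * math.log10(A)) / (10.0 * B))
```

Under this assumed form, the 30 mm h−1 threshold corresponds to roughly 40.7 dBZ, and the two functions are exact inverses of each other up to floating-point error.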

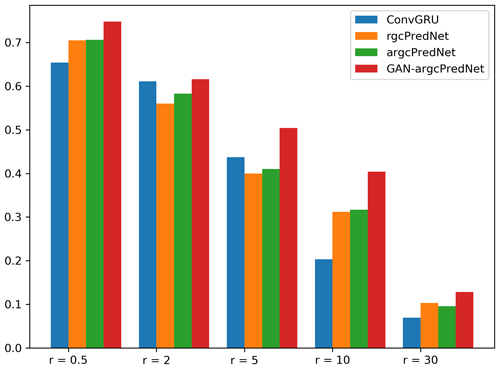

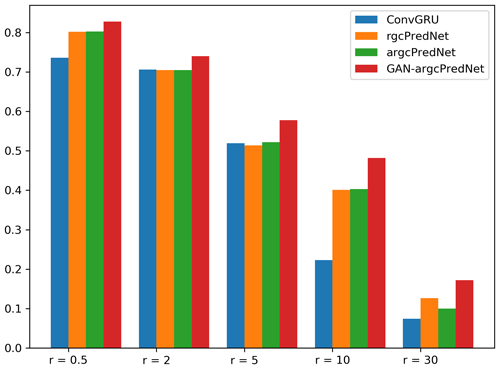

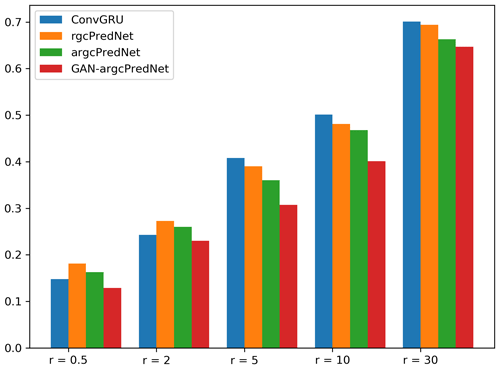

Figures 5, 6 and 7 compare in detail the CSI, POD and FAR index scores, respectively, of each model at different rainfall thresholds.

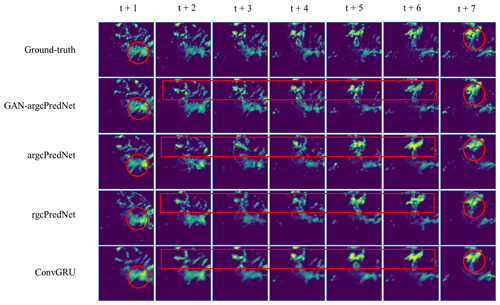

Figure 8. Four prediction examples for the precipitation nowcasting problem. From top to bottom: ground truth frames, prediction by GAN–argcPredNet, prediction by argcPredNet, prediction by rgcPredNet, prediction by ConvGRU.

This result is calculated based on 50 000 test pictures (more than 4000 test sets), which is taken as a representative number. It can be seen that when the rainfall rate increases from 0.5 to 30 mm h−1, GAN–argcPredNet always performs best (by a significant margin), argcPredNet is second, and the ConvGRU model is the worst. Another point worth noting is that as the rainfall intensity increases, the performance of all models shows a downward trend. In the comparison of CSI indicators, GAN–argcPredNet leads the rest of the models when the rainfall rate is lower than 30 mm h−1. When the rainfall level is 30≤ R, its leading advantage is the weakest. ArgcPredNet only leads rgcPredNet by a slight margin, and both it and rgcPredNet perform worse than ConvGRU in the range of 2–5 mm h−1. For POD indicators, GAN–argcPredNet performs best, and its leading advantage is more prominent. The performance of argcPredNet is not so outstanding, as it is almost the same as that of rgcPredNet, but the index of the two is always better than that of ConvGRU. For the FAR score, the performance of GAN–argcPredNet is still the best, while argcPredNet and rgcPredNet are worse than ConvGRU in the range of 2–5 mm h−1, and the score for the rain rate 0.5–10 mm h−1 interval is slightly lower than that of GAN–argcPredNet.

To compare the methods more intuitively, Fig. 8 shows the image prediction results of the four models on the same piece of test data.

Compared with the other three models, GAN–argcPredNet generates better image clarity and shows more detailed features on a small scale. The contrast is most obvious in the areas marked by the red circle in Fig. 8, where GAN–argcPredNet made the best prediction of the shape and intensity of the echo. The area selected by the rectangle mainly shows the echo changes in the northern region within 30 min. All models correctly predict the movement of the echo to a certain extent, and the prediction process shown by GAN–argcPredNet is the most complete. In some mixed-intensity and edge areas, our model clearly predicts the echo intensity information, which shows the effect of the adversarial training.

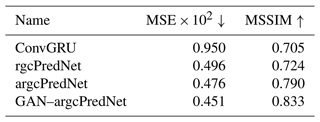

In order to compare the prediction results more specifically, the experiment uses mean square error (MSE) and mean structural similarity (MSSIM) to evaluate the quality of the generated images (Wang et al., 2004). The MSE and MSSIM index scores of the images generated by each model are shown in Table 5. ConvGRU has the lowest two indexes. Although the MSE index of rgcPredNet is slightly lower than that of the argcPredNet and GAN–argcPredNet models, the MSSIM index of the argcPredNet and GAN–argcPredNet models is 0.066 and 0.109 higher than that of the rgcPredNet network model, respectively.

This study demonstrated a radar echo extrapolation model. The main innovations are summarized as follows. First, the argcPredNet generator is established based on the time and space characteristics of radar data. The argcPredNet generator can predict future echo changes based on historical echo observations. Second, our model uses adversarial training methods to try to solve the problem of blurry predictions.

Based on the evaluation indicators and qualitative analysis results, GAN–argcPredNet has achieved excellent results. Our model can reduce the prediction loss in a small-scale space so that the prediction results have more detailed features. However, the recursive extrapolation method causes the error to accumulate as time goes by, and the prediction result deviates more and more from the true value. In addition, predicting high-risk, strong convective weather through machine learning when the amount of high-intensity echo data is small is a problem that we are very concerned about because of its practical relevance. Therefore, we will carry out research into these two issues in the future.

The GAN–argcPredNet and argcPredNet models are free and open source. The current version number is GAN–argcPredNet v1.0, and the source code is provided through a GitHub repository https://github.com/LukaDoncic0/GAN-argcPredNet (last access: 14 February 2022) and can be accessed through a Zenodo repository https://doi.org/10.5281/zenodo.5035201 (Zheng and Liu, 2021a). The pretrained GAN–argcPredNet and argcPredNet weights are available at https://doi.org/10.5281/zenodo.4765575 (Zheng and Liu, 2021b). The radar data used in the article come from the Guangdong Meteorological Department. Due to the institutional confidentiality policy, the data will not be disclosed to the public. If you need access to the data, please contact Kun Zheng (zhengk@cug.edu.cn) and Yan Liu (liuyan_@cug.edu.cn).

KZ was responsible for developing the models and writing the manuscript. YL and QT conducted the model experiments and co-authored the manuscript. JZ, CL, ST, HR, YY and XR were responsible for data screening and preprocessing.

The contact author has declared that neither they nor their co-authors have any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This research was funded by Science and Technology Planning Project of Guangdong Province, China (grant no. 2018B020207012).

This paper was edited by David Topping and reviewed by one anonymous referee.

Ayzel, G., Scheffer, T., and Heistermann, M.: RainNet v1.0: a convolutional neural network for radar-based precipitation nowcasting, Geosci. Model Dev., 13, 2631–2644, https://doi.org/10.5194/gmd-13-2631-2020, 2020.

Ballas, N., Yao, L., Pal, C., and Courville, A.: Delving Deeper into Convolutional Networks for Learning Video Representations, Computer Science, arXiv [preprint], arXiv:1511.06432, 2015.

Bihlo, A.: Precipitation nowcasting using a stochastic variational frame predictor with learned prior distribution, Computer Science, arXiv [preprint], arXiv:1905.05037, 2019.

Chen, L., Cao, Y., Ma, L., and Jun, Z.: A Deep Learning based Methodology for Precipitation Nowcasting With Radar, Earth Space Sci., 7, https://doi.org/10.1029/2019EA000812, 2020.

Chen, Y. Z., Lin, L. X., Wang, R., Lan, H. P., Ye, Y. M., and Chen, X. L.: A study on the artificial intelligence nowcasting based on generative adversarial networks, T. Atmos. Sci., 42, 311–320, 2019.

Giles, C. L., Kuhn, G. M., and Williams, R. J.: Dynamic recurrent neural networks: Theory and applications, IEEE T. Neural Net., 5, 153–156, 1994.

Goodfellow, I. J., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Sherjil, O., Aaron, C., and Yoshua, B.: Generative Adversarial Networks, in: Advances in neural information processing systems, edited by: Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., and Weinberger, K. Q., 2672–2680, arXiv:1406.2661, 2014.

Group, N. L. C.: R-NET: Machine Reading Comprehension with Self-matching Networks, https://www.microsoft.com/en-us/research/wp-content/uploads/2017/05/r-net.pdf (last access: 14 February 2022), 2017.

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., and Courville, A.: Improved Training of Wasserstein GANs, Computer Science, arXiv [preprint], arXiv:1704.00028, 2017.

Jing, J., Li, Q., and Peng, X.: MLC-LSTM: Exploiting the Spatiotemporal Correlation between Multi-Level Weather Radar Echoes for Echo Sequence Extrapolation, Sensors, 19, 3988, https://doi.org/10.3390/s19183988, 2019.

Lotter, W., Kreiman, G., and Cox, D.: Deep predictive coding networks for video prediction and unsupervised learning, Computer Science, arXiv [preprint], arXiv:1605.08104v5, 2016.

Luo, C., Li, X., Ye, Y., Wen, Y., and Zhang, X.: A Novel LSTM Model with Interaction Dual Attention for Radar Echo Extrapolation, Remote Sensing, 13, 164, https://doi.org/10.3390/rs13020164, 2020.

Luo, C., Li, X., and Ye, Y.: PFST-LSTM: A Spatio Temporal LSTM Model With Pseudoflow Prediction for Precipitation Nowcasting, IEEE J. Sel. Top. Appl., 14, 843–857, https://doi.org/10.1109/JSTARS.2020.3040648, 2021.

Michael, M., Camille, C., and Yann, L.: Deep multi-scale video prediction beyond mean square error, Computer Science, arXiv [preprint], arXiv:1712.09867v3, 2015.

Sato, R., Kashima, H., and Yamamoto, T.: Short-term precipitation prediction with skip-connected prednet, in: International Conference on Artificial Neural Networks, 373–382, https://doi.org/10.1007/978-3-030-01424-7_37, 2018.

Shangzan, G., Da, X., and Xingyuan, Y.: Short-Term Rainfall Prediction Method Based on Neural Networks and Model Ensemble, Advances in Meteorological Science and Technology, 7, 107–113, 2017.

Shi, X., Chen, Z., Wang, H., Dit-Yan, Y., Wai-Kin, W., and Wang-chun, W.: Convolutional LSTM network: A machine learning approach for precipitation nowcasting, in: Advances in neural information processing systems, edited by: Cortes, C., Lawrence, N. D., Lee, D. D., Sugiyama, M., and Garnett, R., 802–810, 2015.

Shi, X., Gao, Z., Lausen, L., Wang, H., Dit-Yan, Y., Wai-Kin, W., and Wang-chun, W.: Deep learning for precipitation nowcasting: A benchmark and a new model, in: Advances in neural information processing systems, edited by: Guyon, I., Luxburg, U. V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., and Garnett, R., 5617–5627, arXiv:1706.03458, 2017.

Singh, S., Sarkar, S., and Mitra, P.: A deep learning based approach with adversarial regularization for Doppler weather radar echo prediction, 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), 5205–5208, https://ieeexplore.ieee.org/document/8128174 (last access: 14 February 2022), 2017.

Sun, J., Xue, M., Wilson, J. W., ISztar, Z., Sue, P., Jeanette O., Paul, J., Dale, M., Ping-Wah, L., Brian, G., Mei, X., and James, P.: Use of NWP for nowcasting convective precipitation: Recent progress and challenges, B. Am. Meteorol. Soc., 95, 409–426, https://doi.org/10.1175/BAMS-D-11-00263.1, 2014.

Sutskever, I., Vinyals, O., and Le, Q. V.: Sequence to Sequence Learning with Neural Networks, in: Advances in Neural Information Processing Systems, edited by: Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N. D., and Weinberger, K. Q., 3104–3112, https://doi.org/10.5555/2969033.2969173, 2014.

Tian, L., Xutao, L., Yunming, Y., Pengfei, X., and Yan, L.: A Generative Adversarial Gated Recurrent Unit Model for Precipitation Nowcasting, IEEE Geosci. Remote S., 17, 601–605, 2020.

Wang, G., Liu, L., and Ruan, Z.: Application of Doppler Radar Data to Nowcasting of Heavy Rainfall, J. Appl. Meteorol. Sci., 388, 395–417, 2007.

Wang, Z., Bovik, A. C., Sheikh, H. R., and Simoncelli, E. P.: Image quality assessment: from error visibility to structural similarity, IEEE T. Image Process., 13, 600–612, 2004.

Xie, P., Li, X., Ji, X., Chen, X., Chen, Y., Jia, L., and Ye, Y.: An Energy-Based Generative Adversarial Forecaster for Radar Echo Map Extrapolation, IEEE Geosci. Remote S., 99, 1–5, 2020.

Zheng, K. and Liu, Y.: GAN-argcPredNetv1.0 and argcPredNet models (v1.0), Zenodo [code], https://doi.org/10.5281/zenodo.5035201, 2021a.

Zheng, K. and Liu, Y.: Pretrained weights of GAN-argcPredNet and argcPredNet models (v1.0), Zenodo [code], https://doi.org/10.5281/zenodo.4765575, 2021b.