the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

ICONGETM v1.0 – flexible NUOPC-driven two-way coupling via ESMF exchange grids between the unstructured-grid atmosphere model ICON and the structured-grid coastal ocean model GETM

Tobias Peter Bauer

Peter Holtermann

Bernd Heinold

Hagen Radtke

Oswald Knoth

Knut Klingbeil

Two-way model coupling is important for representing the mutual interactions and feedbacks between atmosphere and ocean dynamics. This work presents the development of the two-way coupled model system ICONGETM, consisting of the atmosphere model ICON and the ocean model GETM. ICONGETM is built on the latest NUOPC coupling software with flexible data exchange and conservative interpolation via ESMF exchange grids. With ICON providing a state-of-the-art kernel for numerical weather prediction on an unstructured mesh and GETM being an established coastal ocean model, ICONGETM is especially suited for high-resolution studies. For demonstration purposes the newly developed model system has been applied to a coastal upwelling scenario in the central Baltic Sea.

- Article

(17961 KB) - Full-text XML

- BibTeX

- EndNote

In numerous studies, the added value of two-way coupled atmosphere–ocean models has been demonstrated. Interactive model coupling is important for representing the mutual interactions and feedbacks between atmosphere and ocean dynamics (e.g. Chelton and Xie, 2010). The sea surface temperature (SST) of the ocean determines moisture fluxes into the atmosphere and the stability of the atmospheric boundary layer (Fallmann et al., 2019). The modulated surface wind in turn affects surface currents and mixing in the ocean, both altering SST patterns. This air–sea interaction is very dynamic and strongly sensitive to fronts and eddies (Small et al., 2008; Shao et al., 2019). In the coastal ocean, fronts are further pronounced due to upwelling and river run-off. Therefore, especially high-resolution coastal applications, where sharp gradients and small-scale eddies are resolved, can benefit from two-way coupled atmosphere–ocean models.

The atmosphere model COAMPS (Hodur, 1997) and the regional ocean model ROMS (Shchepetkin and McWilliams, 2005) were coupled with the Model Coupling Toolkit (MCT; Larson et al., 2005) for investigating an upwelling event with a 1 km high resolution (Perlin et al., 2007); see Appendix A for a list of model and software abbreviations. In the following decade, numerous high-resolution studies were performed with the two-way coupled model system COAMPS-NCOM, in which COAMPS was originally coupled via MCT with the coastal ocean model NCOM (Barron et al., 2006). Pullen et al. (2006, 2007) demonstrated the improved skill of the two-way coupled model system during bora events in the Adriatic Sea, simulated down to a resolution of 4 km in the atmosphere and 2 km in the ocean. With the same resolution and a coupling time step of 12 min, the model system has been applied to the Ligurian Sea and confirmed the importance of the interactive model coupling in the coastal zone (Small et al., 2011). The impact of coastal orography was investigated in a 2 km simulation of the island of Madeira (Pullen et al., 2017). Another two-way coupled model system widely applied in high-resolution studies is COAWST (Warner et al., 2010). It couples the atmosphere model WRF (Skamarock et al., 2005), the ocean model ROMS and the wave model SWAN (Booij et al., 1999) with MCT. COAWST has been applied for a realistic hindcast of a storm event over the Gulf of Lion and the Balearic Sea with a resolution of 3 km in the atmosphere and 1.8 km in the ocean (Renault et al., 2012). In another application, a bora event and the dense water formation in the Adriatic sea with 7 km resolution in the atmosphere and 1 km in the ocean was simulated (Carniel et al., 2016). Both studies investigated the effects of different coupling strategies and demonstrated the benefit of the fully coupled model system. Recently, the high-resolution regional coupled environmental prediction system UKC for the northwest European Shelf has been developed (Lewis et al., 2018, 2019a). On a 1.5 km high resolution, the atmosphere model MetUM (Cullen, 1993; Brown et al., 2012) was coupled with the ocean model NEMO (Madec et al., 2017) via OASIS3-MCT (Valcke et al., 2012; Craig et al., 2017). First results demonstrate reduced bias in SST fields (Lewis et al., 2019b) and impacts on cloud and fog formation over the North Sea (Fallmann et al., 2017, 2019).

Key technical aspects of coupled model systems are the coordinated execution of the individual model components and the data exchange among them. Required infrastructure for time management, communication between different nodes and interpolation between different grids is provided by various coupling software, e.g. MCT, OASIS-MCT and ESMF. Coupling frameworks, like the Earth System Modeling Framework (ESMF; Hill et al., 2004), provide an additional superstructure layer which offers a standardized execution of models as model components and data exchange in coupler components. On top of ESMF, the National Unified Operational Prediction Capability layer (NUOPC; Theurich et al., 2016) defines generic components which offer a unified and automated driving of coupled model systems. The generic components require only minimum specialization for the individual models, e.g. registration of routines for initialization, time step advance, and definition of required import and possible export data. NUOPC automatically negotiates the data exchange between individual model components based on standard names and synonyms from a dictionary. All required information about model grids and distribution among processors is received during runtime from the models. Therefore, models once equipped with an NUOPC-compliant interface can be plugged into any other coupled model system driven by NUOPC, without the need to adapt coupling specifications.

NUOPC supports a seamless data exchange and interpolation between models operating on different grids via so-called connectors. In addition, NUOPC offers mediator components to perform, e.g., merging, time-averaging and interface flux calculations on a hub between several models. With ESMF/NUOPC, it is also possible to perform these calculations on automatically generated exchange grids. They have been introduced in Balaji et al. (2006) as the union of the vertices of the individual model grids. ESMF provides this functionality for unstructured grids, with the final exchange grid obtained by a triangulation of the union. This triangulation is the basis for conservative interpolation. Moreover, the ESMF exchange grid considers the masking of the original grids, e.g. land/sea masks, and excludes fractions that are not required for the interpolation.

There is an ongoing effort to implement the new NUOPC layer into model systems and equip many popular models with an NUOPC interface under the umbrella of the Earth System Prediction Suite (ESPS; Theurich et al., 2016). However, until now, there exists only a limited number of publications about its integration. The functioning of the NUOPC layer within the earth system model RegESM was described by Turuncoglu (2019). Sun et al. (2019) developed the regional integrated prediction system SKRIPS based on NUOPC, coupling the atmosphere model WRF and the non-hydrostatic ocean model MITgcm (Marshall et al., 1997). Only very recently, a coupled unstructured-grid model application consisting of the ocean model ADCIRC (Luettich et al., 1992) and the wave model WAVEWATCH III (WW3DG, 2019) within the NUOPC-based NOAA Environmental Modeling System (NEMS) was reported by Moghimi et al. (2020).

Despite the potential of the ESMF exchange grid, its implementation and use in a mediator component has not been published, yet. This paper presents the newly developed model system ICONGETM, consisting of the atmosphere model ICON (Zängl et al., 2015) and the coastal ocean model GETM (Burchard and Bolding, 2002). With ICON providing a state-of-the-art kernel for numerical weather prediction on an unstructured mesh and GETM being one of the leading Baltic Sea models, a so far missing coupled model system for high-resolution studies in the Baltic has been developed. The model system is based on an NUOPC-Mediator, taking care of the data exchange via an ESMF exchange grid.

First, the technical structure of ICONGETM including a short overview of ICON and GETM as well as the automated coupling with ESMF/NUOPC is described in Sect. 2. The details of the data exchange and interpolation using the ESMF exchange grid are explained in Sect. 3. In Sect. 4, a demonstration of the coupled model system for the central Baltic Sea is presented. The added value and potential of using the ESMF exchange grid in ICONGETM are discussed in detail in Sect. 5. And finally, the paper is summarized in Sect. 6.

2.1 The atmospheric model ICON

The ICOsahedral Non-hydrostatic modelling framework (ICON) was developed by the German Weather Service (DWD) and the Max Planck Institute for Meteorology (MPI-M) as a unified modelling system for global numerical weather prediction (NWP) and climate modelling, including exact local mass conservation, mass-consistent tracer transport, a flexible grid nesting capability and the use of non-hydrostatic Euler equations on global domains (e.g. Gassmann and Herzog, 2008; Dipankar et al., 2015; Zängl et al., 2015; Heinze et al., 2017; Giorgetta et al., 2018; Crueger et al., 2018; Borchert et al., 2019). The details of the model are given in Zängl et al. (2015). They have been summarized in Ullrich et al. (2017) for the dynamical core model inter-comparison project (DCMIP) 2016.

The atmospheric component of ICON allows various user configurations for different modelling scenarios, e.g. large eddy simulations, numerical weather prediction or climate simulations, by coupling a common dynamical core with different physics packages. The model used in this study is a configuration led by DWD, mainly used for high-resolution NWP applications. Some physics schemes largely inherit the fast-physics parameterizations from the atmosphere model COSMO; see Zängl et al. (2015).

ICON solves the 2D vector-invariant equations on an icosahedral triangular grid with Arakawa C-grid staggering and terrain-following vertical discretization. A predictor–corrector scheme is employed, which is explicit in all terms except for those describing the vertical propagation of sound waves. The nesting capability in ICON includes a bisection of the simulation time step from one nest to the other.

The DWD applies ICON as a member of the operative weather forecast system in Germany (DWD, 2021). High-resolution simulations were conducted to understand the physical feedbacks due to clouds (e.g. Dipankar et al., 2015; Heinze et al., 2017). MPI-M uses the ICON Earth system model (ICON-ESM; e.g. Hanke et al., 2016; Giorgetta et al., 2018; Crueger et al., 2018), where individual model components for the atmosphere (ICON-A), ocean (ICON-O) and land (ICON-L) are coupled with the YAC library (Hanke et al., 2016).

For the coupling in ICONGETM, an interface to ESMF was implemented for the non-hydrostatic NWP core.

2.2 The ocean model GETM

The General Estuarine Transport Model (GETM) is an open-source ocean model for coastal and regional applications. Originally developed for solving the primitive equations as well as transport equations for temperature and salinity on C-staggered finite volumes (Burchard and Bolding, 2002), it nowadays also offers a non-hydrostatic extension of the dynamic kernel for high-resolution applications (Klingbeil and Burchard, 2013). GETM supports boundary-following vertical coordinates with adaptive interior model layers (Hofmeister et al., 2010; Gräwe et al., 2015). The nonlinear free surface is computed by a split-explicit mode-splitting technique with drying-and-flooding capability; see the review about numerics of coastal ocean models by Klingbeil et al. (2018). GETM uses efficient second-order transport schemes with minimized spurious mixing (Klingbeil et al., 2014). State-of-the-art turbulence closure is provided from the General Ocean Turbulence Model (GOTM). Via an interface to the Framework for Aquatic Biogeochemical Models (FABM), GETM can act as a hydrodynamic host model for a variety of biogeochemical models. An efficient decomposition into subdomains offers high-performance computing on massively parallel systems for high-resolution and climate-scale simulations (e.g. Gräwe et al., 2019; Lange et al., 2020). For coupling to other models, GETM already provides an interface to ESMF (Lemmen et al., 2018).

2.3 Coupling with ESMF/NUOPC

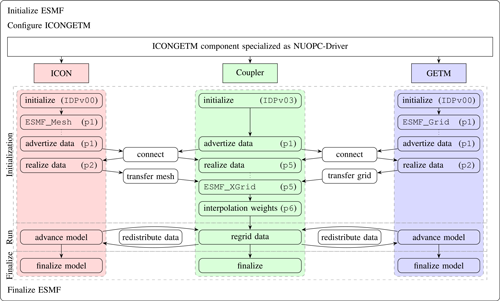

ICONGETM is built on ESMF/NUOPC. It is hierarchically structured into main program, driver, model and coupler components; see Fig. 1.

Figure 1Structure of ICONGETM. The ICONGETM component created by the main program is specialized as NUOPC-Driver and consists of NUOPC-Model components for ICON and GETM as well as an NUOPC-Mediator for the Coupler. For all components the implemented specialized routines for initialization, run and finalization are indicated. The initialization phases of the NUOPC layer are given in parentheses. Automated generic NUOPC operations are represented by arrows. In the pdf version of this article the central NUOPC components presented in the figure are linked to the corresponding locations in the source code.

The NUOPC layer controls the execution and interaction of the components by triggering different phases for their initialization (Sect. 2.3.1), run (Sect. 2.3.2) and finalization (Sect. 2.3.3).

Generic actions are performed automatically and only individual specification routines need to be implemented for the components.

The implementation of the NUOPC layer in ICONGETM was inspired by the prototype codes AtmOcnMedPetListProto, AtmOcnTransferGridProto, AtmOcnConProto, and CustomFieldDictionaryProto, as well as AtmOcnFDSynoProto from https://earthsystemmodeling.org/nuopc/#prototype-applications (last access: 22 June 2021).

2.3.1 Initialization

ICONGETM is initialized and configured in different stages. At first, ESMF itself is initialized. Next, the coupled model is configured from a user-provided configuration file with the number of processes for each model component, the names of the data to be received by each model component and the coupling time step.

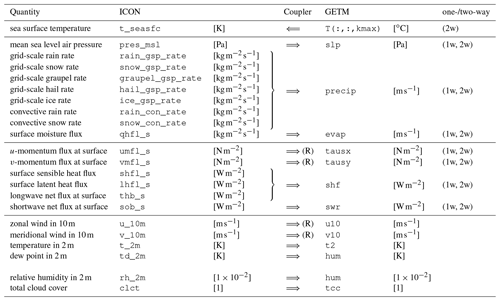

Table 1

List of quantities which can be exchanged in ICONGETM.

The direction is indicated by the arrow.

The units of the source and target variables are given in square brackets.

Data conversion and aggregation is done automatically in the coupler.

precip and evap are obtained by division with the reference density of freshwater.

The corresponding contributions to precipitation from graupel, hail and ice are only considered for the coupling if they are activated in ICON.

Wind data need to be rotated (R) to the local coordinate system in GETM.

The exchanged humidity quantity (dew point or relative humidity) is correctly identified by the name attribute of the connected ESMF field.

The possibility to exchange either flux data (third block) or state variables (last block) offers the comparison of different coupling strategies within the same model environment.

The last column indicates which data are exchanged during the performed one- and two-way coupled simulations.

The exchange of state variables is not applied in the simulations presented in Sect. 4.

An NUOPC-Driver is applied, which creates NUOPC-Model components for ICON and GETM as well as an NUOPC-Mediator, which serves as a data exchange component between the model components. The current implementation only supports a concurrent distribution of the components among all available computing units. For the time management, a run sequence defines in which order the mediator and model components will interact during the simulation.

Next, the initialization routines of each NUOPC-Model component are called.

They have access to the initializing routines of the individual models themselves.

Additionally, the horizontal grid structures are translated into an ESMF_Grid and ESMF_Mesh for structured and unstructured discretizations, respectively; see Sect. 3.1 and 3.2.

Moreover, ESMF_Fields are created to advertise all data which are available for exchange.

However, based on the user-specified lists of data that should be received by each model component, the model system automatically detects the required subset of fields which are finally connected and realized.

The current implementation supports the exchange of flux and state data.

The exchange of state variables is not applied in the simulations presented in Sect. 4 since only flux data are transferred.

For a list of exchangeable quantities and their optional conversion by the mediator, see Table 1.

The data transfer between the NUOPC-Models via the NUOPC-Mediator is then prepared generically, i.e. by the NUOPC layer.

NUOPC-Connectors are set up to redistribute the data between the different computing units used by the coupler and model components.

For the actual regridding (interpolation) between the horizontal triangular grid from ICON and the horizontal latitude–longitude grid from GETM, one ESMF exchange grid (ESMF_XGRID) is created for each direction; for details see Sect. 3.

The interpolation weights are calculated only once during the initialization and are used in the run phase.

The generation of the ESMF_XGRID and the interpolation weights is the most expensive part of the overall overhead due to coupling.

The later performed interpolation in the run phase is relatively cheap.

In the present implementation, no model receives data during the initialization phase. However, the first data exchange takes place at the beginning of the run phase, as specified in the run sequence. All model components update their export fields at the end of the initialization phase.

2.3.2 Run

During runtime the coupled model system is integrated in time by repeating the prescribed run sequence with the given coupling intervals until the simulation end time is reached.

At the beginning of the run sequence, new input data are provided to each model component by data exchange and regridding via the mediator component.

In ICON, the received data must be copied to model internal memory locations.

For GETM, the ESMF_Fields already contain pointers to the internal memory.

With the new data from the import fields, each model advances with its own time step until the next coupling time point is reached.

At the end of the run sequence, all model components prepare the following data exchange by updating their export fields from

the internal model memory.

2.3.3 Finalization

This phase finalizes all ESMF and NUOPC components. The finalization of the model components is included by calling the finalizing interface in ICON and GETM. The overall last step is the finalization of ESMF.

The data exchange between ICON and GETM is based on the regridding from the source model grid to an exchange grid and the regridding from the exchange grid to the target model grid.

The ESMF exchange grid (ESMF_XGRID) infrastructure is used for the conservative interpolation at the air–sea interface; see the NUOPC-Mediator in Fig. 1.

The aim is to apply an interpolation approach which is independent of any horizontal resolution in ICON and GETM.

Before the ESMF_XGRID and how it is utilized in ICONGETM are explained in detail, the horizontal discretization of ICON and GETM is presented.

Furthermore, the interpolation is schematically described.

3.1 Triangular mesh in ICON

The horizontal grid structure of ICON is described in detail by Linardakis et al. (2011). The very first assumption for the horizontal grid is that the Earth is approximated as a sphere. It is based on the projection of an icosahedron onto the sphere. The edges of each triangle of the icosahedron can now be interpreted as an arc of great circles on the sphere. A refinement of the grid, i.e. to increase the resolution by using smaller triangles, is achieved by a combination of two steps. The first step is an initial division of the original icosahedron triangle edges by n∈ℕ. The second step is k∈ℕ bisections of the remaining smaller triangles. The final grid is then described by RnBk. The number of triangles on the sphere for an RnBk grid is given by 20n24k; see Zängl et al. (2015). The effective grid resolution is given by

with earth radius rE. Table 1 in Zängl et al. (2015) shows different R2Bk grids with effective grid resolutions. The DWD applies a global R3B07 grid, a R3B08 Europe grid and a R3B09 Germany grid for the weather forecast simulations, which have effective resolutions of 13.15, 6.58 and 3.29 km, respectively.

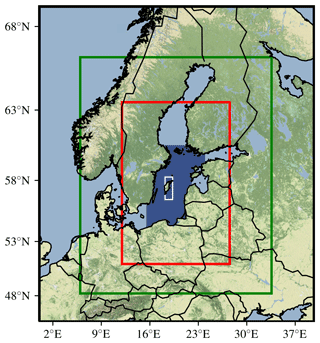

The construction of refined grids supports a straight-forward nesting. An example for the Baltic Sea region based on R2B08, R2B09 and R2B10 grids with effective resolutions of 9.89, 4.93 and 2.47 km is shown in Fig. 2.

Figure 2Nesting of different ICON domains with effective resolutions of 9.89 km (black frame), 4.93 km (green frame) and 2.47 km (red frame) over the Baltic Sea region. The dark-blue area in the central Baltic Sea represents the model domain of GETM. The white rectangle frames the area shown in Fig. 3.

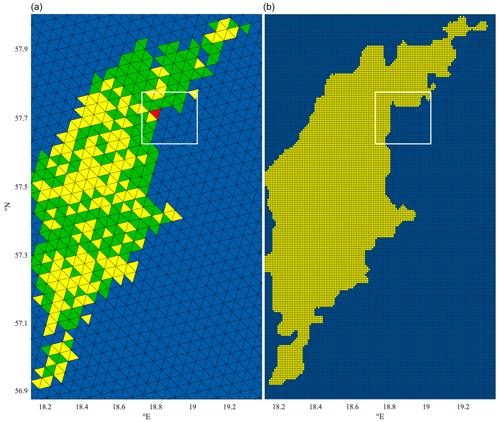

Figure 3Triangular grid with an effective resolution of 2.47 km used in ICON (a) and rectangular grid with a resolution of approximately 600 m used in GETM (b) over the island of Gotland in the central Baltic Sea; see Fig. 2. In the ICON grid, the different colouring represents cells that consist of more than 50% of ocean (blue), forest (green), urban areas (red) or non-specific land classifications (yellow). GETM only distinguishes between ocean (blue) and land (yellow). The white rectangles frame the area shown in Fig. 4.

Figure 3 shows the R2B10 grid over the island of Gotland in the central Baltic Sea.

Based on various external datasets (e.g. Reinert et al., 2020) every grid cell is associated with a set of fraction values for different land classifications, e.g. forest, urban areas and others.

Cells with less than 50 % of land fraction are regarded entirely as ocean cells and vice versa.

The triangular grid and the associated cell classification are stored in an ESMF_Mesh object, which also contains information about the domain decomposition onto computing units.

The creation of the ESMF_Mesh is computing unit specific.

Therefore, the domain distribution among the available computing units performed by ICON is kept in the ESMF_Mesh in ICONGETM.

3.2 Structured grid in GETM

The grid in GETM is structured and supports curvilinear horizontal coordinates in Cartesian and latitude–longitude space.

For coupling with ICON, only grids in spherical coordinates can be used.

A land mask defines land and water cells; see Fig. 3.

Coordinate, area (defined through rhumb lines) and mask data as well as information about the domain distribution on computing units are stored in an ESMF_Grid object.

3.3 Exchange grid in the coupler

Based on the information provided by the mesh from ICON and the grid from GETM, an exchange grid is created in the coupler.

The ESMF library constructs the exchange grid by overlaying both meshes (see Fig. 4), calculating the intersection points and conducting a final triangulation of all elements.

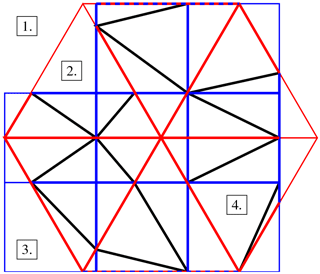

For a schematic representation see Fig. 5.

The ESMF_XGRID object only consists of elements that are required for the data exchange between the ocean cells in ICON and GETM.

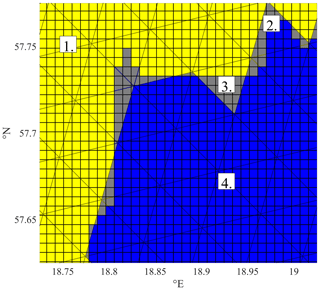

Figure 4Overlay of the triangular ICON grid and the rectangular GETM grid at the eastern coast of the island of Gotland in the central Baltic Sea; see Fig. 3. The four possible combinations of land/ocean masks are labelled. Grey areas mark different land/ocean masks: ICON ocean and GETM land (case 2); ICON land and GETM ocean (case 3).

Figure 5 Exemplary 2D exchange grid formed by a triangular atmosphere (red) and a rectangular ocean (blue) grid. The exchange grid consists of edges from the original triangular and rectangular grids (thick red and blue) and additional edges from the triangulation (black). Assuming that only water cells are shown, the four possible combinations of land/ocean masks are labelled. Here the exchange grid is shown for the interpolation from the ocean to the atmosphere grid, therefore, excluding the elements of case 3.

As indicated in Figs. 4 and 5, the overlay of the different grids yields four possible combinations of land/ocean masks:

-

land cells in ICON and GETM;

-

ocean cell in ICON and land cell in GETM;

-

land cell in ICON and ocean cell in GETM;

-

ocean cells in ICON and GETM.

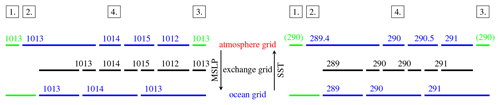

Elements of cases 1 and 2 are excluded from the exchange grid, while elements of case 4 are included. Whether the elements of case 3 belong to the exchange grid depends on the direction of interpolation. Therefore, two different exchange grids are created and used: one for the interpolation from ICON to GETM, which includes the elements of case 3, and one vice versa, excluding elements of case 3; see Fig. 6.

Figure 6 Schematic representation of the regridding between ICON and GETM. In the atmosphere and ocean grids active ocean cells are coloured in blue and land cells in green. As shown for the transfer of mean sea level pressure (MSLP in hPa) and sea surface temperature (SST in K), the exchange grid can consist of different cells for each direction. The four possible combinations of land/ocean masks are indicated. On land (cases 1 and 3) an ICON-internal SST (here 290 K) is used. This ICON-internal SST is also considered for fractions of ocean cells not covered by GETM ocean cells (case 2).

3.4 Regridding

One major challenge for the coupling between the unstructured grid of ICON and the structured grid of GETM is the interpolation of data on scattered nodes.

The irregularity of the unstructured grid complicates the selection of the stencil.

The correct interpolation weights for a conservative interpolation require the determination of the intersections of the source and target grids and the calculation of the resulting areas.

The processing of distributed neighbour information in unstructured grids also requires performant data structures and algorithms.

The ESMF exchange grid (ESMF_XGRID) and the associated interpolation weights stored in the ESMF_RouteHandle hide all these aspects from the user and provide an efficient and automatic conservative interpolation infrastructure.

The ESMF_XGRID class supports first- and second-order conservative interpolation.

Currently, only the first-order method has been applied in ICONGETM.

The interpolation weights are calculated during the initialization, based on the areas of the grid cells.

The connecting edges between the vertices in the exchange grid are defined on arcs of great circles, which differ from the rhumb lines used in GETM.

However, the interpolation between GETM and the exchange grid is still conservative because the weights are scaled in terms of the area provided by GETM.

3.4.1 Regridding from ICON to GETM

As sketched in Fig. 6, the interpolation of the mean sea level pressure (MSLP) from ICON to GETM is straight-forward in principle because ICON provides all quantities over the whole domain. However, in case sea surface fluxes are exchanged, there are two issues if the land/sea masks do not match between ICON and GETM. First, there is a physical inconsistency, when surface fluxes parameterized over land cells in ICON are transferred to ocean cells in GETM (case 3). Second, when ICON applies sea surface fluxes in ocean areas that are represented by land in GETM (case 2), the fluxes are not conserved in the global atmosphere–ocean system. This latter case demonstrates that the conservative interpolation via the exchange grid is not sufficient to guarantee a conservative flux exchange.

3.4.2 Regridding from GETM to ICON

Figure 6 sketches the regridding of the sea surface temperature (SST). The update of an ICON ocean cell that is partly covered by a GETM land cell (case 2) needs some remarks. For the contribution from a GETM land cell to an ICON ocean cell, the SST value of the ICON cell is applied. This value can be either a user-provided ICON-internal SST, if the climatological update is activated, or simply the SST from the last time step. For the first time step this is the initial ICON-internal SST.

For demonstration purposes, the newly developed model system ICONGETM is applied to the central Baltic Sea. High-resolution uncoupled, one-way and two-way coupled simulations are carried out and compared. The modelling period 1–21 July 2012 is chosen to evaluate the model results with measurement data from a field campaign with research vessel (RV) Meteor (cruise M87).

4.1 Coupled central Baltic Sea setup

4.1.1 ICON configuration

The ICON setup is based on the operational non-hydrostatic numerical weather prediction configuration from the German Weather Service (DWD) but covers a different model domain. For the coupled Baltic Sea setup, ICON is run in limited area mode with three nested domains with effective resolutions of 9.89, 4.93 and 2.47 km; see Fig. 2. The vertical terrain-following hybrid grids consist of 90, 65 and 54 height-based vertical levels. The heights are pre-defined depending on the associated pressure in the US 1976 standard atmosphere, with the top boundary of the model domain depending on the numbers of levels (Reinert et al., 2020, Fig. 3.5). At the open boundaries, the outermost domain is driven by 6-hourly IFS data from ECMWF. The designed nesting guarantees a smooth transition from this coarse boundary forcing, provided with 16 km resolution, to the innermost domain over the central Baltic Sea. The feedback from refined nesting levels is relaxation-based. The model time steps are 60, 30 and 15 s. For all domains, initial conditions are obtained by interpolation from IFS data. In contrast to long-term hindcast applications, ICON is not re-initialized and the ICON-internal SST is not updated during the model run.

The settings also include the subgrid-scale cloud scheme as well as the vertical diffusion and transfer turbulent coefficients from COSMO. For the performed summer simulations, COSMO microphysics (Bechtold et al., 2008; Doms et al., 2013; Zängl et al., 2015) with only two frozen water substances (cloud ice and snow) are applied. The Rapid Radiation Transfer Model (RRTM) of Mlawer et al. (1997) is used. The convection parameterization is switched off for the finest-resolved domain.

The complete configuration can be found in the code. The run scripts include the namelist settings. A detailed description of the namelist options are provided through the ICON documentation which is part of the ICON model code.

ICON does not need any specific settings when run two-way coupled in ICONGETM because the coupler will simply overwrite the ICON-internal sea surface temperature with the data provided from GETM.

4.1.2 GETM configuration

The GETM setup for the central Baltic Sea is taken from Holtermann et al. (2014). The model domain is shown in Fig. 2. Based on an equidistant spherical grid, the horizontal resolution varies between 500 and 600 m. In the vertical, 100 terrain-following layers with adaptive zooming towards stratification are applied. At the open boundaries, hourly data for temperature, salinity, sea surface elevation and normal depth-averaged velocity from the Baltic Sea setup of Gräwe et al. (2019) are prescribed. Furthermore, the freshwater discharge of the five major rivers entering the model domain is prescribed; see Chrysagi et al. (2021) for details. The initial temperature and salinity distribution for the present study was obtained by continuing the original simulations of Holtermann et al. (2014) and subsequent distance-weighted nudging with available measurements from the HELCOM database below 50 m depths. The 3D model time step is 45 s.

During a spin-up period from 20 May–30 June 2012, GETM is run uncoupled. In the GETM configuration file, two namelist parameters have to be changed for the uncoupled and coupled simulations. The first one specifies whether atmospheric data should be read from file or whether an external coupler will take care of the data provision. A second one specifies whether GETM needs to compute the air–sea fluxes during runtime or whether air–sea fluxes are already provided. In the uncoupled simulation, GETM calculates the air–sea fluxes according to the bulk parameterization of Kondo (1975) in terms of hourly meteorological CFSv2 data (Saha et al., 2014) read from file. During the one- and two-way coupled simulations the coupler will process the air–sea fluxes from ICON.

4.1.3 ICONGETM configuration

The exchanged data for the one- and two-way coupled simulations are listed in Table 1. In order to temporally resolve the fast feedbacks between atmosphere and ocean dynamics, the coupling time step is set to 3 min, the least common multiplier of the time steps from ICON and GETM. For the present setup, a good concurrent load-balancing with minimum idle/waiting times for ICON and GETM was empirically obtained through the log-file time information resulting in 864 and 384 processes, respectively.

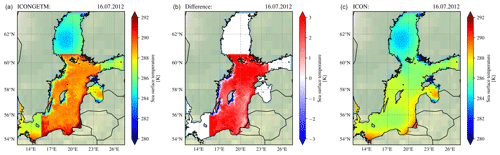

Figure 7 Daily mean sea surface temperature (SST) from the two-way coupled ICONGETM run (a) and the uncoupled/one-way coupled ICON run (c) as well as the difference ICONGETM minus ICON (b) for 16 July 2012. Outside the domain of simulated SST in the central Baltic Sea, the two-way coupled ICONGETM run also uses the prescribed ICON-internal SST.

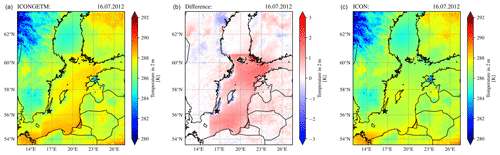

Figure 8Daily mean 2 m air temperature from the two-way coupled ICONGETM simulation (a) and the uncoupled/one-way coupled ICON simulation (c) as well as the difference ICONGETM minus ICON (b) for 16 July 2012. The black star south-east of the island of Öland marks the position of the vertical profiles shown in Fig. 12.

4.2 Results

4.2.1 Effects of interactive coupling on meteorology

In the uncoupled and one-way coupled simulations, ICON uses its prescribed internal sea surface temperature (SST), which does not show any pronounced temperature gradients due to oceanic eddies or coastal upwelling. Short-term and small-scale variations are only considered in the two-way coupled ICONGETM run (see Fig. 7), with the SST simulated and provided in high-resolution by GETM.

In July 2012, the simulated SST ranged around 289 K, with values below 282 K in the upwelling areas south of the coast of mainland Sweden and the islands of Öland and Gotland. The ICON-internal SST is between 0.5 and 2 K colder. The overall warmer surface of the Baltic Sea in the two-way coupled ICONGETM run causes a predominantly warmer lower troposphere. As a result, the daily mean 2 m temperature is about 0.5 to 2 K higher; cf. Fig. 8.

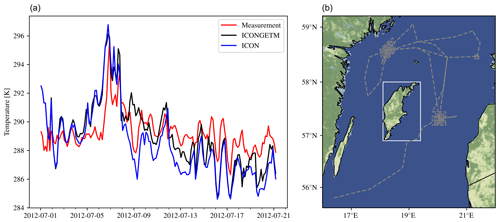

Figure 9 Air temperature at 29.1 m height in the central Baltic Sea over the period 1–21 July 2012 (a). Compared are model results from the two-way coupled ICONGETM and uncoupled/one-way coupled ICON simulations with measurements onboard the RV Meteor; ship track shown in (b). The white frame shows the island of Gotland, similar to Fig. 2.

Over the upwelling regions, however, where cold deep water has risen to the surface, only the two-way coupled ICONGETM run is able to reproduce the cooling in the 2 m temperatures by 1 to 2 K against the surroundings. Thus, the two-way coupled atmosphere–ocean simulation provides a more realistic representation of actual weather conditions. This is also reflected when comparing the model results with air temperature measured onboard the RV Meteor off the island of Gotland during the above-mentioned field campaign; see Fig. 9. In the uncoupled ICON simulation, the temperature is significantly underestimated by up to 2.5 K. In contrast, the values from the two-way coupled ICONGETM run are in a much better agreement with the measurements. The temporal development agrees also more with the observations (see Fig. 9), especially after 10 d of simulation. The average deviation between the modelled and measured temperature in the period from 1 till 21 July 2012 is decreased from 1.9 K for the uncoupled to 1.6 K for the two-way coupled simulation. This represents an improvement of about 15 %. On the other hand, the Pearson correlation coefficient is only slightly improved from 0.7 for the uncoupled to 0.72 for the two-way coupled simulation. Figure 9 indicates that the coupled ICONGETM system needs some spin-up time to adapt to the coupling before the improvement with respect to the uncoupled simulation becomes visible. Within the period from 10 till 21 July 2012, the average deviation between the modelled and measured temperature decreases from 2.0 K for the uncoupled to 1.5 K for the two-way coupled simulation. Thus, after the spin-up, the model results are significantly improved due to the coupling by 25 %. The removal of the spin-up period also increases the correlation coefficients to 0.73 for the uncoupled and to 0.75 for the two-way coupled simulation.

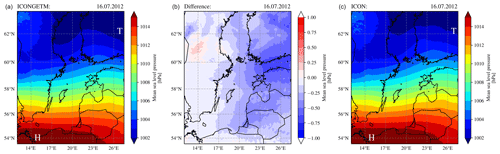

The interactive coupling between ICON and GETM also affects the synoptic-scale dynamic meteorology and leads to local effects in the atmospheric boundary layer. The warmer Baltic Sea and higher lower-troposphere temperatures in the two-way coupled ICONGETM simulation result in a mean sea level pressure that is up to 1 hPa lower over sea and adjacent land than in the uncoupled/one-way coupled ICON run; cf. Fig. 10.

Figure 10Daily mean sea level pressure from the two-way coupled ICONGETM simulation (a) and the uncoupled/one-way coupled ICON simulation (c) as well as the difference ICONGETM minus ICON (b) for 16 July 2012. “T” and “H” mark surface lows and highs, respectively.

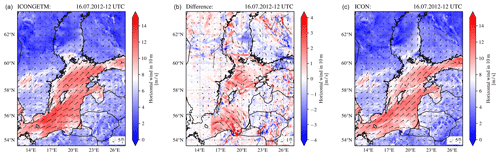

Therefore, the low-pressure area over the northern Baltic Sea, which causes the observed upwelling event, is even stronger in the two-way coupled simulation. The resulting higher pressure gradient between the Baltic low and the high over western Europe (cf. Fig. 10) leads to an increase in the near-surface wind field over a large part of the water surface, while locally wind velocity is reduced in the upwelling regions; see Fig. 11.

Figure 11Horizontal wind field at 10 m height from the two-way coupled ICONGETM simulation (a) and the uncoupled/one-way coupled ICON simulation (c) as well as the difference ICONGETM minus ICON (b) for 16 July 2012 12:00 UTC. Displayed are the wind vectors (reference vector at the bottom of the figure, units of m s−1) and the wind speed (coloured).

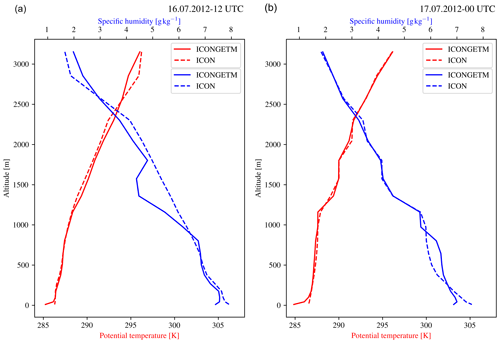

The weather conditions leading to the upwelling event are therefore more pronounced in the two-way coupled model run. The effects of the interactive atmosphere–ocean coupling on the boundary layer dynamics is most evident for the upwelling regions. Figure 12 shows vertical profiles of potential temperature and specific humidity over the upwelling area east of Öland; see star marker in Fig. 8.

Figure 12Atmospheric vertical profiles of potential temperature and specific humidity from the two-way coupled ICONGETM run and the uncoupled/one-way coupled ICON run for 16 (a) and 17 (b) July 2012 at 12:00 and 00:00 UTC, respectively. The profiles are obtained south-east of the island of Öland; see black star in Fig. 8.

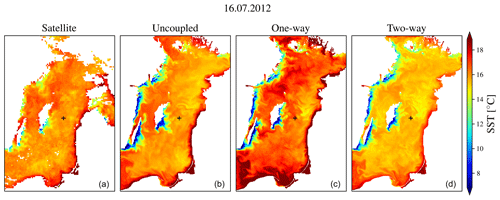

Figure 13 Daily mean sea surface temperature from satellites (a) and simulated by GETM in the uncoupled (b), one-way (c) and two-way (d) coupled simulation for 16 July 2012. The colour bar is identical to Fig. 7. The SST derived from satellite data was provided by the Federal Maritime and Hydrographic Agency of Germany (BSH). The black cross marks the position of station TF271 in the Eastern Gotland Basin.

Compared are the profiles for 16 July 2012 at noon and midnight, when the upwelling event was most pronounced in this area. As a result of the upwelling of cold deep water, the potential temperature is reduced by up to 1.5 to 2 K and atmospheric stratification is increased in the lowermost 50 to 150 m at noon and midnight, respectively. The two-way coupled ICONGETM run also shows slightly enhanced gradients in the potential temperature profile at the upper boundary layer. The more stable stratification has an effect on the boundary layer mixing, whereby humid air is more concentrated in the central to upper part of the boundary layer, between 900 and 2400 m in the left panel of Fig. 12. Due to reduced evaporation, it is less in the lowermost part: below 500 m in the left panel of Fig. 12. In addition, there is less momentum mixed downwards (not shown), which is a likely explanation for the locally reduced wind velocity in the upwelling regions at Sweden’s mainland coast and Öland and Gotland, shown by negative differences in the central panel of Fig. 11. In the coupled case, the temperature gradient between land and sea is increased in the area of the upwelling (cf. Fig. 8), with almost the same land temperatures but significantly lower SSTs, which locally increases the onshore wind component and thus weakens the overall more easterly wind in Fig. 11.

Hence, the evolution/stratification of the marine boundary layer is reproduced more realistically. Similarly, the boundary layer wind conditions, in particular over upwelling regions, are also better represented using two-way atmosphere–ocean coupling. The wind stress being coupled with the SST is largely related to atmospheric stability effects rather than to the change of the surface wind speed. This has also been shown recently in Fallmann et al. (2019).

4.2.2 Coupling effects in the ocean

In Fig. 13, the sea surface temperature (SST) from all model simulations are compared to satellite data.

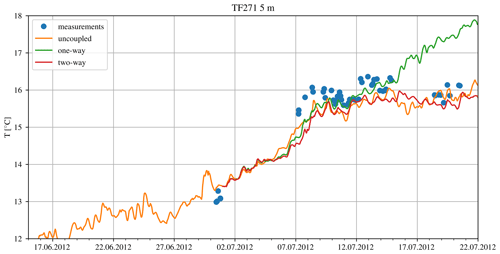

Due to the forcing with meteorological reanalysis data, the SST from the uncoupled simulation shows the best agreement with the satellite data and the most pronounced upwelling activity. The SST from the two-way coupled simulation is only slightly colder but is clearly overestimated in the one-way coupled simulation. This overestimation results from a continuous increase in near-surface temperature; see Fig. 14 for the evolution in the Eastern Gotland Basin.

Figure 14 Temperature in 5 m depth at station TF271 (see black cross in Fig. 13) from CTD measurements and the three model simulations. The one- and two-way coupled simulations are started at 1 July 2012, after the uncoupled spin-up period.

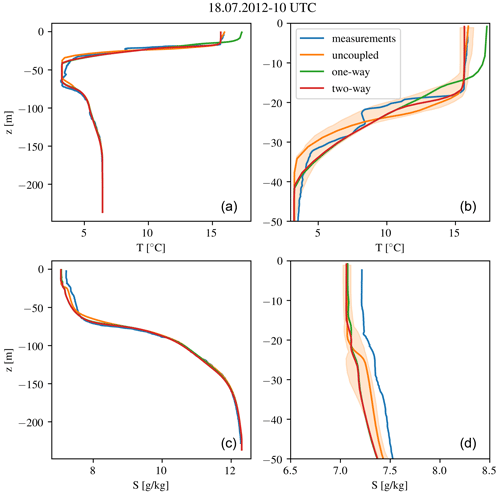

The evolution indicates that the surface heat flux (not shown) used in the one-way coupled GETM simulation is overestimated after 12 July 2012. For the one-way coupled simulation, the heat flux provided by ICON is calculated in terms of the too cold ICON-internal SST; see Fig. 7. In the uncoupled and two-way coupled simulations, the surface heat flux is calculated in terms of the SST from GETM, either within GETM or ICON, respectively. Henceforth, the fluxes are adapting more conveniently to the warming ocean. The temperature differences are not only confined to the sea surface; see Fig. 15 for vertical profiles of temperature and salinity in the Eastern Gotland Basin.

In the upper 20 m, the temperatures from the uncoupled and two-way coupled simulations are very similar and do excellently agree with the measurements; cf. Fig. 15b. The temperature from the one-way coupled simulation is approximately 1.5 K too warm. Within the thermocline, 20–40 m depth, the temperature profiles show a stronger difference. When these deviations are compared against the temporal variability of the temperature in an 8 d interval, it becomes clear that the differences can be attributed to the natural variability of the thermocline in the central Baltic Sea; see Fig. 15b. For a better visibility, only the variability of the uncoupled simulation is shown. A slightly different excitation timing of wind-driven processes, i.e. near inertial internal waves, is subsequently causing the differences between the analysed profiles.

The salinity differences between the simulations show deviations in the thermocline, in analogy to the temperature, but are also within the variability observed over an 8 d time period; see Fig. 15d. In contrast to the surface, the deep water below the thermocline is virtually not affected by the different atmospheric forcing; see Fig. 15a and c, which is due to the strong density gradients in the thermo- and halocline, inhibiting a significant turbulent transport of heat and salt on the timescales analysed here (Reissmann et al., 2009; Holtermann et al., 2020).

Figure 15 Temperature and salinity profiles at station TF271 from CTD measurements and the three model simulations. Panels (a) and (c) depict the whole water column; (b) and (d) are a zoom towards the sea surface. The light-orange-shaded area depicts the variability of the uncoupled simulation within an 8 d interval (14–21 July 2012).

ICONGETM supports the exchange of fluxes and state variables across the air–sea interface. The applied ESMF exchange grids guarantee a conservative flux exchange, except in the area where the land/sea masks of ICON and GETM do not match. The NUOPC-Mediator performs additional unit conversion and merging of precipitation fluxes; see Table 1. In ICONGETM v1.0, the air–sea fluxes are taken from the atmosphere model ICON. Their calculation in ICON is very complex and deeply nested in the model core.

Ideally all fluxes, air–sea and land fluxes, should be calculated directly on one unique ESMF exchange grid in the mediator and applied as boundary conditions to the corresponding individual models (Best et al., 2004). On the exchange grid, a unique land/sea mask of the coupled system can be defined. If the land/sea mask of the exchange grid is adjusted to the mask of the ocean model (Balaji et al., 2006), the associated sea surface areas will be identical. In this case, the conservative interpolation between the exchange and model grids finally offers a fully conservative flux exchange between the atmosphere and ocean, despite originally non-matching land/sea masks in the individual models. Moreover, physical consistency will be ensured in the sense that only air–sea fluxes, i.e. fluxes influenced by the sea surface temperature, are provided to the ocean. If the sea surface area on the exchange grid does not cover the one of the ocean model, creep, nearest-neighbour or other extrapolation methods are required to avoid the application of land fluxes to the ocean (see, e.g., Kara et al., 2007; Chen et al., 2010; Turuncoglu, 2019). In any case, fluxes provided by the mediator can be applied in the atmosphere and ocean over the same period until new fluxes are calculated in the next coupling time step. The flux calculation on the ESMF exchange grid in a central mediator component also offers the most straight-forward extension of the coupled system by models for, e.g., waves and sea ice. One drawback of the flux calculation outside the individual models can be stability issues for explicit time-stepping schemes or complex coupling implementations for implicit time-stepping schemes.

Alternatively, a conservative exchange of air–sea fluxes calculated in the atmosphere model is possible if the mask of the exchange grid can be emulated in the atmosphere model due to mixed land/ocean cells. For this, the water fraction in each cell must be obtained by conservative interpolation of the sea surface area from the ocean model via the ESMF exchange grid.

In its present state, ICON supports neither the described ideal modular coupling nor the alternative. Both approaches require non-trivial modifications to the ICON code. It is expected that they will become available in future releases of ICON, such that the full potential of ICONGETM for a flexible conservative flux exchange via the ESMF exchange grid can be exploited.

The two-way coupled simulation presented in the previous section was conducted with a coupling time step of 3 min and showed an overhead of approximately 15 % compared to the uncoupled simulation. The majority is spent for the initialization. This demonstrates the excellent performance of the developed model system based on ESMF/NUOPC and its potential for future high-resolution coupled atmosphere–ocean simulations with fast feedback integration.

With ICONGETM, consisting of the state-of-the-art operational atmosphere model ICON and the established coastal ocean model GETM, a new model system especially suited for high-resolution studies has been developed. The two-way coupled model system is driven by latest NUOPC coupling technology. The data exchange between the unstructured grid of ICON and the structured grid of GETM is carried out via a central mediator component. In contrast to other model systems with interpolation directly between model grids, the new implementation and use of ESMF exchange grids in the mediator component have been described in detail. The added value and the potential of ESMF exchange grids for conservative interpolation, flux calculations and coherent land/sea masks have been discussed. The functioning and performance of ICONGETM have been demonstrated. Thanks to NUOPC, future extensions of the model system by wave or sea ice models require only minimal implementational effort.

| ADCIRC | ADvanced CIRCulation |

| COAMPS | Coupled Ocean/Atmosphere Mesoscale Prediction System |

| COAWST | Coupled Ocean-Atmosphere-Wave-Sediment Transport Modeling System |

| COSMO | Consortium for Small-scale Modelling |

| ESMF | Earth System Modeling Framework; see also https://earthsystemmodeling.org/ (last access: 22 June 2021) |

| ESPS | Earth System Prediction Suite; see also https://www.icams-portal.gov/resources/espc/esps/table.htm (last access: 22 June 2021) |

| FABM | Framework for Aquatic Biogeochemical Models; see also https://fabm.net (last access: 22 June 2021) |

| GETM | General Estuarine Transport Model; see also https://getm.io-warnemuende.de(last access: 22 June 2021) |

| GOTM | General Ocean Turbulence Model; see also https://gotm.net (last access: 22 June 2021) |

| ICON | ICOsahedral Non-hydrostatic modelling framework; see also https://code.mpimet.mpg.de/projects/iconpublic (last access: 22 June 2021) |

| ICON-ESM | ICON Earth system model |

| ICONGETM | ICON coupled with GETM |

| MCT | Model Coupling Toolkit |

| MetUM | Met Office Unified Model |

| MITgcm | MIT General Circulation Model |

| NCOM | Navy Coastal Ocean Model |

| NEMO | Nucleus for European Modelling of the Ocean |

| NEMS | NOAA Environmental Modeling System; see also https://www.emc.ncep.noaa.gov/emc/pages/infrastructure/nems.php (last access: 22 June 2021) |

| NUOPC | National Unified Operational Prediction Capability; see also https://earthsystemmodeling.org/nuopc/ (last access: 22 June 2021) |

| OASIS | Ocean Atmosphere Sea Ice Soil |

| RegESM | Regional Earth System Model |

| ROMS | Regional Ocean Modeling System |

| SKRIPS | Scripps–KAUST Regional Integrated Prediction System |

| SWAN | Simulating WAves Nearshore |

| UKC | UK environmental prediction system |

| WRF | Weather Research and Forecasting model |

| YAC | Yet Another Coupler |

The source code of ICONGETM is available from https://gitlab.com/modellers-tropos/icongetm.git (last access: 22 June 2021) under GPL license. A frozen version of the code and run scripts as used in this paper is archived on Zenodo (https://doi.org/10.5281/zenodo.4516568, Bauer and Klingbeil, 2021). The modified source code of ICON is also archived on Zenodo (https://doi.org/10.5281/zenodo.4432739, Bauer, 2021) and available if a valid Software License Agreement, obtained via https://code.mpimet.mpg.de/projects/icon-license (last access: 22 June 2021), is presented to the first author. The source code of GETM was not modified and is available from https://sourceforge.net/p/getm/code/ci/iow/tree/ (last access: 22 June 2021) under GPL license. The version used for the simulations in this paper is available from https://doi.org/10.5281/zenodo.5017784 (Klingbeil, 2020).

The high-resolution model output data have a size of several terabytes and are too large for public storage. Parts of reasonable size can be extracted and provided on request. The data used from the operational Integrated Forecasting System (IFS) at ECMWF are available at https://www.ecmwf.int/en/forecasts/dataset/atmospheric-model-high-resolution-10-day-forecast (ECMWF, 2018). NCEP Climate Forecast System data (CFSv2) are available from https://doi.org/10.5065/D69021ZF (Saha et al., 2012).

The code has been designed, developed and implemented by TPB in cooperation with KK. The demonstration setup was provided by TPB and BH for ICON and PH and KK for GETM. The coupling configuration has been prepared by TPB and KK and discussed with all authors. BH and TPB evaluated the meteorological results of the simulation, i.e. ICON. PH and KK evaluated the simulation results on the ocean side, i.e. GETM. HR advised and discussed the flux exchange as well as the coupling strategy. OK advised the code development and supported the implementation of mathematical utility routines. All authors contributed to this paper in the sections corresponding to their part during the workflow.

The authors declare that they have no conflict of interest.

The authors would like to acknowledge Fei Lui, Robert Oehmke, Rocky Dunlap and Gerhard Theurich from the ESMF support, who replied to every request and continuously improved the ESMF library. Knut Klingbeil is grateful for the inspiring collaboration and discussions with Carsten Lemmen. We thank the DWD for support with the ICON model setup. The authors further acknowledge the computing time granted by the German Climate Computing Centre (Deutsches Klimarechenzentrum, DKRZ), by the North German Supercomputing Alliance (HLRN) and by the Forschungszentrum Jülich at the supercomputer JUQUEEN (JARA-HPC). The authors appreciate the constructive comments from Sophie Valcke and one anonymous referee.

This work is the outcome of the model coupling initiative LOCUS (Land-ocean interaction mediated by coastal upwelling and sea breeze) funded by the Leibniz institutes TROPOS and IOW.

The authors gratefully acknowledge additional financial support by the German Research Foundation for the Collaborative Research Center TRR181 on Energy Transfers in Atmosphere and Ocean (Project 274762653) and the funding of Peter Holtermann by grant HO 5891/1-1 as well as the project MOSSCO (Modular System for Shelves and Coasts; FKZ 03F0740B, funded by the German Federal Ministry of Research and Education).

The publication of this article was funded by the Open Access Fund of the Leibniz Association.

This paper was edited by Xiaomeng Huang and reviewed by Sophie Valcke and one anonymous referee.

Balaji, V., Anderson, J., Held, I., Winton, M., Durachta, J., Malyshev, S., and Stouffer, R. J.: The Exchange Grid: A mechanism for data exchange between Earth System components on independent grids, in: Parallel Computational Fluid Dynamics 2005, Elsevier, 179–186, https://doi.org/10.1016/B978-044452206-1/50021-5, 2006. a, b

Barron, C. N., Kara, A. B., Martin, P. J., Rhodes, R. C., and Smedstad, L. F.: Formulation, implementation and examination of vertical coordinate choices in the Global Navy Coastal Ocean Model (NCOM), Ocean Model., 11, 347–375, https://doi.org/10.1016/j.ocemod.2005.01.004, 2006. a

Bauer, T. P.: The atmosphere model ICON as used in the two-way coupled atmosphere-ocean model ICONGETM (Version v2.5), Geoscientific Model Development, Zenodo, https://doi.org/10.5281/zenodo.4432739, 2021. a

Bauer, T. P. and Klingbeil, K.: ICONGETM v1.0 – Flexible NUOPC-driven two-way coupling via ESMF exchange grids between the unstructured-grid atmosphere model ICON and the structured-grid coastal ocean model GETM (Version v1.0), Geoscientific Model Development, Zenodo, https://doi.org/10.5281/zenodo.4516568, 2021. a

Bechtold, P., Köhler, M., Jung, T., Doblas-Reyes, F., Leutbecher, M., Rodwell, M. J., Vitart, F., and Balsamo, G.: Advances in simulating atmospheric variability with the ECMWF model: From synoptic to decadal time-scales, Q. J. Roy. Meteor. Soc., 134, 1337–1351, https://doi.org/10.1002/qj.289, 2008. a

Best, M. J., Beljaars, A., Polcher, J., and Viterbo, P.: A Proposed Structure for Coupling Tiled Surfaces with the Planetary Boundary Layer, J. Hydrometeorol., 5, 1271–1278, https://doi.org/10.1175/JHM-382.1, 2004. a

Booij, N., Ris, R. C., and Holthuijsen, L. H.: A third-generation wave model for coastal regions: 1. Model description and validation, J. Geophys. Res.-Oceans, 104, 7649–7666, https://doi.org/10.1029/98JC02622, 1999. a

Borchert, S., Zhou, G., Baldauf, M., Schmidt, H., Zängl, G., and Reinert, D.: The upper-atmosphere extension of the ICON general circulation model (version: ua-icon-1.0), Geosci. Model Dev., 12, 3541–3569, https://doi.org/10.5194/gmd-12-3541-2019, 2019. a

Brown, A., Milton, S., Cullen, M., Golding, B., Mitchell, J., and Shelly, A.: Unified Modeling and Prediction of Weather and Climate: A 25-Year Journey, B. Am. Meteorol. Soc., 93, 1865–1877, https://doi.org/10.1175/BAMS-D-12-00018.1, 2012. a

Burchard, H. and Bolding, K.: GETM – a General Estuarine Transport Model. Scientific Documentation, Tech. rep., European Commission, available at: http://publications.jrc.ec.europa.eu/repository/handle/JRC23237 (last access: 22 June 2021), 2002. a, b

Carniel, S., Benetazzo, A., Bonaldo, D., Falcieri, F. M., Miglietta, M. M., Ricchi, A., and Sclavo, M.: Scratching beneath the surface while coupling atmosphere, ocean and waves: Analysis of a dense water formation event, Ocean Model., 101, 101–112, https://doi.org/10.1016/j.ocemod.2016.03.007, 2016. a

Chelton, D. B. and Xie, S.-P.: Coupled Ocean-Atmosphere Interaction at Oceanic Mesoscales, Oceanography, 23, 52–69, https://doi.org/10.5670/oceanog.2010.05, 2010. a

Chen, S., Campbell, T. J., Jin, H., Gaberšek, S., Hodur, R. M., and Martin, P.: Effect of Two-Way Air–Sea Coupling in High and Low Wind Speed Regimes, Mon. Weather Rev., 138, 3579–3602, https://doi.org/10.1175/2009MWR3119.1, 2010. a

Chrysagi, E., Umlauf, L., Holtermann, P. L., Klingbeil, K., and Burchard, H.: High‐resolution simulations of submesoscale processes in the Baltic Sea: The role of storm events, J. Geophys. Res.-Oceans, 126, e2020JC016411, https://doi.org/10.1029/2020JC016411, 2021. a

Craig, A., Valcke, S., and Coquart, L.: Development and performance of a new version of the OASIS coupler, OASIS3-MCT_3.0, Geosci. Model Dev., 10, 3297–3308, https://doi.org/10.5194/gmd-10-3297-2017, 2017. a

Crueger, T., Giorgetta, M. A., Brokopf, R., Esch, M., Fiedler, S., Hohenegger, C., Kornblueh, L., Mauritsen, T., Nam, C., Naumann, A. K., Peters, K., Rast, S., Roeckner, E., Sakradzija, M., Schmidt, H., Vial, J., Vogel, R., and Stevens, B.: ICON-A, The Atmosphere Component of the ICON Earth System Model: II. Model Evaluation, J. Adv. Model. Earth Sy., 10, 1638–1662, https://doi.org/10.1029/2017MS001233, 2018. a, b

Cullen, M. J.: The unified forecast/climate model, Meteorol. Mag., 122, 81–94, 1993. a

Dipankar, A., Stevens, B., Heinze, R., Moseley, C., Zängl, G., Giorgetta, M. A., and Brdar, S.: Large eddy simulation using the general circulation model ICON, J. Adv. Model. Earth Sy., 7, 963–986, https://doi.org/10.1002/2015MS000431, 2015. a, b

Doms, G., Förstner, J., Heise, E., Herzog, H.-J., Mironov, D., Raschendorfer, M., Reinhardt, T., Ritter, B., Schrodin, R., Schulz, J.-P., and Vogel, G.: A description of the Nonhydrostatic Regional COSMOS Model, Part II: Physical parameterization, Tech. rep., Deutscher Wetterdienst, Offenbach, https://doi.org/10.5676/DWD_pub/nwv/cosmo-doc_5.00_II, 2013. a

Donlon, C. J., Martin, M., Stark, J., Roberts-Jones, J., Fiedler, E., and Wimmer, W.: The Operational Sea Surface Temperature and Sea Ice Analysis (OSTIA) system, Remote Sens. Environ., 116, 140–158, https://doi.org/10.1016/j.rse.2010.10.017, 2012.

DWD: Weather Forecast System, available at: https://www.dwd.de/EN/research/weatherforecasting/num_modelling/01_num_weather_prediction_modells/icon_description.html, last access: 22 June 2021. a

ECMWF: Atmospheric Model high resolution 10-day forecast (Set I – HRES), available at: https://www.ecmwf.int/en/forecasts/dataset/atmospheric-model-high-resolution-10-day-forecast, last access: 21 December 2018. a

Fallmann, J., Lewis, H. W., Castillo, J. M., Arnold, A., and Ramsdale, S.: Impact of sea surface temperature on stratiform cloud formation over the North Sea, Geophys. Res. Lett., 44, 4296–4303, https://doi.org/10.1002/2017GL073105, 2017. a

Fallmann, J., Lewis, H. W., Sanchez, J. C., and Lock, A.: Impact of high‐resolution ocean–atmosphere coupling on fog formation over the North Sea, Q. J. Roy. Meteor. Soc., 145, 1180–1201, https://doi.org/10.1002/qj.3488, 2019. a, b, c

Gassmann, A. and Herzog, H.-J.: Towards a consistent numerical compressible non-hydrostatic model using generalized Hamiltonian tools, Q. J. Roy. Meteor. Soc., 134, 1597–1613, https://doi.org/10.1002/qj.297, 2008. a

Giorgetta, M. A., Brokopf, R., Crueger, T., Esch, M., Fiedler, S., Helmert, J., Hohenegger, C., Kornblueh, L., Köhler, M., Manzini, E., Mauritsen, T., Nam, C., Raddatz, T., Rast, S., Reinert, D., Sakradzija, M., Schmidt, H., Schneck, R., Schnur, R., Silvers, L., Wan, H., Zängl, G., and Stevens, B.: ICON-A, the Atmosphere Component of the ICON Earth System Model: I. Model Description, J. Adv. Model. Earth Sy., 10, 1613–1637, https://doi.org/10.1029/2017MS001242, 2018. a, b

Gräwe, U., Holtermann, P. L., Klingbeil, K., and Burchard, H.: Advantages of vertically adaptive coordinates in numerical models of stratified shelf seas, Ocean Model., 92, 56–68, https://doi.org/10.1016/j.ocemod.2015.05.008, 2015. a

Gräwe, U., Klingbeil, K., Kelln, J., and Dangendorf, S.: Decomposing Mean Sea Level Rise in a Semi-Enclosed Basin, the Baltic Sea, J. Climate, 32, 3089–3108, https://doi.org/10.1175/JCLI-D-18-0174.1, 2019. a, b

Hanke, M., Redler, R., Holfeld, T., and Yastremsky, M.: YAC 1.2.0: new aspects for coupling software in Earth system modelling, Geosci. Model Dev., 9, 2755–2769, https://doi.org/10.5194/gmd-9-2755-2016, 2016. a, b

Heinze, R., Dipankar, A., Henken, C. C., Moseley, C., Sourdeval, O., Trömel, S., Xie, X., Adamidis, P., Ament, F., Baars, H., Barthlott, C., Behrendt, A., Blahak, U., Bley, S., Brdar, S., Brueck, M., Crewell, S., Deneke, H., Di Girolamo, P., Evaristo, R., Fischer, J., Frank, C., Friederichs, P., Göcke, T., Gorges, K., Hande, L., Hanke, M., Hansen, A., Hege, H.-c., Hoose, C., Jahns, T., Kalthoff, N., Klocke, D., Kneifel, S., Knippertz, P., Kuhn, A., van Laar, T., Macke, A., Maurer, V., Mayer, B., Meyer, C. I., Muppa, S. K., Neggers, R. A. J., Orlandi, E., Pantillon, F., Pospichal, B., Röber, N., Scheck, L., Seifert, A., Seifert, P., Senf, F., Siligam, P., Simmer, C., Steinke, S., Stevens, B., Wapler, K., Weniger, M., Wulfmeyer, V., Zängl, G., Zhang, D., and Quaas, J.: Large-eddy simulations over Germany using ICON: a comprehensive evaluation, Q. J. Roy. Meteor. Soc., 143, 69–100, https://doi.org/10.1002/qj.2947, 2017. a, b

Hill, C., DeLuca, C., Balaji, V., Suarez, M., and Da Silva, A. M.: The architecture of the earth system modeling framework, Comput. Sci. Eng., 6, 18–28, https://doi.org/10.1109/MCISE.2004.1255817, 2004. a

Hodur, R. M.: The Naval Research Laboratory's Coupled Ocean/Atmosphere Mesoscale Prediction System (COAMPS), Mon. Weather Rev., 125, 1414–1430, https://doi.org/10.1175/1520-0493(1997)125<1414:TNRLSC>2.0.CO;2, 1997. a

Hofmeister, R., Burchard, H., and Beckers, J.-M.: Non-uniform adaptive vertical grids for 3D numerical ocean models, Ocean Model., 33, 70–86, https://doi.org/10.1016/j.ocemod.2009.12.003, 2010. a

Holtermann, P. L., Burchard, H., Gräwe, U., Klingbeil, K., and Umlauf, L.: Deep-water dynamics and boundary mixing in a nontidal stratified basin: A modeling study of the Baltic Sea, J. Geophys. Res.-Oceans, 119, 1465–1487, https://doi.org/10.1002/2013JC009483, 2014. a, b

Holtermann, P. L., Prien, R., Naumann, M., and Umlauf, L.: Interleaving of oxygenized intrusions into the Baltic Sea redoxcline, Limnol. Oceanogr., 65, 482–503, https://doi.org/10.1002/lno.11317, 2020. a

Kara, A. B., Wallcraft, A. J., and Hurlburt, H. E.: A Correction for Land Contamination of Atmospheric Variables near Land–Sea Boundaries, J. Phys. Oceanogr., 37, 803–818, https://doi.org/10.1175/JPO2984.1, 2007. a

Klingbeil, K.: Source code for the coastal ocean model GETM (iow branch) (Version 2.5.0-0.1), Zenodo [code], https://doi.org/10.5281/zenodo.5017784, 2020. a

Klingbeil, K. and Burchard, H.: Implementation of a direct nonhydrostatic pressure gradient discretisation into a layered ocean model, Ocean Model., 65, 64–77, https://doi.org/10.1016/j.ocemod.2013.02.002, 2013. a

Klingbeil, K., Mohammadi-Aragh, M., Gräwe, U., and Burchard, H.: Quantification of spurious dissipation and mixing – Discrete variance decay in a Finite-Volume framework, Ocean Model., 81, 49–64, https://doi.org/10.1016/j.ocemod.2014.06.001, 2014. a

Klingbeil, K., Lemarié, F., Debreu, L., and Burchard, H.: The numerics of hydrostatic structured-grid coastal ocean models: State of the art and future perspectives, Ocean Model., 125, 80–105, https://doi.org/10.1016/j.ocemod.2018.01.007, 2018. a

Kondo, J.: Air-sea bulk transfer coefficients in diabatic conditions, Bound.-Lay. Meteorol., 9, 91–112, https://doi.org/10.1007/BF00232256, 1975. a

Lange, X., Klingbeil, K., and Burchard, H.: Inversions of Estuarine Circulation Are Frequent in a Weakly Tidal Estuary With Variable Wind Forcing and Seaward Salinity Fluctuations, J. Geophys. Res.-Oceans, 125, e2019JC015789, https://doi.org/10.1029/2019JC015789, 2020. a

Larson, J., Jacob, R., and Ong, E.: The Model Coupling Toolkit: A New Fortran90 Toolkit for Building Multiphysics Parallel Coupled Models, The Int. J. High Perform. C., 19, 277–292, https://doi.org/10.1177/1094342005056115, 2005. a

Lemmen, C., Hofmeister, R., Klingbeil, K., Nasermoaddeli, M. H., Kerimoglu, O., Burchard, H., Kösters, F., and Wirtz, K. W.: Modular System for Shelves and Coasts (MOSSCO v1.0) – a flexible and multi-component framework for coupled coastal ocean ecosystem modelling, Geosci. Model Dev., 11, 915–935, https://doi.org/10.5194/gmd-11-915-2018, 2018. a

Lewis, H. W., Castillo Sanchez, J. M., Graham, J., Saulter, A., Bornemann, J., Arnold, A., Fallmann, J., Harris, C., Pearson, D., Ramsdale, S., Martínez-de la Torre, A., Bricheno, L., Blyth, E., Bell, V. A., Davies, H., Marthews, T. R., O'Neill, C., Rumbold, H., O'Dea, E., Brereton, A., Guihou, K., Hines, A., Butenschon, M., Dadson, S. J., Palmer, T., Holt, J., Reynard, N., Best, M., Edwards, J., and Siddorn, J.: The UKC2 regional coupled environmental prediction system, Geosci. Model Dev., 11, 1–42, https://doi.org/10.5194/gmd-11-1-2018, 2018. a

Lewis, H. W., Castillo Sanchez, J. M., Arnold, A., Fallmann, J., Saulter, A., Graham, J., Bush, M., Siddorn, J., Palmer, T., Lock, A., Edwards, J., Bricheno, L., Martínez-de la Torre, A., and Clark, J.: The UKC3 regional coupled environmental prediction system, Geosci. Model Dev., 12, 2357–2400, https://doi.org/10.5194/gmd-12-2357-2019, 2019a. a

Lewis, H. W., Siddorn, J., Castillo Sanchez, J. M., Petch, J., Edwards, J. M., and Smyth, T.: Evaluating the impact of atmospheric forcing and air–sea coupling on near-coastal regional ocean prediction, Ocean Sci., 15, 761–778, https://doi.org/10.5194/os-15-761-2019, 2019b. a

Linardakis, L., Reinert, D., and Gassmann, A.: ICON Grid Documentation, Tech. rep., DKRZ, Hamburg, available at: https://www.dkrz.de/SciVis/hd-cp-2/en-icon_grid.pdf?lang=en (last access: 22 June 2021), 2011. a

Luettich Jr., R. A., Westerink, J. J., and Scheffner, N. W.: ADCIRC: An Advanced Three-Dimensional Circulation Model for Shelves, Coasts, and Estuaries. Report 1. Theory and Methodology of ADCIRC-2DDI and ADCIRC-3DL., Tech. rep., Coastal Engineering Research Center, Vicksburg, MS, available at: https://apps.dtic.mil/docs/citations/ADA261608 (last access: 22 June 2021), 1992. a

Madec, G., Bourdallé-Badie, R., Bouttier, P.-A., Bricaud, C., Bruciaferri, D., Calvert, D., Chanut, J., Clementi, E., Coward, A., Delrosso, D., Ethé, C., Flavoni, S., Graham, T., Harle, J., Iovino, D., Lea, D., Lévy, C., Lovato, T., Martin, N., Masson, S., Mocavero, S., Paul, J., Rousset, C., Storkey, D., Storto, A., and Vancoppenolle, M.: NEMO ocean engine (Version v3.6-patch), Tech. rep., Pôle De Modélisation De L'institut Pierre-simon Laplace (IPSL), Zenodo, https://doi.org/10.5281/ZENODO.3248739, 2017. a

Marshall, J., Adcroft, A. J., Hill, C., Perelman, L., and Heisey, C.: A finite-volume, incompressible Navier Stokes model for studies of the ocean on parallel computers, J. Geophys. Res.-Oceans, 102, 5753–5766, https://doi.org/10.1029/96JC02775, 1997. a

Mlawer, E. J., Taubman, S. J., Brown, P. D., Iacono, M. J., and Clough, S. A.: Radiative transfer for inhomogeneous atmospheres: RRTM, a validated correlated-k model for the longwave, J. Geophys. Res.-Atmos., 102, 16663–16682, https://doi.org/10.1029/97JD00237, 1997. a

Moghimi, S., Van der Westhuysen, A., Abdolali, A., Myers, E., Vinogradov, S., Ma, Z., Liu, F., Mehra, A., and Kurkowski, N.: Development of an ESMF Based Flexible Coupling Application of ADCIRC and WAVEWATCH III for High Fidelity Coastal Inundation Studies, J. Mar. Sci. Eng., 8, 308, https://doi.org/10.3390/jmse8050308, 2020. a

Perlin, N., Skyllingstad, E. D., Samelson, R. M., and Barbour, P. L.: Numerical Simulation of Air–Sea Coupling during Coastal Upwelling, J. Phys. Oceanogr., 37, 2081–2093, https://doi.org/10.1175/JPO3104.1, 2007. a

Pullen, J., Doyle, J. D., and Signell, R. P.: Two-Way Air–Sea Coupling: A Study of the Adriatic, Mon. Weather Rev., 134, 1465–1483, https://doi.org/10.1175/MWR3137.1, 2006. a

Pullen, J., Doyle, J. D., Haack, T., Dorman, C., Signell, R. P., and Lee, C. M.: Bora event variability and the role of air-sea feedback, J. Geophys. Res., 112, C03S18, https://doi.org/10.1029/2006JC003726, 2007. a

Pullen, J., Caldeira, R., Doyle, J. D., May, P., and Tomé, R.: Modeling the air‐sea feedback system of Madeira Island, J. Adv. Model. Earth Sy., 9, 1641–1664, https://doi.org/10.1002/2016MS000861, 2017. a

Reinert, D., Prill, F., Frank, H., Denhard, M., and Zängl, G.: Database Reference Manual for ICON and ICON-EPS, Tech. rep., DWD, Offenbach, https://doi.org/10.5676/DWD_pub/nwv/icon_1.2.12, 2020. a, b

Reissmann, J. H., Burchard, H., Feistel, R., Hagen, E., Lass, H. U., Mohrholz, V., Nausch, G., Umlauf, L., and Wieczorek, G.: Vertical mixing in the Baltic Sea and consequences for eutrophication – A review, Prog. Oceanogr., 82, 47–80, https://doi.org/10.1016/j.pocean.2007.10.004, 2009. a

Renault, L., Chiggiato, J., Warner, J. C., Gomez, M., Vizoso, G., and Tintoré, J.: Coupled atmosphere-ocean-wave simulations of a storm event over the Gulf of Lion and Balearic Sea, J. Geophys. Res.-Oceans, 117, C09019, https://doi.org/10.1029/2012JC007924, 2012. a

Saha, S., Moorthi, S., Wu, X., Wang, J., Nadiga, S., Tripp, P., Behringer, D., Hou, Y., Chuang, H., Iredell, M., Ek, M., Meng, J., Yang, R., Mendez, M. P., van den Dool, H., Zhang, Q., Wang, W., Chen, M., and Becker, E.: NCEP Climate Forecast System Version 2 (CFSv2) Monthly Products, Research Data Archive at the National Center for Atmospheric Research, Computational and Information Systems Laboratory, https://doi.org/10.5065/D69021ZF, 2012. a

Saha, S., Moorthi, S., Wu, X., Wang, J., Nadiga, S., Tripp, P., Behringer, D., Hou, Y.-T., Chuang, H.-y., Iredell, M., Ek, M., Meng, J., Yang, R., Mendez, M. P., van den Dool, H., Zhang, Q., Wang, W., Chen, M., and Becker, E.: The NCEP Climate Forecast System Version 2, J. Climate, 27, 2185–2208, https://doi.org/10.1175/JCLI-D-12-00823.1, 2014. a

Shao, M., Ortiz‐Suslow, D. G., Haus, B. K., Lund, B., Williams, N. J., Özgökmen, T. M., Laxague, N. J. M., Horstmann, J., and Klymak, J. M.: The Variability of Winds and Fluxes Observed Near Submesoscale Fronts, J. Geophys. Res.-Oceans, 124, 7756–7780, https://doi.org/10.1029/2019JC015236, 2019. a

Shchepetkin, A. F. and McWilliams, J. C.: The regional oceanic modeling system (ROMS): a split-explicit, free-surface, topography-following-coordinate oceanic model, Ocean Model., 9, 347–404, https://doi.org/10.1016/j.ocemod.2004.08.002, 2005. a

Skamarock, W. C., Klemp, J. B., Dudhia, J., Gill, D. O., Barker, D. M., Wang, W., and Powers, J. G.: A Description of the Advanced Research WRF Version 2, Tech. rep., UCAR, https://doi.org/10.5065/D6DZ069T, 2005. a

Small, R. J., DeSzoeke, S., Xie, S.-P., O'Neill, L., Seo, H., Song, Q., Cornillon, P., Spall, M., and Minobe, S.: Air–sea interaction over ocean fronts and eddies, Dynam. Atmos. Oceans, 45, 274–319, https://doi.org/10.1016/j.dynatmoce.2008.01.001, 2008. a

Small, R. J., Campbell, T., Teixeira, J., Carniel, S., Smith, T. A., Dykes, J., Chen, S., and Allard, R.: Air–Sea Interaction in the Ligurian Sea: Assessment of a Coupled Ocean–Atmosphere Model Using In Situ Data from LASIE07, Mon. Weather Rev., 139, 1785–1808, https://doi.org/10.1175/2010MWR3431.1, 2011. a

Sun, R., Subramanian, A. C., Miller, A. J., Mazloff, M. R., Hoteit, I., and Cornuelle, B. D.: SKRIPS v1.0: a regional coupled ocean–atmosphere modeling framework (MITgcm–WRF) using ESMF/NUOPC, description and preliminary results for the Red Sea, Geosci. Model Dev., 12, 4221–4244, https://doi.org/10.5194/gmd-12-4221-2019, 2019. a

Theurich, G., DeLuca, C., Campbell, T., Liu, F., Saint, K., Vertenstein, M., Chen, J., Oehmke, R., Doyle, J. D., Whitcomb, T., Wallcraft, A. J., Iredell, M., Black, T., Da Silva, A. M., Clune, T., Ferraro, R., Li, P., Kelley, M., Aleinov, I., Balaji, V., Zadeh, N., Jacob, R., Kirtman, B., Giraldo, F., McCarren, D., Sandgathe, S., Peckham, S., and Dunlap, R.: The earth system prediction suite: Toward a coordinated U.S. modeling capability, B. Am. Meteorol. Soc., 97, 1229–1247, https://doi.org/10.1175/BAMS-D-14-00164.1, 2016. a, b

Turuncoglu, U. U.: Toward modular in situ visualization in Earth system models: the regional modeling system RegESM 1.1, Geosci. Model Dev., 12, 233–259, https://doi.org/10.5194/gmd-12-233-2019, 2019. a, b

Ullrich, P. A., Jablonowski, C., Kent, J., Lauritzen, P. H., Nair, R., Reed, K. A., Zarzycki, C. M., Hall, D. M., Dazlich, D., Heikes, R., Konor, C., Randall, D., Dubos, T., Meurdesoif, Y., Chen, X., Harris, L., Kühnlein, C., Lee, V., Qaddouri, A., Girard, C., Giorgetta, M., Reinert, D., Klemp, J., Park, S.-H., Skamarock, W., Miura, H., Ohno, T., Yoshida, R., Walko, R., Reinecke, A., and Viner, K.: DCMIP2016: a review of non-hydrostatic dynamical core design and intercomparison of participating models, Geosci. Model Dev., 10, 4477–4509, https://doi.org/10.5194/gmd-10-4477-2017, 2017. a

Valcke, S., Balaji, V., Craig, A., DeLuca, C., Dunlap, R., Ford, R. W., Jacob, R., Larson, J., O'Kuinghttons, R., Riley, G. D., and Vertenstein, M.: Coupling technologies for Earth System Modelling, Geosci. Model Dev., 5, 1589–1596, https://doi.org/10.5194/gmd-5-1589-2012, 2012. a

Warner, J. C., Armstrong, B., He, R., and Zambon, J. B.: Development of a Coupled Ocean–Atmosphere–Wave–Sediment Transport (COAWST) Modeling System, Ocean Model., 35, 230–244, https://doi.org/10.1016/j.ocemod.2010.07.010, 2010. a

WW3DG (The WAVEWATCH III Development Group): User Manual and System Documentation of WAVEWATCH III version 6.07, Tech. Rep. Tech. Note 33, NOAA/NWS/NCEP/MMAB, available at: https://github.com/NOAA-EMC/WW3/wiki/files/manual.pdf (last access: 22 June 2021), 2019. a

Zängl, G., Reinert, D., Rípodas, P., and Baldauf, M.: The ICON (ICOsahedral Non-hydrostatic) modelling framework of DWD and MPI-M: Description of the non-hydrostatic dynamical core, Q. J. Roy. Meteor. Soc., 141, 563–579, https://doi.org/10.1002/qj.2378, 2015. a, b, c, d, e, f, g

- Abstract

- Introduction

- The coupled model system ICONGETM

- Data exchange between ICON and GETM

- Demonstration

- Discussion

- Conclusions

- Appendix A: List of model and software abbreviations

- Code availability

- Data availability

- Author contributions

- Competing interests

- Acknowledgements

- Financial support

- Review statement

- References

- Abstract

- Introduction

- The coupled model system ICONGETM

- Data exchange between ICON and GETM

- Demonstration

- Discussion

- Conclusions

- Appendix A: List of model and software abbreviations

- Code availability

- Data availability

- Author contributions

- Competing interests

- Acknowledgements

- Financial support

- Review statement

- References