the Creative Commons Attribution 4.0 License.

The Cloud Feedback Model Intercomparison Project Observational Simulator Package: Version 2

Dustin J. Swales

Robert Pincus

Alejandro Bodas-Salcedo

The Cloud Feedback Model Intercomparison Project Observational Simulator Package (COSP) gathers together a collection of observation proxies or “satellite simulators” that translate model-simulated cloud properties to synthetic observations as would be obtained by a range of satellite observing systems. This paper introduces COSP2, an evolution focusing on more explicit and consistent separation between the host model, the coupling infrastructure, and the individual observation proxies. Revisions also enhance flexibility by allowing for model-specific representation of sub-grid-scale cloudiness, improve clarity by separating tasks more cleanly, support greater use of shared code and data (including inputs shared across simulators), and follow more uniform software standards to simplify implementation across a wide range of platforms. The complete package, including a testing suite, is freely available.


The most recent revision to the protocols for the Coupled Model Intercomparison Project (Eyring et al., 2016) includes a set of four experiments for the Diagnosis, Evaluation, and Characterization of Klima (Climate), known as the DECK. As the name implies, one intent of these experiments is to evaluate model fields against observations, especially in simulations in which sea-surface temperatures are prescribed to follow historical observations. Such an evaluation is particularly important for clouds, since they are a primary control on the Earth's radiation budget.

But such a comparison is not straightforward. The most comprehensive views of clouds are provided by satellite remote sensing observations. Comparisons to these observations are hampered by the large discrepancy between the model representation, as profiles of bulk macro- and micro-physical cloud properties, and the information available in the observations, which may, for example, be sensitive only to column-integrated properties or be subject to sampling issues caused by limited measurement sensitivity or signal attenuation. To make comparisons more robust, the Cloud Feedback Model Intercomparison Project (CFMIP, https://www.earthsystemcog.org/projects/cfmip/) has led efforts to apply observation proxies or “instrument simulators” to climate model simulations made in support of the Coupled Model Intercomparison Project (CMIP) and CFMIP.

Instrument simulators are diagnostic tools that map the model state into synthetic observations. The ISCCP (International Satellite Cloud Climatology Project) simulator (Klein and Jakob, 1999; Webb et al., 2001), for example, maps a specific representation of cloudiness to aggregated estimates of cloud-top pressure and optical thickness as would be provided by a particular satellite observing program. It accounts for sampling artifacts such as the masking of high clouds by low clouds and provides statistical summaries computed in precise analogy to the observational datasets. Subsequent efforts have produced simulators for other passive instruments, including the Multi-angle Imaging SpectroRadiometer (MISR; Marchand and Ackerman, 2010) and the Moderate Resolution Imaging Spectroradiometer (MODIS; Pincus et al., 2012), and for the active platforms Cloud-Aerosol Lidar and Infrared Pathfinder Satellite Observation (CALIPSO; Chepfer et al., 2008) and CloudSat (Haynes et al., 2007).

Some climate models participating in the initial phase of CFMIP provided results from the ISCCP simulator. To ease the adoption of multiple simulators, CFMIP organized the development of the Observation Simulator Package (COSP; Bodas-Salcedo et al., 2011). This initial implementation, hereafter COSP1, supported the more widespread and thorough diagnostic output requested as part of the second phase of CFMIP, associated with CMIP5 (Taylor et al., 2012). A complete list of the instrument simulator diagnostics available in COSP1, all of which remain available in COSP2, can be found in Bodas-Salcedo et al. (2011). Similar but somewhat broader requests are made as part of CFMIP3 (Webb et al., 2017) and CMIP6 (Eyring et al., 2016).

The view of model clouds provided by COSP has enabled important advances. Results from COSP have been useful in identifying biases in the distribution of model-simulated clouds within individual models (Kay et al., 2012; Nam and Quaas, 2012), across the collection of models participating in coordinated experiments (Nam et al., 2012), and across model generations (Klein et al., 2013). Combined results from active and passive sensors have highlighted tensions between process fidelity and the ability of models to reproduce historical warming (Suzuki et al., 2013). Synthetic observations from the CALIPSO simulator have demonstrated how changes in vertical structure may provide the most robust measure of the effect of climate change on clouds (Chepfer et al., 2014). Results from the ISCCP simulator have been used to estimate cloud feedbacks and adjustments (Zelinka et al., 2013) through the use of radiative kernels (Zelinka et al., 2012).

COSP1 simplified the implementation of multiple simulators within climate models but treated many components, especially the underlying simulators contributed by a range of collaborators, as inviolate. After most of a decade, this approach was showing its age, as we detail in the next section. Section 3 describes the conceptual model underlying a new implementation of COSP and a design that addresses these issues. Section 4 provides some details regarding implementation. Section 5 summarizes COSP2 and provides information about obtaining and building the software.

Especially in the context of cloud feedbacks, diagnostic information about clouds is most helpful when it is consistent with the radiative fluxes to which the model is subject. COSP2 primarily seeks to address a range of difficulties that arose in maintaining this consistency in COSP1 as the package came to be used in an increasingly wide range of models. For example, as COSP1 was implemented in a handful of models, it became clear that differences in cloud microphysics across models often required substantial code changes to maintain consistency between COSP1 and the host model.

The satellite observations COSP emulates are derived from individual observations made on spatial scales of order kilometers (for active sensors, tens of meters) and statistically summarized at ∼100 km scales commensurate with model predictions. To represent this scale bridging, the ISCCP simulator introduced the idea of subcolumns: discrete, homogeneous samples constructed so that a large ensemble reproduces both the profile of bulk cloud properties within a model grid column and any assumptions made about vertical overlap. COSP1 inherited the specific methods for generating subcolumns from the ISCCP simulator. These included a fixed set of inputs describing the distribution of cloudiness: convective and stratiform cloud fractions, visible-wavelength optical thickness for ice and liquid, and mid-infrared emissivity. Models for which this description was not appropriate had to make extensive changes to COSP if the diagnostics were to be informative. One example is a model in which more than one category of ice was considered in the radiation calculation (Kay et al., 2012).
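
To illustrate the subcolumn idea, the sketch below generates binary cloud masks for one grid column under maximum-random overlap. It uses a stochastic generator of the kind widely adopted alongside McICA radiation codes. It is written in Python rather than the Fortran of COSP. It ignores the convective/stratiform split and the condensate information handled by the ISCCP simulator's own generator. The function name `generate_subcolumns` is hypothetical.

```python
import random


def generate_subcolumns(cloud_fraction, n_subcol, seed=0):
    """Return n_subcol binary cloud masks (1 = cloudy) for one grid column.

    cloud_fraction holds layer cloud fractions ordered from the top of the
    column (index 0) downward. Vertically contiguous cloud overlaps maximally;
    cloud layers separated by clear air overlap randomly.
    """
    rng = random.Random(seed)
    n_lev = len(cloud_fraction)
    masks = []
    for _ in range(n_subcol):
        mask = [0] * n_lev
        x = rng.random()  # uniform random "rank" of this subcolumn at the top
        for k in range(n_lev):
            if k > 0:
                clear_above = 1.0 - cloud_fraction[k - 1]
                # If this subcolumn was clear in the layer above, draw a new rank
                # among the clear ranks (random overlap); if it was cloudy, keep
                # the rank (maximum overlap). The rank stays uniform on [0, 1).
                if x <= clear_above:
                    x = rng.random() * clear_above
            mask[k] = 1 if x > 1.0 - cloud_fraction[k] else 0
        masks.append(mask)
    return masks
```

Averaging the masks over many subcolumns recovers the input cloud fraction in each layer. That is the property the text requires of the ensemble. The overlap rule controls how the layers combine into total cloud cover.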

The fixed set of inputs also limited the ability of models to remain consistent with their radiation calculations. Many global models now use the Monte Carlo independent column approximation (McICA; Pincus et al., 2003) to represent subgrid-scale cloud variability in radiation calculations. Inspired by the ISCCP simulator, McICA randomly assigns subcolumns to spectral intervals, replacing a two-dimensional integral over cloud state and wavelength with a Monte Carlo sample. Models using McICA for radiation calculations must therefore implement methods for generating subcolumns. Because COSP1 could not share these subcolumns between radiation and diagnostic calculations, the arrangement was neither efficient nor self-consistent.
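
The Monte Carlo sampling in McICA can be sketched in a few lines. The Python below is an illustration, not any model's radiation code. `toy_spectral_flux` is a hypothetical stand-in for a radiative transfer calculation in one spectral interval. The full independent column approximation evaluates every subcolumn in every interval. McICA instead evaluates one randomly chosen subcolumn per interval.

```python
import random


def ica_flux(subcolumns, spectral_flux, n_g):
    """Full ICA: every spectral interval is computed for every subcolumn."""
    total = 0.0
    for g in range(n_g):
        total += sum(spectral_flux(g, sc) for sc in subcolumns) / len(subcolumns)
    return total


def mcica_flux(subcolumns, spectral_flux, n_g, rng):
    """McICA: each spectral interval is computed for one random subcolumn.

    Costs n_g calls instead of n_g * len(subcolumns); the estimate is unbiased
    with respect to ica_flux but carries random noise.
    """
    return sum(spectral_flux(g, rng.choice(subcolumns)) for g in range(n_g))


def toy_spectral_flux(g, subcolumn):
    """Hypothetical stand-in for a radiative transfer calculation in interval g."""
    cloudiness = sum(subcolumn) / len(subcolumn)
    return (g + 1) * (1.0 - 0.5 * cloudiness)
```

Averaged over many draws, the McICA estimate converges to the full calculation. Any single estimate is noisy. That noise is the price paid for reducing the cost by a factor equal to the number of subcolumns.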

COSP1 was effective in packaging together a set of simulators developed independently and without coordination, but this had its costs. COSP1 contains three independent routines for computing joint histograms, for example. Each simulator required its own inputs, some of them closely related (relative and specific humidity, for example). The simulators also produced an arbitrary mix of outputs at the column and subcolumn scales, making multi-sensor analyses difficult.

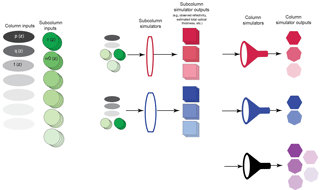

Figure 1. Organizational view of COSP2. Within each grid cell, host models provide a range of physical inputs at the grid scale (grey ovals, one profile per variable) and optical properties at the cloud scale (green circles, Nsubcol profiles per variable). Individual subcolumn simulators (lens shapes, colored to indicate simulator types) produce Nsubcol synthetic retrievals (squares). Aggregation routines (funnel shapes) then summarize these retrievals, taking input from one or more subcolumn simulators.

Though the division was not always apparent in COSP1, all satellite simulators perform four discrete tasks within each column:

- sampling of cloud properties to create homogeneous subcolumns;
- mapping of cloud physical properties (e.g., condensate concentrations and particle sizes) to relevant optical properties (optical depth, single scattering albedo, radar reflectivity, etc.);
- synthetic retrievals of individual observations (e.g., profiles of attenuated lidar backscatter or cloud-top pressure/column optical thickness pairs); and
- statistical summarization (e.g., appropriate averaging or computation of histograms).

The first two steps require detailed knowledge of how a host model represents cloud physical properties; the last two steps mimic the observational process.

The design of COSP2 reflects this conceptual model. The primary inputs to COSP2 are subcolumns of optical properties (i.e., the result of step 2 above). It is the host model's responsibility to generate subcolumns and to map physical to optical properties consistently with the model formulation. This choice allows models to leverage the infrastructure of radiation codes using McICA, making radiation and diagnostic calculations consistent with one another. As with previous versions of COSP, subcolumns are needed only for models with coarse resolution (e.g., GCMs). For high-resolution models (e.g., cloud-resolving models), model columns can be provided directly to COSP2. The instrument simulator components were reorganized to eliminate any internal dependencies on the host model and, consequently, on the model's spatial scale. COSP2 also requires as input a small set of column-scale quantities, including surface properties and thermodynamic profiles. The ISCCP simulator, for example, uses these to mimic the retrieval of cloud-top pressure from infrared brightness temperature.
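
Step 2, mapping physical to optical properties, is thus left to the host model. The code below is a minimal sketch of what that mapping can look like. It converts subcolumn cloud masks and layer liquid water paths into visible optical depth using the common geometric-optics approximation τ ≈ 3W/(2ρ_w r_e). That approximation assumes an extinction efficiency of about 2. A host model would instead use whatever optical parameterization its own radiation code uses. Both function names are hypothetical.

```python
RHO_WATER = 1000.0  # density of liquid water, kg m-3


def liquid_optical_depth(lwp, r_eff):
    """Visible optical depth of a liquid cloud layer (geometric-optics limit).

    lwp is the in-cloud liquid water path (kg m-2) and r_eff the droplet
    effective radius (m); assumes an extinction efficiency of about 2.
    """
    if lwp <= 0.0:
        return 0.0
    return 3.0 * lwp / (2.0 * RHO_WATER * r_eff)


def subcolumn_optical_depth(masks, lwp, r_eff):
    """Map subcolumn cloud masks (top to bottom) to per-layer optical depth.

    Each layer is treated as homogeneous, so every cloudy subcolumn receives
    the same in-cloud optical depth and clear subcolumns receive zero.
    """
    layer_tau = [liquid_optical_depth(w, r) for w, r in zip(lwp, r_eff)]
    return [[tau if cloudy else 0.0 for cloudy, tau in zip(mask, layer_tau)]
            for mask in masks]
```

For example, 0.1 kg m-2 of liquid water with a 10 µm effective radius gives an optical depth of about 15.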

Simulators within COSP2 are explicitly divided into two components (Fig. 1). The subcolumn simulators, shown as lenses with colors representing the sensor being mimicked, take a range of column inputs (ovals) and subcolumn inputs (circles, with stacks representing multiple samples) and produce synthetic retrievals on the subcolumn scale, shown as stacks of squares. Column simulators, drawn as funnels, reduce these subcolumn synthetic retrievals to statistical summaries (hexagons). Column simulators may summarize information from a single observing system, as indicated by shared colors. Other column simulators may synthesize subcolumn retrievals from multiple sources, as suggested by the black funnel.

This division mirrors the processing of satellite observations by space agencies. At NASA, for example, these processing steps correspond to the production of Level 2 and Level 3 data, respectively. Implementation required restructuring many of the component simulators from COSP1, which in turn allowed modest code simplification through the use of common routines for statistical calculations.
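
The sketch below shows the kind of shared statistical routine a column simulator might call. It reduces per-subcolumn (cloud-top pressure, optical thickness) retrievals to a joint histogram of cloud fraction. It is a Python illustration, not COSP2's Fortran. The routine name is hypothetical. The bin edges resemble the ISCCP categories but should be treated as placeholders.

```python
from bisect import bisect_right

# Illustrative bin edges resembling the ISCCP cloud-top pressure (hPa) and
# optical-thickness categories; placeholders, not COSP2's configuration.
PRESSURE_EDGES = [50.0, 180.0, 310.0, 440.0, 560.0, 680.0, 800.0, 1000.0]
TAU_EDGES = [0.3, 1.3, 3.6, 9.4, 23.0, 60.0, 380.0]


def joint_histogram(x_values, y_values, x_edges, y_edges):
    """Fraction of samples falling in each [lo, hi) bin of x and y.

    Every sample counts in the denominator, but samples outside the bin
    ranges (e.g., clear subcolumns with zero optical thickness) are not
    binned, so the histogram sums to the fraction of binned samples.
    """
    nx, ny = len(x_edges) - 1, len(y_edges) - 1
    hist = [[0.0] * ny for _ in range(nx)]
    n = len(x_values)
    for x, y in zip(x_values, y_values):
        i = bisect_right(x_edges, x) - 1
        j = bisect_right(y_edges, y) - 1
        if 0 <= i < nx and 0 <= j < ny:
            hist[i][j] += 1.0 / n
    return hist
```

With clear subcolumns passed as zero optical thickness, the histogram sums to the total cloud fraction. The same routine could serve any simulator that needs a two-dimensional summary.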

Separating the computation of optical properties from the description of individual simulators allows for modestly increased efficiency. Inputs shared across simulators, for example the 0.67 µm optical depth required by the ISCCP, MODIS, and MISR simulators, do not need to be recomputed or copied. The division also allowed us to make some simulators more generic. In particular, the CloudSat simulator used by COSP is based on the QuickBeam package (Haynes et al., 2007). QuickBeam is quite generic with respect to radar frequency and sensor location, but this flexibility was lost in COSP1. COSP2 exposes the generic nature of the underlying subcolumn lidar and radar simulators and introduces configuration variables that provide instrument-specific information to the subcolumn calculation.
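
The sketch below shows how sensor location can become a configuration variable of a generic radar calculation. The same loop accumulates two-way attenuation downward for a space-borne sensor (such as CloudSat's 94 GHz radar) or upward for a ground-based one. The `RadarConfig` fields are hypothetical and do not reproduce COSP2's configuration variables. The attenuation treatment is deliberately simplified.

```python
from dataclasses import dataclass


@dataclass
class RadarConfig:
    """Hypothetical instrument settings passed to a generic radar simulator."""

    frequency_ghz: float  # would select scattering properties; unused in this sketch
    spaceborne: bool  # True: sensor above the column; False: sensor at the surface


def attenuated_reflectivity(dbz, one_way_atten_db, config):
    """Apply two-way path-integrated attenuation (dB) to layer reflectivities.

    Layers are ordered from the top of the domain (index 0) downward. Each
    layer's reflectivity is reduced by twice the one-way attenuation of all
    layers between it and the sensor; attenuation within the layer itself is
    neglected for simplicity.
    """
    n = len(dbz)
    # Walk away from the sensor: downward from space, upward from the ground.
    order = range(n) if config.spaceborne else range(n - 1, -1, -1)
    result = [0.0] * n
    path_db = 0.0
    for k in order:
        result[k] = dbz[k] - 2.0 * path_db
        path_db += one_way_atten_db[k]
    return result
```

Keeping instrument details in a configuration object leaves the subcolumn calculation itself unchanged when a new radar is added.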

4.1 Interface and control flow

The simplest call to COSP now makes use of three Fortran derived types, representing the column inputs, the subcolumn inputs, and the desired outputs. The components of these types are PUBLIC (that is, accessible by user code) and are, with few exceptions, pointers to appropriately dimensioned arrays. COSP determines which subcolumn and column simulators are to be run based on the allocation status of these arrays, as described below. All required subcolumn simulators are invoked first, followed by all column simulators. Optional arguments can be provided to restrict work to a subset of the provided domain (a set of columns) to limit memory use.

COSP2 has no explicit switches controlling which simulators are invoked. Instead, a column simulator is invoked if space for one or more of its outputs is allocated. That is, it runs if one or more of its output variables (themselves components of the output derived type) are associated with array memory of the correct shape. The set of column simulators to be run determines which subcolumn simulators are run. Failing to provide the inputs required by these subcolumn simulators is an error.
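
This allocation-driven control flow can be sketched in Python. Dictionary entries set to `None` stand in for Fortran pointers that are not associated. Every output, simulator, and input name below is hypothetical and chosen only to show the logic: outputs select column simulators, column simulators select subcolumn simulators, and missing inputs raise an error.

```python
# Hypothetical names throughout: which subcolumn simulators feed each
# column-simulator output, and which inputs each subcolumn simulator needs.
FEEDS = {
    "isccp_histogram": {"isccp"},
    "modis_histogram": {"modis"},
    "radar_lidar_cloud_fraction": {"radar", "lidar"},  # multi-sensor product
}
NEEDS = {
    "isccp": {"tau_067", "emissivity_11"},
    "modis": {"tau_067", "single_scattering_albedo"},
    "radar": {"radar_inputs"},
    "lidar": {"lidar_inputs"},
}


def plan_simulators(outputs, inputs):
    """Choose simulators from which outputs have storage attached.

    outputs and inputs map names to arrays, with None standing in for an
    unassociated Fortran pointer. Returns (subcolumn_sims, column_sims); the
    caller runs every subcolumn simulator before any column simulator.
    Raises ValueError if a required subcolumn simulator lacks an input.
    """
    column_sims = [name for name, array in outputs.items() if array is not None]
    subcolumn_sims = set()
    for name in column_sims:
        subcolumn_sims |= FEEDS[name]
    for sim in sorted(subcolumn_sims):
        missing = sorted(v for v in NEEDS[sim] if inputs.get(v) is None)
        if missing:
            raise ValueError(f"{sim} simulator is missing inputs: {', '.join(missing)}")
    return sorted(subcolumn_sims), column_sims
```

Requesting the multi-sensor product above automatically schedules both the radar and lidar subcolumn simulators, with no separate switch for either.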

The use of derived types allows COSP's capabilities to be expanded incrementally. Adding a new simulator, for example, requires adding new components to the derived types representing inputs and outputs, but code referring to existing components of those types need not be changed. This functionality is already in use: the output fields available in COSP2 extend COSP1's capabilities to include the joint histograms of optical thickness and effective radius requested as part of CFMIP3.

4.2 Enhancing portability

COSP2 also includes a range of changes aimed at providing more robust, portable, and/or flexible code, many of which were suggested by one or more modeling centers using COSP. These include the following.

- Robust error checking, implemented as a single routine that validates array shapes and physical bounds on values.
- Error reporting standardized to return strings, where non-null values indicate failure.
- Parameterized precision for all REAL variables (KIND = wp), where the value of wp can be set in a single location to correspond to 32- or 64-bit real values.
- Explicit INTENT for all subroutine arguments.
- Standardization of vertical ordering for arrays, with the top of the domain at index 1.
- Conformity to Fortran 2003 standards.
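
The first two items can be sketched as a single validation routine. It checks shapes and physical bounds and reports failure through a returned string that is empty on success. The Python below is illustrative only. The routine name and the input layout are hypothetical, and COSP2's own checks are written in Fortran and cover many more fields.

```python
def validate_inputs(fields, n_levels):
    """Check shapes and physical bounds of named input profiles.

    fields maps a name to (values, lower_bound, upper_bound). Returns "" when
    every check passes, otherwise a message describing the first failure.
    """
    for name, (values, lower, upper) in fields.items():
        if len(values) != n_levels:
            return f"{name}: expected {n_levels} levels, got {len(values)}"
        for k, v in enumerate(values):
            if not (lower <= v <= upper):  # also rejects NaN
                return f"{name}: value {v} at level {k} outside [{lower}, {upper}]"
    return ""
```

Returning a message rather than stopping lets the host model decide how to handle the failure, for example by logging it through its own error-handling system.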

COSP2 must also be explicitly initialized before use. The initialization routine calls the initialization routine of each simulator in turn, which allows more flexible updating of ancillary data such as lookup tables.

Version 2 of the CFMIP Observational Simulator Package, COSP2, represents a substantial revision of the COSP platform. The primary goal was to allow a more flexible representation of clouds, so that the diagnostics produced by COSP can be fully consistent with radiation calculations made by the host model, even in the face of increasingly complex descriptions of cloud macro- and micro-physical properties. Consistency requires that host models generate subcolumns and compute optical properties, so that the interface to the host model is entirely revised relative to COSP1. As an example and a bridge to past efforts, COSP2 includes an optional layer that provides compatibility with COSP 1.4.1 (the version to be used for CFMIP3), accepting the same inputs and implementing sampling and optical property calculations in the same way.

Simulators in COSP2 are divided into those that compute subcolumn (pixel) scale synthetic retrievals and those that compute column (grid) scale statistical summaries. This distinction, and the use of extensible derived types in the interface to the host model, are designed to make it easier to extend COSP's capabilities by adding new simulators at either scale, including analyses that make use of observations from multiple sources.

The source code for COSP2, along with downloading and installation instructions, is available in a GitHub repository (https://github.com/CFMIP/COSPv2.0). Previous versions of COSP (e.g., v1.3.1, v1.3.2, v1.4.0 and v1.4.1) are available in a parallel repository (https://github.com/CFMIP/COSPv1). But these versions have reached the end of their life, and COSP2 provides the basis for future development. Models updating or implementing COSP, or developers wishing to add new capabilities, are best served by starting with COSP2.

The authors declare that they have no conflict of interest.

The authors thank the COSP Project Management Committee for guidance and Tomoo Ogura for testing the implementation of COSP2 in the MIROC climate model. Dustin Swales and Robert Pincus were financially supported by NASA under award NNX14AF17G. Alejandro Bodas-Salcedo received funding from the IS-ENES2 project, European FP7-INFRASTRUCTURES-2012-1 call (grant agreement 312979).

Edited by: Klaus Gierens

Reviewed by: Bastian Kern and one anonymous referee

Bodas-Salcedo, A., Webb, M. J., Bony, S., Chepfer, H., Dufresne, J.-L., Klein, S. A., Zhang, Y., Marchand, R., Haynes, J. M., Pincus, R., and John, V.: COSP: satellite simulation software for model assessment, B. Am. Meteorol. Soc., 92, 1023–1043, https://doi.org/10.1175/2011BAMS2856.1, 2011.

Chepfer, H., Bony, S., Winker, D., Chiriaco, M., Dufresne, J.-L., and Seze, G.: Use of CALIPSO lidar observations to evaluate the cloudiness simulated by a climate model, Geophys. Res. Lett., 35, L15704, https://doi.org/10.1029/2008GL034207, 2008.

Chepfer, H., Noel, V., Winker, D., and Chiriaco, M.: Where and when will we observe cloud changes due to climate warming?, Geophys. Res. Lett., 41, 8387–8395, https://doi.org/10.1002/2014GL061792, 2014.

Eyring, V., Bony, S., Meehl, G. A., Senior, C. A., Stevens, B., Stouffer, R. J., and Taylor, K. E.: Overview of the Coupled Model Intercomparison Project Phase 6 (CMIP6) experimental design and organization, Geosci. Model Dev., 9, 1937–1958, https://doi.org/10.5194/gmd-9-1937-2016, 2016.

Haynes, J. M., Marchand, R., Luo, Z., Bodas-Salcedo, A., and Stephens, G. L.: A multipurpose radar simulation package: QuickBeam, B. Am. Meteorol. Soc., 88, 1723–1727, https://doi.org/10.1175/BAMS-88-11-1723, 2007.

Kay, J. E., Hillman, B. R., Klein, S. A., Zhang, Y., Medeiros, B. P., Pincus, R., Gettelman, A., Eaton, B., Boyle, J., Marchand, R., and Ackerman, T. P.: Exposing global cloud biases in the Community Atmosphere Model (CAM) using satellite observations and their corresponding instrument simulators, J. Climate, 25, 5190–5207, https://doi.org/10.1175/JCLI-D-11-00469.1, 2012.

Klein, S. A. and Jakob, C.: Validation and sensitivities of frontal clouds simulated by the ECMWF model, Mon. Weather Rev., 127, 2514–2531, https://doi.org/10.1175/1520-0493(1999)127<2514:VASOFC>2.0.CO;2, 1999.

Klein, S. A., Zhang, Y., Zelinka, M. D., Pincus, R., Boyle, J., and Gleckler, P. J.: Are climate model simulations of clouds improving? An evaluation using the ISCCP simulator, J. Geophys. Res., 118, 1329–1342, https://doi.org/10.1002/jgrd.50141, 2013.

Marchand, R. and Ackerman, T. P.: An analysis of cloud cover in Multiscale Modeling Framework Global Climate Model Simulations using 4 and 1 km horizontal grids, J. Geophys. Res., 115, D16207, https://doi.org/10.1029/2009JD013423, 2010.

Nam, C. C. W. and Quaas, J.: Evaluation of clouds and precipitation in the ECHAM5 general circulation model using CALIPSO and CloudSat satellite data, J. Climate, 25, 4975–4992, https://doi.org/10.1175/JCLI-D-11-00347.1, 2012.

Nam, C., Bony, S., Dufresne, J.-L., and Chepfer, H.: The “too few, too bright” tropical low-cloud problem in CMIP5 models, Geophys. Res. Lett., 39, L21801, https://doi.org/10.1029/2012GL053421, 2012.

Pincus, R., Barker, H. W., and Morcrette, J.-J.: A fast, flexible, approximate technique for computing radiative transfer in inhomogeneous cloud fields, J. Geophys. Res., 108, 4376, https://doi.org/10.1029/2002JD003322, 2003.

Pincus, R., Platnick, S., Ackerman, S. A., Hemler, R. S., and Hofmann, R. J. P.: Reconciling simulated and observed views of clouds: MODIS, ISCCP, and the limits of instrument simulators, J. Climate, 25, 4699–4720, https://doi.org/10.1175/JCLI-D-11-00267.1, 2012.

Suzuki, K., Golaz, J.-C., and Stephens, G. L.: Evaluating cloud tuning in a climate model with satellite observations, Geophys. Res. Lett., 40, 4464–4468, https://doi.org/10.1002/grl.50874, 2013.

Taylor, K. E., Stouffer, R. J., and Meehl, G. A.: An overview of CMIP5 and the experiment design, B. Am. Meteorol. Soc., 93, 485–498, https://doi.org/10.1175/BAMS-D-11-00094.1, 2012.

Webb, M. J., Andrews, T., Bodas-Salcedo, A., Bony, S., Bretherton, C. S., Chadwick, R., Chepfer, H., Douville, H., Good, P., Kay, J. E., Klein, S. A., Marchand, R., Medeiros, B., Siebesma, A. P., Skinner, C. B., Stevens, B., Tselioudis, G., Tsushima, Y., and Watanabe, M.: The Cloud Feedback Model Intercomparison Project (CFMIP) contribution to CMIP6, Geosci. Model Dev., 10, 359–384, https://doi.org/10.5194/gmd-10-359-2017, 2017.

Webb, M. J., Senior, C., Bony, S., and Morcrette, J.-J.: Combining ERBE and ISCCP data to assess clouds in the Hadley Centre, ECMWF and LMD atmospheric climate models, Clim. Dynam., 17, 905–922, https://doi.org/10.1007/s003820100157, 2001.

Zelinka, M. D., Klein, S. A., and Hartmann, D. L.: Computing and partitioning cloud feedbacks using cloud property histograms. Part I: Cloud radiative kernels, J. Climate, 25, 3715–3735, https://doi.org/10.1175/JCLI-D-11-00248.1, 2012.

Zelinka, M. D., Klein, S. A., Taylor, K. E., Andrews, T., Webb, M. J., Gregory, J. M., and Forster, P. M.: Contributions of different cloud types to feedbacks and rapid adjustments in CMIP5, J. Climate, 26, 5007–5027, https://doi.org/10.1175/JCLI-D-12-00555.1, 2013.